1. Introduction

Volatility prediction in financial markets is essential for portfolio optimization, risk management, and option pricing. Accurate volatility predictions enable investors to make informed decisions, hedge against adverse market movements, and optimize capital allocation. Traditional statistical time series models, such as Generalized Autoregressive Conditional Heteroskedasticity (GARCH) models, have been predominantly used for volatility estimation and forecasting [1]. While GARCH-type models effectively capture the volatility clustering and persistence characteristic of financial markets, they are inherently limited by their linear structure and reliance on distributional assumptions, making them less effective at capturing the complex, nonlinear dynamics and regime shifts prevalent in modern financial markets [2].

Deep learning has substantially advanced financial time series forecasting by enabling automated feature extraction from raw data. Long Short-Term Memory (LSTM) networks capture long-term dependencies effectively [3], while Transformers model global temporal relations through self-attention without sequential constraints [4]. Hybrid designs combining Convolutional Neural Networks (CNNs) for spatial features and LSTMs or Transformers for temporal learning have shown strong performance. Nevertheless, most models process time series in a one-dimensional form, potentially overlooking rich multi-scale time–frequency structures inherent in financial volatility.

To address the limitation of one-dimensional representations, researchers have adopted signal processing techniques that extract multi-scale information from financial data. Time–frequency analysis methods capture both temporal and spectral characteristics, effectively revealing market dynamics. Among them, the Wavelet Transform decomposes signals into time-localized frequency components, identifying short-term volatility spikes and long-term trends [5]. Transforming time series into two-dimensional representations, such as scalograms or spectrograms, exposes hidden structures missed in raw sequences. Integrating these representations with deep learning has improved forecasting accuracy by providing richer multi-scale features. Beyond wavelets, decomposition methods such as Empirical Mode Decomposition (EMD) and Variational Mode Decomposition (VMD) also enhance performance. However, most prior studies still rely on CNNs or recurrent neural networks (RNNs) to extract features from such representations, limiting their ability to capture global dependencies.

In parallel with signal processing advances, vision-based approaches have emerged as a new paradigm by transforming financial time series into two-dimensional images. This enables the use of computer vision models for market prediction. Early studies converted candlestick charts and technical indicator plots into images analyzed by CNNs to capture spatial patterns unseen in raw sequences. Later methods, such as the Gramian Angular Field and its quantum variants [6], further encoded temporal dependencies into image representations. Pre-trained CNNs have achieved strong generalization across markets, but their focus on local features limits global understanding. Vision Transformers (ViTs), leveraging self-attention to model long-range dependencies, offer greater potential to capture both local and global structures in time–frequency representations.

Accurate volatility estimation is essential for building reliable prediction models. Traditional close-to-close estimators lose valuable information by ignoring intraday price movements. To address this, Parkinson [7] proposed using the daily high–low range, providing more efficient and robust variance estimates. Recent research has also explored integrating multiple information sources for improved forecasting. Combining realized volatility measures with deep learning architectures has shown that intraday information enhances accuracy [8]. Moreover, volatility exhibits dependencies across multiple time scales, motivating models that jointly capture short-term fluctuations and long-term persistence [9]. Our approach addresses this challenge through time–frequency analysis and a parallel architecture that effectively integrates multi-scale volatility features.
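As a concrete reference for the range-based estimator mentioned above, the following is a minimal sketch of the single-day form of Parkinson's estimator, $\sigma_P = \sqrt{(\ln(H/L))^2 / (4 \ln 2)}$. The function name and the example prices are hypothetical, and any averaging window or annualization used in this study is not specified in this section, so none is applied here.

```python
import math

def parkinson_volatility(high: float, low: float) -> float:
    """Single-day Parkinson range-based volatility from the daily high and low."""
    if low <= 0 or high < low:
        raise ValueError("expected 0 < low <= high")
    log_range = math.log(high / low)
    # Parkinson's estimator: sigma^2 = (ln(H / L))^2 / (4 ln 2)
    return math.sqrt(log_range ** 2 / (4.0 * math.log(2.0)))

# Hypothetical prices, for illustration only.
print(parkinson_volatility(high=102.0, low=99.0))
```

Because the estimator depends only on the high–low range, a day with identical high and low yields zero volatility regardless of the closing price.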

Building upon these advances, this study proposes TF-ViTNet, a novel dual-path hybrid model that addresses three key limitations in existing work. First, while most deep learning models process time series in one-dimensional sequential form, we transform volatility time series into two-dimensional scalograms via Continuous Wavelet Transform (CWT) to capture multi-scale time–frequency structures. Second, whereas signal processing techniques are typically combined with traditional sequential models, we employ a ViT to analyze scalogram images, enabling the capture of both local and global patterns through self-attention mechanisms. Third, while existing vision-based approaches predominantly rely on CNNs that excel at local feature extraction, ViTs capture broader global dependencies through self-attention, potentially leveraging time–frequency representations more effectively. Our approach employs a parallel architecture where a ViT pathway analyzes scalograms and an LSTM pathway processes numerical technical indicators, with both streams independently processed and integrated at the final stage. This design allows each modality to develop specialized representations before fusion, distinguishing our approach from integrated models that combine features at each time step. We evaluate TF-ViTNet on NASDAQ and S&P 500 indices from 2010 to 2024, demonstrating superior performance compared to baseline LSTM and various hybrid architectures across different volatility regimes. In this study, we focus exclusively on one-step-ahead volatility prediction, where the model estimates the next day's volatility using information available up to the current day. Multi-step forecasting is not considered in this work and represents an important direction for future extension.

The main contributions of this study are threefold. First, we introduce the novel combination of CWT-based time–frequency scalograms with a Vision Transformer architecture for volatility prediction. While CWT and time–frequency analysis have been widely used in prior studies, their integration with ViTs within a parallel dual-path architecture has not yet been explored. Second, we apply ViTs to financial volatility prediction, exploiting their ability to capture global spatiotemporal patterns embedded in scalogram images through self-attention mechanisms. Third, we design a novel dual-path parallel architecture that effectively fuses heterogeneous data representations by combining a ViT pathway for scalograms with an LSTM pathway for numerical technical indicators, allowing each modality to develop specialized features independently before integration.

The remainder of this paper is structured as follows:

Section 2 provides a comprehensive review of the literature across deep learning for financial forecasting, signal processing methods, vision-based approaches, and volatility modeling.

Section 3 describes our methodology, including data preprocessing, the proposed TF-ViTNet architecture, and experimental design.

Section 4 outlines the experimental methodology and presents statistical analyses of financial time-series data.

Section 5 presents comprehensive experimental results and analysis.

Section 6 discusses the findings and concludes with limitations and future research directions.

3. Methodology

3.1. Time–Frequency Analysis

Financial time series exhibit complex non-stationary characteristics and abrupt short-term fluctuations that traditional time series analysis alone cannot adequately capture. To effectively analyze these dynamic properties, time–frequency analysis techniques that simultaneously represent signals in both temporal and spectral domains have emerged as powerful tools for financial signal processing. The conventional Fourier transform provides global frequency information averaged over the entire signal duration, which limits its ability to capture transient phenomena and time-varying spectral characteristics that are prevalent in financial markets. To address this limitation, the Short-Time Fourier Transform (STFT) was developed to provide localized frequency information by partitioning the signal into overlapping time windows and applying the Fourier transform to each segment [44]. The STFT of a signal $x(t)$ is defined as shown in Equation (1).

$$\mathrm{STFT}(\tau, f) = \int_{-\infty}^{\infty} x(t)\, w(t - \tau)\, e^{-j 2 \pi f t}\, dt \tag{1}$$

where $x(t)$, $w(t - \tau)$, $f$, $j$, and $\tau$ represent the original signal, a window function centered at time $\tau$ that localizes the analysis, the frequency, the imaginary unit, and the temporal position of the analysis window, respectively. While STFT provides time-localized frequency information, it suffers from the fundamental trade-off between temporal and frequency resolution due to the fixed window length. This limitation becomes particularly pronounced when analyzing financial time series that contain both rapid transient events and slower trend components.

CWT offers a more flexible approach by employing basis functions that are naturally localized in both time and frequency domains. Unlike STFT, CWT uses wavelets with varying time–frequency resolution, providing fine temporal resolution for high-frequency components and fine frequency resolution for low-frequency components. For financial signal analysis, the Morlet wavelet is commonly employed due to its favorable time–frequency localization properties. The Morlet wavelet is defined as shown in Equation (2).

$$\psi(t) = \pi^{-1/4}\, e^{j \omega_0 t}\, e^{-t^{2}/2} \tag{2}$$

where $\omega_0$ denotes the central frequency parameter. Using the wavelet function, the CWT is defined as shown in Equation (3).

$$W(a, b) = \frac{1}{\sqrt{|a|}} \int_{-\infty}^{\infty} x(t)\, \psi^{*}\!\left(\frac{t - b}{a}\right) dt \tag{3}$$

where $a$, $b$, and $\psi^{*}$ indicate the scale parameter that controls the dilation of the wavelet and inversely relates to frequency, the translation parameter representing temporal localization, and the complex conjugate of the wavelet function, respectively.

The squared magnitude of the wavelet coefficients, $|W(a, b)|^{2}$, represents the signal energy at each time position $b$ and scale $a$, which can be visualized as a two-dimensional scalogram image. In this representation, rows correspond to frequency bands and columns to time, with higher frequencies in the upper region and lower frequencies in the lower region. The intensity of each pixel indicates the energy concentration at the corresponding time–frequency location.

In this study, we apply CWT directly to 60-day sequences of Parkinson’s volatility series to generate scalogram images that capture the energy distribution across time and frequency domains. These generated scalograms serve as image inputs to our proposed ViT architecture, enabling the model to learn complex spatiotemporal patterns that are not readily apparent in the original time series representation. The transformation from time series to scalogram representation offers several advantages for volatility prediction: different frequency components can reveal various market dynamics by capturing distinct patterns across frequency bands, complex temporal dependencies can be transformed into spatial patterns naturally suited for computer vision techniques, the time–frequency representation can help distinguish signal from noise components across different scales, and hidden periodic patterns or regime changes in volatility may become more apparent in the scalogram.
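To make the scalogram construction concrete, the sketch below evaluates a discretized form of Equations (2) and (3) with NumPy on a synthetic 60-day series. It is an illustration under stated assumptions rather than the pipeline used in this study: the central frequency `omega0 = 6`, the scale grid, and the synthetic input are hypothetical choices, and the resizing of the scalogram to the ViT input resolution is not shown.

```python
import numpy as np

def morlet(t, omega0=6.0):
    """Morlet wavelet pi^(-1/4) * exp(j*omega0*t) * exp(-t^2/2), as in Equation (2)."""
    return np.pi ** -0.25 * np.exp(1j * omega0 * t) * np.exp(-0.5 * t ** 2)

def cwt_scalogram(x, scales, omega0=6.0):
    """Squared CWT magnitude |W(a, b)|^2, shape (len(scales), len(x)); unit time step."""
    x = np.asarray(x, dtype=float)
    t = np.arange(len(x), dtype=float)
    shift = t[None, :] - t[:, None]          # shift[b, t] = t - b
    power = np.empty((len(scales), len(x)))
    for i, a in enumerate(scales):
        kernel = np.conj(morlet(shift / a, omega0))
        coeffs = kernel @ x / np.sqrt(a)     # discrete version of Equation (3)
        power[i] = np.abs(coeffs) ** 2
    return power

# Synthetic 60-day series standing in for a Parkinson volatility window.
days = np.arange(60)
series = 0.01 + 0.002 * np.sin(2 * np.pi * days / 8)
scales = np.geomspace(1.0, 16.0, 32)         # hypothetical scale grid
scalogram = cwt_scalogram(series - series.mean(), scales)
print(scalogram.shape)                       # (32, 60)
```

Each row of the result corresponds to one scale (and hence one frequency band) and each column to one day, which is the row/column layout described above.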

3.2. Vision Transformer

The Transformer model, originally proposed in [4] for natural language processing, achieved remarkable success by overcoming the limitations of RNNs. The core self-attention mechanism of Transformers models relationships between all elements in an input sequence in parallel, effectively learning long-range dependencies and global context. This architectural success led to its extension into computer vision, resulting in the Vision Transformer.

Unlike traditional CNNs that progressively integrate local features to understand entire images, ViT introduced a novel approach by dividing images into multiple fixed-size patches and processing them as sequence data [45]. As illustrated in Figure 1, each patch is converted into a vector through embedding, a positional encoding containing spatial information is added, and the result is fed into a standard Transformer encoder. This structure enables ViT to learn global relationships between patches across the entire image from the initial layers of the model.

Specifically, an input image $x \in \mathbb{R}^{H \times W \times C}$ is divided into $N$ patches of size $P \times P$. Each patch is transformed into a $D$-dimensional embedding vector through the linear transformation $E$, and learnable positional embeddings $E_{pos}$ are added to preserve spatial location information. Additionally, a class token $x_{class}$ is prepended to the sequence for classification purposes, and the final input sequence $z_0$ to the encoder is defined as shown in Equation (4).

$$z_0 = \left[ x_{class};\; x_p^{1} E;\; x_p^{2} E;\; \ldots;\; x_p^{N} E \right] + E_{pos}, \qquad E \in \mathbb{R}^{(P^{2} C) \times D},\quad E_{pos} \in \mathbb{R}^{(N + 1) \times D} \tag{4}$$

where $z_0$, $x_{class}$, $x_p^{i}$, $E$, $E_{pos}$, $N$, $P$, $C$, and $D$ represent the input sequence to the Transformer encoder, the learnable class token for classification, the $i$-th image patch, the linear embedding matrix that maps patches to $D$ dimensions, the learnable positional embeddings containing spatial information, the total number of patches, the patch size, the number of image channels, and the embedding dimension, respectively.
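The patch-embedding step of Equation (4) can be sketched with NumPy as follows. This is a shape-level illustration only: the function name `patch_embed` is hypothetical, the weights are random rather than pre-trained, and the embedding size of 384 simply follows the figure quoted later in this section.

```python
import numpy as np

def patch_embed(image, patch, E, cls_token, pos_embed):
    """Build z_0 = [x_class; x_p^1 E; ...; x_p^N E] + E_pos for one H x W x C image."""
    H, W, C = image.shape
    gh, gw = H // patch, W // patch
    # Split into a (gh, gw) grid of P x P x C patches, then flatten each patch.
    patches = (image.reshape(gh, patch, gw, patch, C)
                    .transpose(0, 2, 1, 3, 4)
                    .reshape(gh * gw, patch * patch * C))
    tokens = patches @ E                      # (N, D)
    z0 = np.vstack([cls_token, tokens])       # (N + 1, D), class token first
    return z0 + pos_embed

rng = np.random.default_rng(0)
P, C, D = 16, 3, 384                          # D as quoted in this section
image = rng.normal(size=(224, 224, C))        # stand-in for a scalogram image
N = (224 // P) ** 2                           # 14 x 14 = 196 patches
z0 = patch_embed(image, P,
                 E=rng.normal(size=(P * P * C, D)) * 0.02,
                 cls_token=np.zeros((1, D)),
                 pos_embed=rng.normal(size=(N + 1, D)) * 0.02)
print(z0.shape)                               # (197, 384)
```

The leading row is the class token, so the encoder receives $N + 1 = 197$ tokens for a $224 \times 224$ input with $16 \times 16$ patches.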

These patch embeddings are then fed into the Transformer encoder, where the self-attention mechanism evaluates interactions between patches and learns global features. The core operation of self-attention is scaled dot-product attention using query $Q$, key $K$, and value $V$ vectors, and is defined as shown in Equation (5).

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V \tag{5}$$

where $d_k$ denotes the dimension of the key vectors. The scaling factor prevents the dot products from becoming excessively large, facilitating stable gradient propagation. ViT employs multi-head attention, which performs this attention operation multiple times in parallel, allowing the model to attend to information from different representation subspaces. Each attention head is computed independently as shown in Equation (6), and the outputs from all heads are then concatenated and projected. Multi-head attention with $h$ heads is defined as shown in Equation (7).

$$\mathrm{head}_i = \mathrm{Attention}\!\left(Q W_i^{Q},\; K W_i^{K},\; V W_i^{V}\right) \tag{6}$$

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^{O} \tag{7}$$

where $W_i^{Q}$, $W_i^{K}$, $W_i^{V}$ are learnable projection matrices for each head, and $W^{O}$ is the projection matrix that combines outputs from all heads. Each Transformer encoder block consists of multi-head self-attention and a feed-forward network, incorporating layer normalization and residual connections. The computation of the intermediate representation $z'_{\ell}$ is shown in Equation (8), and the final output of the $\ell$-th encoder block $z_{\ell}$ is defined as shown in Equation (9).

$$z'_{\ell} = \mathrm{MSA}\!\left(\mathrm{LN}(z_{\ell - 1})\right) + z_{\ell - 1} \tag{8}$$

$$z_{\ell} = \mathrm{MLP}\!\left(\mathrm{LN}(z'_{\ell})\right) + z'_{\ell} \tag{9}$$

where MSA, LN, MLP, and $z_{\ell}$ represent multi-head self-attention, layer normalization, multi-layer perceptron, and the output of the $\ell$-th block, respectively.
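The attention computations in Equations (5)–(7) can be illustrated with a short NumPy sketch. The token count, embedding size, and random weights below are hypothetical and deliberately small; this is not the pre-trained ViT used in the study, and layer normalization and the MLP of Equations (8) and (9) are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(Q, K, V):
    """Scaled dot-product attention, Equation (5)."""
    d_k = K.shape[-1]
    return softmax(Q @ K.T / np.sqrt(d_k)) @ V

def multi_head(X, Wq, Wk, Wv, Wo):
    """Multi-head self-attention, Equations (6)-(7); Wq, Wk, Wv are lists with one matrix per head."""
    heads = [attention(X @ q, X @ k, X @ v) for q, k, v in zip(Wq, Wk, Wv)]
    return np.concatenate(heads, axis=-1) @ Wo

rng = np.random.default_rng(0)
tokens, D, h = 5, 8, 2                        # toy sizes, for illustration only
d_k = D // h
X = rng.normal(size=(tokens, D))
Wq = [rng.normal(size=(D, d_k)) for _ in range(h)]
Wk = [rng.normal(size=(D, d_k)) for _ in range(h)]
Wv = [rng.normal(size=(D, d_k)) for _ in range(h)]
Wo = rng.normal(size=(h * d_k, D))
print(multi_head(X, Wq, Wk, Wv, Wo).shape)    # (5, 8)
```

Every output token is a weighted combination of all value vectors, which is what lets each patch attend to every other patch from the first layer onward.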

In this study, we utilize scalograms transformed through CWT as input images for ViT. Financial time series data contains complex time–frequency information beyond simple time series values, and scalograms can quantitatively and intuitively represent this information in two-dimensional image format. Since ViT demonstrates strength in capturing global contextual information, it can effectively learn relationships between visual characteristics conveyed through scalograms and complex volatility patterns in financial data.

For implementation, we employed the vit_tiny_patch16_224 pre-trained model from the timm library as a feature extractor [46]. Input images have a size of $224 \times 224$ pixels and are divided into a $14 \times 14$ grid of patches with a patch size of $16 \times 16$. Each patch is linearly transformed into a 384-dimensional embedding vector, and learnable 384-dimensional positional encoding is added. The Transformer encoder consists of 12 encoder blocks, each with 6 multi-head attention heads, and the MLP internal dimension is set to 1536, which is 4 times 384. The classification head is removed to purely extract token features. The extracted patch token sequence is then passed to an LSTM module to learn temporal dependencies.

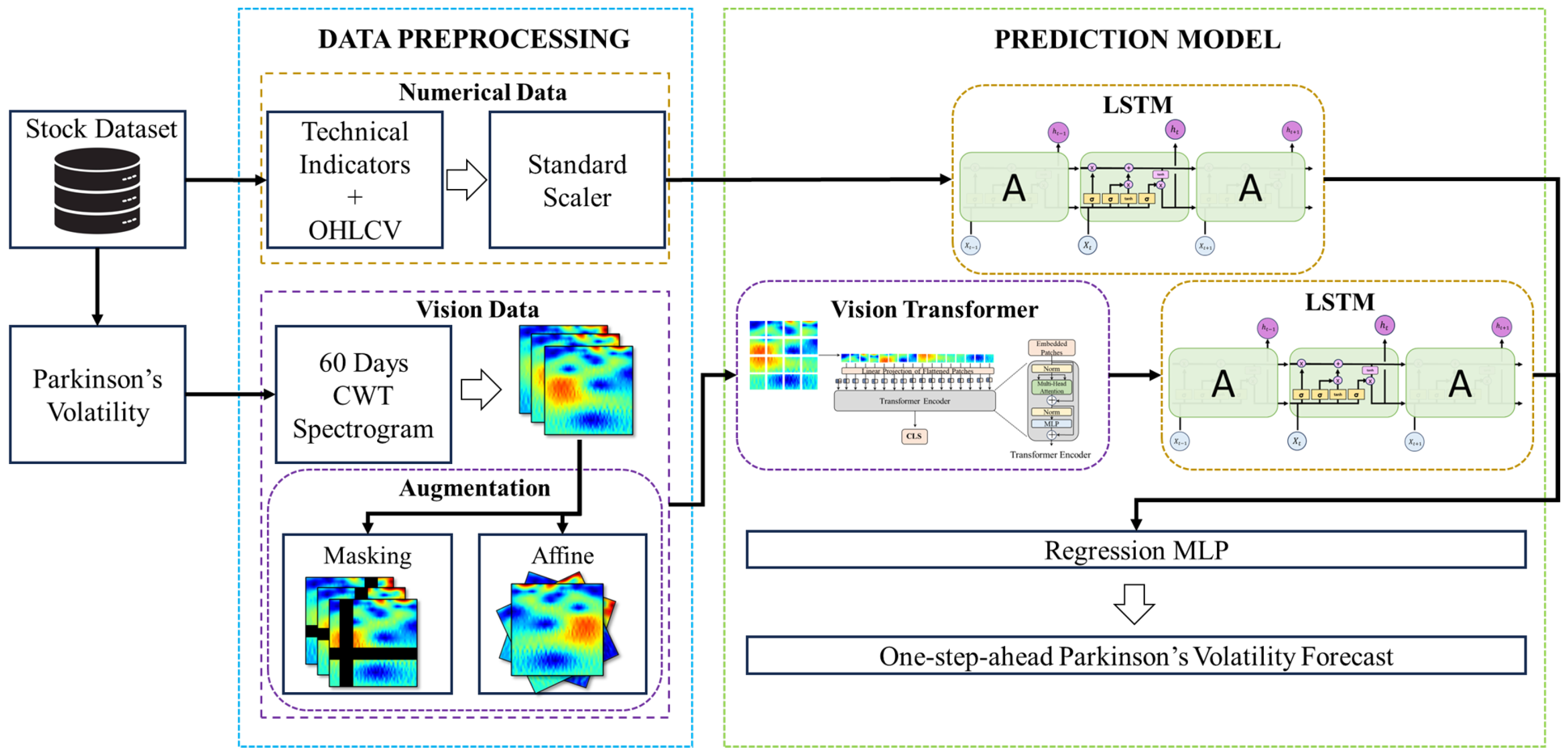

3.3. Proposed TF-ViTNet for Volatility Prediction

This study proposes the Time–Frequency Vision Transformer Network (TF-ViTNet), a dual-path hybrid model for volatility prediction. TF-ViTNet simultaneously learns visual features from scalogram images and temporal features from numerical data to capture complex patterns inherent in financial time series from multiple perspectives. The proposed model consists of two parallel pathways: a ViT-based refinement path that analyzes image-based visual patterns and an LSTM path that analyzes numerical temporal trends. The scalogram feature vector extracted by ViT and the time-series feature vector extracted by the LSTM path exist in different feature spaces. The refinement path performs a feature-space transformation, non-linearly reshaping the ViT-derived feature vector through the LSTM’s gate mechanism. Through this refinement process, the ViT feature vector is refined and aligned into an optimal form for fusion with the numerical feature vector from the LSTM path. Features independently extracted from each path are integrated in the final stage to predict Parkinson’s volatility for the next trading day.

The first path receives scalogram images generated through CWT as input. Data augmentation techniques such as masking and affine transformation are applied to the generated scalograms to improve the model's generalization performance. The core of this path is the use of a pre-trained ViT as a feature extractor. ViT effectively captures global spatial patterns and interrelationships across the entire image, and through transfer learning, applies visual feature understanding capabilities learned from large-scale image datasets to financial scalogram analysis. High-dimensional feature vectors extracted through ViT are sequentially passed to LSTM layers to enhance temporal context. The LSTM module is not intended to recover temporal information already encoded in scalograms, but rather to refine the sequential structure of ViT patch embeddings. Since ViT processes patches as an unordered token sequence with position embeddings, the additional LSTM layer allows the model to learn smoother temporal transitions across patches and align the extracted visual features with the temporal dynamics learned in the numerical path.

The second path directly receives multivariate time series data, including OHLCV data, Parkinson’s volatility from previous time points, and traditional technical indicators such as RSI and MACD. This path uses a standard LSTM network to effectively handle the non-stationarity and long-term dependencies inherent in financial data. LSTM learns long-term trends and temporal dependencies inherent in numerical data by selectively remembering important information from the past and reflecting it in current predictions.

The two feature vectors generated at the final stage of each path are combined into a single high-dimensional vector through concatenation. This integrated vector contains rich information reflecting both visual patterns and quantitative trends of the market, and is ultimately passed to a multi-layer perceptron that performs regression. This multi-layer perceptron is a fully connected neural network containing one or more hidden layers, and maps the nonlinear relationships in the features integrated from both paths to the final prediction target of Parkinson's volatility for the next trading day. The overall structure of the model is shown in Figure 2.

The overall data processing, training, and evaluation pipeline of the volatility prediction model proposed in this study is presented in Algorithm 1. This process consists of four stages: (1) a preprocessing stage that generates numerical sequences and scalogram images from raw time series data, (2) an optimal hyperparameter search stage using Optuna [47], (3) a training stage for the dual-path model with optimized hyperparameters, and (4) a stage that evaluates the performance of the final model.

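The main notation used in the algorithm is as follows: $\mathcal{D}$, $L$, $X$, $I$, $y$, $\theta$, and $\lambda$ represent raw time series data, sequence length, technical indicator sequences, CWT scalogram images, target volatility values, model parameters, and hyperparameter set, respectively.

| Algorithm 1 Proposed Volatility Prediction Algorithm |
| --- |
| Require: Raw time series data $\mathcal{D}$, hyperparameter search space $\Lambda$, sequence length $L$ |
| Ensure: Final model parameters $\theta^{*}$, performance metrics $M$ |
| 1: Compute the Parkinson's volatility series from $\mathcal{D}$ |
| 2: Compute the technical indicators from $\mathcal{D}$ |
| 3: for each day $t$ in available period do |
| 4: $X_t \leftarrow$ technical indicator sequence over the last $L$ days |
| 5: $I_t \leftarrow$ CWT scalogram of the last $L$ days of volatility |
| 6: $y_t \leftarrow$ volatility of the next trading day |
| 7: end for |
| 8: Split the samples $(X, I, y)$ into training, validation, and test sets |
| 9: $\lambda^{*} \leftarrow$ best hyperparameter set found in $\Lambda$ ▹ using Optuna (TPE Sampler) |
| 10: Initialize model parameters $\theta$ with hyperparameters $\lambda^{*}$ |
| 11: for each epoch do |
| 12: for mini-batch $(X_b, I_b, y_b)$ do ▹ Dual-path forward pass |
| 13: $h_{img} \leftarrow \mathrm{LSTM}(\mathrm{ViT}(I_b))$ |
| 14: $h_{num} \leftarrow \mathrm{LSTM}(X_b)$ |
| 15: $\hat{y}_b \leftarrow \mathrm{MLP}([h_{img}; h_{num}])$ |
| 16: $\mathcal{L} \leftarrow \mathrm{HuberLoss}(\hat{y}_b, y_b)$ |
| 17: Update $\theta$ with Adam |
| 18: end for |
| 19: if EarlyStopping on validation loss then $\theta^{*} \leftarrow \theta$; break |
| 20: end if |
| 21: end for |
| 22: $\hat{y} \leftarrow$ predictions of the model with parameters $\theta^{*}$ on the test set |
| 23: $M \leftarrow$ evaluation of $\hat{y}$ against $y$ ▹ MSE, RMSE, R2 |
| 24: return $\theta^{*}$, $M$ |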

To fully exploit the potential of this complex structure, various hyperparameters related to the model’s learning process, structural complexity, and generalization performance must be carefully optimized. In this study, we aimed to efficiently discover the optimal combination using Optuna, an automated hyperparameter search tool based on Bayesian optimization.

To enhance the learning stability and robustness of the model, we adopted Huber Loss as the loss function [48]. Huber Loss is a hybrid loss function that behaves like mean squared error when prediction errors are smaller than a certain threshold and like mean absolute error when errors exceed the threshold. Due to this characteristic, it converges stably through the smooth gradient of mean squared error in typical situations. However, it reacts less sensitively to large prediction errors caused by sudden market volatility, similar to mean absolute error, preventing the model from being excessively influenced by outliers. In particular, instability in the training process of Vision Transformer-based models is known as a major problem that impairs accuracy, and this instability can be difficult to detect as it manifests as subtle performance degradation rather than complete training failure [49]. Therefore, adopting outlier-robust Huber Loss is an effective strategy for stable model training. As the optimization algorithm, we used the Adam optimizer, which induces fast and stable convergence through adaptive learning rates, and is a commonly used choice for training ViT architectures [49,50].
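The piecewise behavior of Huber Loss described above can be written out directly. The sketch below is a plain-Python illustration; the threshold `delta = 1.0` is an assumption (it matches the default of PyTorch's `HuberLoss`), since the threshold used in this study is not stated in this section.

```python
def huber_loss(y_true, y_pred, delta=1.0):
    """Mean Huber loss: quadratic for |error| <= delta, linear beyond it."""
    total = 0.0
    for yt, yp in zip(y_true, y_pred):
        err = abs(yt - yp)
        if err <= delta:
            total += 0.5 * err ** 2              # MSE-like region
        else:
            total += delta * (err - 0.5 * delta)  # MAE-like region
    return total / len(y_true)

print(huber_loss([0.0], [0.5]))   # 0.125 (quadratic branch)
print(huber_loss([0.0], [3.0]))   # 2.5   (linear branch)
```

An error of 3.0 contributes 2.5 rather than the 4.5 it would under a halved squared error, which is the reduced sensitivity to large errors that the paragraph refers to.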

The main hyperparameters subject to search are directly related to the model's learning process, structure, expressiveness, and generalization performance. To control the learning process, we adjusted the optimizer's learning rate and mini-batch size. As variables determining the model's structure, we searched over the Vision Transformer's feature vector dimension and the hidden layer size and number of layers of the LSTM that processes image features. Finally, to suppress overfitting and improve generalization performance, we optimized the dropout ratio and weight decay coefficient. The specific search space for each hyperparameter is summarized in Section 4.1.

The hyperparameter search space in this study was designed to precisely explore the balance between the model's learning stability, expressiveness, and generalization performance. To achieve this, we included not only general learning-related variables such as learning rate and batch size, but also structural elements such as ViT Output Dimension in the search space. This is to directly explore the impact of model structure on task-specific performance and find the most suitable model capacity for the data [51]. The search ranges for core hyperparameters, learning rate and batch size, were set based on empirically proven ranges from previous studies on ViT model training [52]. The wide learning rate range in log scale helps efficiently explore stable convergence points, and the batch size was configured to balance the accuracy of gradient estimation and computational efficiency. Additionally, we included weight decay and dropout as search variables to control overfitting. Weight decay limits the magnitude of weights, and dropout prevents unnecessary synchronization between neurons to enhance model robustness [53]. Since the optimal regularization strength in modern architectures such as ViT heavily depends on data and model complexity [54], this study aimed to identify the optimal combination through automated search rather than simply adopting universal values [55].

3.4. Evaluation Metrics

To comprehensively evaluate the regression performance of the model, we adopted four metrics: Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Percentage Error (MAPE), and the coefficient of determination ($R^{2}$), which are defined as shown in Equations (10a)–(10d), where $y_i$, $\hat{y}_i$, $\bar{y}$, and $n$ represent the actual value, predicted value, mean of actual values, and total number of samples, respectively.

$$\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^{2} \tag{10a}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^{2}} \tag{10b}$$

$$\mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right| \tag{10c}$$

$$R^{2} = 1 - \frac{\sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^{2}}{\sum_{i=1}^{n} \left(y_i - \bar{y}\right)^{2}} \tag{10d}$$

MSE calculates the average of squared differences between actual and predicted values, imposing larger penalties for greater errors. RMSE, computed as the square root of MSE, shares the same unit as the actual values, enabling intuitive interpretation of prediction errors. MAPE expresses prediction accuracy as the average percentage difference between actual and predicted values, allowing for scale-independent comparison across datasets. The coefficient of determination $R^{2}$ indicates the proportion of variance in the actual values explained by the model's predictions relative to the mean.
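For reference, the four metrics can be computed in a few lines of plain Python. This is a minimal sketch with a hypothetical function name and toy inputs; it assumes all actual values are nonzero (as volatility values are), since MAPE divides by them.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAPE (in percent), and R^2 for paired actual/predicted values."""
    n = len(y_true)
    mean_y = sum(y_true) / n
    sse = sum((yt - yp) ** 2 for yt, yp in zip(y_true, y_pred))
    sst = sum((yt - mean_y) ** 2 for yt in y_true)
    mse = sse / n
    mape = 100.0 / n * sum(abs((yt - yp) / yt) for yt, yp in zip(y_true, y_pred))
    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAPE": mape, "R2": 1.0 - sse / sst}

# Toy values: predicting the mean of the actual values gives R^2 = 0.
print(regression_metrics([1.0, 2.0, 3.0, 4.0], [2.5, 2.5, 2.5, 2.5]))
```

A model that always predicts the sample mean scores $R^{2} = 0$, so positive values indicate an improvement over that constant forecast.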

These evaluation metrics serve not only to measure model performance but also to validate the practical improvement in predictive power of the proposed model compared to benchmark models using previous-day volatility as predictions. Additionally, these metrics were utilized as criteria for finding the optimal parameter combination during hyperparameter optimization with Optuna and as the basis for early stopping to prevent overfitting and select the optimal model.

4. Experiments and Data

This section empirically validates the volatility prediction performance of the proposed TF-ViTNet model and compares it quantitatively with various benchmark models. The experiments are conducted on S&P 500 and NASDAQ index data in a walk-forward manner, with test years running from 2010 to 2024. Specifically, for each test year t, the model draws on the ten years preceding t: it is trained on the first eight (e.g., 2000–2007 for test year 2010), validated on the following two (e.g., 2008–2009), and then tested on year t. This rolling evaluation continues annually through 2024, so that test years do not overlap one another and no test year overlaps its own training or validation data. This approach strictly evaluates the model's generalization performance through sequential validation that predicts the future from past data, similar to real financial market environments. All benchmark models undergo hyperparameter optimization using Optuna for fair evaluation. Prediction performance is comprehensively assessed based on the MSE, RMSE, and $R^{2}$ metrics. The following subsections detail the configuration of benchmark models and provide comparative analysis of prediction results over the entire period.
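The walk-forward schedule can be enumerated with a small generator. This is a sketch under the assumption, taken from the 2010 example in the text, that each ten-year window splits into eight training years followed by two validation years; the function and key names are hypothetical.

```python
def walk_forward_splits(first_test=2010, last_test=2024, train_years=8, val_years=2):
    """Yield inclusive (start, end) year ranges for training and validation, plus the test year."""
    for t in range(first_test, last_test + 1):
        val_start = t - val_years
        train_start = val_start - train_years
        yield {
            "train": (train_start, val_start - 1),
            "val": (val_start, t - 1),
            "test": t,
        }

for split in walk_forward_splits():
    print(split)
# First split: {'train': (2000, 2007), 'val': (2008, 2009), 'test': 2010}
```

The window advances by one year per iteration, giving 15 test years in total, each preceded by its own training and validation data.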

4.1. Experiments

To objectively evaluate the prediction performance of the proposed TF-ViTNet model, we conduct comparative analysis with various benchmark models. The benchmark models range from simple baselines to sophisticated hybrid architectures that utilize both image and numerical data. Table 1 summarizes the configuration of each benchmark model.

To ensure a fair comparison, all deep learning models (the proposed TF-ViTNet and all baseline models) were trained and optimized under a unified hyperparameter configuration, as summarized in Table 2. All models were trained for up to 200 epochs using the Adam optimizer and HuberLoss. We employed an Early Stopping patience of 10 and Gradient Clipping with a max_norm of 1.0 to ensure stable convergence. A ReduceLROnPlateau scheduler was also employed for adaptive learning rate adjustment. Key hyperparameters such as Batch Size, Learning Rate, Dropout Rate, and Weight Decay, along with specific architectural parameters (e.g., hidden sizes), were tuned using Optuna, with the search spaces defined in Table 2.

Baseline Models

To establish the lower bound of prediction performance, we introduce a Naive Benchmark that assumes the previous day's volatility persists to the current day. This persistence model ($\hat{\sigma}_{t} = \sigma_{t-1}$) reflects the fundamental characteristic of volatility clustering, where high volatility tends to be followed by high volatility in financial markets.

In addition to the Naive Benchmark, we include a Random Walk model, a widely used reference model in volatility studies [56]. The Random Walk assumes that daily volatility follows the previous value plus Gaussian innovation and is defined as shown in Equation (11).

$$\hat{\sigma}_{t} = \sigma_{t-1} + \varepsilon_{t}, \qquad \varepsilon_{t} \sim \mathcal{N}\!\left(0, \sigma_{\varepsilon}^{2}\right) \tag{11}$$

where $\sigma_{\varepsilon}$ is the empirical standard deviation of daily volatility changes. This baseline captures both volatility persistence and inherent randomness observed in financial data, and serves as a lower bound that forecasting models are expected to outperform.
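Both simple baselines can be sketched in plain Python as follows. The function names and the toy series are hypothetical, the sample standard deviation is an assumed choice for the noise scale, and for brevity the scale is estimated from the same series being forecast, whereas a walk-forward evaluation would estimate it on the training window only.

```python
import random
import statistics

def naive_forecast(vol):
    """Persistence: the forecast for day t is the observed volatility on day t-1."""
    return vol[:-1]

def random_walk_forecast(vol, seed=0):
    """Previous value plus Gaussian noise scaled by the std of daily volatility changes."""
    rng = random.Random(seed)
    changes = [b - a for a, b in zip(vol[:-1], vol[1:])]
    sigma_eps = statistics.stdev(changes)
    return [v + rng.gauss(0.0, sigma_eps) for v in vol[:-1]]

# Toy volatility series; both forecasts align with the targets vol[1:].
vol = [0.010, 0.012, 0.011, 0.015, 0.014, 0.013]
print(naive_forecast(vol))
print(random_walk_forecast(vol))
```

Since the random walk only adds zero-mean noise to the persistence forecast, its expected squared error is larger than that of the Naive Benchmark by the noise variance.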

We also establish an LSTM-only model using 23 technical indicators to assess how well purely quantitative approaches without scalogram images can predict volatility. The model receives a 60-day sequence of technical indicators with shape $(60, 23)$ as input. A single LSTM layer with 256 hidden units processes this sequence and outputs the hidden state vector at the final time step. This vector passes through a 256-dimensional fully connected layer, then through a single-neuron regression head to predict next-day volatility.

Hybrid Models with Integrated Inputs

These models integrate image features at each time step of the time series by extracting a single feature vector from the scalogram image and repeatedly combining it with numerical data at all 60 time steps.

The CNN feature extractor follows a standard VGGNet-style CNN architecture [57]. The input image passes through four consecutive convolutional blocks, each consisting of two Conv2d layers with $3 \times 3$ kernels, ReLU activation functions, and a $2 \times 2$ MaxPool2d layer for dimensionality reduction. The number of channels progressively increases as 32, 64, 128, and 256, effectively extracting hierarchical visual features from the image. The feature map is then standardized to a fixed spatial size through AdaptiveAvgPool2d and flattened into a one-dimensional vector. This vector passes through two fully connected layers (1024 and 256 dimensions), with 50% Dropout applied between them to prevent overfitting.

The ViT feature extractor is based on the pre-trained vit_tiny_patch16_224 model from the timm library [46]. We remove the classification head and pass the CLS token through a Projection layer to generate the final image feature vector. The extracted feature vector is then combined with all 60 time steps of the numerical data sequence, creating an expanded sequence that is fed into the LSTM network. This allows image information to continuously influence the model's learning of temporal patterns.

Hybrid Model with Parallel Processing

In contrast to the previous approach, these models process image and numerical data through independent pathways and then combine the results. This dual-path architecture generates embeddings optimized for each data type before final integration.

The proposed TF-ViTNet and its direct comparison target TF-CNet are both based on this parallel processing structure. Both models share the same LSTM structure in the numerical path but differ in the image path feature extractor. TF-ViTNet uses Vision Transformer to learn global patterns of scalograms, while TF-CNet uses CNN to extract local features. Performance comparison of these two models reveals how the feature extraction methods of ViT and CNN affect volatility prediction within the same parallel architecture.

The image path generates a single feature vector through CNN or ViT, treats it as input with sequence length 1, and passes it through a dedicated LSTM layer to capture temporal context. The numerical path processes 60 days of numerical data through the same structure as the Baseline LSTM model to generate a 256-dimensional embedding. This 60-day length was chosen based on a medium-term perspective; 60 trading days, corresponding to approximately three months or one financial quarter, is a period widely used to filter out short-term daily noise and capture significant medium-term momentum and volatility clustering phenomena. In the final stage, both embeddings (256 dimensions each) are concatenated into a 512-dimensional integrated vector, which is fed into a single-neuron fully connected layer for final prediction. This dual-path structure was originally proposed for video analysis to separately learn spatial and temporal information [58], but is effectively applied in this study to process data with different characteristics.

Econometric Grounding

We incorporate three standard volatility forecasting models for comparative analysis: the GARCH(1,1) model [59], the Heterogeneous Autoregressive model of Realized Volatility (HAR-RV) [9], and the Realized GARCH model [8]. These models are specifically chosen to benchmark our proposed model (TF-ViTNet) and other deep learning baselines against established econometric methods.

To ensure a fair and direct comparison with our proposed model, all econometric baselines were evaluated using the identical walk-forward validation scheme: a rolling 10-year training window was used to forecast the next single day’s volatility. All models share the same prediction target, Parkinson’s volatility. The implementation details are as follows:

GARCH(1,1): This model captures volatility persistence using the lagged squared log-return and the lagged conditional variance. As its natural output (conditional variance) is not on the same scale as the Parkinson’s volatility target, the one-step-ahead prediction is linearly scaled to match the mean and standard deviation of the target series in the training set.

HAR-RV: This model is specifically designed to capture the long-memory property of realized volatility. Following [9], it regresses the current Parkinson’s volatility directly on its average over the previous day, week (5 days), and month (22 days). As it is fitted directly to the target series, no scaling is required.

Realized GARCH: This model [8] extends the GARCH framework by incorporating the realized volatility measure directly as an external regressor. Similar to GARCH(1,1), its variance prediction is linearly scaled to match the target series for accurate comparison.
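The linear scaling applied to the GARCH-type forecasts can be sketched as a mean and standard deviation match. This is only an illustration under stated assumptions: the text does not specify whether the raw statistics come from in-sample fitted variances or whether the variance or its square root is scaled, so the helper `fit_linear_scaler` and its inputs are hypothetical.

```python
import statistics


def fit_linear_scaler(raw_train, target_train):
    """Return a function mapping raw model outputs onto the target's mean and std.

    Assumption: raw_train holds in-sample model outputs over the training window.
    """
    mu_raw = statistics.fmean(raw_train)
    sd_raw = statistics.pstdev(raw_train)
    mu_target = statistics.fmean(target_train)
    sd_target = statistics.pstdev(target_train)
    if sd_raw == 0:
        raise ValueError("raw training outputs have zero variance")

    def scale(value):
        return mu_target + (value - mu_raw) * sd_target / sd_raw

    return scale


# Toy numbers, not taken from the study.
scale = fit_linear_scaler([1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0])
scaled_forecast = scale(2.5)
```

Because the scaler is fitted on the training window only, the one-step-ahead forecast is rescaled without using any information from the test period.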

The performance of these econometric models is presented alongside our deep learning models in Section 5.1, providing a comprehensive benchmark for evaluating the contributions of the proposed architectures.
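For the HAR-RV baseline described above, the three regressors for a given day can be sketched as lagged averages of the target series. The function name `har_features` is hypothetical, and the subsequent least-squares fit (an intercept plus three coefficients) is omitted.

```python
def har_features(series, t):
    """Return (daily, weekly, monthly) lagged averages used to predict series[t].

    Only observations strictly before index t are used.
    """
    if t < 22:
        raise ValueError("need at least 22 past observations")
    daily = series[t - 1]
    weekly = sum(series[t - 5:t]) / 5
    monthly = sum(series[t - 22:t]) / 22
    return daily, weekly, monthly


# Toy series 0, 1, 2, ... so the averages are easy to check by hand.
toy_series = [float(i) for i in range(30)]
daily, weekly, monthly = har_features(toy_series, 22)
print(daily, weekly, monthly)  # 21.0 19.0 10.5
```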

4.2. Data

This study uses daily market data from the NASDAQ Composite and S&P 500 indices spanning from 2000 to 2024. The dataset includes open, high, low, close prices (OHLC) and trading volume. We select these two major U.S. indices to capture different market characteristics: NASDAQ represents technology-focused stocks with higher volatility, while S&P 500 represents a diversified portfolio across various sectors with more stable movements. Although the evaluation period begins in 2010, data from 2000 are used to ensure sufficient historical context for model training in the walk-forward design. The data undergo preprocessing, including missing value handling, outlier removal, and normalization, before model training.

Financial time series contain irregular noise and nonlinear characteristics that make it difficult to clearly identify market trends from raw data alone. To address this limitation, technical indicators are widely used to quantify latent market patterns through statistical processing of historical data [60]. These indicators transform the original time series into multidimensional feature vectors, enabling learning models to more effectively capture complex relationships inherent in the data. We categorize indicators into four groups to comprehensively capture various market aspects: trend, momentum, volatility, and volume.

Momentum indicators measure the speed and strength of price changes to diagnose overbought or oversold market conditions. The Relative Strength Index (RSI) predicts major market turning points based on the relative strength of price gains versus losses [61]. The Stochastic Oscillator measures momentum by evaluating the relative position of the current close within the price range over a specific period [62]. Williams %R operates on a similar principle. The Moving Average Convergence Divergence (MACD) is a representative trend-following momentum indicator that simultaneously shows trend strength and direction through the relationship between two exponential moving averages [63].

Volatility indicators measure the amplitude or degree of dispersion in asset prices. Bollinger Bands form standard deviation bands around a moving average to visually represent relative levels of price volatility [64]. Average True Range (ATR) quantifies market volatility by measuring the average range of daily price movements [61]. Parkinson’s volatility, the core prediction target of this study, is also an important volatility measure that utilizes intraday high and low prices.

Volume indicators combine volume information with price to assess trend reliability. Volume-Weighted Average Price (VWAP) calculates the average execution price over a specific period using volume as weights, reflecting market energy. All calculated indicators constitute a multivariate time series dataset used as input to the LSTM network.

Table 3 presents the formulas for all technical indicators grouped by category, and

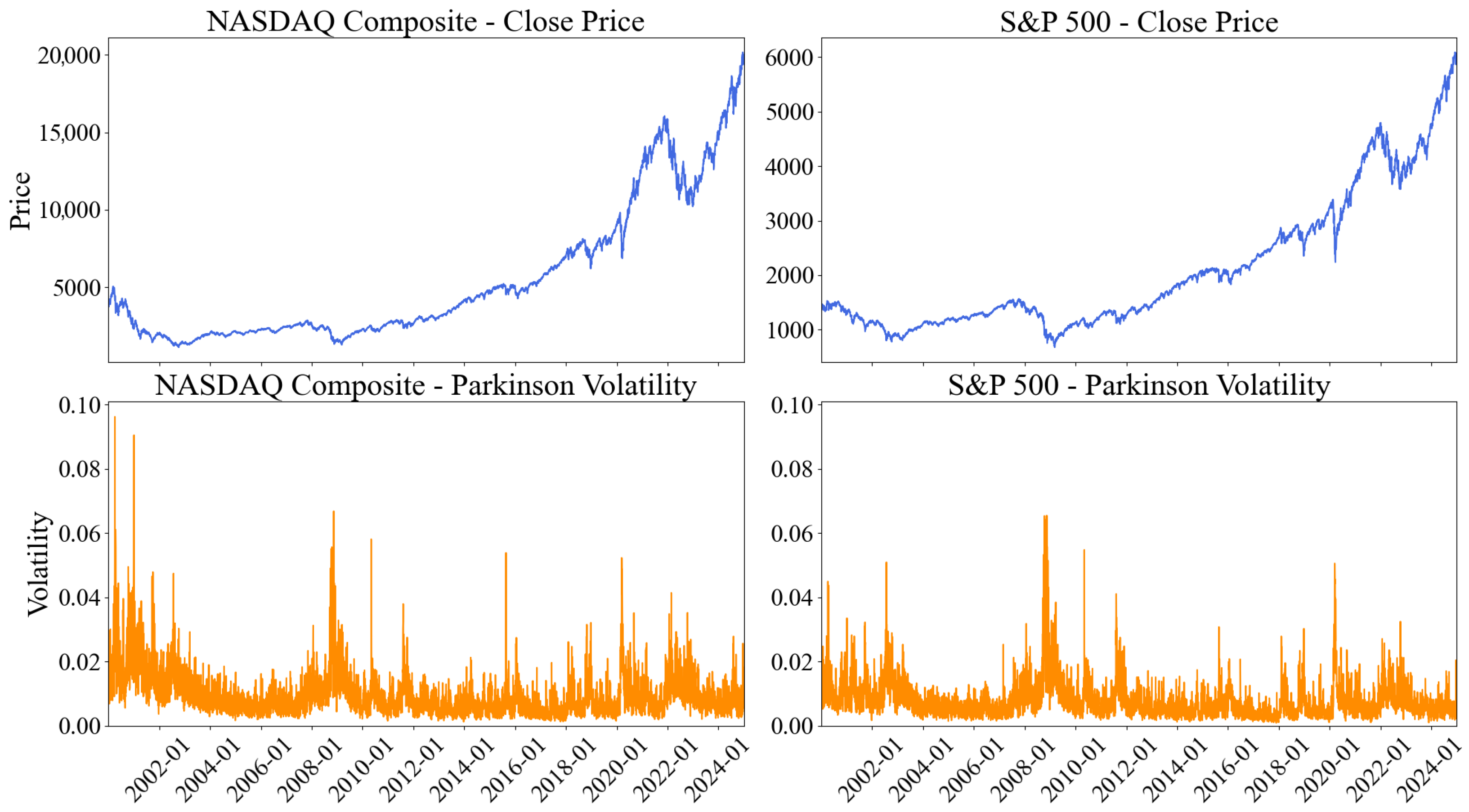

Figure 3 shows the time series evolution of closing prices and Parkinson’s volatility for both indices.

Analysis of NASDAQ and S&P 500 index data from 2000 to 2024 reveals that both markets recorded steady long-term growth but with distinct characteristics.

The two markets show RSI averages of 54.27 and 54.06, indicating mild buying pressure above 50, while ADX averages of 23.18 and 22.74 suggest clear trending behavior. However, the technology-focused NASDAQ exhibits significantly higher dispersion, with a ratio of closing-price standard deviation to mean closing price of 0.84, together with higher long-term returns. In contrast, the diversified S&P 500 shows a ratio of 0.60, indicating relatively lower volatility and more stable movement with gradual upward momentum.

Table 4 and Table 5 provide comprehensive descriptive statistics for all indicators, including measures of central tendency, dispersion, distribution shape, and stationarity tests.

This study adopts walk-forward cross-validation to preserve temporal dependencies in time series data. Unlike standard k-fold cross-validation, this approach ensures that training data always temporally precedes validation and test data, preventing look-ahead bias. Specifically, we train and validate the model on 10 years of data, then evaluate prediction performance on the following 1-year period, repeating this process across the entire period. This approach is a standard methodology for realistically estimating model performance in actual market environments [65]. To ensure technical indicators can be accurately calculated at each training start point, we acquire data from 2000, two years before the analysis period begins.
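The walk-forward schedule can be made concrete with a short sketch. It assumes calendar-year boundaries and a rolling (not expanding) 10-year window, as stated for the econometric baselines; the function name and the year-level granularity are illustrative, and the split between training and validation inside each window is not modeled.

```python
def walk_forward_splits(first_test_year=2010, last_test_year=2024, train_years=10):
    """Yield (train_start, train_end, test_year) with inclusive year bounds."""
    for test_year in range(first_test_year, last_test_year + 1):
        train_start = test_year - train_years
        train_end = test_year - 1  # training data always precedes the test year
        yield train_start, train_end, test_year


splits = list(walk_forward_splits())
for train_start, train_end, test_year in splits[:3]:
    print(f"train {train_start}-{train_end} -> test {test_year}")
# train 2000-2009 -> test 2010
# train 2001-2010 -> test 2011
# train 2002-2011 -> test 2012
```

With these defaults the schedule produces 15 folds, one per test year from 2010 to 2024.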

Close-based volatility has an obvious limitation in that it fails to reflect intraday price movements. To address this, we adopt Parkinson’s volatility, which utilizes daily high and low prices, as the target time series signal for analysis [7]. Parkinson’s volatility explicitly incorporates intraday fluctuation ranges in its calculation, providing statistically more efficient and accurate estimation of actual volatility compared to close-based volatility. This richer capture of intraday noise and price movements inherent in financial time series provides meaningful information to the model.
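As an illustration, the single-day form of Parkinson’s range estimator is $\sigma_t = \sqrt{\ln(H_t/L_t)^2 / (4 \ln 2)}$, where $H_t$ and $L_t$ are the daily high and low. The sketch below assumes this one-day form; the exact definition used in this study is the one listed in Table 3 and may differ, for example by averaging over a window.

```python
import math


def parkinson_volatility(high, low):
    """Single-day Parkinson estimate from the daily high and low prices."""
    if low <= 0 or high < low:
        raise ValueError("expected 0 < low <= high")
    return math.log(high / low) / math.sqrt(4.0 * math.log(2.0))


# Toy prices, not taken from the dataset.
flat_day = parkinson_volatility(100.0, 100.0)
wide_day = parkinson_volatility(102.0, 100.0)
```

A day with no intraday range yields zero volatility, and the estimate grows with the log ratio of high to low.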

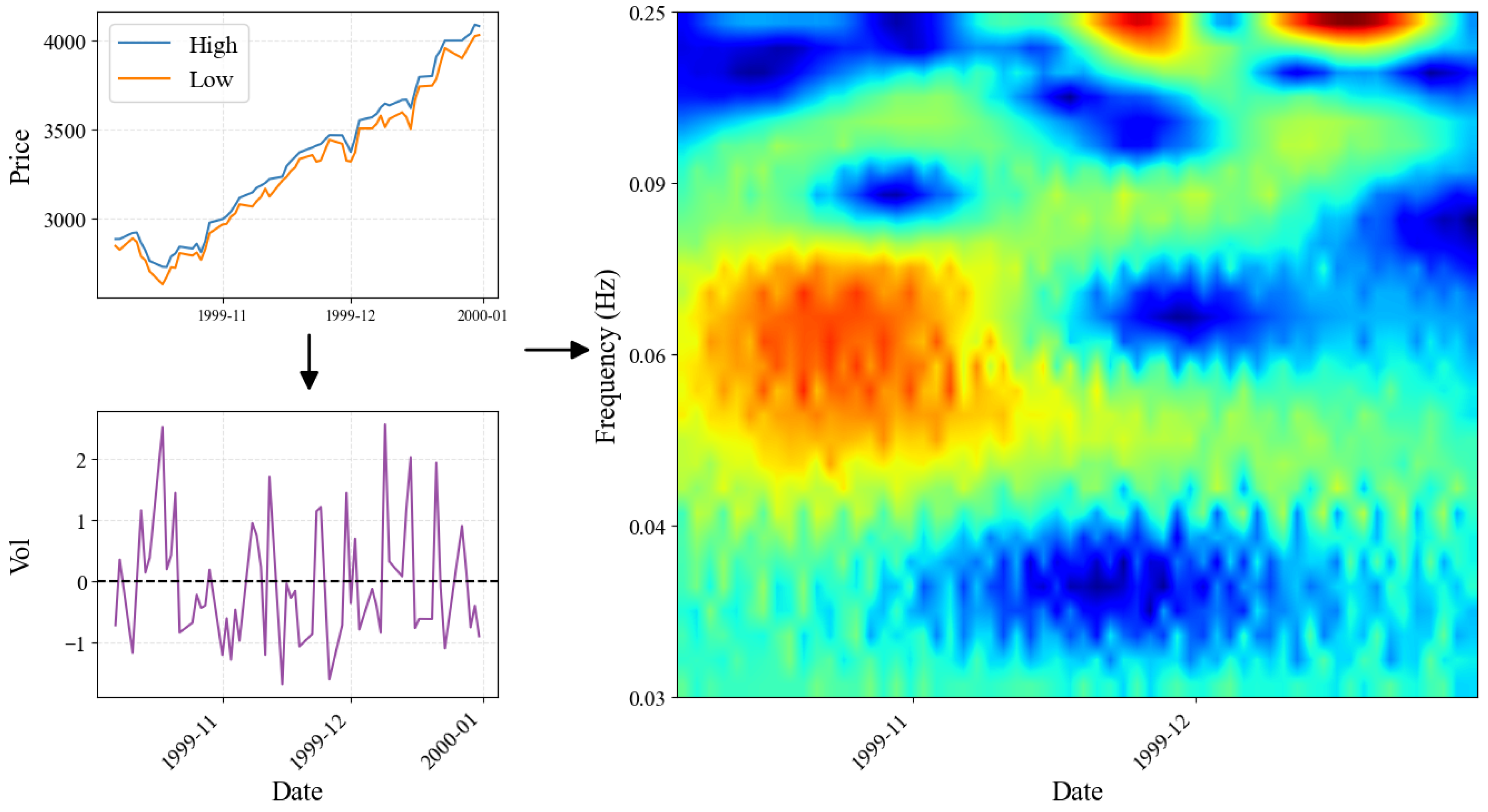

To capture the complex dynamic characteristics of financial time series, we transform the Parkinson’s volatility signal into a two-dimensional scalogram image in the time–frequency domain using CWT. The process of generating a single input image proceeds as follows. First, we extract the Parkinson’s volatility time series for the past 60 trading days from the analysis point, then normalize the signal to zero mean and unit standard deviation. We apply a wavelet (cmor1.5-1.0) with bandwidth 1.5 and center frequency 1.0 to the normalized signal. If the bandwidth is lower (e.g., B = 0.5), the image becomes too smeared along the time axis, making it difficult to identify the precise timing of volatility bursts. Conversely, if the bandwidth is higher (e.g., B = 2.5), information along the frequency axis is compressed, making it difficult to distinguish between the diverse shapes of volatility patterns. A center frequency (C) of 1.0 is used because it effectively captures the main energy of the volatility signal near the center of the scalogram. If this value is too low (e.g., C = 0.5), the image is concentrated only in the low-frequency band (bottom), and if it is too high (e.g., C = 1.5), seemingly unnecessary high-frequency noise (top) is emphasized. For multi-resolution analysis, the scale range is set from 4 to 31. The minimum scale of 4 effectively filters out high-frequency daily noise, while the maximum scale of 31 fully encompasses the crucial weekly and monthly market cycles. The scalogram is constructed by applying absolute values to the complex coefficient matrix computed through CWT, and is visualized with a jet colormap. This transformation process was carried out using Python 3.12.12 and the PyWavelets 1.9.0 open-source library [

66]. Unlike STFT which uses fixed windows, the CWT-based approach has the advantage of effectively representing both low-frequency long-term trends and high-frequency short-term volatility simultaneously. The finally generated scalogram image compactly encodes the dynamic characteristics of the original time series and is used as input data for the ViT model to learn microscopic and macroscopic patterns.
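The scalogram construction can be sketched with PyWavelets as follows. The sketch assumes integer scales 4 through 31 and uses a synthetic 60-day window in place of real Parkinson’s volatility; the jet colormap rendering and any resizing to the ViT input resolution are omitted, and the function name is hypothetical.

```python
import numpy as np
import pywt


def volatility_scalogram(window):
    """Return the |CWT| magnitude matrix (scales x time) for one 60-day window."""
    x = np.asarray(window, dtype=float)
    x = (x - x.mean()) / x.std()      # zero mean, unit standard deviation
    scales = np.arange(4, 32)         # scales 4..31 inclusive (assumed integer steps)
    coefs, _freqs = pywt.cwt(x, scales, "cmor1.5-1.0")
    return np.abs(coefs)              # magnitude of the complex coefficients


# Synthetic stand-in for 60 days of Parkinson's volatility.
rng = np.random.default_rng(0)
window = np.abs(rng.normal(0.01, 0.003, size=60))
scalogram = volatility_scalogram(window)
print(scalogram.shape)  # (28, 60)
```

Each row of the resulting matrix corresponds to one scale and each column to one trading day, so the array can be passed to a colormap and saved as an image.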

Figure 4 demonstrates the transformation process from raw price data to scalogram representation. The top panel shows the daily high and low prices, the middle panel displays the calculated Parkinson’s volatility time series with its normalized version, and the bottom panel presents the resulting CWT scalogram that serves as input to the Vision Transformer.

5. Results

5.1. Overall Forecasting Performance

We evaluate the prediction performance of TF-ViTNet against eight benchmark models across two major U.S. indices over a 15-year testing period from 2010 to 2024. The benchmark models include standard econometric models (GARCH, HAR-RV, and Realized-GARCH), a naive benchmark model, an LSTM-only model using numerical indicators, two integrated hybrid models (CNN-LSTM and ViT-LSTM) that combine image features at each time step, and a parallel hybrid model (TF-CNet) that independently processes image and numerical data.

Table 6 summarizes the overall performance metrics including $R^2$, MSE, RMSE and MAPE for all models.

The proposed TF-ViTNet achieves the highest $R^2$ values of 0.387 for NASDAQ and 0.436 for S&P 500, demonstrating superior predictive accuracy in terms of explained variance compared to all deep learning and econometric benchmark models in both markets. In terms of MSE, TF-ViTNet achieves the lowest value of all models for NASDAQ. For S&P 500, its MSE is second only to that of the HAR-RV model, though it significantly outperforms HAR-RV in $R^2$. The results for MAPE are more nuanced; while TF-ViTNet outperforms the LSTM and naive baselines, the econometric models (particularly HAR-RV) achieve lower MAPE scores. This highlights the different optimization goals of each metric, where TF-ViTNet excels at capturing overall variance ($R^2$) while HAR-RV is competitive in average percentage error. The performance gap is more pronounced in the more volatile NASDAQ market, where TF-ViTNet substantially outperforms TF-CNet, which records a negative $R^2$ of −0.095. In contrast, both parallel architectures (TF-ViTNet and TF-CNet) perform competitively in the more stable S&P 500 market.

First, the results demonstrate that the proposed hybrid approach consistently outperforms both traditional econometric models and simpler deep learning baselines in terms of $R^2$, MSE, and RMSE, although its performance in MAPE is slightly less competitive. For the NASDAQ market, TF-ViTNet significantly outperforms the best-performing econometric model, HAR-RV, and the LSTM-only baseline. This advantage is even more pronounced in the S&P 500 market, where TF-ViTNet again surpasses HAR-RV and the LSTM-only model. This suggests that the time–frequency features captured by the ViT provide substantial predictive value beyond the autoregressive components modeled by GARCH/HAR-RV and the sequential information from numerical indicators alone.

Second, hybrid models incorporating scalogram images generally show strong performance improvements over the LSTM-only baseline. For S&P 500, most hybrid models outperform the LSTM-only model ($R^2$ of 0.235). For NASDAQ, most hybrid models, such as CNN-LSTM (0.308) and ViT-LSTM (0.265), also outperform the LSTM baseline (0.223). These results suggest that transforming time series data into time–frequency domain images can provide meaningful information for volatility prediction, though performance depends critically on appropriate architecture design.

Third, the choice of image encoder and architecture structure significantly impacts performance. For the parallel structure, TF-ViTNet using ViT substantially outperforms the CNN-based TF-CNet. Specifically, TF-ViTNet achieves an $R^2$ of 0.387 compared to −0.095 for TF-CNet on NASDAQ, and 0.436 compared to 0.375 on S&P 500. In contrast, for the integrated structure, CNN-LSTM consistently outperforms ViT-LSTM in both markets. This suggests that the parallel structure, which combines two independent information streams at the final stage, creates positive synergy with ViT’s ability to capture global context in scalograms.

5.2. Performance Analysis by Period

To assess the temporal stability and robustness of model performance, we examine annual results across the 15-year testing period from 2010 to 2024. This period-by-period analysis is crucial for understanding how models perform under varying market conditions, including periods of high volatility such as the 2011 European debt crisis and the 2020 COVID-19 pandemic, as well as more stable bull market periods. Unlike aggregate metrics that may mask year-to-year fluctuations, annual performance reveals whether a model’s success stems from consistent predictive power or from exceptional performance in specific years.

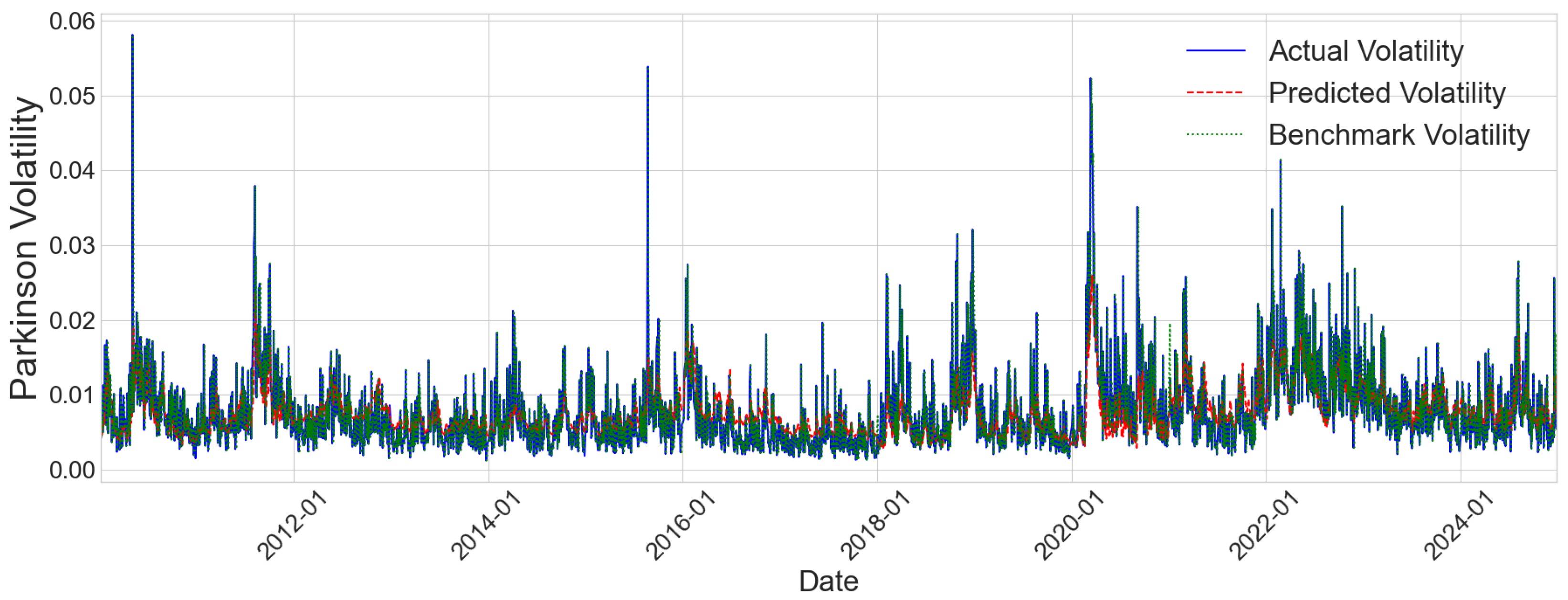

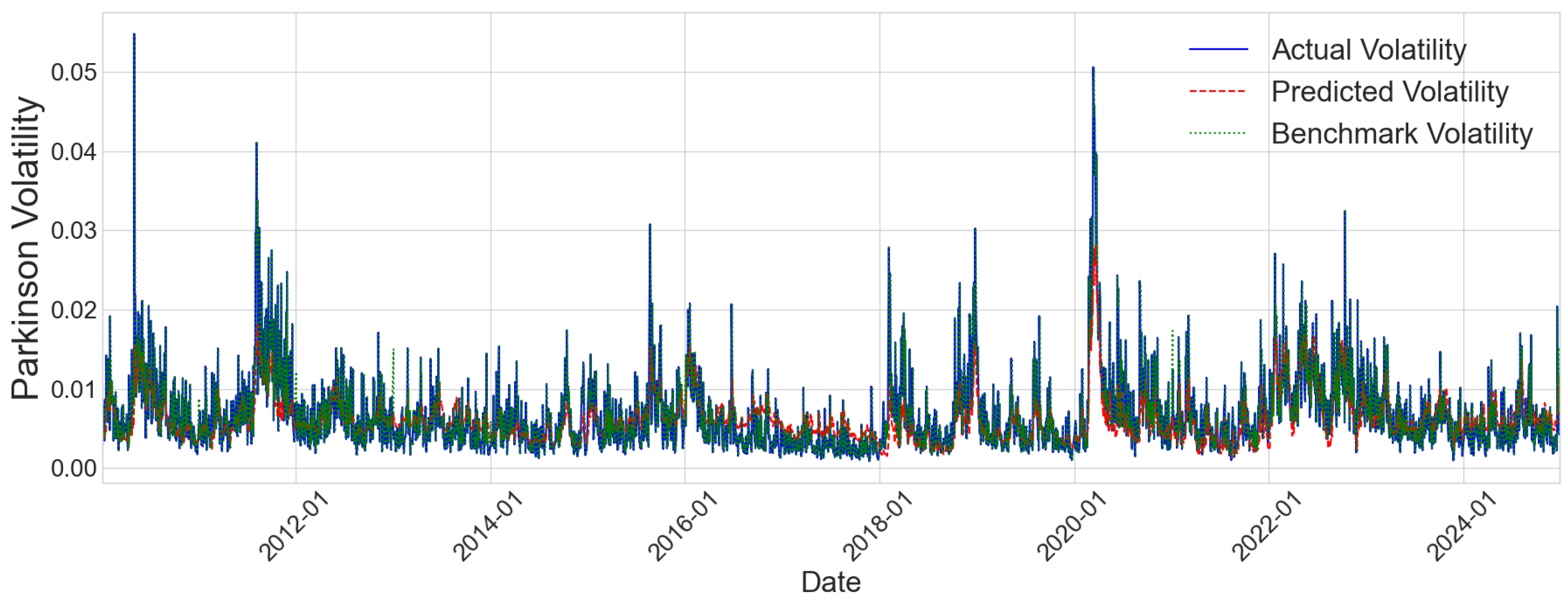

Figure 5 and Figure 6 visualize the TF-ViTNet model’s prediction performance over the entire testing period for NASDAQ and S&P 500, respectively. The time series plots show actual volatility in blue, predicted volatility in red, and naive benchmark predictions in green. Both figures demonstrate that TF-ViTNet successfully captures the general volatility dynamics, tracking major volatility spikes during crisis periods such as 2011, 2015–2016, and particularly the dramatic surge during the 2020 COVID-19 pandemic. The model shows strong alignment with actual volatility patterns during both calm and turbulent periods, though some divergence is visible during extreme volatility events where all models struggle. Notably, the predicted volatility follows the actual trend more closely than the naive benchmark across most periods, validating the effectiveness of our approach.

Table 7 and Table 8 present the annual $R^2$ values for all models on NASDAQ and S&P 500, respectively. These results are obtained without applying data augmentation to the scalogram images, isolating the effect of architecture design. The ‘Overall’ row represents the result of a single $R^2$ metric calculated on the entire dataset, which integrates all actual volatility values and the model’s predicted values accumulated over the entire test period from 2010 to 2024. Therefore, the ‘Overall’ $R^2$ serves as a comprehensive measure of the model’s long-term and cumulative predictive performance, without being excessively biased by anomalous performance or slumps in specific years.
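The difference between the annual values and the pooled ‘Overall’ value can be illustrated with a small sketch. It assumes the usual coefficient of determination, $R^2 = 1 - SS_{res}/SS_{tot}$, which is consistent with the negative values reported when a model does worse than the sample mean; the toy numbers are invented for illustration only.

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Toy (actual, predicted) pairs for two test years.
by_year = {
    2010: ([1.0, 2.0, 3.0], [1.5, 2.0, 2.5]),
    2011: ([4.0, 6.0, 8.0], [5.0, 6.0, 7.0]),
}

annual = {year: r_squared(a, p) for year, (a, p) in by_year.items()}

# Pool all observations before computing the single 'Overall' value.
pooled_actual = [v for a, _ in by_year.values() for v in a]
pooled_predicted = [v for _, p in by_year.values() for v in p]
overall = r_squared(pooled_actual, pooled_predicted)
```

In this toy case both annual values equal 0.75 while the pooled value is higher, because pooling also credits the model for tracking the difference in volatility level between years. The pooled figure is therefore not an average of the annual figures.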

The annual results reveal considerable performance variation across different market conditions and notable differences in model behavior between the two indices. For NASDAQ, TF-ViTNet achieves the highest $R^2$ in five years: 2011, 2014, 2015, 2019, and 2024. The most impressive result occurs in 2011, a period of heightened volatility associated with the European debt crisis, where TF-ViTNet records an $R^2$ of 0.434. However, the model also experiences challenging years, with 2012, 2013, and 2017 showing negative or near-zero values.

For S&P 500, the competitive landscape differs markedly. While TF-ViTNet achieves the best overall performance as shown in Table 6, it wins in only two individual years: 2012 and 2023. CNN-LSTM dominates across multiple years, particularly during the stable growth period from 2014 to 2016 and again in 2018 and 2021, achieving $R^2$ values consistently above 0.35. This suggests that CNN-based integrated architectures may have advantages in capturing volatility patterns during prolonged stable market regimes. TF-ViTNet’s strength lies in its more balanced performance across diverse conditions, avoiding the severe failures seen in some models during difficult periods.

A consistent pattern across both markets is the difficulty all models face during certain transitional or highly uncertain periods. Years 2012, 2013, 2017, and 2022 show widespread negative or low $R^2$ values across multiple models, suggesting fundamental challenges in volatility prediction during specific market regimes. The naive benchmark occasionally outperforms sophisticated models in these difficult years, particularly in 2020 for both indices, highlighting that complex architectures do not guarantee superior performance under all conditions. TF-ViTNet’s overall advantage stems from achieving competitive performance during favorable periods while maintaining relative stability during challenging years, resulting in the highest cumulative performance over the full 15-year period.

TF-ViTNet consistently demonstrates statistically significant predictive advantages over the benchmark in annual performance. This superiority is validated by a paired t-test on loss differentials (** denotes 1% and * denotes 5% significance levels). TF-ViTNet achieved high $R^2$ values across both NASDAQ (e.g., 2011, 2014, 2019, 2024) and S&P 500 (e.g., 2012, 2023), recording the highest overall $R^2$ for the S&P 500 over the 15-year period. These results indicate that TF-ViTNet captures complex volatility patterns more effectively than traditional benchmarks and other models, supporting its practical value in financial forecasting tasks.
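The paired t-test on loss differentials can be sketched as below. The choice of per-observation loss (for example, squared error) and the pairing unit are assumptions, since they are not stated here; the sketch only computes the t-statistic, and the p-value would follow from a Student-t distribution with n − 1 degrees of freedom.

```python
import math
import statistics


def paired_t_statistic(benchmark_losses, model_losses):
    """t-statistic for the mean loss differential (benchmark minus model)."""
    diffs = [b - m for b, m in zip(benchmark_losses, model_losses)]
    n = len(diffs)
    mean_diff = statistics.fmean(diffs)
    std_err = statistics.stdev(diffs) / math.sqrt(n)  # sample standard deviation
    return mean_diff / std_err


# Toy per-observation losses, not taken from the study.
benchmark_losses = [2.0, 4.0, 6.0, 8.0]
model_losses = [1.0, 2.0, 3.0, 4.0]
t_stat = paired_t_statistic(benchmark_losses, model_losses)
```

A positive statistic indicates that the benchmark incurs larger losses on average than the model.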

However, when comparing p-values, while TF-ViTNet significantly outperforms most benchmarks, its comparison with the CNN-LSTM model yields more ambiguous results. Therefore, a detailed comparison between TF-ViTNet and CNN-LSTM was conducted over 100 individual stocks, and the results of this analysis are presented in a subsequent section.

5.3. Impact of Data Augmentation

Data augmentation is a regularization technique that artificially increases training data diversity to prevent overfitting [67]. To evaluate its impact on volatility prediction performance, we compare three augmentation strategies applied to the TF-ViTNet model’s scalogram images. The ‘Affine’ strategy combines random horizontal flipping with 50% probability [67] and Color Jitter [68], which randomly adjusts brightness, contrast, and saturation within a range of 0.2 and hue within 0.1. The ‘Masking’ strategy randomly masks portions of the scalogram in frequency or time domains, inspired by SpecAugment [69]. The ‘All’ strategy combines both Affine and Masking techniques.
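The ‘Masking’ strategy can be illustrated with a dependency-free sketch that zeroes one random band along either the frequency (row) or time (column) axis. The maximum band width, the zero fill value, and the use of a single band per image are assumptions made for illustration; the settings used in this study are not stated here.

```python
import random


def mask_scalogram(image, max_width=8, rng=None):
    """Zero one random band of rows (frequency) or columns (time).

    `image` is a list of rows; a masked copy is returned and the input is untouched.
    """
    rng = rng or random.Random()
    n_rows, n_cols = len(image), len(image[0])
    masked = [row[:] for row in image]
    axis = rng.choice(["frequency", "time"])
    size = n_rows if axis == "frequency" else n_cols
    width = rng.randint(1, min(max_width, size))
    start = rng.randint(0, size - width)
    for r in range(n_rows):
        for c in range(n_cols):
            position = r if axis == "frequency" else c
            if start <= position < start + width:
                masked[r][c] = 0.0
    return masked, axis, start, width


# Toy 28 x 60 scalogram filled with ones.
image = [[1.0] * 60 for _ in range(28)]
masked, axis, start, width = mask_scalogram(image, rng=random.Random(0))
```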

Table 9 and Table 10 present the annual $R^2$ values for each augmentation strategy alongside the baseline without augmentation for NASDAQ and S&P 500, respectively.

The impact of data augmentation reveals markedly different patterns between the two markets. For NASDAQ, the model without augmentation achieves the highest overall $R^2$ of 0.387, outperforming all augmentation strategies with Affine at 0.364, Masking at 0.353, and All at 0.355. This counter-intuitive result demonstrates that data augmentation does not universally improve performance and can degrade it in certain contexts [70]. The annual breakdown shows that no augmentation wins in 7 out of 15 years, particularly during highly volatile periods such as 2011 with $R^2$ of 0.434, and 2019–2021 where it consistently achieves the best results. Notably, however, the All strategy demonstrates strong performance in specific market conditions, winning in 6 years: 2010, 2012, 2014, 2015, 2016, and 2018. This high frequency of year-level victories despite lower overall performance suggests that aggressive augmentation can be highly effective during certain market regimes but may introduce excessive regularization that degrades performance in others. The trade-off is particularly evident in 2016, where All achieves 0.347 compared to 0.200 for the baseline, versus 2020–2021, where it substantially underperforms with 0.113 and 0.075 compared to 0.354 and 0.236 without augmentation.

For S&P 500, the results strongly favor augmentation. The Affine strategy achieves the highest overall $R^2$ of 0.436, substantially surpassing the no-augmentation baseline of 0.404. Masking records 0.380 and All achieves 0.391. The superior performance of Affine is driven by consistent advantages across multiple years, achieving the best results in 2012, 2015, 2018, 2020, and tying for best in 2023. Particularly impressive is the 2020 result, where Affine achieves an $R^2$ of 0.583 compared to 0.399 without augmentation, demonstrating substantial benefits during the volatile COVID-19 period. Interestingly, while no augmentation wins in 7 years (2011, 2013, 2014, 2021, 2022, 2023, and 2024), the Affine strategy’s stronger performance in key volatile years drives its overall advantage.

These contrasting results can be attributed to fundamental differences in market characteristics. The technology-focused NASDAQ exhibits higher volatility with a standard deviation to mean ratio of 0.84, while the diversified S&P 500 shows a more stable ratio of 0.60. In volatile markets like NASDAQ, volatility patterns themselves are noisy and irregular, making it difficult for the model to learn stable representations. Adding data augmentation on top of this inherent noise creates a problem where augmentation-induced variations compound the market’s natural volatility, overwhelming the learning signal. Conversely, in the more stable S&P 500 market, cleaner underlying patterns provide a solid foundation that can withstand the additional variation introduced by augmentation. Here, augmentation acts as intended regularization, helping the model generalize without corrupting the base signal. These findings emphasize that augmentation effectiveness depends critically on the signal-to-noise ratio of the target data, with noisy data suffering from compounded distortion while clean data benefits from improved generalization.

5.4. Performance Analysis with STFT Spectrograms

This section presents a comparative analysis of the TF-ViTNet model’s performance using different input transformations for volatility prediction. Specifically, the model’s efficiency is evaluated when Short-Time Fourier Transform (STFT) spectrograms and Continuous Wavelet Transform (CWT) scalograms are applied.

Both STFT and CWT are widely utilized in financial time series for their ability to extract meaningful features from non-stationary and complex signals. STFT provides a fixed-resolution time–frequency representation that is computationally efficient, whereas CWT offers a flexible multi-resolution analysis better suited for capturing transient patterns, albeit with increased computational demands.

The evaluation was conducted by calculating annual $R^2$ metrics for the NASDAQ and S&P 500 indices under identical model settings. As shown in Table 11, the CWT-based representations generally resulted in higher $R^2$ values than STFT across both markets, indicating superior predictive accuracy. However, STFT retained advantages in computational speed, suggesting a trade-off between accuracy and efficiency. These findings emphasize the importance of selecting appropriate time–frequency transformation methods in designing deep learning models for financial volatility forecasting.

5.5. Performance by Market Regime

To formally analyze regime-specific performance, we partition the test data into “Bull” and “Bear” market regimes. Following the classical methodology of [71], a Bear market is declared after a 20% decline from a previous market peak, and a Bull market is declared after a 20% rise from a previous market trough. This allows us to evaluate whether the model’s predictive power is consistent across different long-term market trends.
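The 20% rule can be sketched as a simple state machine over closing prices. This is an illustration under assumptions: the initial regime is taken to be Bull, and each day is labeled with the regime in force once the threshold is crossed, without back-dating the switch to the preceding peak or trough as some dating procedures do.

```python
def label_regimes(prices, threshold=0.20, initial="Bull"):
    """Label each observation 'Bull' or 'Bear' using a peak/trough threshold rule."""
    regime = initial
    extreme = prices[0]  # running peak in Bull, running trough in Bear
    labels = []
    for price in prices:
        if regime == "Bull":
            extreme = max(extreme, price)
            if price <= extreme * (1 - threshold):
                regime, extreme = "Bear", price
        else:
            extreme = min(extreme, price)
            if price >= extreme * (1 + threshold):
                regime, extreme = "Bull", price
        labels.append(regime)
    return labels


# Toy price path: peak at 110, fall of more than 20%, then recovery from 85.
toy_prices = [100, 110, 95, 87, 85, 90, 103, 105]
regime_labels = label_regimes(toy_prices)
```

In the toy path the switch to Bear occurs at 87 (more than 20% below the peak of 110) and the switch back to Bull at 103 (more than 20% above the trough of 85).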

Table 12 presents the $R^2$ performance of all models, enabling a direct comparison between the proposed TF-ViTNet and multiple benchmark architectures.

For the NASDAQ index, TF-ViTNet demonstrates substantially higher predictive ability during Bear markets than during Bull markets (Table 12). A similar pattern is observed for several benchmark models, although TF-ViTNet generally achieves the highest performance across both regimes. This suggests that the time–frequency representations learned by TF-ViTNet are particularly effective at capturing the complex, nonlinear volatility structures that intensify during downturns.

For the S&P 500 index, TF-ViTNet again exhibits stronger performance in Bear markets, whereas CNN-LSTM attains slightly higher performance during Bull markets. This comparison highlights complementary strengths across models, while still showing that TF-ViTNet maintains a competitive advantage when market conditions become more turbulent.

Overall, the regime-level evaluation, which covers all benchmark models, offers a clearer understanding of where TF-ViTNet delivers marginal improvements and how model performance shifts across different market environments.

5.6. Forecasting Performance by Volatility Regime

To analyze model robustness across different volatility conditions, we partition the test data into quintiles based on actual Parkinson’s volatility values and measure Mean Absolute Error (MAE) for each quintile. This quintile-based analysis reveals how models perform in low versus high volatility regimes, which is critical for practical risk management applications where performance during extreme market conditions is particularly important.
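The quintile-based error analysis can be sketched as follows. The sketch assumes equal-count groups formed by sorting observations on the actual volatility; the handling of ties and of sample sizes not divisible by five is an illustrative choice.

```python
def mae_by_quintile(actual, predicted, n_groups=5):
    """Return MAE per group after sorting observations by actual value (Q1 lowest)."""
    order = sorted(range(len(actual)), key=lambda i: actual[i])
    n = len(order)
    result = []
    for g in range(n_groups):
        idx = order[g * n // n_groups:(g + 1) * n // n_groups]
        result.append(sum(abs(actual[i] - predicted[i]) for i in idx) / len(idx))
    return result


# Toy data: the forecast overshoots by 10%, so absolute error grows with volatility.
actual = [7.0, 1.0, 10.0, 3.0, 5.0, 2.0, 9.0, 4.0, 8.0, 6.0]
predicted = [a * 1.1 for a in actual]
quintile_mae = mae_by_quintile(actual, predicted)
```

In this toy example the error rises monotonically from Q1 to Q5, mirroring the pattern of larger errors in the highest volatility quintile.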

Table 13 presents the MAE values multiplied by 1000 for all models across the five volatility quintiles for both NASDAQ and S&P 500.

The quintile analysis reveals distinct patterns in model performance across volatility regimes. A consistent finding across both markets is that prediction errors increase substantially in the highest volatility quintile (Q5) for all models. For NASDAQ, MAE values in Q5 range from 5.817 for TF-ViTNet to 8.394 for TF-CNet, representing roughly 3–5 times higher errors compared to mid-range quintiles. Similarly, for S&P 500, Q5 errors range from 5.118 for the naive benchmark to 13.915 for LSTM, demonstrating the fundamental challenge of predicting extreme volatility events.

Despite the overall difficulty in high volatility regimes, TF-ViTNet demonstrates notable advantages. For NASDAQ Q5, TF-ViTNet achieves the lowest MAE of 5.817, outperforming all other models, including the naive benchmark at 5.933. This represents a significant advantage in the most critical regime, where accurate predictions are most valuable for risk management. The model also maintains competitive performance in mid-range volatility quintiles, achieving near-optimal results in Q3 and Q4. However, in low-volatility quintiles (Q1–Q2), simpler models like ViT-LSTM perform better, suggesting that the sophisticated parallel architecture may introduce unnecessary complexity when volatility patterns are straightforward.

For S&P 500, the results show a different pattern. In the critical highest volatility quintile (Q5), while the naive benchmark achieves the best performance at 5.118, TF-ViTNet records 5.325, outperforming all other deep learning models. This contrasts with NASDAQ where TF-ViTNet dominated even the benchmark in extreme conditions, and the difference can be attributed to the S&P 500’s more stable characteristics, where simple persistence forecasts remain effective even during high volatility periods. In lower volatility regimes (Q1–Q4), TF-ViTNet maintains competitive performance with MAE values ranging from 1.464 to 1.998, consistently ranking among the top performers alongside ViT-LSTM and CNN-LSTM. The consistent superiority of TF-ViTNet over competing deep learning approaches across all quintiles, particularly in the critical high volatility regime, demonstrates the robustness of our parallel architecture design.

These findings underscore that TF-ViTNet’s primary strength lies in maintaining stability during extreme market conditions, particularly in volatile markets like NASDAQ. While the model may not always achieve the lowest errors in calm periods, its ability to remain robust when volatility spikes makes it particularly valuable for risk management applications where tail risk prediction is paramount.

5.7. Performance for Individual Stocks: S&P 500 Top-50 and Russell 3000 Low-Liquidity Equities

To further assess the generalizability and robustness of the proposed models, we extended our analysis beyond index-level prediction to individual equities. Specifically, Parkinson’s volatility was predicted for (i) the 50 largest constituents of the S&P 500 by market capitalization and (ii) an additional set of 20 low-liquidity stocks sampled from the Russell 3000 index. For each equity, both TF-ViTNet and CNN-LSTM were independently trained and tested following the same training–validation–test procedure used in the index experiments, focusing on the most recent year, 2024.

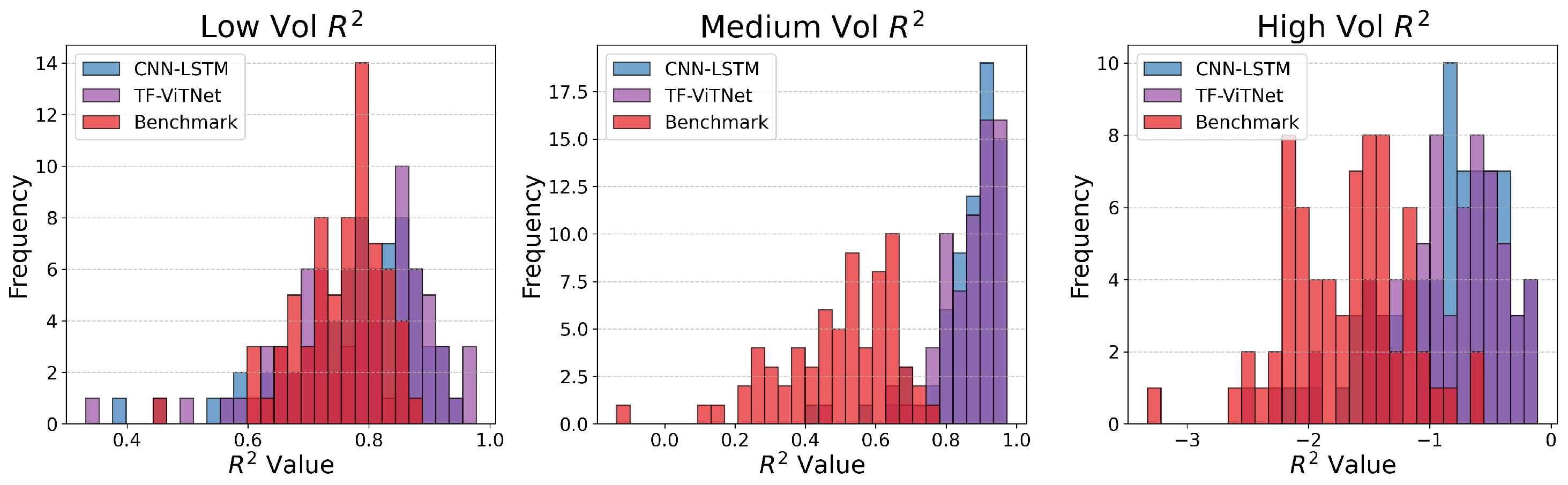

To examine how model performance varies across different volatility environments, we performed a volatility-tier analysis for each stock. Specifically, the 250 daily observations in 2024 were sorted by the actual Parkinson’s volatility and partitioned into three equally sized groups (Low/Medium/High volatility). For each tier, $R^2$ was computed separately using only the observations belonging to that volatility level. This procedure yields, for every individual equity, three volatility-specific $R^2$ scores that reveal how predictive accuracy changes across calm, moderate, and turbulent market conditions.

Figure 7 summarizes the cross-sectional distribution of these tier-specific $R^2$ scores across all 70 equities. In all three volatility tiers (Low, Medium, and High), the distribution of TF-ViTNet is consistently shifted to the right relative to CNN-LSTM, indicating higher average $R^2$ and more stable predictive performance across different volatility regimes. Although both models exhibit weaker accuracy under high-volatility conditions, TF-ViTNet maintains a noticeably stronger tail behavior with fewer severely negative $R^2$ outliers. A detailed equity-level breakdown is provided in Appendix A and Appendix B.

6. Conclusions

This study proposes TF-ViTNet, a novel dual-path hybrid model that integrates time–frequency analysis with ViTs for financial market volatility prediction. The model transforms Parkinson’s volatility time series into scalogram images via CWT and analyzes them using a ViT to extract spatiotemporal patterns. Simultaneously, a separate LSTM pathway learns temporal features from technical indicators, and the two streams are integrated at the final stage to predict future volatility.

Empirical analysis using NASDAQ and S&P 500 index data over a 15-year testing period from 2010 to 2024 yields five important findings. First, regarding the effectiveness of image-based representations, the hybrid approach combining scalogram images with technical indicators consistently outperforms models using numerical data alone. TF-ViTNet achieves substantial improvements over the baseline LSTM model, with particularly strong results for S&P 500. This demonstrates that two-dimensional time–frequency representations provide meaningful complementary information for volatility prediction beyond what can be captured by one-dimensional numerical sequences alone.

Second, concerning parallel versus integrated architecture, the parallel structure, where image and numerical pathways are processed independently before final fusion, demonstrates clear advantages over integrated architectures that combine features at each time step. The parallel TF-ViTNet consistently outperforms the integrated ViT-LSTM across both indices. This suggests that allowing each modality to develop specialized representations independently before integration enables more effective feature learning compared to early fusion strategies.

Third, regarding ViT versus CNN for image encoding, the choice of image encoder significantly impacts performance, with effects varying by architecture type. In parallel architectures, ViT-based TF-ViTNet substantially outperforms CNN-based TF-CNet, especially for the volatile NASDAQ market, where TF-CNet shows negative performance. This advantage likely stems from ViT’s ability to capture global patterns in scalograms through self-attention mechanisms. Conversely, in integrated architectures, CNN-LSTM outperforms ViT-LSTM in both markets, suggesting that CNNs’ inductive biases for local pattern extraction align better with sequential feature combination strategies.

Fourth, regarding data augmentation, effectiveness varies markedly with market characteristics. For the more stable S&P 500 market, Affine augmentation improves overall performance, suggesting that regularization benefits markets with cleaner underlying patterns, where the stronger signal can tolerate additional variation without corruption. Conversely, for the highly volatile NASDAQ market, the model without augmentation achieves the best performance, suggesting that augmentation introduces unwanted noise that compounds the market’s inherent volatility. When underlying patterns are already noisy and irregular, augmentation-induced variations interfere with the already-weak signal. This finding emphasizes that augmentation strategies must be tailored to a market’s signal-to-noise characteristics rather than applied universally.

Fifth, regarding performance across volatility regimes, TF-ViTNet demonstrates differential effectiveness depending on volatility levels. In extreme high volatility conditions, the model excels particularly for NASDAQ, achieving the lowest prediction errors among all models including the naive benchmark. For S&P 500, TF-ViTNet outperforms all deep learning models in high volatility, though it is slightly behind the naive benchmark. In mid-range volatility, TF-ViTNet maintains competitive performance, while in low-volatility regimes, simpler models occasionally achieve lower errors. This pattern indicates that TF-ViTNet’s primary strength lies in maintaining stability during market stress when accurate predictions are most critical for risk management, even if it does not always achieve the absolute lowest errors during calm periods.

Our results can be contextualized within the broader literature on volatility prediction. Cho and Lee [72] employed deep learning models to predict absolute returns as a proxy for stock volatility using S&P 500 data, achieving annual R² values of 0.223 in 2018, 0.261 in 2019, and 0.502 in 2020. In comparison, TF-ViTNet achieves R² values of 0.185, 0.307, and 0.583 for the same years on the same index. While our model underperforms slightly in 2018, it performs better in 2019 and particularly in 2020 during the COVID-19 volatility surge, suggesting that our time–frequency approach with ViTs may be especially effective in capturing extreme volatility patterns. Moreover, our use of Parkinson’s volatility, which incorporates intraday high-low range information, likely provides a more statistically efficient volatility measure than absolute returns, contributing to improved predictive accuracy.

Despite these promising results, several limitations warrant consideration. The model’s architectural sophistication comes at the cost of substantial computational requirements and sensitivity to hyperparameter choices, which may pose challenges for resource-constrained environments. Our validation is limited to U.S. equity markets (two major indices and a sample of individual stocks), and generalizability to other asset classes with different volatility characteristics remains to be established. Furthermore, like many deep learning models, TF-ViTNet operates largely as a black box, and understanding of which specific time–frequency patterns drive its predictions remains limited. Future research could address these limitations by developing attention visualization techniques to interpret model behavior and by extending the framework to multi-asset settings. More sophisticated fusion mechanisms, such as cross-modal attention, may further improve performance, while rigorous testing in real-time trading simulations would provide crucial insights into practical deployment feasibility under realistic market constraints.

In conclusion, this study advances financial volatility prediction by demonstrating that parallel integration of time–frequency representations analyzed through ViTs with numerical technical indicators can achieve superior and more robust predictions compared to traditional approaches. The findings contribute to both the theoretical understanding of integrating heterogeneous data representations for financial forecasting and the practical development of more reliable risk management tools.

Beyond methodological contributions, our results carry direct implications for risk management and investment decision-making. The model’s strong performance during high-volatility regimes—when accurate forecasts are most critical—suggests that TF-ViTNet can serve as an effective early-warning mechanism for market turbulence, supporting timely adjustments to portfolio exposure, leverage, and hedging strategies. Conversely, the model’s comparatively moderate advantage in low-volatility periods highlights its suitability for adaptive volatility targeting frameworks, allowing investors to recalibrate position sizes based on regime-dependent prediction accuracy. These insights indicate that TF-ViTNet is particularly valuable for practitioners seeking robust volatility predictions to inform dynamic risk control, stress-scenario preparation, and strategic asset allocation under varying market conditions.