1. Introduction

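We work within a real Hilbert space $H$, with its associated inner product $\langle \cdot, \cdot \rangle$ and norm $\|\cdot\|$. The focus is on a nonempty, closed, and convex subset $C$ of $H$. A mapping $T: C \to C$ is nonexpansive if
$$\|Tx - Ty\| \le \|x - y\|, \quad \forall x, y \in C.$$
Its fixed-point set, $\mathrm{Fix}(T) := \{x \in C : Tx = x\}$, is closed and convex whenever nonempty. For a proper function $g: H \to (-\infty, +\infty]$, the subdifferential at $w \in H$ is
$$\partial g(w) = \{u \in H : g(z) \ge g(w) + \langle u, z - w \rangle,\ \forall z \in H\}.$$
We say $g$ is subdifferentiable at $w$ if $\partial g(w) \neq \emptyset$. The indicator function $i_C$ of $C$ is defined as
$$i_C(x) = \begin{cases} 0, & x \in C,\\ +\infty, & x \notin C. \end{cases}$$
Note that $i_C$ is a convex function when $C$ is a convex set.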

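In 2006, Moudafi et al. [1] examined the convergence of a scheme for a hierarchical fixed-point problem (in short, HFPP): Find $x^* \in \mathrm{Fix}(T)$ such that
$$\langle x^* - Sx^*, x - x^* \rangle \ge 0, \quad \forall x \in \mathrm{Fix}(T), \tag{1}$$
where $S, T: C \to C$ are nonexpansive. Let $\Omega$ denote the set of solutions of HFPP (1). If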

, then

. Hence, HFPP (

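1) can be reformulated as a variational inclusion: find $x^* \in C$ such that
$$0 \in (I - S)x^* + N_{\mathrm{Fix}(T)}(x^*),$$
where $N_{\mathrm{Fix}(T)}(x^*)$ denotes the normal cone to $\mathrm{Fix}(T)$ at $x^*$, given by
$$N_{\mathrm{Fix}(T)}(x^*) = \begin{cases} \{u \in H : \langle u, y - x^* \rangle \le 0,\ \forall y \in \mathrm{Fix}(T)\}, & \text{if } x^* \in \mathrm{Fix}(T),\\ \emptyset, & \text{otherwise}. \end{cases}$$
If we set $g = i_{\mathrm{Fix}(T)}$, then $\partial g$ is just $N_{\mathrm{Fix}(T)}$. Moreover, HFPP (1) is of significant interest because it encompasses, as particular cases, several well-known problems, including variational inequalities over fixed-point sets, hierarchical minimization problems, and related models (see Moudafi [2]).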
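Because $\mathrm{Fix}(T)$ is closed and convex, the variational characterization of the metric projection shows that $x^*$ solves HFPP (1) exactly when $x^* = P_{\mathrm{Fix}(T)}(Sx^*)$. The following is a minimal sketch that uses this fact to compute a solution residual. It assumes, hypothetically, that $\mathrm{Fix}(T)$ is the box $[-1,1]^2$ and that $S(x) = x/2 + (2,-2)$. Since $P_{\mathrm{Fix}(T)} \circ S$ is then a contraction, this toy HFPP has the unique solution $(1,-1)$.

```python
# Minimal sketch, assuming (hypothetically) Fix(T) = [-1, 1]^2 and
# S(x) = x/2 + (2, -2). A point x solves HFPP (1) exactly when
# x = P_Fix(T)(S x), so the residual below vanishes only at solutions.

def project_box(x, lo=-1.0, hi=1.0):
    """Metric projection onto the box [lo, hi]^n (componentwise clipping)."""
    return [min(max(xi, lo), hi) for xi in x]


def S(x):
    """Hypothetical nonexpansive map (a contraction with factor 1/2)."""
    return [0.5 * x[0] + 2.0, 0.5 * x[1] - 2.0]


def hfpp_residual(x):
    """Euclidean norm of x - P_Fix(T)(S x)."""
    p = project_box(S(x))
    return sum((xi - pi) ** 2 for xi, pi in zip(x, p)) ** 0.5


print(hfpp_residual([1.0, -1.0]))  # (1, -1) solves this HFPP
print(hfpp_residual([0.0, 0.0]))   # (0, 0) lies in Fix(T) but is not a solution
```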

Moudafi [2], in 2007, introduced a Krasnosel’skiǐ–Mann-type iteration for HFPP (1): given $x_0 \in C$,
$$x_{n+1} = (1 - \alpha_n)x_n + \alpha_n\big(\sigma_n S x_n + (1 - \sigma_n) T x_n\big), \quad n \ge 0, \tag{3}$$
with $\{\alpha_n\}, \{\sigma_n\} \subset (0, 1)$. This scheme unifies many fixed-point methods used in areas such as signal processing and image reconstruction, and its convergence theory provides a common framework for analyzing related algorithms (see [1,3,4,5,6,7,8]).
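The Krasnosel’skiǐ–Mann-type update $x_{n+1} = (1-\alpha_n)x_n + \alpha_n(\sigma_n S x_n + (1-\sigma_n)T x_n)$ can be sketched directly. All data below are hypothetical. $T$ is the projection onto $[-1,1]^2$, so it is nonexpansive and $\mathrm{Fix}(T)$ is the box. $S(x) = x/2 + (2,-2)$, $\alpha_n = 1/2$, and $\sigma_n = 1/(n+2)$. These parameters are chosen for illustration only; they are not claimed to satisfy the convergence conditions of [2]. In this toy run the iterates approach $(1,-1)$, which is the HFPP solution for these $S$ and $T$.

```python
# Sketch of iteration (3):
#   x_{n+1} = (1 - a_n) x_n + a_n (s_n S x_n + (1 - s_n) T x_n).
# Hypothetical data: T = projection onto [-1, 1]^2 (nonexpansive,
# Fix(T) = the box), S(x) = x/2 + (2, -2), a_n = 1/2, s_n = 1/(n + 2).

def T(x):
    return [min(max(xi, -1.0), 1.0) for xi in x]


def S(x):
    return [0.5 * x[0] + 2.0, 0.5 * x[1] - 2.0]


def km_hfpp(x0, iters=5000):
    x = list(x0)
    for n in range(iters):
        a, s = 0.5, 1.0 / (n + 2)          # both stay in (0, 1)
        Sx, Tx = S(x), T(x)
        x = [(1 - a) * xi + a * (s * si + (1 - s) * ti)
             for xi, si, ti in zip(x, Sx, Tx)]
    return x


x_final = km_hfpp([5.0, 5.0])
print(x_final)  # close to (1, -1), the HFPP solution for these S and T
```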

This work addresses the variational inequality (VI), introduced in [9], of finding $x^* \in C$ such that
$$\langle A x^*, x - x^* \rangle \ge 0, \quad \forall x \in C, \tag{4}$$
where $A: H \to H$; the solution set is denoted by $\mathrm{Sol}(\mathrm{VI}(4))$. A standard approach for solving VI (4) is the projected gradient method:
$$x_{n+1} = P_C(x_n - \lambda A x_n), \tag{5}$$
with $\lambda > 0$, where $P_C$ is the metric projection onto $C$. This method requires $A$ to be inverse strongly (or strongly) monotone, which can be restrictive. To relax this, Korpelevich [10] introduced the extragradient iteration
$$y_n = P_C(x_n - \lambda A x_n), \qquad x_{n+1} = P_C(x_n - \lambda A y_n). \tag{6}$$
Many subsequent works have proposed enhancements to this scheme; see, e.g., [11,12,13,14,15].
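The following sketch illustrates why the projected gradient method $x_{n+1} = P_C(x_n - \lambda A x_n)$ needs more than monotonicity, while the extragradient method $y_n = P_C(x_n - \lambda A x_n)$, $x_{n+1} = P_C(x_n - \lambda A y_n)$ does not. The example is hypothetical: $C = [-1,1]^2$ and $A(x) = (x_2, -x_1)$. This operator is monotone and $1$-Lipschitz, but it is not inverse strongly monotone, and the unique solution of the VI is $x^* = 0$.

```python
# Hypothetical example: H = R^2, C = [-1, 1]^2, A(x) = (x_2, -x_1).
# A is monotone (<A x - A y, x - y> = 0) and 1-Lipschitz but not inverse
# strongly monotone; the unique solution of the VI is x* = 0.

def P_C(x):
    return [min(max(xi, -1.0), 1.0) for xi in x]


def A(x):
    return [x[1], -x[0]]


def step(x, d, lam):
    """Return x - lam * d."""
    return [xi - lam * di for xi, di in zip(x, d)]


def norm(x):
    return sum(xi * xi for xi in x) ** 0.5


def projected_gradient(x, lam=0.5, iters=200):
    for _ in range(iters):
        x = P_C(step(x, A(x), lam))
    return x


def extragradient(x, lam=0.5, iters=200):
    for _ in range(iters):
        y = P_C(step(x, A(x), lam))   # predictor: y_n
        x = P_C(step(x, A(y), lam))   # corrector: evaluates A at y_n
    return x


x0 = [0.5, 0.5]
print(norm(projected_gradient(x0)))  # stays bounded away from 0
print(norm(extragradient(x0)))       # tends to 0
```

Without projection, each projected-gradient step multiplies the norm by $\sqrt{1+\lambda^2}$. The extragradient step instead contracts it by $\sqrt{1-\lambda^2+\lambda^4}$ for $\lambda = 0.5$.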

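A key limitation of the extragradient method is the double projection onto $C$ required in each step, which is often computationally intensive. This issue was addressed by Censor et al. [13,16,17] via the subgradient extragradient method. Their approach, designed for VI (4) and subsequently extended to equilibrium problems [18], reduces the computational load by calculating the second projection onto a half-space instead of the original set $C$:
$$\begin{cases} y_n = P_C(x_n - \lambda A x_n),\\ Q_n = \{w \in H : \langle x_n - \lambda A x_n - y_n, w - y_n \rangle \le 0\},\\ x_{n+1} = P_{Q_n}(x_n - \lambda A y_n), \end{cases} \tag{7}$$
where $A$ is monotone and $L$-Lipschitz-continuous and $\lambda \in (0, 1/L)$. The sequence $\{x_n\}$ converges weakly to a point of $\mathrm{Sol}(\mathrm{VI}(4))$.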
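The half-space projection that replaces the second projection onto $C$ has a closed form: $P_Q(z) = z - \frac{\max\{0, \langle a, z - y\rangle\}}{\|a\|^2}\, a$ for $Q = \{w : \langle a, w - y\rangle \le 0\}$ with $a \neq 0$, and $Q = H$ when $a = 0$. The sketch below applies the subgradient extragradient step to the same kind of hypothetical example as before: $C = [-1,1]^2$, $A(x) = (x_2, -x_1)$, $L = 1$, and $\lambda = 0.5$. It starts from a point outside $C$ so that the half-space projection is actually active in the first iterations.

```python
# Subgradient extragradient sketch on a hypothetical example:
# H = R^2, C = [-1, 1]^2, A(x) = (x_2, -x_1) (monotone, L = 1), lam = 0.5 < 1/L.
# The second projection is onto the half-space
#   Q_n = {w : <x_n - lam*A(x_n) - y_n, w - y_n> <= 0},
# which has a closed form, instead of onto C.

def P_C(x):
    return [min(max(xi, -1.0), 1.0) for xi in x]


def A(x):
    return [x[1], -x[0]]


def project_halfspace(z, a, y):
    """Project z onto {w : <a, w - y> <= 0}; if a = 0 the set is all of H."""
    viol = sum(ai * (zi - yi) for ai, zi, yi in zip(a, z, y))
    if viol <= 0.0:          # z already lies in the half-space (always when a = 0)
        return list(z)
    t = viol / sum(ai * ai for ai in a)
    return [zi - t * ai for zi, ai in zip(z, a)]


def subgradient_extragradient(x, lam=0.5, iters=500):
    for _ in range(iters):
        u = [xi - lam * gi for xi, gi in zip(x, A(x))]   # x_n - lam*A(x_n)
        y = P_C(u)                                       # y_n
        a = [ui - yi for ui, yi in zip(u, y)]            # normal vector of Q_n
        z = [xi - lam * gi for xi, gi in zip(x, A(y))]   # x_n - lam*A(y_n)
        x = project_halfspace(z, a, y)                   # x_{n+1} = P_{Q_n}(z)
    return x


x_final = subgradient_extragradient([3.0, -2.0])
print(sum(v * v for v in x_final) ** 0.5)  # tends to 0, the unique solution
```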

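The problem of determining an element of $\mathrm{Fix}(T) \cap \mathrm{Sol}(\mathrm{VI}(4))$ was addressed by Takahashi and Toyoda [19], who developed the iterative procedure
$$x_{n+1} = \alpha_n x_n + (1 - \alpha_n) T P_C(x_n - \lambda_n A x_n). \tag{8}$$
Under suitable conditions on $\{\alpha_n\}$ and $\{\lambda_n\}$, and with $A$ inverse strongly monotone, weak convergence of the iterates to a solution was guaranteed.

The preceding algorithm can fail when the operator $A$ is merely monotone and $L$-Lipschitz-continuous. To overcome this limitation, Nadezhkina and Takahashi [20] incorporated Korpelevich’s extragradient technique, introducing the following scheme:
$$\begin{cases} y_n = P_C(x_n - \lambda_n A x_n),\\ x_{n+1} = \alpha_n x_n + (1 - \alpha_n) T P_C(x_n - \lambda_n A y_n), \end{cases} \tag{9}$$
with $\{\lambda_n\} \subset [a, b]$ for some $a, b \in (0, 1/L)$ and $\{\alpha_n\} \subset [c, d]$ for some $c, d \in (0, 1)$. The sequence $\{x_n\}$ converges weakly to a point $z \in \mathrm{Fix}(T) \cap \mathrm{Sol}(\mathrm{VI}(4))$.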
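The extragradient-type scheme of Nadezhkina and Takahashi, $y_n = P_C(x_n - \lambda_n A x_n)$ and $x_{n+1} = \alpha_n x_n + (1-\alpha_n) T P_C(x_n - \lambda_n A y_n)$, is sketched below with constant parameters. The data are hypothetical. $C = [-1,1]^2$, $A(x) = (x_2,-x_1)$ with $L = 1$, and $T(x) = (x_1, 0)$, the orthogonal projection onto the first axis, which is nonexpansive. The parameters are $\lambda = 0.5 \in (0, 1/L)$ and $\alpha = 0.5$. Here $\mathrm{Fix}(T)$ and the VI solution set intersect only at $0$.

```python
# Nadezhkina-Takahashi scheme on a hypothetical example:
#   y_n = P_C(x_n - lam*A x_n),
#   x_{n+1} = a*x_n + (1 - a) * T(P_C(x_n - lam*A y_n)).
# Assumptions: C = [-1, 1]^2, A(x) = (x_2, -x_1) (monotone, L = 1),
# T(x) = (x_1, 0) (orthogonal projection onto the first axis, nonexpansive),
# lam = 0.5 in (0, 1/L), a = 0.5. Fix(T) and Sol(VI) intersect only at 0.

def P_C(x):
    return [min(max(xi, -1.0), 1.0) for xi in x]


def A(x):
    return [x[1], -x[0]]


def T(x):
    return [x[0], 0.0]


def nadezhkina_takahashi(x, lam=0.5, a=0.5, iters=500):
    for _ in range(iters):
        y = P_C([xi - lam * gi for xi, gi in zip(x, A(x))])   # y_n
        w = P_C([xi - lam * gi for xi, gi in zip(x, A(y))])   # extragradient point
        Tw = T(w)
        x = [a * xi + (1 - a) * ti for xi, ti in zip(x, Tw)]  # averaging step
    return x


x_final = nadezhkina_takahashi([0.5, 0.5])
print(x_final)  # approaches (0, 0)
```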

Drawing inspiration from the preceding discussion, we present a Krasnosel’skiǐ–Mann iterative scheme that integrates the ideas from (3), (7), and (9) to approximate a common solution of HFPP (1) and VI (4). We establish a weak convergence result for this method and illustrate it with numerical examples. This approach generalizes and unifies several existing results [2,13,16,17,20].

2. Preliminaries

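Throughout the paper, we denote the strong and weak convergence of a sequence $\{x_n\}$ to a point $x$ by $x_n \to x$ and $x_n \rightharpoonup x$, respectively. $\omega_w(x_n) := \{x : x_{n_k} \rightharpoonup x \text{ for some subsequence } \{x_{n_k}\} \text{ of } \{x_n\}\}$ denotes the set of all weak subsequential limits of $\{x_n\}$. We recall the following concepts, which are commonly used in convex and nonlinear analysis.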

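It is well known that a real Hilbert space $H$ satisfies the following:

- (i)

The identity
$$\|\alpha x + (1 - \alpha) y\|^2 = \alpha\|x\|^2 + (1 - \alpha)\|y\|^2 - \alpha(1 - \alpha)\|x - y\|^2$$
for all $x, y \in H$ and $\alpha \in [0, 1]$;

- (ii)

Opial’s condition; i.e., for any sequence $\{x_n\}$ in $H$ such that $x_n \rightharpoonup x$ for some $x \in H$, the inequality
$$\liminf_{n \to \infty} \|x_n - x\| < \liminf_{n \to \infty} \|x_n - y\|$$
holds for all $y \in H$ with $y \neq x$.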
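The convex-combination identity $\|\alpha x + (1-\alpha)y\|^2 = \alpha\|x\|^2 + (1-\alpha)\|y\|^2 - \alpha(1-\alpha)\|x-y\|^2$ holds in every real Hilbert space. It can be spot-checked in $\mathbb{R}^n$ with the Euclidean inner product. The random test vectors below are purely illustrative.

```python
import random


def sq_norm(u):
    return sum(ui * ui for ui in u)


def identity_gap(x, y, a):
    """LHS minus RHS of the convex-combination identity (should be ~0)."""
    lhs = sq_norm([a * xi + (1 - a) * yi for xi, yi in zip(x, y)])
    rhs = (a * sq_norm(x) + (1 - a) * sq_norm(y)
           - a * (1 - a) * sq_norm([xi - yi for xi, yi in zip(x, y)]))
    return lhs - rhs


rng = random.Random(42)
gaps = []
for _ in range(1000):
    x = [rng.uniform(-5, 5) for _ in range(4)]
    y = [rng.uniform(-5, 5) for _ in range(4)]
    a = rng.random()                       # alpha in [0, 1)
    gaps.append(abs(identity_gap(x, y, a)))
print(max(gaps))  # only floating-point round-off remains
```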

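For every point $x \in H$, there exists a unique nearest point in $C$, denoted by $P_C x$, such that
$$\|x - P_C x\| \le \|x - y\|, \quad \forall y \in C.$$
The mapping $P_C$ is called the metric projection of $H$ onto $C$.

It is well known (see [21] for details) that $P_C$ is nonexpansive and satisfies
$$\langle x - y, P_C x - P_C y \rangle \ge \|P_C x - P_C y\|^2, \quad \forall x, y \in H.$$
Moreover, $P_C x$ is characterized by the facts that $P_C x \in C$ and
$$\langle x - P_C x, y - P_C x \rangle \le 0, \quad \forall y \in C;$$
consequently,
$$\|x - y\|^2 \ge \|x - P_C x\|^2 + \|y - P_C x\|^2, \quad \forall x \in H,\ y \in C.$$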
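The three projection properties can be checked numerically: firm nonexpansiveness, the variational characterization $\langle x - P_C x, y - P_C x\rangle \le 0$ for $y \in C$, and $\|x-y\|^2 \ge \|x-P_Cx\|^2 + \|y-P_Cx\|^2$. The sketch below is a check under an assumption. It takes the box $C = [-1,1]^n$, whose projection is componentwise clipping, as a hypothetical example, and tests the properties on random points.

```python
import random

# Numerical spot-check of three properties of the metric projection, using the
# box C = [-1, 1]^n in R^n as a hypothetical example (P_C = componentwise clipping).


def project_box(x, lo=-1.0, hi=1.0):
    return [min(max(xi, lo), hi) for xi in x]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def sub(u, v):
    return [ui - vi for ui, vi in zip(u, v)]


def check_projection_properties(trials=1000, dim=3, seed=0, tol=1e-9):
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-3, 3) for _ in range(dim)]   # arbitrary point of H
        z = [rng.uniform(-3, 3) for _ in range(dim)]   # second point of H
        y = [rng.uniform(-1, 1) for _ in range(dim)]   # point of C
        px, pz = project_box(x), project_box(z)
        # Variational characterization: <x - P_C x, y - P_C x> <= 0.
        if dot(sub(x, px), sub(y, px)) > tol:
            return False
        # ||x - y||^2 >= ||x - P_C x||^2 + ||y - P_C x||^2.
        lhs = dot(sub(x, y), sub(x, y))
        rhs = dot(sub(x, px), sub(x, px)) + dot(sub(y, px), sub(y, px))
        if lhs + tol < rhs:
            return False
        # Firm nonexpansiveness: <x - z, P_C x - P_C z> >= ||P_C x - P_C z||^2.
        d = sub(px, pz)
        if dot(sub(x, z), d) + tol < dot(d, d):
            return False
    return True


print(check_projection_properties())
```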

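Definition 1 ([22]). An operator $M: H \to 2^H$ is said to be

- (i)

Monotone if $\langle u - v, x - y \rangle \ge 0$ for all $x, y \in H$, $u \in Mx$, and $v \in My$;

- (ii)

Maximal monotone if $M$ is monotone and its graph, $\mathrm{Graph}(M) := \{(x, u) \in H \times H : u \in Mx\}$, is not properly contained in the graph of any other monotone operator.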

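Lemma 1 ([3,23]). Let $T: H \to H$ be a mapping. Then,

- (i)

$T$ is called $L$-Lipschitz-continuous with $L > 0$ if $\|Tx - Ty\| \le L\|x - y\|$ for all $x, y \in H$;

- (ii)

$T$ is called quasi-nonexpansive if $\mathrm{Fix}(T) \neq \emptyset$ and $\|Tx - p\| \le \|x - p\|$ for all $x \in H$ and $p \in \mathrm{Fix}(T)$;

- (iii)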

is a maximal monotone mapping on ;

- (iv)

is demiclosed on H in the sense that, if converges weakly to and converges strongly to 0, then .

Lemma 2 ([24]). Let $D$ be a nonempty subset of $H$ and let $\{x_n\}$ be a sequence in $H$ such that the following conditions hold:

- (i)

For every $z \in D$, $\lim_{n \to \infty} \|x_n - z\|$ exists;

- (ii)

Every sequential weak cluster point of $\{x_n\}$ is in $D$.

Then, $\{x_n\}$ converges weakly to a point in $D$.

3. Krasnosel’skiǐ–Mann-Type Subgradient Extragradient Algorithm

Our contribution is a Krasnosel’skiǐ–Mann-type subgradient extragradient algorithm designed to solve HFPP (

1) in conjunction with VI (

4).

Lemma 3. Algorithm 1 generates a sequence which decreases monotonically and is bounded below by .

Algorithm 1 Iterative scheme

Initialization: Given Select an arbitrary starting point : Set .

Iterative Steps: For the current point :

Step 1. Calculate

where for all .

Step 2. Update and proceed to Step 1.

Proof. It is clear that

forms a monotonically decreasing sequence, since

is a Lipschitz-continuous mapping with constant

. For

, we have

Clearly,

admits the lower bound

. □
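Lemma 3 concerns a sequence that is non-increasing and bounded below. When the Lipschitz constant $L$ is unknown, a step-size rule commonly used in subgradient extragradient methods is $\lambda_{n+1} = \min\{\mu\|x_n - y_n\|/\|Ax_n - Ay_n\|, \lambda_n\}$, keeping $\lambda_n$ when $Ax_n = Ay_n$. It is an assumption here that Algorithm 1 uses exactly this rule. Under it, $\|Ax_n - Ay_n\| \le L\|x_n - y_n\|$ gives $\lambda_n \ge \min\{\lambda_0, \mu/L\}$. The sketch below checks both properties for the hypothetical operator $A(x) = (3x_1, x_2)$, which has $L = 3$. Random pairs stand in for $(x_n, y_n)$, since the bound does not depend on how they are generated.

```python
import random


def sub(u, v):
    return [ui - vi for ui, vi in zip(u, v)]


def norm(u):
    return sum(ui * ui for ui in u) ** 0.5


def A(x):
    """Hypothetical monotone operator A(x) = (3 x_1, x_2); Lipschitz with L = 3."""
    return [3.0 * x[0], x[1]]


def next_step(lam, mu, x, y):
    """Assumed self-adaptive rule: min(mu*||x-y||/||Ax-Ay||, lam), or lam if Ax = Ay."""
    den = norm(sub(A(x), A(y)))
    if den == 0.0:
        return lam
    return min(mu * norm(sub(x, y)) / den, lam)


def step_sizes(lam0=1.0, mu=0.5, n=200, seed=1):
    rng = random.Random(seed)
    lams = [lam0]
    for _ in range(n):
        x = [rng.uniform(-1, 1) for _ in range(2)]   # stand-in for x_n
        y = [rng.uniform(-1, 1) for _ in range(2)]   # stand-in for y_n
        lams.append(next_step(lams[-1], mu, x, y))
    return lams


lams = step_sizes()
print(lams[-1], min(1.0, 0.5 / 3.0))  # final step vs. the lower bound min(lam0, mu/L)
```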

Remark 1. We have and it follows that

Theorem 1. Let be monotone and -Lipschitz-continuous ( unknown), nonexpansive, and be continuous quasi-nonexpansive with monotone. Assume that . Then, the sequences and produced by Algorithm 1 converge weakly to , where .

Proof. For convenience, we divide the proof into the following steps:

Step I. Let produced by Algorithm 1. Then,

Proof of Step I. Since

, we have

This implies that

Since

is monotone, we have

Thus, by combining it with (

14), we get

Next, we estimate

Since

and

, we have

This implies that

Since

, we have

, and using (

16) and (

17) in (

15), we get

Step II. The sequences

,

, and

generated by Algorithm 1 are bounded.

Proof of Step II. Let , then

Hence, it follows from (

18) and (19) that

It follows from Lemma 3 that

which yields that

with

. Hence, by (21), we get

It follows from Algorithm 1 and (

22) that we have

This implies that

exists and is finite. Therefore,

is bounded, and consequently, we deduce that

,

, and

are bounded.

Step III.

Proof of Step III. Since

, we have from Algorithm 1 and (

22) that

This implies that

Since

exists and is finite,

, and

, (

23) implies that

Since

it follows from (

24) that

Since

it follows from (

24) that

Since

it follows from (

24) that

Since

it follows from (

24)–(

26) that

Since

it follows from (

26) and (

28) that

Since

is bounded,

, a subsequence with

Further, it follows from (

24)–(

26) that there exists a subsequence

of

,

of

, and

of

such that

It follows from Lemma 1(ii), (

24), and (

28) that

,

.

Step IV. Next, we show that

Proof of Step IV. First, we will show that . It follows from Algorithm 1 that

Applying monotonicity of

and

, we have

It follows from (

28)–(

30) that

Since

we get

Since

is convex,

and

, and thus

which implies that

Applying

, we have

That is,

We now show that

. Define

where

is the normal cone to

at

. Since

is maximal monotone,

if and only if

. For

, we have

and hence

, which gives

, for all

.

On the other hand, from

and

, we have

This implies that

Since

,

,

, and the definition of

, we obtain

Taking the limit

and using continuity of

, we obtain

. Hence, by maximal monotonicity,

, implying

and therefore

.

Since the limit established in Step II exists, Lemma 2, together with Step IV, implies that the sequence converges weakly to a point of the solution set. □

Some direct consequences of Theorem 1 are derived below.

Letting

, Algorithm 1 reduces to the following Krasnosel’skiǐ–Mann subgradient extragradient scheme for approximating a fixed point of a nonexpansive mapping

and solving VI (

4).

Theorem 2. Let be monotone and -Lipschitz-continuous ( unknown), and be nonexpansive. Assume that . Then, the sequences and produced by Algorithm 2 converge weakly to , where .

Algorithm 2 Iterative scheme

Initialization: Given select an arbitrary starting point : Set .

Iterative Steps: For the current point :

Step 1. Calculate

where for all .

Step 2. Update and proceed to Step 1.

Applications in Optimization and Monotone Operator Theory

Consider a nonexpansive mapping

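and a maximal monotone operator $F: H \to 2^H$. The associated inclusion problem is to find a zero of $F$:
$$\text{find } x^* \in H \text{ such that } 0 \in F(x^*). \tag{35}$$
For $\lambda > 0$, let $J_\lambda^F := (I + \lambda F)^{-1}$ be the resolvent of $F$, which is nonexpansive. Define $T := J_\lambda^F$. By Theorem 1, we have the following result.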

Theorem 3. Let be monotone and -Lipschitz-continuous ( unknown), and let be a continuous quasi-nonexpansive mapping with monotone. Furthermore, let be maximal monotone, and set as its resolvent. Assume that . Then, the sequences and produced by Algorithm 1 converge weakly to , where .

Proof. Noting that $\mathrm{Fix}(J_\lambda^F) = F^{-1}(0)$ for $\lambda > 0$, problem (35) is equivalent to finding a fixed point of $J_\lambda^F$. The result is then an immediate consequence of Theorem 1. □
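The reduction above rests on the identity $\mathrm{Fix}(J_\lambda^F) = F^{-1}(0)$ for the resolvent $J_\lambda^F = (I + \lambda F)^{-1}$. A small sketch makes this concrete for the hypothetical choice $F = \partial|\cdot|$ on $\mathbb{R}$. This $F$ is maximal monotone with unique zero $0$, and its resolvent is soft thresholding. The code checks on a grid that $0$ is the only fixed point and that the resolvent is nonexpansive. It also checks that iterating the resolvent (the proximal point iteration) reaches $0$.

```python
def resolvent_abs(v, lam):
    """Hypothetical example: J_lam^F(v) = (I + lam*F)^(-1)(v) with F = d|.| on R.

    Solving v in x + lam*d|x| for x gives soft thresholding.
    """
    if v > lam:
        return v - lam
    if v < -lam:
        return v + lam
    return 0.0


lam = 0.5
grid = [i / 10 for i in range(-30, 31)]

# Fixed points of the resolvent on the grid: only x = 0, the unique zero of F.
fixed = [v for v in grid if resolvent_abs(v, lam) == v]

# Nonexpansiveness: |J(a) - J(b)| <= |a - b| for all grid pairs.
nonexpansive = all(
    abs(resolvent_abs(a, lam) - resolvent_abs(b, lam)) <= abs(a - b) + 1e-12
    for a in grid for b in grid
)

# Iterating the resolvent (proximal point iteration) reaches the zero of F.
x = 2.3
for _ in range(10):
    x = resolvent_abs(x, lam)

print(fixed, nonexpansive, x)
```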

Setting in Theorem 2 yields the following.

Theorem 4. Let be monotone and -Lipschitz-continuous ( unknown), let . Assume that . Then, the sequences and produced by Algorithm 2 converge weakly to , where .

Remark 2. Let $f: H \to (-\infty, +\infty]$ be a proper, convex, and lower semicontinuous function. Its subdifferential $\partial f$ is then a maximal monotone operator. Applying our framework to the minimization problem
$$\min_{x \in H} f(x), \tag{36}$$
we note that its solution set corresponds to $(\partial f)^{-1}(0)$, the set of zeros of the subdifferential. Therefore, a solution to (36) is obtained directly from Theorem 4.

4. Numerical Example

The weak convergence behavior of Algorithm 1 is illustrated via the following two numerical examples.

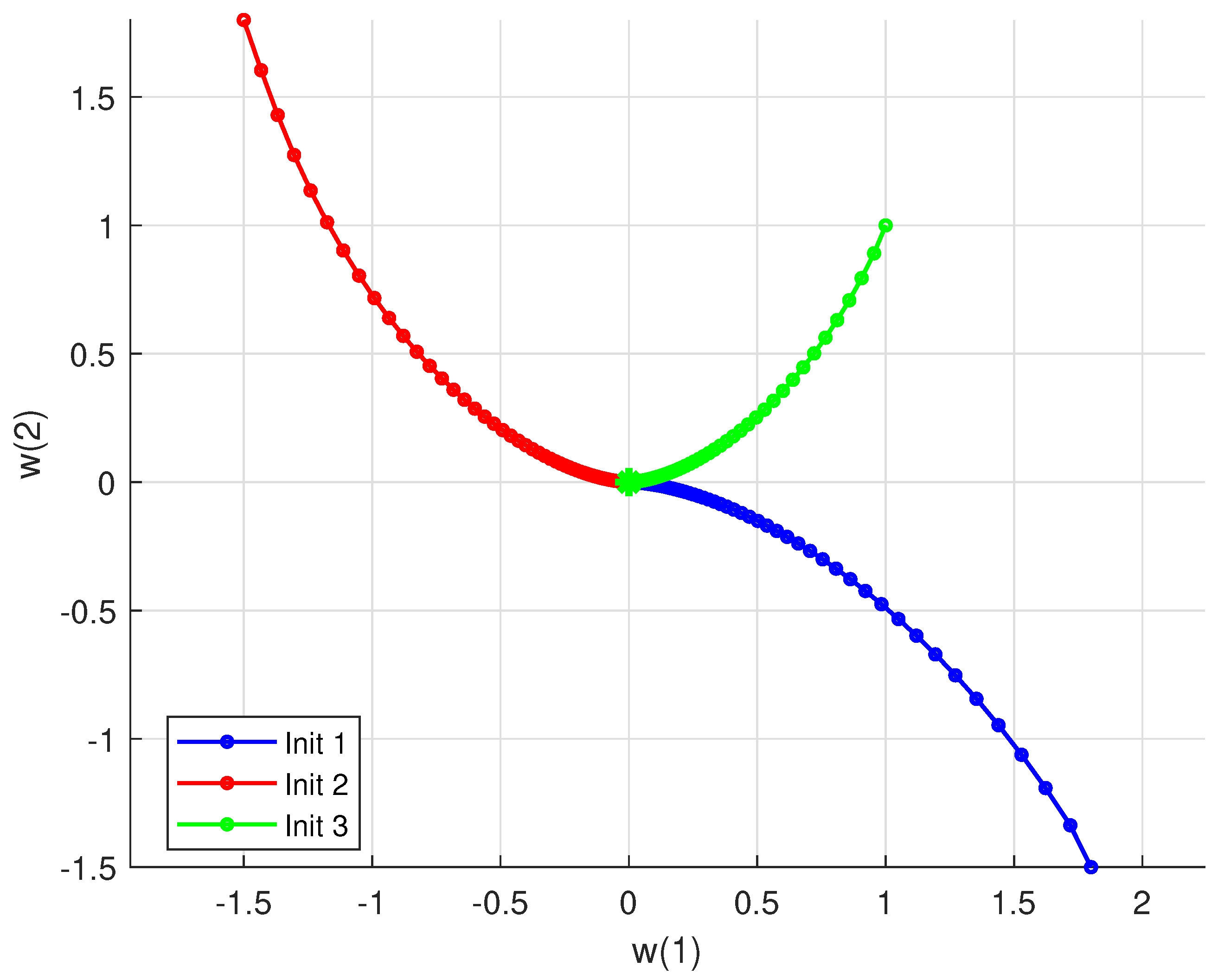

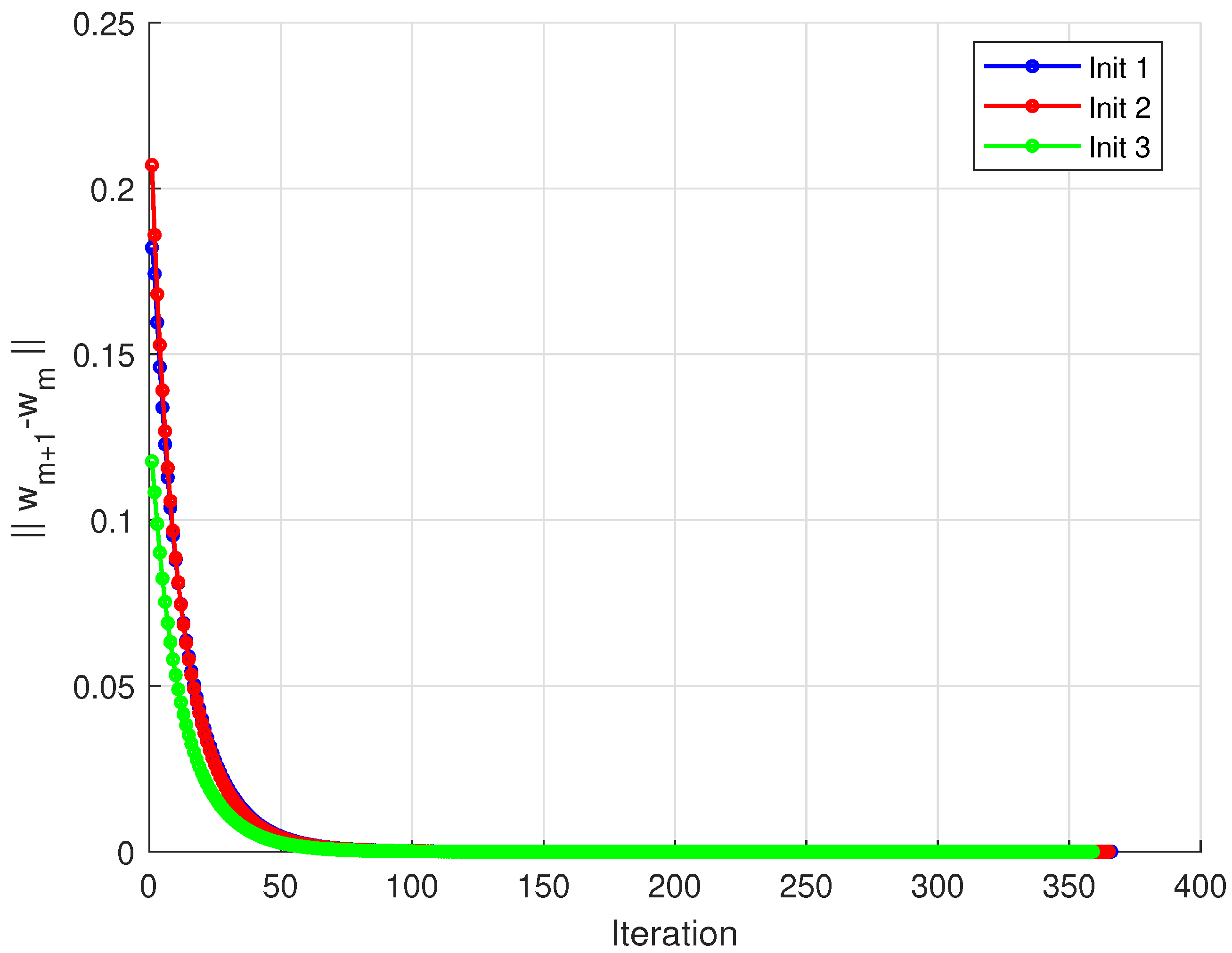

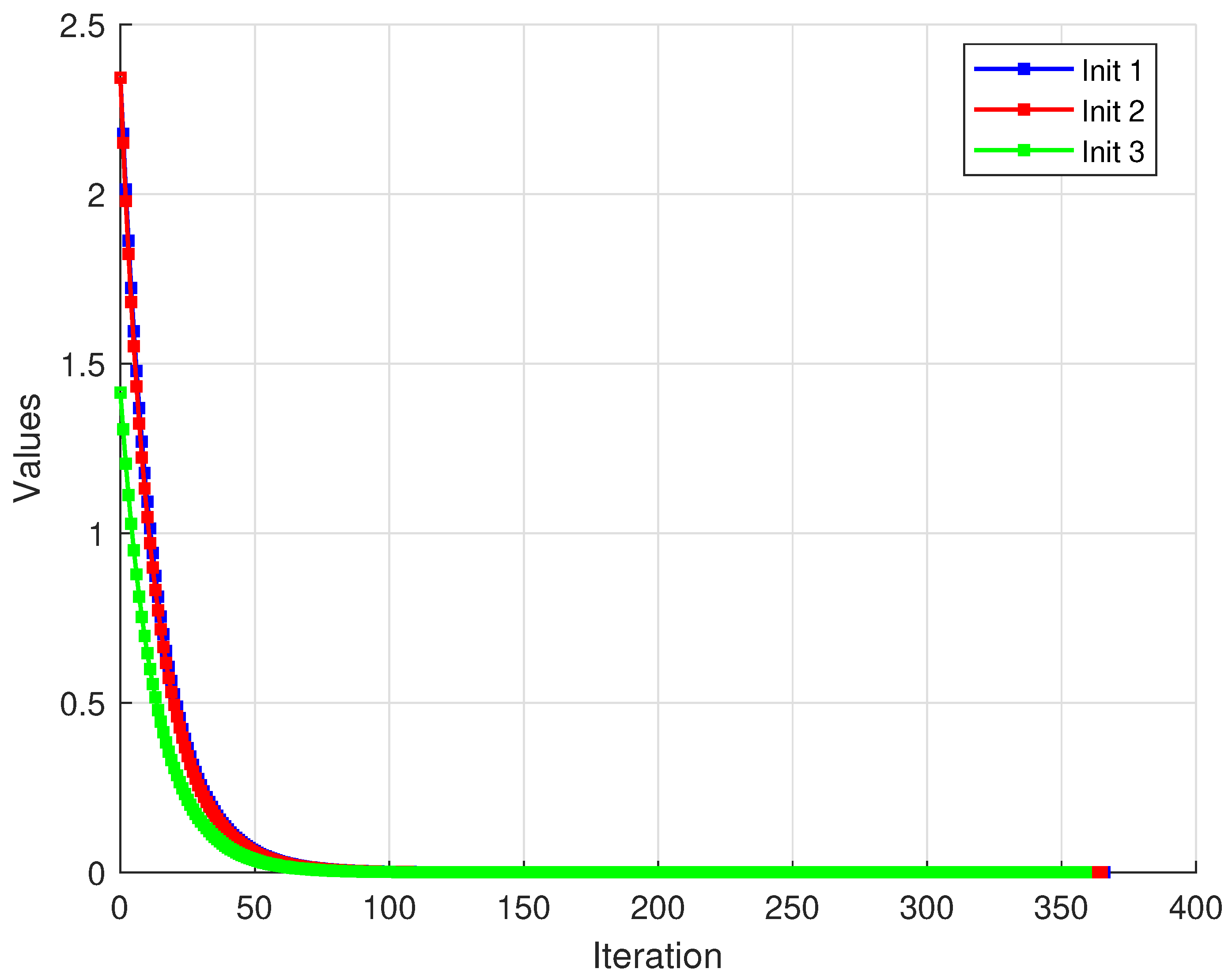

Example 1. We illustrate the proposed algorithm in the finite-dimensional Hilbert space . The monotone operator is chosen as , the feasible set is , and the mappings are and . The projection onto is defined componentwise as . The distinct initial points are taken as , , and , with parameters , , , and .

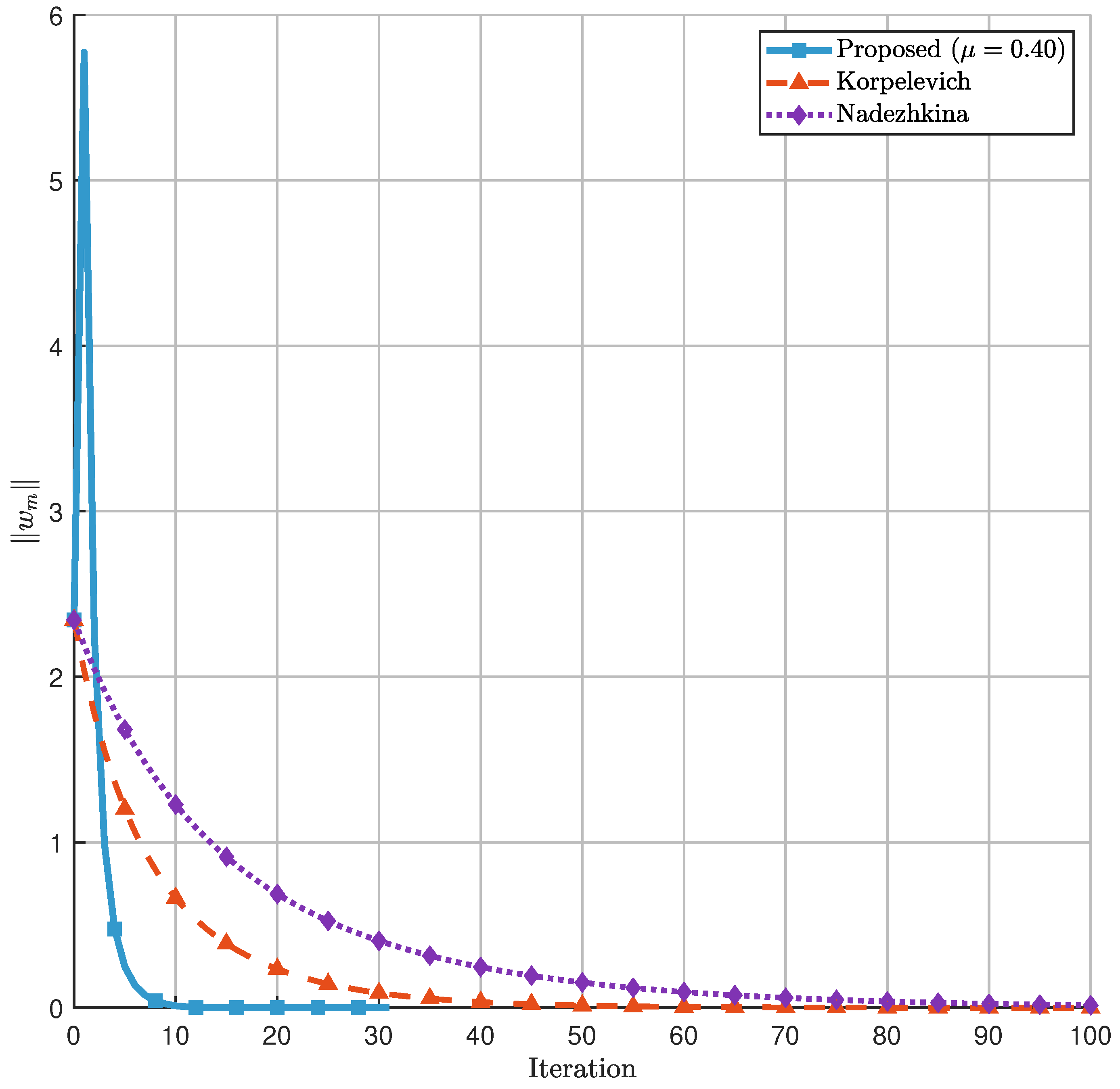

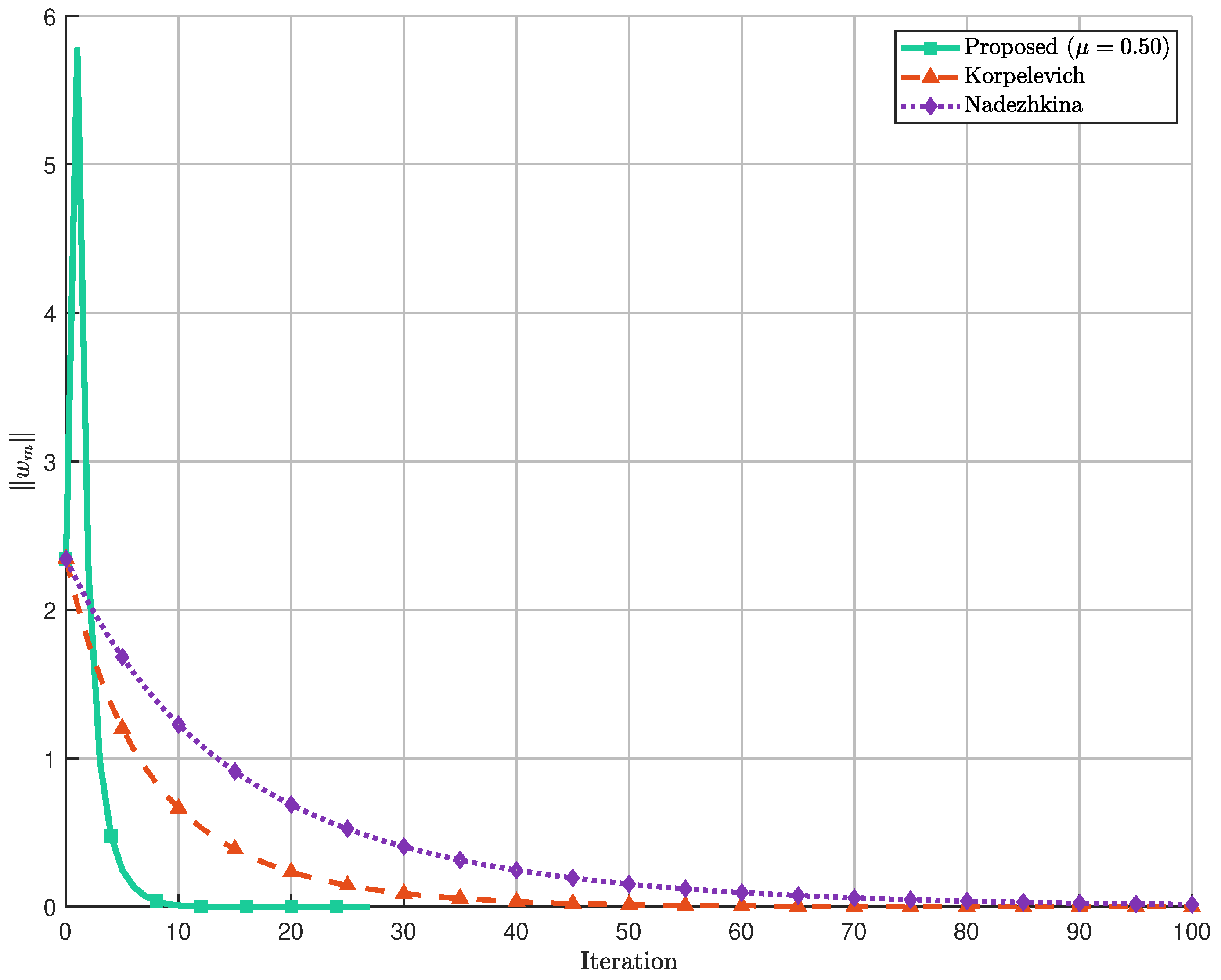

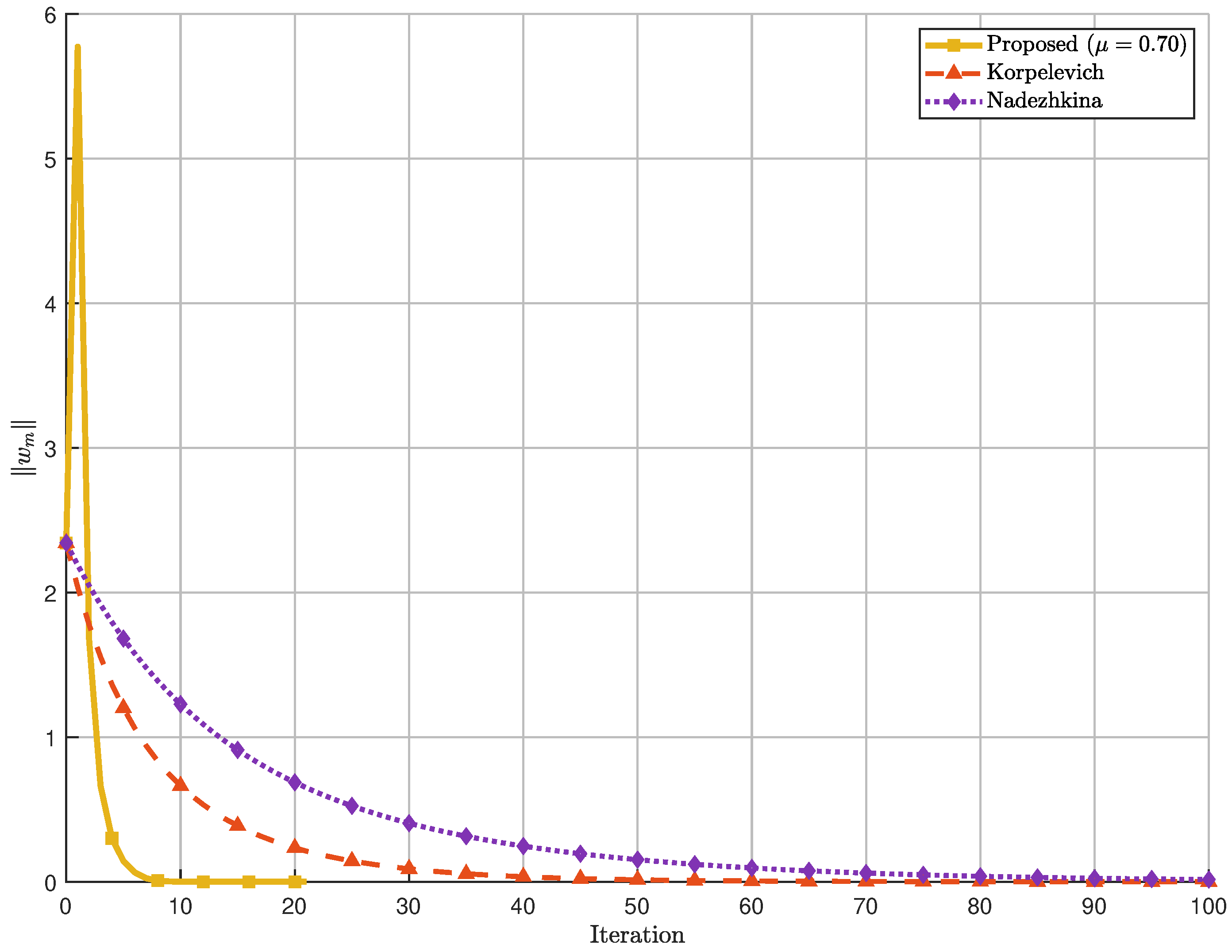

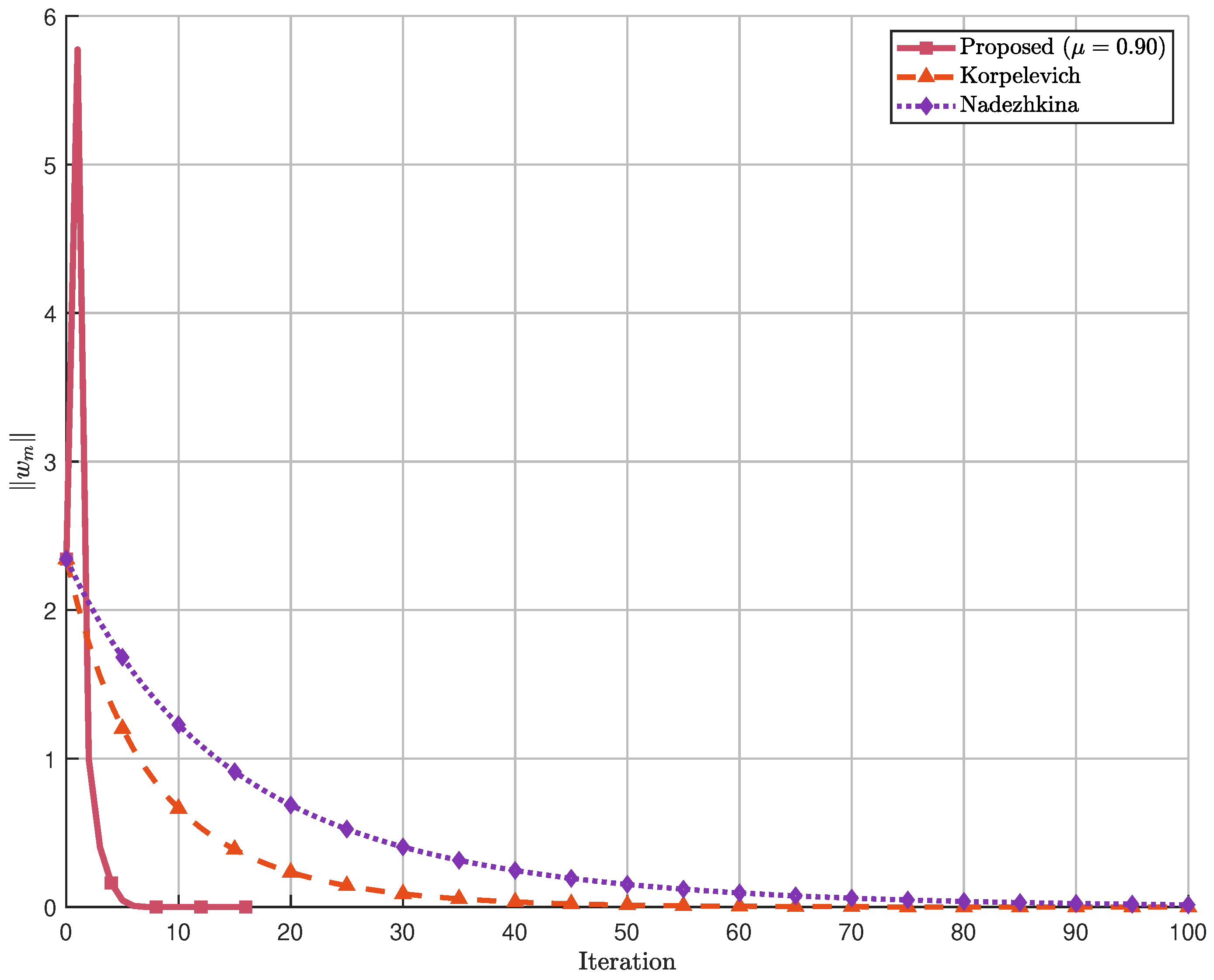

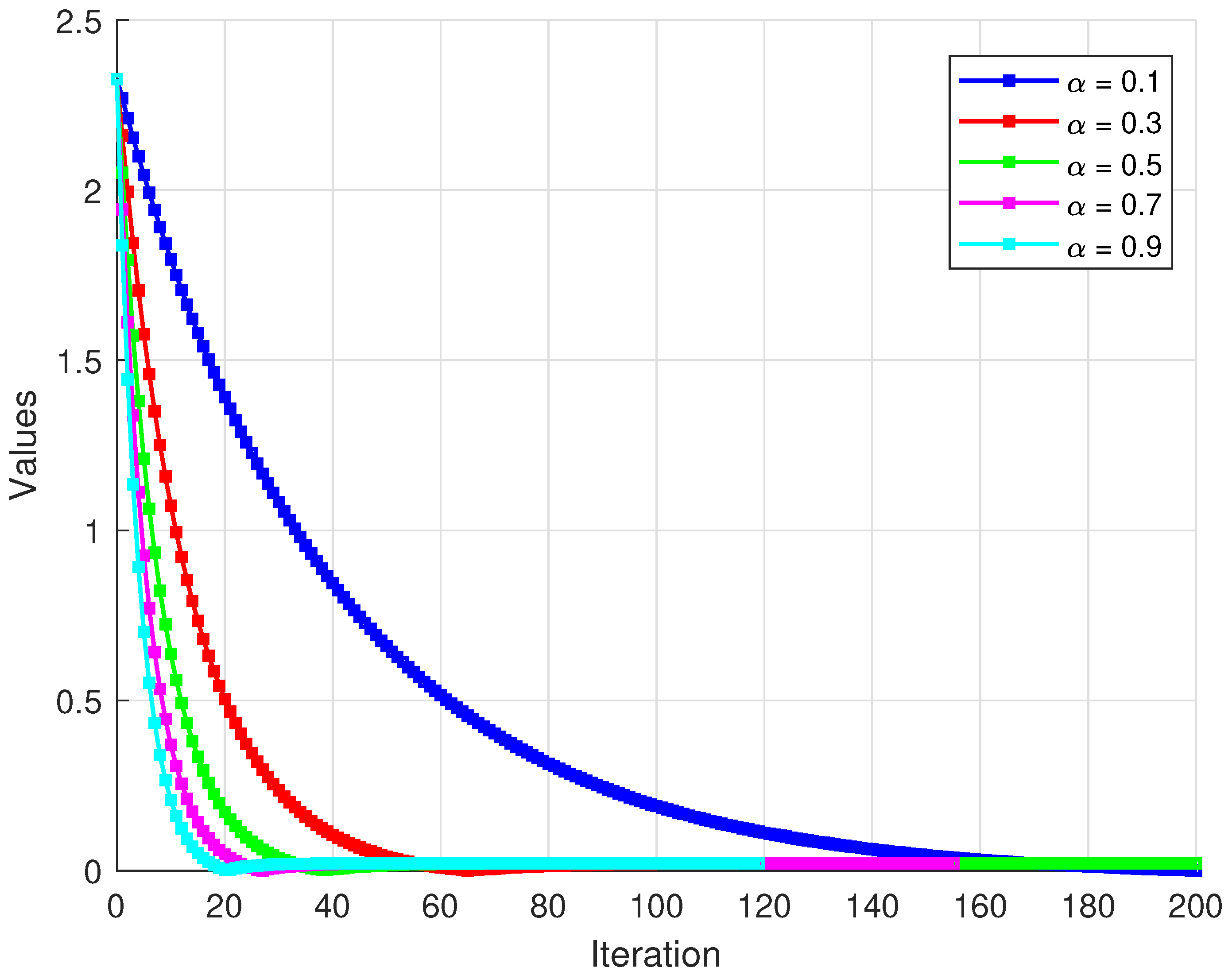

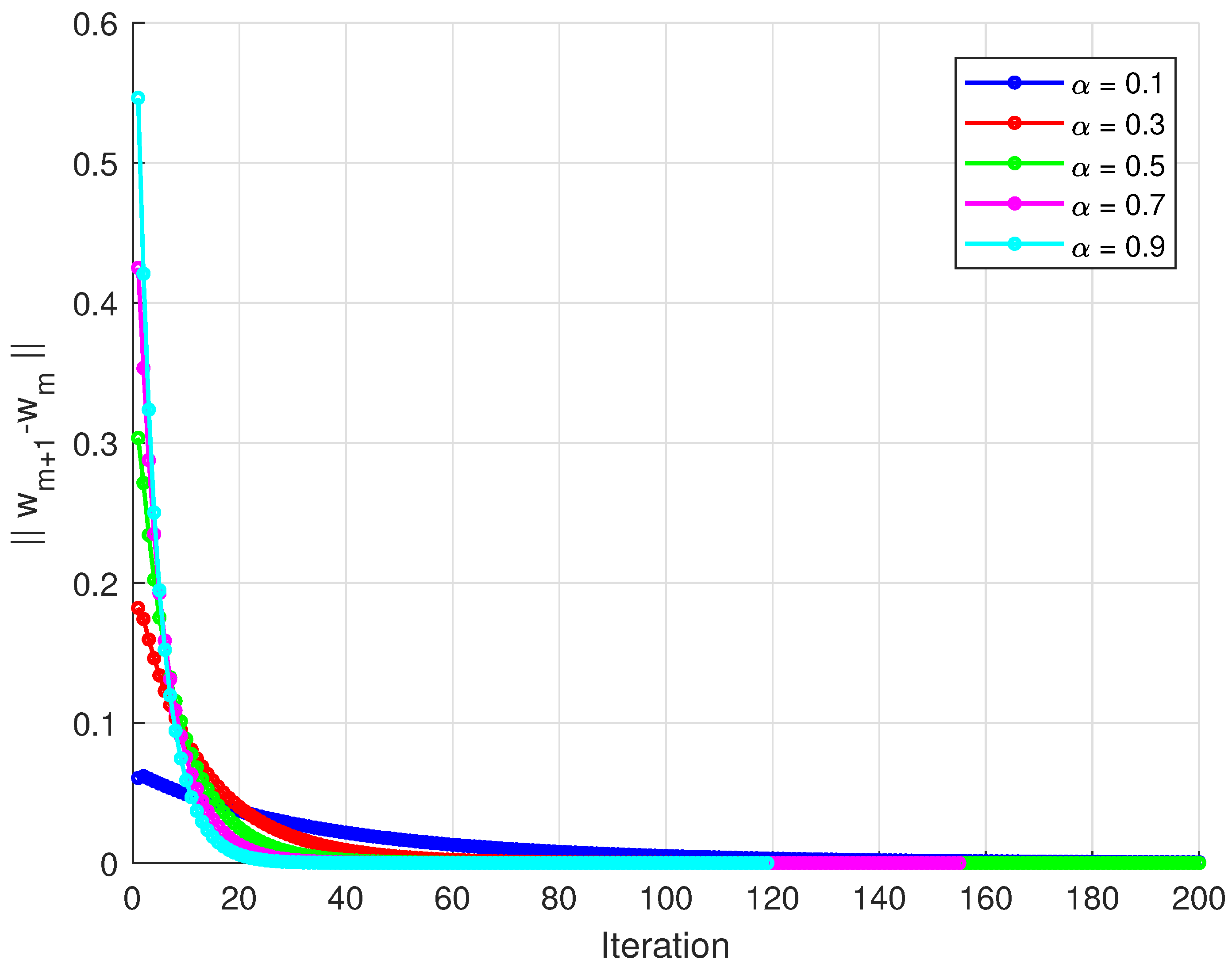

The sequence is generated by Algorithm 1. All numerical computations and graphical analyses were performed in MATLAB R2015a. Figure 1 displays the convergence paths of the algorithm from different starting points; the trajectories from the different initial points converge to similar solution regions. Figure 2 shows the norm of the differences between consecutive iterates: all three curves exhibit a rapid initial decrease followed by gradual convergence. Figure 3 tracks the distance from each iterate to the approximate solution; the different initial points converge at comparable rates to the same solution region. Figure 4 and Figure 5 display the convergence of the generated sequence and the step differences for distinct values of α with initial point . The numerical results show that the iterates converge to a unique fixed point (close to the origin) and that the step-to-step differences decay rapidly to zero. This is consistent with the weak convergence established in Theorem 1, since weak and strong convergence coincide in finite-dimensional spaces. The iteration was terminated when .

4.1. Convergence Comparison Analysis

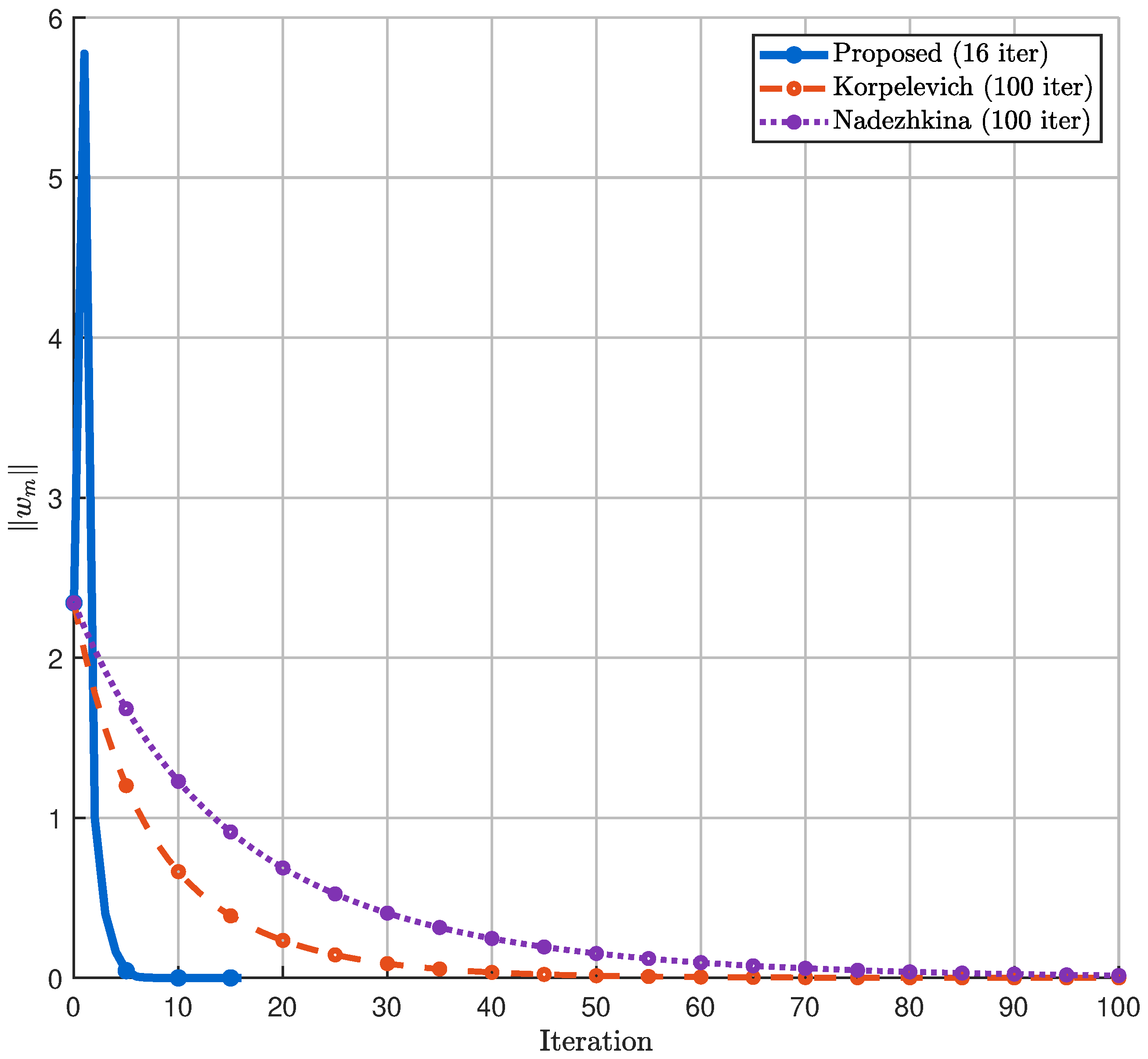

We compare the performance of three algorithms: the proposed method, the Korpelevich algorithm [10], and the Nadezhkina–Takahashi algorithm [20]. The comparison is based on their convergence behavior and computational efficiency. All three algorithms start from the same initial point, and the parameter values are listed in Table 1.

Figure 6 illustrates the convergence behavior of the three algorithms, where the y-axis represents the norm

(distance from solution) and the x-axis shows the iteration count. In this example, the proposed algorithm converges fastest, reaching the solution tolerance in fewer iterations than the two classical methods. The Korpelevich method [10] converges steadily but more slowly, while the Nadezhkina–Takahashi algorithm [20] shows the slowest convergence of the three.

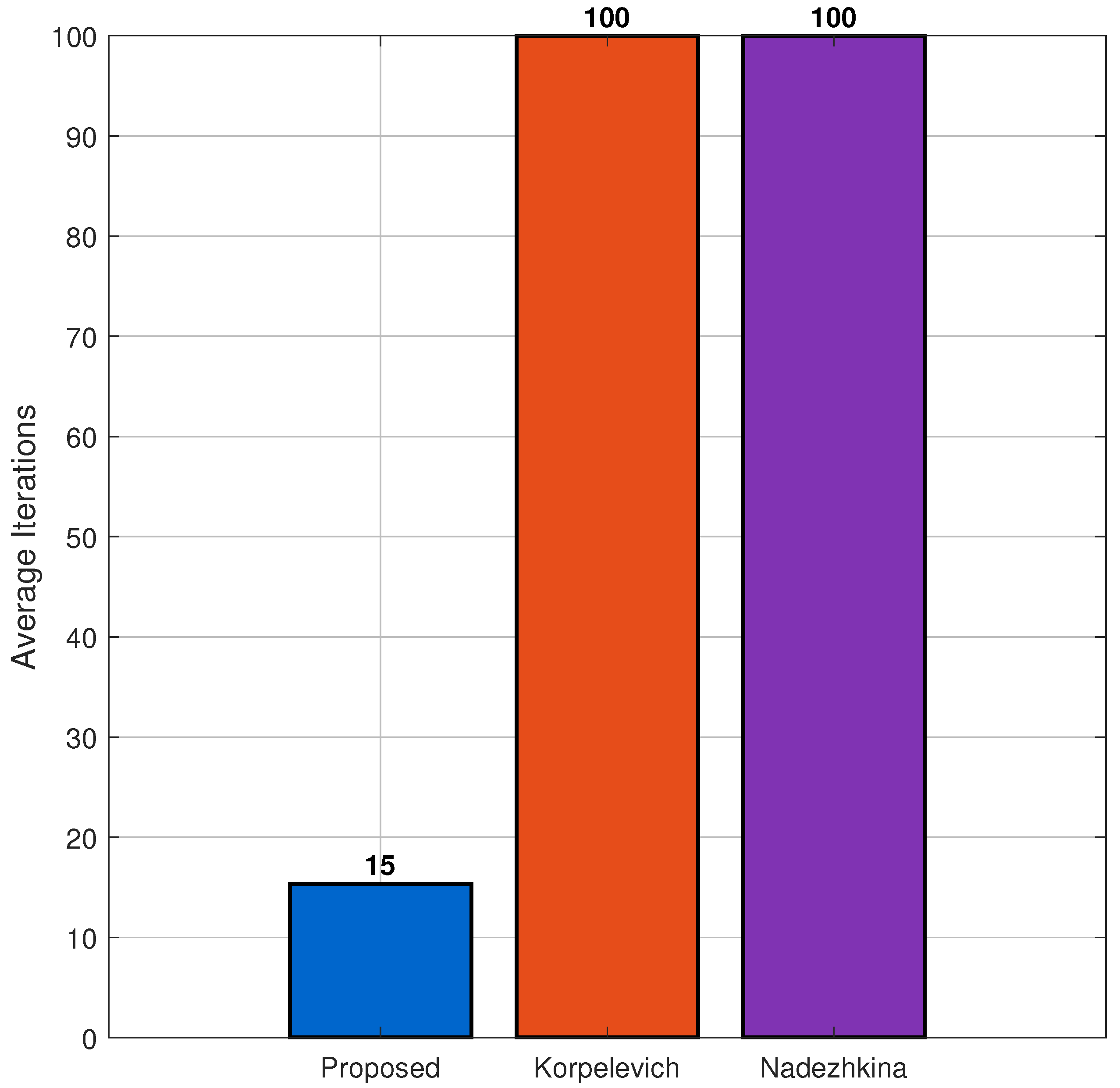

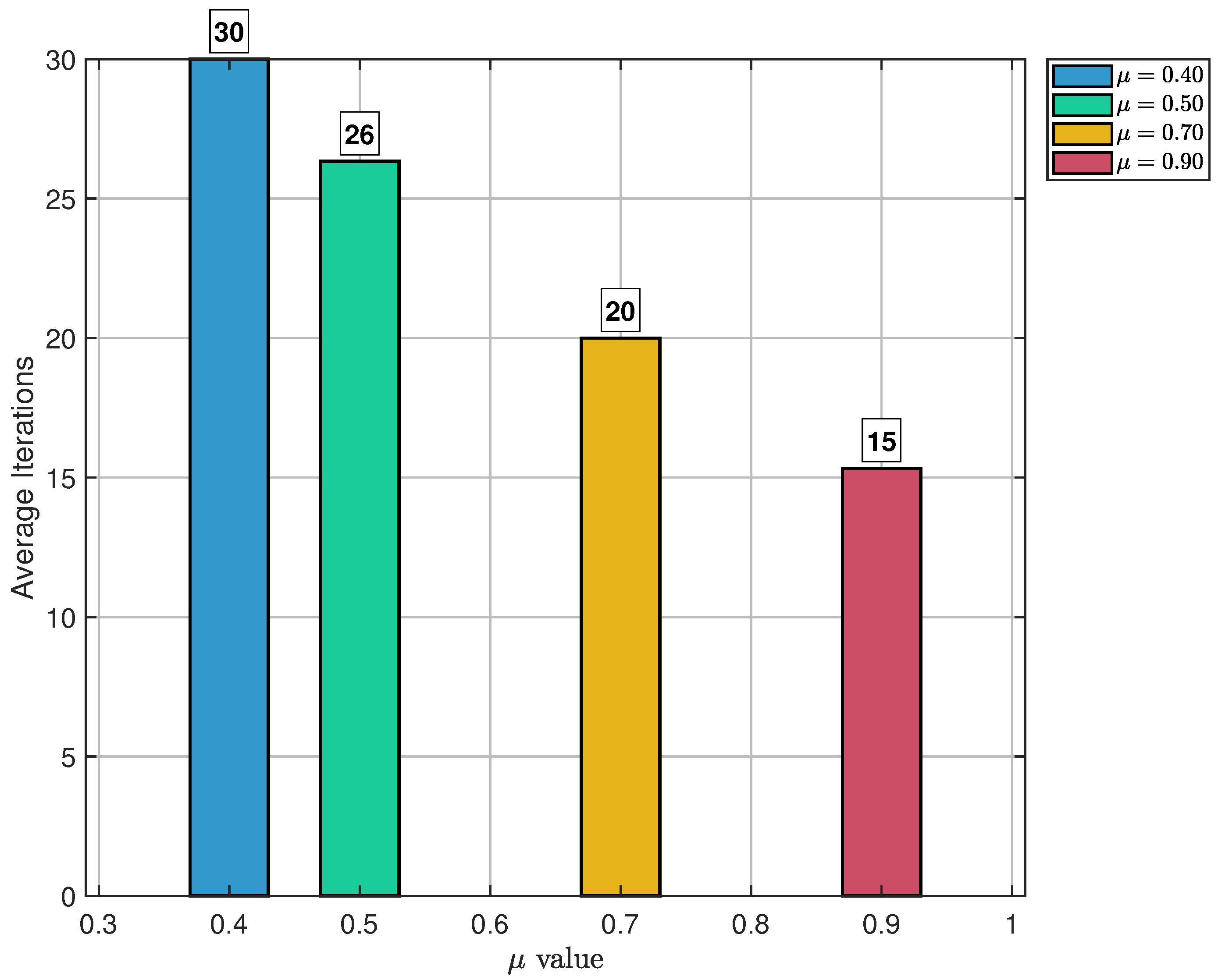

Figure 7 displays a bar chart comparing the average number of iterations required for convergence across multiple initial points. Each bar corresponds to one algorithm, with numeric labels indicating the exact averages. The proposed algorithm requires the lowest average number of iterations, making it the most efficient of the three methods in this test. The performance comparison is summarized in Table 2.

: Optimal performance with fastest convergence;

: Good performance, slightly slower than optimal;

and : Suboptimal performance with slower convergence rates.

Figure 8.

Convergence for .


Figure 9.

Convergence for .


Figure 10.

Convergence for .


Figure 11.

Convergence for .


Figure 12 demonstrates that

provides the best performance with the lowest average iteration count. The performance degradation as

moves away from this optimal value in either direction highlights the importance of proper parameter tuning.

Overall, in these experiments the proposed algorithm required fewer iterations than the Korpelevich and Nadezhkina–Takahashi methods. Together with the weak convergence guarantee of Theorem 1, this indicates its practical value for solving problems in this class.

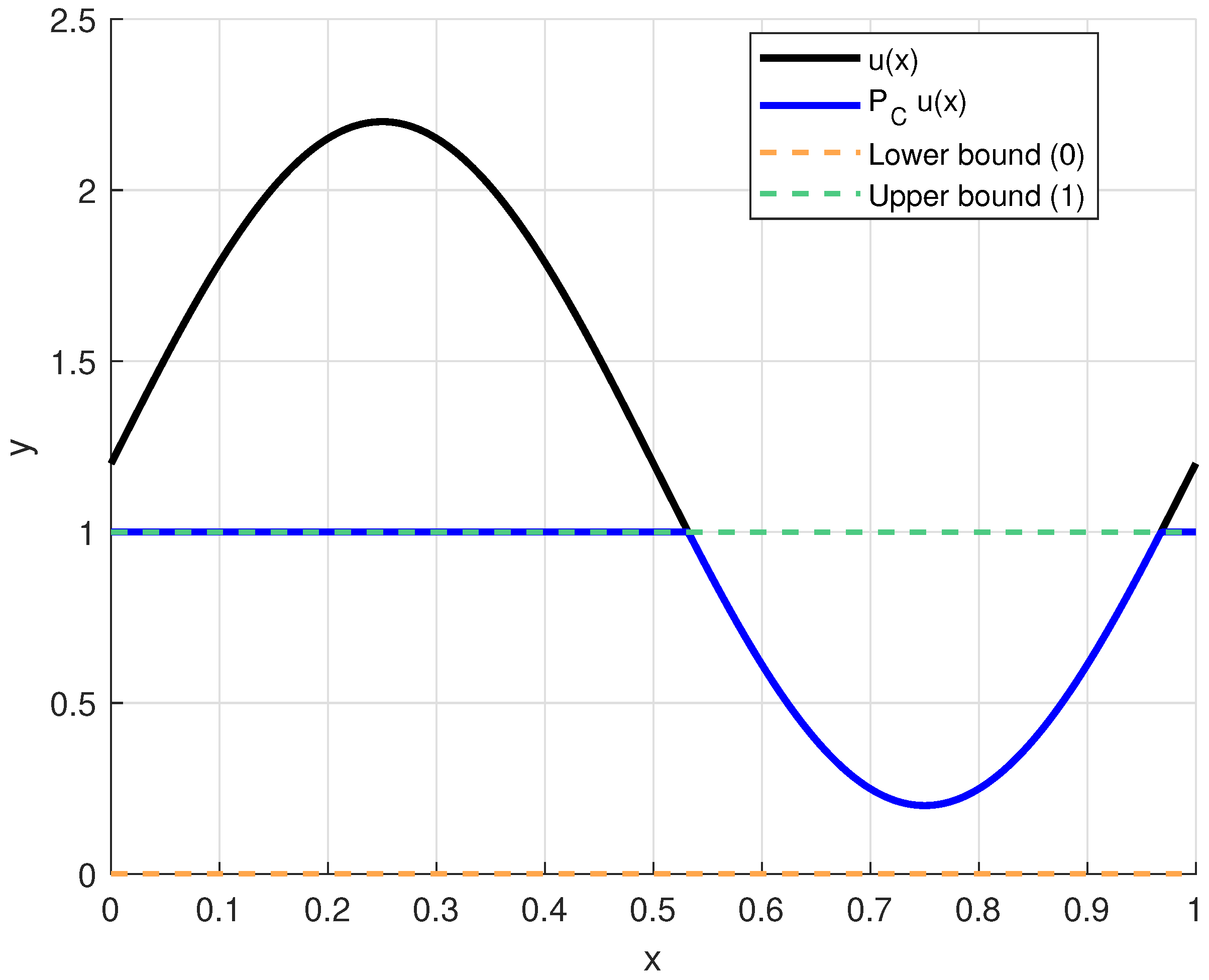

Example 2. We now consider the Hilbert space with the inner product . Functions are discretized over N uniform grid points, and the norm is approximated by the discrete -norm . Consider , and let the projection mapping onto be defined pointwise for any function as . The projection is illustrated in Figure 13. We define the operators , and , where the target function is . The iteration step applies and then projects the result onto via . Since is the identity, . The HFPP condition, together with the contraction and the projection , leads numerically to the solution . Thus, the generated sequence converges weakly to 0.2.

4.2. Application in Image Reconstruction

We analyze a MATLAB implementation of an iterative optimization algorithm for image deconvolution and reconstruction. The algorithm addresses the problem of recovering an original image from blurred and noisy observations using a variational approach with projection constraints (see [

25] for details).

The algorithm solves the regularized least-squares optimization problem

subject to box constraints

where

H: Blurring operator (convolution with Gaussian kernel);

y: Observed noisy and blurred image;

x: Image to be reconstructed;

: Regularization term implicitly handled through operators U and V.
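The box-constrained least-squares model above can be illustrated in one dimension. The sketch below is not the MATLAB implementation used for Figure 14. It makes several assumptions:

- a hypothetical symmetric 3-tap blur kernel;
- a noiseless 1-D step signal in place of the phantom;
- box constraints $[0,1]$;
- no regularization term;
- plain projected gradient in place of the proposed algorithm.

Because the kernel weights are nonnegative and sum to 1, $\|H\| \le 1$. The step size 1 is therefore at most $1/\|H\|^2$, so the objective cannot increase from one iteration to the next.

```python
# Hypothetical 1-D illustration (not the authors' MATLAB code):
#   minimize 0.5*||H x - y||^2  subject to 0 <= x_i <= 1,
# solved by projected gradient. H is a symmetric 3-tap blur with zero boundary.

KERNEL = (0.25, 0.5, 0.25)


def blur(x):
    """Apply H: symmetric convolution with KERNEL and zero boundary (H^T = H)."""
    n = len(x)
    out = []
    for i in range(n):
        s = 0.0
        for offset, w in zip((-1, 0, 1), KERNEL):
            j = i + offset
            if 0 <= j < n:
                s += w * x[j]
        out.append(s)
    return out


def clip01(x):
    """Projection onto the box [0, 1]^n."""
    return [min(max(v, 0.0), 1.0) for v in x]


def objective(x, y):
    r = [hx - yi for hx, yi in zip(blur(x), y)]
    return 0.5 * sum(ri * ri for ri in r)


def projected_gradient_deblur(y, iters=300, step=1.0):
    """step = 1 <= 1/||H||^2 because the kernel is nonnegative and sums to 1."""
    x = clip01(y)                                   # start from the observation
    history = [objective(x, y)]
    for _ in range(iters):
        r = [hx - yi for hx, yi in zip(blur(x), y)]
        g = blur(r)                                 # gradient H^T (H x - y)
        x = clip01([xi - step * gi for xi, gi in zip(x, g)])
        history.append(objective(x, y))
    return x, history


x_true = [0.0] * 5 + [1.0] * 6 + [0.0] * 5          # hypothetical 1-D "phantom"
y_obs = blur(x_true)                                 # noiseless blurred data
x_rec, hist = projected_gradient_deblur(y_obs)
print(hist[0], hist[-1])  # objective at the start and at the end
```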

4.3. Implementation

- (i)

Data Generation:

Original Image: Shepp–Logan phantom of size .

Blur Kernel: Gaussian filter of size with .

Noise Model: Additive Gaussian noise with variance .

- (ii)

The main algorithm components are as follows:

- (iii)

Algorithm parameters are listed in Table 3.

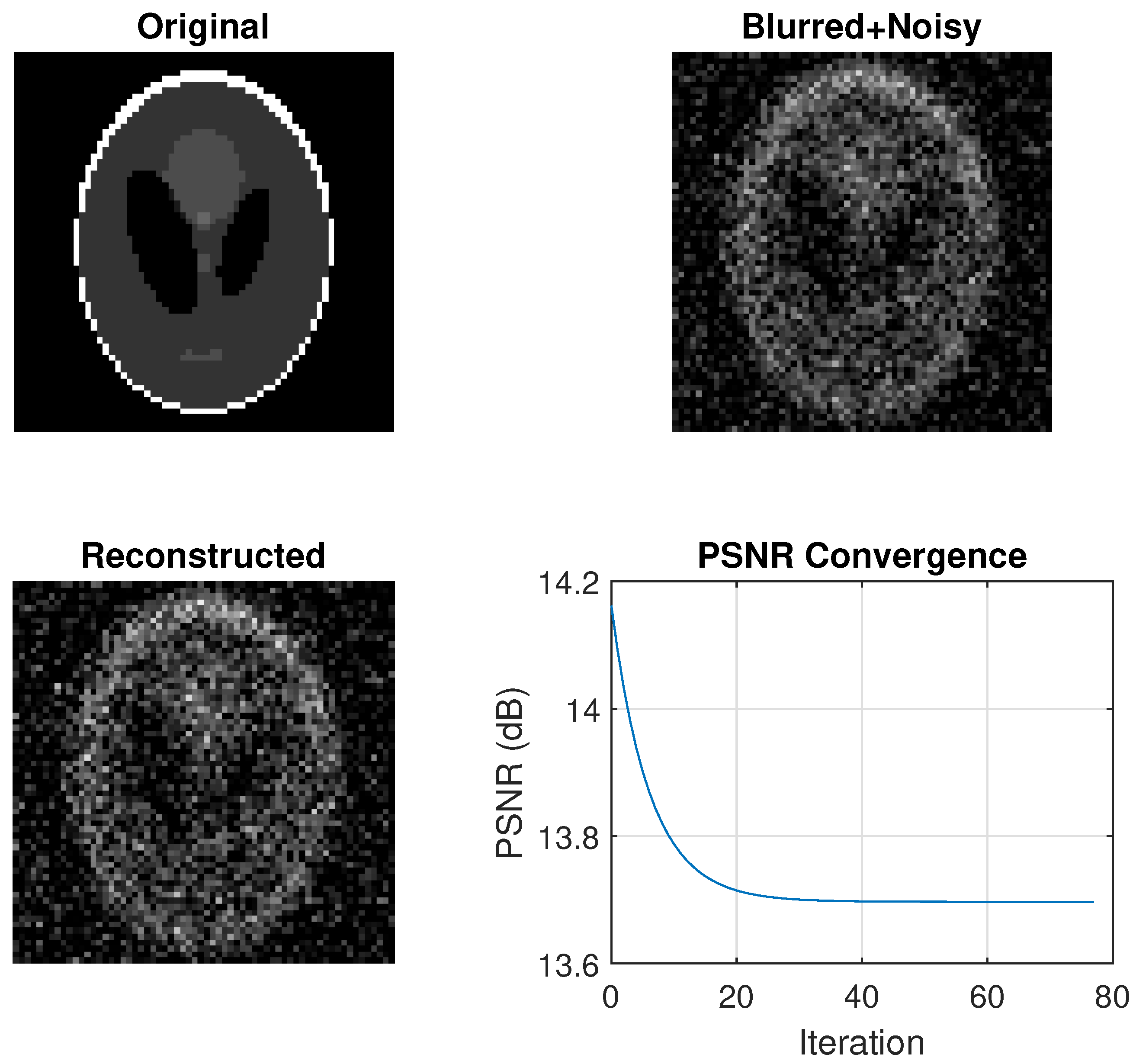

The generated figure, shown in

Figure 14, contains four subplots:

- (i)

Original phantom: Ground truth image for comparison.

- (ii)

Blurred + Noisy: Degraded input image (PSNR ≈ 20–25 dB).

- (iii)

Reconstructed: Final output after algorithm convergence.

- (iv)

PSNR Convergence: Monotonic improvement in reconstruction quality.

Figure 14.

Image deconvolution, restoration, and convergence.


In this experiment, the iterative algorithm reconstructs the image from its blurred and noisy observation, and the PSNR improves monotonically over the iterations. The approach can be extended with more sophisticated regularization terms to improve performance in more challenging scenarios.