A New Memory-Processing Unit Model Based on Spiking Neural P Systems with Dendritic and Synaptic Behavior for Kronecker Matrix–Matrix Multiplication

Abstract

1. Introduction

2. The Proposed Memory-Processing Unit Model Based on Spiking Neural P Systems

- 1. O is the alphabet of spikes.

- 2. The neurons of the system, defined as follows:

- (a) A multiset of spikes over the alphabet O.

- (b) A finite set of spiking rules of the following forms:

- i. Firing rules, where E is a regular expression over the alphabet O and d is the delay of the rule in time units.

- ii. Forgetting rules.

- iii. Request rules, where Q is a finite set of queries of two forms. A query of the first form indicates that a neuron requests p copies of a given spike from another neuron. A query of the second form indicates that all spikes of that kind are requested from that neuron [32].

- (c) The synapses between neurons. Each synapse has a specific synaptic weight (w), which determines the number of spikes received by the target neuron [33].

- (d) The input and output neurons of the system.
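The two query forms of the request rules can be illustrated with a minimal Python sketch. It is not the formal definition from [32]: it assumes that each neuron is a plain multiset (`collections.Counter`) keyed by spike type, that requested spikes are moved out of the source neuron, and that a query asking for more copies than the source holds simply does not apply. The names `apply_query` and `ALL` are hypothetical.

```python
from collections import Counter

ALL = None  # hypothetical marker for the "request all spikes" query form


def apply_query(neurons, requester, source, spike, count):
    """Move `count` copies of `spike` (every copy if count is ALL)
    from neurons[source] to neurons[requester].

    Returns True if the query was satisfied, or False if the source held
    too few copies, in which case nothing changes (an assumption).
    """
    available = neurons[source][spike]
    wanted = available if count is ALL else count
    if wanted > available:
        return False
    neurons[source][spike] -= wanted
    neurons[requester][spike] += wanted
    return True


# Neuron "j" starts with five spikes of type a and two of type b.
neurons = {"i": Counter(), "j": Counter({"a": 5, "b": 2})}
ok_p = apply_query(neurons, "i", "j", "a", 3)       # request 3 copies of a
ok_all = apply_query(neurons, "i", "j", "b", ALL)   # request every b
ok_fail = apply_query(neurons, "i", "j", "a", 4)    # only 2 copies of a remain
```

After these three calls, neuron `i` holds three spikes of type `a` and two of type `b`, and the third query is rejected because neuron `j` has only two copies of `a` left.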

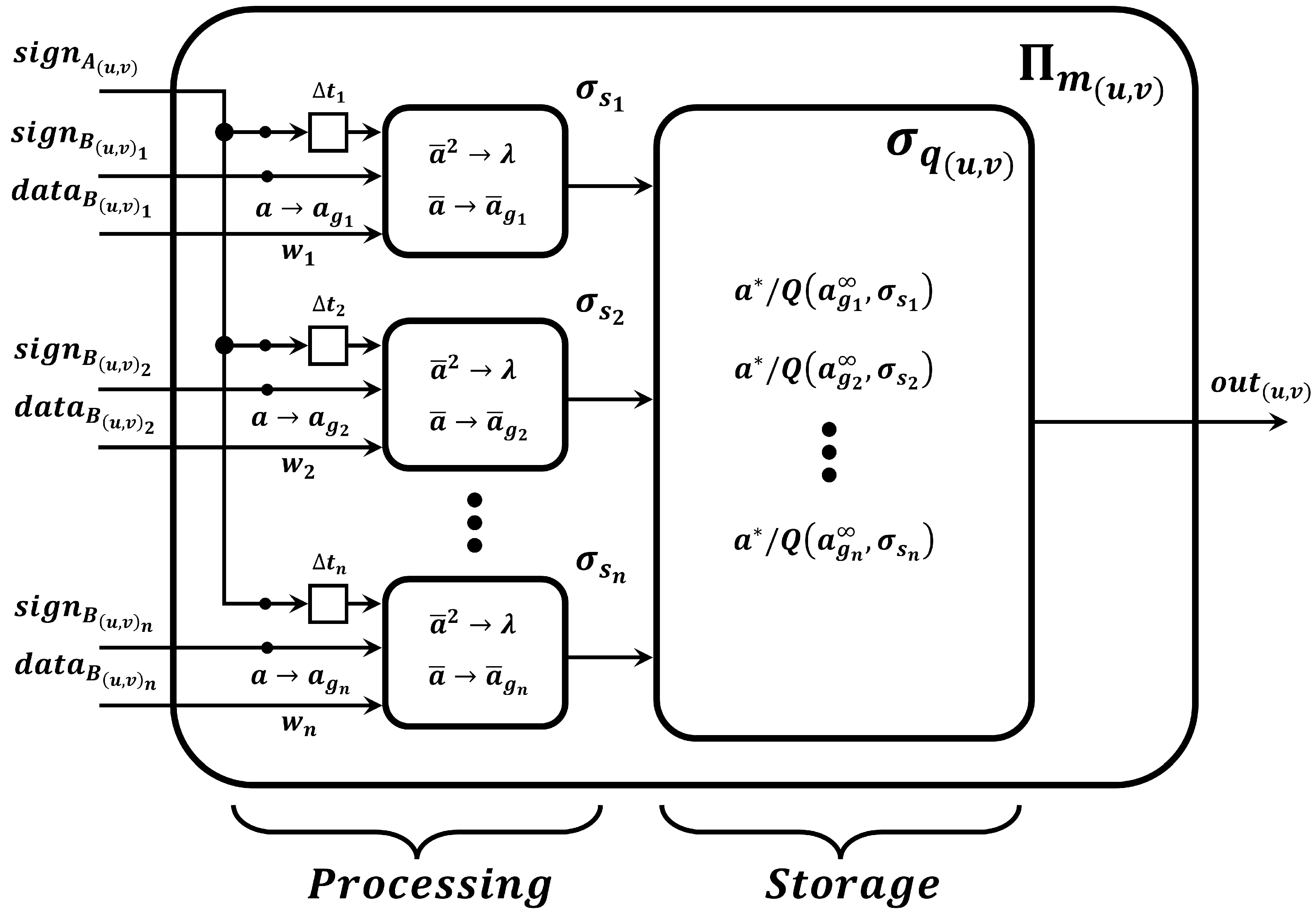

- Basic spiking neural memory cell. The proposed neural memory cell is composed of a set of dendritic delays, a set of neurons, a set of synaptic weights, and a single neuron in which the results accumulate, as shown in Figure 1.

Figure 1. Schematic of the basic neural memory cell.

The proposed spiking neural memory cell performs the dot product between two vectors, A and B, in parallel. To perform this operation, the vectors A and B must comply with the following data format:

- The components of vector A are represented by the synaptic weights.

- The components of vector B are decomposed into individual integer digits and encoded as spike trains.

- The sign of each component of vectors A and B is represented by an anti-spike associated with that component.

- The magnitude of each component of the result is represented by the number of spikes accumulated in a specific neuron. If a component of the result is negative, an anti-spike is associated with its magnitude.

- All components of the resulting vector are stored in the same neuron, which can be read and written through the respective request rules.
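The data format above can be made concrete with a short Python sketch. It rests on several assumptions that are not fixed by the text: digits are taken in base 10, a digit d is encoded as a burst of d spikes written as the string `"a" * d`, and a negative sign is modelled as a Boolean flag that stands for the anti-spike. The helper names are hypothetical.

```python
def encode_component(value):
    """Split a signed integer into (has_anti_spike, digits).

    has_anti_spike is True for negative values; digits are the decimal
    digits of |value|, most significant first (base 10 is an assumption).
    """
    has_anti_spike = value < 0
    digits = [int(ch) for ch in str(abs(value))]
    return has_anti_spike, digits


def digits_to_spike_train(digits):
    """Encode each digit d as a burst of d spikes (an assumed encoding)."""
    return ["a" * d for d in digits]


def encode_weights(vector_a):
    """Magnitudes of A become synaptic weights; signs become anti-spike flags."""
    weights = [abs(a) for a in vector_a]
    anti_spikes = [a < 0 for a in vector_a]
    return weights, anti_spikes


sign_b, digits_b = encode_component(-305)
train_b = digits_to_spike_train(digits_b)
weights_a, signs_a = encode_weights([2, -3, 4])
```

Here the component -305 of B yields an anti-spike flag and the digits 3, 0, 5, while the vector A = (2, -3, 4) yields the weights 2, 3, 4 with an anti-spike on the second component only.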

Specifically, the operation of the proposed neural memory cell is described as follows:

- 1. At the initial simulation step, the magnitudes of the components of vector A are assigned to the synaptic weights, while the signs of the components are loaded as anti-spikes into the dendritic delays.

- 2. Next, each neuron receives the anti-spikes that represent the signs of the components of vectors A and B from the dendritic delays and from the inputs, respectively. At this time, each neuron activates one of its rules to determine the sign of the corresponding component of the resulting vector: the forgetting rule is activated when the sign is positive, and the firing rule is activated when the sign is negative. When the sign of a component of the resulting vector is negative, it is sent to the neuron that stores the results.

- 3. Over the following simulation steps, each component of vector B is fed to the neurons in the form of trains of colored spikes when the firing rules on the synapses are activated. During this time interval, the neuron that stores the results applies its request rules. As a result, this neuron contains the results of the dot product, where each component of the result is expressed by spikes of its own color, accompanied by an anti-spike when the component is negative.
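The three steps above can be mimicked by the following Python sketch, which is a plain arithmetic analogue rather than a simulation of the spiking rules. It assumes that a sign neuron holding exactly one anti-spike fires (negative result) and that zero or two anti-spikes trigger the forgetting rule (positive result). It also assumes that each component of B arrives as a total of |b| spikes, each multiplied by the synaptic weight, and it ignores the digit-wise schedule of the spike trains. The names `memory_cell` and `signed_sum` are hypothetical.

```python
def memory_cell(vector_a, vector_b):
    """Return one (magnitude, is_negative) pair per component."""
    if len(vector_a) != len(vector_b):
        raise ValueError("A and B must have the same length")

    # Step 1: magnitudes of A become synaptic weights; signs of A are
    # kept as anti-spike flags (standing in for the dendritic delays).
    weights = [abs(a) for a in vector_a]
    anti_a = [a < 0 for a in vector_a]

    # Step 2: each sign neuron sees the anti-spikes of A and B. Exactly
    # one anti-spike means a negative result (assumed firing condition).
    anti_b = [b < 0 for b in vector_b]
    negative = [sa != sb for sa, sb in zip(anti_a, anti_b)]

    # Step 3: every incoming spike of B is multiplied by the weight and
    # accumulated in the storing neuron, one entry ("color") per component.
    store = []
    for w, b, neg in zip(weights, vector_b, negative):
        spikes = 0
        for _ in range(abs(b)):
            spikes += w
        store.append((spikes, neg))
    return store


def signed_sum(store):
    """Combine the stored components into a single dot-product value."""
    return sum(-m if neg else m for m, neg in store)


store = memory_cell([2, -3, 4], [5, 6, -1])
dot = signed_sum(store)
```

For A = (2, -3, 4) and B = (5, 6, -1), the stored magnitudes are 10, 18, and 4, with the last two marked as negative, which gives a dot product of -12.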

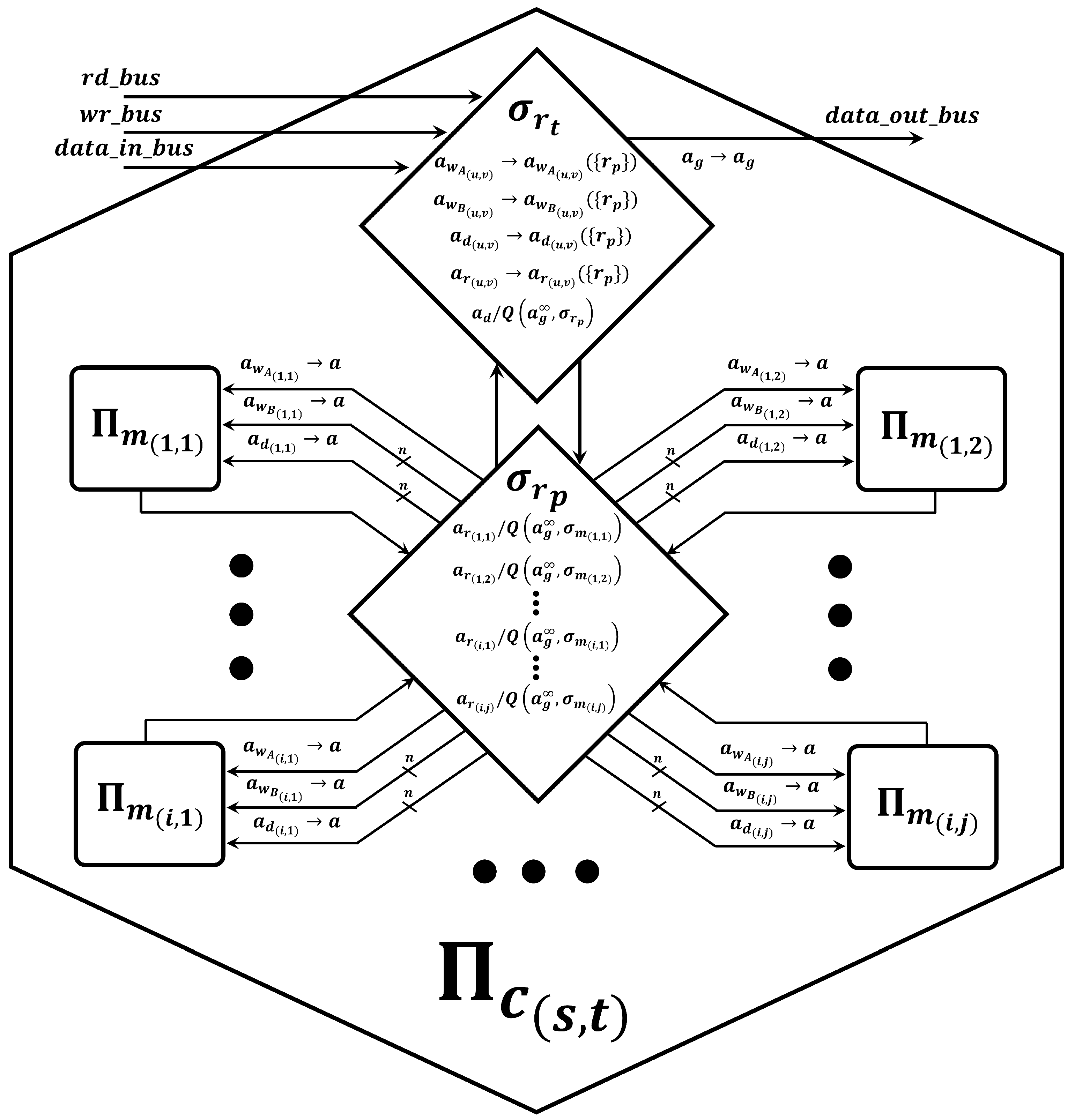

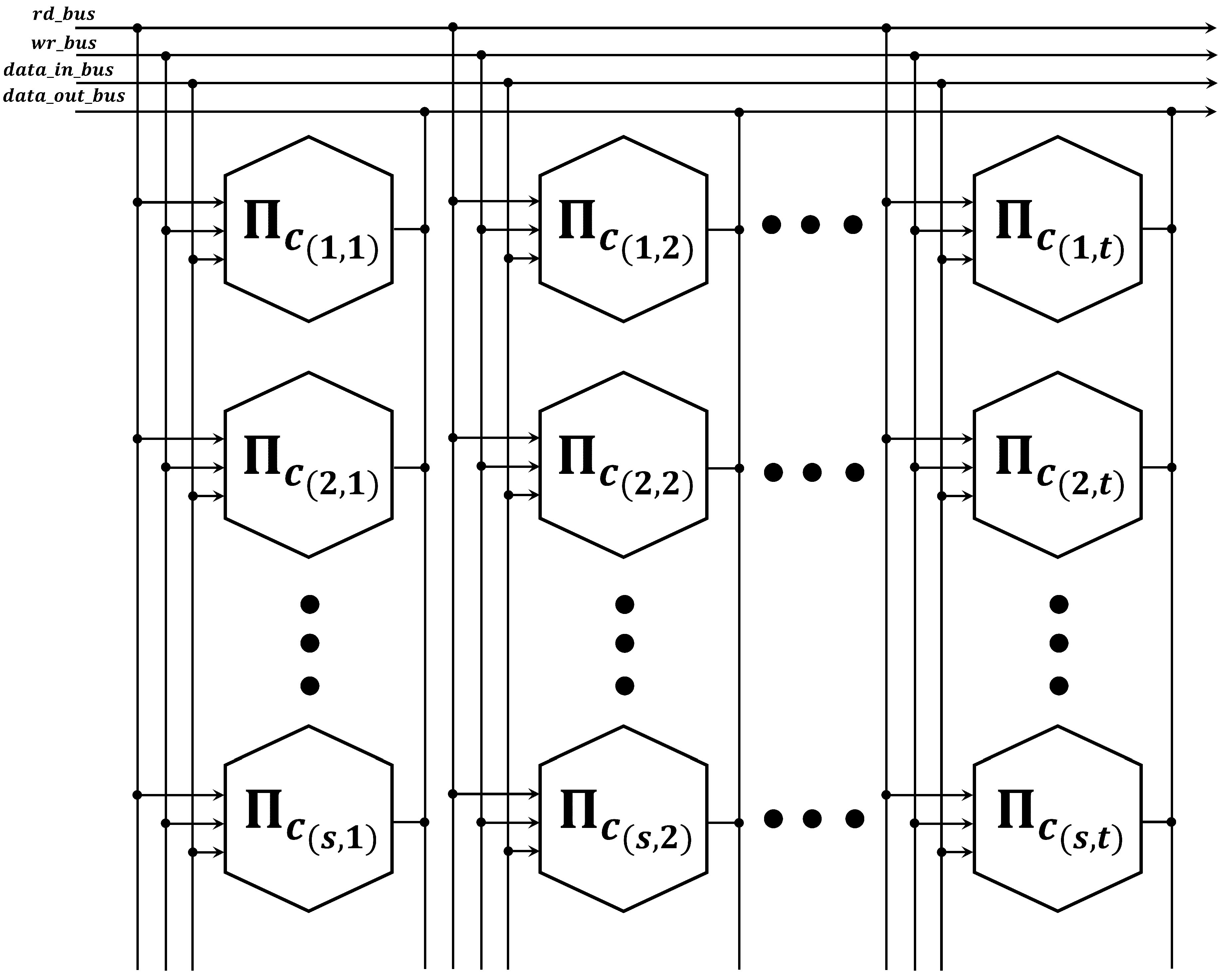

Therefore, each basic spiking neural memory cell can compute vector arithmetic operations in parallel. To compute matrix operations, such as the inner product and the Kronecker product, these cells need to be interconnected by two router neurons, as shown in Figure 2. Under this configuration, we call the component a spiking neural memory core.

Figure 2. Schematic of the spiking neural memory core.

- Spiking neural memory core. Given two matrices A and B, the spiking neural memory core computes their matrix product. This multiplication is possible when the inner dimensions of the matrices agree, that is, when the number of columns of A equals the number of rows of B. To calculate this operation, the spiking neural memory core performs the dot product between the rows of matrix A and the corresponding columns of matrix B through the basic spiking neural memory cells. To obtain the element in row i and column j of the resulting matrix, the spiking neural memory core performs the dot product between row i of A and column j of B. In general, the proposed spiking neural memory core computes the matrix multiplication as follows:

- Writing stage. At this stage, the data are fed to each spiking neural memory cell. Specifically, the components of matrices A and B are sent from the buses to the basic spiking neural memory cells. This is achieved by routing the spikes through the router neurons under the following considerations:

- 1. The components of matrices A and B are represented by two distinct types of spikes, one for each matrix, while the signs of the components are represented by a further type of spike. Here, u and v denote matrix indices, which allow the spikes to be distinguished between different neurons.

- 2. The writing stage begins when the sign spikes of each component are sent to the router neuron through its input. Here, the firing rule is activated, and these sign spikes are passed on to a router neuron. Therefore, the rules on the synapses of the memory cells are activated according to their indices.

- 3. Subsequently, the spikes of the matrix components enter the router neuron through its input. As in the previous case, to transfer the matrix components, the spikes are distributed from the router neuron to the different basic spiking neural memory cells.

- Processing stage. Once the matrix components are loaded into memory, the calculation of the product begins. Each basic neural memory cell performs the dot product between a row of A and the corresponding column of B. The resulting matrix C has as many rows as A and as many columns as B, and its components can be generalized as $c_{ij} = \sum_{k} a_{ik} b_{kj}$. This process is repeated for all elements of the resulting matrix C; thus, the resulting components are stored in the basic spiking neural memory cells.

- Reading stage. At this stage, the results of the matrix multiplication can be read in two steps:

- 1. A spike is sent to the router neuron through its input. The type of this spike carries the indices u and v of the component of the resulting matrix to be read. At this moment, the corresponding rule is activated and a spike is sent to a router neuron. The router neuron then requests the component from one of the basic spiking neural memory cells by activating one of its request rules.

- 2. In this step, if the requested result component is in a router neuron, a spike must be sent into the router neuron through its input. The neuron then makes a request for the component and stores it in its soma. As a consequence, the requested result component is sent to the output of the spiking neural memory core.
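The writing, processing, and reading stages can be summarized in a small Python sketch. It is an arithmetic analogue of the memory core, not a spiking simulation, and it makes assumptions the text does not fix: one memory cell is allocated per element of the resulting matrix, the router neurons are modelled as dictionary lookups keyed by the indices (u, v), and indices start at zero. The class name `MemoryCore` and its methods are hypothetical.

```python
class MemoryCore:
    """Arithmetic analogue of the three stages of the memory core."""

    def __init__(self):
        self.cells = {}    # (u, v) -> (row u of A, column v of B)
        self.results = {}  # (u, v) -> component of the resulting matrix

    def write(self, matrix_a, matrix_b):
        """Writing stage: route a row of A and a column of B to each cell."""
        if len(matrix_a[0]) != len(matrix_b):
            raise ValueError("columns of A must equal rows of B")
        columns_b = list(zip(*matrix_b))
        for u, row in enumerate(matrix_a):
            for v, col in enumerate(columns_b):
                self.cells[(u, v)] = (list(row), list(col))

    def process(self):
        """Processing stage: every cell computes its dot product."""
        for key, (row, col) in self.cells.items():
            self.results[key] = sum(a * b for a, b in zip(row, col))

    def read(self, u, v):
        """Reading stage: request the component with indices (u, v)."""
        return self.results[(u, v)]


core = MemoryCore()
core.write([[1, 2], [3, 4]], [[5, 6], [7, 8]])
core.process()
c_10 = core.read(1, 0)
c_01 = core.read(0, 1)
```

With A = [[1, 2], [3, 4]] and B = [[5, 6], [7, 8]], reading the indices (1, 0) returns 43 and reading (0, 1) returns 22, which matches the ordinary matrix product.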

3. Discussion

4. Conclusions

- We design a basic spiking neural memory cell to perform arithmetic operations, such as addition, subtraction, and multiplication.

- We propose two router neurons to efficiently write and read the spikes in each neural memory cell.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ionescu, M.; Păun, G.; Yokomori, T. Spiking neural P systems. Fundam. Informaticae 2006, 71, 279–308.

- Pleṣa, M.I.; Gheorghe, M.; Ipate, F.; Zhang, G. Applications of spiking neural P systems in cybersecurity. J. Membr. Comput. 2024, 6, 310–317.

- Zandron, C. An Overview on Applications of Spiking Neural Networks and Spiking Neural P Systems. Lang. Coop. Commun. Essays Dedic. Erzsébet Csuhaj-Varjú Celebr. Her Sci. Career 2025, 15840, 267–278.

- Zeng, X.; Xu, L.; Liu, X.; Pan, L. On languages generated by spiking neural P systems with weights. Inf. Sci. 2014, 278, 423–433.

- Peng, H.; Wang, J. Spiking Neural P Systems and Variants. In Advanced Spiking Neural P Systems: Models and Applications; Springer: Berlin/Heidelberg, Germany, 2024; pp. 15–49.

- Shen, Y.; Liu, X.; Yang, Z.; Zang, W.; Zhao, Y. Spiking neural membrane systems with adaptive synaptic time delay. Int. J. Neural Syst. 2024, 34, 2450028.

- Wang, L.; Liu, X.; Han, Z.; Zhao, Y. Spiking neural P systems with neuron permeability. Neurocomputing 2024, 576, 127351.

- Shen, Y.; Qiu, L.; Yang, Z.; Zhao, Y. Weighted target indications spiking neural P systems with inhibitory rules and time schedule. J. Membr. Comput. 2024, 6, 245–254.

- Wu, R.; Zhao, Y. Spiking neural P systems with structural plasticity and mute rules. Theor. Comput. Sci. 2024, 1000, 114554.

- Garcia, L.; Sanchez, G.; Vazquez, E.; Avalos, G.; Anides, E.; Nakano, M.; Sanchez, G.; Perez, H. Small universal spiking neural P systems with dendritic/axonal delays and dendritic trunk/feedback. Neural Netw. 2021, 138, 126–139.

- Garcia, L.; Sanchez, G.; Avalos, J.G.; Vazquez, E. Spiking neural P systems with myelin and dendritic spines. Neurocomputing 2023, 552, 126522.

- Liu, Y.; Zhao, Y. Spiking neural P systems with membrane potentials, inhibitory rules, and anti-spikes. Entropy 2022, 24, 834.

- Zhao, Y.; Liu, X. Spiking neural P systems with microglia. IEEE Trans. Parallel Distrib. Syst. 2024, 35, 1239–1250.

- Lazo, P.P.L.; De La Cruz, R.T.A.; Macababayao, I.C.H.; Cabarle, F.G.C. Universality of SN P systems with stochastic application of rules. J. Membr. Comput. 2022, 4, 166–176.

- Lv, Z.; Bao, T.; Zhou, N.; Peng, H.; Huang, X.; Riscos-Núñez, A.; Pérez-Jiménez, M.J. Spiking neural p systems with extended channel rules. Int. J. Neural Syst. 2021, 31, 2050049.

- Ning, X.; Yang, G.; Sun, Z.; Song, X. On the universality of spiking neural P systems with multiple channels and Autapses. IEEE Access 2024, 12, 8773–8779.

- Song, T.; Rodríguez-Patón, A.; Zheng, P.; Zeng, X. Spiking neural P systems with colored spikes. IEEE Trans. Cogn. Dev. Syst. 2017, 10, 1106–1115.

- Cabarle, F.G.C.; Adorna, H.N.; Jiang, M.; Zeng, X. Spiking neural P systems with scheduled synapses. IEEE Trans. Nanobiosci. 2017, 16, 792–801.

- Pan, L.; Păun, G. Spiking neural P systems with anti-spikes. Int. J. Comput. Commun. Control 2009, 4, 273–282.

- Pan, L.; Wang, J.; Hoogeboom, H.J. Spiking neural P systems with astrocytes. Neural Comput. 2012, 24, 805–825.

- Wu, T.; Pan, L. Spiking neural P systems with communication on request and mute rules. IEEE Trans. Parallel Distrib. Syst. 2022, 34, 734–745.

- Rangel, J.; Anides, E.; Vázquez, E.; Sanchez, G.; Avalos, J.G.; Duchen, G.; Toscano, L.K. New High-Speed Arithmetic Circuits Based on Spiking Neural P Systems with Communication on Request Implemented in a Low-Area FPGA. Mathematics 2024, 12, 3472.

- Duchen, G.; Diaz, C.; Sanchez, G.; Perez, H. First steps toward memory processor unit architecture based on SN P systems. Electron. Lett. 2017, 53, 384–385.

- Gao, J.; Ji, W.; Chang, F.; Han, S.; Wei, B.; Liu, Z.; Wang, Y. A systematic survey of general sparse matrix-matrix multiplication. ACM Comput. Surv. 2023, 55, 1–36.

- Asifuzzaman, K.; Miniskar, N.R.; Young, A.R.; Liu, F.; Vetter, J.S. A survey on processing-in-memory techniques: Advances and challenges. Mem.-Mater. Devices Circuits Syst. 2023, 4, 100022.

- Zou, X.; Xu, S.; Chen, X.; Yan, L.; Han, Y. Breaking the von Neumann bottleneck: Architecture-level processing-in-memory technology. Sci. China Inf. Sci. 2021, 64, 160404.

- Hong, Y.; Jeon, S.; Park, S.; Kim, B.S. Implementation of an quantum circuit simulator using classical bits. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Incheon, Republic of Korea, 13–15 June 2022; pp. 472–474.

- Feng, L.; Yang, G. Deep kronecker network. Biometrika 2024, 111, 707–714.

- Panagos, I.I.; Sfikas, G.; Nikou, C. Visual speech recognition using compact hypercomplex neural networks. Pattern Recognit. Lett. 2024, 186, 1–7.

- Wang, H.; Wan, C.; Jin, H. Efficient Modeling Attack on Multiplexer PUFs via Kronecker Matrix Multiplication. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2025, 44, 2883–2896.

- Song, T.; Jiang, Y.; Shi, X.; Zeng, X. Small universal spiking neural P systems with anti-spikes. J. Comput. Theor. Nanosci. 2013, 10, 999–1006.

- Pan, L.; Păun, G.; Zhang, G.; Neri, F. Spiking neural P systems with communication on request. Int. J. Neural Syst. 2017, 27, 1750042.

- Wang, J.; Hoogeboom, H.J.; Pan, L.; Paun, G.; Pérez-Jiménez, M.J. Spiking neural P systems with weights. Neural Comput. 2010, 22, 2615–2646.

- Koch, C. Biophysics of Computation: Information Processing in Single Neurons; Oxford University Press: Oxford, UK, 2004.

- Nenadic, Z.; Ghosh, B.K. Computation with biological neurons. In Proceedings of the 2001 American Control Conference (Cat. No. 01CH37148), Arlington, VA, USA, 25–27 June 2001; Volume 1, pp. 257–262.

- Bhalla, U.S. Molecular computation in neurons: A modeling perspective. Curr. Opin. Neurobiol. 2014, 25, 31–37.

- Deng, W.; Aimone, J.B.; Gage, F.H. New neurons and new memories: How does adult hippocampal neurogenesis affect learning and memory? Nat. Rev. Neurosci. 2010, 11, 339–350.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Garcia, L.; Anides, E.R.; Vazquez, E.; Toscano, L.K.; Sanchez, G.; Avalos, J.G.; Sanchez, G. A New Memory-Processing Unit Model Based on Spiking Neural P Systems with Dendritic and Synaptic Behavior for Kronecker Matrix–Matrix Multiplication. Mathematics 2025, 13, 3663. https://doi.org/10.3390/math13223663
