CGPA-UGRCA: A Novel Explainable AI Model for Sentiment Classification and Star Rating Using Nature-Inspired Optimization

Abstract

1. Introduction

1.1. Problem Statement

1.2. Motivation

1.3. Contributions

- This research proposes the CGPA model hybridized with the UGCRA for multi-level sentiment review analysis. This approach enhances sentiment classification by optimizing accuracy and efficiency while accounting for inherent uncertainties, enabling precise sentiment intensity assessment across various dimensions.

- DistilBERT is employed to extract both explicit and implicit attributes from online reviews, enabling a nuanced understanding of sentiments and opinions expressed in the text.

- XAI techniques, specifically SHAPs, are used to enhance the transparency and interpretability of the model’s predictions. This approach facilitates the final ranking of classified reviews, predicts ratings on a scale of one to five stars, and generates a recommendation list based on the predicted user ratings.
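SHAP attributions build on Shapley values, so a small worked example may help make the interpretability contribution concrete. The sketch below computes exact Shapley values by enumerating every subset of tokens. The scoring function `toy_score` and the weights in `TOY_WEIGHTS` are hypothetical stand-ins chosen for illustration; they are not the CGPA-UGCRA model or its learned parameters.

```python
from itertools import combinations
from math import factorial

# Hypothetical word weights used only for this illustration.
TOY_WEIGHTS = {"great": 2.0, "value": 0.5, "money": 0.0, "poor": -2.0}


def toy_score(words):
    """Toy sentiment score: the sum of the weights of the words present."""
    return sum(TOY_WEIGHTS.get(w, 0.0) for w in words)


def shapley_values(tokens, score):
    """Exact Shapley value of each token with respect to `score`.

    Each token's value is its marginal contribution averaged over all
    subsets of the other tokens, with the standard Shapley weights.
    """
    n = len(tokens)
    values = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for k in range(n):
            # Weight of a coalition of size k that excludes token i.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                without_i = [tokens[j] for j in subset]
                with_i = [tokens[j] for j in sorted(subset + (i,))]
                phi += weight * (score(with_i) - score(without_i))
        values.append(phi)
    return values


tokens = ["really", "great", "value", "money"]
phi = shapley_values(tokens, toy_score)
for token, value in zip(tokens, phi):
    print(f"{token}: {value:+.2f}")
```

Because the toy score is additive, each token's Shapley value equals its own weight, and the values sum to the difference between the full-review score and the empty-review score. Exact enumeration grows exponentially with the number of tokens, which is why practical explainers typically rely on approximations.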

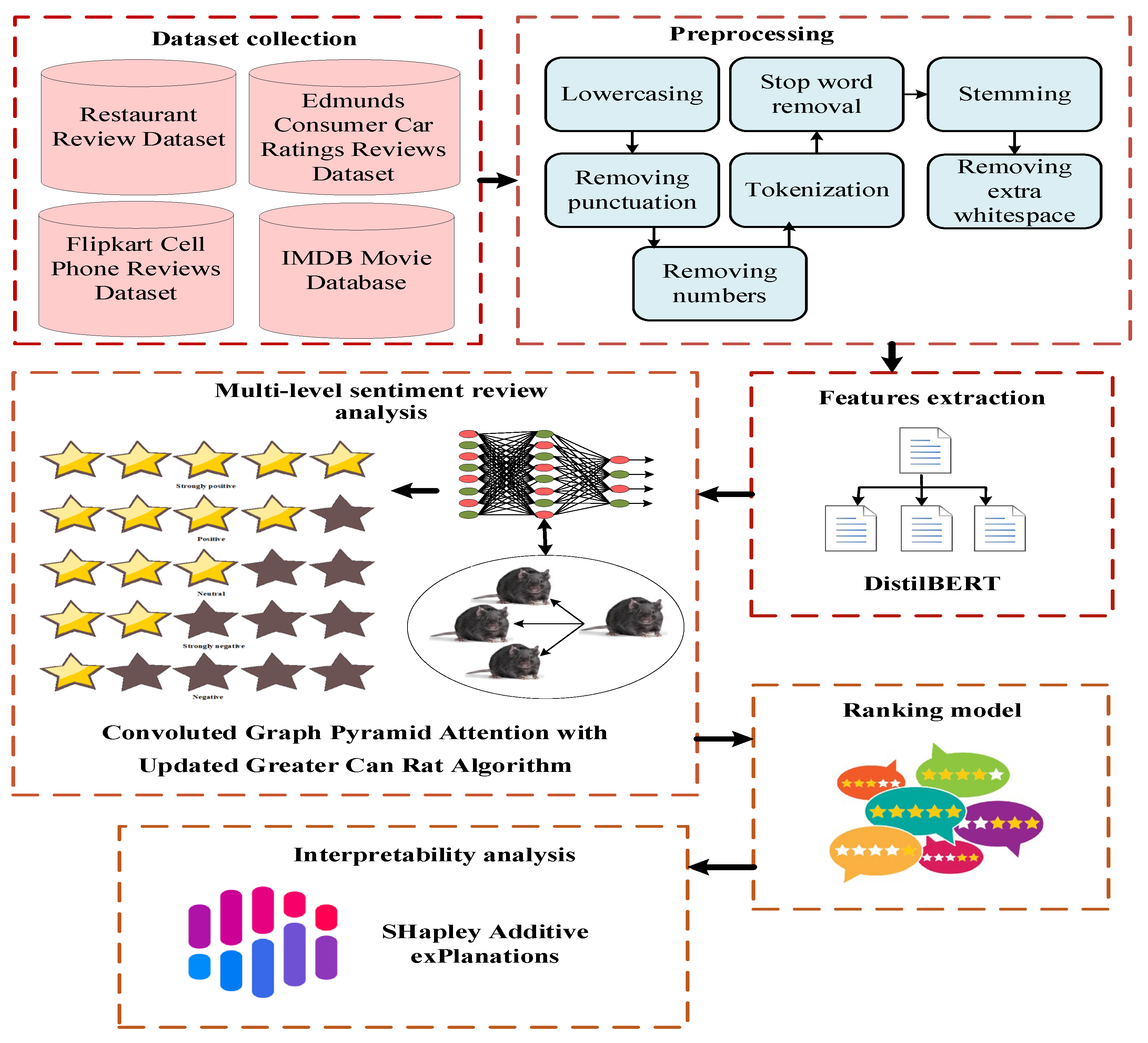

2. Proposed Method

2.1. Data Collection

2.2. Preprocessing

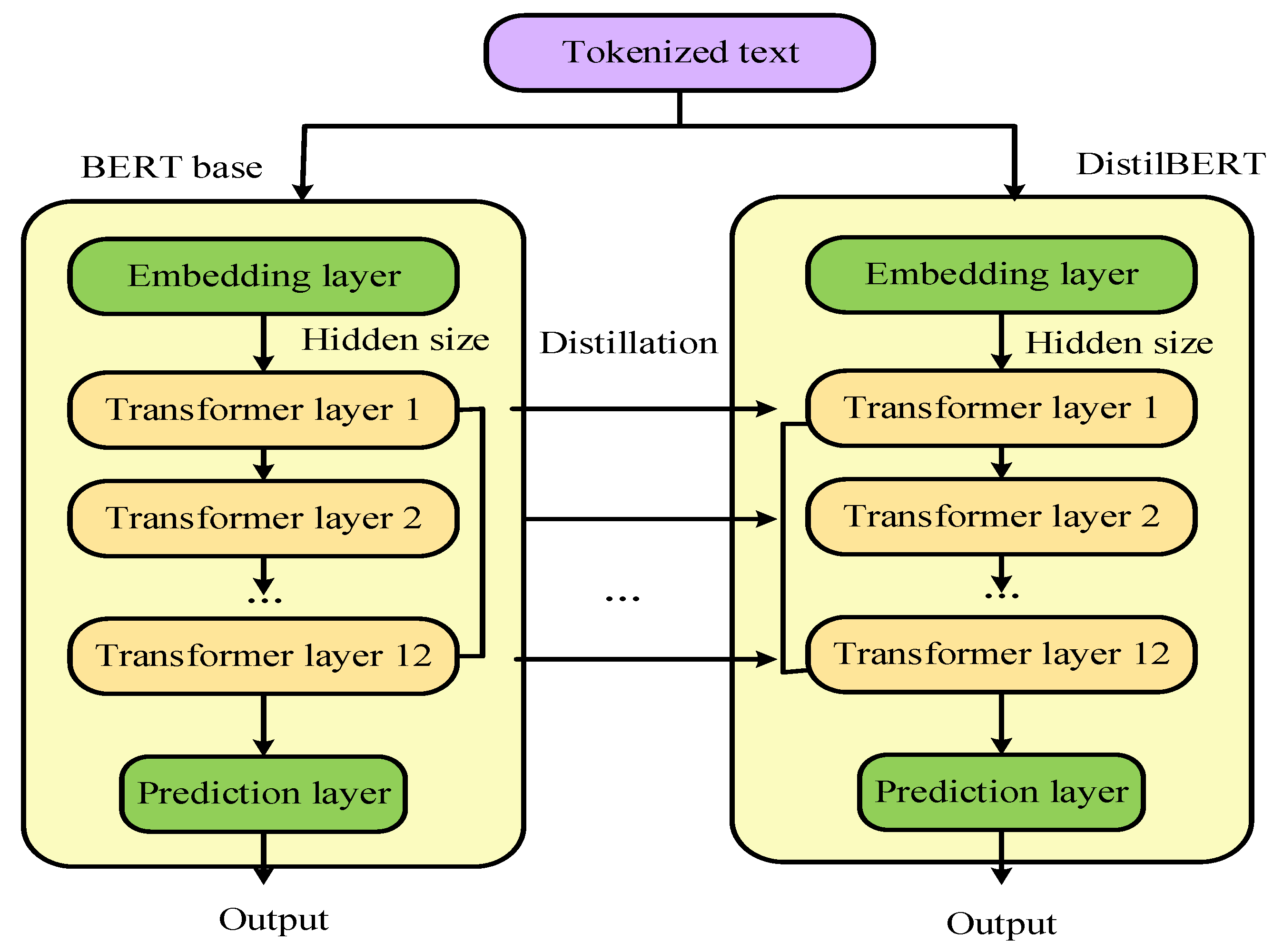

2.3. Feature Extraction

2.4. Multi-Level Sentiment Review Analysis

| Algorithm 1. Pseudocode of UGCRA. |
|---|
| Initialize the parameters … |
| Input: UGCRA population, maximum iterations … |
| Output: Best result |
| Compute the fitness function of UGCRA |
| Choose the fittest UGCRA as a Candidate … |
| Update the global optimal position |
| Update the other GCRA according to the position of … by Equation (12) |
| for … |
| determine … |
| if … |
| Update the position of the search agent using Equation (13) |
| Verify the boundary constraint |
| else |
| Update the new position of the search agent according to Equation (14) |
| Verify the boundary constraint |
| end if |
| Determine each UGCR’s suitability for a new position |
| Update search agent according to Equation (15) |
| Update the new search space using the Levy flight Equations (16) and (17) |
| Update the best position |
| Choose a new Candidate Cbest |
| end for |
| return Cbest |
| end |
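Algorithm 1 refers to a Levy flight update and a boundary check, but Equations (16) and (17) are not reproduced here. As a hedged illustration only, the sketch below draws Levy-distributed steps with Mantegna's algorithm, a common choice in nature-inspired optimizers, and clips the moved position to the search bounds. The function names, the `scale` factor, the exponent `beta=1.5`, and the form of the move in `levy_move` are assumptions made for this example and may differ from the paper's equations.

```python
import math
import random


def mantegna_sigma(beta):
    """Standard deviation of the numerator variable in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(dim, beta=1.5, rng=random):
    """Draw one heavy-tailed step per dimension."""
    sigma = mantegna_sigma(beta)
    steps = []
    for _ in range(dim):
        u = rng.gauss(0.0, sigma)
        v = rng.gauss(0.0, 1.0)
        # Guard against the (vanishingly unlikely) case v == 0.
        steps.append(u / max(abs(v), 1e-12) ** (1 / beta))
    return steps


def levy_move(position, best, lower, upper, scale=0.01, rng=random):
    """Assumed move: perturb each coordinate relative to the best position,
    then enforce the boundary constraint by clipping."""
    step = levy_step(len(position), rng=rng)
    moved = [x + scale * s * (x - b) for x, s, b in zip(position, step, best)]
    return [min(max(x, lower), upper) for x in moved]


rng = random.Random(0)  # fixed seed so the run is repeatable
new_position = levy_move([0.5, -0.2, 0.9], [0.0, 0.0, 0.0], -1.0, 1.0, rng=rng)
print(new_position)
```

The heavy tail of the Levy distribution occasionally produces a long jump, which is the usual motivation for using it to escape local optima; the clipping step corresponds to the "Verify the boundary constraint" lines of the pseudocode.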

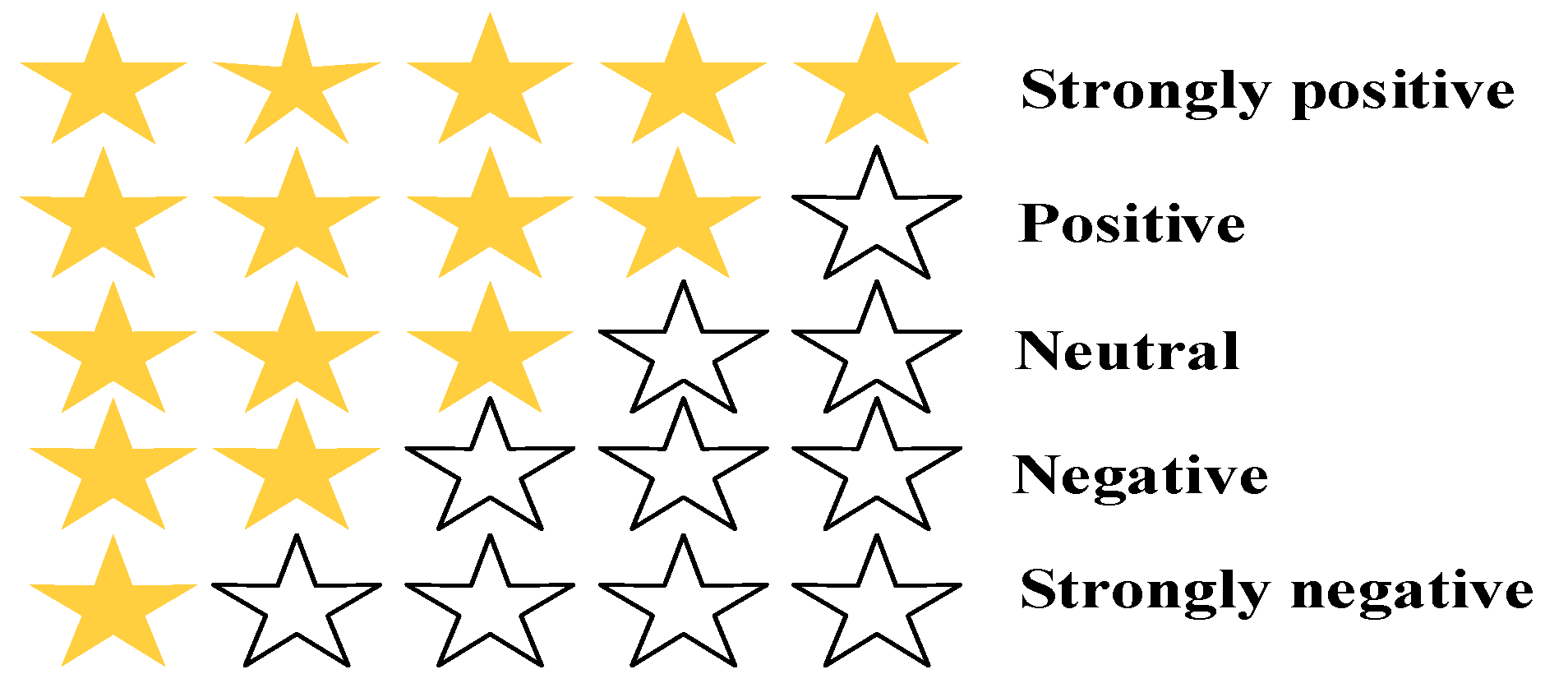

2.5. Polarity Finding

2.6. Ranking Model

2.7. Interpretability Analysis

2.8. Computational Complexity of CGPA-UGCRA

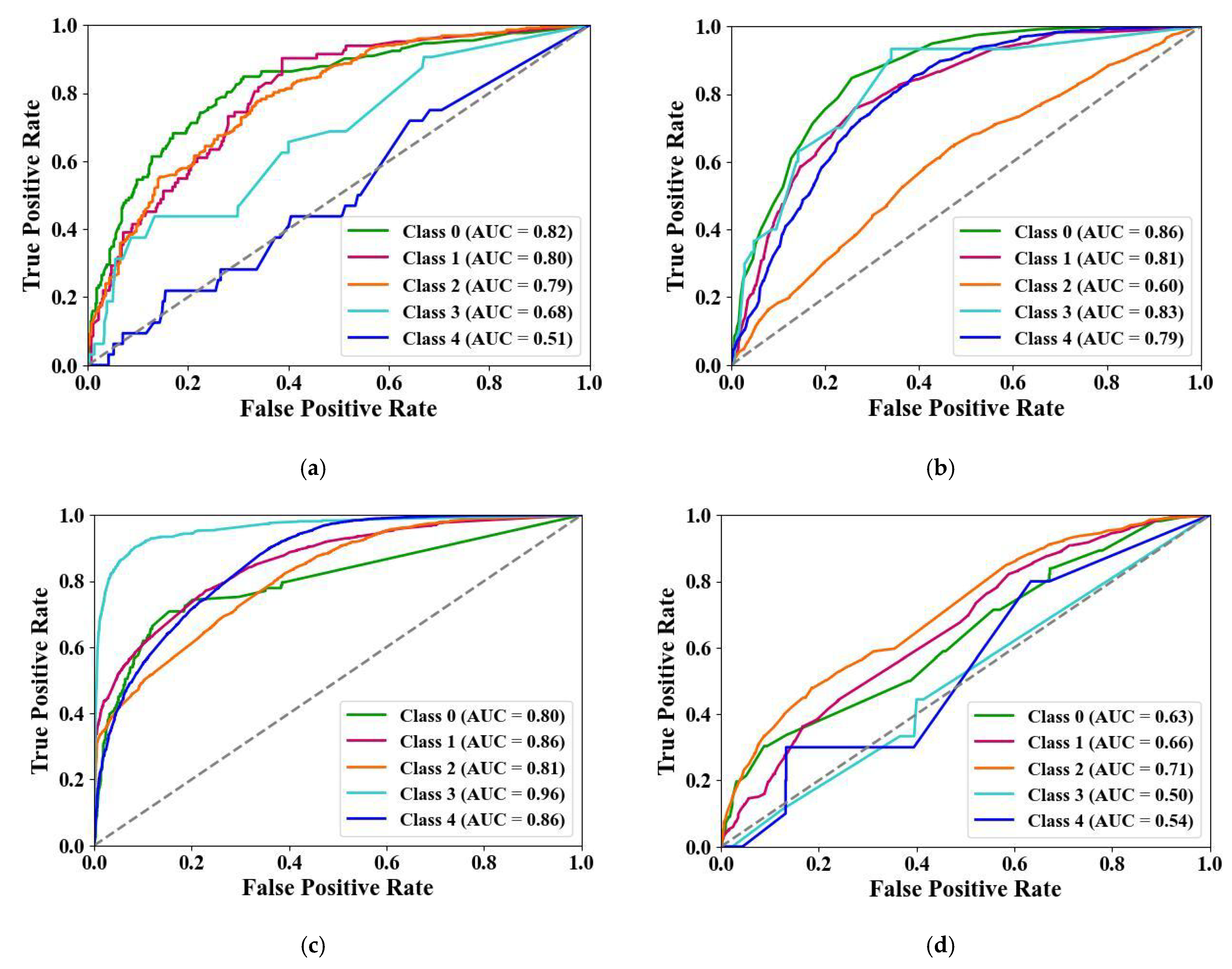

3. Experimental Outcomes

3.1. Dataset Description

3.2. Enhanced Interpretability Analysis with SHAPs

3.3. Ablation Study

4. Discussion

Limitation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Kumar, N.; Hanji, B.R. Aspect-based sentiment score and star rating prediction for travel destination using Multinomial Logistic Regression with fuzzy domain ontology algorithm. Expert Syst. Appl. 2024, 240, 122493.

2. Darraz, N.; Karabila, I.; El-Ansari, A.; Alami, N.; El Mallahi, M. Integrated sentiment analysis with BERT for enhanced hybrid recommendation systems. Expert Syst. Appl. 2025, 261, 125533.

3. Kumar, L.K.; Thatha, V.N.; Udayaraju, P.; Siri, D.; Kiran, G.U.; Jagadesh, B.N.; Vatambeti, R. Analyzing Public Sentiment on the Amazon Website: A GSK-based Double Path Transformer Network Approach for Sentiment Analysis. IEEE Access 2024, 12, 28972–28987.

4. Sun, B.; Song, X.; Li, W.; Liu, L.; Gong, G.; Zhao, Y. A user review data-driven supplier ranking model using aspect-based sentiment analysis and fuzzy theory. Eng. Appl. Artif. Intell. 2024, 127, 107224.

5. Karabila, I.; Darraz, N.; EL-Ansari, A.; Alami, N.; EL Mallahi, M. BERT-enhanced sentiment analysis for personalized e-commerce recommendations. Multimed. Tools Appl. 2024, 83, 56463–56488.

6. Punetha, N.; Jain, G. Game theory and MCDM-based unsupervised sentiment analysis of restaurant reviews. Appl. Intell. 2023, 53, 20152–20173.

7. Kaur, G.; Sharma, A. A deep learning-based model using hybrid feature extraction approach for consumer sentiment analysis. J. Big Data 2023, 10, 5.

8. Tripathy, G.; Sharaff, A. AEGA: Enhanced feature selection based on ANOVA and extended genetic algorithm for online customer review analysis. J. Supercomput. 2023, 79, 13180–13209.

9. Kotagiri, S.; Sowjanya, A.M.; Anilkumar, B.; Devi, N.L. Aspect-oriented extraction and sentiment analysis using optimized hybrid deep learning approaches. Multimed. Tools Appl. 2024, 83, 88613–88644.

10. He, Z.; Zheng, L.; He, S. A novel approach for product competitive analysis based on online reviews. Electron. Commer. Res. 2023, 23, 2259–2290.

11. Nawaz, A.; Awan, A.A.; Ali, T.; Rana, M.R. Product’s behaviour recommendations using free text: An aspect based sentiment analysis approach. Clust. Comput. 2020, 23, 1267–1279.

12. Danyal, M.M.; Khan, S.S.; Khan, M.; Ullah, S.; Mehmood, F.; Ali, I. Proposing sentiment analysis model based on BERT and XLNet for movie reviews. Multimed. Tools Appl. 2024, 83, 64315–64339.

13. Tan, K.L.; Lee, C.P.; Lim, K.M. Roberta-Gru: A hybrid deep learning model for enhanced sentiment analysis. Appl. Sci. 2023, 13, 3915.

14. Devi, N.L.; Anilkumar, B.; Sowjanya, A.M.; Kotagiri, S. An innovative word embedded and optimization based hybrid artificial intelligence approach for aspect-based sentiment analysis of app and cellphone reviews. Multimed. Tools Appl. 2024, 83, 79303–79336.

15. Abbas, S.; Boulila, W.; Driss, M.; Sampedro, G.A.; Abisado, M.; Almadhor, A. Active learning empowered sentiment analysis: An approach for optimizing smartphone customer’s review sentiment classification. IEEE Trans. Consum. Electron. 2023, 70, 4470–4477.

16. Restaurant Reviews Aspect-based Sentiment Analysis. Available online: https://www.kaggle.com/code/kamonkornbuangsoong/restaurant-reviews-aspect-based-sentiment-analysis/notebook (accessed on 24 October 2024).

17. Edmunds-Consumer Car Ratings and Reviews. Available online: https://www.kaggle.com/datasets/ankkur13/edmundsconsumer-car-ratings-and-reviews (accessed on 24 October 2024).

18. Flipkart Cell Phone Reviews. Available online: https://www.kaggle.com/datasets/nkitgupta/flipkart-cell-phone-reviews?select=flipkart_products.db (accessed on 24 October 2024).

19. Analyzing-the-IMDB-Movie-Dataset. Available online: https://github.com/shishir349/Analyzing-the-IMDB-Movie-Dataset/blob/master/.gitignore (accessed on 24 October 2024).

20. Gupta, K.; Jiwani, N.; Afreen, N. A combined approach of sentimental analysis using machine learning techniques. Rev. d’Intelligence Artif. 2023, 37, 1–6.

21. Bahaa, A.; Kamal, A.E.; Fahmy, H.; Ghoneim, A.S. DB-CBIL: A DistilBert-Based Transformer Hybrid Model using CNN and BiLSTM for Software Vulnerability Detection. IEEE Access 2024, 12, 64446–64460.

22. Igali, A.; Abdrakhman, A.; Torekhan, Y.; Shamoi, P. Tracking Emotional Dynamics in Chat Conversations: A Hybrid Approach using DistilBERT and Emoji Sentiment Analysis. arXiv 2024, arXiv:2408.01838.

23. Wang, H.; Li, F. A text classification method based on LSTM and graph attention network. Connect. Sci. 2022, 34, 2466–2480.

24. Ding, Y.; Ma, Z.; Wen, S.; Xie, J.; Chang, D.; Si, Z.; Wu, M.; Ling, H. AP-CNN: Weakly supervised attention pyramid convolutional neural network for fine-grained visual classification. IEEE Trans. Image Process. 2021, 30, 2826–2836.

25. Agushaka, J.O.; Ezugwu, A.E.; Saha, A.K.; Pal, J.; Abualigah, L.; Mirjalili, S. Greater cane rat algorithm (GCRA): A nature-inspired metaheuristic for optimization problems. Heliyon 2024, 10, e31629.

26. Amazon Product Reviews. Available online: https://www.kaggle.com/datasets/saurav9786/amazon-product-reviews (accessed on 23 October 2025).

27. Trip Advisor Hotel Reviews. Available online: https://www.kaggle.com/datasets/andrewmvd/trip-advisor-hotel-reviews (accessed on 23 October 2025).

28. Branco, A.; Parada, D.; Silva, M.; Mendonça, F.; Mostafa, S.S.; Morgado-Dias, F. Sentiment Analysis in Portuguese Restaurant Reviews: Application of Transformer Models in Edge Computing. Electronics 2024, 13, 589.

29. Tan, K.L.; Lee, C.P.; Lim, K.M.; Anbananthen, K.S. Sentiment analysis with ensemble hybrid deep learning model. IEEE Access 2022, 10, 103694–103704.

30. Sarhan, A.M.; Ayman, H.; Wagdi, M.; Ali, B.; Adel, A.; Osama, R. Integrating machine learning and sentiment analysis in movie recommendation systems. J. Electr. Syst. Inf. Technol. 2024, 11, 53.

31. Ojo, S.; Abbas, S.; Marzougui, M.; Sampedro, G.A.; Almadhor, A.S.; Al Hejaili, A.; Ivanochko, I. Graph Neural Network for Smartphone Recommendation System: A Sentiment Analysis Approach for Smartphone Rating. IEEE Access 2023, 11, 140451–140463.

32. Balaganesh, N.; Muneeswaran, K. A novel aspect-based sentiment classifier using whale optimized adaptive neural network. Neural Comput. Appl. 2022, 34, 4003–4012.

33. Amiri, M.H.; Mehrabi Hashjin, N.; Montazeri, M.; Mirjalili, S.; Khodadadi, N. Hippopotamus optimization algorithm: A novel nature-inspired optimization algorithm. Sci. Rep. 2024, 14, 5032.

34. Ahmed, M.; Sulaiman, M.H.; Mohamad, A.J.; Rahman, M. Gooseneck barnacle optimization algorithm: A novel nature inspired optimization theory and application. Math. Comput. Simul. 2024, 218, 248–265.

35. Ghetas, M.; Issa, M. A novel reinforcement learning-based reptile search algorithm for solving optimization problems. Neural Comput. Appl. 2024, 36, 533–568.

| References | Methodology | Positive | Neutral | Negative | Limitations |
|---|---|---|---|---|---|
| Punetha N et al. [6] | MCDM | ✓ | × | ✓ | Limited to sentiment analysis on social media. |
| Kaur G et al. [7] | HFV+LSTM | ✓ | ✓ | ✓ | Lacks scalability across diverse domains. |
| Tripathy G et al. [8] | AEGA | ✓ | × | ✓ | High computational cost. |
| Kotagiri S et al. [9] | RSO-EGBA | ✓ | ✓ | ✓ | Focused only on social media platforms. |
| He Z et al. [10] | ID-KS | ✓ | × | ✓ | Overemphasis on emoji usage in non-emoji contexts. |
| Nawaz A et al. [11] | ABSA | ✓ | × | ✓ | Limited applicability outside the textile industry. |
| Danyal MM et al. [12] | XLNet | ✓ | × | ✓ | Resource-intensive preprocessing stage. |
| Tan KL et al. [13] | RoBERTa-GRU | ✓ | × | ✓ | High model complexity. |
| Devi NL et al. [14] | RO-EASGB | ✓ | ✓ | ✓ | Requires extensive preprocessing. |
| Abbas S et al. [15] | ALML | ✓ | × | ✓ | Complex fusion of multimodal data. |

| Raw Texts | Preprocessed Texts | Preprocessing Methods |
|---|---|---|
| Really great.... value for money... | really great value money | Punctuation removal, stop word removal |
| Just simply WOW.... | simply wow | Lowercasing, punctuation removal, and stop word removal |
| Wow superb I love it ❤️👍 battery backup so nice 👍👍 | wow superb love battery backup nice | Stop word removal, and lowercasing |
| Christopher Nolan’s epic trilogy concludes in glorious fashion and gives us a thought provoking and suitably satisfying conclusion to an epic saga. It’s emotional, intense and has a great villain in Tom Hardy. | christophernolan epic trilogy concludes glorious fashion gives us thought provoking suitably satisfying conclusion epic saga emotional intense great villain tom hardy | Lowercasing, punctuation removal, stop word removal |
| Where Gabriela personaly greets you and recommends you what to eat. | gabrielapersonaly greets recommends eat | Lowercasing, stop word removal, and word stemming |
| For those that go once and don’t enjoy it, all I can say is that they just don’t get it. | go enjoy say get | Lowercasing, stop word removal, contraction expansion, and stemming or lemmatization |
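The first three rows of the table above can be reproduced with a few lines of standard-library Python. This is a minimal sketch, assuming a tiny hand-picked stop word set (`STOP_WORDS` is hypothetical and far smaller than any list a real pipeline would use); it covers lowercasing, removal of punctuation and emoji, and stop word removal, but not stemming, lemmatization, or contraction expansion.

```python
import re

# Hypothetical, deliberately small stop word set for illustration only.
STOP_WORDS = {"a", "an", "and", "the", "for", "it", "i", "so",
              "just", "is", "to", "of", "in"}


def preprocess(text):
    """Lowercase, drop everything except letters and spaces, remove stop words."""
    lowered = text.lower()
    # Replace punctuation, digits, and emoji with spaces.
    letters_only = re.sub(r"[^a-z\s]", " ", lowered)
    tokens = [tok for tok in letters_only.split() if tok not in STOP_WORDS]
    return " ".join(tokens)


print(preprocess("Really great.... value for money..."))
print(preprocess("Just simply WOW...."))
print(preprocess("Wow superb I love it ❤️👍 battery backup so nice 👍👍"))
```

Treating every non-letter as a separator is a simplification: a contraction such as "don’t" would be split into "don" and "t", so rows that list contraction expansion would need an extra step before this function.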

| Parameters | Value |
|---|---|
| Learning rate | 0.001 |
| Algorithm | Updated Greater Cane Rat Algorithm |
| Batch size | 32 |
| Iterations | 100 |
| Epochs | 100 |

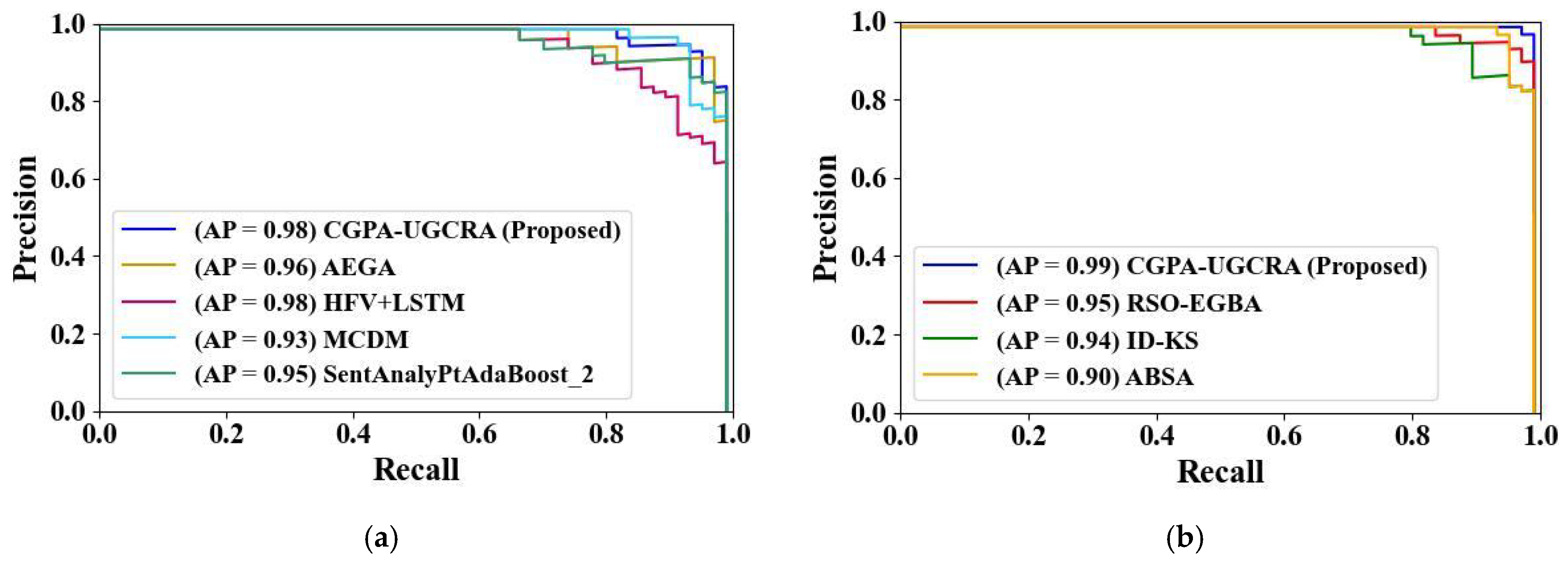

| Methods | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| RSO-EGBA [9] | 92 | 84 | 91 | 86 |
| ID-KS [10] | 87 | 87.50 | 86 | 86.67 |
| ABSA [11] | 90 | 88.42 | 80.2 | 84 |
| CGPA-UGCRA (proposed) | 99.87 | 99.88 | 99.47 | 99.67 |
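Since the F1 score is the harmonic mean of precision and recall, the reported columns can be cross-checked against each other. The short sketch below applies the standard formula to the precision and recall listed for CGPA-UGCRA in the table above; the function name `f1_score` is chosen here for illustration and is not taken from the paper.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (%) reported for CGPA-UGCRA in the table above.
proposed_f1 = f1_score(99.88, 99.47)
print(round(proposed_f1, 2))  # 99.67
```

The result agrees with the 99.67 listed in the F1 Score column for the proposed method.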

| Methods | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| RO-EASGB [14] | 99 | 97 | 98.9 | 98 |
| ALML [15] | 89 | 72 | 95 | 93 |
| GNN [31] | 97 | 96 | 98 | 97 |
| SWOANN [32] | 85 | 83 | 86 | 78 |
| CGPA-UGCRA (proposed) | 99.98 | 99.95 | 99.95 | 99.95 |

| Dataset | Domain | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|---|
| Restaurant Reviews | Food Service | 99.72 | 99.30 | 99.30 | 99.30 |
| Edmunds Car Ratings | Automotive | 99.87 | 99.88 | 99.47 | 99.67 |
| Flipkart Cell Phone Reviews | Electronics | 99.98 | 99.95 | 99.95 | 99.95 |
| IMDB Movie Database | Entertainment | 99.64 | 99.10 | 99.10 | 99.10 |
| Amazon Product Reviews | E-commerce | 98.93 | 98.61 | 98.52 | 98.69 |
| Trip Advisor Hotel Reviews | Hospitality | 98.81 | 98.47 | 98.41 | 98.54 |

| Datasets | Sentiment Class | Number of Reviews | Accuracy (%) | Specificity (%) | Precision (%) |
|---|---|---|---|---|---|
| Restaurant Review | Strongly positive | 159 | 99.55 | 99.72 | 98.89 |
| Restaurant Review | Positive | 1717 | 99.69 | 99.31 | 98.24 |
| Restaurant Review | Neutral | 398 | 99.62 | 99.01 | 98.05 |
| Restaurant Review | Strongly negative | 179 | 99.02 | 99.26 | 98.63 |
| Restaurant Review | Negative | 703 | 99.53 | 99.65 | 98.29 |
| Edmunds-Consumer Car Ratings Reviews | Strongly positive | 4224 | 99.83 | 99.89 | 99.58 |
| Edmunds-Consumer Car Ratings Reviews | Positive | 2576 | 99.52 | 99.70 | 99.82 |
| Edmunds-Consumer Car Ratings Reviews | Neutral | 975 | 99.94 | 99.58 | 99.35 |
| Edmunds-Consumer Car Ratings Reviews | Strongly negative | 166 | 99.11 | 99.88 | 99.29 |
| Edmunds-Consumer Car Ratings Reviews | Negative | 558 | 99.76 | 99.23 | 99.41 |
| Flipkart Cell Phone Reviews | Strongly positive | 33,719 | 99.97 | 99.98 | 99.93 |
| Flipkart Cell Phone Reviews | Positive | 11,030 | 99.38 | 99.36 | 99.45 |
| Flipkart Cell Phone Reviews | Neutral | 3737 | 99.29 | 99.43 | 99.73 |
| Flipkart Cell Phone Reviews | Strongly negative | 4396 | 99.93 | 99.33 | 99.32 |
| Flipkart Cell Phone Reviews | Negative | 611 | 99.29 | 99.31 | 99.46 |
| IMDB Movie | Strongly positive | 49 | 99.64 | 99.77 | 99.10 |
| IMDB Movie | Positive | 2508 | 99.32 | 99.70 | 99.80 |
| IMDB Movie | Neutral | 2177 | 99.11 | 99.69 | 99.27 |
| IMDB Movie | Strongly negative | 20 | 99.10 | 99.37 | 99.77 |
| IMDB Movie | Negative | 289 | 99.91 | 99.56 | 99.50 |
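Per-class accuracy, specificity, and precision in a multi-class setting are usually computed one class at a time, treating that class as positive and all others as negative. The sketch below shows that one-vs-rest calculation; it is an assumption that the figures above were derived this way, and the label lists `y_true` and `y_pred` are made-up toy data rather than any of the paper's datasets.

```python
def one_vs_rest_metrics(y_true, y_pred, label):
    """Accuracy, specificity, and precision (%) for one class against the rest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)
    fp = sum(t != label and p == label for t, p in pairs)
    fn = sum(t == label and p != label for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    return {
        "accuracy": 100.0 * (tp + tn) / len(pairs),
        "specificity": 100.0 * tn / (tn + fp) if tn + fp else 0.0,
        "precision": 100.0 * tp / (tp + fp) if tp + fp else 0.0,
    }


# Toy labels for illustration only.
y_true = ["positive", "positive", "positive", "negative",
          "negative", "neutral", "neutral", "neutral"]
y_pred = ["positive", "positive", "neutral", "negative",
          "positive", "neutral", "neutral", "neutral"]

positive_metrics = one_vs_rest_metrics(y_true, y_pred, "positive")
print(positive_metrics)
```

For the "positive" class in this toy data there are 2 true positives, 1 false positive, 1 false negative, and 4 true negatives, giving 75% accuracy, 80% specificity, and roughly 66.67% precision. With small classes, such as the 20 strongly negative IMDB reviews, a single misclassification shifts these percentages noticeably.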

| Methods | Restaurant Review: Recall (%) | Restaurant Review: F1 Score (%) | Edmunds-Consumer Car Ratings Reviews: Recall (%) | Edmunds-Consumer Car Ratings Reviews: F1 Score (%) | Flipkart Cell Phone Reviews: Recall (%) | Flipkart Cell Phone Reviews: F1 Score (%) | IMDB Movie: Recall (%) | IMDB Movie: F1 Score (%) |
|---|---|---|---|---|---|---|---|---|
| Without DistilBERT feature extraction method | 93.92 | 94 | 92 | 89.2 | 96.5 | 88.9 | 86.8 | 90.9 |
| With DistilBERT | 99.5 | 98.3 | 99.94 | 99 | 99.32 | 99.3 | 99.28 | 98.5 |
| Without UGCRA optimization | 86.3 | 86 | 85.3 | 85 | 89.9 | 88 | 84.6 | 89 |
| With UGCRA optimization | 99.95 | 99 | 99.34 | 99.3 | 99.7 | 99.5 | 99.38 | 99.60 |
| Without CGPA | 88.40 | 87.90 | 87.50 | 86.80 | 90.20 | 89.10 | 85.70 | 88.40 |
| With CGPA (proposed full model) | 99.30 | 99.30 | 99.47 | 99.67 | 99.95 | 99.95 | 99.10 | 99.10 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Srivastava, A.K.; Pooja; Ali, M.; Gulzar, Y. CGPA-UGRCA: A Novel Explainable AI Model for Sentiment Classification and Star Rating Using Nature-Inspired Optimization. Mathematics 2025, 13, 3645. https://doi.org/10.3390/math13223645
