1. Introduction

Software testing is an indispensable process and the foundation for guaranteeing software quality and reliability. Without a systematic testing protocol, software companies’ ability to release products would be substantially impeded, given the potential repercussions of distributing defective software to end-users [1]. Accurate reliability evaluation before deployment is crucial for ensuring software quality and determining the optimal release time. Particularly in security-critical applications, software reliability growth models (SRGMs) play a vital role by enabling the forecasting of defect patterns and the evaluation of software reliability throughout the testing cycle [2].

SRGMs primarily serve to characterize how software faults manifest and to project future fault occurrence rates. Numerous SRGM variants have been developed to address the complexities of modern systems. The foundational Goel–Okumoto model, which presumes a failure rate that decreases over time [3], is applicable during the initial phases of the software lifecycle but shows limitations in complex system environments. Subsequent models, including the delayed S-shaped and inflection S-shaped models, were conceived to overcome these constraints. Concurrently, Nagaraju et al. and Garg et al. introduced enhanced software reliability frameworks tailored to diverse operational contexts [4,5]. Sindhu advanced this domain through a refined Weibull model incorporating modified defect detection rates to accommodate operational uncertainties [6]. Yang further expanded the methodological spectrum by proposing an additive reliability framework that employs stochastic differential equations to analyze censored data in multi-component architectures [7].

The core challenge in SRGM development lies in modeling strategies that incorporate crucial factors such as fault dependencies, software module interactions, and test coverage [8,9,10,11]. A particularly significant advancement is the integration of the testing effort function (TEF) into the modeling framework. Incorporating a TEF makes it possible to represent how testing resources are consumed during the testing phases and how this consumption affects fault detection efficiency [12]. Early research by Yamada characterized testing effort through Rayleigh and exponential curves [13], while Li and colleagues extended this work by integrating delayed and inflection S-shaped TEFs into reliability growth modeling [14]. Subsequent developments include Dhaka’s use of the exponential additive Weibull distribution as a TEF [15] and Kushwaha’s incorporation of logistic TEFs into SRGM frameworks [16].

Despite these advancements, current methodologies frequently overlook the inherently dynamic nature of the TEF; in particular, they fail to address how phase-specific variations in resource allocation influence both testing efficiency and the fault detection rate (FDR). Many established models employ simplified TEF representations that are tied primarily to elapsed time or testing milestones [17] and do not account for the distinct resource consumption patterns of different testing phases. These simplified representations limit the ability of conventional SRGMs to capture the nonlinear, phase-dependent relationship between testing effort and fault detection in complex testing environments, a significant research gap that demands further investigation.

To bridge this methodological gap, we propose an SRGM framework that jointly models the dynamic interplay between the TEF and the FDR. Our approach uses Burr-XII and Burr-III distributions to characterize the evolving FDR, allowing the model to adapt to the distinct characteristics of different testing phases [18,19]. This formulation captures how resource consumption affects fault detection capability. In contrast to traditional static modeling paradigms, the proposed framework explicitly accommodates temporal and phase-dependent variations in testing resource allocation, thereby providing more precise reliability assessment for complex testing scenarios.

Once an SRGM has been proposed, parameter estimation is the next critical step: it directly determines the model’s predictive capability and the quality of its reliability assessment. Least squares estimation (LSE) [20,21] is commonly employed for this purpose. LSE estimates parameters by minimizing the sum of squared errors between predicted and observed values, which is straightforward for models that are linear in their parameters. However, as software systems and their models grow more complex, LSE becomes inadequate for handling the resulting nonlinear relationships [22]. Beyond traditional estimation methods, some studies have explored meta-heuristic optimization algorithms, such as genetic algorithms (GA) and particle swarm optimization (PSO), for estimating the parameters of complex SRGMs [23]. Our GRU-HMM approach offers a distinct, deep learning-based alternative to these population-based optimization strategies.
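To make the least-squares idea concrete, the following minimal sketch fits the Goel–Okumoto mean value function m(t) = a(1 − e^(−bt)) to cumulative fault counts. It is an illustration only, not the estimator used in this paper: the data are synthetic (generated from the model itself with a = 100, b = 0.1), and profiling out a in closed form while grid-searching b is a simplifying assumption chosen to keep the example dependency-free. The function names are hypothetical.

```python
import math

def go_mean(t, a, b):
    """Goel-Okumoto mean value function m(t) = a * (1 - exp(-b t))."""
    return a * (1.0 - math.exp(-b * t))

def lse_fit_go(times, counts, b_grid):
    """Least-squares fit of (a, b) by profiling out a.

    For a fixed b the model is linear in a, so the optimal a is
    sum(y * g) / sum(g * g) with g = 1 - exp(-b t). The b on the grid
    with the smallest sum of squared errors (SSE) is returned.
    """
    best = None
    for b in b_grid:
        g = [1.0 - math.exp(-b * t) for t in times]
        denom = sum(gi * gi for gi in g)
        if denom == 0.0:
            continue
        a = sum(y * gi for y, gi in zip(counts, g)) / denom
        sse = sum((y - a * gi) ** 2 for y, gi in zip(counts, g))
        if best is None or sse < best[2]:
            best = (a, b, sse)
    return best

if __name__ == "__main__":
    # Synthetic, noise-free data from a = 100, b = 0.1 (illustrative only).
    times = list(range(1, 21))
    counts = [go_mean(t, 100.0, 0.1) for t in times]
    b_grid = [i / 1000 for i in range(1, 501)]  # 0.001 ... 0.5
    a_hat, b_hat, sse = lse_fit_go(times, counts, b_grid)
    print(f"a = {a_hat:.2f}, b = {b_hat:.3f}, SSE = {sse:.2e}")
```

Because the Goel–Okumoto model is linear in a for fixed b, the search collapses to one dimension; models with several nonlinear parameters, such as the Burr-based SRGMs discussed here, do not admit this shortcut.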

Deep learning has emerged as a novel approach to parameter estimation in this context. For instance, Wu [24] proposed a framework that learns SRGM parameters through the weights of deep neural networks, essentially exploiting the feedback mechanism of deep learning. Similarly, Kim [25] developed a software reliability model based on gated recurrent units (GRUs), replacing the activation functions used in prior deep learning models. Nevertheless, existing deep learning-based SRGM approaches have significant limitations: they simply map SRGM expressions onto the activation functions or hidden-layer outputs of neural networks. As a result, the estimated parameters can violate physical constraints, GRUs alone offer insufficient long-term prediction stability, and the models cannot adapt to phase transitions in resource allocation, which ultimately leads to significant prediction volatility in complex scenarios.

To address these challenges and improve estimation accuracy, we propose a hybrid GRU-HMM method. This approach combines the GRU’s proficiency in processing sequential data with the hidden Markov model’s (HMM’s) capability to model discrete hidden states [26,27], thereby offering a more suitable framework for complex software reliability modeling.

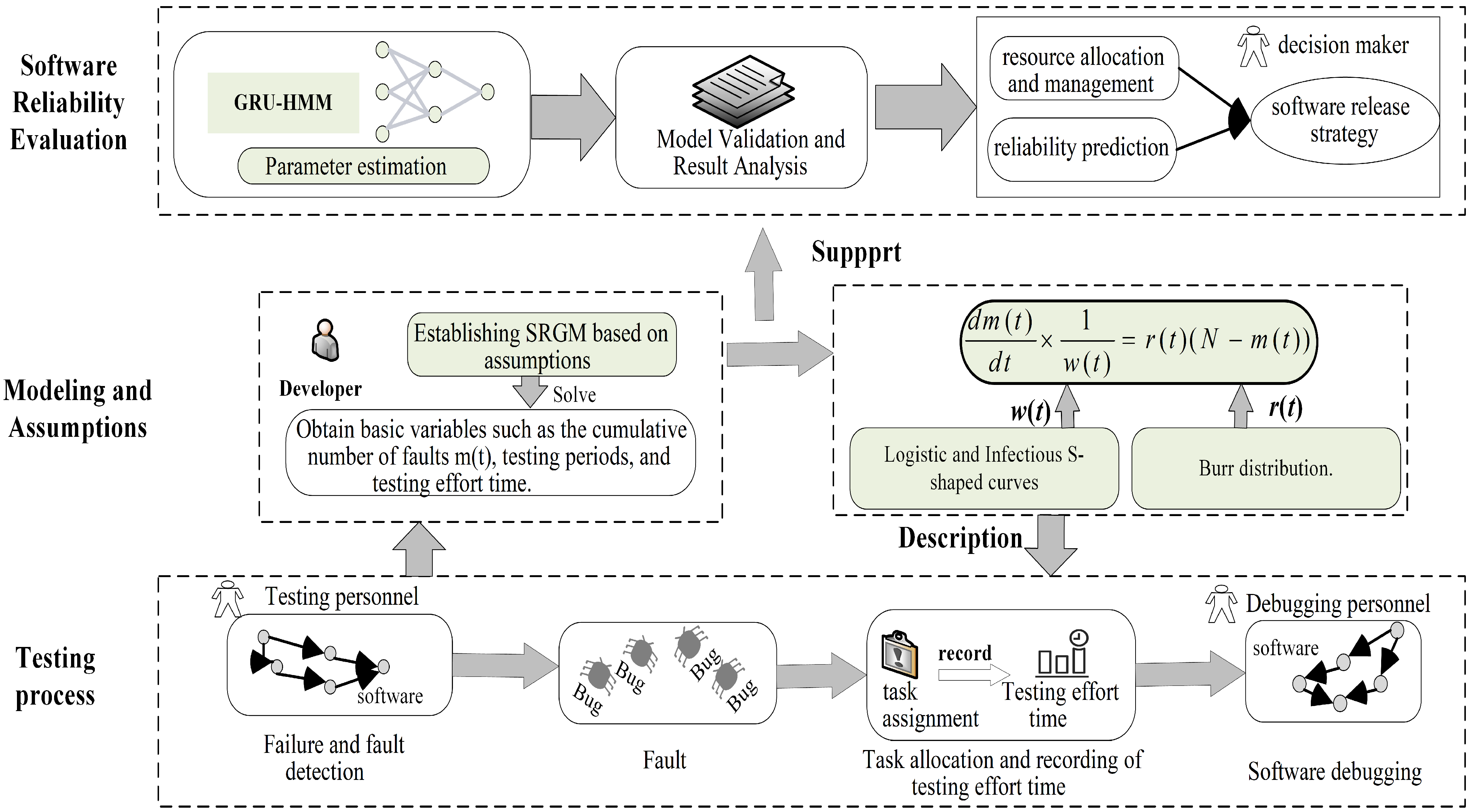

Figure 1 illustrates the basic process of SRGM modeling as we understand the testing process, covering the testing activities relevant to software reliability research, software reliability modeling, and the relationships between them. The highlighted content in Figure 1 represents the core of this paper, which focuses on two aspects: reliability modeling and parameter estimation. The remainder of the paper is organized as follows. Section 2 reviews SRGMs, testing effort functions, and Burr distributions. Section 3 presents the proposed SRGM and the GRU-HMM parameter estimation method. Section 4 validates the model, and Section 5 concludes the study and suggests future research directions.

Our paper offers three contributions to software reliability growth modeling:

- We propose a novel SRGM framework that integrates Burr-III and Burr-XII distributions with S-shaped testing effort functions to capture the nonlinear and phase-dependent dynamics of the fault detection rate.
- We design a hybrid GRU-HMM deep learning method for robust parameter estimation, effectively addressing the challenges of nonlinearity and phase transitions in complex testing environments.
- We conduct extensive empirical studies on real-world datasets, demonstrating that our proposed models significantly outperform traditional baselines in both goodness-of-fit and prediction accuracy.

2. Preliminaries

2.1. Software Reliability Growth Models

Software reliability growth models are statistical methods used to quantify the improvement of software reliability during testing and early operation [21]. The core idea is that as testing progresses, defects are discovered and removed, leading to a gradual enhancement of reliability.

SRGMs primarily rely on failure data collected during testing, such as inter-failure times or cumulative failure counts. The key objective is to estimate the mean value function and the failure intensity function to capture the system’s reliability evolution [28].

The theoretical foundation of most SRGMs is the non-homogeneous Poisson process (NHPP), which assumes that the failure rate changes over time. As faults are fixed, the number of remaining defects decreases, leading to a decline in the failure rate.
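For reference, the standard NHPP relations behind these models are summarized below, with X(t) (notation introduced here) denoting the cumulative number of failures observed by time t and m(t) its mean value function. The Goel–Okumoto model is shown as one familiar instance, using N for the total number of faults and b for the detection rate, as in Section 2.2.

```latex
\begin{aligned}
\Pr\{X(t) = n\} &= \frac{[m(t)]^{n}}{n!}\, e^{-m(t)}, \qquad n = 0, 1, 2, \ldots \\
\lambda(t) &= \frac{\mathrm{d}m(t)}{\mathrm{d}t}, \qquad m(t) = \int_{0}^{t} \lambda(s)\,\mathrm{d}s \\
m(t) &= N\bigl(1 - e^{-bt}\bigr), \qquad \lambda(t) = N b\, e^{-bt} \quad \text{(Goel--Okumoto)}
\end{aligned}
```

In the Goel–Okumoto case the failure intensity λ(t) decreases monotonically, which is the "decline in the failure rate" described above.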

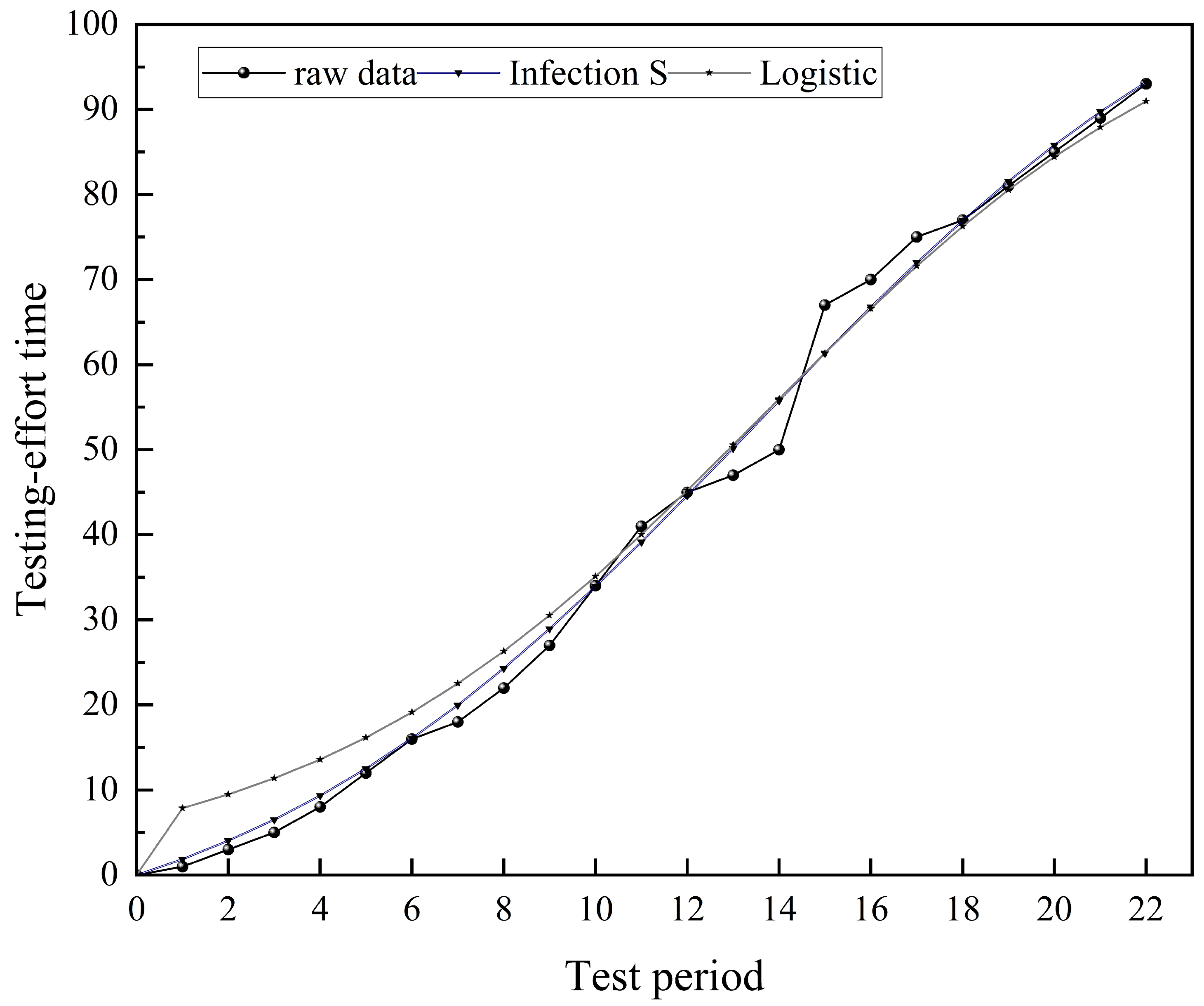

2.2. Testing Effort Function

The testing effort function (TEF) is an important mathematical concept in software reliability modeling, used to characterize how testing resources are distributed over time. It is defined as a non-negative, monotonically increasing function of time, reflecting how testing activities accumulate throughout the software lifecycle. Formally, the TEF is typically denoted as W(t), where t represents the testing time and W(t) indicates the total amount of testing effort invested up to time t.

In software reliability growth models, introducing the testing effort function significantly enhances the model’s ability to represent real-world testing processes. Traditional SRGMs often assume a uniform testing intensity throughout the entire testing period. In practice, however, the allocation of testing resources usually varies over time and is non-uniform. For example, testing effort may be minimal in the early stages of a project but increase sharply as the release deadline approaches. By incorporating a TEF, time-driven SRGMs become effort-driven models that more accurately represent the actual relationship between fault detection and testing input.

Mathematically, the testing effort function is typically used to replace the time variable t in traditional software reliability growth models, transforming the cumulative failure function (i.e., the mean value function) m(t) into m(W(t)). Specifically, the model becomes

$$m(W(t)) = N\left[1 - e^{-b\,W(t)}\right],$$

where N denotes the total number of potential faults in the system and b is the fault detection efficiency parameter.

TEFs are characterized by two distinct curve types: convex-type and S-type. Convex-type TEFs emphasize aggressive early-stage resource allocation to maximize risk mitigation efficiency. S-type TEFs, by contrast, model phased testing processes, reflecting how the workload evolves from initial system configuration, through sustained regression validation, to eventual stabilization. This phased structure makes S-type TEFs particularly suitable for safety-critical systems, where workload dynamics must align with rigorous reliability requirements. The choice between these curve types strongly influences how accurately an SRGM reproduces the rhythm of testing, and hence how well resource-reliability trade-offs can be optimized from data.

Table 1 summarizes the key TEF variants examined in this study, including their mathematical formulations.
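Because Table 1 is not reproduced in this section, the sketch below uses two S-shaped TEF parameterizations that are common in the SRGM literature, a logistic TEF and an inflection S-shaped TEF, together with the effort-driven mean value function m(W(t)) = N(1 − e^(−bW(t))) from above. The exact forms in Table 1 may differ; the parameter names alpha, A, gamma, and psi, the function names, and all numeric values are illustrative assumptions.

```python
import math

def logistic_tef(t, alpha, A, gamma):
    """Logistic TEF: W(t) = alpha / (1 + A * exp(-gamma * t)).

    alpha is the total effort eventually consumed; note W(0) = alpha / (1 + A) > 0.
    """
    return alpha / (1.0 + A * math.exp(-gamma * t))

def inflection_tef(t, alpha, gamma, psi):
    """Inflection S-shaped TEF: W(t) = alpha (1 - e^{-gamma t}) / (1 + psi e^{-gamma t}), W(0) = 0."""
    e = math.exp(-gamma * t)
    return alpha * (1.0 - e) / (1.0 + psi * e)

def tef_mean_value(t, N, b, tef, **tef_params):
    """Effort-driven mean value function m(W(t)) = N (1 - exp(-b W(t)))."""
    return N * (1.0 - math.exp(-b * tef(t, **tef_params)))

if __name__ == "__main__":
    params = {"alpha": 50.0, "gamma": 0.3, "psi": 5.0}  # hypothetical values
    for t in (0, 5, 10, 20, 40):
        w = inflection_tef(t, **params)
        m = tef_mean_value(t, N=120.0, b=0.05, tef=inflection_tef, **params)
        print(f"t={t:>2}  W(t)={w:6.2f}  m(W(t))={m:6.2f}")
```

Both curves are bounded by alpha and increase monotonically. The inflection form starts at zero effort, whereas the logistic form starts at alpha / (1 + A), which is why some studies work with W(t) − W(0) instead.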

2.3. Burr Distribution

The Burr distribution is a flexible continuous probability distribution widely used in fields such as engineering, economics, medicine, and reliability analysis. Introduced by Burr in 1942 [29], it fits many types of data well. A key feature of the Burr distribution is that its tail behavior can be adjusted through its parameters, allowing it to represent multiple distributional forms. The Burr-III and Burr-XII distributions are widely used in SRGMs because of their flexibility in modeling various failure patterns [18,19]. They effectively capture dynamic changes in fault detection rates, and their ability to model both light-tailed and heavy-tailed behaviors improves the accuracy of SRGM predictions.

The effectiveness of Burr distributions in modeling software fault detection lies in their inherent flexibility to adapt to different testing phases, a feature not fully captured by traditional distributions such as the exponential or Weibull, whose hazard rates are constant or monotone and therefore imply a fixed failure pattern. Depending on their shape parameters, the Burr-III and Burr-XII distributions yield fault detection rates that are either decreasing or unimodal (rising, then falling). This enables them to represent the entire software testing lifecycle: initially capturing the slow detection rate of early testing phases, often due to team learning curves; then modeling the accelerated fault discovery during peak testing activity; and finally reflecting the saturation effect in later stages as the number of remaining faults diminishes. This multi-phase adaptability makes Burr distributions particularly suitable for modern software testing environments, where both testing effort allocation and fault detection efficiency vary nonlinearly over time.

In software reliability modeling, the Burr-type fault detection rate is defined by its cumulative distribution function (CDF) and probability density function (PDF). The CDF quantifies the cumulative probability of detecting faults by time t, while the PDF, derived as the derivative of the CDF, characterizes the instantaneous probability density of fault detection at any specific time.

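The CDF of the Burr-XII distribution is defined as

$$F(t) = 1 - \left(1 + t^{b}\right)^{-k}, \quad t > 0.$$

The CDF of the Burr-III distribution is defined as

$$F(t) = \left(1 + t^{-b}\right)^{-k}, \quad t > 0.$$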

In the Burr distribution, the parameters b > 0 and k > 0 are shape parameters. The parameter k governs the distribution’s shape and tail thickness, while b primarily influences the overall morphology of the distribution, particularly determining its skewness.

The fault detection rate, denoted as r(t), can be expressed using the PDF

$$f(t) = \frac{\mathrm{d}F(t)}{\mathrm{d}t}$$

as follows:

$$r(t) = \frac{f(t)}{1 - F(t)}.$$

Here, 1 − F(t) represents the probability that a fault remains undetected at time t, which corresponds to the proportion of remaining faults.

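Based on this, the fault detection rate expressions for the Burr-XII and Burr-III distributions can be derived. The fault detection rate for the Burr-XII distribution is expressed as

$$r(t) = \frac{b k\, t^{b-1}}{1 + t^{b}}.$$

Similarly, the fault detection rate for the Burr-III distribution is expressed as

$$r(t) = \frac{b k\, t^{-b-1}\left(1 + t^{-b}\right)^{-k-1}}{1 - \left(1 + t^{-b}\right)^{-k}}.$$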
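The closed-form rates can be checked numerically. The sketch below assumes the two-parameter CDFs F(t) = 1 − (1 + t^b)^(−k) (Burr-XII) and F(t) = (1 + t^(−b))^(−k) (Burr-III), implements the corresponding rates, and compares them with f(t)/(1 − F(t)), where f is obtained from a central finite difference of the CDF. The function names and the shape values b = 2, k = 1.5 are hypothetical.

```python
import math

def burr12_cdf(t, b, k):
    return 1.0 - (1.0 + t ** b) ** (-k)

def burr3_cdf(t, b, k):
    return (1.0 + t ** (-b)) ** (-k)

def burr12_rate(t, b, k):
    """Burr-XII fault detection (hazard) rate: b k t^(b-1) / (1 + t^b)."""
    return b * k * t ** (b - 1.0) / (1.0 + t ** b)

def burr3_rate(t, b, k):
    """Burr-III fault detection (hazard) rate: f(t) / (1 - F(t))."""
    pdf = b * k * t ** (-b - 1.0) * (1.0 + t ** (-b)) ** (-k - 1.0)
    return pdf / (1.0 - burr3_cdf(t, b, k))

def numeric_rate(cdf, t, b, k, h=1e-6):
    """f(t) / (1 - F(t)) with f approximated by a central difference of the CDF."""
    pdf = (cdf(t + h, b, k) - cdf(t - h, b, k)) / (2.0 * h)
    return pdf / (1.0 - cdf(t, b, k))

if __name__ == "__main__":
    b, k = 2.0, 1.5  # hypothetical shape parameters
    for t in (0.5, 1.0, 2.0, 4.0):
        print(f"t={t}: XII {burr12_rate(t, b, k):.4f} vs {numeric_rate(burr12_cdf, t, b, k):.4f}; "
              f"III {burr3_rate(t, b, k):.4f} vs {numeric_rate(burr3_cdf, t, b, k):.4f}")
```

With b > 1 the Burr-XII rate first rises and then falls, peaking at t = (b − 1)^(1/b); for b = 2 the peak is at t = 1, independent of k. This is the rise-then-saturate pattern described earlier in this section.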

3. Proposed Method

This section describes the implementation of the proposed methodology: it first presents the construction of the SRGM, then details the parameter estimation technique, and finally presents the framework for applying the method in practical scenarios.

3.1. Software Reliability Modeling

To describe the dynamic process of software fault detection more accurately, this section proposes a novel software reliability growth model based on Burr-type fault detection rates and S-shaped test effort functions, under the following assumptions:

- (1) The occurrence of software faults and their subsequent elimination follow a non-homogeneous Poisson process, in which the failure rate varies over time.
- (2) Software faults occur at random times and are caused by the faults remaining in the system.
- (3) The ratio of the average number of faults detected during a given time interval to the test effort expended in that interval is directly proportional to the number of remaining faults in the system.
- (4) The fault detection rate is a time-varying function described by Burr-III and Burr-XII distributions.
- (5) The consumption of test resources is modeled using different types of S-shaped test effort functions, such as the Infection S-type and the Logistic-type.
- (6) When a fault is detected, it is immediately eliminated, and no new faults are introduced.

From the fundamental assumptions of the model, the failure intensity, i.e., the number of failures occurring per unit time at time t, is

$$\lambda(t) = \frac{\mathrm{d}m(t)}{\mathrm{d}t}.$$

The failure intensity is directly proportional to the remaining number of faults $N - m(t)$ in the software. The proportionality factor is the composite fault detection rate $w(t)\,r(t)$, which accounts for both the testing effort consumption rate $w(t) = \mathrm{d}W(t)/\mathrm{d}t$ and the inherent fault detection rate $r(t)$. The expression is as follows:

$$\frac{\mathrm{d}m(t)}{\mathrm{d}t} = w(t)\, r(t)\, \bigl[N - m(t)\bigr].$$

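To solve for the mean value function $m(t)$, we rearrange Equation (2) into a standard differential equation:

$$\frac{\mathrm{d}m(t)}{\mathrm{d}t} + w(t)\, r(t)\, m(t) = N\, w(t)\, r(t).$$

Solving this first-order linear differential equation with respect to $t$ gives

$$m(t) = N + C \exp\!\left(-\int_{0}^{t} w(s)\, r(s)\, \mathrm{d}s\right),$$

where $C$ is the constant of integration. Applying the initial condition $m(0) = 0$ yields $C = -N$. Substituting back, we obtain the definite integral form of the mean value function:

$$m(t) = N\left[1 - \exp\!\left(-\int_{0}^{t} w(s)\, r(s)\, \mathrm{d}s\right)\right].$$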
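As a sanity check on this derivation, the following sketch evaluates the closed form m(t) = N[1 − exp(−∫₀ᵗ w(s) r(s) ds)] by trapezoidal quadrature and compares it with a direct fourth-order Runge–Kutta solution of dm/dt = w(t) r(t) [N − m(t)], m(0) = 0. It assumes an inflection S-shaped TEF (so w is its derivative), evaluates the Burr-XII rate in calendar time t, and uses hypothetical parameter values; these are illustrative choices, not the fitted models reported in this paper.

```python
import math

ALPHA, GAMMA, PSI = 50.0, 0.3, 5.0   # hypothetical TEF parameters
B, K = 2.0, 0.2                      # hypothetical Burr-XII shape parameters
N_FAULTS = 120.0                     # hypothetical total fault content N

def w(t):
    """Effort rate dW/dt of the inflection S-shaped TEF W(t) = a(1-e^{-gt})/(1+p e^{-gt})."""
    e = math.exp(-GAMMA * t)
    return ALPHA * GAMMA * (1.0 + PSI) * e / (1.0 + PSI * e) ** 2

def r(t):
    """Burr-XII fault detection rate b k t^(b-1) / (1 + t^b)."""
    return B * K * t ** (B - 1.0) / (1.0 + t ** B)

def m_closed_form(t, steps=4000):
    """m(t) = N [1 - exp(-integral_0^t w(s) r(s) ds)], trapezoidal rule."""
    h = t / steps
    vals = [w(i * h) * r(i * h) for i in range(steps + 1)]
    integral = h * (sum(vals) - 0.5 * (vals[0] + vals[-1]))
    return N_FAULTS * (1.0 - math.exp(-integral))

def m_rk4(t, steps=4000):
    """Integrate dm/dt = w(t) r(t) (N - m), m(0) = 0, with classical RK4."""
    def f(s, m):
        return w(s) * r(s) * (N_FAULTS - m)
    h, s, m = t / steps, 0.0, 0.0
    for _ in range(steps):
        k1 = f(s, m)
        k2 = f(s + h / 2, m + h * k1 / 2)
        k3 = f(s + h / 2, m + h * k2 / 2)
        k4 = f(s + h, m + h * k3)
        m += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        s += h
    return m

if __name__ == "__main__":
    for t in (2.0, 5.0, 10.0, 20.0):
        print(f"t={t:>4}: closed form {m_closed_form(t):8.4f}, RK4 {m_rk4(t):8.4f}")
```

Once w and r are known, the closed form only requires a one-dimensional integral; the RK4 solver is included solely as an independent cross-check of the solution.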

Beyond empirical metrics, the structural saturation behavior of an SRGM is a key theoretical property, often analyzed through the lens of Hausdorff saturation [30,31]. This property describes the asymptotic bound of the mean value function, and two-sided estimates of it have been developed as a model selection criterion [32].

The proposed framework exhibits well-behaved saturation. Its S-shaped testing effort function is inherently bounded and converges to a finite maximum, and the Burr-type distribution provides adaptable fault detection dynamics. Under the non-homogeneous Poisson process (NHPP) assumption, and because the exponent in the mean value function is non-negative, the cumulative number of detected faults is non-decreasing and bounded above by N, so it approaches a finite upper bound, consistent with established saturation theory. This structural coherence not only supports empirical robustness but also aligns with the theoretical expectations formalized via Hausdorff saturation [30,31,32].
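The boundedness argument can be written out directly from the mean value function m(t) = N[1 − exp(−∫₀ᵗ w(s) r(s) ds)] derived in Section 3.1. Assuming N > 0 and w(t), r(t) ≥ 0 (which holds for a non-decreasing TEF and a Burr-type rate), and writing Λ(t) as shorthand introduced here for the cumulative exponent:

```latex
\begin{aligned}
\Lambda(t) &= \int_{0}^{t} w(s)\, r(s)\, \mathrm{d}s \;\ge\; 0, \qquad \Lambda \text{ non-decreasing in } t, \\
m(t) &= N\bigl[1 - e^{-\Lambda(t)}\bigr] \;\Longrightarrow\; 0 \le m(t) < N \quad \text{for all } t \ge 0, \\
\lim_{t \to \infty} m(t) &= N\bigl[1 - e^{-\Lambda(\infty)}\bigr],
\qquad \Lambda(\infty) = \int_{0}^{\infty} w(s)\, r(s)\, \mathrm{d}s \in [0, \infty].
\end{aligned}
```

Hence m(t) saturates at N when Λ(∞) diverges, and at the strictly smaller level N(1 − e^(−Λ(∞))) when the total composite detection effort is finite.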

Existing NHPP-based SRGMs vary considerably in their assumptions and applicability. The Goel–Okumoto model, while simple and widely adopted, assumes constant fault detection and perfect debugging, neglecting early-stage inefficiencies and learning effects. The delayed S-shaped model introduces a learning curve, better matching real-world fault accumulation trends, yet retains idealized debugging assumptions. The Yamada imperfect debugging model incorporates fault insertion during repairs, improving realism at the expense of parameter complexity. Similarly, the modified Weibull model introduces environmental variability via the Weibull distribution but faces challenges in parameter interpretation. The inflected S-shaped TEF model ties fault detection to effort saturation, though it presumes a consistent effort–detection relationship that is not always observed in practice.

These models collectively illustrate a persistent trade-off: enhanced realism often entails greater complexity and data requirements, whereas simpler models risk overlooking essential dynamics of the testing process. Our approach seeks a balanced integration of dynamic TEF and Burr-type FDR, maintaining theoretical grounding in saturation behavior while preserving practical estimability.

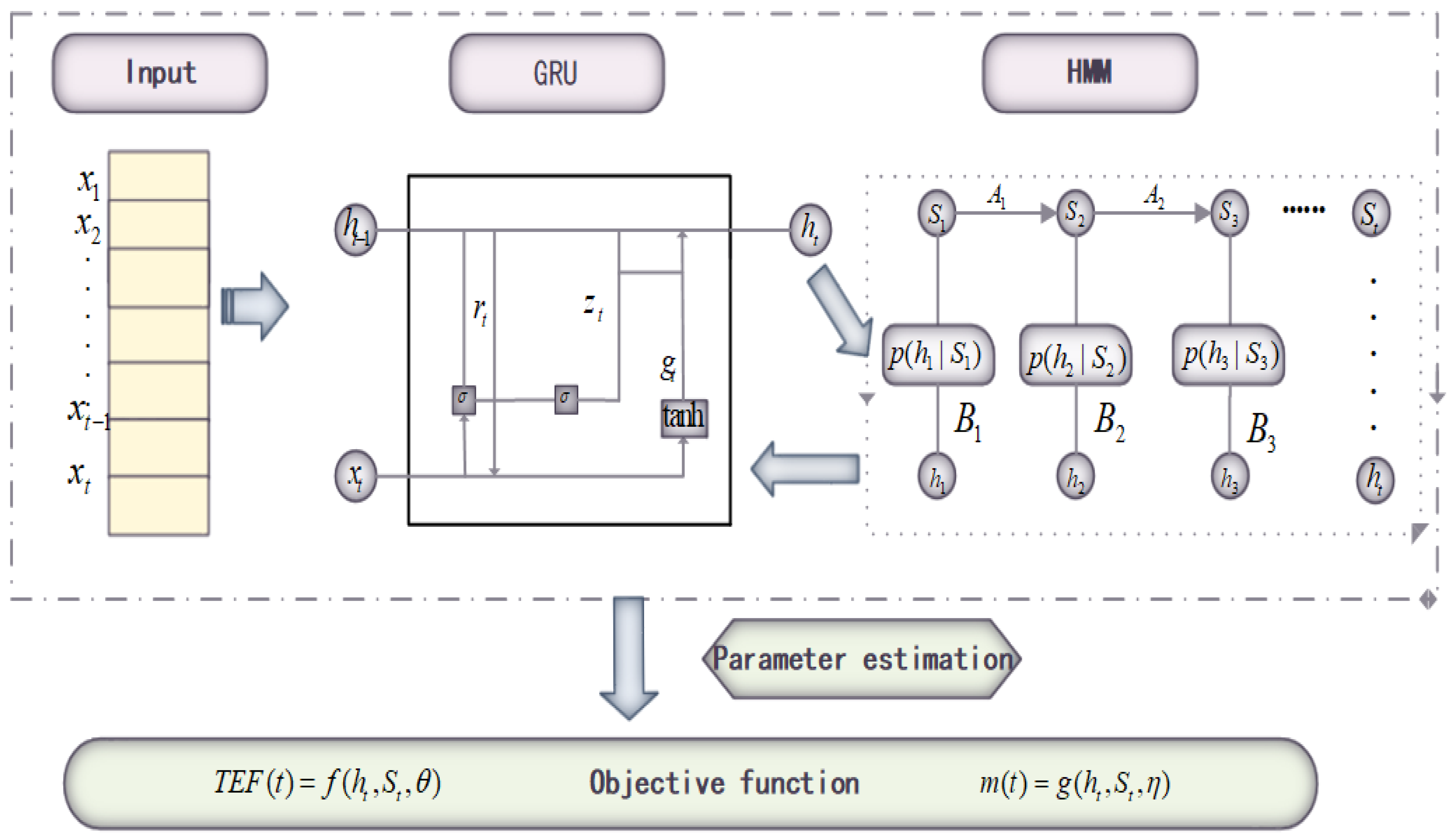

3.2. Parameter Estimation

This study introduces a hybrid GRU-HMM framework for robust parameter estimation in SRGMs, designed to overcome the limitations of traditional methods such as LSE in capturing nonlinear and phase-varying fault dynamics. The GRU module acts as a temporal encoder, processing sequential testing data (e.g., CPU hours and defect counts) to model complex interactions between testing effort and failures. It is preferred over vanilla RNNs and LSTMs because it balances gradient stability against computational efficiency. The resulting latent features are then processed by an HMM, which treats them as observations emitted by discrete hidden states, each representing a distinct testing phase with its own parameter set. By inferring the transition probabilities between these states, the HMM adjusts the parameters in response to shifts in testing strategy, keeping the model aligned with the actual testing process. This coupling yields time-varying reliability models that preserve the analytical form of SRGMs while integrating data-driven dynamics. The framework is further regularized with domain knowledge to ensure physical plausibility, and it employs Monte Carlo dropout for uncertainty quantification, providing the confidence intervals needed for release planning. The result is an accurate, interpretable, and adaptable tool for reliability assessment in complex testing environments.

GRU-HMM Architecture Design

The proposed architecture consists of two components: a GRU network for temporal feature extraction, and an HMM-based phase inference and parameter fusion module for multi-stage parameter estimation.

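- (1) GRU Temporal Encoder

Given the normalized input sequence $\mathbf{x}_{1:T} = (\mathbf{x}_1, \ldots, \mathbf{x}_T)$ (e.g., test effort or observed failures), the GRU network processes the sequence and computes the hidden state $\mathbf{h}_t$ at each time step t as follows:

$$
\begin{aligned}
\mathbf{z}_t &= \sigma\left(\mathbf{W}_z \mathbf{x}_t + \mathbf{U}_z \mathbf{h}_{t-1} + \mathbf{b}_z\right),\\
\mathbf{r}_t &= \sigma\left(\mathbf{W}_r \mathbf{x}_t + \mathbf{U}_r \mathbf{h}_{t-1} + \mathbf{b}_r\right),\\
\tilde{\mathbf{h}}_t &= \tanh\left(\mathbf{W}_h \mathbf{x}_t + \mathbf{U}_h \left(\mathbf{r}_t \odot \mathbf{h}_{t-1}\right) + \mathbf{b}_h\right),\\
\mathbf{h}_t &= \left(1 - \mathbf{z}_t\right) \odot \mathbf{h}_{t-1} + \mathbf{z}_t \odot \tilde{\mathbf{h}}_t,
\end{aligned}
$$

where $\mathbf{h}_t$ is the hidden state at the current time step, $\mathbf{x}_t$ is the input data at the current time step, $\mathbf{r}_t$ and $\mathbf{z}_t$ are the outputs of the reset gate and update gate, respectively, $\tilde{\mathbf{h}}_t$ is the candidate hidden state, $\sigma(\cdot)$ is the logistic sigmoid, $\odot$ denotes element-wise multiplication, and the matrices $\mathbf{W}$, $\mathbf{U}$ and vectors $\mathbf{b}$ are learnable weights and biases.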
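The gate equations can be made concrete with a plain-Python sketch of one GRU cell run over a short sequence. It uses the convention h_t = (1 − z_t) ⊙ h_{t−1} + z_t ⊙ h̃_t; some libraries, such as PyTorch’s `torch.nn.GRU`, instead write h_t = (1 − z_t) ⊙ n_t + z_t ⊙ h_{t−1} (swapping the roles of z_t and 1 − z_t) and also apply the reset gate at a slightly different point. The class name, random weights, dimensions, and input values are hypothetical; in the proposed framework the weights would be learned.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(M, v):
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]

def rand_matrix(rows, cols, rng, scale=0.5):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

class GRUCell:
    """Single GRU cell: h_t = (1 - z_t) * h_{t-1} + z_t * h~_t."""

    def __init__(self, input_dim, hidden_dim, seed=0):
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        # One (W, U, bias) triple per gate: update (z), reset (r), candidate (h).
        self.params = {
            g: (rand_matrix(hidden_dim, input_dim, rng),
                rand_matrix(hidden_dim, hidden_dim, rng),
                [0.0] * hidden_dim)
            for g in ("z", "r", "h")
        }

    def _affine(self, gate, x, h):
        W, U, bias = self.params[gate]
        return [a + c + d for a, c, d in zip(matvec(W, x), matvec(U, h), bias)]

    def step(self, x, h_prev):
        z = [sigmoid(v) for v in self._affine("z", x, h_prev)]          # update gate
        r = [sigmoid(v) for v in self._affine("r", x, h_prev)]          # reset gate
        reset_h = [ri * hi for ri, hi in zip(r, h_prev)]                 # r_t * h_{t-1}
        h_cand = [math.tanh(v) for v in self._affine("h", x, reset_h)]  # candidate state
        return [(1.0 - zi) * hp + zi * hc for zi, hp, hc in zip(z, h_prev, h_cand)]

    def run(self, xs):
        """Encode a sequence of input vectors; returns the list of hidden states."""
        h, states = [0.0] * self.hidden_dim, []
        for x in xs:
            h = self.step(x, h)
            states.append(h)
        return states

if __name__ == "__main__":
    # Hypothetical normalized inputs: (cumulative effort, cumulative failures).
    xs = [[0.05 * t, 0.04 * t] for t in range(1, 11)]
    cell = GRUCell(input_dim=2, hidden_dim=3, seed=42)
    for t, h in enumerate(cell.run(xs), start=1):
        print(t, [round(v, 4) for v in h])
```

Because each new state is a convex combination of the previous state and a tanh output, the hidden states stay in (−1, 1) when the encoder starts from a zero state.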

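- (2) HMM Inference Module

The output sequence $\mathbf{h}_{1:T}$ is modeled as the observations of an HMM with a discrete hidden state set $\mathcal{S} = \{1, 2, \ldots, K\}$, whose states correspond to latent test phases. Each hidden state $k \in \mathcal{S}$ is associated with a distinct SRGM parameter vector, collecting the total fault content, the Burr shape parameters, and the TEF parameters of that phase:

$$\boldsymbol{\theta}_k = \left(N_k,\; b_k,\; \kappa_k,\; \boldsymbol{\phi}_k\right), \quad k = 1, \ldots, K.$$

Note: The parameter $\kappa_k$ denotes the shape parameter k of the Burr distribution, distinguished from the hidden state index k; $\boldsymbol{\phi}_k$ denotes the TEF parameters.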

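The hidden state process $\{s_t\}$ is modeled as a discrete-time Markov chain defined by:

Initial state distribution: $\pi_k = P(s_1 = k)$, where $\sum_{k=1}^{K} \pi_k = 1$.

State transition probabilities: $a_{ij} = P(s_t = j \mid s_{t-1} = i)$, forming the transition matrix $A = [a_{ij}] \in \mathbb{R}^{K \times K}$ with $\sum_{j=1}^{K} a_{ij} = 1$ for all i.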

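The emission distribution links the continuous GRU-generated latent features $\mathbf{h}_t$ to the discrete hidden states $s_t$. We assume it follows a multivariate Gaussian distribution:

$$p\left(\mathbf{h}_t \mid s_t = k\right) = \mathcal{N}\left(\mathbf{h}_t;\, \boldsymbol{\mu}_k,\, \boldsymbol{\Sigma}_k\right),$$

where $\boldsymbol{\mu}_k$ is the mean vector and $\boldsymbol{\Sigma}_k$ is a diagonal covariance matrix. Both $\boldsymbol{\mu}_k$ and $\boldsymbol{\Sigma}_k$ are learnable parameters representing the distribution of the GRU hidden states conditioned on hidden state k.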

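To compute the posterior state probabilities $\gamma_t(k) = P(s_t = k \mid \mathbf{h}_{1:T})$, we employ the forward–backward algorithm:

Forward Pass: Compute the forward probabilities $\alpha_t(k) = P(\mathbf{h}_{1:t}, s_t = k)$ recursively:

$$\alpha_1(k) = \pi_k\, p(\mathbf{h}_1 \mid s_1 = k), \qquad \alpha_t(j) = \left[\sum_{i=1}^{K} \alpha_{t-1}(i)\, a_{ij}\right] p(\mathbf{h}_t \mid s_t = j), \quad t = 2, \ldots, T.$$

Backward Pass: Compute the backward probabilities $\beta_t(k) = P(\mathbf{h}_{t+1:T} \mid s_t = k)$ recursively:

$$\beta_T(k) = 1, \qquad \beta_t(i) = \sum_{j=1}^{K} a_{ij}\, p(\mathbf{h}_{t+1} \mid s_{t+1} = j)\, \beta_{t+1}(j), \quad t = T-1, \ldots, 1.$$

Using these forward and backward probabilities, the posterior state probability at each time step is given by

$$\gamma_t(k) = \frac{\alpha_t(k)\, \beta_t(k)}{\sum_{j=1}^{K} \alpha_t(j)\, \beta_t(j)}.$$

This posterior represents the probability of being in state k at time t, given all observed GRU features $\mathbf{h}_{1:T}$. The denominator ensures proper normalization across all possible states.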

The parameters of the HMM (the initial distribution $\boldsymbol{\pi}$, the transition matrix A, and the emission parameters $\{\boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k\}_{k=1}^{K}$) are learned jointly with the GRU parameters through backpropagation, maximizing the likelihood of the observed sequence $\mathbf{h}_{1:T}$.
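The forward–backward recursions described above fit in a few lines. The sketch below is a minimal scaled implementation (normalizing the forward variables at every step to avoid numerical underflow on long sequences) for an HMM with diagonal-Gaussian emissions over GRU feature vectors. It computes the posteriors γ_t(k) and the log-likelihood for fixed parameters only; the gradient-based joint training with the GRU is not shown, and the function names and all numeric values are hypothetical.

```python
import math

def diag_gauss_pdf(x, mean, var):
    """Density of a multivariate Gaussian with diagonal covariance."""
    log_p = 0.0
    for xi, mi, vi in zip(x, mean, var):
        log_p += -0.5 * (math.log(2.0 * math.pi * vi) + (xi - mi) ** 2 / vi)
    return math.exp(log_p)

def forward_backward(obs, pi, A, means, variances):
    """Scaled forward-backward; returns (gamma, log_likelihood).

    gamma[t][k] = P(s_t = k | obs_1..T).
    """
    T, K = len(obs), len(pi)
    B = [[diag_gauss_pdf(o, means[k], variances[k]) for k in range(K)] for o in obs]

    # Forward pass with per-step normalization constants c[t].
    alpha, c, prev = [], [], None
    for t in range(T):
        if t == 0:
            a = [pi[k] * B[0][k] for k in range(K)]
        else:
            a = [sum(prev[i] * A[i][j] for i in range(K)) * B[t][j] for j in range(K)]
        c_t = sum(a)
        prev = [v / c_t for v in a]
        alpha.append(prev)
        c.append(c_t)

    # Backward pass, scaled with the same constants.
    beta = [[1.0] * K for _ in range(T)]
    for t in range(T - 2, -1, -1):
        beta[t] = [sum(A[i][j] * B[t + 1][j] * beta[t + 1][j] for j in range(K)) / c[t + 1]
                   for i in range(K)]

    gamma = []
    for t in range(T):
        g = [alpha[t][k] * beta[t][k] for k in range(K)]
        s = sum(g)
        gamma.append([v / s for v in g])
    return gamma, sum(math.log(v) for v in c)

if __name__ == "__main__":
    # Two hypothetical testing phases, with 1-D GRU features for readability.
    pi = [0.9, 0.1]
    A = [[0.8, 0.2], [0.1, 0.9]]
    means, variances = [[0.0], [2.0]], [[0.5], [0.5]]
    obs = [[0.1], [-0.2], [0.3], [1.9], [2.2], [2.0]]
    gamma, ll = forward_backward(obs, pi, A, means, variances)
    for t, g in enumerate(gamma, start=1):
        print(t, [round(p, 3) for p in g])
    print("log-likelihood:", round(ll, 3))
```

With scaling, the product of the scaled forward and backward variables is already the posterior, and the log-likelihood is the sum of the logarithms of the scaling constants.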

- (3) Parameter Fusion and SRGM Construction

The posterior state distribution $\gamma_t(k)$ obtained from the HMM inference module is used to compute a time-varying parameter vector through weighted fusion:

$$\boldsymbol{\theta}(t) = \sum_{k=1}^{K} \gamma_t(k)\, \boldsymbol{\theta}_k,$$

where $\boldsymbol{\theta}_k$ represents the SRGM parameter vector associated with hidden state k, as defined in Equation (17).

The fused parameters are substituted into the analytical SRGM equations to compute the testing effort function $W(t)$, the fault detection rate $r(t)$, and the cumulative failure mean value function $m(t)$.
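A minimal sketch of the weighted fusion step, assuming the posteriors γ_t(k) are already available (for example from a forward–backward pass) and that each phase carries a parameter dictionary with hypothetical keys `N` (total fault content) and `b`, `kappa` (Burr shape parameters). Averaging the parameters is not the same as averaging the phase-specific predictions, because the SRGM is nonlinear in its parameters.

```python
def fuse_parameters(gamma_t, phase_params):
    """theta(t) = sum_k gamma_t(k) * theta_k, applied key by key."""
    if abs(sum(gamma_t) - 1.0) > 1e-9:
        raise ValueError("posterior weights must sum to 1")
    keys = phase_params[0].keys()
    return {key: sum(g * p[key] for g, p in zip(gamma_t, phase_params)) for key in keys}

if __name__ == "__main__":
    # Hypothetical parameters for two latent testing phases.
    phases = [
        {"N": 100.0, "b": 1.5, "kappa": 0.3},
        {"N": 140.0, "b": 2.5, "kappa": 0.1},
    ]
    for gamma_t in ([0.9, 0.1], [0.5, 0.5], [0.2, 0.8]):
        print(gamma_t, fuse_parameters(gamma_t, phases))
```

As the posterior mass moves from one phase to the other, the fused parameters move smoothly between the phase-specific values, which is what makes the resulting SRGM time-varying.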

3.3. Loss Function and Optimization

To train the model, the predicted cumulative fault count $\hat{m}(t)$ is compared with the actual observed value $y_t$, and the mean squared error is used as the loss function:

$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{T} \sum_{t=1}^{T} \left(\hat{m}(t) - y_t\right)^2.$$

The entire network is trained end-to-end using the Adam optimizer, with joint optimization of parameters across multiple components. These include:

- the weights of the GRU network;
- the initial state distribution $\boldsymbol{\pi}$ and state transition matrix A of the HMM;
- the Gaussian emission parameters $\boldsymbol{\mu}_k$ and $\boldsymbol{\Sigma}_k$ for each hidden state;
- the SRGM parameter set $\boldsymbol{\theta}_k$ for each latent state k.

This enables dynamic estimation of multi-phase software reliability model parameters.

3.4. Innovation: Adaptive Regularization and Uncertainty Quantification

To enhance estimation robustness and quantify predictive confidence, we introduce two key improvements:

3.4.1. Physics-Informed Adaptive Regularization

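We augment the loss function with two domain-driven regularization terms:

$$\mathcal{L} = \mathcal{L}_{\mathrm{MSE}} + \lambda_1 \mathcal{R}_{\mathrm{bound}} + \lambda_2 \mathcal{R}_{\mathrm{FDR}},$$

where the first term, $\mathcal{R}_{\mathrm{bound}}$, penalizes parameter values outside the range $[\boldsymbol{\theta}^{\min}, \boldsymbol{\theta}^{\max}]$, which represents empirical parameter bounds derived from historical data. The second term, $\mathcal{R}_{\mathrm{FDR}}$, enforces non-negativity of the fault detection rate. The hyperparameters $\lambda_1$ and $\lambda_2$ are optimized via Bayesian optimization.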
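The exact penalty forms are not spelled out here, so the following is only one plausible instantiation, written as a hypothetical sketch: squared hinge penalties for parameters that leave their empirical bounds [θ_min, θ_max], plus a squared hinge on negative fault detection rates, added to the MSE loss with weights λ₁ and λ₂. All function names, parameter keys, and numbers are assumptions for illustration; the Bayesian optimization of λ₁ and λ₂ and the Adam updates are not shown.

```python
def mse(pred, obs):
    """Mean squared error between predicted and observed cumulative fault counts."""
    return sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs)

def bound_penalty(theta, lower, upper):
    """Squared hinge penalty for each parameter outside [lower, upper]."""
    total = 0.0
    for key, value in theta.items():
        total += max(0.0, lower[key] - value) ** 2 + max(0.0, value - upper[key]) ** 2
    return total

def fdr_penalty(rates):
    """Squared hinge penalty on negative fault detection rates."""
    return sum(max(0.0, -r) ** 2 for r in rates)

def regularized_loss(pred, obs, phase_params, lower, upper, rates, lam1, lam2):
    """MSE + lam1 * (bound violations over all phases) + lam2 * (negative-FDR penalty)."""
    reg_bounds = sum(bound_penalty(th, lower, upper) for th in phase_params)
    return mse(pred, obs) + lam1 * reg_bounds + lam2 * fdr_penalty(rates)

if __name__ == "__main__":
    pred, obs = [10.0, 22.0, 31.0], [11.0, 21.0, 30.0]
    lower = {"N": 50.0, "b": 0.5}
    upper = {"N": 200.0, "b": 3.0}
    phases = [{"N": 120.0, "b": 3.5}, {"N": 40.0, "b": 1.0}]  # each violates one bound
    rates = [0.2, -0.05, 0.1]
    print(regularized_loss(pred, obs, phases, lower, upper, rates, lam1=0.1, lam2=10.0))
```

Hinge-style penalties are zero inside the admissible region, so they leave physically plausible parameters untouched and only push back on violations.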

3.4.2. Monte Carlo Dropout for Uncertainty Quantification

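We implement dropout within the GRU layers and perform M stochastic forward passes during inference, each with an independently sampled dropout mask, yielding the predictions

$$\left\{\hat{m}^{(j)}(t)\right\}_{j=1}^{M}.$$

The final prediction is obtained by averaging over the Monte Carlo samples:

$$\bar{m}(t) = \frac{1}{M} \sum_{j=1}^{M} \hat{m}^{(j)}(t).$$

Prediction intervals are constructed using the empirical quantiles of the Monte Carlo samples.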
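Once the M stochastic forward passes have been collected, the point prediction and interval reduce to simple summary statistics. The sketch below assumes the samples are already available (the numbers are hypothetical stand-ins for M = 10 dropout-perturbed predictions of m(t) at one time point) and uses linearly interpolated empirical quantiles, the same convention as NumPy’s default `numpy.quantile`; other quantile conventions give slightly different endpoints. The function names are hypothetical.

```python
import math

def empirical_quantile(samples, q):
    """Quantile with linear interpolation between order statistics (0 <= q <= 1)."""
    xs = sorted(samples)
    pos = q * (len(xs) - 1)
    lo, hi = math.floor(pos), math.ceil(pos)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)

def mc_dropout_summary(samples, level=0.95):
    """Mean prediction and central empirical interval from MC-dropout samples."""
    mean = sum(samples) / len(samples)
    tail = (1.0 - level) / 2.0
    return mean, (empirical_quantile(samples, tail), empirical_quantile(samples, 1.0 - tail))

if __name__ == "__main__":
    # Hypothetical predictions of m(t) from M = 10 dropout-perturbed passes.
    samples = [97.8, 101.2, 99.5, 100.4, 98.9, 102.3, 100.0, 99.1, 100.8, 99.7]
    mean, (low, high) = mc_dropout_summary(samples, level=0.9)
    print(f"mean = {mean:.2f}, 90% interval = [{low:.2f}, {high:.2f}]")
```

With small M the interval endpoints are driven by the few most extreme samples, so larger M gives more stable intervals at the cost of additional forward passes.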

Figure 2 illustrates the multi-stage workflow of the GRU-HMM framework for constructing the SRGM. The process begins with the input of sequential testing data. This data is first processed by a Gated Recurrent Unit network to capture temporal dependencies and extract relevant features. Subsequently, a Hidden Markov Model analyzes these features to infer the latent phases of the software testing process. Finally, the framework integrates the outputs through a dynamic parameter fusion mechanism to accurately estimate the parameters of the SRGM.

5. Conclusions

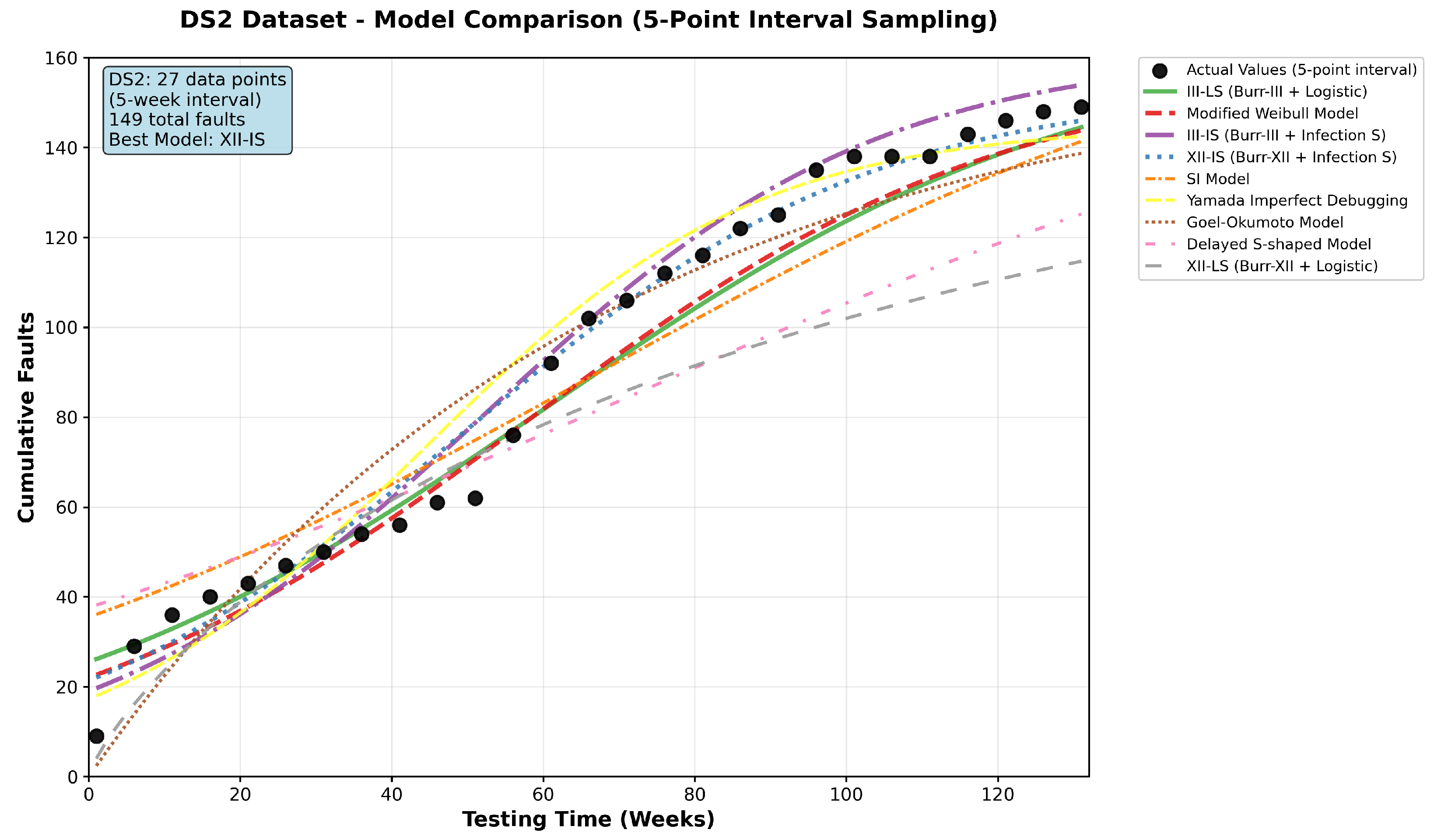

This study proposed a novel SRGM that integrates Burr-type fault detection rates with S-shaped testing effort functions, estimated using an advanced GRU-HMM framework. Our empirical validation on real-world datasets confirms that this approach substantially enhances predictive accuracy and fitting performance over traditional models.

The key findings reveal that S-shaped TEFs, particularly the Infection S-type, are superior for modeling the nonlinear consumption of testing effort. Furthermore, the GRU-HMM estimation method proved to be highly robust, especially for complex models and larger datasets, outperforming both LSE and a standalone GRU by effectively capturing phase transitions and temporal dependencies. Among the various combinations, the models pairing Burr distributions with the Infection S-shaped TEF (III-is and XII-is) consistently delivered the best performance. For instance, the XII-is model achieved an MSE of 48.9 and an AIC of 481.3 on the DS2 dataset, compared with MSE = 64.2 and AIC = 548.9 for the best baseline model, a reduction of about 23.8% in MSE and of 67.6 in AIC. This highlights the importance of combining a well-designed model with a powerful estimator.

To position our contribution within the broader context, we compare our approach with emerging deep learning-based methods. Unlike representative frameworks such as the deep neural network weight learning method by Wu et al. and the SRGM-as-activation model by Kim et al., which rely primarily on the temporal learning capability of neural networks, our GRU-HMM framework is explicitly designed to capture the discrete phase transitions inherent in software testing effort. This fundamental architectural difference, namely the combination of a GRU for temporal dynamics with an HMM for latent phase inference, provides a principled solution to a key challenge in reliability modeling and offers a more structured and interpretable approach that is theoretically better suited to handling non-stationary dynamics.

Practically, the achieved higher prediction accuracy directly translates into more reliable release decisions and optimized testing resource allocation, potentially reducing costly post-release failures. Future work will focus on developing an automated framework for optimal model selection and optimizing the computational efficiency of the GRU-HMM estimator for broader deployment.