Eliminating Packing-Aware Masking via LoRA-Based Supervised Fine-Tuning of Large Language Models

Abstract

1. Introduction

2. Background and Related Work

2.1. Preliminaries

2.2. SDPA with Packing

2.3. SDPA with LoRA

3. Methodology

3.1. Decomposing SDPA Output Without PAM

3.2. Eliminating PAM via LoRA

3.3. Perturbation Influence in LoRA Without PAM

3.4. Runtime Overheads of PAM

| Algorithm 1 Packing-aware mask generation | |
|---|---|
| 1: function MakeBlockDiagKeepMaskAndPos() | |
| 2: | |
| 3: if or then | |
| 4: | |
| 5: end if | |
| 6: | ▷ append end sentinel |
| 7: ; | ▷ keep-mask (1 = keep, 0 = mask) |
| 8: for to do | |
| 9: , | ▷ segment |
| 10: if then | |
| 11: | ▷ place a 1-block on the diagonal |
| 12: end if | |
| 13: end for | |
| 14: | ▷ outer product; zero padded rows/cols |
| 15: | ▷ M stays a keep-mask for Algorithm 2 |
| 16: return | |
| 17: end function | |
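The steps annotated in Algorithm 1 (end sentinel, 1-blocks on the diagonal, outer product that zeroes padded rows and columns) can be illustrated with a minimal pure-Python sketch. The inputs `seg_lens` and `total_len`, the snake-case function name, and the choice to restart positions at 0 in every segment are assumptions made for this illustration; they are not taken from the original listing.

```python
from typing import List, Tuple


def make_block_diag_keep_mask_and_pos(
    seg_lens: List[int], total_len: int
) -> Tuple[List[List[int]], List[int]]:
    """Build a keep-mask (1 = keep, 0 = mask) and per-segment positions.

    seg_lens and total_len are hypothetical inputs chosen for this sketch.
    """
    used = sum(seg_lens)
    if used > total_len or any(n < 0 for n in seg_lens):
        raise ValueError("segments must be non-negative and fit in total_len")

    # Segment start offsets, with an end sentinel appended.
    bounds = [0]
    for n in seg_lens:
        bounds.append(bounds[-1] + n)

    mask = [[0] * total_len for _ in range(total_len)]
    pos = [0] * total_len
    for start, end in zip(bounds[:-1], bounds[1:]):
        if end > start:
            for i in range(start, end):
                pos[i] = i - start  # assumption: positions restart per segment
                for j in range(start, end):
                    mask[i][j] = 1  # place a 1-block on the diagonal

    # Outer product with a validity vector zeroes padded rows and columns.
    valid = [1 if i < used else 0 for i in range(total_len)]
    mask = [
        [mask[i][j] * valid[i] * valid[j] for j in range(total_len)]
        for i in range(total_len)
    ]
    return mask, pos
```

For two packed segments of lengths 2 and 3 in a row of length 6, tokens attend only within their own segment and the final padded position is fully masked. A causal constraint, if required, would be applied on top of this keep-mask.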

| Algorithm 2 Collate with masking and timing |


- For a 3D block mask, converting the mask to float32 (line 10) and mapping the masked positions to negative values (line 12) each perform full-tensor reads, writes, and arithmetic over every mask element. This makes the step bandwidth-bound and adds kernel launches relative to simply forwarding the mask.

- Repeated preprocessing with no semantic effect, such as the additional ‘.contiguous()’ and ‘.clone()’ no-ops in lines 13 to 15, introduces extra kernels and full-tensor copies. When repeated rep times, these costs scale linearly with rep, further amplifying GPU memory traffic without changing the computed values.

- Forcing the SDPA backend to math instead of Flash attention [18] (i.e., disabling the flash and mem_efficient backends) removes the most optimized attention kernels, as shown in line 23. Even with identical masks, the attention computation itself becomes slower, compounding the overheads introduced by the first two factors.

- Performing the above transformations inside each attention layer causes the same mask to be reprocessed L times per forward pass (for L layers), so layer-local preprocessing multiplies both kernel count and memory traffic (lines 8 to 17). In contrast, a cached additive mask prepared once at the model level would amortize this cost.
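The difference between layer-local and cached mask preprocessing can be made concrete with a small pure-Python sketch. It counts how many mask elements the keep-mask to additive-mask conversion touches in each case. The class and function names are hypothetical, and the use of 0 for kept positions and negative infinity for masked positions is an assumed convention rather than the values used in the original implementation.

```python
import math
from typing import List

Matrix = List[List[float]]


class MaskPreprocessor:
    """Counts how many mask elements the conversion reads and rewrites."""

    def __init__(self) -> None:
        self.elements_touched = 0

    def to_additive(self, keep_mask: List[List[int]]) -> Matrix:
        # Full pass over the mask: keep -> 0.0, masked -> -inf (assumed values).
        self.elements_touched += sum(len(row) for row in keep_mask)
        return [[0.0 if k else -math.inf for k in row] for row in keep_mask]


def softmax_row(scores: List[float], bias: List[float]) -> List[float]:
    """Softmax over one score row after adding the additive mask row."""
    shifted = [s + b for s, b in zip(scores, bias)]
    top = max(shifted)  # assumes at least one kept position in the row
    exps = [math.exp(x - top) for x in shifted]
    total = sum(exps)
    return [e / total for e in exps]


def preprocess_per_layer(keep_mask: List[List[int]], num_layers: int) -> int:
    pre = MaskPreprocessor()
    for _ in range(num_layers):
        pre.to_additive(keep_mask)  # same mask reprocessed in every layer
    return pre.elements_touched


def preprocess_cached(keep_mask: List[List[int]], num_layers: int) -> int:
    pre = MaskPreprocessor()
    bias = pre.to_additive(keep_mask)  # converted once at the model level
    for _ in range(num_layers):
        _ = bias  # each layer would reuse the cached additive mask
    return pre.elements_touched
```

With a 4 × 4 mask and three layers, the per-layer variant touches three times as many elements as the cached variant, while both produce the same attention weights.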

| Algorithm 3 Runtime overheads in attention layer with PAM | |
|---|---|
| 1: function ComplexCustomAttentionForward() | |
| 2: | ▷, M may be , |
| 3: | |
| 4: | |
| 5: | ▷ user options: enable, preprocess_in_forward, repeat_preproc, disable_sdp |
| 6: if and then | ▷ supports |
| 7: | |
| 8: if and then | |
| 9: if then | |
| 10: | |
| 11: end if | |
| 12: | ▷ 0/1 keep-mask → additive mask: keep, mask |
| 13: for to do | |
| 14: ; Contiguous(m); Clone(m) | |
| 15: | ▷ intentional kernel/memory stress without changing values |
| 16: end for | |
| 17: end if | |
| 18: Unsqueeze(m, axis = 1) | ▷ |
| 19: else if then | ▷ padding mask path |
| 20: | |
| 21: end if | |
| 22: if and then | |
| 23: return MathOnly) | |
| 24: | ▷ force math-only attention (disable Flash/SDPA) |
| 25: else | |
| 26: return | |
| 27: end if | |
| 28: end function | |
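For reference, the keep-mask to additive-mask conversion noted in line 12 of Algorithm 3 corresponds to the standard masked form of scaled dot-product attention. The block below is a sketch in generic notation: the symbols $M^{\mathrm{keep}}$, $M^{\mathrm{add}}$, and $d_k$ are assumed here for illustration, and the use of $-\infty$ (in practice often a large negative constant) is a common convention rather than a value recovered from the listing.

```latex
\[
\mathrm{Attn}(Q, K, V)
  = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}} + M^{\mathrm{add}}\right) V,
\qquad
M^{\mathrm{add}}_{ij} =
\begin{cases}
0, & M^{\mathrm{keep}}_{ij} = 1 \quad (\text{keep}),\\[2pt]
-\infty, & M^{\mathrm{keep}}_{ij} = 0 \quad (\text{mask}).
\end{cases}
\]
```

Because $\exp(-\infty) = 0$, masked positions receive zero attention weight after the softmax, so the additive form reproduces the effect of the 0/1 keep-mask.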

4. Results and Discussion

4.1. Experimental Setup

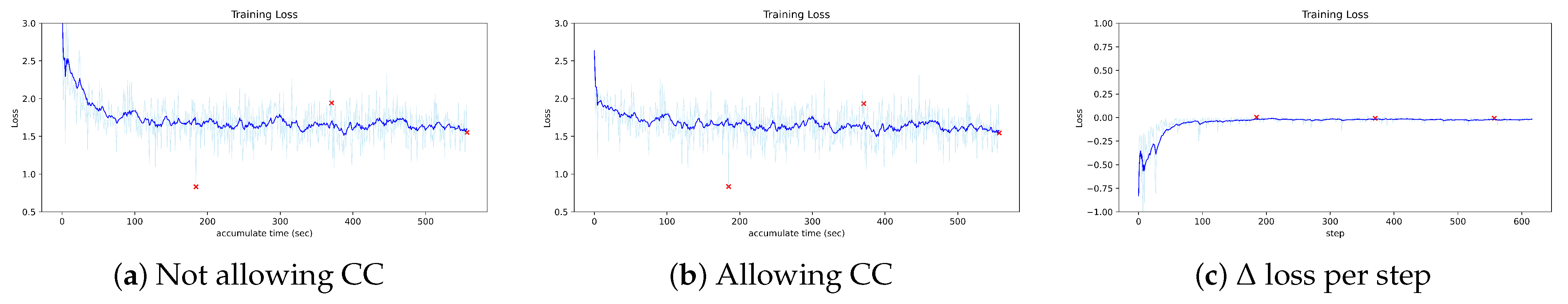

4.2. Reducing Training Time by Eliminating PAM

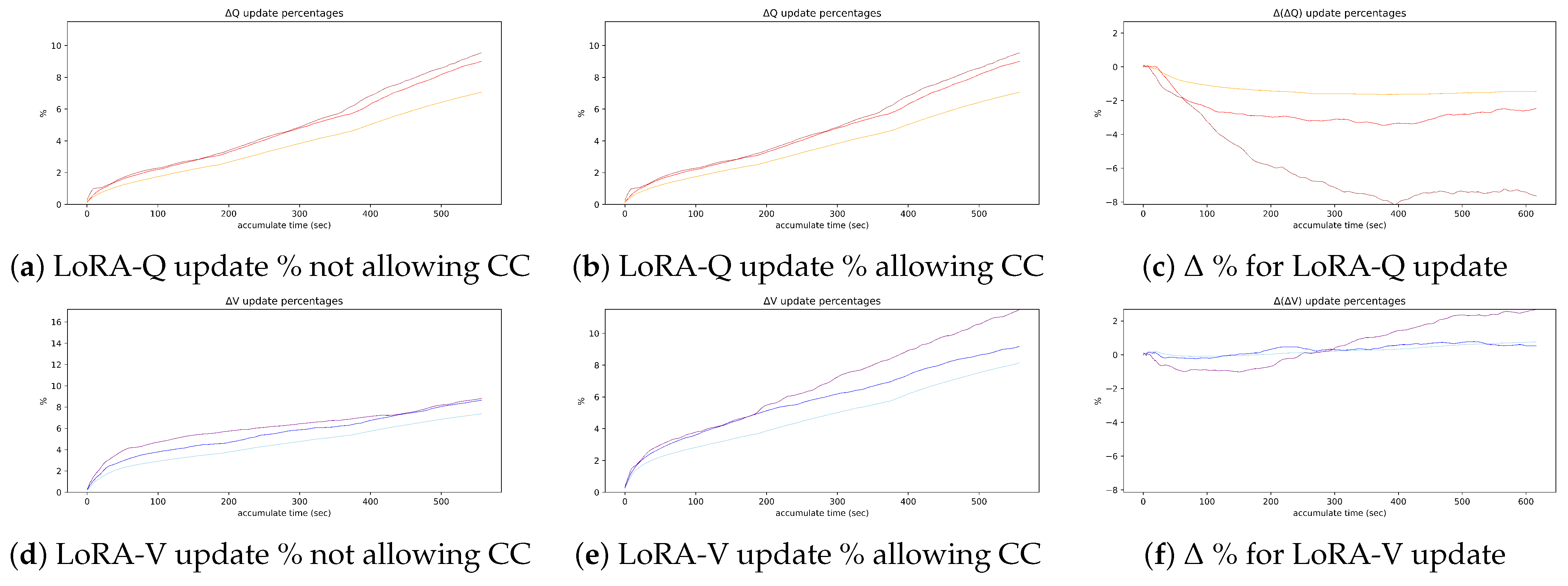

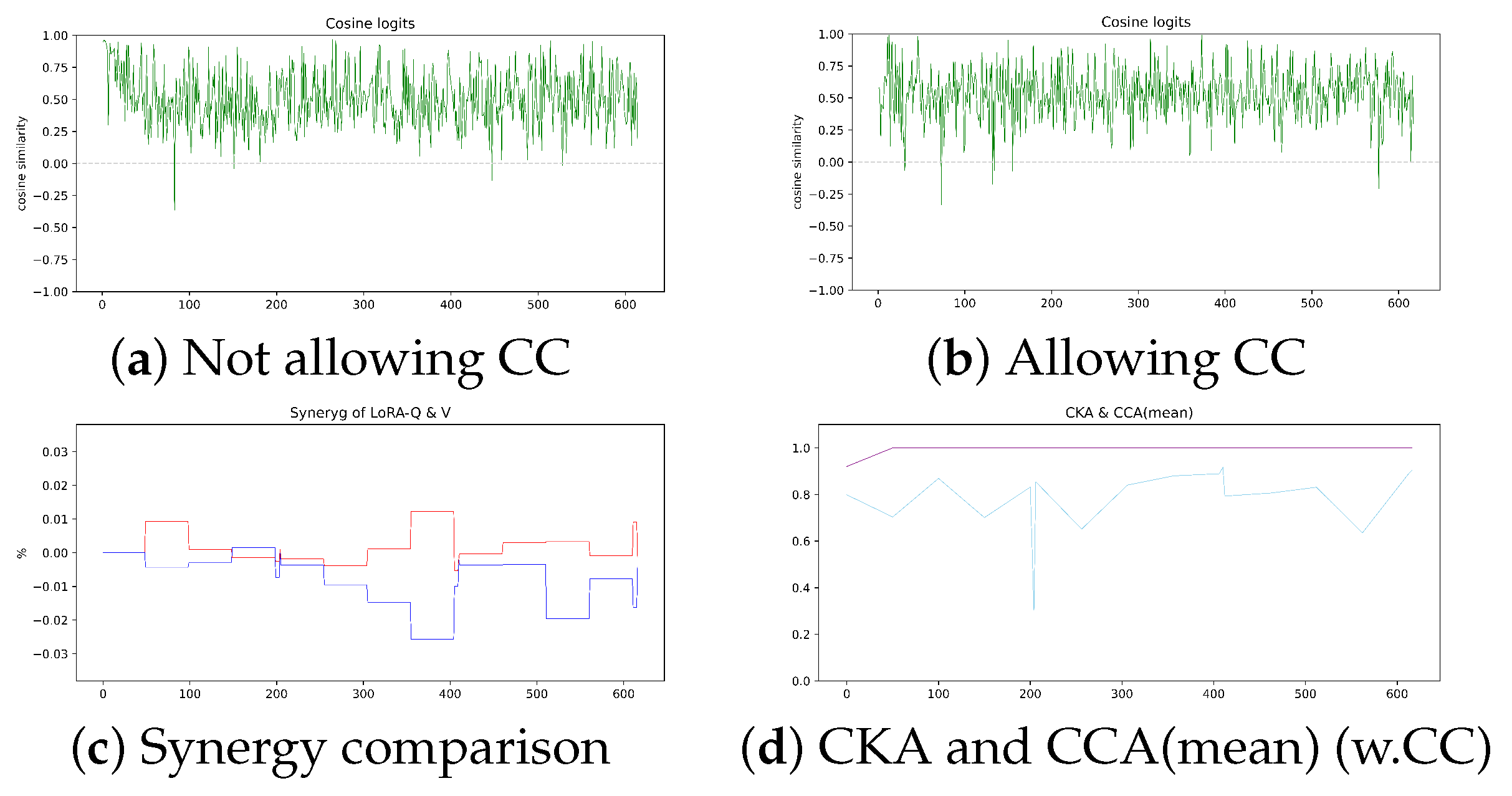

4.3. Complementary Training Between LoRA-V and LoRA-Q

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Derivation of First-Order Perturbations for LoRA-Q and LoRA-V

Appendix B. Detailed Tables for Training Time Results

| Models | Allow CC | Packing Time (s) | Step Time (s) | Mask gen. (ms) | Mask h2d. (ms) | Mask (ms) | Step Time − Mask (s) | Epoch Time (s) |
|---|---|---|---|---|---|---|---|---|
| NR | × | 95.0622 | 0.7413 | 2.3773 | 0.4832 | 2.8605 | 0.7384 | 152.4633 |
| | ✓ | 93.6683 | 0.7395 | 0.1182 | 0.0877 | 0.2059 | 0.7393 | 152.1033 |
| | Δ | −1.3939 | −0.0018 | −2.2591 | −0.3955 | −2.6546 | 0.0009 | −0.3600 |
| | Δ (%) | −1.4663 | −0.2428 | −95.0280 | −81.8502 | −92.8020 | 0.1157 | −0.2361 |
| HL | × | 112.5778 | 0.7217 | 2.0247 | 0.4538 | 2.4785 | 0.7192 | 148.4300 |
| | ✓ | 111.4029 | 0.7241 | 0.1204 | 0.1682 | 0.2886 | 0.7238 | 148.9233 |
| | Δ | −1.1749 | 0.0024 | −1.9042 | −0.2856 | −2.1899 | 0.0046 | 0.4933 |
| | Δ (%) | −1.0437 | 0.3325 | −94.0534 | −62.9352 | −88.3559 | 0.6382 | 0.3323 |
| OR | × | 74.0801 | 0.7365 | 2.3400 | 0.3996 | 2.7395 | 0.7338 | 140.4333 |
| | ✓ | 72.8015 | 0.7193 | 0.1047 | 0.0781 | 0.1828 | 0.7191 | 137.1533 |
| | Δ | −1.2786 | −0.0172 | −2.2353 | −0.3215 | −2.5568 | −0.0146 | −3.2800 |
| | Δ (%) | −1.7260 | −2.3354 | −95.5256 | −80.4555 | −93.3272 | −1.9957 | −2.3356 |
| MR | × | 75.4886 | 0.8768 | 1.0961 | 0.2668 | 1.3629 | 0.8754 | 349.5333 |
| | ✓ | 74.3069 | 0.8682 | 0.1080 | 0.1013 | 0.2093 | 0.8680 | 346.1067 |
| | Δ | −1.1817 | −0.0086 | −0.9880 | −0.1656 | −1.1536 | −0.0074 | −3.4267 |
| | Δ (%) | −1.5654 | −0.9808 | −90.1469 | −62.0315 | −84.6430 | −0.8506 | −0.9803 |
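In the timing tables, the third and fourth rows of each group appear to be the absolute difference between the ✓ and × settings and that difference as a percentage of the × value. The following minimal sketch checks this reading against the NR rows of the table above; the helper name `delta_and_percent` is hypothetical, and small deviations are expected because the published inputs are rounded to four decimals.

```python
from typing import Tuple


def delta_and_percent(baseline: float, treated: float) -> Tuple[float, float]:
    """Return (treated - baseline, percentage change relative to baseline)."""
    delta = treated - baseline
    return delta, 100.0 * delta / baseline


# NR rows from the table above: the x setting is the baseline, the check-mark
# setting is the treated value.
packing_delta, packing_pct = delta_and_percent(95.0622, 93.6683)
mask_gen_delta, mask_gen_pct = delta_and_percent(2.3773, 0.1182)
```

Under this reading, the packing-time change for NR is about −1.39 s (roughly −1.47%), while mask generation time drops by about 95%.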

| Models | Allow CC | Packing Time (s) | Step Time (s) | Mask gen. (ms) | Mask h2d. (ms) | Mask (ms) | Step Time − Mask (s) | Epoch Time (s) |
|---|---|---|---|---|---|---|---|---|
| NR | × | 427.7267 | 0.7496 | 2.3093 | 0.3778 | 2.6870 | 0.7469 | 381.2733 |
| | ✓ | 424.4628 | 0.7346 | 0.1704 | 0.1522 | 0.3227 | 0.7343 | 373.6800 |
| | Δ | −3.2639 | −0.0149 | −2.1388 | −0.2255 | −2.3644 | −0.0126 | −7.5933 |
| | Δ (%) | −0.7631 | −2.0011 | −92.6211 | −59.7141 | −87.9903 | −1.6917 | −1.9916 |
| HL | × | 374.9585 | 0.7537 | 2.5680 | 0.3756 | 2.9436 | 0.7508 | 383.3700 |
| | ✓ | 371.2932 | 0.7457 | 0.1661 | 0.1158 | 0.2819 | 0.7454 | 379.3267 |
| | Δ | −3.6653 | −0.0079 | −2.4019 | −0.2598 | −2.6617 | −0.0053 | −4.0433 |
| | Δ (%) | −0.9775 | −1.0614 | −93.5319 | −69.1693 | −90.4233 | −0.7111 | −1.0547 |
| OR | × | 357.2428 | 0.7430 | 2.2023 | 0.4577 | 2.6599 | 0.7403 | 349.6967 |
| | ✓ | 354.3143 | 0.7334 | 0.1282 | 0.1501 | 0.2784 | 0.7331 | 345.2100 |
| | Δ | −2.9285 | −0.0095 | −2.0740 | −0.3075 | −2.3816 | −0.0072 | −4.4867 |
| | Δ (%) | −0.8198 | −1.2921 | −94.1788 | −67.2056 | −89.5334 | −0.9750 | −1.2830 |
| MR | × | 377.5409 | 0.8698 | 1.2790 | 0.2471 | 1.5260 | 0.8683 | 849.5233 |
| | ✓ | 374.1231 | 0.8696 | 0.1125 | 0.1227 | 0.2352 | 0.8694 | 849.3233 |
| | Δ | −3.4178 | −0.0002 | −1.1665 | −0.1243 | −1.2908 | 0.0011 | −0.2000 |
| | Δ (%) | −0.9053 | −0.0230 | −91.2041 | −50.3440 | −84.5872 | 0.1256 | −0.0235 |

| Models | Allow CC | Packing Time (s) | Step Time (s) | Mask gen. (ms) | Mask h2d. (ms) | Mask (ms) | Step Time − Mask (s) | Epoch Time (s) |
|---|---|---|---|---|---|---|---|---|
| NR | × | 1081.9873 | 0.7433 | 2.1832 | 0.3702 | 2.5534 | 0.7407 | 841.1333 |
| | ✓ | 1075.0005 | 0.7395 | 0.1253 | 0.0799 | 0.2052 | 0.7393 | 836.8600 |
| | Δ | −6.9868 | −0.0038 | −2.0580 | −0.2902 | −2.3482 | −0.0015 | −4.2733 |
| | Δ (%) | −0.6457 | −0.5112 | −94.2607 | −78.4171 | −91.9637 | −0.1960 | −0.5080 |
| HL | × | 1013.1999 | 0.7645 | 2.2612 | 0.3829 | 2.6441 | 0.7619 | 865.1433 |
| | ✓ | 1005.8142 | 0.7399 | 0.0857 | 0.0933 | 0.1791 | 0.7397 | 837.2800 |
| | Δ | −7.3857 | −0.0246 | −2.1755 | −0.2896 | −2.4651 | −0.0221 | −27.8633 |
| | Δ (%) | −0.7290 | −3.2178 | −96.2100 | −75.6333 | −93.2264 | −2.9054 | −3.2207 |
| OR | × | 908.6478 | 0.7459 | 2.0874 | 0.4092 | 2.4966 | 0.7434 | 781.4233 |
| | ✓ | 902.3665 | 0.7379 | 0.0889 | 0.1283 | 0.2172 | 0.7377 | 773.1000 |
| | Δ | −6.2812 | −0.0079 | −1.9985 | −0.2809 | −2.2794 | −0.0057 | −8.3233 |
| | Δ (%) | −0.6913 | −1.0725 | −95.7411 | −68.6461 | −91.3002 | −0.7695 | −1.0651 |
| MR | × | 943.3118 | 0.8586 | 1.1662 | 0.2702 | 1.4365 | 0.8572 | 1864.6200 |
| | ✓ | 936.4189 | 0.8529 | 0.1082 | 0.1044 | 0.2126 | 0.8527 | 1852.2800 |
| | Δ | −6.8929 | −0.0057 | −1.0580 | −0.1658 | −1.2238 | −0.0045 | −12.3400 |
| | Δ (%) | −0.7307 | −0.6639 | −90.7220 | −61.3620 | −85.2001 | −0.5222 | −0.6618 |

| Exp. | Allow CC | Packing Time (s) | Step Time (s) | Mask gen. (ms) | Mask h2d. (ms) | Mask (ms) | Step Time − Mask (s) | Epoch Time (s) |
|---|---|---|---|---|---|---|---|---|
| Online (Fast) | × | 62.5291 | 0.8278 | 1.1136 | 0.2923 | 1.4059 | 0.8264 | 732.3633 |
| | ✓ | 59.8893 | 0.8272 | 0.1190 | 0.1996 | 0.3186 | 0.8269 | 731.7800 |
| | Δ | −2.6398 | −0.0007 | −0.9947 | −0.0927 | −1.0874 | 0.0005 | −0.5833 |
| | Δ (%) | −4.2218 | −0.0725 | −89.3139 | −31.7140 | −77.3384 | 0.0590 | −0.0796 |
| Offline (Fast) | × | 94.0748 | 0.8306 | 1.1359 | 0.2363 | 1.3722 | 0.8292 | 682.4933 |
| | ✓ | 91.7167 | 0.8303 | 0.1792 | 0.2463 | 0.4255 | 0.8299 | 682.2200 |
| | Δ | −2.3581 | −0.0003 | −0.9566 | 0.0100 | −0.9467 | 0.0006 | −0.2733 |
| | Δ (%) | −2.5067 | −0.0361 | −84.2240 | 4.2319 | −68.9914 | 0.0780 | −0.0400 |

| Trained Param. | Allow CC | Packing Time (s) | Step Time (s) | Mask gen. (ms) | Mask h2d. (ms) | Mask (ms) | Step Time − Mask (s) | Epoch Time (s) |
|---|---|---|---|---|---|---|---|---|
| LoRA-Q & LoRA-V | × | 95.0622 | 0.7413 | 2.3773 | 0.4832 | 2.8605 | 0.7384 | 152.4633 |
| | ✓ | 93.6683 | 0.7395 | 0.1182 | 0.0877 | 0.2059 | 0.7393 | 152.1033 |
| | Δ | −1.3939 | −0.0018 | −2.2591 | −0.3955 | −2.6546 | 0.0009 | −0.3600 |
| | Δ (%) | −1.4663 | −0.2428 | −95.0280 | −81.8502 | −92.8020 | 0.1157 | −0.2361 |
| LoRA-V-only | ✓ | 93.6826 | 0.6357 | 0.1414 | 0.0783 | 0.2196 | 0.6355 | 130.7400 |
| | Δ | −1.3796 | −0.1056 | −2.2360 | −0.4049 | −2.6409 | −0.1030 | −21.7233 |
| | Δ (%) | −1.4512 | −14.2452 | −94.0521 | −83.7955 | −92.3230 | −13.9428 | −14.2482 |
| LoRA-Q-only | ✓ | 93.6948 | 0.6389 | 0.1611 | 0.1316 | 0.2927 | 0.6386 | 131.4000 |
| | Δ | −1.3674 | −0.1024 | −2.2163 | −0.3516 | −2.5678 | −0.0998 | −21.0633 |
| | Δ (%) | −1.4385 | −13.8136 | −93.2234 | −72.7649 | −89.7675 | −13.5193 | −13.8153 |

| r | Allow CC | Packing Time (s) | Step Time (s) | Mask gen. (ms) | Mask h2d. (ms) | Mask (ms) | Step Time − Mask (s) | Epoch Time (s) |
|---|---|---|---|---|---|---|---|---|
| 8 | × | 95.0622 | 0.7413 | 2.3773 | 0.4832 | 2.8605 | 0.7384 | 152.4633 |
| | ✓ | 93.6683 | 0.7395 | 0.1182 | 0.0877 | 0.2059 | 0.7393 | 152.1033 |
| | Δ | −1.3939 | −0.0018 | −2.2591 | −0.3955 | −2.6546 | 0.0009 | −0.3600 |
| | Δ (%) | −1.4663 | −0.2428 | −95.0280 | −81.8502 | −92.8020 | 0.1157 | −0.2361 |
| 4 | ✓ | 93.6475 | 0.7240 | 0.0845 | 0.0778 | 0.1623 | 0.7238 | 148.9000 |
| | Δ | −1.4147 | −0.0173 | −2.2928 | −0.4054 | −2.6982 | −0.0146 | −3.5633 |
| | Δ (%) | −1.4881 | −2.3337 | −96.4455 | −83.8990 | −94.3262 | −1.9774 | −2.3372 |
| 16 | ✓ | 93.6269 | 0.7251 | 0.0510 | 0.1238 | 0.1748 | 0.7249 | 149.1200 |
| | Δ | −1.4353 | −0.0163 | −2.3263 | −0.3594 | −2.6857 | −0.0135 | −3.3433 |
| | Δ (%) | −1.5099 | −2.1854 | −97.8547 | −74.3791 | −93.8892 | −1.8301 | −2.1929 |

| r | Step Loss (Avg.) | Step Loss (Std.) | Epoch Last Loss (Avg.) | Epoch Last Loss (Std.) | q_rel_mean (Avg.) | q_rel_mean (Std.) | q_p95 (Avg.) | q_p95 (Std.) | q_max (Avg.) | q_max (Std.) | v_rel_mean (Avg.) | v_rel_mean (Std.) | v_p95 (Avg.) | v_p95 (Std.) | v_max (Avg.) | v_max (Std.) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 8 | −0.045 | 0.101 | −0.004 | 0.005 | −1.351 | 0.385 | −2.668 | 0.791 | −5.935 | 2.225 | 0.260 | 0.269 | 0.327 | 0.304 | 0.654 | 1.295 |
| 4 | −0.041 | 0.099 | −0.002 | 0.004 | −0.518 | 0.255 | −1.525 | 0.692 | −4.897 | 1.877 | 1.182 | 0.511 | 1.333 | 0.567 | 1.045 | 1.056 |
| 16 | −0.047 | 0.100 | −0.003 | 0.005 | −1.910 | 0.574 | −3.131 | 0.875 | −6.418 | 2.363 | −0.333 | 0.147 | −0.233 | 0.179 | −0.602 | 0.469 |

References

1. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.
2. Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971.
3. Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open Foundation and Fine-Tuned Chat Models. arXiv 2023, arXiv:2307.09288.
4. Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. Preprint 2018, 1–12.
5. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.
6. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.
7. Krell, M.M.; Kosec, M.; Perez, S.P.; Fitzgibbon, A. Efficient Sequence Packing without Cross-contamination: Accelerating Large Language Models without Impacting Performance. arXiv 2021, arXiv:2107.02027.
8. Ge, H.; Feng, J.; Huang, Q.; Fu, F.; Nie, X.; Zuo, L.; Lin, H.; Cui, B.; Liu, X. ByteScale: Efficient Scaling of LLM Training with a 2048K Context Length on More Than 12,000 GPUs. arXiv 2025, arXiv:2502.21231.
9. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 5485–5551.
10. Kosec, M.; Fu, S.; Krell, M.M. Packing: Towards 2x NLP BERT Acceleration. 2021. Available online: https://openreview.net/forum?id=3_MUAtqR0aA (accessed on 1 September 2025).
11. Zheng, Y.; Zhang, R.; Zhang, J.; Ye, Y.; Luo, Z. LlamaFactory: Unified Efficient Fine-Tuning of 100+ Language Models. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024; pp. 400–410.
12. Bai, Y.; Lv, X.; Zhang, J.; He, Y.; Qi, J.; Hou, L.; Tang, J.; Dong, Y.; Li, J. LongAlign: A Recipe for Long Context Alignment of Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; pp. 1376–1395.
13. Wang, S.; Wang, G.; Wang, Y.; Li, J.; Hovy, E.; Guo, C. Packing Analysis: Packing Is More Appropriate for Large Models or Datasets in Supervised Fine-tuning. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2025, Vienna, Austria, 27 July–1 August 2025; pp. 4953–4967.
14. Yao, Y.; Tan, J.; Liang, K.; Zhang, F.; Niu, Y.; Hu, J.; Gong, R.; Lin, D.; Xu, N. Hierarchical Balance Packing: Towards Efficient Supervised Fine-tuning for Long-Context LLM. arXiv 2025, arXiv:2503.07680.
15. Staniszewski, K.; Tworkowski, S.; Jaszczur, S.; Zhao, Y.; Michalewski, H.; Kuciński, Ł.; Miłoś, P. Structured Packing in LLM Training Improves Long Context Utilization. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; pp. 25201–25209.
16. Dong, J.; Jiang, L.; Jin, W.; Cheng, L. Threshold Filtering Packing for Supervised Fine-Tuning: Training Related Samples within Packs. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies, Albuquerque, NM, USA, 29 April–4 May 2025; pp. 4422–4435.
17. Ding, H.; Wang, Z.; Paolini, G.; Kumar, V.; Deoras, A.; Roth, D.; Soatto, S. Fewer truncations improve language modeling. In Proceedings of the 41st International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024; Volume 439, pp. 11030–11048.
18. Kundu, A.; Lee, R.D.; Wynter, L.; Ganti, R.K.; Mishra, M. Enhancing training efficiency using packing with flash attention. arXiv 2024, arXiv:2407.09105.
19. Han, I.; Jayaram, R.; Karbasi, A.; Mirrokni, V.; Woodruff, D.P.; Zandieh, A. Hyperattention: Long-context attention in near-linear time. arXiv 2023, arXiv:2310.05869.
20. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2022.
21. Zi, B.; Qi, X.; Wang, L.; Wang, J.; Wong, K.-F.; Zhang, L. Delta-lora: Fine-tuning high-rank parameters with the delta of low-rank matrices. arXiv 2023, arXiv:2309.02411.
22. Lin, C.; Li, L.; Li, D.; Zou, J.; Xue, W.; Guo, Y. Nora: Nested low-rank adaptation for efficient fine-tuning large models. arXiv 2024, arXiv:2408.10280.
23. Mao, Y.; Huang, K.; Guan, C.; Bao, G.; Mo, F.; Xu, J. DoRA: Enhancing Parameter-Efficient Fine-Tuning with Dynamic Rank Distribution. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024; Volume 1, pp. 11662–11675.
24. Martins, A.; Astudillo, R. From softmax to sparsemax: A sparse model of attention and multi-label classification. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1614–1623.
25. Yin, Q.; He, X.; Zhuang, X.; Zhao, Y.; Yao, J.; Shen, X.; Zhang, Q. StableMask: Refining causal masking in decoder-only transformer. In Proceedings of the 41st International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024; Volume 2354, pp. 57033–57052.
26. Yao, X.; Qian, H.; Hu, X.; Xu, G.; Liu, W.; Luan, J.; Wang, B.; Liu, Y. Theoretical Insights into Fine-Tuning Attention Mechanism: Generalization and Optimization. arXiv 2025, arXiv:2410.02247.
27. Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. QLORA: Efficient finetuning of quantized LLMs. In Proceedings of the 37th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Volume 441, pp. 10088–10115.
28. Conover, M.; Hayes, M.; Mathur, A.; Xie, J.; Wan, J.; Shah, S.; Ghodsi, A.; Wendell, P.; Zaharia, M.; Xin, R. Free Dolly: Introducing the World’s First Truly Open Instruction-Tuned LLM. 2023. Available online: https://www.databricks.com/blog/2023/04/12/dolly-first-open-commercially-viable-instruction-tuned-llm (accessed on 1 September 2025).
29. Taori, R.; Gulrajani, I.; Zhang, T.; Dubois, Y.; Li, X.; Guestrin, C.; Liang, P.; Hashimoto, T.B. Stanford Alpaca: An Instruction-Following LLaMA Model. GitHub Repository. 2023. Available online: https://github.com/tatsu-lab/stanford_alpaca (accessed on 1 September 2025).
30. Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-Art Natural Language Processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; pp. 38–45.
31. Jiang, A.Q.; Sablayrolles, A.; Mensch, A.; Bamford, C.; Chaplot, D.S.; de las Casas, D.; Bress, F.; Lengyel, G.; Lample, G.; Saulnier, L.; et al. Mistral 7B. arXiv 2023, arXiv:2310.06825.
32. Hendrycks, D.; Burns, C.; Basart, S.; Zou, A.; Mazeika, M.; Song, D.; Steinhardt, J. Measuring massive multitask language understanding. arXiv 2020, arXiv:2009.03300.
33. Kornblith, S.; Norouzi, M.; Lee, H.; Hinton, G. Similarity and matching of neural network representations. arXiv 2019, arXiv:1905.00414.
34. Raghu, M.; Gilmer, J.; Yosinski, J.; Sohl-Dickstein, J. Svcca: Singular vector canonical correlation analysis for deep learning dynamics and interpretability. In Proceedings of the Advances in Neural Information Processing Systems (30), Long Beach, CA, USA, 4–9 December 2017.
35. Morcos, A.; Raghu, M.; Bengio, S. Insights on representational similarity in neural networks with canonical correlation. In Proceedings of the Advances in Neural Information Processing Systems (31), Montreal, QC, Canada, 3–8 December 2018.

| Exp. | Allow CC | Packing Time (s) | Step Time (s) | Mask gen. (ms) | Mask h2d. (ms) | Mask (ms) | Step Time − Mask (s) | Epoch Time (s) |
|---|---|---|---|---|---|---|---|---|
| Base | | | 0.8307 | 0.1000 | 0.1641 | 0.1641 | 0.8307 | 390.1433 |
| Online (Fast) | × | 60.0617 | 0.7493 | 1.8111 | 0.2653 | 2.0764 | 0.7472 | 166.1000 |
| | ✓ | 58.9256 | 0.7265 | 0.1027 | 0.1300 | 0.2326 | 0.7263 | 161.0433 |
| | Δ | −1.1361 | −0.0228 | −1.7085 | −0.1353 | −1.8438 | −0.0210 | −5.0567 |
| | Δ (%) | −1.8916 | −3.0428 | −94.3294 | −50.9989 | −88.7979 | −2.8045 | −3.0444 |
| Online (Delay) | × | 60.4502 | 0.7509 | 2.3954 | 0.2660 | 2.6613 | 0.7482 | 166.4400 |
| | ✓ | 58.8972 | 0.7353 | 0.0600 | 0.1214 | 0.1814 | 0.7351 | 162.9933 |
| | Δ | −1.5530 | −0.0155 | −2.3354 | −0.1446 | −2.4800 | −0.0130 | −3.4467 |
| | Δ (%) | −2.5691 | −2.0775 | −97.4952 | −54.3609 | −93.1838 | −1.7535 | −2.0708 |
| Offline (Fast) | × | 92.7946 | 0.7491 | 2.2307 | 0.2656 | 2.4963 | 0.7466 | 154.0567 |
| | ✓ | 91.4681 | 0.7406 | 0.0806 | 0.0610 | 0.1417 | 0.7405 | 152.3067 |
| | Δ | −1.3266 | −0.0085 | −2.1500 | −0.2046 | −2.3546 | −0.0061 | −1.7500 |
| | Δ (%) | −1.4296 | −1.1347 | −96.3868 | −77.0331 | −94.3236 | −0.8231 | −1.1359 |
| Offline (Delay) | × | 95.0622 | 0.7413 | 2.3773 | 0.4832 | 2.8605 | 0.7384 | 152.4633 |
| | ✓ | 93.6683 | 0.7395 | 0.1182 | 0.0877 | 0.2059 | 0.7393 | 152.1033 |
| | Δ | −1.3939 | −0.0018 | −2.2591 | −0.3955 | −2.6546 | 0.0009 | −0.3600 |
| | Δ (%) | −1.4663 | −0.2428 | −95.0280 | −81.8502 | −92.8020 | 0.1157 | −0.2361 |

| Exp. | Step Loss (Avg.) | Step Loss (Std.) | Epoch Last Loss (Avg.) | Epoch Last Loss (Std.) | q_rel_mean (Avg.) | q_rel_mean (Std.) | q_p95 (Avg.) | q_p95 (Std.) | q_max (Avg.) | q_max (Std.) | v_rel_mean (Avg.) | v_rel_mean (Std.) | v_p95 (Avg.) | v_p95 (Std.) | v_max (Avg.) | v_max (Std.) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Online (Fast) | 0.036 | 0.576 | −0.010 | 0.001 | −0.805 | 0.197 | −1.366 | 0.639 | −3.400 | 1.178 | 0.465 | 0.352 | 0.470 | 0.357 | 0.295 | 0.216 |
| Online (Delay) | 0.044 | 0.597 | 0.001 | 0.014 | −0.795 | 0.199 | −1.260 | 0.596 | −3.707 | 1.452 | 0.260 | 0.230 | 0.621 | 0.401 | 0.594 | 0.499 |
| Offline (Fast) | −0.046 | 0.106 | 0.003 | 0.004 | −1.306 | 0.373 | −2.049 | 0.597 | −5.717 | 2.077 | 0.206 | 0.262 | 0.008 | 0.285 | 0.470 | 1.252 |
| Offline (Delay) | −0.044 | 0.101 | −0.004 | 0.005 | −1.351 | 0.385 | −2.668 | 0.791 | −5.935 | 2.225 | 0.260 | 0.269 | 0.327 | 0.304 | 0.654 | 1.295 |

| Model Name | Base Architecture | # of Parameters |
|---|---|---|
| NousResearch/Llama-2-7b-hf (NR) | Llama 2 | 7B |
| huggyllama/llama-7b (HL) | LLaMA 1 | 6.74B |
| openlm-research/open_llama_7b [2] (OR) | OpenLLaMA | 7B |
| mistralai/Mistral-7B-v0.1 [31] (MR) | Mistral 7B | 7B |
| NousResearch/Llama-2-13b-hf (NR(13B)) | Llama 2 | 13B |

| Exp. | Allow CC | Abstract Algebra | Computer Security | Econometrics | College Biology | Astronomy | High School | Clinical Knowledge | Professional Law |
|---|---|---|---|---|---|---|---|---|---|
| Base | | 0.300 | 0.510 | 0.289 | 0.347 | 0.303 | 0.252 | 0.370 | 0.330 |
| Online (Fast) | × | 0.280 | 0.470 | 0.325 | 0.465 | 0.355 | 0.258 | 0.375 | 0.365 |
| | ✓ | 0.330 | 0.480 | 0.316 | 0.382 | 0.316 | 0.232 | 0.385 | 0.360 |
| | Δ vs. × | 0.050 | 0.010 | −0.009 | −0.083 | −0.039 | −0.026 | 0.010 | −0.005 |
| | Δ vs. Base | 0.030 | −0.030 | 0.027 | 0.035 | 0.013 | −0.020 | 0.015 | 0.030 |
| Online (Delay) | × | 0.320 | 0.520 | 0.307 | 0.479 | 0.309 | 0.219 | 0.430 | 0.350 |
| | ✓ | 0.310 | 0.480 | 0.342 | 0.403 | 0.336 | 0.219 | 0.400 | 0.350 |
| | Δ vs. × | −0.010 | −0.040 | 0.035 | −0.076 | 0.026 | 0.000 | −0.030 | 0.000 |
| | Δ vs. Base | 0.010 | −0.030 | 0.053 | 0.056 | 0.033 | −0.033 | 0.030 | 0.020 |
| Offline (Fast) | × | 0.340 | 0.530 | 0.325 | 0.472 | 0.342 | 0.298 | 0.475 | 0.355 |
| | ✓ | 0.340 | 0.500 | 0.298 | 0.410 | 0.362 | 0.252 | 0.405 | 0.325 |
| | Δ vs. × | 0.000 | −0.030 | −0.026 | −0.062 | 0.020 | −0.046 | −0.070 | −0.030 |
| | Δ vs. Base | 0.040 | −0.010 | 0.009 | 0.063 | 0.059 | 0.000 | 0.035 | −0.005 |
| Offline (Delay) | × | 0.340 | 0.580 | 0.333 | 0.479 | 0.355 | 0.272 | 0.490 | 0.340 |
| | ✓ | 0.350 | 0.460 | 0.289 | 0.410 | 0.342 | 0.252 | 0.425 | 0.280 |
| | Δ vs. × | 0.010 | −0.120 | −0.044 | −0.069 | −0.013 | −0.020 | −0.065 | −0.060 |
| | Δ vs. Base | 0.050 | −0.050 | 0.063 | 0.039 | 0.039 | 0.000 | 0.055 | −0.050 |

| Datasets | Models | | Packing Time (s) | Step Time (s) | Mask gen. (ms) | Mask h2d. (ms) | Mask (ms) | Step Time − Mask (s) | Epoch Time (s) |
|---|---|---|---|---|---|---|---|---|---|
| databricks/databricks-dolly-15k | NR | ✓ | −1.3939 | −0.0018 | −2.2591 | −0.3955 | −2.6546 | 0.0009 | −0.3600 |
| | | % | −1.4663 | −0.2428 | −95.0280 | −81.8502 | −92.8020 | 0.1157 | −0.2361 |
| | HL | ✓ | −1.1749 | 0.0024 | −1.9042 | −0.2856 | −2.1899 | 0.0046 | 0.4933 |
| | | % | −1.0437 | 0.3325 | −94.0534 | −62.9352 | −88.3559 | 0.6382 | 0.3323 |
| | OR | ✓ | −1.2786 | −0.0172 | −2.2353 | −0.3215 | −2.5568 | −0.0146 | −3.2800 |
| | | % | −1.7260 | −2.3354 | −95.5256 | −80.4555 | −93.3272 | −1.9957 | −2.3356 |
| | MR | ✓ | −1.1817 | −0.0086 | −0.9880 | −0.1656 | −1.1536 | −0.0074 | −3.4267 |
| | | % | −1.5654 | −0.9808 | −90.1469 | −62.0315 | −84.6430 | −0.8506 | −0.9803 |
| tatsu-lab/alpaca | NR | ✓ | −1.3939 | −0.0018 | −2.2591 | −0.3955 | −2.6546 | 0.0009 | −0.3600 |
| | | % | −1.4663 | −0.2428 | −95.0280 | −81.8502 | −92.8020 | 0.1157 | −0.2361 |
| | HL | ✓ | −1.1749 | 0.0024 | −1.9042 | −0.2856 | −2.1899 | 0.0046 | 0.4933 |
| | | % | −1.0437 | 0.3325 | −94.0534 | −62.9352 | −88.3559 | 0.6382 | 0.3323 |
| | OR | ✓ | −1.2786 | −0.0172 | −2.2353 | −0.3215 | −2.5568 | −0.0146 | −3.2800 |
| | | % | −1.7260 | −2.3354 | −95.5256 | −80.4555 | −93.3272 | −1.9957 | −2.3356 |
| | MR | ✓ | −1.1817 | −0.0086 | −0.9880 | −0.1656 | −1.1536 | −0.0074 | −3.4267 |
| | | % | −1.5654 | −0.9808 | −90.1469 | −62.0315 | −84.6430 | −0.8506 | −0.9803 |
| yahma/alpaca_cleaned | NR | ✓ | −6.9868 | −0.0038 | −2.0580 | −0.2902 | −2.3482 | −0.0015 | −4.2733 |
| | | % | −0.6457 | −0.5112 | −94.2607 | −78.4171 | −91.9637 | −0.1960 | −0.5080 |
| | HL | ✓ | −7.3857 | −0.0246 | −2.1755 | −0.2896 | −2.4651 | −0.0221 | −27.8633 |
| | | % | −0.7290 | −3.2178 | −96.2100 | −75.6333 | −93.2264 | −2.9054 | −3.2207 |
| | OR | ✓ | −6.2812 | −0.0079 | −1.9985 | −0.2809 | −2.2794 | −0.0057 | −8.3233 |
| | | % | −0.6913 | −1.0725 | −95.7411 | −68.6461 | −91.3002 | −0.7695 | −1.0651 |
| | MR | ✓ | −6.8929 | −0.0057 | −1.0580 | −0.1658 | −1.2238 | −0.0045 | −12.3400 |
| | | % | −0.7307 | −0.6639 | −90.7220 | −61.3620 | −85.2001 | −0.5222 | −0.6618 |

| Datasets | Models | Step Loss (Avg.) | Step Loss (Std.) | Epoch Last Loss (Avg.) | Epoch Last Loss (Std.) | q_rel_mean (Avg.) | q_rel_mean (Std.) | q_p95 (Avg.) | q_p95 (Std.) | q_max (Avg.) | q_max (Std.) | v_rel_mean (Avg.) | v_rel_mean (Std.) | v_p95 (Avg.) | v_p95 (Std.) | v_max (Avg.) | v_max (Std.) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| databricks/databricks-dolly-15k | NR | −0.045 | 0.101 | −0.004 | 0.005 | −1.351 | 0.385 | −2.668 | 0.791 | −5.935 | 2.225 | 0.260 | 0.269 | 0.327 | 0.304 | 0.654 | 1.295 |
| | HL | −0.039 | 0.133 | −0.017 | 0.009 | −1.355 | 0.344 | −1.880 | 0.814 | −0.898 | 1.567 | −0.017 | 0.257 | −0.751 | 0.161 | −2.196 | 0.786 |
| | OR | −0.074 | 0.125 | −0.042 | 0.027 | −1.197 | 0.356 | −1.994 | 0.993 | −5.736 | 3.386 | 0.074 | 0.322 | −0.897 | 0.393 | −0.800 | 0.453 |
| | MR | −0.032 | 0.071 | −0.016 | 0.035 | −2.907 | 0.879 | −3.332 | 1.321 | −10.206 | 4.398 | −0.726 | 0.475 | −0.808 | 0.426 | −1.942 | 1.329 |
| tatsu-lab/alpaca | NR | −0.028 | 0.112 | −0.012 | 0.012 | −1.829 | 0.383 | −4.924 | 1.530 | −9.461 | 2.933 | 0.156 | 0.378 | 0.362 | 0.499 | 1.221 | 1.427 |
| | HL | −0.038 | 0.115 | −0.015 | 0.011 | −1.453 | 0.273 | −2.099 | 0.620 | 0.084 | 0.636 | −0.094 | 0.281 | −1.779 | 0.420 | −2.295 | 0.661 |
| | OR | −0.047 | 0.104 | −0.022 | 0.005 | −1.286 | 0.252 | −1.863 | 0.530 | −8.959 | 3.848 | 0.255 | 0.469 | −0.718 | 0.327 | −0.469 | 0.275 |
| | MR | −0.019 | 0.057 | −0.022 | 0.026 | −3.473 | 0.776 | −6.520 | 1.989 | −14.982 | 5.375 | −0.307 | 0.912 | −0.305 | 0.601 | 0.315 | 1.537 |
| yahma/alpaca_cleaned | NR | −0.011 | 0.028 | 0.001 | 0.004 | −1.273 | 0.197 | −3.821 | 0.985 | −5.540 | 1.662 | 0.696 | 0.401 | 1.619 | 0.701 | 3.503 | 2.006 |
| | HL | −0.012 | 0.048 | −0.014 | 0.009 | −1.031 | 0.276 | −0.869 | 0.367 | 1.186 | 0.808 | 0.275 | 0.266 | −0.061 | 0.491 | 0.624 | 1.419 |
| | OR | −0.020 | 0.037 | −0.009 | 0.007 | −1.384 | 0.273 | −1.779 | 0.514 | −15.632 | 7.442 | 0.717 | 0.450 | 0.003 | 0.234 | −0.164 | 0.430 |
| | MR | −0.009 | 0.025 | 0.002 | 0.021 | −4.016 | 0.851 | −13.299 | 4.164 | −40.767 | 19.104 | 0.148 | 0.592 | 0.436 | 0.564 | −1.673 | 1.078 |

| Exp. | | Packing Time (s) | Step Time (s) | Mask gen. (ms) | Mask h2d. (ms) | Mask (ms) | Step Time − Mask (s) | Epoch Time (s) |
|---|---|---|---|---|---|---|---|---|
| Online (Fast) | Δ | −2.6398 | −0.0007 | −0.9947 | −0.0927 | −1.0874 | 0.0005 | −0.5833 |
| | Δ (%) | −4.2218 | −0.0725 | −89.3139 | −31.7140 | −77.3384 | 0.0590 | −0.0796 |
| Offline (Fast) | Δ | −2.3581 | −0.0003 | −0.9566 | 0.0100 | −0.9467 | 0.0006 | −0.2733 |
| | Δ (%) | −2.5067 | −0.0361 | −84.2240 | 4.2319 | −68.9914 | 0.0780 | −0.0400 |

| Exp. | Step Loss (Avg.) | Step Loss (Std.) | Epoch Last Loss (Avg.) | Epoch Last Loss (Std.) | q_rel_mean (Avg.) | q_rel_mean (Std.) | q_p95 (Avg.) | q_p95 (Std.) | q_max (Avg.) | q_max (Std.) | v_rel_mean (Avg.) | v_rel_mean (Std.) | v_p95 (Avg.) | v_p95 (Std.) | v_max (Avg.) | v_max (Std.) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Online (Fast) | 1.267 | 2.814 | 0.000 | 0.000 | 0.483 | 0.360 | 2.382 | 2.437 | 7.378 | 3.454 | 8.883 | 5.252 | 20.151 | 12.563 | 43.604 | 17.801 |
| Offline (Fast) | −0.053 | 0.679 | 0.000 | 0.000 | 1.484 | 0.788 | 6.269 | 3.070 | 9.388 | 3.967 | 3.839 | 2.572 | 6.666 | 4.406 | −3.644 | −3.644 |

| Trained Param. | | Packing Time (s) | Step Time (s) | Mask gen. (ms) | Mask h2d. (ms) | Mask (ms) | Step Time − Mask (s) | Epoch Time (s) |
|---|---|---|---|---|---|---|---|---|
| LoRA-Q & LoRA-V | Δ | −1.3939 | −0.0018 | −2.2591 | −0.3955 | −2.6546 | 0.0009 | −0.3600 |
| | Δ (%) | −1.4663 | −0.2428 | −95.0280 | −81.8502 | −92.8020 | 0.1157 | −0.2361 |
| LoRA-V-only | Δ | −1.3796 | −0.1056 | −2.2360 | −0.4049 | −2.6409 | −0.1030 | −21.7233 |
| | Δ (%) | −1.4512 | −14.2452 | −94.0521 | −83.7955 | −92.3230 | −13.9428 | −14.2482 |
| LoRA-Q-only | Δ | −1.3674 | −0.1024 | −2.2163 | −0.3516 | −2.5678 | −0.0998 | −21.0633 |
| | Δ (%) | −1.4385 | −13.8136 | −93.2234 | −72.7649 | −89.7675 | −13.5193 | −13.8153 |

| Trained Parameters | Step Loss (Avg.) | Step Loss (Std.) | Epoch Last Loss (Avg.) | Epoch Last Loss (Std.) | q_rel_mean (Avg.) | q_rel_mean (Std.) | q_p95 (Avg.) | q_p95 (Std.) | q_max (Avg.) | q_max (Std.) | v_rel_mean (Avg.) | v_rel_mean (Std.) | v_p95 (Avg.) | v_p95 (Std.) | v_max (Avg.) | v_max (Std.) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LoRA-Q & LoRA-V | −0.045 | 0.101 | −0.004 | 0.005 | −1.351 | 0.385 | −2.668 | 0.791 | −5.935 | 2.225 | 0.260 | 0.269 | 0.327 | 0.304 | 0.654 | 1.295 |
| LoRA-V-only | −0.031 | 0.100 | 0.010 | 0.008 | −5.052 | 2.161 | −7.324 | 2.850 | −10.831 | 4.522 | −0.457 | 0.186 | −0.435 | 0.247 | −0.804 | 0.417 |
| LoRA-Q-only | 0.032 | 0.080 | 0.031 | 0.014 | 0.597 | 0.348 | −0.371 | 0.218 | −3.561 | 2.052 | −4.540 | 1.693 | −5.501 | 1.872 | −6.092 | 1.662 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Seo, J.W.; Jung, H.-Y. Eliminating Packing-Aware Masking via LoRA-Based Supervised Fine-Tuning of Large Language Models. Mathematics 2025, 13, 3344. https://doi.org/10.3390/math13203344
