3.1. Statistical Process Control

In Shewhart’s seminal book [15] on the topic of control in manufacturing, Shewhart explains that a phenomenon is said to be in control when, “through the use of past experience, we can predict, at least within limits, how the phenomenon may be expected to vary in the future.” This notion provides an instructive framework for thinking about convergence because it offers a natural way to consider the distributional characteristics of the EI as a proper random variable. In its most simplified form, SPC considers an approximation of a statistic’s sampling distribution as repeated sampling occurs in time. Thus, Shewhart can express his idea of control as the expected behavior of random observations from this sampling distribution. For example, an $\bar{x}$-chart tracks the mean of repeated samples (all of size $n$) through time so as to expect the arrival of each subsequent mean in accordance with the known or estimated sampling distribution for the mean, $\bar{x} \sim N(\mu, \sigma^2/n)$. By considering confidence intervals on this sampling distribution we can draw explicit boundaries (i.e., control limits) to identify when the process is in control and when it is not. Observations violating our expectations (falling outside of the control limits) indicate an out-of-control state. Since neither $\mu$ nor $\sigma$ is typically known, it is common to collect an initial set of data from which point estimates of $\mu$ and $\sigma$ may establish an initial standard for control that is further refined as the process proceeds. This logic relies upon the typical asymptotic results of the central limit theorem (CLT), and care should be taken to verify the relevant assumptions required.
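As a rough illustration of the $\bar{x}$-chart logic described above, the following Python sketch estimates control limits from an initial set of samples and checks whether a new sample mean falls inside them. The function names, the pooled standard deviation estimate, and the default width `c=3.0` are illustrative assumptions, not details taken from [15].

```python
import numpy as np

def xbar_control_limits(initial_samples, c=3.0):
    """Estimate x-bar chart limits from an initial set of samples.

    initial_samples: 2-D array with one row per sample, each of size n.
    c: half-width of the limits in standard errors (assumed default).
    """
    samples = np.asarray(initial_samples, dtype=float)
    n = samples.shape[1]
    mu_hat = samples.mean()            # point estimate of the process mean
    sigma_hat = samples.std(ddof=1)    # pooled point estimate of the process sd
    half_width = c * sigma_hat / np.sqrt(n)
    return mu_hat - half_width, mu_hat + half_width

def in_control(sample, limits):
    """Return True when the mean of a new sample lies within the limits."""
    lower, upper = limits
    return lower <= np.mean(sample) <= upper

rng = np.random.default_rng(0)
initial = rng.normal(loc=10.0, scale=2.0, size=(50, 5))
limits = xbar_control_limits(initial)
```

In practice the limits would be refined as more samples arrive, as the text notes.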

It is important to note that we are not performing traditional SPC in this context, as the EI criterion will be stochastically decreasing as an optimization routine proceeds. Only when convergence is reached will the EI series look approximately like an in control process. Thus, our perspective is completely reversed from the traditional SPC approach—we start with a process that is out of control, and we determine convergence when the process stabilizes and becomes locally in control. An alternative way to think about our approach is to consider performing SPC backwards in time on our EI series. Starting from the most recent EI observations and looking back, we declare convergence if the process starts in control and then becomes out of control. This pattern generally appears only when the optimization has progressed and reached a local mode without other prospects for a global mode. If the optimization were still proceeding, then the EI would still be decreasing and the most recent iterations of optimization would not appear to be in control.

3.2. Expected Log-Normal Approximation to the Improvement (ELAI)

For the sake of obtaining a robust convergence criterion to track via SPC, it is important to carefully consider properties of the improvement distributions which generate the EI values. The improvement criterion is strictly positive but decreasingly small; thus, the improvement distribution is often strongly right skewed, in which case the EI is far from normally distributed. Additionally, this right skew becomes exaggerated as convergence approaches, due to the decreasing trend in the EI criterion. These issues naturally suggest modeling transformations of the improvement, rather than directly considering the improvement distribution on its own. One of the simplest of the many possible helpful transformations in this case is the log of the improvement distribution. However, due to the Monte Carlo sample-based implementation of the Gaussian process, it is not uncommon to obtain at least one sample that is computationally indistinguishable from zero in double precision. Thus, simply taking the log of the improvement samples can result in numerical failure, particularly as convergence approaches, even though the quantities are theoretically strictly positive. Despite this numerical inconvenience, the distribution of the improvement samples is often very well approximated by the log-normal distribution.

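We avoid the numerical issues by using a model-based approximation. With the desire to model $E[\log(I)]$, we switch to a log-normal perspective. Recall that if a random variable $X \sim \text{Log-}N(\theta, \phi)$, then another random variable $Y = \log(X)$ is distributed $Y \sim N(\theta, \phi)$. Furthermore, if $\omega$ and $\psi$ are the mean and variance of a log-normal sample, respectively, then the mean, $\theta$, and variance, $\phi$, of the associated normal distribution are given by the following relation.

$$\theta = \log\left(\frac{\omega^2}{\sqrt{\psi + \omega^2}}\right), \qquad \phi = \log\left(1 + \frac{\psi}{\omega^2}\right) \tag{3}$$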

Using this relation, we do not need to transform any of the improvement samples. We compute the empirical mean and variance of the unaltered, approximately log-normal, improvement samples, then use relation (3) to directly compute $\hat{\theta}$ as the Expectation under the Log-normal Approximation to the Improvement (ELAI). The ELAI value is useful for assessing convergence because of the reduced right skew of the log of the posterior predictive improvement distribution. Additionally, the ELAI serves as a computationally robust approximation of $E[\log(I)]$ under reasonable log-normality of the improvements. Furthermore, both $E[\log(I)]$ and the ELAI are distributed approximately normally in repeated sampling. This construction allows for more consistent and accurate use of the fundamental theory on which our SPC perspective depends. We note that the approximation will be more accurate when the process is near or at convergence; the accuracy of the approximation is not critical while the process is out of control and not yet in convergence, so we only need a good approximation in the region of convergence.
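The moment-matching step can be sketched in a few lines of Python. This is a minimal illustration that assumes the standard log-normal moment identities are the relation the text refers to; the function name `elai` and the simulated samples are hypothetical. No logarithm is taken of any individual sample, so an exact zero among the samples does not cause a failure.

```python
import numpy as np

def elai(improvement_samples):
    """Moment-matched mean and variance of log(improvement).

    Uses only the empirical mean and variance of the raw samples, so a
    sample that is exactly zero does not produce log(0).
    """
    samples = np.asarray(improvement_samples, dtype=float)
    omega = samples.mean()        # empirical mean of the improvement samples
    psi = samples.var(ddof=1)     # empirical variance of the improvement samples
    theta = np.log(omega**2 / np.sqrt(psi + omega**2))  # ELAI estimate
    phi = np.log1p(psi / omega**2)                      # matching normal variance
    return theta, phi

rng = np.random.default_rng(1)
samples = rng.lognormal(mean=-3.0, sigma=0.5, size=200_000)
samples[0] = 0.0   # mimic a sample that underflows to zero
theta_hat, phi_hat = elai(samples)
```

Taking `np.log(samples).mean()` on the same array would return negative infinity because of the zero entry, whereas the moment-based estimate stays finite. The sketch does not handle the degenerate case in which every sample is zero.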

3.3. Exponentially Weighted Moving Average

The Exponentially Weighted Moving Average (EWMA) control chart [16,17] elaborates on Shewhart’s original notion of control by viewing the repeated sampling process in the context of a moving average smoothing of series data. The general concept of a control chart is that it is monitoring a process that is “in control”, and it checks to see if the process changes, flagging a new point as not in control if it is outside of expectations. The expectations are based on having a stable mean and a stable variance; changes in either the mean or the variance can trigger a loss of control.

In our context, pre-convergence ELAI evaluations tend to be variable and overall decreasing, and so do not necessarily share distributional consistency among all observed values. Thus, a weighted series perspective was chosen to follow the moving average of the most recent ELAI observations while still smoothing with some memory of older evaluations. EWMA achieves this robust smoothing behavior, relative to shifting means, by assigning exponentially decreasing weights to successive points in a rolling average among all of the points of the series. Thus, the EWMA can emphasize recent observations and shift the focus of the moving average to the most recent information while still providing shrinkage towards the global mean of the series.

Let $Y_t$ be the ELAI value observed at the $t$-th iteration of optimization forwards in time, such that $t \in \{1, \ldots, T\}$, where $T$ is the most recently completed iteration of optimization. To step backwards in time, we introduce $s$ to track indices scanning from the most recent iteration of optimization backwards towards the first iteration. Indexing backwards in time, let $Z_s$ be the EWMA statistic associated with $Y_{T-s+1}$, and progressing through the previous iterations of optimization the EWMA statistics are expressed as $Z_s = \lambda Y_{T-s+1} + (1-\lambda) Z_{s-1}$. To initialize the recurrence, $Z_0$ is set to the observed mean of $Y$ over the modeled period. Here, $\lambda$ is a smoothing parameter that defines the weight assigned to the most recent observation. The recursive expression of the statistic ensures that all subsequent weights geometrically decrease.
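The recurrence can be sketched as follows. This is a minimal illustration using the standard EWMA update, in which each statistic is a weighted combination of the current observation (weight equal to the smoothing parameter) and the previous statistic. The function name, the argument `lam`, and the default of initializing at the mean of the supplied series are assumptions made for the example.

```python
import numpy as np

def ewma_backwards(y, lam, z0=None):
    """EWMA statistics computed while scanning a series backwards in time.

    y: ELAI values in forward (chronological) order.
    lam: smoothing weight given to the current observation.
    z0: starting value; defaults to the mean of y (an assumption here).
    Returns an array whose first entry belongs to the most recent value.
    """
    y_back = np.asarray(y, dtype=float)[::-1]   # most recent value first
    z_prev = y_back.mean() if z0 is None else float(z0)
    z = np.empty_like(y_back)
    for s, y_s in enumerate(y_back):
        z_prev = lam * y_s + (1.0 - lam) * z_prev   # standard EWMA update
        z[s] = z_prev
    return z

z = ewma_backwards([1.0, 2.0, 3.0], lam=0.5)
```

With `lam=1.0` the statistics reduce to the reversed series itself, and smaller values of `lam` retain more memory of the starting value.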

The smoothing parameter $\lambda$ is estimated by minimizing the sum of squared forecasting deviations, based on the method described in [18]. The estimate $\hat{\lambda}$ is therefore automatically selected by solving:

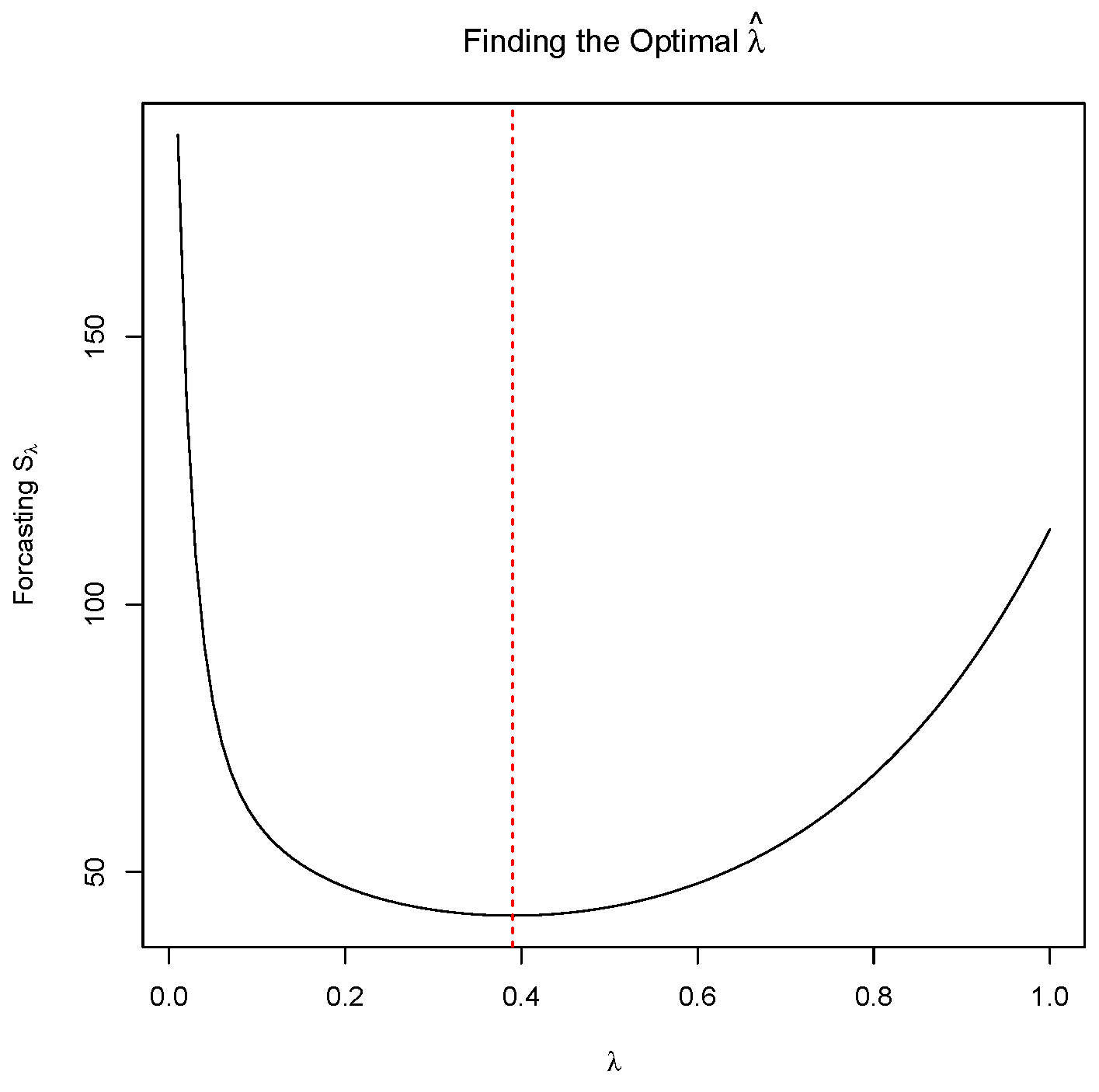

Figure 3 shows the sum of squared forecasting deviations as a function of $\lambda$. The relatively flat region around the minimum in Figure 3 demonstrates that EWMA charts can be very robust to the choice of $\lambda$ across a large range of sub-optimal values around $\hat{\lambda}$. In fact, Figure 3 shows a range of $\lambda$ values over which the sum of squared forecasting deviations stays within 10% of the minimum possible value.
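One way to see how such a selection could work is the sketch below, which treats the previous EWMA statistic as the one-step forecast of the next observation and picks the smoothing weight with the smallest sum of squared forecast errors. The grid search, the grid bounds, and the function names are assumptions made for illustration; the procedure in [18] may use a different optimizer. The series is processed in the order supplied, so a caller following the backwards indexing would pass the reversed ELAI series.

```python
import numpy as np

def forecast_sse(y, lam):
    """Sum of squared one-step-ahead EWMA forecast errors for weight lam."""
    y = np.asarray(y, dtype=float)
    z_prev = y.mean()                  # starting value (assumed: series mean)
    sse = 0.0
    for y_s in y:
        sse += (y_s - z_prev) ** 2     # previous statistic forecasts y_s
        z_prev = lam * y_s + (1.0 - lam) * z_prev
    return sse

def select_lambda(y, grid=None):
    """Grid search for the weight that minimizes the forecast SSE."""
    if grid is None:
        grid = np.linspace(0.01, 1.0, 100)
    sse = np.array([forecast_sse(y, lam) for lam in grid])
    return float(grid[int(np.argmin(sse))]), sse

rng = np.random.default_rng(2)
noise = rng.normal(size=500)
lam_noise, _ = select_lambda(noise)             # stable mean: small weight
lam_walk, _ = select_lambda(np.cumsum(noise))   # drifting mean: large weight
```

A series with a stable mean favors a small weight, while a drifting series favors a weight near one. The returned `sse` array can be plotted against the grid to inspect how flat the curve is around its minimum.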

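Identifying convergence in this setting now requires the computation of control limits on the EWMA statistic. As in the simplified $\bar{x}$-chart, defining the control limits for the EWMA setting amounts to considering an interval on the sampling distribution of interest. In the EWMA case, we are interested in the sampling distribution of the $Z_s$. Assuming that the $Y_t$ are i.i.d., then [16] show that we can write $\sigma^2_{Z_s}$ in terms of $\sigma^2_Y$.

$$\sigma^2_{Z_s} = \sigma^2_Y \left(\frac{\lambda}{2-\lambda}\right)\left[1 - (1-\lambda)^{2s}\right] \tag{5}$$

Thus, if $Y_t \overset{i.i.d.}{\sim} N(\mu, \sigma^2_Y)$, then the sampling distribution for $Z_s$ is $Z_s \sim N(\mu, \sigma^2_{Z_s})$. Furthermore, by choosing a confidence level through choice of a constant $c$, the control limits based on this sampling distribution are seen in Equation (6).

$$\text{CL}_s = \mu \pm c\,\sigma_{Z_s} = \mu \pm c\,\sigma_Y\sqrt{\frac{\lambda}{2-\lambda}\left[1 - (1-\lambda)^{2s}\right]} \tag{6}$$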

Notice that since $\sigma^2_{Z_s}$ has a dependence on $s$, the control limits do as well. Looking back through the series brings us away from the focus of the moving average, and thus the control limits widen as $s \to \infty$, where the control limits approach $\mu \pm c\,\sigma_Y\sqrt{\lambda/(2-\lambda)}$.
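The widening of the limits can be checked numerically. The sketch below uses the standard EWMA variance result for i.i.d. observations, which is assumed here to be the form given in [16]; the function name and the default `c=3.0` are illustrative.

```python
import numpy as np

def ewma_control_limits(mu, sigma, lam, n_points, c=3.0):
    """Lower and upper EWMA control limits for s = 1, ..., n_points.

    Uses the standard variance of an EWMA statistic built from i.i.d.
    observations with mean mu and standard deviation sigma.
    """
    s = np.arange(1, n_points + 1)
    var_z = sigma**2 * (lam / (2.0 - lam)) * (1.0 - (1.0 - lam) ** (2 * s))
    half_width = c * np.sqrt(var_z)
    return mu - half_width, mu + half_width

lower, upper = ewma_control_limits(mu=0.0, sigma=1.0, lam=0.2, n_points=50)
```

For these inputs the first upper limit is `c * lam * sigma = 0.6`, and the limits widen towards `c * sigma * sqrt(lam / (2 - lam)) = 1.0` as `s` grows.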

Our aim in applying the EWMA framework in this context is to recognize the fundamental notion of control that EWMA enforces in the newly arriving EI values, as optimization proceeds. Convergence often arises as a subtle shift of the EI distribution into place. In this context, a more traditional

chart will often overlook convergence as a subtle random fluctuation, when in fact it is often this subtle signal that we aim to pick-up. EWMA is among the better techniques for recognizing such subtly shifting means [

19,

20], while maintaining the capability to detect abrupt shifts in mean. As convergence approaches, the newly arriving

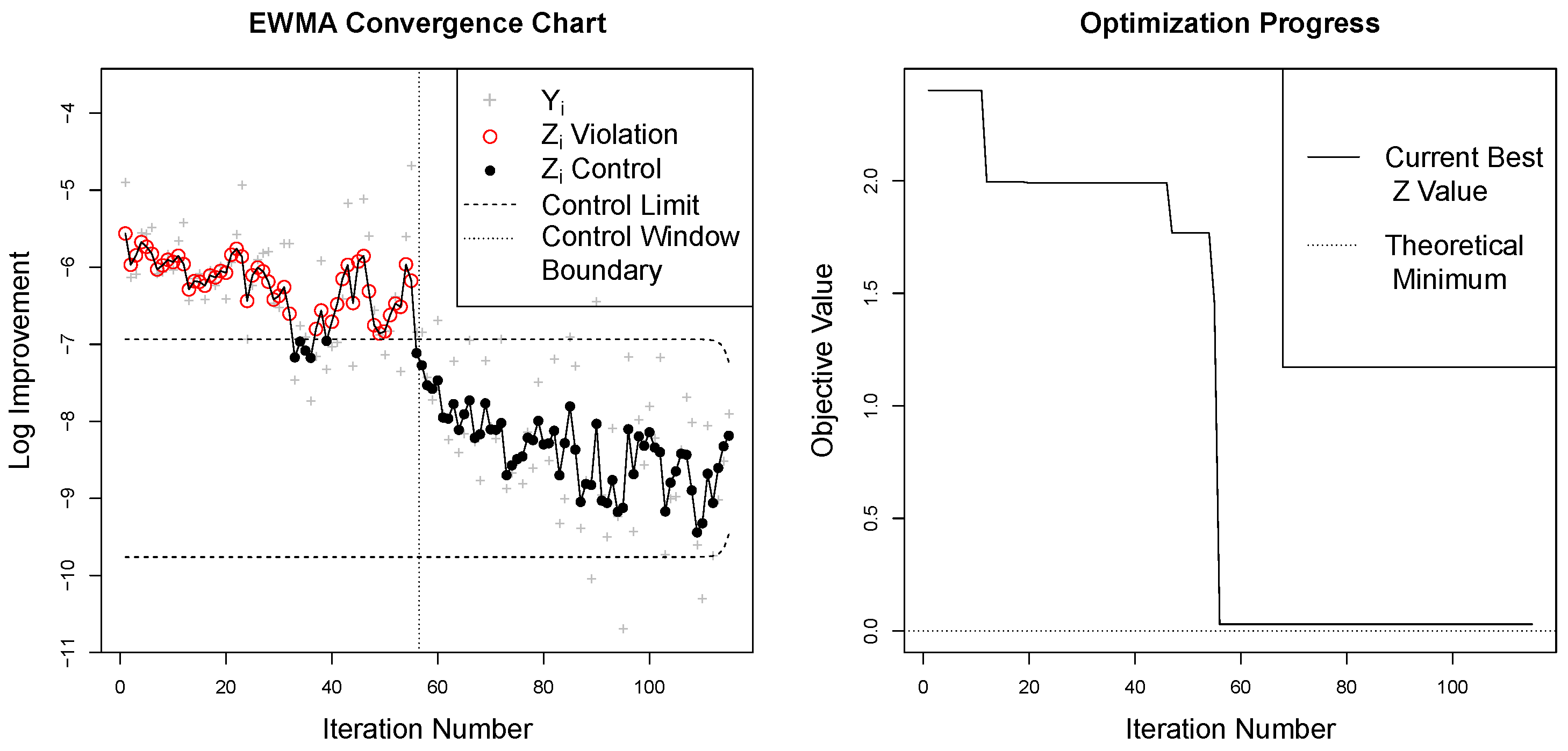

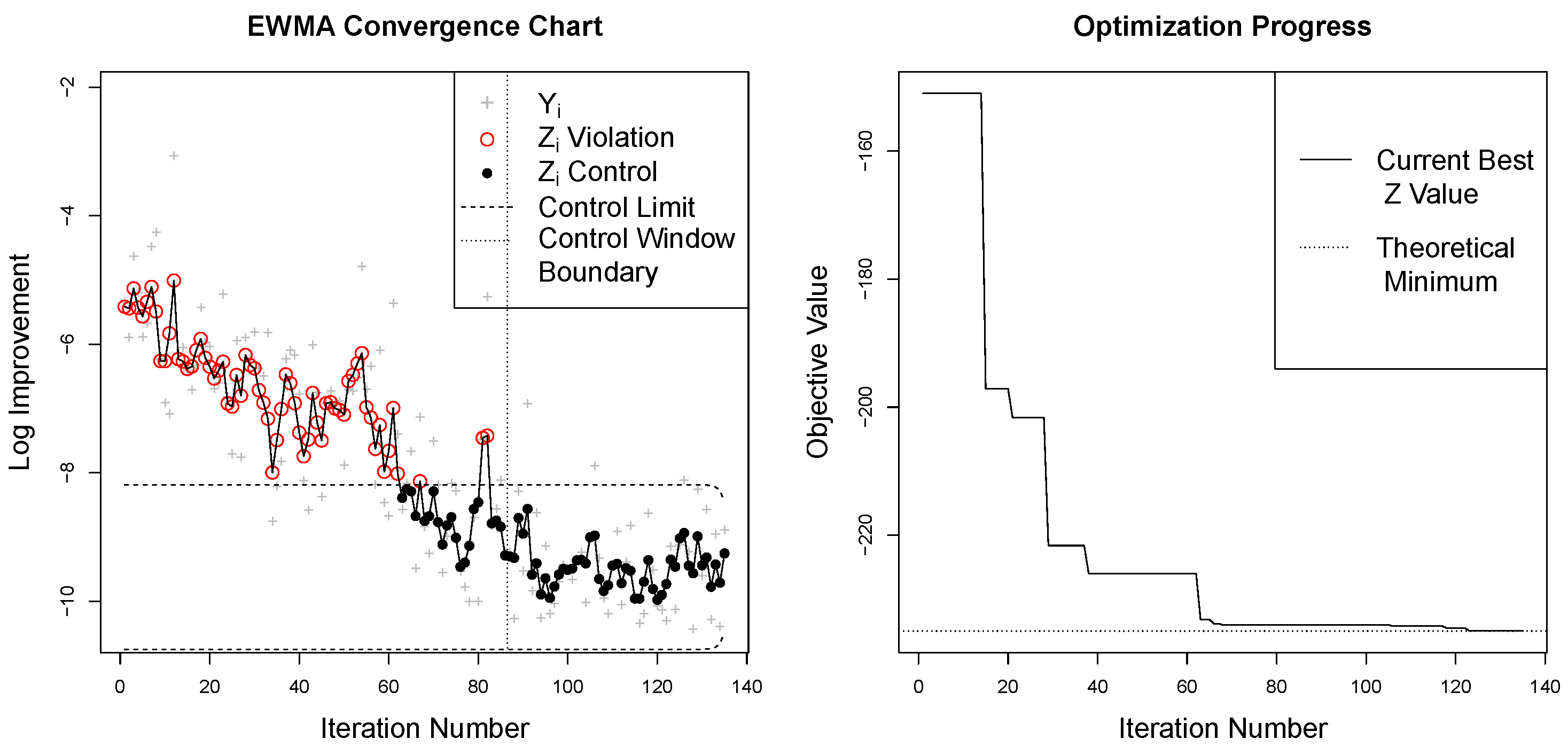

Y begin to fit into the

EWMA framework and the

Z increasingly begin to fall within the EWMA control limits. EWMA’s recognition of such a controlled region in the newly arriving ELAI values indicates the notion of distributional consistency that is necessary for defining convergence for stochastic measures of convergence, such as EI.

The control chart framework assumes that the

are

when the process is in control. We describe our approach as “inspired by Statistical Process Control” because the

are not actually

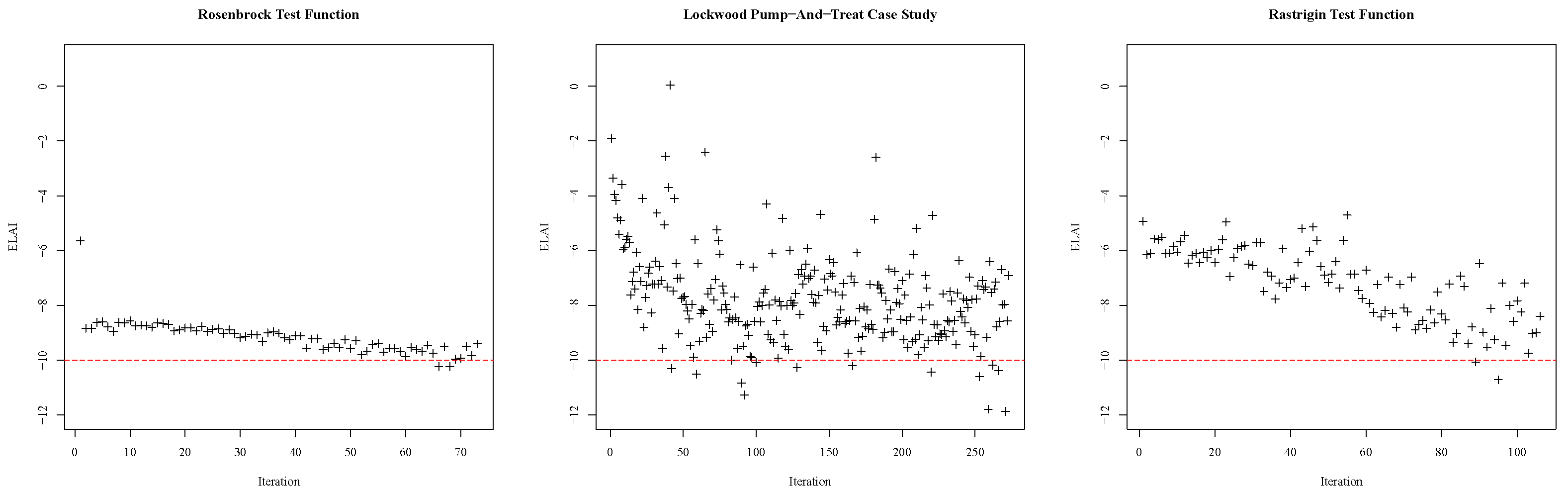

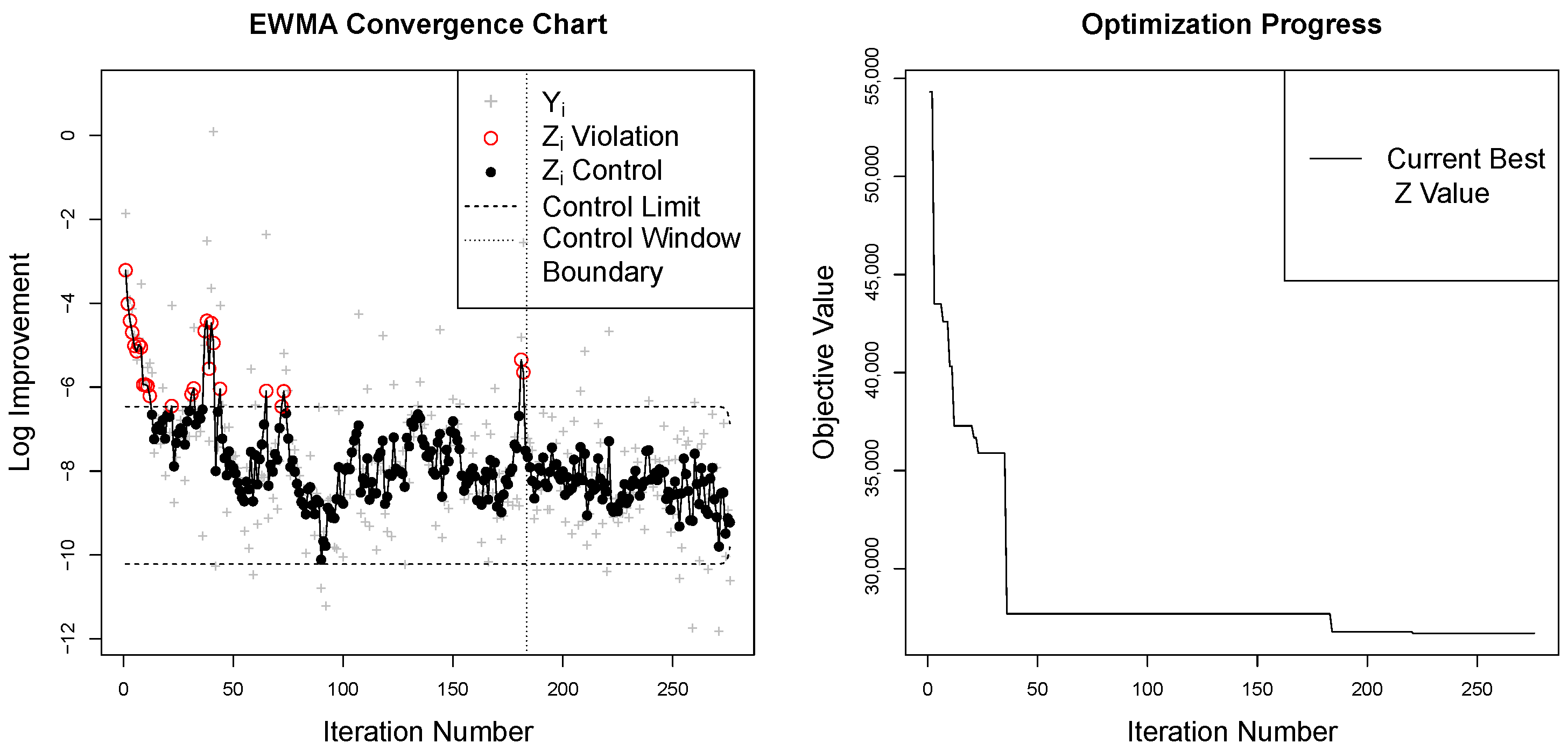

The early iterations of the convergence processes seen in

Figure 1 certainly do not display

. However, as the series approaches convergence, the

eventually do enter a state of control, see, for example,

Figure 4. For these

at convergence, they are still not exactly

, because the surrogate model variance will continue to very slightly decrease with each iteration, but an

approximation is reasonable. The realization of such a controlled region of the series defines the notion of consistency that allows for the identification of convergence.

3.4. The Control Window

The final structural feature needed to compute the EWMA convergence chart is the control window. The control window contains a fixed number, w, of the most recently observed $Y$ (i.e., $Y_{T-w+1}, \ldots, Y_T$). Only information from the w points currently residing inside the control window is used to calculate the control limits. To assess convergence, the EWMA statistic is computed for all values in the series. Initially, the convergence algorithm is allowed to fill the control window by collecting an initial set of w ELAI observations. As a new observation arrives, the window slides to exclude the oldest observation and include the newest to maintain its definition as the w most recent observations.

The purpose of the control window is two-fold. First, it serves to dichotomize the series for evaluating subsets of the $Y_t$ for distributional consistency. Second, it offers a structural way for basing the standard for consistency (i.e., the control limits) only on the most recent and relevant information in the series.
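The sliding behavior of the control window maps naturally onto a fixed-length queue. The following sketch is one possible bookkeeping structure, with the class name and methods chosen for illustration only; the full series would still be stored separately, since the EWMA statistic is computed beyond the window as well.

```python
from collections import deque

class ControlWindow:
    """Holds the w most recent ELAI observations (hypothetical helper)."""

    def __init__(self, w):
        # A deque with maxlen drops the oldest entry when a new one arrives.
        self.values = deque(maxlen=w)

    def push(self, y):
        self.values.append(y)

    def is_full(self):
        return len(self.values) == self.values.maxlen

window = ControlWindow(w=3)
for y in [1.0, 2.0, 3.0, 4.0, 5.0]:
    window.push(y)
```

After the five pushes above, the window holds only the three most recent values.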

The size of the control window, w, may vary from problem to problem based on the difficulty of optimization in each case. A reasonable way of choosing w is to consider the number of observations necessary to establish a standard of control. In this setting, w is a kind of sample size, and as such the choice of w will naturally increase as the variability in the ELAI series increases. Just as in other sample size calculations, the choice of an optimal w must consider the cost of poor inference (premature identification of convergence) associated with underestimating w, against the cost of over sampling (continuing to sample after convergence has occurred) associated with overestimating w. Providing a default choice of w is somewhat arbitrary without careful analysis of the particulars of the objective function behavior, the costs of each successive objective function evaluation, and the users’ risk tolerance to prematurely declaring convergence.

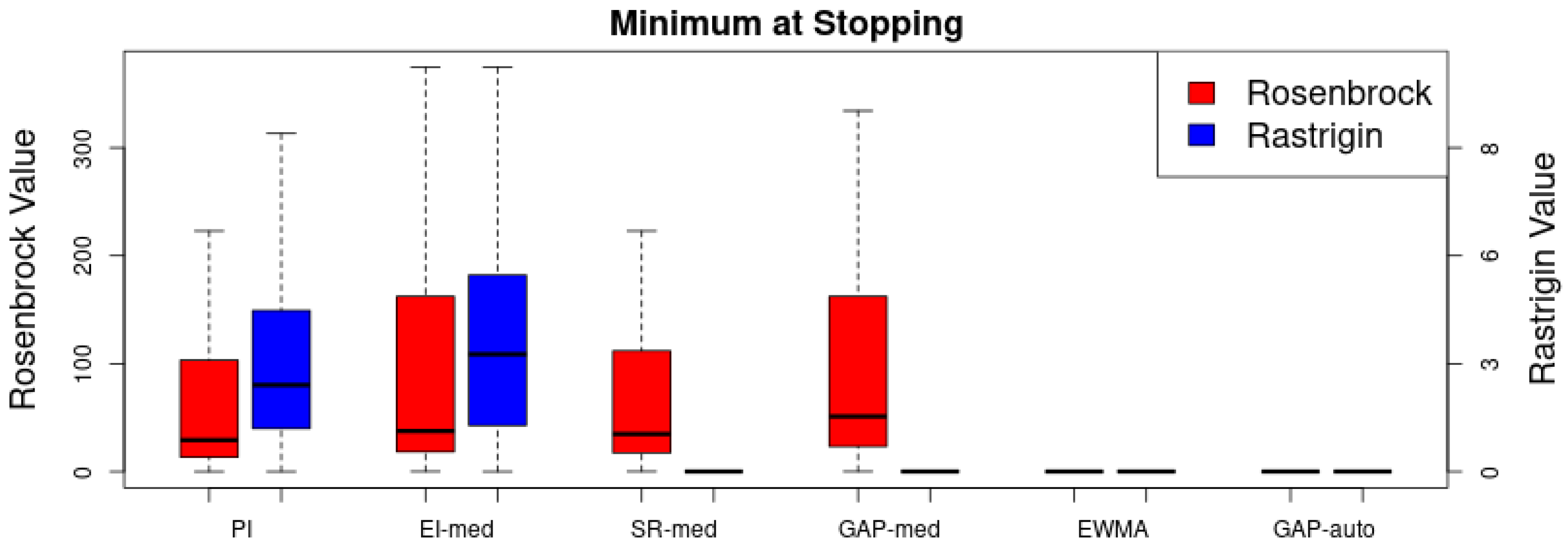

For the purpose of exploring the behavior of w in the examples presented here, we use the following procedure for informing the choice of w. We hand tune w for two informative known example functions (i.e., Rosenbrock and Rastrigin). From the exploration of w in known examples, it is clear that w needs to increase directly with ELAI variance. Furthermore, if one considers the form of sample size calculations based on classical power analysis, sample size increases in direct proportion to the sample variance. Thus, we linearly extrapolate the choice of w for two higher dimensional problems from the behavior of w in the hand-tuned test function examples. The value of w linearly increases from a value of 30 (based on sampling conventions) with a slope term structured to adjust the window size to the observed ELAI variance in the new problems, based on the sensitivity of the w estimates to the observed variances in the hand-tuned examples.

3.5. Identifying Convergence

In identifying convergence, we not only hope that the ELAI series reaches a state of control, but we anticipate that the ELAI series demonstrates a move from pre-convergence to a consistent state of convergence. To recognize the move into convergence, we combine the notion of the control window with the EWMA framework to construct the so-called EWMA Convergence Chart. Since we expect EI values to decrease upon convergence, the primary recognition of convergence is that new ELAI values are consistently lower than the initial pre-converged values.

First, we require that all exponentially weighted values inside the control window fall within the control limits. This ensures that the most recent ELAI values demonstrate distributional consistency within the bounds of the control window. Second, since we are looking for the point when the process changes from the initial pre-converged state of the system to a state of convergence, we require at least one point beyond the initial control window to fall outside the defined EWMA control limits. This second rule suggests that the new ELAI observations have established a state of control which is significantly different from the previous pre-converged ELAI observations. Jointly enforcing these two rules implies convergence based on the notion that convergence enjoys a state of consistently decreased expectation of finding new minima in future function evaluations.
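The two rules can be combined into a single check, sketched below under several assumptions: the mean and standard deviation are estimated from the control window only, the EWMA is initialized at the window mean and run backwards over the whole series, and the limits use the standard EWMA variance formula. The function name, the fixed `lam`, and the default `c=3.0` are illustrative choices, not the authors' implementation.

```python
import numpy as np

def ewma_converged(y, w, lam, c=3.0):
    """Check both convergence rules on an ELAI series in forward order."""
    y = np.asarray(y, dtype=float)
    if len(y) <= w:
        return False                    # nothing lies beyond the window yet
    window = y[-w:]                     # the w most recent observations
    mu, sigma = window.mean(), window.std(ddof=1)

    # EWMA statistics scanning backwards from the most recent observation.
    y_back = y[::-1]
    z = np.empty_like(y_back)
    z_prev = mu
    for i, y_s in enumerate(y_back):
        z_prev = lam * y_s + (1.0 - lam) * z_prev
        z[i] = z_prev

    # Control limits that widen with the backwards index s.
    s = np.arange(1, len(y) + 1)
    half_width = c * sigma * np.sqrt(lam / (2.0 - lam) * (1.0 - (1.0 - lam) ** (2 * s)))
    inside = np.abs(z - mu) <= half_width

    rule_1 = inside[:w].all()           # every point in the window is in control
    rule_2 = (~inside[w:]).any()        # some earlier point is out of control
    return bool(rule_1 and rule_2)

stable = -10.0 + 0.1 * (-1.0) ** np.arange(60)
descending_then_stable = np.concatenate([np.linspace(0.0, -10.0, 40), stable])
```

Here `ewma_converged(descending_then_stable, w=30, lam=0.3)` satisfies both rules, whereas a series that has been stable throughout fails the second rule and is not declared converged.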

Considering the optimization procedure outlined in Figure 2, the check for convergence indicated in step (7) amounts to computing new EWMA $Z_s$ values, and control limits, from the inclusion of the most recent observation of the improvement distribution, and checking if the subsequent set of $Z_s$ satisfies both of the above rules of the EWMA convergence chart. Satisfying only one, or neither, of the convergence rules indicates insufficient exploration, and further iterations of optimization are required to gather more information about the objective function. The time complexity of computing the EWMA convergence chart is low relative to that of inference for the Gaussian process surrogate model.