Adaptive Tree-Structured MTS with Multi-Class Mahalanobis Space for High-Performance Multi-Class Classification

Abstract

1. Introduction

2. Relevant Theories

2.1. Mahalanobis–Taguchi System

- Step 1: Construction of the MS

- Step 2: Validation of the MS

- Step 3: Feature selection and MS optimization

- Step 4: Calculation of the threshold value
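The four steps above can be sketched in Python. This is a minimal illustration that assumes the textbook MTS formulation: features are standardized with the reference (normal) group's mean and sample standard deviation, the scaled distance is MD = zR⁻¹zᵀ/k, and Step 3 scores a feature subset with the larger-the-better SNR. The orthogonal-array run design of Step 3 and the threshold rule of Step 4 are not reproduced, and all function names are illustrative only.

```python
import numpy as np


def build_ms(normal: np.ndarray):
    """Step 1: construct the Mahalanobis space from the reference (normal) group."""
    mean = normal.mean(axis=0)
    std = normal.std(axis=0, ddof=1)
    z = (normal - mean) / std
    corr = np.atleast_2d(np.corrcoef(z, rowvar=False))
    return mean, std, np.linalg.inv(corr)


def scaled_md(x: np.ndarray, mean, std, corr_inv) -> np.ndarray:
    """Scaled Mahalanobis distance MD_j = z_j R^-1 z_j^T / k for every row of x."""
    z = (np.atleast_2d(x) - mean) / std
    return np.einsum("ij,jk,ik->i", z, corr_inv, z) / z.shape[1]


def validate_ms(md_normal: np.ndarray, md_abnormal: np.ndarray) -> bool:
    """Step 2 (simplified check): abnormal samples should lie farther from the MS."""
    return bool(md_abnormal.mean() > md_normal.mean())


def larger_the_better_snr(md_abnormal: np.ndarray) -> float:
    """Larger-the-better SNR used in Step 3: eta = -10 log10(mean(1 / MD))."""
    return float(-10.0 * np.log10(np.mean(1.0 / md_abnormal)))


def snr_for_subset(normal, abnormal, mask) -> float:
    """Step 3 helper: rebuild the MS on a feature subset and score it by its SNR."""
    mean, std, corr_inv = build_ms(normal[:, mask])
    return larger_the_better_snr(scaled_md(abnormal[:, mask], mean, std, corr_inv))


rng = np.random.default_rng(0)
normal = rng.normal(size=(200, 4))            # reference group
abnormal = rng.normal(loc=3.0, size=(50, 4))  # shifted "abnormal" group
mean, std, corr_inv = build_ms(normal)
md_normal = scaled_md(normal, mean, std, corr_inv)
md_abnormal = scaled_md(abnormal, mean, std, corr_inv)
print(md_normal.mean())  # (n - 1) / n = 0.995 for the reference group, up to rounding
```

The reference-group MDs average exactly (n − 1)/n, which is why a well-built MS places normal samples near MD ≈ 1 and pushes abnormal samples well above it.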

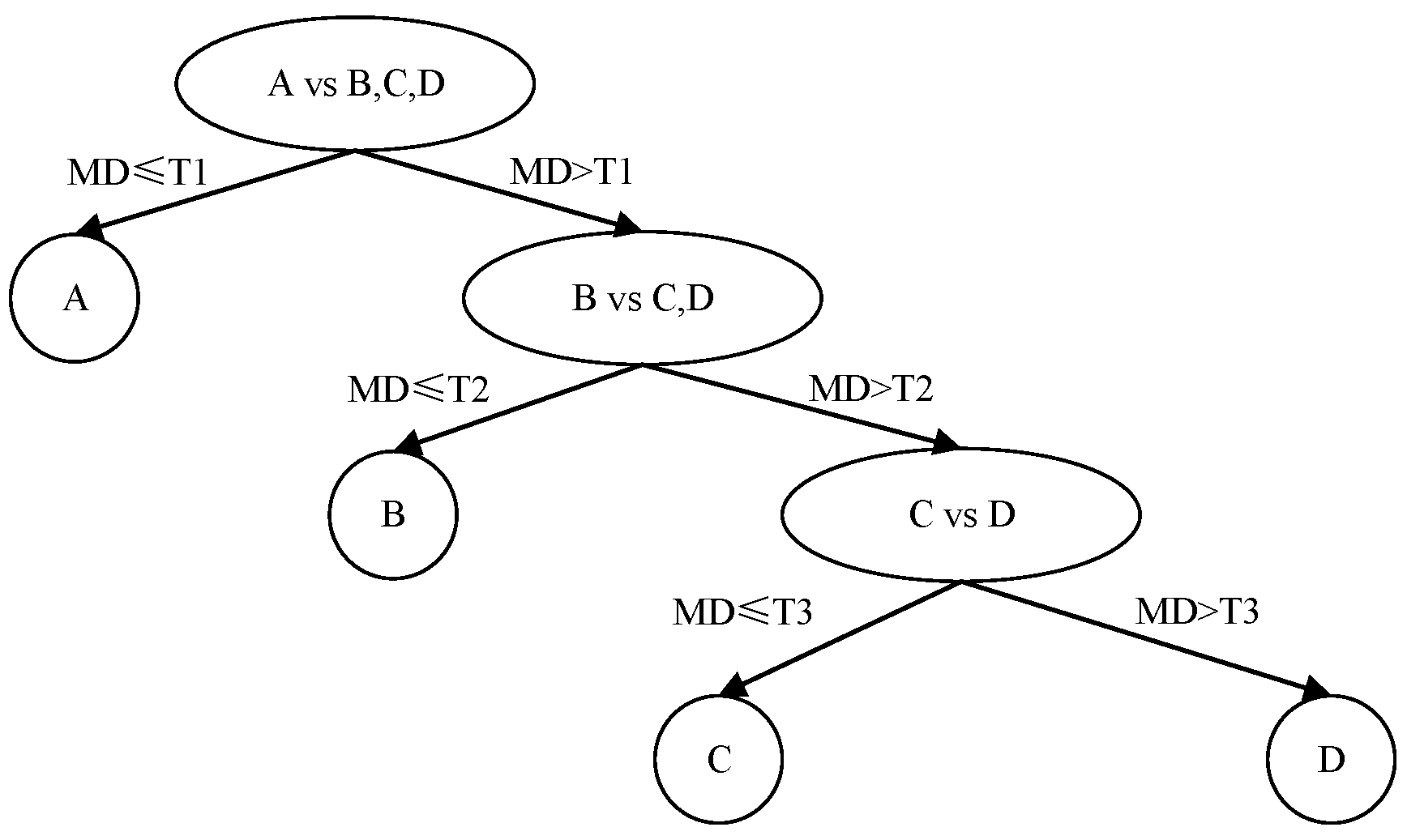

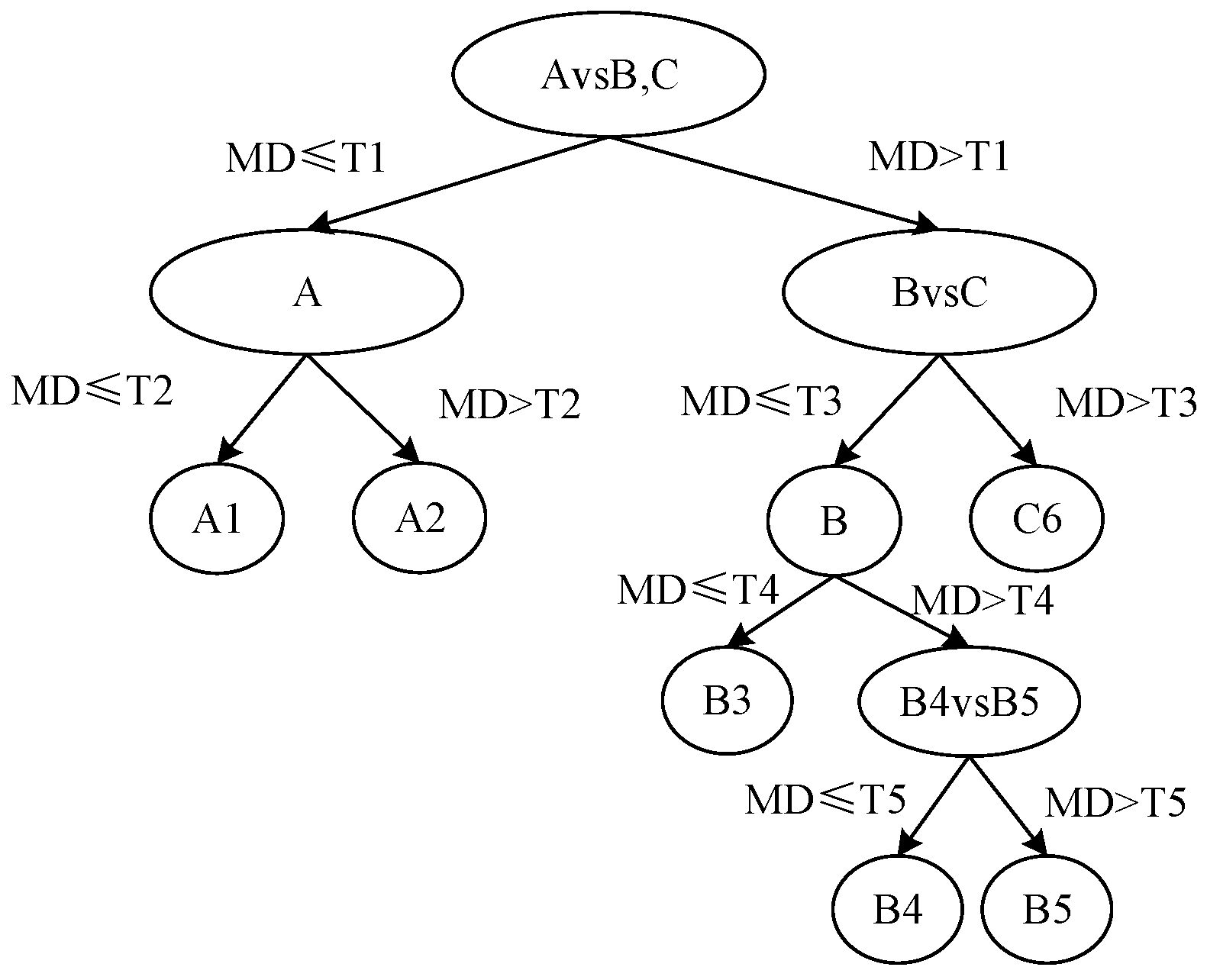

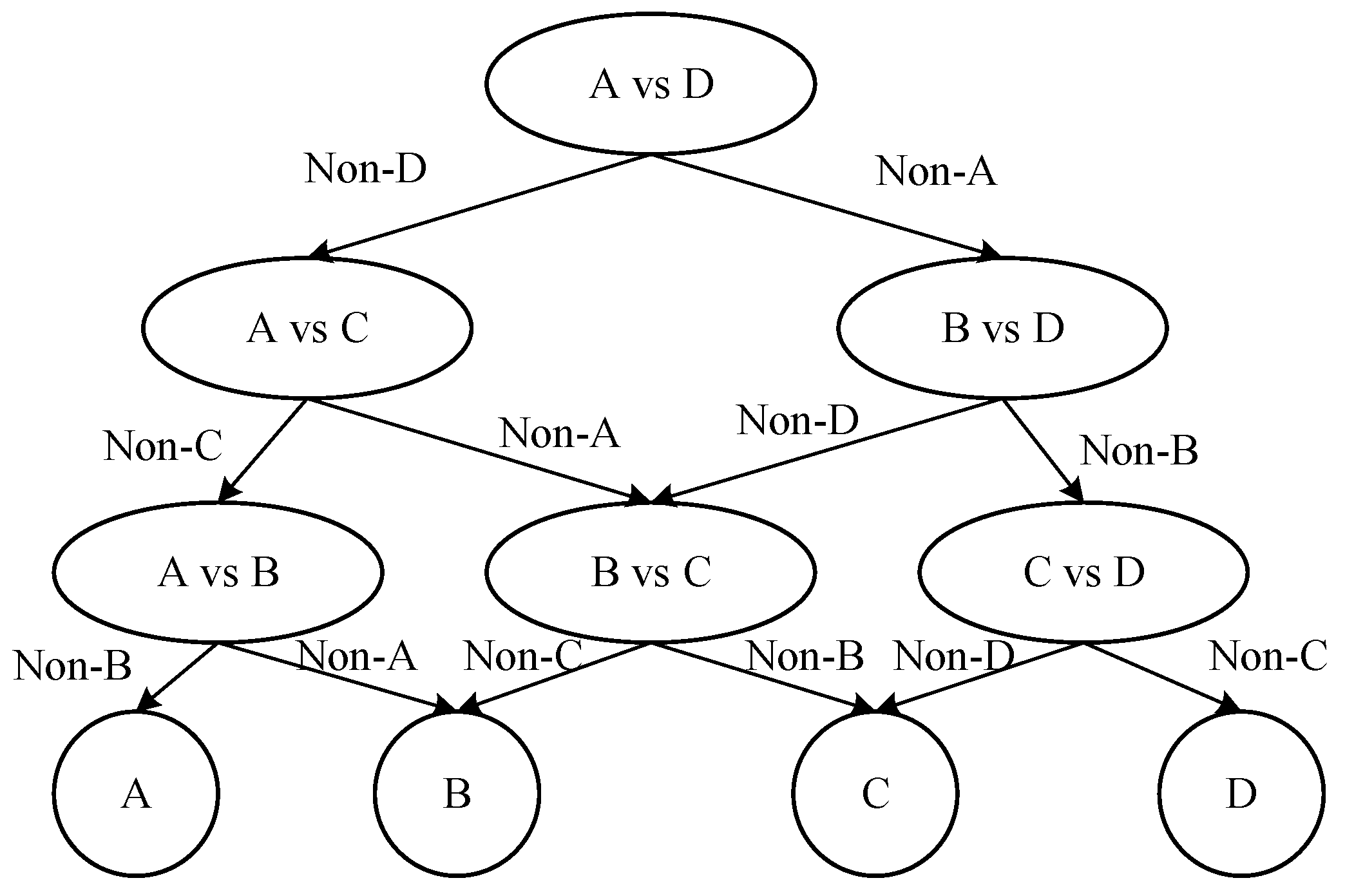

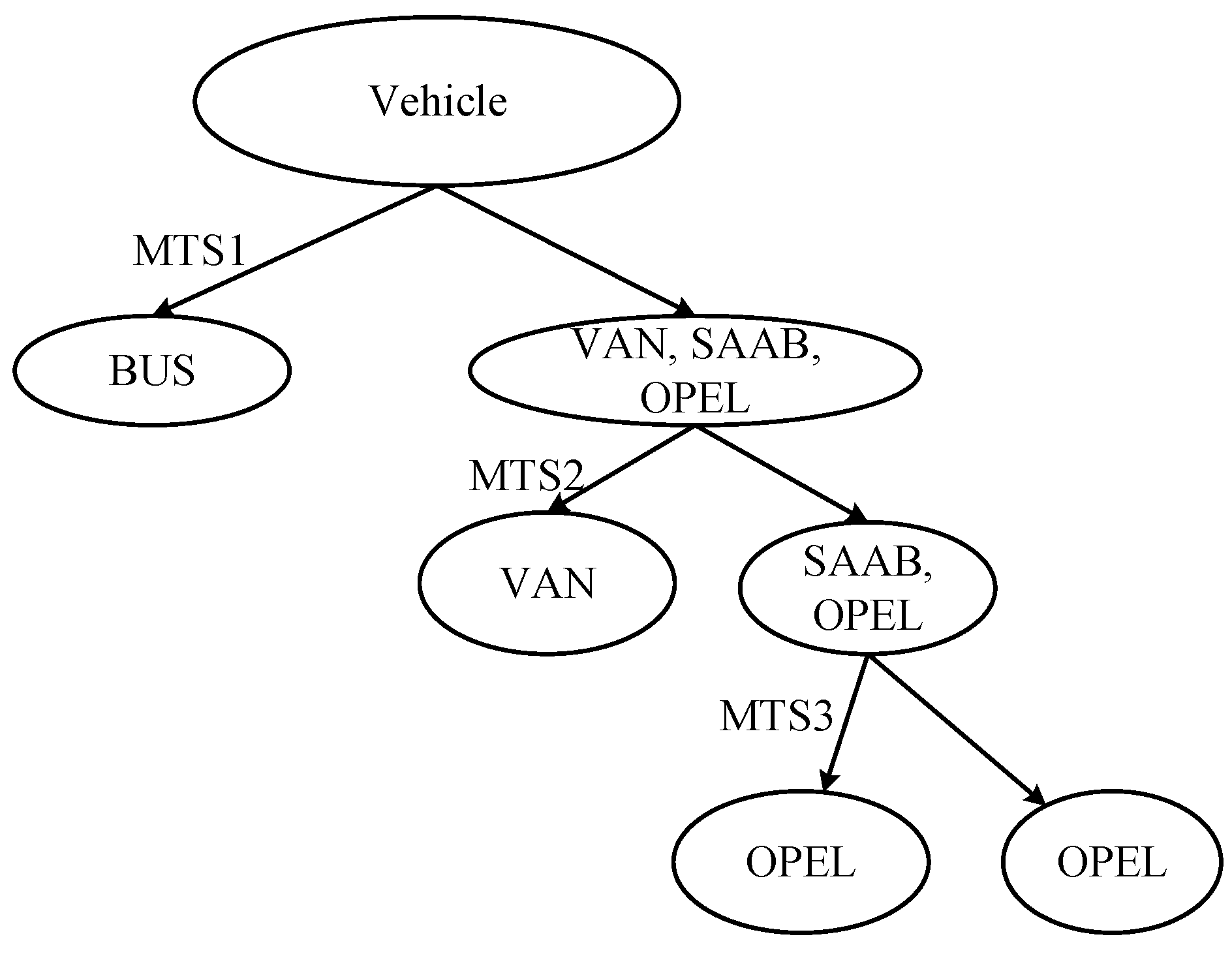

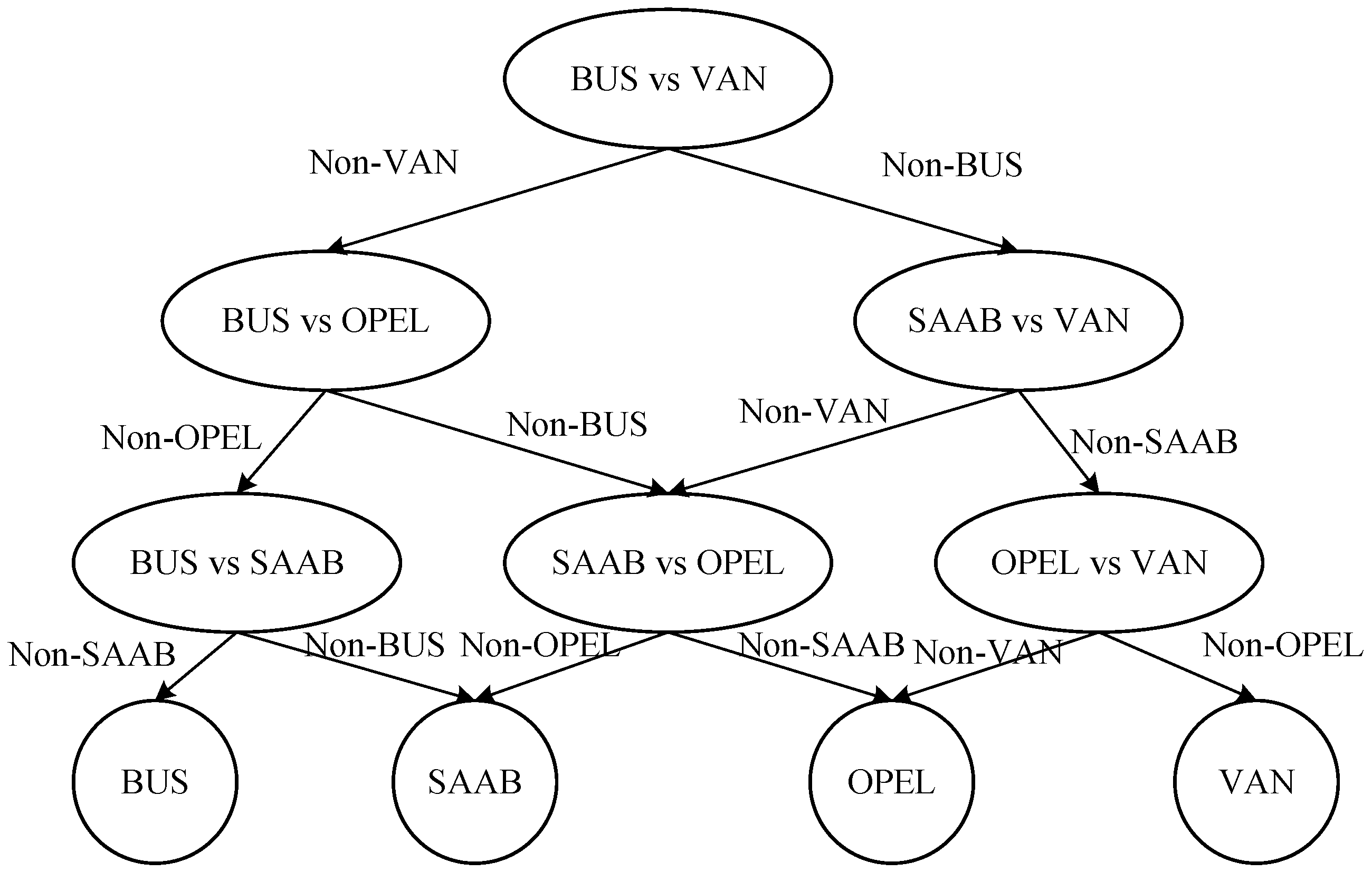

2.2. Tree-Structured MTS Multi-Class Classification Method

2.3. Hybrid Multi-Objective Particle Swarm Optimization Algorithm

| Algorithm 1. The HMOPSO algorithm |

|---|

| Input: N: number of particles; M: number of objective functions; maxIter: maximum number of iterations; w, c1, c2: inertia weight and acceleration coefficients; bounds_cont: continuous variable boundaries [lb1, ub1], [lb2, ub2], …, [lbₙ, ubₙ]; bounds_disc: discrete variable boundaries [min1, max1], [min2, max2], …, [minₘ, maxₘ] |

| Output: gbest: global best solutions (the non-dominated solutions on the Pareto front) |

| // Initialization phase |

| Initialize the particle population: population ← ∅ |

| For i = 1 to N do: |

| Initialize continuous position x_cont |

| Initialize continuous velocity v_cont |

| Initialize discrete position x_disc |

| Initialize discrete velocity v_disc |

| Calculate fitness: fitness ← evaluate(x_cont, x_disc) |

| Set personal best: pbest ← (x_cont, x_disc) |

| Set personal best fitness: pbest_fitness ← fitness |

| Add particle to population |

| End For |

| // Initialize global best |

| gbest ← non-dominated solutions selected from population |

| gbest_fitness ← corresponding fitness values |

| // Main loop |

| For iter = 1 to maxIter do: |

| For each particle in population do: |

| // Update continuous variables |

| Generate random vectors r1, r2 ∈ [0, 1]^n |

| Update continuous velocity: v_cont ← w × v_cont + c1 × r1 × (pbest_cont − x_cont) + c2 × r2 × (gbest_cont − x_cont) |

| Update continuous position: x_cont ← x_cont + v_cont |

| Apply boundary constraints so that x_cont remains within bounds_cont |

| // Update discrete variables |

| Generate random vectors r1_disc, r2_disc ∈ [0, 1]^m |

| Update discrete velocity: v_disc ← c1 × r1_disc × (pbest_disc − x_disc) + c2 × r2_disc × (gbest_disc − x_disc) |

| Convert velocity to probability: prob ← sigmoid(v_disc) = 1/(1 + exp(−v_disc)) |

| For each discrete variable j do: |

| If rand() < prob[j] then: |

| x_disc[j] ← randomly flip within bounds_disc[j] |

| End If |

| End For |

| // Evaluate new position |

| new_fitness ← evaluate(x_cont, x_disc) |

| // Update personal best |

| If new_fitness dominates pbest_fitness then: |

| pbest ← (x_cont, x_disc) |

| pbest_fitness ← new_fitness |

| End If |

| End For |

| // Update global best |

| Update gbest by selecting the non-dominated solutions from all particles’ pbest |

| // Optional: apply a mutation operation to maintain diversity |

| Mutate particles with a certain probability |

| End For |

| Return gbest // the Pareto-optimal solution set found |
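A minimal Python sketch of Algorithm 1 follows. It assumes that all objectives are minimized, that each particle's leader (gbest_cont, gbest_disc) is drawn at random from the current non-dominated set, and that the optional mutation re-draws one discrete variable. These choices, the default parameter values, the velocity clipping, and the function names are illustrative assumptions rather than the authors' settings.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Fitness = Tuple[float, ...]
Position = Tuple[List[float], List[int]]


def dominates(a: Fitness, b: Fitness) -> bool:
    """True if fitness a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(items: List[Tuple[Position, Fitness]]) -> List[Tuple[Position, Fitness]]:
    """Keep the (position, fitness) pairs that no other pair dominates."""
    return [p for p in items if not any(dominates(q[1], p[1]) for q in items if q is not p)]


def hmopso(
    evaluate: Callable[[List[float], List[int]], Fitness],
    bounds_cont: Sequence[Tuple[float, float]],
    bounds_disc: Sequence[Tuple[int, int]],
    n_particles: int = 20,
    max_iter: int = 50,
    w: float = 0.5,
    c1: float = 1.5,
    c2: float = 1.5,
    mutation_rate: float = 0.1,
    seed: int = 0,
) -> List[Tuple[Position, Fitness]]:
    rng = random.Random(seed)
    swarm = []
    # Initialization phase: random positions, zero continuous velocity, pbest = start
    for _ in range(n_particles):
        xc = [rng.uniform(lb, ub) for lb, ub in bounds_cont]
        xd = [rng.randint(lo, hi) for lo, hi in bounds_disc]
        swarm.append({"xc": xc, "xd": xd, "vc": [0.0] * len(xc),
                      "pbest": (list(xc), list(xd)), "pfit": evaluate(xc, xd)})
    gbest = non_dominated([(p["pbest"], p["pfit"]) for p in swarm])

    for _ in range(max_iter):
        for p in swarm:
            (g_c, g_d), _ = rng.choice(gbest)  # leader drawn from the Pareto set
            pb_c, pb_d = p["pbest"]
            # Continuous part: inertia-weighted velocity, then clip to the bounds
            for j, (lb, ub) in enumerate(bounds_cont):
                r1, r2 = rng.random(), rng.random()
                p["vc"][j] = (w * p["vc"][j] + c1 * r1 * (pb_c[j] - p["xc"][j])
                              + c2 * r2 * (g_c[j] - p["xc"][j]))
                p["xc"][j] = min(max(p["xc"][j] + p["vc"][j], lb), ub)
            # Discrete part: sigmoid(velocity) is the probability of a random re-draw
            for j, (lo, hi) in enumerate(bounds_disc):
                r1, r2 = rng.random(), rng.random()
                v = c1 * r1 * (pb_d[j] - p["xd"][j]) + c2 * r2 * (g_d[j] - p["xd"][j])
                v = max(min(v, 50.0), -50.0)  # keep math.exp from overflowing
                if rng.random() < 1.0 / (1.0 + math.exp(-v)):
                    p["xd"][j] = rng.randint(lo, hi)
            fit = evaluate(p["xc"], p["xd"])
            if dominates(fit, p["pfit"]):
                p["pbest"], p["pfit"] = (list(p["xc"]), list(p["xd"])), fit
        gbest = non_dominated([(p["pbest"], p["pfit"]) for p in swarm])
        # Optional mutation (assumed form): re-draw one discrete variable
        for p in swarm:
            if bounds_disc and rng.random() < mutation_rate:
                j = rng.randrange(len(bounds_disc))
                p["xd"][j] = rng.randint(*bounds_disc[j])
    return gbest


def toy(xc, xd):
    # two conflicting objectives over one continuous and one discrete variable
    return (xc[0] ** 2 + xd[0], (xc[0] - 2.0) ** 2 + (3 - xd[0]))


front = hmopso(toy, bounds_cont=[(0.0, 2.0)], bounds_disc=[(0, 3)])
```

Each returned entry pairs a (continuous, discrete) position with its objective vector; in ATMTS the discrete part would plausibly encode feature masks or node order and the continuous part thresholds, but that mapping is not shown here.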

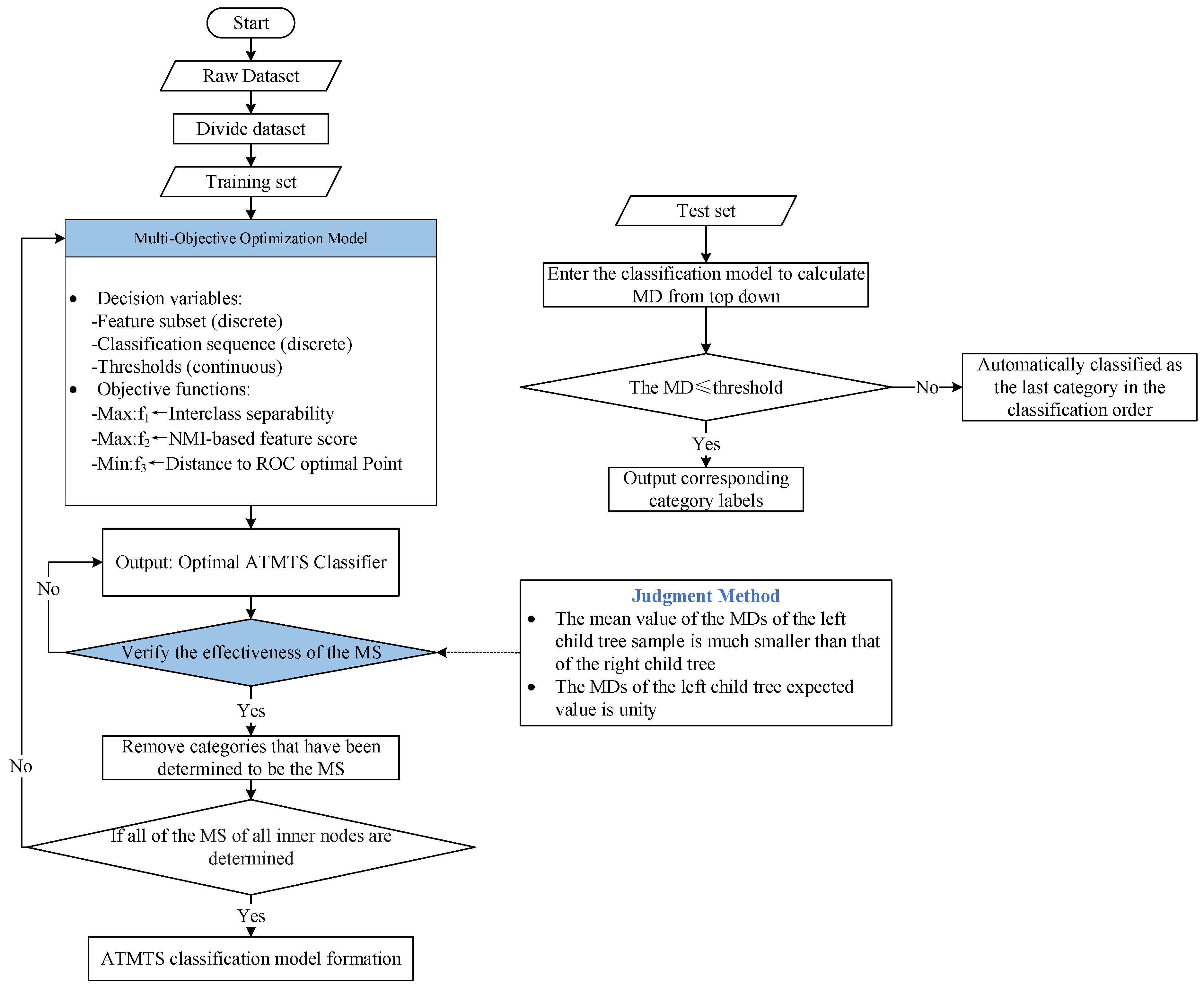

3. Proposed Methodology

3.1. Overview of the ATMTS Framework

- An adaptive tree-building mechanism that determines the optimal sequence of dichotomous classifications without relying on pre-defined hierarchical knowledge.

- A normalized mutual information (NMI)-based criterion for evaluating feature subsets, effectively capturing both linear and nonlinear relationships.

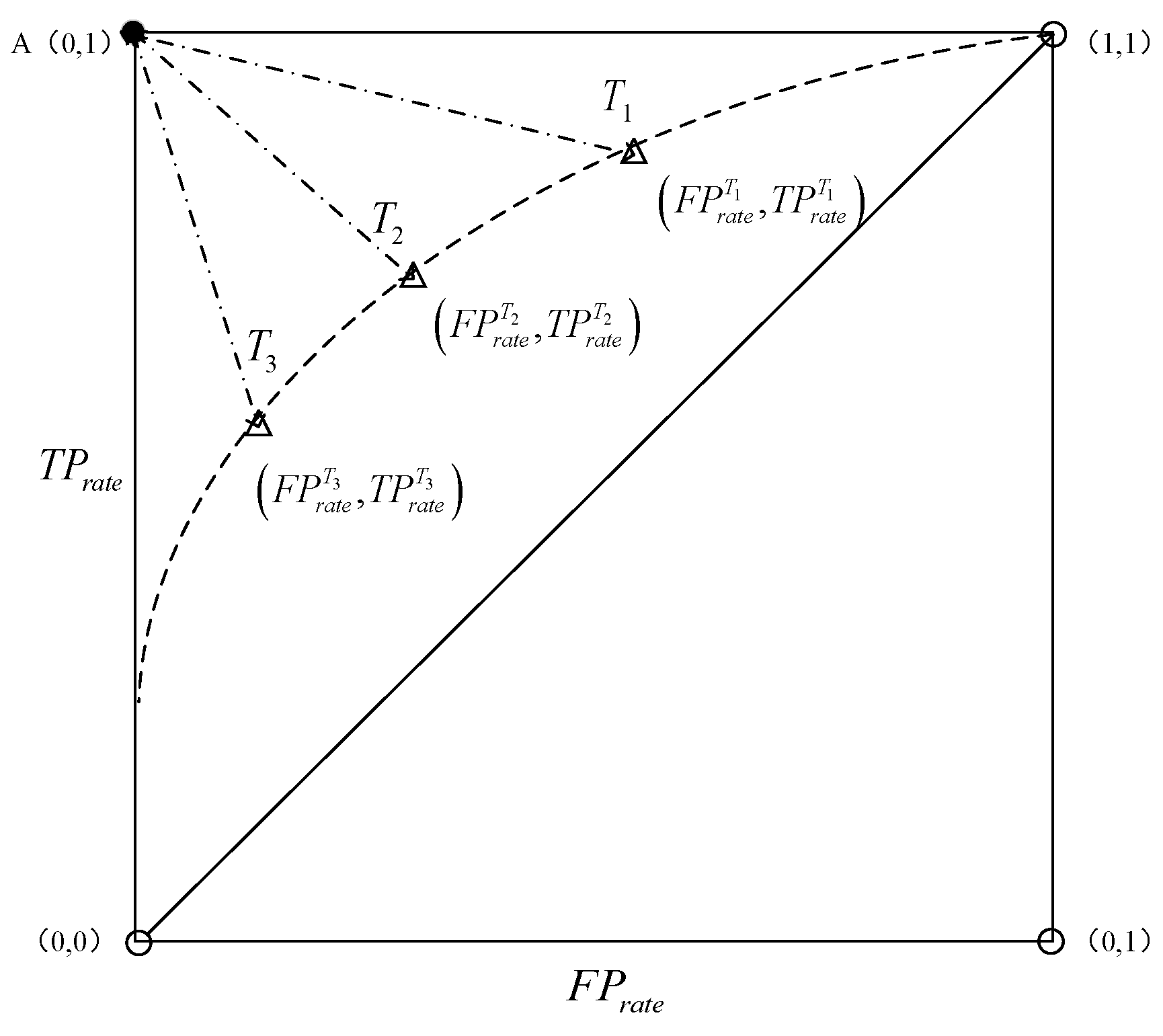

- An ROC-based objective threshold determination method that minimizes human subjectivity.

- A joint optimization model that unifies the above components into a single framework.

- An HMOPSO-based solver designed to handle the resulting mixed-variable (continuous and discrete) optimization problem efficiently.
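To make the sequence of dichotomous classifications concrete, the sketch below assumes that each tree node separates one class from the classes that remain: a sample is assigned to the first node whose scaled MD lies within that node's threshold, and otherwise falls through to the last class. The `MTSNode` structure, `fit_node`, `classify`, and the threshold values are hypothetical; the adaptive choice of node order, feature masks, and thresholds that ATMTS optimizes is not shown.

```python
from dataclasses import dataclass
from typing import Sequence

import numpy as np


@dataclass
class MTSNode:
    """One dichotomous node (hypothetical): the MS of one class plus its MD threshold."""
    label: str
    features: np.ndarray  # boolean mask of the features kept at this node
    mean: np.ndarray
    std: np.ndarray
    corr_inv: np.ndarray
    threshold: float

    def md(self, x: np.ndarray) -> float:
        """Scaled Mahalanobis distance of one sample to this node's MS."""
        z = (x[self.features] - self.mean) / self.std
        return float(z @ self.corr_inv @ z) / z.size


def fit_node(label: str, samples: np.ndarray, features: np.ndarray, threshold: float) -> MTSNode:
    """Build the node's MS from the samples of its own class."""
    sub = samples[:, features]
    mean, std = sub.mean(axis=0), sub.std(axis=0, ddof=1)
    corr = np.atleast_2d(np.corrcoef((sub - mean) / std, rowvar=False))
    return MTSNode(label, features, mean, std, np.linalg.inv(corr), threshold)


def classify(x: np.ndarray, nodes: Sequence[MTSNode], last_label: str) -> str:
    """Walk the chain: the first node whose MD is within its threshold claims x."""
    for node in nodes:
        if node.md(x) <= node.threshold:
            return node.label
    return last_label  # the class left over after all dichotomies
```

Because each node only ever answers "this class or not", the order of the nodes matters: a class placed early can absorb ambiguous samples, which is the motivation for choosing the sequence adaptively rather than by a fixed rule.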

3.2. Formulation of Objective Functions

- (1) Interclass separability

- (2) Normalized mutual information

- (3) Proximity to the theoretical optimal point
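Objectives (2) and (3) can be illustrated with a few small helpers. The sketch assumes that NMI is normalized as 2I(X; Y)/(H(X) + H(Y)) on equal-width-binned features (other normalizations exist), and reads "proximity to the theoretical optimal point" as the Euclidean distance from an ROC point (FPR, TPR) to the ideal corner (0, 1). Both readings, the bin count, and all function names are assumptions rather than the paper's exact definitions.

```python
import numpy as np


def entropy(labels: np.ndarray) -> float:
    """Shannon entropy (nats) of a discrete variable."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log(p)).sum())


def nmi(a: np.ndarray, b: np.ndarray) -> float:
    """NMI = 2 I(a; b) / (H(a) + H(b)), one of several common normalizations."""
    _, joint = np.unique(np.stack([a, b], axis=1), axis=0, return_counts=True)
    p = joint / joint.sum()
    h_ab = float(-(p * np.log(p)).sum())
    h_a, h_b = entropy(a), entropy(b)
    if h_a + h_b == 0.0:
        return 0.0
    return 2.0 * (h_a + h_b - h_ab) / (h_a + h_b)


def discretize(x: np.ndarray, bins: int = 10) -> np.ndarray:
    """Equal-width binning so that a continuous feature can enter the NMI."""
    edges = np.linspace(x.min(), x.max(), bins + 1)[1:-1]
    return np.digitize(x, edges)


def roc_threshold(md_in: np.ndarray, md_out: np.ndarray):
    """Pick the MD threshold whose (FPR, TPR) point is closest to (0, 1).

    md_in:  MDs of samples of the class the MS was built on (positives).
    md_out: MDs of samples of the remaining classes (negatives).
    """
    best_t, best_d = None, np.inf
    for t in np.unique(np.concatenate([md_in, md_out])):
        tpr = np.mean(md_in <= t)   # positives accepted at this threshold
        fpr = np.mean(md_out <= t)  # negatives wrongly accepted
        d = float(np.hypot(fpr, 1.0 - tpr))
        if d < best_d:
            best_t, best_d = float(t), d
    return best_t, best_d
```

Scanning every observed MD as a candidate threshold is enough, since TPR and FPR only change at those values; the returned distance is 0 exactly when some threshold separates the two groups perfectly.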

3.3. The Joint Optimization Model

3.4. Model Solving Based on HMOPSO

3.5. Detailed Implementation Procedure of the ATMTS

4. Data Experiment

4.1. Research Data and Experimental Approach

- ATMTS: Our proposed joint optimization model adaptively determines the classification sequence, feature subset, and decision thresholds.

- PBT-MTS: Uses an orthogonal array and the signal-to-noise ratio (SNR) for feature selection, with thresholds set by a Probabilistic Threshold Model (PTM) [32]. The classification order is determined iteratively: the classification accuracy of each category is calculated when that category is used as the MS, the category achieving the highest accuracy is classified first, and the procedure is repeated on the remaining categories.

- DAG-MTS: Adopts a directed acyclic graph (DAG) as the underlying classification structure to improve hierarchical decision-making. Apart from the DAG structure, its procedures are identical to those of PBT-MTS: feature selection with orthogonal arrays and SNR, threshold determination through the PTM, and the same iterative strategy for determining the order in which categories are classified.

- RF: The key parameters, the number of trees (Ntree) and the number of features sampled at each split (mtry), are optimized for each dataset via grid search.

- SVM: A Gaussian Radial Basis Function (RBF) kernel is used. The box constraint C and kernel scale (γ) are optimized for each dataset through grid search.

- KNN: The number of nearest neighbors (K) is optimized through grid search. The distance metric is set to Euclidean distance.

- BPNN: A network with a single hidden layer is used. The number of hidden units (Hiddensize) is determined via grid search.

- AdaBoost: The algorithm is implemented with decision trees of depth 1 as base learners. The number of estimators (n_estimators) is set by grid search.

- XGBoost, LightGBM, CatBoost: All hyperparameters, including the learning rate (learning_rate) and maximum depth (max_depth), are optimized via grid search within ranges recommended in the literature.

- CNN: A shallow convolutional architecture is applied. The number of filters (n_filters), kernel size (kernel_size), and learning rate (learning_rate) are optimized through grid search.
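As a minimal sketch of the grid-search protocol described for the baselines, the NumPy code below tunes K for the Euclidean KNN over the grid 1–20 given in the hyperparameter table, scoring each K by k-fold cross-validated accuracy. The fold count, the accuracy criterion, the tie-breaking rule, and the function names are assumptions, since the section does not state how candidate settings were scored.

```python
import numpy as np


def knn_predict(x_train, y_train, x_test, k):
    """Majority vote among the k nearest training samples (Euclidean distance)."""
    d = np.linalg.norm(x_test[:, None, :] - x_train[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    # y_train must hold non-negative integer labels for np.bincount
    return np.array([np.bincount(row).argmax() for row in y_train[nearest]])


def grid_search_k(x, y, k_grid=range(1, 21), n_folds=5, seed=0):
    """Return the K with the best mean cross-validated accuracy, plus all scores."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    scores = {}
    for k in k_grid:
        accs = []
        for i, test_idx in enumerate(folds):
            train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
            pred = knn_predict(x[train_idx], y[train_idx], x[test_idx], k)
            accs.append(np.mean(pred == y[test_idx]))
        scores[k] = float(np.mean(accs))
    best_k = max(scores, key=scores.get)  # ties go to the smallest K
    return best_k, scores
```

The same loop structure extends to the other baselines by replacing `knn_predict` with the relevant model and iterating over the Cartesian product of its grid values (for example C × γ for the SVM).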

4.2. Evaluation Metrics Selection

4.3. Comprehensive Performance Comparison

4.3.1. Comparative Analysis of Experimental Results

4.3.2. Statistical Significance Testing

4.3.3. Comparative Analysis with Existing Studies

4.3.4. Computational Complexity Comparison

4.3.5. Model Interpretability Analysis

4.4. Performance Comparison Between ATMTS, PBT-MTS, and DAG-MTS

4.5. Validation of Optimization Model Effectiveness

5. Conclusions and Future Outlook

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ma, S.; Shi, S.; Zhang, Y.; Gao, H. A High-precision method for detecting rolling bearing faults in unmanned aerial vehicle based on improved 1DCNN-Informer model. Measurement 2025, 256, 118200.

- Wang, Y.; Du, X. Rolling Bearing Fault Diagnosis Based on SCNN and Optimized HKELM. Mathematics 2025, 13, 2004.

- Xiu, X.C.; Pan, L.L.; Yang, Y.; Liu, W.Q. Efficient and Fast Joint Sparse Constrained Canonical Correlation Analysis for Fault Detection. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 4153–4163.

- Razavi-Termeh, S.V.; Bazargani, J.S.; Sadeghi-Niaraki, A.; Angela Yao, X.; Choi, S.-M. Spatial prediction and visualization of PM 2.5 susceptibility using machine learning optimization in a virtual reality environment. Int. J. Digit. Earth 2025, 18, 2513589.

- Jyotiyana, M.; Kesswani, N.; Kumar, M. A deep learning approach for classification and diagnosis of Parkinson’s disease. Soft Comput. 2022, 26, 9155–9165.

- Wu, Z.; Zhou, C.; Xu, F.; Lou, W. A CS-AdaBoost-BP model for product quality inspection. Ann. Oper. Res. 2022, 308, 685–701.

- Heo, W.; Kim, E. Smoothing the Subjective Financial Risk Tolerance: Volatility and Market Implications. Mathematics 2025, 13, 680.

- Chang, Z.P.; Li, Y.W.; Fatima, N. A theoretical survey on Mahalanobis-Taguchi system. Measurement 2019, 136, 501–510.

- Su, C.T.; Hsiao, Y.H. Multiclass MTS for Simultaneous Feature Selection and Classification. IEEE Trans. Knowl. Data Eng. 2009, 21, 192–205.

- Shimura, J.; Takata, D.; Watanabe, H.; Shitanda, I.; Itagaki, M. Mahalanobis-Taguchi method based anomaly detection for lithium-ion battery. Electrochim. Acta 2024, 479, 143890.

- Zhang, C.H.; Cheng, X.R.; Li, K.; Li, B. Hotel recommendation mechanism based on online reviews considering multi-attribute cooperative and interactive characteristics. Omega-Int. J. Manage S 2025, 130, 103173.

- Sikder, S.; Mukherjee, I.; Panja, S.C. A synergistic Mahalanobis-Taguchi system and support vector regression based predictive multivariate manufacturing process quality control approach. J. Manuf. Syst. 2020, 57, 323–337.

- Halim, N.A.M.; Abu, M.Y.; Razali, N.S.; Aris, N.H.; Sari, E.; Jaafar, N.N.; Ghani, A.S.A.; Ramlie, F.; Muhamad, W.Z.A.W.; Harudin, N. Impact of Mahalanobis-Taguchi System on Health Performance Among Academicians. Int. J. Technol. 2025, 16, 846–864.

- Hsiao, Y.H.; Su, C.T. Multiclass MTS for Saxophone Timbre Quality Inspection Using Waveform-shape-based Features. IEEE Trans. Syst. Man. Cybern. B Cybern. 2009, 39, 690–704.

- Soylemezoglu, A.; Jagannathan, S.; Saygin, C. Mahalanobis Taguchi System (MTS) as a Prognostics Tool for Rolling Element Bearing Failures. J. Manuf. Sci. E-T Asme 2010, 132, 051014.

- Peng, Z.M.; Cheng, L.S.; Zhan, J.; Yao, Q.F. Fault classification method for rolling bearings based on the multi-feature extraction and modified Mahalanobis-Taguchi system. J. Vib. Shock 2020, 39, 249–256.

- Peng, Z.; Cheng, L.; Yao, Q. Multi-feature Extraction for Bearing Fault Diagnosis Using Binary-tree Mahalanobis-Taguchi System. In Proceedings of the 31st Chinese Control And Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 3303–3308.

- Zhan, J.; Cheng, L.; Peng, Z. Rolling Bearings Fault Diagnosis Using VMD and Multi-tree Mahalanobis Taguchi System. IOP Conf. Ser. Mater. Sci. Eng. 2019, 692, 12034–12043.

- Duan, Y.; Zou, B.; Xu, J.; Chen, F.; Wei, J.; Tang, Y.Y. OAA-SVM-MS: A fast and efficient multi-class classification algorithm. Neurocomputing 2021, 454, 448–460.

- Tan, L.M.; Wan Muhamad, W.Z.A.; Yahya, Z.R.; Junoh, A.K.; Azziz, N.H.A.; Ramlie, F.; Harudin, N.; Abu, M.Y.; Tan, X.J. A survey on improvement of Mahalanobis Taguchi system and its application. Multimed. Tools Appl. 2023, 82, 43865–43881.

- Chang, Z.; Wang, Y.; Chen, W. Dynamic Identification of Relative Poverty Among Chinese Households Using the Multiway Mahalanobis–Taguchi System: A Sustainable Livelihoods Perspective. Sustainability 2025, 17, 5384.

- Huang, C.-L.; Hsu, T.-S.; Liu, C.-M. The Mahalanobis-Taguchi system—Neural network algorithm for data-mining in dynamic environments. Expert. Syst. Appl. 2009, 36, 5475–5480.

- Luo, Y.; Zou, X.; Xiong, W.; Yuan, X.; Xu, K.; Xin, Y.; Zhang, R. Dynamic State Evaluation Method of Power Transformer Based on Mahalanobis–Taguchi System and Health Index. Energies 2023, 16, 2765.

- Ramlie, F.; Muhamad, W.Z.A.W.; Harudin, N.; Abu, M.Y.; Yahaya, H.; Jamaludin, K.R.; Abdul Talib, H.H. Classification Performance of Thresholding Methods in the Mahalanobis-Taguchi System. Appl. Sci. 2021, 11, 3906.

- Yang, M.; Liu, Y.; Yang, J.; Precup, R.-E. A Hybrid Multi-Objective Particle Swarm Optimization with Central Control Strategy. Comput. Intell. Neurosci. 2022, 2022, 1522096.

- Hao, H.Q.; Zhu, H.P.; Luo, Y.B. Preference learning based multiobjective particle swarm optimization for lot streaming in hybrid flowshop scheduling with flexible assembly and time windows. Expert. Syst. Appl. 2025, 290, 128345.

- Woodall, W.H.; Koudelik, R.; Tsui, K.-L.; Kim, S.B.; Stoumbos, Z.G.; Carvounis, C.P. A Review and Analysis of the Mahalanobis—Taguchi System. Technometrics 2003, 45, 1–15.

- Iquebal, A.S.; Pal, A.; Ceglarek, D.; Tiwari, M.K. Enhancement of Mahalanobis-Taguchi System via Rough Sets based Feature Selection. Expert. Syst. Appl. 2014, 41, 8003–8015.

- Kim, S.-G.; Park, D.; Jung, J.-Y. Evaluation of One-Class Classifiers for Fault Detection: Mahalanobis Classifiers and the Mahalanobis-Taguchi System. Processes 2021, 9, 1450.

- Hassan, M.S.; Chin, V.J.; Gopal, L. Accurate diagnosis of concurrent faults in photovoltaic systems using CONMI-based feature selection and Support vector machines. Energy Convers. Manag. 2025, 344, 120293.

- Wang, C.; Shi, J.; Yang, Y.; Wang, R. Two Methods With Bidirectional Similarity for Optimal Selections of Supplier Portfolio and Supplier Substitute Based on TOPSIS and IFS. IEEE Access 2024, 12, 1761–1773.

- Su, C.T.; Hsiao, Y.H. An Evaluation of the Robustness of MTS for Imbalanced Data. IEEE Trans. Knowl. Data Eng. 2007, 19, 1321–1332.

- Demir, S.; Sahin, E.K. Predicting occurrence of liquefaction-induced lateral spreading using gradient boosting algorithms integrated with particle swarm optimization: PSO-XGBoost, PSO-LightGBM, and PSO-CatBoost. Acta Geotech. 2023, 18, 3403–3419.

- Le Nguyen, K.; Shakouri, M.; Ho, L.S. Investigating the effectiveness of hybrid gradient boosting models and optimization algorithms for concrete strength prediction. Eng. Appl. Artif. Intel. 2025, 149, 110568.

- Sun, Y.; Gong, J.; Zhang, Y. A Multi-Classification Method Based on Optimized Binary Tree Mahalanobis-Taguchi System for Imbalanced Data. Appl. Sci. 2022, 12, 10179.

- Bentéjac, C.; Csörgő, A.; Martínez-Muñoz, G. A comparative analysis of gradient boosting algorithms. Artif. Intel. Rev. 2020, 54, 1937–1967.

- Kucukosmanoglu, M.; Garcia, J.O.; Brooks, J.; Bansal, K. Influence of cognitive networks and task performance on fMRI-based state classification using DNN models. Sci. Rep. 2025, 15, 23689.

- Qaedi, K.; Abdullah, M.; Yusof, K.A.; Hayakawa, M.; Zulhamidi, N.F.I. Multi-class classification automated machine learning for predicting earthquakes using global geomagnetic field data. Nat. Hazards 2025, 121, 14531–14544.

- Solorio-Fernández, S.; Carrasco-Ochoa, J.A.; Martínez-Trinidad, J.F. A systematic evaluation of filter Unsupervised Feature Selection methods. Expert. Syst. Appl. 2020, 162, 113745.

- Bais, F.; van der Neut, J. Adapting the Robust Effect Size Cliff’s Delta to Compare Behaviour Profiles. Surv. Res. Methods 2022, 16, 329–352.

- Renkas, K.; Niewiadomski, A. Hierarchical Fuzzy Logic Systems in Classification: An Application Example. In Proceedings of the 16th International Conference on Artificial Intelligence and Soft Computing (ICAISC), Zakopane, Poland, 11–15 June 2017; pp. 302–314.

- Alharbi, N.M.; Osman, A.H.; Mashat, A.A.; Alyamani, H.J. Letter Recognition Reinvented: A Dual Approach with MLP Neural Network and Anomaly Detection. Comput. Syst. Sci. Eng. 2024, 48, 175–198.

- Xu, C.; Wang, Y.; Bao, X.; Li, F. Vehicle Classification Using an Imbalanced Dataset Based on a Single Magnetic Sensor. Sensors 2018, 18, 1690.

- Ma, Y.F.; Liang, X.; Sheng, G.; Kwok, J.T.; Wang, M.L.; Li, G.S. Noniterative Sparse LS-SVM Based on Globally Representative Point Selection. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 788–798.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

| Dataset | Number of Samples | Number of Feature Variables | Number of Categories |

|---|---|---|---|

| Letter Recognition | 20,000 | 16 | 26 |

| White wine quality | 4898 | 11 | 7 |

| Glass | 214 | 9 | 6 |

| Page blocks | 5437 | 10 | 5 |

| Vehicle | 846 | 18 | 4 |

| Method | Hyper-Parameter | Grid Search Values |

|---|---|---|

| RF | Ntree | 50–500 (step size: 50) |

| RF | mtry | 2, 5, 10, 20 |

| SVM | C | 2⁻⁸–2⁸ (exponent step size: 1) |

| SVM | γ | 2⁻⁸–2⁸ (exponent step size: 1) |

| KNN | K | 1–20 (step size: 1) |

| BPNN | Hiddensize | √(n + m) + a, where n is the number of input nodes, m is the number of output nodes, and a = 1–10 (step size: 1) |

| AdaBoost | n_estimators | 50–500 (step size: 50) |

| XGBoost | learning_rate | 0.025, 0.05, 0.1, 0.2, 0.3 |

| XGBoost | max_depth | 1–6 (step size: 1) |

| LightGBM | learning_rate | 0.025, 0.05, 0.1, 0.2, 0.3 |

| LightGBM | max_depth | 2–10 (step size: 1) |

| CatBoost | learning_rate | 0.025, 0.05, 0.1, 0.2, 0.3 |

| CatBoost | max_depth | 1–10 (step size: 1) |

| CNN | n_filters | 16, 32, 64 |

| CNN | kernel_size | 3, 5, 7 |

| CNN | learning_rate | 0.025, 0.05, 0.1, 0.2, 0.3 |

| Dataset | Method | Accuracy | Macro-F1 | Macro-R | Macro-P | MCC | Macro-AUC | Kappa Coefficient |

|---|---|---|---|---|---|---|---|---|

| Letter Recognition | ATMTS | 0.976 ± 0.003 | 0.955 ± 0.005 | 0.945 ± 0.002 | 0.964 ± 0.004 | 0.945 ± 0.003 | 0.960 ± 0.005 | 0.944 ± 0.003 |

| | PBT-MTS | 0.937 ± 0.005 | 0.915 ± 0.004 | 0.906 ± 0.003 | 0.925 ± 0.005 | 0.905 ± 0.004 | 0.921 ± 0.005 | 0.904 ± 0.004 |

| | DAG-MTS | 0.950 ± 0.004 | 0.929 ± 0.003 | 0.919 ± 0.003 | 0.938 ± 0.003 | 0.919 ± 0.003 | 0.934 ± 0.004 | 0.918 ± 0.005 |

| | RF | 0.960 ± 0.004 | 0.939 ± 0.005 | 0.930 ± 0.005 | 0.948 ± 0.003 | 0.929 ± 0.004 | 0.945 ± 0.004 | 0.929 ± 0.003 |

| | SVM | 0.947 ± 0.004 | 0.926 ± 0.005 | 0.916 ± 0.005 | 0.936 ± 0.005 | 0.915 ± 0.004 | 0.931 ± 0.005 | 0.915 ± 0.002 |

| | KNN | 0.957 ± 0.004 | 0.936 ± 0.005 | 0.928 ± 0.004 | 0.944 ± 0.005 | 0.927 ± 0.003 | 0.941 ± 0.004 | 0.926 ± 0.003 |

| | BPNN | 0.944 ± 0.003 | 0.922 ± 0.005 | 0.913 ± 0.005 | 0.932 ± 0.003 | 0.912 ± 0.003 | 0.928 ± 0.005 | 0.912 ± 0.004 |

| | AdaBoost | 0.935 ± 0.005 | 0.914 ± 0.004 | 0.904 ± 0.002 | 0.924 ± 0.003 | 0.904 ± 0.003 | 0.920 ± 0.004 | 0.903 ± 0.005 |

| | XGBoost | 0.961 ± 0.005 | 0.940 ± 0.004 | 0.931 ± 0.004 | 0.950 ± 0.005 | 0.930 ± 0.003 | 0.946 ± 0.003 | 0.929 ± 0.004 |

| | LightGBM | 0.953 ± 0.004 | 0.932 ± 0.004 | 0.923 ± 0.003 | 0.942 ± 0.005 | 0.922 ± 0.003 | 0.938 ± 0.003 | 0.921 ± 0.004 |

| | CatBoost | 0.968 ± 0.004 | 0.947 ± 0.003 | 0.937 ± 0.003 | 0.957 ± 0.002 | 0.937 ± 0.003 | 0.952 ± 0.004 | 0.936 ± 0.003 |

| | CNN | 0.969 ± 0.003 | 0.948 ± 0.005 | 0.938 ± 0.005 | 0.957 ± 0.005 | 0.938 ± 0.003 | 0.953 ± 0.003 | 0.937 ± 0.003 |

| White wine quality | ATMTS | 0.682 ± 0.007 | 0.661 ± 0.005 | 0.652 ± 0.010 | 0.670 ± 0.011 | 0.651 ± 0.008 | 0.666 ± 0.005 | 0.650 ± 0.011 |

| | PBT-MTS | 0.653 ± 0.009 | 0.632 ± 0.005 | 0.623 ± 0.005 | 0.641 ± 0.012 | 0.622 ± 0.007 | 0.637 ± 0.005 | 0.621 ± 0.005 |

| | DAG-MTS | 0.661 ± 0.010 | 0.640 ± 0.006 | 0.631 ± 0.010 | 0.649 ± 0.007 | 0.630 ± 0.006 | 0.645 ± 0.009 | 0.629 ± 0.012 |

| | RF | 0.667 ± 0.010 | 0.646 ± 0.010 | 0.637 ± 0.010 | 0.655 ± 0.006 | 0.636 ± 0.007 | 0.651 ± 0.006 | 0.635 ± 0.004 |

| | SVM | 0.664 ± 0.011 | 0.643 ± 0.007 | 0.634 ± 0.009 | 0.652 ± 0.007 | 0.633 ± 0.005 | 0.648 ± 0.012 | 0.632 ± 0.005 |

| | KNN | 0.657 ± 0.011 | 0.636 ± 0.006 | 0.627 ± 0.007 | 0.645 ± 0.010 | 0.626 ± 0.006 | 0.641 ± 0.007 | 0.625 ± 0.008 |

| | BPNN | 0.672 ± 0.007 | 0.651 ± 0.009 | 0.642 ± 0.008 | 0.660 ± 0.009 | 0.641 ± 0.010 | 0.656 ± 0.009 | 0.640 ± 0.005 |

| | AdaBoost | 0.671 ± 0.006 | 0.650 ± 0.005 | 0.641 ± 0.007 | 0.659 ± 0.005 | 0.640 ± 0.009 | 0.655 ± 0.008 | 0.639 ± 0.012 |

| | XGBoost | 0.664 ± 0.008 | 0.643 ± 0.006 | 0.634 ± 0.007 | 0.652 ± 0.011 | 0.633 ± 0.007 | 0.648 ± 0.012 | 0.632 ± 0.009 |

| | LightGBM | 0.680 ± 0.009 | 0.659 ± 0.011 | 0.650 ± 0.010 | 0.668 ± 0.008 | 0.649 ± 0.011 | 0.664 ± 0.011 | 0.648 ± 0.005 |

| | CatBoost | 0.680 ± 0.010 | 0.659 ± 0.011 | 0.649 ± 0.008 | 0.668 ± 0.008 | 0.649 ± 0.006 | 0.664 ± 0.005 | 0.648 ± 0.006 |

| | CNN | 0.689 ± 0.006 | 0.668 ± 0.011 | 0.659 ± 0.005 | 0.677 ± 0.011 | 0.658 ± 0.004 | 0.673 ± 0.007 | 0.657 ± 0.006 |

| Glass | ATMTS | 0.752 ± 0.006 | 0.731 ± 0.005 | 0.722 ± 0.011 | 0.740 ± 0.005 | 0.721 ± 0.009 | 0.736 ± 0.011 | 0.720 ± 0.011 |

| | PBT-MTS | 0.731 ± 0.006 | 0.710 ± 0.007 | 0.701 ± 0.006 | 0.719 ± 0.009 | 0.700 ± 0.006 | 0.715 ± 0.006 | 0.699 ± 0.010 |

| | DAG-MTS | 0.719 ± 0.005 | 0.698 ± 0.006 | 0.689 ± 0.008 | 0.707 ± 0.006 | 0.688 ± 0.012 | 0.703 ± 0.008 | 0.687 ± 0.012 |

| | RF | 0.745 ± 0.011 | 0.724 ± 0.009 | 0.715 ± 0.005 | 0.733 ± 0.012 | 0.714 ± 0.012 | 0.729 ± 0.012 | 0.713 ± 0.006 |

| | SVM | 0.726 ± 0.011 | 0.705 ± 0.008 | 0.696 ± 0.011 | 0.714 ± 0.011 | 0.695 ± 0.007 | 0.710 ± 0.008 | 0.694 ± 0.009 |

| | KNN | 0.714 ± 0.008 | 0.693 ± 0.006 | 0.684 ± 0.005 | 0.702 ± 0.006 | 0.683 ± 0.007 | 0.698 ± 0.005 | 0.682 ± 0.006 |

| | BPNN | 0.725 ± 0.010 | 0.704 ± 0.007 | 0.695 ± 0.006 | 0.713 ± 0.006 | 0.694 ± 0.006 | 0.709 ± 0.012 | 0.693 ± 0.005 |

| | AdaBoost | 0.733 ± 0.010 | 0.712 ± 0.008 | 0.703 ± 0.008 | 0.721 ± 0.007 | 0.702 ± 0.007 | 0.717 ± 0.011 | 0.701 ± 0.008 |

| | XGBoost | 0.736 ± 0.007 | 0.715 ± 0.005 | 0.706 ± 0.009 | 0.724 ± 0.005 | 0.705 ± 0.010 | 0.720 ± 0.007 | 0.704 ± 0.008 |

| | LightGBM | 0.736 ± 0.005 | 0.715 ± 0.007 | 0.706 ± 0.011 | 0.724 ± 0.004 | 0.705 ± 0.010 | 0.720 ± 0.009 | 0.704 ± 0.006 |

| | CatBoost | 0.750 ± 0.009 | 0.729 ± 0.011 | 0.720 ± 0.004 | 0.738 ± 0.011 | 0.719 ± 0.005 | 0.734 ± 0.009 | 0.718 ± 0.007 |

| | CNN | 0.755 ± 0.011 | 0.734 ± 0.008 | 0.725 ± 0.011 | 0.743 ± 0.005 | 0.724 ± 0.004 | 0.739 ± 0.007 | 0.723 ± 0.009 |

| Page blocks | ATMTS | 0.968 ± 0.005 | 0.947 ± 0.003 | 0.937 ± 0.003 | 0.955 ± 0.002 | 0.937 ± 0.005 | 0.952 ± 0.002 | 0.936 ± 0.004 |

| | PBT-MTS | 0.945 ± 0.005 | 0.924 ± 0.004 | 0.914 ± 0.002 | 0.932 ± 0.003 | 0.914 ± 0.005 | 0.929 ± 0.003 | 0.913 ± 0.002 |

| | DAG-MTS | 0.932 ± 0.003 | 0.911 ± 0.003 | 0.901 ± 0.004 | 0.919 ± 0.003 | 0.901 ± 0.005 | 0.916 ± 0.005 | 0.900 ± 0.002 |

| | RF | 0.966 ± 0.002 | 0.945 ± 0.002 | 0.935 ± 0.004 | 0.953 ± 0.004 | 0.935 ± 0.002 | 0.950 ± 0.005 | 0.934 ± 0.003 |

| | SVM | 0.960 ± 0.005 | 0.939 ± 0.005 | 0.929 ± 0.005 | 0.947 ± 0.005 | 0.929 ± 0.004 | 0.944 ± 0.004 | 0.928 ± 0.005 |

| | KNN | 0.950 ± 0.004 | 0.929 ± 0.004 | 0.919 ± 0.003 | 0.937 ± 0.002 | 0.919 ± 0.003 | 0.934 ± 0.003 | 0.918 ± 0.002 |

| | BPNN | 0.952 ± 0.002 | 0.931 ± 0.003 | 0.921 ± 0.003 | 0.939 ± 0.005 | 0.921 ± 0.003 | 0.936 ± 0.003 | 0.920 ± 0.003 |

| | AdaBoost | 0.958 ± 0.003 | 0.937 ± 0.005 | 0.927 ± 0.005 | 0.945 ± 0.004 | 0.927 ± 0.003 | 0.942 ± 0.003 | 0.926 ± 0.004 |

| | XGBoost | 0.959 ± 0.003 | 0.938 ± 0.004 | 0.928 ± 0.004 | 0.946 ± 0.005 | 0.928 ± 0.005 | 0.943 ± 0.003 | 0.927 ± 0.005 |

| | LightGBM | 0.942 ± 0.004 | 0.921 ± 0.005 | 0.911 ± 0.004 | 0.929 ± 0.005 | 0.911 ± 0.004 | 0.926 ± 0.004 | 0.910 ± 0.004 |

| | CatBoost | 0.947 ± 0.005 | 0.926 ± 0.004 | 0.916 ± 0.003 | 0.934 ± 0.005 | 0.916 ± 0.005 | 0.931 ± 0.005 | 0.915 ± 0.003 |

| | CNN | 0.967 ± 0.002 | 0.946 ± 0.004 | 0.936 ± 0.004 | 0.954 ± 0.003 | 0.936 ± 0.004 | 0.951 ± 0.004 | 0.935 ± 0.002 |

| Vehicle | ATMTS | 0.860 ± 0.005 | 0.839 ± 0.009 | 0.829 ± 0.012 | 0.847 ± 0.006 | 0.828 ± 0.005 | 0.843 ± 0.010 | 0.827 ± 0.009 |

| | PBT-MTS | 0.829 ± 0.009 | 0.808 ± 0.008 | 0.798 ± 0.004 | 0.816 ± 0.005 | 0.797 ± 0.005 | 0.812 ± 0.007 | 0.796 ± 0.009 |

| | DAG-MTS | 0.830 ± 0.012 | 0.809 ± 0.010 | 0.799 ± 0.010 | 0.817 ± 0.012 | 0.798 ± 0.010 | 0.813 ± 0.009 | 0.797 ± 0.005 |

| | RF | 0.830 ± 0.004 | 0.809 ± 0.005 | 0.799 ± 0.005 | 0.817 ± 0.012 | 0.798 ± 0.008 | 0.813 ± 0.008 | 0.797 ± 0.009 |

| | SVM | 0.836 ± 0.009 | 0.815 ± 0.009 | 0.805 ± 0.009 | 0.823 ± 0.010 | 0.804 ± 0.006 | 0.819 ± 0.005 | 0.803 ± 0.008 |

| | KNN | 0.828 ± 0.006 | 0.807 ± 0.005 | 0.797 ± 0.010 | 0.815 ± 0.007 | 0.796 ± 0.010 | 0.811 ± 0.006 | 0.795 ± 0.011 |

| | BPNN | 0.830 ± 0.006 | 0.809 ± 0.007 | 0.799 ± 0.010 | 0.817 ± 0.005 | 0.798 ± 0.012 | 0.813 ± 0.005 | 0.797 ± 0.006 |

| | AdaBoost | 0.833 ± 0.011 | 0.812 ± 0.011 | 0.802 ± 0.010 | 0.820 ± 0.006 | 0.801 ± 0.008 | 0.816 ± 0.009 | 0.800 ± 0.011 |

| | XGBoost | 0.863 ± 0.010 | 0.842 ± 0.011 | 0.832 ± 0.009 | 0.850 ± 0.011 | 0.831 ± 0.005 | 0.846 ± 0.005 | 0.830 ± 0.008 |

| | LightGBM | 0.854 ± 0.011 | 0.833 ± 0.012 | 0.823 ± 0.011 | 0.841 ± 0.006 | 0.822 ± 0.006 | 0.837 ± 0.005 | 0.821 ± 0.009 |

| | CatBoost | 0.869 ± 0.004 | 0.848 ± 0.007 | 0.838 ± 0.010 | 0.856 ± 0.010 | 0.837 ± 0.010 | 0.852 ± 0.011 | 0.836 ± 0.012 |

| | CNN | 0.868 ± 0.011 | 0.847 ± 0.010 | 0.837 ± 0.010 | 0.855 ± 0.009 | 0.836 ± 0.007 | 0.851 ± 0.005 | 0.835 ± 0.006 |
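All of the label-based metrics in the results table can be derived from a multi-class confusion matrix. The sketch below makes assumptions about the exact definitions, since the section does not spell them out: Macro-F1 is taken as the mean of per-class F1 scores, MCC is computed in its multi-class form, and Cohen's kappa uses chance agreement from the row and column totals. Macro-AUC needs class scores rather than hard labels and is omitted.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes: int) -> np.ndarray:
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def summary_metrics(cm: np.ndarray) -> dict:
    cm = cm.astype(float)
    n = cm.sum()
    tp = np.diag(cm)
    pred_tot, true_tot = cm.sum(axis=0), cm.sum(axis=1)
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, pred_tot, out=zeros.copy(), where=pred_tot > 0)
    recall = np.divide(tp, true_tot, out=zeros.copy(), where=true_tot > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    p_o = tp.sum() / n                          # observed agreement = accuracy
    p_e = (pred_tot * true_tot).sum() / n ** 2  # chance agreement for kappa
    mcc_num = tp.sum() * n - (pred_tot * true_tot).sum()
    mcc_den = np.sqrt((n ** 2 - (pred_tot ** 2).sum()) * (n ** 2 - (true_tot ** 2).sum()))
    return {
        "accuracy": p_o,
        "macro_p": precision.mean(),
        "macro_r": recall.mean(),
        "macro_f1": f1.mean(),
        "kappa": (p_o - p_e) / (1 - p_e),
        "mcc": mcc_num / mcc_den,
    }
```

For two classes the MCC expression reduces to the familiar (TP·TN − FP·FN)/√((TP+FP)(TP+FN)(TN+FP)(TN+FN)).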

| Dataset | Friedman χ² | p-Value |

|---|---|---|

| Letter Recognition | 15.82 | <0.001 |

| White wine quality | 12.45 | 0.002 |

| Glass | 9.76 | 0.021 |

| Page blocks | 14.33 | <0.001 |

| Vehicle | 11.90 | 0.003 |
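The Friedman statistic in the table above can be sketched as follows, assuming that each row of `scores` is one block (for example one run or fold on a dataset) and each column one method, with rank 1 for the best score and tied scores sharing their average rank. The statistic is χ²_F = 12/(Nk(k + 1)) · ΣR_j² − 3N(k + 1), where R_j is the rank sum of method j. No tie correction is applied, and the p-value would come from a χ² distribution with k − 1 degrees of freedom (for example via `scipy.stats.chi2.sf`), which is not reproduced here.

```python
import numpy as np


def average_ranks(row: np.ndarray) -> np.ndarray:
    """Rank one block's scores: 1 = highest score, ties share their mean rank."""
    order = np.argsort(-row, kind="mergesort")
    ranks = np.empty(len(row))
    ranks[order] = np.arange(1, len(row) + 1)
    for value in np.unique(row):
        tied = row == value
        ranks[tied] = ranks[tied].mean()
    return ranks


def friedman_statistic(scores: np.ndarray) -> float:
    """Friedman chi-square for an (N blocks x k methods) score matrix, no tie correction."""
    n, k = scores.shape
    ranks = np.apply_along_axis(average_ranks, 1, scores)
    rank_sums = ranks.sum(axis=0)
    return float(12.0 / (n * k * (k + 1)) * np.sum(rank_sums ** 2) - 3.0 * n * (k + 1))
```

When every block ranks the methods identically the statistic reaches its maximum N(k − 1), which is a quick sanity check for an implementation.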

| Dataset | Methods | p-Value | Cliff’s Delta |

|---|---|---|---|

| Letter Recognition | ATMTS vs. PBT-MTS | <0.001 | 0.548 (Large) |

| | ATMTS vs. DAG-MTS | 0.003 | 0.412 (Medium) |

| | ATMTS vs. AdaBoost | <0.001 | 0.521 (Large) |

| | ATMTS vs. SVM | 0.001 | 0.463 (Large) |

| White wine quality | ATMTS vs. PBT-MTS | 0.008 | 0.372 (Medium) |

| | ATMTS vs. KNN | 0.012 | 0.341 (Small) |

| Glass | ATMTS vs. DAG-MTS | 0.017 | 0.328 (Small) |

| | ATMTS vs. SVM | 0.025 | 0.301 (Small) |

| Page blocks | ATMTS vs. PBT-MTS | <0.001 | 0.592 (Large) |

| | ATMTS vs. DAG-MTS | 0.002 | 0.485 (Large) |

| | ATMTS vs. LightGBM | 0.006 | 0.401 (Medium) |

| Vehicle | ATMTS vs. PBT-MTS | 0.005 | 0.439 (Large) |

| | ATMTS vs. DAG-MTS | 0.021 | 0.308 (Small) |

| | ATMTS vs. RF | 0.019 | 0.315 (Small) |
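Cliff's delta in the table above measures how often one method's scores exceed the other's: δ = (#{x_i > y_j} − #{x_i < y_j})/(n·m) over all n·m pairs. The sketch below computes it with NumPy and maps |δ| to a magnitude label using the commonly cited cut-offs 0.147, 0.33, and 0.474 of Romano et al. Those cut-offs are an assumption, since this section does not state which ones produced the labels in the table.

```python
import numpy as np


def cliffs_delta(x, y) -> float:
    """delta = (#{x_i > y_j} - #{x_i < y_j}) / (n * m) over all pairs."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return float(np.sign(x[:, None] - y[None, :]).sum() / (x.size * y.size))


def magnitude(delta: float, cutoffs=(0.147, 0.33, 0.474)) -> str:
    """Label |delta| using the assumed Romano et al. cut-offs."""
    d = abs(delta)
    if d < cutoffs[0]:
        return "Negligible"
    if d < cutoffs[1]:
        return "Small"
    if d < cutoffs[2]:
        return "Medium"
    return "Large"
```

Passing the per-run scores of ATMTS as `x` and those of a competitor as `y` gives a positive δ when ATMTS tends to score higher; swapping the arguments flips the sign.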

| | x1 | x2 | x3 | x4 | x5 | x6 | x7 | x8 | x9 | x10 | x11 | x12 | x13 | x14 | x15 | x16 | x17 | x18 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| MTS1 | 1 | 0 | 1 | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 1 |

| MTS2 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 1 |

| MTS3 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | 1 |

| | x1 | x2 | x3 | x4 | x5 | x6 | x7 | x8 | x9 | x10 | x11 | x12 | x13 | x14 | x15 | x16 | x17 | x18 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| MTS1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 1 | 1 | 1 |

| MTS2 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |

| MTS3 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |

| | x1 | x2 | x3 | x4 | x5 | x6 | x7 | x8 | x9 | x10 | x11 | x12 | x13 | x14 | x15 | x16 | x17 | x18 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| BUS vs. VAN | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 1 |

| BUS vs. OPEL | 0 | 1 | 0 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 0 |

| SAAB vs. VAN | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0 |

| BUS vs. SAAB | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 1 | 0 |

| SAAB vs. OPEL | 1 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 0 |

| OPEL vs. VAN | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, Y.; Chen, Y.; Xu, Y. Adaptive Tree-Structured MTS with Multi-Class Mahalanobis Space for High-Performance Multi-Class Classification. Mathematics 2025, 13, 3233. https://doi.org/10.3390/math13193233

Sun Y, Chen Y, Xu Y. Adaptive Tree-Structured MTS with Multi-Class Mahalanobis Space for High-Performance Multi-Class Classification. Mathematics. 2025; 13(19):3233. https://doi.org/10.3390/math13193233

Chicago/Turabian Style: Sun, Yefang, Yvlei Chen, and Yang Xu. 2025. "Adaptive Tree-Structured MTS with Multi-Class Mahalanobis Space for High-Performance Multi-Class Classification" Mathematics 13, no. 19: 3233. https://doi.org/10.3390/math13193233

APA Style: Sun, Y., Chen, Y., & Xu, Y. (2025). Adaptive Tree-Structured MTS with Multi-Class Mahalanobis Space for High-Performance Multi-Class Classification. Mathematics, 13(19), 3233. https://doi.org/10.3390/math13193233