1. Introduction

Globally, disasters are occurring with increasing frequency and severity, inflicting extensive damage on human society and the ecological environment [1]. The inherent randomness, dynamics, and destructiveness of disasters often create highly complex affected areas, which pose significant challenges to rescue operations and amplify the danger they involve. Disaster events generally unfold in four stages: pre-disaster warning, disaster assessment, disaster response, and post-disaster recovery [2]. Secondary hazards, such as building collapses following typhoons or debris flows after heavy rains, can exacerbate casualties and losses while further complicating rescue efforts. Thus, timely and accurate access to critical information is essential for effective emergency response, personnel rescue and post-disaster reconstruction.

Given this need for timely disaster response, object detection technology offers a promising solution. Object detection, a core task in computer vision, has substantial potential in disaster scenarios. After earthquakes, detection algorithms can help rescuers rapidly locate trapped victims; in forest fires, they can identify ignition points and track fire spread to support firefighting strategies; and in floods, they can detect submerged regions and stranded targets to guide resource allocation. Intelligent object detection can therefore improve both the speed and accuracy of disaster response, helping to reduce casualties and property damage. Because such complex environments demand rapid and precise identification, developing object detection methods specialized for disaster scenarios has become a pressing research priority.

Despite these promising applications, several critical challenges persist in disaster-oriented object detection. One key issue is balancing real-time performance and detection accuracy, particularly on UAV platforms with limited computational resources. For example, Dong et al. developed a lightweight YOLOv3-MobileNet human detection model using sensitivity-analysis-based pruning [3,4], which reduced the number of model parameters but compromised detection accuracy. Romero et al. addressed this trade-off with a knowledge distillation framework [5] that preserves accuracy while lowering complexity, at the expense of a more complex training process. Small-object detection is another major challenge. Ma et al. proposed a method for detecting small trapped victims in aerial imagery through static–dynamic weighted bounding box fusion [6], which combines YOLOv4 features [7] with LiteFlowNet3 motion cues [8] to reduce missed detections in high-altitude scenarios, albeit with increased computational demand. Focusing on small-scale and severely occluded objects in complex disaster environments, Hao et al. proposed a YOLOv5-based [9] approach with a hybrid-domain attention mechanism and feature reuse [10], which improves detection but struggles under low-light conditions. Liu et al. enhanced small-object accuracy using a feature pyramid network with multi-layer feature fusion and adaptive feature selection [11], but their method consumes substantial memory.

The integration of attention mechanisms, particularly Transformer-based models, has also shown promise. Y. Chen et al. [12] introduced TFSANet, which embeds a Transformer-based fusion model and a dynamic mechanism based on selective kernel convolution. This design enables TFSANet to handle low-visibility conditions (e.g., smoke and torrential rain) and target scale variations in post-disaster environments, but it tends to miss densely distributed targets. Concurrently, Z. Chen et al. [13] optimized the YOLO network by incorporating the self-adaptive characteristic aggregation fusion (SACAF) attention module and a fusion strategy. The resulting model preserves features comprehensively and generalizes well; however, its detection speed is relatively slow, and it is prone to missing extremely small targets (<

pixels).

Multi-modal fusion and ensemble methods have also advanced the field. The UGEN framework [14] combines GAN-assisted semantic segmentation with multi-detector ensembles, improving accuracy at the cost of high system complexity. Hou et al. [15] introduced the self-supervised difference contrast learning framework (Self-DCF) for label-free change detection in remote-sensing imagery. By leveraging self-supervised learning, it mitigates the high annotation cost of building datasets and exhibits strong robustness; however, it incurs high computational cost, and its image translation process depends on noise. J. Zhu et al. [16] explored cross-modal knowledge transfer via domain adaptation, improving generalization across sensor modalities. Other works focus on domain-specific optimizations. For example, Q. Zhu et al.’s YOLOv7-CSAW [17] improved small-object detection in marine environments using C2f modules [18] and parameter-free attention [19], but with limited generalization. Li et al. [20] proposed a Two-tier Submodel Partition Framework (TSPF) based on a two-layer federated learning architecture for forest fire detection, although it lacks an adaptive parameter-tuning mechanism. Chen et al. [21] created a weather-adaptive framework for rain, snow and fog, improving robustness through adaptive modules and data augmentation. Data augmentation has also been explored in its own right: Zhu et al. [22] used GANs to generate synthetic disaster images that expand training datasets, though their realism remains imperfect, and Gatys et al. [23] applied style transfer for cross-scenario domain adaptation, improving adaptability to new environments.

Despite these advances, several critical gaps persist. First, in low-resource rescue environments, achieving efficient inference without compromising accuracy remains difficult, particularly for UAV deployment. Second, complex backgrounds—debris, smoke and lighting variations—complicate feature extraction, with conventional downsampling prone to losing essential details, especially for small targets like trapped victims. Third, multiscale target distributions exacerbate parameter redundancy in existing detection heads, limiting adaptability under lightweight constraints.

These limitations point to three fundamental challenges: computational efficiency under resource constraints, robustness against complex background interference, and adaptability across target scale distributions. To address them, we present LightSeek-YOLO, a lightweight architecture based on YOLOv11 [24] for real-time victim detection in disaster scenarios, which incorporates three key innovations:

Efficiency under limited resources: HGNetV2 [25] is used as the backbone; its HGStem and HGBlock modules, built on depthwise separable convolution, reduce computational cost while preserving feature extraction capability.

Robustness to complex backgrounds: the Seek-DS dual-branch downsampling module combines MaxPool-based extrema preservation with progressive convolution-based spatial pattern extraction, reducing information loss in cluttered or smoky environments.

Scalable, low-redundancy detection: the lightweight multiscale detection head Seek-DH processes features from all scales through a unified pipeline, enhancing scale adaptability while reducing parameter redundancy.

Experimental results show that LightSeek-YOLO achieves a favorable balance between accuracy and efficiency. Evaluated on the C2A disaster dataset with the standard COCO metrics, it delivers a competitive mAP@[0.5:0.95] of 0.473, matching YOLOv8n (0.473) and approaching its baseline, YOLOv11n (0.481). This performance is attained with only 1.86M parameters and 4.9 GFLOPs, reductions of 27.8% and 22.2%, respectively, compared with YOLOv11n. The model also reaches an inference speed of 571.72 FPS on a GPU, indicating its capability for real-time processing. In addition, it achieves an AP@small of 0.478, confirming its efficacy in detecting small targets amid challenging conditions such as debris and smoke. These efficiency gains suggest potential for deployment on edge platforms, although comprehensive validation on edge devices in disaster scenarios is still required.

The remainder of this paper is organized as follows. Section 2 briefly reviews related work. Section 3 introduces the overall architecture of the proposed method. Section 4 presents and discusses the experimental results. Finally, Section 7 summarizes the conclusions and outlines future prospects.

3. Proposed Method

To address the limitations of existing methods discussed in

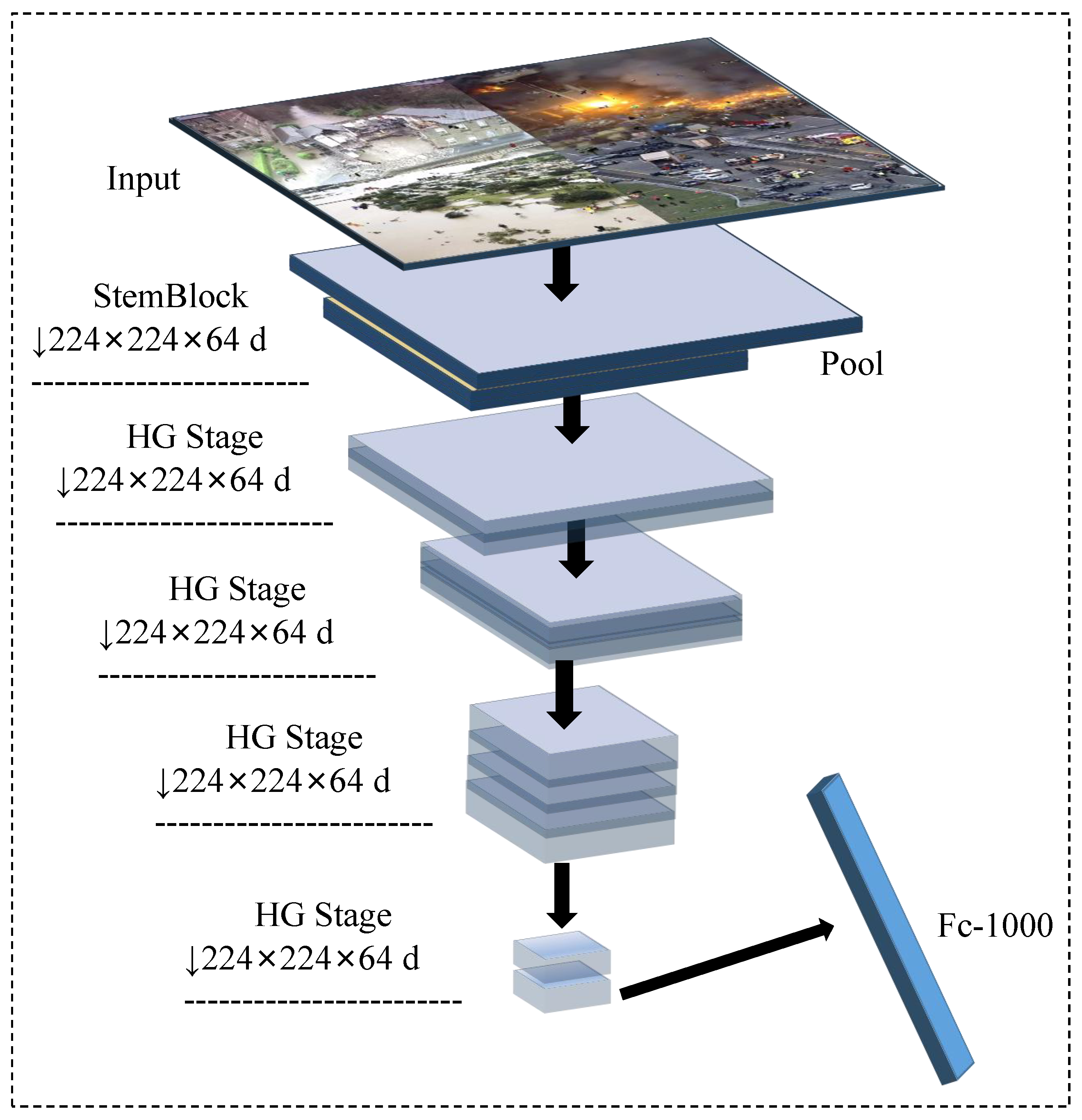

Section 2, we propose LightSeek-YOLO, a lightweight architecture built upon the YOLOv11 framework. The overall architecture is shown in

Figure 1. The method tackles core challenges in disaster scenarios through three key modules: (1) the HGNetV2 backbone network, which is designed to overcome limited computational resources; (2) the Seek-DS downsampling module, which mitigates complex background interference; and (3) the Seek-DH detection head, which is optimized for object detection. Each module is tailored to the characteristics of disaster scenarios, achieving an effective balance between accuracy and efficiency. The following subsections detail the design principles and implementation of each module.

3.1. HGNetV2

To meet computational efficiency requirements while maintaining feature extraction capability, we select HGNetV2 as our backbone network. When detecting trapped victims in disaster scenarios, targets typically exhibit scale variations, severe occlusions and complex backgrounds, which place high demands on the feature extraction capability of the backbone. Although traditional ResNet [35] backbones mitigate the vanishing gradient problem through residual connections, their hierarchical feature fusion is relatively inefficient, and their computational complexity rises sharply as network depth increases. In particular, when processing high-resolution disaster scene images, ResNet struggles to capture features effectively with a single convolution operation, which degrades small-object detection performance.

Inspired by RT-DETR [

25], we adopt the lightweight network module HGNetV2 as the backbone for feature extraction (

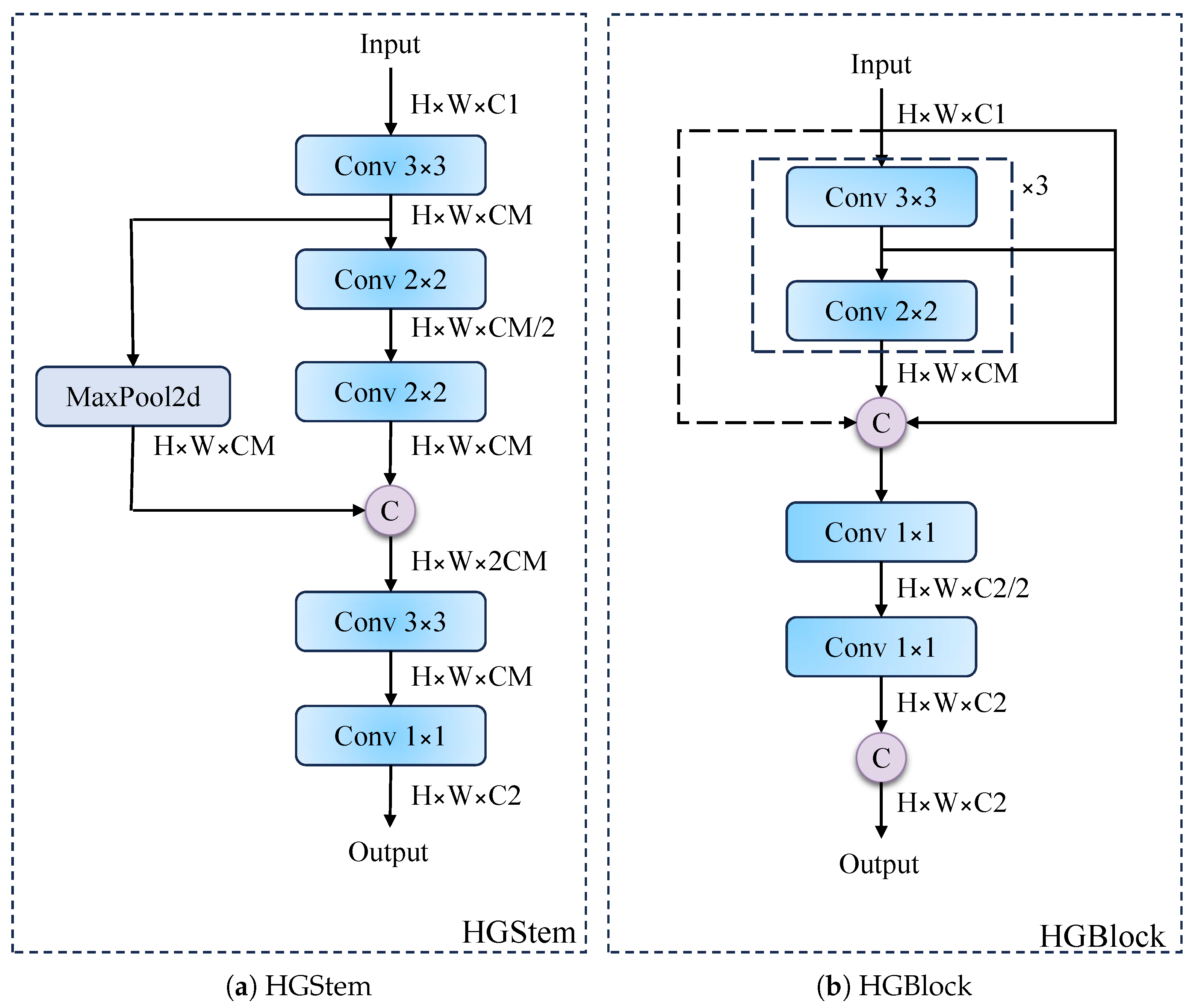

Figure 2). Based on the single-stage object detection framework of the YOLO series, as shown in

Figure 2, our model efficiently extracts features and performs downsampling by using the HGStem block in the initial stage. Specifically, compared to the input layer of the original network, the HGStem block improves the computational efficiency of the network by reducing the dimension of the initial feature map and eliminating redundant parameters. The specific workflow is illustrated in

Figure 3a. As for the HGBlock module, it plays a crucial role in the HGNetV2 backbone, with its overall structure shown in

Figure 3b. Specifically, in the HGNetV2 architecture, we stack HGBlock modules to ensure that the features extracted from the network can fuse information from different scales and depths. Therefore, the design of HGBlock optimizes the network’s processing capabilities, enabling it to more effectively handle scale changes in images. This is particularly useful for identifying trapped victims of different sizes in disaster scenarios.

By using depthwise separable convolutions in the HGStem and HGBlock modules, the HGNetV2 backbone substantially reduces computational complexity while preserving feature extraction capability. Compared with the traditional ResNet architecture, this design offers a better balance between efficiency and accuracy for trapped-victim detection in disaster scenarios, providing a technical foundation for real-time rescue applications.
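To make the efficiency argument concrete, the sketch below compares the weight count of a standard k × k convolution with that of a depthwise separable one (a k × k depthwise convolution followed by a 1 × 1 pointwise convolution). It is a minimal illustration under stated assumptions: only weights are counted (biases and normalization layers are ignored), and the channel widths are illustrative values, not HGNetV2's actual configuration.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Illustrative channel widths; not taken from the HGNetV2 configuration.
    for c in (64, 128, 256):
        std = standard_conv_params(c, c, 3)
        sep = depthwise_separable_params(c, c, 3)
        print(f"C={c}: standard={std}, separable={sep}, ratio={sep / std:.3f}")
```

The ratio equals 1/C_out + 1/k², so with 3 × 3 kernels the saving approaches a factor of 9 as the channel count grows.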

3.2. Seek-DS

To better preserve information during feature fusion, we introduce Seek-DS in the neck. In the neck of traditional object detection networks, standard convolution modules (Conv) typically downsample through a single convolution operation (e.g., a convolution kernel with a stride of 2). In disaster scenarios, however, complex background interference (such as debris and smoke obstruction) and significant variations in target scale can cause such single-path downsampling to lose critical detail, impairing the accurate localization of small targets such as trapped victims. To reduce this feature loss, we propose Seek-DS (Seek-DownSampling), a dual-branch heterogeneous collaborative downsampling module. It combines a max-pooling branch that preserves extrema with a progressive convolution branch, retaining as much critical information as possible while reducing the resolution of feature maps.

As shown in Figure 4, the Seek-DS module adopts a dual-branch parallel architecture in which two complementary paths downsample the features. The first branch is the MaxPool extreme-value retention path, which keeps the maximum activation within each local region through max pooling and applies a 1×1 convolution beforehand as learnable feature preprocessing. The second branch is the progressive convolution path, which uses a cascaded 1×1–3×3 convolution structure to extract features progressively, first along the channel dimension and then along the spatial dimensions. The outputs of the two paths are then fused to produce the downsampled feature map.

The traditional MaxPool operation divides the feature map into local regions according to a predefined pooling window size k × k and stride s (the regions do not overlap when s = k), with each window containing k² elements. The maximum operation is then applied to the elements within each window:

$$Y(i, j, c) = \max_{0 \le p,\, q \le k-1} X\big(i \cdot s + p,\; j \cdot s + q,\; c\big)$$

Here, X and Y are the input and output feature maps, i and j are the spatial coordinates of the output feature map, c is the channel index, s is the stride, which determines the starting position of each pooling window, and p and q are the relative coordinates within the k × k pooling window, each ranging from 0 to k − 1.

After pooling, the size of the output feature map is given by

$$H_{out} = \left\lfloor \frac{H + 2p - k}{s} \right\rfloor + 1, \qquad W_{out} = \left\lfloor \frac{W + 2p - k}{s} \right\rfloor + 1$$

where H and W are the height and width of the input feature map, k is the kernel (window) size, s is the stride, and p is the amount of padding (nonzero when the “same” padding mode is adopted); this padding p is distinct from the window offset p used above.
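The two formulas above can be checked with a small pure-Python sketch. It applies the pooling equation to a single channel (the channel index c is dropped because pooling acts on each channel independently), uses no padding, and is meant only to illustrate the formulas, not to replace a framework's pooling operator. The function names are hypothetical.

```python
def pool_output_size(size: int, k: int, s: int, p: int = 0) -> int:
    """Output length along one axis: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * p - k) // s + 1


def max_pool2d(x, k: int, s: int):
    """Max pooling of a single-channel 2-D list, without padding.

    Implements Y[i][j] = max over 0 <= p, q <= k-1 of x[i*s + p][j*s + q].
    """
    h_out = pool_output_size(len(x), k, s)
    w_out = pool_output_size(len(x[0]), k, s)
    return [
        [
            max(x[i * s + p][j * s + q] for p in range(k) for q in range(k))
            for j in range(w_out)
        ]
        for i in range(h_out)
    ]


if __name__ == "__main__":
    feature = [
        [1, 3, 2, 0],
        [4, 2, 1, 5],
        [0, 1, 7, 2],
        [6, 2, 3, 1],
    ]
    print(max_pool2d(feature, k=2, s=2))          # [[4, 5], [6, 7]]
    print(pool_output_size(640, k=3, s=2, p=1))   # 320
```

With k = s = 2 the windows tile the input without overlap, so a 4 × 4 map shrinks to 2 × 2 and each output keeps only the strongest activation of its window.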

To compensate for the detail loss of the MaxPool operation during downsampling, the first branch of Seek-DS cascades a 1×1 convolution with the pooling operation, establishing a multi-level feature compensation mechanism. Specifically, the 1×1 convolution is applied before pooling: its learnable weight matrix performs channel-wise feature reconstruction on the input features. The preprocessed features are then fed into the MaxPool operation, which applies the maximum operation defined above to each pooling window.

The second branch of Seek-DS adopts a progressive spatial aggregation strategy, achieving multi-level nonlinear feature aggregation through a cascaded 1×1–3×3 convolution structure. The input features are first processed by a 1×1 convolution, which performs a linear transformation of the feature map along the channel dimension. Its weight matrix has a compression ratio of 1/2, reducing the number of channels to half of the original value. Batch Normalization (BN) [36] and the SiLU (Sigmoid Linear Unit) [37] activation function are then applied to normalize the features and introduce nonlinearity.
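Because a 1×1 convolution acts independently at each spatial position, it reduces to a matrix-vector product over channels. The minimal sketch below applies such a projection with a compression ratio of 1/2 to the channel vector of one pixel, followed by inference-mode Batch Normalization and SiLU (x · sigmoid(x)). The weights and BN statistics are arbitrary illustrative values, not learned parameters of LightSeek-YOLO.

```python
import math


def conv1x1(pixel, weight):
    """1x1 convolution at one spatial position: out[o] = sum_c W[o][c] * pixel[c]."""
    return [sum(w_oc * x_c for w_oc, x_c in zip(row, pixel)) for row in weight]


def batch_norm_inference(x, mean, var, gamma, beta, eps=1e-5):
    """Per-channel BN using running statistics (inference mode)."""
    return [
        g * (v - m) / math.sqrt(s2 + eps) + b
        for v, m, s2, g, b in zip(x, mean, var, gamma, beta)
    ]


def silu(v: float) -> float:
    """SiLU activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))


if __name__ == "__main__":
    c_in = 4
    c_mid = c_in // 2                      # compression ratio 1/2
    pixel = [1.0, -2.0, 0.5, 3.0]          # channel vector at one position (i, j)
    weight = [[0.5, 0.0, 1.0, 0.0],        # shape (c_mid, c_in); illustrative values
              [0.0, 1.0, 0.0, -0.5]]
    y = conv1x1(pixel, weight)             # 4 channels -> 2 channels
    y = batch_norm_inference(y, mean=[0.0] * c_mid, var=[1.0] * c_mid,
                             gamma=[1.0] * c_mid, beta=[0.0] * c_mid)
    print([round(silu(v), 4) for v in y])
```

The same weight matrix is reused at every spatial position, which is why the 1×1 stage mixes channels but cannot relate neighboring pixels.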

Because a 1×1 convolution lacks spatial interaction, a 3×3 convolution is cascaded after it to form a composite feature extraction path. The 3×3 convolution plays three key roles: first, its 3×3 local receptive field establishes feature correlations along the spatial dimensions, allowing the kernel to capture spatial patterns in local regions; second, its stride of 2 reduces the feature map size in a regular manner; and third, its learned weights adaptively adjust the feature response intensity at different spatial positions.

The second branch serves three purposes within the module. First, its cascaded 1×1–3×3 structure extracts features progressively from the channel dimension to the spatial dimensions. Second, it complements the first branch: the MaxPool branch focuses on retaining feature extrema, whereas the convolution branch excels at learning spatial patterns. Third, it provides a diverse feature representation for subsequent fusion. The outputs of the two branches are fused by concatenation, yielding a representation that combines detail retention with semantic abstraction.
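The following sketch traces tensor shapes through the two Seek-DS branches and compares its weight count with a single 3×3 stride-2 convolution, the conventional downsampling it replaces. It rests on assumptions that the text does not fix: the MaxPool window is 2×2 with stride 2, each branch emits half of the output channels, the first-branch 1×1 convolution maps directly to that half, and BN and bias parameters are ignored. The function names are hypothetical.

```python
def conv_out(size: int, k: int, s: int, p: int) -> int:
    """Spatial size after a convolution or pooling layer."""
    return (size + 2 * p - k) // s + 1


def seek_ds_sketch(c_in: int, c_out: int, h: int, w: int):
    """Output shape and weight count of a hypothetical Seek-DS layout.

    Assumptions: 2x2/stride-2 MaxPool, each branch emits c_out // 2 channels,
    biases and BN parameters ignored.
    """
    half = c_out // 2
    # Branch 1: 1x1 conv (channel reconstruction), then 2x2 MaxPool with stride 2.
    b1_params = c_in * half
    b1_hw = (conv_out(h, 2, 2, 0), conv_out(w, 2, 2, 0))
    # Branch 2: 1x1 conv halving the channels, then 3x3 conv with stride 2, padding 1.
    c_mid = c_in // 2
    b2_params = c_in * c_mid + 9 * c_mid * half
    b2_hw = (conv_out(h, 3, 2, 1), conv_out(w, 3, 2, 1))
    if b1_hw != b2_hw:
        raise ValueError("branch outputs differ spatially; use even input sizes")
    # Channel-wise concatenation of the two branch outputs.
    return (2 * half, *b1_hw), b1_params + b2_params


def plain_conv_downsample_params(c_in: int, c_out: int) -> int:
    """Weights of a single 3x3, stride-2 convolution."""
    return 9 * c_in * c_out


if __name__ == "__main__":
    shape, params = seek_ds_sketch(c_in=128, c_out=128, h=80, w=80)
    baseline = plain_conv_downsample_params(128, 128)
    print(shape, params, baseline, f"ratio={params / baseline:.3f}")
```

Under these assumptions the dual-branch layout uses roughly a third of the weights of the plain 3×3 stride-2 convolution; the real ratio depends on channel widths that the text does not specify. Feature maps in YOLO-style necks have even sizes (e.g., 80, 40, 20 for a 640-pixel input), so the two branches align spatially.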

In summary, Seek-DS preserves key feature extrema through the MaxPool branch and captures spatial patterns through the progressive convolution branch, helping to counter the complex background interference encountered in disaster scenarios. The design aims to improve computational efficiency while reducing information loss, which makes it well suited to detecting the occluded and deformed targets common in disaster scenes.

3.3. Seek-DH

To reduce parameter redundancy while maintaining detection capability, we design the Seek-DH detection head. Traditional YOLO detection heads adopt an independent-branch architecture in which each scale requires its own convolutional network. Although this design enables scale-specific feature processing, it introduces substantial parameter redundancy. To overcome this limitation, we propose Seek-DH, a shared detection head that processes features of all scales through a unified pipeline.

As illustrated in Figure 5, Seek-DH uses a shared feature processing strategy to build a unified detection framework that handles features across scales simultaneously. In disaster scenarios, personnel targets span a wide range of scales: distant individuals appear as small targets, whereas nearby individuals appear as large ones. The original YOLO detection head, by contrast, requires separate branches for the P3, P4 and P5 feature maps, which not only increases network complexity but also risks fragmenting information across scales.

To enable unified processing, Seek-DH first preprocesses three input features, C1, C2 and C3, corresponding to the P3, P4 and P5 feature maps. Each is passed through an independent 1×1 Conv_GN module (convolution with Group Normalization) that projects features of different channel dimensions into a unified hidden space, so that subsequent operations are performed in a consistent feature space without dimensional mismatches. The 1×1 convolution performs this projection at minimal computational cost. After dimensional unification, Seek-DH applies a shared feature processing module composed of two consecutive 3×3 Conv_GN modules (Figure 5). The first employs depthwise convolution to capture spatial information while limiting parameter growth, and the second further integrates and refines the feature representations. Crucially, all scales are processed with identical convolutional parameters, allowing the model to learn universal cross-scale representations and removing the redundancy of scale-specific extractors. Finally, Seek-DH adopts a decoupled output strategy: the processed features are fed into separate Classification (Cls) and Regression (Reg) branches. The classification branch predicts class confidence scores through dedicated convolutional layers, while the regression branch uses the Distribution Focal Loss (DFL) formulation to predict bounding boxes with improved localization accuracy. This decoupling keeps classification and regression independent, allowing each task to be optimized separately.
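The parameter saving from sharing can be estimated by simple counting. The sketch below isolates the effect of sharing: every scale keeps its own 1×1 projection, and the two-layer feature stack (a depthwise 3×3 layer followed by a standard 3×3 layer, as described above) is either replicated per scale or shared. The input widths, the hidden width and the 3×3 kernel size are illustrative assumptions; biases and GroupNorm affine parameters are ignored.

```python
def projection_params(in_channels, hidden):
    """Per-scale 1x1 projections to a common hidden width (never shared)."""
    return sum(c * hidden for c in in_channels)


def feature_stack_params(hidden):
    """Two 3x3 Conv_GN layers: a depthwise one followed by a standard one."""
    depthwise = 9 * hidden            # one 3x3 filter per channel
    standard = 9 * hidden * hidden    # full 3x3 convolution
    return depthwise + standard


def head_params(in_channels, hidden, shared):
    """Weights of projections plus feature stack(s), with or without sharing."""
    n_stacks = 1 if shared else len(in_channels)
    return projection_params(in_channels, hidden) + n_stacks * feature_stack_params(hidden)


if __name__ == "__main__":
    widths = [64, 128, 256]   # illustrative P3/P4/P5 channel widths
    hidden = 64               # illustrative shared hidden width
    independent = head_params(widths, hidden, shared=False)
    shared = head_params(widths, hidden, shared=True)
    print(independent, shared, f"saving={1 - shared / independent:.1%}")
```

Because the feature stack dominates the count, sharing it across three scales removes roughly two of its three copies; the exact saving depends on the real channel widths.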

Because features at different scales contribute unequally to detection, Seek-DH also incorporates a scale-adaptive adjustment mechanism. As shown in Figure 5, the input features C1, C2 and C3 are first unified in dimension by independent 1×1 Conv_GN modules and then processed jointly by the shared pair of 3×3 Conv_GN modules. The outputs pass through the decoupled classification and regression branches to generate preliminary predictions, which are finally refined by per-scale adjustment modules, Scale1, Scale2 and Scale3 (with scaling factors initialized to 1.0), that adaptively weight the bounding box predictions of each scale. Compared with traditional independent-branch designs, this combination of unified feature processing, decoupled outputs and adaptive scaling uses parameters efficiently while maintaining balanced detection performance across scales, making Seek-DH well suited to personnel detection in disaster environments.
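The per-scale adjustment can be sketched as a scalar, initialized to 1.0, that multiplies the regression output of one pyramid level. In the pure-Python illustration below, the class name Scale, the "learned" value 1.2 and the toy regression values are hypothetical; in a deep-learning framework the scalar would be a trainable parameter. The output shapes assume a 640 × 640 input, strides 8/16/32 for P3/P4/P5, a single person class, and the DFL setting reg_max = 16 used by Ultralytics YOLOv8/YOLO11 heads (4 × 16 regression channels per location).

```python
class Scale:
    """Per-level multiplier, initialised to 1.0 (trainable in a real framework)."""

    def __init__(self, init: float = 1.0):
        self.value = init

    def __call__(self, reg_outputs):
        return [self.value * r for r in reg_outputs]


def level_shapes(img_size=640, strides=(8, 16, 32), num_classes=1, reg_max=16):
    """Per-level (cls_shape, reg_shape) of a decoupled head with DFL outputs."""
    shapes = []
    for s in strides:
        h = w = img_size // s
        shapes.append(((num_classes, h, w), (4 * reg_max, h, w)))
    return shapes


if __name__ == "__main__":
    scales = [Scale() for _ in range(3)]   # Scale1, Scale2, Scale3 for P3, P4, P5
    scales[2].value = 1.2                  # hypothetical learned value for P5
    toy_reg = [0.5, 1.0, 2.0]              # toy regression outputs at one location
    print([scale(toy_reg) for scale in scales])
    shapes = level_shapes()
    print(shapes, "total locations:", sum(c[1] * c[2] for c, _ in shapes))
```

Since each scale factor starts at 1.0, the head initially behaves as if no adjustment were present, and training can then rescale the regression output of each level independently even though the convolutional weights are shared.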

4. Experiments

We developed a comprehensive experimental validation framework to assess the effectiveness of LightSeek-YOLO in detecting humans during disaster scenarios. The framework encompasses comparative evaluations against existing methods, verification of individual component contributions, and ablation studies.

4.1. Dataset

For evaluation, we employed the C2A dataset [38], a synthetic dataset specifically designed for unmanned aerial vehicle (UAV) search-and-rescue (SAR) operations in disaster environments. This dataset integrates realistic disaster-scene backgrounds with diverse human postures and is supplemented by a COCO subset to improve model generalization.

The C2A dataset comprises two primary components:

Disaster backgrounds: 1345 images from the AIDER dataset [39] covering four disaster types (fire/smoke, flood, building collapse/ruins and traffic accidents), providing realistic environmental contexts.

Human postures: 29,732 human instances extracted from 26,675 images in the LSP/MPII-MPHB dataset [40,41], annotated with five postures (bending, kneeling, lying, sitting, upright) to simulate trapped or injured individuals.

The dataset construction pipeline proceeded as follows: human figures were segmented from source images using the U2-Net model [42] for background removal. Instances with a non-zero pixel ratio

were retained, and the body regions were cropped. The cropped figures were randomly scaled and composited onto AIDER backgrounds, generating 10,215 synthetic images with bounding box and posture annotations. Following this method, the final dataset contains over 360,000 instances, averaging 20–40 targets per image, with some containing up to 100 targets—effectively simulating high-density scenarios. In terms of scale distribution, 47% of instances are tiny targets (<10 pixels), while 52% range from 10–50 pixels, posing significant challenges for small-object detection. Image resolutions range from

to

pixels. Combined with five posture types across four disaster contexts, the dataset supports comprehensive fine-grained analysis.
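The compositing step can be sketched as sampling a random scale and position for each cropped figure and recording the resulting box. This sketch handles only the geometry (no pixel blending), and the scale range, the image sizes in the example and the YOLO-style normalized (x_center, y_center, width, height) label format are illustrative assumptions rather than the settings used to build C2A. The size buckets read the "<10 pixels" and "10–50 pixels" categories as the longer box side, which is also an assumption.

```python
import random


def place_figure(bg_w, bg_h, fig_w, fig_h, rng, scale_range=(0.05, 0.5)):
    """Randomly scale a cropped figure and place it fully inside the background.

    Returns the pixel box (x1, y1, x2, y2) and a normalised YOLO-style label
    (x_center, y_center, width, height). Only geometry is handled; no pixels.
    """
    scale = rng.uniform(*scale_range)
    w = min(max(1, round(fig_w * scale)), bg_w)   # clamp to [1, background width]
    h = min(max(1, round(fig_h * scale)), bg_h)
    x1 = rng.randint(0, bg_w - w)                 # randint is inclusive
    y1 = rng.randint(0, bg_h - h)
    box = (x1, y1, x1 + w, y1 + h)
    label = ((x1 + w / 2) / bg_w, (y1 + h / 2) / bg_h, w / bg_w, h / bg_h)
    return box, label


def size_bucket(box):
    """Bucket a box by its longer side (assumed reading of the <10 / 10-50 px split)."""
    side = max(box[2] - box[0], box[3] - box[1])
    if side < 10:
        return "tiny"
    return "10-50" if side <= 50 else "larger"


if __name__ == "__main__":
    rng = random.Random(0)                        # fixed seed for reproducibility
    for _ in range(3):
        box, label = place_figure(1920, 1080, fig_w=120, fig_h=300, rng=rng)
        print(box, [round(v, 3) for v in label], size_bucket(box))
```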

Although synthetic data have limitations, such as scale or illumination inconsistencies and the absence of dynamic video sequences, the C2A dataset provides a valuable resource for training robust human detection models. By simulating complex disaster conditions, it is particularly suited to SAR applications involving occlusion and small-object detection [38].

4.2. Experimental Configuration

Experiments were conducted on a server equipped with a 15-vCPU Intel(R) Xeon(R) Platinum 8474C processor (Intel Corporation, Santa Clara, CA, USA), an NVIDIA GeForce RTX 4090D GPU (NVIDIA Corporation, Santa Clara, CA, USA) and 32 GB of RAM. The implementation was based on Python 3.10 with PyTorch 2.1.0 and CUDA 11.8. Training used a batch size of 32 and 4 data-loading worker threads. The dataset was split into training, validation and test sets at an 8:1:1 ratio. For reproducibility, the random seed was set to 0 with deterministic = True. The initial learning rate was lr0 = 0.01 and was scheduled to decay to a final rate of lr0 × lrf with lrf = 0.01 (i.e., 1% of the initial rate), using the SGD optimizer with momentum = 0.937 and weight_decay = 0.0005. The loss weights were set to box = 7.5, cls = 0.5 and dfl = 1.5 to balance the loss components during training. Given the large scale of the C2A dataset, the models were trained for 150 epochs. All hyperparameters were systematically tuned to obtain the best performance.
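The text does not state the shape of the learning-rate schedule. Assuming the linear decay that Ultralytics applies when cosine scheduling is disabled, the rate falls from lr0 at the first epoch to lr0 × lrf at the last; the sketch below reproduces that rule (ignoring warm-up) and collects the reported hyperparameters in a dictionary whose keys mirror Ultralytics training argument names. The dictionary is for reference only and is not passed to any library here.

```python
# Hyperparameters reported in Section 4.2; keys follow Ultralytics train() argument names.
TRAIN_ARGS = {
    "epochs": 150,
    "batch": 32,
    "workers": 4,
    "seed": 0,
    "deterministic": True,
    "optimizer": "SGD",
    "lr0": 0.01,
    "lrf": 0.01,
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "box": 7.5,
    "cls": 0.5,
    "dfl": 1.5,
}


def linear_lr(epoch: int, lr0: float, lrf: float, epochs: int) -> float:
    """Linear decay from lr0 (epoch 0) to lr0 * lrf (final epoch); warm-up ignored."""
    factor = max(1 - epoch / epochs, 0) * (1.0 - lrf) + lrf
    return lr0 * factor


if __name__ == "__main__":
    a = TRAIN_ARGS
    for e in (0, 75, 150):
        print(e, linear_lr(e, a["lr0"], a["lrf"], a["epochs"]))
```

With lrf = 0.01, the final learning rate is 0.01 × 0.01 = 1e-4, which is what "1% of the initial rate" refers to.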

4.3. Evaluation Metrics

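AP@0.5 denotes the Average Precision at an Intersection over Union (IoU) threshold of 0.5. It evaluates model performance under a relaxed localization criterion: a prediction is considered correct if the IoU between the predicted and ground-truth boxes is at least 0.5. It is formally defined as the area under the precision–recall curve obtained at this threshold:

$$\mathrm{AP@0.5} = \int_{0}^{1} P(R)\,\mathrm{d}R, \qquad \mathrm{IoU}(B_p, B_{gt}) = \frac{|B_p \cap B_{gt}|}{|B_p \cup B_{gt}|} \ge 0.5$$

where P(R) is the precision at recall R, and B_p and B_gt denote the predicted and ground-truth boxes. AP@0.75 is defined analogously with an IoU threshold of 0.75.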
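The IoU criterion can be illustrated with axis-aligned boxes in (x1, y1, x2, y2) pixel format. The sketch computes IoU and reports whether a single prediction would count as a true positive at a given threshold; the box format and function names are assumptions for illustration, and a full evaluator additionally performs one-to-one matching between predictions and ground truths in order of confidence.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred, gt, threshold=0.5):
    """A prediction matches a ground truth if IoU >= threshold."""
    return iou(pred, gt) >= threshold


if __name__ == "__main__":
    gt = (10, 10, 50, 50)
    pred = (20, 10, 60, 50)                 # shifted 10 px to the right
    print(round(iou(pred, gt), 3))          # 0.6
    print(is_true_positive(pred, gt, 0.5), is_true_positive(pred, gt, 0.75))  # True False
```

The same prediction counts as correct under AP@0.5 but not under AP@0.75, which is why the stricter threshold rewards tighter localization.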

AP@small denotes the Average Precision for small objects, i.e., those with an area smaller than 32² pixels, following the COCO standard. It evaluates a model’s ability to detect small targets; under the COCO protocol, it is averaged over the same ten IoU thresholds as mAP@[0.5:0.95].

mAP@[0.5:0.95] is the primary metric for evaluating overall model accuracy in object detection. It is obtained by computing the Average Precision at 10 IoU thresholds, from 0.5 to 0.95 in steps of 0.05, and taking the mean of these AP values:

$$\mathrm{mAP@[0.5{:}0.95]} = \frac{1}{10} \sum_{t \in \{0.50,\,0.55,\,\ldots,\,0.95\}} \mathrm{AP@}t$$
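The sketch below computes AP as the area under the monotonically non-increasing precision envelope (all-point interpolation) and averages per-threshold AP values over the ten IoU thresholds. It is a simplified illustration: COCO's official evaluator samples the envelope at 101 recall points instead, so its values can differ slightly, and the precision–recall points and per-threshold AP values used here are toy inputs, not results from this paper.

```python
def average_precision(recalls, precisions):
    """All-point interpolated AP from PR points sorted by increasing recall."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision monotonically non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall changes.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]  # 0.50 ... 0.95


def map_50_95(ap_per_threshold):
    """Mean of the AP values computed at the ten IoU thresholds."""
    assert len(ap_per_threshold) == len(IOU_THRESHOLDS)
    return sum(ap_per_threshold) / len(ap_per_threshold)


if __name__ == "__main__":
    print(average_precision([0.5, 1.0], [1.0, 0.5]))   # 0.75
    toy_aps = [0.80, 0.78, 0.74, 0.70, 0.64, 0.57, 0.48, 0.36, 0.22, 0.07]
    print(round(map_50_95(toy_aps), 3))
```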

Other metrics, including FLOPs, parameter count, model size and FPS, are employed to assess the portability of our model, reflecting its computational requirements, memory footprint, and inference efficiency.
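The paper does not describe how FPS was measured. A common protocol, sketched below under that assumption, runs a few warm-up iterations, times a fixed number of single-image calls and reports images per second; `run_once` is a hypothetical stand-in for a model's forward pass. On a GPU, the device must also be synchronized before the clock is read, which this CPU-only sketch omits.

```python
import time


def measure_fps(run_once, warmup: int = 10, iters: int = 100) -> float:
    """Images per second for a callable that processes one image per call."""
    for _ in range(warmup):          # warm-up: caches, lazy initialisation
        run_once()
    start = time.perf_counter()
    for _ in range(iters):
        run_once()
    elapsed = time.perf_counter() - start
    return iters / elapsed if elapsed > 0 else float("inf")


def fps_to_latency_ms(fps: float) -> float:
    """Average per-image latency in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    dummy = lambda: sum(i * i for i in range(10_000))  # stand-in workload
    fps = measure_fps(dummy)
    print(f"{fps:.1f} FPS, {fps_to_latency_ms(fps):.3f} ms/image")
    print(f"{fps_to_latency_ms(571.72):.3f} ms/image at the reported 571.72 FPS")
```

At the reported 571.72 FPS, the average latency is about 1.75 ms per image; whether this includes pre- and post-processing depends on the measurement protocol.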

4.4. Comparative Experiments (COCO Metrics)

To rigorously evaluate our YOLOv11-based lightweight human detection method for disaster scenarios, we conducted comparative experiments on the C2A dataset using the standard COCO evaluation metrics. The baselines include YOLO-series models (YOLOv8n and YOLOv11n), representing recent lightweight variants of the YOLO framework, as well as non-YOLO approaches such as Faster R-CNN, SSD, RetinaNet [43] and EfficientDet [44], which cover diverse detection paradigms.

We report mAP and AP at different IoU thresholds, together with model complexity measures. As summarized in Table 1, this provides an assessment of our method across accuracy, efficiency and model complexity.

4.4.1. Comparison with Existing Methods (Non-YOLO Series)

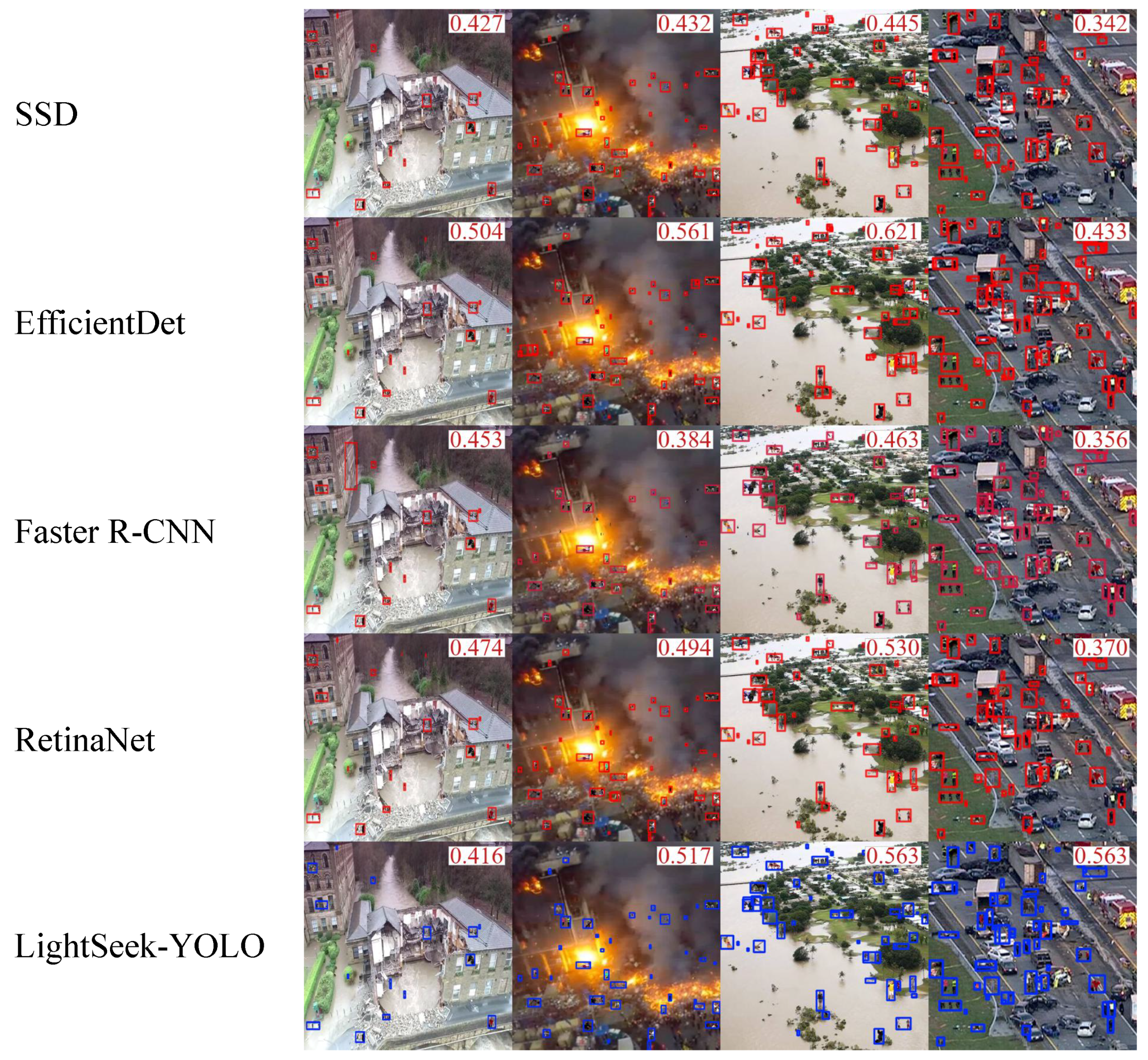

As shown in Table 1, our method achieves competitive detection accuracy with minimal computational overhead. Its mAP@[0.5:0.95] of 0.473 is slightly above that of RetinaNet (0.466) and close to that of EfficientDet (0.491), while requiring substantially fewer computational resources. It improves on Faster R-CNN (0.405) and SSD (0.376) by 16.8% and 25.8% (relative), respectively, with far fewer parameters and lower computational cost. For AP@0.5, our model achieves 0.75, approaching EfficientDet (0.795) and clearly outperforming Faster R-CNN (0.619) and SSD (0.698). At the stricter AP@0.75 threshold, it reaches 0.5, surpassing Faster R-CNN (0.443), SSD (0.361) and RetinaNet (0.472). Its small-object performance (AP@small = 0.478) supports the method’s effectiveness for disaster scenarios involving small targets. The comparison between LightSeek-YOLO and the non-YOLO models in disaster scenarios is shown in Figure 6.

The efficiency–accuracy trade-off analysis reveals clear advantages over non-YOLO architectures. Although EfficientDet achieves marginally higher accuracy (mAP@[0.5:0.95] = 0.491 vs. 0.473), it requires 55.322M parameters (29.7× our model) and 198 GFLOPs (40.4× our model). Similarly, RetinaNet requires 18.339M parameters (9.9× our model) and 98.565 GFLOPs (20.1× our model) for slightly lower accuracy (0.466). This computational efficiency is critical for disaster-response applications, where resources are constrained and real-time processing is essential.
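The multipliers and relative gains quoted above, and the reductions versus EfficientDet discussed in Section 4.4.3, follow directly from the values given in the text. The short check below recomputes them; the helper names are hypothetical.

```python
def times_larger(baseline: float, ours: float) -> float:
    """How many times larger the baseline figure is than ours."""
    return baseline / ours


def relative_gain_pct(ours: float, baseline: float) -> float:
    """Relative improvement of our score over a baseline, in percent."""
    return (ours / baseline - 1) * 100


def reduction_pct(baseline: float, ours: float) -> float:
    """Relative reduction of our cost versus a baseline, in percent."""
    return (1 - ours / baseline) * 100


OURS = {"params_m": 1.86, "gflops": 4.9, "map": 0.473}

if __name__ == "__main__":
    for name, params_m, gflops in [("EfficientDet", 55.322, 198.0),
                                   ("RetinaNet", 18.339, 98.565)]:
        print(f"{name}: {times_larger(params_m, OURS['params_m']):.1f}x params, "
              f"{times_larger(gflops, OURS['gflops']):.1f}x FLOPs")
    for name, m in [("Faster R-CNN", 0.405), ("SSD", 0.376)]:
        print(f"vs {name}: +{relative_gain_pct(OURS['map'], m):.1f}% mAP@[0.5:0.95]")
    print(f"vs EfficientDet: -{reduction_pct(55.322, OURS['params_m']):.1f}% params, "
          f"-{reduction_pct(198.0, OURS['gflops']):.1f}% FLOPs")
```

Note that the mAP improvements are relative percentages; in absolute terms the gap to EfficientDet is 0.018 mAP.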

4.4.2. Comparison Within YOLO Series

Comparative analysis within the YOLO family shows that LightSeek-YOLO achieves substantial model compression while preserving detection accuracy comparable to the state-of-the-art lightweight variants YOLOv8n and YOLOv11n. Compared with YOLOv8n, our model attains the same mAP@[0.5:0.95] (0.473) with a 38.2% parameter reduction (3.01M → 1.86M), a 39.5% FLOP reduction (8.1G → 4.9G) and a 36.5% smaller model size (6.11 MB → 3.88 MB). Compared with YOLOv11n, it delivers comparable accuracy (0.473 vs. 0.481) with 27.8% fewer parameters (1.86M vs. 2.58M), 22.2% fewer FLOPs (4.9G vs. 6.3G) and a 25.8% smaller model size (3.88 MB vs. 5.23 MB). These results indicate that our architectural modifications to YOLOv11 preserve essential detection capability while substantially reducing computational requirements, which improves the feasibility of deployment on the resource-constrained devices typically used in disaster response. The comparison of YOLOv8n, YOLOv11n and LightSeek-YOLO in disaster scenarios is shown in Figure 7.
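The percentage reductions in this comparison can be recomputed from the values quoted in the text. The sketch below does so; the helper name is hypothetical, and the inputs are the rounded figures given above.

```python
def reduction_pct(baseline: float, ours: float) -> float:
    """Relative reduction of our value versus a baseline, in percent."""
    return (1 - ours / baseline) * 100


# (baseline value, LightSeek-YOLO value) as quoted in Section 4.4.2.
REPORTED = {
    "YOLOv8n params (M)": (3.01, 1.86),
    "YOLOv8n GFLOPs": (8.1, 4.9),
    "YOLOv8n model size": (6.11, 3.88),
    "YOLOv11n params (M)": (2.58, 1.86),
    "YOLOv11n GFLOPs": (6.3, 4.9),
    "YOLOv11n model size": (5.23, 3.88),
}

if __name__ == "__main__":
    for label, (base, ours) in REPORTED.items():
        print(f"{label}: -{reduction_pct(base, ours):.1f}%")
```

Recomputed from these rounded values, the YOLOv11n parameter reduction comes out at 27.9% rather than the reported 27.8%; the small difference presumably reflects unrounded parameter counts.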

4.4.3. Performance Trade-Off Analysis

Our experimental evaluation highlights clear performance distinctions from existing approaches. Among non-YOLO models, EfficientDet achieves the highest accuracy but at the cost of substantially greater computational resources, which limits its practicality in constrained environments. Faster R-CNN and SSD, though widely adopted, deliver consistently lower accuracy across all metrics while still demanding more computation than our method. RetinaNet offers a moderate balance between accuracy and efficiency but remains less competitive in terms of parameter count and inference cost. Within the YOLO family, our method achieves detection accuracy comparable to YOLOv8n and YOLOv11n while substantially reducing computational overhead. This reduction is particularly advantageous in disaster scenarios, where rapid detection and deployment on resource-limited devices are essential for a timely response.

A key trade-off is the slightly lower mAP@[0.5:0.95] relative to EfficientDet (0.473 vs. 0.491). However, this gap of 1.8 percentage points (3.7% in relative terms) is offset by large efficiency gains: a 96.6% reduction in parameters and a 97.5% reduction in FLOPs. In disaster-response settings, where devices such as drones or surveillance cameras operate under strict power and memory constraints, these savings enable broader deployment, lower energy consumption, and longer operating time. Overall, our YOLOv11-based lightweight model strikes an effective balance between accuracy and efficiency, making it well suited to real-world disaster-response operations that demand both reliable detection and low computational cost.
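Accuracy gaps can be stated either in absolute percentage points or relative to the higher score, and the two readings differ by about a factor of two here. A minimal sketch of both, using the EfficientDet figures from the text (function names are illustrative):

```python
def gap_points(a: float, b: float) -> float:
    """Absolute gap in percentage points between two scores in [0, 1]."""
    return (b - a) * 100.0

def gap_relative(a: float, b: float) -> float:
    """Relative gap, as a percentage of the higher score b."""
    return (b - a) / b * 100.0

def reduction_pct(reference: float, ours: float) -> float:
    """Percentage by which `ours` is smaller than `reference`."""
    return (reference - ours) / reference * 100.0

if __name__ == "__main__":
    ours, efficientdet = 0.473, 0.491
    print(f"absolute gap: {gap_points(ours, efficientdet):.1f} pp")
    print(f"relative gap: {gap_relative(ours, efficientdet):.1f} %")
    # Parameters: 1.86M (ours) vs. 55.32M (EfficientDet)
    print(f"parameter reduction: {reduction_pct(55.32, 1.86):.1f} %")
```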

4.5. Component Comparison

To evaluate the contribution of individual architectural components within our network, we compared alternative detection head designs and backbone networks.

As shown in Table 2, our proposed detection head, Seek-DH, performs best across the accuracy metrics while remaining efficient. It attains an mAP@[0.5:0.95] of 0.491, a 2.3% improvement over the second-best head, Aux (0.480), and an AP@0.75 of 0.521, 1.96–2.56% higher than the competing heads (Aux: 0.511; AttHead: 0.508). For small objects, it reaches an AP@small of 0.497, a 2.26–2.90% improvement over the alternatives, which supports its design for the tiny targets typical of disaster scenarios. In terms of efficiency, Seek-DH runs at 617.94 FPS, nearly matching EfficientHead (618.15 FPS), and requires 5.6 GFLOPs, 11.1% and 13.8% less than Aux (6.3 GFLOPs) and AttHead (6.5 GFLOPs), respectively. With 2.42M parameters, it needs only 4.7% more parameters than EfficientHead (2.31M) while delivering substantially higher accuracy, giving the most favorable accuracy–efficiency trade-off among the heads tested. In disaster contexts, these characteristics are particularly valuable: the high AP@0.5 (0.760) and AP@0.75 (0.521) indicate more precise localization of heavily occluded targets, such as limbs buried under debris, while the higher AP@small (0.497) helps detect small visible body parts, which are often the only indicators of survivors in complex disaster environments. Together, these properties can improve rescue success rates and robustness to dynamic interference such as smoke and dust.

Table 3 shows that our model, which uses the HGNetV2 backbone, achieves a favorable speed–efficiency balance across the key metrics. It runs at 617.94 FPS, 7.3% faster than SterNet (576.02 FPS), and requires 5.6 GFLOPs, far less than FasterNet (9.2 GFLOPs) and EfficientViT (7.9 GFLOPs). Its 2.42M parameters are only 18.5% of timm's 13.05M, a substantial compression. In detection accuracy, our model is competitive: its AP@0.5 (0.72) and AP@small (0.451) exceed those of SterNet (0.719 and 0.445), although its AP@0.5 trails EfficientViT marginally (0.72 vs. 0.722). Its overall mAP@[0.5:0.95] (0.446) remains lower than that of timm (0.483), reflecting an inherent trade-off between computational efficiency and overall accuracy. Although timm achieves higher precision (AP@0.75: 0.514), its computational demand (33.6 GFLOPs) and lower inference speed (457.81 FPS) may not meet the real-time requirements of disaster scenarios. Conversely, our model sustains more than 600 FPS while delivering 1.34% higher AP@small than SterNet (0.451 vs. 0.445), enabling effective detection of small targets within debris. For disaster rescue operations, where real-time performance is paramount for victims' safety, HGNetV2 therefore provides a decisive speed advantage that supports rapid victim identification and localization, increasing the probability of rescue success in time-critical situations.

4.6. Ablation Experiment

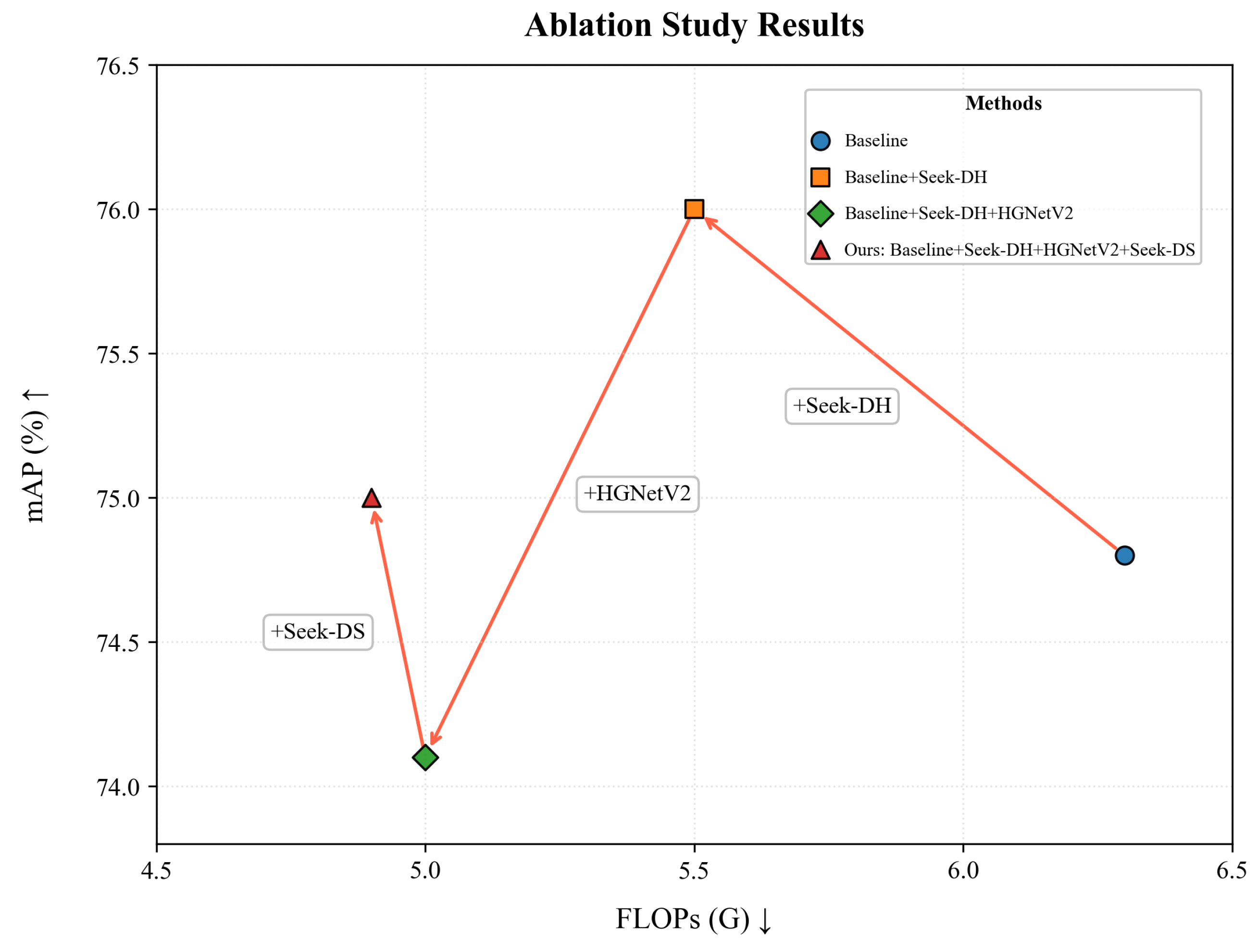

As shown in Table 4, our lightweight model adapts well to disaster scenarios. The performance changes observed under aggressive compression reflect deliberate design choices made to improve computational efficiency, rather than limitations imposed by the architecture.

Our systematic ablation reveals each module's distinct contribution: Seek-DH improves mAP by 0.010 while removing 161,855 parameters; HGNetV2 trades 0.026 mAP for a 439,020-parameter reduction, the primary efficiency gain; and Seek-DS recovers 0.008 mAP while removing a further 117,664 parameters. Each module thus adds distinct value within our design: Seek-DH improves both accuracy and efficiency, HGNetV2 reduces computation at a controlled accuracy cost, and Seek-DS compensates for the backbone's limitations while retaining its efficiency benefits.
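The per-module deltas above can be chained to recover the cumulative ablation path. The sketch below assumes the baseline mAP@[0.5:0.95] of 0.481 reported for YOLOv11n in the Discussion; the class and function names are illustrative.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AblationStep:
    module: str
    delta_map: float      # change in mAP@[0.5:0.95] contributed by this step
    params_removed: int   # parameters removed by this step

STEPS = [
    AblationStep("Seek-DH", +0.010, 161_855),
    AblationStep("HGNetV2", -0.026, 439_020),
    AblationStep("Seek-DS", +0.008, 117_664),
]

def accumulate(baseline_map: float, steps):
    """Yield (module, cumulative mAP, cumulative parameters removed)."""
    m, removed = baseline_map, 0
    for s in steps:
        m += s.delta_map
        removed += s.params_removed
        yield s.module, round(m, 3), removed

if __name__ == "__main__":
    for module, m, removed in accumulate(0.481, STEPS):
        print(f"+{module:8s} mAP={m:.3f} params removed={removed:,}")
```

The cumulative mAP values (0.491, 0.465, 0.473) match the configurations discussed in the text, and subtracting the total of 718,539 removed parameters from the rounded 2.58M baseline gives about 1.86M.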

To meet the real-time demands of rescue operations, the model with the Seek-DH module is optimized to prioritize computational efficiency. It reaches an inference speed of 617.94 FPS, 18.7% faster than the baseline, and reduces the computational cost to 5.6 GFLOPs, 11.1% below the baseline. This efficiency-oriented design gives the model real-time search-and-rescue capability, processing more than 600 frames per second on the GPU used in our experiments.
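Throughput figures are easier to compare against a video frame budget when converted to average per-frame time. A minimal sketch, assuming frames are processed one after another so that mean latency is simply the reciprocal of FPS; batching or pipelining on other hardware would break that assumption.

```python
def frame_latency_ms(fps: float) -> float:
    """Average per-frame time implied by a throughput figure (sequential processing)."""
    return 1000.0 / fps

def speedup_pct(new_fps: float, old_fps: float) -> float:
    """Relative throughput gain of new_fps over old_fps, in percent."""
    return (new_fps / old_fps - 1.0) * 100.0

if __name__ == "__main__":
    for name, fps in [("baseline", 520.5), ("Seek-DH", 617.94), ("full model", 571.72)]:
        print(f"{name:10s} {fps:7.2f} FPS -> {frame_latency_ms(fps):.2f} ms/frame")
    print(f"Seek-DH speed-up over baseline: {speedup_pct(617.94, 520.5):.1f} %")
```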

Notably, this gain in computational efficiency does not compromise critical detection performance. The model retains high detection accuracy (mAP@[0.5:0.95] = 0.491; AP@0.5 = 0.760) and performs well on small objects (AP@small = 0.497). This balance makes the model well suited to quickly identifying small human body parts amid rubble, a capability that helps rescuers locate trapped victims in complex disaster settings and may increase the success rate of rescue efforts.

Under aggressive compression, the model makes deliberate efficiency–accuracy trade-offs. Figure 8 shows the performance trade-offs of the LightSeek-YOLO module combinations. Adding HGNetV2 reduces the parameters to 1.98M (23.6% compression) and the computational cost to 5.0 GFLOPs, while mAP@[0.5:0.95] falls by 5.3% to 0.465, a deliberate choice in favor of computational efficiency. The false detection rate rises by 3.2% on the smoke-occlusion test subset, indicating that excessive lightweighting weakens feature representation in complex scenes. The complete configuration (Seek-DH + HGNetV2 + Seek-DS) balances these effects, reducing the parameters to 1.86M (27.8% below the baseline) while sustaining more than 570 FPS; its accuracy (mAP@[0.5:0.95] = 0.473, AP@small = 0.478) reflects our priority on computational efficiency for resource-constrained deployment. These characteristics make the model particularly suitable for initial disaster search operations that demand fast response and minimal resource consumption, such as rapid UAV scanning, although limitations remain in high-precision localization (AP@0.75 = 0.489) and robustness under extreme compression. We therefore recommend switching model configurations by operational phase: deploy the Seek-DH single-module configuration during emergency search (FPS > 600, mAP = 0.491), then transition to higher-precision models for fine-grained detection once disaster conditions stabilize and more computational resources become available.
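The phase-based switching recommended above can be framed as a constrained selection: within each phase's speed and memory budget, pick the most accurate configuration. The sketch below uses the figures reported for the ablation configurations; the phase names and budget values in `PHASE_BUDGETS` are hypothetical and would depend on the target device.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Config:
    name: str
    params_m: float   # parameters (millions)
    fps: float        # throughput reported in the text
    map50_95: float   # mAP@[0.5:0.95]

# Figures reported for the ablation configurations.
CONFIGS = [
    Config("baseline (YOLOv11n)", 2.58, 520.5, 0.481),
    Config("Seek-DH", 2.42, 617.94, 0.491),
    Config("Seek-DH + HGNetV2 + Seek-DS", 1.86, 571.72, 0.473),
]

def select_config(configs, min_fps=0.0, max_params_m=float("inf")):
    """Return the most accurate configuration meeting both budgets, or None."""
    feasible = [c for c in configs if c.fps >= min_fps and c.params_m <= max_params_m]
    return max(feasible, key=lambda c: c.map50_95, default=None)

# Hypothetical per-phase budgets (illustrative values only).
PHASE_BUDGETS = {
    "emergency_search": {"min_fps": 600.0},
    "long_running_deployment": {"max_params_m": 2.0},
}

def config_for_phase(phase: str):
    return select_config(CONFIGS, **PHASE_BUDGETS[phase])

if __name__ == "__main__":
    for phase in PHASE_BUDGETS:
        print(phase, "->", config_for_phase(phase).name)
```

Returning `None` when no configuration fits makes an infeasible budget explicit instead of silently falling back to a model that violates it.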

5. Discussion

The LightSeek-YOLO model proposed in this study shows distinctive performance characteristics in lightweight human detection for disaster scenarios. Our systematic experiments identify favorable efficiency–accuracy trade-offs: rather than maximizing accuracy alone, we prioritize deployment feasibility.

Module synergy effects and performance trade-offs: The ablation results reveal how different module combinations affect model performance. Introducing only the Seek-DH module gives the best accuracy–speed balance: FPS rises from the baseline's 520.5 to 617.94 (an 18.7% improvement), while mAP@[0.5:0.95] increases from 0.481 to 0.491. We attribute this improvement mainly to Seek-DH's context-aware mechanism, which improves adaptability to targets of different scales through dynamic dilation-rate adjustment and a channel attention mechanism. In small-object detection in particular, AP@small improves from 0.485 to 0.497, indicating that the module contributes to detecting distant, small-scale trapped victims in disaster scenarios.
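The two generic ingredients named above can be illustrated in isolation. The sketch below is not Seek-DH itself: it assumes a squeeze-and-excitation (SE) style gate as one common form of channel attention, and shows only how dilation widens a kernel's span (k + (k − 1)(d − 1)), which is what makes adjusting the dilation rate a way to match different target scales. All names and shapes are illustrative.

```python
import numpy as np

def effective_kernel(k: int, d: int) -> int:
    """Span covered by a k-tap kernel with dilation d: k + (k - 1)(d - 1)."""
    return k + (k - 1) * (d - 1)

def channel_attention(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """SE-style channel gating for a feature map x of shape (C, H, W).

    w1: (C // r, C) reduction weights; w2: (C, C // r) expansion weights.
    """
    squeeze = x.mean(axis=(1, 2))                 # (C,) global average pooling
    hidden = np.maximum(w1 @ squeeze, 0.0)        # ReLU
    gate = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # sigmoid, one weight per channel
    return x * gate[:, None, None]                 # rescale each channel

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C, r = 8, 4
    x = rng.standard_normal((C, 16, 16))
    y = channel_attention(x, rng.standard_normal((C // r, C)), rng.standard_normal((C, C // r)))
    print(y.shape, [effective_kernel(3, d) for d in (1, 2, 3)])
```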

Adding the HGNetV2 backbone on top of Seek-DH produces a different pattern, and the sequential ablation isolates its cost: parameters decrease from 2.42M to 1.98M (an 18.1% reduction), but detection accuracy declines (mAP@[0.5:0.95] falls from 0.491 to 0.465). Although HGNetV2 effectively reduces computational complexity through depthwise separable convolution (DSC) [45], this architecture offers limited cross-channel information interaction. When processing the complex backgrounds of disaster scenes, cluttered with debris, smoke, and other interference, this restricts the model's ability to capture target features fully, leading to the observed performance loss.
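The efficiency and the limitation of DSC both follow from how it factorizes a standard convolution: a k × k depthwise stage filters each channel separately, and a 1 × 1 pointwise stage is the only place where channels are mixed. The weight counts below use hypothetical layer sizes (k = 3, 64 channels in and out), not HGNetV2's actual configuration.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k convolution (bias omitted)."""
    return k * k * c_in * c_out

def dsc_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k (one filter per input channel) plus pointwise 1 x 1."""
    depthwise = k * k * c_in    # spatial filtering, no channel mixing
    pointwise = c_in * c_out    # the only cross-channel interaction
    return depthwise + pointwise

if __name__ == "__main__":
    k, c = 3, 64
    std, dsc = standard_conv_params(k, c, c), dsc_params(k, c, c)
    # The ratio equals 1/c_out + 1/k**2 for square layers like this one.
    print(std, dsc, round(dsc / std, 3))
```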

After further adding the Seek-DS module, parameters continue to decrease to 1.86M, while performance partially recovers (mAP@[0.5:0.95] of 0.473), indicating that the multi-path downsampling mechanism compensates for feature loss to some extent.
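To make the multi-path downsampling idea concrete, the sketch below halves spatial resolution with two parallel branches and concatenates them: a max-pooling branch that keeps the strongest local responses (feature extrema) and an average-pooling branch that keeps smoothed spatial patterns. This branch choice is an assumption for illustration; Seek-DS may use learned convolutions rather than fixed pooling.

```python
import numpy as np

def pool2x2(x: np.ndarray, reduce) -> np.ndarray:
    """Apply `reduce` over non-overlapping 2x2 windows of x with shape (C, H, W)."""
    c, h, w = x.shape
    windows = x[:, : h - h % 2, : w - w % 2].reshape(c, h // 2, 2, w // 2, 2)
    return reduce(windows, axis=(2, 4))

def dual_branch_downsample(x: np.ndarray) -> np.ndarray:
    """Hypothetical two-branch 2x downsampling: (C, H, W) -> (2C, H/2, W/2)."""
    extrema = pool2x2(x, np.max)    # strongest local activations
    context = pool2x2(x, np.mean)   # smoothed local context
    return np.concatenate([extrema, context], axis=0)

if __name__ == "__main__":
    x = np.arange(16, dtype=float).reshape(1, 4, 4)
    print(dual_branch_downsample(x))
```

Compared with a single strided pooling, keeping both branches preserves a small, bright target (which survives max pooling) alongside the surrounding context (which survives averaging).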

In summary, the module synergy effects and performance trade-offs observed in these experiments play a crucial role in the design and practical deployment of the LightSeek-YOLO model. Understanding these relationships enables us to make informed decisions when tailoring the model to specific disaster-scenario requirements, balancing computational efficiency with detection accuracy for optimal performance.

5.1. Comparative Analysis with Existing Methods

In comparative experiments on the COCO dataset, LightSeek-YOLO shows clear efficiency advantages. Compared to Faster R-CNN, a widely used two-stage detector, our method improves mAP@[0.5:0.95] by 16.8% (0.473 vs. 0.405) while using only 3.1% of its parameters (1.86M vs. 60.34M). Compared to single-stage detectors, LightSeek-YOLO achieves significant model compression while maintaining comparable detection accuracy. For example, compared to EfficientDet, a popular high-accuracy single-stage detector, its mAP@[0.5:0.95] is 3.7% lower (0.473 vs. 0.491), but its parameters are reduced by 96.6% (1.86M vs. 55.32M) and its computational cost by 97.5%. These reductions make LightSeek-YOLO better suited to disaster rescue scenarios, where resources are often scarce, enabling devices with limited computing power to perform real-time detection with only a small loss in accuracy.

Within the YOLO series, LightSeek-YOLO reduces parameters by 27.8% and computational cost by 22.2% relative to the YOLOv11n baseline while maintaining similar detection accuracy. This result validates our lightweight improvement strategy, which is particularly significant for resource-constrained disaster rescue scenarios. There, the reduced resource requirements of LightSeek-YOLO allow it to run smoothly on resource-limited devices such as drones or portable detection units, facilitating timely and effective rescue operations.

5.2. Selection Strategy for Detection Head and Backbone Networks

Component comparison experiments provide important insights for module selection. In the detection head comparison, Seek-DH outperforms the other head architectures with an mAP@[0.5:0.95] of 0.491 while sustaining 617.94 FPS. Notably, it reaches an AP@small of 0.497, 2.3% higher than the second-best head, Aux. This strong small-object performance is significant in disaster scenarios, where small visible parts of victims or other small-scale objects may be crucial for rescue operations.

Backbone network selection involves more complex trade-offs. The timm backbone, widely used in computer vision tasks for its powerful feature extraction, achieves the highest detection accuracy (mAP@[0.5:0.95] of 0.483). However, its computational cost of 33.6 GFLOPs and its 13.05M parameters severely limit real-time applications. In contrast, HGNetV2, designed for real-time performance in resource-constrained environments, has lower detection accuracy (0.446) but better meets real-time requirements, with an inference speed of 617.94 FPS and 2.42M parameters, making it more suitable for rapid deployment at disaster sites. This trade-off between accuracy and real-time performance is crucial for disaster-site applications: while high accuracy is desirable, the ability to process data rapidly and deliver timely detection results is often more critical in the chaotic, time-sensitive environment of a disaster. HGNetV2 is therefore the more practical choice for such scenarios.

5.3. Applicability and Limitations of C2A Dataset

This study adopts the synthetic C2A dataset for training and evaluation. The dataset contains over 360,000 annotated instances covering five human postures and four disaster scenarios. Its scale distribution, with 47% of instances smaller than 10 pixels and 52% in the 10–50 pixel range, is consistent with the target scale distribution in actual disaster scenarios. However, synthetic data also introduce potential limitations. In terms of texture authenticity, synthetic textures may lack the fine details and irregularities of real-world objects, which could lead to misclassification or reduced detection accuracy for certain types of targets. In terms of lighting diversity, synthetic data may not fully capture the complex and variable lighting of actual disaster scenes, such as harsh sunlight, low light at night, or uneven illumination caused by debris, which could affect the model's generalization ability.
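Checking whether a real-world dataset shares C2A's scale profile requires bucketing instances by size. The text does not state how instance size is measured, so the sketch below assumes the square root of the bounding-box area in pixels (the longer side is another common choice); the toy boxes are illustrative, not C2A data.

```python
import math
from collections import Counter

def size_bucket(w: float, h: float) -> str:
    """Bucket a box by sqrt(w * h) in pixels (assumed size definition)."""
    s = math.sqrt(w * h)
    if s < 10:
        return "<10 px"
    if s <= 50:
        return "10-50 px"
    return ">50 px"

def bucket_shares(boxes):
    """Percentage of (width, height) boxes falling into each size bucket."""
    counts = Counter(size_bucket(w, h) for w, h in boxes)
    total = sum(counts.values())
    return {k: 100.0 * v / total for k, v in counts.items()}

if __name__ == "__main__":
    toy_boxes = [(4, 6), (8, 8), (20, 30), (60, 60)]
    print(bucket_shares(toy_boxes))
```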

Based on the experimental results, the Seek-DH single-module configuration is best suited to the emergency search-and-rescue period after a disaster (0–72 h), when rapid scanning of large areas is paramount. Its inference speed of 617.94 FPS can support rapid large-area UAV scanning, while its relatively high mAP@[0.5:0.95] of 0.491 is sufficient to identify obvious signs of life. This balance between speed and accuracy is crucial for covering large disaster areas quickly and detecting potential survivors in the initial stage of rescue operations. In subsequent phases, the complete model configuration can be considered. Although it runs more slowly (571.72 FPS) and is slightly less accurate (mAP@[0.5:0.95] = 0.473), its 1.86M parameters make it the most compact configuration, which suits long-term, stable deployment on resource-limited devices in complex disaster environments.

7. Conclusions

Real-time object detection on computationally constrained devices in disaster scenarios presents a critical challenge for search-and-rescue operations. To address this, we introduce LightSeek-YOLO, a novel lightweight framework that strikes an optimal balance between detection accuracy and computational efficiency for victim detection in complex environments. Our approach integrates three synergistic innovations into the YOLOv11 framework: (1) the HGNetV2 backbone, which employs hardware-aware depthwise separable convolutions for efficient feature extraction; (2) the Seek-DS downsampling module, which uses a dual-branch architecture to preserve critical feature extrema and spatial patterns while minimizing information loss; and (3) the Seek-DH detection head, which leverages a unified processing pipeline with parameter sharing to reduce computational redundancy and enhance adaptability across varying target dimensions.

Comprehensive experimental evaluation demonstrates substantial efficiency improvements. On the COCO dataset, LightSeek-YOLO achieves an mAP@[0.5:0.95] of 0.473, matching YOLOv8n and approaching YOLOv11n (0.481), while requiring only 1.86M parameters and 4.9 GFLOPs, reductions of 28% and 22%, respectively, compared to YOLOv11n. It runs at 571.72 FPS, and these efficiency gains suggest potential for deployment on edge platforms, although this still requires comprehensive validation on edge devices. Several limitations should be noted: validation is conducted primarily on synthetic C2A data, which may limit real-world generalization because of simplified lighting models and texture rendering; the architecture does not yet integrate multimodal data (e.g., thermal or depth), which could improve performance in degraded conditions; detection of severely occluded targets (>70% occlusion) remains challenging; and validation was performed primarily on high-end GPU hardware, which may not fully reflect the constraints of edge devices in actual disaster scenarios. Despite these limitations, LightSeek-YOLO substantially eases the traditional accuracy–efficiency trade-off and offers a promising solution for deployment on resource-constrained hardware in emergency response. Future work will focus on incorporating real-world disaster data, developing dynamic adaptation mechanisms, and integrating multimodal fusion to enhance robustness across diverse rescue scenarios.