1. Introduction

The quest to represent complex mathematical functions in terms of simpler constituent parts is a central theme in analysis and applied mathematics. A pivotal moment in this endeavor arose from the list of 23 problems presented by David Hilbert in 1900, which profoundly shaped the mathematical landscape of the 20th century. Hilbert’s 13th problem, in particular, posed a fundamental question about the limits of function composition. The problem seeks solutions to a general 7th-degree polynomial, which are finite combinations of functions of a single variable each, i.e., if

, where

g is a simple known function [1,2].

In the late 1950s, Andreĭ Kolmogorov and his graduate student Vladimir Arnold provided a powerful and affirmative answer to this problem. Their work culminated in the Kolmogorov–Arnold representation theorem (KART) [3,4], which states that any multivariable continuous function f defined on a compact domain, such as the n-dimensional unit cube, can be represented exactly by a finite superposition of single-variable functions and the single binary operation of addition. The canonical form of the theorem is expressed as

$$f(x_1,\dots,x_n)=\sum_{q=0}^{2n}\Phi_q\left(\sum_{p=1}^{n}\phi_{q,p}(x_p)\right), \tag{1}$$

where $\phi_{q,p}$ are the inner functions, and $\Phi_q$ are the outer functions, which are assumed to be continuous. Lorentz [5] and Sprecher [6] showed that the functions $\Phi_q$ can be replaced by only one function $\Phi$ and that the functions $\phi_{q,p}$ can be replaced by $\lambda_p\phi_q$, where $\lambda_p$ is a constant and $\phi_q$ are monotonic and Lipschitz-continuous functions.
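The superposition structure described above (outer single-variable functions applied to sums of inner single-variable functions) can be sketched in a few lines. This is only an illustration of the functional form: the helper name `kart_superposition` and the hand-picked inner and outer functions are assumptions for the example, not the functions constructed in the proofs of Kolmogorov, Lorentz, or Sprecher. The example uses the elementary identity $x_1x_2=\tfrac{1}{4}\left[(x_1+x_2)^2-(x_1-x_2)^2\right]$, which writes a genuinely bivariate function using only univariate functions and addition.

```python
from typing import Callable, Sequence

Univariate = Callable[[float], float]


def kart_superposition(
    outer: Sequence[Univariate],
    inner: Sequence[Sequence[Univariate]],
) -> Callable[[Sequence[float]], float]:
    """Build f(x) = sum_q outer[q]( sum_p inner[q][p](x[p]) )."""

    def f(x: Sequence[float]) -> float:
        return sum(
            Phi(sum(phi(xp) for phi, xp in zip(phis, x)))
            for Phi, phis in zip(outer, inner)
        )

    return f


# Illustration for n = 2 inputs, i.e. 2n + 1 = 5 outer functions.
# Only two of them are needed for the product; the rest are set to zero.
n = 2
zero = lambda u: 0.0
ident = lambda u: u
neg = lambda u: -u

outer = [lambda u: u * u / 4.0, lambda u: -u * u / 4.0] + [zero] * (2 * n + 1 - 2)
inner = [[ident, ident], [ident, neg]] + [[zero, zero]] * (2 * n + 1 - 2)

product = kart_superposition(outer, inner)
print(product([0.3, 0.7]))  # approximately 0.3 * 0.7
```

The theorem itself is much stronger than this toy case: it guarantees such a representation for every continuous function on the cube, at the price of inner and outer functions that are far less regular than the polynomials used here.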

Perceptrons were introduced around the same time by Frank Rosenblatt [7] for simple classification tasks. However, their limitations were famously highlighted in the 1969 book “Perceptrons” by Marvin Minsky and Seymour Papert [8]. They proved that a single-layer perceptron could not solve problems that were not linearly separable, such as the simple XOR logical function. This critique led to a significant decline in neural network research for over a decade, often termed the first “AI winter.” The revival came in the 1980s with the popularization of the multilayer perceptron (MLP), which was introduced in the late 1960s [9]. By adding one or more “hidden” layers between the input and output layers, MLPs could overcome the limitations of the single-layer perceptron. The key breakthrough that made these deeper networks practical was the backpropagation algorithm. While developed earlier, its popularization by David Rumelhart, Geoffrey Hinton, and Ronald Williams in 1986 [10] provided an efficient method to train the weights in these new, multilayered networks, sparking a renewed wave of interest in the field.

With MLPs and derived neural network architectures demonstrating practical success [11,12,13], the next crucial step was to understand their theoretical capabilities. The Universal Approximation Theorem provides this foundation, showing that a standard MLP with just one hidden layer can, in principle, approximate any continuous function to any desired degree of accuracy. George Cybenko [14] used tools from functional analysis, such as the Hahn–Banach theorem and the Riesz representation theorem, to show that, given any multivariable continuous function on a compact domain, there exist values of the weights and biases of a multilayer perceptron with a sigmoidal activation whose loss with respect to the function is bounded by any given positive value $\varepsilon$. Almost concurrently, Kurt Hornik, Maxwell Stinchcombe, and Halbert White [15] provided a different, more general proof of the universal approximation property for a multilayer perceptron with any bounded, non-constant, and continuous activation function.

The applicability of KART to continuous functions in a compact domain was first highlighted by Hecht-Nielsen [16,17]. However, the functions constructed in Kolmogorov’s proofs, as well as in their later simplifications or improvements, are highly complex and non-smooth and thus very different from the much simpler activation functions used in MLPs [18]. Soon, a series of papers by Věra Kůrková [19,20] showed that the one-dimensional inner and outer functions could be approximated using two-layer MLPs and obtained a relationship between the number of hidden units and the loss based on the properties of the function being approximated.

It was not until much later, in the 2020s, that KART had a direct practical application in the domain of neural networks and machine learning. Kolmogorov–Arnold networks (KANs) were first proposed by Liu et al. [21,22] as an alternative to MLPs and demonstrated interpretable hidden activations and higher regression accuracy. They used learnable basis splines to model the constituent hidden values, similar to KART, and their stacked representation is simplified compared to the original form used in KART. Subsequent works retained the same representation but replaced splines with other series of functions, such as radial basis functions and Chebyshev polynomials [23,24,25,26], which were computationally faster and demonstrated superior scaling properties with larger numbers of learnable parameters. However, issues of speed and numerical stability on smaller floating-point types remain, especially compared to the well-established MLPs [27,28].

To address these challenges, we propose a variant of the representation theorems by Lorentz [5] and Sprecher [6]. We set the phases of the sinusoidal activations to linearly spaced constant values and establish their mathematical foundation to confirm their validity. Previous work has explored this approximation series compared to MLPs and basis-spline approximations and showed competitive performance on the inherently discontinuous domain task of labeling hand-written numerical characters [29]. We extend this work by providing a constructive proof of the approximation series for single-variable and multivariable functions and evaluating performance on several such functions with features such as rapid and changing oscillation frequencies. We also extend the comparison from [29] to include fixed-frequency Fourier transform methods and MLP with piecewise-linear and periodic activation functions.

Table 1 summarizes the advancements in this area.

The remainder of this paper is organized as follows. In Section 2, we establish the necessary auxiliary results to prove the approximation theorem for one-dimensional functions. Section 3 extends the results from the previous section to derive a universal approximation theorem for two-layer neural networks. In Section 4, we test several functions and compare the numerical performance of our proposed networks with the classical Fourier transform. Finally, in Section 5, we discuss potential applications and future research directions.

2. Sinusoidal Universal Approximation Theorem

The concept of approximating any function using simple, manageable functions has been widely utilized in deep learning and neural networks. In addition to the multilayer perceptron (MLP) approach, the Kolmogorov–Arnold representation theorem (KART) has gained increasing attention from data scientists, who have developed Kolmogorov–Arnold Networks (KANs) to create more interpretable neural networks. In classical Fourier analysis, any continuous, piecewise continuously differentiable function on a compact interval can be expressed as a Fourier series of sine and cosine functions. In contrast, Kolmogorov demonstrated that any continuous multivariable function can be represented in the form (1).

By extending Kolmogorov’s idea and refining both outer and inner functions using sine terms, we can demonstrate that any continuous function in can be approximated by a finite sum of sinusoidal functions with varying frequencies and linearly spaced phases.

Theorem 1. Let f be a continuous function on and . For any , there exist , frequencies , and coefficients such that

To prove this theorem, we need a series of lemmas to approximate the sine function with its Taylor series and then apply the Weierstrass approximation theorem to bring the sum of sinusoidal functions arbitrarily close to any continuous function.

First, we need to approximate the sinusoidal function using its Taylor polynomial.

Lemma 1. Let and . For every , where

Proof. Set

. We can rewrite

where

, determined by (

3), is the Taylor polynomial of the function

centered at

, and the remainder

for some

.

Since

and

,

Therefore,

for all

. This completes the proof of Lemma 1. □
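The mechanism behind Lemma 1, a Taylor polynomial of the sine function with a Lagrange remainder controlled by the fact that every derivative of sine is bounded by 1, can be checked numerically. The sketch below is a generic illustration and not the exact polynomial defined in (3): the frequency `omega`, the phase `phase`, and the interval [0, 1] are hypothetical choices made for the example. It compares the observed error with the textbook bound $|t|^{n+1}/(n+1)!$ for the expansion of $s \mapsto \sin(s+\varphi)$ about $s=0$.

```python
import math

import numpy as np


def sine_taylor(t, phase, degree):
    """Taylor polynomial of s -> sin(s + phase) about s = 0, evaluated at t."""
    t = np.asarray(t, dtype=float)
    total = np.zeros_like(t)
    for n in range(degree + 1):
        # The n-th derivative of sin(s + phase) at s = 0 is sin(phase + n*pi/2).
        total += math.sin(phase + n * math.pi / 2) * t**n / math.factorial(n)
    return total


def lagrange_bound(t_max, degree):
    """Remainder bound |t|^(degree+1) / (degree+1)! for |t| <= t_max."""
    return t_max ** (degree + 1) / math.factorial(degree + 1)


omega, phase = 3.0, 0.5           # hypothetical frequency and phase
x = np.linspace(0.0, 1.0, 201)    # assumed domain [0, 1]

results = {}
for degree in (3, 7, 11, 15):
    approx = sine_taylor(omega * x, phase, degree)
    err = float(np.max(np.abs(np.sin(omega * x + phase) - approx)))
    results[degree] = (err, lagrange_bound(omega, degree))
    print(f"degree={degree:2d}  max error={err:.3e}  bound={results[degree][1]:.3e}")
```

For a fixed frequency, the factorial in the denominator eventually dominates the power of the frequency, which is why the polynomial degree can always be chosen large enough to reach a prescribed accuracy.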

Lemma 2. For every , there exists such that for all , where , , and is determined by (3).

Proof. From Lemma 1, we have

for

,

,

, and

. Therefore,

Since

, for a given

, we can choose

such that for all

, we obtain (4), which completes the proof of Lemma 2. □

Lemma 3 (Weierstrass Approximation [30]). Let f be a continuous function on . Then, for any , there exists such that for all where is the Bernstein polynomial of f determined by

Lemma 4. Let be any polynomial, and let for all . Then, there exists and such that for all , where is determined by (3).

Proof. Recall the formula determined by (3); then, we have

To obtain (6), we need to choose

and

such that

Equivalently, we need to find

and

such that

Consider the following

matrix:

Since

,

for all

. By induction on N, we can select

such that

. Therefore, the system of equations (7) with the augmented matrix M has a solution for

. This completes the proof of Lemma 4. □
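The Bernstein polynomials invoked in Lemma 3 are straightforward to evaluate, which makes the Weierstrass step of the argument easy to visualize. The following sketch assumes the standard setting on [0, 1], where $B_n(f)(x)=\sum_{k=0}^{n} f(k/n)\binom{n}{k}x^k(1-x)^{n-k}$; the test function $|x-0.5|$ and the degrees are hypothetical choices for illustration.

```python
from math import comb

import numpy as np


def bernstein(f, n, x):
    """Evaluate the degree-n Bernstein polynomial of f at points x in [0, 1]."""
    x = np.asarray(x, dtype=float)
    k = np.arange(n + 1)
    binom = np.array([comb(n, int(j)) for j in k], dtype=float)
    # basis[i, k] = C(n, k) * x_i^k * (1 - x_i)^(n - k)
    basis = binom * x[:, None] ** k * (1.0 - x[:, None]) ** (n - k)
    return basis @ f(k / n)


def kink(t):
    """Continuous but not differentiable at t = 0.5 (hypothetical test function)."""
    return np.abs(t - 0.5)


x = np.linspace(0.0, 1.0, 501)
errors = {
    n: float(np.max(np.abs(kink(x) - bernstein(kink, n, x))))
    for n in (10, 40, 160)
}
for n, err in errors.items():
    print(f"n={n:3d}  max error={err:.4f}")
```

The uniform error decreases as the degree grows, but slowly for a function with a kink. This is consistent with the role the lemma plays in the proof: it guarantees existence of a polynomial within any tolerance, without claiming an efficient rate.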

Proof of Theorem 1. For a given

, according to Lemmas 2 and 3, there exists

such that for all

, we have

where

is the Bernstein polynomial of

f, and

for some

(to be chosen later) and

.

By virtue of Lemma 4, we can find

and

such that

Now, combining (8) and (9), we obtain the conclusion of Theorem 1. □
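The claim of Theorem 1, that a finite sum of sinusoids with varying frequencies and linearly spaced phases can approximate a continuous function, can be illustrated with a small numerical experiment. The sketch below makes a simplification that is not part of the theorem: the frequencies are held fixed, so that only the amplitudes are fitted and the problem reduces to ordinary linear least squares. The target $e^x$, the domain [0, 1], the integer frequencies, and the phase spacing over $[0,\pi)$ are all assumptions chosen for the example.

```python
import numpy as np


def sine_features(x, freqs, phases):
    """Matrix with entries sin(freqs[k] * x[i] + phases[k])."""
    return np.sin(np.outer(x, freqs) + phases)


x = np.linspace(0.0, 1.0, 400)          # assumed domain [0, 1]
target = np.exp(x)                      # hypothetical smooth, non-periodic target

K = 12
freqs = np.arange(1, K + 1, dtype=float)              # fixed frequencies (assumption)
phases = np.linspace(0.0, np.pi, K, endpoint=False)   # linearly spaced phases (assumption)

design = sine_features(x, freqs, phases)
amps, *_ = np.linalg.lstsq(design, target, rcond=None)

rel_err = float(np.linalg.norm(design @ amps - target) / np.linalg.norm(target))
print(f"relative L2 error with {K} sinusoids: {rel_err:.2e}")
```

Note that the target is not periodic on the interval, so a classical Fourier series would suffer from boundary effects; the sum above is not restricted to harmonics of the interval length and is therefore free of that constraint.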

4. Numerical Analysis

To evaluate the performance of our sinusoidal approximation, we compared it to the Fourier series for approximating one-dimensional functions. For higher-dimensional problems with two inputs and one output, we benchmarked our approach against two-layer MLPs [10], using either ReLU [31] or sine activation functions, basis-spline KANs (B-SplineKANs), and multi-dimensional truncated Fourier series. In both cases, we consider functions with a single output and compute the relative error as follows:

Based on Theorem 1, we constructed our SineKAN model and analyzed its performance numerically using the following neural network formulation for one-dimensional functions:

where

,

, and

b are the learnable frequency parameters, amplitude functions, and a bias term, respectively. For multi-dimensional functions, Theorem 2 guides the construction of SineKAN layers as follows:

where

and

are learnable amplitude tensors,

and

are learnable bias vectors, and

and

are learnable frequency vectors. For the one-dimensional case, we consider functions defined on a uniform grid of input values from

to 1. These functions pose challenges for convergence in Fourier series due to their singularities or non-periodicity:

The first function,

is non-periodic, has a small magnitude across the domain, and exhibits a strong singularity at

.

The second function,

shows rapid growth and high-frequency oscillations near

.

The third function,

incorporates phase shifts to evaluate the model’s performance and convergence with respect to linearly spaced phases.

The final two functions are particularly challenging for Fourier series convergence, allowing us to test our model’s convergence behavior:

All models are fitted using the Trust Region Reflective algorithm for least-squares regression from the scipy package [32]. Each function is fitted for a default of 100 steps per fitted parameter. The hyperparameters used for model dimensionality on this task are provided in Table 2, where H is hidden layer dimensionality, Grid In and Grid Out are KAN expansion grid sizes, and Degree is the polynomial degree for B-Splines. Grid In and Grid Out entries are scaled together as pairs.
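The fitting procedure described above can be sketched with `scipy.optimize.least_squares` and `method="trf"`. This is a minimal illustration under stated assumptions rather than the configuration used for the reported results: the one-dimensional model form (a sum of sinusoids with learnable frequencies and amplitudes, fixed linearly spaced phases, and a bias), the target function, the initialization, and the relative $L^2$ error $\lVert \hat{y}-y\rVert_2/\lVert y\rVert_2$ are all assumed for the example. The evaluation budget of 100 per parameter mirrors the default mentioned in the text.

```python
import numpy as np
from scipy.optimize import least_squares


def sine_model(params, x, phases):
    """Sum of sinusoids with learnable frequencies, amplitudes, and a bias."""
    k = phases.size
    freqs, amps, bias = params[:k], params[k:2 * k], params[2 * k]
    return np.sin(np.outer(x, freqs) + phases) @ amps + bias


def relative_l2(pred, target):
    """Relative L2 error (assumed definition)."""
    return float(np.linalg.norm(pred - target) / np.linalg.norm(target))


x = np.linspace(0.0, 1.0, 200)
target = np.exp(x) * np.sin(5.0 * x)                  # hypothetical target function

k = 6
phases = np.linspace(0.0, np.pi, k, endpoint=False)   # fixed, linearly spaced (assumption)
p0 = np.concatenate([np.arange(1.0, k + 1), np.full(k, 0.1), [0.0]])

err_before = relative_l2(sine_model(p0, x, phases), target)
fit = least_squares(
    lambda p: sine_model(p, x, phases) - target,      # residual vector
    p0,
    method="trf",                                     # Trust Region Reflective
    max_nfev=100 * p0.size,                           # 100 evaluations per parameter
)
err_after = relative_l2(sine_model(fit.x, x, phases), target)
print(f"relative L2 error: {err_before:.3f} -> {err_after:.2e}")
```

Because the frequencies enter the model nonlinearly, the result depends on the initialization; in practice one might repeat the fit from several starting points and keep the best one.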

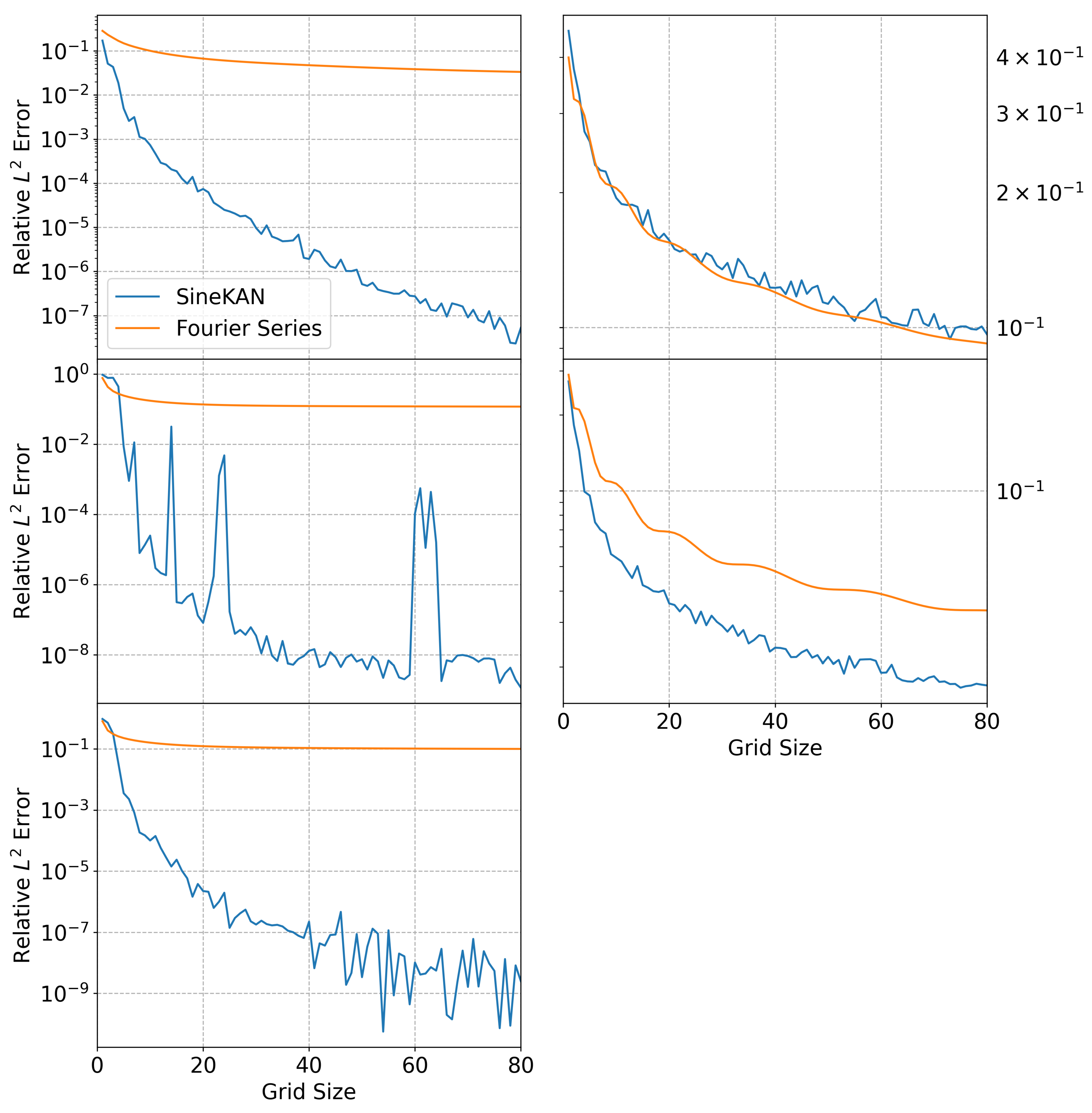

Results for 1D functions are shown in Figure 1. For (19)–(21), the SineKAN approximation significantly outperforms the Fourier series approximation. In (22), performance is roughly comparable between the two. We observe that the function in (22) has less regularity, which causes both the SineKAN and the Fourier series to converge slowly.

For multi-dimensional functions, we benchmarked the following two equations on a 100 by 100 mesh grid of input values ranging from 0.01 to 1:

The first function,

with parameters

,

,

, and

, features Gaussian-like terms that create a complex surface, suitable for testing convergence on smooth but non-trivial landscapes.

The second function (Rosenbrock function),

with parameters

and

, represents a nonlinear, non-symmetric surface, ideal for evaluating convergence in challenging multi-dimensional optimization problems.
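To make the two-dimensional benchmark setup concrete, the sketch below builds the 100 by 100 mesh grid on [0.01, 1] and pushes it through a two-layer network of the kind described earlier in this section (amplitude tensors, frequency vectors, bias vectors, and fixed linearly spaced phases). The exact layer formula is an assumption here: each output is taken to be $\sum_{i}\sum_{g} A_{o,i,g}\sin(\omega_g x_i+\varphi_{i,g})+b_o$, and the helper names, layer sizes, phase layout, and random initialization are hypothetical.

```python
import numpy as np


def sinekan_layer(x, amps, freqs, phases, bias):
    """Assumed layer form: out[b, o] = sum_{i,g} amps[o,i,g] * sin(freqs[g]*x[b,i] + phases[i,g]) + bias[o]."""
    z = np.sin(x[:, :, None] * freqs + phases)        # shape (batch, d_in, grid)
    return np.einsum("big,oig->bo", z, amps) + bias


def init_layer(rng, d_in, d_out, grid):
    """Random amplitudes, integer frequencies, linearly spaced phases (all assumptions)."""
    amps = rng.standard_normal((d_out, d_in, grid)) / (d_in * grid)
    freqs = np.arange(1.0, grid + 1)
    phases = np.linspace(0.0, np.pi, d_in * grid, endpoint=False).reshape(d_in, grid)
    bias = np.zeros(d_out)
    return amps, freqs, phases, bias


# 100 x 100 mesh grid of inputs in [0.01, 1], flattened to (10000, 2).
u = np.linspace(0.01, 1.0, 100)
X, Y = np.meshgrid(u, u)
points = np.stack([X.ravel(), Y.ravel()], axis=1)

rng = np.random.default_rng(0)
hidden_dim, grid_in, grid_out = 4, 8, 8               # hypothetical sizes
layer1 = init_layer(rng, 2, hidden_dim, grid_in)
layer2 = init_layer(rng, hidden_dim, 1, grid_out)

hidden = sinekan_layer(points, *layer1)
output = sinekan_layer(hidden, *layer2)
print(points.shape, hidden.shape, output.shape)
```

With this layout, the number of trainable values per layer is dominated by the amplitude tensor of size output × input × grid, which is the quantity that the hidden dimensionality and grid sizes control.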

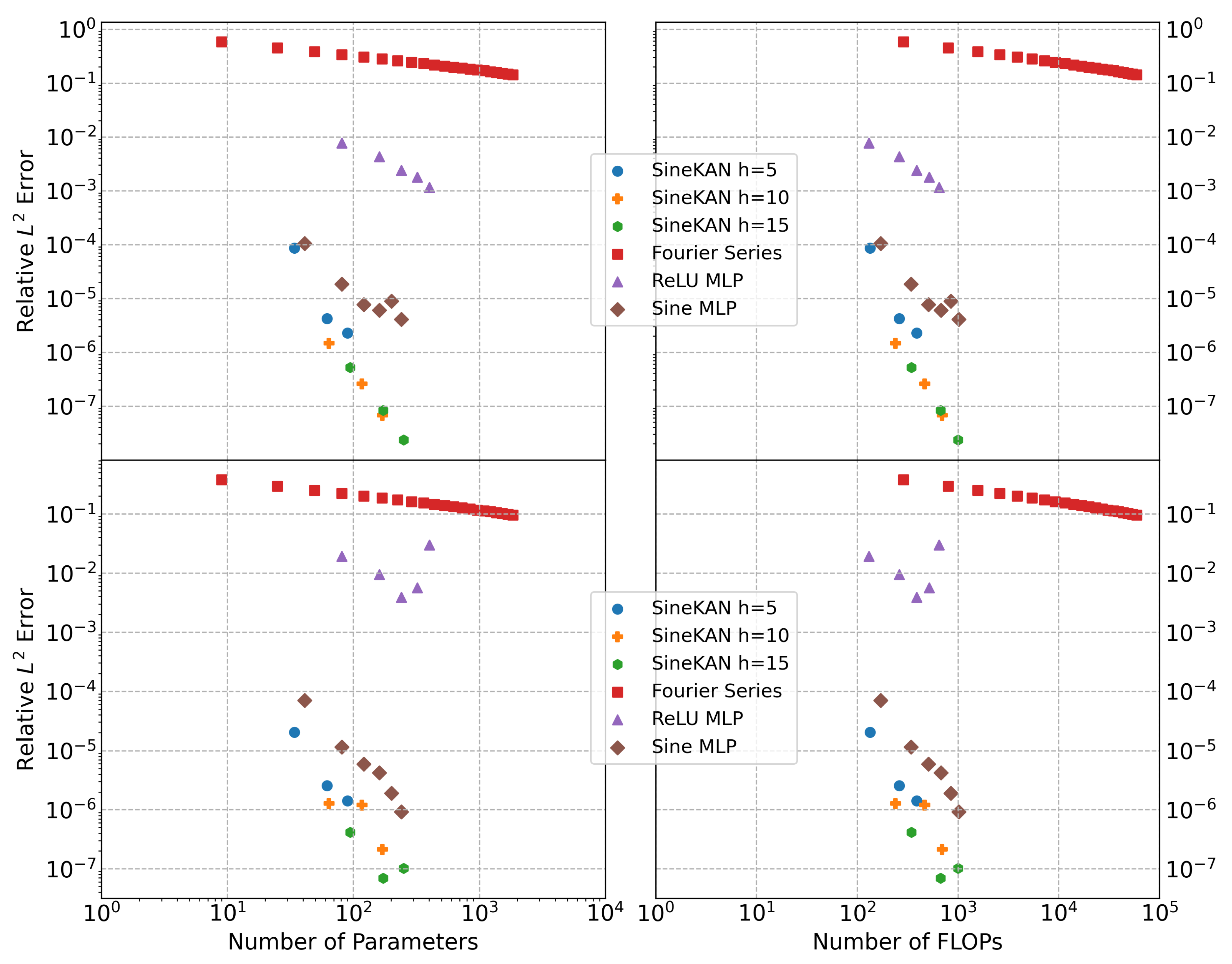

We show in Figure 2 that the two-layer SineKAN outperforms the two-layer MLP with sinusoidal activation functions as a function of the number of parameters. The MLP with ReLU activations performs substantially worse, with an error several orders of magnitude higher for a given number of parameters, and the Fourier series performs worse still, with a characteristic error roughly one to two orders of magnitude greater than that of the two-layer MLP with ReLU.

We also computed performance as a function of the number of relative FLOPs or compute units. To do this calculation, we ran 10 million iterations using numpy arrays of size 1024 to estimate the relative compute time of addition, multiplication, ReLU, and sine; we found that, when setting addition and multiplication to approximately 1 FLOP, ReLU costs an estimated 1.5 FLOPs, and sine functions cost 12 FLOPs. We carried out similar estimates in PyTorch (https://pytorch.org/, accessed on 15 September 2025) and found that the relative FLOPs for sine would be closer to 3.5 in PyTorch, and the relative FLOPs for ReLU would be around 1 FLOP. Figure 2 is based on numpy estimates.
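A relative-cost estimate of this kind can be reproduced along the following lines. The sketch is an assumption about how such a measurement might be set up, not the script used for the reported numbers: it times element-wise addition, multiplication, ReLU (as `np.maximum(a, 0)`), and sine on arrays of size 1024 with the standard-library `timeit` module and normalizes by the mean cost of addition and multiplication. The iteration count is reduced here so that the example finishes quickly, and the resulting ratios depend on hardware, numpy build, and array size.

```python
import timeit

import numpy as np


def relative_costs(size=1024, repeats=20000):
    """Estimate per-operation cost relative to the mean of add and multiply."""
    rng = np.random.default_rng(0)
    a = rng.standard_normal(size)
    b = rng.standard_normal(size)
    out = np.empty_like(a)          # preallocated so allocation is not timed

    ops = {
        "add": lambda: np.add(a, b, out=out),
        "mul": lambda: np.multiply(a, b, out=out),
        "relu": lambda: np.maximum(a, 0.0, out=out),
        "sin": lambda: np.sin(a, out=out),
    }
    # Take the best of three runs to reduce noise from other processes.
    times = {
        name: min(timeit.repeat(op, number=repeats, repeat=3))
        for name, op in ops.items()
    }
    baseline = 0.5 * (times["add"] + times["mul"])
    return {name: t / baseline for name, t in times.items()}


costs = relative_costs(repeats=2000)
for name, ratio in costs.items():
    print(f"{name:>4s}: {ratio:.2f} relative units")
```

Since fixed per-call overhead is included in every timing, small arrays tend to compress the ratios and large arrays tend to widen them, which is one reason different frameworks can report different relative costs for the same operation.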

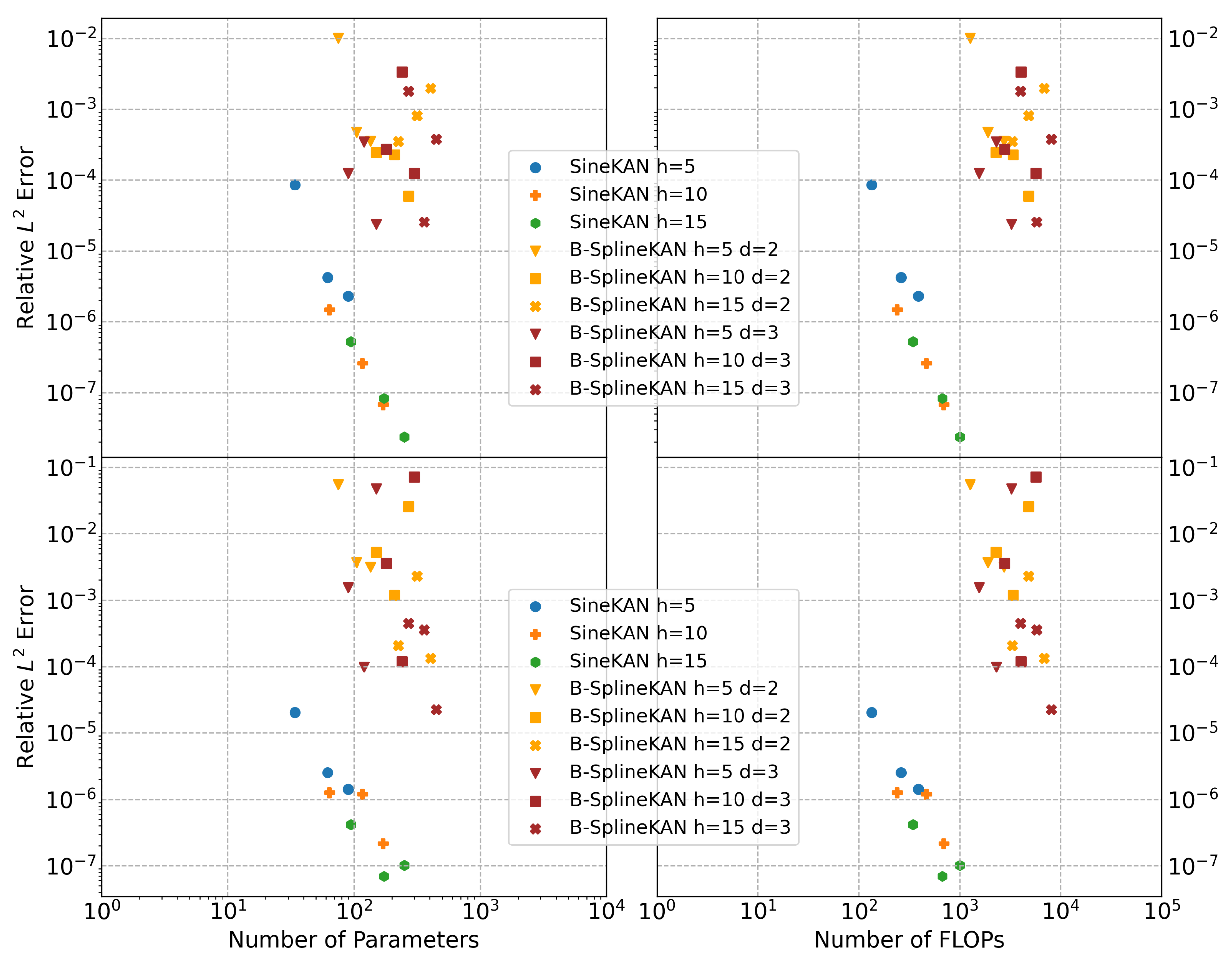

Further, in Figure 3, we show that the SineKAN model scales well compared to B-SplineKAN. The characteristic error with comparable numbers of parameters is typically two full orders of magnitude less for SineKAN, while B-SplineKAN models require substantially more FLOPs for the same number of parameters. Moreover, we find that, when compared to MLP and SineKAN models, B-SplineKAN models have significantly worse consistency in convergence at larger model dimensionalities, which may necessitate much more precise tuning of hyperparameters or higher-order optimization algorithms.

In Table 3, we show the best-performing models explored. Here, we also evaluate (for comparison) the number of nodes in the computational graphs of the models, which can result in a significant bottleneck in backpropagation optimization. The characteristic error for SineKAN is substantially lower than for all other models, including the second-best MLP with sine activations, when controlling for similar numbers of parameters and FLOPs. MLP models with sine activations still achieve comparable performance that is within one to two orders of magnitude of error but require a smaller number of nodes, meaning their optimal training times could potentially be lower as a result.

5. Discussion

The original implementation of the KAN model, developed by Liu et al., used basis-spline functions [22]. These were proposed as an alternative to MLP due to their improved explainability, domain segmentation, and strong numerical performance in modeling functions. However, later work showed that, when accounting for increases in time and space complexity, the basis-spline KAN underperformed compared to MLP [27]. Previous work on the Sirens model has shown that, for function modeling, particularly for continuously differentiable functions, sinusoidal activations can improve the performance of MLP architectures [33]. This motivated the development of the SineKAN architecture, which builds on both concepts by combining the learnable on-edge activation functions and on-node weighted summation of KAN with the periodic activations from Sirens [29].

Previous work in [29,34] has established that, on tasks that require large models and with discontinuous space mappings, the SineKAN model can perform comparably to, if not better than, other commonly used fundamental machine learning models, including LSTM [12], MLP [10], and B-SplineKAN [22]. We extend these two previous works by providing a robust constructive proof for the approximation power of the SineKAN model. We show that a single layer is sufficient for the approximation of arbitrary one-dimensional functions and that a two-layer SineKAN is sufficient for the approximation of arbitrary multivariable functions bounded by the same constraints as the original KART [35].

In Figures 1–3, we show that SineKAN models can achieve low errors in modeling mathematical functions with features such as rapid- and variable-frequency oscillations. For two-dimensional functions, we show that SineKAN outperforms MLP, including MLP with sinusoidal activations, with flexible model parameter combinations when accounting for both time and space complexity of the models. This strongly motivates further exploration of this model for numerical approximation tasks.

Given the inherent periodic nature of sinusoidal functions, our approximation framework (2) shows strong potential for modeling periodic and time-series data. Future work will explore the extension of SineKAN to continual learning tasks, particularly in scenarios involving dynamic environments or non-stationary data. Further directions include theoretical analysis of generalization bounds, integration with neural differential equations, and applications in signal processing and real-time prediction systems.