1. Introduction

Intentions are ubiquitous and play a critical part in daily decision-making, helping to guide future actions and logical choices. Still, as far as we are aware, no significant attempt has been made to model and apply the function of intent in decision-making within a framework of rational choice. Because no existing system incorporates true intention recognition into decision-making, the intentions of other pertinent agents are typically assumed to be provided as input [1]. Intentions shape decisions by linking them to preferences and the pursuit of utility maximization: decision problems are resolved based on preferences, with the overall goal of maximizing utility. In addition, intentions provide a highly useful foundation for defining what it means to perform and repeat an action [2].

Decision-making involves cognitive and affective stages in which individuals evaluate options and form intentions before acting. While some decisions stem from deliberate reasoning, others are shaped by implicit attitudes, the unconscious judgments formed through experience and socialization that influence how choices are perceived. These attitudes may align with explicit preferences or diverge from them, often revealing real intention more accurately in fast or intuitive contexts. This interplay underpins the study of how internal states are expressed through nonverbal behaviors, such as hand gestures, within decision-making scenarios [3].

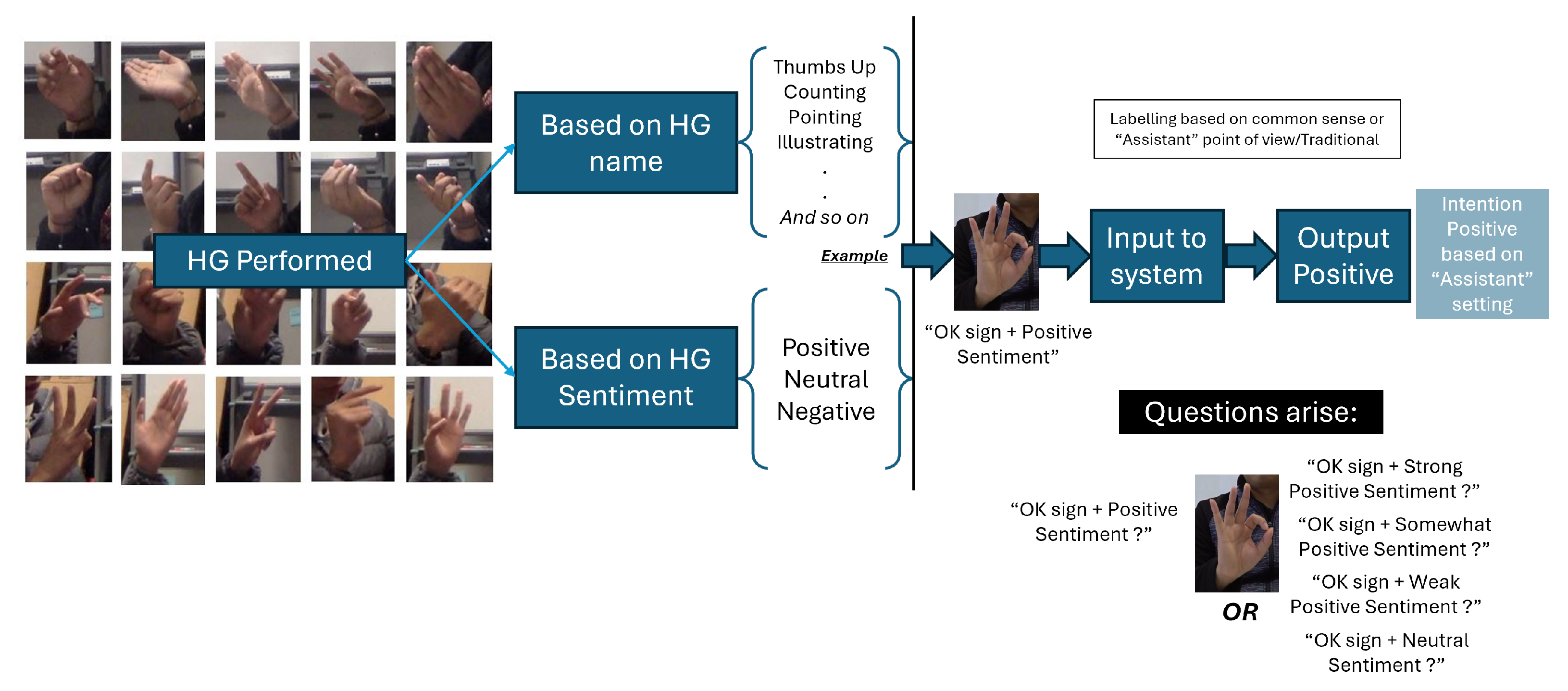

Building on this perspective, we turn to the role of hand gestures as observable expressions of intention within decision-making contexts. We investigate the real intention behind human hand gestures as part of an action through an experiment conducted in our laboratory. For example, hand gestures like “Thumbs Up” and “Thumbs Down” are frequently thought to have universal meanings and are usually connected to approval or disapproval. This premise is present in many gesture recognition systems, which use predefined visual labels to train models to recognize and categorize gestures [4,5]. Nevertheless, gestures do not always express clear intentions. Gesture interpretation can vary depending on the individual, situational context, or emotional state. Therefore, a seemingly Positive gesture could convey ambiguity, neutrality, or even social camouflage [6,7].

These disparities draw attention to a disconnect between the actual meaning that gestures convey and their predetermined labeling. Conventional techniques for classifying gestures frequently depend on strict classifications (Positive, Neutral, and Negative), which ignore the complexity and diversity of human expression. Due to societal, cultural, or emotional limitations, some people may purposefully conceal their genuine intentions [8]. The same gesture may therefore mean different things to different people. To address this gap, gestures should be viewed as expressive, context-dependent behaviors rather than as fixed visual representations. They are responsible for both articulating and forming ideas in nonverbal communication [9,10]. In contrast to direct physical movements, gestures are the result of internal emotional and mental states [11,12].

To choose a gesture that corresponds with their internal state, people instinctively combine sensory input, emotional experience, and social context [3]. Before choosing a gesture in response to external cues, the human mind quickly and frequently integrates sensory information, emotional evaluation, and social concerns [5,13]. As a result, depending on personal circumstances, the selected gesture serves as a channel for communicating the results of this internal evaluation process, either explicitly or implicitly (Figure 1).

In this study, we have three main considerations: the decision-making process, the hand gestures resulting from the decision-making process, and the real intention behind the hand gestures. To understand the hand gestures, this study also introduces a method that integrates participant-selected descriptive words with hand gestures to characterize human intention using a fuzzy logic-based framework. Rather than interpreting gestures in isolation, this method grounds meaning in participants’ sentiment expressions, conveyed through language, offering a richer and more personalized understanding of gesture interpretation [5,13].

This approach is operationalized through a tea-tasting experiment, where participants expressed their impressions using both hand gestures and descriptive words. These word–gesture combinations reveal that a single gesture may carry varied emotional meanings based on the individual’s subjective evaluation [14]. For example, gestures that are usually linked with Positive sentiment, such as “OK” or “Thumbs Up,” can represent ambivalence, hesitancy, or societal conformity. These findings challenge the assumption of fixed gesture meanings and highlight the role of personal and situational context in shaping interpretation [7].

To accommodate this interpretive variability, the study employs a fuzzy logic-based system that maps gestures onto a continuous sentiment scale [15]. Fuzzy logic is particularly suited to modeling the ambiguity inherent in nonverbal expression, as it allows for partial membership across sentiment categories. By linking the observable features of gestures [16] with the underlying affective states inferred from language, the system minimizes misclassification and supports more nuanced, flexible, and human-aligned interpretations [17]. In this sense, sentiment analysis is positioned as a stage within the broader investigation of hand gesture intention in the decision-making process, rather than as an endpoint, providing the empirical basis for future intention-focused studies.

1.1. Significance of the Study

This study challenges the assumption that hand gestures convey universally fixed meanings by examining how individuals subjectively interpret and express intention through gesture.

It introduces a fuzzy logic-based framework to model interpretive ambiguity, enabling gestures to partially belong to multiple sentiment categories and better reflect internal cognitive and emotional states.

The proposed approach offers a more human-aligned interpretation system by moving beyond rigid classifications and enabling flexible, graded modeling of intention relevant for advancing gesture recognition, decision-making analysis, and human–computer interaction.

1.2. Research Objectives

This study sets out the following objectives:

To investigate how individuals express intuitive or affective judgments through hand gestures in response to external stimuli.

To analyze the variability in gesture interpretation by modeling how a single gesture can represent a range of sentiment expressions based on individual perception.

To design and validate a fuzzy inference system capable of representing non-binary, context-sensitive interpretations of intention using hand gesture data and participant-selected descriptive words.

1.3. Hypotheses

Grounded in the need to account for subjectivity and interpretive ambiguity in gesture-based communication, this study proposes the following hypotheses:

H1: The interpretation of hand gestures is not fixed but varies by individual and context, with some gestures showing consistent meanings while others exhibit significant ambiguity.

H2: A fuzzy logic-based interpretation system can better accommodate interpretive uncertainty and overlapping sentiment boundaries than traditional categorical classification approaches.

2. Related Work

2.1. Intention in Decision-Making Process

In 1991, Mathieson [18], in the paper entitled “Predicting User Intentions: Comparing the Technology Acceptance Model (TAM) with Theory of Planned Behavior (TPB)”, concluded that both TAM and TPB can predict the intention to use an information system quite well. However, TAM supplies only very general information on users’ opinions by identifying two key variables that affect users’ attitudes towards the acceptance and adoption of new information systems, namely perceived usefulness and perceived ease of use [19], while TPB provides more specific information that can be a better guide for development.

Both the Technology Acceptance Model (TAM) and the Theory of Planned Behavior (TPB) are widely recognized psychological frameworks that explain how individual intentions influence human behavior. TPB, for example, holds that three main elements influence a person’s behavioral intentions: their attitudes about the behavior, subjective norms (also referred to as social norms), and perceived behavioral control. These elements combine to forecast a person’s likelihood of engaging in a particular behavior [20].

However, how is intention related to human decision-making? Human decision-making is commonly examined through theoretical frameworks such as the Theory of Planned Behavior (TPB) and the Technology Acceptance Model (TAM), particularly in the context of technology adoption. Notably, the TPB highlights behavioral intention as a central component, representing an individual’s motivation to perform a specific action. This intention is shaped by factors such as personal attitudes, perceived behavioral control, and social norms [21]. These motivational elements collectively influence whether an individual is likely to follow through with a given behavior, making intention a critical predictor of actual decision-making.

Understanding intention provides valuable insight into the cognitive and motivational mechanisms underlying human decision-making [3]. Whether in the context of technology adoption or broader behavioral domains, intention serves as a bridge between internal beliefs and observable actions. By examining how intentions are formed, influenced, and eventually translated into behavior, researchers can gain a deeper understanding of the dynamic processes that guide human decision-making, highlighting the need for models that can capture the complexity and variability of human intention.

2.2. Interpreting Human Intention Beyond Gesture Classification

Researchers have increasingly recognized that speech is often accompanied by gestures, posture, and facial expressions, which serve as embodied manifestations of inner mental processes [22]. In addition, Grice [23] claimed in 1957 that language is an intentional action, a view that has been widely accepted in pragmatics and psycholinguistics: humans are aware that they are capable of numerous automated tasks, but they typically do not include language in this list. People converse and drive simultaneously, but we do not typically assume that they are driving intentionally and conversing on autopilot.

Gestures are embodied manifestations of inner mental processes [24], not merely physical signs. According to [10], gestures have two cognitive purposes: they help people externalize and organize their thoughts, and they help people communicate with others [25]. However, many gesture-based computer systems continue to use strict mappings between gesture form and predetermined meaning, which frequently oversimplifies gesture interpretation. For many years, researchers have been fascinated by hand gesture recognition because it provides a natural and intuitive way for humans to interact with machines [26]. However, understanding the real intention behind gestures still presents unresolved complexities, since most approaches study the hand gesture in isolation [4,25,27].

Conventional approaches to gesture interpretation often rely on predefined classification schemas or socially accepted meanings to assign value to gestures [17,28]. With the emergence of machine learning and deep learning techniques, modern systems now employ data-driven architectures to improve accuracy and responsiveness [29]. These model designs have enabled real-time classification of both static and dynamic gestures, greatly expanding the range of use cases, particularly in assistive technologies, augmented reality, and immersive environments [30,31,32]. However, these models frequently fail to capture the intricate, dynamic, and context-dependent nature of human intention [33,34,35].

Instead of only recognizing gestures, a realistic gesture interface needs to understand the entire process. In such an interface, users are not required to learn, memorize, or perform predefined motions. They are free to make any gesture they want based on their common sense, which includes the function’s semantics and their everyday interactions with both people and technology. By taking into account the interaction context as well as the gesture, the interface automatically associates the gesture with the function [36]. However, gesture understanding is more difficult than gesture identification [37].

2.3. Fuzzy Logic for Intention Analysis

Comprehending the fundamental mechanism of human decision-making using intention estimation is essential for human–robot or human–agent interaction. While many sports and everyday activities exhibit flexible cooperative human behavior, the process of cooperative decision-making remains unclear [38]. The nature of intentionality is central to ongoing debates in the philosophy of mind, particularly regarding what the mind is and what it means to possess one. Intention raises important considerations about key mental states such as seeing, remembering, believing, wishing, hoping, knowing, intending, feeling, and experiencing [33].

In 2015, a study titled “Algorithm for driver intention detection with fuzzy logic and edit distance” [39] noted that no technology currently exists that can directly measure a driver’s intention or anticipate it with precision in every circumstance. Only projections that include uncertainty are currently feasible. It is possible to deduce the driver’s goal by observing their behavior [40,41], creating a mental model, and then forecasting future behaviors. To create an algorithm, the input variables must be chosen, the necessary transformations must be calculated, and the algorithm’s intended output must be specified, a concept that is the basis of fuzzy logic.

In another study, it was concluded that fuzzy set theory is ideal for clinical and educational evaluation because it offers a mathematical foundation for simulating ambiguity and gradation in human judgment. In contrast to binary or discrete scoring methods, fuzzy set theory has been utilized in medical education to assess student performance, clinical decision-making, and communication abilities. This approach provides more nuanced feedback [42]. In addition, researchers are now also trying to understand intention reasoning and clarification failure by combining large language models (LLMs) with fuzzy logic [43]. In contrast to humans, who make decisions based on experience, LLMs are unable to infer complicated situations beyond the incoming data due to a lack of common sense. The inability of LLMs to retain previous context when dealing with complex intentions or attitude alterations can result in mistakes when attempting to infer implicit user demands.

Fuzzy set theory is a popular branch of computational intelligence that focuses on creating systems that can process flexible data [44]. Built upon this foundation, fuzzy logic emerges as one of the most effective quantitative methods for reasoning under uncertainty. One of its key strengths lies in the close relationship between verbal descriptions and the resulting mathematical models, allowing for intuitive yet rigorous representation of human-like reasoning [41]. The suggested method assumes that qualitative data is obtained from experts who express their opinions based on their expertise, experience, and intuition [45].

Fuzzy logic is a computing technique derived from the mathematical study of multivalued logic, in which a single variable can take a range of possible truth values. Fuzzy logic uses truth values between 0 and 1, which means that the method can produce solutions based on data ranges rather than discrete data points, in contrast to classical logic, which requires statements to be either true or false. As a result, data with subjective or relative definitions can be interpreted using fuzzy logic. Absolute falsehoods or claims of absolute truth are uncommon in real-world contexts because people view and understand information differently [46].

In this study, fuzzy logic is employed as a flexible framework for modeling human intention under conditions of ambiguity, subjectivity, and imprecision. By enabling graded reasoning rather than binary categorization, it is well-suited for interpreting gestures whose meanings are context-dependent and individually variable. Applied within affective computing, decision-making, and human–computer interaction, this approach captures the complexity of real-world behavior while remaining interpretable and adaptable, supporting more nuanced and human-centered interpretations that reflect the dynamic and approximate nature of internal states.

3. Methodology

3.1. Experiment

This study begins with an experiment conducted in our laboratory, referred to as the “Tea Tasting Experiment”. The details of the experiment are as follows:

Participants: The study involved 55 participants, comprising 18 females and 37 males. The participants represented diverse backgrounds in terms of nationality, field of expertise, and age distribution. All participants were students from the Kyushu Institute of Technology (Kyutech), Wakamatsu Campus, Tobata Campus, Iizuka Campus, and Kitakyushu City University.

Equipment: The experiment utilized three types of cameras: Logitech HD 1080 Webcam (Logitech Inc., San Jose, CA, USA; camera 1), Sony RX0 (Sony Corporation, Tokyo, Japan; camera 2), and MacBook Pro 2017 built-in camera (Apple Inc., Cupertino, CA, USA; camera 3). In addition, five types of ready-to-drink (RTD) tea beverages were sourced from Japan.

Task: In this experiment, participants were presented with five types of ready-to-drink (RTD) tea from Japan. They were instructed to taste each tea and express their preference using a hand gesture that reflected their perception of the flavor. Following this, participants were asked to select three colors that best represented their sensory experience and overall impression of each tea. This approach aimed to explore the relationship between taste perception, hand gestures, and color associations, providing deeper insights into the implicit cognitive and emotional responses elicited by different tea flavors.

The data collection for this experiment was approved by the Ethics Committee of Kyushu Institute of Technology (Approval No. 24-11: “Hand gesture video data collection experiment for observation of decision-making process”).

3.2. Experiment Flow

Data collection was initially conducted in our laboratory, with participants recruited from the Kyutech Wakamatsu Campus and Kitakyushu City University. The experimental setup was subsequently relocated to the Kyutech Iizuka Campus and finally to the Kyutech Tobata Campus. The procedures and treatment protocols were identical across all locations. Each participant entered the experimental room individually, and before the experiment began, comprehensive instructions were provided detailing the procedures and tasks to be performed at each stage. Written informed consent was obtained from all participants.

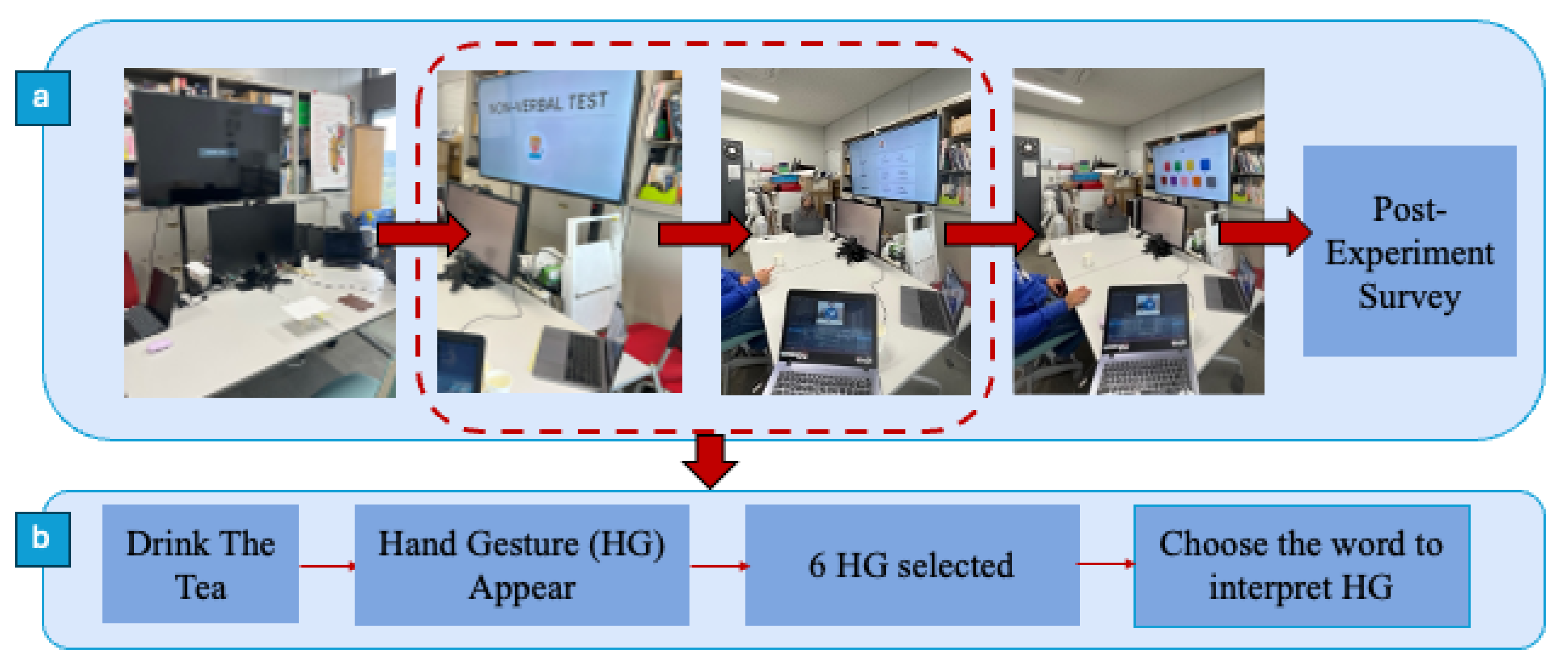

Figure 2a shows the experimental setup, which was designed to ensure ecological validity, uniformity, and high-quality video recording. Several cameras supported a dual-monitor station positioned in front of the participants, enabling synchronized recording. The session began with the prompt “non-verbal test,” marking the transition to a gesture-based assessment mode. Five distinct samples of ready-to-drink (RTD) tea were given to participants sequentially.

The lower segment of Figure 2b presents the procedural flow of the task in four discrete stages. When paired with the gesture form, this self-reported verbal data provided a semantic layer that enabled a more complex and participant-centered understanding of intention. The modeling of interpretive ambiguity and the depiction of gesture-based mood as graded rather than categorical were made possible by this integrated approach, which served as the basis for the later use of fuzzy logic.

3.3. Data Description

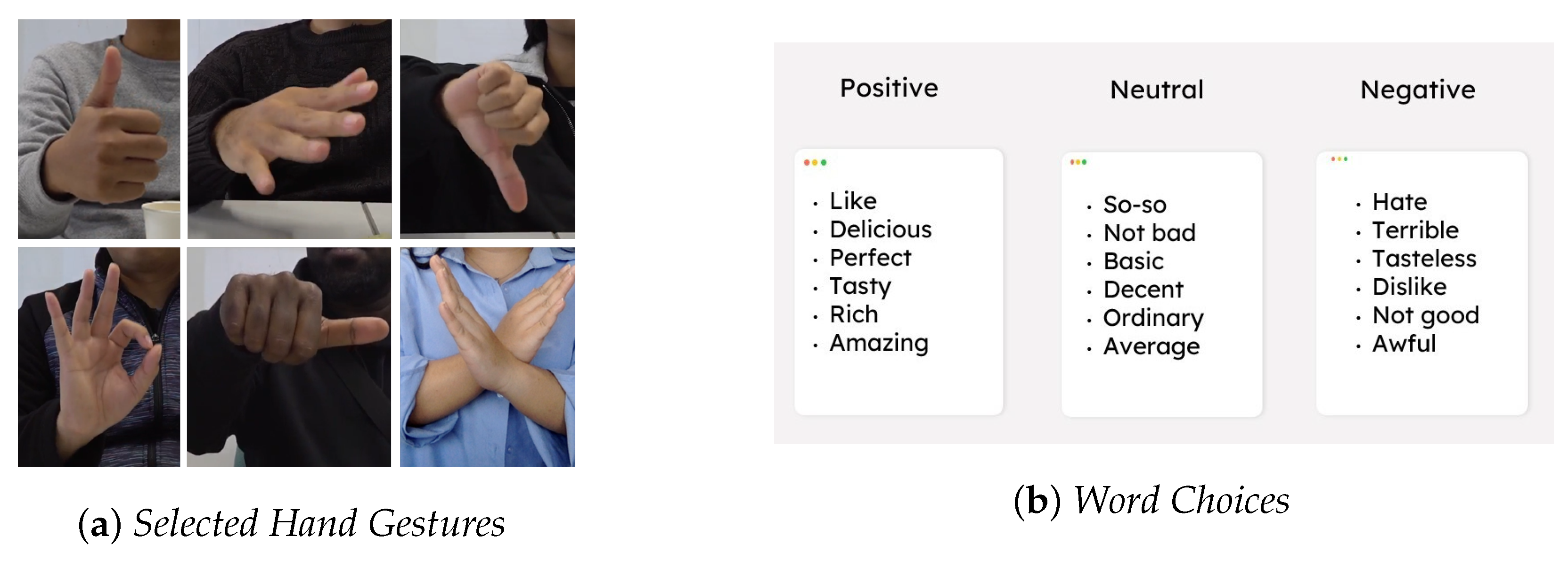

Gesture: In our experimental study, we identified twelve distinct configurations of hand gestures, which varied in execution: some were performed with one hand and others with both hands, and they were further differentiated by whether the left or right hand was used. For this study, six gestures (Figure 3a) were selected based on the frequency and consistency of sentiment-related words chosen by participants to describe their gestures. Specifically, two gestures were predominantly associated with Positive descriptors, two with Negative descriptors, and two with Neutral descriptors. These selected gestures serve as the core dataset for analyzing how hand gestures correspond to perceived intention, as inferred through participant-generated verbal interpretations.

Words: To classify these gestures, we analyzed both their physical characteristics and the semantic interpretations provided by participants. After performing each gesture, participants were asked to select descriptive words (Figure 3b) that best conveyed the meaning or intention behind their actions. These words were then categorized into three sentiment classes: Positive, Neutral, and Negative. Importantly, participants were not restricted to a specific sentiment category; they were free to choose any words they felt most accurately reflected the essence of their hand gestures. This open-ended approach enabled a more nuanced understanding of how individuals use gestures to convey varying degrees of emotional and cognitive intent.

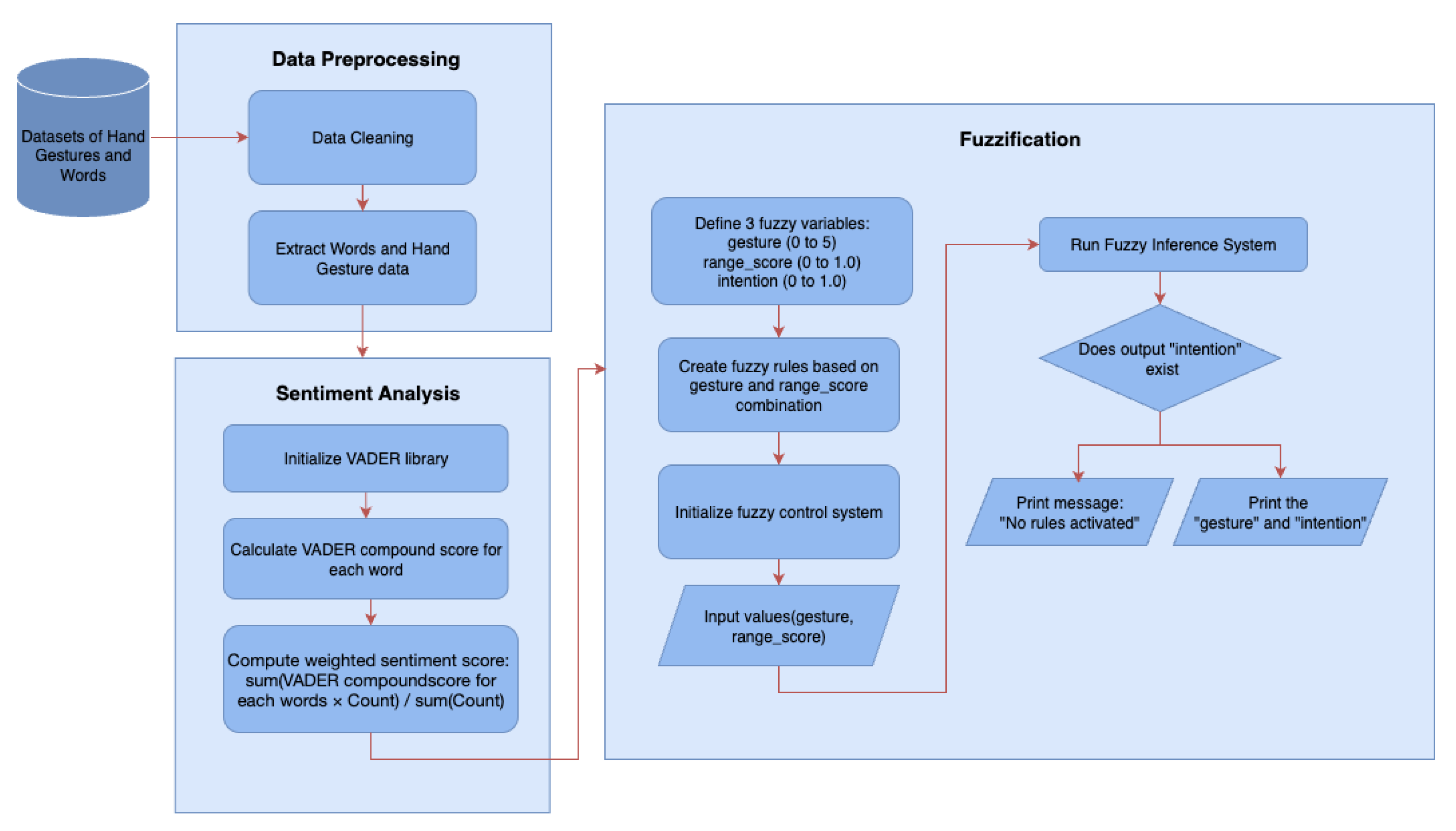

Figure 4 shows the overall workflow of the proposed system, which combines verbal sentiment analysis with hand gesture data in an inference framework based on fuzzy logic. Data preparation, which involves cleaning and organizing raw datasets with participant-generated hand gestures and descriptive words, is the first step in the process. Color associations were also collected during the experiment, but the present study focuses on gestures and words; the analysis of color data will be presented in future work. This workflow enables the system to model overlapping gesture meanings by leveraging verbal sentiment cues and fuzzy reasoning, resulting in more human-aligned interpretations of nonverbal behavior.

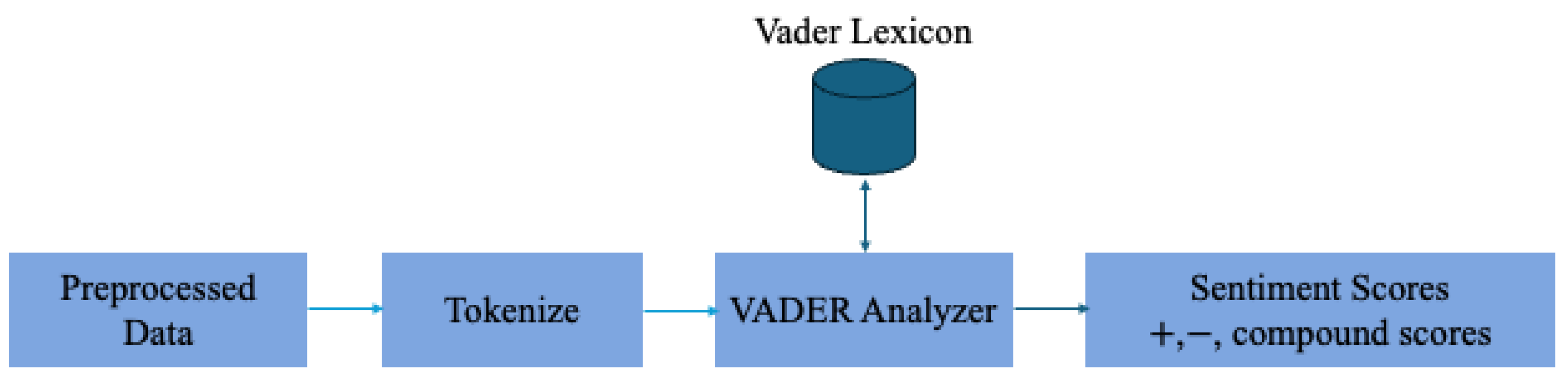

3.4. Sentiment Analysis of Gesture–Word Association Using VADER

In human–computer interaction (HCI), sentiment analysis (SA) and emotion detection are essential for enabling systems to interpret and respond to users’ emotional states [47]. These methods enhance interaction quality by aligning system feedback with user intent [48]. Among the SA techniques, lexicon-based approaches are preferred for their interpretability, computational efficiency, and transparency.

One widely adopted lexicon-based tool is the Valence Aware Dictionary and Sentiment Reasoner (VADER). VADER is particularly effective for short, informal text, such as social media posts or participant annotations, and operates efficiently in real-time or sparse-data scenarios. It combines a predefined sentiment lexicon with heuristics (such as capitalization, punctuation, degree modifiers, and conjunctions) to compute polarity and compound scores [28,49]. The compound score, normalized between −1 (Extremely Negative) and +1 (Extremely Positive), represents the overall emotional valence of the input [50].
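The normalization step can be illustrated with a short sketch. To the best of our knowledge, the reference VADER implementation squashes the summed word valences with x / sqrt(x² + α), using α = 15; the function name `normalize_compound` and the sample inputs below are illustrative assumptions rather than part of this study.

```python
import math


def normalize_compound(raw_sum: float, alpha: float = 15.0) -> float:
    """Squash an unbounded summed valence into the interval [-1, 1].

    ASSUMPTION: alpha = 15 follows the default believed to be used by the
    reference VADER code; it is not a parameter reported in this study.
    """
    score = raw_sum / math.sqrt(raw_sum * raw_sum + alpha)
    # Clamp defensively so rounding can never push the value outside [-1, 1].
    return max(-1.0, min(1.0, score))


# Illustrative raw valence sums (hypothetical values).
for raw in (-4.0, 0.0, 1.9, 4.0):
    print(raw, round(normalize_compound(raw), 4))
```

The mapping is odd-symmetric and saturates smoothly, so strongly worded descriptors approach ±1 without ever exceeding it.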

In this study, VADER was applied to the three descriptive words participants selected after each hand gesture. These self-reported descriptors provide a semantic complement to gesture data, reflecting participants’ internal evaluations and intentions. Converting this qualitative input into sentiment scores enables quantification of the affective meaning behind gestures.

Figure 5, adapted from [50], illustrates the sentiment analysis pipeline.

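To compute the final sentiment score for each gesture, a weighted average is applied as follows:

$$S_g = \frac{\sum_{i=1}^{n} s_i \, c_i}{\sum_{i=1}^{n} c_i} \qquad (1)$$

where $s_i$ is the VADER compound score of the $i$-th descriptive word, $c_i$ is the number of times that word was selected for the gesture, and $n$ is the number of distinct words.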

This formula aggregates the affective weight of all descriptors for a gesture, producing a single value that represents its collective emotional interpretation.

Algorithm 1 details the classification process.

| Algorithm 1 Gesture Sentiment Classification Using VADER |

- 1: procedure ClassifyGestureSentiment(gesture_words)
- 2: Combine all words into a list: gesture_list
- 3: for each word in gesture_list do
- 4: Compute VADER_Score[word]
- 5: end for
- 6: for each word in gesture_list do
- 7: WeightedValue[word] ← VADER_Score[word] × Count[word]
- 8: end for
- 9: WeightedSum ← sum of all WeightedValue
- 10: TotalCount ← sum of all word counts
- 11: WeightedScore ← WeightedSum ÷ TotalCount
- 12: return WeightedScore
- 13: end procedure

This approach bridges the gap between affective modeling and linguistic descriptors by allowing a quantitative assessment of the semantic component underlying each gesture.
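The weighted-average procedure in Algorithm 1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's implementation: `TOY_SCORES`, `toy_score`, and `weighted_gesture_score` are hypothetical names, and the toy lexicon stands in for real VADER compound scores so that the example runs without external packages.

```python
from collections import Counter
from typing import Callable, Iterable

# ASSUMPTION: hypothetical stand-in lexicon; real scores would come from VADER.
TOY_SCORES = {"good": 0.5, "okay": 0.25, "bad": -0.5}


def toy_score(word: str) -> float:
    """Return a placeholder compound score (0.0 for unknown words)."""
    return TOY_SCORES.get(word.lower(), 0.0)


def weighted_gesture_score(
    words: Iterable[str],
    score_fn: Callable[[str], float] = toy_score,
) -> float:
    """Frequency-weighted mean of word scores for one gesture (Algorithm 1)."""
    counts = Counter(w.lower() for w in words)      # Count[word]
    total = sum(counts.values())                    # TotalCount
    if total == 0:
        raise ValueError("no descriptive words supplied for this gesture")
    weighted_sum = sum(score_fn(w) * c for w, c in counts.items())
    return weighted_sum / total                     # WeightedScore


# Hypothetical responses collected for a single gesture.
responses = ["good", "good", "okay", "bad"]
print(weighted_gesture_score(responses))
```

With the `vaderSentiment` package installed, `score_fn` could instead wrap `SentimentIntensityAnalyzer().polarity_scores(word)["compound"]`; the weighting logic itself would be unchanged.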

3.5. Hand Gesture Intention Analysis Using Fuzzy Logic

Interpreting hand gestures to infer intention remains challenging due to the subjectivity and contextual variability of real-world communication. Conventional models often treat gestures as fixed, unambiguous symbols, ignoring cultural, situational, and emotional factors that may alter their meaning. In our previous work, gestures were interpreted in natural conversations alongside speech [17], but intention modeling was not addressed.

To address this limitation, the proposed model augments gesture recognition with participant-generated descriptive words. Rather than relying solely on gesture type, participants selected three adjectives to describe their intended meaning. These descriptors act as a semantic bridge between physical expression and internal cognitive–emotional states.

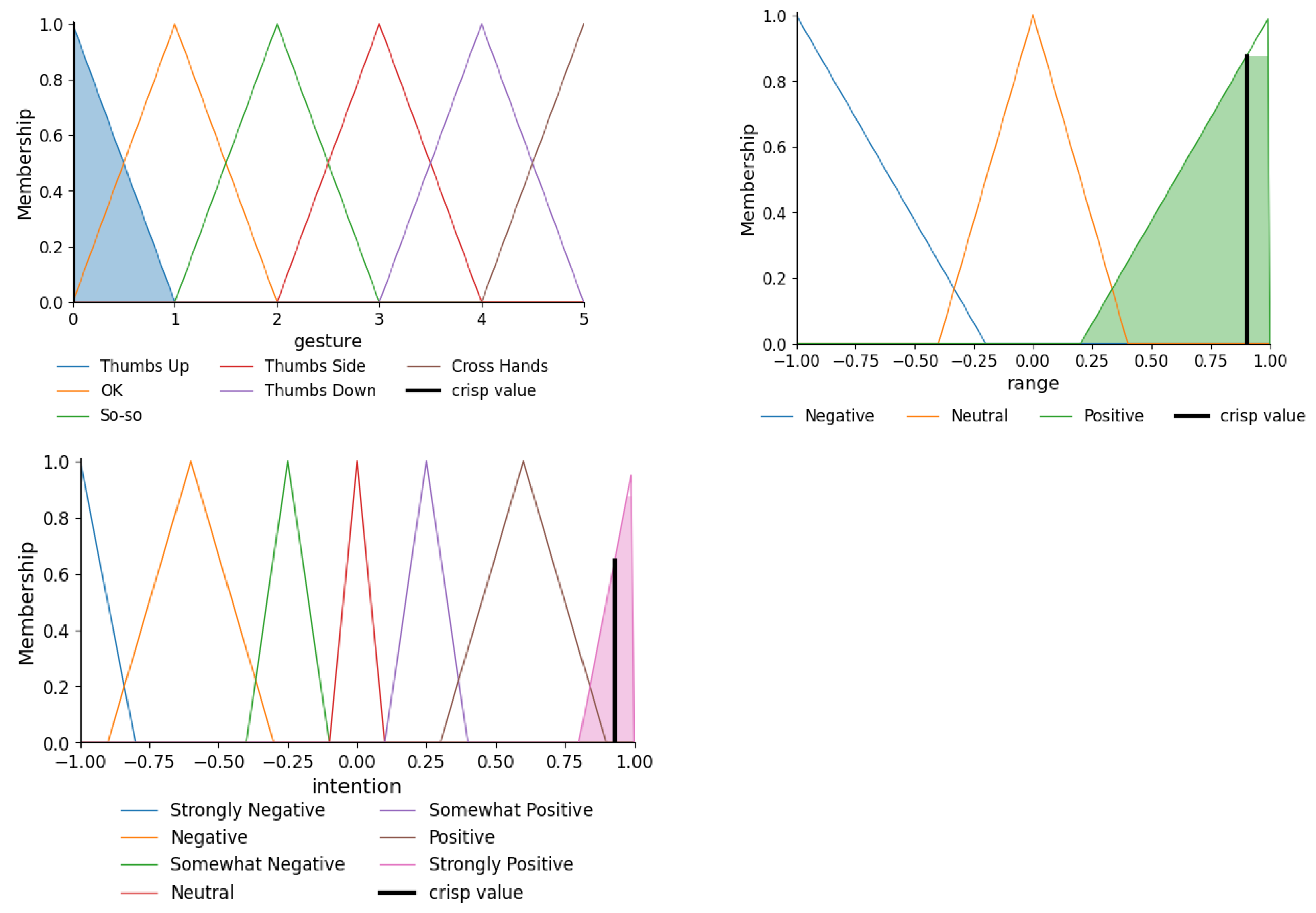

A fuzzy logic decision system was implemented to manage interpretive uncertainty. Fuzzy logic is well-suited for reasoning over ambiguous or overlapping concepts, making it ideal for modeling gesture–word relationships. The system is defined by three variables:

Gesture: This is a categorical input (indexed with values from 0 to 5, which represent Thumbs Up, OK, Thumbs Sideways, So-so, Thumbs Down, Crossed Hands).

Range Score: This is a continuous input (0.0–1.0), derived from the weighted VADER sentiment scores (Section 3.4).

Intention: This is a continuous output (0.0–1.0), representing inferred sentiment polarity and strength.

Fuzzy membership functions, enabling seamless transitions across category boundaries, are defined for each variable. Gesture–sentiment combinations are linked to fuzzy intention labels through an interpretable IF–THEN rule base, allowing inference even when input meanings overlap or are imprecise. Once all fuzzy rules and membership functions are defined, each gesture–sentiment pair is evaluated: if a valid rule is activated, the system returns a fuzzy intention score; otherwise, the combination is flagged as semantically incongruous, indicating a weak or contradictory match between gesture and descriptors.

By adopting graded interpretation and avoiding rigid categorization, this method mirrors the complexity and ambiguity inherent in natural nonverbal communication. It accommodates subtle variations in human intention while filtering implausible inputs.

3.5.1. Crisp-Rule Activation

The first step evaluates whether a gesture–sentiment pairing is semantically valid, referencing a predefined set of crisp rules. Valid pairs proceed to intention assignment; invalid pairs are excluded (Table 1).

This filtering ensures that fuzzy reasoning is only applied to semantically plausible input pairs.
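A crisp validity check of this kind can be sketched as a simple lookup. The sketch assumes that the valid pairs are exactly those that appear as rule antecedents in Algorithm 2, since Table 1 is not reproduced here; `VALID_PAIRS` and `is_valid_pair` are illustrative names.

```python
# ASSUMPTION: valid pairs are inferred from the rule antecedents listed in
# Algorithm 2; this is not a transcription of Table 1.
ALL_SENTIMENTS = {"Negative", "Neutral", "Positive"}

VALID_PAIRS = {
    "Thumbs Up": {"Neutral", "Positive"},
    "OK": set(ALL_SENTIMENTS),
    "So-so": set(ALL_SENTIMENTS),
    "Thumbs Sideways": set(ALL_SENTIMENTS),
    "Thumbs Down": {"Negative", "Neutral"},
    "Crossed Hands": {"Negative", "Neutral"},
}


def is_valid_pair(gesture: str, sentiment: str) -> bool:
    """Return True when the gesture-sentiment pair may proceed to fuzzy inference."""
    return sentiment in VALID_PAIRS.get(gesture, set())


# A Thumbs Up described with Negative words is flagged as incongruous.
print(is_valid_pair("Thumbs Up", "Positive"))
print(is_valid_pair("Thumbs Up", "Negative"))
```

Keeping this filter separate from the fuzzy rule base makes the set of excluded combinations easy to audit.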

3.5.2. Fuzzy Intention Assignment

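Valid gesture–sentiment pairs that pass the crisp-rule activation stage are assigned fuzzy intention labels using an interpretable IF–THEN rule base. This rule structure links each gesture type and sentiment category to a corresponding intention outcome, as formalized in Equation (2):

$$\text{IF } \textit{Gesture} \text{ is } G_i \ \text{AND}\ \textit{Range Score} \text{ is } R_j \ \text{THEN}\ \textit{Intention} \text{ is } I_k \qquad (2)$$

where $G_i$ is a gesture type, $R_j$ is a sentiment category (Negative, Neutral, or Positive), and $I_k$ is the resulting intention label.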

Table 2 presents the complete mapping of gesture–sentiment combinations to intention labels. For example, a Thumbs Up with a Neutral sentiment is interpreted as leaning toward positivity (Neutral → Somewhat Positive), while a Thumbs Down with a Negative sentiment intensifies rejection (Negative → Strongly Negative). Gestures such as the OK Sign, So-so, and Thumbs Sideways are represented across all three sentiment inputs because participant responses linked them to descriptors spanning Negative, Neutral, and Positive categories. Including these variations ensures that the rule base reflects empirical participant interpretations rather than imposing fixed meanings.

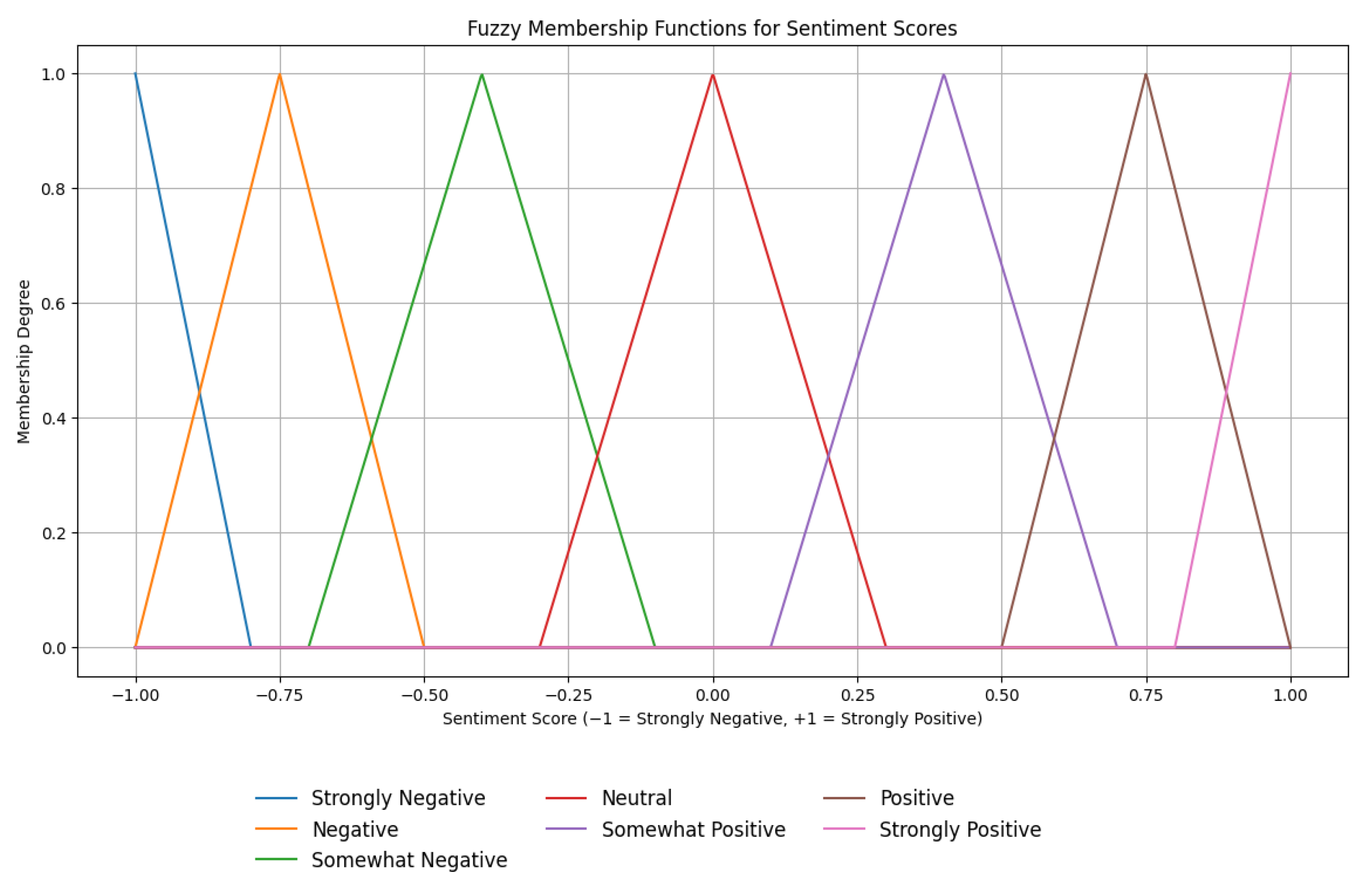

3.5.3. Fuzzy Sentiment Membership Modeling

This subsection defines the input side of the framework, where participant-derived sentiment scores are fuzzified into three categories: Negative, Neutral, and Positive. Weighted sentiment ratings, obtained from participant-selected descriptive words and calculated via VADER, were mapped into triangular membership functions to represent the gradation and ambiguity of human emotional expression.

Triangular membership functions were defined over the normalized sentiment score range $[0, 1]$. Each function is specified by three parameters $(a, b, c)$, which determine the base and peak of the triangle. As shown in Table 3, these overlapping functions map VADER sentiment scores to fuzzy membership values for the Negative, Neutral, and Positive categories, allowing scores to partially belong to multiple categories and thus reflecting the fuzzy boundaries typical in affective communication.

These fuzzy sentiment memberships provide the foundation for combining sentiment and gesture type in the inference process to produce interpretable intention outputs.
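The fuzzification step can be illustrated with a small triangular membership function. The parameter triples in `SENTIMENT_MF` are placeholders chosen only so that neighboring sets overlap; they are assumptions and do not reproduce the values in Table 3. The names `trimf` and `fuzzify` are likewise illustrative.

```python
from typing import Dict


def trimf(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership with feet at a and c and peak at b (a <= b <= c)."""
    if not a <= b <= c:
        raise ValueError("parameters must satisfy a <= b <= c")
    if x == b:
        return 1.0                 # peak, also covers shoulders (a == b or b == c)
    if x <= a or x >= c:
        return 0.0                 # outside the support
    if x < b:
        return (x - a) / (b - a)   # rising edge
    return (c - x) / (c - b)       # falling edge


# ASSUMPTION: placeholder parameters on [0, 1]; not the values of Table 3.
SENTIMENT_MF = {
    "Negative": (0.0, 0.0, 0.5),
    "Neutral": (0.25, 0.5, 0.75),
    "Positive": (0.5, 1.0, 1.0),
}


def fuzzify(range_score: float) -> Dict[str, float]:
    """Map a normalized sentiment score to membership degrees per category."""
    return {name: trimf(range_score, *abc) for name, abc in SENTIMENT_MF.items()}


# A score slightly above the midpoint belongs partly to Neutral and partly to Positive.
print(fuzzify(0.6))
```

Because the supports overlap, a single score can activate two categories at once, which is what later allows more than one rule to fire for the same input.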

3.5.4. Fuzzy Intention Membership Modeling

Each intention label is mapped to a triangular membership function over a normalized domain, allowing partial and overlapping memberships between adjacent states. Table 4 lists the center values.

The overlapping nature of adjacent membership functions allows for shared degrees of belonging between neighboring intention categories. This fuzzy representation accommodates the inherent uncertainty and variability in human affective judgment, with the fuzzy membership itself serving as the system’s final interpretive output.

3.5.5. Fuzzy Inference System Setup

This subsection describes the inference process, which integrates gesture inputs and fuzzified sentiment values to produce intention estimates. A fuzzy inference system (FIS) was constructed, in which predefined membership functions for gestures, sentiment categories, and intention classes (described in the previous subsections) were combined through a rule base to generate fuzzy intention outputs.

The system uses six gesture types and three sentiment categories as antecedents. Each rule applies a logical AND operation between gesture and sentiment and maps the result to an appropriate intention class. The rule base follows the mappings presented in Table 2, and the initialization process is summarized in Algorithm 2.

The completed FIS merges gesture and sentiment information to produce interpretable intention outputs. This framework accommodates uncertainty, supports graded semantic interpretation, and reflects the cognitive nuance of human decision-making. Validation with participant data is presented in Section 4.

Algorithm 2. Fuzzy Inference System for Gesture Intention Modeling

- procedure FuzzyGestureIntentionSystem
  - Define the universe of discourse for gesture
  - Define the universe of discourse for range_score
  - Define the universe of discourse for intention
  - gesture_names ← [Thumbs Up, OK, So-so, Thumbs Sideways, Thumbs Down, Crossed Hands]
  - for each index i of gesture_names do
    - Define a triangular membership function for gesture_names[i]
  - end for
  - Define range_score membership functions:
    - Negative
    - Neutral
    - Positive
  - Define intention membership functions:
    - Strongly Negative
    - Negative
    - Somewhat Negative
    - Neutral
    - Somewhat Positive
    - Positive
    - Strongly Positive
  - Define fuzzy rules:
    - (Thumbs Up AND Neutral) → Neutral
    - (Thumbs Up AND Positive) → Strongly Positive
    - (OK AND Negative) → Somewhat Negative
    - (OK AND Neutral) → Neutral
    - (OK AND Positive) → Somewhat Positive
    - (So-so AND Negative) → Somewhat Negative
    - (So-so AND Neutral) → Neutral
    - (So-so AND Positive) → Somewhat Positive
    - (Thumbs Sideways AND Negative) → Somewhat Negative
    - (Thumbs Sideways AND Neutral) → Neutral
    - (Thumbs Sideways AND Positive) → Somewhat Positive
    - (Thumbs Down AND Negative) → Strongly Negative
    - (Thumbs Down AND Neutral) → Neutral
    - (Crossed Hands AND Negative) → Strongly Negative
    - (Crossed Hands AND Neutral) → Neutral
  - Create fuzzy control system intention_ctrl with the defined rules
  - Create simulation environment intention_sim from intention_ctrl
  - Set gesture and range_score as inputs
  - Compute fuzzy output intention
  - if intention exists in output then
    - Print “Valid”
  - else
    - Print “No rules activated”
  - end if
- end procedure
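The rule base in Algorithm 2 can also be read as a lookup from (gesture, sentiment) pairs to intention classes. The sketch below is a plain-Python illustration of that reading, not the authors' code: the names `RULES` and `infer_intention` are hypothetical, the gesture is treated as a crisp label with an optional degree, and the use of `min` for AND and `max` for combining rules that share a consequent is an assumption (the common Mamdani-style choice), since the text specifies only a logical AND.

```python
# The 15 rules listed in Algorithm 2, keyed by (gesture, sentiment category).
RULES = {
    ("Thumbs Up", "Neutral"): "Neutral",
    ("Thumbs Up", "Positive"): "Strongly Positive",
    ("OK", "Negative"): "Somewhat Negative",
    ("OK", "Neutral"): "Neutral",
    ("OK", "Positive"): "Somewhat Positive",
    ("So-so", "Negative"): "Somewhat Negative",
    ("So-so", "Neutral"): "Neutral",
    ("So-so", "Positive"): "Somewhat Positive",
    ("Thumbs Sideways", "Negative"): "Somewhat Negative",
    ("Thumbs Sideways", "Neutral"): "Neutral",
    ("Thumbs Sideways", "Positive"): "Somewhat Positive",
    ("Thumbs Down", "Negative"): "Strongly Negative",
    ("Thumbs Down", "Neutral"): "Neutral",
    ("Crossed Hands", "Negative"): "Strongly Negative",
    ("Crossed Hands", "Neutral"): "Neutral",
}


def infer_intention(gesture, sentiment_memberships, gesture_degree=1.0):
    """Fire every rule for `gesture` and collect the activated intention classes.

    AND is taken as min and rules sharing a consequent are combined with max;
    both operators are assumptions, not stated in the source.
    """
    activations = {}
    for (rule_gesture, sentiment), intention in RULES.items():
        if rule_gesture != gesture:
            continue
        strength = min(gesture_degree, sentiment_memberships.get(sentiment, 0.0))
        if strength > 0.0:
            activations[intention] = max(activations.get(intention, 0.0), strength)
    return activations


if __name__ == "__main__":
    # Illustrative membership values only; not taken from the study's data.
    result = infer_intention(
        "Thumbs Up", {"Negative": 0.0, "Neutral": 0.5, "Positive": 0.25}
    )
    print(result if result else "No rules activated")
    # Thumbs Down has no rule for Positive sentiment, so nothing fires.
    print(infer_intention("Thumbs Down", {"Positive": 1.0}) or "No rules activated")
```

Keeping the fuzzy activations as the returned value, rather than defuzzifying them to a single number, mirrors the statement that the fuzzy membership itself serves as the system's final interpretive output.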

4. Results

4.1. Gesture Sentiment Classification Using VADER

Following the procedure described in Section 3.4, the VADER sentiment analysis tool was applied to all participant-selected descriptive words associated with each gesture. Each word was assigned a compound sentiment score, which was then weighted by the frequency of selection across participants. The weighted scores were summed and normalized by the total number of responses for that gesture, producing a final sentiment score for each word–gesture pairing.
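One reading of this weighting step is sketched below. The function name `weighted_contributions` is hypothetical, and the scores and counts are made-up values for illustration; they are neither actual VADER outputs nor entries from Table 5. In practice the compound scores would come from a VADER analyzer rather than being typed in by hand.

```python
def weighted_contributions(word_scores, word_counts):
    """Weight each word's compound score by how often it was chosen for a gesture,
    normalized by the total number of responses for that gesture."""
    total = sum(word_counts.values())
    if total == 0:
        raise ValueError("no responses recorded for this gesture")
    return {word: word_scores[word] * n / total for word, n in word_counts.items()}


if __name__ == "__main__":
    # Illustrative compound scores (hypothetical, not real VADER output).
    scores = {"good": 0.5, "fine": 0.25, "unsure": -0.25}
    # Illustrative selection frequencies for one gesture (hypothetical).
    counts = {"good": 6, "fine": 2, "unsure": 2}

    per_word = weighted_contributions(scores, counts)
    gesture_score = sum(per_word.values())  # summed weighted scores for the gesture
    print(per_word)
    print(gesture_score)
```

With these made-up inputs the per-word contributions sum to 0.3, up to floating-point rounding, which would then be the sentiment value passed on to the fuzzy mapping stage.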

Table 5 presents the results of this analysis. For each descriptor, the table lists its VADER compound score, the corresponding sentiment category (Positive, Neutral, or Negative), and the frequency with which it was chosen for each gesture type: Thumbs Up (TU), OK, Thumbs Sideways (TS), So-so (SS), Thumbs Down (TD), and Crossed Hands (CH). This tabulation provides a transparent overview of the lexical distributions that informed subsequent fuzzy mapping and intention inference stages.

The table reveals that certain gestures are predominantly associated with Positive descriptors (e.g., Thumbs Up, OK), while others, particularly Thumbs Down and Crossed Hands, are linked to Strongly Negative terms. Neutral descriptors are distributed across several gestures, indicating possible interpretive ambiguity or contextual dependence. These sentiment assignments form the basis for the fuzzy mapping process in Section 4.2, where gesture–word sentiment pairs are converted into fuzzy intention labels for subsequent analysis.

4.2. Fuzzy Intention Inference from Gesture–Sentiment Scores

In this stage, the normalized sentiment scores obtained from Section 4.1 were used as inputs to the fuzzy inference system (FIS) to estimate the underlying intention behind each gesture. The FIS integrated two inputs, gesture type and sentiment score, and mapped them to an intention space comprising seven categories: Strongly Negative, Negative, Somewhat Negative, Neutral, Somewhat Positive, Positive, and Strongly Positive.

The sentiment scores were mapped onto seven overlapping triangular membership functions to represent varying degrees of evaluative intention, from strong disapproval to strong approval.

Table 6 specifies the membership parameters for each category.

The output variable intention was modeled using these triangular functions, enabling partial membership in adjacent categories to capture interpretive uncertainty.

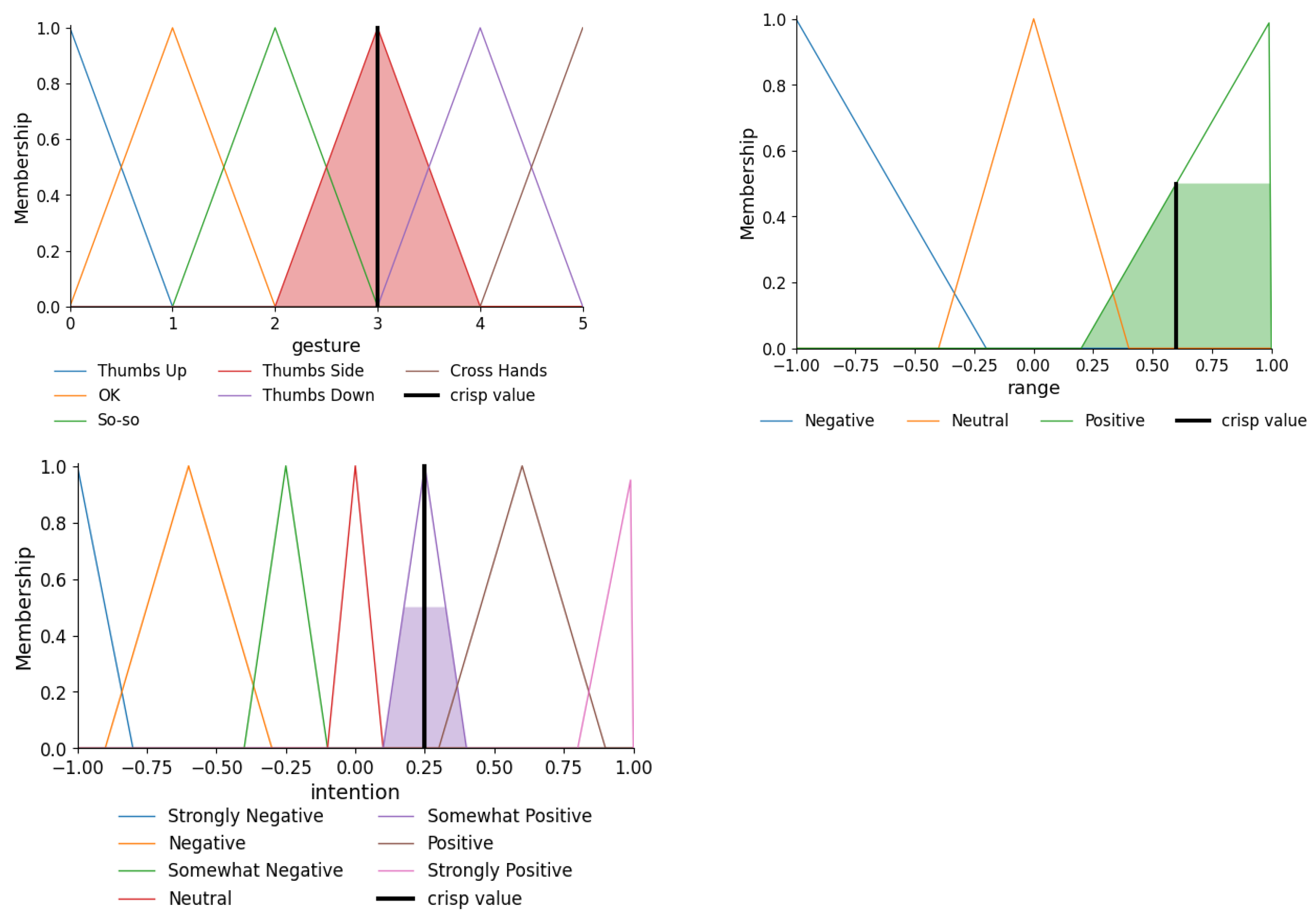

Figure 6 illustrates the complete set of membership functions, which form the basis for the rule evaluation process described in Section 4.3.

4.3. Fuzzy Rule Evaluation and Output Summary

The fuzzy inference system applied the predefined rule base described in Equation (2) to map each gesture–sentiment pairing to the most probable intention class. The gesture–sentiment pairs obtained from the VADER classification (Section 4.1) were evaluated against the rule base established during system design (Section 3.5.2). Combinations not supported by the crisp activation matrix (Table 1) were marked as “No rule activated” and excluded from inference.

Table 7 presents the complete fuzzy rule base used in this study. The consequent intention for each valid combination is expressed as either a single category or a graded shift along the intention continuum (e.g., Neutral → Positive), reflecting the possibility of partial membership in adjacent classes.

The structure of the rule base highlights how gesture–sentiment combinations determine graded intention shifts. Positive gestures (e.g., Thumbs Up) exhibit upward transitions when paired with Positive sentiments, while Neutral gestures (e.g., So-so, Thumbs Sideways) maintain central positioning with limited deviation. Negative gestures (e.g., Thumbs Down, Crossed Hands) remain restricted to the Negative domain, with no active rules promoting a shift toward Positive outcomes. This mapping forms the foundation for the gesture-level validation presented in Section 4.4.

4.4. System Validation Using Six Hand Gesture Inputs

To validate the proposed fuzzy inference framework for gesture intention recognition, six common hand gestures were tested: Thumbs Up, OK, So-so, Thumbs Sideways, Thumbs Down, and Crossed Hands. For each gesture, three analyses were performed: (i) evaluation of gesture membership activation, (ii) assessment of the corresponding sentiment score region, and (iii) determination of the final intention output using the fuzzy rules defined in Section 3.5.5 and the evaluation process outlined in Section 4.3.

Thumbs Up:

The Thumbs Up gesture produced high membership values in the Positive and Strongly Positive intention classes, as shown in Figure 7. While these categories dominated the output, a small degree of overlap with the Neutral region was observed due to the fuzzy boundaries between adjacent states. This indicates that although Thumbs Up is consistently interpreted as Strongly Positive, it occasionally admits limited Neutral spillover. In summary, Thumbs Up reliably conveyed strong Positive intention with only minor Neutral overlap.

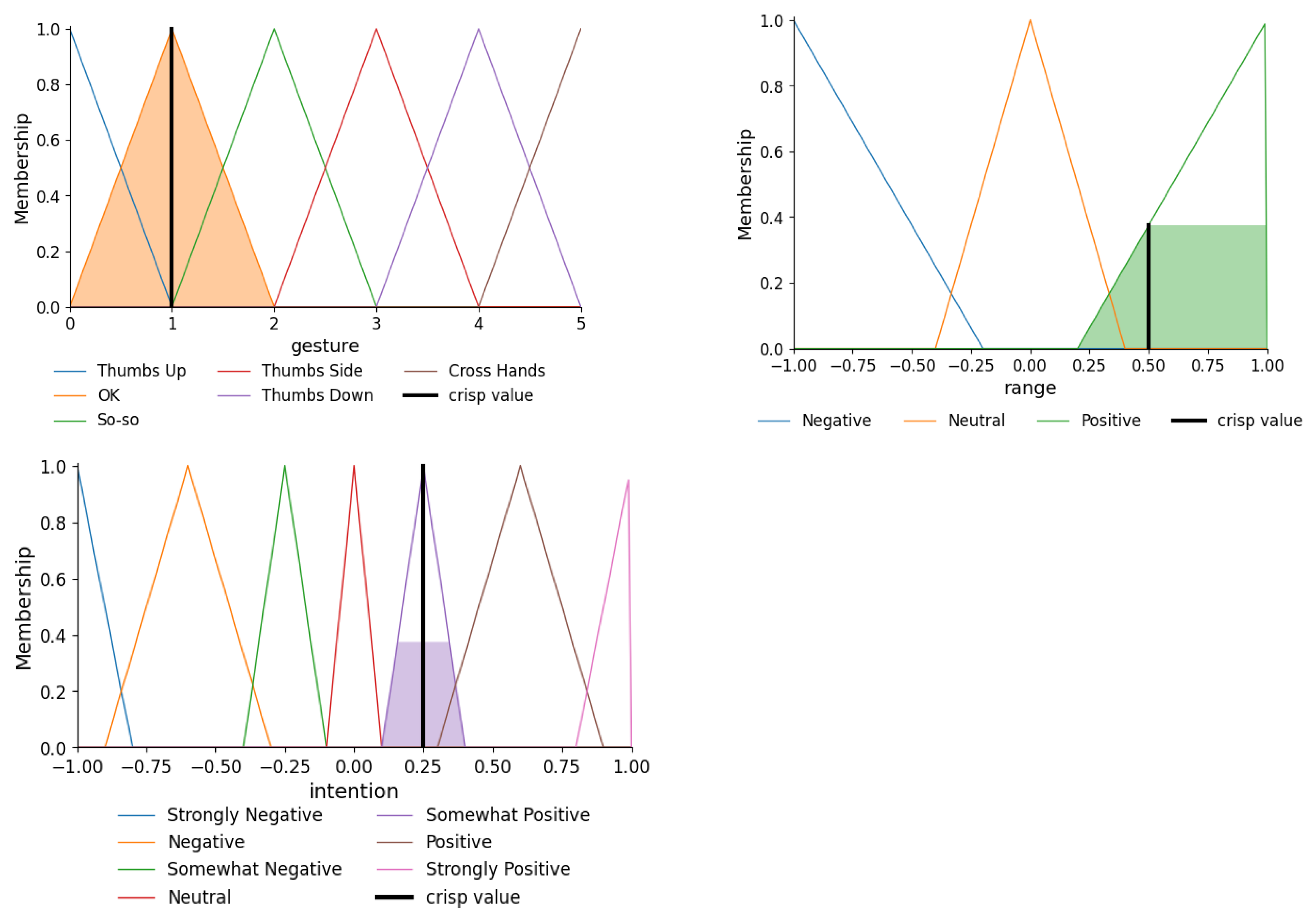

OK Sign:

The OK gesture produced dominant activation in the Positive sentiment region, as shown in Figure 8. However, in the intention space, its peak was located near the center of the Somewhat Positive class, with partial overlap into Neutral and Positive. This indicates that while the OK sign carries a Positive meaning, it is generally weaker and more variable than Thumbs Up. In summary, the OK sign conveyed Positive intention but with lower intensity and greater variability compared to Thumbs Up.

Thumbs Sideways:

The Thumbs Sideways gesture produced activation centered in the Somewhat Positive intention class, with partial overlap into Neutral, as shown in Figure 9. Although its sentiment score placed it within the Positive input region, the resulting intention output reflected only moderate positivity, weaker than that observed for Thumbs Up or OK. In summary, Thumbs Sideways conveyed a mild Positive evaluation with occasional neutrality, confirming its role as a more moderate and less emphatic gesture.

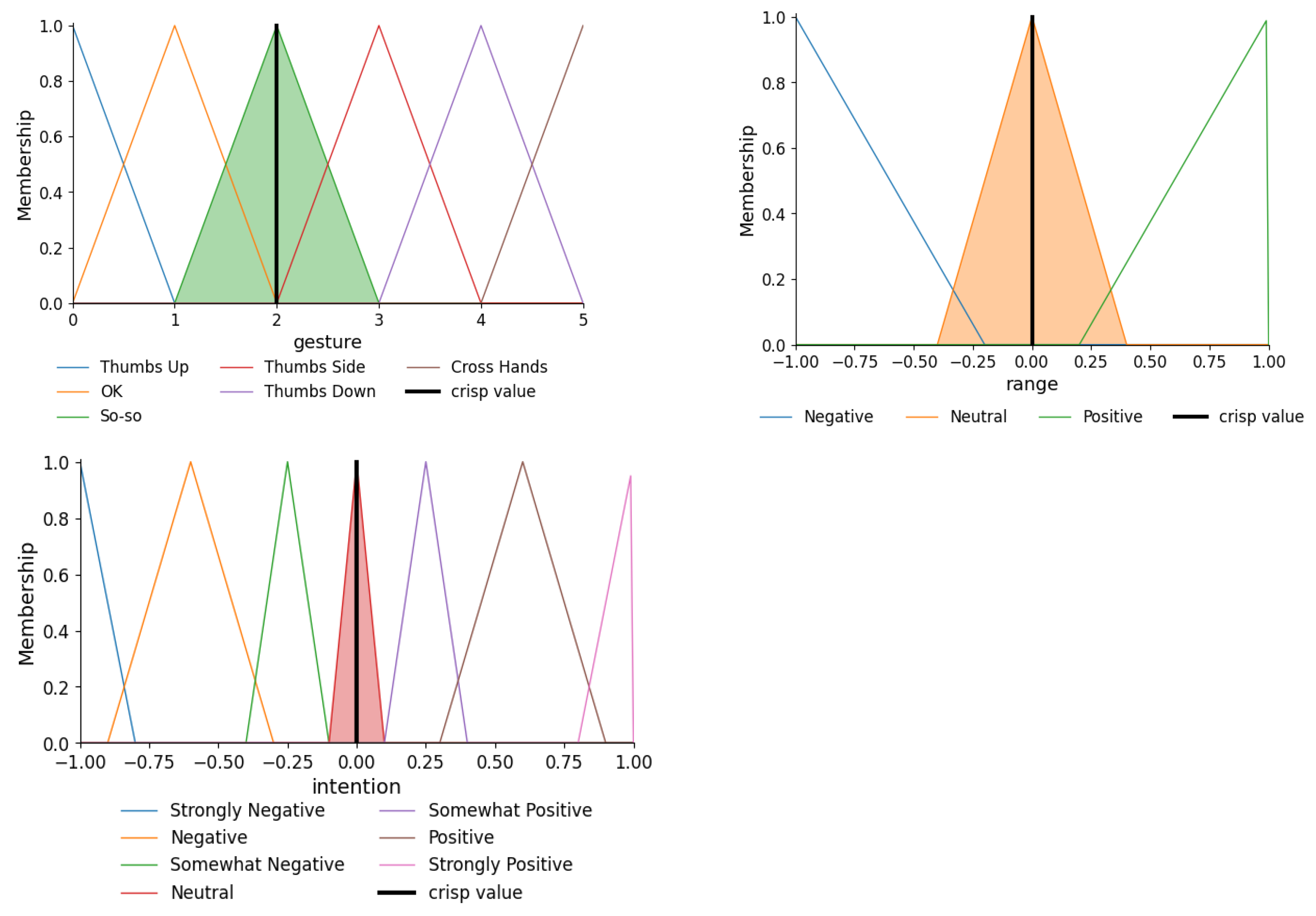

So-so:

The So-so gesture was consistently mapped to the Neutral category across both sentiment input and intention output, as shown in Figure 10. The black bar aligns with the Neutral peak, and only negligible membership was observed in adjacent classes. This outcome reflects the inherently ambiguous nature of So-so, which conveys indifference or uncertainty rather than clear polarity. In summary, So-so clustered tightly around neutrality, confirming its role as an ambiguous evaluative gesture.

Thumbs Down:

The Thumbs Down gesture showed dominant activation in the Strongly Negative intention category, with additional membership in the Negative class, as illustrated in Figure 11. Its sentiment input score placed it well within the Negative region, with negligible Neutral overlap. This outcome reflects the high degree of certainty conveyed by Thumbs Down, making it a clear marker of rejection or disapproval. In summary, Thumbs Down consistently expressed strong Negative intention with minimal ambiguity.

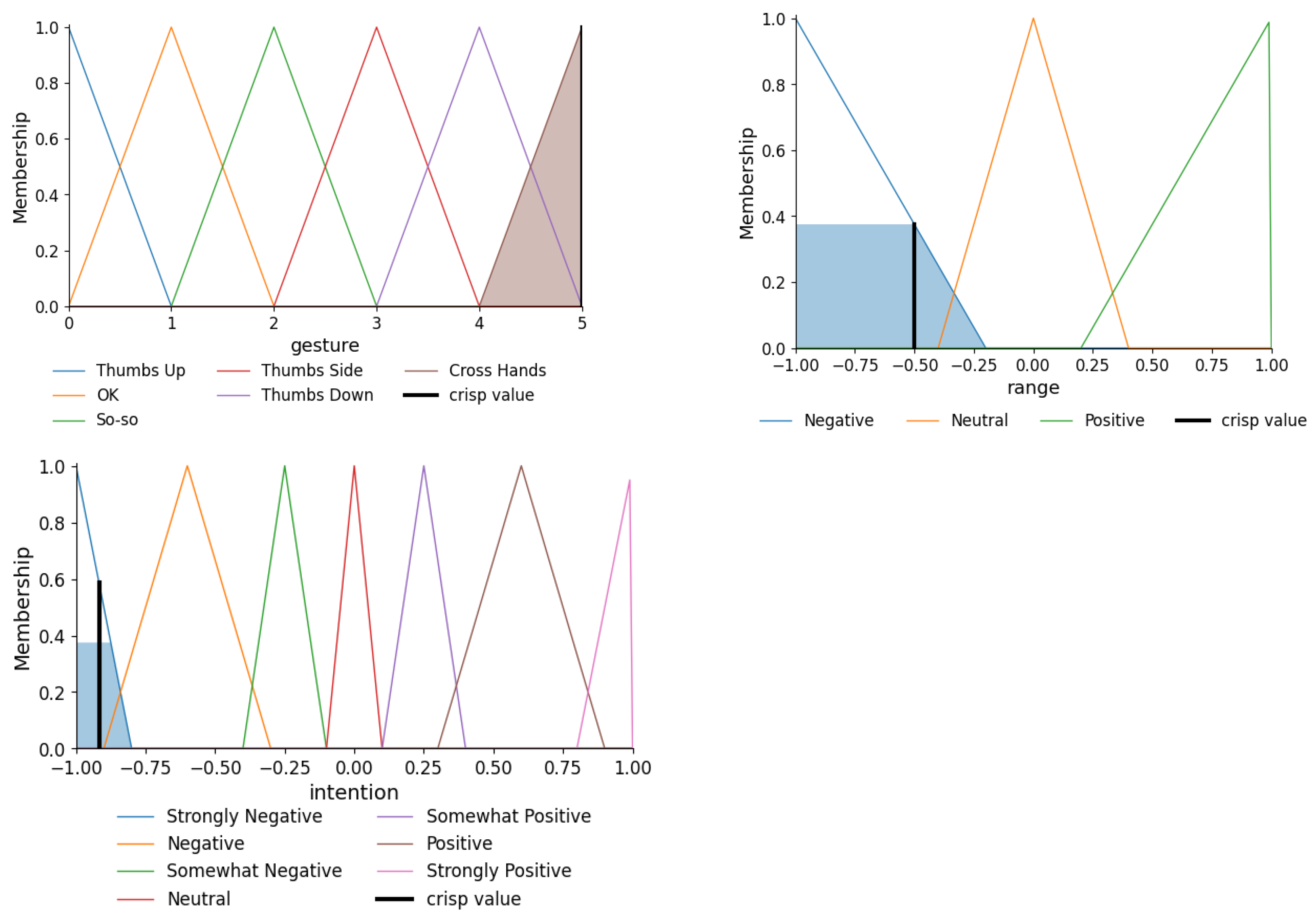

Crossed Hands:

The Crossed Hands gesture exhibited dominant activation in the Strongly Negative intention class, with additional membership in the Negative category, as shown in Figure 12. Its sentiment input score was positioned firmly within the Negative range, producing no overlap with Neutral or Positive regions. This pattern highlights Crossed Hands as the most emphatic form of rejection in the dataset, stronger and less ambiguous than Thumbs Down. In summary, Crossed Hands remained firmly Negative, representing the clearest signal of disapproval among all gestures.

Across all six gestures, the fuzzy inference framework consistently distinguished between strong, moderate, and Neutral evaluative intentions. These results demonstrate the framework’s ability to model both polarity (Positive, Neutral, Negative) and gradience in human evaluative expression, producing outputs that align with intuitive human interpretations. Together, these results provide empirical validation of the system’s interpretive capacity and establish a foundation for the broader theoretical and applied implications discussed in Section 5.

5. Discussion

In this study, participant-generated sentiment descriptors were incorporated into a fuzzy logic-based framework for interpreting decision-making intentions from hand gestures. The framework achieved its primary goal of capturing interpretive ambiguity and subjective diversity in real-world nonverbal communication by allowing gestures to partially belong to multiple sentiment categories.

Our findings provide strong support for H1, confirming that gesture interpretation is not universally fixed but varies according to gesture type and contextual sentiment. Positive gestures such as Thumbs Up and OK often overlapped with neutral boundaries, reflecting interpretive flexibility. Neutral gestures (Thumbs Sideways, So-so) generally remained within the Neutral class but occasionally shifted toward positivity. By contrast, Negative gestures (Thumbs Down, Crossed Hands) consistently remained within the Negative spectrum, exhibiting minimal overlap with other categories. This asymmetry underscores the relative certainty of Negative evaluations compared to the flexibility of Positive and Neutral ones.

Crucially, the framework was data-driven: fuzzy membership functions and rule activations were derived directly from participant responses, ensuring that graded intention outputs reflected empirical gesture–sentiment patterns rather than predefined assumptions. This empirical grounding strengthens the interpretive validity of the system.

Support for H2 is also evident in the system’s ability to handle overlapping sentiment boundaries and interpretive uncertainty. The fuzzy inference mechanism enabled graded transitions across the intention continuum, moving beyond rigid categorical labels. By aligning computational outputs with the nuanced ways humans interpret gestures, the framework offers a more human-aligned interpretive approach, relevant for advancing gesture recognition, decision-making analysis, and human–computer interaction.

Theoretically, these findings support the view that form, context, and individual perception collectively shape gesture meaning. Practically, the framework’s graded inference capacity is particularly valuable for applications in behavioral analytics, assistive technologies, and affective computing, where detecting subtle intention shifts can enhance personalization and responsiveness.

Nonetheless, several limitations remain. The current rule base, while sufficient for demonstrating feasibility, was static and predefined, limiting its ability to capture individual, cultural, or situational variation. VADER sentiment analysis provided effective polarity detection but may not fully capture subtler affective nuances. Consistent with the exploratory nature of the study, the validation concentrated on demonstrating the viability of the fuzzy logic framework rather than evaluating its performance against alternative methods, which leaves comparative benchmarking to future work. Future research will examine flexible and adaptive fuzzy systems, use more sophisticated sentiment analysis techniques to capture subtle emotional cues, and test the framework on larger, more varied datasets to improve its cross-context applicability.

6. Conclusions

This study establishes that human decision-making intentions can be interpreted in a context-sensitive and empirically grounded way by combining participant-driven semantic information with a rule-based fuzzy inference framework. Derived directly from gesture–sentiment mappings reported by participants, the system captured interpretive ambiguity and subjective diversity while maintaining data-driven validity. In doing so, it provided evidence for both of the following hypotheses: (H1) that gesture interpretation is not fixed but varies by type and context, and (H2) that fuzzy inference can accommodate uncertainty and overlapping intention boundaries more effectively than rigid categorical systems.

These findings carry important theoretical and practical implications. Theoretically, they reinforce the view that gesture meaning emerges from the interaction of form, context, and polarity. The asymmetry observed indicated that Positive and Neutral gestures were more flexible, while Negative gestures were more stable. This shows why communicative polarity should be considered when designing interpretive models. Practically, the graded inference approach demonstrates promise for deployment in behavioral analytics, assistive technologies, and affective computing, where detecting subtle intention shifts can enhance adaptivity, personalization, and responsiveness.

Beyond gesture interpretation, the proposed framework contributes to the broader discourse on human–computer interaction and decision-making research. By showing how fuzzy logic can bridge computational precision with human interpretive fluidity, this study highlights a pathway toward intelligent systems capable of handling ambiguity and gradience in natural human communication. Such an approach is especially relevant in healthcare, education, and cross-cultural contexts, where nuanced interpretation of user state is critical.

Future work will extend this framework by incorporating adaptive fuzzy systems capable of refining rules dynamically in response to user input, employing sentiment analysis methods that go beyond polarity detection to capture subtler emotional cues, and validating the model across larger and more diverse cultural datasets. Together, these extensions would enhance the stability, generalizability, and applicability of the framework. Although the current study places considerable emphasis on sentiment interpretation, it does so as a step toward a more thorough understanding of hand gesture intention in the decision-making process, keeping the methods and the framing of the research consistent. Ultimately, this study underscores the promise of combining fuzzy inference with participant-driven semantics as a foundation for next-generation gesture recognition systems that are not only technically robust but also aligned with the complexities of human communication.

Author Contributions

Conceptualization, D.C.S. and K.Y.; Methodology, D.C.S., F.M.R. and K.Y.; Software, D.C.S. and F.M.R.; Validation, D.C.S., F.M.R. and K.Y.; Formal Analysis, D.C.S. and F.M.R.; Investigation, D.C.S., F.M.R. and K.Y.; Data Curation, D.C.S. and F.M.R.; Writing—Original Draft, D.C.S.; Writing—Review and Editing, D.C.S. and K.Y.; Visualization, D.C.S. and F.M.R.; Supervision, K.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the JST/SPRING Global engineering Doctoral Human Resources Development Project, Grant Number: JPMJSP2154.

Institutional Review Board Statement

The study protocol was reviewed and approved by the Ethics Committee of Kyushu Institute of Technology (Approval No. 24-11: Hand Gesture Video Data Collection Experiment for Observing Decision-Making Processes) on 24 March 2025.

Informed Consent Statement

All participants were fully informed of the objectives, procedures, and intended academic use of the study before participation, and written informed consent was obtained from each participant.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CH | Crossed Hands |
| FIS | Fuzzy Inference System |
| H1 | Hypothesis 1 |
| H2 | Hypothesis 2 |
| HCI | Human–Computer Interaction |
| LLM | Large Language Model |
| RTD | Ready-To-Drink |
| SA | Sentiment Analysis |
| SS | So-so Hand Gesture |
| TAM | Technology Acceptance Model |
| TD | Thumbs Down Hand Gesture |
| TPB | Theory of Planned Behavior |
| TS | Thumbs Sideways Hand Gesture |
| TU | Thumbs Up Hand Gesture |
| VADER | Valence Aware Dictionary and Sentiment Reasoner |

References

- Han, T.A.; Pereira, L.M. Intention Based Decision Making and Applications. In Intention Recognition, Commitment and Their Roles in the Evolution of Cooperation; Studies in Applied Philosophy, Epistemology and Rational Ethics; Springer: Berlin/Heidelberg, Germany, 2013; Volume 9.

- Kesting, P. The Meaning of Intentionality for Decision Making. SSRN Electron. J. 2006.

- Silpani, D.C.; Yoshida, K. Enhancing Decision-Making System with Implicit Attitude and Preferences: A Comprehensive Review in Computational Social Science. Int. J. Affect. Eng. 2025, 24, 161–179.

- Ahmad, A.K.; Silpani, D.C.; Yoshida, K. The Impact of Large Sample Datasets on Hand Gesture Recognition by Hand Landmark Classification. Int. J. Affect. Eng. 2023, 22, 253–259.

- Clough, S.; Duff, M.C. The Role of Gesture in Communication and Cognition: Implications for Understanding and Treating Neurogenic Communication Disorders. Front. Hum. Neurosci. 2020, 14, 323.

- Bobkina, J.; Romero, E.D.; Ortiz, M.J.G. Kinesic communication in traditional and digital contexts: An exploratory study of ESP undergraduate students. System 2023, 115, 103034.

- Pang, H.T.; Zhou, X.; Chu, M. Cross-cultural Differences in Using Nonverbal Behaviors to Identify Indirect Replies. J. Nonverbal Behav. 2024, 48, 323–344.

- Yu, A.; Berg, J.M.; Zlatev, J.J. Emotional acknowledgment: How verbalizing others’ emotions fosters interpersonal trust. Organ. Behav. Hum. Decis. Process. 2021, 164, 116–135.

- Gordon, R.; Ramani, G.B. Integrating Embodied Cognition and Information Processing: A Combined Model of the Role of Gesture in Children’s Mathematical Environments. Front. Psychol. 2021, 12, 650286.

- Kendon, A. Gesture: Visible Action as Utterance; Cambridge University Press: Cambridge, UK, 2004.

- Hyusein, G.; Goksun, T. The creative interplay between hand gestures, convergent thinking, and mental imagery. PLoS ONE 2023, 18, e0283859.

- Hyusein, G.; Goksun, T. Give your ideas a hand: The role of iconic hand gestures in enhancing divergent creative thinking. Psychol. Res. 2024, 88, 1298–1313.

- Kelly, S.D.; Tran, Q.-A.N. Exploring the Emotional Functions of Co-Speech Hand Gesture in Language and Communication. Cogn. Sci. 2023, 17, 586–608.

- Luo, Y.; Yu, J.; Liang, M.; Wan, Y.; Zhu, K.; Santosa, S.S. Emotion Embodied: Unveiling the Expressive Potential of Single-Hand Gestures. In Proceedings of the CHI ’24: 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–17.

- Mandryk, R.L.; Atkins, M.S. A fuzzy physiological approach for continuously modeling emotion during interaction with play technologies. Int. J. Hum.-Comput. Stud. 2007, 65, 329–347.

- Anand, J.; Thamilarasi, V.; Rayal, A.; Gupta, H.K.; Jyothi, K.; Vishwakarma, P. Fuzzy Logic-Based Deep Learning for Human-Machine Interaction and Gesture Recognition in Uncertain and Noisy Environments. In Proceedings of the 2025 First International Conference on Advances in Computer Science, Electrical, Electronics, and Communication Technologies (CE2CT), Bhimtal, India, 21–22 February 2025.

- Silpani, D.C.; Suematsu, K.; Yoshida, K. A Feasibility Study on Hand Gesture Intention Interpretation Based on Gesture Detection and Speech Recognition. J. Adv. Comput. Intell. Intell. Inform. 2022, 26, 375–381.

- Mathieson, K. Predicting User Intentions: Comparing the Technology Acceptance Model with the Theory of Planned Behavior. Inf. Syst. Res. 1991, 2, 173–191.

- Tseng, S.-M. Determinants of the Intention to Use Digital Technology. Information 2025, 16, 170.

- Ajzen, I. The Theory of Planned Behavior. Organ. Behav. Hum. Decis. Process. 1991, 50, 179–211.

- Vorobyova, K.; Alkadash, T.M.; Nadam, C. Investigating Beliefs, Attitudes, And Intentions Regarding Strategic Decision-Making Process: An Application Of Theory Planned Behavior With Moderating Effects Of Overconfidence And Confirmation Biases. Spec. Ugdym. Educ. 2022, 1, 43.

- Campisi, E.; Mazzone, M. Do people intend to gesture? A review of the role of intentionality in gesture production and comprehension. Spec. Ugdym. Educ. 2022, 1, 43.

- Grice, H.P. Meaning. Philos. Rev. 1957, 66, 377–388.

- Riva, G.; Waterworth, A.J.; Waterworth, L.E.; Mantovani, F. From intention to action: The role of presence. New Ideas Psychol. 2011, 29, 24–37.

- Murad, B.K.; Alasadi, A.H. Advancements and Challenges in Hand Gesture Recognition: A Comprehensive Review. Iraqi J. Electr. Electron. Eng. 2024, 20, 154–164.

- Linardakis, M.; Varlamis, I.; Papadopoulos, G.T. Survey on Hand Gesture Recognition from Visual Input. arXiv 2025, arXiv:2501.11992.

- Gao, Q.; Jiang, S.; Shull, P.B. Simultaneous Hand Gesture Classification and Finger Angle Estimation via a Novel Dual-Output Deep Learning Model. Sensors 2020, 20, 2972.

- Rukka, F.M.; Silpani, D.C.; Yoshida, K. Fuzzy Sentiment Analysis for Hand Gesture Interpretation in Words Expression. In Proceedings of the Computer Information Systems, Biometrics and Kansei Engineering, Fukuoka, Japan, 11–13 September 2025.

- Lai, K.; Yanushkevich, S.N. CNN+RNN Depth and Skeleton based Dynamic Hand Gesture Recognition. In Proceedings of the 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 3451–3456.

- Yan, K.; Lam, C.-F.; Fong, S.; Marques, J.A.L.; Millham, R.C.; Mohammed, S. A Novel Improvement of Feature Selection for Dynamic Hand Gesture Identification Based on Double Machine Learning. Sensors 2025, 25, 1126.

- Molchanov, P.; Yang, X.; Gupta, S.; Kim, K.; Tyree, S.; Kautz, J. Online Detection and Classification of Dynamic Hand Gestures with Recurrent 3D Convolutional Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4207–4215.

- Ahn, J.-H.; Kim, J.-H. A Stable Hand Tracking Method by Skin Color Blob Matching. Pac. Sci. Rev. 2011, 12, 146–151.

- Pierre, J. Intentionality. In The Stanford Encyclopedia of Philosophy, Spring 2023 ed.; Zalta, E.N., Nodelman, U., Eds.; Metaphysics Research Lab, Stanford University: Stanford, CA, USA, 2023.

- Giotakos, O. Modeling Intentionality in the Human Brain. Front. Psychiatry 2023, 14, 1163421.

- Li, H.; Tang, J.; Xu, X.; Dai, W.; Liu, Y.; Xiao, J.; Lu, H.; Zhou, Z. sEMG-based Gesture-Free Hand Intention Recognition: System, Dataset, Toolbox, and Benchmark Results. arXiv 2024, arXiv:2411.14131.

- Vuletic, T.; Duffy, A.; Hay, L.; McTeaugue, C.; Campbell, G.; Grealy, M. Systematic literature review of hand gestures used in human computer interaction interfaces. Int. J. Hum.-Comput. Stud. 2019, 129, 74–94.

- Zeng, X.; Wang, X.; Zhang, T.; Yu, C.; Zhao, S.; Chen, Y. GestureGPT: Toward Zero-shot Free-form Hand Gesture Understanding with Large Language Model Agents. Proc. ACM Hum.-Comput. Interact. 2024, 8, 462–499.

- Itoda, K.; Watanabe, N.; Takefuji, Y. Analyzing Human Decision Making Process with Intention Estimation Using Cooperative Pattern Task. In Artificial General Intelligence. AGI 2017. Lecture Notes in Computer Science; Everitt, T., Goertzel, B., Potapov, A., Eds.; Springer: Cham, Switzerland, 2017; p. 10414.

- Heine, J.; Sylla, M.; Langer, I.; Schramm, T.; Abendroth, B.; Bruder, R. Algorithm for Driver Intention Detection with Fuzzy Logic and Edit Distance. In Proceedings of the 2015 IEEE 18th International Conference on Intelligent Transportation Systems, Gran Canaria, Spain, 15–18 September 2015; pp. 1022–1027.

- Critchfield, T.S.; Reed, D.D. The Fuzzy Concept of Applied Behavior Analysis Research. Behav. Anal. 2017, 15, 123–159.

- Wang, G.-P.; Chen, S.-Y.; Yang, X.; Liu, J. Modeling and analyzing of conformity behavior: A fuzzy logic approach. Optik 2014, 125, 6594–6598.

- Zheng, W.; Turner, L.; Kropczynski, J.; Ozer, M.; Nguyen, T.; Halse, S. LLM-as-a-Fuzzy-Judge: Fine-Tuning Large Language Models as a Clinical Evaluation Judge with Fuzzy Logic. arXiv 2025, arXiv:2506.11221.

- Zongyu, C.; Lu, F.; Zhu, Z.; Li, Q.; Ji, C.; Chen, Z.; Liu, Y.; Xu, R. Bridging the Gap Between LLMs and Human Intentions: Progresses and Challenges in Instruction Understanding, Intention Reasoning, and Reliable Generation. arXiv 2025, arXiv:2502.09101.

- Chellaswamy, C.; Durgadevi, J.J.; Srinivasan, S. An intelligent hand gesture recognition system using fuzzy logic. In Proceedings of the IET Chennai Fourth International Conference on Sustainable Energy and Intelligent Systems (SEISCON 2013), Chennai, India, 12–14 December 2013.

- Ekel, P.I.; Libório, M.P.; Ribeiro, L.C.; Ferreira, M.A.D.d.O.; Pereira Junior, J.G. Multi-Criteria Decision under Uncertainty as Applied to Resource Allocation and Its Computing Implementation. Mathematics 2024, 12, 868.

- Rosário, A.T.; Dias, J.C.; Ferreira, H. Bibliometric Analysis on the Application of Fuzzy Logic into Marketing Strategy. Businesses 2023, 3, 402–423.

- Bin, L.; Su, H.; Gui, L.; Cambria, E.; Xu, R. Aspect-based sentiment analysis via affective knowledge enhanced graph convolutional networks. Knowl.-Based Syst. 2022, 235, 107643.

- Bachate, M.; Suchitra, S. Sentiment analysis and emotion recognition in social media: A comprehensive survey. Appl. Soft Comput. 2025, 174, 112958.

- Hoti, M.; Ajdari, J. Sentiment Analysis Using the Vader Model for Assessing Company Services Based on Posts on Social Media. SEEU Rev. 2023, 18, 19–33.

- Balahadia, F.; Cruz, A.A.C. Analyzing Public Concern Responses for Formulating Ordinances and Laws using Sentiment Analysis through VADER Application. Int. J. Comput. Sci. Res. 2021, 6, 842–856.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).