1. Introduction

In computer vision, interpreting and analyzing high-resolution images is critical for applications such as remote sensing, medical diagnostics, autonomous driving, and industrial inspection. These images often span gigapixel scales, where even large objects occupy only a small fraction of the overall scene. This scale disparity, combined with dense object distributions, complex shapes, and background clutter, makes precise instance segmentation particularly challenging [1]. Additionally, direct full-resolution processing imposes prohibitive memory and computational requirements, necessitating strategies that divide the image into more manageable regions [2].

Instance segmentation extends object detection by producing pixel-level masks for each object, enabling accurate delineation of object boundaries [3]. While this capability is essential for analyzing large-scale imagery, the size and complexity of such data often require tiled inference. Tiling allows segmentation models to operate on smaller image patches without aggressive downsampling, preserving fine details while reducing computational load. Among tiling strategies, SAHI [4] is widely adopted, supporting both non-overlapping and overlapping configurations. Overlapping tiles improve boundary preservation and object completeness but introduce duplicate or fragmented detections, especially along tile edges. Post-processing is essential to reconcile these duplicates into coherent object instances. Standard approaches such as Non-Maximum Suppression (NMS) [5] and Non-Maximum Merge (NMM) [6] rely primarily on Intersection over Union (IoU) thresholds to eliminate or merge detections. However, in tiled high-resolution inference, adjacent fragments may have minimal overlap despite representing the same object, leading to missed merges and inconsistent boundaries.

Recent frameworks have explored various strategies to improve detection performance in large-scale images. Telcceken et al. [7] proposed a multi-stage object detection pipeline combining an Image Slicing Algorithm (ISA), the Segment Anything Model (SAM), and super-resolution (SRGAN) to enhance small object detection in satellite and aerial imagery [8,9]. SegTrackDetect [10] introduced a region-of-interest-driven, window-based approach for tiny object detection, combining semantic segmentation and tracking to limit inference to relevant sub-windows and aggregating results with overlapping box suppression. Yang et al. [11] proposed RS-YOLOX, an improved YOLOX-based detector for remote sensing images that integrates Efficient Channel Attention (ECA), Adaptively Spatial Feature Fusion (ASFF), and the varifocal loss function, with inference adapted to the SAHI tiling framework. While these methods achieve strong performance in detection tasks, they primarily operate at the bounding-box level and focus on architectural or pre-processing improvements, without directly addressing the merging of fragmented masks during post-processing.

Merging fragmented or spatially disjoint instance masks remains a persistent challenge in tiled high-resolution inference, and scalable deduplication methods that explicitly account for spatial proximity and boundary alignment remain underexplored. This gap motivates the development of a post-processing algorithm specifically optimized for tiled inference on high-resolution imagery, capable of efficiently merging fragmented or misaligned masks that IoU-based heuristics fail to associate.

The main contributions of this work are as follows:

Evaluation of a tiling-based training strategy in which large high-resolution aerial images are cropped into 512 × 512 tiles for model training. Our approach, evaluated with YOLOv11, aims to improve large-scale detection by consolidating overlaps while reducing computation. The resulting training dataset is published as iSAID-512Tiles [12] to support reproducibility and further research.

Systematic evaluation of the SAHI method under varying tile overlap configurations to determine the optimal balance between segmentation accuracy and computational efficiency.

Introduction of a novel deduplication algorithm, Spatial Mask Merging (SMM), which merges overlapping or fragmented masks based on pixel-level inclusion and boundary distance metrics and is optimized using R-tree spatial indexing to enable efficient and scalable post-processing.

Comparative evaluation of SMM against established methods, including NMS and NMM, on the iSAID [13] benchmark using standard segmentation performance metrics.

The remainder of this paper is organized as follows:

Section 2 reviews related work on instance segmentation in large-scale images, tile-based inference strategies, and deduplication algorithms.

Section 3 describes the experimental setup, including the proposed SMM algorithm, the tiling strategy for large-image segmentation, and the datasets used.

Section 4 presents the experimental results, including the impact of tile overlap on performance and a comparative analysis of SMM against traditional deduplication methods.

Section 5 discusses the advantages, limitations, and broader applicability of the proposed SMM algorithm.

Finally, Section 6 concludes the paper and outlines directions for future research.

2. Related Work

Instance segmentation of small, irregular objects in high-resolution images remains challenging due to complex shapes, dense object distributions, and extreme scale variations, particularly in domains such as remote sensing and medical imaging [14,15]. Processing such data imposes significant memory and computational demands. To address these challenges, several strategies have been explored, including training on image crops rather than downscaled images [16], applying multi-scale fusion and transformer-based models [14], and designing specialized architectures such as EU-Net for water body extraction [17]. Other promising directions include GAN-assisted training and synthetic data generation to reduce reliance on large annotated datasets [18]. However, relatively few studies investigate the benefits of training on tiled inputs instead of full-resolution images, despite their potential for substantial computational savings.

Slicing-Aided Hyper Inference (SAHI) [4] is a model-agnostic tiling strategy designed to efficiently process high-resolution images by dividing them into smaller tiles. This approach alleviates memory and computational constraints and has been widely adopted in medical imaging, remote sensing, and aerial surveillance. SAHI supports both non-overlapping and overlapping tile configurations: non-overlapping tiles improve efficiency but often miss or fragment objects at tile boundaries, whereas overlapping tiles capture boundary objects more completely, improving accuracy for small or partially visible instances [19].
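To make the overlap parameter concrete, the following is a minimal sketch of how overlapping tile windows can be laid out over a large image. It is written in the spirit of SAHI but is not SAHI's API: the function name `slice_boxes`, the stride rule (tile size minus `int(overlap × tile)`), and the choice to shift the last tile back to the image border are assumptions made for illustration.

```python
import math


def slice_boxes(width, height, tile=512, overlap=0.06):
    """Return (x0, y0, x1, y1) boxes of overlapping square tiles covering an image.

    Consecutive tiles start tile - int(overlap * tile) pixels apart; the last
    tile on each axis is shifted back so that it ends at the image border.
    """
    stride = tile - int(overlap * tile)

    def starts(length):
        if length <= tile:
            return [0]  # a single (possibly partial) tile covers this axis
        count = math.ceil((length - tile) / stride) + 1
        # Clamp the final start so the last tile stays inside the image.
        return [min(k * stride, length - tile) for k in range(count)]

    return [(x, y, min(x + tile, width), min(y + tile, height))
            for y in starts(height) for x in starts(width)]
```

For example, `slice_boxes(4000, 4000, tile=512, overlap=0.06)` yields 81 tiles whose horizontal and vertical neighbors share a 30-pixel strip, compared with 64 tiles at zero overlap.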

NMS [5] is a standard post-processing method in object detection that removes redundant detections by retaining the highest-confidence candidate among overlapping boxes, based on an Intersection over Union (IoU) threshold. While computationally efficient, NMS struggles with fragmented or adjacent objects exhibiting low overlap, a frequent occurrence in instance segmentation. Syncretic-NMS, or Non-Maximum Merge (NMM) [6], addresses this limitation by merging highly correlated neighboring boxes after suppression, preserving more complete object representations in dense scenes. The shortcomings of IoU-based suppression are particularly evident in crowded scenes and tiled inference workflows, where improper suppression may lead to false positives or missed detections [20].
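The failure mode described above can be reproduced with a minimal greedy NMS over axis-aligned boxes. This is a textbook sketch, not the implementation of any particular library, and the box coordinates in the usage note are illustrative rather than taken from iSAID.

```python
def _area(r):
    """Area of an axis-aligned box (x0, y0, x1, y1)."""
    return (r[2] - r[0]) * (r[3] - r[1])


def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x0, y0, x1, y1)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = _area(a) + _area(b) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thr=0.5):
    """Greedy NMS: keep the best-scoring box, discard boxes whose IoU with it
    is >= iou_thr, and repeat. Returns kept indices in descending score order."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if box_iou(boxes[best], boxes[i]) < iou_thr]
    return keep
```

An object spanning x = 0 to 100 that is split at a tile seam with a 6-pixel overlap produces fragments (0, 0, 53, 20) and (47, 0, 100, 20) whose IoU is only 0.06, so `nms` keeps both and the object is counted twice.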

Despite advances in object detection and segmentation post-processing, there remains limited focus on deduplication strategies tailored to tiled inference with overlapping tiles. In high-resolution domains such as satellite and medical imaging, tiling is essential for managing memory and computation, yet it often fragments large objects across tile boundaries. Recent frameworks have advanced tiling-based detection pipelines through enhanced pre-processing, model improvements, and bounding-box-level merging strategies. However, standard NMS and NMM still struggle in these settings, failing to associate detections that exhibit minimal overlap or lie outside predefined spatial search radii [21]. This lack of robust deduplication methods represents a clear gap in the literature. Furthermore, although spatial indexing techniques such as R-trees are widely used in geographic information systems, map services, and spatial databases, they remain underexplored in instance segmentation workflows, where they could accelerate candidate mask retrieval for merging across tile boundaries. A detailed overview of R-tree structure, operations, and applications is provided in Appendix B.

In summary, while prior work has advanced large-scale image analysis through improved detection models and tiling strategies, the integration of spatially efficient post-processing for overlapping-tile instance segmentation, particularly through the use of R-tree indexing for scalable candidate retrieval, remains insufficiently addressed.

3. Materials and Methods

This section explains the proposed SMM deduplication algorithm as a post-processing method, along with the dataset, segmentation backbone, and training strategy used for its evaluation.

3.1. Dataset

iSAID [13] is a benchmark dataset designed explicitly for instance segmentation in aerial imagery. It contains 655,451 object instances across 2806 high-resolution images, covering 15 commonly occurring object categories such as vehicles, ships, buildings, and sports fields.

iSAID is characterized by several distinctive properties: (a) a large number of high-resolution images, (b) dense instance-level annotations, (c) high object density and class imbalance, (d) significant variation in object scale and orientation, and (e) small, ambiguous instances requiring contextual reasoning. Annotations follow the COCO format and were created and validated by professional annotators. The imagery used in iSAID originates from the DOTA-v1.0 dataset [22], which comprises very large aerial images (ranging from … to … pixels) acquired from various sensors, including Google Earth and the JL-1 and GF-2 satellites. All usage of imagery from Google Earth must comply with its terms of use. Both the iSAID and DOTA datasets are available strictly for academic purposes; any commercial use is prohibited.

To facilitate the training of deep segmentation models on standard hardware, a derived dataset, iSAID-512Tiles, has been prepared and publicly released [12]. It consists of approximately 10,000 tiles of size 512 × 512 pixels, extracted from the iSAID training and validation splits. Each tile includes YOLO-format annotations with normalized bounding boxes and class IDs, aligned with iSAID categories. The tiles are partitioned into training (70%), validation (20%), and test (10%) subsets using a random split grouped by source image, so that all tiles from the same original image remain in the same subset to prevent data leakage [23]. This tiling strategy preserves spatial diversity while substantially reducing the memory and computational resources required to process full-size aerial images.
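A split grouped by source image can be sketched as follows. The sketch assumes each tile can be mapped back to its source image through a hypothetical `source_of` callable (for instance, a filename prefix such as `P0864`); because whole source images are assigned, the tile-level proportions only approximate 70/20/10.

```python
import random
from collections import defaultdict


def grouped_split(tile_ids, source_of, fractions=(0.7, 0.2, 0.1), seed=0):
    """Split tiles into (train, val, test) lists so that every tile cut from
    the same source image lands in the same subset (prevents leakage)."""
    groups = defaultdict(list)
    for t in tile_ids:
        groups[source_of(t)].append(t)

    # Shuffle source images, not tiles, with a fixed seed for reproducibility.
    sources = sorted(groups)
    random.Random(seed).shuffle(sources)

    n = len(sources)
    cut1 = round(fractions[0] * n)
    cut2 = cut1 + round(fractions[1] * n)
    parts = (sources[:cut1], sources[cut1:cut2], sources[cut2:])
    return [[t for s in part for t in groups[s]] for part in parts]
```

Splitting at the level of source images rather than tiles is what prevents near-duplicate content from overlapping tiles of one scene appearing in both training and evaluation subsets.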

3.2. Segmentation Backbone and Training Protocol

The baseline segmentation model for evaluating the proposed post-processing pipeline is the YOLOv11s-seg architecture [24], a lightweight instance segmentation network optimized for real-time applications. It comprises 203 layers and approximately 10.1 million parameters, with a computational cost of 35.6 GFLOPs. The architecture integrates convolutional backbones with C2PSA attention modules to enhance spatial awareness with minimal overhead, C3k2 blocks for cross-stage feature reuse, and multi-scale heads for refining mask predictions across network depths.

Training and evaluation were conducted using the Ultralytics YOLOv11 framework in PyTorch [25] (Ultralytics version 8.3.159) on an NVIDIA A100-SXM4 GPU (40 GB VRAM), with Automatic Mixed Precision (AMP) enabled to accelerate computation and reduce memory usage. The model was trained for 100 epochs using a batch size of 16, a learning rate of 0.01, and the SGD optimizer with momentum 0.9, as automatically selected by the framework. Inference benchmarks achieved an average processing speed of 0.9 ms per 512 × 512 tile (excluding I/O). Evaluation followed standard metrics: precision, recall, mean Average Precision at IoU 0.50 (mAP50), and mean Average Precision averaged over IoU thresholds 0.50–0.95 (mAP50–95), reported for both bounding boxes and segmentation masks.

Initialization used pretrained weights from a prior YOLOv11s-seg model trained on the same dataset split but on a reduced subset of ∼6000 images. This earlier model, trained for 100 epochs using the AdamW optimizer (lr = 0.000526, momentum = 0.9), achieved 77.6% precision, 61.2% recall, 67.7% mAP50, and 41.5% mAP50–95 for mask segmentation. These weights provided a better initialization, facilitating faster convergence in the final training run. The resulting model served solely as the baseline segmentation backbone in the subsequent evaluation of the proposed post-processing method.

3.3. Spatial Mask Merging Algorithm (SMM)

The proposed algorithm resolves duplicate, adjacent, or fragmented instance masks by casting merging as a global graph clustering problem [26]. Unlike IoU-only post-processing, decisions are made at the mask level and integrate spatial geometry and semantic consistency. Candidate relations are restricted using an R-tree spatial index that retrieves neighbors within a fixed search radius $r$, after which a weighted compatibility graph is clustered to yield globally consistent groups.

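Let $\mathcal{P} = \{p_i\}_{i=1}^{n}$ denote predictions with $p_i = (M_i, B_i, s_i, c_i)$, where $M_i$ is a binary mask with boundary $\partial M_i$, $B_i$ is an axis-aligned box, $s_i \in [0, 1]$ is a confidence, and $c_i$ is a class label. Define the boundary distance and mask IoU, as follows:

$$d_{ij} = \min_{x \in \partial M_i,\; y \in \partial M_j} \lVert x - y \rVert_2, \qquad \mathrm{IoU}_{ij} = \frac{|M_i \cap M_j|}{|M_i \cup M_j|}. \tag{1}$$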
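A brute-force reference for the two pairwise quantities defined above follows. It assumes masks are stored as sets of (x, y) pixel coordinates and that boundary pixels are those with at least one 4-connected background neighbor; the helper names are hypothetical, and the pairwise loop is quadratic in boundary length, so a production pipeline would more likely rely on distance transforms or contour extraction.

```python
import math

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def boundary(mask):
    """Pixels of a mask (set of (x, y)) that touch a 4-connected background pixel."""
    return {(x, y) for (x, y) in mask
            if any((x + dx, y + dy) not in mask for dx, dy in NEIGHBOURS)}


def mask_iou(a, b):
    """Pixel-level IoU of two masks stored as coordinate sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def boundary_distance(a, b):
    """Minimum Euclidean distance between the boundaries of two non-empty masks."""
    return min(math.dist(p, q) for p in boundary(a) for q in boundary(b))
```

Two fragments separated by a 2-pixel gap have IoU 0 but a boundary distance of 3, which is exactly the case where an IoU-only criterion sees no relation while a proximity criterion does.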

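Neighborhoods are pruned by the R-tree as

$$\mathcal{N}(i) = \{\, j \neq i : \delta(B_i, B_j) \le r \,\}, \tag{2}$$

where $\delta(\cdot, \cdot)$ is the minimum $\ell_2$ box distance and $\mathcal{N}(i)$ is retrieved in $O(\log n + |\mathcal{N}(i)|)$ time on average using an R-tree built over $\{B_i\}_{i=1}^{n}$. For each candidate pair $(i, j)$ with $j \in \mathcal{N}(i)$ and $c_i = c_j$, an edge weight combines geometric overlap, proximity, and confidence, as follows:

$$w_{ij} = \alpha\, \mathrm{IoU}_{ij} + \beta \left(1 - \frac{\min(d_{ij}, r)}{r}\right) + \gamma\, \sigma_{ij}, \tag{3}$$

with score similarity $\sigma_{ij} = 1 - |s_i - s_j|$ and coefficients $\alpha, \beta, \gamma \ge 0$, $\alpha + \beta + \gamma = 1$. The weighted graph is $G = (V, E, w)$ with vertices $V = \{1, \dots, n\}$, edges $E = \{(i, j) : j \in \mathcal{N}(i),\ c_i = c_j,\ w_{ij} \ge \tau_w\}$, and weights $w : E \to [0, 1]$.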
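The candidate-generation and edge-weighting steps can be sketched in plain Python. Two substitutions should be read as assumptions: a uniform-grid spatial hash (`GridIndex`, a hypothetical helper) stands in for the R-tree, and the gap between bounding boxes stands in for the mask boundary distance in the proximity term. The linear weighting, the default coefficients, and the `w_min` edge threshold are likewise illustrative, not the tuned values reported for iSAID.

```python
import math
from collections import defaultdict


def box_gap(a, b):
    """Minimum Euclidean distance between boxes (x0, y0, x1, y1); 0 if they meet."""
    dx = max(0, max(a[0], b[0]) - min(a[2], b[2]))
    dy = max(0, max(a[1], b[1]) - min(a[3], b[3]))
    return math.hypot(dx, dy)


class GridIndex:
    """Uniform-grid spatial hash, used here as a simple stand-in for an R-tree."""

    def __init__(self, boxes, cell):
        self.boxes, self.cell = boxes, cell
        self.cells = defaultdict(list)
        for i, b in enumerate(boxes):
            for key in self._keys(b):
                self.cells[key].append(i)

    def _keys(self, b):
        """Grid cells overlapped by box b."""
        c = self.cell
        return [(gx, gy)
                for gx in range(int(b[0] // c), int(b[2] // c) + 1)
                for gy in range(int(b[1] // c), int(b[3] // c) + 1)]

    def query(self, i, radius):
        """Indices j != i whose box lies within `radius` of box i."""
        b = self.boxes[i]
        grown = (b[0] - radius, b[1] - radius, b[2] + radius, b[3] + radius)
        cand = {j for key in self._keys(grown) for j in self.cells.get(key, ())}
        return sorted(j for j in cand
                      if j != i and box_gap(b, self.boxes[j]) <= radius)


def build_graph(preds, r, alpha=0.5, beta=0.3, gamma=0.2, w_min=0.3):
    """Return {(i, j): w_ij} for same-class pairs i < j within radius r.

    preds: list of dicts with 'mask' (set of (x, y) pixels), 'box'
    (x0, y0, x1, y1), 'score' and 'cls'. Weight = alpha * mask IoU
    + beta * proximity + gamma * score similarity (an assumed form).
    """
    index = GridIndex([p["box"] for p in preds], cell=max(r, 1))
    edges = {}
    for i, p in enumerate(preds):
        for j in index.query(i, r):
            q = preds[j]
            if j <= i or p["cls"] != q["cls"]:
                continue  # each undirected pair once; same class only
            iou = len(p["mask"] & q["mask"]) / len(p["mask"] | q["mask"])
            # Box gap used as a cheap proxy for the mask boundary distance.
            prox = 1.0 - min(box_gap(p["box"], q["box"]), r) / r
            conf = 1.0 - abs(p["score"] - q["score"])
            w = alpha * iou + beta * prox + gamma * conf
            if w >= w_min:
                edges[(i, j)] = w
    return edges
```

Only pairs returned by the spatial query are ever scored, so the number of weight evaluations is governed by local density rather than by the total number of predictions.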

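Global grouping is obtained via correlation clustering by minimizing, over partitions $\mathcal{C} = \{C_1, \dots, C_K\}$ of $V$,

$$J(\mathcal{C}) = \sum_{\substack{(i,j) \in E \\ \mathcal{C}(i) \neq \mathcal{C}(j)}} w_{ij} \;+\; \lambda \sum_{k=1}^{K} \sum_{\substack{i, j \in C_k \\ i < j}} \bigl(1 - w_{ij}\bigr), \tag{4}$$

where $\mathcal{C}(i)$ denotes the cluster containing vertex $i$, $w_{ij} = 0$ for $(i, j) \notin E$, and $\lambda > 0$ controls the trade-off between over-merging and under-merging.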

A common issue in mask merging is chained merging, where objects are grouped indirectly (e.g., $A$ overlaps with $B$ and $B$ overlaps with $C$, but $A$ and $C$ are dissimilar). To avoid this, a pairwise validity constraint is enforced, as follows:

$$\min_{\substack{i, j \in C_k \\ i \neq j}} w_{ij} \;\ge\; \tau \qquad \forall\, C_k \in \mathcal{C},\ |C_k| \ge 2, \tag{5}$$

with $w_{ij} = 0$ for $(i, j) \notin E$, where $\tau$ is a threshold ensuring that all members of a cluster are mutually compatible. This constraint prevents weakly related instances from being linked through intermediates. Algorithm 1 outlines the proposed SMM, where same-class masks within radius $r$ are linked, and each edge weight blends spatial distance, overlap (IoU), and detection score.

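Algorithm 1 Proposed Spatial Mask Merging

Require: predictions $\mathcal{P} = \{p_i\}_{i=1}^{n}$ with $p_i = (M_i, B_i, s_i, c_i)$; search radius $r$; thresholds $\tau_w, \tau$; weights $\alpha, \beta, \gamma$; clustering penalty $\lambda$
Ensure: merged instances $\hat{\mathcal{P}}$

1: $n \leftarrow |\mathcal{P}|$; build R-tree index $R$ over $\{B_i\}_{i=1}^{n}$
2: initialize $E \leftarrow \emptyset$, weight map $W$ ▹ sparse
3: for $i = 1$ to $n$ do
4:   $\mathcal{N}(i) \leftarrow R.\mathrm{query}(B_i, r)$ ▹ via (2)
5:   for each $j \in \mathcal{N}(i)$ with $j > i$ do
6:     if $c_i \neq c_j$ then
7:       continue ▹ only same-class pairs are linked
8:     end if
9:     compute $d_{ij}$ and $\mathrm{IoU}_{ij}$ via (1)
10:    $w_{ij} \leftarrow$ edge weight via (3)
11:    if $w_{ij} \ge \tau_w$ then
12:      $E \leftarrow E \cup \{(i, j)\}$; $W[i, j] \leftarrow w_{ij}$ ▹ undirected: $W[j, i] = W[i, j]$
13:    end if
14:  end for
15: end for
16: $G \leftarrow (V, E, W)$
17: cluster $G$ via (4) under constraint (5), yielding partition $\{C_k\}_{k=1}^{K}$
18: for each $C_k$, compute $\hat{p}_k = \Phi(C_k)$ by mask fusion, box update, and score aggregation
19: return $\hat{\mathcal{P}} = \{\hat{p}_k\}_{k=1}^{K}$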
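Step 17 of Algorithm 1 can be illustrated with a simple greedy agglomerative heuristic for correlation clustering. This is a swapped-in solver chosen for brevity, not necessarily the solver used in this work: it repeatedly merges the pair of clusters that most decreases an assumed objective (cut edge weight plus a penalty `lam` times within-cluster disagreement) and refuses any merge in which some cross pair falls below an anti-chaining threshold `tau`. The greedy merge order is a simplification of true global optimization, and its cost is polynomial but far from linear, so it only suits small graphs or individual connected components.

```python
from itertools import combinations


def cluster(n, edges, lam=0.5, tau=0.4):
    """Greedy agglomerative heuristic for correlation clustering under a
    pairwise-validity (anti-chaining) constraint.

    edges maps (i, j) with i < j to a weight in [0, 1]; absent pairs weigh 0.
    Assumed objective: cut edge weight + lam * sum of (1 - w) inside clusters.
    """
    def weight(i, j):
        return edges.get((min(i, j), max(i, j)), 0.0)

    clusters = [{i} for i in range(n)]
    while True:
        best = None
        for a, b in combinations(range(len(clusters)), 2):
            pairs = [(i, j) for i in clusters[a] for j in clusters[b]]
            # Anti-chaining: every cross pair must be directly compatible,
            # so an A-B-C chain cannot pull A and C together through B.
            if any(weight(i, j) < tau for i, j in pairs):
                continue
            # Change in the objective if clusters a and b were merged:
            # their cut weight disappears, within-cluster disagreement is added.
            delta = sum(lam * (1.0 - weight(i, j)) - weight(i, j)
                        for i, j in pairs)
            if delta < 0 and (best is None or delta < best[0]):
                best = (delta, a, b)
        if best is None:
            return sorted(sorted(c) for c in clusters)
        _, a, b = best
        clusters[a] |= clusters.pop(b)  # b > a, so index a is unaffected
```

With edges (0, 1) and (1, 2) of weight 0.9 but no direct edge between 0 and 2, `cluster(3, {(0, 1): 0.9, (1, 2): 0.9})` returns `[[0, 1], [2]]`: the chain is cut instead of collapsing into a single instance.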

This method formulates mask deduplication as a global graph clustering problem, modeling all predicted instances as vertices in a weighted graph that is then partitioned into clusters by optimizing a unified objective function. Unlike greedy heuristics that make local, stepwise decisions, the clustering approach evaluates the whole graph structure, ensuring globally consistent groupings and preventing indirect or chained merges. The detailed mathematical formulation of the merging function $\Phi$ is provided in Appendix A.
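Appendix A gives the actual merging function; as a stand-in, the following sketch fuses one cluster under simple assumed rules: pixel-wise mask union, a tight box around the fused mask, and the maximum member confidence. These rules and the dictionary field names are hypothetical choices for illustration.

```python
def merge_cluster(preds, members):
    """Fuse the predictions indexed by `members` into a single instance.

    Assumed rules: union of (x, y) pixel sets, tight box around the fused
    mask with exclusive max edges, maximum confidence, shared class label.
    """
    mask = set().union(*(preds[i]["mask"] for i in members))
    xs = [x for x, _ in mask]
    ys = [y for _, y in mask]
    box = (min(xs), min(ys), max(xs) + 1, max(ys) + 1)
    score = max(preds[i]["score"] for i in members)
    return {"mask": mask, "box": box, "score": score,
            "cls": preds[members[0]]["cls"]}
```

Taking the maximum keeps the score of the most confident fragment; averaging would instead penalize clusters that contain weak fragments, which may be preferable when low-confidence duplicates are common.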

3.3.1. Algorithm Parameters

Spatial thresholds, edge-weighting factors, and clustering penalties govern the SMM algorithm. Their roles and typical ranges are summarized in Table 1.

While the search radius and spatial thresholds primarily control candidate generation, the edge-weight coefficients, the clustering penalty, and the anti-chaining threshold regulate the partitioning resolution. Optimal values are application-dependent and vary with object density, morphology, and whether inference is tiled or global.

3.3.2. Time and Space Complexity Analysis

The performance of the SMM algorithm is analyzed in terms of time and space complexity and compared against conventional deduplication methods. Let $n$ denote the number of predicted objects before merging, $m$ the average number of spatial neighbors retrieved per R-tree query, and $k$ the average number of elements in a merge cluster, where typically $m, k \ll n$. Let $C_w$ denote the cost of computing edge weights (distance, IoU, and score similarity) and $C_\Phi(k)$ the cost of applying the merging function to $k$ objects.

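The algorithm begins with R-tree construction over $n$ bounding boxes, requiring $O(n \log n)$ time and $O(n)$ space. Graph construction requires examining $m$ neighbors per object, yielding

$$T_{\text{graph}} = O\bigl(n(\log n + m\,C_w)\bigr).$$

The correlation clustering step is NP-hard in the worst case; however, approximate solvers (LP relaxation with rounding or local heuristics) achieve near-linear complexity in the number of edges, i.e.,

$$T_{\text{cluster}} = \tilde{O}(|E|) = \tilde{O}(nm),$$

where $\tilde{O}(\cdot)$ hides logarithmic factors. The merging of final clusters adds $O\bigl(\tfrac{n}{k}\,C_\Phi(k)\bigr)$. Thus, the overall expected runtime is

$$T_{\text{SMM}} = O\bigl(n \log n + n m\,C_w\bigr) + \tilde{O}(nm) + O\bigl(\tfrac{n}{k}\,C_\Phi(k)\bigr).$$

Since $m$ is bounded by local density and $k$ is small, the runtime scales close to $O(n \log n)$ in practice.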

The space complexity is linear in the number of vertices and edges, as follows:

$$S_{\text{SMM}} = O(n + |E|) = O(n + nm),$$

dominated by the storage of masks and the R-tree index.

In contrast, NMS sorts predictions by confidence and performs pairwise IoU comparisons, requiring $O(n^2)$ time in the worst case. NMM includes additional mask merges, yielding $O\bigl(n^2 + \tfrac{n}{k}\,C_\Phi(k)\bigr)$. Both methods require $O(n)$ space.
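A worked count makes the gap between the quadratic and the neighborhood-restricted regimes concrete. The values $n = 10{,}000$ predictions and $m = 20$ retrieved neighbors per query are hypothetical, chosen only to illustrate the arithmetic:

$$\binom{n}{2} = \frac{10^4 \times 9999}{2} = 49{,}995{,}000 \quad \text{(all pairs)}, \qquad n\,m = 10^4 \times 20 = 200{,}000 \quad \text{(R-tree candidates)}.$$

Under these assumptions, restricting candidates to spatial neighbors evaluates roughly 250 times fewer pairs ($49{,}995{,}000 / 200{,}000 \approx 250$), and the gap widens linearly with $n$ as long as $m$ stays bounded by local density.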

Table 2 summarizes the asymptotic complexity of each method.

The efficiency of SMM stems from avoiding exhaustive pairwise comparisons by restricting edges via R-tree queries, while the clustering formulation ensures global consistency and prevents chained merging. This enables scalability to dense detection scenarios where NMS and NMM suffer quadratic degradation. Performance of the proposed SMM-based instance segmentation pipeline was evaluated using standard detection and segmentation metrics, including accuracy, precision, recall, F1 score, and Panoptic Quality (PQ). These measures were selected to capture both recognition and mask-level agreement, and their formal definitions are provided in Appendix C.
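As a reference for the two headline metrics, here is a per-image, per-class sketch using the common panoptic-quality definition (a match requires IoU > 0.5, and PQ is the sum of matched IoUs divided by TP + FP/2 + FN/2) together with F1 = 2TP / (2TP + FP + FN). Appendix C may differ in details such as matching or aggregation across classes, so this should be read as an illustration under those assumptions.

```python
def pq_and_f1(preds, gts, thr=0.5):
    """Panoptic Quality and F1 for one image and one class.

    preds, gts: lists of masks (sets of pixel coordinates). A prediction
    matches a ground-truth mask when IoU > thr; for thr >= 0.5 such matches
    are unique, so a simple greedy pass needs no assignment solver.
    """
    matched_iou, used = [], set()
    for p in preds:
        for g_idx, g in enumerate(gts):
            if g_idx in used:
                continue
            union = len(p | g)
            iou = len(p & g) / union if union else 0.0
            if iou > thr:
                matched_iou.append(iou)
                used.add(g_idx)
                break
    tp = len(matched_iou)
    fp = len(preds) - tp
    fn = len(gts) - tp
    denom = tp + 0.5 * fp + 0.5 * fn
    pq = sum(matched_iou) / denom if denom else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 0.0
    return pq, f1
```

A ground-truth object predicted as two unmerged halves yields one TP (IoU 0.53) and one FP, i.e. PQ ≈ 0.35 and F1 ≈ 0.67; merging the halves into the full mask raises both to 1.0. This illustrates how deduplication can lift precision and PQ without changing recall.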

4. Results

This section presents the results of our experiments, focusing on the evaluation of tile-based training, optimal overlap configurations in SAHI, and the performance of the SMM algorithm compared with traditional deduplication methods.

4.1. Segmentation Accuracy on the iSAID Dataset

To evaluate the segmentation accuracy of the YOLOv11s-seg model, we validated it on the iSAID dataset across 15 aerial object classes, including both large and small targets. Key metrics include precision, recall, mean Average Precision at an IoU threshold of 0.50 (mAP50), the stricter mAP averaged over IoU thresholds from 0.50 to 0.95 (mAP50–95), and the F1 score. Detailed per-class and overall results are presented in Table 3.

The model performs exceptionally well on structured and well-defined objects such as tennis courts (mAP50–95 = 72.7%) and planes (mAP50–95 = 58.4%). For densely clustered or irregular objects like small vehicles, the model’s performance drops (mAP50–95 = 18.6%), which is consistent with known challenges in segmenting small and overlapping objects in aerial scenes. The bridge class, with few annotated instances and complex geometries, exhibits the lowest performance (mAP50–95 = 11.5%). Despite these outliers, the model achieves an overall segmentation performance of 68.0% mAP50 and 41.3% mAP50–95, along with an F1 score of 0.699, indicating strong generalization across diverse object scales and types.

Efficiency metrics are summarized in Table 4. Inference is highly efficient, with an average per-tile time of 0.9 ms. Pre-processing and post-processing add 0.1 ms and 1.9 ms per tile, respectively. Peak GPU memory usage reached 40.5 GB on an NVIDIA A100-40GB, validating that tile-based training is feasible even on memory-constrained systems.

4.2. Impact of Overlap Ratio on Segmentation Accuracy and Efficiency

To evaluate the trade-off between segmentation quality and computational cost in tile-based inference, an analysis of the effect of varying tile overlap ratios using the SAHI framework was performed. The goal was to identify an overlap range that offers strong segmentation performance without incurring prohibitive inference time.

Figure 1 compares segmentation performance and computational cost across different overlap ratios. The left plot illustrates several segmentation metrics and provides a comprehensive view of both overall consistency and performance variation across the dataset. The right plot shows the average inference time per image, measured over 30 randomly selected samples.

The results show that segmentation performance improves as the overlap ratio increases from 0% to around 6%, where metrics such as F1 score and recall peak. Between 6% and 9%, these metrics plateau, indicating diminishing returns. Beyond 9%, segmentation performance begins to decline or stagnate, suggesting that additional overlap does not provide meaningful benefit. Average precision remains relatively stable across all overlaps, ranging between 0.53 and 0.59, while accuracy stays consistently high, reflecting robust background classification even in worst-case scenarios.

In contrast, inference time increases sharply with higher overlap ratios. For low overlap values (3–9%), average inference time remains under 5 s per image. However, as overlap exceeds 12%, redundancy from overlapping tiles significantly increases processing time, with inference exceeding 6.5 s per image at 18% and above. This highlights the trade-off between segmentation accuracy and computational efficiency, reinforcing the value of identifying an optimal overlap range.
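A back-of-the-envelope tile count helps explain why runtime grows with overlap. Assuming a stride of $t - \lfloor o\,t \rfloor$ for tile size $t$ and overlap ratio $o$, with the last tile shifted to end at the border, and taking a hypothetical $4000 \times 4000$ image with $t = 512$, the number of tiles per axis is:

$$
N(W) = \left\lceil \frac{W - t}{t - \lfloor o\,t \rfloor} \right\rceil + 1,
\qquad
\begin{aligned}
o = 0.00:&\quad \lceil 3488/512 \rceil + 1 = 8 \;\Rightarrow\; 64 \text{ tiles},\\
o = 0.06:&\quad \lceil 3488/482 \rceil + 1 = 9 \;\Rightarrow\; 81 \text{ tiles},\\
o = 0.09:&\quad \lceil 3488/466 \rceil + 1 = 9 \;\Rightarrow\; 81 \text{ tiles},\\
o = 0.18:&\quad \lceil 3488/420 \rceil + 1 = 10 \;\Rightarrow\; 100 \text{ tiles}.
\end{aligned}
$$

Under these assumptions, moving from 0% to 18% overlap multiplies the number of model invocations by about 1.56; measured runtimes additionally reflect the extra duplicate predictions that post-processing must reconcile, so the tile count is only part of the picture.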

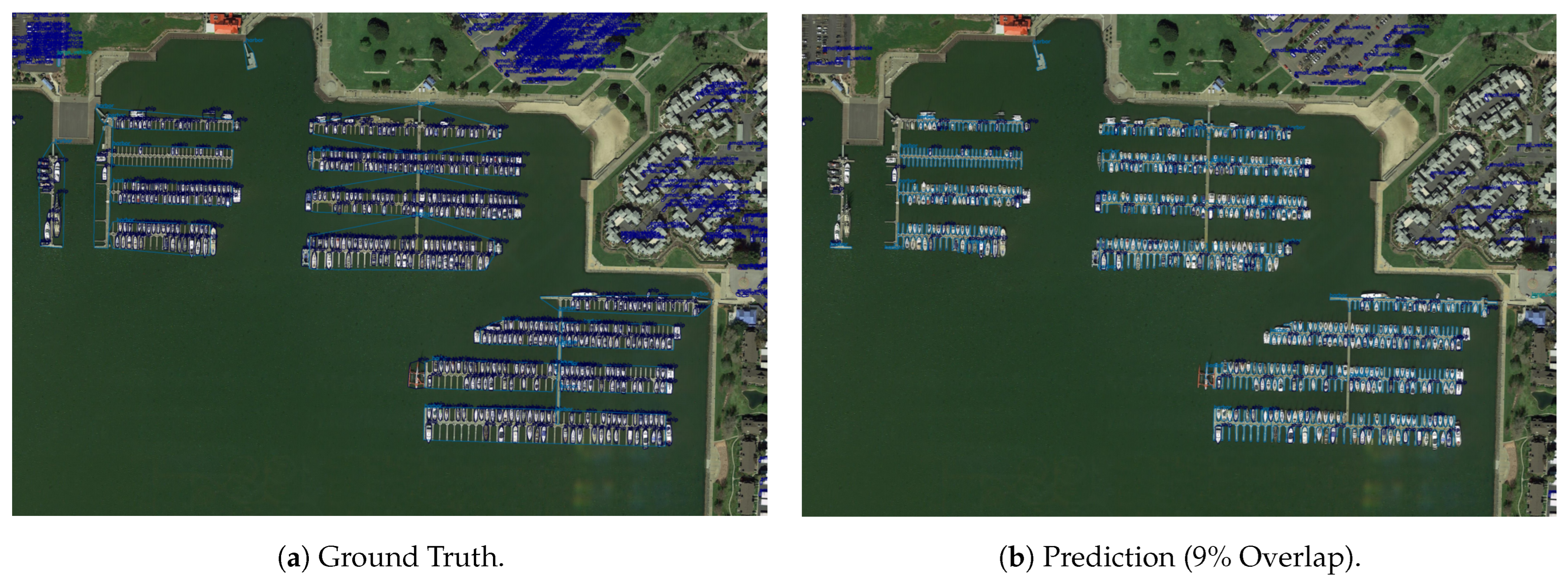

Figure 2 presents a qualitative comparison for the image P0864. The left panel shows the ground truth (GT) instance annotations, while the right panel depicts the prediction results using a 9% tile overlap during inference. For this particular image, the average F1 score improved by approximately 10.4% when increasing the overlap ratio from 0% to 9%. This reflects a more accurate alignment between predicted and ground truth masks, in terms of both object coverage and classification.

Figure 3 shows segmentation results using 0% (blue) and 6% (red) tile overlap. The comparison highlights how even a small overlap improves object coverage, especially near tile boundaries, by recovering instances missed at 0%. Although higher overlaps offer marginal gains in some metrics, the increased computational cost does not justify their use in most scenarios. Therefore, we recommend an overlap ratio of 6% to 9% as the optimal configuration, as this range achieves strong segmentation performance while maintaining reasonable inference times.

4.3. Evaluation of Proposed SMM on the iSAID Dataset

This section evaluates the performance of the proposed SMM algorithm on the challenging iSAID dataset and compares it against NMS, NMM, and Greedy NMM (GNMM) baselines. The iSAID dataset, being a large-scale instance segmentation benchmark with a significant proportion of small objects, presents unique challenges. Alongside segmentation quality, runtime performance was treated as a key evaluation metric, enabling a direct comparison of the computational efficiency of SMM with NMS, NMM, and GNMM. Our evaluation highlights both runtime and accuracy trade-offs across the methods.

The most pronounced gains with the SMM algorithm were observed in Panoptic Quality (PQ) and precision, where SMM outperformed the next-best method, NMM, by 3.1% and 6.9%, respectively. The F1 score also increased by 3.2%, reflecting a better balance between false positives and false negatives. Accuracy improved by 2.6%, while recall exhibited a marginal gain of 0.06% compared with the runner-up algorithm. These consistent improvements highlight the effectiveness of the mask merging strategy in SMM, particularly in densely packed scenes with many small and overlapping objects, as is characteristic of the iSAID dataset. While SMM exhibits higher execution time and memory usage than NMS, which is highly efficient but does not perform any merging, it demonstrates clear advantages over NMM and GNMM, offering the best trade-off between computational overhead and segmentation quality. In this context, SMM’s ability to consolidate overlapping masks leads to measurable accuracy gains, underscoring the value of mask merging for large-scale instance segmentation.

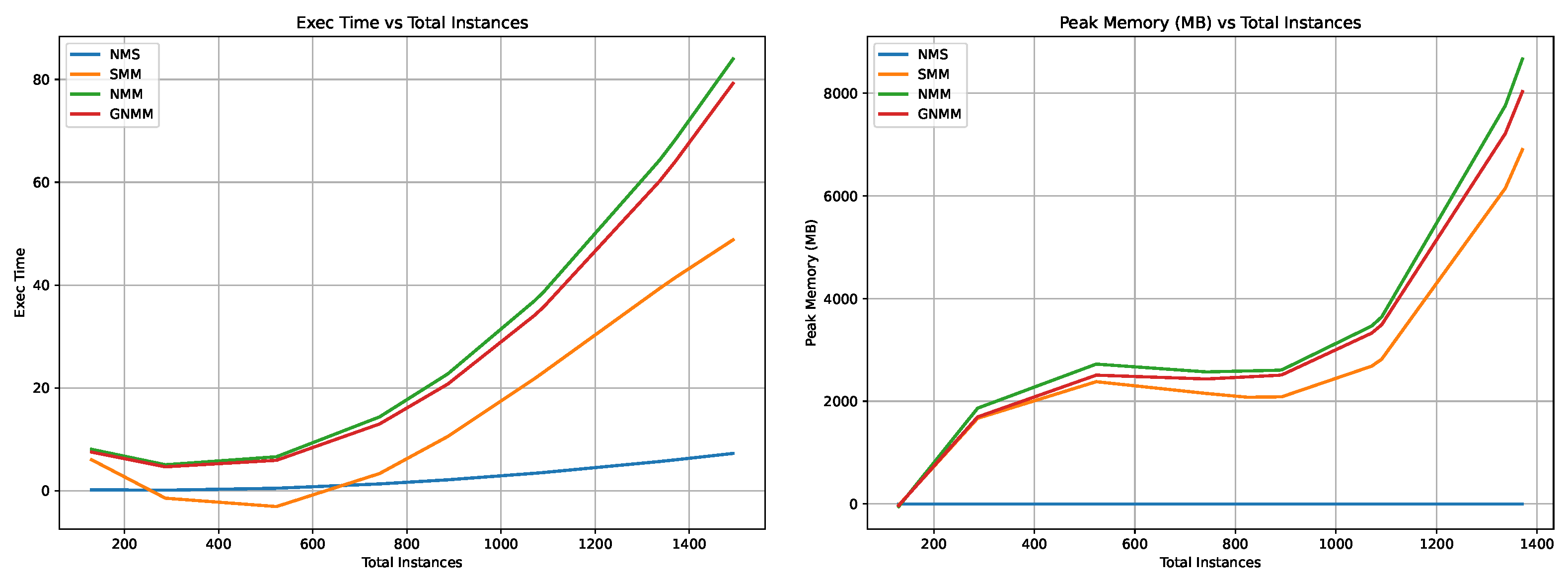

Figure 4 illustrates the computational efficiency of the evaluated methods across varying instance densities. NMS clearly dominates in both speed and memory, maintaining nearly flat trends regardless of instance count. SMM demonstrates favorable scalability, with execution time and memory growing moderately with the number of instances, peaking well below GNMM and NMM. GNMM incurs higher overhead than SMM but remains consistently lighter than NMM, which exhibits the steepest rise in both runtime and memory. These results validate SMM as a strong compromise between efficiency and segmentation refinement, particularly in dense scenes where NMS underperforms due to the absence of merging.

A Tree-structured Parzen Estimator (TPE), a Bayesian optimization algorithm, was run over the predefined ranges in Table 1 on the iSAID validation split to maximize the mean F1 score across prediction–GT pairs. Each trial evaluated the complete file set using GPU-accelerated, batched mask IoU computation, while a median-based pruner stopped underperforming trials early. The best configuration achieved a mean F1 score of 0.7585.

Table 5 reports the selected hyperparameters together with their post hoc relative importances from the hyperparameter tuning study.

The most influential factors are , , and , with highlighted as the most dominant parameter. This aligns with iSAID’s crowded, small-object scenes, where careful spatial gating and conservative clique formation reduce over-merging of adjacent instances. The high value for (0.859) favors merges only when overlap is substantial, which is again appropriate for densely packed vehicles and ships. shows moderate impact, while (confidence weight) and have low importances. is largely subsumed by the neighborhood defined via . All best values lie comfortably inside the suggested ranges, indicating that those ranges are well chosen. Subsequent tuning could focus on , , and for iSAID-like distributions.

In summary, the overall improvements introduced by SMM are consistent and well targeted. The most significant gain is in PQ, where SMM outperforms the next-best method by 3.1% and exceeds NMS by more than 40%. Precision also improves by nearly 7% compared with the next-best baseline, highlighting SMM’s enhanced ability to suppress false positives. Alongside modest but steady gains in Accuracy, Recall, and F1 score, these results establish SMM as a robust and flexible merging strategy, balancing computational cost with notable gains in segmentation quality on the challenging iSAID benchmark.

5. Discussion

SMM models the entire set of predictions as a weighted graph, where edges encode similarity through a combination of boundary distance, IoU, and confidence. This global formulation allows clustering under a single optimization objective rather than local, stepwise merging. The inclusion of an anti-chaining constraint further ensures that all members of a cluster are mutually compatible, avoiding indirect merges where objects are linked only through intermediate connections. The favorable runtime scaling of SMM relative to NMM (Figure 4) is directly attributable to the use of R-tree spatial indexing, which reduces neighbor retrieval from quadratic to near-linear complexity. Graph clustering adds an optimization step, but approximate solvers operate in polynomial time and remain efficient in practice, validating the feasibility of this global approach without the need for an explicit ablation.

While SMM delivers measurable gains in segmentation quality, these improvements come with computational overheads. As shown in Table 6, SMM incurs a longer execution time and higher peak memory usage than NMS, which remains the most efficient baseline. Overhead arises primarily from computing edge weights (distance and IoU) and performing clustering. Although R-tree indexing mitigates this by restricting unnecessary comparisons, memory usage increases with the number of candidate edges stored in dense scenes. Bold values in Table 6 indicate the best results across algorithms.

Another limitation is the sensitivity of SMM to its hyperparameters. While the Bayesian-guided search produced robust configurations on iSAID, transferring the same values to datasets with different object scales, densities, or score calibration may degrade performance. Moreover, the current formulation is frame-wise and does not enforce temporal consistency. Extending SMM with sequence-level constraints (e.g., track-aware clustering or temporal smoothness on edges) is a promising direction for future work.

The main advantages of SMM are as follows:

Improved precision and Panoptic Quality, achieved by integrating multiple cues into a global clustering objective that reduces false positives and merges fragmented detections.

Globally consistent merging through full-graph evaluation and anti-chaining constraints that prevent inconsistent groupings often introduced by greedy heuristics.

Scalable candidate retrieval enabled by R-tree indexing, which ensures sub-quadratic edge construction and supports large-scale datasets.

Robust tile-aware consolidation that resolves both redundant overlaps and fragmented boundaries, producing coherent outputs across tiled image regions.

Compatibility with diverse pipelines, as SMM is model-agnostic and functions purely as a post-processing step for both tiled and non-tiled inference workflows.

Because it is model-agnostic and operates purely on predicted instances, SMM can be applied to other domains where fragmented or duplicated detections occur. Applications include medical imaging (merging fragmented organ or lesion segmentations across overlapping fields of view), industrial inspection (consolidating detections of small defects on high-resolution product scans), and autonomous driving (resolving duplicated detections of pedestrians or vehicles across overlapping sensor fields).

This work builds on general principles of correlation clustering but adapts them to mask merging by defining task-specific edge weights, introducing an anti-chaining constraint, and integrating R-tree acceleration and a dedicated merging function. These adaptations address a persistent gap in existing deduplication methods. By combining global optimization with efficient spatial indexing, SMM achieves consistent gains in precision, F1 score, and PQ without prohibitive cost, and represents a practical and generalizable post-processing strategy.

6. Conclusions

This paper examines post-processing challenges in instance segmentation for high-resolution imagery, with a focus on handling objects across overlapping tiles. In this work, small 512 × 512 tiles were deliberately adopted to reduce computational demands and enable training on memory-constrained hardware. We evaluated the feasibility of training instance segmentation models on such tiles and demonstrated that tile-based approaches not only reduce computational demands but also enhance local precision. Furthermore, an optimal overlap ratio in the range of 6% to 9% was identified for slicing-aided hyper inference, balancing improved segmentation performance with computational efficiency. On a representative image (P0864), increasing the overlap from 0% to 9% improved the average F1 score by approximately 10.4%.

To mitigate the detection redundancy introduced by tiling, the SMM algorithm was introduced: an R-tree-accelerated graph clustering method that integrates pixel-level overlap, boundary proximity, and detection confidence rather than relying solely on box IoU, and that is well suited to scenes with a large number of predicted instances. Comparative analysis against baseline algorithms showed that SMM handles fragmented and adjacent instance detections more effectively, yielding consistent improvements in segmentation performance. Specifically, SMM achieved a 3.2% increase in F1 score, a gain of nearly 7% in precision, and a 3.1% improvement in Panoptic Quality (PQ) over the next-best method.

Future work will focus on adaptive parameter tuning for SMM to optimize its deduplication behavior across additional datasets and segmentation models. Incorporating temporal consistency is another direction, particularly for video or time-series segmentation. Integration with real-time inference pipelines should also be investigated to enable faster, more scalable deployment in practical applications.

Author Contributions

Conceptualization, formal analysis, and validation, M.M. (Marko Mihajlovic) and M.M. (Marina Marjanovic); methodology, software, investigation, resources, data curation, writing—original draft, and visualization, M.M. (Marko Mihajlovic); supervision, project administration, funding acquisition, and writing—review and editing, M.M. (Marina Marjanovic). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The experiments conducted in this study are based on the iSAID dataset [13], a large-scale, densely annotated benchmark for instance segmentation in aerial images. iSAID contains 2806 high-resolution images with a total of 655,451 annotated object instances across 15 common categories. All annotations are pixel-accurate and were generated by expert annotators. The dataset is publicly available at https://captain-whu.github.io/iSAID/ (accessed on 7 July 2025). To support reproducibility and future research, the derived iSAID-512Tiles dataset, comprising approximately 10,000 tiled images with YOLO-format annotations, has been publicly released at https://github.com/mihajlov39547/iSAID-Sliced-512 [12] (accessed on 7 July 2025).

The YOLO models were implemented using the open-source Ultralytics YOLO repository (version 8.3.163), available at https://github.com/ultralytics/ultralytics (accessed on 7 July 2025) and licensed under the GNU Affero General Public License v3.0 (AGPL-3.0). All deduplication algorithms were implemented under controlled and uniform conditions to ensure a fair comparison, following established practices from academic and applied computer vision literature. These implementations are research-grade prototypes and are not optimized for production; the reported results should therefore be interpreted in that context. Raw predictions were generated using the InferenceSlicer from the supervision library (version 0.22.0) in conjunction with the inference framework (version 0.30.0). The supervision library is available at https://github.com/roboflow/supervision (accessed on 7 July 2025) and is licensed under the MIT License. The inference framework is available at https://github.com/roboflow/inference (accessed on 7 July 2025) and is also licensed under the MIT License. No proprietary or restricted data or software were used in this study.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AdamW | Adaptive Moment Estimation with Weight Decay |
| AMP | Automatic Mixed Precision |
| ASFF | Adaptively Spatial Feature Fusion |
| CIoU | Complete Intersection over Union |
| CN | Correct Negative |
| CNN | Convolutional Neural Network |
| CP | Correct Positive |
| ECA | Efficient Channel Attention |
| FLOPs | Floating Point Operations |
| FP | False Positive |
| GFLOPs | Giga Floating Point Operations |
| GNMM | Greedy NMM |
| GPU | Graphics Processing Unit |
| GT | Ground Truth |
| IN | Incorrect Negative |
| IP | Incorrect Positive |
| IoU | Intersection over Union |
| ISA | Image Slicing Algorithm |
| mAP | Mean Average Precision |
| mAP50 | Mean Average Precision at 50% IoU |
| MSE | Mean Squared Error |
| NMM | Non-Maximum Merging |
| NMS | Non-Maximum Suppression |
| PQ | Panoptic Quality |
| PSO | Particle Swarm Optimization |
| R-Tree | Rectangle Tree |
| SAHI | Slicing-Aided Hyper Inference |
| SAM | Segment Anything Model |
| SGD | Stochastic Gradient Descent |
| SMM | Spatial Mask Merging |
| SRGAN | Super-Resolution Generative Adversarial Network |
| TPE | Tree-structured Parzen Estimator |
| YOLO | You Only Look Once |

Appendix A. Merging Function Details

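Let $A = \{a_1, \dots, a_k\}$ be a valid merge group obtained from the clustering stage, where each $a_i = (M_i, B_i, s_i, c_i)$ consists of a binary mask $M_i \in \{0,1\}^{H \times W}$, an axis-aligned bounding box $B_i = (x_1^{i}, y_1^{i}, x_2^{i}, y_2^{i})$, a confidence score $s_i \in [0, 1]$, and a class label $c_i = c$ shared by all $a_i \in A$.

The merging function $\mathcal{M}$ is defined as follows:

Mask fusion: Combine masks via pixel-wise logical OR:

$$M^{*}(x, y) = \bigvee_{i=1}^{k} M_i(x, y).$$

Bounding box update: Compute the minimal axis-aligned rectangle enclosing all boxes:

$$B^{*} = \left(\min_i x_1^{i},\; \min_i y_1^{i},\; \max_i x_2^{i},\; \max_i y_2^{i}\right).$$

Score aggregation: The merged score can be defined as the arithmetic mean

$$s^{*} = \frac{1}{k} \sum_{i=1}^{k} s_i,$$

or as a mean weighted by mask size

$$s^{*} = \frac{\sum_{i=1}^{k} |M_i| \, s_i}{\sum_{i=1}^{k} |M_i|},$$

where $|M_i|$ denotes the number of foreground pixels in $M_i$.

Class label assignment: Since all members of $A$ share the same label, the merged label is $c^{*} = c$.

The merged object is therefore

$$\mathcal{M}(A) = (M^{*}, B^{*}, s^{*}, c^{*}).$$

The function satisfies the following:

Idempotence: $\mathcal{M}(\{\mathcal{M}(A)\}) = \mathcal{M}(A)$.

Symmetry: $\mathcal{M}(A)$ is invariant to the ordering of elements in $A$.

Mask preservation: $M_i \subseteq M^{*}$ for all $a_i \in A$ (viewing each mask as its set of foreground pixels), ensuring no loss of coverage.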

Because each group $A$ is produced by the clustering stage, which already excludes chained groupings, this definition consolidates spatially and semantically consistent detections into a single coherent instance and improves the robustness of tiled inference without introducing inconsistent groupings.
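A minimal NumPy sketch of the merging function defined above is shown next. It assumes each detection is a dictionary with hypothetical keys `mask` (boolean H × W array), `box` as (x1, y1, x2, y2), `score`, and `label`. It is an illustration of the definitions, not the authors' implementation.

```python
import numpy as np


def merge_group(group, weighted=False):
    """Merge detections sharing one label: OR masks, enclosing box, mean score."""
    labels = {d["label"] for d in group}
    if len(labels) != 1:
        raise ValueError("all members of a merge group must share one class label")
    masks = np.stack([d["mask"] for d in group])         # shape (k, H, W)
    merged_mask = np.logical_or.reduce(masks, axis=0)     # pixel-wise logical OR
    boxes = np.asarray([d["box"] for d in group], dtype=float)
    merged_box = (float(boxes[:, 0].min()), float(boxes[:, 1].min()),
                  float(boxes[:, 2].max()), float(boxes[:, 3].max()))
    scores = np.asarray([d["score"] for d in group], dtype=float)
    if weighted:
        areas = masks.reshape(len(group), -1).sum(axis=1)  # |M_i| foreground pixels
        score = float(np.average(scores, weights=areas))
    else:
        score = float(scores.mean())
    return {"mask": merged_mask, "box": merged_box, "score": score, "label": labels.pop()}


def strip(col0, col1, score, shape=(10, 40)):
    """Toy detection: a full-height strip covering columns [col0, col1)."""
    mask = np.zeros(shape, dtype=bool)
    mask[:, col0:col1] = True
    return {"mask": mask, "box": (col0, 0, col1, shape[0]), "score": score, "label": "plane"}


a, b = strip(0, 10, 0.9), strip(8, 23, 0.8)  # 100 px and 150 px fragments
merged = merge_group([a, b])
merged_w = merge_group([a, b], weighted=True)
print(merged["box"], int(merged["mask"].sum()),
      round(merged["score"], 3), round(merged_w["score"], 3))
# (0.0, 0.0, 23.0, 10.0) 230 0.85 0.84
```

Because `merge_group` only takes minima, maxima, a logical OR, and a (weighted) mean, its result does not depend on the order of the group, and applying it to a single already-merged detection returns that detection unchanged, consistent with the symmetry and idempotence properties listed above.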

Appendix B. Insights into R-Tree Spatial Indexing

The R-tree is a height-balanced, tree-based spatial indexing structure, similar in design to a B-tree. It was introduced by Antonin Guttman in 1984 [27] to efficiently manage spatial data objects that occupy non-zero regions in multi-dimensional space. Each node in an R-tree corresponds to a disk page (in disk-resident implementations), and the structure is fully dynamic, supporting insertions, deletions, and searches without requiring periodic reorganization. An R-tree stores spatial objects using minimum bounding rectangles (MBRs). Leaf nodes contain entries of the form $(I, \text{tuple-identifier})$, where $I$ is the MBR of a spatial object and the identifier points to the actual data tuple. Non-leaf nodes contain entries of the form $(I, \text{child-pointer})$, where $I$ is the smallest rectangle that spatially contains all rectangles in the child node [28].

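Let M be the maximum number of entries that fit in one node and m ≤ M/2 the minimum number of entries per node. An R-tree satisfies the following structural properties: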

Every leaf node contains between m and M entries, unless it is the root.

For each entry in a leaf node, I is the smallest rectangle that spatially contains the corresponding data object.

Every non-leaf node has between m and M children, unless it is the root.

For each entry in a non-leaf node, I is the smallest rectangle that spatially contains all rectangles in the child node.

The root node has at least two children unless it is a leaf.

All leaf nodes appear at the same level.

The height of an R-tree containing N entries is at most $\lceil \log_m N \rceil - 1$, since every node has a branching factor of at least m. In practice, nodes tend to have more than m entries, which improves space utilization and reduces tree height. Most of the space is used by leaf nodes, and the parameter m can be tuned experimentally for performance optimization.

The R-tree is constructed incrementally through successive insertions. Each spatial object is inserted into the tree using the Insert algorithm, which ensures that the tree remains balanced and that bounding rectangles are updated appropriately.

The insertion process involves the following steps:

ChooseLeaf: Starting from the root, the algorithm descends the tree to find the appropriate leaf node. At each level, it selects the child node whose bounding rectangle requires the least enlargement to include the new entry. Ties are broken by choosing the rectangle with the smallest area.

Insert Entry: If the selected leaf node has space, the new entry is inserted directly. If the node is full, it is split and the split is propagated upward using the AdjustTree algorithm.

AdjustTree: This algorithm updates the bounding rectangles of all ancestor nodes to reflect the insertion. If a split propagates to the root, a new root is created, increasing the height of the tree by one.
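The steps above can be sketched in Python for the simple case in which the chosen leaf still has free space, so that SplitNode and split propagation are omitted. The `Node` layout (child nodes for internal nodes, data rectangles for leaves) and the function name `insert_without_split` are illustrative assumptions rather than a disk-page format.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Rect = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def area(r: Rect) -> float:
    return (r[2] - r[0]) * (r[3] - r[1])


def union(a: Rect, b: Rect) -> Rect:
    """Smallest rectangle enclosing both a and b."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))


@dataclass
class Node:
    name: str
    rect: Rect                                            # MBR of everything below
    children: List["Node"] = field(default_factory=list)  # empty => leaf node
    entries: List[Rect] = field(default_factory=list)     # data MBRs held by a leaf


def insert_without_split(root: Node, new_rect: Rect) -> Node:
    """ChooseLeaf + AdjustTree, assuming the chosen leaf still has room."""
    path = [root]
    while path[-1].children:
        # ChooseLeaf: least enlargement, ties broken by smallest area.
        path.append(min(path[-1].children,
                        key=lambda c: (area(union(c.rect, new_rect)) - area(c.rect),
                                       area(c.rect))))
    leaf = path[-1]
    leaf.entries.append(new_rect)
    for node in reversed(path):  # AdjustTree: enlarge each MBR on the path
        node.rect = union(node.rect, new_rect)
    return leaf


left = Node("L1", (0, 0, 10, 10), entries=[(0, 0, 10, 10)])
right = Node("L2", (20, 0, 30, 10), entries=[(20, 0, 30, 10)])
root = Node("root", (0, 0, 30, 10), children=[left, right])
chosen = insert_without_split(root, (11, 0, 13, 5))
print(chosen.name, chosen.rect, root.rect)  # L1 (0, 0, 13, 10) (0, 0, 30, 10)
```

Enlarging L1 costs 30 units of area versus 90 for L2, so the new rectangle goes to L1. Because entries are only added here, taking the union with the new rectangle yields the same tight MBR that recomputing it from all entries would.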

Searching in an R-tree is performed by recursively traversing the tree from the root. The search algorithm identifies all entries whose bounding rectangles overlap with a given search region.

The search procedure operates as follows:

Internal Nodes: For each entry in the current node, if the bounding rectangle overlaps the search region, the corresponding subtree is recursively searched.

Leaf Nodes: Each entry is checked to determine whether its rectangle overlaps the search region. If so, the entry is reported as a qualifying result.

This recursive search strategy allows the R-tree to efficiently prune large portions of the search space, especially when bounding rectangles are tight and well separated. However, due to possible overlaps, multiple subtrees may need to be explored, which can affect worst-case performance.
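The recursive search can be sketched in a few lines of Python. The tiny hand-built tree and the `visited` list, which records the nodes opened during a query, are illustrative assumptions used to show how a subtree whose rectangle misses the query region is pruned without being read.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

Rect = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def overlaps(a: Rect, b: Rect) -> bool:
    """True if two closed rectangles share at least one point."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


@dataclass
class Node:
    leaf: bool
    # leaf: (MBR, object id); internal: (MBR, child node)
    entries: List[Tuple[Rect, Union[int, "Node"]]]


def search(node: Node, region: Rect, visited: List[Node]) -> List[int]:
    """Recursive R-tree range search; `visited` records which nodes were opened."""
    visited.append(node)
    hits: List[int] = []
    for rect, payload in node.entries:
        if not overlaps(rect, region):
            continue  # prune this entry (and, if internal, its whole subtree)
        if node.leaf:
            hits.append(payload)
        else:
            hits.extend(search(payload, region, visited))
    return hits


leaf_a = Node(True, [((0, 0, 5, 5), 1), ((6, 6, 9, 9), 2)])
leaf_b = Node(True, [((20, 20, 25, 25), 3)])
root = Node(False, [((0, 0, 9, 9), leaf_a), ((20, 20, 25, 25), leaf_b)])

visited: List[Node] = []
print(search(root, (4, 4, 7, 7), visited), len(visited))  # [1, 2] 2
```

Only the root and `leaf_a` are opened; `leaf_b` is skipped because its bounding rectangle does not intersect the query region.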

Although R-trees offer efficient spatial indexing, their use in traditional methods such as Non-Maximum Suppression (NMS) and Non-Maximum Merging (NMM) is limited. These algorithms perform iterative pairwise comparisons and update the active detection set after every suppression or merge. They also depend on overlap-based metrics such as Intersection over Union (IoU) or mask similarity, which R-trees, designed to index bounding rectangles, do not inherently compute [29]. Maintaining the R-tree under such frequent removals and merges would require continuous updates to its structure, diminishing its performance benefits [30].

Appendix C. Evaluation Metrics

To assess the performance of the proposed instance segmentation pipeline, we compute a set of standard evaluation metrics widely used in segmentation and object detection: accuracy, precision, recall, and F1 score. These metrics provide complementary insights into detection quality and mask-level agreement and are especially relevant for benchmarking segmentation methods in high-resolution, densely populated scenes [31].

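Evaluation focuses on instance-level matching, comparing predicted object masks against ground truth annotations. The following definitions are used: CP (correct positives, predicted instances matched to a ground truth instance), IP (incorrect positives, predictions with no matching ground truth instance), and IN (incorrect negatives, ground truth instances left unmatched).

Precision, recall, and F1 score are defined as follows:

$$\mathrm{Precision} = \frac{CP}{CP + IP}, \qquad \mathrm{Recall} = \frac{CP}{CP + IN}, \qquad F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$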
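For example, the definitions can be evaluated with the short function below; the function name `detection_metrics` and the counts are hypothetical and serve only as an illustration, not as results from this study.

```python
def detection_metrics(cp: int, ip: int, in_: int) -> dict:
    """Precision, recall, and F1 from instance-level match counts."""
    precision = cp / (cp + ip) if cp + ip else 0.0
    recall = cp / (cp + in_) if cp + in_ else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for illustration only.
m = detection_metrics(cp=80, ip=20, in_=40)
print({k: round(v, 3) for k, v in m.items()})
# {'precision': 0.8, 'recall': 0.667, 'f1': 0.727}
```

The F1 formula simplifies to $2CP / (2CP + IP + IN)$, which makes clear that suppressing duplicate fragments (fewer IP) raises F1 even when CP is unchanged.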

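In addition to the F1 score, we report Panoptic Quality (PQ), a metric designed for panoptic segmentation. PQ jointly evaluates the recognition and segmentation performance of a model as the product of two components, Segmentation Quality (SQ) and Recognition Quality (RQ). Treating CP as the set of matched prediction and ground truth pairs $(p, g)$,

$$\mathrm{PQ} = \underbrace{\frac{\sum_{(p,g) \in CP} \mathrm{IoU}(p,g)}{|CP|}}_{\mathrm{SQ}} \times \underbrace{\frac{|CP|}{|CP| + \tfrac{1}{2}|IP| + \tfrac{1}{2}|IN|}}_{\mathrm{RQ}},$$

where, in the standard formulation, a predicted mask $p$ and a ground truth mask $g$ form a match when $\mathrm{IoU}(p, g) > 0.5$.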

This decomposition provides a more interpretable assessment of model performance by separating segmentation precision from instance recognition ability. PQ penalizes both over-segmentation, since the unmatched pieces of a fragmented mask count as incorrect positives, and missed instances, which count as incorrect negatives. This makes it particularly suitable for evaluating deduplication methods aimed at reducing mask redundancy.
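The effect of fragmentation on PQ can be illustrated with a small single-class sketch. It uses greedy one-to-one matching at IoU > 0.5 on toy masks; the functions `mask_iou`, `panoptic_quality`, and `strip` are hypothetical helpers, not the evaluation code used in this study, and the standard metric additionally averages PQ over classes.

```python
import numpy as np


def mask_iou(a: np.ndarray, b: np.ndarray) -> float:
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 0.0


def panoptic_quality(preds, gts, thr=0.5):
    """Single-class (PQ, SQ, RQ); a pair matches when IoU > thr, one-to-one."""
    matched_ious, used_gt = [], set()
    for p in preds:
        for j, g in enumerate(gts):
            if j in used_gt:
                continue
            iou = mask_iou(p, g)
            if iou > thr:
                matched_ious.append(iou)
                used_gt.add(j)
                break
    cp = len(matched_ious)
    ip, in_ = len(preds) - cp, len(gts) - cp
    sq = sum(matched_ious) / cp if cp else 0.0
    denom = cp + 0.5 * ip + 0.5 * in_
    rq = cp / denom if denom else 0.0
    return sq * rq, sq, rq


def strip(col0, col1, shape=(10, 20)):
    m = np.zeros(shape, dtype=bool)
    m[:, col0:col1] = True
    return m


gt = [strip(0, 16)]                                 # one ground truth object
fragmented = [strip(0, 10), strip(8, 16)]           # split across a tile seam
merged = [np.logical_or(fragmented[0], fragmented[1])]
print(panoptic_quality(fragmented, gt))  # PQ about 0.417 (SQ 0.625, RQ about 0.667)
print(panoptic_quality(merged, gt))      # (1.0, 1.0, 1.0)
```

The fragmented prediction loses on both factors: its matched piece covers only part of the object (lower SQ), and the leftover piece counts as an incorrect positive (lower RQ). Merging the fragments restores both.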

References

1. Yang, F.; Yuan, X.; Ran, J.; Shu, W.; Zhao, Y.; Qin, A.; Gao, C. Accurate instance segmentation for remote sensing images via adaptive and dynamic feature learning. Remote Sens. 2021, 13, 4774.

2. Rolih, B.; Ameln, D.; Vaidya, A.; Akcay, S. Divide and conquer: High-resolution industrial anomaly detection via memory efficient tiled ensemble. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 3866–3875.

3. Jiao, L.; Zhang, F.; Liu, F.; Yang, S.; Li, L.; Feng, Z.; Qu, R. A survey of deep learning-based object detection. IEEE Access 2019, 7, 128837–128868.

4. Akyon, F.C.; Altinuc, S.O.; Temizel, A. Slicing aided hyper inference and fine-tuning for small object detection. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; IEEE: New York, NY, USA, 2022; pp. 966–970.

5. Hosang, J.; Benenson, R.; Schiele, B. Learning non-maximum suppression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4507–4515.

6. Chu, J.; Zhang, Y.; Li, S.; Leng, L.; Miao, J. Syncretic-NMS: A merging non-maximum suppression algorithm for instance segmentation. IEEE Access 2020, 8, 114705–114714.

7. Telçeken, M.; Akgun, D.; Kacar, S.; Bingol, B. A New Approach for Super Resolution Object Detection Using an Image Slicing Algorithm and the Segment Anything Model. Sensors 2024, 24, 4526.

8. Yan, H.; Kong, X.; Wang, J.; Tomiyama, H. ST-YOLO: An Enhanced Detector of Small Objects in Unmanned Aerial Vehicle Imagery. Drones 2025, 9, 338.

9. Yang, J.; Zhang, X.; Song, C. Research on a small target object detection method for aerial photography based on improved YOLOv7. Vis. Comput. 2025, 41, 3487–3501.

10. Kos, A.; Majek, K.; Belter, D. SegTrackDetect: A window-based framework for tiny object detection via semantic segmentation and tracking. SoftwareX 2025, 30, 102110.

11. Yang, L.; Yuan, G.; Zhou, H.; Liu, H.; Chen, J.; Wu, H. RS-YOLOX: A high-precision detector for object detection in satellite remote sensing images. Appl. Sci. 2022, 12, 8707.

12. Mihajlovic, M. iSAID-512Tiles: A Sliced Aerial Image Dataset for Instance Segmentation. Singidunum University, Belgrade, Serbia. 2025. Available online: https://github.com/mihajlov39547/iSAID-Sliced-512 (accessed on 7 July 2025).

13. Waqas Zamir, S.; Arora, A.; Gupta, A.; Khan, S.; Sun, G.; Shahbaz Khan, F.; Zhu, F.; Shao, L.; Xia, G.S.; Bai, X. iSAID: A large-scale dataset for instance segmentation in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; pp. 28–37.

14. Zhang, X.; Shen, J.; Hu, H.; Yang, H. A new instance segmentation model for high-resolution remote sensing images based on edge processing. Mathematics 2024, 12, 2905.

15. Ding, J.; Xue, N.; Xia, G.S.; Bai, X.; Yang, W.; Yang, M.Y.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; et al. Object detection in aerial images: A large-scale benchmark and challenges. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7778–7796.

16. Benčević, M.; Qiu, Y.; Galić, I.; Pižurica, A. Segment-then-segment: Context-preserving crop-based segmentation for large biomedical images. Sensors 2023, 23, 633.

17. Cao, H.; Tian, Y.; Liu, Y.; Wang, R. Water body extraction from high spatial resolution remote sensing images based on enhanced U-Net and multi-scale information fusion. Sci. Rep. 2024, 14, 16132.

18. Hu, W.; Yin, Y.; Tan, Y.K.; Tran, A.; Kruppa, H.; Zimmermann, R. GAN-assisted road segmentation from satellite imagery. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 21, 1–29.

19. Yang, Z.; Wang, X.; Wu, J.; Zhao, Y.; Ma, Q.; Miao, X.; Zhang, L.; Zhou, Z. EdgeDuet: Tiling small object detection for edge assisted autonomous mobile vision. IEEE/ACM Trans. Netw. 2022, 31, 1765–1778.

20. Tang, Y.; Liu, M.; Li, B.; Wang, Y.; Ouyang, W. OTP-NMS: Toward optimal threshold prediction of NMS for crowded pedestrian detection. IEEE Trans. Image Process. 2023, 32, 3176–3187.

21. Li, Y.; Wang, J. A Fast Postprocessing Algorithm for the Overlapping Problem in Wafer Map Detection. J. Sens. 2021, 2021, 2682286.

22. Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983.

23. Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In Proceedings of the 14th International Joint Conference on Artificial Intelligence (IJCAI-95), Montreal, QC, Canada, 20–25 August 1995; Volume 14, pp. 1137–1145.

24. He, L.H.; Zhou, Y.Z.; Liu, L.; Cao, W.; Ma, J.H. Research on object detection and recognition in remote sensing images based on YOLOv11. Sci. Rep. 2025, 15, 14032.

25. Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO (Version 8.0.0) [Computer Software]. Available online: https://github.com/ultralytics/ultralytics (accessed on 7 July 2025).

26. Bansal, N.; Blum, A.; Chawla, S. Correlation clustering. Mach. Learn. 2004, 56, 89–113.

27. Guttman, A. R-trees: A dynamic index structure for spatial searching. In Proceedings of the 1984 ACM SIGMOD International Conference on Management of Data, Boston, MA, USA, 18–21 June 1984; pp. 47–57.

28. Manolopoulos, Y.; Nanopoulos, A.; Papadopoulos, A.N.; Theodoridis, Y. R-Trees: Theory and Applications; Springer Science & Business Media: Heidelberg, Germany, 2006.

29. Shin, J.; Zhou, L.; Wang, J.; Aref, W.G. An update-intensive LSM-based R-tree index. VLDB J. 2025, 34, 1–24.

30. Silva, Y.N.; Xiong, X.; Aref, W.G. The RUM-tree: Supporting frequent updates in R-trees using memos. VLDB J. 2009, 18, 719–738.

31. Hossin, M.; Sulaiman, M.N. A review on evaluation metrics for data classification evaluations. Int. J. Data Min. Knowl. Manag. Process 2015, 5, 1–11.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).