3.5. Comparison Results Between FQFODE and State-of-the-Art WFLOP Optimizers

Table 5, Table 6 and Table 7 report the statistical comparison results of FQFODE against other state-of-the-art WFLOP optimizers under various wind direction and turbine count settings. In the tables, each instance is denoted as “WSXtnY,” where X represents the number of wind directions and Y the number of turbines. The columns “Mean” and “Std” correspond to the average and standard deviation obtained from 25 independent runs, respectively. To quantitatively evaluate the significance of performance differences between FQFODE and the comparison algorithms, we adopt the Wilcoxon rank-sum test to compute the “W/T/L” (Win/Tie/Loss) statistics. As a non-parametric method, the Wilcoxon rank-sum test compares the ranks of the pooled results from the two algorithms rather than only their means and standard deviations, so it reflects differences in the median and overall distribution of the runs and does not assume normality. The test is widely adopted in evolutionary computation and metaheuristic optimization for assessing the significance of performance differences. Based on this test, the “W/T/L” metric summarizes on how many benchmark instances FQFODE significantly outperforms, matches, or underperforms each peer algorithm. This analysis and presentation format is consistent with prevailing practice in the metaheuristic optimization literature, which supports the comparability of the experimental results.

All Wilcoxon rank-sum tests in this study are conducted at a significance level of , following standard practice in evolutionary computation to ensure statistical rigor and result comparability.
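The W/T/L decision for a single instance can be sketched as follows. This is a minimal illustration rather than the evaluation script used in this study: the function names are hypothetical, the p-value uses the large-sample normal approximation without tie or continuity correction, `alpha = 0.05` is an assumed placeholder for the significance level, and the sample data are synthetic.

```python
import math

def rank_sum_test(x, y):
    """Return (z, p) for a two-sided Wilcoxon rank-sum test (normal approximation)."""
    pooled = sorted((v, i) for i, v in enumerate(list(x) + list(y)))
    ranks = [0.0] * len(pooled)
    pos = 0
    while pos < len(pooled):
        end = pos
        while end + 1 < len(pooled) and pooled[end + 1][0] == pooled[pos][0]:
            end += 1
        avg_rank = (pos + end) / 2 + 1  # tied values share their average rank
        for k in range(pos, end + 1):
            ranks[pooled[k][1]] = avg_rank
        pos = end + 1
    n1, n2 = len(x), len(y)
    w = sum(ranks[:n1])                      # rank sum of the first sample
    mu = n1 * (n1 + n2 + 1) / 2              # expected rank sum under H0
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))     # two-sided p-value
    return z, p

def win_tie_loss(ours, theirs, alpha=0.05):
    """Classify one instance as 'W', 'T', or 'L' (higher efficiency is better)."""
    z, p = rank_sum_test(ours, theirs)
    if p >= alpha:
        return "T"
    return "W" if z > 0 else "L"

# Synthetic efficiencies for 25 runs each (illustrative values only).
runs_a = [92.0 + 0.01 * i for i in range(25)]
runs_b = [91.0 + 0.01 * i for i in range(25)]
print(win_tie_loss(runs_a, runs_b))
```

Counting the returned labels over the 15 instances gives one W/T/L triple per competitor.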

In terms of average power efficiency (Mean), FQFODE achieves the best or near-best performance in the vast majority of test scenarios. For example, in WS2tn25 and WS5tn25, FQFODE reaches a Mean of 98.68% and 98.73%, respectively, values that are already close to the normalized upper bound of “average turbine utilization” defined in this study (see Table 5). The advantage remains evident in more complex scenarios. For instance, in WS4tn50, FQFODE achieves a Mean of 92.27%, outperforming LSHADE (85.09%) and MS_SHADE (91.77%) (see Table 6). In WS2tn80, FQFODE achieves a Mean of 80.41%, compared to 80.12% by FODE and 79.05% by CGPSO (see Table 5).

Beyond Mean values, Best and Std (standard deviation) also offer meaningful insight. In WS2tn80, FQFODE achieves a Best of 81.25%, compared to 80.65% for FODE and 79.78% for CGPSO. The corresponding Std values are 0.32%, 0.88%, and 0.31%, respectively. In WS4tn50, FQFODE achieves a Best of 92.64%, slightly surpassing FODE (92.52%) and CGPSO (91.78%), and has the lowest Std of the three (0.18%, 0.25%, and 0.57%, respectively) (see Table 5).

A similar trend holds in comparisons with PSO- and GA-based algorithms. In WS4tn80, FQFODE achieves a Mean and Best of 80.60% and 81.11%, while SUGGA achieves 78.88% and 79.38%, and AGPSO achieves 79.55% and 80.31% (see Table 7). It should be noted that in a few low-complexity cases, MS_SHADE’s Mean is comparable to or slightly better than that of FQFODE: for instance, in WS5tn25, MS_SHADE reaches 98.84% vs. 98.73%, and in WS6tn25, both perform similarly (97.43% vs. 97.42%). However, in medium-to-high complexity cases (tn = 50 or 80), FQFODE consistently outperforms MS_SHADE in both Mean and Best (see Table 6).

These advantages are further confirmed by the W/T/L statistics. Compared with CGPSO, LSHADE, SUGGA, and AGPSO, FQFODE achieves a perfect record of 15/0/0 (see Table 5 and Table 7). Compared with FODE, the record is 12/3/0 (Table 5), and compared with MS_SHADE, it is 11/3/1 (Table 6). For example, in WS2tn80, FQFODE improves upon FODE by 0.29 percentage points in Mean (80.41% vs. 80.12%) and 0.60 points in Best (81.25% vs. 80.65%), and reduces Std from 0.88% to 0.32%. In WS4tn50, FQFODE improves upon LSHADE by 7.18 points in Mean (92.27% vs. 85.09%) and upon MS_SHADE by 0.50 points (92.27% vs. 91.77%), while also exhibiting better stability with a lower Std of 0.18%. In WS6tn50, FQFODE achieves a Mean and Best of 89.53% and 89.91%, respectively, compared to FODE’s 89.46% and 89.76% and CGPSO’s 88.61% and 89.16%.

In lower-complexity settings, FQFODE continues to lead in Best: for instance, in WS2tn25, Best reaches 98.96% (vs. 98.89% for FODE and 98.88% for CGPSO), while in WS3tn25 and WS4tn25, Best values are also higher at 98.11% and 98.64%, with corresponding Std values of 0.11% and 0.15%. Although in a few cases (e.g., WS4tn80 and WS5tn80) FQFODE and FODE yield similar Mean results, FQFODE still consistently outperforms PSO- and GA-based optimizers. For example, in WS2tn50, the Best is 91.80% (vs. 91.59% for AGPSO); in WS3tn50, the Best is 90.35% (vs. 90.15% for AGPSO); and in WS4tn80, the Best is 81.11% (vs. 80.31% for AGPSO). In most scenarios, FQFODE’s margin over SUGGA exceeds 1.5 percentage points.

In summary, FQFODE demonstrates strong optimization performance across a diverse range of test scenarios involving multiple wind directions and varying numbers of turbines. Whether in low-dimensional, relatively simple problems (e.g., WS2tn25, where the Mean reaches 98.68% and the Best 98.96%) or in high-dimensional, complex layout challenges with multiple wind directions (e.g., WS4tn50 and WS2tn80), FQFODE consistently achieves the best or near-best results among the compared algorithms. Moreover, it performs well simultaneously in all three key metrics (Mean, Best, and Std), either outperforming other methods or matching the best-performing alternatives. By integrating fractional-order difference operators with a federated stage-wise Q-learning strategy for dynamic parameter control, FQFODE effectively balances exploration and exploitation in the WFLOP task. This is reflected in its higher average solution quality, stronger extremal performance, and lower inter-run variance. Compared with other advanced optimization algorithms, FQFODE exhibits stronger adaptability to the high-dimensional, discrete, and nonlinear characteristics of WFLOP, making it a more stable and reproducible layout optimization strategy for real-world applications.

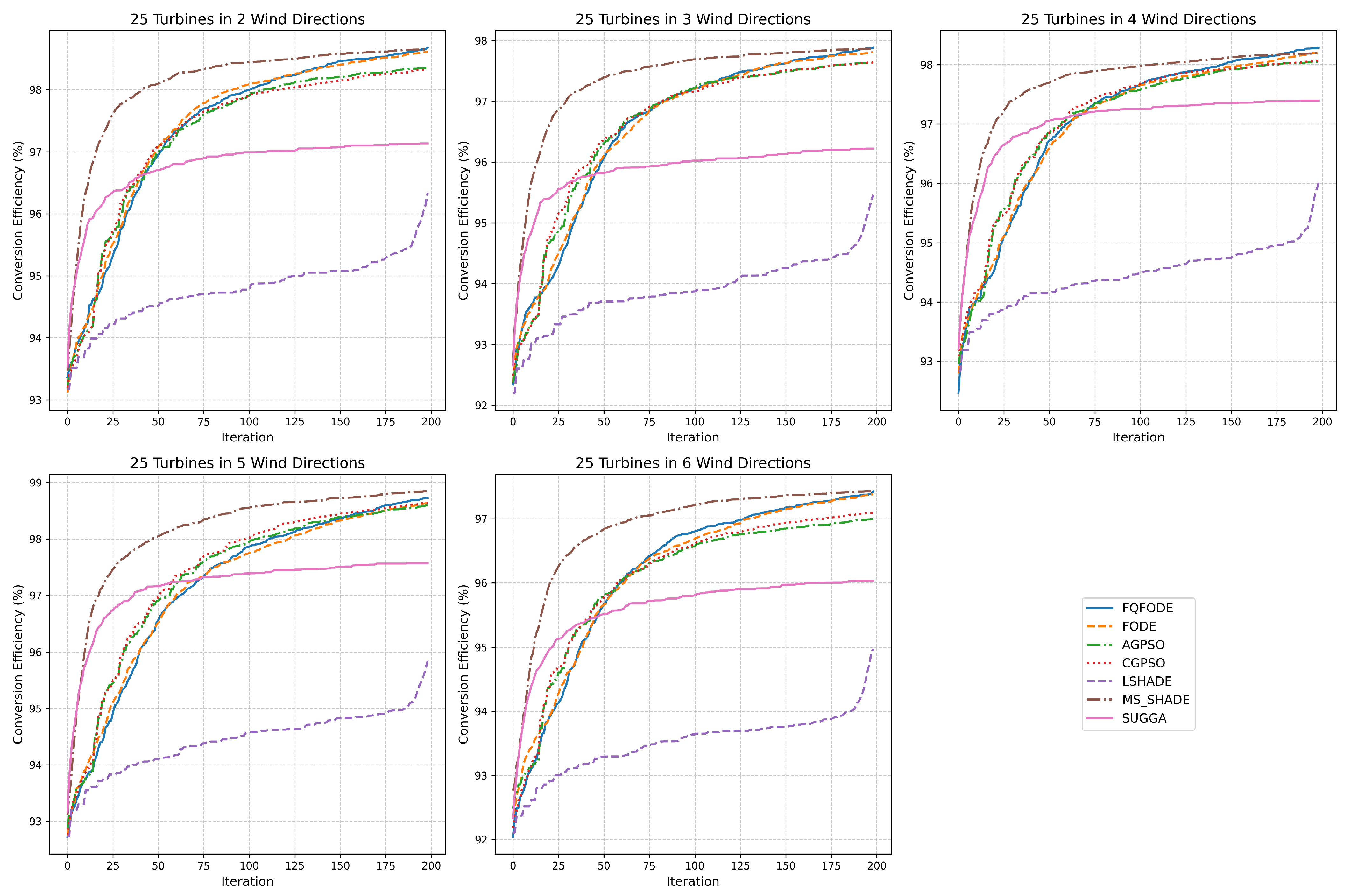

Figure 4, Figure 5, Figure 6, Figure 7, Figure 8 and Figure 9 illustrate the convergence behavior and statistical boxplots of different optimizers under various multi-directional wind conditions. The convergence plots show that in the tn25 setting, FQFODE and MS_SHADE perform comparably in most cases, but FQFODE achieves consistent gains over FODE across all wind conditions, reflecting its greater stability and efficiency during search. In ws2, ws3, and ws4, the Mean values of FQFODE and FODE are 98.68% vs. 98.61%, 97.88% vs. 97.81%, and 98.29% vs. 98.20%, respectively; in ws5, 98.73% vs. 98.63%; and in ws6, 97.42% vs. 97.38%. As the problem scale increases to tn50 and tn80, the advantage of FQFODE becomes more stable and pronounced. Compared to MS_SHADE, FQFODE gains approximately +0.45/+0.45/+0.50/+0.36/+0.44 percentage points across ws2–ws6 in the tn50 setting and +1.36/+0.84/+1.09/+1.05/+0.65 in the tn80 setting. Compared to FODE, the improvements are approximately +0.08/+0.10/+0.22/+0.16/+0.07 in tn50 and +0.29/+0.16/+0.16 in ws2, ws3, and ws6 under tn80, with performance nearly identical under ws4 and ws5. These results indicate that in more complex discrete solution spaces, FQFODE not only achieves better final upper bounds and Mean values, but also approaches high-quality solutions with fewer iterations under the same evaluation budget. Compared to FODE and LSHADE-type algorithms, FQFODE leverages its inherited Q-tables obtained through distributed federated pretraining and the stage-wise parameter adaptation mechanism to enhance both global search ability and convergence efficiency in complex scenarios, making it better suited to the discrete structural nature of WFLOP.

Figure 10 provides a concise visual summary of the win/tie/loss (W/T/L) performance of FQFODE across all comparison cases. It complements the detailed tabular results (Table 5, Table 6 and Table 7) by offering a holistic view of how often FQFODE wins, ties, or loses against each algorithm. This visualization improves the readability of the extensive statistical data and aligns with recommendations from the reviewers to improve result interpretation.

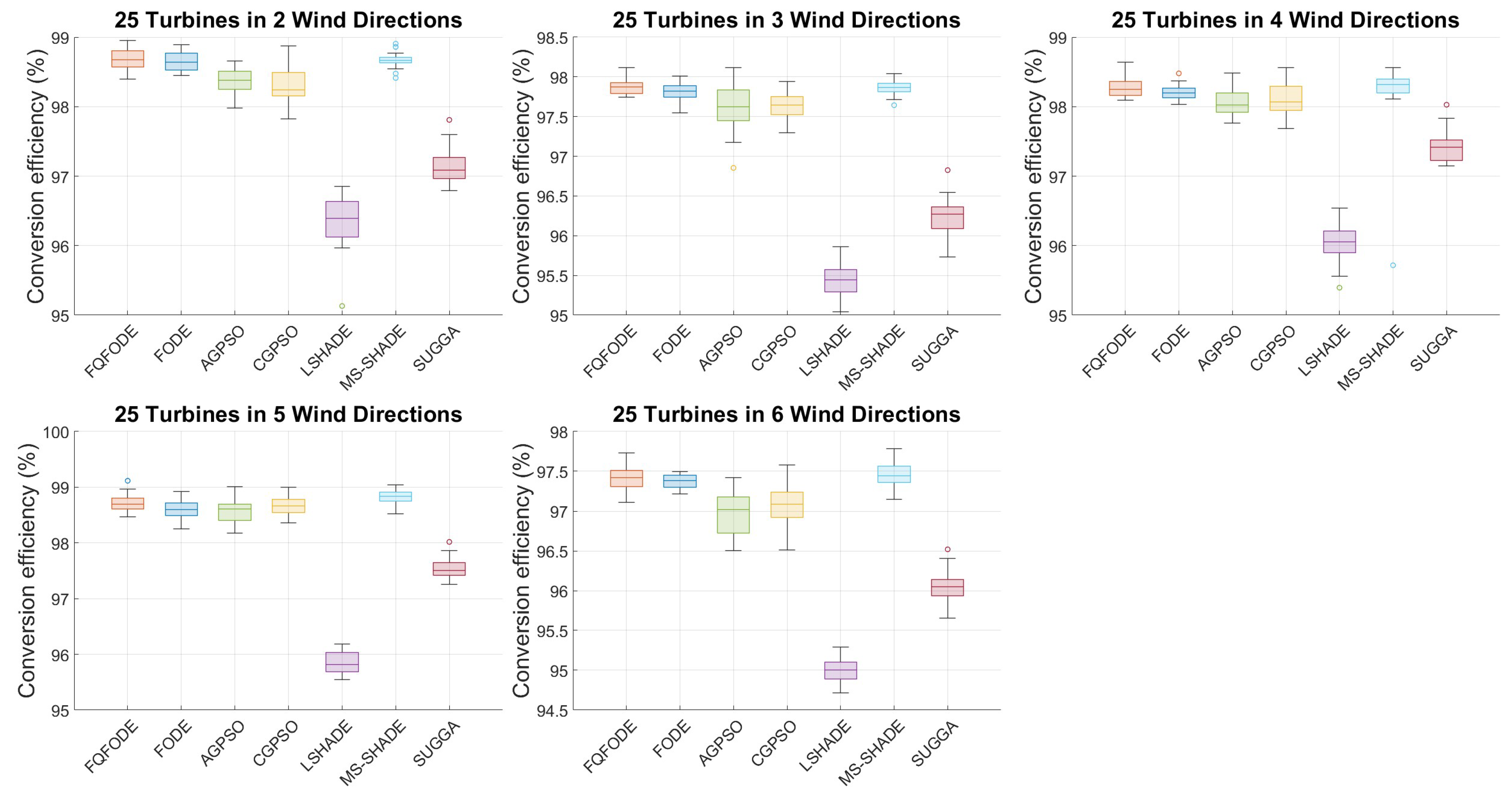

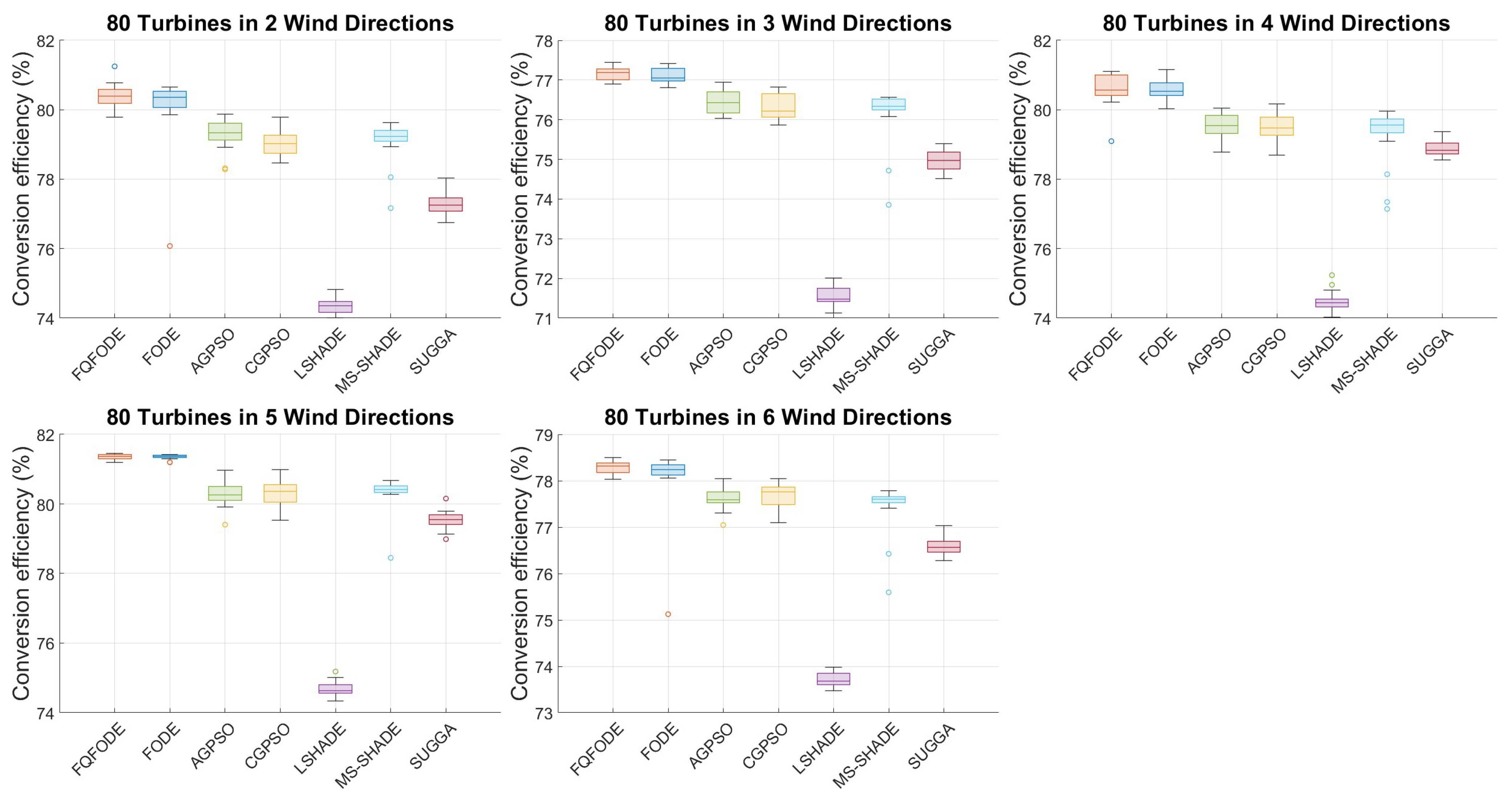

In the boxplots shown in Figure 7, Figure 8 and Figure 9, FQFODE consistently shows higher medians, tighter interquartile ranges (IQR), and shorter whiskers, often achieving higher maximum values (upper bounds), which reflects superior typical performance and stronger robustness. For instance, in the tn50 setting, the standard deviation in ws2 is 0.16% for FQFODE compared to 0.20% for MS_SHADE; in ws5, it is 0.14% vs. 0.18%. In tn80 with ws6, the Std is 0.13% vs. 0.26%. From the perspective of “remaining improvement space,” in tn80 under ws2, FQFODE raises the Mean from FODE’s 80.12% to 80.41%, which means it recovers about 1.46% of the 19.88 percentage point gap between the FODE baseline and the theoretical 100% upper limit, highlighting its refined optimization capacity in later iterations.
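The “remaining improvement space” figure is a simple ratio of the gain to the headroom left above the baseline. A short sketch of the arithmetic, using the WS2tn80 Mean values quoted above (the function name is chosen here for illustration only):

```python
def share_of_remaining_gap(baseline_pct, improved_pct, upper_limit_pct=100.0):
    """Fraction of the gap between baseline and upper limit closed by the improvement."""
    gain = improved_pct - baseline_pct          # 80.41 - 80.12 = 0.29 points
    headroom = upper_limit_pct - baseline_pct   # 100 - 80.12 = 19.88 points
    return gain / headroom

share = share_of_remaining_gap(80.12, 80.41)
print(f"{100 * share:.2f}% of the remaining gap")
```

The same ratio can be applied to any instance to compare gains across scenarios whose baselines leave different amounts of headroom.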

Further Insights into Performance, Convergence Dynamics, and Realistic Adaptability

Beyond standard metrics such as Mean, Std, and Best, the performance differences among algorithms can be better understood from three interconnected perspectives: convergence dynamics, solution stability, and robustness to real-world complexities.

(1) Convergence-Speed vs. Solution-Quality Trade-off. As illustrated in Figure 4, Figure 5 and Figure 6, FQFODE demonstrates a distinct two-phase convergence behavior. It initially converges quickly toward promising solution regions and then gradually stabilizes with finer adjustments, leading to high-quality final layouts. This adaptive convergence pattern contrasts with CGPSO and LSHADE, which tend to stagnate early, and with AGPSO, which converges more slowly and less effectively. Such convergence dynamics reflect a typical trade-off: algorithms emphasizing rapid convergence may risk premature stagnation, while overly explorative ones may struggle to refine competitive layouts. FQFODE, through stage-wise Q-learning and historical memory integration, effectively balances these two goals.

(2) Stability and Robustness across Runs. Boxplots in Figure 7, Figure 8 and Figure 9 reveal that FQFODE consistently achieves higher medians, tighter interquartile ranges (IQRs), and lower standard deviations compared to its peers. For example, in the tn80-ws6 scenario, the standard deviation of FQFODE is only 0.13%, versus 0.26% for MS_SHADE and 0.23% for AGPSO. These observations imply that FQFODE produces not only high-performing but also highly stable solutions, which is critical for deployment in real-world conditions with volatile wind patterns.

(3) Practical Interpretability under Realistic Constraints. Real-world wind farm layouts must contend with fluctuating wind speeds, irregular terrains, and exclusion zones. In such settings, fast convergence may become even more valuable as it enables earlier adaptation to operational constraints. Moreover, solution stability translates to higher robustness against external disturbances. In our experiments, scenarios with larger turbine counts (tn80) and more wind directions (ws6) simulate such complex conditions. In these cases, FQFODE maintains both convergence speed and layout quality. For instance, in WS6tn80, FQFODE outperforms FODE by 0.16 percentage points in the Mean and achieves better stability (Std of 0.13% vs. 0.64%), illustrating its strong adaptability to fragmented, discrete landscapes.

Conclusion. These results collectively indicate that the performance advantage of FQFODE is not merely statistical but structural and functional: it reflects an algorithmic capability to maintain efficient search, adapt to real-world landscape complexity, and reliably deliver high-quality solutions. The proposed framework is therefore not only superior in benchmark terms but also more viable for practical wind farm layout optimization. Compared with optimizers that primarily rely on local search, FQFODE’s design, which emphasizes global exploration and historical knowledge integration, significantly reduces the risk of premature convergence. Its strong performance in the WFLOP stems from two sources: the non-local search and historical memory characteristics introduced by the fractional-order difference operators in the FODE framework, and the dynamic adjustment of the key parameter a via federated Q-learning-based pretraining and stage-wise Q-table control. Together, these capabilities enable FQFODE to steadily deliver high-quality solutions across complex, multi-directional, and multi-scale discrete optimization scenarios.

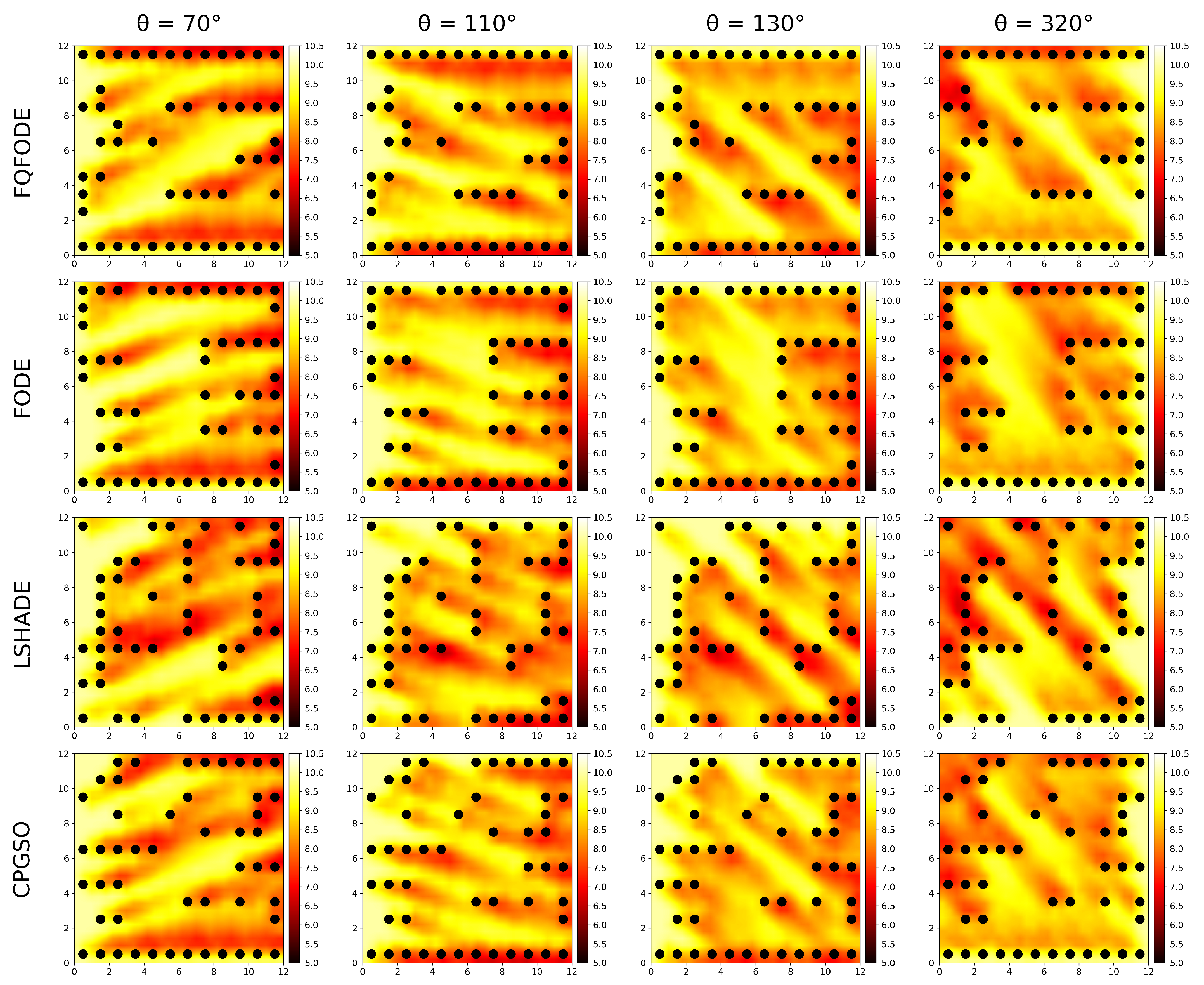

Figure 11 illustrates the turbine layouts produced by four algorithms under four wind-direction conditions for the tn50 scenario, highlighting their adaptability to wake effects.

Site discretization. The rectangular site is uniformly partitioned into 144 candidate coordinates, each grid cell corresponding to a potential turbine location. In the figure, black dots denote the discrete coordinates that are actually occupied by turbines, while blank cells remain unused. The background color, from dark red to light yellow, indicates wind-speed intensity (from low to high). The results show that wake interference is primarily concentrated in downstream regions of the turbines, emphasizing the importance of minimizing such interference during optimization. Specifically, the layout generated by LSHADE places many turbines in regions of severe wake overlap, resulting in constrained performance. In contrast, FQFODE arranges turbines in linear patterns along both sides of the wind field, significantly mitigating wake effects. This strategy improves overall power-generation efficiency, underscoring the advantage of FQFODE in wind farm layout optimization. The figure therefore provides an intuitive visualization of the differences in layout strategy across algorithms and supports the interpretation of the relationship between the spatial distribution of solutions and power performance. In this scenario, FQFODE not only achieves a high energy-conversion rate, but also exhibits a more compact, nearly grid-like pattern. This regularity minimizes turbine-to-turbine interference and benefits practical concerns such as installation, maintenance, and efficient land usage. Although algorithms such as AGPSO and CGPSO can also reach relatively high efficiency, FQFODE consistently produces the best solutions with less variation across multiple runs, indicating its robustness in high-dimensional, challenging scenarios.

Overall, these visualization results are consistent with the earlier quantitative analyses based on tables, convergence curves, and boxplots. Whether in simple scenarios where near-perfect solutions are frequently obtained, or in medium-to-high complexity conditions where highly regularized layout patterns emerge, FQFODE consistently demonstrates strong control over the discrete solution space and precise exploration of globally optimal regions. This not only provides further intuitive evidence of its superiority in the WFLOP but also offers a highly efficient and practically implementable layout reference for real-world wind farm design and planning.

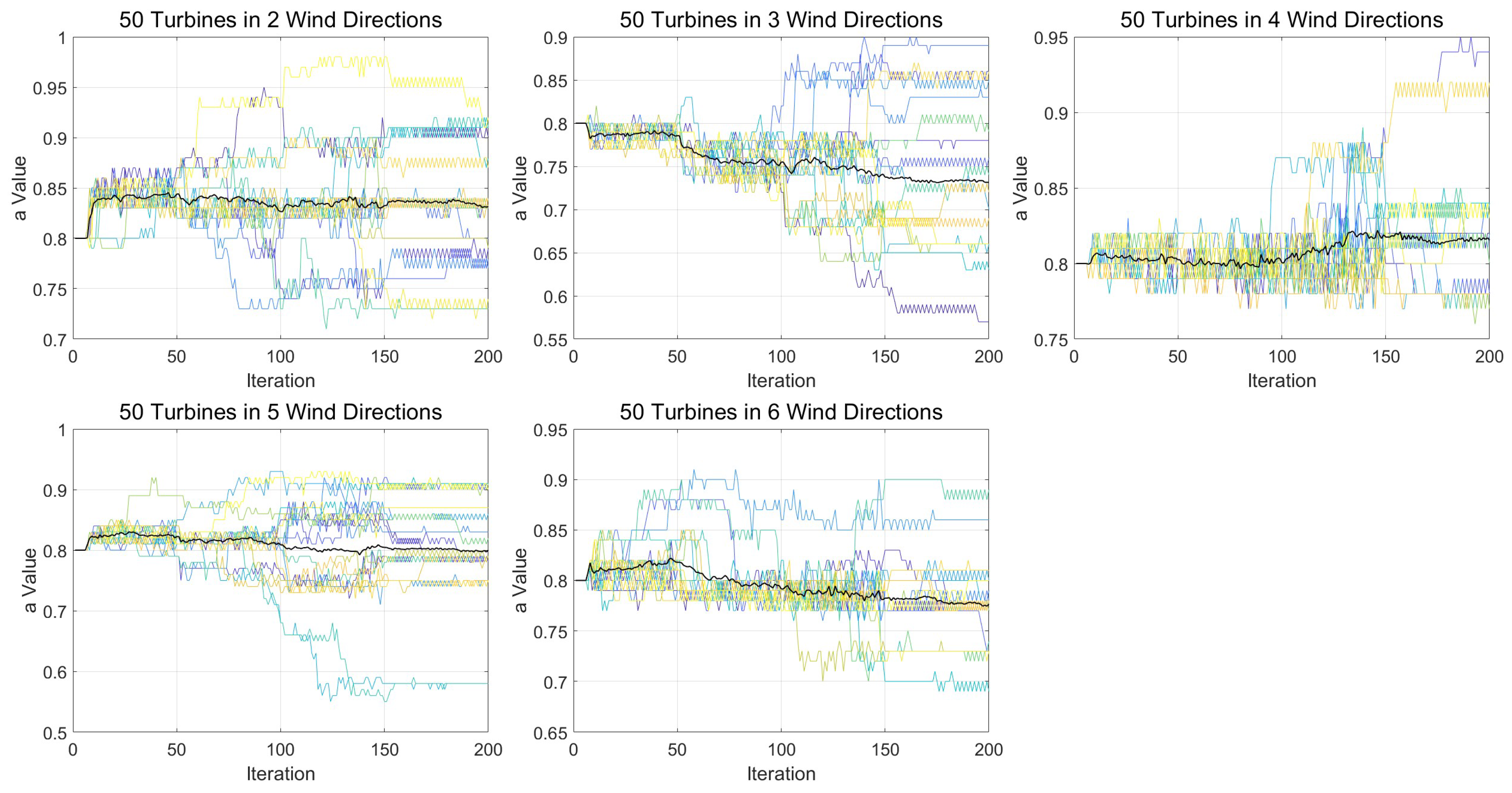

3.6. Q-Learning Analysis

We begin by analyzing the evolutionary behavior of FQFODE across different experiments using the convergence curves of the dynamically adjusted parameter a, as shown in Figure 12, Figure 13 and Figure 14. Each curve in the figure represents one independent run. For each run, the 200 total iterations are divided into four stages of 50 iterations each, corresponding to stage-specific Q-tables (of size 101 × 3) inherited from the distributed federated pretraining. It can be observed that under the same wind condition, the Q-learning mechanism dynamically adjusts the value of the differential weight parameter a based on the current population’s power efficiency and diversity status, thereby implementing an adaptive parameter adjustment strategy that responds to environmental feedback. Because distinct random seeds lead to different early search trajectories, the early-stage evolution of a may follow different directions. In most cases, significant variations in a occur during the first two stages (iterations 1–100), whereas the later stages (iterations 101–200) show a more stable trend. This indicates that each stage-specific Q-table is capable of independently adjusting a based on the current state and expected return, thereby enhancing the stability and adaptiveness of parameter control.
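The stage-wise control of a can be sketched as a lookup into one of four Q-tables selected by the iteration index. Only the stage length (50), the number of stages (4), and the Q-table shape (101 × 3) come from the description above. The meaning of the three actions (decrease, keep, increase), the step size, the [0, 1] range of a, the state encoding, and all function names are assumptions made for this illustration, not the actual FQFODE implementation.

```python
STAGE_LENGTH = 50                    # iterations per stage (from the text)
NUM_STAGES = 4                       # 200 iterations in total (from the text)
NUM_STATES, NUM_ACTIONS = 101, 3     # Q-table shape (from the text)

# Assumed action semantics: decrease a, keep a, increase a.
ACTION_DELTAS = (-0.05, 0.0, 0.05)

def stage_index(iteration):
    """Map a 1-based iteration (1..200) to its stage index (0..3)."""
    if not 1 <= iteration <= STAGE_LENGTH * NUM_STAGES:
        raise ValueError("iteration out of range")
    return (iteration - 1) // STAGE_LENGTH

def greedy_action(q_table, state):
    """Pick the action with the largest Q-value in the given state row."""
    row = q_table[state]
    return max(range(NUM_ACTIONS), key=lambda action: row[action])

def update_a(a, q_tables, iteration, state):
    """Adjust a using the Q-table of the current stage (assumed range [0, 1])."""
    action = greedy_action(q_tables[stage_index(iteration)], state)
    return min(1.0, max(0.0, a + ACTION_DELTAS[action]))

# Placeholder Q-tables filled with zeros; pretrained values would be loaded here.
q_tables = [[[0.0] * NUM_ACTIONS for _ in range(NUM_STATES)]
            for _ in range(NUM_STAGES)]
q_tables[1][10][2] = 1.0             # stage 2, state 10 prefers "increase"
print(update_a(0.5, q_tables, iteration=60, state=10))
```

Keeping a separate table per stage means a policy learned for early exploration is never reused during late refinement, which matches the observation that a varies most in the first two stages.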

To further interpret the convergence patterns in Figure 12, Figure 13 and Figure 14, we provide an in-depth analysis of how the dynamic changes in parameter a reflect the internal adjustment strategies of FQFODE. In our design, the value of a determines the extent to which the current generation emphasizes local exploitation versus historical exploration, and its variations over time directly affect the search behavior of the algorithm. Several key trends are observed:

When a decreases, the influence of current differential vectors weakens, which enhances the mutation’s jump range. This behavior increases global exploration capability, making it easier to escape from local optima.

Conversely, when a increases, it strengthens the influence of current search directions, encouraging the algorithm to focus more on promising regions. This improves local exploitation capability and precision refinement around high-quality solutions.

In some cases, a shows a sharp increase during early iterations. This typically occurs when the random initialization produces relatively good solutions, prompting the algorithm to enter an exploitation phase earlier, as early exploitation leads to faster improvement in those scenarios.

In other cases, a experiences a sudden drop from a high value. This pattern often follows a temporary stagnation phase, indicating that the algorithm attempts to escape suboptimal local regions by reintroducing greater exploration diversity.

These observations collectively suggest that the Q-learning mechanism does not merely adjust a arbitrarily but learns to balance exploration and exploitation based on the current state of the population and feedback from the optimization environment. Such dynamic and context-sensitive behavior enables FQFODE to flexibly respond to different wind farm layout complexities, which is critical for maintaining both convergence quality and search robustness.

To further validate the practical contribution of the proposed Q-learning strategy, we designed a corresponding ablation experiment, and the results are presented in Table 5. In this experiment, the Q-learning module in FQFODE was removed to form the baseline algorithm “NO-QL” (i.e., the original FODE). The results show that FQFODE consistently outperforms NO-QL across all wind conditions and turbine counts, demonstrating the performance-enhancing effect of the Q-learning mechanism. Notably, during the convergence phase, Q-learning not only accelerates the optimization process but also improves the final solution quality. By dynamically adjusting the key parameter a, the strategy strengthens the algorithm’s responsiveness and adaptability across different evolutionary stages, making it a critical factor in achieving efficient search and convergence.

As shown in Table 8, FQFODE consistently outperforms the NO-QL baseline across all wind conditions and turbine configurations. Bold values indicate the winner between the two strategies.

It is worth noting that although the average improvement in power efficiency brought by the Q-learning mechanism appears modest—approximately —its practical significance in large-scale wind farms is substantial. Take, for example, a typical wind farm with 80 turbines (each rated at and with an annual capacity factor of ), which yields an estimated annual power generation of . Based on this estimate, the efficiency improvement translates into approximately of additional electricity per year—equivalent to around 2.4 million kilowatt-hours. Assuming an average industrial electricity price of in mainland China, this would yield an additional annual revenue of approximately 960,000 CNY. Referring to typical European onshore wind electricity prices (e.g., EUR ), the corresponding annual revenue increase could reach approximately EUR 192,000. This clearly demonstrates that even seemingly small improvements in efficiency can lead to substantial economic value in real-world systems.
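As a consistency check on the economic estimate, the electricity prices implied by the stated figures can be back-calculated from the additional energy (about 2.4 million kWh per year) and the two revenue figures. The sketch below uses only those three numbers. The resulting prices are derived values, not quoted inputs, and the function name is hypothetical.

```python
EXTRA_ENERGY_KWH = 2.4e6     # additional annual generation stated in the text
REVENUE_CNY = 960_000        # stated additional annual revenue (mainland China)
REVENUE_EUR = 192_000        # stated additional annual revenue (Europe)

def implied_price_per_kwh(revenue, energy_kwh):
    """Price per kWh that reproduces the stated revenue for the stated energy."""
    return revenue / energy_kwh

price_cny = implied_price_per_kwh(REVENUE_CNY, EXTRA_ENERGY_KWH)
price_eur = implied_price_per_kwh(REVENUE_EUR, EXTRA_ENERGY_KWH)
print(f"Implied price: {price_cny:.2f} CNY/kWh, {price_eur:.2f} EUR/kWh")
```

Under these figures the implied prices are roughly 0.40 CNY and 0.08 EUR per kWh, so the two revenue estimates are mutually consistent with the same energy gain.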