A Fast and Privacy-Preserving Outsourced Approach for K-Means Clustering Based on Symmetric Homomorphic Encryption

Abstract

1. Introduction

1.1. Related Work

1.2. Example Applications of SHE

1.3. Our Contributions

- Within the protocol, we introduce the Modified Euclidean Distance (MED), which avoids non-linear operations (e.g., division and square root) and thereby reduces both the computational and communication costs.

- We conduct comprehensive experiments and comparisons that demonstrate our solution's superiority in prediction accuracy, computational efficiency, and total runtime (including both computation and communication).
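The exact MED formula is not reproduced in this section. As a minimal sketch, assuming MED is (at least) based on the squared Euclidean distance, the Python snippet below illustrates why dropping the square root is safe for cluster assignment: the square root is monotone, so both distances select the same nearest centroid, while the squared form needs only subtraction, multiplication, and addition, which are the operations a homomorphic scheme can evaluate. The names `euclidean`, `med`, and `nearest` are hypothetical and do not come from the paper.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]

def euclidean(x: Vector, c: Vector) -> float:
    """Standard Euclidean distance (requires a square root)."""
    return math.sqrt(sum((xi - ci) ** 2 for xi, ci in zip(x, c)))

def med(x: Vector, c: Vector) -> float:
    """Hypothetical MED: squared Euclidean distance using only -, * and +."""
    return sum((xi - ci) ** 2 for xi, ci in zip(x, c))

def nearest(x: Vector, centroids: Sequence[Vector],
            dist: Callable[[Vector, Vector], float]) -> int:
    """Index of the centroid closest to x under the given distance."""
    return min(range(len(centroids)), key=lambda j: dist(x, centroids[j]))

centroids = [(0, 0), (5, 5), (10, 0)]
x = (3, 4)
print(med(x, centroids[0]))              # 25
print(nearest(x, centroids, euclidean))  # 1
print(nearest(x, centroids, med))        # 1
```

Because only the index of the minimum matters in k-means assignment, any strictly increasing transformation of the distance yields the same clustering.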

2. Preliminaries

2.1. K-Means

2.2. Symmetric Homomorphic Encryption (SHE)

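- $\mathsf{KeyGen}(k_0, k_1, k_2)$ is the key generation algorithm, which takes as input the security parameters $k_0$, $k_1$, and $k_2$ (satisfying $k_1 \ll k_2 < k_0/2$) and randomly generates a secret key $sk$ and public parameters $pp$. The secret key is $sk = (p, q, L)$, where $p$ and $q$ are two large prime numbers satisfying $|p| = |q| = k_0$, $L$ is a random number satisfying $|L| = k_2$, and the message space is $\mathcal{M} = [-2^{k_1-1}, 2^{k_1-1})$. Then, $N = pq$ is computed, and the public parameters are set to $pp = (k_0, k_1, k_2, N)$.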

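- $\mathsf{Enc}(sk, m)$ is the encryption algorithm, which encrypts a message $m \in \mathcal{M}$ using the secret key $sk$. The ciphertext is computed as $c = (rL + m)(1 + r'p) \bmod N$, where $r \in \{0,1\}^{k_2}$ and $r' \in \{0,1\}^{k_0}$ are randomly sampled.

- $\mathsf{Dec}(sk, c)$ is the decryption algorithm, which recovers the message $m$ from a ciphertext $c$ using the secret key $sk$ via the computation $m = (c \bmod p) \bmod L$, where a result $m \geq L/2$ is mapped back to the negative value $m - L$.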
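To make the three algorithms concrete, the following is a toy Python sketch of the SHE scheme described above, together with the ciphertext addition and multiplication modulo $N$ that the cloud server relies on in later phases. It is illustrative only: the parameters (`k0=512`, `k1=20`, `k2=80`) are assumptions chosen so the demo runs quickly, the helper names (`keygen`, `enc`, `dec`, `h_add`, `h_mul`, `h_mul_plain`) are hypothetical, and the code is not hardened for real use. Multiplication by a plaintext constant is restricted to non-negative constants, since a negative constant would wrap around modulo $p$ in this simple form.

```python
import secrets

def is_probable_prime(n: int, rounds: int = 40) -> bool:
    """Miller-Rabin probabilistic primality test."""
    if n < 2:
        return False
    for sp in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37):
        if n % sp == 0:
            return n == sp
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        a = secrets.randbelow(n - 3) + 2          # witness in [2, n-2]
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False                          # a proves n composite
    return True

def random_prime(bits: int) -> int:
    """Random probable prime with exactly `bits` bits."""
    while True:
        cand = secrets.randbits(bits) | (1 << (bits - 1)) | 1
        if is_probable_prime(cand):
            return cand

def keygen(k0: int = 512, k1: int = 20, k2: int = 80):
    """KeyGen(k0, k1, k2) -> (sk, pp) with toy sizes, k1 << k2 < k0/2."""
    p = random_prime(k0)
    q = random_prime(k0)
    while q == p:
        q = random_prime(k0)
    L = secrets.randbits(k2) | (1 << (k2 - 1))   # |L| = k2 bits
    return (p, q, L), (k0, k1, k2, p * q)

def enc(sk, pp, m: int) -> int:
    """Enc(sk, m) = (r*L + m)(1 + r'*p) mod N."""
    p, _, L = sk
    k0, k1, k2, N = pp
    if not -(1 << (k1 - 1)) <= m < (1 << (k1 - 1)):
        raise ValueError("message outside the message space")
    r = secrets.randbelow((1 << k2) - 1) + 1      # nonzero, so r*L + m > 0
    r_prime = secrets.randbits(k0)
    return ((r * L + m) * (1 + r_prime * p)) % N

def dec(sk, c: int) -> int:
    """Dec(sk, c) = (c mod p) mod L, mapped back to a signed value."""
    p, _, L = sk
    m = (c % p) % L
    return m if m < L // 2 else m - L

def h_add(pp, c1: int, c2: int) -> int:
    return (c1 + c2) % pp[3]                      # decrypts to m1 + m2

def h_mul(pp, c1: int, c2: int) -> int:
    return (c1 * c2) % pp[3]                      # decrypts to m1 * m2 (one level)

def h_mul_plain(pp, c: int, k: int) -> int:
    if k < 0:
        raise ValueError("this sketch only supports non-negative constants")
    return (c * k) % pp[3]                        # decrypts to k * m

sk, pp = keygen()
a, b = enc(sk, pp, -7), enc(sk, pp, 5)
print(dec(sk, h_add(pp, a, b)))        # -2
print(dec(sk, h_mul(pp, a, b)))        # -35
print(dec(sk, h_mul_plain(pp, b, 4)))  # 20
```

Decryption works because $c \equiv rL + m \pmod{p}$; with these toy sizes a single multiplication keeps $(r_1L + m_1)(r_2L + m_2)$ below $p$, so reducing modulo $L$ still recovers $m_1 m_2$.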

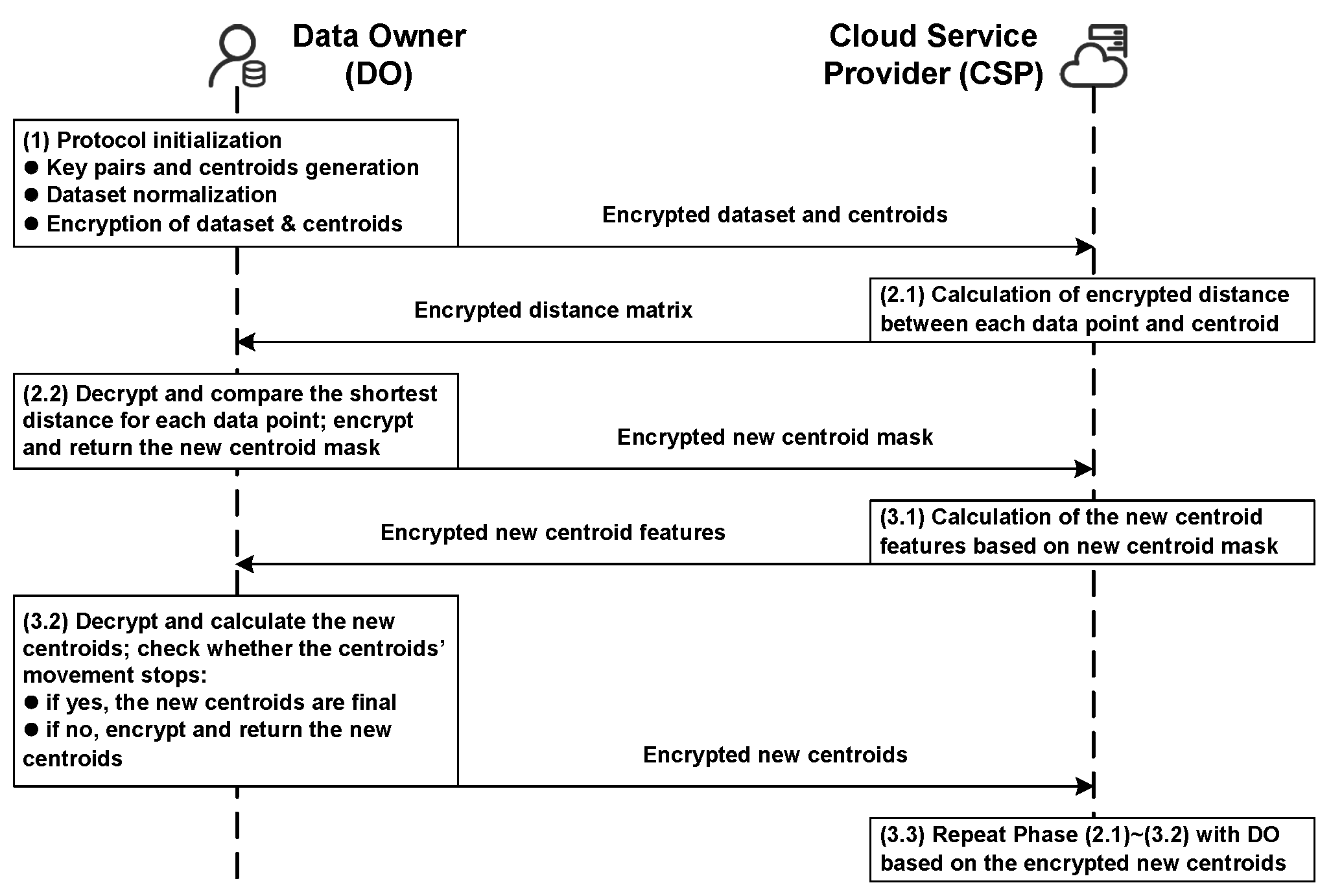

3. System Design

3.1. System Architecture

3.2. Security Models and Desired Properties

- Dataset Privacy: The protocol must ensure that the cloud server cannot learn the contents of the original clustering dataset provided by the user.

- Model Privacy: The protocol must protect the confidentiality of the k-means model parameters throughout the entire outsourced clustering process. Specifically, the cloud server should not learn (i) the feature values of the cluster centroids or (ii) the distances between individual data points and these centroids.

4. Protocol Implementation

4.1. Protocol Initialization

4.2. The First Protocol Round (Distance Calculation and Comparison)

4.3. The Second Protocol Round (Centroid Calculation and Update)

5. Experimental Results and Analysis

5.1. Prediction Accuracy and Comparison

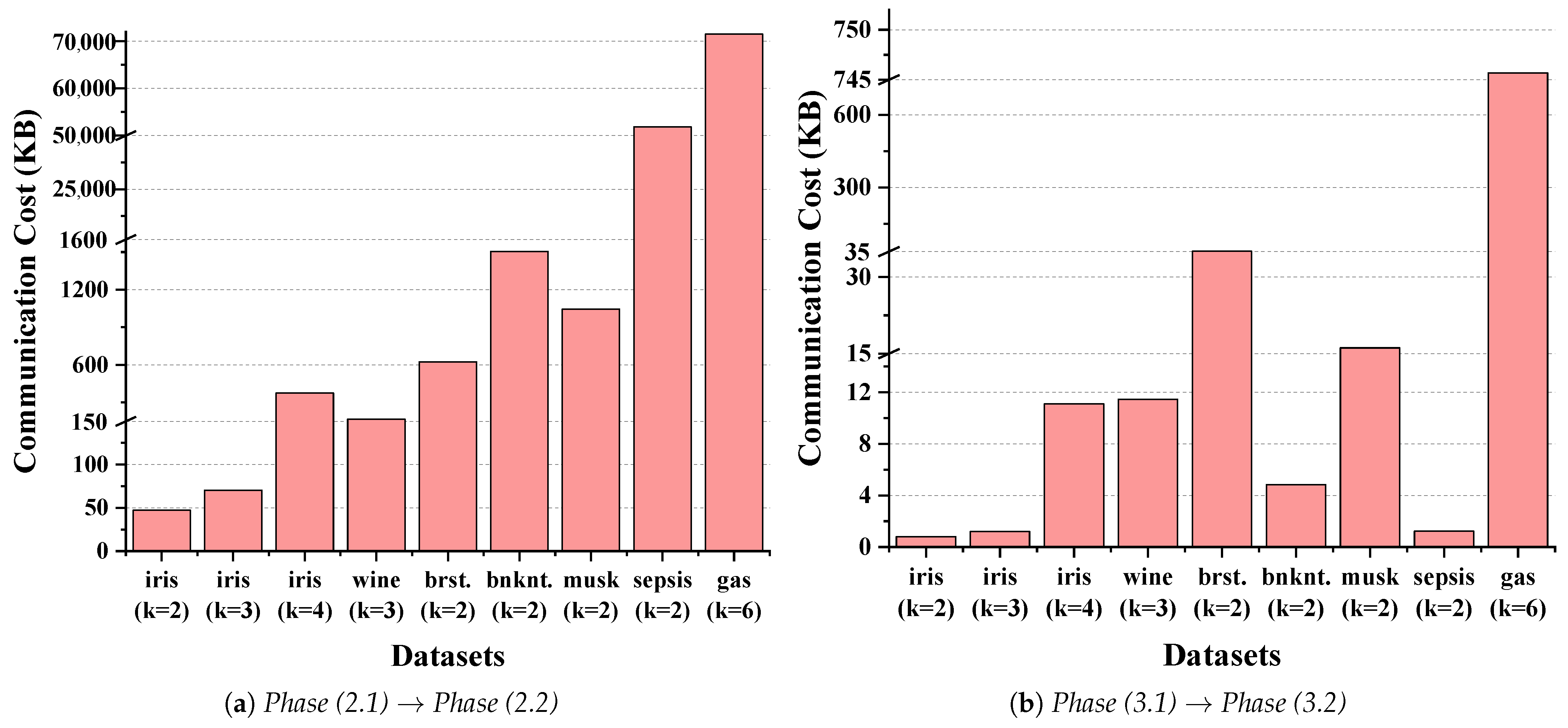

5.2. Communication Overhead of Our Scheme

- The first component of the user's communication is generated at the end of Phase (1), when the user transmits the initial encrypted data package (the encrypted dataset, the encrypted initial centroids, and the public parameters) to the cloud server.

- The second component of the user's communication is generated at the end of Phase (2.2) and consists of the encrypted new centroid mask vectors.

- The first part of the cloud server's communication is the encrypted distance matrix transmitted at the end of Phase (2.1), which the user uses for comparison.

- The second part of the cloud server's communication, sent at the end of Phase (3.1), consists of the encrypted accumulated centroid features, which the user needs to calculate the new centroids.
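A rough way to see how these four components scale is to count ciphertexts. The sketch below assumes that every SHE ciphertext is an element of $\mathbb{Z}_N$ and therefore occupies about $2k_0$ bits, that the distance matrix and the mask vectors each contain $n \cdot k$ ciphertexts, and that the public parameters are negligible in size. The function names and the example value `k0=1024` are hypothetical and are not taken from the paper's experiments.

```python
def ciphertext_bytes(k0: int) -> int:
    """Size of one ciphertext in Z_N, assuming |N| = 2*k0 bits."""
    return (2 * k0 + 7) // 8

def communication_bytes(n: int, d: int, k: int, k0: int) -> dict:
    """Approximate bytes per component under assumed ciphertext counts."""
    ct = ciphertext_bytes(k0)
    return {
        # User -> cloud server, once: encrypted dataset and initial centroids.
        "phase_1": (n * d + k * d) * ct,
        # User -> cloud server, per iteration: encrypted centroid masks.
        "phase_2_2": n * k * ct,
        # Cloud server -> user, per iteration: encrypted distance matrix.
        "phase_2_1": n * k * ct,
        # Cloud server -> user, per iteration: encrypted accumulated features.
        "phase_3_1": k * d * ct,
    }

# Iris-sized example (n=150, d=4, k=3) with a hypothetical k0 = 1024.
cost = communication_bytes(n=150, d=4, k=3, k0=1024)
print(cost["phase_1"])    # 156672
per_iter = cost["phase_2_2"] + cost["phase_2_1"] + cost["phase_3_1"]
print(per_iter)           # 233472
```

Under these assumptions, Phase (1) is paid once, whereas the other three components recur in every iteration, so for large $n$ the $n \cdot k$ terms dominate the per-iteration traffic.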

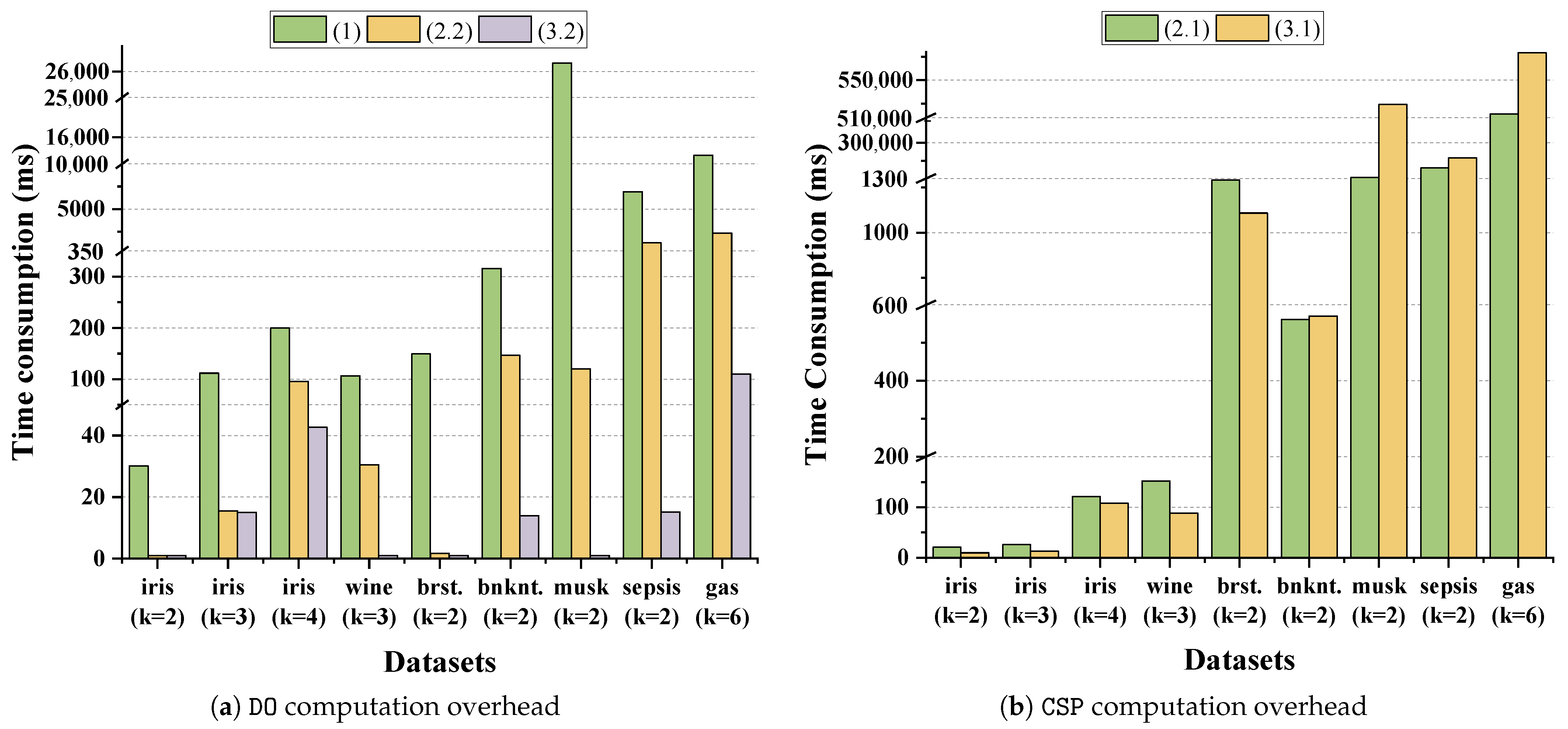

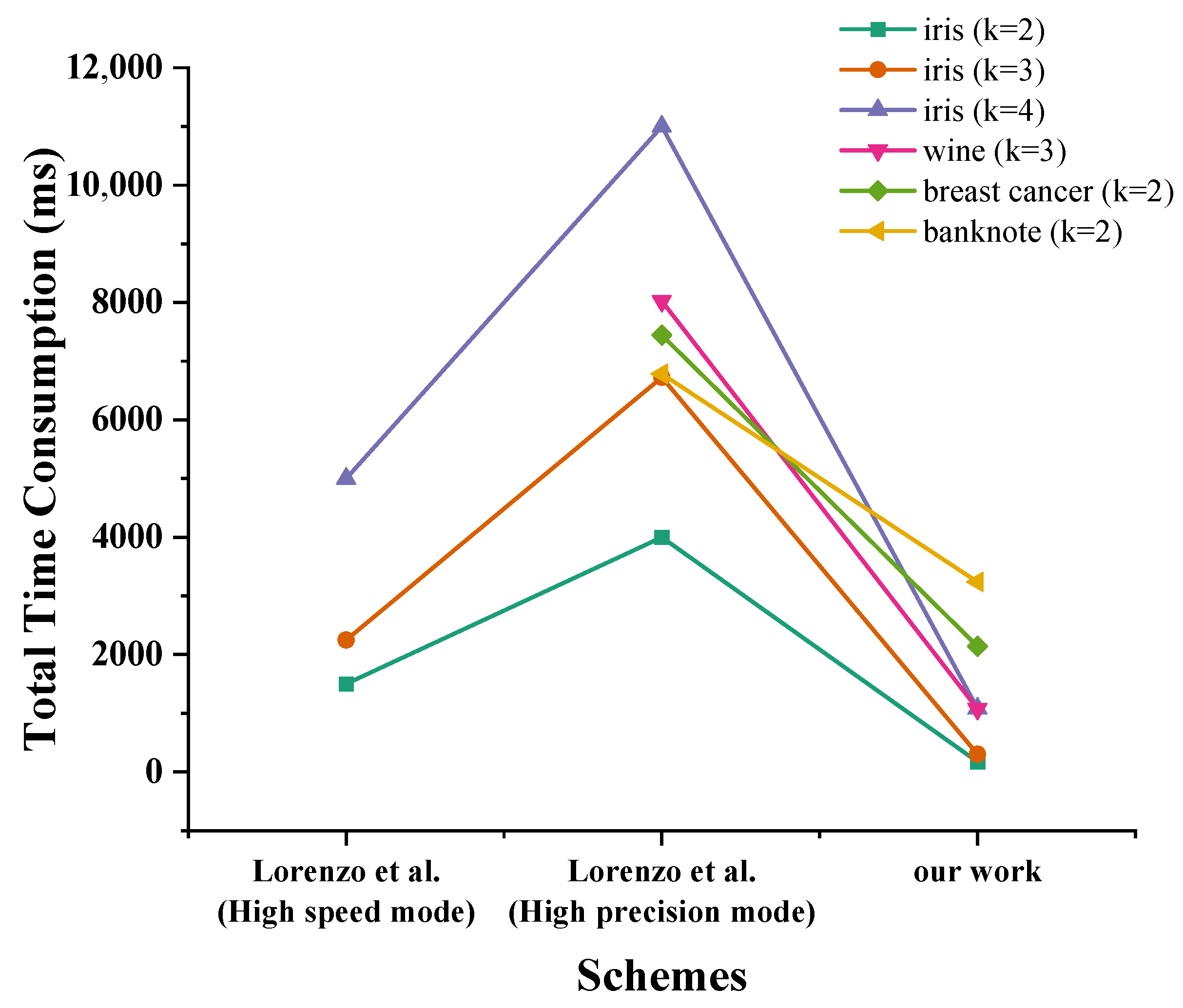

5.3. Time Performance and Comparison

- Phase (1). In this phase, the computation time is the overhead of protocol initialization, mainly comprising key generation, dataset normalization, and encryption of the dataset and the initial centroids. The initial setup requires the user to encrypt $n \cdot d$ data point features and $k \cdot d$ centroid features. Since typically $k \ll n$, the cost is dominated by dataset encryption, giving an initial computational complexity of $O(nd)$.

- Phase (2.2). This phase involves two main operations: comparing distances to find the nearest centroid for each data point, and generating the new centroid masks. During this process, the user mainly performs plaintext comparisons to find the nearest centroid for each point, then encrypts and returns the mask values. The complexity of this phase is dominated by the cryptographic operations on the $n \times k$ distance and mask entries, i.e., $O(nk)$.

- Phase (3.2). In this phase, the user mainly divides each new (accumulated) centroid feature by the number of data points closest to the corresponding centroid and checks for convergence. This requires decrypting the $k \cdot d$ encrypted accumulated features and encrypting the $k \cdot d$ new centroid features, so the complexity of this phase is $O(kd)$.

- Phase (2.1). The cloud server performs ciphertext additions and multiplications to calculate the distance between each data point and each centroid. To compute the Modified Euclidean Distance (MED) between one data point and one centroid, the cloud server performs a constant number of operations for each of the $d$ dimensions, involving $d$ homomorphic multiplications and $O(d)$ homomorphic additions. Since this must be done for all $n$ data points and $k$ centroids, the total complexity of this phase is $O(nkd)$ homomorphic operations.

- Phase (3.1). In this phase, the cloud server performs homomorphic multiplications and additions to compute the accumulated features of each new centroid, based on the equation in Phase (3.1). For each of the $k \cdot d$ features, this requires a summation over all $n$ data points, which involves $n$ homomorphic multiplications and $n - 1$ homomorphic additions. Therefore, the total complexity of this phase is also $O(nkd)$ homomorphic operations.
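The server-side accumulation of Phase (3.1) and the user-side division of Phase (3.2) can be illustrated on plaintexts. The sketch below assumes that the mask for centroid $j$ is a 0/1 vector over the $n$ data points (1 if the point's nearest centroid is $j$). Under SHE, each `*` and `+` in `accumulate` would be a homomorphic multiplication or addition on ciphertexts, and the counters reproduce the $n$ multiplications and $n - 1$ additions per feature stated above. The function names, the empty-cluster rule, and the tolerance `tol` are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]

def make_masks(dist: Sequence[Sequence[float]]) -> List[List[int]]:
    """Phase (2.2), user side: one 0/1 mask vector of length n per centroid."""
    n, k = len(dist), len(dist[0])
    masks = [[0] * n for _ in range(k)]
    for i in range(n):
        j = min(range(k), key=lambda c: dist[i][c])  # nearest centroid of point i
        masks[j][i] = 1
    return masks

def accumulate(points: Sequence[Sequence[float]],
               masks: Sequence[Sequence[int]]) -> Tuple[Matrix, int, int]:
    """Phase (3.1), server side: sum_i mask[j][i] * x[i][t] for every (j, t)."""
    n, d, k = len(points), len(points[0]), len(masks)
    acc: Matrix = [[0] * d for _ in range(k)]
    mults = adds = 0
    for j in range(k):
        for t in range(d):
            total = masks[j][0] * points[0][t]
            mults += 1
            for i in range(1, n):
                total += masks[j][i] * points[i][t]
                mults += 1
                adds += 1
            acc[j][t] = total
    return acc, mults, adds

def update_centroids(acc: Matrix, masks: Sequence[Sequence[int]],
                     old: Sequence[Sequence[float]], tol: float = 1e-9
                     ) -> Tuple[Matrix, bool]:
    """Phase (3.2), user side: divide by cluster sizes and test convergence."""
    new: Matrix = []
    for j, row in enumerate(acc):
        size = sum(masks[j])
        # Keep the previous centroid if its cluster is empty (an assumption).
        new.append([v / size for v in row] if size else list(old[j]))
    converged = all(abs(a - b) <= tol
                    for r_new, r_old in zip(new, old)
                    for a, b in zip(r_new, r_old))
    return new, converged

points = [(0, 0), (0, 2), (10, 10), (10, 12)]
old = [(0, 0), (10, 10)]
dist = [[sum((a - b) ** 2 for a, b in zip(x, c)) for c in old] for x in points]
masks = make_masks(dist)                     # [[1, 1, 0, 0], [0, 0, 1, 1]]
acc, mults, adds = accumulate(points, masks)
print(acc, mults, adds)                      # [[0, 2], [20, 22]] 16 12
new, done = update_centroids(acc, masks, old)
print(new, done)                             # [[0.0, 1.0], [10.0, 11.0]] False
```

Here $k \cdot d \cdot n = 16$ multiplications and $k \cdot d \cdot (n - 1) = 12$ additions, matching the $O(nkd)$ count; only the division and the convergence test require the user's plaintext view.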

5.4. Security Analysis

- Dataset Privacy. The clustering dataset is encrypted using the secret key $sk$. Without access to $sk$, the cloud server cannot decrypt the data features, which guarantees the privacy of the dataset.

- Model Privacy. Throughout the protocol, all sensitive model parameters are encrypted with $sk$, including the initial and updated centroid features, the intermediate MED values, the centroid masks, and the accumulated features. The cloud server operates exclusively on these ciphertexts. Given the IND-CPA security of SHE, the cloud server cannot infer any information about these parameters, which ensures the privacy of the k-means model.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Cheon, J.H.; Kim, D.; Park, J.H. Towards a Practical Cluster Analysis over Encrypted Data. In Proceedings of the Selected Areas in Cryptography–SAC 2019, Waterloo, ON, Canada, 12–16 August 2019; Paterson, K.G., Stebila, D., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 227–249.

- Catak, F.O.; Aydin, I.; Elezaj, O.; Yildirim-Yayilgan, S. Practical Implementation of Privacy Preserving Clustering Methods Using a Partially Homomorphic Encryption Algorithm. Electronics 2020, 9, 229.

- Jaschke, A.; Armknecht, F. Unsupervised Machine Learning on Encrypted Data. In Proceedings of the Selected Areas in Cryptography–SAC 2018, Calgary, AB, Canada, 15–17 August 2018; Cid, C., Jacobson, M.J., Jr., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 453–478.

- Lorenzo, R. Fast but approximate homomorphic k-means based on masking technique. Int. J. Inf. Secur. 2023, 22, 1605–1619.

- Sakellariou, G.; Gounaris, A. Homomorphically encrypted k-means on cloud-hosted servers with low client-side load. Computing 2019, 101, 24.

- Mohassel, P.; Rosulek, M.; Trieu, N. Practical Privacy-Preserving K-means Clustering. Proc. Priv. Enhancing Technol. 2020, 2020, 414–433.

- Bunn, P.; Ostrovsky, R. Secure two-party k-means clustering. In Proceedings of the 14th ACM Conference on Computer and Communications Security, Alexandria, VA, USA, 29 October–2 November 2007; CCS ’07. pp. 486–497.

- Liu, X.; Jiang, Z.L.; Yiu, S.M.; Wang, X.; Tan, C.; Li, Y.; Liu, Z.; Jin, Y.; Fang, J. Outsourcing Two-Party Privacy Preserving K-Means Clustering Protocol in Wireless Sensor Networks. In Proceedings of the 2015 11th International Conference on Mobile Ad-hoc and Sensor Networks (MSN), Shenzhen, China, 16–18 December 2015; pp. 124–133.

- Zhang, E.; Li, H.; Huang, Y.; Hong, S.; Zhao, L.; Ji, C. Practical multi-party private collaborative k-means clustering. Neurocomputing 2022, 467, 256–265.

- Chillotti, I.; Gama, N.; Georgieva, M.; Izabachène, M. TFHE: Fast fully homomorphic encryption over the torus. J. Cryptol. 2020, 33, 34–91.

- Brakerski, Z.; Gentry, C.; Vaikuntanathan, V. (Leveled) fully homomorphic encryption without bootstrapping. In Proceedings of the 3rd Innovations in Theoretical Computer Science Conference, Cambridge, MA, USA, 8–10 January 2012; ITCS ’12. pp. 309–325.

- Cheon, J.H.; Kim, A.; Kim, M.; Song, Y. Homomorphic Encryption for Arithmetic of Approximate Numbers. In Proceedings of the Advances in Cryptology–ASIACRYPT 2017, Hong Kong, China, 3–7 December 2017; Takagi, T., Peyrin, T., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 409–437.

- Paillier, P. Public-key cryptosystems based on composite degree residuosity classes. In Proceedings of the International Conference on the Theory and Applications of Cryptographic Techniques, Prague, Czech Republic, 2–6 May 1999; pp. 223–238.

- Mahdikhani, H.; Lu, R.; Zheng, Y.; Shao, J.; Ghorbani, A.A. Achieving O(log³n) Communication-Efficient Privacy-Preserving Range Query in Fog-Based IoT. IEEE Internet Things J. 2020, 7, 5220–5232.

- Zhao, J.; Zhu, H.; Wang, F.; Lu, R.; Liu, Z.; Li, H. PVD-FL: A Privacy-Preserving and Verifiable Decentralized Federated Learning Framework. IEEE Trans. Inf. Forensics Secur. 2022, 17, 2059–2073.

- Zhao, J.; Zhu, H.; Wang, F.; Lu, R.; Wang, E.; Li, L.; Li, H. VFLR: An Efficient and Privacy-Preserving Vertical Federated Framework for Logistic Regression. IEEE Trans. Cloud Comput. 2023, 11, 3326–3340.

- Miao, Y.; Liu, Z.; Li, X.; Li, M.; Li, H.; Choo, K.K.R.; Deng, R.H. Robust Asynchronous Federated Learning With Time-Weighted and Stale Model Aggregation. IEEE Trans. Dependable Secur. Comput. 2024, 21, 2361–2375.

- Sun, L.; Zhang, Y.; Zheng, Y.; Song, W.; Lu, R. Towards Efficient and Privacy-Preserving High-Dimensional Range Query in Cloud. IEEE Trans. Serv. Comput. 2023, 16, 3766–3781.

- Zheng, Y.; Lu, R.; Guan, Y.; Shao, J.; Zhu, H. Efficient and Privacy-Preserving Similarity Range Query over Encrypted Time Series Data. IEEE Trans. Dependable Secur. Comput. 2022, 19, 2501–2516.

- Miao, Y.; Xu, C.; Zheng, Y.; Liu, X.; Meng, X.; Deng, R.H. Efficient and Secure Spatial Range Query over Large-scale Encrypted Data. In Proceedings of the 2023 IEEE 43rd International Conference on Distributed Computing Systems (ICDCS), Hong Kong, China, 18–21 July 2023; pp. 1–11.

- Zheng, Y.; Lu, R.; Guan, Y.; Zhang, S.; Shao, J.; Zhu, H. Efficient and Privacy-Preserving Similarity Query with Access Control in eHealthcare. IEEE Trans. Inf. Forensics Secur. 2022, 17, 880–893.

- Dua, D.; Graf, C. UCI Machine Learning Repository. 2017. Available online: http://archive.ics.uci.edu/ml (accessed on 4 August 2025).

- Iris. 2025. Available online: http://archive.ics.uci.edu/dataset/53/iris (accessed on 4 August 2025).

- Wine. 2025. Available online: https://archive.ics.uci.edu/dataset/109/wine (accessed on 4 August 2025).

- Breast Cancer. 2025. Available online: http://archive.ics.uci.edu/dataset/17/breast+cancer+wisconsin+diagnostic (accessed on 4 August 2025).

- Banknote. 2025. Available online: https://archive.ics.uci.edu/dataset/267/banknote+authentication (accessed on 4 August 2025).

- Musk. 2025. Available online: https://archive.ics.uci.edu/dataset/75/musk-version-2 (accessed on 4 August 2025).

- Sepsis. 2025. Available online: https://archive.ics.uci.edu/dataset/827/sepsis+survival+minimal+clinical+records (accessed on 4 August 2025).

- Gas. 2025. Available online: https://archive.ics.uci.edu/dataset/224/gas+sensor+array+drift+dataset (accessed on 4 August 2025).

- Ultsch, A. Clustering with SOM: U*C. In Proceedings of the Workshop on Self-Organizing Maps, WSOM’05, Paris, France, 5–8 September 2005; pp. 75–82.

- Halkidi, M.; Batistakis, Y.; Vazirgiannis, M. Clustering validity checking methods: Part II. ACM Sigmod Rec. 2002, 31, 19–27.

| Schemes | [7,8,9] | [2,7] | [3] | [5] | [4] | Our Work |
|---|---|---|---|---|---|---|
| Number of servers | >1 | >1 | 1 | 1 | 1 | 1 |
| Techniques | SS | Paillier [13] | TFHE [10] | BGV [11] | CKKS [12] | SHE [14] |
| Comp. overhead | medium | low | high | medium | medium | low |
| Comm. overhead | medium | medium | high | medium | medium | low |

| Datasets | Number of Centroids (k) | Dimension (d) | Number of Data Points (n) |
|---|---|---|---|
| iris [23] | 3 | 4 | 150 |
| wine [24] | 3 | 13 | 178 |
| breast cancer [25] | 2 | 30 | 569 |
| banknote [26] | 2 | 5 | 1372 |
| musk [27] | 2 | 166 | 6598 |
| sepsis [28] | 2 | 3 | 110,204 |
| gas [29] | 6 | 128 | 13,910 |

| Related Works | Datasets | Evaluation Metrics | Accuracy Comparison |
|---|---|---|---|
| (i) [4] | Iris | Mean error | 2.95∼7.21% (>Our 0%) |
| (ii) [2] | Self-synthetic | Adjusted Rand Index | 49∼72.4% (≈Our 40∼80%) |
| (iii) [3] | FCPS [30] | Misclassification rate | 0∼3% (≥Our 0%) |
| (iv) [5] | Self-synthetic | Relative error | 0.65∼26.91% (>Our 0%) |

Comparison results between [2] (based on Paillier) and our work

| Dataset Size | Research [2] | Our Work |
|---|---|---|
| 2000 | 99,402.12 s ≈ 27.61 h | 1.14 s |
| 3000 | 255,790.18 s ≈ 71.05 h | 1.29 s |
| 4000 | 377,794.82 s ≈ 104.94 h | 1.86 s |
| 5000 | 680,925.54 s ≈ 189.15 h | 4.76 s |

Comparison results between [3] (exact/approximate mode) based on TFHE and our work

| Metric | [3] (Exact Mode) | [3] (Approximate Mode) | Our Work |
|---|---|---|---|
| Runtime per iteration | 873.46 h | 15.56 h | 0.166 s |
| Number of iterations | 15 | 40 | 5 |
| Total time | 545.91 days ≈ 17.95 months | 25.93 days ≈ 0.85 months | 0.83 s |
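The totals in this table follow from the runtime per iteration multiplied by the number of iterations. The short Python check below reproduces them, assuming 24 hours per day and an average month of 365/12 days; the month length used for the conversion is an assumption, since the table does not state it.

```python
HOURS_PER_DAY = 24
DAYS_PER_MONTH = 365 / 12  # assumed average month length

def total_days(hours_per_iteration: float, iterations: int) -> float:
    """Total runtime in days for a fixed number of iterations."""
    return hours_per_iteration * iterations / HOURS_PER_DAY

exact_days = total_days(873.46, 15)   # [3], exact mode
approx_days = total_days(15.56, 40)   # [3], approximate mode
ours_seconds = 0.166 * 5              # our work, 5 iterations

print(f"{exact_days:.2f} days = {exact_days / DAYS_PER_MONTH:.2f} months")    # 545.91 days = 17.95 months
print(f"{approx_days:.2f} days = {approx_days / DAYS_PER_MONTH:.2f} months")  # 25.93 days = 0.85 months
print(f"{ours_seconds:.2f} s")                                                # 0.83 s
```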

Comparison results between [5] (fastest/slowest mode) based on BGV and our work

| Setting for [5] | [5] (Fastest Mode) | [5] (Slowest Mode) | Setting for Our Work | Our Work |
|---|---|---|---|---|
| , | 1479 s | 3164 s | , | 0.16 s |
| , | 150 s | 702 s | , | 0.97 s |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, W.; Xu, S. A Fast and Privacy-Preserving Outsourced Approach for K-Means Clustering Based on Symmetric Homomorphic Encryption. Mathematics 2025, 13, 2893. https://doi.org/10.3390/math13172893
