Prototype-Based Two-Stage Few-Shot Instance Segmentation with Flexible Novel Class Adaptation

Abstract

1. Introduction

2. Related Work

2.1. Instance Segmentation

2.2. Few-Shot Instance Segmentation (FSIS)

2.3. Incremental FSIS

2.4. Key Technologies

3. Methodology

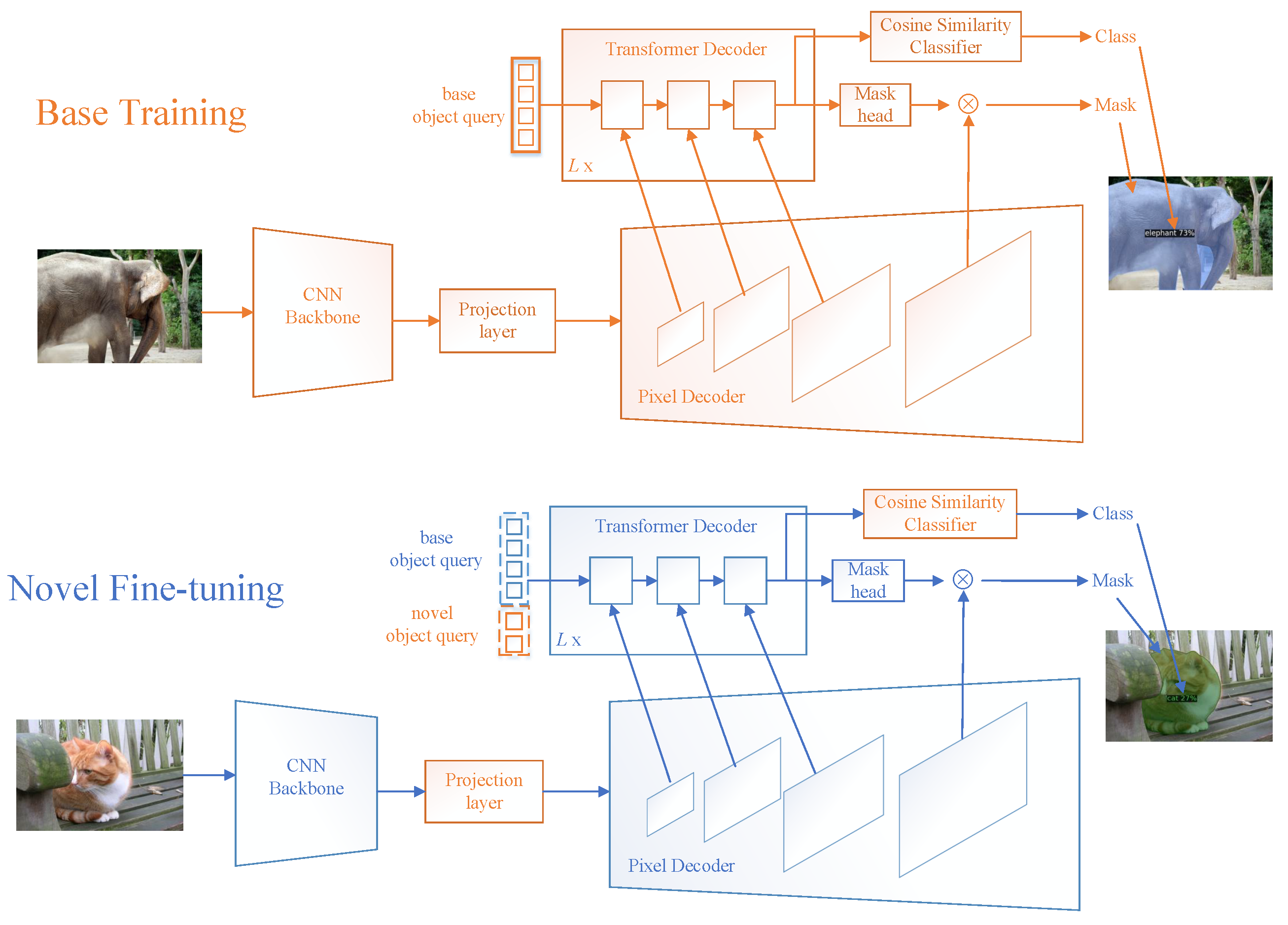

3.1. Framework Overview

3.2. Base Training Stage

3.3. Novel Fine-Tuning Stage

3.4. Incremental Class Addition

4. Experiment

4.1. Experiment Setup

4.2. Datasets

4.3. Baselines

4.4. Implementation Details

4.5. Experimental Environment

- GPUs: Two NVIDIA RTX 3090 (24 GB GDDR6X each), enabling parallel processing and large-batch training.

- CPU: Intel Xeon W-1290 (10 cores, 3.2 GHz) with 32 GB DDR4 RAM, supporting data preprocessing and model deployment.

- Software: CUDA 11.3, cuDNN 8.2, and Python 3.8. Dependencies include OpenCV 4.5.3 (image augmentation), matplotlib 3.5.1 (visualization), and scikit-learn 1.0.2 (statistical analysis).
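
For readers trying to reproduce this environment, the following is a minimal sketch of a version check for the Python dependencies listed above. The distribution names (`opencv-python`, `matplotlib`, `scikit-learn`) are the usual PyPI names and are an assumption, as are the helper names `installed_version` and `check_environment`. CUDA and cuDNN are not checked here, because how to query them depends on the deep learning framework, which this section does not name.

```python
from importlib import metadata

# Versions as listed in the environment description; the PyPI
# distribution names used as keys are an assumption.
EXPECTED = {
    "opencv-python": "4.5.3",
    "matplotlib": "3.5.1",
    "scikit-learn": "1.0.2",
}


def installed_version(dist_name):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_environment(expected=EXPECTED, lookup=installed_version):
    """Compare expected versions with what `lookup` reports for each package."""
    report = {}
    for name, wanted in expected.items():
        found = lookup(name)
        # Accept an exact match or a longer build string such as "4.5.3.56".
        matches = found is not None and (
            found == wanted or found.startswith(wanted + ".")
        )
        report[name] = {"expected": wanted, "found": found, "ok": matches}
    return report


if __name__ == "__main__":
    for name, row in check_environment().items():
        status = "ok" if row["ok"] else "MISMATCH"
        print(f"{name}: expected {row['expected']}, found {row['found']} [{status}]")
```

The `lookup` parameter is there so the comparison logic can be exercised without the packages installed, for example by passing the `get` method of a plain dictionary.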

4.6. Comparative Experiments

4.7. Ablation Studies

4.8. Parameter Sensitivity

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Shots | Inc. | Method | Det. Overall AP | Det. Overall AP50 | Det. Base AP | Det. Base AP50 | Det. Novel AP | Det. Novel AP50 | Seg. Overall AP | Seg. Overall AP50 | Seg. Base AP | Seg. Base AP50 | Seg. Novel AP | Seg. Novel AP50 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | - | Base-Only | 28.67 | 43.53 | 38.22 | 58.04 | — | — | 26.34 | 41.55 | 35.12 | 55.40 | — | — |
| 1 | - | MTFA | 24.32 | 39.64 | 31.73 | 51.49 | 2.10 | 4.07 | 22.98 | 37.48 | 29.85 | 48.64 | 2.34 | 3.99 |
| 1 | ✓ | ONCE | 13.6 | N/A | 17.9 | N/A | 0.7 | N/A | — | — | — | — | — | — |
| 1 | ✓ | iMTFA | 21.67 | 31.55 | 27.81 | 40.11 | 3.23 | 5.89 | 20.13 | 30.64 | 25.9 | 39.28 | 2.81 | 4.72 |
| 5 | - | Base-Only | 28.67 | 43.53 | 38.22 | 58.04 | — | — | 26.34 | 41.55 | 35.12 | 55.40 | — | — |
| 5 | - | MTFA | 26.39 | 41.52 | 33.11 | 51.49 | 6.22 | 11.63 | 25.07 | 39.95 | 31.29 | 49.55 | 6.38 | 11.14 |
| 5 | ✓ | ONCE | 13.7 | N/A | 17.9 | N/A | 1.0 | N/A | — | — | — | — | — | — |
| 5 | ✓ | iMTFA | 19.62 | 28.06 | 24.13 | 33.69 | 6.07 | 11.15 | 18.22 | 27.10 | 22.56 | 33.25 | 5.19 | 8.65 |
| 10 | - | Base-Only | 28.67 | 43.53 | 38.22 | 58.04 | — | — | 26.34 | 41.55 | 35.12 | 55.40 | — | — |
| 10 | - | MTFA | 27.44 | 42.84 | 33.83 | 52.04 | 8.28 | 15.25 | 25.97 | 41.28 | 31.84 | 50.17 | 8.36 | 14.58 |
| 10 | ✓ | ONCE | 13.7 | N/A | 17.9 | N/A | 1.2 | N/A | — | — | — | — | — | — |
| 10 | ✓ | iMTFA | 19.26 | 27.49 | 23.36 | 32.41 | 6.97 | 12.72 | 17.87 | 26.46 | 21.87 | 32.01 | 5.88 | 9.81 |
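
The overall AP values in the table above appear consistent with a class-count-weighted mean of the base and novel AP. The sketch below illustrates that relation under an assumed split of 60 base and 20 novel classes. That split is an assumption (it is the split commonly used for COCO-style few-shot benchmarks) and is not stated in this section. The function name `overall_ap` is likewise hypothetical.

```python
def overall_ap(base_ap, novel_ap, n_base=60, n_novel=20):
    """Class-count-weighted mean of base and novel AP.

    n_base and n_novel are assumed values, not taken from the text.
    """
    return (n_base * base_ap + n_novel * novel_ap) / (n_base + n_novel)


if __name__ == "__main__":
    # MTFA, 1-shot, detection: base AP 31.73, novel AP 2.10, reported overall AP 24.32.
    print(f"MTFA 1-shot detection: {overall_ap(31.73, 2.10):.2f}")
    # iMTFA, 1-shot, detection: base AP 27.81, novel AP 3.23, reported overall AP 21.67.
    print(f"iMTFA 1-shot detection: {overall_ap(27.81, 3.23):.2f}")
```

Under this assumption the computed values agree with the reported overall AP to within rounding, which also shows why a large drop in base AP outweighs a small gain in novel AP in the overall figure: base classes carry three times the weight.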

| Shots | Method | All Classes AP | All Classes AP50 | Base Classes AP | Base Classes AP50 | Novel Classes AP | Novel Classes AP50 |
|---|---|---|---|---|---|---|---|
| 1 | iMTFA | 20.13 | 30.64 | 25.90 | 39.28 | 2.81 | 4.72 |
| 1 | Ours | 27.41 | 41.64 | 35.99 | 54.69 | 1.67 | 2.47 |
| 1 | MTFA | 24.32 | 39.64 | 31.73 | 51.49 | 2.10 | 3.99 |
| 5 | iMTFA | 18.22 | 27.10 | 22.56 | 33.25 | 5.19 | 8.65 |
| 5 | MTFA | 26.39 | 41.52 | 33.11 | 51.49 | 6.22 | 11.14 |
| 5 | Ours | 27.00 | 40.00 | 34.18 | 50.71 | 5.45 | 7.88 |
| 10 | iMTFA | 17.87 | 26.46 | 21.87 | 32.01 | 5.88 | 9.81 |
| 10 | MTFA | 27.44 | 42.84 | 33.83 | 52.04 | 8.28 | 14.58 |
| 10 | Ours | 26.47 | 39.06 | 32.61 | 48.22 | 8.06 | 11.60 |

| Method | 1-Shot AP (Det) | 1-Shot AP50 (Det) | 5-Shot AP (Det) | 5-Shot AP50 (Det) | 10-Shot AP (Det) | 10-Shot AP50 (Det) |
|---|---|---|---|---|---|---|
| FGN | — | — | — | — | — | — |
| MTFA | 9.99 | 21.68 | 10.53 | 22.17 | 11.24 | 23.05 |
| iMTFA | 11.47 | 22.41 | 12.01 | 23.15 | 12.58 | 24.02 |
| Ours | 3.61 | 5.75 | 9.17 | 14.39 | 12.65 | 19.75 |

| Method | 1-Shot AP | 1-Shot AP50 | 5-Shot AP | 5-Shot AP50 | 10-Shot AP | 10-Shot AP50 |
|---|---|---|---|---|---|---|
| MRCN+ft-full | — | — | 1.30 | 2.70 | 1.90 | 4.70 |
| Meta-RCNN | — | — | 2.80 | 6.90 | 4.40 | 10.60 |
| iMTFA | 2.83 | 4.75 | 5.24 | 8.73 | 5.94 | 9.96 |
| Ours | 2.62 | 4.10 | 6.75 | 10.23 | 9.68 | 14.47 |

| Novel Query | Knowledge Distillation | All | Base | Novel | |||

|---|---|---|---|---|---|---|---|

| AP | AP50 | AP | AP50 | AP | AP50 | ||

| 18.30 | 27.92 | 21.29 | 32.54 | 9.33 | 14.04 | ||

| ✓ | 28.09 | 41.87 | 33.93 | 50.78 | 7.81 | 11.31 | |

| ✓ | 17.75 | 27.13 | 20.44 | 31.35 | 9.68 | 14.47 | |

| ✓ | ✓ | 26.47 | 39.06 | 32.61 | 48.22 | 8.06 | 11.60 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, Q.; Zhang, Y.; Xiao, P.; Ying, M.; Zhu, L.; Zhang, C. Prototype-Based Two-Stage Few-Shot Instance Segmentation with Flexible Novel Class Adaptation. Mathematics 2025, 13, 2889. https://doi.org/10.3390/math13172889
