1. Introduction

Fog computing is a distributed computing paradigm that performs processing on edge devices as far as possible instead of depending completely on cloud servers. It extends cloud computing with faster decision making, which makes it suitable for bandwidth-constrained applications. It overcomes the limitations of a cloud computing environment through real-time data processing, reduced latency, and local decision making, and it allows the system to operate independently of the cloud, even during connectivity failures. It offers scalable solutions by distributing the load evenly across the edge devices. Mobile fog devices also provide context-aware services that follow end users wherever they move [1, 2]. Because of these characteristics, fog computing has been adopted in a variety of applications, including smart cities, industrial IoT, retail, agriculture, and transportation.

Two key components of fog computing are the fog nodes and the fog network. A fog node combines computing capability, storage, and network connectivity. The fog network establishes the communication infrastructure among fog nodes. The fog nodes perform data gathering, data analytics, and short-term storage, and they transmit only a filtered form of the data to cloud servers. They bring computation close to the origin of the data, which reduces latency and improves security and efficiency. Local computation allows input data to be processed immediately so that independent decisions are reached faster. Servicing delay and computation cost are minimized because the fog nodes scale by utilizing the resources available in edge devices. Overall responsiveness is high because less data is transmitted to remote cloud servers, which lowers the bandwidth requirement. Mobility among the fog nodes makes fog computing suitable for Internet of Things (IoT) applications. Mobility is supported through application communication with stationary fog nodes as well as vehicle-to-vehicle communication. The network topology is highly dynamic, and fog nodes can enter and leave the network easily [3, 4].

Heterogeneity is a fundamental characteristic of fog computing. The fog nodes differ in resource capacity, bandwidth availability, and power constraints. Because the fog nodes are geographically distributed, they offer redundancy and depend on cloud connectivity only for coordination purposes. The fog nodes are often subject to failures, poor connectivity, improper placement of applications, and disruption of network services. Failures among the fog nodes are quite common because of wide geographical distribution, distributed deployment, battery-constrained computing, and overflow of resources.

Since the edge computing paradigm is distributed in nature, the optimal placement of applications across fog nodes is a challenging task: the application placement policies must operate under constraints on energy consumption, computation overhead, and bandwidth usage. Application placement typically involves two steps, mapping and automatic adjustment. In the mapping step, the application is mapped to a specific fog node, and sufficient resources are provided for execution. During the automatic adjustment step, horizontal or vertical scaling is performed to adjust to the varying resource demands. An application is composed of activities such as data receiving, preprocessing, data analysis, and event handling. Most modern applications are non-monolithic, as they are composed of several independently deployable components. To make application placement more adaptable, it is necessary to consider application scaling and proper task distribution to deal with the changing volume of incoming requests [1, 5].

The traditional application placement policies strive to meet quality of service (QoS) requirements in terms of latency, bandwidth, energy consumption, and service level agreement (SLA) violations. However, the application requirements keep varying, and it is difficult to predict them. Fog applications usually exhibit stringent QoS requirements. In addition to QoS requirements, security, privacy, location sensitivity, deadline sensitivity, state persistence, and fault tolerance requirements also need to be taken into consideration. Three different modes are considered for application placement: batch scheduling, static scheduling, and dynamic scheduling. In batch scheduling, application requests are batched and then transferred for execution. In static scheduling, applications are scheduled without considering the current operating status of the system. Dynamic scheduling considers both the static system characteristics and short-term variations to arrive at placement decisions. The entire application placement strategy is handled by a controller node, which is situated either in the fog layer or in the cloud layer. Multiple controller nodes may be used to coordinate and arrive at placement decisions. However, finding the best fog node for application placement is difficult, as there is no universal definition of "best" and it varies depending on the application needs [6, 7].

Reinforcement learning is increasingly integrated with the edge computing paradigm because it significantly improves the intelligence, flexibility, and adaptability of edge systems. Incorporating machine learning at the edge devices enables immediate data analysis and reduces the overall latency. Decisions are made locally at the edge nodes, which makes them reliable and minimizes the risk of data breaches. Quantile Temporal Difference Learning (QTDL) is a distributional reinforcement learning technique that learns to predict the full distribution of returns instead of only the expected return. Return distributions cannot be learned directly because they live in an infinite-dimensional space. Hence, QTDL learns an approximation of the return distribution by choosing a probability distribution representation with a finite-dimensional set of parameters. It aims to learn a fixed set of quantiles of the return distribution at every state of the Markov Decision Process (MDP). QTDL is highly nonlinear and has multiple fixed points. However, it often ends up with suboptimal policies due to overestimation of the value associated with certain actions. Its computational complexity is high because the quantile values are updated frequently. Accurate estimation of the quantile values is difficult since a large number of samples representing the state of the fog nodes and the fog network are needed. Temporal difference error (i.e., wrong estimation of the value function and of the reward gathered from a transition) may also occur [8, 9]. The Small Language Model (SLM) is a scaled-down version of the Large Language Model (LLM). It is a lightweight model with fewer parameters to tune, which makes it a viable option in terms of efficiency and computation cost. The SLM works on the same principle as the LLM, with several layers of attention mechanisms, and its size is reduced through mechanisms like knowledge distillation, pruning, quantization, and expert-regulated architectures. The SLM is computationally more efficient than the LLM because it involves fewer parameters and has lower memory requirements [10, 11]. The SLM-guided QTDL effectively handles ambiguous and noisy state spaces. It enhances uncertainty modeling through exploration of environments with high variance and sparse rewards. Heuristic guidance is required to handle multimodal inputs and to prevent an early tendency to take meaningless or misleading actions. The data-driven quantiles of temporal difference learning are blended with the informed heuristics of the SLM to prevent quantile loss and over- or underestimation of the quantile ranks.

In this paper, an SLM-guided quantile temporal difference learning framework is proposed to perform optimal application placement in fog computing. QTD learning approximates the return distribution by an equally weighted mixture of Dirac deltas, whose particle locations approximate a fixed number of quantiles. The heuristic value generated by the SLM is used to learn an exact Q value for TD learning. It prevents hallucination by avoiding improper guidance to the TD learning agent. The heuristic-guided Q function converges to the optimal Q function of the MDP. The SLM also adapts dynamically to changes in the application requirements with few computational resources through efficient exploration of the state space. The SLM-generated Q value offers greater flexibility through proper reshaping of the reward function. Bias in the performance of the TD Q learning agent is prevented by providing adequate guidance to arrive at the optimal solution.

The main objectives of this paper are as follows.

Design and development of the lightweight model, i.e., SLM-enabled QTDL framework for efficient placement of fog node applications.

Utilization of the heuristic value generated by SLM to aid QTDL in arriving at exact Q value computation and learning.

Prevention of the tendency of TD learning agent to become trapped in hallucination through proper guidance and fine tuning of the parameters.

Proper reshaping of QTDL reward function through precise estimation of Q function of QTDL agents.

Enhancing the flexibility and adaptability of QTDL framework by achieving the trade-off between exploration and exploitation of the large state space of the fog environment.

Expected value analysis of the QTDL framework considering finite and infinite fog computing environment.

Experimental evaluation of the QTDL framework using the iFogSim simulator (developed by the Cloud Computing and Distributed Systems (CLOUDS) laboratory at the University of Melbourne, Australia). The performance metrics considered for evaluation are energy consumption, makespan time violation, budget violation, and load imbalance.

The remaining parts of this paper are organized as follows.

Section 2 discusses related work.

Section 3 presents the proposed work.

Section 4 deals with results and discussion.

Section 5 gives the expected value analysis.

Section 6 provides the conclusions.

2. Related Work

In this section, brief descriptions of recent papers on application placement among nodes in fog computing are provided and their limitations are identified.

Mohammed et al. presented a memetic algorithm-based model for placement of applications in an edge computing environment [12]. Each application is partitioned at different levels of granularity, namely class and task. Application placement in fog computing is treated as an NP-hard problem. The conventional memetic algorithm is modified to provide a reasonable solution to the application placement problem within a predefined decision time. The memetic algorithm is an extension of the conventional evolutionary algorithm and mainly aims to accelerate the evolutionary search to arrive at the optimum solution. It pairs the conventional genetic algorithm with two refinement methods: local search and individual learning. Each individual represents a candidate solution, and the potential solution is later extracted from the population of candidate solutions. Five main steps are involved in the memetic algorithm: initialization, selection, crossover, mutation, and local search. The application placement is composed of three strategies: pre-scheduling, batch placement of applications, and recovery from failure. During the pre-scheduling phase, the broker unit arranges the concurrent applications for execution. The batch placement of applications is carried out using an optimized version of the memetic algorithm. Potential failures during runtime are overcome using a lightweight failure recovery method. The overall execution time and energy consumption of the applications are minimized using a weighted cost model. However, the approach is prone to premature convergence and higher computational cost as it employs a local instead of a global search.
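The five steps named above (initialization, selection, crossover, mutation, and local search) can be illustrated with a minimal sketch. It is not the algorithm of [12]: the toy cost model (sum of squared node loads), the function names `placement_cost`, `local_search`, and `memetic_placement`, and all parameter values are assumptions made purely for illustration.

```python
import random


def placement_cost(placement, demands, n_nodes):
    """Toy cost (assumed): sum of squared node loads, which penalizes imbalance."""
    loads = [0.0] * n_nodes
    for app, node in enumerate(placement):
        loads[node] += demands[app]
    return sum(load * load for load in loads)


def local_search(placement, demands, n_nodes):
    """Hill climbing: move one application at a time while the cost improves."""
    best = list(placement)
    best_cost = placement_cost(best, demands, n_nodes)
    improved = True
    while improved:
        improved = False
        for app in range(len(best)):
            for node in range(n_nodes):
                if node == best[app]:
                    continue
                candidate = best[:app] + [node] + best[app + 1:]
                cost = placement_cost(candidate, demands, n_nodes)
                if cost < best_cost:
                    best, best_cost, improved = candidate, cost, True
    return best


def memetic_placement(demands, n_nodes, pop_size=8, generations=20, seed=0):
    """Return a placement list: placement[app] is the chosen fog node index."""
    rng = random.Random(seed)
    n_apps = len(demands)

    def cost(p):
        return placement_cost(p, demands, n_nodes)

    # 1. Initialization: assign each application to a random node.
    population = [[rng.randrange(n_nodes) for _ in range(n_apps)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # 2. Selection: keep the better half of the population as parents.
        population.sort(key=cost)
        parents = population[:pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # 3. Crossover: one-point recombination of two parents.
            cut = rng.randrange(1, n_apps)
            child = a[:cut] + b[cut:]
            # 4. Mutation: occasionally reassign one application.
            if rng.random() < 0.2:
                child[rng.randrange(n_apps)] = rng.randrange(n_nodes)
            # 5. Local search: refine the child (the memetic step).
            children.append(local_search(child, demands, n_nodes))
        population = parents + children
    return min(population, key=cost)
```

Calling `memetic_placement([4.0, 3.0, 2.0, 1.0], n_nodes=2)` returns one node index per application. Because the local search only moves a single application at a time, the result is a local optimum of the toy cost and is not guaranteed to be the global one, which mirrors the premature-convergence concern raised above.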

Zoltan et al. discussed a decentralized approach for application placement in fog computing [6]. Application placement is difficult because of the huge number of applications and infrastructure nodes. The main aim of decentralization is to operate separately over different parts of the architecture to enhance scalability. Fog colonies are constructed from resources that are spread across a geographical region. The number of fog nodes in each colony is dynamically distributed, and overlapping of fog nodes is permitted. Applications are assigned to the non-overlapped fog nodes to retain a higher level of flexibility. Good cooperation among the fog colonies is established, which prevents missed synergy and also avoids convergence towards suboptimal solutions. An integer linear programming algorithm, which restricts all variables to integers, is used to check feasibility. First, a problem instance is selected and converted into an integer linear program. Then, an external solver is applied to arrive at new application placement and migration policies. A host is identified for every newly added application, and the hosts are arranged in ascending order. The application is first placed on the first host, and if that is not feasible, migration decisions are made using integer-valued logic. The dynamically distributed fog colonies, paired with good coordination among them, lead to promising results in terms of resource utilization. However, uncertainty and nonlinearity are not taken into consideration, which makes the approach unable to adapt to higher-dimensional problems.

Heba et al. discussed a Quality of Experience (QoE)-based prioritization model for application placement in fog computing [13]. Higher round-trip time in data processing usually affects user perceptions of the Quality of Service (QoS). User context and application runtime context are taken into consideration. The environment runtime context is influenced by parameters like location, network strength, and battery level. Similarly, the application runtime context is influenced by parameters like usefulness, ease of use, and whether the application is perceived as boring, enjoyable, or monotonous. The user expectation is judged based on reliability, sustainability, and availability. First, priority is assigned to applications considering runtime, application usage, and user expectations for quality. Then, the applications are allocated to fog nodes considering the proximity, computation capability, and intended response time. QoS violations directly influence the application placement strategy. The applications are recalculated and reprioritized, and the updated plan is conveyed to the fog action implementer. The quality rating gain metric plays a major role in prioritizing the applications. It is determined by the product of the QoE and the score of the computing capability. The watchdog is responsible for receiving the update information and checking for Service Level Violations (SLVs) and critical changes in the context of operation. This approach improves the QoE of the users with respect to application placement time, and the power consumption is reduced. However, the approach is not suitable for real-time fog environments since a higher-priority application may starve indefinitely due to uncertainty among the fog nodes. Also, the power consumption of fog nodes rises in the urge to meet the service delivery deadline. Compatibility of the solution across multiple applications is also limited since different categories of applications are not taken into consideration.

Reinout et al. presented a multi-objective application placement approach that aims to maximize a reward formulation [14]. The application placement problem is treated as a meta-learning problem in which task distribution is performed over a distribution of preferences. The placement approach is trained using meta policies. A meta policy is trained simultaneously on a large collection of task distributions that are randomly sampled over a Markov Decision Process (MDP). A trained neural network model is deployed to reduce the resource consumption of the application placement logic. Few-shot adaptation to a new application is supported by giving relative preference to multiple objectives. A generalized version of the Bellman equation is used to formulate a single parametric representation of the optimal policy over the large space of preferences. During the initial stage of learning, the Q learning agent learns the optimal policy for any given preference from a few samples of preferences. The series of loss functions is minimized using homotopy optimization. Efficient alignment between the application preferences and the optimal solution is obtained by reusing transitions from the learning stage through hindsight experience replay. Since computing an optimality filter over the Q table is not possible, a mini-batch of transitions is sampled before the parameters are updated. The main goal is to draw parameters from a Gaussian distribution to perform a policy that combines stochastic search and policy gradient. The results are formulated using Pareto solutions, which ensure that the solutions are sound in terms of optimality and computational efficiency. The performance is good on continuous control problems with many degrees of freedom. However, the approach lacks scalability because convex approximation is performed over optimal policies to handle individual policies, whose number grows linearly with the domain size.

Mohammed et al. presented a distributed deep learning approach for application placement in fog computing [15]. The incoming applications are modeled as directed acyclic graphs with diverse topologies. The application placement complexity is very high, as dependencies among applications add several constraints on resource requirements. An actor-critic distributed Q learning algorithm is designed, which depends on weighted actor–learner architectures. Two types of neural networks are used to optimize the learning process. The actor neural network selects a random action based on the current policy, and the critic neural network estimates a value (return) for those actions. It is a form of deterministic implementation which completes its segment of experience before averaging over all the actors' actions. Each agent can communicate value and policy parameters to other agents, which diffuses the information across the deep neural network. Robust exploration of the state space is achieved through a proper tradeoff between exploration and exploitation. The application features are extracted and represented in the form of an MDP. The rewards are formulated so that they directly reflect the effectiveness of the actions and reduce the application completion time. The exploration cost of the Q learning agent is reduced using distributed experience trajectory generation. An off-policy correction method is employed to converge to an optimal solution faster. The approach is highly scalable, as the computational cost depends on neighboring agents instead of all agents across the network. However, it is computationally very expensive, as it requires both actor and critic networks to be trained simultaneously. Also, the chances of instability and divergence are higher due to poor function approximation caused by frequent interference between the actor agent and the critic agent.

Xueying et al. presented a deep reinforcement learning-enriched federated learning algorithm to perform both task scheduling and resource allocation in an edge environment [16]. The internet of vehicles is a demanding real-time environment with constraints on time and varying conditions. The main aim of developing an intelligent transport system is to integrate the sensing and communication mechanisms in order to enhance the rate of communication between the moving vehicles. A self-supervised federated learning algorithm offloads partially completed tasks onto the roadside unit to reduce the energy consumption among the internet of vehicles. The incoming tasks are divided into local and roadside tasks. The training of the roadside units and the local computation units is balanced. A threshold is set for offloading of tasks, which prevents inefficiencies from over- or under-offloading. Smooth adjustments are made between transmission power and task assignment ratio to minimize the energy consumption, resulting in enhanced efficiency. A proper tradeoff between the exploration and exploitation phases is achieved through a maximum entropy mechanism. The deep Q network is used to reduce overestimation bias during value function approximation. Failure during the training phase and premature convergence to a local optimum are prevented, as the federated learning agent continuously attempts to formulate new policies. However, the data model includes security vulnerabilities like model poisoning, leakage of private data, and higher system-level risks. It also exhibits potential bias during decision making because of higher communication costs and poor representation of local data.

To summarize, the existing works exhibit the following drawbacks:

Most of the approaches fall into premature convergence and higher computational cost due to improper distribution of applications among fog nodes.

Inability to handle uncertainty among the application requirements and fog node processing capability due to frequent violation of SLAs.

Higher tendency to produce sub-optimal solutions due to poor exploration of the state space of fog environment.

Higher loss function ratio due to single parametric representation of the application and resources at fog nodes.

Limited compatibility of application placement policies, as the diverse requirements of the applications are not taken into consideration.

The decisions taken by traditional machine learning algorithms are subject to bias due to reinforcement of assumptions that do not align with the objective functions.

3. System Model

The fog computing environment is a decentralized computing environment composed of data, storage, computation, and applications that are placed between the data source and the cloud server. Billions of IoT objects are used in everyday life; they are deployed extensively and exhibit a high level of connectivity and computation intelligence [17]. One of the primary requirements of fog computing is the optimization of application placement to ensure smooth functioning.

Consider a set of m applications and a set of n fog nodes. Each application is represented by attributes such as deadline, processing power, storage capability, memory capacity, and network traffic usage. Similarly, each fog node is represented by resource-related attributes such as CPU capability, RAM capacity, disk storage, and network bandwidth. The heuristic Q buffer of the SLM-guided QTDL framework is initialized. The quantile regression loss is determined for each distribution from the target value v, the predicted quantile value, and the quantile level, which ranges between 0 and 1. The update rule is then applied to the ith predicted quantile value of state x, scaled by the learning rate. Finally, the application placement policies are formulated.
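The quantile regression loss and the update rule described above can be sketched as follows. The sketch uses the standard pinball-loss form from the distributional reinforcement learning literature, which is an assumption rather than a formula taken from this paper; the names `v` (target value), `theta` (predicted quantile value), `tau` (quantile level), and `alpha` (learning rate) are chosen to follow the description above.

```python
def quantile_regression_loss(v, theta, tau):
    """Pinball loss: rho_tau(u) = u * (tau - 1[u < 0]) with u = v - theta."""
    u = v - theta
    return u * (tau - (1.0 if u < 0 else 0.0))


def quantile_update(theta, v, tau, alpha):
    """One stochastic step on the pinball loss.

    The step depends only on the sign of (v - theta): theta moves up by
    alpha * tau when the target is not below the estimate, and down by
    alpha * (1 - tau) when the target is below it.
    """
    indicator = 1.0 if v < theta else 0.0
    return theta + alpha * (tau - indicator)


def estimate_quantile(samples, tau, alpha=0.05, theta0=0.0, sweeps=200):
    """Repeatedly apply the update over a list of target samples."""
    theta = theta0
    for _ in range(sweeps):
        for v in samples:
            theta = quantile_update(theta, v, tau, alpha)
    return theta
```

With `tau = 0.5` the update penalizes over- and underestimation equally, so `estimate_quantile([1, 2, 3, 4, 5], tau=0.5)` settles close to the median of the samples; with a constant learning rate it keeps oscillating slightly around that value rather than converging exactly.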

The Performance Metrics (PMs) considered for the evaluation of the proposed SLM_QTDL framework are defined as follows:

PM1: Total Energy Consumption (TEC(SLM_QTDL)): The total energy consumption of the SLM_QTDL is the sum of the static energy consumption and the dynamic energy consumption over a time interval [17]. The static energy consumption is the energy consumed by a fog node while it is active. The dynamic energy consumption is the energy consumed by a fog node while servicing the applications. It is computed as the product of the CPU capability of the fog node during the time interval and the maximum energy consumption of the fog node.

PM2: Makespan Time Violation (MSV(SLM_QTDL)): Makespan time is the total processing delay and communication delay incurred while servicing an application, i.e., the sum of the network latency and the processing latency. The violation is measured as the difference between the maximum makespan time incurred to process the application using the SLM_QTDL and the makespan time defined by the QoS profile of the application.

PM3: Budget Violation (BV(SLM_QTDL)): The budget is calculated by considering the cost involved in servicing the applications. Budget violation is measured as the product of the cost of executing the application on a fog node and the pricing model followed at that fog node.

PM4: Load Imbalance (LI(SLM_QTDL)): The load on a fog node is computed as the weighted sum of the different types of resources utilized by the fog node, i.e., CPU utilization, memory utilization, and bandwidth utilization, where a separate weight is assigned to each type of resource.
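The four metrics above can be written out as a short sketch. All function and parameter names are hypothetical, the default weights are illustrative values assumed to sum to 1, and the aggregation used in `load_imbalance` (spread between the most and least loaded node) is an assumption, since the text only defines the per-node load.

```python
def total_energy(static_energy, cpu_capability, max_energy):
    """PM1: static energy plus dynamic energy (CPU capability x max energy)."""
    return static_energy + cpu_capability * max_energy


def makespan(network_latency, processing_latency):
    """Makespan as communication delay plus processing delay."""
    return network_latency + processing_latency


def makespan_violation(observed_makespans, qos_makespan):
    """PM2: largest observed makespan minus the makespan in the QoS profile."""
    return max(observed_makespans) - qos_makespan


def budget_violation(execution_cost, price):
    """PM3: product of the execution cost and the fog node's price."""
    return execution_cost * price


def node_load(cpu_util, mem_util, bw_util, weights=(0.4, 0.3, 0.3)):
    """Weighted sum of resource utilizations (weights are assumed values)."""
    w_cpu, w_mem, w_bw = weights
    return w_cpu * cpu_util + w_mem * mem_util + w_bw * bw_util


def load_imbalance(loads):
    """PM4 (assumed aggregation): gap between most and least loaded node."""
    return max(loads) - min(loads)
```

For example, two fog nodes with utilizations `(0.9, 0.5, 0.5)` and `(0.1, 0.5, 0.5)` differ only in CPU load, so their imbalance under these assumed weights is driven entirely by the CPU weight.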

4. Proposed Work

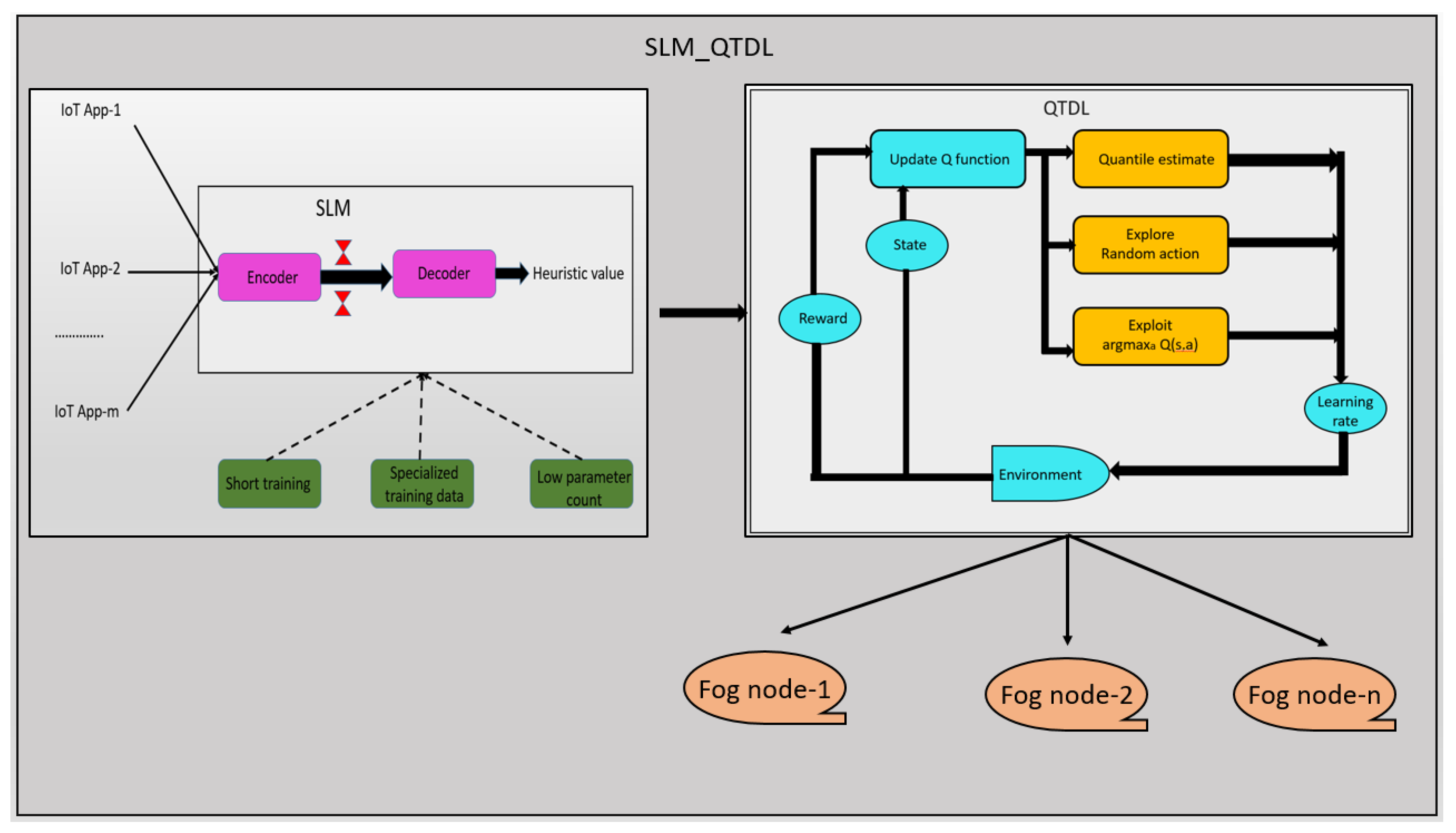

The architecture of the SLM-guided QTDL framework for application placement in fog computing is shown in Figure 1. The framework is composed of two modules: SLM and QTDL. The SLM module takes as input the applications with varying resource requirements. The SLM-enriched QTDL learning agent can perform specific tasks on edge devices that have few resources. The foundational elements of QTDL are state (s), action (a), and reward (r). The state represents the environment status of the temporal difference learning agent at a given time t and includes metrics like bandwidth, CPU capacity, task queue length, and memory. The action is the decision made in response to the current system state; actions might include changing the task scheduling policies, allocating fewer or more resources, and offloading tasks to appropriate fog nodes. The reward is the feedback signal obtained for the action performed by the agent. It guides the agent towards balanced, energy-efficient behavior, which is measured by considering penalties for improper actions, energy consumption, and task completion time. An array of SLMs can exhibit the functionality of an LLM, but they are small and demand a smaller dataset for training. Quantile learning over the distribution of returns is more accurate, as it can precisely estimate the tradeoff between variance updates and asymptotic predictions. After sufficient estimation over the quantiles, the agent determines the true value function. The integration of SLM with QTDL combines a compact language-guided reasoning mechanism with distributional value estimation. This integration is useful in developing frameworks for resource-constrained environments like fog computing.

The design principles followed to develop the framework are as follows. The SLM is used to parse and understand the application requirements. Low latency and low memory requirements are ensured through contextual embeddings, which guide action selection and value estimation. Latent representation alignment is carried out to ensure that the SLM output embeddings are compatible with the quantile network input space. The number of quantiles is kept small to suit tiny, distilled models. Quantized SLM models perform on-device inference and use the recent quantile outputs for experience replay. Quantile outputs express the uncertainty over the actions and are combined with the language-derived intent to explain the actions. The SLM generates heuristic values that serve as a learned function to guide the QTDL agent based on its understanding of the application. In essence, the SLM interprets the state description of the applications and outputs a scalar value that represents the desirability of the action. The state and action are encoded and passed through the SLM. The output layer of the SLM is fine-tuned using the feedback of the reinforcement learning agent to output the heuristic score.

The detailed working of the framework is shown in Algorithm 1. The working of the framework is divided into training and testing phases. During the training phase, the quantile regression loss and the gradient loss are computed and updated accordingly. The Dirac delta approximation is used to compute the value of the quantiles. The quantile regression loss is used to estimate the distribution of returns. Quantile values are updated using gradient loss estimators. Similarly, during the testing phase, the updated quantile rule is formulated through unbiased estimation of the regression loss and the gradient loss. The quantile loss is re-evaluated, and update rules are formulated for each of the applications. The quantile estimate is refined to arrive at precise application placement policies. The QTDL agent stores a collection of predictions at each state of operation, which is the core component of the agent's functionality. The agent keeps observing every ongoing transition in the state space and updates all the Q value estimates whose learning rate is greater than or equal to zero. The temporal difference error is used for learning, which is analogous to the basic working principle of traditional TDL. The influence of the temporal difference error on the Q value update is determined by its sign, not its magnitude. Each of the resource allocation predictions made at a state includes a distinct term in its update. The inclusion of distinct terms and the consideration of the sign make the predictions accurate through properly learnt parameters. The framework scales easily with the size of the state space, as each state of the agent can store multiple predictions. Even small models lack visibility into the reliability, readiness, and resource availability of IoT devices. Timely monitoring of the computation resources in the distributed fog computing environment is required to ensure balanced distribution of applications, enable fault-tolerant operation, and perform latency- and energy-aware application placement.

| Algorithm 1: Working of SLM-guided QTDL framework for IoT application placement |

1: Start

2: Input: A set of applications, i.e., , quantile estimate , R is the reward set of dimension m, observed transition of state on reward , i.e., s is the current state, is the next state entered after receiving the reward r, Small Language Model (SLM), Prompt (P), Heuristic Q buffer

3: Output: Application placement policies, i.e., .

4: Generate Heuristic Q buffer:

4: Training phase of SLM_QTDL

5: Set for each of the i ranges from 1 to m

6: For each of the applications in the application set, i.e., do

7: Perform sampling over fog state space environment

8: Update the generated Q buffer

9: Sample the transition from updated Q buffer

10: Compute approximation of equally weighted mixture of Dirac deltas for state x, i.e., ,

11: Determine quantile regression loss , for each distribution v, where v is the target value, is the predicted quantile value, is the quantile level which ranges between 0 and 1.

12: Compute quantile regression loss , where

13: Unbiased estimator of negative gradient loss , where is the value threshold, penalizes overestimate with the weight value of , penalizes underestimate with the weight of .

14: Update the rule , where is the ith predicted quantile value of state x, is the learning rate.

15: Output the updated rule + H(s,a)

16: End for

17: Set

18: End for

19: Generate the updated trained quantile value

20: End Training phase of SLM_QTDL

21: Testing phase of SLM_QTDL

22: For each of the applications in the application set, i.e., do

23: Determine quantile regression loss of line number 11

24: Compute quantile regression loss of line number 12

25: Unbiased estimator of negative gradient loss of line number 13

26: Update the rule of line number 14

27: Output the updated rule + H(s,a)

28: End for

29: Set

30: End for

31: Generate the updated tested quantile value

32: End Testing phase of SLM_QTDL

33: Output

34: Stop |
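Steps 10 to 15 of Algorithm 1 can be sketched as follows. The sketch assumes the usual quantile regression (pinball) loss and an equally weighted mixture of Dirac deltas, and it treats the heuristic H(s,a) as a plain number supplied by the SLM. All function names and values are hypothetical and serve only to illustrate the structure of the computation.

```python
def pinball_loss(target, prediction, tau):
    """Quantile regression loss for one quantile level tau (assumed standard form).

    Underestimates (target > prediction) are weighted by tau,
    overestimates (target < prediction) by (1 - tau).
    """
    u = target - prediction
    return u * (tau - (1.0 if u < 0 else 0.0))


def mixture_mean(theta):
    """Mean of an equally weighted mixture of Dirac deltas placed at theta."""
    return sum(theta) / len(theta)


def guided_value(theta, heuristic):
    """Learned Q estimate plus the heuristic term H(s, a)."""
    return mixture_mean(theta) + heuristic


theta = [1.0, 2.0, 3.0]                  # illustrative quantile estimates for one state
under = pinball_loss(target=3.0, prediction=1.0, tau=0.9)   # underestimate
over = pinball_loss(target=1.0, prediction=3.0, tau=0.9)    # overestimate
q_guided = guided_value(theta, heuristic=0.5)               # H(s, a) = 0.5 is illustrative
print(under, over, q_guided)
```

With a high quantile level such as 0.9, an underestimate is penalized far more than an overestimate of the same size, which is how the asymmetric weights in step 13 shape each quantile.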

5. Expected Value Analysis

The expected value analysis of the proposed SLM_QTDL framework is carried out for a typical fog computing scenario. It is common practice in decision theory to evaluate outcomes under uncertainty using probability-weighted values, i.e., the average values obtained when an experiment is repeated many times. The performance of SLM_QTDL is compared with that of three recent frameworks: Memetic Algorithm (MA) [12], Prioritization Model (PM) [6], and Distributed Deep Learning (DDL) [15]. The performance metrics are total energy consumption, makespan time violation, budget violation, and load imbalance.
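As a small illustration of a probability-weighted value, the sketch below computes the expected energy of one placement decision over three load scenarios. The scenario probabilities and energy figures are hypothetical and are not results from this study.

```python
def expected_value(outcomes, probabilities):
    """Probability-weighted average of a discrete set of outcomes."""
    if len(outcomes) != len(probabilities):
        raise ValueError("outcomes and probabilities must have equal length")
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(o * p for o, p in zip(outcomes, probabilities))


# Hypothetical energy (Joules) under light, medium, and heavy load
energy = [2000.0, 4000.0, 6000.0]
prob = [0.5, 0.3, 0.2]
expected_energy = expected_value(energy, prob)
print(expected_energy)
```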

PM1: Energy consumption (EC(SLM_QTDL)): The energy consumption of the SLM_QTDL is influenced by the value of

and

.

EC(SLM_QTDL):

EC(MA):

EC(PM):

EC(DDL):

EC(SLM_QTDL)(a) represents the energy consumption of the model after the agent takes action a. The energy is averaged over the policy, integrated with respect to time, and averaged across all IoT devices. By integrating over the quantiles, the distributional returns incorporate risk awareness into the TD learning model, so the model captures both the mean behavior and the risk-causing behavior of the formulated policy. Together, the quantile space and the probability density of the quantile returns yield an accurate estimate of the energy consumed under dynamically varying fog node characteristics.

PM2: Makespan time violation (MSV(SLM_QTDL)): The makespan time violation of the SLM_QTDL is influenced by the maximum value of the makespan time

and makespan time as defined by the QoS profile

.

MSV(SLM_QTDL):

MSV(MA) =

MSV(PM) =

MSV(DDL) =

MSV(SLM_QTDL)(a) represents the expected violation of the makespan time after taking action a. The average makespan time violation is computed from both the worst-case execution time and the targeted quality of service (QoS), and it is aggregated over the formulated policies. The makespan time violation parameters are combined with the distribution of return quantiles to represent the uncertainty of the fog environment. The model is made risk aware by capturing this uncertainty through the distributional form of temporal difference learning. Effective measurement of the makespan time is ensured by adjusting the learned application placement policies according to the time-sensitive QoS parameters.
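One way to read this description is sketched below. It assumes that a violation is the amount by which the makespan exceeds the QoS deadline, and that the expectation is taken over equally weighted quantile estimates of the makespan. Both assumptions, along with the function names and numbers, are illustrative and are not the paper's closed-form expression.

```python
def makespan_violation(makespan, qos_deadline):
    """Amount by which the makespan exceeds the QoS deadline (zero if met)."""
    return max(0.0, makespan - qos_deadline)


def expected_violation(makespan_quantiles, qos_deadline):
    """Average violation over equally weighted quantile estimates."""
    violations = [makespan_violation(q, qos_deadline) for q in makespan_quantiles]
    return sum(violations) / len(violations)


def tail_violation(makespan_quantiles, qos_deadline, tail_fraction=0.25):
    """Average violation over the worst quantiles only, a simple risk-aware view."""
    ordered = sorted(makespan_quantiles)
    k = max(1, int(round(len(ordered) * tail_fraction)))
    return expected_violation(ordered[-k:], qos_deadline)


quantiles = [8.0, 9.0, 11.0, 14.0]   # hypothetical makespan quantiles (seconds)
deadline = 10.0                      # hypothetical QoS deadline (seconds)
mean_msv = expected_violation(quantiles, deadline)
worst_msv = tail_violation(quantiles, deadline)
print(mean_msv, worst_msv)
```

Comparing the mean with the tail value shows why a distributional estimate is useful: the average violation looks small while the worst-case quantile reveals a much larger deadline miss.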

PM3: Budget violation (BV(SLM_QTDL)): The budget violation of the SLM_QTDL is influenced by the cost of executing the application on the fog node

and pricing model followed at the fog node

.

BV(SLM_QTDL):

BV(MA) =

BV(PM) =

BV(DDL) =

BV(SLM_QTDL)(a) represents the budget violation of the SLM_QTDL agent after executing action a. The budget violation is averaged over time and over all policy actions taken. The edge resource cost and the per-node pricing are averaged across all applications. Distributional, quantile-aware resource pricing makes the policy adaptable to risk-sensitive situations. The variability in price and cost is captured by integrating over the quantile space together with the probability density.

PM4: Load imbalance (LI(SLM_QTDL)): The load imbalance of the SLM_QTDL is influenced by CPU utilization

, memory utilization

, and bandwidth utilization

.

LI(SLM_QTDL):

LI(MA) =

LI(PM) =

LI(DDL) =

LI(SLM_QTDL) captures the contribution of each resource to the load imbalance. The CPU, memory, and bandwidth utilization of each fog node is measured over the time interval. The weighted coefficients represent the operational priority, and aggregating them yields a unified value for the load imbalance metric. Assigning distributional weights to the resource components produces a risk-sensitive load-balancing model. The stochastic variance in resource usage is captured through the combination of quantile returns and probability densities. Overall system resilience is improved by minimizing the chance of load imbalance through quantile-aware application placement policies.
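A weighted aggregation of this kind can be sketched as follows. The sketch assumes that the imbalance of each resource is measured as the population standard deviation of its utilization across fog nodes, and that the three values are combined with priority weights. The choice of standard deviation, the weights, and the utilization figures are assumptions made for illustration.

```python
from statistics import pstdev


def load_imbalance(cpu, mem, bw, weights=(0.5, 0.3, 0.2)):
    """Weighted sum of per-resource utilization spread across fog nodes.

    cpu, mem, bw : utilization per fog node, each value in [0, 1]
    weights      : illustrative priority weights for CPU, memory, bandwidth
    """
    w_cpu, w_mem, w_bw = weights
    return w_cpu * pstdev(cpu) + w_mem * pstdev(mem) + w_bw * pstdev(bw)


# Evenly loaded nodes give zero imbalance
balanced = load_imbalance([0.5, 0.5, 0.5], [0.4, 0.4, 0.4], [0.3, 0.3, 0.3])

# CPU load concentrated on one node raises the metric
skewed = load_imbalance([0.2, 0.8], [0.5, 0.5], [0.5, 0.5])
print(balanced, skewed)
```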

6. Results and Discussion

The proposed SLM_QTDL framework is experimentally evaluated using the iFogSim simulator [18, 19, 20]. The simulation duration ranges from 120 s to 240 s. The simulation parameters are initialized as shown in Table 1. The QTDL model is trained externally using the scikit-learn package in Python 3.13.7. The iFogSim Java code is modified to read the QTDL model output. The generated output is used to train the QTDL model, which predicts the resource requirements of the incoming applications. The embedded Java machine learning libraries include Weka and Deeplearning4j. The custom modules embedded inside the machine learning logic are ModulePlacement and APPModuleScheduler. The QTDL decision steps are selected by modifying controller.java. iFogSim and the QTDL model run in parallel, and a REST API and sockets are used for inter-process communication.
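The socket side of such an exchange might look like the minimal sketch below, in which the Python process answers one newline-terminated JSON request. The message fields, the function `predict_resources`, and its placeholder scaling rule are hypothetical stand-ins for the trained model; the REST path is not shown.

```python
import json
import socket


def predict_resources(app):
    """Hypothetical stand-in for the trained QTDL model."""
    # Placeholder rule: scale the requested MIPS by a fixed factor.
    return {"app_id": app["app_id"], "cpu_mips": app["mips"] * 1.2}


def handle_request(raw):
    """Decode one JSON request line and return one JSON response line."""
    app = json.loads(raw.decode("utf-8"))
    return (json.dumps(predict_resources(app)) + "\n").encode("utf-8")


def serve_one(conn):
    """Read a newline-terminated request from a connected socket and reply."""
    buffer = b""
    while not buffer.endswith(b"\n"):
        chunk = conn.recv(4096)
        if not chunk:
            break
        buffer += chunk
    conn.sendall(handle_request(buffer))


# Local demonstration with a connected socket pair instead of a network port
client, server = socket.socketpair()
client.sendall(b'{"app_id": "a1", "mips": 1000}\n')
serve_one(server)
reply = json.loads(client.recv(4096).decode("utf-8"))
client.close()
server.close()
print(reply)
```

In a real deployment, the simulator would connect to a listening port rather than a socket pair; the line-delimited JSON framing is one simple option among many.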

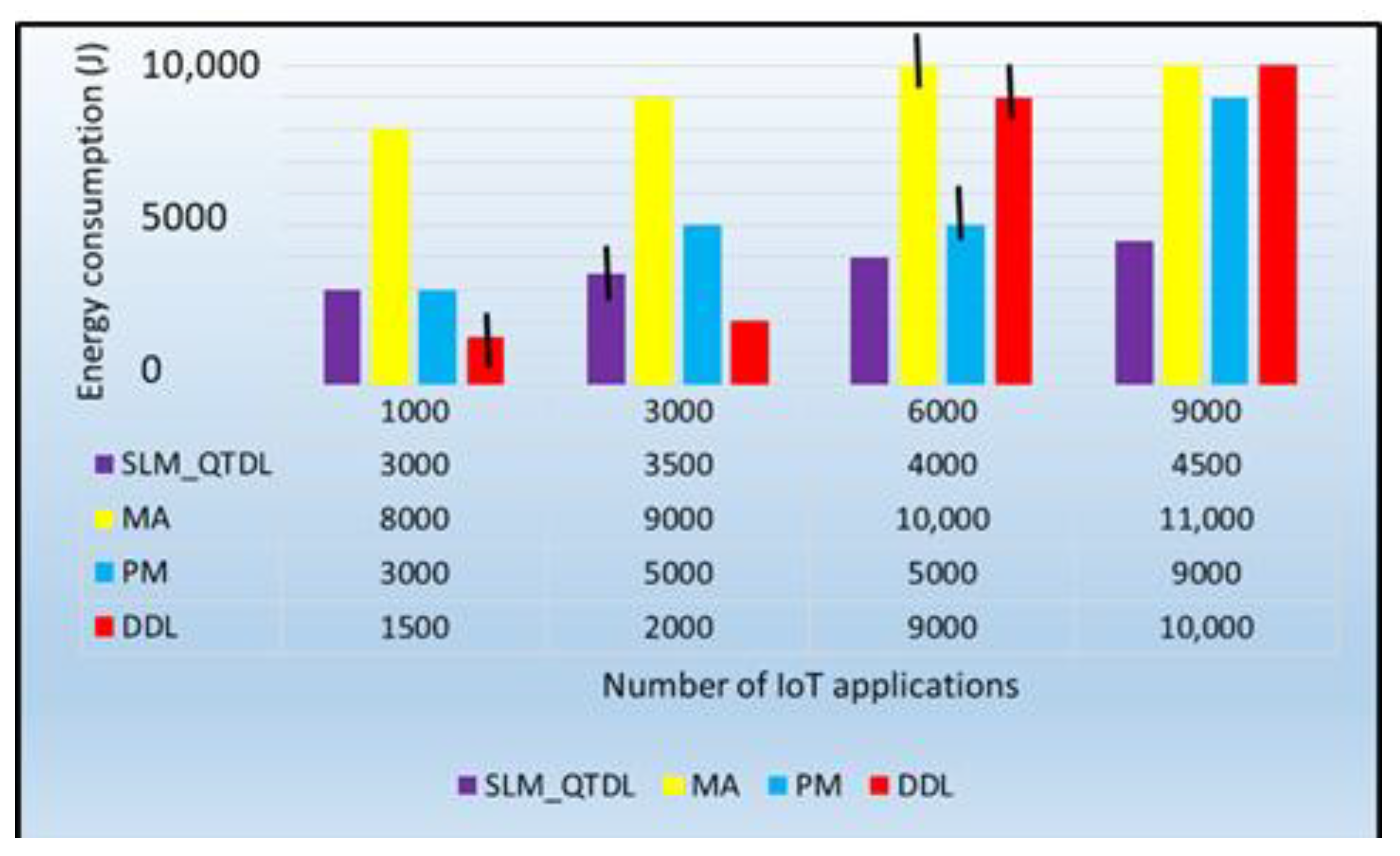

A graph of the number of applications versus energy consumption is shown in Figure 2. The graph shows that the energy consumption of SLM_QTDL stays consistently lower as the number of applications increases. The SLM-enriched QTDL needs less processing power and trains well even with limited computational resources. It also prevents sharp increases in the TD error through faster and more stable reward convergence. The confidence level is 95%, i.e., about 95 out of 100 intervals constructed in this way are expected to contain the true average. The 95% confidence interval for SLM_QTDL is [2000–6000] Joules. The energy consumption of MA keeps increasing with the number of applications because it becomes stuck in local optima due to the local search component used for decision making; the 95% confidence interval of MA is [3500–13,000] Joules. The energy consumption of PM and DDL remains moderate as the number of applications increases. The frequent synchronization of gradient parameters slows down the training process and leads to convergence to suboptimal solutions. The 95% confidence intervals of PM and DDL are [5000–14,000] Joules.
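For reference, a 95% confidence interval for a mean can be computed from repeated runs as in the sketch below. It uses the normal approximation, which is an assumption: for a small number of runs, a Student t quantile would give a wider and more appropriate interval. The energy samples are hypothetical and are not the measurements reported here.

```python
from statistics import NormalDist, mean, stdev


def confidence_interval(samples, level=0.95):
    """Normal-approximation confidence interval for the mean of the samples."""
    n = len(samples)
    center = mean(samples)
    z = NormalDist().inv_cdf(0.5 + level / 2.0)   # about 1.96 for level = 0.95
    half_width = z * stdev(samples) / n ** 0.5
    return center - half_width, center + half_width


# Hypothetical energy consumption (Joules) from repeated simulation runs
runs = [3900.0, 4100.0, 4000.0, 4200.0, 3800.0, 4050.0, 3950.0, 4000.0]
low, high = confidence_interval(runs)
print(low, high)
```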

A graph of the number of fog nodes versus makespan time violations is given in Figure 3. The graph shows that the makespan time violation frequency is lower for SLM_QTDL as the number of fog nodes increases, because it has a short processing time and converges to an optimal estimate of the Q function value. The makespan time violation frequency is moderate for MA and PM: the higher-intensity local search mechanism and the finer tuning of parameters incur a longer processing time. Meanwhile, the makespan time violation frequency is very high for DDL as the number of fog nodes increases. Its communication overhead is greater, and unstable training often results in a performance bottleneck.

A graph of the number of applications versus budget violations is given in Figure 4. The graph indicates that the budget violation frequency stays consistently lower for SLM_QTDL as the number of applications increases, as it uses quantile regression and TD learning that are validated using the heuristics. The budget violation frequency remains moderate for MA, whose local search mechanisms are highly expensive and eventually slow down the memetic logic. The budget violation frequency remains very high for both PM and DDL; due to their higher operation capacity and flexibility, they are often prone to overfitting.

A graph of the number of fog nodes versus load imbalance is shown in Figure 5. The graph indicates that the load imbalance frequency is much lower for SLM_QTDL because it employs distributed reinforcement learning policies and evaluates them using a discounted sum of rewards for better exploration of the state space. The temporal difference error is also lower because hallucination is controlled by propagating only true beliefs. The load imbalance frequency of MA remains moderate because it does not scale automatically to higher-dimensional problems. Meanwhile, the load imbalance frequency of PM and DDL keeps increasing with the number of fog nodes. Gradient staleness during training often leads to higher training instability and erratic model behavior.

The implementation shows that the combination of a small language model and temporal difference learning prevents overestimation and underestimation of the Q values. Through proper reward shaping, an ambiguous and noisy state space environment is traversed to arrive at proper decisions. The application placement policies are found to be accurate and, in turn, improve the overall system performance with respect to energy consumption, makespan time violations, budget violations, and load imbalance ratio.

7. Conclusions

This paper presents a novel small language model-guided temporal difference learning framework designed to perform optimal application placement in fog computing. The heuristic value generated by the SLM is used to learn the exact Q value of TD learning. This avoids improper guidance of the TD learning agent and thereby prevents hallucination and biased decisions. It also yields stable Q value variance, an improved learning rate, and a lower temporal difference error. The SLM-enriched Q value offers greater flexibility through proper reshaping of the reward function. The experimental results obtained with the iFogSim 3.3 simulator show improvements over MA, PM, and DDL with respect to energy consumption, makespan time violations, budget violations, and load imbalance. However, SLMs are trained on significantly less data and therefore lack a thorough understanding of the ongoing dynamics of the fog environment. Small models have fewer parameters, which limits their applicability, and they cannot handle ambiguous forms of the applications. As the models are not fine-tuned, they need to be explicitly adapted to the resource-constrained fog environment. In future work, we aim to enhance the scalability of the framework to handle heterogeneous resources and to distribute applications efficiently among the fog nodes while satisfying the QoS and QoE constraints of the end users. By fine-tuning the small language models, in-context and out-of-context learning will be performed effectively to act under uncertainty in the operating environment. To handle the monitoring issues, lightweight monitoring agents will be incorporated to design a unified framework. A score will be attached to every fog node based on uptime history, task success rate, and failure frequency to choose reliable fog nodes for application placement.