Zero-Shot Learning for S&P 500 Forecasting via Constituent-Level Dynamics: Latent Structure Modeling Without Index Supervision

Abstract

1. Introduction

2. Materials and Methods

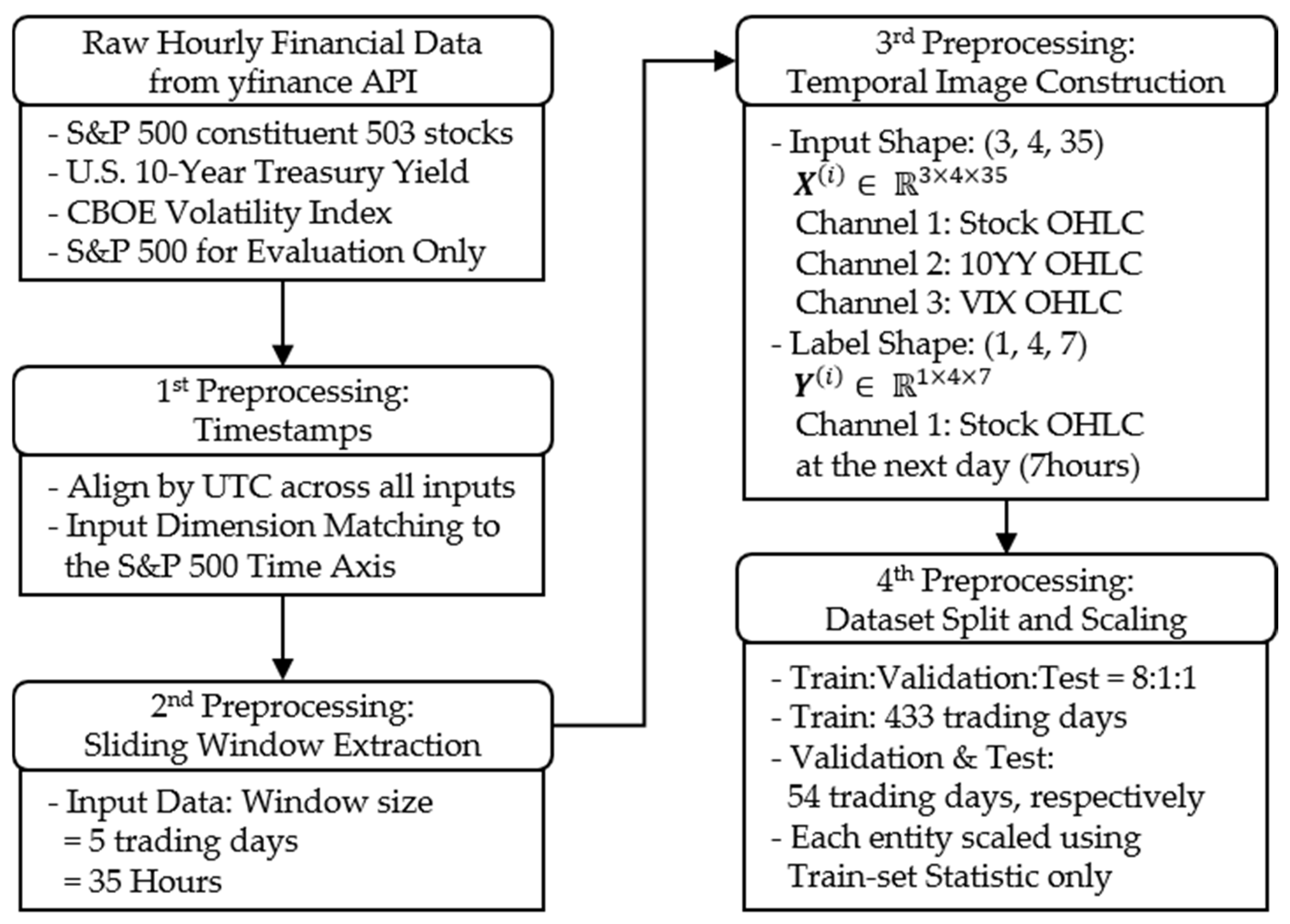

2.1. Dataset
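The dataset summary later in this document lists a 5-day input window of 35 hourly steps, an input tensor of shape (3, 4, 35) and a label of shape (1, 4, 7). The sketch below shows one way such samples could be cut from aligned hourly series. It rests on several assumptions: 7 hourly bars per trading day, a stride of one trading day, channel 0 as the forecast target, and the channel order [target, 10YY, VIX]. The helpers `make_windows` and `to_feature_major` are hypothetical and are not the authors' code.

```python
from typing import List, Sequence, Tuple

Bar = Sequence[float]              # one hourly bar: (open, high, low, close)
FeatureMajor = List[List[float]]   # 4 feature rows (O, H, L, C), each of length T


def to_feature_major(bars: Sequence[Bar]) -> FeatureMajor:
    """Reorder T bars into 4 rows of length T so one channel has shape (4, T)."""
    return [[float(bar[k]) for bar in bars] for k in range(4)]


def make_windows(
    channels: Sequence[Sequence[Bar]],  # assumed order: [target, 10YY, VIX]
    input_steps: int = 35,              # 5 trading days x 7 hourly bars (assumed)
    label_steps: int = 7,               # the following trading day (assumed)
    stride: int = 7,                    # advance one trading day per sample (assumed)
) -> List[Tuple[List[FeatureMajor], List[FeatureMajor]]]:
    """Return (input, label) pairs shaped (C, 4, input_steps) and (1, 4, label_steps)."""
    n = len(channels[0])
    if any(len(c) != n for c in channels):
        raise ValueError("all channels must share the same hourly timeline")
    samples = []
    for start in range(0, n - input_steps - label_steps + 1, stride):
        end = start + input_steps
        # Input: every channel over the look-back window.
        x = [to_feature_major(c[start:end]) for c in channels]
        # Label: only the target channel over the next trading day.
        y = [to_feature_major(channels[0][end:end + label_steps])]
        samples.append((x, y))
    return samples
```

Because the routine only needs aligned hourly bars, the same call applies unchanged to the S&P 500 series at evaluation time, so a model trained on constituent windows can be scored on index windows of identical shape.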

2.2. Study Design

- (1) a Variational AutoEncoder (VAE) for supervised learning;
- (2) a Conditional Denoising Diffusion Probabilistic Model (cDDPM);
- (3) a spatial Transformer with an encoder–decoder (Transformer-ED);
- (4) a spatial Transformer with an encoder only (Transformer-E).

2.3. Methodologies

2.3.1. OHLC Order-Consistency Loss
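An order-consistency term for OHLC forecasts can be sketched as follows, assuming that a valid bar satisfies Low ≤ min(Open, Close) and max(Open, Close) ≤ High (which also implies Low ≤ High) and that each violation is penalized linearly with a hinge. The hinge form, the averaging over bars, and the name `ohlc_order_penalty` are illustrative assumptions rather than the paper's exact definition.

```python
from typing import Sequence


def ohlc_order_penalty(bars: Sequence[Sequence[float]]) -> float:
    """Mean hinge penalty for predicted bars that break OHLC ordering (assumed form).

    A bar (open, high, low, close) is treated as valid when
    low <= min(open, close) and max(open, close) <= high.
    """
    if not bars:
        return 0.0
    total = 0.0
    for open_, high, low, close in bars:
        total += max(0.0, max(open_, close) - high)  # High sits below the candle body
        total += max(0.0, low - min(open_, close))   # Low sits above the candle body
    return total / len(bars)
```

In training, such a term would typically be added to the forecasting loss with a weight, for example `loss = mse + lam * ohlc_order_penalty(pred_bars)`, where `lam` is a hypothetical hyperparameter. The penalty is zero for any bar that already respects the ordering, so it only acts on inconsistent predictions.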

2.3.2. Variational AutoEncoder for Supervised Learning
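For reference, the standard VAE machinery of Kingma and Welling (2013) can be sketched with the reparameterization trick and the closed-form Kullback–Leibler divergence between a diagonal Gaussian posterior and a standard normal prior. The sketch assumes that "supervised" means the decoder output is scored against the forecast label rather than against the input. The mean-squared prediction term, the weight `beta`, and all function names are assumptions for illustration.

```python
import math
import random
from typing import List, Sequence


def reparameterize(mu: Sequence[float], log_var: Sequence[float],
                   rng: random.Random) -> List[float]:
    """z = mu + sigma * eps with eps ~ N(0, 1) (the reparameterization trick,
    which lets gradients reach mu and log_var in an autodiff framework)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def gaussian_kld(mu: Sequence[float], log_var: Sequence[float]) -> float:
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def vae_forecast_loss(pred: Sequence[float], target: Sequence[float],
                      mu: Sequence[float], log_var: Sequence[float],
                      beta: float = 1.0) -> float:
    """Assumed supervised objective: MSE to the forecast label plus a beta-weighted KL term."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)
    return mse + beta * gaussian_kld(mu, log_var)
```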

2.3.3. Conditional Denoising Diffusion Probabilistic Model
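The forward (noising) process of a DDPM (Ho et al., 2020) has the closed form x_t = sqrt(ᾱ_t) x_0 + sqrt(1 − ᾱ_t) ε with ᾱ_t = ∏_{s≤t} (1 − β_s), and the network is commonly trained to predict ε. The sketch below uses the linear β schedule from that paper. In a conditional model, the condition (assumed here to be the observed input window) would be passed to the noise-prediction network together with x_t and t; that network is not shown, and all function names are illustrative.

```python
import math
import random
from typing import List, Sequence, Tuple


def linear_betas(steps: int = 1000, beta_start: float = 1e-4,
                 beta_end: float = 0.02) -> List[float]:
    """Linearly spaced noise variances beta_1..beta_T."""
    return [beta_start + (beta_end - beta_start) * i / (steps - 1) for i in range(steps)]


def cumulative_alphas(betas: Sequence[float]) -> List[float]:
    """alpha_bar_t = prod_{s <= t} (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def q_sample(x0: Sequence[float], t: int, alpha_bar: Sequence[float],
             rng: random.Random) -> Tuple[List[float], List[float]]:
    """Draw x_t ~ q(x_t | x_0) in one step; returns (x_t, eps)."""
    a = alpha_bar[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps


def noise_prediction_loss(eps_pred: Sequence[float], eps: Sequence[float]) -> float:
    """Simple DDPM objective: mean squared error between predicted and true noise.

    In a conditional model, eps_pred would come from a network fed (x_t, t, condition).
    """
    return sum((p - e) ** 2 for p, e in zip(eps_pred, eps)) / len(eps)
```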

2.3.4. Spatial Transformer: Encoder–Decoder Structure and Encoder-Only Configuration
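Both Transformer variants rest on scaled dot-product attention, softmax(QKᵀ/√d_k)V (Vaswani et al., 2017). The single-head sketch below shows only that core operation. It makes no assumption about which axis the paper's "spatial" Transformer treats as tokens, or how many heads and layers it uses; the helper names are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def matmul(a: Sequence[Sequence[float]], b: Sequence[Sequence[float]]) -> Matrix:
    """Plain (n x m) @ (m x p) matrix product."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax(row: Sequence[float]) -> List[float]:
    """Numerically stable softmax of one row."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Sequence[Sequence[float]],
                                 k: Sequence[Sequence[float]],
                                 v: Sequence[Sequence[float]]) -> Matrix:
    """softmax(q k^T / sqrt(d_k)) v for a single head, without masking."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]   # transpose keys: (d_k x n_keys)
    scores = matmul(q, k_t)                # (n_queries x n_keys)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)              # (n_queries x d_v)
```

In an encoder-only configuration, q, k and v all come from the same input sequence (self-attention). In a standard encoder–decoder Transformer, the decoder additionally applies the same operation with q taken from its own states and k, v taken from the encoder output (cross-attention).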

2.4. Metrics: Average RMSE and Average MAE
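A minimal sketch of the two metrics, assuming that each forecast sample (for example, the 4 × 7 OHLC label) is flattened, RMSE and MAE are computed per sample, and both are then averaged over samples. This averaging order is an assumption, and the name `average_rmse_mae` is hypothetical.

```python
import math
from typing import Sequence, Tuple


def average_rmse_mae(preds: Sequence[Sequence[float]],
                     targets: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Return (aRMSE, aMAE): per-sample RMSE and MAE, each averaged over samples."""
    if len(preds) != len(targets) or not preds:
        raise ValueError("need the same, non-zero number of predictions and targets")
    rmses, maes = [], []
    for p, t in zip(preds, targets):
        errors = [a - b for a, b in zip(p, t)]  # one flattened sample, e.g. 4 x 7 = 28 values
        rmses.append(math.sqrt(sum(e * e for e in errors) / len(errors)))
        maes.append(sum(abs(e) for e in errors) / len(errors))
    return sum(rmses) / len(rmses), sum(maes) / len(maes)
```

Because RMSE is never smaller than MAE on the same errors, this per-sample version always yields aRMSE ≥ aMAE; where reported values show the opposite, the averaging or squaring convention behind them differs from this sketch.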

3. Results

3.1. Experiment Settings

- (1) Direct-supervision setting: The models were trained directly on the S&P 500 index data together with the 10YY and the VIX, allowing them to learn index-level dynamics under explicit supervision. For each model, we kept the weights from the epoch with the minimum validation loss.
- (2) Zero-shot setting (core of our study): The zero-shot models were trained only on individual constituent stocks, without any access to index-level data, and were then evaluated on the S&P 500 using its OHLC sequence together with the corresponding macroeconomic inputs (10YY and VIX). For each zero-shot model, we used the weights from the epoch with the lowest validation loss on the constituent-level stock and macroeconomic inputs, so the index was not used for model selection either.
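The model-selection rule shared by both settings (keep the weights from the epoch with the lowest validation loss) and the zero-shot separation between selection data and evaluation data can be sketched as below. `checkpoints`, `val_losses` and `evaluate_on_index` are hypothetical stand-ins: in the zero-shot setting, the validation losses would come only from constituent-level (stock plus macroeconomic) data, and the S&P 500 would enter only through the evaluation callback.

```python
from typing import Callable, Sequence, Tuple, TypeVar

W = TypeVar("W")  # whatever object holds one epoch's saved weights (hypothetical)
R = TypeVar("R")  # whatever the evaluation returns, e.g. (aRMSE, aMAE)


def select_best_epoch(val_losses: Sequence[float]) -> int:
    """Index of the epoch with the lowest validation loss (earliest epoch wins ties)."""
    if not val_losses:
        raise ValueError("need at least one validation loss")
    return min(range(len(val_losses)), key=lambda i: val_losses[i])


def zero_shot_evaluate(
    checkpoints: Sequence[W],
    val_losses: Sequence[float],          # computed on constituent-level validation data only
    evaluate_on_index: Callable[[W], R],  # the only place index-level data is touched
) -> Tuple[int, R]:
    """Pick the checkpoint without index data, then score it once on the index."""
    best = select_best_epoch(val_losses)
    return best, evaluate_on_index(checkpoints[best])
```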

3.2. Quantitative Experiment Results

3.2.1. Direct-Supervision Forecasting

3.2.2. Zero-Shot Forecasting

3.3. Qualitative Visualization

4. Discussion

4.1. Analysis and Insights

4.2. Limitations and Future Works

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 10YY | U.S. 10-Year Treasury Yield |
| aMAE | Average Mean Absolute Error |
| ARCH | Autoregressive Conditional Heteroskedasticity |
| aRMSE | Average Root Mean Squared Error |
| cDDPM | Conditional Denoising Diffusion Probabilistic Model |
| CNN | Convolutional Neural Network |
| DDPM | Denoising Diffusion Probabilistic Model |
| GAN | Generative Adversarial Network |
| KLD | Kullback–Leibler Divergence |
| LLM | Large Language Model |
| LSTM | Long Short-Term Memory |
| MSE | Mean Squared Error |
| OHLC | Open, High, Low, and Close |
| Transformer-E | Spatial Transformer with an Encoder only |
| Transformer-ED | Spatial Transformer with an Encoder–Decoder |
| VIX | CBOE Volatility Index |
| ZLB | Zero Lower Bound |


| Category | Description |
|---|---|
| Time Span | 1 April 2023–20 June 2025 |
| Trading Days | 551 |
| Input Window | 5 Days (35 hourly steps) |
| Tensor Shape | Input: (3, 4, 35); Label: (1, 4, 7) |
| Features | OHLC for Stock, 10YY, VIX and S&P 500 ¹ |
| Stock Samples (Train/Val/Test) | 208,248/27,162/27,131 |
| Macro/Index Samples (Train/Val/Test) | 433/54/54 |
| Scaling Method | Min–max per entity, using training set statistics |
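The scaling row above can be read as follows: for each entity (a constituent stock, the 10YY, the VIX or the S&P 500), the minimum and maximum are taken from the training period only and then reused for validation and test data. The sketch assumes that the four OHLC features of an entity share one minimum and maximum, which keeps the ordering among the four prices intact; the function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def fit_minmax(train: Dict[str, Sequence[float]]) -> Dict[str, Tuple[float, float]]:
    """Per-entity (min, max) from training-period values only (all OHLC values pooled, assumed)."""
    return {name: (min(values), max(values)) for name, values in train.items()}


def apply_minmax(values: Sequence[float], lo: float, hi: float) -> List[float]:
    """Scale with frozen training statistics; later values may fall outside [0, 1]."""
    span = hi - lo
    if span == 0:
        return [0.0 for _ in values]  # constant training series: map everything to 0
    return [(v - lo) / span for v in values]
```

Because the statistics are frozen at the training period, a series that later moves above its training maximum maps above 1, which is consistent with the maximum of 1.0279 reported for span-2 in the summary statistics table that follows.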

| Span | Type | Count | Mean | Std | Min | 25% | 50% | 75% | Max |
|---|---|---|---|---|---|---|---|---|---|
| Span-1 | Input | 433 | 0.4486 | 0.2931 | 0.0000 | 0.1815 | 0.4579 | 0.6980 | 1.0000 |
| | Label | 433 | 0.4561 | 0.2933 | 0.0007 | 0.1876 | 0.4690 | 0.7015 | 1.0000 |
| Span-2 | Input | 54 | 0.9152 | 0.0839 | 0.7139 | 0.8580 | 0.9387 | 0.9835 | 1.0279 |
| | Label | 54 | 0.9096 | 0.0865 | 0.7139 | 0.8384 | 0.9336 | 0.9835 | 1.0279 |
| Span-3 | Input | 54 | 0.7919 | 0.1445 | 0.3862 | 0.6808 | 0.7960 | 0.9176 | 0.9814 |
| | Label | 54 | 0.8022 | 0.1488 | 0.3862 | 0.6808 | 0.8627 | 0.9303 | 0.9814 |

| Models ¹ | Train aRMSE | Train aMAE | Validation aRMSE | Validation aMAE | Test aRMSE | Test aMAE |
|---|---|---|---|---|---|---|
| VAE | 0.0020 | 0.0357 | 0.0028 | 0.0438 | 0.0059 | 0.0566 |
| cDDPM | 0.2829 | 0.4418 | 0.0215 | 0.0985 | 0.0648 | 0.1990 |
| Transformer-ED | 0.0024 | 0.0366 | 0.0099 | 0.0846 | 0.0111 | 0.0922 |
| Transformer-E | 0.0023 | 0.0353 | 0.0139 | 0.1122 | 0.0164 | 0.1204 |

| Zero-Shot Models ¹ | Span-1 aRMSE | Span-1 aMAE | Span-2 aRMSE | Span-2 aMAE | Span-3 aRMSE | Span-3 aMAE |
|---|---|---|---|---|---|---|
| VAE (Best) | 0.0006 | 0.0187 | 0.0032 | 0.0486 | 0.0033 | 0.0437 |
| cDDPM | 0.3788 | 0.5408 | 0.0156 | 0.0926 | 0.0612 | 0.1973 |
| Transformer-ED | 0.0051 | 0.0508 | 0.0251 | 0.1513 | 0.0268 | 0.1533 |
| Transformer-E | 0.0084 | 0.0656 | 0.0436 | 0.2061 | 0.0471 | 0.2079 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Noh, Y.; Kim, S. Zero-Shot Learning for S&P 500 Forecasting via Constituent-Level Dynamics: Latent Structure Modeling Without Index Supervision. Mathematics 2025, 13, 2762. https://doi.org/10.3390/math13172762
