U2-LFOR: A Two-Stage U2 Network for Light-Field Occlusion Removal

Abstract

1. Introduction

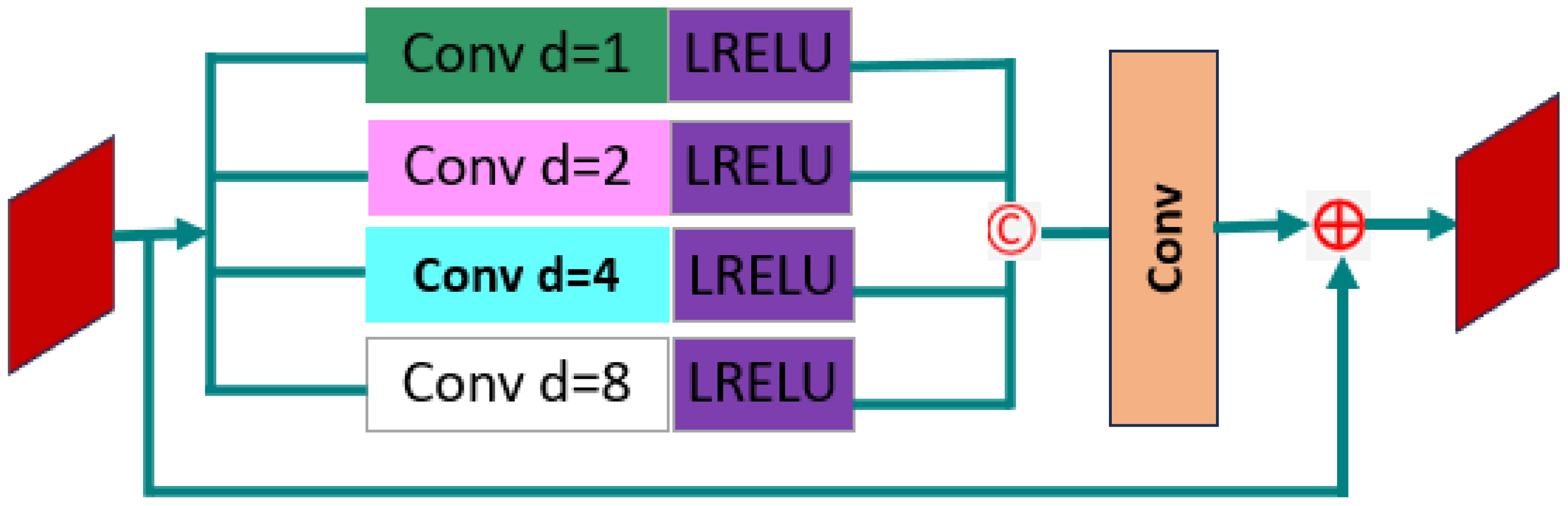

- Context Feature Extraction and Fusion: ResASPP combines residual connections with atrous spatial pyramid pooling (ASPP) to enlarge the receptive field and capture multiscale contextual features. Capturing both local and global image structure supports precise, robust removal of complex occlusion patterns in LF images.

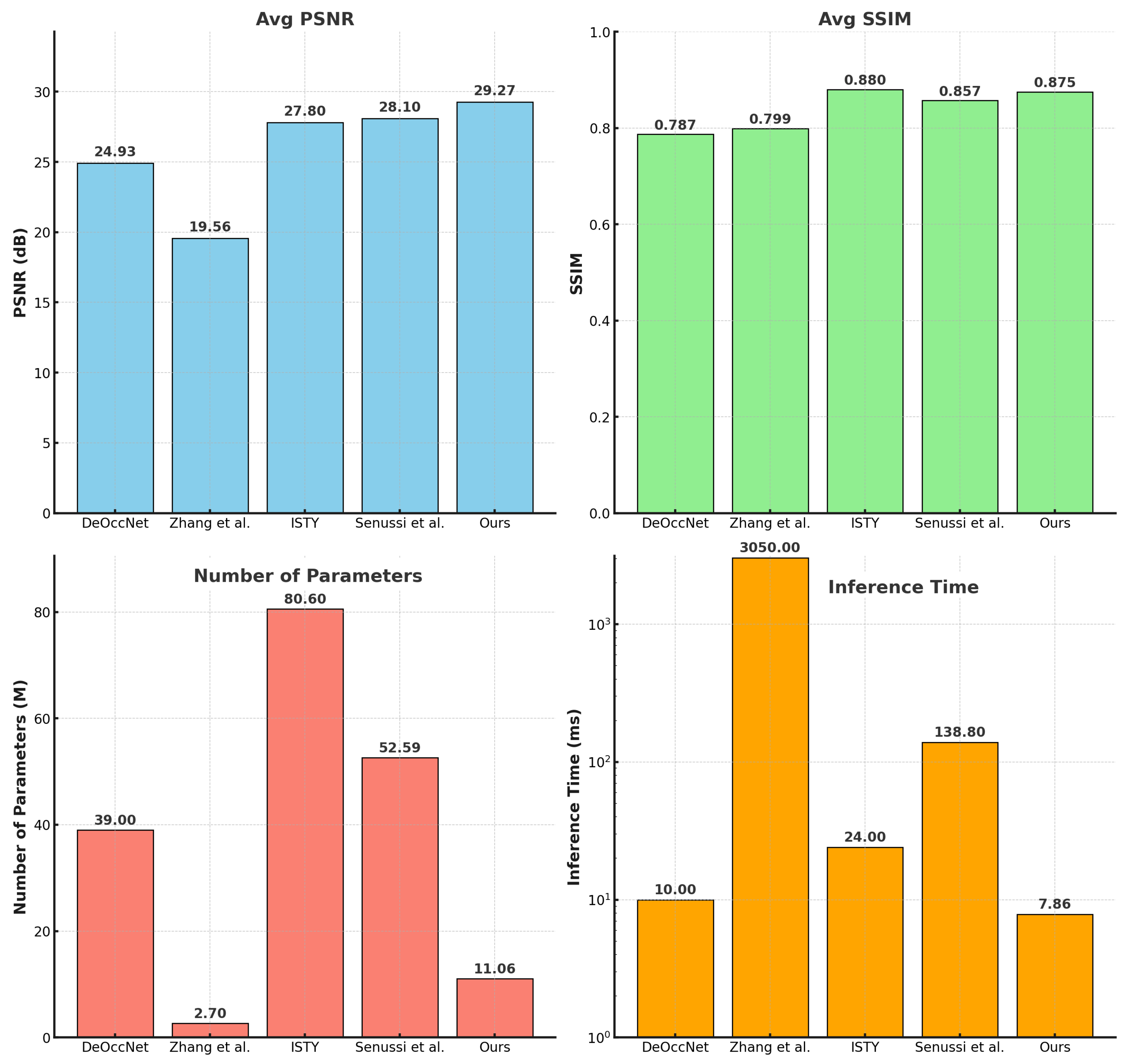

- Compact U2-Net Architecture: We design a two-stage U2-Net architecture that balances performance and computational efficiency. With only 11.06 M parameters and an inference time of 7.86 ms, it is suitable for resource-constrained environments.

- Comprehensive Evaluation: Extensive experiments on both synthetic and real-world LF datasets demonstrate the effectiveness of our method across diverse occlusion scenarios, with an average PSNR of 29.27 dB and an average SSIM of 0.875.
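To make the ResASPP idea from the first contribution concrete, the following is a minimal pure-Python toy, not the paper's module. It runs parallel atrous (dilated) 1D filters over a signal, fuses the branches, and adds the result back to the input as a residual. The function names `dilated_conv1d`, `receptive_field`, and `res_aspp_1d`, the 3-tap averaging kernel, the dilation rates (1, 2, 4), and fusion by averaging are all assumptions chosen for illustration; the actual module operates on 2D feature maps with learned weights.

```python
def dilated_conv1d(signal, kernel, dilation):
    """Same-length 1D cross-correlation with zero padding and a dilation rate."""
    half = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + (k - half) * dilation  # taps are spaced `dilation` samples apart
            if 0 <= j < len(signal):
                acc += w * signal[j]
        out.append(acc)
    return out


def receptive_field(kernel_size, dilation):
    """Number of input samples spanned by one dilated kernel."""
    return dilation * (kernel_size - 1) + 1


def res_aspp_1d(signal, kernel=(1 / 3, 1 / 3, 1 / 3), dilations=(1, 2, 4)):
    """Toy ResASPP: parallel dilated branches, averaged, plus a residual skip."""
    branches = [dilated_conv1d(signal, kernel, d) for d in dilations]
    fused = [sum(vals) / len(branches) for vals in zip(*branches)]
    return [x + f for x, f in zip(signal, fused)]  # residual connection


impulse = [0.0] * 4 + [1.0] + [0.0] * 4
response = res_aspp_1d(impulse)
print([round(v, 3) for v in response])
print([receptive_field(3, d) for d in (1, 2, 4)])
```

With a single impulse as input, the response is non-zero up to four samples away from the impulse, which shows how the largest dilation widens the context seen by a 3-tap kernel without adding weights.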

2. Related Work

2.1. Single-View Image Inpainting

2.1.1. Conventional Methods

2.1.2. Deep Learning-Based Methods

2.2. LF Occlusion Removal

2.2.1. Conventional Methods

2.2.2. Deep Learning-Based Methods

3. Proposed Method

Algorithm 1. Pseudo-code of U2-LFOR for occlusion removal in LF images.

3.1. LF Feature Extractor

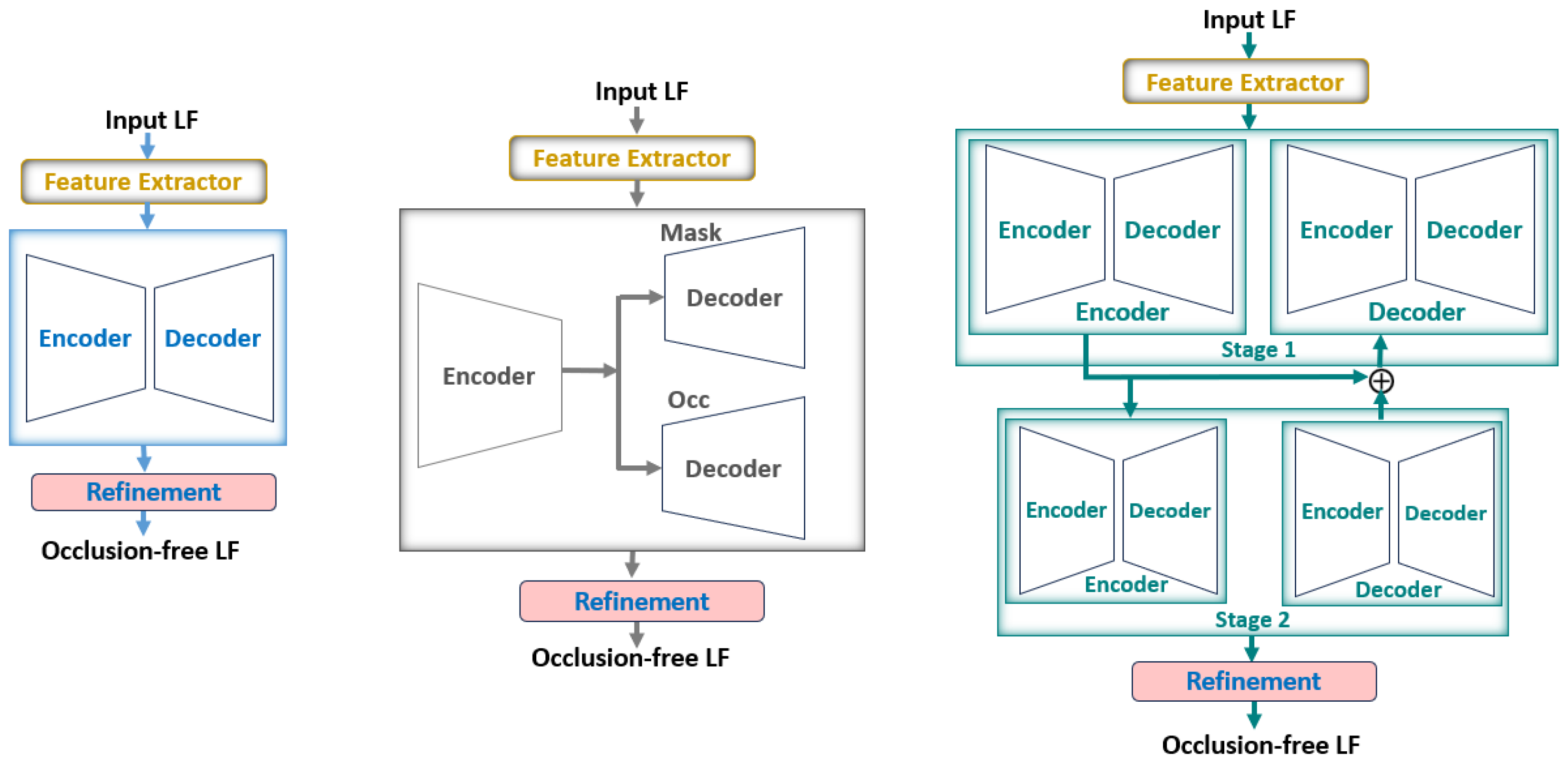

3.2. Two-Stage U2 Net

3.3. Refinement Module

3.4. Loss Function

4. Experiments

4.1. Experimental Setup

4.1.1. Training Dataset

4.1.2. Testing Dataset

4.1.3. Training Details

4.2. Experimental Results

4.2.1. Quantitative Results

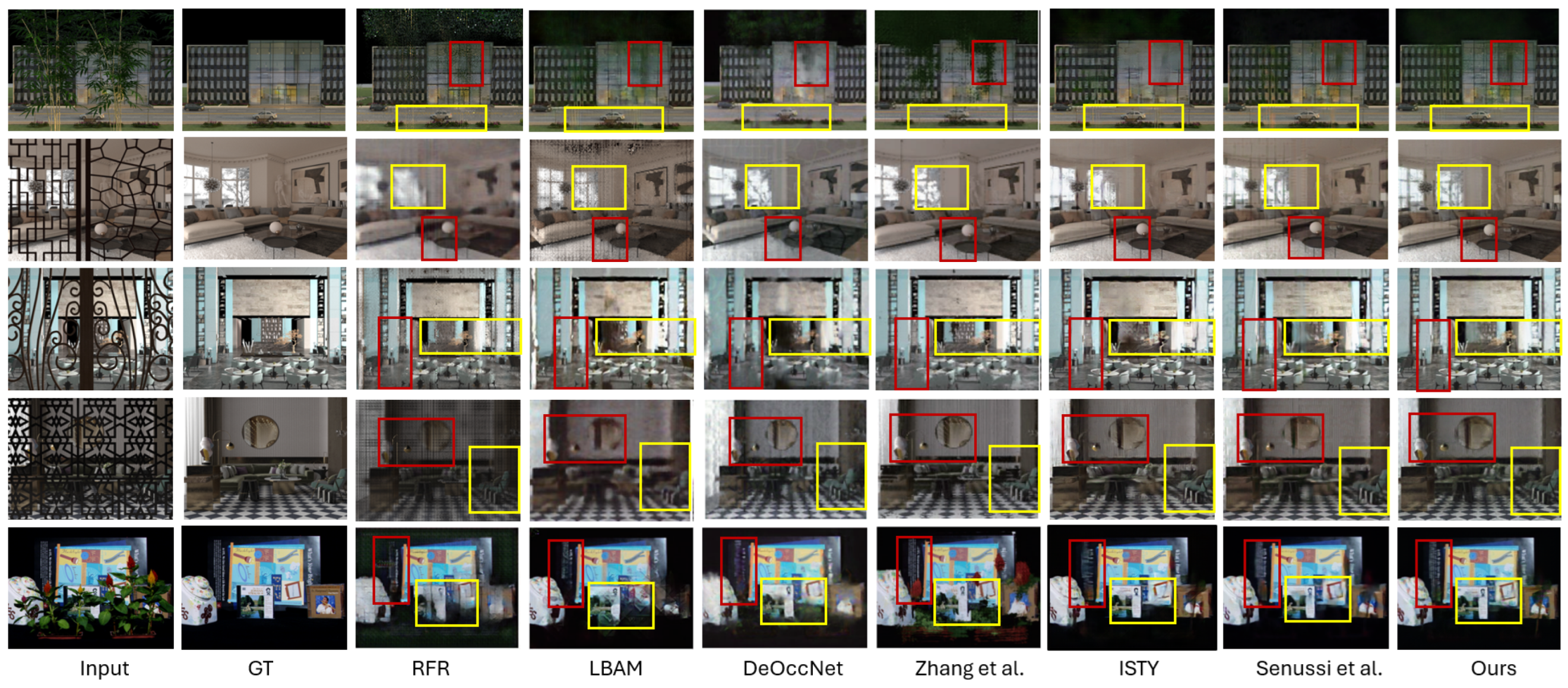

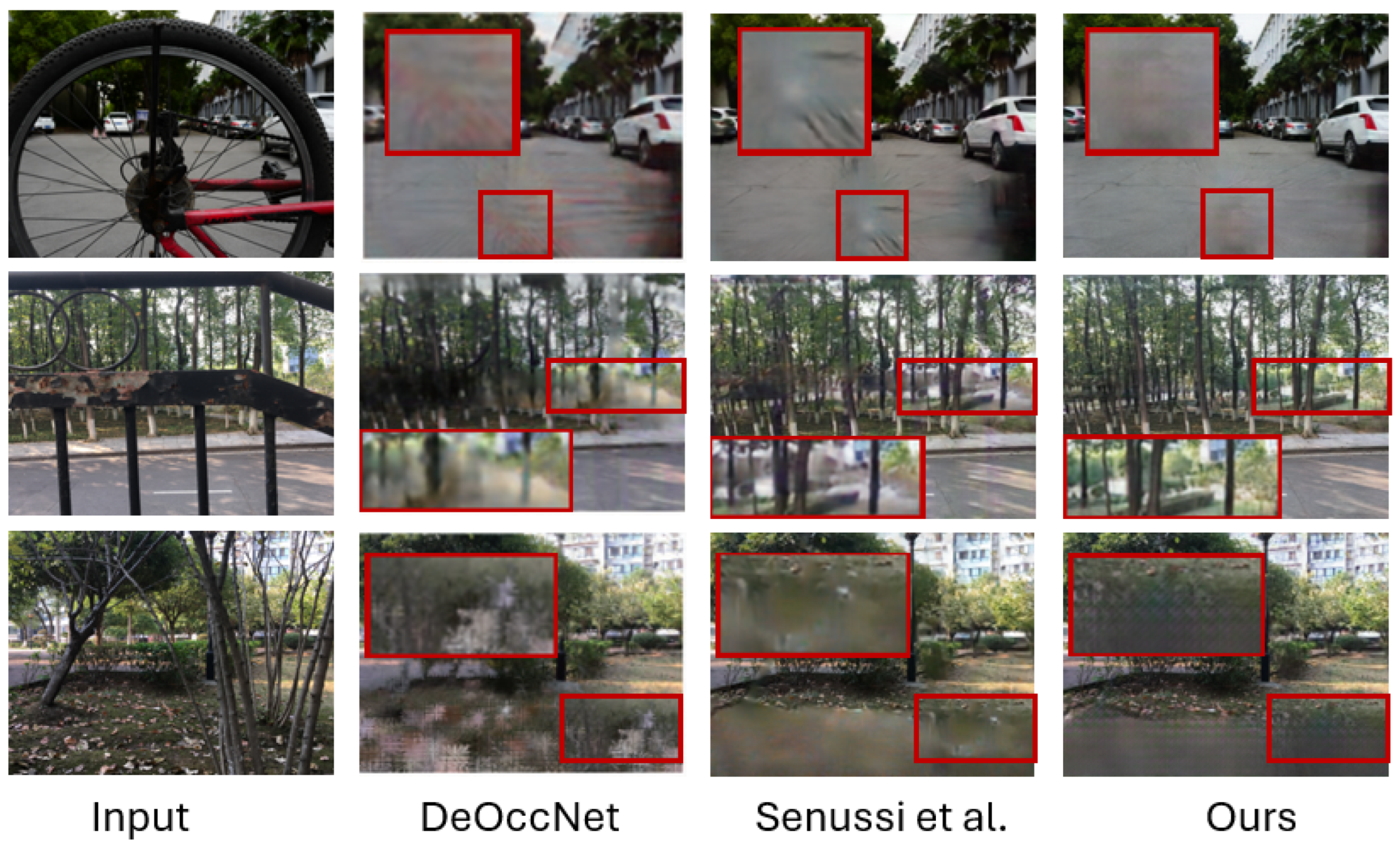

4.2.2. Qualitative Results

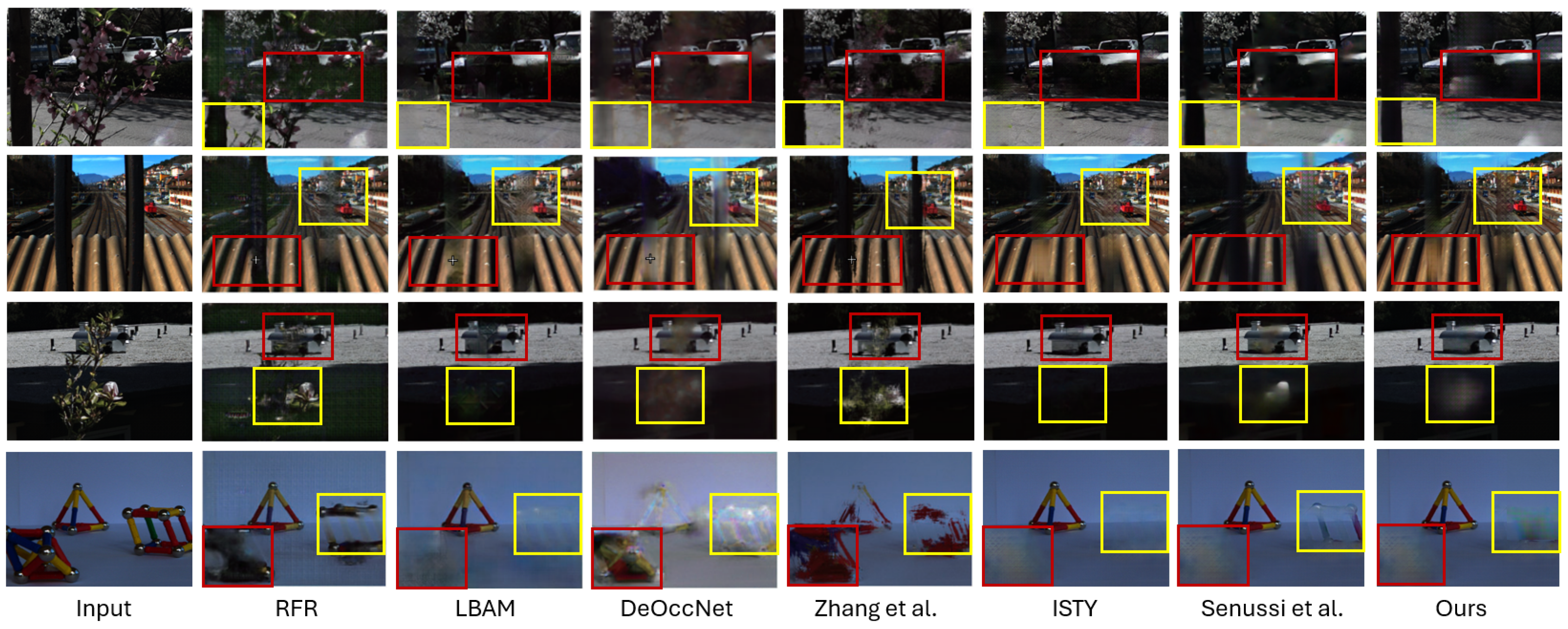

4.2.3. Performance Evaluation on Real-World Scene Data

4.2.4. Evaluation of Computational Efficiency

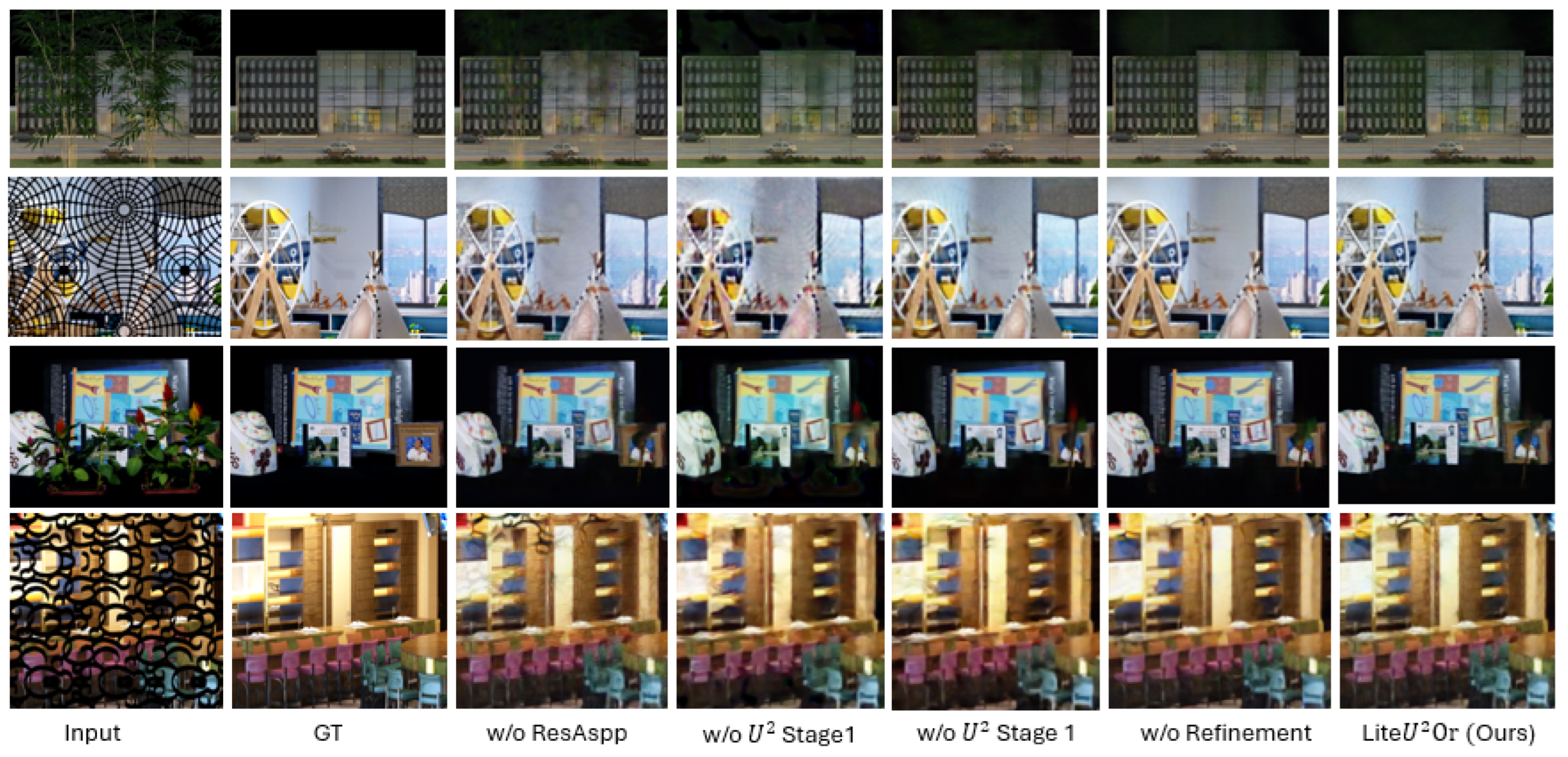

5. Ablation Study

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Samarakoon, T.; Abeywardena, K.; Edussooriya, C.U. Arbitrary Volumetric Refocusing of Dense and Sparse Light Fields. arXiv 2025, arXiv:2502.19238.

2. Jiang, Y.; Li, X.; Fu, K.; Zhao, Q. Transformer-based light field salient object detection and its application to autofocus. IEEE Trans. Image Process. 2024, 33, 6647–6659.

3. Yang, T.; Zhang, Y.; Tong, X.; Zhang, X.; Yu, R. A new hybrid synthetic aperture imaging model for tracking and seeing people through occlusion. IEEE Trans. Circuits Syst. Video Technol. 2013, 23, 1461–1475.

4. Yang, T.; Zhang, Y.; Yu, J.; Li, J.; Ma, W.; Tong, X.; Yu, R.; Ran, L. All-in-focus synthetic aperture imaging. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part VI 13. Springer: Cham, Switzerland, 2014; pp. 1–15.

5. Mahmoud, M.; Yagoub, B.; Senussi, M.F.; Abdalla, M.; Kasem, M.S.; Kang, H.S. Two-Stage Video Violence Detection Framework Using GMFlow and CBAM-Enhanced ResNet3D. Mathematics 2025, 13, 1226.

6. Kasem, M.S.; Mahmoud, M.; Yagoub, B.; Senussi, M.F.; Abdalla, M.; Kang, H.S. HTTD: A Hierarchical Transformer for Accurate Table Detection in Document Images. Mathematics 2025, 13, 266.

7. Abdalla, M.; Kasem, M.S.; Mahmoud, M.; Yagoub, B.; Senussi, M.F.; Abdallah, A.; Hun Kang, S.; Kang, H.S. ReceiptQA: A Question-Answering Dataset for Receipt Understanding. Mathematics 2025, 13, 1760.

8. Chen, Y.; Xia, R.; Yang, K.; Zou, K. Dual degradation image inpainting method via adaptive feature fusion and U-net network. Appl. Soft Comput. 2025, 174, 113010.

9. Mahmoud, M.; Kang, H.S. Ganmasker: A two-stage generative adversarial network for high-quality face mask removal. Sensors 2023, 23, 7094.

10. Senussi, M.F.; Abdalla, M.; Kasem, M.S.; Mahmoud, M.; Yagoub, B.; Kang, H.S. A Comprehensive Review on Light Field Occlusion Removal: Trends, Challenges, and Future Directions. IEEE Access 2025, 13, 42472–42493.

11. Senussi, M.F.; Abdalla, M.; SalahEldin, M.; Kasem, M.M.; Kang, H.S. Spectral Normalized U-Net for Light Field Occlusion Removal. Int. Conf. Future Inf. Commun. Eng. 2025, 16, 294–297.

12. Li, J.; Hong, J.; Zhang, Y.; Li, X.; Liu, Z.; Liu, Y.; Chu, D. Light-Ray-Based Light Field Cameras and Displays. In Cameras and Display Systems Towards Photorealistic 3D Holography; Springer: Cham, Switzerland, 2023; pp. 27–37.

13. He, R.; Hong, H.; Cheng, Z.; Liu, F. Neural Defocus Light Field Rendering. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 8268–8279.

14. Lee, J.Y.; Hur, J.; Choi, J.; Park, R.H.; Kim, J. Multi-scale foreground-background separation for light field depth estimation with deep convolutional networks. Pattern Recognit. Lett. 2023, 171, 138–147.

15. Yan, W.; Zhang, X.; Chen, H. Occlusion-aware unsupervised light field depth estimation based on multi-scale GANs. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 6318–6333.

16. Lu, Y.; Wang, S.; Wang, Z.; Xia, P.; Zhou, T. Lfmamba: Light field image super-resolution with state space model. arXiv 2024, arXiv:2406.12463.

17. Chao, W.; Zhao, J.; Duan, F.; Wang, G. Lfsrdiff: Light field image super-resolution via diffusion models. In Proceedings of the ICASSP 2025–2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5.

18. Gao, R.; Liu, Y.; Xiao, Z.; Xiong, Z. Diffusion-based light field synthesis. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2024; pp. 1–19.

19. Han, K. Light Field Reconstruction from Multi-View Images. Ph.D. Thesis, James Cook University, Douglas, Australia, 2022.

20. Chang, X. Method and Apparatus for Removing Occlusions from Light Field Images. 2021. Available online: https://patents.google.com/patent/US20210042898A1/en (accessed on 15 January 2025).

21. Liu, Z.S.; Li, D.H.; Deng, H. Integral Imaging-Based Light Field Display System with Optimum Voxel Space. IEEE Photonics J. 2024, 16, 5200207.

22. Wang, Y.; Wu, T.; Yang, J.; Wang, L.; An, W.; Guo, Y. DeOccNet: Learning to see through foreground occlusions in light fields. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 118–127.

23. Li, Y.; Yang, W.; Xu, Z.; Chen, Z.; Shi, Z.; Zhang, Y.; Huang, L. Mask4D: 4D convolution network for light field occlusion removal. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: New York, NY, USA, 2021; pp. 2480–2484.

24. Pei, Z.; Jin, M.; Zhang, Y.; Ma, M.; Yang, Y.H. All-in-focus synthetic aperture imaging using generative adversarial network-based semantic inpainting. Pattern Recognit. 2021, 111, 107669.

25. Zhang, S.; Shen, Z.; Lin, Y. Removing Foreground Occlusions in Light Field using Micro-lens Dynamic Filter. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), Virtual, 19–27 August 2021; pp. 1302–1308.

26. Hur, J.; Lee, J.Y.; Choi, J.; Kim, J. I see-through you: A framework for removing foreground occlusion in both sparse and dense light field images. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 229–238.

27. Wang, X.; Liu, J.; Chen, S.; Wei, G. Effective light field de-occlusion network based on Swin transformer. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 2590–2599.

28. Zhang, Q.; Fu, H.; Cao, J.; Wei, W.; Fan, B.; Meng, C.; Fang, Y.; Yan, T. SwinSccNet: Swin-Unet encoder–decoder structured-light field occlusion removal network. Opt. Eng. 2024, 63, 104102.

29. Senussi, M.F.; Kang, H.S. Occlusion Removal in Light-Field Images Using CSPDarknet53 and Bidirectional Feature Pyramid Network: A Multi-Scale Fusion-Based Approach. Appl. Sci. 2024, 14, 9332.

30. Bertalmio, M.; Sapiro, G.; Caselles, V.; Ballester, C. Image Inpainting. In Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques; ACM Press: New York, NY, USA, 2000.

31. Ballester, C.; Bertalmio, M.; Caselles, V.; Sapiro, G.; Verdera, J. Filling-in by joint interpolation of vector fields and gray levels. IEEE Trans. Image Process. 2001, 10, 1200–1211.

32. Barnes, C.; Shechtman, E.; Finkelstein, A.; Goldman, D.B. PatchMatch: A randomized correspondence algorithm for structural image editing. ACM Trans. Graph. 2009, 28, 24.

33. Wexler, Y.; Shechtman, E.; Irani, M. Space-time completion of video. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 463–476.

34. Zhang, F.L.; Wang, J.; Shechtman, E.; Zhou, Z.Y.; Shi, J.X.; Hu, S.M. Plenopatch: Patch-based plenoptic image manipulation. IEEE Trans. Vis. Comput. Graph. 2016, 23, 1561–1573.

35. Le Pendu, M.; Jiang, X.; Guillemot, C. Light field inpainting propagation via low rank matrix completion. IEEE Trans. Image Process. 2018, 27, 1981–1993.

36. Liu, G.; Reda, F.A.; Shih, K.J.; Wang, T.C.; Tao, A.; Catanzaro, B. Image inpainting for irregular holes using partial convolutions. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 85–100.

37. Li, J.; Wang, N.; Zhang, L.; Du, B.; Tao, D. Recurrent feature reasoning for image inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7760–7768.

38. Zhu, M.; He, D.; Li, X.; Li, C.; Li, F.; Liu, X.; Ding, E.; Zhang, Z. Image inpainting by end-to-end cascaded refinement with mask awareness. IEEE Trans. Image Process. 2021, 30, 4855–4866.

39. Xie, C.; Liu, S.; Li, C.; Cheng, M.M.; Zuo, W.; Liu, X.; Wen, S.; Ding, E. Image inpainting with learnable bidirectional attention maps. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8858–8867.

40. Nazeri, K. EdgeConnect: Generative Image Inpainting with Adversarial Edge Learning. arXiv 2019, arXiv:1901.00212.

41. Song, Y.; Yang, C.; Shen, Y.; Wang, P.; Huang, Q.; Kuo, C.C.J. Spg-net: Segmentation prediction and guidance network for image inpainting. arXiv 2018, arXiv:1805.03356.

42. Ren, Y.; Yu, X.; Zhang, R.; Li, T.H.; Liu, S.; Li, G. Structureflow: Image inpainting via structure-aware appearance flow. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 181–190.

43. Liao, L.; Xiao, J.; Wang, Z.; Lin, C.W.; Satoh, S. Guidance and evaluation: Semantic-aware image inpainting for mixed scenes. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXVII 16. Springer: Cham, Switzerland, 2020; pp. 683–700.

44. Yang, C.; Lu, X.; Lin, Z.; Shechtman, E.; Wang, O.; Li, H. High-resolution image inpainting using multi-scale neural patch synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6721–6729.

45. Zeng, Y.; Lin, Z.; Yang, J.; Zhang, J.; Shechtman, E.; Lu, H. High-resolution image inpainting with iterative confidence feedback and guided upsampling. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XIX 16. Springer: Cham, Switzerland, 2020; pp. 1–17.

46. Yi, Z.; Tang, Q.; Azizi, S.; Jang, D.; Xu, Z. Contextual residual aggregation for ultra high-resolution image inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 7508–7517.

47. Vaish, V.; Garg, G.; Talvala, E.; Antunez, E.; Wilburn, B.; Horowitz, M.; Levoy, M. Synthetic aperture focusing using a shear-warp factorization of the viewing transform. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05)-Workshops, San Diego, CA, USA, 21–23 September 2005; IEEE: New York, NY, USA, 2005; p. 129.

48. Vaish, V.; Levoy, M.; Szeliski, R.; Zitnick, C.L.; Kang, S.B. Reconstructing occluded surfaces using synthetic apertures: Stereo, focus and robust measures. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; IEEE: New York, NY, USA, 2006; Volume 2, pp. 2331–2338.

49. Vaish, V.; Wilburn, B.; Joshi, N.; Levoy, M. Using plane+ parallax for calibrating dense camera arrays. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2004. CVPR 2004, Washington, DC, USA, 27 June–2 July 2004; IEEE: New York, NY, USA, 2004; Volume 1, p. I.

50. Pei, Z.; Zhang, Y.; Chen, X.; Yang, Y.H. Synthetic aperture imaging using pixel labeling via energy minimization. Pattern Recognit. 2013, 46, 174–187.

51. Pei, Z.; Chen, X.; Yang, Y.H. All-in-focus synthetic aperture imaging using image matting. IEEE Trans. Circuits Syst. Video Technol. 2016, 28, 288–301.

52. Xiao, Z.; Si, L.; Zhou, G. Seeing beyond foreground occlusion: A joint framework for SAP-based scene depth and appearance reconstruction. IEEE J. Sel. Top. Signal Process. 2017, 11, 979–991.

53. Zhang, S.; Chen, Y.; An, P.; Huang, X.; Yang, C. Light field occlusion removal network via foreground location and background recovery. Signal Process. Image Commun. 2022, 109, 116853.

54. Song, C.; Li, W.; Pi, X.; Xiong, C.; Guo, X. A dual-pathways fusion network for seeing background objects in light field. In Proceedings of the International Conference on Image, Signal Processing, and Pattern Recognition (ISPP 2022), Guilin, China, 25–27 February 2022; SPIE: Bellingham, WA, USA, 2022; Volume 12247, pp. 339–348.

55. Piao, Y.; Rong, Z.; Xu, S.; Zhang, M.; Lu, H. DUT-LFSaliency: Versatile dataset and light field-to-RGB saliency detection. arXiv 2020, arXiv:2012.15124.

56. Raj, A.S.; Lowney, M.; Shah, R. Light-Field Database Creation and Depth Estimation; Stanford University: Palo Alto, CA, USA, 2016.

57. Rerabek, M.; Ebrahimi, T. New light field image dataset. In Proceedings of the 8th International Conference on Quality of Multimedia Experience (QoMEX), Lisbon, Portugal, 6–8 June 2016.

58. Bok, Y.; Jeon, H.G.; Kweon, I.S. Geometric calibration of micro-lens-based light field cameras using line features. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 287–300.

| LF Density | Dataset | Category | # of Scenes |
|---|---|---|---|
| Sparse LF | DeOccNet Train [22] | Synthetic | 60 |
| Sparse LF | Stanford CD [49] | Real | 30 |
| Sparse LF | 4-synLFs [22] | Synthetic | 4 |
| Sparse LF | 9-synLFs [25] | Synthetic | 9 |
| Dense LF | DUTLF-V2 [55] | Real | 4204 |
| Dense LF | Stanford Lytro [56] | Synthetic | 71 |
| Dense LF | EPFL-10 [57] | Synthetic | 10 |

| Method | Sparse (Syn): 4-Syn | Sparse (Syn): 9-Syn | Sparse (Real): CD | Dense (Syn): Single Occ | Dense (Syn): Double Occ |
|---|---|---|---|---|---|
| PSNR ↑ | | | | | |
| RFR [37] | 19.89 | 20.69 | 21.13 | 26.28 | 23.25 |
| LBAM [39] | 21.11 | 23.04 | 21.56 | 27.92 | 24.83 |
| DeOccNet [22] | 23.74 | 23.70 | 22.70 | 28.67 | 25.85 |
| Zhang et al. [25] | 14.46 | 22.00 | 20.19 | 23.15 | 18.01 |
| ISTY [26] | 26.42 | 27.04 | 25.17 | 32.44 | 28.31 |
| Senussi et al. [29] | 27.32 | 27.48 | 25.68 | 30.70 | 29.34 |
| U2-LFOR (Ours) | 27.22 | 28.22 | 26.29 | 32.83 | 31.77 |
| SSIM ↑ | | | | | |
| RFR [37] | 0.668 | 0.672 | 0.646 | 0.867 | 0.801 |
| LBAM [39] | 0.677 | 0.725 | 0.803 | 0.899 | 0.827 |
| DeOccNet [22] | 0.701 | 0.715 | 0.741 | 0.914 | 0.847 |
| Zhang et al. [25] | 0.683 | 0.758 | 0.832 | 0.900 | 0.823 |
| ISTY [26] | 0.836 | 0.849 | 0.870 | 0.947 | 0.902 |
| Senussi et al. [29] | 0.862 | 0.853 | 0.886 | 0.838 | 0.850 |
| U2-LFOR (Ours) | 0.870 | 0.879 | 0.893 | 0.861 | 0.872 |
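The averages quoted in the contributions (29.27 dB PSNR, 0.875 SSIM) can be checked against the U2-LFOR rows of this table. The short sketch below assumes they are unweighted means over the five test sets (4-Syn, 9-Syn, CD, Single Occ, Double Occ). That assumption is not stated in the text, but it reproduces the reported figures. The variable names are illustrative.

```python
from statistics import mean

# U2-LFOR (Ours) rows, in column order: 4-Syn, 9-Syn, CD, Single Occ, Double Occ.
psnr = [27.22, 28.22, 26.29, 32.83, 31.77]
ssim = [0.870, 0.879, 0.893, 0.861, 0.872]

# Unweighted mean over the five test sets (an assumption about how the
# headline averages were computed).
avg_psnr = round(mean(psnr), 2)
avg_ssim = round(mean(ssim), 3)

print(f"average PSNR = {avg_psnr} dB, average SSIM = {avg_ssim}")
```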

| Model | # of Network Parameters ↓ | Inference Time ↓ |
|---|---|---|
| LBAM [39] | 69.3 M | 12 ms |
| DeOccNet [22] | 39.0 M | 10 ms |
| Zhang et al. [25] | 2.7 M | 3050 ms |
| ISTY [26] | 80.6 M | 24 ms |
| Senussi et al. [29] | 52.59 M | 138.8 ms |
| U2-LFOR (Ours) | 11.06 M | 7.86 ms |
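To read the efficiency table as relative costs, the sketch below divides each baseline's parameter count and inference time by the U2-LFOR values. The numbers are copied from the table. The dictionary layout and variable names are illustrative, and the ratios say nothing about hardware or input size, which the table itself does not give.

```python
# (parameters in millions, inference time in ms), copied from the table.
baselines = {
    "LBAM": (69.3, 12.0),
    "DeOccNet": (39.0, 10.0),
    "Zhang et al.": (2.7, 3050.0),
    "ISTY": (80.6, 24.0),
    "Senussi et al.": (52.59, 138.8),
}
ours_params, ours_time = 11.06, 7.86

# Ratio > 1 means the baseline is larger or slower than U2-LFOR.
ratios = {
    name: (round(params / ours_params, 2), round(time_ms / ours_time, 2))
    for name, (params, time_ms) in baselines.items()
}

for name, (param_ratio, time_ratio) in ratios.items():
    print(f"{name}: {param_ratio}x parameters, {time_ratio}x inference time")
```

On these figures, every baseline is slower than U2-LFOR. Only Zhang et al. has fewer parameters, and it is also the slowest method by a wide margin.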

| Variant | Sparse (Syn): 4-Syn | Sparse (Syn): 9-Syn | Sparse (Real): CD | Dense (Syn): Single Occ | Dense (Syn): Double Occ |
|---|---|---|---|---|---|
| PSNR | | | | | |
| w/o ResASPP | 27.01 | 27.35 | 25.19 | 32.35 | 31.28 |
| w/o U2 Stage 1 | 25.87 | 26.51 | 24.85 | 31.00 | 29.54 |
| w/o U2 Stage 2 | 27.33 | 28.02 | 25.74 | 31.19 | 29.77 |
| w/o Refinement | 27.19 | 27.78 | 25.92 | 30.52 | 28.88 |
| U2-LFOR (Ours) | 27.22 | 28.22 | 26.29 | 32.83 | 31.77 |
| SSIM | | | | | |
| w/o ResASPP | 0.858 | 0.863 | 0.883 | 0.854 | 0.870 |
| w/o U2 Stage 1 | 0.847 | 0.854 | 0.872 | 0.833 | 0.836 |
| w/o U2 Stage 2 | 0.868 | 0.886 | 0.887 | 0.837 | 0.841 |
| w/o Refinement | 0.867 | 0.869 | 0.891 | 0.821 | 0.826 |
| U2-LFOR (Ours) | 0.870 | 0.879 | 0.893 | 0.861 | 0.872 |
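One way to summarize the ablation is the drop in mean PSNR when each component is removed. The sketch below uses the PSNR rows of the ablation table and, as an assumption, an unweighted mean over the five test sets. The names `full`, `variants`, and `drops` are illustrative, and this summary is not part of the original analysis.

```python
from statistics import mean

# PSNR rows from the ablation table: 4-Syn, 9-Syn, CD, Single Occ, Double Occ.
full = [27.22, 28.22, 26.29, 32.83, 31.77]
variants = {
    "w/o ResASPP": [27.01, 27.35, 25.19, 32.35, 31.28],
    "w/o U2 Stage 1": [25.87, 26.51, 24.85, 31.00, 29.54],
    "w/o U2 Stage 2": [27.33, 28.02, 25.74, 31.19, 29.77],
    "w/o Refinement": [27.19, 27.78, 25.92, 30.52, 28.88],
}

# Mean PSNR lost (in dB) relative to the full model, per removed component.
drops = {name: round(mean(full) - mean(vals), 2) for name, vals in variants.items()}

for name, drop in sorted(drops.items(), key=lambda item: -item[1]):
    print(f"{name}: -{drop} dB mean PSNR")
```

Under this summary, removing U2 Stage 1 costs the most mean PSNR. The averages do hide per-dataset differences: on 4-Syn, the variant without U2 Stage 2 scores 27.33 dB against 27.22 dB for the full model.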

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Senussi, M.F.; Abdalla, M.; Kasem, M.S.; Mahmoud, M.; Kang, H.-S. U2-LFOR: A Two-Stage U2 Network for Light-Field Occlusion Removal. Mathematics 2025, 13, 2748. https://doi.org/10.3390/math13172748
