1. Introduction

Modern scientific investigations increasingly generate data characterized by numerous parallel estimation problems, where interest extends beyond global summaries to individual-level inference. This paradigm appears across diverse domains: thousands of genes tested for differential expression in genomic studies [1,2,3], hundreds of schools evaluated for educational effectiveness [4,5,6], multiple clinical sites in medical trials [7,8,9,10,11], economic field experiments examining heterogeneous treatment effects across multiple units or contexts [12,13], and cognitive neuroscience applications where individual-level neural parameters must be estimated from noisy fMRI time series [14]. While classical meta-analytic methods excel at estimating population-level parameters (the average effect and its heterogeneity) [15], contemporary applications often demand reliable inference for specific units within the ensemble. Efron [16] terms this challenge finite-Bayes inference: making calibrated probabilistic statements about individual parameters when those parameters are modeled as exchangeable draws from an unknown population distribution.

The distinction between population-level and individual-level inference is fundamental yet often conflated in practice [17]. Consider a multisite educational trial evaluating an intervention across $K$ schools. While policymakers may primarily seek the average treatment effect across all schools, individual school administrators require accurate assessments of their specific school’s effect, complete with well-calibrated uncertainty quantification. This local inference problem, estimating the latent effect parameter $\theta_i$ for a particular school site $i$ from its noisy observed estimate while borrowing strength from the other $K-1$ schools, exemplifies the finite-Bayes paradigm [16,18,19]. Similar inferential challenges arise in personalized medicine (where individual patient effects matter [20]), institutional performance evaluation (where specific hospitals or teachers face high-stakes assessments [21]), and targeted policy interventions (where resources must be allocated based on site-specific estimates [22]).

Recent methodological advances have begun addressing the unique challenges of finite-Bayes inference. Lee et al. [18] demonstrated that optimal estimation strategies depend critically on the specific inferential goal (estimating individual effects, ranking units, or characterizing the effect distribution) and that sufficient between-site information can justify flexible semiparametric approaches like Dirichlet Process mixtures (e.g., [23]) over conventional parametric models. However, their investigation revealed a striking gap: while sophisticated methods exist for point estimation (e.g., [24]), reliable inference for individual effects remains underdeveloped [25,26,27], particularly when the prior distribution must be nonparametrically estimated from the data itself.

This uncertainty quantification challenge lies at the intersection of empirical Bayes and fully Bayesian paradigms. Empirical Bayes methods, which estimate the prior distribution from the data before computing posteriors, have proven remarkably successful for point estimation and compound risk minimization [28]. Yet their “plug-in” nature, treating the estimated prior as fixed when computing posteriors, produces anti-conservative uncertainty assessments that can severely understate the true variability in finite samples [25,29,30]. This limitation becomes particularly acute in the finite-Bayes setting where accurate individual-level inference, not just average performance, is the goal.

Among empirical Bayes approaches, Efron’s log-spline prior [29] stands out for elegantly balancing flexibility and stability. By modeling the log-density of the prior distribution through a low-dimensional spline basis, this framework accommodates diverse shapes (including multimodality, heavy tails, and skewness) while maintaining computational tractability and near-parametric convergence rates. The method has found successful applications in field experiments on hiring discrimination [12], evaluating judicial decisions in the criminal justice system [31], and measuring the effectiveness of digital advertising [13]. However, its development and implementation have remained firmly within the empirical Bayes paradigm, inheriting the fundamental limitation of plug-in inference.

Conventional attempts to address uncertainty quantification within the empirical Bayes framework have proven inadequate for finite-Bayes inference [32]. Efron [29] proposed two approaches: delta-method approximations and parametric bootstrap procedures. While these methods do capture one form of uncertainty, our theoretical analysis reveals that they target a different quantity from the one needed for individual-level inference. Specifically, they quantify the sampling variability of the posterior mean as an estimator, that is, how much the estimate would vary across hypothetical replications of the entire experiment. This frequentist stability measure, while valid for assessing the estimation procedure, fundamentally differs from the posterior uncertainty about the parameter given the observed data. For stakeholders requiring probabilistic statements about their site-specific effect (“What is the probability that our school’s effect exceeds the district average?”), the former provides little guidance.

The software landscape further complicates practical implementation. The deconvolveR package (version 1.2-1) [33] implements Efron’s framework but restricts attention to homoscedastic scenarios where all observations share a common variance. Real meta-analyses invariably feature heteroscedastic observations due to varying sample sizes across sites. While the package allows transformation to z-scores as a workaround, an approach taken in the main analysis of hiring discrimination by Kline et al. [12], this fundamentally alters the inferential target from the effect distribution to the distribution of z-scores, a scientifically different quantity. Moreover, deconvolveR does not provide clear routines for posterior uncertainty quantification beyond point estimates, leaving practitioners to implement ad hoc solutions.

This paper develops a fully Bayesian extension of Efron’s log-spline prior that preserves its computational advantages while providing calibrated interval estimation for finite-Bayes inference. By embedding the log-spline framework within a hierarchical Bayesian model and treating the shape parameters as random rather than fixed, our approach automatically propagates all sources of uncertainty, including hyperparameter estimation error, into the final posterior distributions. This yields properly calibrated credible intervals for individual effects without post hoc corrections or asymptotic approximations (e.g., [25,32]).

Our contributions are threefold. First, we provide a precise theoretical characterization of different uncertainty concepts in empirical Bayes inference, clarifying why existing approaches fail for individual-level inference. Second, through a simulation study calibrated to realistic meta-analytic scenarios, we demonstrate that our fully Bayesian approach achieves nominal coverage for individual site-specific effects while matching the point estimation accuracy of empirical Bayes. Third, we present a complete, annotated Stan implementation that handles heteroscedastic observations and provides researchers with a practical tool for finite-Bayes inference.

The remainder of this paper is organized as follows:

Section 2 establishes the meta-analytic framework and introduces the deconvolution perspective that motivates our approach.

Section 3 reviews empirical Bayes estimation of the log-spline prior, emphasizing its limitations for uncertainty quantification.

Section 4 develops our fully Bayesian extension, showing how hierarchical modeling naturally resolves the inferential challenges.

Section 5 describes our simulation study design, while

Section 6 presents detailed results comparing point estimation accuracy and uncertainty calibration across methods.

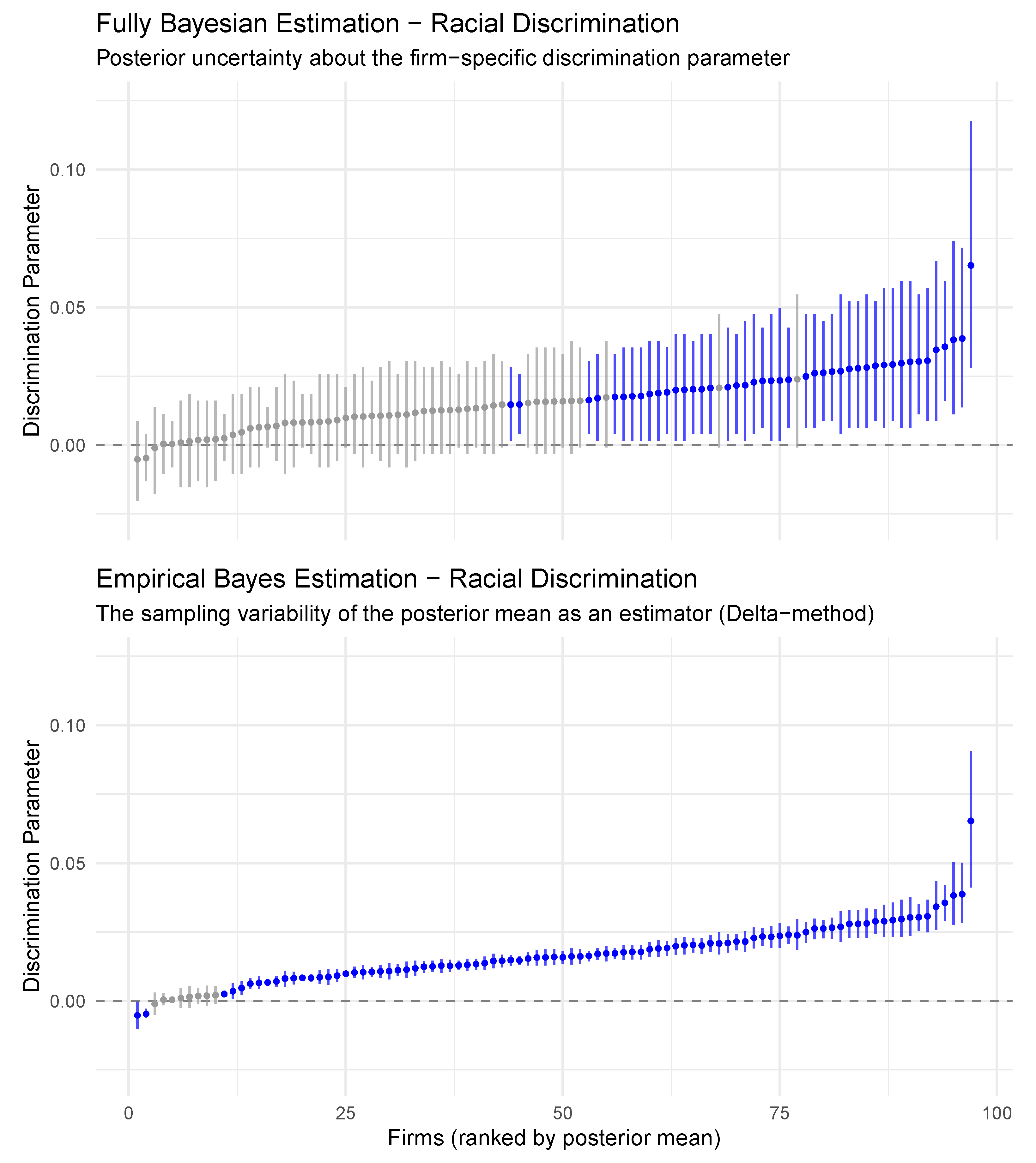

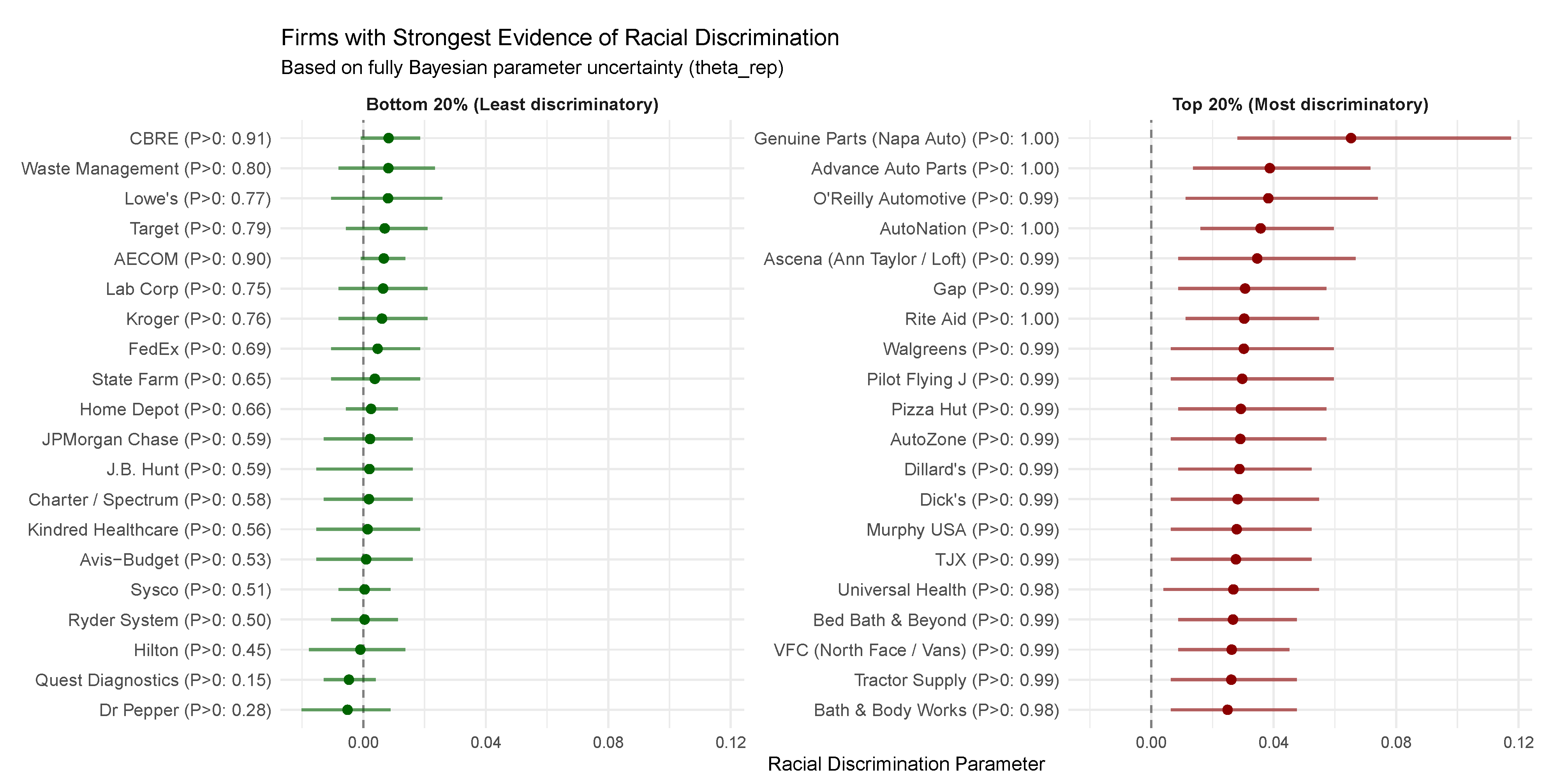

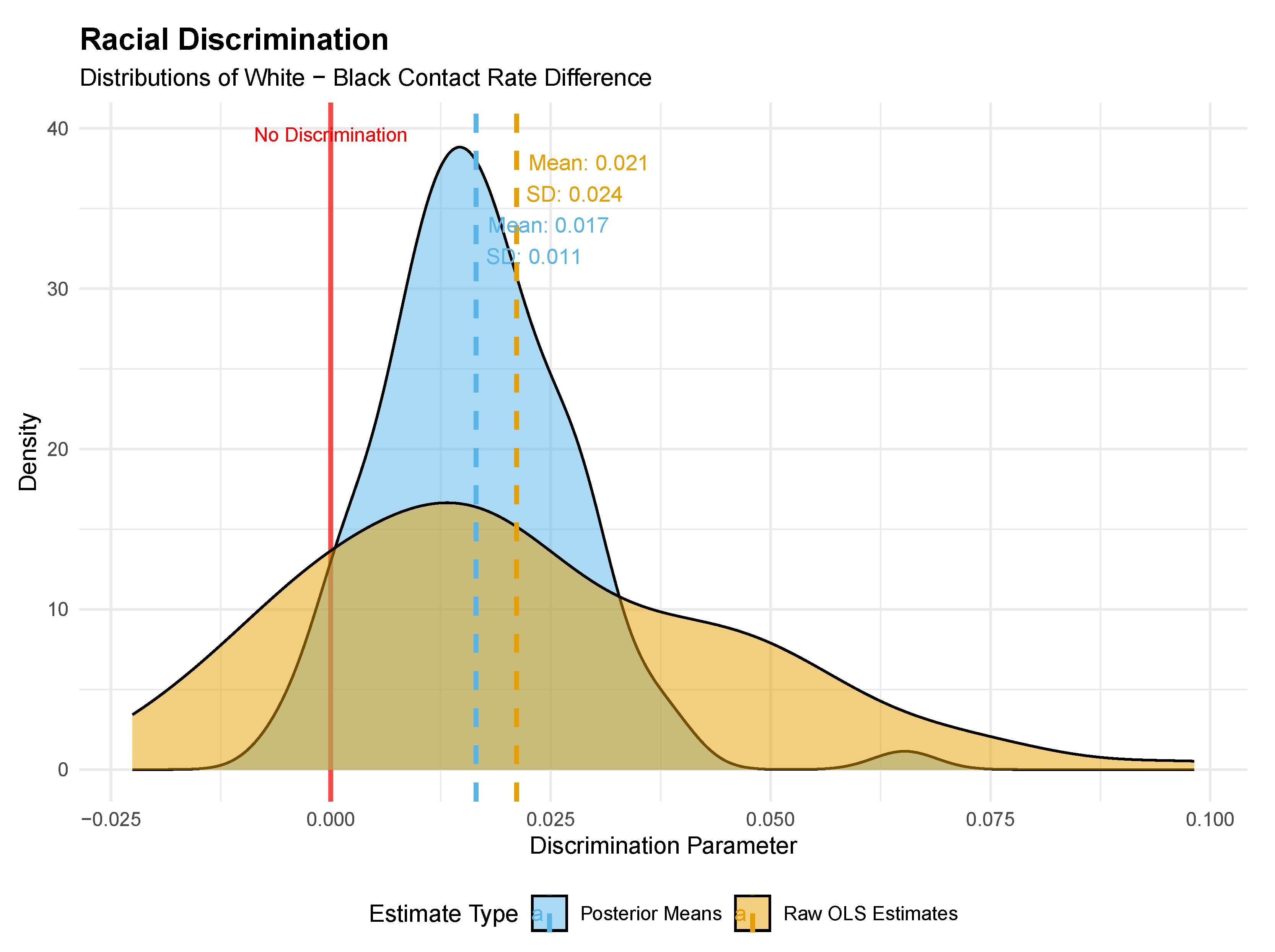

Section 7 demonstrates the practical implications through a reanalysis of firm-level labor market discrimination data from Kline et al. [

12].

Section 8 offers discussion and concluding remarks. The appendices provide extensive technical details:

Appendix A presents mathematical proofs of the uncertainty decomposition;

Appendix B contains the complete Stan implementation;

Appendix C,

Appendix D and

Appendix E examine sensitivity to grid specification, hyperprior choice, and performance in small-K scenarios, respectively.

3. Empirical Bayes Estimation

The empirical Bayes (EB) paradigm provides a practical middle ground between classical frequentist and fully Bayesian approaches by estimating the prior distribution from the data itself. In this section, we review the theoretical framework for EB estimation in meta-analytic settings, emphasizing both its computational advantages and inherent limitations in uncertainty quantification.

3.1. Estimation Strategy: G-Modeling and the Geometry of $\alpha$

Following Efron [29], we adopt a g-modeling approach that directly models the prior density $g$ rather than the marginal density of the observations. Specifically, we parameterize $g$ as a member of a flexible exponential family defined on a finite grid spanning the plausible range of effect sizes, as presented in Equation (4). The choice of basis matrix $Q$ determines the flexibility and smoothness of the estimated prior. Following the recommendation of Efron [29], we construct $Q$ using B-splines evaluated at the grid points, typically with $p$ ranging from 5 to 10 basis functions.

The role of $\alpha$ merits careful interpretation. Unlike traditional hyperparameters that might represent location or scale, the components of $\alpha$ control the shape of $g$ through the basis expansion. To develop geometric intuition, observe that the log-spline prior belongs to the exponential family with natural parameter $\alpha$, which parameterizes a path through the probability simplex via the exponential map. When $\alpha = 0$, we obtain the maximum entropy distribution, which is uniform on the grid. As $\|\alpha\|$ increases, the prior becomes increasingly informative, departing from uniformity in a direction determined by $\alpha / \|\alpha\|$.

This geometric perspective reveals how $\alpha$ encodes prior information. Decomposing $\alpha = r v$, where $r = \|\alpha\|$ and $v = \alpha / \|\alpha\|$, we see that $r$ controls the “concentration” of the prior, while $v$ determines its “shape.” The eigenvectors of $Q^\top Q$ define principal modes of variation in log-density space: components of $v$ along dominant eigenvectors produce smooth, unimodal priors, while components along smaller eigenvectors can introduce multimodality or heavy tails. The Fisher information reveals that the effective dimensionality of the estimation problem adapts to the data, with the likelihood naturally emphasizing directions where $g$ concentrates mass.
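The geometric reading of $\alpha$ can be checked numerically. The following is a minimal sketch, not the paper's implementation: it assumes the prior weights are obtained by exponentiating $Q\alpha$ and normalizing over the grid, and it substitutes an orthonormalized polynomial basis for the B-spline basis so that it needs only NumPy. The function names `polynomial_basis`, `log_spline_prior`, and `entropy` are hypothetical.

```python
import numpy as np

def polynomial_basis(grid, p):
    """m x p basis with orthonormal columns (a stand-in for a spline basis)."""
    # Rescale the grid to [-1, 1] for numerical stability.
    u = 2.0 * (grid - grid.min()) / (grid.max() - grid.min()) - 1.0
    # Columns u^1..u^p; a constant column would cancel in the normalization.
    raw = np.vander(u, p + 1, increasing=True)[:, 1:]
    q, _ = np.linalg.qr(raw - raw.mean(axis=0))
    return q

def log_spline_prior(alpha, Q):
    """Prior weights proportional to exp(Q alpha), normalized over the grid."""
    eta = Q @ alpha
    w = np.exp(eta - eta.max())  # subtract the max to avoid overflow
    return w / w.sum()

def entropy(g):
    """Shannon entropy of a strictly positive probability vector."""
    return float(-np.sum(g * np.log(g)))

grid = np.linspace(-3.0, 3.0, 61)
Q = polynomial_basis(grid, p=5)

# alpha = 0 gives the uniform (maximum entropy) distribution on the grid.
g_uniform = log_spline_prior(np.zeros(5), Q)

# Fix a direction v and increase the radius r: alpha = r * v.
v = np.array([0.0, -1.0, 0.0, 0.0, 0.0])
g_mild = log_spline_prior(2.0 * v, Q)
g_sharp = log_spline_prior(8.0 * v, Q)

print(entropy(g_uniform), entropy(g_mild), entropy(g_sharp))
```

Along a fixed direction $v$, the entropy is non-increasing in $r$, which is one way to make the "concentration" interpretation concrete. The sign of each basis column returned by the QR factorization is arbitrary, so the sketch compares entropies rather than specific shapes.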

3.2. Penalized Marginal Likelihood Estimation

Given the observed data, we estimate $\alpha$ by maximizing the penalized log marginal likelihood [29], that is, the log-likelihood minus a penalty function that regularizes the solution.

The discrete approximation in (6) replaces the integral with a Riemann sum over the grid. For sufficiently fine grids, this approximation is highly accurate while maintaining computational tractability.

Following Efron [29], we employ a ridge penalty on $\alpha$, with its strength chosen to contribute approximately 5–10% additional Fisher information relative to the likelihood. This can be formalized by considering the ratio of the expected information contributed by the penalty to that contributed by the likelihood, as measured by the Fisher information matrix.

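A key computational advantage of the log-spline formulation is that the penalized objective is smooth in the low-dimensional parameter $\alpha$. Each site's marginal likelihood is linear, and hence log-concave, in the prior weights, so the log-likelihood is concave in those weights. Concavity in $\alpha$ itself is not guaranteed in general under the exponential family parameterization, but the ridge penalty adds curvature, and in practice standard optimization algorithms, such as Newton–Raphson or L-BFGS, converge rapidly to the penalized maximum $\hat{\alpha}$.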
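The estimation step can be sketched as follows. This is an illustration under stated assumptions rather than the procedure used in the paper: observations `y` are taken to be normal with known site-specific standard errors `se`, the penalty is taken to be `c0 * ||alpha||^2` with a hypothetical value `c0 = 1.0`, a polynomial basis stands in for B-splines, and plain gradient ascent with step halving replaces Newton–Raphson or L-BFGS. All function names are hypothetical.

```python
import numpy as np

def make_basis(grid, p):
    """m x p matrix with orthonormal columns; polynomial stand-in for splines."""
    u = 2.0 * (grid - grid.min()) / (grid.max() - grid.min()) - 1.0
    raw = np.vander(u, p + 1, increasing=True)[:, 1:]
    q, _ = np.linalg.qr(raw - raw.mean(axis=0))
    return q

def prior_weights(alpha, Q):
    eta = Q @ alpha
    w = np.exp(eta - eta.max())
    return w / w.sum()

def likelihood_matrix(y, se, grid):
    """P[i, j]: normal density of y[i] with mean grid[j] and sd se[i]."""
    z = (y[:, None] - grid[None, :]) / se[:, None]
    return np.exp(-0.5 * z**2) / (np.sqrt(2.0 * np.pi) * se[:, None])

def objective(alpha, P, Q, c0):
    """Discretized log marginal likelihood minus the ridge penalty."""
    with np.errstate(divide="ignore"):
        return np.sum(np.log(P @ prior_weights(alpha, Q))) - c0 * np.sum(alpha**2)

def gradient(alpha, P, Q, c0):
    g = prior_weights(alpha, Q)
    post = P * g
    post /= post.sum(axis=1, keepdims=True)  # posterior weights, one row per site
    return Q.T @ (post.sum(axis=0) - P.shape[0] * g) - 2.0 * c0 * alpha

def fit(P, Q, c0=1.0, max_iter=500, tol=1e-8):
    """Gradient ascent with step halving; returns the penalized estimate."""
    alpha = np.zeros(Q.shape[1])
    value = objective(alpha, P, Q, c0)
    for _ in range(max_iter):
        grad = gradient(alpha, P, Q, c0)
        step = 1.0
        while step > 1e-12:
            cand = alpha + step * grad
            cand_value = objective(cand, P, Q, c0)
            if cand_value > value:
                break
            step *= 0.5
        else:
            break  # no improving step found
        improved = cand_value - value
        alpha, value = cand, cand_value
        if improved < tol:
            break
    return alpha

# Simulated heteroscedastic data from a bimodal effect distribution.
rng = np.random.default_rng(0)
K = 200
theta = rng.choice([-1.5, 1.5], size=K) + 0.3 * rng.normal(size=K)
se = rng.uniform(0.3, 0.8, size=K)
y = theta + se * rng.normal(size=K)

grid = np.linspace(-5.0, 5.0, 101)
Q = make_basis(grid, p=5)
P = likelihood_matrix(y, se, grid)
alpha_hat = fit(P, Q, c0=1.0)
g_hat = prior_weights(alpha_hat, Q)
```

Because the likelihood matrix `P` is built row by row from each site's own standard error, the sketch handles heteroscedastic observations directly on the effect scale, without a z-score transformation.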

3.3. Posterior Inference and Shrinkage Estimation

Having obtained $\hat{\alpha}$, and hence $\hat{g} = g(\hat{\alpha})$, we can compute the empirical Bayes posterior distribution for each site $i$ by reweighting the estimated prior mass at each grid point by the likelihood of that site's observation and normalizing. These posterior weights yield the EB point estimate (the posterior mean) and the posterior variance.

The EB estimator exhibits adaptive shrinkage: observations with large signal-to-noise ratios are shrunk less toward the center of $\hat{g}$, while those with small signal-to-noise ratios are shrunk more aggressively. This data-driven shrinkage can be understood through the posterior weight function, which balances the likelihood contribution against the estimated prior density.
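A small numerical sketch of the posterior weights and the resulting shrinkage follows. It assumes a normal observation model with known standard errors and uses a standard-normal-shaped prior on the grid purely for illustration (not an estimated log-spline prior); `eb_posterior` is a hypothetical helper name.

```python
import numpy as np

def eb_posterior(y, se, grid, g):
    """Posterior weights, means, and variances on the grid for each site."""
    z = (y[:, None] - grid[None, :]) / se[:, None]
    # Normal kernel times prior weight; per-site constants cancel on normalizing.
    w = np.exp(-0.5 * z**2) * g[None, :]
    w /= w.sum(axis=1, keepdims=True)
    mean = w @ grid
    var = w @ grid**2 - mean**2
    return w, mean, var

grid = np.linspace(-4.0, 4.0, 161)
g = np.exp(-0.5 * grid**2)
g /= g.sum()  # illustrative N(0, 1)-shaped prior on the grid

# Two sites with the same observed estimate but different precision.
y = np.array([2.0, 2.0])
se = np.array([0.5, 1.5])
w, post_mean, post_var = eb_posterior(y, se, grid, g)
print(post_mean, post_var)
```

With this prior, the grid-based posterior means should be close to the closed-form normal-normal value $y / (1 + \mathrm{se}^2)$, so the noisier site is pulled further toward the prior center even though both sites report the same estimate.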

Efron [16] established that under suitable regularity conditions, the EB estimator achieves near-oracle performance in terms of compound risk. Specifically, if the true prior $g$ lies within the log-spline family, then as $K \to \infty$ the compound risk of the EB estimator approaches the oracle Bayes risk achieved by knowing $g$. This regret bound, with the dimension $p$ appearing only as a multiplicative constant, demonstrates the efficiency of the parametric g-modeling approach compared to nonparametric alternatives that typically achieve logarithmic rates.

3.4. Uncertainty Quantification for Plug-In EB

A fundamental limitation of the plug-in EB approach described above is that it treats the estimated prior as fixed when computing posterior quantities. This leads to anti-conservative uncertainty quantification that fails to account for the variability in estimating g from finite data. To understand this issue clearly, we must distinguish between two conceptually different sources of uncertainty:

Definition 1 (Posterior uncertainty of the parameter). For a given prior $g$ and the observation at site $i$, the posterior variance of $\theta_i$ quantifies uncertainty about the true parameter $\theta_i$. This is a Bayesian concept representing our updated beliefs about where $\theta_i$ lies after observing data.

Definition 2 (Sampling uncertainty of the estimator). The frequentist variance of the point estimate quantifies how much that estimate would vary across repeated realizations of the entire dataset. This measures the stability of the estimation procedure itself.

Efron [16,29] addressed the second type of uncertainty using the delta method. Treating the EB posterior mean as a function of $\hat{\alpha}$, a first-order Taylor expansion expresses its sampling variance as a quadratic form in the gradient of the posterior mean with respect to $\alpha$ and the covariance matrix of $\hat{\alpha}$, where the latter is obtained from the inverse of the penalized Hessian at convergence. The gradient can be computed analytically using the chain rule, where the derivatives of the posterior weights follow from straightforward but tedious algebra involving the exponential family structure.

The delta-method standard error from (11) quantifies the frequentist variability of the EB estimator: it tells us how uncertain our point estimate is, not how uncertain we should be about $\theta_i$ itself. This distinction becomes particularly important in finite samples where the uncertainty in estimating $g$ can be substantial. To illustrate this conceptually, consider decomposing the total uncertainty about $\theta_i$ into two terms: the posterior variance of $\theta_i$ under a given prior, and the variability induced by estimating that prior. The plug-in EB approach captures only the first term, while the delta method approximates the second. Neither alone provides a complete picture of our uncertainty about $\theta_i$. A formal proof of this decomposition and its implications for coverage is provided in Appendix A.
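One natural way to write such a two-term decomposition is the law of total variance. The display below is a sketch in assumed notation ($y_i$ for the site-$i$ observation, and expectations taken over the uncertainty in $\alpha$ given the data); the exact form proved in Appendix A may differ.

```latex
\operatorname{Var}(\theta_i \mid \text{data})
  = \underbrace{\mathbb{E}_{\alpha \mid \text{data}}
      \big[ \operatorname{Var}(\theta_i \mid y_i, \alpha) \big]}_{\text{posterior variance under a given prior}}
  + \underbrace{\operatorname{Var}_{\alpha \mid \text{data}}
      \big( \mathbb{E}[\theta_i \mid y_i, \alpha] \big)}_{\text{variability from estimating the prior}}
```

Under this reading, the plug-in EB variance evaluates the first term at the single value $\hat{\alpha}$, and the delta method supplies an approximation to the second term.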

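Efron [16] also proposed a parametric bootstrap (Type III bootstrap) as an alternative to the delta method. The procedure resamples data from the fitted model:

Draw bootstrap effects from $\hat{g}$ and then bootstrap observations from the observation model, for $i = 1, \dots, K$.

Re-estimate $\alpha$ from the bootstrap observations.

Compute the bootstrap posterior means using the re-estimated prior.

The empirical distribution of these posterior means across bootstrap replications estimates the sampling distribution of the EB estimator. However, like the delta method, this still targets estimator uncertainty rather than parameter uncertainty.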
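The three bootstrap steps can be sketched in a few lines. This is a hedged illustration, not Efron's or the paper's code: it assumes normal observations with known standard errors, a ridge penalty `c0 * ||alpha||^2`, a polynomial stand-in for the spline basis, and a simple gradient-ascent fit. It also assumes that the bootstrap posterior means are evaluated at the original observations with the re-estimated prior. All names are hypothetical.

```python
import numpy as np

def make_basis(grid, p):
    u = 2.0 * (grid - grid.min()) / (grid.max() - grid.min()) - 1.0
    raw = np.vander(u, p + 1, increasing=True)[:, 1:]
    q, _ = np.linalg.qr(raw - raw.mean(axis=0))
    return q

def prior_weights(alpha, Q):
    eta = Q @ alpha
    w = np.exp(eta - eta.max())
    return w / w.sum()

def posterior_mean(y, se, grid, g):
    z = (y[:, None] - grid[None, :]) / se[:, None]
    w = np.exp(-0.5 * z**2) * g
    w /= w.sum(axis=1, keepdims=True)
    return w @ grid

def fit(y, se, grid, Q, c0=1.0, n_iter=100):
    """Penalized marginal likelihood via gradient ascent with step halving."""
    z = (y[:, None] - grid[None, :]) / se[:, None]
    P = np.exp(-0.5 * z**2) / se[:, None]

    def obj(a):
        with np.errstate(divide="ignore"):
            return np.sum(np.log(P @ prior_weights(a, Q))) - c0 * (a @ a)

    alpha = np.zeros(Q.shape[1])
    for _ in range(n_iter):
        g = prior_weights(alpha, Q)
        w = P * g
        w /= w.sum(axis=1, keepdims=True)
        grad = Q.T @ (w.sum(axis=0) - y.size * g) - 2.0 * c0 * alpha
        step, current = 1.0, obj(alpha)
        while step > 1e-10 and obj(alpha + step * grad) <= current:
            step *= 0.5
        if step <= 1e-10:
            break
        alpha = alpha + step * grad
    return alpha

def bootstrap_se(y, se, grid, Q, n_boot=20, seed=0):
    """Bootstrap standard error of the EB posterior mean for each site."""
    rng = np.random.default_rng(seed)
    g_hat = prior_weights(fit(y, se, grid, Q), Q)
    boot = np.empty((n_boot, y.size))
    for b in range(n_boot):
        theta_star = rng.choice(grid, size=y.size, p=g_hat)      # step 1: effects
        y_star = theta_star + se * rng.normal(size=y.size)       # step 1: observations
        g_star = prior_weights(fit(y_star, se, grid, Q), Q)      # step 2: re-estimate
        boot[b] = posterior_mean(y, se, grid, g_star)            # step 3: posterior means
    return boot.std(axis=0, ddof=1)

rng = np.random.default_rng(1)
K = 60
theta = rng.choice([-1.5, 1.5], size=K) + 0.3 * rng.normal(size=K)
se = rng.uniform(0.3, 0.8, size=K)
y = theta + se * rng.normal(size=K)
grid = np.linspace(-5.0, 5.0, 101)
Q = make_basis(grid, p=5)
se_boot = bootstrap_se(y, se, grid, Q)
```

The returned values describe how much each site's posterior mean moves when the prior is re-estimated, which is estimator uncertainty in the sense of Definition 2 and not the posterior spread of $\theta_i$.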

This fundamental limitation of plug-in EB methods, namely their inability to fully propagate hyperparameter uncertainty into posterior inference, motivates the fully Bayesian approach developed in the next section. By placing a hyperprior on $\alpha$ and integrating over its posterior distribution, we can obtain posterior intervals for $\theta_i$ that properly reflect all sources of uncertainty, achieving nominal coverage in finite samples without ad hoc corrections.

4. Fully Bayesian Estimation

4.1. Finite-Bayes Inference and Site-Specific Effects

The empirical Bayes framework developed in the previous section excels at compound risk minimization and provides computationally efficient shrinkage estimators. However, when scientific interest narrows to specific site-level effects, which Efron [16] terms the finite-Bayes inferential setting, the plug-in nature of empirical Bayes methods becomes problematic. In this setting, the inferential goal shifts from minimizing average squared error across all $K$ sites to providing accurate and calibrated uncertainty statements for individual effects $\theta_i$ [18].

To illustrate this distinction, consider a multisite educational trial where site $i$ represents a particular school of interest. While the empirical Bayes estimator may achieve excellent average performance across all sites, stakeholders at site $i$ require not just a point estimate but a full posterior distribution that accurately reflects all sources of uncertainty. This posterior should account for both the measurement error in the site's observed estimate and the uncertainty inherent in estimating the population distribution $G$ from finite data.

The finite-Bayes perspective recognizes that different inferential goals demand different solutions [18,24]. When the focus narrows to precise and unbiased single-site inference, the anti-conservative uncertainty quantification of plug-in EB methods, which treat $\hat{g}$ as if it were the true prior, becomes untenable. The fully Bayesian approach developed in this section addresses this limitation by propagating hyperparameter uncertainty through to site-level posterior inference, ensuring that posterior credible intervals achieve their nominal coverage even in finite samples.

4.2. Hierarchical Model Specification

To fully propagate uncertainty from the hyperparameter level to site-specific posteriors, we embed Efron’s log-spline prior within a hierarchical Bayesian framework. The model specification is as follows:

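Level 1 (Observation model): the observed estimate for site $i$ follows a normal distribution centered at $\theta_i$ with a site-specific standard deviation.

Level 2 (Population model with discrete approximation): each $\theta_i$ is drawn from a discrete distribution supported on a fine grid, whose weights form a probability mass function on that grid.

Level 3 (Log-spline prior with adaptive regularization): the weights are obtained by exponentiating $Q\alpha$ and normalizing, where $Q$ is the spline basis matrix and $\mathbf{1}$ is the vector of ones used in the normalization; $\alpha$ receives a regularizing prior whose scale has a half-Cauchy hyperprior.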
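For concreteness, the three levels can be written out as below. This is a sketch under assumed notation, not a transcription of the paper's equations: $y_i$ and $\sigma_i$ for the observed estimate and its standard error, $\theta_{(1)}, \dots, \theta_{(m)}$ for the grid points, $\lambda$ for the adaptive regularization scale, $s$ for the half-Cauchy scale, and a zero-mean normal prior on $\alpha$ chosen because it corresponds to a ridge penalty.

```latex
\begin{aligned}
\text{Level 1:}\quad & y_i \mid \theta_i \sim \mathcal{N}(\theta_i, \sigma_i^2),
   \qquad i = 1, \dots, K, \\
\text{Level 2:}\quad & \Pr\big(\theta_i = \theta_{(j)} \mid \alpha\big) = g_j(\alpha),
   \qquad j = 1, \dots, m, \\
\text{Level 3:}\quad & g(\alpha) = \frac{\exp(Q\alpha)}{\mathbf{1}^\top \exp(Q\alpha)},
   \qquad \alpha \mid \lambda \sim \mathcal{N}(0, \lambda^2 I_p),
   \qquad \lambda \sim \text{Half-Cauchy}(0, s).
\end{aligned}
```

In this form the negative log prior on $\alpha$ is proportional to $\|\alpha\|^2 / \lambda^2$, which is how a fixed ridge penalty becomes a quantity learned from the data.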

The key innovation relative to the empirical Bayes approach lies in replacing the fixed ridge penalty parameter with an adaptive regularization parameter that is learned from the data. The half-Cauchy prior on this parameter serves as a weakly informative hyperprior that allows the data to determine the appropriate level of regularization. This specification has several important properties:

Scale-invariance: The half-Cauchy prior remains weakly informative across different scales of $\alpha$, unlike conjugate gamma priors that can become inadvertently informative.

Adaptive regularization: The hierarchical structure is equivalent to the ridge-penalized likelihood, but with the penalty strength estimated from the data rather than fixed.

Computational stability: The reparameterization ensures that the posterior distribution remains well-behaved even when some components of $\alpha$ are near zero.

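The joint posterior density for all parameters given the observed data is proportional to the product of the normal likelihood contributions, the discrete population model, and the priors on the log-spline coefficients and the regularization parameter. Here the observation model uses the normal density with a site-specific standard deviation, and the hyperprior is the half-Cauchy density.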

4.3. Marginalization and Posterior Inference

While the joint posterior (18) contains all relevant information, practical inference requires marginalization to obtain site-specific posteriors. The Stan implementation presented in Appendix B achieves this through a two-stage process that exploits the discrete nature of the approximating grid.

First, for each MCMC iteration, we compute the prior weights induced by the current draw of the hyperparameters. Then, the posterior distribution for site $i$ given the current hyperparameters is obtained by multiplying these weights by the likelihood of the site's observation at each grid point and normalizing.

The key insight is that marginalizing over the posterior distribution of $\alpha$ automatically integrates over all sources of uncertainty: the marginal posterior for site $i$ is approximated by averaging the conditional posteriors over the $S$ MCMC samples.

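This marginalization principle extends to any functional of interest. The generated quantities block in the Stan code in Appendix B demonstrates three important examples:

Posterior mean: the average of the grid points weighted by the marginal posterior probabilities.

Posterior mode (MAP): the grid point with the largest marginal posterior probability.

Credible intervals: for a given credibility level, we find lower and upper grid points such that the marginal posterior probability between them equals that level.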
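The two-stage marginalization and the three summaries can be illustrated outside Stan. The sketch below is not the Appendix B code: it uses randomly generated stand-ins for posterior draws of $\alpha$ (no MCMC is run), a polynomial stand-in for the spline basis, and an equal-tailed interval read off the grid; `site_summaries` and the other names are hypothetical.

```python
import numpy as np

def make_basis(grid, p):
    u = 2.0 * (grid - grid.min()) / (grid.max() - grid.min()) - 1.0
    raw = np.vander(u, p + 1, increasing=True)[:, 1:]
    q, _ = np.linalg.qr(raw - raw.mean(axis=0))
    return q

def site_summaries(y_i, se_i, grid, Q, alpha_draws, level=0.90):
    """Marginal posterior on the grid for one site, plus mean, mode, interval."""
    # Stage 1: log prior weights for every draw (S x m), up to a constant per draw.
    eta = alpha_draws @ Q.T
    # Stage 2: conditional posterior per draw; normalizing constants cancel row-wise.
    log_post = eta - 0.5 * ((y_i - grid[None, :]) / se_i) ** 2
    log_post -= log_post.max(axis=1, keepdims=True)
    w = np.exp(log_post)
    w /= w.sum(axis=1, keepdims=True)
    # Marginalize over draws by averaging the conditional posteriors.
    marginal = w.mean(axis=0)
    mean = float(marginal @ grid)
    mode = float(grid[np.argmax(marginal)])
    cdf = np.cumsum(marginal)
    tail = (1.0 - level) / 2.0
    lower = float(grid[np.searchsorted(cdf, tail)])
    upper = float(grid[np.searchsorted(cdf, 1.0 - tail)])
    return marginal, mean, mode, (lower, upper)

rng = np.random.default_rng(3)
grid = np.linspace(-4.0, 4.0, 161)
Q = make_basis(grid, p=5)

# Hypothetical stand-in for S posterior draws of alpha.
S = 500
alpha_draws = np.array([0.0, -2.0, 0.0, 0.0, 0.0]) + 0.3 * rng.normal(size=(S, 5))

marginal, mean, mode, (lower, upper) = site_summaries(1.0, 0.5, grid, Q, alpha_draws)
print(mean, mode, lower, upper)
```

Because the interval endpoints are restricted to grid points, the enclosed probability is at least the requested level rather than exactly equal to it; a finer grid narrows that gap.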

Each of these quantities automatically incorporates uncertainty from all levels of the hierarchy. Unlike the plug-in EB approach, which conditions on $\hat{g}$, or the delta-method correction, which approximates only first-order uncertainty, the fully Bayesian posterior samples provide exact finite-sample inference under the model, up to Monte Carlo error.

Furthermore, if we examine the variance of the conditional posterior mean of $\theta_i$ across MCMC iterations, we recover the quantity approximated by the delta method or Type III bootstrap in the empirical Bayes framework. This demonstrates that the fully Bayesian approach subsumes the uncertainty quantification attempts of empirical Bayes while additionally providing proper posterior inference for the parameters themselves. See Appendix A for a mathematical treatment of how the FB approach captures both sources of uncertainty.
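The relationship between the two variances can be verified numerically for a single site. The sketch below uses hypothetical per-draw priors (a normal-shaped log-density with a randomly shifted center, standing in for MCMC draws of the log-spline prior) and checks that the variance of the marginal posterior equals the average within-draw variance plus the between-draw variance of the conditional means.

```python
import numpy as np

rng = np.random.default_rng(2)
grid = np.linspace(-3.0, 3.0, 121)
S = 400

# Hypothetical per-draw log prior weights: one shifted quadratic per draw.
centers = rng.normal(0.0, 0.4, size=S)
log_prior = -0.5 * (grid[None, :] - centers[:, None]) ** 2

# Conditional posterior on the grid for one site, given each draw.
y_i, se_i = 1.0, 0.7
log_post = log_prior - 0.5 * ((y_i - grid[None, :]) / se_i) ** 2
w = np.exp(log_post - log_post.max(axis=1, keepdims=True))
w /= w.sum(axis=1, keepdims=True)

cond_mean = w @ grid                      # posterior mean under each draw
cond_var = w @ grid**2 - cond_mean**2     # posterior variance under each draw

marginal = w.mean(axis=0)                 # average over draws
total_var = marginal @ grid**2 - (marginal @ grid) ** 2

within = cond_var.mean()                  # parameter uncertainty given the prior
between = cond_mean.var()                 # variability of the posterior mean (ddof=0)
print(total_var, within, between)
```

The `between` term is the analogue of what the delta method and the bootstrap approximate, while `total_var` is what a credible interval for the site effect should reflect.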

4.4. Advantages over the Empirical Bayes Approach

Table 1 summarizes the key distinctions between empirical Bayes and fully Bayesian implementations of the log-spline framework across multiple dimensions of statistical practice.

The fully Bayesian approach offers compelling advantages when accurate uncertainty quantification for individual effects is paramount. The ability to make calibrated probability statements (for example, the posterior probability that $\theta_i$ exceeds a clinically relevant threshold) without post hoc corrections represents a fundamental improvement over plug-in methods. This advantage becomes particularly pronounced in settings with moderate K (10–50 sites) where hyperparameter uncertainty substantially impacts site-level inference.

Moreover, the FB framework naturally accommodates model extensions that would require substantial theoretical development in the EB setting. Incorporating site-level covariates, modeling correlation structures, or adding mixture components for null effects can be achieved through straightforward modifications to the Stan code, with uncertainty propagation handled automatically by the MCMC machinery.

The primary cost of these advantages is computational: while EB optimization typically completes in seconds, FB inference may require minutes to hours depending on the problem size and desired Monte Carlo accuracy. However, given that meta-analyses are rarely time-critical and that accurate uncertainty quantification has substantial scientific value, this computational overhead is generally acceptable.

In summary, fully Bayesian inference aligns exactly with the finite-Bayes inferential goal and produces internally coherent uncertainty statements for individual effects, while offering greater modeling flexibility at only modest additional computational cost. The empirical Bayes approach remains valuable for rapid point estimation and approximate shrinkage, particularly when K is large and hyperparameter uncertainty negligible. But when calibrated inference for an individual effect is the priority, the fully Bayesian treatment of the log-spline model provides a rigorous and practically achievable solution to the meta-analytic deconvolution problem.

6. Simulation Results

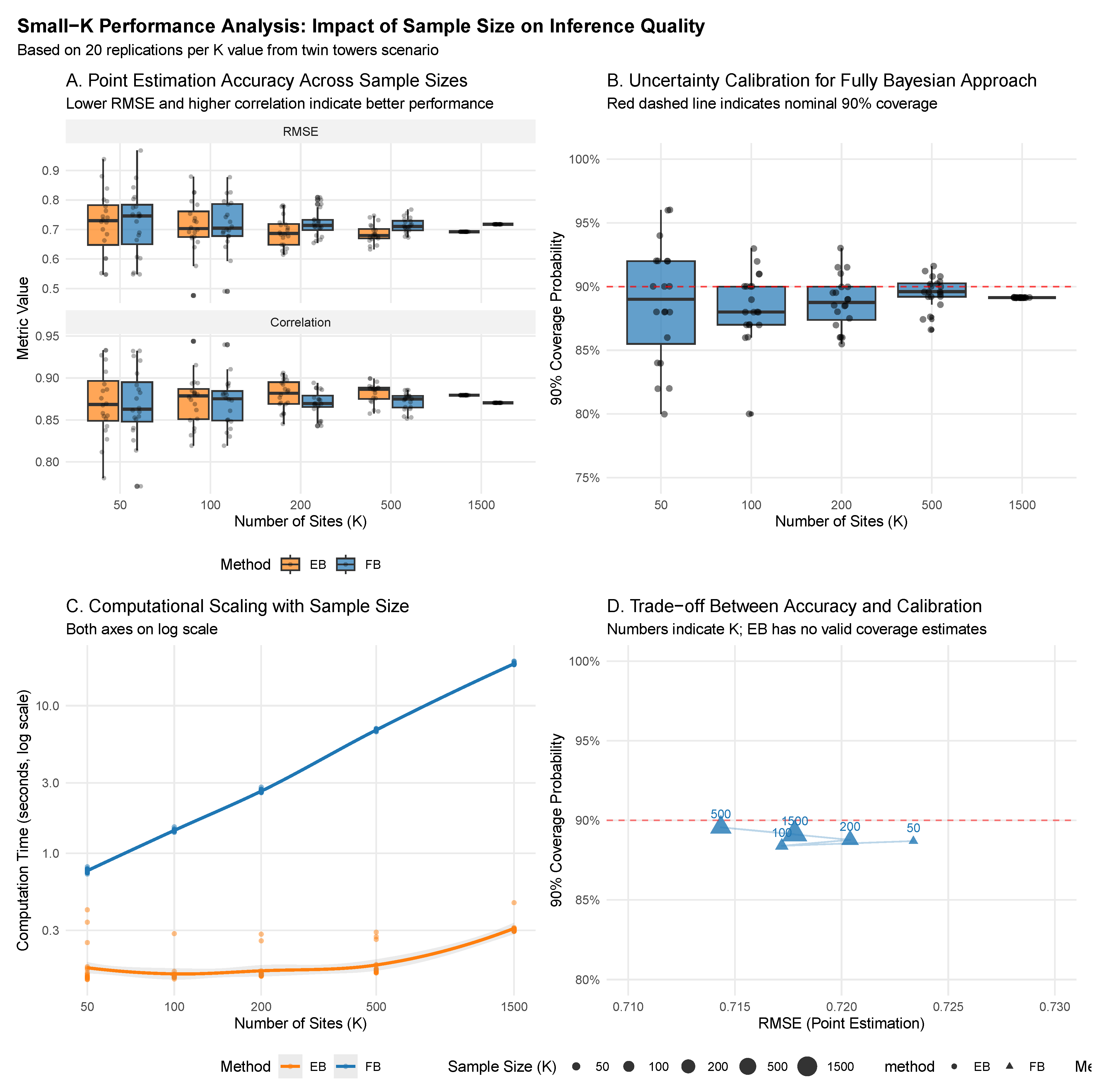

We present our findings in two parts: first examining the accuracy of point estimates in recovering true site-specific effects, followed by an assessment of the calibration and validity of uncertainty quantification. Throughout, we compare the fully Bayesian (FB) approach with empirical Bayes (EB) across the six simulation scenarios defined by the reliability $I$ and the heterogeneity ratio.

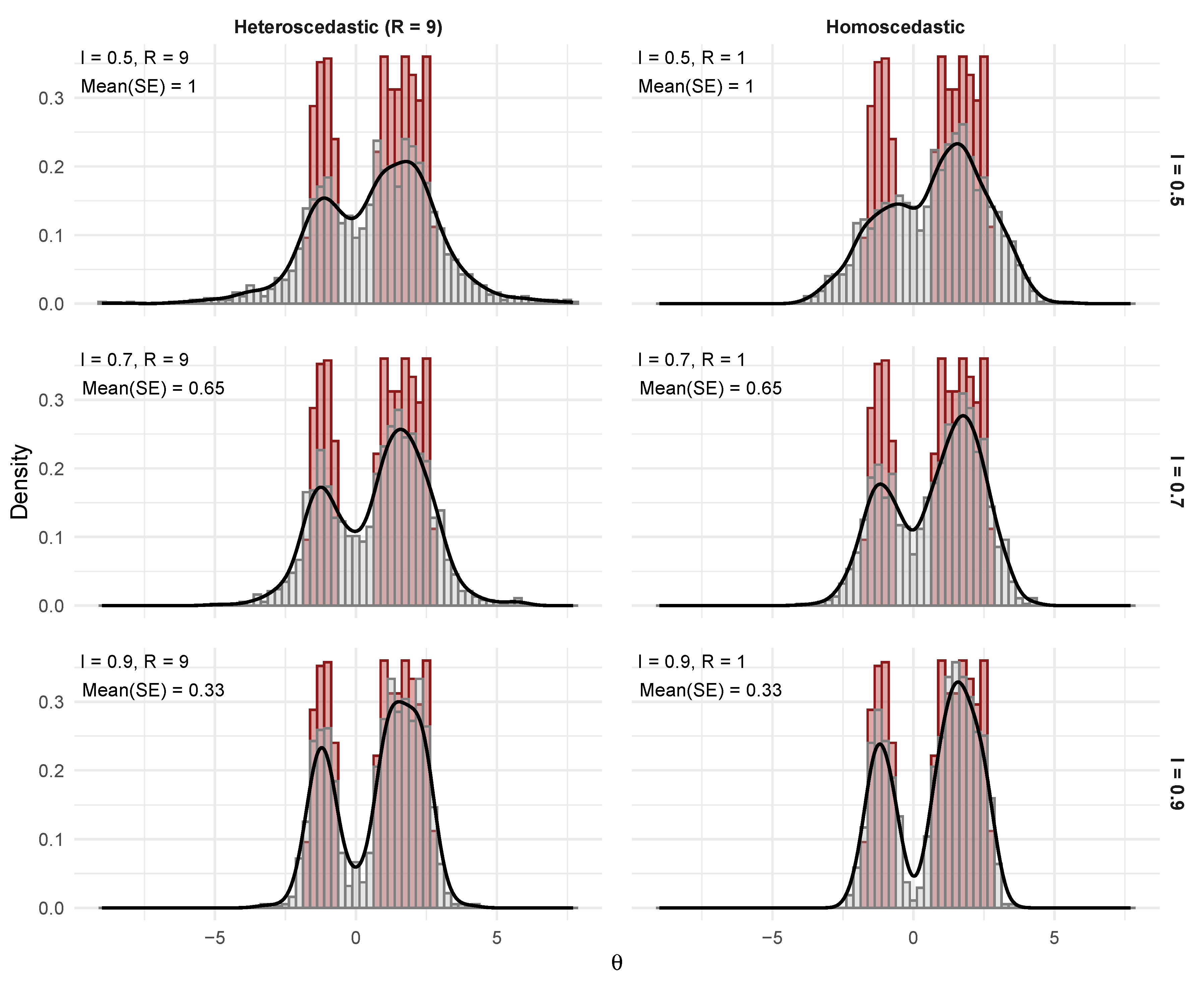

6.1. Point Estimation Accuracy

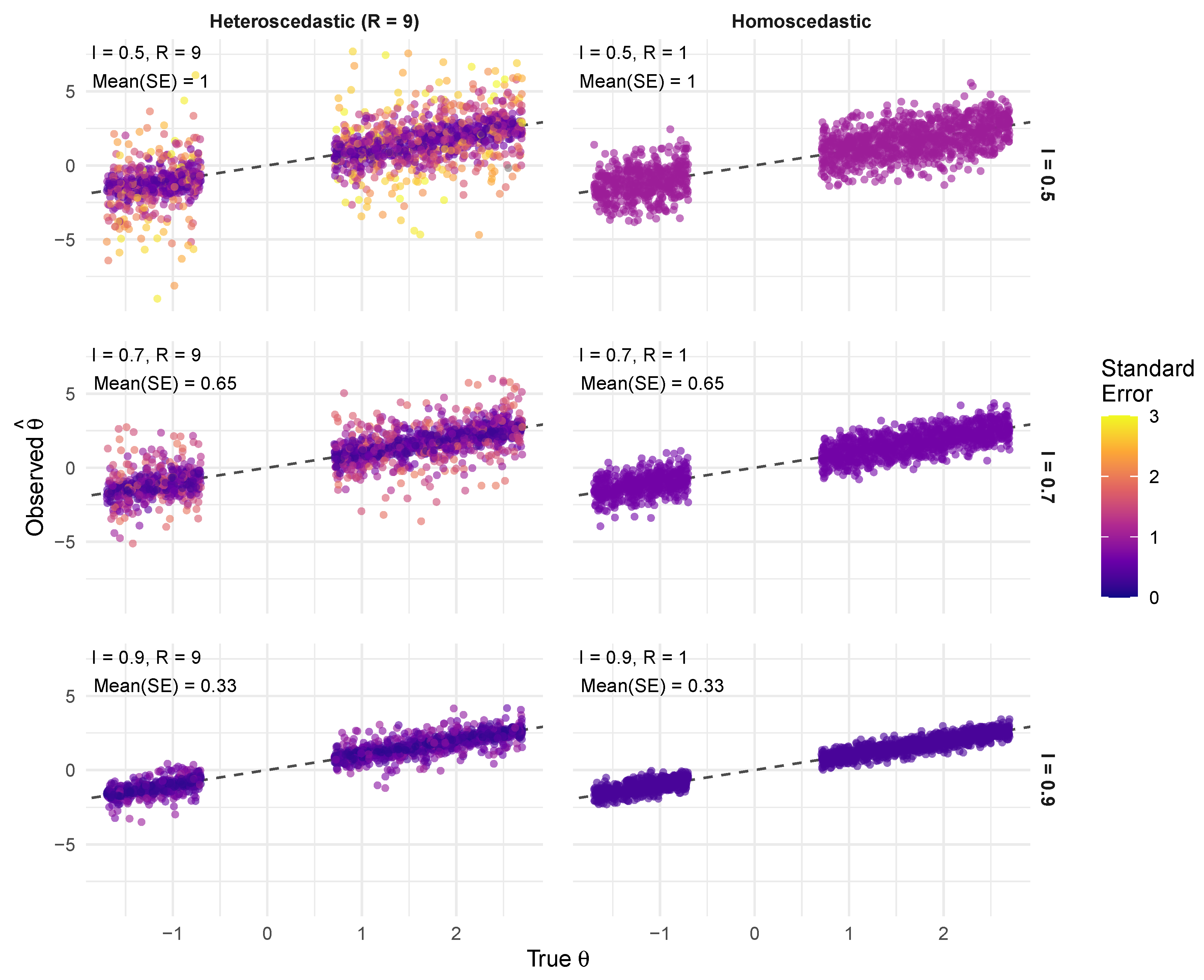

Figure 3 provides a comprehensive visual assessment of how both methods recover the true bimodal distribution across varying data quality conditions. The most striking finding is the near-perfect agreement between FB (solid blue) and EB (dashed orange) posterior means across all scenarios, validating the empirical Bayes approximation for point estimation purposes. Both methods demonstrate sophisticated adaptive shrinkage, producing estimates that optimally balance between the noisy observed data (gray histograms) and the true distribution (red histograms).

The effect of reliability $I$ dominates the recovery performance. At low reliability, both methods appropriately shrink estimates toward a unimodal compromise, barely preserving the bimodal structure. This conservative behavior reflects optimal decision-making under high measurement error: when individual observations are unreliable, the methods correctly pool information toward the center of the prior. As reliability increases, the distinct “twin towers” structure emerges clearly in the estimates, closely tracking the true distribution.

Heteroscedasticity shows more subtle effects. Comparing columns in Figure 3, the heteroscedastic cases exhibit slightly better mode separation, particularly at intermediate reliability. This improvement stems from high-precision sites (those with small standard errors) providing sharp information about the distribution’s structure, effectively compensating for noisier observations. However, this benefit is modest compared to the dominant effect of average reliability.

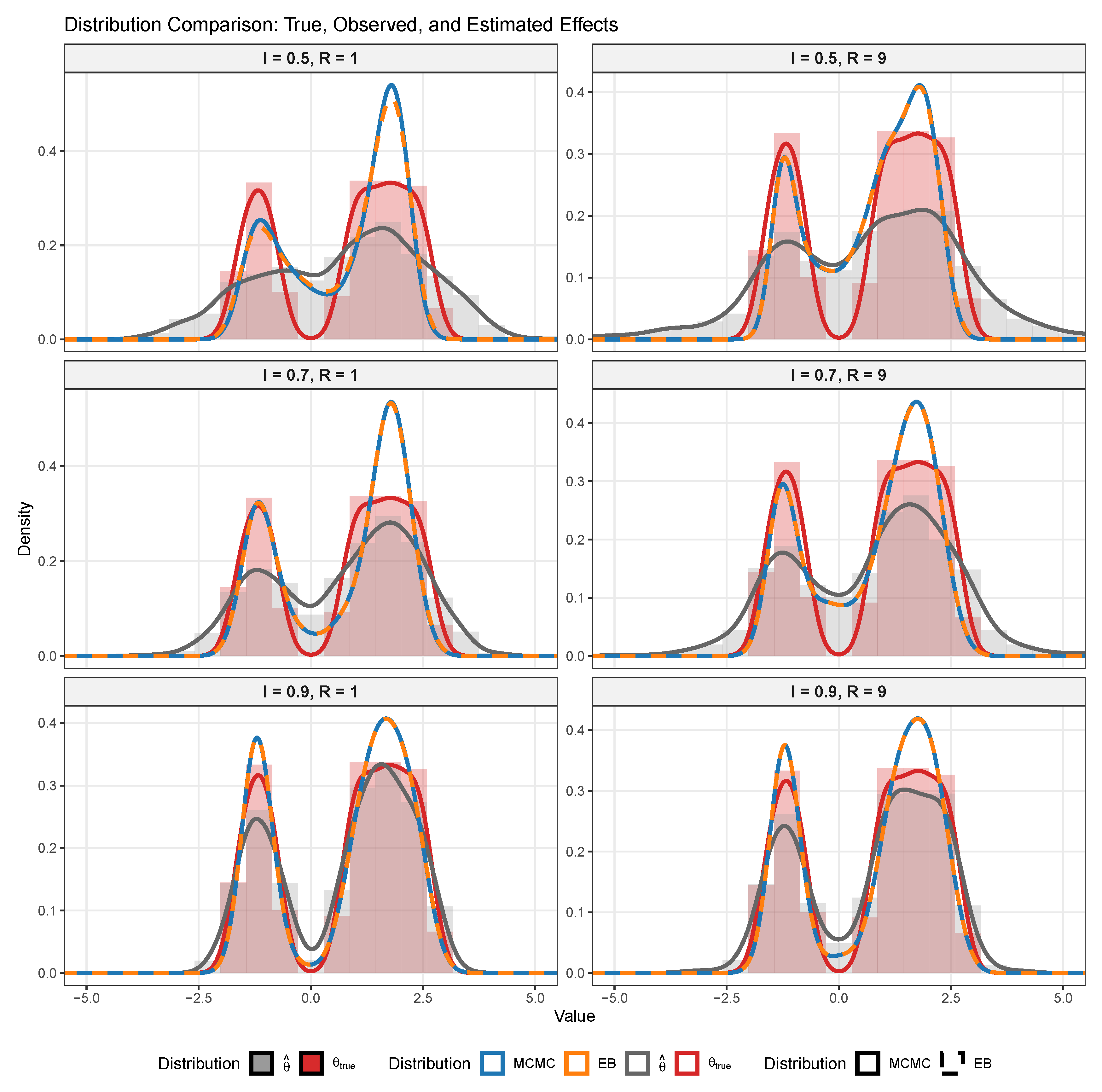

Figure 4 quantifies these visual patterns through formal performance metrics. The RMSE analysis reveals remarkable consistency across methods: differences between FB, EB, and even MAP estimates are typically at most a few thousandths. For instance, at one challenging low-reliability condition, the RMSE values are 0.757, 0.759, and 0.763, respectively, which is essentially indistinguishable for practical purposes. This equivalence extends across all conditions, demonstrating that the computational efficiency of EB (seconds) versus FB (minutes) comes with virtually no sacrifice in point estimation quality.

The dramatic impact of reliability on accuracy is quantified precisely: RMSE decreases from approximately 0.76–0.81 at the lowest reliability to 0.27–0.35 at the highest, a reduction in error of up to roughly 65%. The correlation heatmap reinforces this pattern even more strikingly, with correlations increasing from 0.83–0.85 to 0.97–0.98 across the same range. These near-perfect correlations at high reliability suggest we approach the theoretical limit of estimation accuracy given the discrete grid approximation and finite sample size.

Interestingly, heteroscedasticity’s impact on accuracy metrics is mixed and generally modest. While RMSE sometimes improves slightly under heteroscedasticity (e.g., at low reliability: 0.814 vs. 0.757), correlations show the opposite pattern at low reliability (0.829 vs. 0.853). This suggests a trade-off: the benefit of having some high-precision sites is partially offset by the challenge of appropriately weighting vastly different information sources. At high reliability, these differences become negligible, with all methods achieving correlations exceeding 0.97.

The consistency of results across posterior means and modes (MAP) deserves emphasis. Even these fundamentally different point estimates—one minimizing squared error, the other maximizing posterior probability—yield comparable accuracy in the log-spline framework. This robustness suggests that the adaptive shrinkage inherent in the Bayesian framework, rather than the specific choice of point estimate, drives the excellent performance.

These results powerfully demonstrate that when point estimation is the primary goal, the computationally efficient EB approach sacrifices essentially nothing compared to full MCMC inference. The methods’ ability to recover complex bimodal structure from noisy observations, particularly evident as reliability increases, validates the log-spline framework as a flexible yet stable solution to the deconvolution problem.

6.2. Calibration of Uncertainty Estimates

While point estimation accuracy is crucial, the ability to provide well-calibrated credible intervals distinguishes the fully Bayesian approach from empirical Bayes alternatives. In this section, we evaluate two distinct types of uncertainty quantification: (1) parameter uncertainty about the true effects $\theta_i$, and (2) estimator uncertainty about the posterior mean as a point estimate. The empirical Bayes intervals are computed using the delta method approximation detailed in Section 3.4, while the fully Bayesian approach provides exact finite-sample inference through MCMC marginalization. We now examine whether the nominal 90% credible intervals achieve their stated coverage and explore deeper aspects of inferential validity.

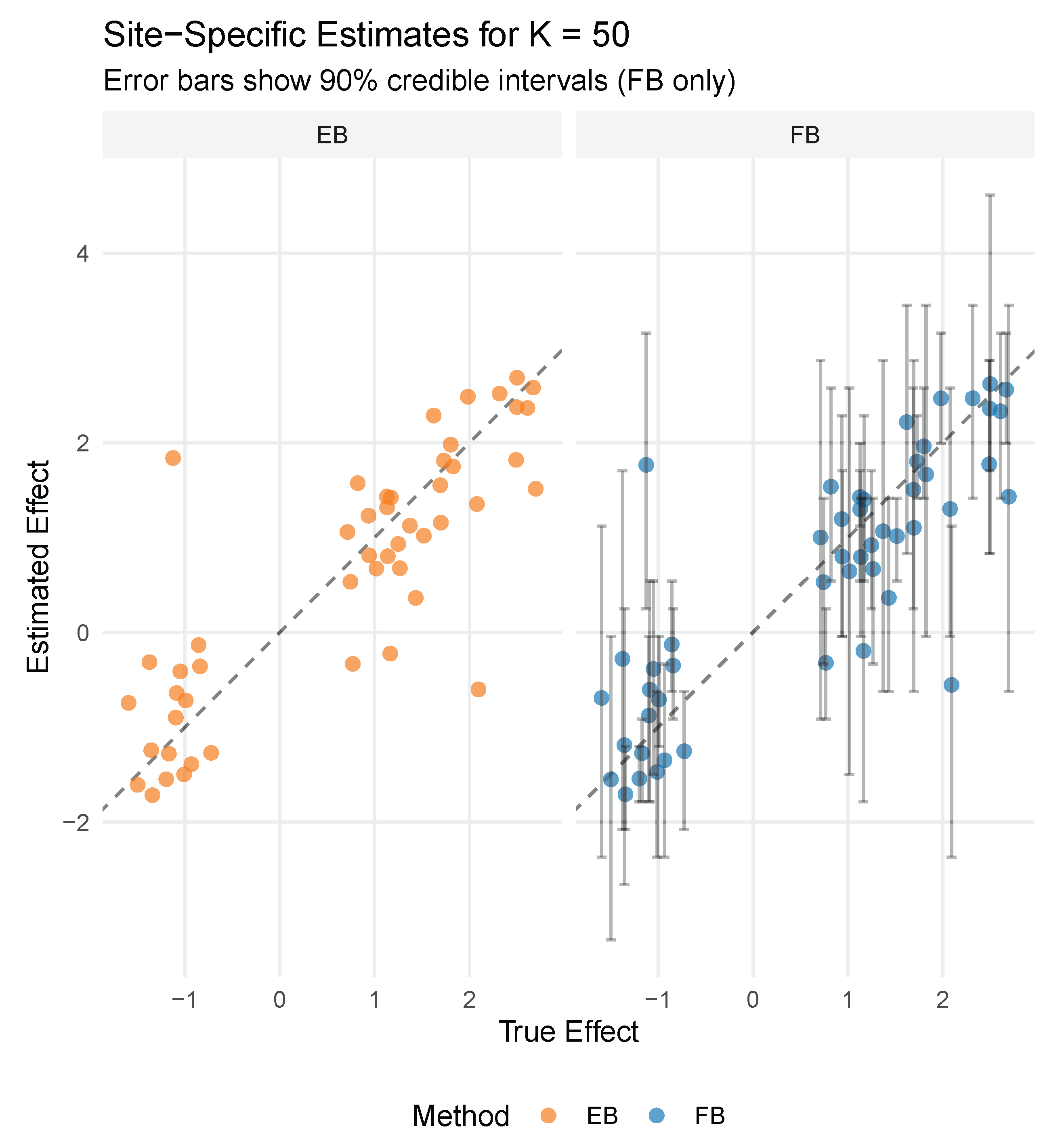

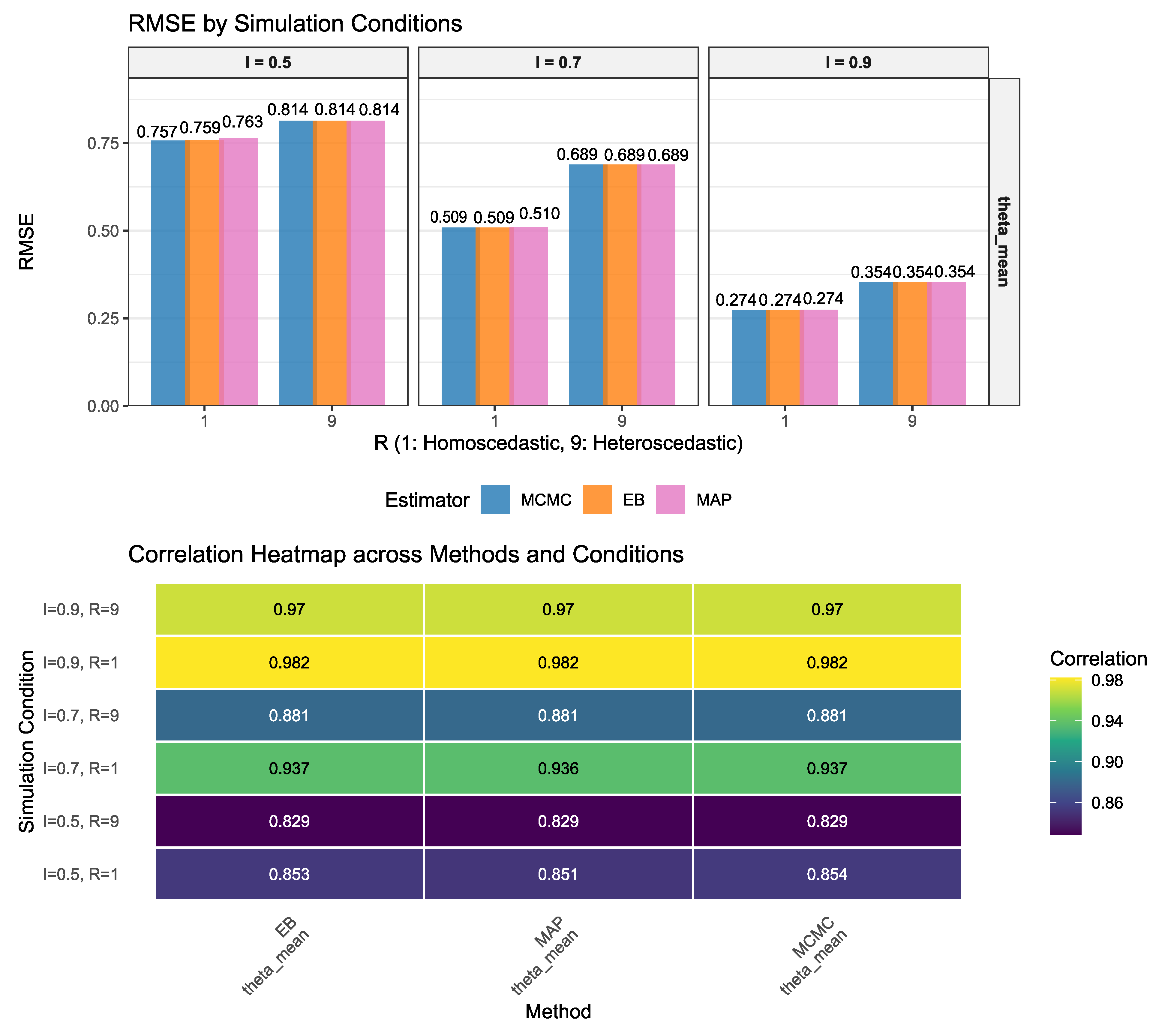

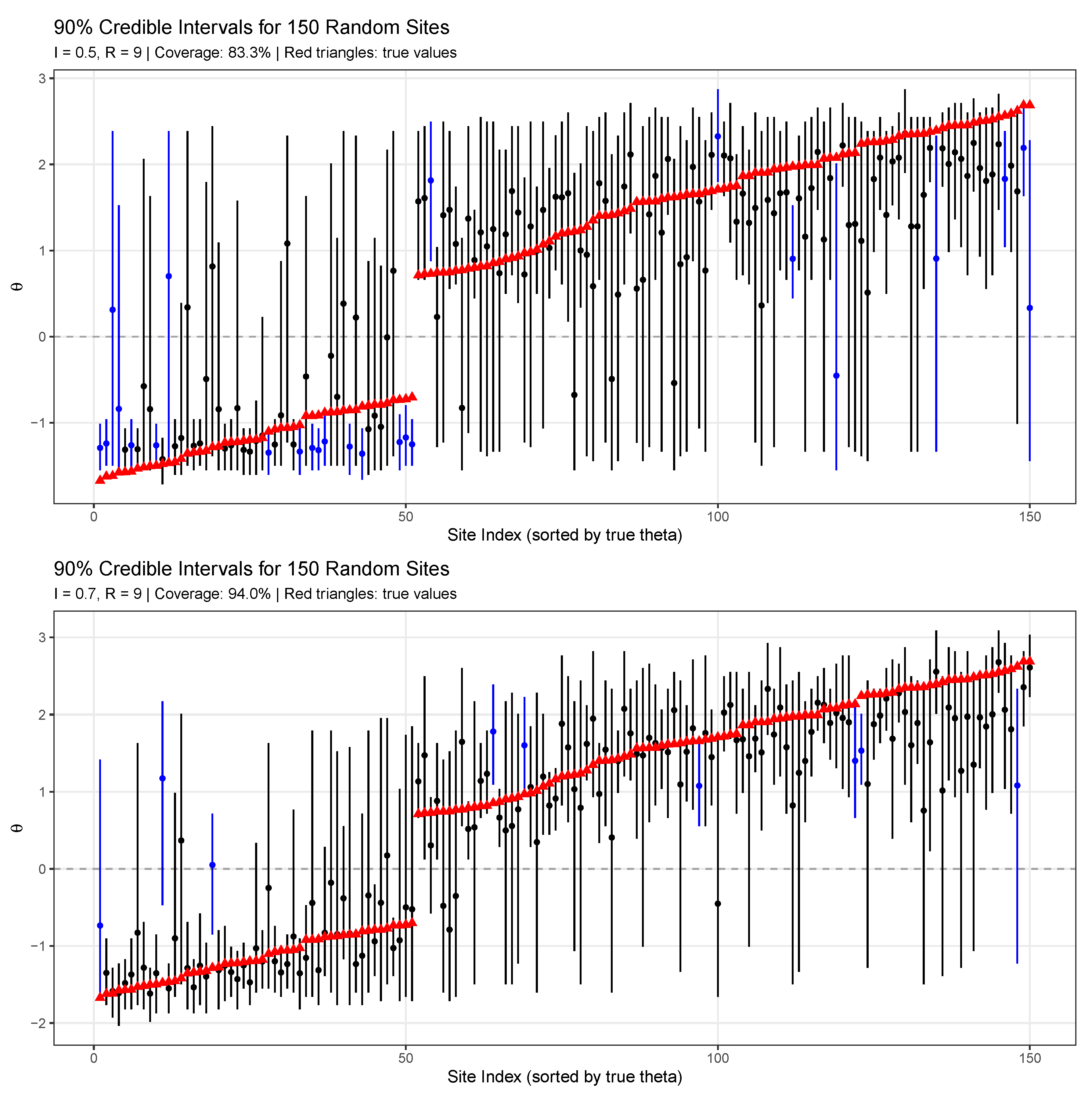

Figure 5 provides an intuitive visualization of interval coverage by displaying 90% credible intervals for 150 randomly sampled sites. The intervals are sorted by true effect size and colored to indicate coverage (black) or non-coverage (blue) of the true values (red triangles). In the top panel (low reliability), 83.3% of intervals contain the true values, falling short of the nominal 90% coverage. This mild undercoverage at low reliability reflects the challenge of uncertainty quantification when measurement error dominates. The bottom panel achieves 94.0% coverage, slightly exceeding the nominal level. The visual pattern reveals that miscoverage is not systematic, since both extreme and moderate effects can fall outside their intervals, suggesting proper calibration rather than systematic bias.

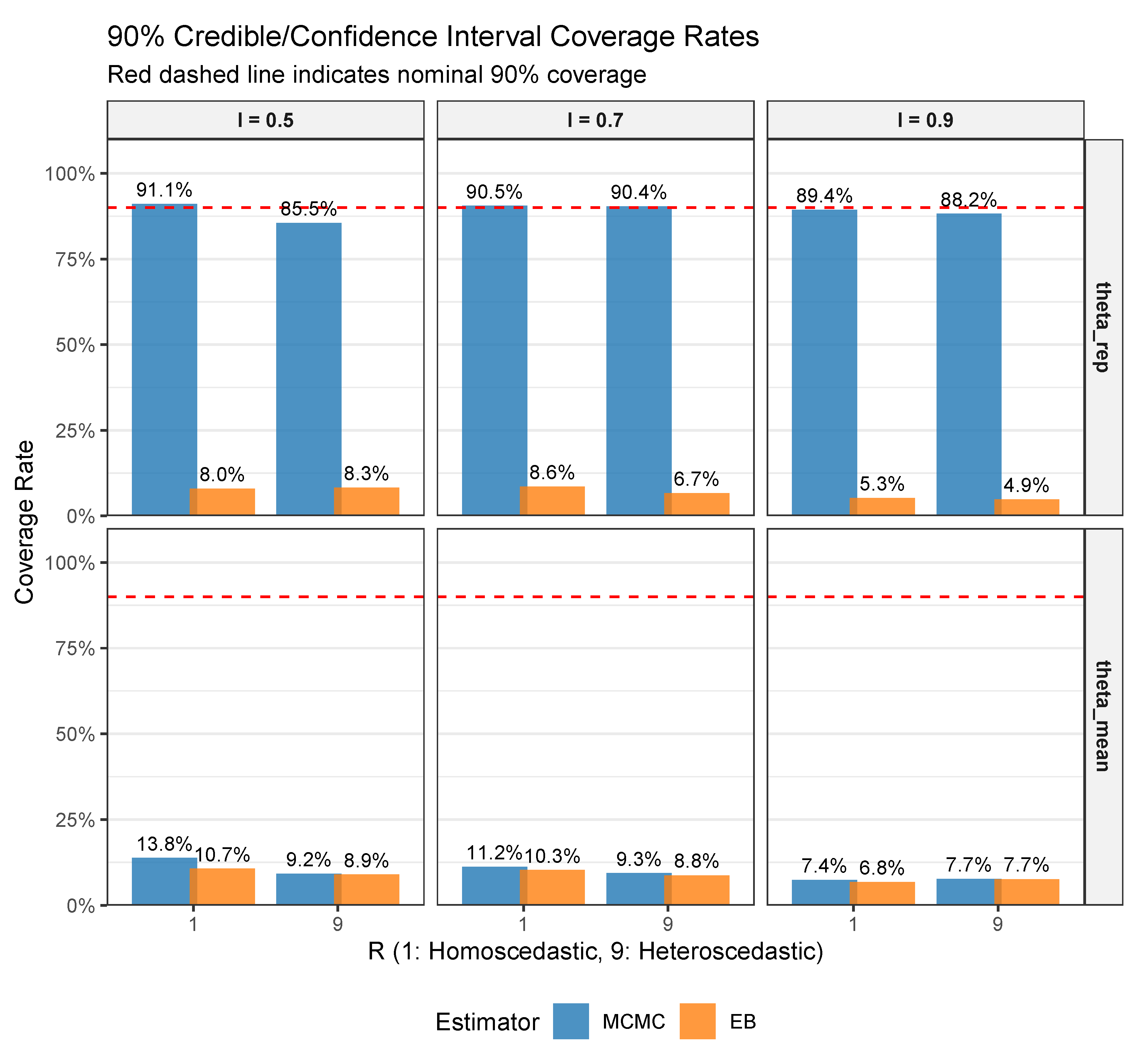

Figure 6 extends this analysis to all 1500 sites across the full range of simulation conditions, revealing a fundamental distinction between two types of uncertainty. The top row displays coverage for $\theta_i$, representing posterior uncertainty about the parameters themselves. Here, the FB approach (MCMC) consistently achieves coverage near the nominal 90% level, ranging from 88.2% to 91.1%. In stark contrast, the EB approach yields severely anti-conservative coverage, dropping as low as 4.9% at high reliability. This dramatic undercoverage directly results from treating the estimated prior $\hat{g}$ as fixed, thereby ignoring hyperparameter uncertainty, which is precisely the limitation identified in Section 3.4 and proven formally in Appendix A.

The bottom row tells a different story. When examining the posterior mean as an estimator, both FB and EB achieve similarly low coverage (6.8% to 13.8%). This apparent failure is not a deficiency but rather reflects that these intervals target estimator uncertainty (how variable the posterior mean is across repeated experiments) rather than parameter uncertainty (where the true $\theta_i$ lies given the data). The near-identical performance of FB and EB for this target confirms that both methods correctly quantify this narrower source of variability, with FB’s marginal posterior variance of the estimator (Equation (22)) matching EB’s delta-method approximation.

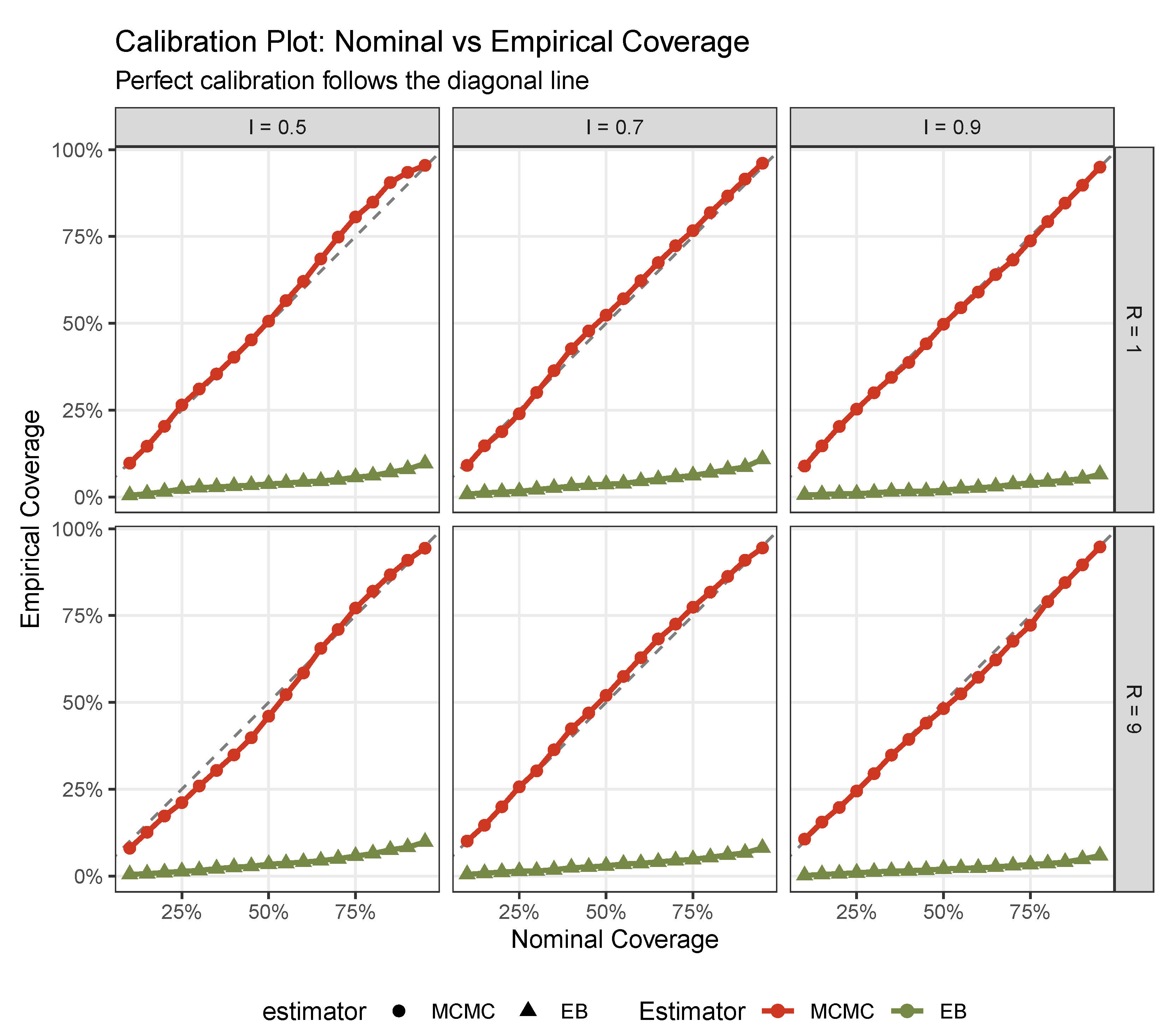

Figure 7 provides a more nuanced assessment through calibration plots, examining coverage across all nominal levels from 10% to 95%. Perfect calibration would follow the diagonal line. The FB approach (red circles) adheres remarkably closely to this ideal across all conditions, with only minor deviations at extreme nominal levels. In contrast, the EB approach (green triangles) exhibits severe miscalibration, with empirical coverage plateauing far below nominal levels. This pattern holds across all reliability and heteroscedasticity conditions, confirming that FB’s proper uncertainty propagation yields well-calibrated inference throughout the probability scale.
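The coverage-versus-nominal comparison behind such a calibration plot reduces to a short computation. The sketch below is illustrative only and does not reproduce the paper's simulation: it uses a conjugate normal-normal model whose exact posterior is known, and it mimics an anti-conservative procedure by shrinking the interval half-width by a hypothetical factor of 0.6. The function name `empirical_coverage` is also hypothetical.

```python
import numpy as np
from statistics import NormalDist

def empirical_coverage(theta, center, half_width):
    """Share of true effects falling inside center +/- half_width."""
    return float(np.mean(np.abs(theta - center) <= half_width))

rng = np.random.default_rng(1)
n, se = 20000, 1.0
theta = rng.normal(0.0, 1.0, size=n)          # true effects from a N(0, 1) prior
y = theta + se * rng.normal(size=n)           # noisy observations

# Exact normal-normal posterior for each site.
post_mean = y / (1.0 + se**2)
post_sd = np.sqrt(se**2 / (1.0 + se**2))

levels = np.arange(0.10, 0.96, 0.05)
calibrated, too_narrow = [], []
for level in levels:
    zq = NormalDist().inv_cdf(0.5 + level / 2.0)   # two-sided normal quantile
    calibrated.append(empirical_coverage(theta, post_mean, zq * post_sd))
    # Hypothetical understated uncertainty: interval width scaled by 0.6.
    too_narrow.append(empirical_coverage(theta, post_mean, 0.6 * zq * post_sd))

for level, c, t in zip(levels, calibrated, too_narrow):
    print(f"nominal {level:.2f}  exact posterior {c:.3f}  narrowed {t:.3f}")
```

Plotting `calibrated` and `too_narrow` against `levels` gives the same kind of picture as a calibration plot: the exact posterior tracks the diagonal, while understated uncertainty falls below it at every nominal level.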

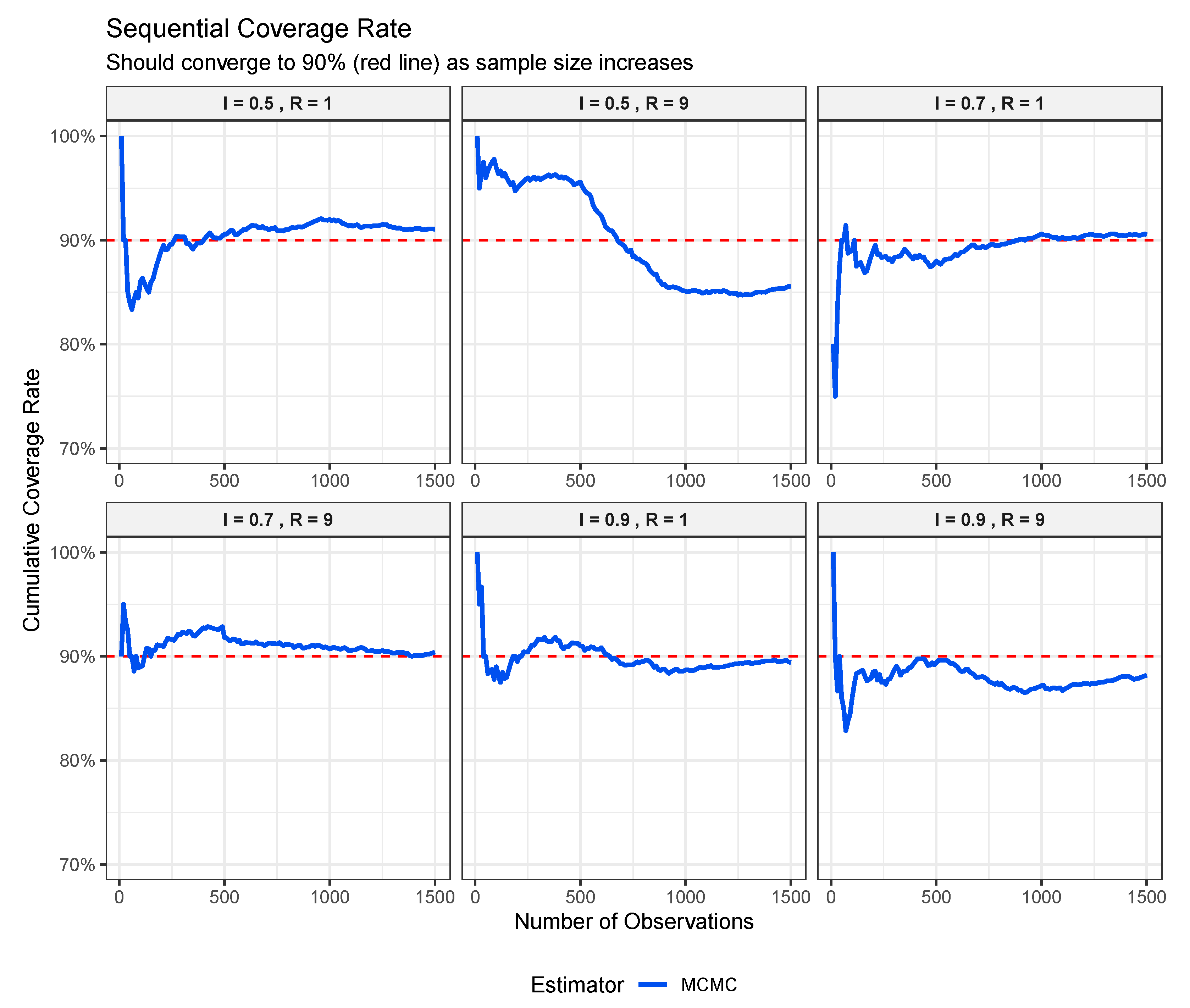

Figure 8 examines the stability of coverage as evidence accumulates. These sequential coverage plots track the cumulative coverage rate as sites are processed in order. For FB inference, coverage quickly stabilizes near 90% after processing a few hundred sites, with only minor fluctuations thereafter. The most notable exception occurs at

, where coverage drifts downward after site 500, suggesting some sensitivity to the ordering of heteroscedastic observations at low reliability. Nevertheless, all conditions eventually converge to reasonable coverage levels, demonstrating the reliability of FB inference even in finite samples.

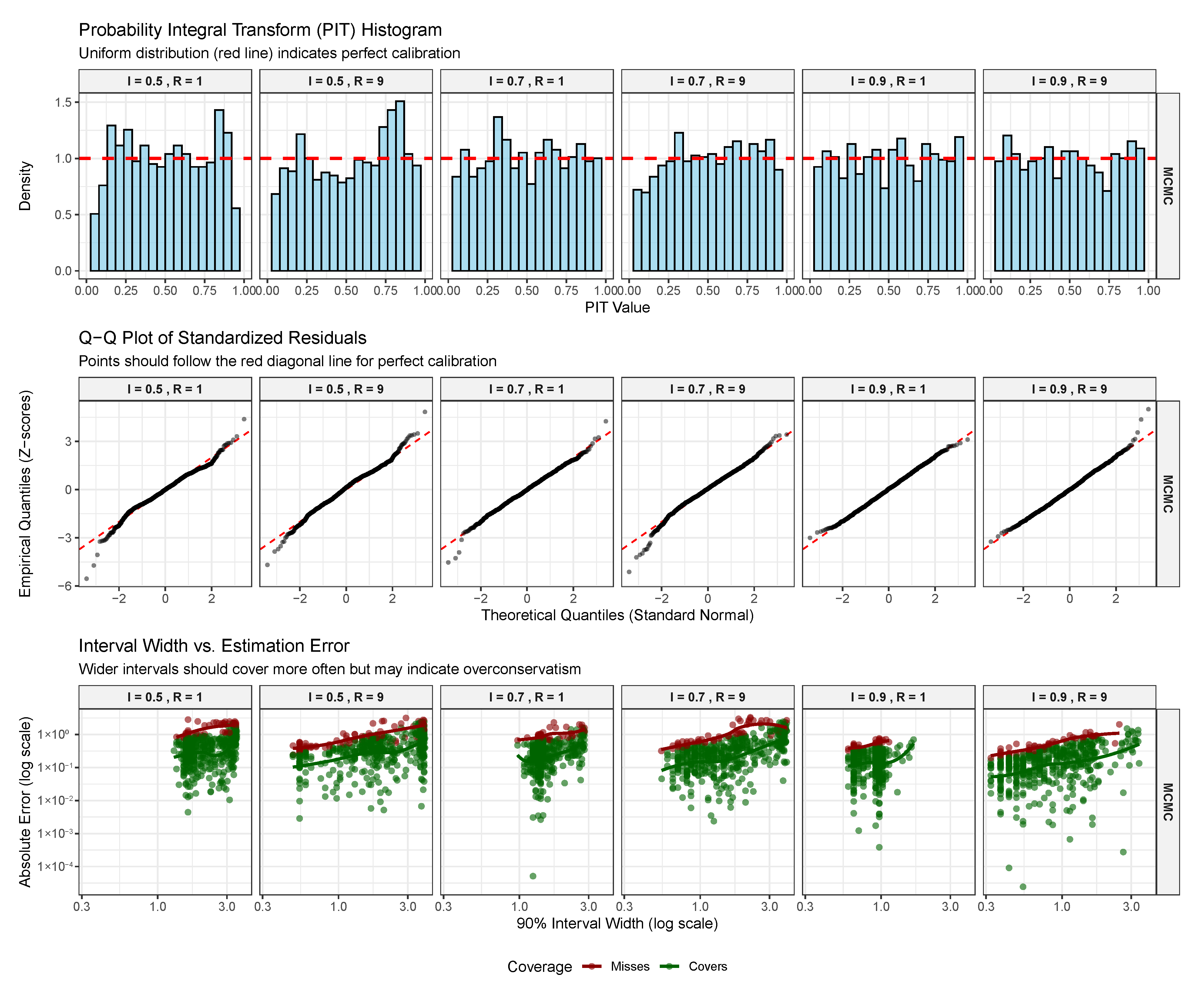
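The cumulative coverage rate tracked in these plots is a running mean of per-site coverage indicators. A minimal sketch, assuming the indicators are already available as a 0/1 sequence in processing order (the function name `running_coverage` is hypothetical):

```python
import numpy as np

def running_coverage(covered):
    """Cumulative coverage rate after each site, given 0/1 coverage indicators."""
    covered = np.asarray(covered, dtype=float)
    return np.cumsum(covered) / np.arange(1, covered.size + 1)

# Four sites: covered, missed, covered, covered
rc = running_coverage([1, 0, 1, 1])
```

Because early values average over only a few sites, the curve is volatile at first and settles as more sites are processed.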

Figure 9 presents three additional diagnostic perspectives. The PIT histograms assess whether the predictive distributions are properly calibrated—under correct specification, these should be uniform. The FB results show reasonable uniformity with mild deviations, particularly some overdispersion at low reliability. The Q-Q plots of standardized residuals similarly confirm approximate normality, with minor heavy-tail behavior at the extremes. Most revealing is the bottom panel examining interval width versus estimation error. The strong positive correlation between interval width and absolute error, combined with high coverage for wide intervals (green points), indicates that the FB approach appropriately adapts uncertainty to the available information. Sites with narrow intervals tend to have small errors, while uncertain estimates correctly acknowledge their imprecision through wider intervals.
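In a simulation where the true effects are known, PIT values can be approximated from posterior draws as the fraction of draws falling at or below the truth. The sketch below illustrates the idea under assumptions: the function name `pit_values` is hypothetical, the PIT is evaluated at the true parameter rather than at held-out data, and the conjugate normal toy model is not the paper's model.

```python
import numpy as np

def pit_values(draws, theta_true):
    """Monte Carlo PIT: share of posterior draws at or below the true value, per site.

    draws      : array of shape (S, K)
    theta_true : array of shape (K,)
    """
    draws = np.asarray(draws, dtype=float)
    theta_true = np.asarray(theta_true, dtype=float)
    return np.mean(draws <= theta_true, axis=0)

# Toy check (assumed model): theta ~ N(0, 1), y | theta ~ N(theta, 1),
# exact posterior N(y / 2, 1 / 2), so PIT values should look uniform.
rng = np.random.default_rng(1)
K, S = 2000, 1000
theta = rng.normal(0.0, 1.0, size=K)
y = theta + rng.normal(0.0, 1.0, size=K)
draws = rng.normal(y / 2.0, np.sqrt(0.5), size=(S, K))

pit = pit_values(draws, theta)
counts, edges = np.histogram(pit, bins=10, range=(0.0, 1.0))
```

Roughly equal bin counts indicate calibration. A U-shaped histogram points to intervals that are too narrow, and a hump in the middle points to intervals that are too wide.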

Taken together, these results establish a clear hierarchy of uncertainty quantification approaches. The FB method provides properly calibrated posterior inference for the parameters themselves, achieving near-nominal coverage through exact finite-sample marginalization over hyperparameter uncertainty. The EB approach, while computationally attractive, yields severely anti-conservative parameter inference due to its plug-in nature. When the inferential target shifts to estimator uncertainty, both approaches perform equivalently, but such intervals answer a fundamentally different question: how variable our estimation procedure is, rather than where the true parameter lies. For scientific applications requiring honest uncertainty assessment about site-specific effects, the fully Bayesian approach emerges as the principled choice, providing well-calibrated inference at modest additional computational cost.

The calibration results presented here are complemented by extensive sensitivity analyses in the appendices.

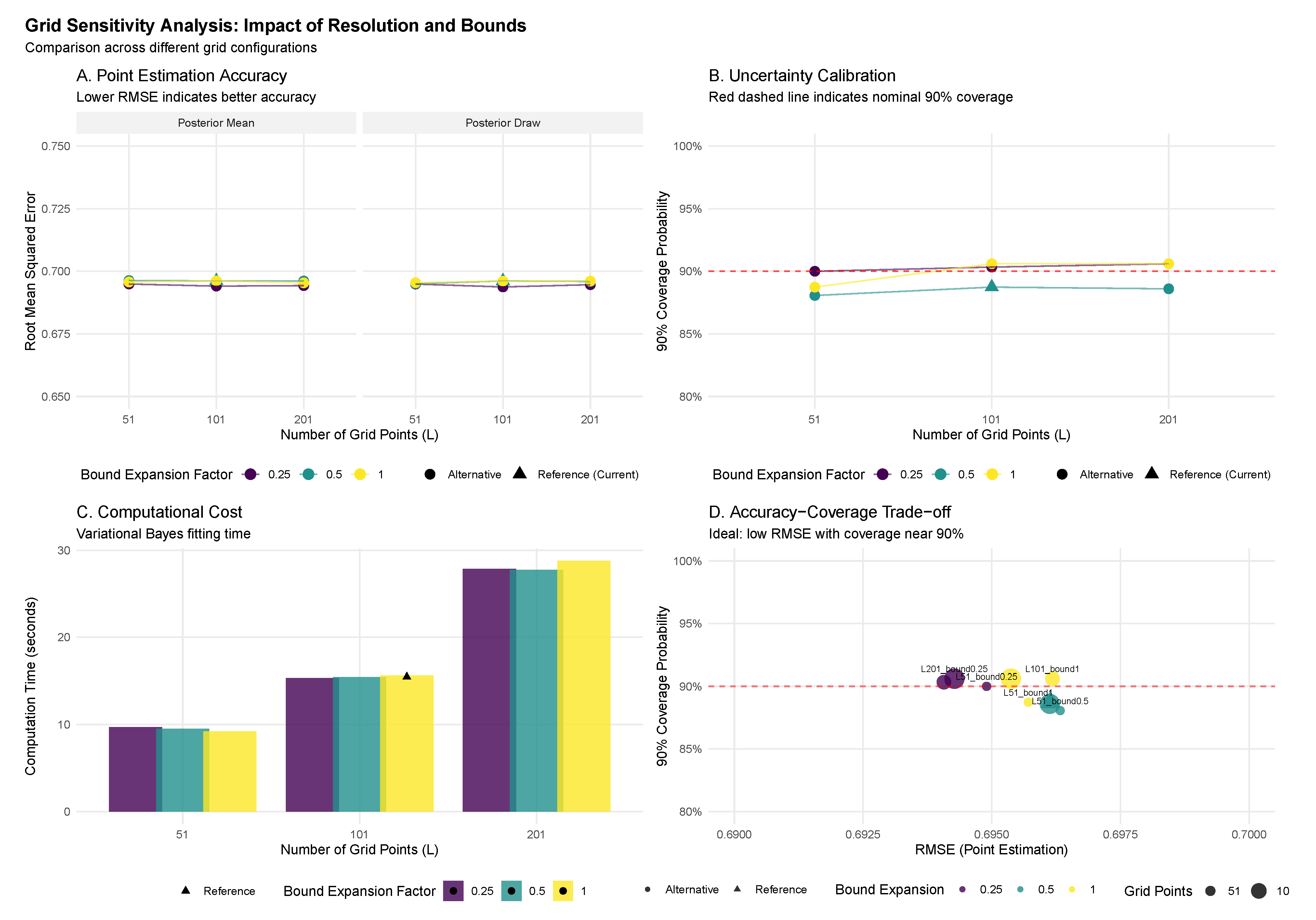

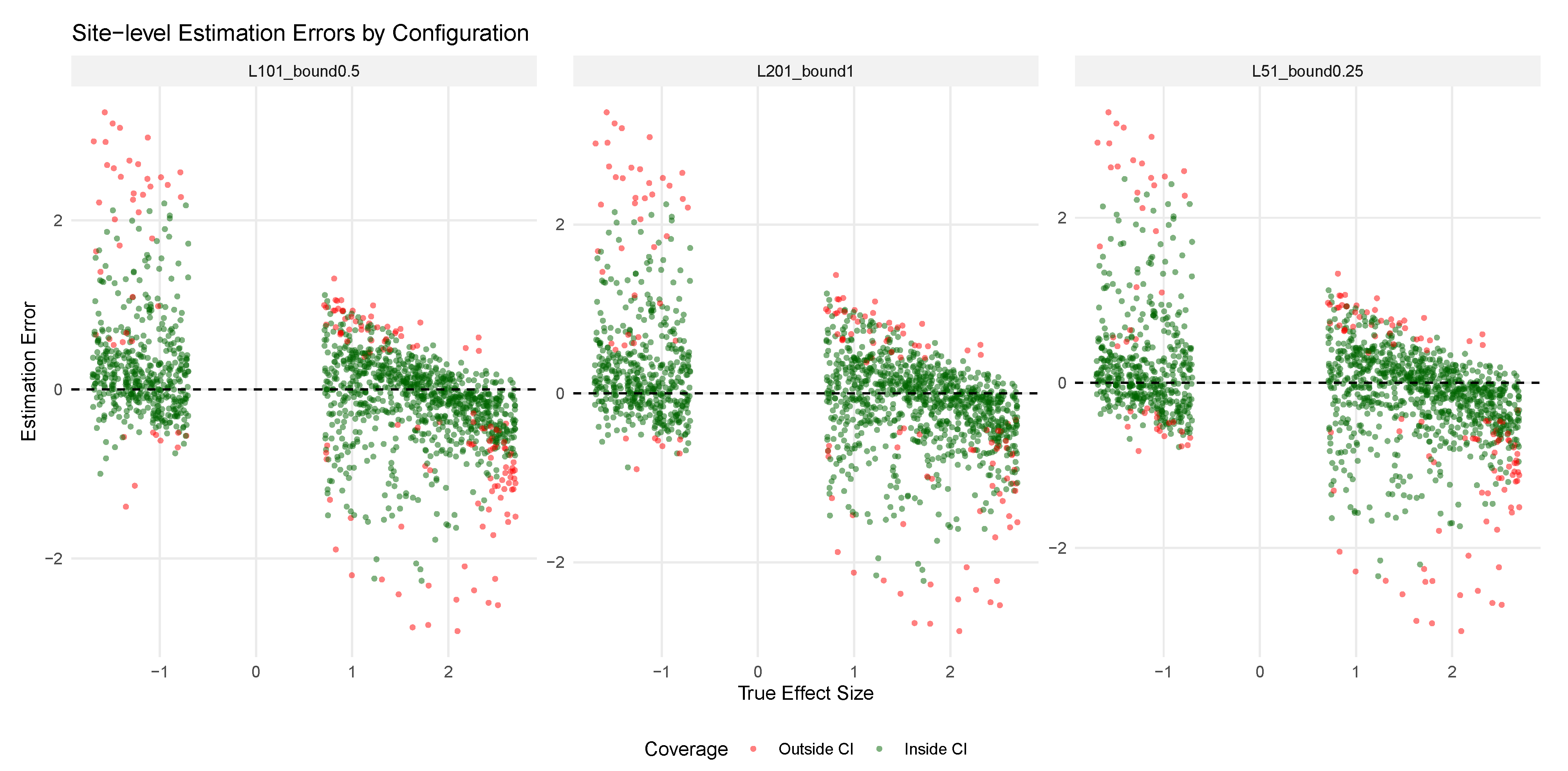

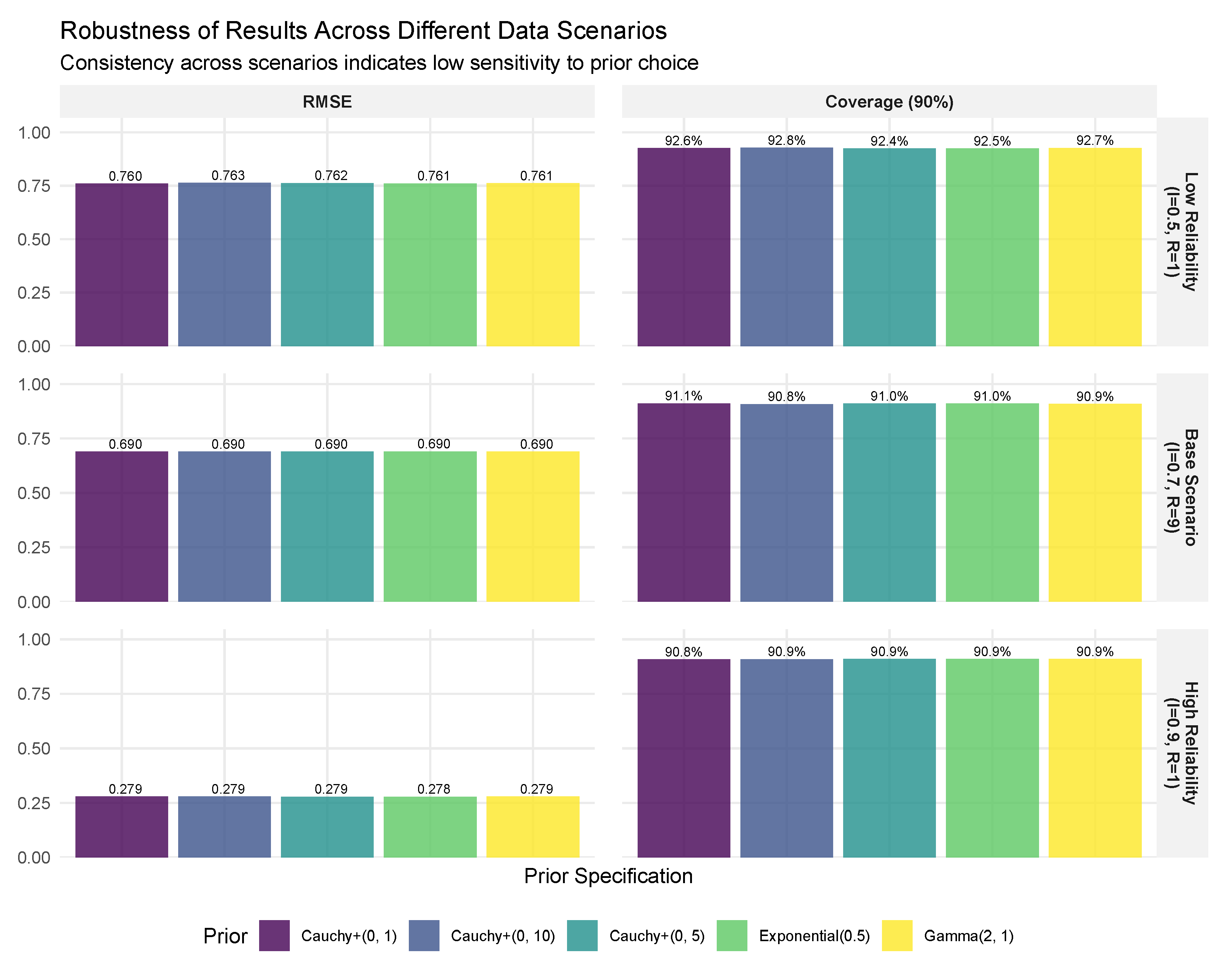

Appendix C examines the robustness of our findings to grid resolution and bounds specification, while

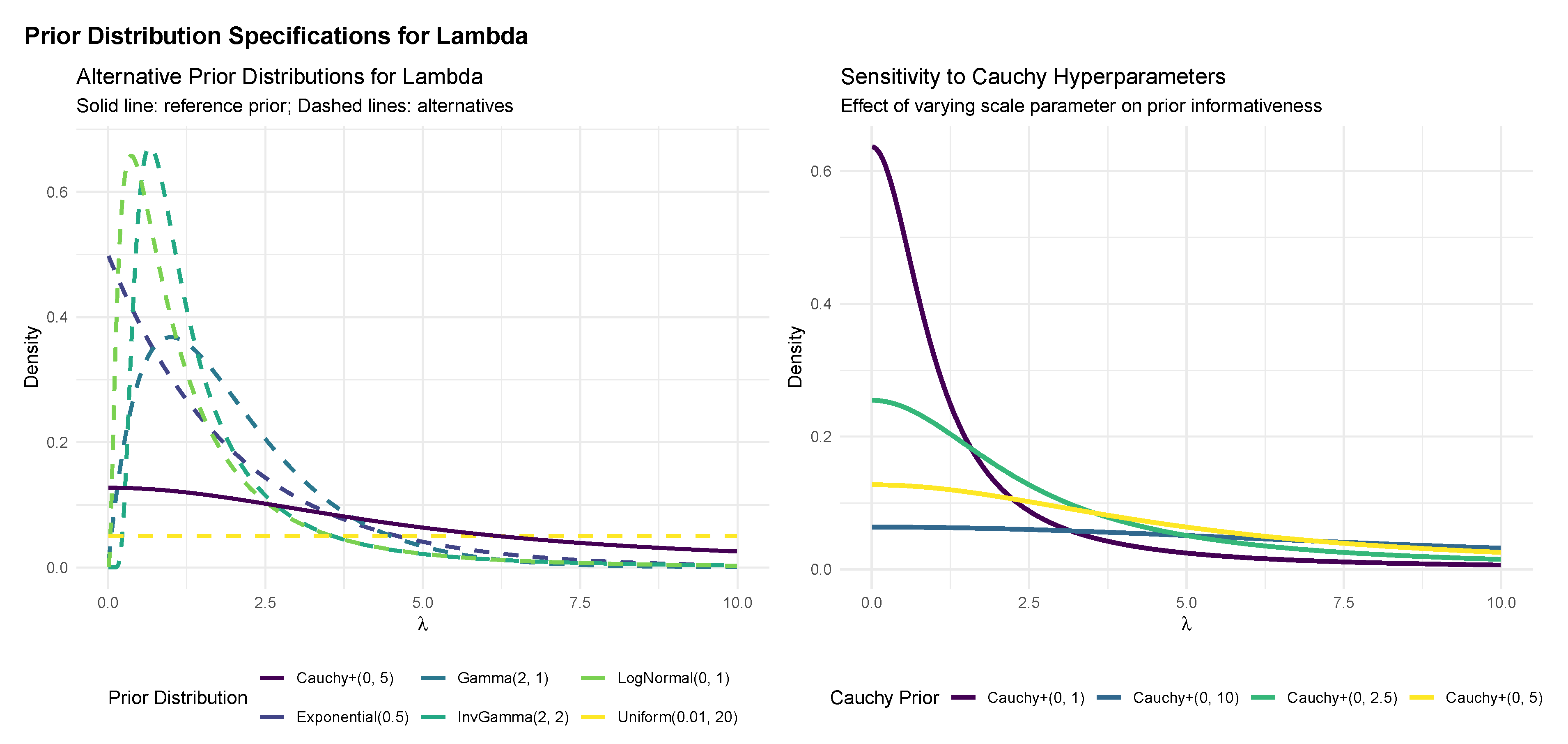

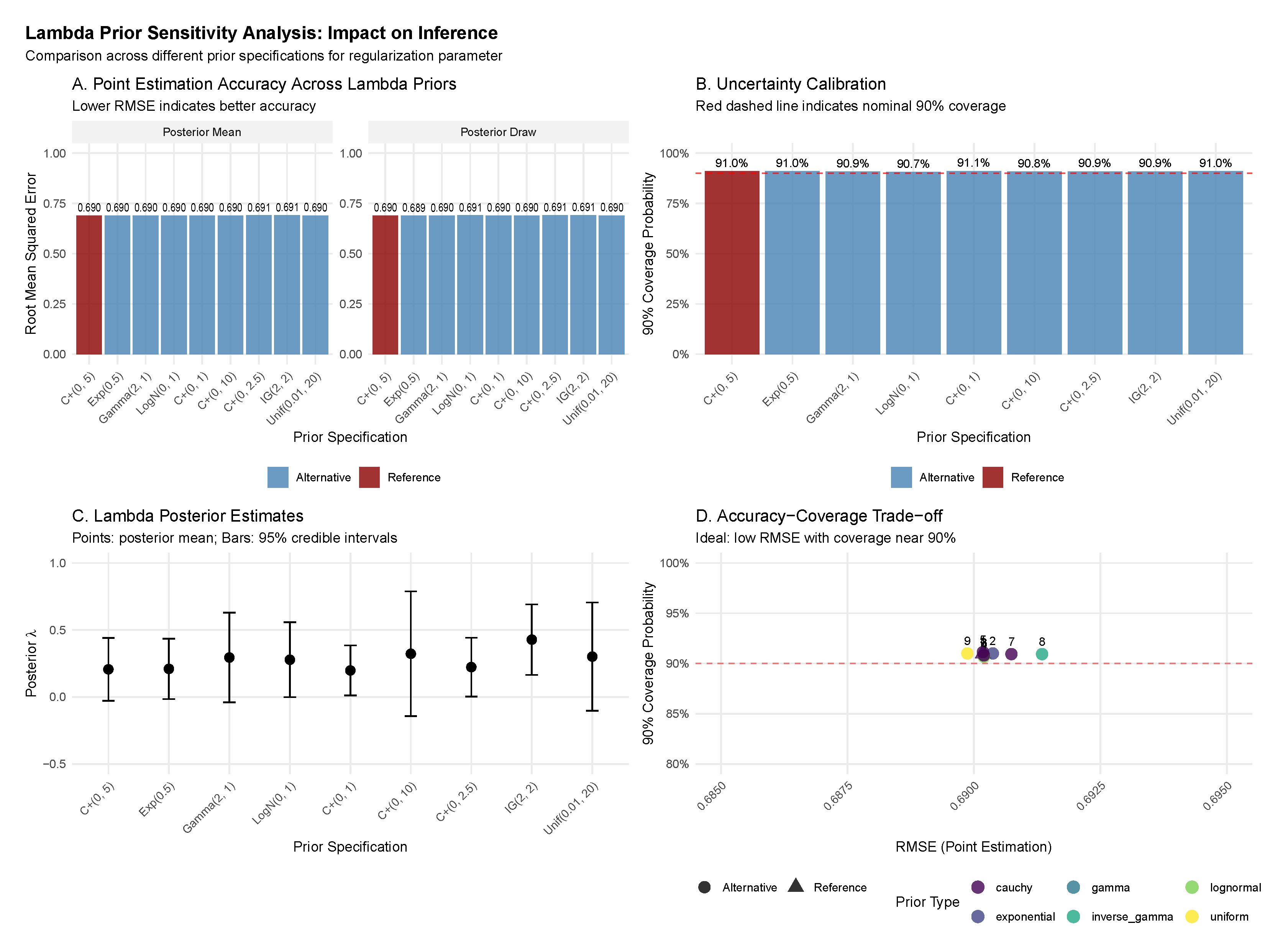

Appendix D investigates sensitivity to the hyperprior choice for

. These supplementary analyses confirm that the superior uncertainty calibration of the fully Bayesian approach persists across a wide range of implementation choices.

8. Discussion and Conclusions

This paper has introduced and validated a fully Bayesian hierarchical model for Efron’s log-spline prior, a powerful semi-parametric tool for large-scale inference. Our work is motivated by a common and critical scientific objective: the need for accurate and reliable inference on individual parameters within a large ensemble, a goal Efron [

16] terms “finite-Bayes inference.” By embedding the

g-modeling framework within a coherent Bayesian structure, we have demonstrated a principled path to overcoming the limitations of standard plug-in Empirical Bayes (EB) methods, particularly in the crucial domain of uncertainty quantification.

Our simulation studies confirm that for the task of point estimation, the traditional EB approach performs admirably. When the inferential goal is limited to obtaining shrinkage estimates, the penalized likelihood optimization of the log-spline prior is computationally efficient and produces accurate posterior means, especially when the signal-to-noise ratio is high. This reinforces the status of EB as a valuable tool for exploratory analysis.

The primary contribution of this work, however, lies in the clarification and resolution of how uncertainty is quantified. A central finding of this paper is the sharp conceptual and empirical distinction between two different forms of uncertainty: the

sampling variability of an estimator and the

posterior uncertainty of a parameter. We have shown that standard EB error estimation methods [

29], such as the Delta method or the parametric bootstrap, address the former. They quantify the frequentist stability of the posterior mean estimator,

, across hypothetical replications of an experiment. While this is a valid measure of procedural reliability, it does not provide what is often required for finite-Bayes inference: a credible interval representing our state of knowledge about the true, unobserved site-specific parameter

.

Our proposed fully Bayesian (FB) approach directly resolves this ambiguity. By treating the prior’s shape parameters (

) and regularization strength (

) as random variables, the MCMC sampling procedure naturally propagates all sources of uncertainty into the final posterior distribution for each

. As our simulation results demonstrate, the resulting 90% credible intervals are well-calibrated, achieving near-nominal coverage across a wide range of conditions. This “one-stop” procedure provides a direct and theoretically coherent solution for researchers, eliminating the need for complex, secondary approximation steps [

25,

32] that are not yet standard in widely used software packages for EB estimation.

Beyond providing well-calibrated intervals, the FB framework offers significant downstream advantages. The availability of a full posterior sample for each

empowers researchers to move beyond simple interval estimation. It enables principled decision-making under any specified loss function, far beyond the implicit squared-error loss of the posterior mean. This opens the door to a host of sophisticated analyses, including multiple testing control via the local false discovery rate [

55], estimation of the empirical distribution function of the true effects [

27], and rank-based inference [

56], all while properly accounting for the full range of uncertainty.
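As an illustration of how posterior draws support such analyses, the sketch below computes two summaries from an array of draws: the posterior median, which minimizes expected absolute-error loss, and the posterior expected rank of each site. The function names and the draws-by-sites array layout are assumptions for illustration, not part of any existing package.

```python
import numpy as np

def posterior_median(draws):
    """Point estimate under absolute-error loss: the per-site posterior median."""
    return np.median(np.asarray(draws, dtype=float), axis=0)

def posterior_expected_ranks(draws):
    """Average rank of each site across draws (1 = smallest effect).

    Sites are ranked within every draw, then ranks are averaged over draws,
    so uncertainty in the effects carries through to the ranks.
    """
    draws = np.asarray(draws, dtype=float)
    # Applying argsort twice converts values to 0-based ranks within each row.
    ranks = np.argsort(np.argsort(draws, axis=1), axis=1) + 1
    return ranks.mean(axis=0)

# Two draws for three sites; sites 2 and 3 swap order between draws.
toy = np.array([[1.0, 2.0, 3.0],
                [1.0, 3.0, 2.0]])
med = posterior_median(toy)
exp_ranks = posterior_expected_ranks(toy)
```

In this toy case the second and third sites each receive an expected rank of 2.5, reflecting that the draws do not agree on their ordering.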

The principal trade-off for these benefits is computational cost. MCMC sampling is inherently more intensive than the optimization routines used in EB. While modern hardware and efficient samplers like NUTS make this approach feasible for many problems, its cost may be a consideration in extremely large-scale applications. This suggests a promising avenue for future work: evaluating the performance of faster, approximate Bayesian inference methods—such as Automatic Differentiation Variational Inference (ADVI; [

57]) or the Pathfinder algorithm [

58]—within this hierarchical

g-modeling context. Assessing how well these methods can replicate the accuracy and calibration of full MCMC would be a valuable contribution, potentially offering a practical compromise between the speed of EB and the inferential completeness of the FB approach.

In conclusion, this study reaffirms the power of Efron’s log-spline prior as a flexible tool for large-scale estimation. More importantly, it demonstrates that by placing this tool within a fully Bayesian framework, we can produce more reliable, interpretable, and useful uncertainty estimates. When the scientific priority is to understand the plausible range of individual site-specific effects, the fully Bayesian approach provides a robust and theoretically grounded solution.