SEND: Semantic-Aware Deep Unfolded Network with Diffusion Prior for Multi-Modal Image Fusion and Object Detection

Abstract

1. Introduction

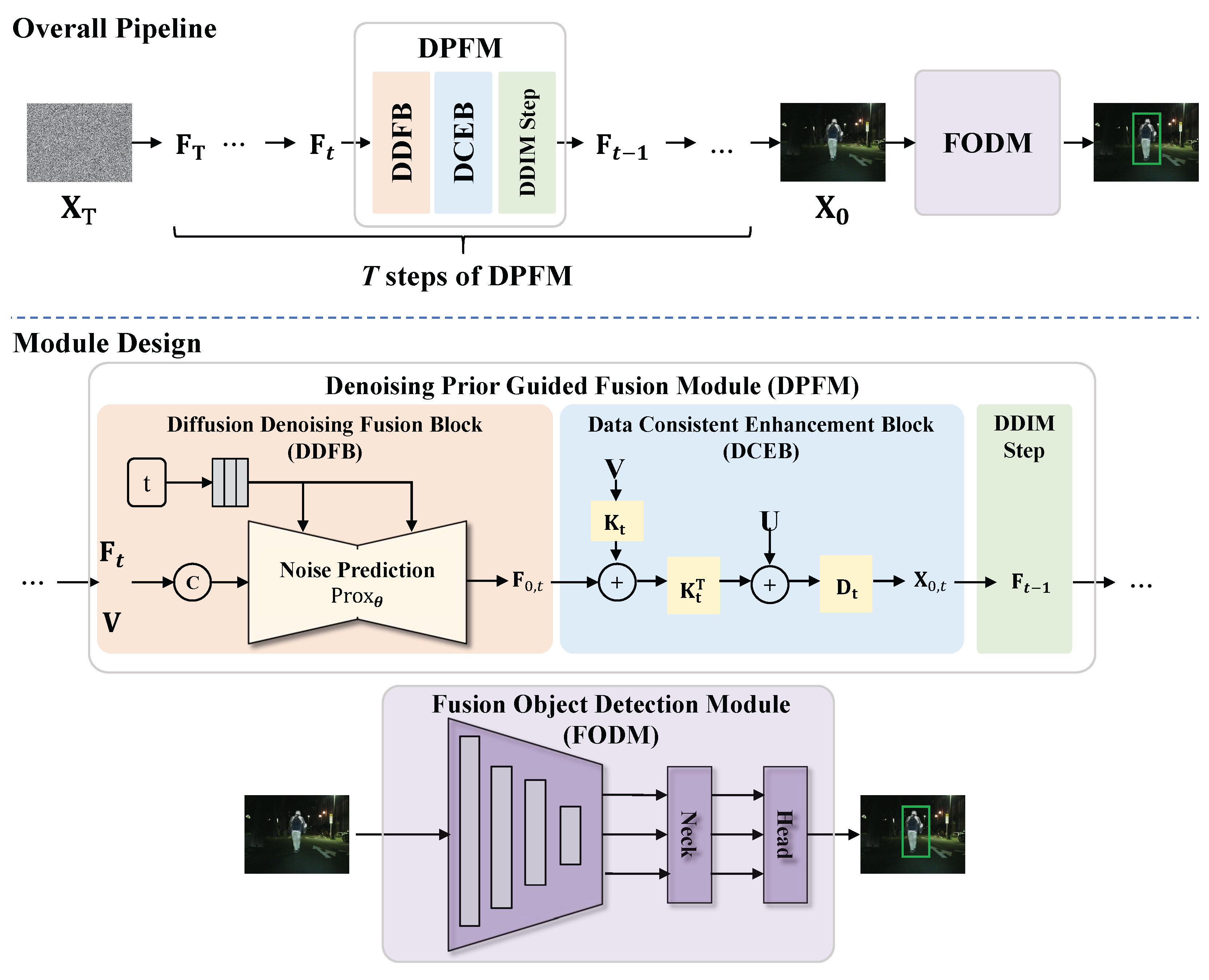

- We propose a novel Semantic-Aware Deep Unfolded Network with Diffusion Prior (SEND) method that synergistically combines a generative diffusion model with a deep unfolding network for joint multi-modality image fusion and object detection.

- The proposed SEND consists of a Denoising Prior Guided Fusion Module (DPFM) and a Fusion Object Detection Module (FODM). We propose a two-stage training strategy to effectively train these two main modules.

- The experimental results show that the proposed SEND method outperforms the comparison methods in both image fusion and object detection, and that the two tasks are mutually beneficial.

2. Related Works

3. Proposed Method

3.1. Overview

3.2. Denoising Prior Guided Fusion Module

3.2.1. Model Formulation

3.2.2. Optimization Algorithm

3.2.3. Module Design

3.3. Fusion Object Detection Module

3.4. Loss Functions and Training Strategy

3.4.1. Loss Functions for DPFM

3.4.2. Loss Functions for FODM

3.4.3. Training Strategy

Algorithm 1. Training strategy of SEND.

Input: Denoising Network, Filters, Fusion Object Detection Network, Source Images, Learnable Hyperparameters, and Noise Schedule.

Algorithm 2. Sampling strategy of SEND.

Input: Denoising Network, Filters, Fusion Object Detection Network, Source Images, Learnable Hyperparameters, and Noise Schedule.
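The inputs listed for Algorithm 2 include a denoising network and a noise schedule. As a generic illustration of how these two ingredients interact during diffusion sampling, the sketch below follows the standard DDPM clean-image estimate [44] and the deterministic DDIM update [46]. It is an assumption-laden sketch, not the update rule of SEND itself: the function names `predict_clean` and `ddim_step`, the flat-list signal representation, and the use of the true noise in place of a network prediction are all hypothetical.

```python
import math

def predict_clean(x_t, eps_hat, alpha_bar_t):
    """Estimate the clean signal from a noisy one and a noise prediction:
    x0_hat = (x_t - sqrt(1 - alpha_bar_t) * eps_hat) / sqrt(alpha_bar_t)."""
    s = math.sqrt(alpha_bar_t)
    n = math.sqrt(1.0 - alpha_bar_t)
    return [(x - n * e) / s for x, e in zip(x_t, eps_hat)]

def ddim_step(x_t, eps_hat, alpha_bar_t, alpha_bar_prev):
    """Deterministic DDIM update (eta = 0) from step t to the previous step."""
    x0_hat = predict_clean(x_t, eps_hat, alpha_bar_t)
    s = math.sqrt(alpha_bar_prev)
    n = math.sqrt(1.0 - alpha_bar_prev)
    return [s * a + n * e for a, e in zip(x0_hat, eps_hat)]

# Toy check: noise a known signal, then denoise it with the true noise
# standing in for the output of a (hypothetical) denoising network.
x0 = [0.2, -0.5, 1.0]
eps = [0.3, -0.1, 0.7]
alpha_bar = 0.6  # assumed cumulative noise-schedule value at step t
x_t = [math.sqrt(alpha_bar) * a + math.sqrt(1.0 - alpha_bar) * e
       for a, e in zip(x0, eps)]
x0_rec = predict_clean(x_t, eps, alpha_bar)
```

With a perfect noise prediction the clean signal is recovered exactly, which is why the quality of the denoising network determines the quality of the prior in this family of samplers.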

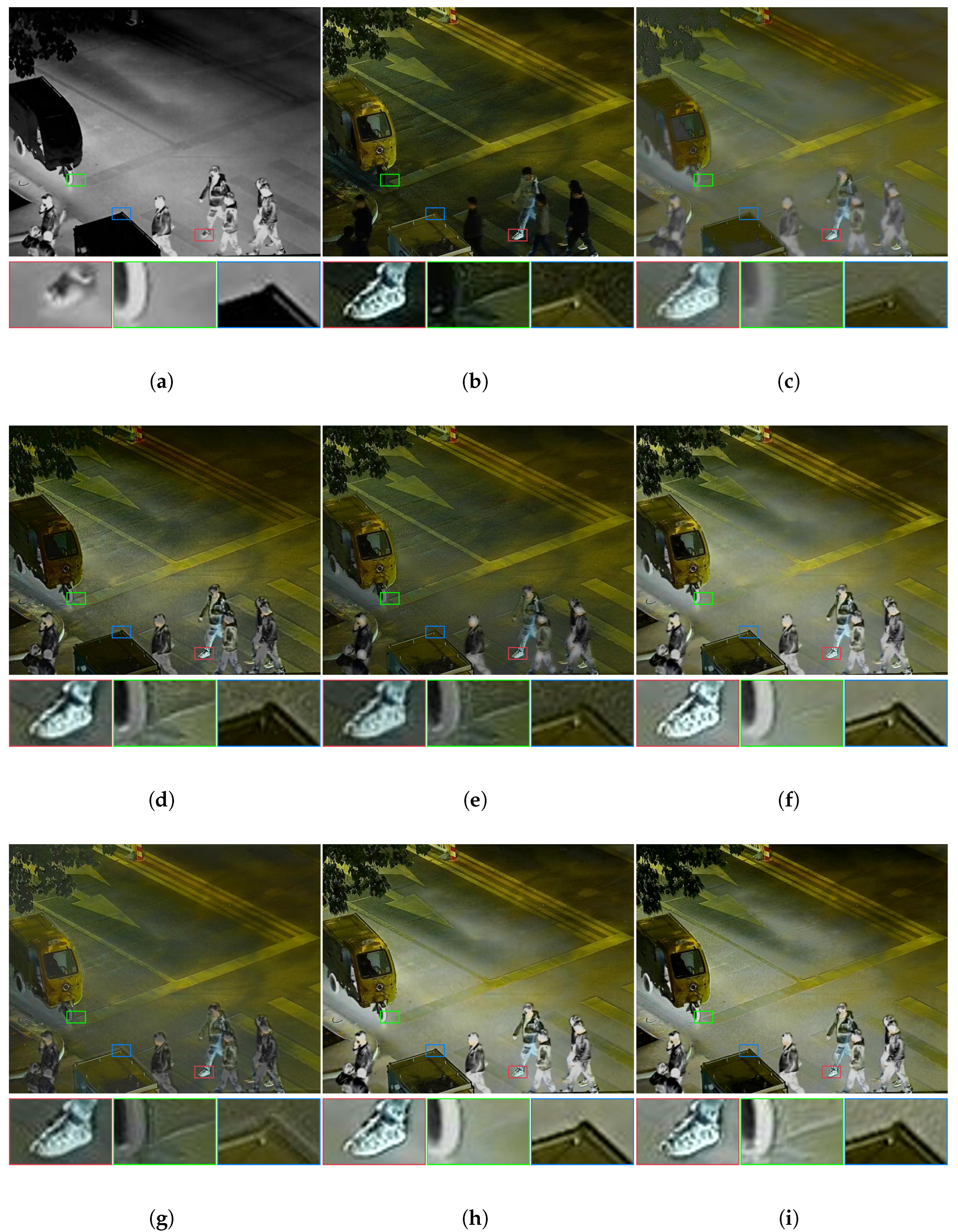

4. Experimental Results

4.1. Settings

4.2. Evaluation Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wan, B.; Zhou, X.; Sun, Y.; Wang, T.; Lv, C.; Wang, S.; Yin, H.; Yan, C. MFFNet: Multi-modal feature fusion network for VDT salient object detection. IEEE Trans. Multimed. 2023, 26, 2069–2081.

2. Gao, W.; Liao, G.; Ma, S.; Li, G.; Liang, Y.; Lin, W. Unified Information Fusion Network for Multi-Modal RGB-D and RGB-T Salient Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 2091–2106.

3. Liu, T.; Luo, W.; Ma, L.; Huang, J.J.; Stathaki, T.; Dai, T. Coupled network for robust pedestrian detection with gated multi-layer feature extraction and deformable occlusion handling. IEEE Trans. Image Process. 2020, 30, 754–766.

4. James, A.P.; Dasarathy, B.V. Medical image fusion: A survey of the state of the art. Inf. Fusion 2014, 19, 4–19.

5. Liu, T.; Meng, Q.; Huang, J.J.; Vlontzos, A.; Rueckert, D.; Kainz, B. Video summarization through reinforcement learning with a 3D spatio-temporal u-net. IEEE Trans. Image Process. 2022, 31, 1573–1586.

6. Ghassemian, H. A review of remote sensing image fusion methods. Inf. Fusion 2016, 32, 75–89.

7. Pradhan, P.K.; Das, A.; Kumar, A.; Baruah, U.; Sen, B.; Ghosal, P. SwinSight: A hierarchical vision transformer using shifted windows to leverage aerial image classification. Multimed. Tools Appl. 2024, 83, 86457–86478.

8. Pradhan, P.K.; Purkayastha, K.; Sharma, A.L.; Baruah, U.; Sen, B.; Ghosal, P. Graphically Residual Attentive Network for tackling aerial image occlusion. Comput. Electr. Eng. 2025, 125, 110429.

9. Huang, J.J.; Wang, Z.; Liu, T.; Luo, W.; Chen, Z.; Zhao, W.; Wang, M. DeMPAA: Deployable multi-mini-patch adversarial attack for remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.

10. Peng, Y.; Qin, Y.; Tang, X.; Zhang, Z.; Deng, L. Survey on image and point-cloud fusion-based object detection in autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22772–22789.

11. Han, J.; Pauwels, E.J.; De Zeeuw, P. Fast saliency-aware multi-modality image fusion. Neurocomputing 2013, 111, 70–80.

12. Zhang, Q.; Liu, Y.; Blum, R.S.; Han, J.; Tao, D. Sparse representation based multi-sensor image fusion for multi-focus and multi-modality images: A review. Inf. Fusion 2018, 40, 57–75.

13. Yi, X.; Tang, L.; Zhang, H.; Xu, H.; Ma, J. Diff-IF: Multi-modality image fusion via diffusion model with fusion knowledge prior. Inf. Fusion 2024, 110, 102450.

14. Ma, J.; Zhou, Y. Infrared and visible image fusion via gradientlet filter. Comput. Vis. Image Underst. 2020, 197, 103016.

15. Li, H.; Wu, X.J.; Kittler, J. MDLatLRR: A novel decomposition method for infrared and visible image fusion. IEEE Trans. Image Process. 2020, 29, 4733–4746.

16. Ma, J.; Chen, C.; Li, C.; Huang, J. Infrared and visible image fusion via gradient transfer and total variation minimization. Inf. Fusion 2016, 31, 100–109.

17. Li, S.; Kang, X.; Hu, J. Image fusion with guided filtering. IEEE Trans. Image Process. 2013, 22, 2864–2875.

18. Zhao, Z.; Bai, H.; Zhang, J.; Zhang, Y.; Xu, S.; Lin, Z.; Timofte, R.; Van Gool, L. CDDFuse: Correlation-driven dual-branch feature decomposition for multi-modality image fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5906–5916.

19. Zhao, Z.; Bai, H.; Zhu, Y.; Zhang, J.; Xu, S.; Zhang, Y.; Zhang, K.; Meng, D.; Timofte, R.; Van Gool, L. DDFM: Denoising diffusion model for multi-modality image fusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 8082–8093.

20. Deng, X.; Dragotti, P.L. Deep convolutional neural network for multi-modal image restoration and fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3333–3348.

21. Zhao, Z.; Xu, S.; Zhang, J.; Liang, C.; Zhang, C.; Liu, J. Efficient and model-based infrared and visible image fusion via algorithm unrolling. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 1186–1196.

22. Deng, X.; Xu, J.; Gao, F.; Sun, X.; Xu, M. DeepM2CDL: Deep Multi-Scale Multi-Modal Convolutional Dictionary Learning Network. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 2770–2787.

23. Li, H.; Xu, T.; Wu, X.J.; Lu, J.; Kittler, J. LRRNet: A novel representation learning guided fusion network for infrared and visible images. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 11040–11052.

24. Cao, H.; Tan, C.; Gao, Z.; Xu, Y.; Chen, G.; Heng, P.A.; Li, S.Z. A survey on generative diffusion models. IEEE Trans. Knowl. Data Eng. 2024, 36, 2814–2830.

25. Croitoru, F.A.; Hondru, V.; Ionescu, R.T.; Shah, M. Diffusion models in vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10850–10869.

26. Li, Z.; Zheng, J.; Zhu, Z.; Yao, W.; Wu, S. Weighted guided image filtering. IEEE Trans. Image Process. 2014, 24, 120–129.

27. Zhang, X.; Ma, Y.; Fan, F.; Zhang, Y.; Huang, J. Infrared and visible image fusion via saliency analysis and local edge-preserving multi-scale decomposition. JOSA A 2017, 34, 1400–1410.

28. Ma, J.; Zhou, Z.; Wang, B.; Zong, H. Infrared and visible image fusion based on visual saliency map and weighted least square optimization. Infrared Phys. Technol. 2017, 82, 8–17.

29. Li, G.; Lin, Y.; Qu, X. An infrared and visible image fusion method based on multi-scale transformation and norm optimization. Inf. Fusion 2021, 71, 109–129.

30. Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 502–518.

31. Wang, D.; Liu, J.; Fan, X.; Liu, R. Unsupervised Misaligned Infrared and Visible Image Fusion via Cross-Modality Image Generation and Registration. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, Vienna, Austria, 23–29 July 2022; pp. 3508–3515.

32. Yue, J.; Fang, L.; Xia, S.; Deng, Y.; Ma, J. Dif-fusion: Towards high color fidelity in infrared and visible image fusion with diffusion models. IEEE Trans. Image Process. 2023, 32, 5705–5720.

33. Yang, B.; Jiang, Z.; Pan, D.; Yu, H.; Gui, G.; Gui, W. LFDT-Fusion: A latent feature-guided diffusion Transformer model for general image fusion. Inf. Fusion 2025, 113, 102639.

34. Guidotti, R.; Monreale, A.; Ruggieri, S.; Turini, F.; Giannotti, F.; Pedreschi, D. A survey of methods for explaining black box models. ACM Comput. Surv. (CSUR) 2018, 51, 1–42.

35. Gregor, K.; LeCun, Y. Learning fast approximations of sparse coding. In Proceedings of the 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 399–406.

36. Sun, J.; Li, H.; Xu, Z. Deep ADMM-Net for compressive sensing MRI. Adv. Neural Inf. Process. Syst. 2016, 29, 10–18. Available online: https://proceedings.neurips.cc/paper_files/paper/2016/file/1679091c5a880faf6fb5e6087eb1b2dc-Paper.pdf (accessed on 6 August 2025).

37. Sreter, H.; Giryes, R. Learned convolutional sparse coding. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 2191–2195.

38. Zhao, Z.; Zhang, J.; Bai, H.; Wang, Y.; Cui, Y.; Deng, L.; Sun, K.; Zhang, C.; Liu, J.; Xu, S. Deep convolutional sparse coding networks for interpretable image fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 2369–2377.

39. He, C.; Li, K.; Xu, G.; Zhang, Y.; Hu, R.; Guo, Z.; Li, X. Degradation-resistant unfolding network for heterogeneous image fusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 12611–12621.

40. Donoho, D.L. De-noising by soft-thresholding. IEEE Trans. Inf. Theory 1995, 41, 613–627.

41. Huang, J.J.; Liu, T.; Xia, J.; Wang, M.; Dragotti, P.L. DURRNet: Deep unfolded single image reflection removal network with joint prior. In Proceedings of the ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 5235–5239.

42. Pu, W.; Huang, J.J.; Sober, B.; Daly, N.; Higgitt, C.; Daubechies, I.; Dragotti, P.L.; Rodrigues, M.R. Mixed X-ray image separation for artworks with concealed designs. IEEE Trans. Image Process. 2022, 31, 4458–4473.

43. Huang, J.J.; Liu, T.; Chen, Z.; Liu, X.; Wang, M.; Dragotti, P.L. A Lightweight Deep Exclusion Unfolding Network for Single Image Reflection Removal. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 4957–4973.

44. Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

45. Efron, B. Tweedie’s formula and selection bias. J. Am. Stat. Assoc. 2011, 106, 1602–1614.

46. Song, J.; Meng, C.; Ermon, S. Denoising diffusion implicit models. arXiv 2020, arXiv:2010.02502.

47. Jocher, G.; Stoken, A.; Borovec, J.; Changyu, L.; Hogan, A.; Diaconu, L.; Ingham, F.; Poznanski, J.; Fang, J.; Yu, L.; et al. Ultralytics/yolov5: v3.1-Bug Fixes and Performance Improvements; Zenodo: Geneva, Switzerland, 2020.

48. Tang, L.; Yuan, J.; Zhang, H.; Jiang, X.; Ma, J. PIAFusion: A progressive infrared and visible image fusion network based on illumination aware. Inf. Fusion 2022, 83, 79–92.

49. Jia, X.; Zhu, C.; Li, M.; Tang, W.; Zhou, W. LLVIP: A visible-infrared paired dataset for low-light vision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3496–3504.

50. Liu, J.; Fan, X.; Huang, Z.; Wu, G.; Liu, R.; Zhong, W.; Luo, Z. Target-aware dual adversarial learning and a multi-scenario multi-modality benchmark to fuse infrared and visible for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5802–5811.

51. Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

52. Xu, H.; Ma, J. EMFusion: An unsupervised enhanced medical image fusion network. Inf. Fusion 2021, 76, 177–186.

| Methods | MSRS (361 Pairs) | | | | | LLVIP (3463 Pairs) | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | EN | AG | SD | SCD | VIF | EN | AG | SD | SCD | VIF |
| FusionGAN [51] | 5.44 | 1.45 | 17.08 | 0.98 | 0.44 | 6.55 | 2.93 | 28.01 | 0.74 | 0.37 |
| U2Fusion [30] | 4.95 | 2.10 | 18.89 | 1.01 | 0.48 | 6.84 | 4.99 | 38.55 | 1.35 | 0.55 |
| EMFusion [52] | 6.33 | 2.92 | 35.49 | 1.29 | 0.84 | 6.68 | 4.41 | 34.79 | 1.19 | 0.63 |
| CDDFuse [18] | 6.70 | 3.75 | 43.40 | 1.62 | 1.00 | 7.33 | 5.81 | 48.98 | 1.58 | 0.77 |
| LRRNet [23] | 6.19 | 2.65 | 31.79 | 0.79 | 0.54 | 6.39 | 3.81 | 28.52 | 0.84 | 0.45 |
| Diff-IF [13] | 6.67 | 3.71 | 42.63 | 1.62 | 1.04 | 7.40 | 5.85 | 49.99 | 1.48 | 0.84 |
| SEND (Ours) | 7.60 | 3.70 | 43.45 | 1.63 | 0.98 | 7.57 | 6.64 | 54.39 | 1.50 | 0.73 |
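Three of the column headers above abbreviate no-reference fusion metrics whose definitions are not spelled out here. Assuming the usual conventions (EN as the Shannon entropy of the intensity histogram, AG as the average gradient, SD as the standard deviation of intensities), a minimal standard-library sketch of how such scores could be computed is given below. The function names and the nested-list image representation are illustrative assumptions, and the exact normalisation used to produce the table may differ. SCD and VIF are omitted because they additionally require the source images.

```python
import math
import statistics
from collections import Counter

def entropy(img):
    """EN: Shannon entropy (in bits) of the pixel-intensity histogram."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())

def average_gradient(img):
    """AG: mean magnitude of forward differences, a proxy for sharpness."""
    h, w = len(img), len(img[0])
    total = 0.0
    for i in range(h - 1):
        for j in range(w - 1):
            gx = img[i][j + 1] - img[i][j]  # horizontal difference
            gy = img[i + 1][j] - img[i][j]  # vertical difference
            total += math.sqrt((gx * gx + gy * gy) / 2.0)
    return total / ((h - 1) * (w - 1))

def standard_deviation(img):
    """SD: population standard deviation of intensities, a proxy for contrast."""
    return statistics.pstdev([p for row in img for p in row])

# Hypothetical 2x2 "image" with two equally frequent gray levels.
toy = [[0, 255], [0, 255]]
print(entropy(toy), average_gradient(toy), standard_deviation(toy))
```

Under these definitions, higher values indicate more information content (EN), sharper detail (AG), and stronger contrast (SD).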

| Methods | LLVIP Test Dataset (2769:347:347) | | | |
|---|---|---|---|---|
| | Precision | Recall | mAP50 | mAP50-95 |
| Infrared | 0.962 | 0.917 | 0.97015 | 0.64658 |
| Visible | 0.940 | 0.936 | 0.97080 | 0.64932 |
| FusionGAN [51] | 0.954 | 0.961 | 0.98445 | 0.73746 |
| U2Fusion [30] | 0.974 | 0.948 | 0.98713 | 0.73373 |
| EMFusion [52] | 0.969 | 0.956 | 0.98557 | 0.72883 |
| CDDFuse [18] | 0.972 | 0.957 | 0.98611 | 0.74150 |
| LRRNet [23] | 0.964 | 0.955 | 0.98186 | 0.71775 |
| Diff-IF [13] | 0.968 | 0.957 | 0.98602 | 0.75221 |
| SEND (Ours) | 0.977 | 0.962 | 0.98847 | 0.74538 |

| Methods | M3FD Test Dataset (3360:420:420) | | | |
|---|---|---|---|---|
| | Precision | Recall | mAP50 | mAP50-95 |
| Infrared | 0.785 | 0.604 | 0.67158 | 0.43095 |
| Visible | 0.766 | 0.635 | 0.69091 | 0.43573 |
| FusionGAN [51] | 0.788 | 0.616 | 0.67873 | 0.43817 |
| U2Fusion [30] | 0.838 | 0.600 | 0.69014 | 0.44195 |
| EMFusion [52] | 0.810 | 0.601 | 0.68356 | 0.43657 |
| CDDFuse [18] | 0.781 | 0.605 | 0.67692 | 0.43675 |
| LRRNet [23] | 0.810 | 0.601 | 0.68356 | 0.43657 |
| Diff-IF [13] | 0.777 | 0.610 | 0.67828 | 0.43410 |
| SEND (Ours) | 0.835 | 0.707 | 0.77278 | 0.50065 |
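To put the detection scores in perspective, the following short sketch computes the margin between SEND and the strongest competing entry on the mAP50-95 metric for both test sets. The numbers are copied from the two detection tables; the helper name `margin_over_best_baseline` is a hypothetical choice made for this illustration.

```python
# mAP50-95 values copied from the LLVIP and M3FD detection tables.
llvip = {"Infrared": 0.64658, "Visible": 0.64932, "FusionGAN": 0.73746,
         "U2Fusion": 0.73373, "EMFusion": 0.72883, "CDDFuse": 0.74150,
         "LRRNet": 0.71775, "Diff-IF": 0.75221, "SEND": 0.74538}
m3fd = {"Infrared": 0.43095, "Visible": 0.43573, "FusionGAN": 0.43817,
        "U2Fusion": 0.44195, "EMFusion": 0.43657, "CDDFuse": 0.43675,
        "LRRNet": 0.43657, "Diff-IF": 0.43410, "SEND": 0.50065}

def margin_over_best_baseline(scores, ours="SEND"):
    """Return (best baseline name, absolute gain, relative gain) for `ours`."""
    baselines = {k: v for k, v in scores.items() if k != ours}
    best = max(baselines, key=baselines.get)
    gain = scores[ours] - baselines[best]
    return best, gain, gain / baselines[best]

for name, table in (("LLVIP", llvip), ("M3FD", m3fd)):
    best, gain, rel = margin_over_best_baseline(table)
    print(f"{name}: best baseline {best}, gain {gain:+.5f} ({rel:+.1%})")
```

On M3FD the gain over the best baseline (U2Fusion) is about 0.059 in absolute terms, whereas on LLVIP the reported mAP50-95 of SEND is slightly below that of Diff-IF, although SEND leads on precision, recall, and mAP50 there.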

| Methods | Test Infrared and Visible Image Size (480, 640) | | |
|---|---|---|---|
| | Params (M) | FLOPs (G) | FPS |
| FusionGAN [51] | 3.521 | 7.634 | 1.215 |
| U2Fusion [30] | 3.251 | 410.004 | 1.730 |
| EMFusion [52] | 2.741 | 96.174 | 5.028 |
| CDDFuse [18] | 3.781 | 9.834 | 4.686 |
| LRRNet [23] | 2.641 | 5.834 | 6.996 |
| Diff-IF [13] | 26.304 | 48.364 | 6.047 |
| SEND (Ours) | 25.177 | 27.961 | 5.327 |
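The throughput column can be easier to interpret as per-image latency. The sketch below converts the reported FPS into milliseconds per image pair and compares the FLOPs of the two diffusion-based methods in the table. The values are copied from the table; the variable and function names are illustrative assumptions.

```python
# (Params in M, FLOPs in G, FPS) copied from the efficiency table.
efficiency = {
    "FusionGAN": (3.521, 7.634, 1.215),
    "U2Fusion": (3.251, 410.004, 1.730),
    "EMFusion": (2.741, 96.174, 5.028),
    "CDDFuse": (3.781, 9.834, 4.686),
    "LRRNet": (2.641, 5.834, 6.996),
    "Diff-IF": (26.304, 48.364, 6.047),
    "SEND": (25.177, 27.961, 5.327),
}

def latency_ms(fps):
    """Convert frames per second into milliseconds per image pair."""
    return 1000.0 / fps

send_latency = latency_ms(efficiency["SEND"][2])
flops_ratio = efficiency["SEND"][1] / efficiency["Diff-IF"][1]
print(f"SEND latency: {send_latency:.1f} ms per pair")
print(f"SEND / Diff-IF FLOPs ratio: {flops_ratio:.2f}")
```

At the reported 5.327 FPS, SEND needs roughly 188 ms per 480 × 640 image pair and uses a little under 60% of the FLOPs reported for Diff-IF.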

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, R.; Xiong, M.-Y.; Huang, J.-J. SEND: Semantic-Aware Deep Unfolded Network with Diffusion Prior for Multi-Modal Image Fusion and Object Detection. Mathematics 2025, 13, 2584. https://doi.org/10.3390/math13162584
