Consensus-Regularized Federated Learning for Superior Generalization in Wind Turbine Diagnostics

Abstract

1. Introduction

- We develop and validate a neural network-based federated learning framework specifically tailored for multiclass fault diagnosis in wind turbines.

- We provide a novel mathematical formalization of the client drift problem in the context of wind turbine diagnostics and propose a consensus-regularized learning objective to explicitly counteract it. This reframes the implicit benefit of federated averaging as a concrete, tunable mechanism.

- We establish a rigorous benchmarking methodology to systematically evaluate the performance trade-offs between centralized and federated learning under controlled, non-IID conditions.

- We provide empirical evidence that our FL approach not only preserves privacy but also achieves better diagnostic performance than a centralized LightGBM baseline, offering a scalable, secure, and effective solution for the next generation of intelligent wind farm management.

2. Related Works

2.1. Machine Learning for Wind Turbine Fault Diagnosis

2.2. Federated Learning in Industrial Applications

2.3. Mathematical Foundations and Challenges of Federated Learning

3. Problem Formulation

3.1. From Centralized Aggregation to Federated Collaboration

3.2. Mathematical Analysis of Client Drift in Federated Systems

3.3. Consensus-Regularized Federated Optimization

4. Proposed Methodology and System Architecture

4.1. Formulation of the Multiclass Fault Diagnosis Problem

4.2. Modeling Pipelines and Architectures

- Input Layer: A layer with 53 neurons, corresponding to the d = 53 dimensions of the SCADA feature vector.

- Hidden Layer: A single fully connected hidden layer composed of 128 neurons, which utilizes the ReLU activation function to capture complex, non-linear relationships.

- Output Layer: A final fully connected layer of 6 neurons, one for each fault class, followed by a Softmax activation function to produce the classification probabilities.
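The three layers above can be illustrated with a minimal pure-Python forward pass. This is a sketch under stated assumptions, not the authors' implementation: the helper names (`init_mlp`, `forward`, `count_parameters`) and the uniform weight-initialization range are hypothetical choices made for illustration. Softmax is applied explicitly here; during training it is typically folded into the cross-entropy loss, which is consistent with the "Softmax (via Cross-Entropy)" entry in the training configuration table.

```python
import math
import random
from typing import List, Sequence, Tuple

# Layer widths from the text: d = 53 SCADA features, 128 hidden units, 6 fault classes.
LAYER_SIZES = (53, 128, 6)

Layer = Tuple[List[List[float]], List[float]]  # (weights[out][in], biases[out])


def init_mlp(sizes: Sequence[int] = LAYER_SIZES, seed: int = 0) -> List[Layer]:
    """Create one (weights, biases) pair per fully connected layer.

    The uniform range +/- 1/sqrt(fan_in) is an illustrative assumption.
    """
    rng = random.Random(seed)
    layers: List[Layer] = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        bound = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def count_parameters(layers: List[Layer]) -> int:
    """Number of trainable weights plus biases."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the largest logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(layers: List[Layer], x: Sequence[float]) -> List[float]:
    """53 -> 128 (ReLU) -> 6 (Softmax) forward pass for a single feature vector."""
    h = list(x)
    for idx, (weights, biases) in enumerate(layers):
        z = [sum(w_ij * h_j for w_ij, h_j in zip(row, h)) + b for row, b in zip(weights, biases)]
        is_output_layer = idx == len(layers) - 1
        h = z if is_output_layer else [max(0.0, v) for v in z]  # ReLU on the hidden layer only
    return softmax(h)


mlp = init_mlp()
print(count_parameters(mlp))  # 7686 = (53*128 + 128) + (128*6 + 6)
probs = forward(mlp, [0.1] * 53)  # a dummy, already-normalized feature vector
print(len(probs), round(sum(probs), 6))
```

With 7,686 parameters in total, the model is small enough that shipping it between the server and clients in every communication round is inexpensive.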

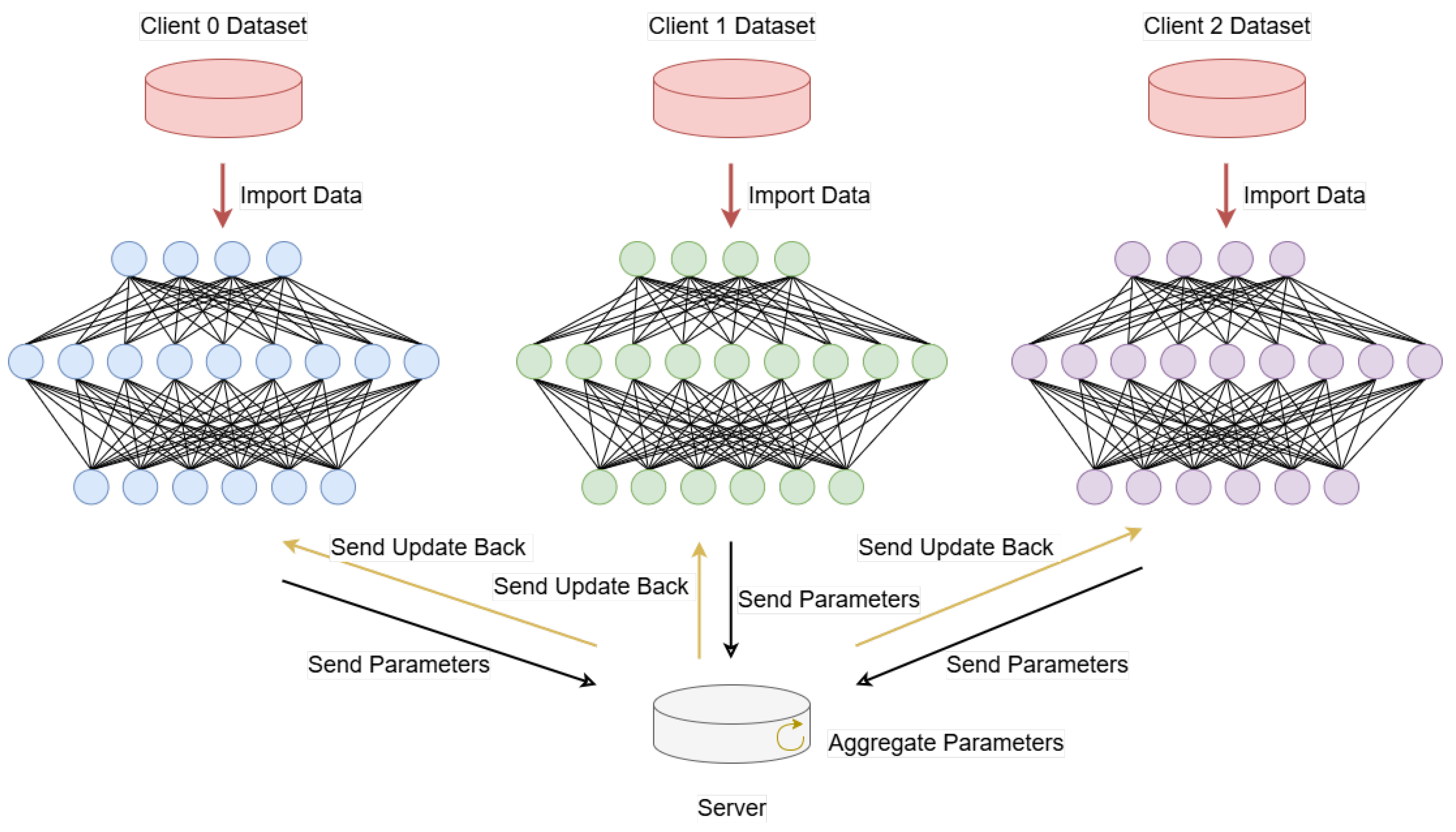

- Distribution: At the start of each communication round t, the central server broadcasts the current global model parameters to all participating clients.

- Local Training: Each client k independently trains the model on its local dataset for one epoch, computing an updated set of parameters that is biased towards its local data distribution.

- Aggregation: The clients then transmit their updated model parameters back to the server, which aggregates them with the Federated Averaging (FedAvg) algorithm to compute the improved global model for the next round, as formulated in Equation (7).
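The aggregation step can be sketched as follows, assuming that Equation (7) takes the standard sample-size-weighted form of McMahan et al. [25], in which the next global model is the sum over clients of (n_k / n) times the client's updated parameters, where n_k is the number of local samples on client k and n is their total. Representing each client's model as one flattened list of floats is a simplifying assumption, and `fedavg` is a hypothetical helper name.

```python
from typing import List, Sequence


def fedavg(client_params: Sequence[Sequence[float]], client_sizes: Sequence[int]) -> List[float]:
    """Return sum_k (n_k / n) * w_k over flattened client parameter vectors w_k."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need at least one client and one sample count per client")
    n_total = sum(client_sizes)
    if n_total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_params[0])
    global_params = [0.0] * dim
    for params, n_k in zip(client_params, client_sizes):
        if len(params) != dim:
            raise ValueError("all clients must share the same model architecture")
        weight = n_k / n_total  # client k's share of the total training data
        for i, p in enumerate(params):
            global_params[i] += weight * p
    return global_params


# Two hypothetical clients holding 100 and 300 local samples.
print(fedavg([[1.0, 2.0], [3.0, 4.0]], [100, 300]))  # [2.5, 3.5]
```

When all K clients hold the same number of samples, the weights are equal and the update reduces to a plain arithmetic mean of the client models.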

Algorithm 1: Consensus-Regularized Federated Learning
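The effect of a consensus penalty on local training can be illustrated with a toy example. The exact regularizer is defined in Section 3.3 and is not reproduced here; the sketch below assumes a FedProx-style quadratic penalty (lam / 2) * ||w - w_global||^2 [27], with `lam` playing the role of the consensus regularization value of 0.1 listed in the training configuration table. It also swaps the Adam optimizer used in the experiments for plain gradient descent to keep the arithmetic transparent, and it uses a hypothetical one-dimensional client loss. Helper names are illustrative.

```python
from typing import List, Sequence


def consensus_step(w_local: Sequence[float], w_global: Sequence[float],
                   grad: Sequence[float], lr: float = 0.01, lam: float = 0.1) -> List[float]:
    """One gradient step on F_k(w) + (lam / 2) * ||w - w_global||^2 (assumed penalty form).

    The extra term lam * (w - w_global) pulls the client back toward the
    current global model, which limits client drift.
    """
    return [w - lr * (g + lam * (w - wg)) for w, wg, g in zip(w_local, w_global, grad)]


def toy_local_training(w_global: Sequence[float], target: Sequence[float], steps: int,
                       lr: float = 0.1, lam: float = 0.1) -> List[float]:
    """Hypothetical client whose local loss is F_k(w) = 0.5 * ||w - target||^2."""
    w = list(w_global)  # each round starts from the broadcast global model
    for _ in range(steps):
        grad = [wi - ti for wi, ti in zip(w, target)]  # gradient of the toy local loss
        w = consensus_step(w, w_global, grad, lr, lam)
    return w


# The client's own optimum is 1.0 while the global model sits at 0.0.
print(round(toy_local_training([0.0], [1.0], 500, lam=0.0)[0], 4))  # 1.0
print(round(toy_local_training([0.0], [1.0], 500, lam=0.1)[0], 4))  # 0.9091
```

For this quadratic toy loss the regularized client converges to (target + lam * w_global) / (1 + lam), so with lam = 0.1 it stops at about 0.909 instead of drifting all the way to its local optimum; larger values of lam keep clients closer to the global model.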

4.3. Computational Complexity and Scalability

5. Results and Discussion

5.1. Experimental Setup

5.2. Experimental Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Pandit, R.; Astolfi, D.; Hong, J.; Infield, D.; Santos, M. SCADA data for wind turbine data-driven condition/performance monitoring: A review on state-of-art, challenges and future trends. Wind. Eng. 2023, 47, 422–441.

2. Boyer, S.A. SCADA: Supervisory Control and Data Acquisition; International Society of Automation: Research Triangle Park, NC, USA, 2009.

3. Aziz, U.; Charbonnier, S.; Bérenguer, C.; Lebranchu, A.; Prevost, F. Critical comparison of power-based wind turbine fault-detection methods using a realistic framework for SCADA data simulation. Renew. Sustain. Energy Rev. 2021, 144, 110961.

4. Maldonado-Correa, J.; Torres-Cabrera, J.; Martín-Martínez, S.; Artigao, E.; Gómez-Lázaro, E. Wind turbine fault detection based on the transformer model using SCADA data. Eng. Fail. Anal. 2024, 162, 108354.

5. Malakouti, S.M. Prediction of wind speed and power with LightGBM and grid search: Case study based on SCADA system in Turkey. Int. J. Energy Prod. Manag. 2023, 8, 35–40.

6. Liu, J.; Wang, X.; Wu, S.; Wan, L.; Xie, F. Wind turbine fault detection based on deep residual networks. Expert Syst. Appl. 2023, 213, 119102.

7. Elshenawy, L.M.; Gafar, A.A.; Awad, H.A.; AbouOmar, M.S. Fault detection of wind turbine system based on data-driven methods: A comparative study. Neural Comput. Appl. 2024, 36, 10279–10296.

8. Wang, T.; Yin, L. A hybrid 3DSE-CNN-2DLSTM model for compound fault detection of wind turbines. Expert Syst. Appl. 2024, 242, 122776.

9. Rahman, A.; Iqbal, A.; Ahmed, E.; Ontor, M.R.H. Privacy-preserving machine learning: Techniques, challenges, and future directions in safeguarding personal data management. Frontline Mark. Manag. Econ. J. 2024, 4, 84–106.

10. Murdoch, B. Privacy and artificial intelligence: Challenges for protecting health information in a new era. BMC Med. Ethics 2021, 22, 122.

11. Bharadiya, J. Machine learning in cybersecurity: Techniques and challenges. Eur. J. Technol. 2023, 7, 1–14.

12. Jiang, G.; Zhao, K.; Liu, X.; Cheng, X.; Xie, P. A federated learning framework for cloud-edge collaborative fault diagnosis of wind turbines. IEEE Internet Things J. 2024, 11, 23170–23185.

13. Jenkel, L.; Jonas, S.; Meyer, A. Privacy-preserving fleet-wide learning of wind turbine conditions with federated learning. Energies 2023, 16, 6377.

14. Khan, L.U.; Saad, W.; Han, Z.; Hossain, E.; Hong, C.S. Federated learning for internet of things: Recent advances, taxonomy, and open challenges. IEEE Commun. Surv. Tutor. 2021, 23, 1759–1799.

15. Porté-Agel, F.; Bastankhah, M.; Shamsoddin, S. Wind-turbine and wind-farm flows: A review. Bound. Layer Meteorol. 2020, 174, 1–59.

16. Nguyen, D.C.; Ding, M.; Pathirana, P.N.; Seneviratne, A.; Li, J.; Poor, H.V. Federated learning for internet of things: A comprehensive survey. IEEE Commun. Surv. Tutor. 2021, 23, 1622–1658.

17. Imteaj, A.; Thakker, U.; Wang, S.; Li, J.; Amini, M.H. A survey on federated learning for resource-constrained IoT devices. IEEE Internet Things J. 2021, 9, 1–24.

18. Martínez-Luengo, M.; Kolios, A.; Wang, L. Fault detection in wind turbines: A comparative study of SVM and GA-SVM techniques. AIMS Energy 2019, 7, 506–522.

19. Zhang, D.; Qian, L.; Mao, B.; Huang, C.; Huang, B.; Si, Y. A data-driven design for fault detection of wind turbines using random forests and XGBoost. IEEE Access 2019, 7, 167287–167300.

20. Karamolegkos, N.; Koutroulis, E.; Kourgiantakis, M. A Wind Turbine Fault Diagnosis System Based on Long Short-Term Memory Networks. Energies 2021, 14, 6451.

21. Yu, H.; Ma, L.; Dai, J.; Zhao, Y. Intelligent Fault Diagnosis of Wind Turbine Gearbox Based on a Novel Convolutional Neural Network. IEEE Access 2021, 9, 44869–44878.

22. Zhao, L.; Wang, X.; Li, Y. Graph Neural Network Based Fault Diagnosis for Wind Turbine Gearboxes. IEEE Trans. Instrum. Meas. 2022, 71, 1–10.

23. Li, L.; Fan, Y.; Tse, M.; Lin, K.Y. A review of applications in federated learning. Comput. Ind. Eng. 2020, 149, 106854.

24. Saputra, Y.M.; Hoang, D.T.; Nguyen, D.N.; Dutkiewicz, E.; Mueck, M.; Srikanteswara, S. Energy demand prediction for smart homes with federated learning. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM), Big Island, HI, USA, 9–13 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

25. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (AISTATS), Ft. Lauderdale, FL, USA, 20–22 April 2017; PMLR: New York, NY, USA, 2017; pp. 1273–1282.

26. Li, X.; Huang, K.; Yang, W.; Wang, S.; Zhang, Z. On the convergence of FedAvg on non-IID data. In Proceedings of the 8th International Conference on Learning Representations (ICLR), Addis Ababa, Ethiopia, 26–30 April 2020.

27. Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated optimization in heterogeneous networks. In Proceedings of the 3rd Conference on Machine Learning and Systems (MLSys), Austin, TX, USA, 2–4 March 2020.

28. Karimireddy, S.P.; Kale, S.; Mohri, M.; Reddi, S.; Stich, S.; Suresh, A.T. SCAFFOLD: Stochastic controlled averaging for federated learning. In Proceedings of the 37th International Conference on Machine Learning (ICML), Online, 13–18 July 2020; PMLR: New York, NY, USA, 2020; pp. 5132–5143.

29. Doostmohammadian, M.; Qureshi, M.I.; Khalesi, M.H.; Rabiee, H.R.; Khan, U.A. Log-Scale Quantization in Distributed First-Order Methods: Gradient-Based Learning From Distributed Data. IEEE Trans. Autom. Sci. Eng. 2025, 22, 10948–10959.

30. Tang, M.; Meng, C.; Wu, H.; Zhu, H.; Yi, J.; Tang, J.; Wang, Y. Fault detection for wind turbine blade bolts based on GSG combined with CS-LightGBM. Sensors 2022, 22, 6763.

31. Liu, X.; Fan, P.; Yang, J.; Ke, S.; Ma, B.; Pei, Y.; Xu, J. Equivalent method for DFIG wind farms based on modified LightGBM considering voltage deep drop faults. Int. J. Electr. Power Energy Syst. 2025, 164, 110451.

32. Xiang, B.; Liu, Z.; Huang, L.; Qin, M. Based on the WSP-Optuna-LightGBM model for wind power prediction. J. Phys. Conf. Ser. 2024, 2835, 012011.

33. Dong, X.; Miao, Z.; Li, Y.; Zhou, H.; Li, W. One data-driven vibration acceleration prediction method for offshore wind turbine structures based on extreme gradient boosting. Ocean. Eng. 2024, 307, 118176.

34. Tang, M.; Peng, Z.; Wu, H. Fault detection for pitch system of wind turbine-driven doubly fed based on IHHO-LightGBM. Appl. Sci. 2021, 11, 8030.

35. Yan, Z.; Zhang, L. Interpretable wind power prediction: A machine learning perspective using LightGBM and SHAP. In Proceedings of the 2024 2nd International Conference on Artificial Intelligence and Automation Control (AIAC), Guangzhou, China, 20–22 December 2024; IEEE: New York, NY, USA, 2024; pp. 225–229.

36. Xian, Q.; Feng, S.; Yang, Y.; Liu, J. Construction of wind farm load combination forecasting model based on GBDT, LightGBM and RF. In Proceedings of the 2024 IEEE 6th International Conference on Power, Intelligent Computing and Systems (ICPICS), Shenyang, China, 29–31 August 2024; IEEE: New York, NY, USA, 2024; pp. 1305–1310.

37. Mousaei, A.; Naderi, Y.; Bayram, I.S. Advancing State of Charge Management in Electric Vehicles with Machine Learning: A Technological Review. IEEE Access 2024, 12, 43255–43283.

| Abbreviation | Full Term |
|---|---|
| FL | Federated Learning |
| CR-FL | Consensus-Regularized Federated Learning |
| SCADA | Supervisory Control and Data Acquisition |
| ML | Machine Learning |
| NN | Neural Network |
| LightGBM | Light Gradient Boosting Machine |
| Non-IID | Non-Independent and Identically Distributed |
| AUC | Area Under the Receiver Operating Characteristic Curve |
| ReLU | Rectified Linear Unit |

| Study | Methodology | Model | Key Contribution |
|---|---|---|---|
| Liu et al. [6] | Centralized | Deep Residual Network | Improved feature extraction for fault detection. |
| Wang et al. [8] | Centralized | Hybrid 3D-CNN-LSTM | Captured spatio-temporal features for compound faults. |
| Jiang et al. [12] | Federated | CNN | Cloud-edge collaborative FL framework. |
| Ours | Federated (CR-FL) | Lightweight NN | Shows empirically that consensus-regularized FL generalizes better than a strong centralized baseline. |

| Parameter | Centralized (LightGBM) | Federated (Neural Network) |
|---|---|---|
| Model Architecture | Gradient-Boosted Trees | MLP (53-128-6) |
| Training Rounds | 50 Boosting Rounds | 50 Communication Rounds |
| Learning Rate | 0.1 | 0.01 |
| Optimizer | - | Adam |
| Local Epochs (E) | - | 1 |
| Number of Clients (K) | - | 10 |
| Activation (Hidden) | - | ReLU |
| Activation (Output) | - | Softmax (via Cross-Entropy) |
| Batch Size (Local) | - | 32 |
| Consensus Regularization Coefficient | - | 0.1 |
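The federated configuration above also fixes the communication budget of training. The sketch below estimates it under two explicit assumptions that are not stated in the original: every one of the K = 10 clients participates in all 50 rounds, and parameters are sent uncompressed as 32-bit floats (4 bytes each) in both directions. The helper names are hypothetical.

```python
from typing import Sequence


def mlp_parameter_count(sizes: Sequence[int]) -> int:
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))


def fedavg_traffic_bytes(num_params: int, num_clients: int, rounds: int,
                         bytes_per_param: int = 4) -> int:
    """Total bytes moved when each client downloads and uploads the full model every round.

    bytes_per_param = 4 assumes uncompressed float32 parameters.
    """
    per_client_per_round = 2 * num_params * bytes_per_param  # broadcast + upload
    return per_client_per_round * num_clients * rounds


n_params = mlp_parameter_count([53, 128, 6])
print(n_params)                                # 7686
print(fedavg_traffic_bytes(n_params, 10, 50))  # 30744000
```

Under these assumptions the whole 50-round run exchanges roughly 31 MB across all clients, which is modest for a wind-farm network.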

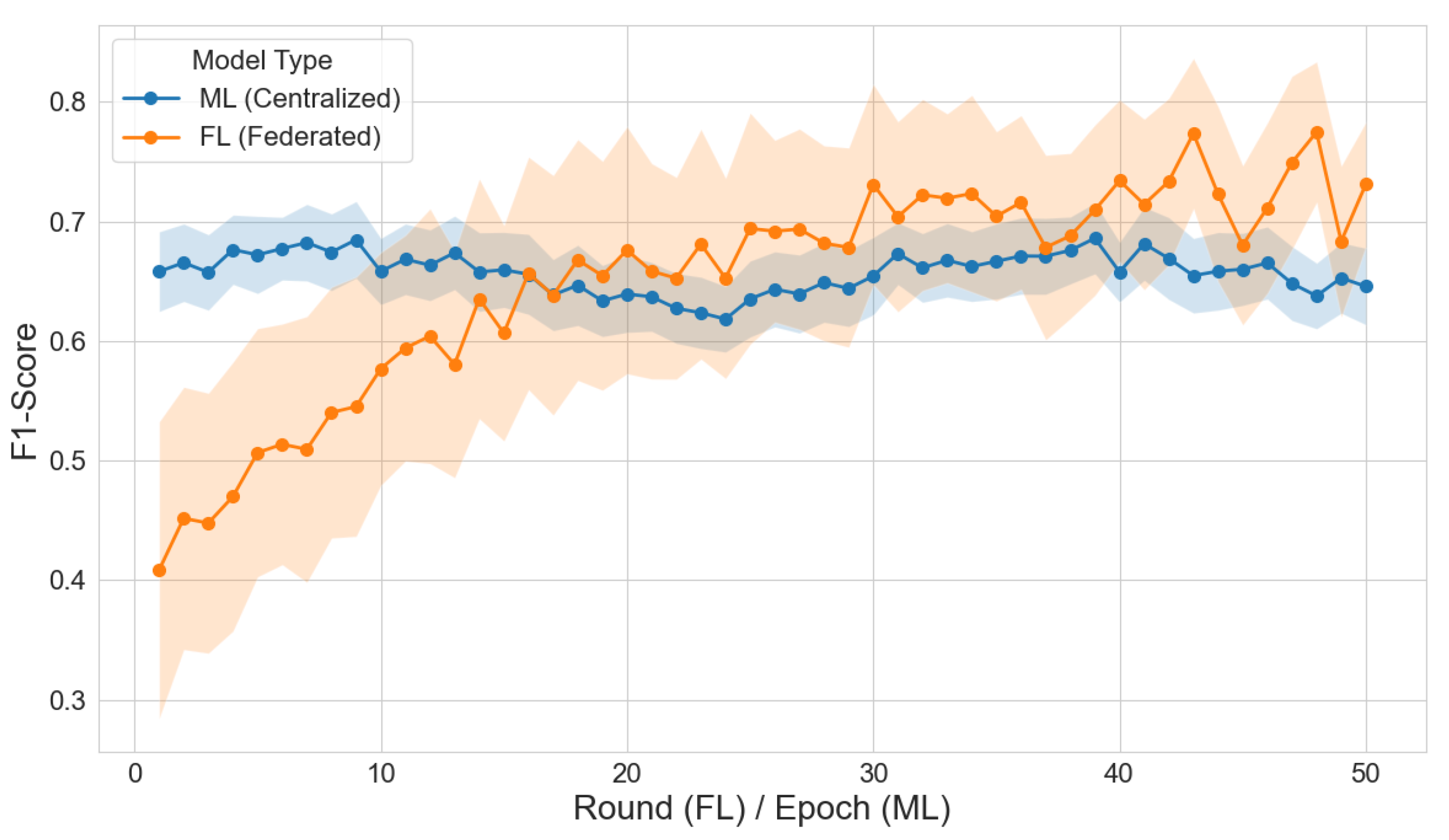

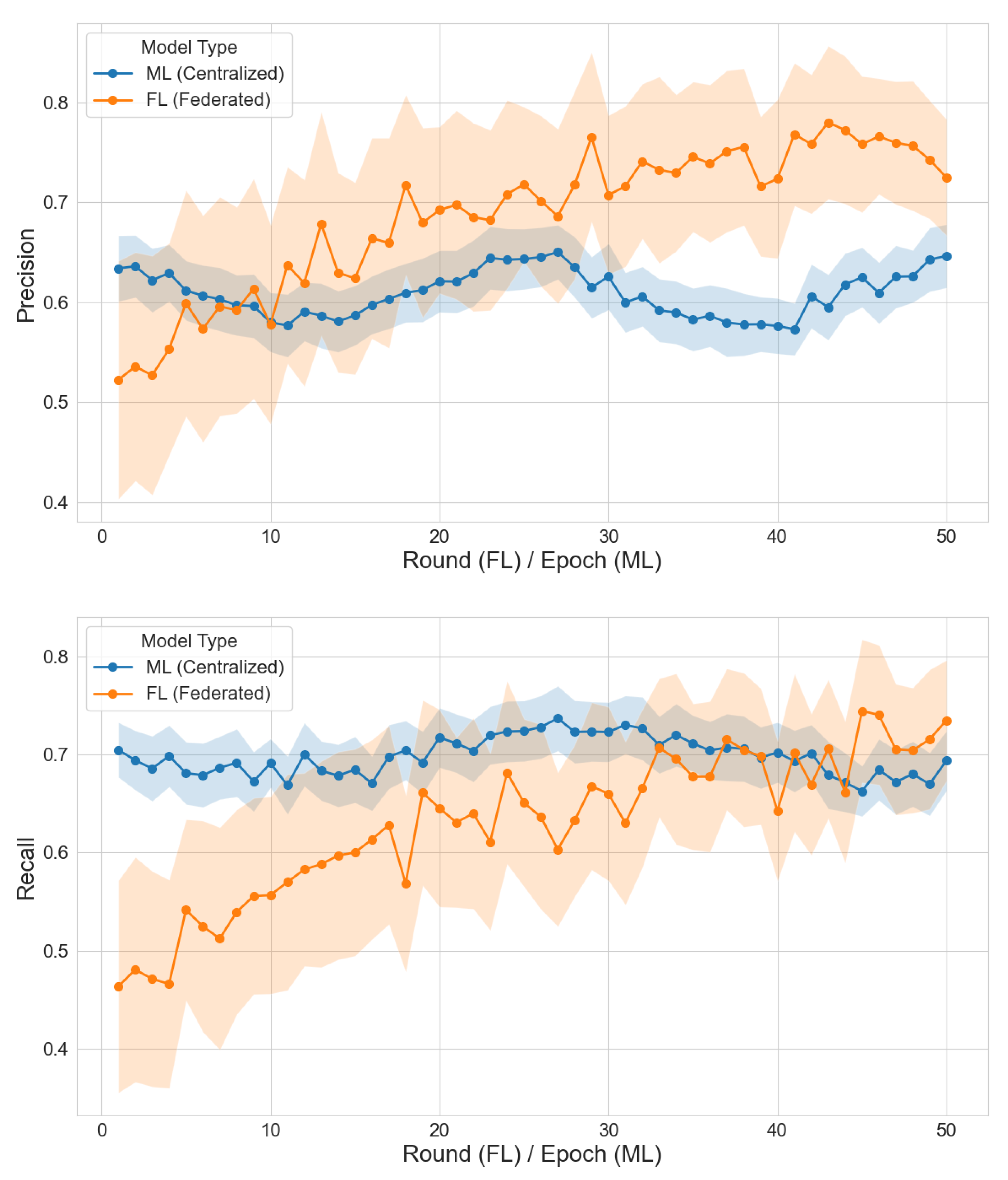

| Metric | Centralized (LightGBM) | Federated (CR-FL) | p-Value |
|---|---|---|---|
| Accuracy | 0.69 ± 0.02 | 0.79 ± 0.02 | <0.001 |
| AUC | 0.90 ± 0.01 | 0.95 ± 0.01 | <0.001 |
| Precision | 0.63 ± 0.03 | 0.75 ± 0.04 | <0.001 |
| Recall | 0.68 ± 0.02 | 0.72 ± 0.03 | <0.05 |
| F1-Score | 0.65 ± 0.02 | 0.73 ± 0.03 | <0.001 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, L.; Zhou, J.; Peng, Q.; Zhou, Q.; Zhang, H. Consensus-Regularized Federated Learning for Superior Generalization in Wind Turbine Diagnostics. Mathematics 2025, 13, 2570. https://doi.org/10.3390/math13162570
