1. Introduction

Prompt engineering has become crucial for effectively adapting large language models to specific downstream tasks without extensive model retraining [1,2,3]. However, manually crafting suitable prompts is highly dependent on domain expertise and often requires significant trial-and-error, making this approach inefficient and unscalable [4,5]. Thus, automating prompt optimization has emerged as a critical research direction to overcome these limitations.

Existing automated prompt optimization approaches mainly involve gradient-based continuous optimization, heuristic discrete search, and reinforcement learning (RL) [6,7,8]. Gradient-based methods efficiently optimize prompts but require access to internal model gradients or probability distributions, restricting their application to open-source or white box LLMs [9,10]. Conversely, heuristic discrete search methods, such as evolutionary algorithms, do not rely on gradients and, thus, support black box models. However, these methods suffer from limited exploration and frequently converge to suboptimal solutions [11]. Reinforcement learning provides a promising direction by naturally balancing exploration and exploitation, yet existing RL-based approaches rarely leverage domain-specific constraints, resulting in potential semantic incoherence [9,12]. Additionally, the high-dimensional discrete search space poses significant challenges for achieving effective prompt optimization.

To address these challenges, we propose a Domain-Aware Reinforcement Learning framework for Prompt Optimization (DA-RLPO). We model discrete prompt editing as a Markov Decision Process (MDP). Our method leverages deep Q-learning combined with structured domain knowledge to systematically constrain the search space of candidate edits, thereby improving both search efficiency and optimization quality. An entropy-regularized reward function ensures balanced exploration and exploitation during prompt refinement.

The main contributions of our paper are summarized as follows. First, we propose DA-RLPO, a reinforcement learning framework for discrete prompt optimization. The framework incorporates domain-aware constraints and uses entropy-based reward regularization, enabling effective optimization without gradient information. Second, we introduce a structured knowledge base to guide prompt editing, ensuring semantic coherence and domain relevance. Finally, our extensive experiments demonstrate that DA-RLPO consistently outperforms baselines on text classification tasks, maintains robust performance under limited query budgets, and generalizes effectively to text-to-image generation and reasoning tasks.

3. Methods

3.1. Overview

We propose a reinforcement learning framework for prompt optimization, in which the rewriting process is formalized as a Markov Decision Process with discrete phrase-level actions. The agent iteratively refines an initial prompt via edit operations, guided by task performance and structural constraints. The framework consists of five core components: (i) a structured, domain-aware knowledge base that provides candidate phrases for editing, filtered by syntactic, semantic, and contextual criteria; (ii) an action execution module that applies four discrete edit operations (add, delete, substitute, and swap), with the set of feasible actions at each step determined by the current prompt state; (iii) a state representation module that encodes both semantic and statistical features of recent task performance; (iv) a Deep Q-Network that learns the editing policy by minimizing the temporal-difference error, employing experience replay and periodic target network synchronization; and (v) a composite reward function that balances accuracy improvement with the entropy-based measurement of action diversity.

At each step, the agent encodes the current state, determines the set of valid actions, selects an action according to an $\epsilon$-greedy policy, and applies the corresponding edit. The new prompt and resulting state are evaluated by the reward function, and the transition is stored in the experience replay buffer. Mini-batches are sampled uniformly from the buffer for Q-network updates, and the parameters of the target network are synchronized with the online network at regular intervals. The overall optimization proceeds until a maximum number of episodes is reached. The complete procedure is summarized in Algorithm 1.

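Algorithm 1. Domain-Aware RL-based Prompt Optimization Framework

- Require: Initial prompt $p_0$, editing environment $\mathcal{E}$, knowledge base $\mathcal{K}$, reward function $R$, number of episodes $N$, max steps per episode $T$
- Ensure: Optimized prompt $p^*$
- 1: Initialize Q-network $Q_\theta$ with random weights
- 2: Initialize target network $Q_{\theta^-}$ ($\theta^- \leftarrow \theta$)
- 3: Initialize experience replay buffer $\mathcal{D}$
- 4: for episode $= 1$ to $N$ do
  - 5: $p \leftarrow p_0$
  - 6: for step $= 1$ to $T$ do
    - 7: Encode normalized state $s$ from $p$
    - 8: Determine valid action set $\mathcal{A}_{\text{valid}}(s)$
    - 9: Select $a$ via $\epsilon$-greedy policy over $\mathcal{A}_{\text{valid}}(s)$
    - 10: Apply $a$ to $p$ using $\mathcal{E}$ and $\mathcal{K}$ to obtain $p'$, $s'$
    - 11: Compute reward $r$ with $R$
    - 12: Store $(s, a, r, s')$ in buffer $\mathcal{D}$
    - 13: Sample mini-batch $\mathcal{B} \sim \mathcal{D}$
    - 14: Update $\theta$ using TD loss on $\mathcal{B}$
    - 15: if step $\bmod\ C = 0$ then
      - 16: Update target network: $\theta^- \leftarrow \theta$
    - 17: end if
    - 18: $p \leftarrow p'$, $s \leftarrow s'$
  - 19: end for
- 20: end for
- 21: return final optimized prompt $p^*$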

3.2. Domain-Aware Decision Flow

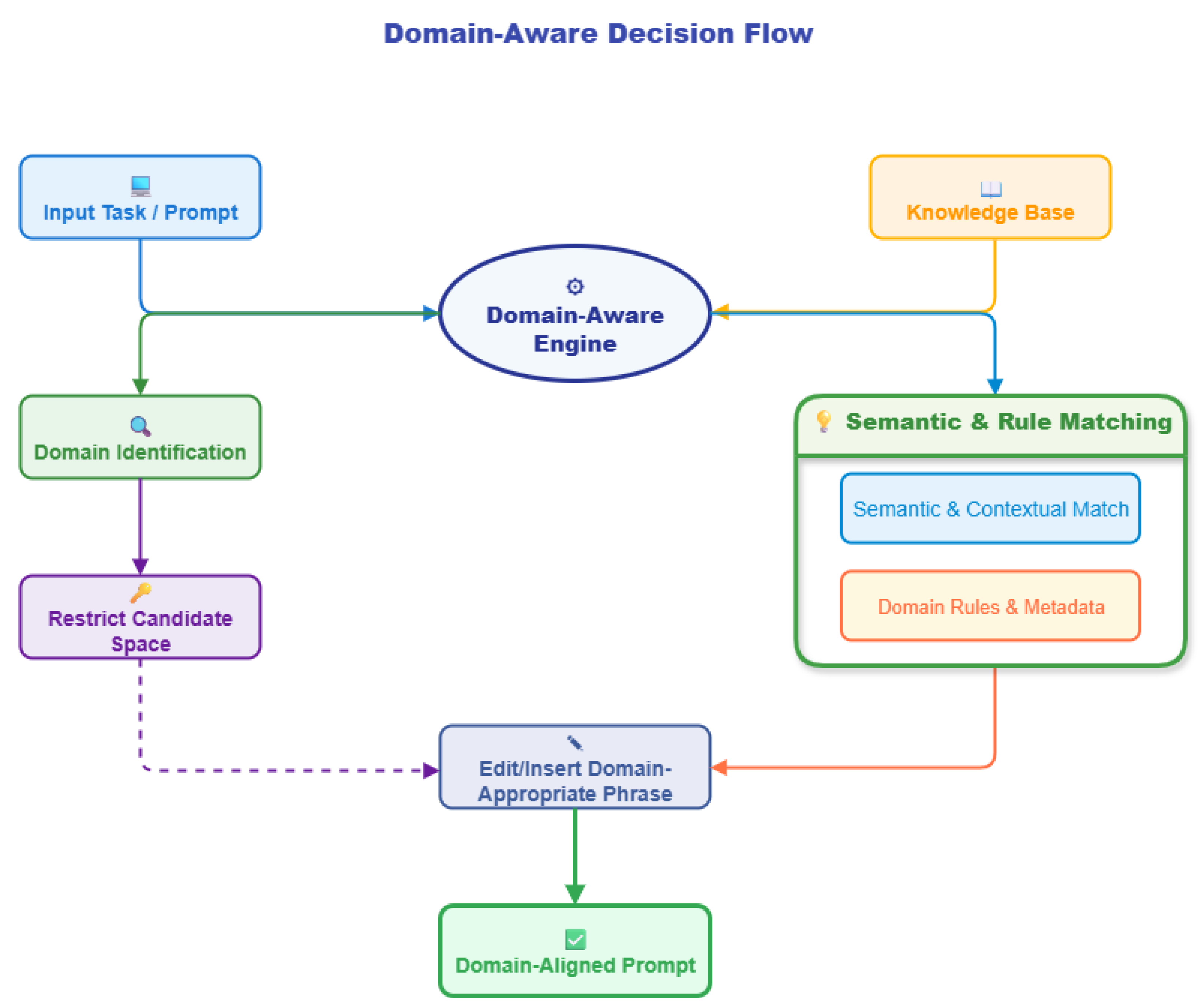

This section presents a detailed description of the domain-aware decision flow underlying DA-RLPO, illustrating the integration of domain-awareness into the prompt optimization process. As illustrated in Figure 1, the approach centers on a domain-aware engine. This engine processes the initial task prompt and incorporates both semantic and rule-based information from a structured knowledge base, enabling accurate recognition of domain relevance and guiding subsequent editing decisions. Specifically, the input prompt is first processed by a domain identification module, which determines the specific domain associated with the current task. Based on the identified domain, the candidate action space for editing is further constrained by domain knowledge, which effectively reduces the search space and improves the efficiency of the editing process. The domain-aware engine interacts closely with the knowledge base to retrieve and generate candidate phrases that are contextually appropriate for the task, taking into account both semantic similarity and domain-specific rules and metadata. These domain-relevant phrases are then used by the editing module for insertion or substitution, resulting in an optimized prompt with explicit domain characteristics. This decision flow highlights the core feature of the proposed method: prompt editing is achieved through systematic incorporation of domain knowledge and semantic constraints, allowing the framework to produce prompts that are both efficient and contextually appropriate for the identified task domain.

3.3. Knowledge Base

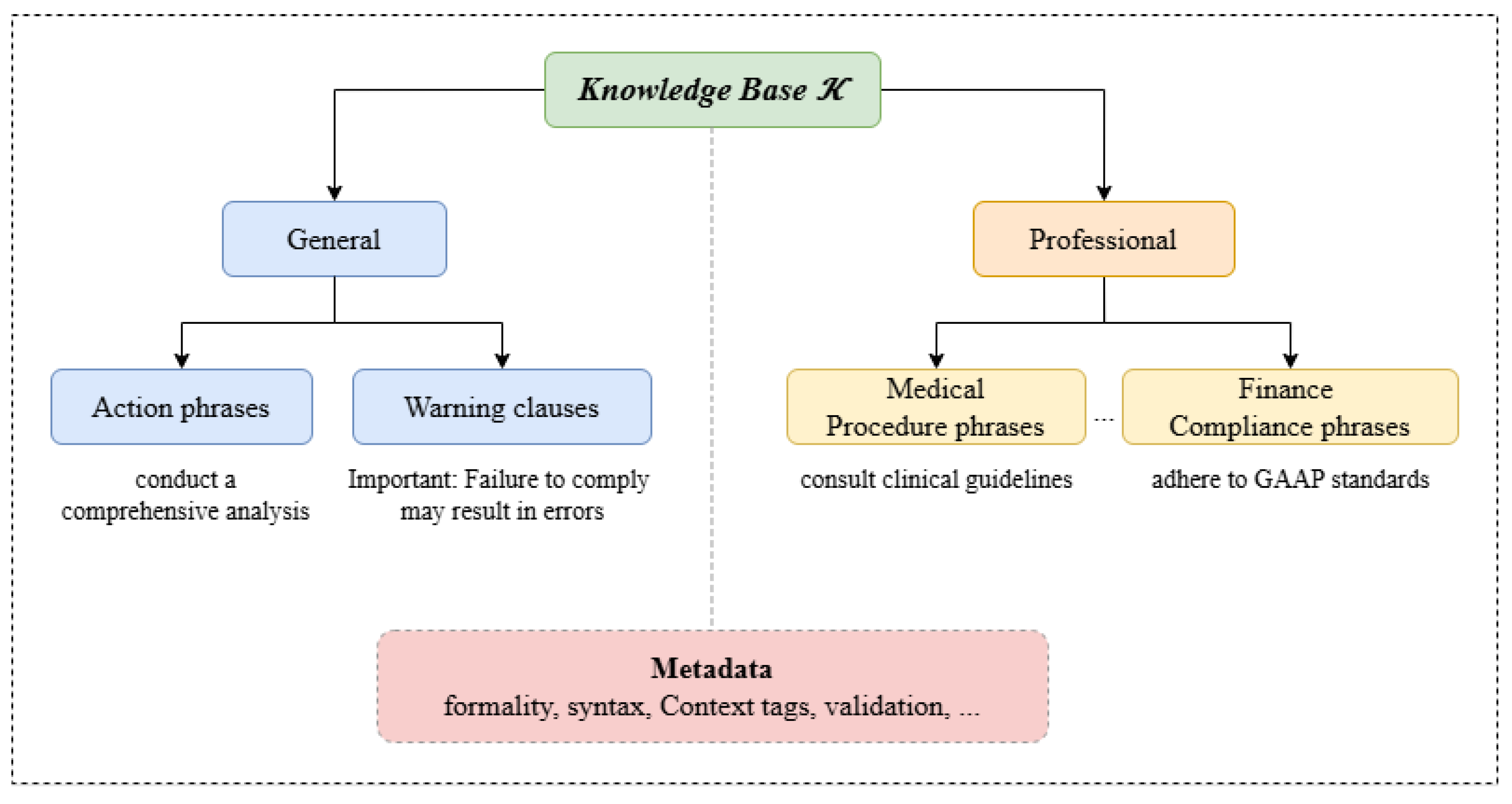

As a foundational component of the domain-aware decision flow described above, we construct a structured knowledge base $\mathcal{K}$ to enable phrase-level prompt editing under the reinforcement learning framework. Each element in $\mathcal{K}$ represents a syntactically coherent phrase segment that can be added to or substituted into a prompt, ensuring semantic consistency and domain appropriateness.

The $\mathcal{K}$ structure represents domain-specific knowledge and syntactic granularity. As shown in Table 1, phrases are organized by high-level application domains such as general, medical, and financial. Each phrase is further annotated with its syntactic type, including noun phrases (NPs), verb phrases (VPs), and conditional clauses. This organization enables controlled and flexible phrase-level modifications during editing, while preserving fluency.

Each phrase in $\mathcal{K}$ is associated with structured metadata describing its properties. These attributes include a numerical formality score reflecting stylistic tone, a syntactic category label that specifies whether the phrase is a noun phrase, verb phrase, or another type, and a set of context tags that indicate appropriate usage scenarios. Additional metadata fields may capture information such as supporting literature references, severity levels, or legal applicability. This rich annotation enables the editing policy to systematically filter or prioritize candidate phrases based on contextual requirements and linguistic constraints.

Candidate retrieval is governed by a set of rule-based criteria defined over $\mathcal{K}$. These include syntactic matching constraints and fuzzy context alignment. For substitution operations, phrases must match in syntactic type, and contextual relevance is estimated by comparing metadata tags with the current prompt window.
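The rule-based retrieval described above can be illustrated with a minimal sketch. The `Phrase` fields, the `retrieve_candidates` helper, and the toy entries are hypothetical names chosen for illustration; the paper does not specify a schema, and "fuzzy context alignment" is approximated here by a simple count of overlapping tags.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Phrase:
    """One knowledge-base entry; field names are illustrative assumptions."""
    text: str
    domain: str            # e.g. "general", "medical", "financial"
    syntactic_type: str    # e.g. "NP", "VP", "COND"
    formality: float       # numerical formality score
    tags: frozenset = field(default_factory=frozenset)  # context tags


def retrieve_candidates(kb, domain, target_type, window_words, k=3):
    """Return up to k phrases matching domain and syntactic type,
    ranked by overlap between context tags and the prompt window."""
    window = {w.lower() for w in window_words}
    matches = [p for p in kb
               if p.domain == domain and p.syntactic_type == target_type]
    # Fuzzy context alignment, approximated here by tag/word overlap count.
    matches.sort(key=lambda p: len(p.tags & window), reverse=True)
    return matches[:k]


kb = [
    Phrase("the patient's symptoms", "medical", "NP", 0.8,
           frozenset({"diagnosis", "patient"})),
    Phrase("the reported dosage", "medical", "NP", 0.9,
           frozenset({"drug", "dosage"})),
    Phrase("assess the risk", "medical", "VP", 0.7, frozenset({"diagnosis"})),
    Phrase("the quarterly earnings", "financial", "NP", 0.9,
           frozenset({"report"})),
]
top = retrieve_candidates(kb, "medical", "NP",
                          ["Classify", "the", "patient", "note"], k=2)
print([p.text for p in top])
```

Filtering on syntactic type before ranking mirrors the requirement that substituted phrases match in type; the ranking step is only one plausible reading of the tag comparison.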

To guarantee semantic appropriateness and mitigate redundancy, candidate phrases retrieved from the knowledge base $\mathcal{K}$ are further filtered according to both semantic similarity and contextual alignment. For each candidate phrase $p_c$ from the knowledge base, its cosine similarity to an existing phrase $p_e$ in the current prompt is computed as

$$\mathrm{sim}(p_c, p_e) = \frac{\mathbf{e}(p_c) \cdot \mathbf{e}(p_e)}{\lVert \mathbf{e}(p_c) \rVert \, \lVert \mathbf{e}(p_e) \rVert},$$

where $\mathbf{e}(p)$ denotes the embedding vector of phrase $p$, obtained using the sentence encoder. Only candidate phrases satisfying

$$\mathrm{sim}(p_c, p_e) < \tau,$$

with $\tau$ a similarity threshold, are retained for editing, ensuring sufficient dissimilarity from existing prompt content. This mechanism discourages the insertion of near-duplicate phrases and enhances diversity among generated prompt variants. Additionally, contextual tags and keyword overlap are leveraged to further prioritize phrases that best match the intended usage scenario of the current prompt window.
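As a rough illustration of this similarity filter, the sketch below keeps only candidates whose cosine similarity to every phrase already in the prompt stays below a threshold. The helper names, the toy 3-dimensional embeddings, and the threshold value of 0.8 are assumptions for demonstration; a real system would obtain embeddings from a sentence encoder.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def filter_near_duplicates(candidates, prompt_embeddings, threshold):
    """Keep candidates whose similarity to every prompt phrase is below threshold.

    candidates: dict mapping phrase text -> embedding vector.
    """
    kept = []
    for text, emb in candidates.items():
        max_sim = max((cosine_similarity(emb, e) for e in prompt_embeddings),
                      default=0.0)
        if max_sim < threshold:
            kept.append(text)
    return kept


# Toy 3-d embeddings (assumption); the threshold 0.8 is illustrative only.
prompt_embeddings = [[1.0, 0.0, 0.0]]
candidates = {
    "near duplicate": [0.99, 0.1, 0.0],
    "novel phrase": [0.0, 1.0, 0.0],
}
kept = filter_near_duplicates(candidates, prompt_embeddings, threshold=0.8)
print(kept)
```

Comparing against the maximum similarity over all existing phrases is one conservative choice; comparing only against the phrase being replaced would be an equally plausible variant.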

Figure 2 illustrates the overall architecture of the knowledge base $\mathcal{K}$. During training, $\mathcal{K}$ enables the agent to explore phrase-level variations within a constrained yet diverse action space. The integration of this structured knowledge base with reinforcement learning is further detailed in the following section.

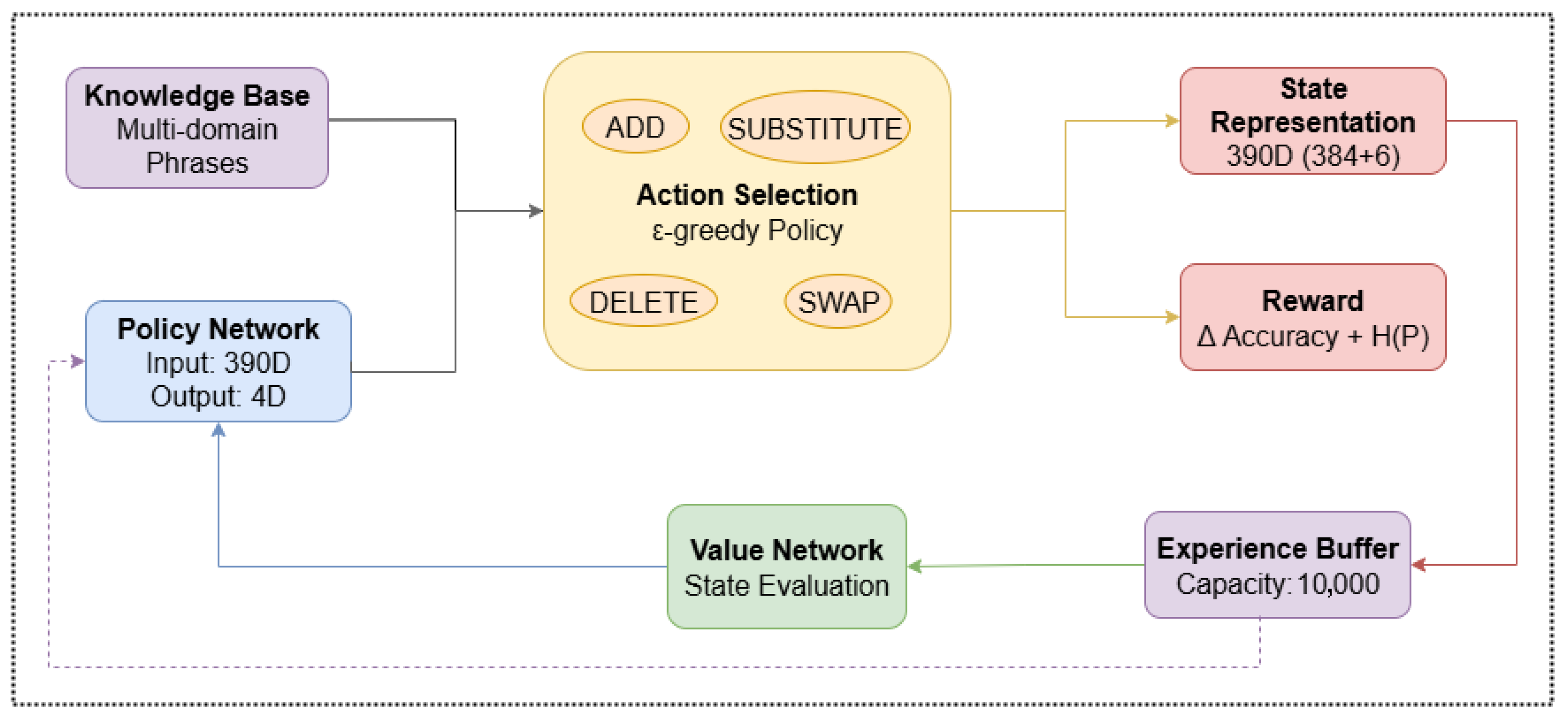

3.4. Reinforcement Learning for Prompt Editing

This section details the reinforcement learning framework adopted for prompt editing. The optimization procedure is formulated as a Markov Decision Process. The state space integrates semantic and statistical features. The action space consists of discrete phrase-level editing operations, which are restricted by rule-based validity constraints. The reward function incorporates both task-specific performance and the entropy of the recent action distribution. Candidate edits are retrieved from the structured knowledge base $\mathcal{K}$ according to syntactic, semantic, and contextual relevance. Model training is conducted with a Deep Q-Network utilizing experience replay and periodic target network updates. An overview of the entire process is presented in Figure 3. The subsequent subsections formally define each component and provide implementation details.

3.4.1. Formulation as Markov Decision Process

We formulate the prompt optimization problem as a discrete-time finite Markov Decision Process, defined by the tuple $(\mathcal{S}, \mathcal{A}, P, R, \gamma)$, where each element is explicitly characterized as follows:

State space ($\mathcal{S}$). The state $s_t \in \mathcal{S}$ at each time step $t$ encapsulates both semantic and statistical features of the current prompt. Specifically, $s_t$ consists of a semantic embedding vector obtained from Sentence-BERT combined with statistical features reflecting recent task performance, including running mean, standard deviation, and accuracy trend.

Action space ($\mathcal{A}$). The action space is defined as a discrete set of phrase-level editing operations applicable to the current prompt, namely,

$$\mathcal{A} = \{\text{add}, \text{delete}, \text{substitute}, \text{swap}\}.$$

Each action modifies the prompt by altering its constituent phrases based on predefined syntactic and semantic constraints detailed in the structured knowledge base $\mathcal{K}$.

State transition function ($P$). The state transition probability $P(s_{t+1} \mid s_t, a_t)$ characterizes the probability of reaching a subsequent state $s_{t+1}$ from state $s_t$ by applying action $a_t$. Given the deterministic nature of our editing operations, state transitions are implicitly defined by

$$s_{t+1} = f_{\text{edit}}(s_t, a_t),$$

where $f_{\text{edit}}$ denotes the phrase-level editing function executed within the editing environment $\mathcal{E}$, ensuring grammatical correctness and semantic consistency via rule-based validity checks.

Reward function ($R$). The reward function $R(s_t, a_t)$ quantitatively evaluates the effectiveness of prompt edits based on improvements in task-specific performance and the diversity of selected actions. Specifically, it is computed as

$$R(s_t, a_t) = \Delta \mathrm{Acc}_t + \lambda H(\pi_t),$$

where $\Delta \mathrm{Acc}_t$ denotes the incremental change in accuracy due to action $a_t$, $\lambda$ is the entropy weight, and $H(\pi_t)$ is the entropy of the action distribution, encouraging diverse and explorative editing behavior.

Discount factor ($\gamma$). The discount factor $\gamma$ is introduced to balance immediate and future rewards. In this study, $\gamma$ was set empirically so as to assign considerable importance to future prompt refinement outcomes.

This MDP formulation allows us to utilize deep reinforcement learning techniques, specifically deep Q-learning, to learn an optimal policy for prompt editing by maximizing the expected cumulative reward over the editing horizon.
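To make the deterministic transition concrete, the following sketch applies the four edit operations to a prompt represented as a list of phrases. The function name `apply_edit` and its argument layout are illustrative assumptions, and the rule-based validity checks of the editing environment are omitted here.

```python
def apply_edit(phrases, action, index=None, phrase=None, other=None):
    """Return a new phrase list after one edit; the input is not mutated."""
    result = list(phrases)
    if action == "add":
        result.insert(index, phrase)
    elif action == "delete":
        del result[index]
    elif action == "substitute":
        result[index] = phrase
    elif action == "swap":
        result[index], result[other] = result[other], result[index]
    else:
        raise ValueError(f"unknown action: {action}")
    return result


prompt = ["Read the review", "carefully", "and answer", "positive or negative"]
step1 = apply_edit(prompt, "substitute", index=0,
                   phrase="Read the customer review")
step2 = apply_edit(step1, "delete", index=1)
step3 = apply_edit(step2, "add", index=2, phrase="with a single label:")
print(" ".join(step3))
```

Because each call returns a fresh list, the same starting prompt always yields the same successor for a given action, which is the property the deterministic transition relies on.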

3.4.2. Hybrid State Encoding

The state representation is composed of a semantic embedding of the current prompt and statistical features derived from task performance. The semantic embedding is obtained using Sentence-BERT, producing a 384-dimensional vector that captures the overall meaning of the prompt. The statistical features include the running mean of task accuracy over the last t time-steps ($\mu^{\mathrm{acc}}_t$), the standard deviation of task accuracy ($\sigma^{\mathrm{acc}}_t$), and the trend, calculated as the difference in task accuracy over the most recent steps ($\delta^{\mathrm{acc}}_t$).
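A minimal sketch of the three statistical features follows. The helper name `accuracy_features`, the use of the population standard deviation, and the trend window of three steps are assumptions made for illustration; the paper does not state these details.

```python
import statistics


def accuracy_features(history, trend_window=3):
    """Return (running mean, standard deviation, trend) of recent accuracies.

    The trend is the difference between the latest accuracy and the one
    trend_window steps earlier (or the oldest one if history is shorter).
    """
    if not history:
        return 0.0, 0.0, 0.0
    mean = statistics.fmean(history)
    std = statistics.pstdev(history)  # population std; 0.0 for a single value
    start = max(0, len(history) - 1 - trend_window)
    trend = history[-1] - history[start]
    return mean, std, trend


history = [0.50, 0.52, 0.55, 0.61]
mean, std, trend = accuracy_features(history)
print(round(mean, 3), round(std, 3), round(trend, 3))
```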

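The complete state vector is obtained by concatenating the semantic embedding and the statistical features:

$$s_t = \left[\mathbf{e}(p_t);\ \mu^{\mathrm{acc}}_t;\ \sigma^{\mathrm{acc}}_t;\ \delta^{\mathrm{acc}}_t\right],$$

where $\mathbf{e}(p_t)$ is the semantic embedding of the current prompt $p_t$, and $\mu^{\mathrm{acc}}_t$, $\sigma^{\mathrm{acc}}_t$, and $\delta^{\mathrm{acc}}_t$ are the running mean, standard deviation, and trend of task accuracy.

To ensure stable training, the state features are normalized dynamically, using running statistics. For each dimension of $s_t$, the running mean $\boldsymbol{\mu}_t$ and running standard deviation $\boldsymbol{\sigma}_t$ are recursively updated at every step according to

$$\boldsymbol{\mu}_t = (1 - \alpha)\,\boldsymbol{\mu}_{t-1} + \alpha\, s_t, \qquad \boldsymbol{\sigma}_t^2 = (1 - \alpha)\,\boldsymbol{\sigma}_{t-1}^2 + \alpha\,(s_t - \boldsymbol{\mu}_t)^2,$$

where $\alpha$ is the update rate, and all operations are performed element-wise. The normalized state vector is then computed as

$$\hat{s}_t = \frac{s_t - \boldsymbol{\mu}_t}{\boldsymbol{\sigma}_t}.$$

This adaptive normalization strategy enables the model to account for changes in the distribution of state features over time and maintain consistent input scaling throughout training.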
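One way to realize such running normalization is an exponential moving average of the mean and variance, sketched below. The class name, the default update rate, and the small stability constant `eps` are assumptions; the exact recursion used by the authors may differ.

```python
class RunningNormalizer:
    """Element-wise exponential-moving-average normalizer (a sketch)."""

    def __init__(self, dim, alpha=0.01, eps=1e-8):
        self.alpha = alpha      # update rate
        self.eps = eps          # numerical-stability constant (assumption)
        self.mean = [0.0] * dim
        self.var = [1.0] * dim

    def update(self, state):
        """Fold one state vector into the running statistics."""
        for i, x in enumerate(state):
            self.mean[i] = (1 - self.alpha) * self.mean[i] + self.alpha * x
            diff = x - self.mean[i]
            self.var[i] = (1 - self.alpha) * self.var[i] + self.alpha * diff * diff

    def normalize(self, state):
        """Scale a state vector with the current running statistics."""
        return [(x - m) / (v ** 0.5 + self.eps)
                for x, m, v in zip(state, self.mean, self.var)]


norm = RunningNormalizer(dim=1, alpha=0.05)
for step in range(500):
    # Toy feature alternating between 10 and 0, so its mean is near 5.
    norm.update([10.0 if step % 2 == 0 else 0.0])
z = norm.normalize([10.0])[0]
print(round(z, 2))
```

With the alternating toy input, the value 10 sits roughly one running standard deviation above the running mean, so the normalized output lands close to 1.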

3.4.3. Action Selection Policy

At each time-step, the agent selects an editing action from the set of feasible actions, which consists of add, substitute, delete, and swap operations. Figure 4 presents representative candidate prompts generated by applying each editing operation to a common initial prompt. These operations are designed to produce minimal but semantically meaningful changes to the prompt. The add action involves inserting a new phrase into the prompt, either from the knowledge base $\mathcal{K}$ or from the deleted sequence. The delete action removes an existing phrase from the prompt, while the substitute action replaces an existing phrase with a new one from $\mathcal{K}$. Finally, the swap action exchanges two phrases within the prompt. Action selection is driven by a Deep Q-Network, which learns the action-value function $Q(s, a; \theta)$. The action selection is guided by an $\epsilon$-greedy policy, in which a random valid action is chosen with probability $\epsilon$, and the action maximizing the current Q-value is selected with probability $1 - \epsilon$.

The set of available actions at each time-step is not fixed, but rather determined by the current state. This restriction is implemented by a validity function that encodes the prompt structure and linguistic constraints. Formally, the set of valid actions is defined as

$$\mathcal{A}_{\text{valid}}(s_t) = \{\, a \in \mathcal{A} \mid V(s_t, a) = 1 \,\},$$

where $V(s_t, a)$ is a binary function that returns 1 if action $a$ is valid in state $s_t$ and 0 otherwise. The function $V$ is implemented as a set of rule-based checks, including phrase presence, syntactic compatibility, entity type filtering, and grammar verification. For example, a delete action is allowed only if a non-essential phrase exists, while a swap action requires at least two eligible phrases. At each decision step, the agent samples actions exclusively from $\mathcal{A}_{\text{valid}}(s_t)$ under the current policy. This state-dependent constraint on the action space ensures that only feasible and linguistically appropriate edits are considered, thereby reducing the risk of ungrammatical or semantically inconsistent prompt generation.
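The interplay between validity masking and exploration can be sketched as follows. The rules in `valid_actions` are simplified stand-ins for the paper's checks (here, "eligible" is taken to mean "non-essential", which is an assumption), and the Q-values are hard-coded toy numbers rather than network outputs.

```python
import random


def valid_actions(phrases, essential, kb_candidates):
    """Rule-based validity check returning the feasible subset of actions."""
    removable = [p for p in phrases if p not in essential]
    actions = []
    if kb_candidates:
        actions.append("add")
        if phrases:
            actions.append("substitute")
    if removable:
        actions.append("delete")
    if len(removable) >= 2:
        actions.append("swap")
    return actions


def epsilon_greedy(q_values, actions, epsilon, rng=random):
    """Pick a random valid action with prob. epsilon, else the argmax of Q."""
    if not actions:
        raise ValueError("no valid action in this state")
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q_values[a])


phrases = ["Classify the sentence", "as positive or negative"]
essential = {"as positive or negative"}
actions = valid_actions(phrases, essential, kb_candidates=["carefully"])
q_values = {"add": 0.4, "substitute": 0.1, "delete": 0.7, "swap": 0.9}
choice = epsilon_greedy(q_values, actions, epsilon=0.0)
print(actions, choice)
```

In this toy state only one phrase is removable, so swap is masked out even though it carries the highest Q-value, and the greedy choice falls to delete.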

3.4.4. Reward Shaping

The reward function is constructed to guide the agent toward prompt edits that improve task performance. The primary component is the change in task accuracy $\Delta \mathrm{Acc}_t$, which quantifies the improvement achieved after each edit. To further encourage exploration and avoid premature convergence to repetitive behaviors, a diversity term based on the entropy of the action distribution is introduced. The total reward at time-step $t$ is given by

$$r_t = \Delta \mathrm{Acc}_t + \lambda H(\pi_t),$$

where $\Delta \mathrm{Acc}_t$ denotes the change in task accuracy, $\lambda$ is the entropy regularization weight, and $H(\pi_t)$ is the entropy of the empirical action distribution at time $t$.

The entropy regularization term $\lambda H(\pi_t)$ promotes exploration by discouraging the policy from repeatedly selecting the same actions. Specifically, the entropy is computed as

$$H(\pi_t) = -\sum_{a \in \mathcal{A}} \pi_t(a) \log \pi_t(a),$$

where $\pi_t(a)$ is the empirical probability of selecting action $a$ at time-step $t$. This probability is estimated from the action frequencies within a fixed-length sliding window up to time $t$:

$$\pi_t(a) = \frac{N_t(a)}{\sum_{a' \in \mathcal{A}} N_t(a')},$$

where $N_t(a)$ denotes the number of times action $a$ was selected in the recent window. The entropy term thereby regularizes the agent’s behavior, encouraging a broader exploration of the action space and preventing the learning policy from stagnating at suboptimal, repetitive strategies.
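A small worked example may help show how the entropy term changes the reward. The sketch below computes the Shannon entropy of the actions in a sliding window and adds it, weighted, to the accuracy change. The window length of 4 and the function names are assumptions; the weight of 0.2 is the value reported as best in the sensitivity analysis.

```python
import math
from collections import Counter, deque


def action_entropy(recent_actions):
    """Shannon entropy (natural log) of the empirical action distribution."""
    counts = Counter(recent_actions)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log(c / total) for c in counts.values())


def shaped_reward(delta_acc, recent_actions, weight=0.2):
    """Accuracy change plus weighted entropy of the recent actions."""
    return delta_acc + weight * action_entropy(recent_actions)


window = deque(maxlen=4)   # fixed-length sliding window (length is an assumption)
for a in ["add", "add", "add", "add"]:
    window.append(a)
repetitive = shaped_reward(0.02, window)

window.extend(["delete", "swap", "substitute"])  # oldest entries fall out
diverse = shaped_reward(0.02, window)
print(round(repetitive, 4), round(diverse, 4))
```

For the same accuracy gain, the window containing four distinct actions earns a larger reward than the window of repeated add actions, whose entropy is zero.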

3.4.5. Training Mechanism

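The DQN is trained using standard reinforcement learning techniques, including experience replay and periodic target network updates. At each step, the agent stores its interaction as a tuple $(s_t, a_t, r_t, s_{t+1})$ in an experience replay buffer $\mathcal{D}$:

$$\mathcal{D} \leftarrow \mathcal{D} \cup \{(s_t, a_t, r_t, s_{t+1})\},$$

where $s_t$ is the state, $a_t$ the action, $r_t$ the reward, and $s_{t+1}$ the next state. During learning, mini-batches $\mathcal{B}$ are randomly and uniformly sampled from $\mathcal{D}$:

$$\mathcal{B} = \{(s_i, a_i, r_i, s'_i)\}_{i=1}^{B} \sim \mathrm{Uniform}(\mathcal{D}),$$

where $B$ denotes the batch size.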
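The buffer mechanics can be sketched in a few lines. The `ReplayBuffer` class below is an illustrative assumption rather than the authors' implementation; it uses a bounded deque so that the oldest transitions are evicted first, and samples uniformly without replacement.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity FIFO buffer with uniform sampling."""

    def __init__(self, capacity, seed=None):
        self.data = deque(maxlen=capacity)  # oldest transitions are evicted
        self.rng = random.Random(seed)

    def store(self, state, action, reward, next_state):
        self.data.append((state, action, reward, next_state))

    def sample(self, batch_size):
        # Uniform sampling without replacement, capped at the buffer size.
        return self.rng.sample(list(self.data), min(batch_size, len(self.data)))

    def __len__(self):
        return len(self.data)


buffer = ReplayBuffer(capacity=3, seed=0)
for t in range(5):
    buffer.store(state=t, action="add", reward=0.1 * t, next_state=t + 1)
batch = buffer.sample(batch_size=2)
print(len(buffer), [tr[0] for tr in batch])
```

The tiny capacity here only demonstrates eviction; the sensitivity analysis in the paper considers capacities between 1000 and 20,000.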

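The loss function for training is the temporal difference (TD) error,

$$L(\theta) = \mathbb{E}_{(s, a, r, s') \sim \mathcal{B}} \left[ \left( r + \gamma \max_{a'} Q(s', a'; \theta^-) - Q(s, a; \theta) \right)^2 \right],$$

where $r$ is the observed reward, $\gamma$ is the discount factor, and $\theta^-$ denotes the parameters of the target Q-network.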
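The TD objective can be checked by hand on a single transition. In the sketch below, dictionaries stand in for the online and target networks, and the discount of 0.5 is a toy value chosen to make the arithmetic easy to follow; none of these are the paper's settings.

```python
def td_loss(batch, q_online, q_target, gamma, actions):
    """Mean squared TD error over a batch of (s, a, r, s_next) transitions.

    q_online and q_target are callables mapping (state, action) -> value.
    """
    total = 0.0
    for s, a, r, s_next in batch:
        target = r + gamma * max(q_target(s_next, a2) for a2 in actions)
        total += (target - q_online(s, a)) ** 2
    return total / len(batch)


ACTIONS = ["add", "delete", "substitute", "swap"]
# Toy tabular Q-functions standing in for the online and target networks.
online = {(0, "add"): 0.5}
target = {(1, "add"): 1.0, (1, "delete"): 2.0}


def q_online(s, a):
    return online.get((s, a), 0.0)


def q_target(s, a):
    return target.get((s, a), 0.0)


loss = td_loss([(0, "add", 1.0, 1)], q_online, q_target,
               gamma=0.5, actions=ACTIONS)
print(loss)  # target = 1.0 + 0.5 * 2.0 = 2.0, error = 1.5, loss = 2.25
```

In a full implementation the maximum would likely be restricted to the actions valid in the next state, and the squared error would be minimized by gradient descent on the online parameters only.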

To stabilize training, the parameters of the target network are periodically synchronized with those of the online network according to

$$\theta^- \leftarrow \theta \quad \text{every } C \text{ steps},$$

where $C$ is the target update period, $\theta$ denotes the parameters of the online Q-network, and $\theta^-$ those of the target network.

Additionally, the exploration rate $\epsilon$ decays exponentially with the training steps,

$$\epsilon_t = \max\left(\epsilon_{\min},\ \epsilon_0 \cdot \rho^{\,t}\right),$$

where $\epsilon_0$ is the initial exploration rate, $\rho$ is the decay factor, and $\epsilon_{\min}$ is the minimum value. This schedule ensures that the agent gradually shifts from exploration to exploitation as learning proceeds.
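A floored exponential schedule of this kind takes only a few lines. The function name and the start and floor values below are assumptions for illustration; the decay factor 0.995 is one of the values compared in the sensitivity analysis.

```python
def epsilon_at(step, eps_start=1.0, decay=0.995, eps_min=0.05):
    """Exponentially decayed exploration rate, floored at eps_min."""
    return max(eps_min, eps_start * decay ** step)


for step in (0, 100, 500, 1000):
    print(step, round(epsilon_at(step), 4))
```

A decay factor closer to 1 keeps the agent exploring for longer, while a smaller factor reaches the floor sooner.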

3.5. Complexity Analysis

We analyze the complexity of the proposed reinforcement learning framework. Let $T$ denote the maximum number of editing steps per episode, and let $F$ represent the cost of a forward pass through the Q-network at each step. At each editing step, the agent retrieves $k$ candidate phrases from the knowledge base of size $|\mathcal{K}|$; let $c_{\mathcal{K}}$ denote the cost of this retrieval. The total complexity per episode is, therefore, $O(T \cdot (F + c_{\mathcal{K}}))$. During training, the Q-network is updated via experience replay, with each update processing a mini-batch of size $B$, yielding an additional $O(B \cdot F)$ cost per update. For $N$ episodes with one update per step, the overall training complexity is $O(N \cdot T \cdot (F + c_{\mathcal{K}} + B \cdot F))$. Notably, the use of domain-aware constraints substantially reduces the effective action space and retrieval costs, thereby improving the practical efficiency of the method.

4. Experiments

In this section, we describe the experiments conducted to evaluate the performance of our proposed prompt optimization framework. Our evaluation consisted of the following main parts: (1) an evaluation on eight binary classification tasks, using the Natural-Instructions dataset v2.6 [41]; (2) an evaluation under the constraint of limited API calls; (3) additional experiments on text-to-image generation tasks, using Stable Diffusion 2.1 and PickScore version 1.0 [42,43]; (4) an evaluation of reasoning capability on representative tasks from the GSM8K, ASDiv, AQuA, CSQA, and StrategyQA datasets [44,45,46,47,48]; (5) an ablation study to understand the contribution of the key components of our framework; and (6) hyperparameter sensitivity analyses to verify the robustness of the method. The results from these experiments demonstrate the effectiveness, efficiency, and generalization ability of our approach across various practical scenarios.

4.1. Experimental Setup

This section describes the experimental setup used to evaluate the effectiveness of our proposed method. The setup included information about the dataset, baselines, and experimental parameters, ensuring a fair comparison between the various methods.

4.1.1. Dataset

Our experiments were conducted on a subset of the Natural-Instructions dataset v2.6, which includes a wide range of tasks designed to evaluate instruction-following capabilities. We focused on eight binary classification tasks: task019, task021, task022, task050, task069, task137, task139, and task195. These tasks are well-suited for testing prompt optimization strategies, as they involve interpreting and responding to diverse instructions. Each task includes a set of input–output examples that the models are expected to handle effectively.

In addition, to evaluate the generalization of our method, we utilized Stable-Diffusion-2-1 for text-to-image generation tasks, with PickScore used to assess prompt effectiveness. We further conducted experiments on representative reasoning tasks from the GSM8K, ASDiv, AQuA, CSQA, and StrategyQA datasets.

4.1.2. Baselines

To comprehensively evaluate the effectiveness of our proposed reinforcement learning framework, we compared it to five state-of-the-art prompt optimization methods. Specifically, Plum employs metaheuristic algorithms for discrete prompt optimization, leveraging black box model feedback to iteratively select and refine candidate prompts [49]; BDPL utilizes a variance-reduced policy gradient strategy to optimize discrete prompts, effectively addressing high-variance issues in gradient estimation [27]; GrIPS conducts discrete prompt generation through phrase-level editing actions, selecting optimal prompts based on empirical task performance [8]; RLPrompt formulates prompt optimization as a reinforcement learning problem, iteratively refining prompts via token-level edits guided by task performance rewards [9]; StablePrompt formulates prompt optimization as an online reinforcement learning problem and employs adaptive proximal policy optimization for stable training [7]. These representative baselines encompass diverse prompt optimization paradigms, providing a comprehensive benchmark to evaluate the advantages of our proposed approach.

4.1.3. Experimental Parameters

To ensure fairness across all the methods, we used the following standardized settings:

Backbone model: GPT-4o was used for all tasks as the underlying language model.

Batch size: Set to 1 for all methods to focus on individual prompt editing.

Time limit: Each method was given 45 min of runtime per task, ensuring an equitable computational budget.

Task-agnostic instruction: Each method was provided with the same task-agnostic instruction: “You will be given a task. Read and understand the task carefully, and appropriately answer [list_of_labels]” [49]. The placeholder [list_of_labels] is replaced with the actual task-specific labels for each experiment.

These experimental parameters were designed to ensure fair and consistent evaluation of the different prompt learning methods. The time limit ensured that all the methods were constrained by similar computational resources, and the task-agnostic instruction provided a general template for all the tasks.

4.2. Prompt Optimization Performance

We evaluated the effectiveness of the proposed method on eight binary classification tasks from the Natural-Instructions v2.6 dataset [41], comparing it against five baseline prompt optimization methods. Table 2 summarizes the results regarding average task accuracy with standard deviation across multiple runs.

Across all the tasks, our method achieved the highest average accuracy of 61.13%, consistently outperforming all the baselines. Specifically, compared to GrIPS, BDPL, RLPrompt, and StablePrompt, our reinforcement learning-based framework demonstrated superior editing efficiency and generalization capability. For instance, on task3 our approach improved performance by more than 9 points compared to StablePrompt and by approximately 15 points compared to RLPrompt, highlighting its ability to effectively refine suboptimal initial prompts and achieve substantial accuracy gains. Further analysis indicated that RLPrompt and GrIPS achieved competitive performance on task5, but their performance deteriorated on more complex tasks, owing to their limited flexibility and lack of domain-specific constraints. In contrast, our method, with its structured action constraints and dynamic domain-awareness integration, consistently maintained stable and improved performance across all the tested tasks.

These results validate the effectiveness of modeling prompt optimization as a sequential decision-making process guided by domain knowledge and structured reinforcement learning, confirming its suitability and robustness for practical prompt optimization scenarios.

4.3. Prompt Optimization with Limited API Calls

To address concerns regarding computational resources, we further evaluated prompt optimization methods under a limited API call budget. As shown in Table 3, the proposed method achieved the highest average accuracy across all tasks under identical query constraints. Specifically, our approach consistently outperformed all comparative algorithms, demonstrating greater efficiency in utilizing limited queries. In particular, for tasks 3 and 7 our method exhibited substantial improvements, highlighting its effectiveness even in constrained resource scenarios. Compared with other reinforcement learning-based methods like RLPrompt and StablePrompt, our method attained superior accuracy by leveraging structured domain knowledge and entropy-guided exploration to efficiently navigate the prompt space. These results confirm the practicality and efficiency of our framework, making it particularly suitable for real-world scenarios where API calls are limited.

4.4. Prompt Optimization for Text-to-Image Generation

In this experiment, all the images were generated using the Stable-Diffusion-2-1 model [42]. To objectively evaluate the effectiveness of prompt optimization, we employed PickScore, an offline CLIP-based evaluator trained on a large-scale, high-quality image–text pair dataset [43]. PickScore quantitatively measures the semantic relevance between generated images and their textual prompts.

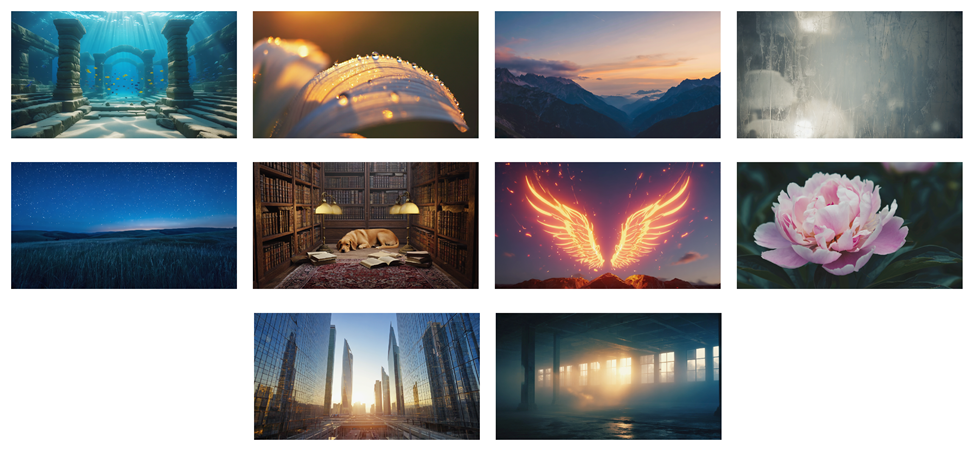

Figure 5 and Figure 6 show the visual comparisons between images generated using the original and the optimized prompts for ten distinct topics (Sunken Palace, Golden Petals, Mountain Dawn, Misty Mirror, Starlit Sky, Library Dog, Flaming Flight, Morning Peony, Urban Sunrise, and Blazing Tower). It is evident that the images generated using prompts optimized by the proposed method demonstrate notable improvements in visual quality and thematic coherence compared to those generated by the initial prompts.

Quantitatively, the optimized prompts achieved consistently higher PickScores across all ten image topics, especially in cases like “Morning Peony” and “Urban Sunrise”, where the relative improvements reached approximately 5.5% and 6.5%, respectively. These results suggest that the reinforcement learning-based editing strategy proposed in this paper effectively guides the model toward generating images with improved thematic fidelity and enhanced visual detail.

From the perspective of specific prompt-editing operations, the prompt optimization primarily employed three strategies: (i) substituting vague or abstract descriptions with concrete visual elements, for example replacing general phrases such as “delicate pink hues” with more explicit details like “deep pink, intricately layered petals”, which enhances the visual richness of the generated images; (ii) adding descriptive details or lighting elements, as seen in “Urban Sunrise”, where explicitly mentioning “orange light” and “crisp shadows” emphasizes the depiction of lighting and shadow effects; and (iii) deleting ambiguous or uncertain phrases, such as removing “faintly visible” in the context of “Starlit Sky”, which significantly enhances clarity and visual strength.

The optimized prompts generally yielded images with more focused themes, richer visual detail, and more accurate representation of intended atmospheres, particularly in visually complex scenarios such as “Sunken Palace” and “Flaming Flight”. These findings demonstrate the effectiveness and generalizability of the proposed method in prompt optimization tasks involving large language models, highlighting its practical value for real-world applications.

4.5. Prompt Optimization for Reasoning Tasks

In addition to quantitative evaluation, we present representative examples from five reasoning benchmarks to demonstrate the refinement of prompts by the proposed method via knowledge-guided editing. As shown in Table 4, our method consistently enhances initial prompts by clarifying key concepts, supplementing missing definitions, or completing logical reasoning steps, thereby improving the interpretability and effectiveness of each prompt for large language models.

Experimental analysis shows that our method consistently enriches initial prompts with explicit definitions, contextual information, and instructive annotations across diverse reasoning benchmarks. For instance, in the CSQA example, the optimized prompt clearly defines the term “choker” and guides consideration of alternative storage locations, thereby prompting more comprehensive reasoning. In the GSM8K and AQuA examples, the refined prompts explicitly outline calculation steps or clarify mathematical relationships. Similarly, for StrategyQA, the edited prompt introduces practical considerations regarding food preparation, thus enabling the model to reason about implicit factors more effectively.

To further demonstrate the domain-awareness capability of the proposed method, we present additional examples in Table 5. Specifically, these examples illustrate that the domain-aware editing mechanism refines prompts by incorporating precise domain-specific terms and clarifications. For instance, the initial prompt mentioning “lawyers” is enhanced to “attorneys (legal professionals specializing in divorce cases)”, clearly reflecting the legal domain context. Similarly, the term “blood” is modified to “bruises or scrapes” in a sports-related prompt to better align with typical domain-specific language used in athletic contexts. Furthermore, a prompt referencing “electric motor” is refined to explicitly indicate it as an “electromechanical device,” providing a more accurate domain-specific description. These refined prompts clearly indicate that our method systematically leverages structured domain knowledge to enhance the clarity and contextual appropriateness of the generated prompts.

4.6. Ablation Study

Table 6 presents the ablation results. Removing either the knowledge base constraint or the diversity reward term led to a drop in accuracy, demonstrating the importance of both knowledge-guided candidate selection and exploration encouragement in prompt optimization.

4.7. Hyperparameter Sensitivity Analysis

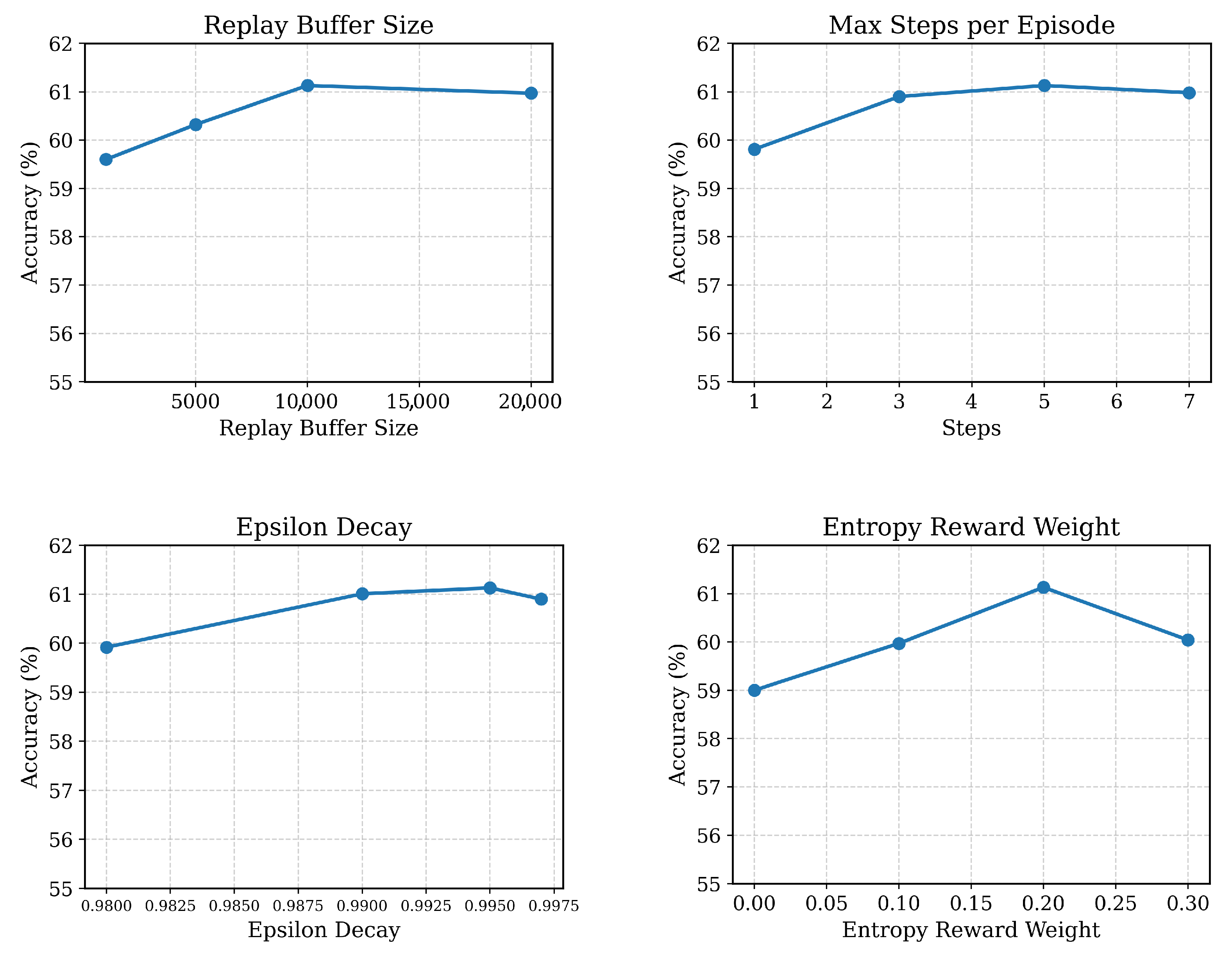

Following the ablation study of core components, we further investigated the sensitivity of our approach to key hyperparameter settings. To better understand the robustness and practical performance of our reinforcement learning-based prompt optimization framework, we conducted a sensitivity analysis on four key hyperparameters: replay buffer size, maximum editing steps per episode, epsilon decay rate, and entropy reward weight. All the sensitivity experiments were performed under the same experimental setup described previously, and the reported accuracy was averaged across all the benchmark tasks.

We examined buffer sizes of 1000, 5000, 10,000, and 20,000. As shown in Figure 7 (top-left), model accuracy initially improved with increasing buffer size, reaching the best result at 10,000 (61.13%), while further growth yielded diminishing returns. This trend reflects the benefit of enhanced sample diversity for stable training but also indicates that excessively large buffers may introduce unnecessary delay or overfitting to outdated transitions.

We varied the maximum number of allowed editing steps per episode among 1, 3, 5, and 7. Figure 7 (top-right) shows accuracy rising from 59.81% to 61.13% as the number increased to 5, after which it plateaued. This suggests that moderately longer editing trajectories enable more flexible prompt optimization, while excessively long episodes provide limited additional benefit.

The epsilon decay rate governs the exploration–exploitation trade-off in policy learning. We compared decay values of 0.98, 0.99, 0.995, and 0.997. Figure 7 (bottom-left) demonstrates that an appropriate balance between exploration and exploitation yields the highest accuracy, while too-rapid or too-slow decay reduces overall performance.

We assessed the impact of the entropy regularization weight on the agent’s reward function. As shown in Figure 7 (bottom-right), setting this weight to 0.2 achieved the highest accuracy, indicating that moderate encouragement of diverse editing actions is beneficial. The absence of diversity regularization (weight = 0) resulted in noticeably worse performance, while overly high weights also degraded the results.

Overall, these results indicate that the proposed method is robust to hyperparameter settings within reasonable ranges, and that the best performance is consistently achieved when balancing diversity, exploration, and efficient trajectory length.