Abstract

Underwater image enhancement is crucial for fields like marine exploration, underwater photography, and environmental monitoring, as underwater images often suffer from reduced visibility, color distortion, and contrast loss due to light absorption and scattering. Despite recent progress, existing methods struggle to generalize across diverse underwater conditions, such as varying turbidity levels and lighting. This paper proposes a novel hybrid UNet–Transformer architecture based on MaxViT blocks, which effectively combines local feature extraction with global contextual modeling to address challenges including low contrast, color distortion, and detail degradation. Extensive experiments on two benchmark datasets, UIEB and EUVP, demonstrate the superior performance of our method. On UIEB, our model achieves a PSNR of 22.91, SSIM of 0.9020, and CCF of 37.93—surpassing prior methods such as URSCT-SESR and PhISH-Net. On EUVP, it attains a PSNR of 26.12 and PCQI of 1.1203, outperforming several state-of-the-art baselines in both visual fidelity and perceptual quality. These results validate the effectiveness and robustness of our approach under complex underwater degradation, offering a reliable solution for real-world underwater image enhancement tasks.

Keywords:

underwater image enhancement; UNet–Transformer network; color restoration and detail preservation

MSC:

68U10

1. Introduction

Underwater image enhancement (UIE) technology [1,2,3] plays a pivotal role in a wide array of applications, including marine biology, underwater archaeology, and underwater robotics, where high-quality visual data is essential for accurate observation and decision-making. However, underwater images often suffer from significant degradations—such as color casts, low contrast, and blurred details—primarily due to the strong absorption and scattering of light, the differential attenuation of color channels (with red and green being absorbed more rapidly than blue), and the presence of haze-like artifacts caused by suspended particles.

Existing UIE methods can generally be classified into three categories: physical model-based methods, visual prior-based methods, and data-driven approaches. Traditional techniques, like histogram equalization and white balance correction [4,5], adjust pixel values based on contrast or brightness without explicitly considering the underlying physical processes. Physical model-based approaches attempt to invert the degradation by estimating key underwater parameters (e.g., medium transmission and background light), yet they often rely on simplified assumptions that may not hold in real underwater settings. In contrast, data-driven methods, especially those based on deep learning, have shown considerable promise in recovering fine details and correcting color distortions given sufficient training data.

Recent advances have further enriched the UIE landscape by incorporating diverse strategies to tackle the unique challenges of underwater imaging. For instance, one work introduced Physics Inspired System for High Resolution (PhISH-Net) [6], a framework that integrates a physics-based Underwater Image Formation Model (UIFM) [7,8,9,10] with a deep retinex-based enhancement module. This approach first removes backscatter by estimating attenuation coefficients from depth information, then employs a novel Wideband Attenuation prior to guide a lightweight, real-time enhancement network. Similarly, the Hybrid Fusion Method (HFM) [11] addresses white balance distortion and color shifts by combining a color and white balance correction module (leveraging the gray world principle and nonlinear color mapping) with a visibility recovery module based on type-II fuzzy sets and a contrast enhancement module via curve transformation. Another innovative contribution, UIE with Diffusion Prior (UIEDP) [12], formulates underwater image enhancement as a posterior distribution sampling process—merging a pre-trained diffusion model that captures natural image priors with existing enhancement algorithms—to mitigate the adverse effects of low-quality synthesized training data and produce images with a more natural appearance. Moreover, the Principal Component Fusion of Foreground and Background (PCFB) [13] method proposes a two-stage strategy: first, it applies color balance-guided correction and separate contrast enhancement and dehazing to generate foreground and background sub-images; then, it fuses these components using principal component analysis to achieve superior visibility and color fidelity.

Drawing inspiration from recent advances in underwater image enhancement and the complementary strengths of local and global feature modeling, we propose a unified framework that integrates fine-detail extraction with robust global context recovery. In our approach, the local feature extraction capability, reminiscent of UNet-like architectures, is enhanced by a global modeling module based on MaxViT [14]. MaxViT uses a multi-axis attention mechanism that combines blocked local attention with dilated global attention, enabling efficient global–local interactions at arbitrary resolutions with linear computational complexity. This design allows the network to capture long-range dependencies even in early, high-resolution stages, which is critical for addressing pervasive challenges in underwater imaging such as color degradation, contrast loss, and spatial heterogeneity. Our framework is tailored to underwater environments, where the interplay between local textures and global illumination patterns determines overall image quality. By harmonizing local detail preservation with global correction, our method enhances color fidelity and contrast and also recovers fine structural details that are essential for downstream tasks in underwater robotics, marine exploration, and scientific imaging. Comprehensive evaluations on large-scale benchmarks, including the Underwater Image Enhancement Benchmark (UIEB) [15] and the Enhanced Underwater Vision Project (EUVP) [16], show that our approach consistently outperforms traditional methods, with notable improvements in color fidelity, contrast, and detail preservation.

In parallel to underwater enhancement research, recent advances in related image processing domains provide valuable architectural and methodological insights. For instance, a CNN–Transformer hybrid model was recently proposed for micro-expression recognition [17], demonstrating how combining local feature extraction and global dependency modeling can yield high recognition accuracy (up to 98%) even on incomplete inputs. Similarly, in the field of intelligent transportation, a deep differentiation segmentation network [18] was introduced to detect foreign objects in urban rail transit with a 95.8% detection rate, showcasing the effectiveness of foreground–background separation and attention mechanisms in complex visual environments. These works reinforce the utility of hybrid structures and attention-guided processing in handling real-world visual degradation, motivating their adaptation to the unique challenges of underwater imaging.

The main contributions of our work can be summarized as follows:

- Unified Local–Global Feature Modeling. We propose a novel framework that integrates the local detail-capturing prowess of UNet-inspired structures with the global context modeling capabilities of a MaxViT-based module, resulting in a coherent and holistic approach to underwater image enhancement.

- Efficient Global Information Integration. By adopting MaxViT’s multi-axis attention—which combines blocked local and dilated global attention—we enable effective global information capture even at early network stages, ensuring that long-range dependencies are efficiently modeled with linear computational complexity.

- Robust Enhancement Performance. Extensive evaluations on benchmark datasets such as UIEB and EUVP demonstrate that our framework substantially improves color correction, contrast enhancement, and fine detail preservation, outperforming conventional methods and providing a reliable foundation for subsequent high-level vision tasks.

2. Related Work

Underwater image enhancement has been explored through various deep learning paradigms, including convolutional neural networks, generative adversarial networks, transformers, and meta/self-supervised learning techniques. In this section, we categorize the representative methods based on their architectural foundations and training strategies, summarize the core contributions and limitations of each category, and position our proposed method within this landscape.

2.1. CNN-Based and GAN-Based Methods

Early work in this domain largely relies on convolutional architectures. WaterNet [15] is a CNN-based end-to-end gated fusion network: it derives white-balanced, histogram-equalized, and gamma-corrected versions of the input and learns confidence maps that fuse them into the enhanced output. Ucolor [19] combines a multi-color-space encoder with medium transmission-guided decoding, so that features are emphasized in the regions where degradation is most severe. Deep-SESR [16] takes a super-resolution perspective, using deep convolutional features to improve image clarity and edge sharpness.

Generative adversarial networks have also been employed to enhance realism and perceptual quality. UGAN [20] formulates enhancement as a GAN-based task, learning to synthesize visually pleasing outputs with natural tones. FUnIE-GAN [21] improves upon this by combining an efficient encoder–decoder structure with perceptual loss supervision, focusing on both speed and fidelity.

These CNN- and GAN-based methods offer effective pixel and perceptual correction capabilities, yet often lack explicit mechanisms to model long-range dependencies and complex contextual relationships, which are crucial in handling large-scale illumination shifts and structural distortions in underwater imagery.

2.2. Transformer-Based and Meta/Self-Supervised Methods

To overcome the locality limitations of convolutions, Transformer-based architectures have been adopted. URSCT-SESR [22] incorporates self-calibrated attention to model both global and local patterns. U-Shape Transformer [23] merges UNet-like CNN encoders with Transformer blocks to improve contextual reasoning. CLUIE [24] further integrates semantic information to enhance perceptual quality and realism beyond traditional low-level processing.

Beyond architectural innovations, several methods focus on improving model robustness and generalization. MetaUE [25] introduces meta-learning to dynamically adapt parameters across diverse underwater domains. Semi-UIR [26] leverages a mean-teacher framework and contrastive learning to operate under limited or no ground-truth labels, guided by no-reference quality metrics.

CSC-SCL [27] introduces a content-style control network with style contrastive learning, aiming to improve generalization across diverse underwater domains with varying color casts and turbidity levels. By disentangling content and style features, the method adaptively modulates enhancement based on distortion characteristics, offering robustness to domain shifts. This perspective highlights the potential of incorporating domain-invariant representations for building more adaptive enhancement systems.

In contrast to existing works, our proposed method builds upon the MaxViT backbone, combining Mobile Inverted Bottleneck Convolutions and Multi-Axis Self-Attention in a hierarchical UNet-like structure. This hybrid design allows our model to retain local inductive priors while capturing global dependencies across spatial scales. Rather than relying on a single attention pattern, we use block-wise and grid-wise attention to target degradation patterns that act at different spatial extents, such as wavelength-specific absorption and spatially variant turbidity. The design bridges convolution-based efficiency and Transformer-based contextual modeling, and in our experiments it yields strong performance across datasets with varying underwater conditions.

3. Background

3.1. Transformer

Transformer-based architectures have reshaped both natural language processing (NLP) and computer vision (CV). Introduced by Vaswani et al. [28], the Transformer replaces the sequential processing of recurrent neural networks (RNNs) with self-attention, enabling parallel computation and effective modeling of long-range dependencies. This concept led to the vision transformer (ViT) [29], which partitions images into fixed-size patches, treats them as tokens, and applies self-attention to capture global relationships. Although ViT achieves strong performance, the cost of its global self-attention grows quadratically with the number of tokens, and hence with the number of pixels, which limits efficiency on high-resolution inputs.

To improve efficiency, Liu et al. [30] introduced the Swin Transformer. It features (1) a shifted window-based self-attention mechanism that restricts computations locally while periodically shifting windows to capture broader context, and (2) a hierarchical structure with patch merging to progressively learn multi-scale features. These innovations enhanced Transformer performance in dense prediction tasks like object detection and semantic segmentation. However, the Swin Transformer, while more efficient, still relies on local windows and retains some of the computational demands of attention-based architectures.

To overcome these challenges, MaxViT combines local window-based and global grid-based attention to enable efficient feature interaction across image regions. This hybrid approach improves spatial representation and supports diverse vision tasks without the computational overhead of full self-attention.

3.2. Loss Function and Evaluation Metrics

To comprehensively guide the network in producing high-fidelity and perceptually convincing underwater image enhancements, we design a composite loss function that integrates pixel-wise reconstruction accuracy, perceptual similarity in feature space, and high-level realism enforced by adversarial training. This multi-term objective function is formulated as follows:

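$$\mathcal{L}_{\text{total}} = \lambda_{\text{pix}}\,\mathcal{L}_{\text{pix}}\big(f_\theta(I), J\big) + \lambda_{\text{per}}\,\mathcal{L}_{\text{per}}\big(f_\theta(I), J\big) + \lambda_{\text{adv}}\,\mathcal{L}_{\text{adv}}\big(D, f_\theta(I)\big),$$

where $f_\theta$ denotes the image enhancement network parameterized by $\theta$, $I$ is the raw underwater input, $J$ represents the ground-truth clean image, and $D$ is the PatchGAN-based discriminator. The loss is composed of three terms: pixel loss, perceptual loss, and adversarial loss, each modulated by a corresponding scalar weight $\lambda_{\text{pix}}$, $\lambda_{\text{per}}$, and $\lambda_{\text{adv}}$, which control the relative contribution of each component.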

The pixel loss, implemented as the Mean Absolute Error (L1 loss), is defined by:

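$$\mathcal{L}_{\text{pix}} = \frac{1}{N}\sum_{i=1}^{N}\big|J_i - \hat{J}_i\big|,$$

where $J_i$ and $\hat{J}_i$ denote the ground-truth and predicted pixel intensities, respectively, and $N$ is the number of pixel values. L1 loss is chosen for its empirical robustness to outliers and its ability to provide stable gradient flows during training, making it particularly suitable for regression-based restoration tasks.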
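As a minimal NumPy sketch of this pixel loss (assuming float images in [0, 1] with identical shapes; the function name `l1_pixel_loss` is illustrative and not taken from the authors' code):

```python
import numpy as np

def l1_pixel_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean absolute error over all pixels and channels (the L1 pixel loss)."""
    if pred.shape != target.shape:
        raise ValueError("pred and target must have the same shape")
    return float(np.mean(np.abs(target - pred)))

# Usage: a prediction that is uniformly 0.05 too dark has an L1 loss of about 0.05.
target = np.full((4, 4, 3), 0.5)
pred = target - 0.05
print(l1_pixel_loss(pred, target))  # approximately 0.05
```

Because the gradient of $|x|$ has constant magnitude, a few large residuals do not dominate the update the way they do under an L2 loss, which is the robustness-to-outliers property mentioned above.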

The perceptual loss is constructed by computing the L1 distances between deep feature representations extracted from a pre-trained VGG-19 network. Specifically, we utilize the activations from intermediate layers such as conv1_2, conv2_2, and conv3_3, which progressively capture low- to mid-level semantic structures of the image. This loss term encourages the enhanced image to not only match the ground truth in pixel space but also align in perceptually relevant feature domains, thereby improving the visual fidelity as judged by human observers.
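The structure of this perceptual term can be sketched without a deep-learning framework by passing the feature extractors in as callables. In the NumPy sketch below, the average-pooling extractors are hypothetical stand-ins for the VGG-19 conv1_2, conv2_2, and conv3_3 activations (loading VGG-19 itself would require PyTorch or a similar library), and equal weighting of the layers is an assumption.

```python
import numpy as np
from typing import Callable, Sequence

FeatureFn = Callable[[np.ndarray], np.ndarray]

def perceptual_loss(pred, target, extractors: Sequence[FeatureFn]) -> float:
    """Sum over layers of the mean L1 distance between feature maps."""
    return float(sum(np.mean(np.abs(phi(pred) - phi(target))) for phi in extractors))

def avg_pool(x: np.ndarray, s: int) -> np.ndarray:
    """Toy extractor: s x s average pooling of an (H, W, C) image (H, W divisible by s)."""
    h, w, c = x.shape
    return x.reshape(h // s, s, w // s, s, c).mean(axis=(1, 3))

# Hypothetical stand-ins for three VGG-19 layers at increasing receptive fields.
extractors = [lambda x: avg_pool(x, 1), lambda x: avg_pool(x, 2), lambda x: avg_pool(x, 4)]

rng = np.random.default_rng(0)
target = rng.random((8, 8, 3))
print(perceptual_loss(target, target, extractors))        # 0.0
print(perceptual_loss(target + 0.1, target, extractors))  # approximately 0.3
```

With a real VGG-19, the extractors would be truncated copies of the network, and the images would be normalized with the ImageNet statistics the network was trained on.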

The adversarial loss is introduced to further improve the realism of the enhanced outputs. It is implemented using a PatchGAN discriminator composed of three convolutional blocks, where each block contains a convolutional layer, followed by instance normalization and a LeakyReLU activation function. A final sigmoid layer produces patch-level real/fake scores. This formulation encourages the enhanced images to be indistinguishable from clean natural images in the distributional sense, thereby mitigating artifacts and enhancing texture realism.
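The text does not spell out the adversarial objective. Assuming the standard binary cross-entropy form that pairs naturally with a sigmoid PatchGAN output, both losses are averages over the map of patch scores, as in this NumPy sketch. The function names, the 0.5 factor on the discriminator loss (a pix2pix convention), and the non-saturating generator term are assumptions, not details confirmed by the paper.

```python
import numpy as np

EPS = 1e-7  # avoids log(0) for saturated sigmoid outputs

def bce(scores: np.ndarray, label: float) -> float:
    """Binary cross-entropy averaged over a map of sigmoid patch scores."""
    s = np.clip(scores, EPS, 1.0 - EPS)
    return float(-np.mean(label * np.log(s) + (1.0 - label) * np.log(1.0 - s)))

def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """D should score clean patches as 1 and enhanced patches as 0."""
    return 0.5 * (bce(d_real, 1.0) + bce(d_fake, 0.0))

def generator_adv_loss(d_fake: np.ndarray) -> float:
    """Non-saturating generator loss: push D's scores on enhanced patches toward 1."""
    return bce(d_fake, 1.0)

# Each entry scores one receptive-field patch rather than the whole image.
d_real = np.full((30, 30), 0.9)
d_fake = np.full((30, 30), 0.2)
print(round(discriminator_loss(d_real, d_fake), 4))  # 0.1643
print(round(generator_adv_loss(d_fake), 4))          # 1.6094
```

Scoring patches instead of whole images is what lets the discriminator penalize local texture artifacts.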

For quantitative evaluation, we primarily adopt two widely accepted full-reference metrics to assess the quality of the enhanced images.

The Peak Signal-to-Noise Ratio (PSNR) is used to evaluate reconstruction fidelity at the pixel level. It is computed as:

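$$\mathrm{PSNR} = 10\log_{10}\!\left(\frac{\mathrm{MAX}_I^2}{\mathrm{MSE}}\right),$$

where $\mathrm{MAX}_I$ is the maximum pixel value in the image (255 for 8-bit images, or 1 for images normalized to [0, 1]), and MSE denotes the mean squared error between the enhanced and ground-truth images.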
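A direct NumPy transcription of the PSNR formula, offered as an illustrative sketch (`psnr` is a hypothetical helper, and `max_val` must match the image range):

```python
import numpy as np

def psnr(pred: np.ndarray, target: np.ndarray, max_val: float = 1.0) -> float:
    """PSNR in dB; use max_val=255 for 8-bit images and 1.0 for images in [0, 1]."""
    mse = np.mean((pred.astype(np.float64) - target.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))

target = np.full((8, 8, 3), 0.5)
print(psnr(target + 0.1, target))  # approximately 20.0 (MSE = 0.01)
```

An error of 0.1 on every pixel of a [0, 1] image therefore corresponds to 20 dB, and each halving of the root-mean-square error adds about 6.02 dB.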

In addition, we employ the Structural Similarity Index Measure (SSIM), which is designed to model perceived changes in structural information, contrast, and luminance between the reference and the enhanced image. The SSIM is defined as:

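$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)},$$

where $\mu_x$, $\mu_y$, $\sigma_x$, $\sigma_y$, and $\sigma_{xy}$ represent the local means, standard deviations, and cross-covariance of the predicted image $x$ and the ground-truth image $y$, respectively. Constants $C_1$ and $C_2$ are used to stabilize the division.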
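For intuition, the formula can be evaluated once over the whole image. The sketch below is such a global, single-window simplification for grayscale arrays in [0, 1] (the name `global_ssim` is hypothetical). Standard implementations instead average the same expression over local Gaussian-weighted windows (commonly 11 × 11 with σ = 1.5) and set $C_1 = (0.01L)^2$ and $C_2 = (0.03L)^2$ for dynamic range $L$, so the numbers will differ from reported SSIM values.

```python
import numpy as np

def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float = 1.0) -> float:
    """SSIM formula evaluated over the whole image as a single window."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)

rng = np.random.default_rng(0)
img = rng.random((32, 32))
print(round(global_ssim(img, img), 6))  # 1.0 (identical images)
print(global_ssim(img, 1.0 - img) < 0)  # True: inverted structure gives negative covariance
```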

In summary, although the L1 loss forms the backbone of our training objective owing to its stability and convergence properties, on its own it does not capture perceptual detail. Our final training strategy therefore combines the pixel-level loss with perceptual and adversarial supervision, forming a unified composite objective that balances numerical accuracy and visual quality.

3.3. UNet

Originally introduced by Ronneberger et al. [31] for biomedical image segmentation, the UNet architecture features a symmetric encoder–decoder structure connected by skip pathways. The encoder progressively reduces spatial dimensions while capturing increasingly abstract features. In contrast, the decoder incrementally restores spatial resolution by leveraging learned representations and detailed features from earlier layers via skip connections. This architecture achieves high pixel-level accuracy and has proven especially effective in image-to-image translation tasks. Its straightforward yet powerful design has led to widespread adoption in domains such as underwater image enhancement.

4. Proposed Method for Underwater Image Enhancement

Our goal is to learn an enhancement operator $f_\theta$, parameterized by $\theta$, such that for a raw underwater input $I$ the enhanced image $\hat{J} = f_\theta(I)$ maximizes visual quality with respect to a reference distribution of in-air photographs.

We build on top of MaxViT [14], an architecture that unifies Mobile Inverted Bottleneck Convolutions (MBConv) and Multi-Axis Self-Attention (Max-SA) in a hierarchical backbone, and embed its blocks in an encoder–decoder framework. This hybrid design is particularly well-suited to underwater image enhancement because it can jointly model localized degradation artifacts, such as backscatter, noise, and blur, while also capturing long-range contextual dependencies associated with global light attenuation, chromatic shifts, and structural distortions.

Unlike generic vision transformers or existing UNet–Transformer hybrids (e.g., URSCT-SESR or U-Shape Transformer), our architecture adopts multi-axis attention to address degradation at different spatial scales separately. Specifically, combining block-wise local attention with grid-wise global attention enables effective modeling of the following:

- Wavelength-dependent light absorption and scattering, which vary smoothly across regions and require large receptive fields to correct;

- Color casts and contrast distortion, which are often scene-dependent and influenced by global illumination context;

- Texture and edge degradation, which are local and require high-resolution feature preservation.

Moreover, MaxViT’s design offers better scalability and inductive bias than pure Transformer-based designs. The MBConv layers embedded within each MaxViT block retain strong locality priors and reduce training overhead, while the parallel Max-SA branches enrich the representation with both short- and long-range dependencies. This dual-path modeling is especially advantageous in underwater settings where feature sparsity and semantic ambiguity are prevalent.

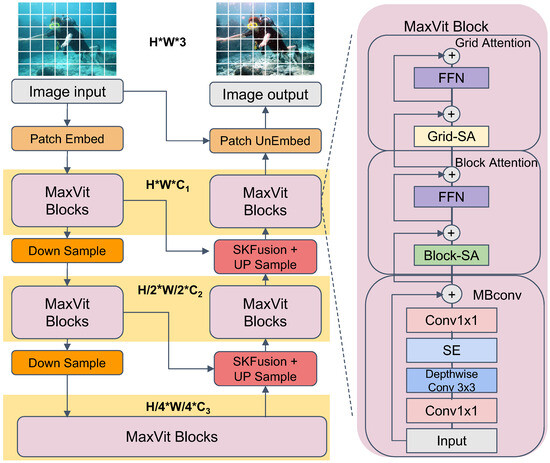

Figure 1 illustrates our model pipeline, which consists of five MaxViT-based encoder and decoder stages connected by skip pathways. The remainder of this section details each architectural component and its contribution to mitigating underwater image degradation.

Figure 1.

Illustration of the proposed model architecture for underwater image enhancement. The UNet structure consists of five MaxViT stages, with downsampling and upsampling operations, as well as skip connections to retain spatial information.

4.1. Problem Formulation

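Let $I$ denote a raw underwater image drawn from the unknown distribution $p_{\text{raw}}$, and $J$ its clean counterpart from the clean set $\mathcal{J}$. To guide the enhancement process, we adopt a composite loss function that jointly optimizes low-level pixel fidelity, perceptual quality, and adversarial realism. The enhancement network $f_\theta$ is learned by minimizing the following:

$$\min_{\theta}\;\mathbb{E}_{(I,J)}\Big[\lambda_{\text{pix}}\,\mathcal{L}_{\text{pix}}\big(f_\theta(I), J\big) + \lambda_{\text{per}}\sum_{l}\big\|\phi_l\big(f_\theta(I)\big) - \phi_l(J)\big\|_1 + \lambda_{\text{adv}}\,\mathcal{L}_{\text{adv}}\big(D, f_\theta(I)\big)\Big],$$

where $\phi_l$ denotes the $l$-th feature map extracted from a pre-trained VGG network, and $D$ is a PatchGAN discriminator. The scalar weights $\lambda_{\text{pix}}$, $\lambda_{\text{per}}$, and $\lambda_{\text{adv}}$ control the relative contribution of each loss term. This unified objective ensures that the enhanced output preserves structural fidelity, aligns with human perceptual expectations, and maintains realistic textures, consistent with the design described in Section 3.2.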

4.2. MaxViT Blocks: Coupling Convolution and Attention

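Given a feature map $\mathbf{X} \in \mathbb{R}^{H \times W \times C}$, each MaxViT block computes

$$\mathbf{Y} = \mathbf{X} + \mathrm{MaxSA}\big(\mathrm{MBConv}(\mathbf{X})\big),$$

where the MBConv module first captures local spatial features and the subsequent Max-SA module models global dependencies. The residual connection ensures stable gradient flow and improves training convergence.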

4.3. Mobile Inverted Bottleneck Convolution (MBConv)

MBConv follows the structure of pointwise expansion, depthwise spatial convolution, and projection. Formally:

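$$\mathrm{MBConv}(\mathbf{X}) = W_p\Big(\rho\big(W_d \ast \rho(W_e\,\mathbf{X})\big)\Big),$$

where $W_e$ is the $1\times1$ expansion layer that maps $C$ channels to $tC$ channels (expansion ratio $t$), $W_d \ast$ performs $k \times k$ depthwise convolution, and $W_p$ projects the result back to the original dimension $C$. The nonlinearity $\rho$ is typically ReLU6, following MobileNetV2 conventions.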

To enhance channel-wise feature discrimination, a Squeeze-and-Excitation (SE) module is incorporated:

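$$\mathrm{SE}(\mathbf{X}) = \mathbf{X} \odot \sigma\Big(W_2\,\delta\big(W_1\,\mathrm{GAP}(\mathbf{X})\big)\Big),$$

where $W_1 \in \mathbb{R}^{(C/r)\times C}$ and $W_2 \in \mathbb{R}^{C\times(C/r)}$ with reduction ratio $r$, $\delta$ denotes the ReLU function, $\sigma$ is the sigmoid function, and GAP represents global average pooling. This design adaptively reweights channel responses at a parameter cost of $\mathcal{O}(C^2/r)$.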
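The MBConv and SE equations can be traced with a small NumPy forward pass. This is a sketch under stated assumptions: random weights, stride 1, zero "same" padding, ReLU6 as the nonlinearity, and SE applied to the expanded $tC$ channels after the depthwise convolution, as in EfficientNet-style MBConv. All function and parameter names are hypothetical.

```python
import numpy as np

def relu6(x):
    return np.clip(x, 0.0, 6.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def pointwise(x, w):
    """1x1 convolution: (H, W, Cin) @ (Cin, Cout) -> (H, W, Cout)."""
    return x @ w

def depthwise(x, k):
    """k x k depthwise convolution with zero 'same' padding; k has shape (ks, ks, C)."""
    ks = k.shape[0]
    pad = ks // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    h, w, _ = x.shape
    out = np.zeros_like(x)
    for i in range(ks):
        for j in range(ks):
            out += xp[i:i + h, j:j + w, :] * k[i, j, :]
    return out

def squeeze_excite(x, w1, w2):
    """Channel reweighting: GAP -> FC (C -> C/r) -> ReLU -> FC (C/r -> C) -> sigmoid."""
    z = x.mean(axis=(0, 1))                    # global average pooling: (C,)
    s = sigmoid(np.maximum(z @ w1, 0.0) @ w2)  # per-channel gates in (0, 1)
    return x * s

def mbconv(x, p):
    """Expand (1x1) -> depthwise (k x k) -> SE -> linear projection (1x1)."""
    h = relu6(pointwise(x, p["w_e"]))
    h = relu6(depthwise(h, p["w_d"]))
    h = squeeze_excite(h, p["se_w1"], p["se_w2"])
    return pointwise(h, p["w_p"])

rng = np.random.default_rng(0)
C, t, k, r = 16, 4, 3, 4  # channels, expansion ratio, kernel size, SE reduction (illustrative)
params = {
    "w_e": rng.normal(0, 0.1, (C, t * C)),
    "w_d": rng.normal(0, 0.1, (k, k, t * C)),
    "se_w1": rng.normal(0, 0.1, (t * C, t * C // r)),
    "se_w2": rng.normal(0, 0.1, (t * C // r, t * C)),
    "w_p": rng.normal(0, 0.1, (t * C, C)),
}
x = rng.random((32, 32, C))
y = mbconv(x, params)
print(y.shape)  # (32, 32, 16)
se_params = params["se_w1"].size + params["se_w2"].size
print(se_params)  # 2 * (t*C)**2 / r = 2048
```

The projection is left linear, following MobileNetV2, so the block can pass low-dimensional information through without ReLU clipping it.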

The overall computational complexity of MBConv with kernel size k is:

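$$\mathcal{O}\big(HW\,(2tC^2 + tCk^2)\big),$$

where $t$ is the expansion ratio: the two $tC^2$ terms come from the $1\times1$ expansion and projection, and the $tCk^2$ term comes from the depthwise convolution. This cost is linear in spatial resolution $HW$, making MBConv well-suited for high-resolution feature maps.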
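Plugging numbers into this expression makes the scaling concrete. The sketch counts multiply-accumulates (MACs) per layer and ignores the SE branch; the 64 × 64 × 64 feature map, $t = 4$, and $k = 3$ are illustrative assumptions, not the paper's configuration.

```python
def mbconv_macs(h: int, w: int, c: int, t: int, k: int) -> int:
    """Multiply-accumulates of one MBConv (expansion, depthwise, projection; SE ignored)."""
    expand = h * w * c * (t * c)         # 1x1 conv, C -> tC
    depthwise = h * w * (t * c) * k * k  # k x k depthwise conv on tC channels
    project = h * w * (t * c) * c        # 1x1 conv, tC -> C
    return expand + depthwise + project

base = mbconv_macs(64, 64, 64, t=4, k=3)
print(base)                                        # 143654912
print(mbconv_macs(128, 128, 64, t=4, k=3) / base)  # 4.0: doubling H and W quadruples HW
print(mbconv_macs(64, 64, 128, t=4, k=3) / base)   # about 3.87: the C^2 terms dominate
```

Doubling the resolution scales the cost by exactly 4 (linear in $HW$), whereas doubling the width costs almost 4× because of the two $tC^2$ pointwise terms.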

4.4. Multi-Axis Self-Attention (Max-SA)

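To address the quadratic complexity of classical self-attention, Max-SA decomposes attention into two sparse patterns: (i) block attention, which applies local attention within non-overlapping $P \times P$ windows; and (ii) grid attention, which captures long-range dependencies by attending among tokens placed on a uniform, dilated $G \times G$ grid that spans the entire feature map. Each attention branch computes queries, keys, and values as

$$\mathbf{Q} = \mathbf{X}W_Q, \qquad \mathbf{K} = \mathbf{X}W_K, \qquad \mathbf{V} = \mathbf{X}W_V,$$

and applies scaled dot-product attention:

$$\mathrm{Attention}(\mathbf{Q}, \mathbf{K}, \mathbf{V}) = \mathrm{softmax}\!\left(\frac{\mathbf{Q}\mathbf{K}^{\top}}{\sqrt{d}}\right)\mathbf{V},$$

where $d$ is the key dimension. The final output is the concatenation over block and grid attentions. Because each token attends to only $P^2$ (block) or $G^2$ (grid) other tokens, the attention complexity is reduced to $\mathcal{O}\big(HW\,(P^2 + G^2)\,C\big)$, which is linear in spatial size for fixed $P$ and $G$.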
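The difference between the two partitions is easiest to see in code. This NumPy sketch (single head, random weights, no relative position bias or MLP; all helper names hypothetical) follows the MaxViT partitioning scheme: block attention groups each contiguous $P \times P$ window, while grid attention groups tokens that are $H/G$ rows and $W/G$ columns apart, so every group spans the full feature map.

```python
import numpy as np

def block_partition(x, p):
    """(H, W, C) -> (num_windows, p*p, C): contiguous p x p windows."""
    h, w, c = x.shape
    x = x.reshape(h // p, p, w // p, p, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, p * p, c)

def block_unpartition(win, h, w, p):
    c = win.shape[-1]
    x = win.reshape(h // p, w // p, p, p, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(h, w, c)

def grid_partition(x, g):
    """(H, W, C) -> (num_groups, g*g, C): a g x g grid of tokens strided by (H/g, W/g)."""
    h, w, c = x.shape
    x = x.reshape(g, h // g, g, w // g, c).transpose(1, 3, 0, 2, 4)
    return x.reshape(-1, g * g, c)

def grid_unpartition(win, h, w, g):
    c = win.shape[-1]
    x = win.reshape(h // g, w // g, g, g, c).transpose(2, 0, 3, 1, 4)
    return x.reshape(h, w, c)

def attention(tokens, wq, wk, wv):
    """Scaled dot-product attention applied independently to each group."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v

rng = np.random.default_rng(0)
H = W = 16
C, P, G = 8, 4, 4
x = rng.random((H, W, C))
wq, wk, wv = (rng.normal(0, 0.1, (C, C)) for _ in range(3))

blocks = block_partition(x, P)
grids = grid_partition(x, G)
print(blocks.shape, grids.shape)  # (16, 16, 8) (16, 16, 8)

# The first grid group holds tokens spaced H/G = 4 rows and W/G = 4 columns apart.
assert np.allclose(grids[0, 1], x[0, 4])
assert np.allclose(block_unpartition(blocks, H, W, P), x)
assert np.allclose(grid_unpartition(grids, H, W, G), x)

y_block = block_unpartition(attention(blocks, wq, wk, wv), H, W, P)
y_grid = grid_unpartition(attention(grids, wq, wk, wv), H, W, G)
print(y_block.shape, y_grid.shape)  # (16, 16, 8) (16, 16, 8)

pairs_full = (H * W) ** 2     # every token attends to every token
pairs_block = H * W * P * P   # every token attends within its P x P window
print(pairs_full // pairs_block)  # 16, i.e. HW / P^2
```

The sketch only computes the two attention outputs; how they are merged into the block output follows the description above.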

4.5. Hierarchical Encoder–Decoder

Our network follows a five-stage encoder–decoder design, where each encoder and decoder stage incorporates MaxViT modules. Downsampling is performed via strided depthwise convolutions, while upsampling uses interpolation. Skip connections with Selective Kernel Fusion (SKF) [32] preserve multi-scale context. A final residual connection refines the output:

$$\hat{J} = I + \mathcal{F}(I),$$

where $I$ is the raw underwater input and $\mathcal{F}$ denotes the five-stage encoder–decoder.
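Max-SA requires each stage's feature map to be divisible by both the window size $P$ and the grid size $G$. Assuming, hypothetically, that the resolution halves between consecutive encoder stages and that $P = G = 8$, this small check confirms that the 256 × 256 inputs used in the experiments stay valid at every stage, while an input such as 200 × 200 would not.

```python
def stage_resolutions(size: int, num_stages: int = 5) -> list[int]:
    """Spatial size at each encoder stage, halving after every stage (assumption)."""
    return [size // 2 ** s for s in range(num_stages)]

def partition_ok(size: int, p: int = 8, g: int = 8) -> bool:
    """p x p block windows and a g x g grid both need size divisible by p and by g."""
    return size % p == 0 and size % g == 0

sizes = stage_resolutions(256)
print(sizes)                                                  # [256, 128, 64, 32, 16]
print(all(partition_ok(s) for s in sizes))                    # True
print(all(partition_ok(s) for s in stage_resolutions(200)))   # False: 100 is not divisible by 8
```

Inputs that fail this check would have to be padded or resized before entering the network.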

4.6. Positional Encoding and Scalability

Transformer branches adopt Conditional Positional Encoding (CPE) [33], implemented as depthwise convolutions:

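$$\mathbf{X} \leftarrow \mathbf{X} + \mathrm{DWConv}_{k \times k}(\mathbf{X}),$$

which enables implicit position encoding at $\mathcal{O}(HWCk^2)$ cost for kernel size $k$.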
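A residual depthwise convolution is cheap to sketch. In this NumPy version (kernel size, weights, and function names are illustrative), zero padding is what lets the convolution reveal position: a constant input produces different values at the center, edge, and corner, which subsequent attention layers can exploit. This border effect is one commonly cited way such convolutions supply absolute position information.

```python
import numpy as np

def depthwise_conv(x, k):
    """k x k depthwise convolution with zero 'same' padding; x: (H, W, C), k: (ks, ks, C)."""
    ks = k.shape[0]
    pad = ks // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    h, w, _ = x.shape
    out = np.zeros_like(x)
    for i in range(ks):
        for j in range(ks):
            out += xp[i:i + h, j:j + w, :] * k[i, j, :]
    return out

def conditional_position_encoding(x, k):
    """CPE as a residual depthwise convolution: X <- X + DWConv(X)."""
    return x + depthwise_conv(x, k)

x = np.ones((5, 5, 2))
k = np.ones((3, 3, 2))
y = conditional_position_encoding(x, k)
print(y[2, 2, 0], y[0, 2, 0], y[0, 0, 0])  # 10.0 7.0 5.0 (center, edge, corner)
```

Because the encoding is generated from the input by a convolution, it adapts to any input resolution, unlike a fixed table of learned position embeddings.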

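Our design is scalable: at every stage $s$, FLOPs grow linearly with the spatial resolution $H_s W_s$ and with the attention window area, while widening the channels $C_s$ increases FLOPs and parameters roughly quadratically through the $1\times1$ projections. Channel width and window size therefore act as explicit knobs for trading accuracy against efficiency.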

4.7. SKFusion Module

The SKFusion module, illustrated in Figure 1, is designed to adaptively fuse multi-scale features extracted from different stages of the encoder. Inspired by the Selective Kernel mechanism, SKFusion dynamically adjusts the receptive field of the feature fusion process by learning soft attention weights over multiple kernel paths. This enables the network to selectively emphasize fine or coarse features depending on the context, thereby improving the integration of local details and global structures in the decoding stage. Such adaptability is particularly beneficial in underwater scenes where degradation patterns (e.g., blur, scattering, and uneven illumination) vary across spatial scales.
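The selective fusion step can be sketched as follows. This NumPy version fuses two same-shaped feature maps (for example, an upsampled decoder feature and its encoder skip feature) by following the Selective Kernel recipe: sum the branches, pool to a channel descriptor, then take a per-channel softmax across branches. The reduction width `d`, the random weights, the omission of normalization layers, and all names are hypothetical rather than the authors' configuration.

```python
import numpy as np

def sk_fusion(feats, w_reduce, w_branch):
    """Fuse M feature maps (each H x W x C) with per-channel softmax weights over branches.

    w_reduce: (C, d) squeeze projection; w_branch: (M, d, C) per-branch expansion.
    """
    stacked = np.stack(feats)                     # (M, H, W, C)
    u = stacked.sum(axis=0)                       # element-wise sum of the branches
    s = u.mean(axis=(0, 1))                       # global average pooling -> (C,)
    z = np.maximum(s @ w_reduce, 0.0)             # compact descriptor -> (d,)
    logits = np.einsum("d,mdc->mc", z, w_branch)  # (M, C)
    logits -= logits.max(axis=0, keepdims=True)
    attn = np.exp(logits)
    attn /= attn.sum(axis=0, keepdims=True)       # softmax across branches, per channel
    fused = (stacked * attn[:, None, None, :]).sum(axis=0)
    return fused, attn

rng = np.random.default_rng(0)
C, d, M = 16, 8, 2
decoder_feat = rng.random((32, 32, C))
skip_feat = rng.random((32, 32, C))
fused, attn = sk_fusion([decoder_feat, skip_feat],
                        rng.normal(0, 0.1, (C, d)), rng.normal(0, 0.1, (M, d, C)))
print(fused.shape)                         # (32, 32, 16)
print(np.allclose(attn.sum(axis=0), 1.0))  # True: branch weights sum to 1 per channel
```

Because the weights form a convex combination per channel, each fused value lies between the corresponding branch values, so the network can lean on the skip path for fine texture or on the decoder path for coarse context.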

5. Experimental Results

In this section, we present the experimental setup and results for the proposed method, followed by an ablation study. We evaluate our method on three benchmark datasets, UIEB, EUVP, and UFO-120, using PSNR and SSIM as the primary evaluation metrics. We compare our method with several state-of-the-art underwater image enhancement techniques: MLLE [34], UWCNN [35], WaterNet [15], Ucolor [19], MetaUE [25], Semi-UIR [26], Deep-SESR [16], UGAN [20], FUnIE-GAN [21], URSCT-SESR [22], U-Shape Transformer [23], and CLUIE [24].

5.1. Analysis of Experimental Results

5.1.1. Quantitative Comparison on UIEB and EUVP

We evaluate our method on the UIEB [15] and EUVP [16] datasets, two widely used benchmarks tailored for underwater image enhancement. The UIEB dataset consists of 950 real-world underwater images, including 890 images with corresponding high-quality references curated by human annotators, covering diverse scenes with varying levels of turbidity and lighting conditions. The EUVP dataset contains both paired and unpaired underwater images captured under a wide range of visual degradations, providing a rich and diverse testbed for both supervised and unsupervised enhancement tasks. Together, these datasets present real-world challenges such as low contrast, color distortion, structural loss, and non-uniform illumination, enabling comprehensive evaluation of enhancement models.

To quantitatively evaluate performance, we adopt a suite of widely recognized metrics. PSNR and PSNRL measure pixel-level similarity between enhanced and reference images. SSIM captures structural similarity in terms of luminance, contrast, and structure. PCQI assesses perceptual contrast quality, while UCIQE and UIQM are designed specifically for underwater images to reflect colorfulness, sharpness, and contrast. UICM and UIConM quantify colorfulness and local contrast, respectively, while CCF combines colorfulness, contrast, and fog density into a single no-reference score. Together, these metrics provide a well-rounded evaluation from both general and underwater-specific perspectives.

All images in the UIEB and EUVP datasets were resized to 256 × 256 before training and evaluation. During training, we employed random horizontal flipping and rotation for data augmentation. All pixel values were normalized to the [0, 1] range. We used a batch size of 8 and trained all models for 50 epochs using the Adam optimizer with an initial learning rate of $10^{-4}$ and momentum parameters $\beta_1$ and $\beta_2$. The learning rate was halved every 10 epochs. These training settings were applied consistently across all baseline and proposed models to ensure a fair comparison.
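The step decay described above corresponds to the following schedule. This plain-Python sketch assumes 0-indexed epochs, with the halving taking effect at epochs 10, 20, 30, and 40; in PyTorch, the same behavior is what `torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.5)` provides when stepped once per epoch.

```python
def step_lr(epoch: int, base_lr: float = 1e-4, step: int = 10, gamma: float = 0.5) -> float:
    """Learning rate for a 0-indexed epoch under step decay (halved every `step` epochs)."""
    return base_lr * gamma ** (epoch // step)

schedule = [step_lr(e) for e in range(50)]
print(sorted(set(schedule), reverse=True))
# [0.0001, 5e-05, 2.5e-05, 1.25e-05, 6.25e-06]
```

Over 50 epochs the learning rate therefore takes five distinct values, ending at 1/16 of its initial value.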

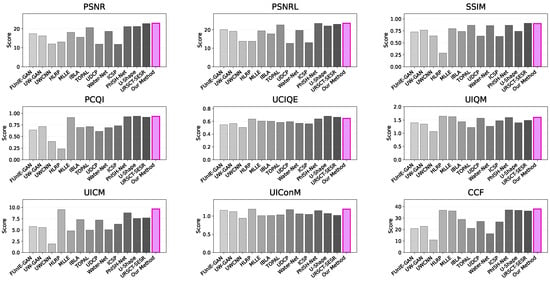

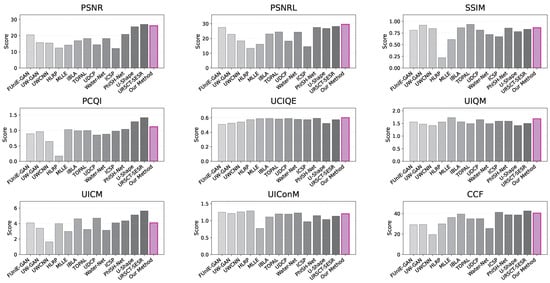

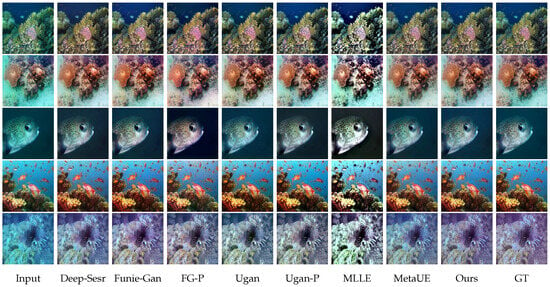

Table 1 provides a detailed quantitative comparison of different underwater image enhancement methods on the UIEB and EUVP datasets across nine evaluation metrics. Figure 2 and Figure 3 chart these metrics for the UIEB and EUVP datasets, respectively, and qualitative enhancement results are shown in Section 5.4. Together, these comparisons indicate that our method achieves strong visual fidelity and structural preservation across a variety of underwater scenarios.

Table 1.

Comparison of different underwater image enhancement methods on UIEB and EUVP datasets. The best values for each metric are highlighted in bold.

Figure 2.

Comprehensive benchmarking of underwater image enhancement methods on the UIEB Dataset using perceptual and domain-specific quality metrics.

Figure 3.

Comparative quantitative assessment of state-of-the-art enhancement techniques on the EUVP dataset across general and underwater-oriented evaluation criteria.

On the UIEB dataset, our method achieves a PSNR of 22.91 and an SSIM of 0.902, the highest PSNR and SSIM among all evaluated methods. It surpasses conventional and CNN-based approaches such as MLLE, UWCNN, and WaterNet, as well as the stronger URSCT-SESR and PhISH-Net, indicating superior pixel-wise fidelity and structural consistency. On the EUVP dataset, our method attains a top-tier PSNR of 26.12 and an SSIM of 0.860, reflecting strong robustness under diverse illumination and turbidity conditions. Compared with lightweight GAN-based models such as FUnIE-GAN and UW-GAN, our approach yields better visual quality and structural accuracy on both datasets.

Overall, the performance advantages of our model are reflected in its ability to balance pixel-level fidelity, perceptual quality, and structural consistency. As evidenced by the metrics in Table 1 and their breakdown in Figure 2 and Figure 3, our method achieves top-tier results across both general and underwater-specific metrics. Its strong UIQM and UCIQE scores further support the model's effectiveness in enhancing color, contrast, and perceptual clarity in underwater environments.

5.1.2. UFO-120 Dataset

On the UFO-120 dataset, the self-attention-based design retains its advantage. As shown in Table 2, our method with self-attention achieves a PSNR of 27.13 and an SSIM of 0.83. These results further support the effectiveness of the self-attention-based MaxViT module in capturing fine details and improving enhancement quality on a third benchmark.

Table 2.

Quantitative comparison on the UFO-120 dataset.

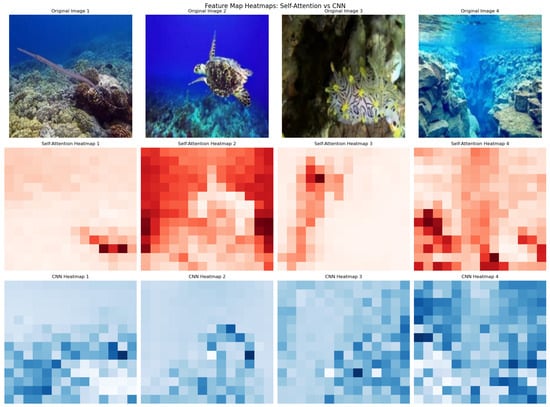

5.2. Ablation Study

In the ablation study, replacing the self-attention mechanism with CNNs results in lower performance across all datasets. As shown in Table 3, on the UIEB dataset, the CNN-based method achieves a PSNR of 22.11, while the self-attention-based method improves this to 22.91. Similar trends are observed on the EUVP and UFO-120 datasets, where the self-attention-based method provides better enhancement, particularly in terms of PSNR and SSIM.

Table 3.

Ablation study: CNN vs. self-attention.

5.3. Computational Efficiency

To support the claim of a lightweight design, we compare our method with representative models in terms of FLOPs and inference time on the UIEB dataset. As shown in Table 4, our model achieves the best PSNR and competitive SSIM while maintaining low computational cost (10.82 GFLOPs) and the fastest inference time (0.0015 s per image). These results indicate that our approach is efficient enough for real-time use and is a promising candidate for edge-level deployment.

Table 4.

Comparison of our method with representative algorithms on the UIEB dataset in terms of PSNR, SSIM, FLOPs, and inference time per image.

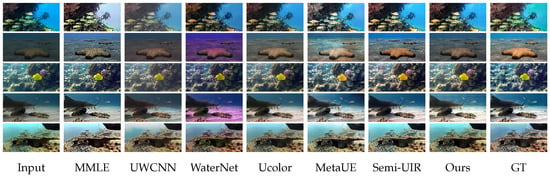

5.4. Visualization of Results

Figure 4 and Figure 5 illustrate the visual comparison of our method with other state-of-the-art methods. On the UIEB dataset, our method restores colors and enhances contrast more effectively than methods such as MLLE and UWCNN, resulting in clearer and more visually appealing images. On the EUVP dataset, our approach substantially improves image visibility and fine details, especially under low-light and high-turbidity conditions. These visual results further support the effectiveness of our method for underwater image enhancement.

Figure 4.

Results of different algorithms on the UIEB dataset. Columns represent different methods (Input, MMLE, UWCNN, WaterNet, Ucolor, MetaUE, Semi-UIR, ours, GT).

Figure 5.

Results of different algorithms on the EUVP dataset. Each column corresponds to a method, and each row to a different input image.

To further analyze the effectiveness of our model, we visualize the feature attention maps extracted from different image regions. Figure 6 presents an example of the attention heatmaps generated by our model, highlighting the regions of interest that contribute most significantly to the enhancement process. Unlike conventional CNN-based approaches that rely on local receptive fields, attention mechanisms capture long-range dependencies, enabling more comprehensive feature aggregation. This property is particularly advantageous in underwater scenarios, where global contrast and contextual understanding are critical for accurate enhancement. As shown in Figure 6, our attention-based model effectively identifies and enhances key structures in the image, leading to improved restoration of fine details and more natural color correction.
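How the heatmaps in Figure 6 are extracted is not specified here. One common approach, sketched under that caveat, is to take the scaled dot-product attention weights softmax(q·k/√d) of a single query token over all key tokens and reshape them to the spatial grid. For simplicity the toy example reuses the token features as both queries and keys (real attention layers apply learned query and key projections), and the 2 × 3 grid with hand-picked 2-dimensional features is an illustrative assumption.

```python
import math


def attention_heatmap(queries, keys, query_index, height, width):
    """Softmax(q . k / sqrt(d)) weights of one query over all tokens, as a height x width grid."""
    if len(keys) != height * width:
        raise ValueError("number of keys must equal height * width")
    q = queries[query_index]
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Tokens are stored in row-major order, so slice them back into rows.
    return [weights[r * width:(r + 1) * width] for r in range(height)]


# Tokens on a 2 x 3 grid; positions (0, 0) and (1, 2) share the same feature.
features = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0],
            [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
heatmap = attention_heatmap(features, features, query_index=0, height=2, width=3)
for row in heatmap:
    print([round(w, 3) for w in row])
# [0.252, 0.124, 0.124]
# [0.124, 0.124, 0.252]
```

The opposite corner (1, 2) receives the same weight as the query's own position because the weight depends on feature similarity rather than spatial distance, which is the long-range behavior that local convolutional receptive fields lack.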

Figure 6.

Visualization of attention heatmaps highlighting the most influential regions in the image enhancement process. The heatmaps demonstrate how our model effectively captures global dependencies, leading to improved feature representation and enhancement quality.

6. Conclusions

This paper presents an underwater image enhancement method based on a hybrid UNet–MaxViT architecture, designed to effectively address typical underwater degradation factors such as color distortion, low contrast, and detail loss. By leveraging the local feature extraction capability of UNet and the global context modeling of MaxViT’s multi-axis attention, our model achieves a balanced and efficient representation suitable for complex underwater scenarios.
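To make "multi-axis attention" concrete, the sketch below reproduces the two token partitions that MaxViT [14] alternates between: block attention over non-overlapping P × P windows (local mixing) and grid attention over a fixed G × G grid whose members are strided across the whole feature map (dilated, global mixing). It only computes which positions attend to each other and how many query-key pairs that implies; the function names and the 8 × 8 map with P = G = 4 are illustrative assumptions, not the configuration used in our model.

```python
from itertools import product


def block_partition(height, width, p):
    """Block attention: tokens grouped into non-overlapping p x p windows (local mixing)."""
    if height % p or width % p:
        raise ValueError("feature map must be divisible by the window size")
    groups = {}
    for i, j in product(range(height), range(width)):
        groups.setdefault((i // p, j // p), []).append((i, j))
    return list(groups.values())


def grid_partition(height, width, g):
    """Grid attention: each group holds g*g tokens spaced height//g rows and
    width//g columns apart, so every group spans the whole map (global mixing)."""
    if height % g or width % g:
        raise ValueError("feature map must be divisible by the grid size")
    step_h, step_w = height // g, width // g
    groups = {}
    for i, j in product(range(height), range(width)):
        groups.setdefault((i % step_h, j % step_w), []).append((i, j))
    return list(groups.values())


def attention_pairs(groups):
    """Number of query-key pairs scored when attention runs inside each group."""
    return sum(len(members) ** 2 for members in groups)


h = w = 8
blocks = block_partition(h, w, p=4)
grid = grid_partition(h, w, g=4)
print(blocks[0][:5])  # [(0, 0), (0, 1), (0, 2), (0, 3), (1, 0)]
print(grid[0][:5])    # [(0, 0), (0, 2), (0, 4), (0, 6), (2, 0)]
print(attention_pairs(blocks) + attention_pairs(grid), (h * w) ** 2)  # 2048 4096
```

The saving grows with resolution: by the same counting, a 256 × 256 map with P = G = 8 needs 256² × (64 + 64) ≈ 8.4 × 10^6 pairs for one block-plus-grid step, versus (256²)² ≈ 4.3 × 10^9 for full global attention, while the grid step still lets every position interact with tokens across the whole image.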

Extensive experiments on three representative benchmarks, UIEB, EUVP, and UFO-120, demonstrate that our method outperforms or remains competitive with existing state-of-the-art approaches on both pixel-level and perceptual quality metrics. The results validate the effectiveness of our design in enhancing visual clarity, preserving fine structure, and maintaining color fidelity across diverse underwater conditions. Ablation studies further confirm the advantage of incorporating self-attention over pure CNN-based designs. Visualizations, including attention heatmaps, show that our model can accurately localize and enhance salient regions in degraded underwater images.

Thanks to its lightweight structure and strong generalization ability, the proposed model is not only competitive in benchmark performance but also practical for real-time deployment on edge devices such as underwater robots or drones. Future work will explore further efficiency optimization and broader generalization to other challenging visual environments.

Author Contributions

Conceptualization, J.J. and J.M.; Methodology, J.J.; Software, J.J.; Validation, J.J. and J.M.; Formal analysis, J.J.; Investigation, J.J.; Resources, J.J.; Data curation, J.J.; Writing—original draft preparation, J.J.; Writing—review and editing, J.M.; Visualization, J.J.; Supervision, J.M.; Project administration, J.M.; Funding acquisition, J.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

All data supporting the reported results are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
|---|---|
| UIE | Underwater Image Enhancement |
| UIEB | Underwater Image Enhancement Benchmark |
| EUVP | Enhanced Underwater Vision Project |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity Index Measure |
| PCQI | Patch-based Contrast Quality Index |
| UCIQE | Underwater Color Image Quality Evaluation |
| UIQM | Underwater Image Quality Measure |
| MBConv | Mobile Inverted Bottleneck Convolution |
| Max-SA | Multi-Axis Self-Attention |

References

- Yang, M.; Hu, J.; Li, C.; Rohde, G.; Du, Y.; Hu, K. An In-Depth Survey of Underwater Image Enhancement and Restoration. IEEE Access 2019, 7, 123638–123657.

- Sahu, P.; Gupta, N.; Sharma, N. A Survey on Underwater Image Enhancement Techniques. Int. J. Comput. Appl. 2014, 87, 19–23.

- Xu, T.; Xu, S.; Chen, X.; Chen, F.; Li, H. Multi-core token mixer: A novel approach for underwater image enhancement. Mach. Vis. Appl. 2025, 36, 1–16.

- Liu, R.; Fan, X.; Zhu, M.; Hou, M.; Luo, Z. Real-World Underwater Enhancement: Challenges, Benchmarks, and Solutions Under Natural Light. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4861–4875.

- Wang, H.; Zhang, W.; Ren, P. Self-organized underwater image enhancement. ISPRS J. Photogramm. Remote Sens. 2024, 215, 1–14.

- Chandrasekar, A.; Sreenivas, M.; Biswas, S. Phish-net: Physics inspired system for high resolution underwater image enhancement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January.

- Desai, C.; Benur, S.; Patil, U.; Mudenagudi, U. Rsuigm: Realistic synthetic underwater image generation with image formation model. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 21, 1–22.

- Takao, S. Underwater image sharpening and color correction via dataset based on revised underwater image formation model. Vis. Comput. 2025, 41, 975–990.

- Akkaynak, D.; Treibitz, T. A revised underwater image formation model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6723–6732.

- Zhang, M.; Peng, J. Underwater image restoration based on a new underwater image formation model. IEEE Access 2018, 6, 58634–58644.

- An, S.; Xu, L.; Deng, Z.; Zhang, H. HFM: A hybrid fusion method for underwater image enhancement. Eng. Appl. Artif. Intell. 2024, 127, 107219.

- Du, D.; Li, E.; Si, L.; Zhai, W.; Xu, F.; Niu, J.; Sun, F. UIEDP: Boosting underwater image enhancement with diffusion prior. Expert Syst. Appl. 2025, 259, 125271.

- Zhang, W.; Liu, Q.; Feng, Y.; Cai, L.; Zhuang, P. Underwater image enhancement via principal component fusion of foreground and background. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 10930–10943.

- Tu, Z.; Talebi, H.; Zhang, H.; Yang, F.; Milanfar, P.; Bovik, A.; Li, Y. Maxvit: Multi-axis vision transformer. In Computer Vision–ECCV 2022, Proceedings of the 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Proceedings, Part XXIV; Springer: Berlin/Heidelberg, Germany, 2022; pp. 459–479.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

- Islam, M.J.; Luo, P.; Sattar, J. Simultaneous Enhancement and Super-Resolution of Underwater Imagery for Improved Visual Perception. In Proceedings of the Robotics: Science and Systems (RSS), Corvallis, OR, USA, 12–16 July 2020.

- Tang, Y.; Yi, J.; Tan, F. Facial micro-expression recognition method based on CNN and transformer mixed model. Int. J. Biom. 2024, 16, 463–477.

- Tan, F.; Zhai, M.; Zhai, C. Foreign object detection in urban rail transit based on deep differentiation segmentation neural network. Heliyon 2024, 10, e37072.

- Li, C.; Anwar, S.; Hou, J.; Cong, R.; Guo, C.; Ren, W. Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Trans. Image Process. 2021, 30, 4985–5000.

- Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing underwater imagery using generative adversarial networks. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 7159–7165.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

- Ren, T.; Xu, H.; Jiang, G.; Yu, M.; Zhang, X.; Wang, B.; Luo, T. Reinforced swin-convs transformer for simultaneous underwater sensing scene image enhancement and super-resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- Peng, L.; Zhu, C.; Bian, L. U-shape transformer for underwater image enhancement. IEEE Trans. Image Process. 2023, 32, 3066–3079.

- Li, K.; Wu, L.; Qi, Q.; Liu, W.; Gao, X.; Zhou, L.; Song, D. Beyond single reference for training: Underwater image enhancement via comparative learning. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 2561–2576.

- Zhang, Z.; Yan, H.; Tang, K.; Duan, Y. MetaUE: Model-based Meta-learning for Underwater Image Enhancement. arXiv 2023, arXiv:2303.06543.

- Huang, S.; Wang, K.; Liu, H.; Chen, J.; Li, Y. Contrastive semi-supervised learning for underwater image restoration via reliable bank. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 18145–18155.

- Wang, Z.; Tao, H.; Zhou, H.; Deng, Y.; Zhou, P. A content-style control network with style contrastive learning for underwater image enhancement. Multimed. Syst. 2025, 31, 60.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 510–519.

- Chu, X.; Tian, Z.; Zhang, B.; Wang, X.; Wei, X.; Xia, H.; Shen, C. Conditional positional encodings for vision transformers. arXiv 2021, arXiv:2102.10882.

- Zhang, W.; Zhuang, P.; Sun, H.H.; Li, G.; Kwong, S.; Li, C. Underwater image enhancement via minimal color loss and locally adaptive contrast enhancement. IEEE Trans. Image Process. 2022, 31, 3997–4010.

- Li, C.; Anwar, S.; Porikli, F. Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 2020, 98, 107038.

- Wang, N.; Zhou, Y.; Han, F.; Zhu, H.; Yao, J. UWGAN: Underwater GAN for real-world underwater color restoration and dehazing. arXiv 2019, arXiv:1912.10269.

- Zhuang, P.; Wu, J.; Porikli, F.; Li, C. Underwater image enhancement with hyper-laplacian reflectance priors. IEEE Trans. Image Process. 2022, 31, 5442–5455.

- Peng, Y.T.; Cosman, P.C. Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594.

- Jiang, Z.; Li, Z.; Yang, S.; Fan, X.; Liu, R. Target oriented perceptual adversarial fusion network for underwater image enhancement. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6584–6598.

- Drews, P.L.; Nascimento, E.R.; Botelho, S.S.; Campos, M.F.M. Underwater depth estimation and image restoration based on single images. IEEE Comput. Graph. Appl. 2016, 36, 24–35.

- Hou, G.; Li, N.; Zhuang, P.; Li, K.; Sun, H.; Li, C. Non-uniform illumination underwater image restoration via illumination channel sparsity prior. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 799–814.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).