Abstract

This article studies the structure and properties of real-ordered Hilbert spaces, highlighting the roles of the XOR and XNOR logical operators in conjunction with the Yosida and Cayley approximation operators. These elements are used to formulate the Yosida–Cayley Variational Inclusion Problem (YCVIP) and its associated Yosida–Cayley Resolvent Equation Problem (YCREP). To address these problems, we develop several iterative algorithms and examine their convergence behavior. We prove both the existence of solutions and the strong convergence of the iterative sequences generated by the proposed algorithms. The theoretical results are supported by a numerical example, which illustrates the practical applicability and efficiency of the suggested approaches.

Keywords:

algorithms; XOR and XNOR operators; resolvent equation; variational inclusion; numerical result; real-ordered Hilbert space

MSC:

47H05; 49H10; 47J25

1. Introduction

Variational inequality problems originate from the study of functionals constrained to convex sets and were formally introduced by Stampacchia in 1966 [1]. In his foundational work, Stampacchia developed the concept of variational inequalities to address problems involving inequalities and used the Lax–Milgram theorem [2] to investigate the regularity of solutions to partial differential equations. Since then, variational inequalities have found extensive applications in various fields, such as artificial intelligence and data science, optimal control, mechanics, finance, transportation equilibrium, and engineering sciences. In 1976, Rockafellar [3] presented a significant extension of this concept, the variational inclusion problem, a broader framework that encompasses variational inequalities. In recent decades, variational inequalities and their generalizations have been actively studied in multiple mathematical settings by numerous researchers [4,5,6,7,8,9,10,11]. Hassouni and Moudafi [12] examined a class of combined variational inequalities involving single-valued mappings, later termed variational inclusions. A typical variational inclusion seeks a zero of a maximal monotone operator, that is, a point whose image under the operator contains zero. These problems serve as a unifying framework that generalizes concepts from variational inequalities, equilibrium and optimization problems, complementarity systems, mechanical models, and Nash-type equilibrium formulations.

In parallel with the development of variational theories, logical operations such as exclusive OR (XOR) and exclusive NOR (XNOR) play an essential role in digital computation. The XOR operation returns true if the two Boolean inputs differ, while XNOR yields true when the inputs are equal. These binary operations are fundamental in fault-tolerant systems, parity checking, cryptographic algorithms, and pseudorandom number generation. Both XOR and XNOR are associative and commutative and are instrumental in applications involving linear separability and logic design [13,14,15,16,17,18].
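The Boolean behavior described above can be checked directly. The following is a minimal Python sketch, not part of the original study; the helper names `xor`, `xnor`, `is_commutative`, `is_associative`, and `parity` are chosen here purely for illustration.

```python
from itertools import product

BOOLS = [False, True]


def xor(a: bool, b: bool) -> bool:
    """Exclusive OR: true exactly when the two inputs differ."""
    return a != b


def xnor(a: bool, b: bool) -> bool:
    """Exclusive NOR: true exactly when the two inputs are equal."""
    return a == b


def is_commutative(op) -> bool:
    """Check op(a, b) == op(b, a) over all Boolean pairs."""
    return all(op(a, b) == op(b, a) for a, b in product(BOOLS, repeat=2))


def is_associative(op) -> bool:
    """Check op(op(a, b), c) == op(a, op(b, c)) over all Boolean triples."""
    return all(
        op(op(a, b), c) == op(a, op(b, c))
        for a, b, c in product(BOOLS, repeat=3)
    )


def parity(bits) -> bool:
    """XOR-fold of a bit sequence: true when an odd number of bits are set."""
    result = False
    for bit in bits:
        result = xor(result, bool(bit))
    return result
```

The `parity` helper illustrates the parity-checking use mentioned in the text: folding XOR over a word flags an odd number of set bits.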

To solve non-linear operator equations and variational problems in Hilbert spaces, various approximation operators have been employed, including the resolvent, Cayley, and Yosida operators. These operators are particularly effective in approximating derivatives of convex functionals and are widely used in the study of diffusion, wave propagation, and heat transfer problems. The development of iterative algorithms based on generalized resolvent operators has been an active area of research [19], with particular attention given to improving the convergence rates of such algorithms. An important acceleration technique involves the use of inertial methods. Initially proposed by Polyak in 1964 [20] for the heavy-ball method, the inertial approach generates each new iterate using a linear combination of the two preceding iterates. Chang et al. [21] applied an inertial forward–backward splitting technique to address variational inclusion problems in Hilbert spaces. More recently, Gebrie and Bedane [22] introduced a computational algorithm based on inertial extrapolation to solve generalized split common fixed-point problems, demonstrating its wide applicability. These contributions have inspired further advances in the field, including the development of new algorithms with enhanced convergence properties. Rajpoot et al. [23] recently studied a Yosida variational inclusion problem and its corresponding Yosida resolvent equation. They employed an inertial extrapolation scheme and provided supporting numerical examples to validate their theoretical results.
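To make the inertial idea concrete, the sketch below applies a heavy-ball step to a one-dimensional quadratic. It is only an illustration under stated assumptions: the objective, the step size `step`, and the momentum weight `momentum` are hypothetical choices, not values taken from the works cited above.

```python
from typing import Callable


def heavy_ball(
    grad: Callable[[float], float],
    x0: float,
    step: float,
    momentum: float,
    iters: int,
) -> float:
    """Heavy-ball iteration: a gradient step plus a multiple of the last displacement."""
    x_prev, x = x0, x0
    for _ in range(iters):
        # The new iterate combines the two preceding iterates x and x_prev.
        x_next = x - step * grad(x) + momentum * (x - x_prev)
        x_prev, x = x, x_next
    return x


# Assumed toy objective f(x) = 0.5 * (x - 3)^2, whose gradient is x - 3.
minimizer = heavy_ball(lambda x: x - 3.0, x0=10.0, step=0.5, momentum=0.3, iters=100)
```

Setting `momentum=0.0` recovers plain gradient descent, which makes it easy to compare the two schemes on the same problem.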

Motivated by these recent advances, the present study revisits the Yosida–Cayley resolvent equation and its associated variational inclusion problem. We propose a novel iterative approach based on inertial extrapolation and analyze its convergence properties within Hilbert spaces. To support our theoretical findings, we provide numerical experiments carried out in MATLAB R2024b. The results are illustrated through convergence graphs and estimation tables, demonstrating the efficiency of the proposed algorithms.

2. Preliminaries

Let be a real-ordered Hilbert space equipped with the norm and the inner product . Denote by the collection of compact non-empty subsets of , and let be a closed convex cone. Furthermore, let denote the non-empty set consisting of subsets of .

Definition 1

([18]). Let and let be a cone such that The cone is said to be normal if and only if there exists a constant such that

where and .

Definition 2

([18]). A cone κ induces a partial order ≤ on defined by

Two elements and are said to be comparable, denoted by if either or .
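For orientation, Definitions 1 and 2 are usually stated in the ordered-space literature in the following form. Only the cone symbol κ and the order symbol ≤ are taken from the text; the symbols $\mathcal{H}$ for the space, $x, y$ for its elements, $\lambda_N$ for the normal constant, and $\propto$ for comparability are notational assumptions made here.

```latex
% Definition 1 (normal cone), in assumed notation:
\|x\| \le \lambda_N \,\|y\| \quad \text{whenever } 0 \le x \le y,
\qquad x, y \in \mathcal{H}, \ \lambda_N > 0.

% Definition 2 (partial order induced by the cone, and comparability):
x \le y \iff y - x \in \kappa,
\qquad
x \propto y \iff \bigl( x \le y \ \text{ or } \ y \le x \bigr).
```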

Definition 3

([16]). Let ⊕ and ⊙ denote the XOR and XNOR operations, respectively. Consider ; let ∨ and ∧ denote the least upper bound (lub) and greatest lower bound (glb) operations, respectively. The following properties are satisfied:

- (i)

- ;

- (ii)

- ;

- (iii)

- ;

- (iv)

- ;

- (v)

- (vi)

- If , then ;

- (vii)

- , for any scalar ;

- (viii)

- If , then ;

- (ix)

- If , then if and only if .
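A small numerical sketch may help fix ideas. It assumes the lattice definitions commonly used in the ordered-space literature, namely x ⊕ y = (x − y) ∨ (y − x) and x ⊙ y = (x − y) ∧ (y − x), and specializes them to the real line ordered by the cone [0, ∞). The function names `oxor` and `oxnor` are hypothetical, and the snippet checks only a few standard consequences of these assumed definitions.

```python
def lub(a: float, b: float) -> float:
    """Least upper bound on the real line with the usual order."""
    return max(a, b)


def glb(a: float, b: float) -> float:
    """Greatest lower bound on the real line with the usual order."""
    return min(a, b)


def oxor(x: float, y: float) -> float:
    """Ordered XOR under the assumed definition (x - y) v (y - x)."""
    return lub(x - y, y - x)


def oxnor(x: float, y: float) -> float:
    """Ordered XNOR under the assumed definition (x - y) ^ (y - x)."""
    return glb(x - y, y - x)


x, y, scalar = 2.0, 5.0, -2.0
checks = {
    "self_xor_is_zero": oxor(x, x) == 0.0,
    "xor_symmetric": oxor(x, y) == oxor(y, x),
    "xnor_is_negative_xor": oxnor(x, y) == -oxor(x, y),
    "xor_nonnegative": oxor(x, y) >= 0.0,
    "scaling": oxor(scalar * x, scalar * y) == abs(scalar) * oxor(x, y),
}
```

On the real line these operations reduce to |x − y| and −|x − y|, which is why the XOR of two comparable elements vanishes exactly when they coincide.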

Proposition 1

([18]). Let be the normal cone with normal constant . Then for all , the following conditions hold:

- (i)

- ;

- (ii)

- ;

- (iii)

- ;

- (iv)

- If then .

Definition 4.

A mapping is said to be Lipschitz continuous if there exists a constant such that

Definition 5.

A mapping is said to be a-ordered non-extended mapping if there exists a constant such that

Definition 6.

Let be a single-valued mapping, and let be a multi-valued mapping. Then

- (i)

- The mapping Δ is said to be Lipschitz continuous in the first argument if there exists a constant such that, for any

- (ii)

- The mapping Δ is said to be Lipschitz continuous in the second argument if there exists a constant such that, for any

Definition 7.

Let be a single-valued mapping, and let be a multi-valued mapping. Then

- (i)

- The mapping p is called a comparison mapping if for all such that , and and , it holds that

- (ii)

- The comparison mapping Δ is said to be an α-non-ordinary difference mapping, if there exists and such that

- (iii)

- The comparison mapping Δ is called a ρ-ordered rectangular mapping if there exists and such that

- (iv)

- The mapping Δ is a -weak-ordered different mapping if there exists a constant and elements and , such that

Definition 8.

A mapping is said to be -Lipschitz continuous if there exists a constant such that

where denotes the Hausdorff metric on .
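For finite point sets, the Hausdorff metric used in Definition 8 can be computed directly from its definition as the larger of the two directed distances. The sketch below is illustrative only; it assumes Euclidean points given as tuples, and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, ...]


def directed_distance(A: Sequence[Point], B: Sequence[Point]) -> float:
    """sup over a in A of the distance from a to the set B."""
    return max(min(math.dist(a, b) for b in B) for a in A)


def hausdorff_distance(A: Sequence[Point], B: Sequence[Point]) -> float:
    """Hausdorff distance between two non-empty finite sets of points."""
    if not A or not B:
        raise ValueError("both sets must be non-empty")
    return max(directed_distance(A, B), directed_distance(B, A))


A = [(0.0, 0.0), (1.0, 0.0)]
B = [(0.0, 0.0), (3.0, 0.0)]
distance = hausdorff_distance(A, B)
```

In this example the directed distance from A to B is 1 while the one from B to A is 2, so taking the maximum of both directions is what makes the quantity symmetric.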

Definition 9

([19]). Let be a multi-valued mapping. The resolvent operator associated with , denoted by , is defined by for all and as

where τ denotes the identity operator on .

Definition 10.

The Yosida approximation operator is defined as

where τ denotes the identity operator on .

Definition 11.

The Cayley approximation operator associated with the multi-valued mapping , denoted by , is defined as

where τ is the identity operator.
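As an illustration of Definitions 9 to 11, the sketch below evaluates the three operators for a linear map on the real line. It assumes the classical forms: resolvent R = (I + λM)^(-1), Yosida approximation (I − R)/λ, and Cayley approximation 2R − I. The operators in the definitions above may involve additional mappings, and the names `a`, `lam`, `linear_resolvent`, and `make_operators` are hypothetical.

```python
from typing import Callable, Tuple

Operator = Callable[[float], float]


def linear_resolvent(a: float, lam: float) -> Operator:
    """Resolvent (I + lam*M)^(-1) of the assumed linear map M(x) = a*x."""
    if 1.0 + lam * a == 0.0:
        raise ValueError("I + lam*M is not invertible for these values")
    return lambda x: x / (1.0 + lam * a)


def make_operators(resolvent: Operator, lam: float) -> Tuple[Operator, Operator]:
    """Build Yosida and Cayley approximations from a given resolvent."""

    def yosida(x: float) -> float:
        # Classical Yosida approximation: (I - R) / lam.
        return (x - resolvent(x)) / lam

    def cayley(x: float) -> float:
        # Classical Cayley approximation: 2R - I.
        return 2.0 * resolvent(x) - x

    return yosida, cayley


resolvent = linear_resolvent(a=2.0, lam=0.5)
yosida, cayley = make_operators(resolvent, lam=0.5)
```

With a = 2 and λ = 0.5, the resolvent halves its argument, so the Yosida value at x equals M applied to the resolvent of x, which is the usual relation between the two operators.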

We need the following lemmas to prove the main results of this paper.

Lemma 1

([15]). Let be a γ-ordered rectangular multi-valued mapping with respect to the resolvent operator . Then, for all , the following inequality holds:

where , provided that .

Thus, the resolvent operator is Lipschitz-type continuous.

Lemma 2

([14]). Let be a -weak-ordered rectangular different multi-valued mapping associated with the resolvent operator . Let be the corresponding Yosida approximation operator. Then, for all , the following inequality holds:

where , provided that .

Thus, the Yosida approximation operator is Lipschitz-type continuous.

Lemma 3

([17]). Let be a -weak-ordered rectangular different multi-valued mapping with respect to the resolvent operator , and let denote the associated Cayley approximation operator. Then, for all , the following inequality holds:

where , provided that .

Thus, the Cayley approximation operator is Lipschitz-type continuous.

3. The Yosida–Cayley Variational Inclusion Problem (YCVIP)

Let , , , and be mappings. Let and denote the Yosida and Cayley approximation operators associated with , respectively. Find , , , and such that

where is a constant and denotes the identity mapping on .

Special Case 1.

If , , and , then (1) reduces to the problem of finding such that

Special Case 2.

If , , and , then (1) further simplifies to the classical inclusion problem of finding such that

This is the fundamental variational inclusion problem studied by Rockafellar [3].
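For this classical inclusion, the proximal point algorithm of [3] repeatedly applies the resolvent of the operator. A minimal sketch follows, assuming the operator is the subdifferential of the absolute value on the real line, whose resolvent is the soft-thresholding map; the parameter `lam` and the starting point are illustrative choices.

```python
from typing import List


def soft_threshold(x: float, lam: float) -> float:
    """Resolvent (I + lam*M)^(-1) when M is the subdifferential of |x|."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def proximal_point(x0: float, lam: float, iters: int) -> List[float]:
    """Iterate x_{k+1} = resolvent(x_k) and return every iterate."""
    history = [x0]
    x = x0
    for _ in range(iters):
        x = soft_threshold(x, lam)
        history.append(x)
    return history


history = proximal_point(x0=5.0, lam=1.0, iters=7)
```

The iterates decrease by `lam` per step until they reach 0, the unique point whose subdifferential contains zero, and then stay there.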

4. Fixed-Point Formulation and Iterative Algorithms

Lemma 4.

The Yosida–Cayley Variational Inclusion Problem (1) admits a solution , with corresponding elements , , and , if and only if the following condition is satisfied:

where , and τ denotes the identity operator.

Proof.

Suppose , and satisfy Equation (2). Then we have,

□

To solve the Yosida–Cayley Variational Inclusion Problem (YCVIP), we now develop the following method based on Lemma 4.

Algorithm 1.

Let , and , , and be initial elements. Define the sequences , , , and iteratively as follows:

The above expression can equivalently be written in the symmetrized form:

We now propose the iterative strategy based on (4).

Algorithm 2.

Let , , , and be initial elements. Compute the sequences , , , and iteratively according to the following step-by-step procedure:

where and .

The predictor–corrector approach [24] is employed to describe the following inertial extrapolation scheme.

Algorithm 3.

Let , with initial values , , and . Compute the sequences , , , and recursively as follows:

where for all , and is the extrapolation coefficient.

Let , , and be such that:

where denotes the Hausdorff metric on , and is a constant.
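The general shape of an inertial predictor–corrector iteration can be sketched as follows. This is a generic illustration and not a transcription of Algorithm 3: the fixed-point map `T`, the relaxation weight `alpha`, and the extrapolation rule `omega` are all hypothetical stand-ins for the operators and parameters of the algorithm.

```python
from typing import Callable, Tuple


def inertial_iteration(
    T: Callable[[float], float],
    x0: float,
    x1: float,
    alpha: float,
    omega: Callable[[int], float],
    tol: float = 1e-10,
    max_iter: int = 1000,
) -> Tuple[float, int]:
    """Inertial relaxed fixed-point iteration; returns (approximation, steps used)."""
    x_prev, x = x0, x1
    for n in range(1, max_iter + 1):
        # Predictor: extrapolate along the last displacement.
        y = x + omega(n) * (x - x_prev)
        # Corrector: relaxed fixed-point step evaluated at the extrapolated point.
        x_next = (1.0 - alpha) * y + alpha * T(y)
        if abs(x_next - x) < tol:
            return x_next, n
        x_prev, x = x, x_next
    return x, max_iter


# Assumed toy contraction with fixed point 2, and a summable extrapolation rule.
limit, steps = inertial_iteration(
    T=lambda x: 0.5 * x + 1.0,
    x0=10.0,
    x1=9.0,
    alpha=0.5,
    omega=lambda n: 1.0 / (n + 1) ** 2,
)
```

Passing `omega=lambda n: 0.0` switches the extrapolation off, which gives a baseline against which the effect of the inertial term can be measured.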

5. Main Result

In this section, we establish the existence and strong convergence of solutions to the Yosida–Cayley Variational Inclusion Problem (YCVIP), utilizing the XOR and XNOR operations.

Theorem 1.

Suppose that is a real-ordered Hilbert space and κ is a cone that induces a partial order. Let be a single-valued mapping, and let be multi-valued mappings. Assume that is a multi-valued mapping such that is a γ-ordered and -weak-ordered rectangular different mapping with respect to its first argument. Additionally, let be a single-valued mapping that is Lipschitz continuous with constant and -non-extended-ordered. Assume that for all , we have and . Suppose that the following conditions hold:

Further, suppose that the following inequality is satisfied:

where and , with for all .

Let for all , where is an extrapolation term satisfying

Then, the sequences , , , and generated by Algorithm 3 strongly converge to the solution , , , and of the YCVIP.

Proof.

We have

Using (iii) of Proposition 1, we get the following.

Substituting Equations (11)–(13) into Equation (15), we obtain the following.

From the definition of -Lipschitz continuity and part (i) of Proposition 1, it follows that

Furthermore, by the Lipschitz continuity of the YCVIP operator and (i) of Proposition 1, we obtain the following.

Now, combining (16)–(18), we get the following.

Since p is -order non-extended mapping, we have

Combining (19) and (20) yields the following result.

Since , , , and for all , and using part (iv) of Proposition 1 along with the Lipschitz continuity and the strong convergence of p, Equation (21) becomes

It follows that

By combining (22) and (23), we obtain the following.

where and .

Since , where is the extrapolating term for all , we have the following.

We infer from condition (14) that

where and . Consequently, from Equation (24), it follows that the sequence is a Cauchy sequence in . Since is complete, there exists such that as . Next, using Equations (8)–(10), we obtain

Thus, , and are also Cauchy sequences in Therefore, there exist , and such that , and as Next, we show that and as

Furthermore,

Since is closed, it follows that . Similarly, we obtain and .

Finally, applying the continuity of the mappings p, Σ, , , and , we conclude that

Therefore, by Lemma 4, is a solution to the Yosida–Cayley Variational Inclusion Problem (YCVIP), where , , and . □

6. Yosida–Cayley Resolvent Equation Problem (YCREP)

For the Yosida–Cayley Variational Inclusion Problem (YCVIP), the iterative schemes described in Algorithms 1 and 2 can be used to establish both the existence and the convergence of solutions. Moreover, by employing the inertial extrapolation term given in Equation (3), we derived a convergence result for the YCVIP. We now define the Yosida–Cayley Resolvent Equation Problem (YCREP) as follows:

Find , , , and such that

where the operator is defined by

with denoting the identity operator and being a constant.

The following lemma shows that the Yosida–Cayley Variational Inclusion Problem (YCVIP) and the Yosida–Cayley Resolvent Equation Problem (YCREP) are equivalent.

Lemma 5.

The Yosida–Cayley Variational Inclusion Problem (YCVIP) admits a solution with , , and if and only if the Yosida–Cayley Resolvent Equation Problem (YCREP) admits a solution together with , , and such that

where τ denotes the identity mapping and is a given constant.

Proof.

Let , , , and be the solution of the Yosida–Cayley Variational Inclusion Problem (YCVIP), satisfying the following equation:

Now, we get from (32) and (33)

which is the required YCREP.

Conversely, let , and be the solutions of the YCREP. Then, we have

Thus, by Lemma 4, the solutions to the YCVIP are given by , , , and . □

Algorithm 4.

For given initial elements , , , and , construct the sequences , , , , and iteratively.

where . The YCREP now becomes

From (32) and (36), it follows that

which establishes the desired YCREP.

Algorithm 5.

For given initial elements , , , and , construct the sequences , , , , and iteratively.

When , the YCREP can be written as

We have from (39)

which establishes the desired YCREP.

Algorithm 6.

For given initial elements , , , and , construct the sequences , , , , and iteratively.

where,

Utilizing the predictor–corrector method [24], we construct an inertial extrapolation scheme to solve the YCREP.

Algorithm 7.

For given initial elements , , , and , construct the sequences , , , , and iteratively.

where, .

Theorem 2.

Suppose that and are multi-valued mappings defined on the real-ordered Hilbert space , such that is a γ-ordered and -weakly ordered rectangular different mapping in the first argument. Let and be single-valued mappings, where p is Lipschitz continuous with constant , and also a -ordered non-extended mapping. Assume that , , , and define

for all . Suppose that the following conditions hold:

The following inequality is then satisfied:

where , , and , for all .

Let for all , where is an inertial extrapolation parameter satisfying

Then, the sequences , and generated by Algorithm 7 converge strongly to the solution with , , and of the Yosida–Cayley Resolvent Equation Problem (YCREP).

Proof.

We have

Applying (iii) of Proposition 1, we obtain the following.

Using the Lipschitz continuity of p in (47), we obtain

Now, we have

Since p is a -order non-extended mapping, we have

Now, combining (49) and (50), we have

It follows from (48) and (51) that

Since p is strongly convergent and , , and for all , it follows that

Also, we have

Combining (53) and (54), we obtain

where

Since and is the extrapolation term for all such that and , it follows that

Therefore, by inequality (55), the sequence is a Cauchy sequence in , and hence there exists such that as .

From Equations (8)–(10), we obtain the following.

Thus, the sequences and are also Cauchy sequences in . Hence, there exist , , , and such that

We now show that , , and are .

Additionally,

Since is closed, it follows that . Similarly, we can conclude that and . Furthermore, applying the continuity of the mappings p, Σ, , , and , we obtain the following result.

Therefore, by Lemma 5, is a solution to the Yosida–Cayley Variational Inclusion Problem (YCVIP), where , , and . □

7. Numerical Result

To illustrate Theorems 1 and 2, we present six estimation tables and the corresponding convergence graphs obtained using MATLAB R2024b. These numerical results illustrate the effectiveness and convergence behavior of the proposed iterative schemes. Assume that is a real Hilbert space equipped with the standard inner product and the associated norm . Let be a single-valued mapping, be a set-valued mapping, and be multi-valued mappings defined as follows:

- (i)

- Suppose is a -ordered rectangular mapping; then, there exist

  Then, we get

  Thus, is a -ordered rectangular mapping.

- (ii)

- Suppose p is a -Lipschitz continuous and -ordered non-extended mapping. We have

  Hence, p is a -Lipschitz continuous mapping. Also, we have

  Thus, p is a -ordered non-extended mapping.

- (iii)

- Let be a single-valued mapping and be multi-valued mappings such that

  Thus, we obtain

  Thus, is -Lipschitz continuous with constant . Similarly, we can show that and

  Therefore, is Lipschitz continuous in all three arguments with constants

  Consequently, we obtain

- (iv)

- Consider then evaluate the resolvent operator as

  Now, we have

  Therefore, is Lipschitz continuous; here, , and

  Also, we have

  Then, we have the Lipschitz constant

- (v)

- Again, we have from the Yosida approximation operator

  Also, we get

  That is, is Lipschitz continuous with constant where and

  Again, we get

  Thus, we get the Lipschitz constant

- (vi)

- Now, we evaluate the Cayley approximation operator.

  Now, we have

  That is, is Lipschitz continuous with constant where , and .

  Also, we have

  Therefore, the Lipschitz constant

- (vii)

- We consider the interval and .

- (viii)

- All values of the constants fulfill the requirements (14) and (46) stated in Theorems 1 and 2.

- (ix)

- Obtain from Iterative Algorithm 3

Using MATLAB R2024b, we investigate six different scenarios, each of which involves the construction of an estimation table and a convergence graph. In each case, multiple initial values are considered along with constant values of the parameters and , where and for all .

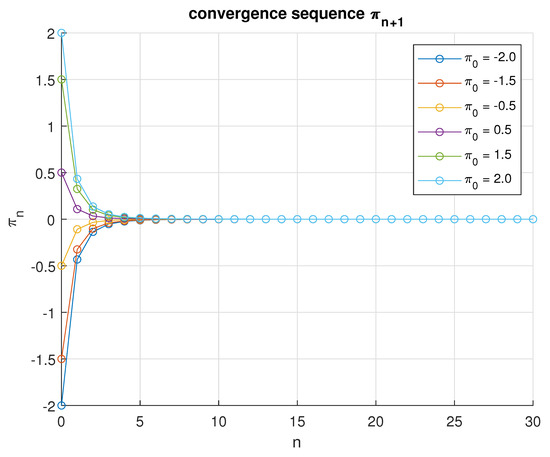

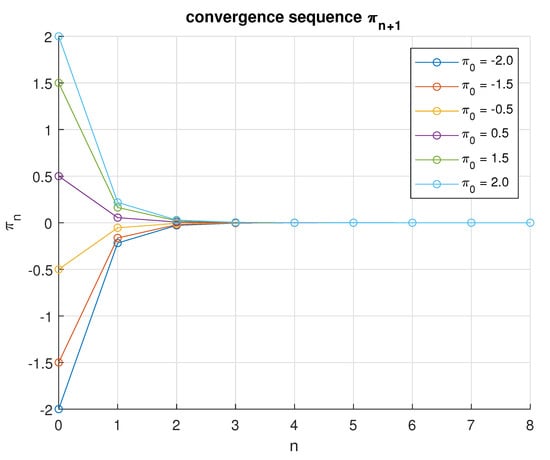

In the first scenario, the parameters are selected as and , with initial values . The iterative sequence for the Yosida–Cayley Variational Inclusion Problem (YCVIP) converges to the solution after 26 iterations. The corresponding results are presented in the convergence graph (Figure 1) and the estimates in Table 1.

Figure 1.

An illustration of the convergence sequence for various initial values when .

Table 1.

The convergence sequence is initialized with the values .

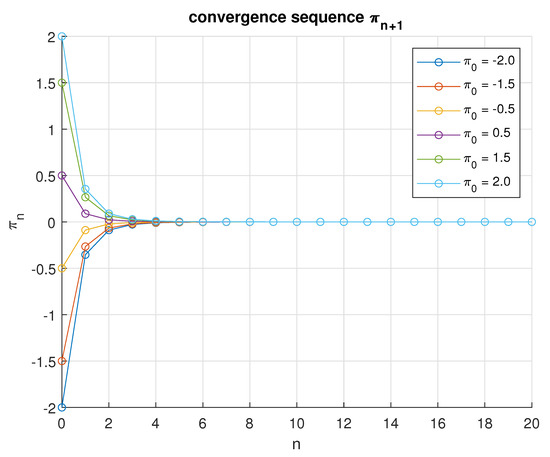

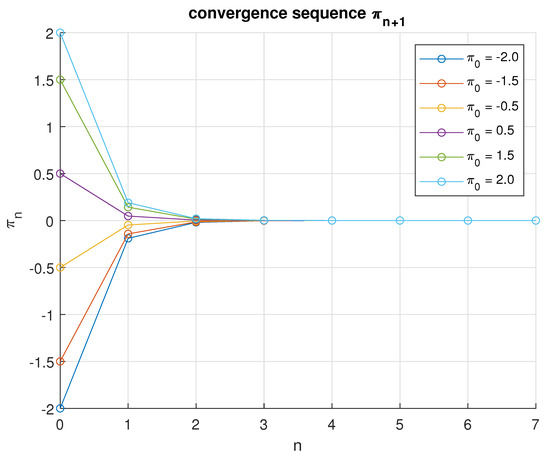

In the second scenario, the parameters are set as and , with the same initial values . The estimates are summarized in Table 2, and Figure 2 illustrates the convergence of the sequence, which converges to after 15 iterations.

Table 2.

The convergence sequence is initialized with the values .

Figure 2.

An illustration of the convergence sequence for various initial values when .

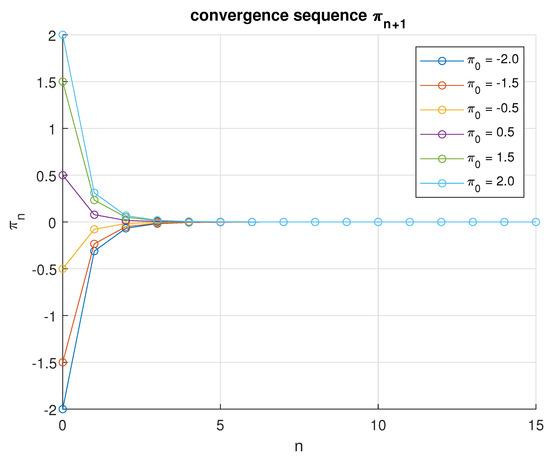

In the third scenario, we set while keeping the same values of and initial points . The estimates are presented in Table 3, and Figure 3 illustrates the convergence of the sequence, which converges to after 12 iterations.

Table 3.

The convergence sequence is initialized with the values .

Figure 3.

An illustration of the convergence sequence for various initial values when .

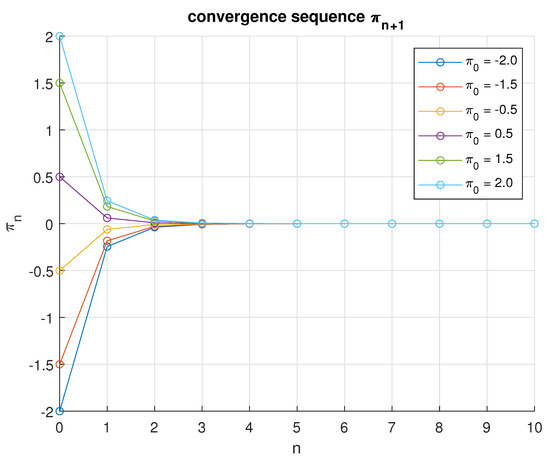

In the fourth scenario, we consider and , while maintaining the same initial values . The sequence converges to the solution after seven iterations. The estimates are summarized in Table 4, and the convergence behavior is illustrated in Figure 4.

Table 4.

The convergence sequence is initialized with the values .

Figure 4.

An illustration of the convergence sequence for various initial values when .

In the fifth scenario, we take and with the same initial values. Table 5 lists the estimates, and Figure 5 shows the convergence of the sequence , which converges to after six iterations.

Table 5.

The convergence sequence is initialized with the values .

Figure 5.

An illustration of the convergence sequence for various initial values when .

In the final scenario, we take with the same initial values and the same value of . Table 6 lists the estimates, and Figure 6 shows the convergence of the sequence , which converges to after five iterations.

Table 6.

The convergence sequence is initialized with the values .

Figure 6.

An illustration of the convergence sequence for various initial values when .

To support our main results, six convergence graphs were obtained, demonstrating that the sequence converges to the solution under different parameter settings. In the first scenario, convergence occurred within 27 iterations for ; in the second, within 16 iterations for ; in the third, within 13 iterations for ; in the fourth, within 8 iterations for ; in the fifth, within 7 iterations for ; and in the sixth and final scenario, convergence was achieved in only 6 iterations for .

These findings indicate that a slower decay in the sequence , as in the final scenario, leads to a faster convergence rate. This behavior is observed while maintaining and . Compared to existing methods such as those of Gebrie and Bedane [22] and Rajpoot et al. [23], the proposed inertial extrapolation model exhibits superior convergence performance. The numerical evidence indicates that the integration of inertial extrapolation techniques enhances convergence speed and effectiveness in approaching the solution.

8. Conclusions

In this paper, we studied the Yosida–Cayley Variational Inclusion Problem (YCVIP) and the Yosida–Cayley Resolvent Equation Problem (YCREP) within the framework of real-ordered Hilbert spaces, incorporating both multi-valued and single-valued mappings influenced by XOR and XNOR operations. Our primary focus was on the convergence analysis of these problems through the development of an inertial extrapolation scheme. We proposed and rigorously analyzed several iterative algorithms to solve these problems, establishing both the existence and convergence of solutions under suitable conditions on the involved operators and mappings. Furthermore, a comprehensive numerical experiment was presented to demonstrate the computational efficiency and fast convergence of the proposed methods, thereby highlighting their practical relevance and potential for broader applications.

Author Contributions

Conceptualization: A. and S.S.I.; Methodology: A. and S.S.I.; Software: A. and S.S.I.; Validation: A. and I.A.; Formal analysis: A. and S.S.I.; Writing—original draft preparation: A. and I.A.; Writing—review and editing: I.A. and S.S.I.; Funding: I.A. All authors have read and agreed to the published version of the manuscript.

Funding

The researchers would like to thank the Deanship of Graduate Studies and Scientific Research at Qassim University for financial support (QU-APC-2025).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hartman, P.; Stampacchia, G. On some non-linear elliptic differential-functional equations. Acta Math. 1966, 115, 271–310.

- Fechner, W. Functional inequalities motivated by the Lax–Milgram theorem. J. Math. Anal. Appl. 2013, 402, 411–414.

- Rockafellar, R. Monotone operators and the proximal point algorithm. SIAM J. Cont. Optim. 1976, 14, 877–898.

- Kegl, M.; Butinar, B.J.; Kegl, B. An efficient gradient-based optimization algorithm for mechanical systems. Commun. Numer. Methods Eng. 2002, 18, 363–371.

- Salahuddin. Solutions of Variational Inclusions over the Sets of Common Fixed Points in Banach Spaces. J. Appl. Non. Dy. 2021, 11, 75–85.

- Akram, M.; Dilshad, M. A Unified Inertial Iterative Approach for General Quasi Variational Inequality with Application. Fractal Fract. 2022, 6, 395.

- AlNemer, G.; Rehan Ali, R.; Farid, M. On the Strong Convergence of Combined Generalized Equilibrium and Fixed Point Problems in a Banach Space. Axioms 2025, 14, 428.

- Sitthithakerngkiet, K.; Rehman, H.U.; Ioannis, K.; Argyros, I.K.; Seangwattana, T. Strong convergence of dual inertial fixed point algorithms for computing fixed points over the solution set of a variational inequality problem in real Hilbert spaces. Rend. Circ. Mat. Di Palermo 2025, 74, 82.

- Daoud, S.M.; Shehab, M.; Al-Mimi, M.H.; Abualigah, L.; Zitar, A.R.; Shambour, M.K.Y. Gradient-Based Optimizer (GBO): A Review, Theory, Variants, and Applications. Arch. Comput. Methods Eng. 2023, 30, 2431–2449.

- Rehman, H.U.; Sitthithakerngkiet, K.; Seangwattana, T. Dual-Inertial Viscosity-Based Subgradient Extragradient Methods for Equilibrium Problems Over Fixed Point Sets. Math. Methods Appl. Sci. Anal. Appl. 2025, 48, 6866–6888.

- Altbawi, S.M.A.; Khalid, S.B.A.; Mokhtar, A.S.B.; Hussain Shareef, H.; Husain, N.; Ashraf Yahya, A.; Haider, S.A.; Moin, L.; Alsisi, H.R. An Improved Gradient-Based Optimization Algorithm for Solving Complex Optimization Problems. Processes 2023, 11, 498.

- Hassouni, A.; Moudafi, A. A perturbed algorithm for variational inclusions. J. Math. Anal. Appl. 1994, 185, 706–712.

- Ahmad, I.; Irfan, S.S.; Farid, M.; Shukla, P. Nonlinear ordered variational inclusion problem involving XOR operation with fuzzy mappings. J. Inequal. Appl. 2020, 2020, 36.

- Ahmad, I.; Pang, C.T.; Ahmad, R.; Ishtyak, M. System of Yosida inclusions involving XOR-operation. J. Nonlinear Convex Anal. 2017, 18, 831–845.

- Li, H.G.; Pan, X.B.; Deng, Z.Y.; Wang, C.Y. Solving GNOVI frameworks involving (γG, λ)-weak-GRD set-valued mappings in positive Hilbert spaces. Fixed Point Theory Appl. 2014, 2014, 146.

- Iqbal, J.; Rajpoot, A.K.; Islam, M.; Ahmad, R.; Wang, Y. System of Generalized variational inclusions involving Cayley operators and XOR-operation in q-uniformly smooth Banach spaces. Mathematics 2022, 10, 2837.

- Ali, I.; Ahmad, R.; Wen, C.F. Cayley inclusion problem involving XOR-operation. Mathematics 2019, 7, 302.

- Iqbal, J.; Wang, Y.; Rajpoot, A.K.; Ahmad, R. Generalized Yosida inclusion problem involving multi-valued operator with XOR operation. Demonstr. Math. 2024, 57, 20240011.

- Noor, M.A. Generalized set-valued variational inclusions and resolvent equations. J. Math. Anal. Appl. 1998, 228, 206–220.

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

- Chang, S.; Yao, J.C.; Wang, L.; Liu, M.; Zhao, L. On the inertial forward-backward splitting technique for solving a system of inclusion problems in Hilbert spaces. Optimization 2021, 70, 2511–2525.

- Gebrie, A.G.; Bedane, S.D. A simple computational algorithm with inertial extrapolation for generalized split common fixed point problems. Heliyon 2021, 7, e08373.

- Rajpoot, A.K.; Istiak, M.; Ahmed, R.; Wang, Y.; Yao, J.C. Convergence analysis for Yosida variational inclusion problem with its corresponding Yosida resolvent equation problem through inertial extrapolation scheme. Mathematics 2023, 11, 763.

- Noor, M.A.; Noor, K.I. General bivariational inclusions and iterative methods. Int. J. Nonlinear Anal. Appl. 2023, 14, 309–324.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).