Abstract

This paper presents a comparative analysis of classical maximum flow algorithms and modern deep Reinforcement Learning (RL) algorithms applied to traffic optimization in urban environments. Using SUMO simulations and statistical tests, the Ford–Fulkerson, Edmonds–Karp, Dinitz, Preflow–Push, Boykov–Kolmogorov and Double DQN algorithms are compared. Their efficiency and stability are evaluated using metrics such as cumulative vehicle dispersion and the ratio of waiting time to vehicle number. The results show that classical algorithms such as Edmonds–Karp and Dinitz perform stably under deterministic conditions, while Double DQN suffers from high variation. Recommendations are made regarding the selection of an appropriate algorithm based on the characteristics of the environment, and opportunities for improvement using DRL techniques such as PPO and A2C are indicated.

Keywords: traffic optimization; maximum flow; deep reinforcement learning; urban environment; intelligent transportation systems

MSC: 90B20; 90C35

1. Introduction

With the growth of urban population and the increasing number of motor vehicles, traffic management and optimization in urban environments have become critical issues for modern transportation systems. The efficient distribution of traffic flows has a direct impact on reducing congestion, travel times, fuel consumption and emissions of harmful substances, while improving the overall quality of life in urban environments.

Researchers and engineers rely on both established classical methods and innovative approaches based on artificial intelligence in search of adequate solutions. Classical maximum flow algorithms—such as those of Ford–Fulkerson, Edmonds–Karp, Dinitz, Preflow–Push and Boykov–Kolmogorov—offer formal and efficient modeling of transportation networks using graphs and provide optimal solutions under conditions of a priori known structure and capacities. However, they are limited in their ability to adapt to dynamic conditions and nonlinear interactions in a real environment.

On the other hand, reinforcement learning algorithms (RL and deep RL), such as Q-learning and Deep Q-Networks (DQN), train agents adaptively through interaction with the environment. This allows them to perform better under the changing infrastructure and uncertainty typical of real-world traffic. Maximum flow algorithms, such as Ford–Fulkerson and Edmonds–Karp, have found widespread application in logistics and traffic management in recent decades. With advances in artificial intelligence, machine learning approaches, in particular RL, offer new possibilities for dynamic training of agents in real-world environments.

1.1. Reinforcement Learning (RL) Algorithms—Overview

Paper [1] presents a new multi-agent reinforcement learning model that combines an actor–critic architecture with a visual attention interface. The goal is to improve the interaction between homogeneous agents in partially observable environments. The model uses a recurrent visual attention interface that extracts latent states from each agent’s partial observations, allowing them to focus on local environments with full perception. The training is performed through centralized training and decentralized execution, which improves coordination between agents. The authors propose a framework for cooperative traffic light control in [2] using a counterfactual multi-agent deep actor–critic approach (MACS). Decentralized actors control the traffic lights in this method, while a centralized critic combines recurrent policies with feed-forward critics. Additionally, a planning module exchanges information between agents, helping individual agents to better understand the entire environment. This approach improves coordination and efficiency in traffic management.

Paper [3] introduces a MASAC model that implements a soft actor–critic algorithm with attention to optimize arterial traffic control. The attention mechanism is implemented in both the actor and the critic to improve the extraction of traffic information. MASAC is the first model to use a SAC algorithm to train arterial traffic control, expanding the decision space and improving the efficiency of traffic control. The authors propose a model-free and data-driven approach in [4] that combines reinforcement learning with macroscopic traffic simulation based on a recently developed network transmission model. This approach is an effective alternative for perimeter control in large urban areas, overcoming the limitations of model-based methods that may not be scalable or suitable for dealing with various effects caused by changes in the shape of macroscopic fundamental diagrams. Paper [5] addresses the problem of traffic control in large networks with many intersections using reinforcement learning techniques and transportation theories. A decentralized deep reinforcement learning model is presented that allows local agents to control traffic lights, improving the scalability and efficiency of traffic management in large urban networks. This approach is tested on a network with over a thousand traffic lights, demonstrating its effectiveness and applicability in real-world conditions. An innovative framework for traffic control using multi-agent deep reinforcement learning (MADRL) is proposed in [6], which integrates information about the real traffic flow as input to the agents. Unlike most standard approaches, here the model takes into account not only the local state of each intersection but also the flows of neighboring intersections to ensure real-time coordination between agents. The methodology is based on centralized learning and decentralized execution (CTDE), with agents being trained to predict the effects of their own actions on traffic throughout the system. 
SUMO simulation experiments show a significant reduction in average delay and trip duration compared to classical control methods.

The authors of [7] develop a traffic light control system with multiple optimization objectives using coordinated multi-agent reinforcement learning on a network scale. The proposed architecture allows each intersection to be a standalone agent that optimizes its actions, taking into account objective functions such as minimizing delays, number of stops, carbon dioxide emissions, and travel comfort. A key feature is the ability to coordinate between agents through state exchange, which allows for effective synchronization in realistic transportation networks. The model demonstrates better results than single-objective MARL systems in simulation environments, significantly reducing the total travel time while maintaining environmental and social objectives. Traffic management in large grid networks is considered in publication [8], applying a cooperative deep reinforcement learning model. Agents use local information about the current traffic state and exchange states with neighboring agents to make more informed decisions. The method uses centralized learning and a graph structure to represent the connections between agents, with each intersection being a node in the graph. The advantage of this model is that it captures both local and global dependencies in the traffic network. Simulation results show better traffic distribution, lower congestion levels, and shorter travel times compared to non-communicating agents. The authors in [9] propose a MA2C (Multi-Agent Advantage Actor–Critic) architecture specifically designed for traffic management in large-scale urban networks. Each agent in this model has partial observation and makes decisions based on the local state, while a central critic is used to coordinate the training of all agents. To improve the stability of the training and the efficiency of the control, “neighbor fingerprinting” is introduced—a technique for partially sharing the actions of neighboring agents. 
Simulations in SUMO show that MA2C provides significantly better results compared to independent agents and traditional control methods, especially under heavy traffic and complex infrastructure.

The application of Deep Q-Learning (DQN) to traffic light control in a simulated urban environment is investigated in [10]. The authors present a model in which the agent uses states such as the number of waiting vehicles, the time since the last load change, and the load estimate to decide on the next action (a phase change). The system is tested in SUMO, and the results show that DQN-based agents are able to reduce the average interruption and optimize the throughput compared to standard fixed and adaptive algorithms. The paper serves as a practical introduction to DRL suitable for real-time traffic control and emphasizes its applicability even in simple architectures. A new model for network-wide traffic control through multi-agent reinforcement learning (MARL), integrated with an attentive neural network capable of handling spatio-temporal dependencies, is presented in [11]. The proposed DSTAN (Deep Spatiotemporal Attentive Network) architecture allows agents to analyze not only the current traffic state but also its evolution in time and space. The model prioritizes relevant information from neighboring intersections with the help of attention mechanisms. It performs well relative to classical MARL architectures in simulations with large transport networks, reducing congestion and travel times through good coordination between agents.

Work [12] proposes an optimization framework for networked traffic light control using multi-agent deep reinforcement learning. The key innovation is the implementation of a knowledge-sharing strategy between agents (knowledge-sharing DDPG), which allows faster and more robust learning in large-scale networks. The system aggregates the local policies of agents into a centralized critic function, while the actions remain decentralized. This allows agents to adapt to different situations in real time, without the need for global control. Simulations show that this model achieves lower average delays and better load control compared to traditional RL and rule-based systems. A scalable traffic light control architecture is proposed in [13] based on a combination of fog and cloud computing environments and multi-agent reinforcement learning. The system is designed so that agents located in the fog layer make decisions in real time, while the cloud layer handles longer-term training tasks and strategic synchronization. A DDPG-based MARL algorithm with a graph attention neural network is used for training, which facilitates the dissemination of relevant information between agents. Test results in urban scenarios show that this hybrid fog-cloud approach combines low latency with high computing power, making it suitable for real-world applications with heavy traffic and multiple intersections.

Paper [14] proposes the application of multi-agent reinforcement learning for optimal traffic light control in small to medium-sized road networks. Each intersection is considered a standalone agent, which is trained with a Q-learning algorithm to minimize vehicle waiting times using local observations and actions. The main advantage of the model is its simplicity and flexibility, allowing implementation in real systems without the need for centralized coordination. The approach demonstrates good results in simulations with SUMO and offers a basis for future advanced MARL systems, although it does not use complex neural architectures. Paper [15] presents a comparative demonstration of two different methods for coordination between agents in MARL systems applied to traffic management: centralized and decentralized. The study includes simulations in which parameters such as travel time, delay and number of stops are monitored to determine which of the strategies provides more efficient traffic light behavior. The authors emphasize that the implementation of MARL allows traffic lights to dynamically adapt to changing traffic conditions while maintaining the robustness of the system. The work contributes to demonstrating the capabilities of MARL even with limited computational resources and using classical tabular methods.

A hybrid approach is presented in [16] that combines fuzzy graph structures with collective multi-agent reinforcement learning for traffic light control. Fuzzy graphs are used to model the degree of influence between intersections; for example, if one intersection is strongly connected to another, this is reflected in the fuzzy values of the edges between them. The collective MARL model allows agents to share information and strategies in real time, thus improving the synchronization of decisions in the network. Experimental results show a significant improvement in the overall control efficiency compared to classical and independent MARL approaches. Paper [17] provides a systematic review of the applications of reinforcement learning in traffic light control. Different types of RL algorithms are considered, from classical Q-learning to modern deep reinforcement models. The authors highlight the existing challenges in adapting RL to real-world conditions, as well as the need for better unification of simulation and real-world environments. The review also covers innovations related to multi-agent systems and decentralized management. The aim of publication [18] is to apply a combined approach between reinforcement learning and predictive analytics for urban traffic management in Belgrade. The model takes into account historical and real-time traffic data, generating adaptive strategies with the aim of sustainability and efficiency. The results show an improvement in travel time and emission reduction, indicating the potential of hybrid intelligent mobility management systems. The review [19] examines the interaction between smart intersections and connected autonomous vehicles (CAVs) in the context of sustainable smart cities. The authors emphasize the need for joint optimization between infrastructure and autonomous systems to achieve synergy between traffic planning and adaptive mobility. 
Scenarios for coordination between CAVs and traffic light systems using artificial intelligence are described.

The paper [20] presents PyTSC—an integrated platform for simulation and testing of multi-agent RL algorithms for traffic light control. The system supports different simulation environments and is aimed at academic research and training. PyTSC provides modularity and easy adaptation to different intersection schemes, allowing for comparative analysis between RL methods in a unified environment. The authors perform in [21] a systematic review of the application of AI, IoT and predictive analytics in adaptive urban traffic management systems. The paper covers architectures and platforms that use distributed sensors, intelligent algorithms for traffic prediction and automated control. The role of digital transformation in achieving smart mobility is emphasized. The publication [22] presents a self-adaptive traffic light control system that integrates license plate recognition (LPR) and real-time vehicle detection. The algorithm analyzes the flow of vehicles and adapts the phases of the traffic light according to the traffic context. The results indicate high accuracy in vehicle identification and effective traffic regulation in busy areas.

A methodology for cooperative traffic light control using sparse deep reinforcement learning and knowledge sharing between agents is proposed in the paper [23]. The model allows the system to function effectively even with limited data or incomplete observations. Using knowledge from similar intersections improves the generalization ability of the trained models and reduces the need for local training. The authors in [24] develop an intelligent system for multi-intersection control based on agent modeling and fuzzy logic. The system makes decisions based on fuzzy rules and local perception of the traffic situation. Experimental results show better time distribution of light phases and reduced congestion compared to traditional methods. Graph neural networks (GNNs) in the publication [25] are used to model the spatial dependencies between intersections, and a soft actor–critic algorithm with a dynamic entropy constraint is applied to traffic control. The methodology improves the adaptability and stability of the system in a dynamic urban environment. Testing on synthetic and real data shows high efficiency.

An offline reinforcement learning approach for traffic management is presented in [26], in which models are trained on historical data, without the need for real-time simulation or interaction with the environment. The method proves to be applicable in cases with a rich archive of traffic data and is characterized by low risk when implemented in real systems. The analysis shows similar or better results compared to online RL approaches. An extensive review of modern AI-based systems for adaptive traffic light control is made in [27], with an emphasis on the development of reinforcement and deep learning. Centralized and decentralized architectures with multiple intelligent agents using DQN, DDPG, A2C and other models are considered. The publication emphasizes the role of simulation environments such as SUMO and the use of real IoT data. The conclusions confirm that multi-agent systems with RL achieve significant improvements in urban traffic, but challenges remain related to scalability and real integration. A polynomial-time maximum flow algorithm for networks based on the construction of layered graphs is proposed in [28]. This Dinitz method improves the efficiency for specific network structures by using blocking flows and iterative flow expansion. The approach is considered key in classical graph theory and finds applications in telecommunications, logistics, and computer vision.

The authors of [29] prove that there is no polynomial-time approximation algorithm for the set cover problem with a factor smaller than $(1 - o(1)) \ln n$ unless $\mathrm{NP} \subseteq \mathrm{DTIME}(n^{O(\log \log n)})$. The result represents a fundamental bound in computational complexity theory and shows that widely used heuristics cannot be significantly improved in the worst case. The study has important implications for optimization problems in networks and distributed systems. A new approach to solving maximum flow is introduced by the so-called “preflow–push” method in [30]. Unlike the classical Ford–Fulkerson method, this algorithm operates with excess flow and local pushing to neighboring vertices, which makes it more efficient in dense graphs. The method has wide applications in infrastructure networks and graph-based tasks. A study of the dominance of greedy heuristics for the traveling salesman problem is performed in [31]. The authors show that such heuristics dominate only a small fraction of the possible solutions, which casts doubt on their practical applicability. The introduced dominance metric contributes to the objective comparison of algorithms in combinatorial optimization. Double DQN is proposed in [32] as a modification of the classical Deep Q-Network that separates the selection and evaluation of actions through two different networks. The method significantly reduces the overestimation of Q-values observed in the standard DQN and improves the stability of learning in environments with a large state space, such as video games and traffic simulations.

Reinforcement learning (RL)-based approaches are gaining a leading role in traffic signal optimization as the challenges of traffic management in modern urban environments increase. Unlike classical maximum flow methods, RL models allow agents to adapt to dynamic conditions by interacting with a simulated or real environment. This makes them highly suitable for managing complex and changing transportation networks. Models such as Q-learning [10], Deep Q-Network (DQN) [32], and its modification Double DQN [32] train agents by iteratively updating Q-values, with Double DQN reducing systematic overestimation by separating the selection and evaluation of actions. In the multi-agent paradigm (MARL), each intersection is considered a separate agent that can be trained either in a decentralized manner or through centralized training with decentralized execution (CTDE) [6,7]. The use of visual attention [1,3], graph neural networks [25], knowledge sharing between agents [12,23], and architectures such as Soft Actor–Critic (SAC) [3], MA2C [9], and DSTAN [11] leads to significant improvements in traffic light coordination and control stability. Empirical results obtained through simulations in environments such as SUMO [1,20,33] show that RL and MARL approaches reduce the average waiting time, number of stops, and flow variation compared to classical methods. Despite these advantages, precise hyperparameter tuning, high computational complexity, and the stabilization of training remain challenges, which necessitates techniques such as reward shaping [23], epsilon-greedy policy adjustment, and gradient clipping and normalization. Given its capacity for adaptation, scalability, and autonomous optimization, reinforcement learning is emerging as a promising technology for building intelligent transportation systems in real and hybrid infrastructures [13,17,26].
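
The separation of action selection and action evaluation in Double DQN can be illustrated with a small Python sketch. It assumes the usual formulation in which the online network selects the next action and the target network evaluates it; the function names, the discount factor and the Q-value lists are hypothetical and are not taken from the study.

```python
# Sketch of the bootstrap targets used by DQN and Double DQN.
# All names and numbers are illustrative assumptions.

def dqn_target(reward, next_q_target, gamma=0.99, done=False):
    """Standard DQN: the target network both selects and evaluates the action."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Double DQN: the online network selects, the target network evaluates."""
    if done:
        return reward
    # Index of the action with the highest online Q-value.
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    return reward + gamma * next_q_target[best_action]


# Hypothetical Q-values for three signal phases in the next state.
q_online = [1.0, 2.0, 1.5]
q_target = [1.2, 0.8, 3.0]

print(dqn_target(-1.0, q_target, gamma=0.9))
print(double_dqn_target(-1.0, q_online, q_target, gamma=0.9))
```

In this toy case the standard target uses the largest target-network value (3.0), while the Double DQN target uses the target-network value of the action preferred by the online network (0.8), which is how the overestimation is damped.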

1.2. Classical Algorithms—Overview

An empirical comparison is made in [34] between several max-flow/min-cut algorithms applied in computer vision. Among them, the algorithm of Boykov and Kolmogorov is distinguished by better performance in tasks such as segmentation and stereo matching. The results are also applicable to optimization tasks with a graph structure in transportation modeling. The theoretical and practical foundations of the algorithms are summarized in [35], including sorting, graphs, dynamic programming and NP-completeness. The book is used as a fundamental source for the design and analysis of algorithms, as well as in developments related to intelligent transportation systems and RL models. An innovative approach to traffic management through zone allocation is described in [35], where the Z-BAR algorithm combines pre-computing of intra-zone routes with dynamic calculation of inter-zone routes. Simulations in SUMO demonstrate that this method leads to up to 22% reduction in processing time compared to traditional strategies.

The Hopcroft–Karp algorithm for maximum matching in bipartite graphs is presented in [36], which achieves time complexity $O(|E|\sqrt{|V|})$. The method uses BFS to find multiple shortest augmenting paths and DFS to construct the matching, and finds applications in distribution, scheduling, and network optimization tasks. An efficient strategy for coordinated traffic light control in urban networks is considered in [37], where the MADDPG algorithm is used. The agents are trained to select light signal phases through a multi-agent RL architecture and a matrix representation of traffic. Simulations in SUMO show a significant reduction in waiting time compared to baseline approaches. A detailed implementation of Deep Q-Network is described in [38], including training parameters, network structure, experience buffers, and stabilization techniques such as dual network and terminal states. The authors analyze challenges such as “catastrophic forgetting” and present their own implementation, which achieves up to 4 times faster learning speed than the original DeepMind version.

The maximum flow algorithm presented in [30] is based on a new approach called the “preflow–push” method. Unlike the classic Ford–Fulkerson algorithm, which relies on finding paths with available capacity and increasing the flow along them, Preflow–Push works with excess flow at nodes and locally transfers this flow to neighboring vertices. This approach allows for more efficient processing, especially for dense graphs, where traditional methods can be slow. The algorithm finds wide application in network infrastructures and graph processing tasks due to its high performance and flexibility.
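
The idea of working with excess flow and local pushes can be sketched in Python as follows. This is a minimal FIFO-style variant written for illustration only, not the implementation used in the study or in [30]; the function name, the example network and its capacities are hypothetical.

```python
from collections import deque

# Sketch of a preflow-push (push-relabel) maximum flow computation.
# The network below and its capacities are illustrative assumptions.

def preflow_push(capacity, source, sink):
    vertices = {v for arc in capacity for v in arc}
    residual = {u: {} for u in vertices}
    for (u, v), c in capacity.items():
        residual[u][v] = residual[u].get(v, 0) + c
        residual[v].setdefault(u, 0)          # reverse arc for undoing flow

    height = {v: 0 for v in vertices}
    excess = {v: 0 for v in vertices}
    height[source] = len(vertices)

    # Initial preflow: saturate every arc leaving the source.
    for v in list(residual[source]):
        cap = residual[source][v]
        if cap > 0:
            residual[source][v] = 0
            residual[v][source] += cap
            excess[v] += cap

    queue = deque(v for v in vertices
                  if v not in (source, sink) and excess[v] > 0)
    while queue:
        u = queue.popleft()
        while excess[u] > 0:                  # discharge u completely
            pushed = False
            for v in residual[u]:
                cap = residual[u][v]
                if cap > 0 and height[u] == height[v] + 1:
                    delta = min(excess[u], cap)
                    residual[u][v] -= delta
                    residual[v][u] += delta
                    excess[u] -= delta
                    if excess[v] == 0 and v not in (source, sink):
                        queue.append(v)       # v becomes active
                    excess[v] += delta
                    pushed = True
                    if excess[u] == 0:
                        break
            if not pushed:
                # Relabel: lift u just above its lowest residual neighbor.
                height[u] = 1 + min(height[v]
                                    for v, cap in residual[u].items() if cap > 0)
    return excess[sink]


example = {("s", "a"): 16, ("s", "b"): 13, ("a", "c"): 12, ("b", "a"): 4,
           ("b", "d"): 14, ("c", "b"): 9, ("c", "t"): 20, ("d", "c"): 7,
           ("d", "t"): 4}
print(preflow_push(example, "s", "t"))
```

Each vertex only inspects its own arcs and neighbors, which is the local character that distinguishes this family from path-based methods such as Ford–Fulkerson.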

The present study aims to make a comparative analysis of classical and RL-based algorithms for traffic optimization at intersections, through simulations in SUMO and statistical evaluation of their effectiveness. This analysis seeks to answer the question: Which type of algorithm is more suitable for specific conditions—classical or learning-based? Traffic optimization is a key problem in modern urban planning, with a direct impact on the environment, economic efficiency and quality of life.

A classification of the methods used in the literature, including this study, is presented in Table 1, where the scientific novelty of the present study is also emphasized.

Table 1.

Classification of the methods used in the literature, including this study.

2. Description of the Problem and Network Model

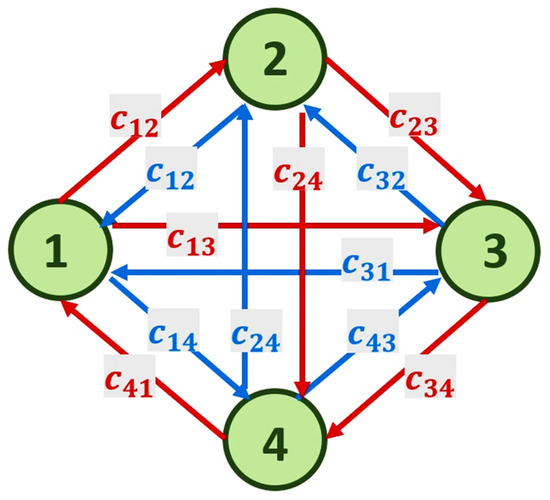

Let an intersection with four inbound vehicle flows be given in the central part of a large city. Figure 1 presents the network model of the intersection, in which each arc from one vertex to another carries a weight. The weight is associated with the number of vehicles passing through the corresponding section of the road during a given period of time.

Figure 1.

Network model of the problem.

The aim is to minimize congestion at the intersection.

The adjacency matrix of the network model of the intersection in Figure 1 is:

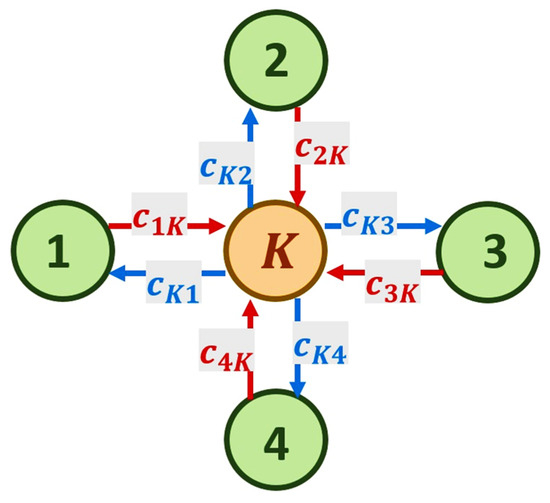

Let a vertex symbolizing the intersection be added; the new network model is presented in Figure 2.

Figure 2.

New network model of the problem.

The adjacency matrix of the new network model from Figure 2 is:

Note 1: Adding a vertex to the model does not change the problem to be solved. In computer calculations, the resources used are proportional to the size of the matrix. As adjacency matrix (2) shows, the flows that will not be monitored during the calculations are removed, so a smaller array is used and resources are freed for other work. This, in turn, leads to faster calculations and, accordingly, to faster decisions. The exception is the DQN algorithm: the neural network in DQN is trained relative to the full matrix, so that the model can take into account all interactions of the environment.

Note 2: Some of the algorithms used in the study require two additional vertices for computer calculations: a source and a sink. The network model is modified to accommodate this requirement, with the source vertex pointing to vertices 1, 2, 3, and 4, and the intersection vertex pointing to the sink vertex. These modifications fully preserve the functionality of the network model in Figure 2 and reflect the action of flows.
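
The modification described in Note 2 can be sketched in Python. The label 5 for the intersection vertex, the labels "s" and "t", the function name and all capacity values are assumptions made for illustration; they are not data from the study.

```python
# Sketch: extend the intersection model with a source "s" and a sink "t".
# Vertex 5 (the intersection) and all capacities are hypothetical.

def add_source_and_sink(inbound_capacity, outbound_capacity, hub=5):
    """Return a capacity dict {(u, v): capacity} for the extended network."""
    capacity = {}
    for approach, cap in inbound_capacity.items():
        capacity[("s", approach)] = cap       # source feeds each inbound approach
        capacity[(approach, hub)] = cap       # approach leads into the intersection
    capacity[(hub, "t")] = outbound_capacity  # intersection drains into the sink
    return capacity


inbound = {1: 30, 2: 20, 3: 25, 4: 15}        # vehicles per period (assumed)
network = add_source_and_sink(inbound, outbound_capacity=60)
for arc in sorted(network, key=str):
    print(arc, network[arc])
```

A dictionary keyed by arcs is only one possible representation; an adjacency matrix would serve equally well for a network of this size.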

Let $G = (V, E)$ be the network describing the intersection, with vertices $V$ and arcs $E$, where $s, t \in V$ are the source and sink of $G$, respectively. If $g$ is a function on the arcs of $G$, then its value at $(u, v) \in E$ is denoted by $g_{uv}$ or $g(u, v)$.

The capacity of an arc is the maximum amount of vehicle flow that can pass through this section of the intersection, i.e., it is the mapping $c: E \to \mathbb{R}^{+}$. The entire vehicle flow in the intersection is represented as the mapping $f: E \to \mathbb{R}$, which satisfies the following conditions:

- Capacity constraint: The flow along an arc cannot exceed its capacity, i.e., $f(u, v) \le c(u, v)$ for all $(u, v) \in E$;

- Flow conservation: The sum of the flows entering a given vertex must equal the sum of the flows leaving that vertex, excluding the source and sink;

- Skew symmetry: $f(u, v) = -f(v, u)$ for all $(u, v) \in E$.
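
The conditions listed above can be checked mechanically. The Python sketch below assumes the skew-symmetric convention, in which a flow of $x$ from $u$ to $v$ is also recorded as $-x$ from $v$ to $u$; the function names, the small network and its numbers are hypothetical.

```python
# Sketch: verify capacity, skew-symmetry and conservation conditions.
# Missing arcs are treated as having capacity 0 and flow 0.

def skew_symmetric(forward):
    """Add the mirrored entries f(v, u) = -f(u, v) to a forward flow."""
    flow = dict(forward)
    for (u, v), x in forward.items():
        flow[(v, u)] = -x
    return flow


def is_valid_flow(capacity, flow, source, sink):
    vertices = {v for arc in capacity for v in arc}
    for u in vertices:
        for v in vertices:
            if flow.get((u, v), 0) > capacity.get((u, v), 0):
                return False                  # capacity constraint violated
            if flow.get((u, v), 0) != -flow.get((v, u), 0):
                return False                  # skew symmetry violated
    for u in vertices - {source, sink}:
        if sum(flow.get((u, v), 0) for v in vertices) != 0:
            return False                      # conservation violated
    return True


capacity = {("s", 1): 30, (1, 5): 30, ("s", 2): 20, (2, 5): 20, (5, "t"): 60}
good = skew_symmetric({("s", 1): 30, (1, 5): 30, (5, "t"): 30})
leaky = skew_symmetric({("s", 1): 30, (1, 5): 20, (5, "t"): 20})
print(is_valid_flow(capacity, good, "s", "t"))
print(is_valid_flow(capacity, leaky, "s", "t"))
```

With skew symmetry, conservation reduces to the net flow out of every interior vertex being zero, which is why a single sum per vertex suffices.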

3. Algorithms for Solving the Described Problem

Classical, RL and deep RL algorithms are applied to solve the problem.

The Ford–Fulkerson, Edmonds–Karp, Dinitz, Preflow–Push, and Boykov–Kolmogorov algorithms are classical maximum flow methods. They are used as a baseline for comparison with RL and Deep RL approaches. Q-learning, Deep Q-Network (DQN), and Double DQN are self-learning models. Pseudocode descriptions of all these algorithms are provided in Appendix A.

3.1. Classical Algorithms

3.1.1. Ford–Fulkerson Method

This method is an intuitive way to find the maximum source-to-destination flow of vehicles at an intersection by repeatedly increasing the flow along augmenting paths. At each moment of the algorithm’s execution, information is maintained about the current flow of vehicles that have already been “sent” through each road section of the intersection. The key step of the method is to find an augmenting path, which is a path from the source to the destination in the residual network, a modified version of the original network that takes into account the used and free capacities of the road sections. The residual network allows both adding flow in the direction of the road sections and removing flow, if this is necessary for the optimization. The following condition is maintained after each step in the method: the flow leaving $s$ must be equal to the flow arriving at $t$, i.e.,

$$\sum_{(s,u) \in E} f(s,u) = \sum_{(v,t) \in E} f(v,t).$$

This shows that the flow of vehicles through the intersection is a valid flow after each round in the algorithm.

The residual network $G_f = (V, E_f)$ is defined as the network with capacity

$$c_f(u,v) = c(u,v) - f(u,v)$$

and no flow.

Note 3: The following scenario may also occur. A flow from $v$ to $u$ is allowed in the residual network, although it is forbidden in the original network: if $f(u,v) > 0$ and $c(v,u) = 0$, then $c_f(v,u) = c(v,u) - f(v,u) = f(u,v) > 0$.

The efficiency depends on how the augmenting paths are found. In its original formulation, the algorithm does not guarantee polynomial running time, because strategies such as depth-first search can generate augmenting paths that add only a small increment to the flow. This, in turn, can lead to a running time that is exponential in the size of the input, even for rational capacities. This weakness is overcome in the Edmonds–Karp algorithm, which uses breadth-first search to find the shortest augmenting paths and guarantees a running time of $O(|V||E|^2)$, where $|V|$ is the number of vertices and $|E|$ is the number of edges.
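
A minimal Python sketch of the method with depth-first search for augmenting paths is given below. It is an illustration under assumed data, not the implementation from Appendix A; the function name, the example network and its integer capacities are hypothetical.

```python
# Sketch of the Ford-Fulkerson method with DFS augmenting paths.
# Integer capacities are assumed so that termination is guaranteed.

def ford_fulkerson(capacity, source, sink):
    residual = {}
    for (u, v), c in capacity.items():
        residual.setdefault(u, {})
        residual.setdefault(v, {})
        residual[u][v] = residual[u].get(v, 0) + c
        residual[v].setdefault(u, 0)          # reverse arc, initially empty

    def augment(u, limit, visited):
        """Push up to `limit` units from u to the sink along one path."""
        if u == sink:
            return limit
        visited.add(u)
        for v, cap in residual[u].items():
            if cap > 0 and v not in visited:
                pushed = augment(v, min(limit, cap), visited)
                if pushed > 0:
                    residual[u][v] -= pushed  # use forward capacity
                    residual[v][u] += pushed  # allow the flow to be undone
                    return pushed
        return 0

    total = 0
    while True:
        pushed = augment(source, float("inf"), set())
        if pushed == 0:                       # no augmenting path remains
            return total
        total += pushed


example = {("s", "a"): 16, ("s", "b"): 13, ("a", "c"): 12, ("b", "a"): 4,
           ("b", "d"): 14, ("c", "b"): 9, ("c", "t"): 20, ("d", "c"): 7,
           ("d", "t"): 4}
print(ford_fulkerson(example, "s", "t"))
```

The reverse-arc update is what allows a later path to cancel part of an earlier one, matching the role of the residual network described above.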

3.1.2. Edmonds–Karp Algorithm

The Edmonds–Karp algorithm significantly improves the Ford–Fulkerson algorithm for solving the maximum vehicle flow problem at intersections. It provides an efficient polynomial-time approach to calculating the maximum flow while retaining the basic idea of augmenting paths. The algorithm uses breadth-first search (BFS) to find the shortest augmenting paths in the residual network, which not only improves performance but also ensures that the number of iterations is polynomially bounded.

Here again, the algorithm starts by initializing the flow in the network to zero. Each iteration of the algorithm involves building a residual network that reflects the available capacities to increase or decrease the flow. The shortest augmenting path from the source to the destination in this residual network is found using BFS. The length of the path is measured by the number of arcs, which minimizes the steps required to reach the destination node. Once the path is identified, the minimum residual capacity along it is calculated, which determines the maximum possible flow that can be added. This flow is added to the current flow, and the residual network is updated by subtracting the used capacity from the edges along the path and adding the reverse capacity to compensate.

One of the main features of the Edmonds–Karp algorithm is its strongly polynomial complexity. The number of iterations of the algorithm is bounded by $O(|V||E|)$, where $|V|$ is the number of vertices and $|E|$ is the number of edges in the network. Each iteration, which involves finding an augmenting path using BFS and updating the residual network, takes $O(|E|)$ time. This results in a total time complexity of $O(|V||E|^2)$, which is a significant improvement over the potentially exponential complexity of the Ford–Fulkerson algorithm.
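
The iteration described above (BFS for a shortest path, bottleneck computation, residual update) can be sketched in Python as follows. The function name, the example network and its capacities are assumptions for illustration only.

```python
from collections import deque

# Sketch of the Edmonds-Karp algorithm: Ford-Fulkerson with BFS.
# The example network and capacities are hypothetical.

def edmonds_karp(capacity, source, sink):
    residual = {}
    for (u, v), c in capacity.items():
        residual.setdefault(u, {})
        residual.setdefault(v, {})
        residual[u][v] = residual[u].get(v, 0) + c
        residual[v].setdefault(u, 0)

    max_flow = 0
    while True:
        # BFS finds a shortest augmenting path (fewest arcs).
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return max_flow                   # no augmenting path remains

        # Recover the path and its minimum residual capacity.
        path = []
        v = sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        bottleneck = min(residual[u][v] for u, v in path)

        # Update forward and reverse residual capacities.
        for u, v in path:
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
        max_flow += bottleneck


example = {("s", "a"): 16, ("s", "b"): 13, ("a", "c"): 12, ("b", "a"): 4,
           ("b", "d"): 14, ("c", "b"): 9, ("c", "t"): 20, ("d", "c"): 7,
           ("d", "t"): 4}
print(edmonds_karp(example, "s", "t"))
```

The only difference from a depth-first variant is the search order, which is what yields the polynomial bound on the number of augmentations.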

3.1.3. Dinitz Algorithm

The Dinitz algorithm repeatedly reconstructs the residual network by building a layered graph. The layered graph is a subgraph of the residual network in which all vertices are arranged in layers determined by their minimum distance (in number of arcs) from the source, and it is created using a breadth-first search (BFS). This ensures that all paths from the source to the destination in the layered graph have minimum length. A blocking flow is then sought in this layered graph, that is, a flow after which every path from the source to the destination is impassable because the capacity of at least one of its edges is exhausted. The blocking flow is calculated using methods such as depth-first search (DFS), and after it is added, the residual network is updated. This process is repeated until no layered graph that reaches the destination can be built, which means that the maximum flow has been reached.

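Let $G_f = (V, E_f)$ be the residual graph of the given graph $G = (V, E)$, representing the investigated intersection, with capacities $c$ and current flow $f$. The residual graph has the following properties:

- Forward arcs: For each arc $(u, v) \in E$, if $f(u, v) < c(u, v)$, then in $G_f$ there exists a forward arc $(u, v)$ with residual capacity:

$$c_f(u, v) = c(u, v) - f(u, v).$$

- Reverse arcs: For every arc $(u, v) \in E$, if $f(u, v) > 0$, then in $G_f$ there exists a reverse arc $(v, u)$ with residual capacity:

$$c_f(v, u) = f(u, v).$$

- Non-existent arcs: For any arc $(u, v)$, if $f(u, v) = c(u, v)$ and $f(v, u) = 0$, then the arc $(u, v)$ does not exist in $G_f$.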

Let $G_L = (V_L, E_L)$ be the layered graph of the residual graph $G_f$ with respect to source $s$ and receiver $t$. The layered graph $G_L$ is a subgraph of the residual graph $G_f$ and has the following properties:

- Vertex level (layer): For each vertex $v \in V$, the level $\ell(v)$ is defined, where $\ell(v)$ is equal to the minimum number of edges in a path from $s$ to $v$ in $G_f$, or $\infty$ if no such path exists.

- Admissible arcs: The arc $(u, v)$ belongs to $G_L$ if and only if:

$$c_f(u, v) > 0 \quad \text{and} \quad \ell(v) = \ell(u) + 1.$$

- Structure: Vertex $v \in V_L$ if $\ell(v) < \infty$.
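A compact sketch of how the layered graph and the blocking flow interact is given below. It is an illustration under assumptions, not the code used in the experiments: vertices are numbered `0..n-1`, arcs are passed as `(u, v, capacity)` triples, and the helper names `build_levels` and `push` are hypothetical.

```python
from collections import deque


def dinic(n, arcs, s, t):
    """Maximum flow on vertices 0..n-1; arcs is a list of (u, v, capacity)."""
    # Arc i and its reverse arc i ^ 1 are stored next to each other.
    to, cap, adj = [], [], [[] for _ in range(n)]
    for u, v, c in arcs:
        adj[u].append(len(to)); to.append(v); cap.append(c)
        adj[v].append(len(to)); to.append(u); cap.append(0)

    def build_levels():
        # BFS over arcs with positive residual capacity gives the levels.
        level = [-1] * n
        level[s] = 0
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for i in adj[u]:
                if cap[i] > 0 and level[to[i]] < 0:
                    level[to[i]] = level[u] + 1
                    queue.append(to[i])
        return level

    def push(u, limit, level, nxt):
        # DFS that follows only admissible arcs: level[v] == level[u] + 1.
        if u == t:
            return limit
        while nxt[u] < len(adj[u]):
            i = adj[u][nxt[u]]
            v = to[i]
            if cap[i] > 0 and level[v] == level[u] + 1:
                sent = push(v, min(limit, cap[i]), level, nxt)
                if sent > 0:
                    cap[i] -= sent
                    cap[i ^ 1] += sent
                    return sent
            nxt[u] += 1  # this arc is exhausted for the current phase
        return 0

    flow = 0
    while True:
        level = build_levels()
        if level[t] < 0:
            return flow  # receiver unreachable: the flow is maximum
        nxt = [0] * n
        while True:
            sent = push(s, float("inf"), level, nxt)
            if sent == 0:
                break  # blocking flow reached in this layered graph
            flow += sent
```

The per-vertex pointer `nxt` ensures that an arc found to be useless in one phase is not examined again in that phase, which is what keeps the blocking-flow search efficient.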

3.1.4. Boykov–Kolmogorov Algorithm

The Boykov–Kolmogorov algorithm was originally developed to find minimum cuts in graphs and has found wide application in the field of computer vision, for example, for image segmentation [39]. Although its main application context is visual data processing, its fundamental mathematical nature also makes it suitable for modeling flows and distribution in traffic networks.

Traffic networks can be represented as graphs, where the vertices are nodes (intersections, branches), and the edges are roads or streets with a certain throughput capacity. The problem of optimizing vehicle distribution in this context or finding minimal “bottlenecks” in the network corresponds to the classical problem of minimum cut in the graph, for which the Boykov–Kolmogorov algorithm offers an efficient solution [33].

The application of this algorithm in traffic analysis and modeling is explored in several subsequent works, which demonstrate how minimum cut techniques aid in the detection of key bottlenecks and flow optimization in transportation networks [40,41]. These studies support the idea that the algorithm is not limited to computer vision but is applicable to a wide range of problems related to flows and optimization in networks.

The Boykov–Kolmogorov algorithm relies on active vertices to find augmenting paths and dynamically updates these paths when the residual network changes. The search is bidirectional: augmenting paths are grown simultaneously from the source and from the target, and the algorithm works iteratively, adding flow along these paths until the maximum flow is reached. A key feature of the algorithm is that it reuses the results of earlier searches. When an augmenting path is found and flow is added, the residual network is modified, which may invalidate some previously explored paths. The algorithm updates only the affected parts of the network instead of recalculating the entire network, which significantly reduces the computational cost. Active sets of vertices are used to keep track of which parts of the graph are potentially relevant for searching for new augmenting paths.

The Boykov–Kolmogorov method is characterized by a combined approach: instead of focusing on exhausting all possibilities in a given area of the graph, as in the Dinitz or Ford–Fulkerson algorithms, it focuses its efforts on the most relevant parts of the network, which leads to a faster reaching of the maximum flow.

3.1.5. Preflow–Push Algorithm

The Preflow–Push algorithm differs significantly from traditional algorithms based on augmenting paths. Instead of searching for paths from the source to the destination, it uses a preflow and local flow updates, which allows for significant computational speedup and guarantees polynomial complexity. A preflow is a flow that may temporarily violate the flow conservation condition, so the incoming flow to a node may exceed the outgoing flow. The difference is called the excess (surplus) of the node. The algorithm gradually eliminates the excess until it reaches a maximum flow that satisfies all standard conditions. The preflow is maintained by two main operations: push and relabel. A push operation transfers excess from one vertex to an adjacent vertex along an arc that has residual capacity. A push is saturating if it exhausts the residual capacity of the arc, and non-saturating if it leaves some of the capacity unused. When a push is not possible because all neighboring vertices reachable through residual arcs are at the same or a greater height, the vertex is relabeled, i.e., its height is increased to allow a new push. The height is a measure that the algorithm uses to ensure progress towards the destination, and the height constraint prevents flow from circulating in cycles.

The algorithm starts by creating an initial preflow that saturates all the arcs emanating from the source. Every vertex other than the source and the target that has positive surplus is added to the set of active vertices. The algorithm processes the active vertices one by one, performing push and relabel operations until the surplus has been moved to the target or returned to the source. The process ends when there are no more active vertices, at which point the preflow is a valid flow and is maximum.

The surplus function $e(v)$ represents the surplus flow accumulated in vertex $v$ that has not been transferred to its neighbors or to the receiver $t$, i.e.,

$$e(v) = \sum_{u \in V} f(u, v) - \sum_{w \in V} f(v, w),$$

where:

- $\sum_{u \in V} f(u, v)$ is the total inflow to $v$;

- $\sum_{w \in V} f(v, w)$ is the total outflow from $v$.

The height function $h: V \to \mathbb{N}$ provides a “guiding” rule for the flow. It determines the relative position of the vertices in the graph and satisfies:

$$h(u) \le h(v) + 1$$

for every arc $(u, v)$ with residual capacity $c_f(u, v) > 0$.

The time complexity of the Preflow–Push algorithm is $O(|V|^2 |E|)$, where $|V|$ is the number of vertices and $|E|$ is the number of arcs, making it asymptotically more efficient than the Edmonds–Karp algorithm.
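The push and relabel operations can be sketched as follows. This is a generic variant written for illustration only, assuming a dense capacity matrix and a simple list of active vertices; the variant used in the experiments may select active vertices differently.

```python
def push_relabel(n, capacity, s, t):
    """Generic push-relabel maximum flow.

    capacity: n x n matrix (list of lists) of arc capacities.
    """
    # flow is kept skew-symmetric: flow[v][u] == -flow[u][v].
    flow = [[0] * n for _ in range(n)]
    height = [0] * n
    excess = [0] * n

    # Initial preflow: saturate every arc leaving the source.
    height[s] = n
    for v in range(n):
        if capacity[s][v] > 0:
            flow[s][v] = capacity[s][v]
            flow[v][s] = -capacity[s][v]
            excess[v] += capacity[s][v]
            excess[s] -= capacity[s][v]

    def residual(u, v):
        return capacity[u][v] - flow[u][v]

    active = [v for v in range(n) if v not in (s, t) and excess[v] > 0]
    while active:
        u = active[-1]
        pushed = False
        for v in range(n):
            # A push is allowed only one level "downhill".
            if residual(u, v) > 0 and height[u] == height[v] + 1:
                delta = min(excess[u], residual(u, v))
                flow[u][v] += delta
                flow[v][u] -= delta
                excess[u] -= delta
                excess[v] += delta
                if v not in (s, t) and v not in active:
                    active.insert(0, v)
                pushed = True
                if excess[u] == 0:
                    break
        if excess[u] == 0:
            active.pop()
        elif not pushed:
            # Relabel: lift u just above its lowest residual neighbour.
            height[u] = 1 + min(
                height[v] for v in range(n) if residual(u, v) > 0
            )
    return excess[t]
```

The value returned is the excess accumulated at the receiver, which equals the value of the maximum flow once no active vertices remain.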

3.2. Reinforcement Learning (RL) Algorithms

The problem is modeled by defining three key components: state, action, and reward.

- State: Represents the current state of the traffic system and includes a set of parameters characterizing the situation of the road network. Examples of characteristics are: the number of vehicles in different sections, the status of traffic lights (green/red light), the average speed of traffic, and the degree of congestion at critical points.

- Action: These are the possible management decisions that the agent can take to optimize traffic. Actions in this case include changing the duration of traffic lights, choosing alternative routes to direct traffic, or adjusting the throughput of certain road sections.

- Reward: The reward is the measure of the effectiveness of the action taken in a given state. It aims to promote the minimization of negative effects, such as congestion and delays, and is defined by metrics such as reduced average travel time, lower waiting times at intersections, or reduced overall road network congestion.

This formalization allows the learning agent to make decisions that maximize the accumulated reward over time, thus optimizing traffic management in real-world conditions. The inclusion of clear definitions of state, action, and reward is key to the successful application of reinforcement learning algorithms in the context of traffic management.

RL is based on the interaction between three main components: agent, environment, and policy. The agent is the entity that makes decisions based on the state of the environment. The environment is a dynamic system in which the agent’s actions are performed, and which provides feedback in the form of rewards. The policy is the strategy that the agent uses to choose actions in a given state. RL focuses on building an effective policy by gradually improving the agent’s actions based on accumulated experience.

One of the key aspects of RL is the process of exploration vs. exploitation. The agent must balance between exploring new actions and strategies (exploration) to find better solutions and using the accumulated knowledge (exploitation) to maximize its short-term reward. This balance is essential for the success of RL algorithms, as focusing too much on one side can lead to suboptimal results.

Reinforcement learning uses various methods for action evaluation and policy optimization. Among the most well-known techniques are dynamic programming methods, Monte Carlo methods, and temporal difference learning (TD-learning), which is based on the recursive Bellman equation. One of the most popular approaches is $Q$-learning, where a $Q$-function is constructed that evaluates the quality of a given action in a particular state. Modern extensions of RL include deep reinforcement learning (DRL), where neural networks are used to approximate the $Q$-function or policy, allowing agents to cope with tasks with a large discrete or continuous space of states and/or actions.

A basic RL algorithm is modeled as a Markov decision process, where:

- $S$ is the set of states of the environment and agent (the state space);

- $A$ is the set of actions (the action space) of the agent;

- $P_a(s, s')$ is the transition probability (at time $t$) from state $s$ to state $s'$ relative to action $a$:

$$P_a(s, s') = \Pr(S_{t+1} = s' \mid S_t = s, A_t = a).$$

3.2.1. Q-Learning Algorithm

The $Q$-learning algorithm is based on learning by experience. It uses a $Q$-table to find the optimal policy in discrete state and action spaces. The role of the $Q$-table is to store information about the quality of different actions in different states; over time this information improves until the agent learns the optimal behavior. The $Q$-table is a matrix in which the rows correspond to the states of the environment and the columns to the possible actions. Each element of the table, called a $Q$-value, is an assessment (called a reward or a penalty, depending on whether it is positive or negative) of the quality of a given action in a particular state. The $Q$-value indicates the expected cumulative reward that the agent can receive if it chooses this action and follows the optimal policy afterwards. The $Q$-table is learned by recursively updating the $Q$-values using the Bellman equation in a temporal difference (TD) scheme that combines the current reward with the prediction of future rewards. The update is performed with the following formula:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right],$$

where:

- $s$ and $a$ are the current state and action, respectively;

- $r$ is the evaluation obtained after performing the action;

- $s'$ is the new state the agent enters;

- $\max_{a'} Q(s', a')$ is the prediction of the best future reward;

- $\gamma$ is the discount factor that controls the importance of future rewards;

- $\alpha$ is the learning rate.

All $Q$-values in the table are usually set to zero or to random values at the beginning of the algorithm. The agent explores the environment by performing actions, initially using an exploration strategy such as $\epsilon$-greedy, which selects a random action with a certain probability. After each action, a new $Q$-value is calculated for the current state and action using the Bellman equation. Over time, if the agent explores all states and actions sufficiently, the $Q$-table converges to the optimal $Q$-values. As a result, the agent can make the best decisions by selecting the action with the highest $Q$-value in each state.

The termination criterion of the algorithm can be, for example, reaching a certain number of steps or the differences between successive $Q$-values becoming insignificant. The action $a$ is chosen by $\epsilon$-greedy exploration, which trades off exploitation (using the best actions accumulated in the table) against exploration (trying new actions).
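The tabular procedure can be sketched on a toy problem. The chain environment below is hypothetical and serves only to exercise the update rule; the learning rate `alpha`, the step limit of 100, and the random tie-breaking in `epsilon_greedy` are assumptions of this sketch rather than settings reported in the study.

```python
import random


def q_update(Q, s, a, r, s_next, alpha, gamma):
    """One temporal-difference update of the Q-table entry Q[s][a]."""
    td_target = r + gamma * max(Q[s_next])
    Q[s][a] += alpha * (td_target - Q[s][a])


def epsilon_greedy(Q, s, epsilon, rng):
    """Random action with probability epsilon, otherwise a greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(Q[s]))
    best = max(Q[s])
    return rng.choice([a for a, q in enumerate(Q[s]) if q == best])


def train_chain(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                epsilon=0.3, seed=0):
    """Toy task: walk right along a chain to reach the last state."""
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(n_states)]  # actions: 0 = left, 1 = right
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # step limit per episode
            a = epsilon_greedy(Q, s, epsilon, rng)
            s_next = max(s - 1, 0) if a == 0 else s + 1
            r = 1.0 if s_next == n_states - 1 else 0.0
            q_update(Q, s, a, r, s_next, alpha, gamma)
            s = s_next
            if s == n_states - 1:
                break  # terminal state reached
    return Q
```

After training, the greedy action in every non-terminal state of this toy chain is "right", because the value of moving right exceeds the discounted value of stepping back.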

3.2.2. Deep Q-Learning Algorithm (DQN)

The Deep $Q$-Learning algorithm (DQN) combines classical $Q$-learning with deep neural networks to overcome the limitations of the traditional $Q$-table. Instead of storing $Q$-values in a table, DQN approximates them using a deep neural network. This network takes as input a representation of the current state and returns $Q$-values for all possible actions in that state. The network is trained by minimizing the error between the current $Q$-value and the target $Q$-value, which is calculated using the Bellman equation. The target value in DQN is defined as follows:

$$y = r + \gamma \max_{a'} Q(s', a'; \theta^-),$$

where:

- $r$ is the current reward;

- $s'$ is the new state the agent is in;

- $\gamma$ is the discount factor that determines the importance of future rewards;

- $\theta^-$ are the parameters of the target neural network, which is updated periodically to stabilize the learning.

The $Q$-value $Q(s, a; \theta)$ is calculated by the basic neural network, which is trained on the error against the target values.

DQN makes training stable and effective. This is due to the following components:

- Replay buffer: DQN stores previous transitions in a buffer and trains on a randomly selected batch of transitions instead of using every interaction with the environment immediately for training. This reduces the correlation between examples and improves robustness.

- Target network: DQN uses a separate target network whose parameters are updated only periodically to avoid instabilities caused by frequent changes in the target values. This makes the target values more stable.
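A replay buffer of the kind described above can be sketched in a few lines. The class name, the transition layout `(s, a, r, s_next, done)`, and the capacity are illustrative assumptions, not details taken from the study.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of transitions (s, a, r, s_next, done)."""

    def __init__(self, capacity, seed=None):
        # A bounded deque silently drops the oldest transition when full.
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement weakens the temporal
        # correlation between consecutive transitions.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)
```

In a training loop, transitions would be added after every environment step, and sampling would start once the buffer holds at least one full batch.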

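The basic network is updated by gradient descent according to the formula

$$\theta \leftarrow \theta - \alpha \nabla_\theta L(\theta),$$

where $\alpha$ is the learning rate. The function $L(\theta)$ is the objective function that is used and is represented as the Mean Squared Error (MSE) by the formula:

$$L(\theta) = \frac{1}{N} \sum_{i=1}^{N} \left( y_i - Q(s_i, a_i; \theta) \right)^2,$$

where $y_i$ are the target values, $Q(s_i, a_i; \theta)$ are the values to be optimized, and $N$ is the number of transitions in the batch. After differentiation, the objective function takes the following form:

$$\nabla_\theta L(\theta) = -\frac{2}{N} \sum_{i=1}^{N} \left( y_i - Q(s_i, a_i; \theta) \right) \nabla_\theta Q(s_i, a_i; \theta),$$

in which the notation $\nabla_\theta L(\theta)$ can be used as an equivalent in updating the parameters of the basic network.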
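A small numerical sketch may help to see the gradient step at work. To keep it self-contained, the neural network is replaced here by a linear model (a deliberate simplification), and the function names and the learning rate `lr` are assumptions of the example.

```python
def predict(theta, x):
    """Linear stand-in for Q(s, a; theta): dot product of weights and features."""
    return sum(t * xi for t, xi in zip(theta, x))


def mse_loss(theta, xs, ys):
    """Mean squared error between targets ys and predictions."""
    n = len(xs)
    return sum((y - predict(theta, x)) ** 2 for x, y in zip(xs, ys)) / n


def mse_gradient(theta, xs, ys):
    """Gradient of the MSE: -(2/N) * sum((y_i - Q_i) * dQ_i/dtheta)."""
    n = len(xs)
    grad = [0.0] * len(theta)
    for x, y in zip(xs, ys):
        err = y - predict(theta, x)
        for j, xj in enumerate(x):
            # For a linear model, dQ/dtheta_j is simply the feature x_j.
            grad[j] += -2.0 / n * err * xj
    return grad


def gradient_step(theta, xs, ys, lr):
    """One gradient descent update: theta <- theta - lr * grad."""
    grad = mse_gradient(theta, xs, ys)
    return [t - lr * g for t, g in zip(theta, grad)]
```

With features `[[1.0, 0.0], [0.0, 1.0]]`, targets `[1.0, 2.0]`, and zero initial weights, one step with `lr = 0.1` moves the weights to approximately `[0.1, 0.2]` and lowers the loss from 2.5 to 2.025.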

3.2.3. Double DQN Algorithm

The Double DQN algorithm offers a modification of the classical DQN target $Q$-value formula:

$$y = r + \gamma \max_{a'} Q(s', a'; \theta^-).$$

This classical approach can overestimate $Q$-values because the same $Q$-network is used both to select the action $a'$ and to estimate the value of that action. The Double DQN algorithm separates action selection from value estimation by using two separate networks: the main $Q$-network for action selection and the target network for value estimation. This leads to the following modified formula for the target value:

$$y = r + \gamma \, Q\left(s', \arg\max_{a'} Q(s', a'; \theta); \theta^-\right),$$

where:

- The base network (with parameters $\theta$) selects the action by $\arg\max_{a'} Q(s', a'; \theta)$.

- The target network (with parameters $\theta^-$) estimates the $Q$-value of the selected action.

This separation helps to avoid systematic overestimation of $Q$-values while preserving the efficiency of the training.
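The difference between the two targets can be made concrete with a short sketch. The network outputs are represented here as plain lists of $Q$-values for the next state; the function names and the `done` flag for terminal transitions are assumptions introduced for the example.

```python
def dqn_target(r, q_next_target, gamma, done=False):
    """Classical DQN target: the target network selects and evaluates."""
    if done:
        return r  # no future reward after a terminal transition
    return r + gamma * max(q_next_target)


def double_dqn_target(r, q_next_online, q_next_target, gamma, done=False):
    """Double DQN target: online network selects, target network evaluates."""
    if done:
        return r
    best_action = max(range(len(q_next_online)),
                      key=q_next_online.__getitem__)
    return r + gamma * q_next_target[best_action]
```

For example, with online estimates `[1.0, 2.0, 0.5]`, target-network estimates `[1.5, 1.0, 3.0]`, reward 1.0, and discount 0.9, the classical target is about 3.7 while the Double DQN target is about 1.9, because the action preferred by the online network is valued more conservatively by the target network.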

Double DQN training can be combined with a rich set of training techniques. Some techniques that can be used in training experiments are:

- Reward shaping: a technique that aims to speed up the learning process by modifying the reward function. The idea is to provide additional progress signals to the agent, guiding its behavior towards the desired solution without changing the optimal policy. Well-designed reward shaping can significantly reduce the number of interactions with the environment required to reach an effective strategy. However, improper application of this technique can introduce biases that change the optimal policy. To avoid this risk, potential-based reward shaping is often used, which guarantees the preservation of the original optimal policy by defining the additional reward through a potential function over states.

- Gradient clipping: a method for controlling the size of gradients during the optimization of neural networks, especially when training deep models in RL. The main goal of the technique is to prevent the so-called exploding gradient problem, in which gradients reach large values and lead to instability of the training process or even failure to converge. Gradient clipping limits the norm or the values of the gradients to a predefined range; the most common approach is a restriction on the $L_2$ norm (Euclidean norm) of the gradient vector.

- Epsilon-greedy decay policy: $\epsilon$ is subjected to a process of decay, a gradual decrease over time, to achieve more efficient learning. A high value of $\epsilon$ (e.g., 1.0) is used at the beginning of training to encourage extensive exploration of the environment. As training progresses, $\epsilon$ is decreased according to a given scheme (e.g., exponential or linear decay) down to a minimum value (e.g., 0.01), which allows the agent to use the accumulated knowledge to maximize rewards. This adaptability is critical for achieving good long-term behavior in complex environments.
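The three techniques can each be expressed as a short function. The sketch below is illustrative only: the decay factor 0.995 and the function names are assumptions, while the start and minimum values of epsilon follow the examples given in the text.

```python
import math


def shaped_reward(r, phi_s, phi_next, gamma):
    """Potential-based shaping: add gamma * Phi(s') - Phi(s) to the reward."""
    return r + gamma * phi_next - phi_s


def clip_by_norm(grad, max_norm):
    """Rescale grad so that its Euclidean norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm:
        return list(grad)
    scale = max_norm / norm
    return [g * scale for g in grad]


def epsilon_at(step, eps_start=1.0, eps_min=0.01, decay=0.995):
    """Exponential decay of epsilon, floored at eps_min."""
    return max(eps_min, eps_start * decay ** step)
```

Clipping by norm preserves the direction of the gradient and only shortens it, which is why it is usually preferred over clipping each component separately.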

Table 2 summarizes the types and main characteristics of the applied algorithms.

Table 2. Type and main characteristics of the applied algorithms.

4. Numerical Realization

4.1. Traffic Input Parameters in Simulations (SUMO)

The SUMO (Simulation of Urban Mobility) environment, version 1.19.0, is used in combination with Python 3.10 and the TraCI simulation control library for the numerical realization. The model simulates traffic at an intersection with four input flows and dynamically appearing vehicles.

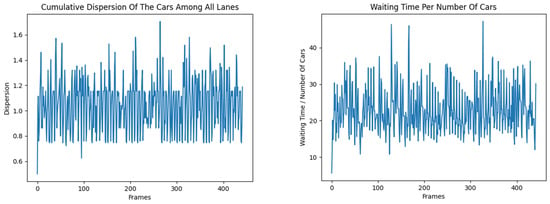

Two quantities are collected during the experiments and used to draw conclusions about the efficiency of the algorithms: the cumulative variance of the number of motor vehicles across all flows, and the ratio between the idle time of the vehicles and their number for all flows. The data come from a deterministic simulation of approximately 7 min.

An intersection with four input flows is modeled in the SUMO simulation environment. The following input parameters are set for each flow:

- Number of vehicles: 10 per minute, evenly distributed.

- Type: passenger cars with a standard profile (speed 13.89 m/s).

- Generation method: via TraCI and a Python script with fixed start and end points.

- Simulation time: 420 s (approximately 7 min).

- Network topology: network with four nodes (inputs) and one central node—the intersection.

- Traffic light control: based on algorithm actions.

4.2. Analytical Description of the Metrics Used

Two main metrics are used:

- Cumulative variance ($\sigma^2$):

$$\sigma^2 = \frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})^2,$$

where:

- $x_i$ is the number of cars in flow $i$;

- $\bar{x}$ is the average value for all flows;

- $n$ is the number of flows.

Objective: to assess the balance between flows.

Note 4: The lower the value, the more evenly the traffic is distributed.

- Waiting/number of cars ratio ($R$):

$$R = \frac{\sum_{i=1}^{n} w_i}{\sum_{i=1}^{n} x_i},$$

where:

- $w_i$ is the waiting time in flow $i$;

- $x_i$ is the number of cars in flow $i$.

Objective: to minimize the average waiting time per car.

Note 5: The smaller the value, the less waiting time for each car.
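As a rough illustration of how the two metrics could be computed from per-flow measurements, consider the sketch below. It assumes that the variance is taken across the flows with population normalization and that the ratio divides the total waiting time by the total number of cars; both choices, as well as the function names, are assumptions of the example.

```python
def cumulative_variance(counts):
    """Population variance of the vehicle counts across flows."""
    mean = sum(counts) / len(counts)
    return sum((x - mean) ** 2 for x in counts) / len(counts)


def waiting_ratio(waiting_times, counts):
    """Total waiting time divided by the total number of vehicles."""
    total = sum(counts)
    return sum(waiting_times) / total if total else 0.0
```

For four perfectly balanced flows, such as `[4, 4, 4, 4]`, the variance is zero, while counts of `[2, 4, 6, 8]` give a variance of 5.0.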

Two-sample t-tests are used to assess the significance of the differences between the algorithms. In brief:

- Null hypothesis (H0): there is no difference between the means of the two samples (algorithms).

- T-statistic: measures the difference between the sample means, normalized by its standard error.

- p-value: the probability of observing a difference at least as large as the one measured if H0 is true.

Note 6:

If p < 0.05, then the difference is significant and H0 is rejected;

If p ≥ 0.05, then the difference is not significant and H0 is not rejected.
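The T-statistic of the classic equal-variance test can be computed with the standard library alone, as in the sketch below. The function name is hypothetical, and the p-value is deliberately left out because it requires the cumulative distribution function of the t-distribution, which is typically taken from a statistics package.

```python
import math
from statistics import mean, variance


def pooled_t_statistic(a, b):
    """Student's two-sample t-statistic with pooled (equal) variances.

    Returns (t, degrees_of_freedom).
    """
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pooled estimate of the common variance of both samples.
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    # Standard error of the difference between the two sample means.
    std_err = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / std_err, df
```

For the samples `[1.0, 2.0, 3.0, 4.0, 5.0]` and `[2.0, 3.0, 4.0, 5.0, 6.0]`, the statistic is -1.0 with 8 degrees of freedom.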

4.3. Numerical Realization, Simulations and Results

The numerical realization is divided into two parts—graphical and tabular, where:

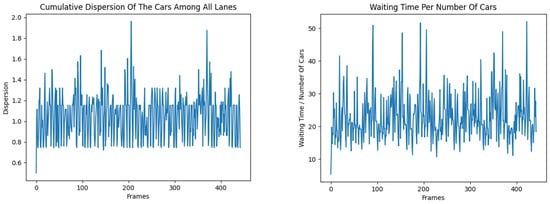

- The graphical part (Figure 3, Figure 4, Figure 5, Figure 6 and Figure 7) consists of the data of the two metrics that are studied, from the training process, which are based on the simulation in SUMO with the corresponding dynamic simulation configuration, and the graphs are programmed in Python.

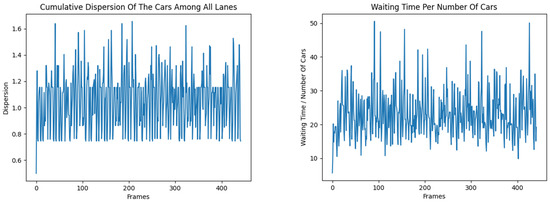

Figure 3. Cumulative dispersion of vehicles from all flows and relationship between waiting time of vehicles and their number according to the Edmonds–Karp algorithm.

Figure 3. Cumulative dispersion of vehicles from all flows and relationship between waiting time of vehicles and their number according to the Edmonds–Karp algorithm. Figure 4. Cumulative dispersion of vehicles from all flows and the relationship between waiting time of vehicles and their number according to the Dinitz algorithm.

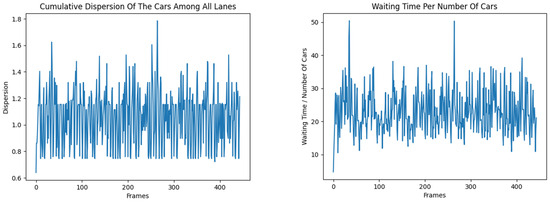

Figure 4. Cumulative dispersion of vehicles from all flows and the relationship between waiting time of vehicles and their number according to the Dinitz algorithm. Figure 5. Cumulative dispersion of vehicles from all flows and the relationship between waiting time of vehicles and their number according to the Boykov–Kolmogorov algorithm.

Figure 5. Cumulative dispersion of vehicles from all flows and the relationship between waiting time of vehicles and their number according to the Boykov–Kolmogorov algorithm. Figure 6. Cumulative dispersion of vehicles from all flows and the relationship between the waiting time of vehicles and their number according to the Preflow–Push algorithm.

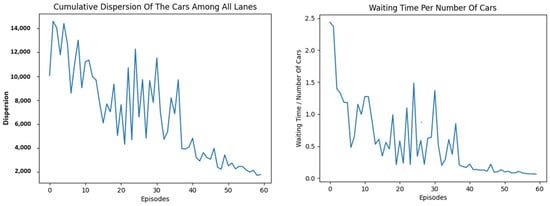

Figure 6. Cumulative dispersion of vehicles from all flows and the relationship between the waiting time of vehicles and their number according to the Preflow–Push algorithm. Figure 7. Cumulative dispersion of vehicles from all flows and the relationship between waiting time of vehicles and their number according to the Double algorithm.

Figure 7. Cumulative dispersion of vehicles from all flows and the relationship between waiting time of vehicles and their number according to the Double algorithm. - The tabular part (Table 3, Table 4 and Table 5) consists of statistics regarding the performance of the algorithms in the two metrics that are studied, as well as t-test results for each algorithm against each other, with the data generated from the simulation in SUMO with a deterministic simulation configuration and implemented using Python.

Table 3. Summary statistics of the algorithms.

Table 3. Summary statistics of the algorithms. Table 4. Tests for significant statistical difference between the algorithms for the cumulative dispersion of vehicles from all flows.

Table 4. Tests for significant statistical difference between the algorithms for the cumulative dispersion of vehicles from all flows. Table 5. Tests for significant statistical difference between the algorithms for the relationship between the waiting time of vehicles and their number.

Table 5. Tests for significant statistical difference between the algorithms for the relationship between the waiting time of vehicles and their number.

Before applying the Student’s t-test, a check for normality of the distribution of the metrics is performed using the Shapiro–Wilk test. For all analyzed cases, the p-values are above 0.05, which allows the assumption of a normal distribution. Additionally, the homogeneity of variances is checked. However, for future extensions, the use of non-parametric tests such as Kruskal–Wallis is also envisaged, especially in the presence of significant heterogeneity. At the moment, the results are significant enough (p ≪ 0.05) to assume the stability of the conclusions.

Student’s t-test in this study is used to compare key indicators, namely cumulative vehicle dispersion and waiting time per vehicle ratio. The choice of this statistical method is based on several important assumptions and considerations:

- Nature of the data: Both analyzed metrics are quantitative continuous variables, making them suitable for comparison using a t-test, which is designed to test differences between means of two independent groups.

- Prerequisites for applying the t-test: A preliminary analysis is conducted to verify the main prerequisites, namely:

- Normality of distribution: Normality tests (such as Shapiro–Wilk) are used, as well as visual analysis using Q–Q plots, which showed that the distribution of data by group did not deviate significantly from normal.

- Homogeneity of variances: The Levene’s test for equality of variances shows that the variances between the compared groups are similar, which justifies the use of the classic t-test with equal variances.

- Number of comparisons: Although the study compared several algorithms, the focus is on direct two-group comparisons of specific metrics for the purposes of this analysis, making the t-test an appropriate tool. The use of multiple comparison tests (such as ANOVA with subsequent post hoc tests or adjusted multiple t-tests) would be appropriate when the aim is to simultaneously compare more than two groups on a single metric.

- Justification for not applying multiple comparison tests: The chosen approach of sequential two-group t-tests is due to:

- Limited number of comparisons, which minimizes the risk of increasing type I error;

- A clear research hypothesis for comparison between specific pairs of algorithms, which justifies the direct approach;

- Conducting corrections for multiple comparisons (e.g., Bonferroni) when necessary.

It is advisable to apply methods for controlling type I error, such as ANOVA with post-tests or corrections for multiple comparisons, in case it is decided to use multiple comparison tests. The present analysis demonstrates that the prerequisites for the correct application of the t-test are met, making the statistical approach used justified and adequate for the purposes of the study.
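A Bonferroni correction of the kind mentioned above can be sketched as follows. The function name and the example p-values are hypothetical; with five algorithms compared pairwise, the list would contain ten p-values.

```python
def bonferroni(p_values, alpha=0.05):
    """Return (adjusted p-values, reject flags) under Bonferroni correction."""
    m = len(p_values)
    # Each p-value is multiplied by the number of comparisons, capped at 1.
    adjusted = [min(1.0, p * m) for p in p_values]
    return adjusted, [p < alpha for p in adjusted]
```

For the illustrative p-values `[0.01, 0.04, 0.5]`, only the first comparison remains significant at the 0.05 level after correction.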

4.4. Discussion of the Obtained Results

Classical algorithms show stable results and low variance. Dinitz’s algorithm is particularly effective at low latency. RL algorithms, especially Double DQN, suffer from training instability and require fine-tuning of hyperparameters. Improvements are proposed through PPO, A2C, and Dueling DQN.

Based on the summary statistics of all the studied algorithms in Table 3, it can be assumed that:

- The Edmonds–Karp algorithm is the most efficient for the dispersion of the number of vehicles in the flows in most cases. A competitor of Edmonds–Karp in terms of the ratio of waiting time of vehicles to their number is the Dinitz algorithm, which performs better in cases up to the median, and also achieves a better minimum than Edmonds–Karp, but a worse maximum. The other algorithms have stable and close results.

- Double DQN shows significantly greater variation and the highest waiting times, making it the least efficient in this case.

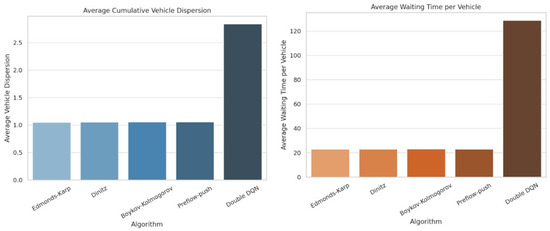

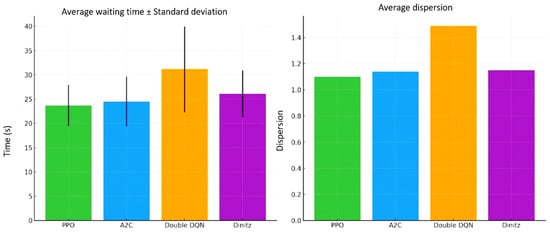

Figure 8. Average cumulative vehicle dispersion and average waiting time per vehicle.

The graphs in Figure 8 visualize the average values of the two main metrics: average vehicle dispersion and average waiting time. The following conclusions can be drawn from them:

- Edmonds–Karp and Dinitz algorithms have the lowest average variance of vehicles, with very close values;

- The lowest average waiting time is also observed for the classical algorithms, while Double DQN stands out with significantly higher values, an indication of inefficiency in the current configuration.

However, these observations cannot be treated as conclusions until they are confirmed by appropriate statistical tests. For this reason, the tests reported in Table 4 and Table 5 are performed.

It can be seen from Table 4 and Table 5 that Double DQN shows statistically significant differences (p ≪ 0.05) compared to all classical algorithms, which reflects its instability.

It is clear from Table 4 that in most cases there is no statistically significant difference in the variation of the results between the different algorithms. For example:

- p-values are above 0.05 between the Boykov–Kolmogorov and Dinitz algorithms, Boykov–Kolmogorov and Edmonds–Karp, as well as Boykov–Kolmogorov and Preflow–Push, which indicates a lack of significant differences in the dispersion of the results;

- When comparing Dinitz with Edmonds–Karp and Preflow–Push, no significant differences in cumulative variance are found;

- A statistically significant difference in the variance of the results is observed in every comparison of a classical algorithm with Double DQN, as the p-values are significantly below 0.05.

In summary, it can be concluded that algorithms such as Boykov–Kolmogorov, Dinitz, and Edmonds–Karp show relatively similar variances in results, while Double DQN is distinguished by significantly different variability in results compared to the other algorithms.

Table 5 shows that, as with the cumulative variance, there is no statistically significant difference between the classical algorithms Boykov–Kolmogorov, Dinitz, Edmonds–Karp and Preflow–Push regarding the ratio of vehicle waiting time to vehicle number. These algorithms therefore have a similar distribution of the ratio studied, and it cannot be claimed that one of them outperforms the others in this metric either.

On the other hand, the comparison of Double DQN with each of the classical algorithms shows a highly statistically significant difference (p ≪ 0.05), which suggests that this method differs significantly both in the cumulative dispersion of vehicles from all flows and in the ratio of vehicle waiting time to the number of vehicles. The high values of the T-statistic indicate that the differences in the mean values are not random, but systematic. Whether the difference is an improvement or a deterioration depends on the mean values; these, together with practical observations of the studied variables, indicate that Double DQN is the least efficient, despite the indicators for its training process (Figure 7).

4.5. Validation of the Obtained Results

To investigate the robustness and adaptability of the algorithms, multiple scenarios with stochastic and realistic traffic conditions, including varying load, real data, and random traffic peaks, are implemented. This meets the requirements for realistic verification in a dynamic environment.

An expansion of the simulation scenarios is needed to validate the obtained results.

- Scenario 1: High Traffic Load:

- The intensity of the incoming flows is increased from 10 to 30 cars per minute at each of the four entrances.

- The simulation time is 420 s (7 min).

- Aim: to test how DQN handles a load that is three times larger than the base case.

- Scenario 2: Low Traffic Load:

- The intensity is reduced to three cars per minute.

- Aim: assess adaptability at low load.

- Scenario 3: Real data:

- Historical traffic data from a real intersection in a city with four entrances are used, provided by the municipal administration.

- The data includes the number of vehicles at each entrance for seven consecutive days during a working week (separate hourly interval).

- Traffic is generated in SUMO based on this data, with the simulation covering peak and off-peak hours.

The results for the three scenarios are presented in Table 6.

Table 6. Results for the three scenarios.

Analysis and conclusions:

- Scenario 1: High Traffic Load: As expected, the average waiting time increases significantly due to high traffic, but DQN shows better adaptation compared to the fixed Webster algorithm, demonstrating robustness under stress conditions.

- Scenario 2: Low Traffic Load: DQN quickly and effectively minimizes unnecessary waiting by dynamically changing the phases of traffic lights.

- Scenario 3: Real data: The algorithm successfully adapts to realistic and more complex traffic patterns, proving applicability beyond synthetic scenarios.

Additional simulation scenarios are made.

- Scenario 4: Burst Traffic:

- Periodic increase in vehicle intensity at an entrance, for example, a sudden influx of 50 vehicles in 1 min, followed by normal intensity.

- Aim: to assess adaptability to sudden loads and shocks in traffic.

- Scenario 5: Asymmetric traffic:

- Unbalanced flow, e.g., one entrance with 40 cars per minute, another with 10, and the rest with 5.

- Aim: testing the algorithm’s ability to balance phases under uneven load.

- Scenario 6: Traffic Disruptions:

- Inclusion of closing one of the inputs for part of the time (e.g., 2 min closing input 3).

- Aim: checking the ability of DQN to adapt to dynamic changes in the network topology.

- Scenario 7: Multi-Path Traffic:

- Add options for left turns, right turns, and straight-through movement at the intersection, with different percentage distributions (e.g., 50% straight, 30% left, 20% right).

- Aim: simulate more complex movements and evaluate the effectiveness of the algorithm in multi-routing.

Results from the additional scenarios are presented in Table 7.

Table 7.

Results for the additional scenarios.

Table 7 shows that:

- DQN shows stable and flexible adaptation even under extreme and complex traffic patterns.

- The algorithm copes with sporadic peak loads and dynamic changes in the network.

- The variations with asymmetric and multi-path traffic demonstrate its ability to optimize waiting times even under irregular and complex routes.

4.6. Comparative Analysis of the Used Algorithms

Classical algorithms, such as Ford–Fulkerson, Edmonds–Karp, Dinitz, Preflow–Push, and Boykov–Kolmogorov, model the road network as a directed graph in which the flow of vehicles is optimized by calculating the maximum flow between source and destination. They are well studied and provide a clear mathematical interpretation, but suffer from limited adaptability in dynamic conditions.
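The graph formulation above can be illustrated with a minimal sketch of the Edmonds–Karp algorithm (breadth-first search for shortest augmenting paths on a residual graph). The small network, its node names and its capacities are hypothetical and serve only as an illustration; they are not the network used in the simulations.

```python
from collections import deque


def edmonds_karp(capacity, source, sink):
    """Return the maximum flow from source to sink.

    capacity maps each node to {neighbour: edge capacity}, e.g. in vehicles/min.
    """
    # Residual graph: a copy of the capacities plus zero-capacity reverse edges.
    residual = {u: dict(edges) for u, edges in capacity.items()}
    for u, edges in capacity.items():
        for v in edges:
            residual.setdefault(v, {}).setdefault(u, 0)

    max_flow = 0
    while True:
        # Breadth-first search finds a shortest augmenting path.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return max_flow  # no augmenting path is left

        # Bottleneck capacity along the path found.
        bottleneck = float("inf")
        v = sink
        while parent[v] is not None:
            u = parent[v]
            bottleneck = min(bottleneck, residual[u][v])
            v = u

        # Push the bottleneck flow and update the residual capacities.
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        max_flow += bottleneck


# Hypothetical road network (illustrative capacities only).
network = {
    "in": {"a": 30, "b": 20},
    "a": {"b": 10, "out": 20},
    "b": {"out": 25},
}
print(edmonds_karp(network, "in", "out"))
```

In this toy network the two edges entering "out" form the limiting cut, so the maximum flow equals their combined capacity.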

RL and Deep RL algorithms, such as Q-learning (tabular), DQN (Deep Q-Network), and Double DQN, are learnable models that use interaction with a simulated environment to learn a strategy for optimal traffic distribution. They do not require a pre-defined model, but adapt to different configurations through rewards.
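As an illustration of learning through rewards, the following minimal sketch shows one tabular Q-learning update and the target value used by Double DQN. The state and action names, the learning rate, the discount factor and the use of a negative waiting time as reward are assumptions made for the example, not the settings of the experiments.

```python
def q_learning_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step: move Q(s, a) towards the TD target."""
    best_next = max(q[next_state].values())
    target = reward + gamma * best_next
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]


def double_dqn_target(reward, gamma, online_next, target_next):
    """Double DQN target: the online estimates select the next action,
    the target estimates evaluate it."""
    best_action = max(online_next, key=online_next.get)
    return reward + gamma * target_next[best_action]


# Hypothetical states (which approach has the longer queue) and actions.
q_table = {
    "queue_ns": {"keep": 0.0, "switch": 0.0},
    "queue_ew": {"keep": 1.0, "switch": 2.0},
}
new_value = q_learning_update(q_table, "queue_ns", "switch", -4.0, "queue_ew")
target = double_dqn_target(
    reward=-4.0,
    gamma=0.9,
    online_next={"keep": 1.0, "switch": 2.0},
    target_next={"keep": 1.5, "switch": 0.5},
)
```

Separating action selection from action evaluation is what distinguishes the Double DQN target from the plain DQN target, which takes the maximum over a single set of estimates.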

Table 8 and Table 9 highlight the advantages and disadvantages of the classical algorithms and of the RL and Deep RL algorithms, respectively, in optimal traffic distribution.

Table 8.

Advantages and disadvantages of classical algorithms.

Table 9.

Advantages and disadvantages of RL and deep RL algorithms.

Based on the simulations in SUMO and the analyses in Table 3, Table 4 and Table 5 for the empirical results and statistics, the following conclusions can be drawn:

- The Edmonds–Karp and Dinitz algorithms show the best performance in the metrics:

- Cumulative vehicle dispersion: the Edmonds–Karp algorithm leads with the lowest average value (1.05);

- Ratio of idle time to number of vehicles: the Dinitz algorithm has the lowest minimum and better values in the lower range.

- The Double DQN algorithm shows significantly worse performance:

- On average, 22.8 times longer idle time than the classical algorithms;

- Statistically significant differences compared to all other algorithms (in t-tests).

- The other classical algorithms (Preflow–Push and Boykov–Kolmogorov) show comparable efficiency, with weak statistical differences between them.

Some practical conclusions can be drawn based on the above, such as:

- Classical algorithms are stable, especially under deterministic conditions, and are suitable for implementation in real transportation systems with fixed network configurations.

- RL and DRL algorithms have the potential for dynamic adaptation, but they:

- Require a long training period;

- Require many interactions with the environment;

- Are sensitive to hyperparameters and architecture.

The following recommendations can be made:

- Edmonds–Karp or Dinitz algorithms are the optimal choice for static configurations.

- For dynamic environments with variable infrastructure, advanced DRL algorithms such as PPO, A2C or Dueling DQN are recommended.

- Reward shaping and an epsilon-decay policy should be included to improve the behavior of RL agents.
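The epsilon-decay recommendation can be sketched as follows: the exploration rate starts high and shrinks with each episode, so the agent explores early and exploits later. The start value, floor, decay factor and action names are illustrative assumptions rather than the settings used in the experiments.

```python
import random


def epsilon_at(episode, eps_start=1.0, eps_end=0.05, decay=0.98):
    """Exponentially decayed exploration rate, floored at eps_end."""
    return max(eps_end, eps_start * decay ** episode)


def choose_action(q_values, epsilon, rng=random):
    """Epsilon-greedy choice over a dict {action: estimated value}."""
    if rng.random() < epsilon:
        return rng.choice(list(q_values))  # explore: random action
    return max(q_values, key=q_values.get)  # exploit: best known action


# Exploration rate at a few of the episode counts discussed in this section.
for episode in (0, 50, 150, 400):
    print(episode, round(epsilon_at(episode), 3))
```

With these assumed values the floor is reached after roughly 150 episodes, after which the agent acts almost greedily.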

To improve the performance of the DQN algorithm in the simulation of the intersection with four incoming flows, the number of training episodes is extended from the initial 50 to 150 and 200. The aim is to investigate whether more training cycles lead to better convergence and a lower average waiting time.

The experiments are set up as follows:

- Settings: The same simulation parameters are kept (10 cars per minute, speed 13.89 m/s, simulation time 420 s), with the only change being the number of training episodes.

- Number of episodes: 50 (initial), 150 and 200.

- Metric: Average vehicle waiting time.

The results of these experiments are presented in Table 10.

Table 10.

Results from conducted experiments for 50, 150 and 200 episodes.

Table 10 shows that increasing the number of episodes significantly improves the quality of the agent's training. After 150 episodes, the average waiting time begins to decrease, and at 200 episodes it is already almost half of the initial result.

However, the value of 62 s still does not significantly outperform Webster’s algorithm under the same conditions, which calls for more training episodes and further experiments.

The new experiments are set up as follows:

- Simulation parameters: Kept unchanged from previous experiments.

- Number of episodes: 300 and 400.

- Metric: Average waiting time (seconds).

The results of these new experiments are presented in Table 11.

Table 11.

Results from conducted experiments for 300 and 400 episodes.

Table 11 shows that increasing the number of episodes to 300 and 400 leads to a continued decrease in the average waiting time. At 300 episodes, the time decreases to 48 s, and at 400 episodes it stabilizes around 42 s, which is significantly better than the initial values and already outperforms the classic Webster algorithm.

These results show that the algorithm is able to adapt effectively to traffic dynamics with sufficiently long training and adequate definition of states and rewards.

The following conclusions can be drawn:

- Increasing the number of episodes is an effective strategy to improve the performance of DQN.

- The model can now be considered competitive with classical methods in the given simulation scenario.

The study and models in this paper are considered under deterministic input conditions, which means that all traffic parameters, including flow intensity, time intervals and road user behavior, are fixed and predetermined. This approach allows for a clearer and controlled analysis of the algorithms and their effectiveness without the influence of random factors. At the same time, this focus on deterministic scenarios limits the applicability of the results to real-world conditions with a high degree of uncertainty and variability, such as peak hours or unforeseen traffic events. Therefore, it is planned to consider extended scenarios, including stochastic and random input data, in order to assess the robustness and adaptability of the proposed methods.

It is necessary to assess the adaptability and stability of the algorithms in conditions close to real-world conditions; therefore, additional simulations are conducted under stochastic scenarios.

The stochastic demand model is as follows: instead of a fixed 10 vehicles/minute, each input flow is simulated as a random process:

- Poisson distribution with rate $\lambda$ (average number of cars per minute);

- Gaussian distribution with mean $\mu$ and standard deviation $\sigma$, with a limit on minimum and maximum values.
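The two demand models can be sketched with the Python standard library as follows. The parameter values are assumptions chosen for illustration: a rate and mean of 10 vehicles per minute mirror the deterministic baseline, while the standard deviation of 3 and the bounds of 0 and 20 are hypothetical.

```python
import math
import random


def poisson_arrivals(lam, rng):
    """Sample a Poisson-distributed vehicle count (Knuth's algorithm)."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def gaussian_arrivals(mu, sigma, low, high, rng):
    """Sample a Gaussian vehicle count, rounded and clipped to [low, high]."""
    return min(high, max(low, round(rng.gauss(mu, sigma))))


rng = random.Random(42)  # fixed seed so that runs are repeatable
minutes = 7  # 420 s of simulation, one sample per minute
poisson_demand = [poisson_arrivals(10, rng) for _ in range(minutes)]
gaussian_demand = [gaussian_arrivals(10, 3, 0, 20, rng) for _ in range(minutes)]
print(poisson_demand)
print(gaussian_demand)
```

Each list holds one vehicle count per simulated minute for a single input flow; in a SUMO setup, such counts would still have to be converted into vehicle departures.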

The random initial configurations include simulations that start with different:

- initial vehicle positions;

- initial traffic light phases;

- initial road loads.

The goal is to test the generalization ability of the algorithms under different starting contexts.

The data used are real data from sensors, GPS or video surveillance, together with synthetic semi-real flows generated from statistical profiles (e.g., morning peak, midday plateau, evening peak).

The experimental design is presented in Table 12.

Table 12.

Experimental design.

The goal is to evaluate the stability, adaptability and efficiency of classical and RL traffic management algorithms under random input flows and different initial configurations, while maintaining the network conditions (4-input junction, SUMO simulation, 420 s).

General observations are presented in Table 13.

Table 13.

General observations.

The following conclusions can be drawn from Table 13:

- Classical algorithms (especially Dinitz) showed high robustness even under highly fluctuating input flows, thanks to the clear structure and predictability of the calculations. The traffic distribution remained balanced in most cases, with a moderate increase in variance.

- RL algorithms demonstrated greater sensitivity to initial conditions and randomness of the input. DQN often got “stuck” in an inefficient strategy, while Double DQN was able to maintain lower waiting times in most cases but required longer training and fine-tuning.

- The standard deviation of the RL algorithms is significantly larger than that of the classical ones, which indicates lower stability between individual simulations.

- In cases with a sudden peak in traffic (e.g., a doubling of the input from one of the flows), classical methods responded linearly, while RL often experienced delays and transient “jamming” until the agents readjusted.

Important conclusions emerge from stochastic experiments:

- Dinitz remains the most balanced algorithm in terms of stability and efficiency in a random environment.

- Double DQN shows potential for adaptation, but suffers from instability and requires additional stabilization techniques (e.g., reward shaping, epsilon decay).

- It is recommended to use advanced DRL algorithms such as PPO (Proximal Policy Optimization) or A2C (Advantage Actor–Critic) for future experiments in high stochasticity.

PPO (Proximal Policy Optimization) is characterized as follows:

- It is a modern DRL method whose policy optimization is stabilized by limiting the change in the policy at each update (clipped objective).

- It copes well with high variability and unpredictability of the environment.
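The clipped objective can be illustrated for a single sample: the probability ratio between the new and the old policy is clipped to a narrow interval around 1, and the smaller of the clipped and unclipped terms is used. The clipping range of 0.2 is a commonly used default and is an assumption here, not a setting reported for the experiments.

```python
def ppo_clipped_term(ratio, advantage, clip_eps=0.2):
    """Clipped surrogate for one sample:
    min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A)."""
    clipped_ratio = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
    return min(ratio * advantage, clipped_ratio * advantage)


# A large policy change with a positive advantage is capped at (1 + eps) * A.
print(ppo_clipped_term(ratio=1.5, advantage=2.0))
# A large policy change with a negative advantage keeps the more pessimistic term.
print(ppo_clipped_term(ratio=0.5, advantage=-1.0))
```

Because the objective stops rewarding the policy once the ratio leaves the clipping interval, a single batch cannot push the policy arbitrarily far, which is the stabilizing effect described above.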

A2C (Advantage Actor–Critic) is characterized as follows:

- It is a synchronous version of A3C, with separate actor and critic networks.

- It balances policy learning and state evaluation through the advantage function.
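The role of the advantage can be sketched with a one-step estimate: the critic's value of the current state is compared with the observed reward plus the discounted value of the next state. The discount factor and the example numbers (a reward equal to the negative waiting time) are hypothetical.

```python
def one_step_advantage(reward, value, next_value, gamma=0.99, done=False):
    """One-step advantage estimate: A = r + gamma * V(s') - V(s).

    The bootstrap term is dropped when the episode has ended.
    """
    bootstrap = 0.0 if done else gamma * next_value
    return reward + bootstrap - value


# The critic expected -10 from this state; the step turned out better.
advantage = one_step_advantage(reward=-2.0, value=-10.0, next_value=-7.0, gamma=0.9)
print(round(advantage, 2))
```

A positive advantage increases the probability of the chosen action in the actor update, while a negative one decreases it.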

Experiment settings:

- Simulation environment: SUMO + TraCI.

- Input flows: generated according to a Poisson distribution with rate $\lambda$.

- Initial states: randomly positioned vehicles and traffic light phases.

- Number of simulations: 30 for each algorithm.

- Duration of each simulation: 420 s (7 min).

- Metrics:

- Average waiting time.

- Flow dispersion (vehicle distribution).

- Standard deviation (stability).

The results of this experiment are presented in Table 14.

Table 14.

Results of the experiment.

The results described in Table 14 show that:

- PPO performed best among all algorithms: it maintained a low waiting time, an even flow distribution and high stability, thanks to its clip-limited policy updates and batch learning.

- A2C learns quickly but shows higher variation in results, especially under unstable initial conditions. This is due to its weaker regularization compared to PPO.

- Both algorithms outperform Double DQN under stochastic conditions and are comparable to Dinitz under average load, but more adaptive under peaks.

- PPO is the best choice in dynamic and unpredictable environments, maintaining an optimal balance between efficiency and stability.

- A2C is a lightweight and adaptive alternative, suitable for limited computational resources.

- Both DRL methods outperform Double DQN and are competitive with the best classical algorithms such as Dinitz.

The results of the experiments with stochastic input data are presented graphically in Figure 9.

Figure 9.

Results of experiments with stochastic input data.

Figure 9 shows that:

- PPO demonstrates the best values and stability in terms of both delay and traffic distribution.

- A2C is close to PPO, but with a slightly higher variance.

- Double DQN has the largest fluctuations and the weakest results.

- Dinitz behaves stably, but does not react as well to sudden changes.

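When multiple hypothesis tests are performed simultaneously, the probability of false positives increases. Therefore, effect sizes and adjustments for multiple comparisons are used. One such effect size is Cohen’s d, which measures how strong the effect is between two groups (e.g., how much more effective PPO is compared to Dinitz):

$$d = \frac{\bar{x}_1 - \bar{x}_2}{s_p},$$

where $\bar{x}_1$, $\bar{x}_2$ are the average values of both groups and

$$s_p = \sqrt{\frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}}$$

is the pooled standard deviation, with $s_1$, $s_2$ the standard deviations and $n_1$, $n_2$ the sizes of the two groups.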

For example, $d$ is commonly interpreted as follows:

- If $|d| \approx 0.2$, then the effect is small;

- If $|d| \approx 0.5$, then the effect is medium;

- If $|d| \approx 0.8$, then the effect is large.
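The computation of Cohen’s d and its interpretation can be sketched as follows, assuming the pooled standard deviation and the conventional thresholds of 0.2, 0.5 and 0.8. The waiting times in the example are made-up numbers, not values from the experiments.

```python
import math
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d with the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


def effect_label(d):
    """Conventional verbal label for the magnitude of d."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"


# Hypothetical average waiting times (s) from repeated runs of two algorithms.
runs_a = [40, 42, 44]
runs_b = [50, 52, 54]
d = cohens_d(runs_a, runs_b)
print(d, effect_label(d))
```

The sign of d only indicates which group has the larger mean, so the label is based on its absolute value.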

The Bonferroni correction is used:

$$\alpha_{\text{adj}} = \frac{\alpha}{m},$$

where $\alpha$ is the standard significance level and $m$ is the number of tests. For example, if there are six pairs to compare (e.g., PPO vs. A2C, PPO vs. Dinitz, etc.; see Table 13), at a standard significance level of $\alpha = 0.05$, the new threshold level would be:

$$\alpha_{\text{adj}} = \frac{0.05}{6} \approx 0.0083.$$

The means (s), Cohen’s d and $p$-values at the adjusted significance level (Bonferroni, $\alpha_{\text{adj}} \approx 0.0083$) for the six comparison pairs are given in Table 15.
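The Bonferroni adjustment can be sketched as a short helper that divides the significance level by the number of tests and flags which comparisons remain significant. The p-values below are placeholders for illustration only and are not the values reported in Table 15.

```python
def bonferroni_threshold(alpha, num_tests):
    """Adjusted significance level for num_tests simultaneous tests."""
    return alpha / num_tests


def significant_after_correction(p_values, alpha=0.05):
    """Map each comparison to True if its p-value is below the adjusted level."""
    threshold = bonferroni_threshold(alpha, len(p_values))
    return {pair: p < threshold for pair, p in p_values.items()}


# Placeholder p-values for six pairwise comparisons (hypothetical labels).
example_p = {
    "pair 1": 0.0004,
    "pair 2": 0.0060,
    "pair 3": 0.0200,
    "pair 4": 0.0450,
    "pair 5": 0.3000,
    "pair 6": 0.0009,
}
print(round(bonferroni_threshold(0.05, 6), 4))
print(significant_after_correction(example_p))
```

Comparisons such as the placeholder "pair 3" would pass an unadjusted 0.05 threshold but are no longer flagged once the correction is applied.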

Table 15.

Results for the six comparison pairs.

The analysis of Table 15 shows the following:

- PPO shows a large effect compared to Double DQN and a medium effect compared to Dinitz, both results being statistically significant even after adjustment.

- A2C also outperforms Double DQN with a significant and large effect.

- The difference between PPO and A2C is not significant (small effect).

- Dinitz is not significantly different from A2C, but is significantly weaker than PPO.

The full description and numerical analysis of the pairwise comparisons between the algorithms are presented in Table 16, at the Bonferroni-adjusted significance level and with the following additions:

- Effect sizes (Cohen’s d),

- Statistical significance tests (t-test),

- Correction for multiple comparisons (Bonferroni).

Table 16.

Full description and numerical analysis of the results of the experiments.

Table 16 shows that:

- PPO vs. Double DQN: Largest effect (very large effect) and a statistically significant difference. PPO significantly outperforms Double DQN.

- A2C vs. Double DQN: Large effect and also a significant difference.