Abstract

Intrusion prevention and classification are common research topics in cyber security. Models built from training data may fail to prevent or classify intrusions accurately if the dataset is imbalanced. Most researchers employ SMOTE to balance the dataset. However, SMOTE does not address several constraints associated with such datasets, including handling diverse data types, preserving the data distribution, capturing non-linear relationships, and avoiding oversampling noise. The novelty of this work lies in addressing the issues associated with data distribution and SMOTE by employing Conditional Tabular Generative Adversarial Networks (CTGANs) on the NSL_KDD and UNSW_NB15 datasets. The balanced input corpus is fed into a CNN model to predict intrusions. The CNN model comprises two convolution layers, max pooling, ReLU as the activation function, and a dense layer. Model performance is measured using accuracy, recall, precision, specificity, and F1-score. The study shows that CTGAN improves the intrusion detection rate: the high-quality synthetic samples generated by CTGAN significantly enhance CNN-based intrusion detection performance on imbalanced datasets. This demonstrates the potential for deploying GAN-based oversampling techniques in real-world cybersecurity systems to improve detection accuracy and reduce false negatives.

Keywords:

intrusion detection; synthetic minority oversampling technique (SMOTE); conditional tabular generative adversarial network (CTGAN); convolutional neural network (CNN)

MSC:

68M25

1. Introduction

The internet has proven its benefits for commercial and everyday real-time use. At the same time, familiarity with the internet has allowed attackers to capture personal data and information by launching various attacks to gain control over an individual’s system. Encryption methods have been employed to protect data, but attack technologies have also evolved to deceive security systems, making it more challenging to protect data [1]. Intrusion Detection Systems (IDSs) make it difficult for an attacker to exploit the vulnerabilities of a system. An IDS is a robust and effective tool for securing a system: it scans network traffic for malicious events and alerts the network administrator. IDSs fall mainly into two categories: network-based and host-based. Network Intrusion Detection Systems (N-IDSs) monitor entire networks, whereas Host IDSs (H-IDSs) monitor system logs. IDSs can be built upon signature-, anomaly-, rule-, or data-mining-centred techniques. ML-based N-IDSs rely explicitly on feature engineering, whereas deep-learning models implicitly learn the features inherent in the dataset. Huajuan Ren et al. [2] introduced a multi-class classification model named DUEN, which draws a subset of the training data in each iteration to obtain a superior ensemble. Such an IDS not only improves performance but also improves the efficiency of the classification model, enhancing real-time threat detection [3].

These ML models, developed on feature sets, need to adapt to the emerging requirements of Information and Communication Technologies (ICT). SMOTE and its successors are prevalently used to balance datasets that contain noise or outliers. They create synthetic samples by linear interpolation, which oversimplifies data patterns and does not capture the intricacies of the underlying data distribution, since non-linear and high-dimensional correlated features are not captured. Such oversampling methods have significant consequences. For instance, SMOTE cannot handle datasets that mix data types such as integers, floating-point numbers, categorical values, and text. Furthermore, the method relies on the density of the minority class to generate synthetic records, a strategy that is not suitable for sparse datasets [4]. Feature engineering uses domain knowledge to retrieve significant features from the network packet flow. Yang, Y et al. [5] demonstrated various methodologies to fine-tune feature extraction, such as adaptive scaling, reconstruction, feature compression, and feature matching. Another work by Asaad Balla et al. [6] applied near miss, one-sided selection, and ADASYN, integrated the results with CNN-LSTM features, and showed that the resultant model was able to detect new threats. ML-based models fail to predict or classify new threats due to adversarial attacks on the input dataset. Many researchers have proposed methodologies that emphasize the optimal feature selection and data imbalance problems associated with ML-based feature selection for N-IDSs [7]. To address these issues, many solutions, including under-sampling and oversampling, have been proposed. Thi-Thu-Huong Le et al. [8] introduced an ensemble model of CatBoost, LightGBM, and XGBoost to solve imbalance issues and concluded that XGBoost performs better than CatBoost and LightGBM, with improved accuracy compared to training on the imbalanced datasets. Turukmane A et al. [9] presented a mud-ring algorithm along with a multi-layer SVM, and the results show better accuracy. Deep-learning approaches have also been employed to tackle the challenges of data imbalance [10,11,12]. Variants of the deep neural network, such as the Siamese neural network, have been explored to address data imbalance at the algorithm level instead of at the data level [13,14], and a cost-sensitive NN centred on focal loss was discussed by Mulyanto M et al. [15]. A generative adversarial network comprises a generator, which generates data, and a discriminator, which compares generated data with real data; it can learn from unknown data and generate new similar samples [16,17]. This emerging field can be used to enhance the anomaly detection ability of IDSs [18,19]. The above discussion confirms that imbalanced data cause severe problems when the intrusion class outnumbers the benign class of the dataset. Researchers often skip the data balancing phase, which can cause model performance to decline because the model ignores important features that could be derived from the minority class and favours the majority classes. This may lead to overfitting: the model cannot generalize to identify new patterns, and the false negative rate increases, which allows attacks to go unnoticed. Traditional metrics are not suitable for such scenarios, so the model must be assessed using distinct measures such as ROC-AUC, precision, F1-score, and recall [20]. To balance the dataset, most researchers use SMOTE. SMOTE uses a minority class sample and its closest neighbour to generate new data.
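The interpolation step that SMOTE relies on can be illustrated with a minimal sketch. This is a simplification rather than the reference SMOTE algorithm: it uses only the single nearest minority neighbour (SMOTE normally picks among k neighbours), and the function name `smote_like_sample` and the toy data are hypothetical.

```python
import numpy as np

def smote_like_sample(minority: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Create one synthetic point on the segment between a random minority
    sample and its nearest minority neighbour (simplified, k = 1)."""
    i = rng.integers(len(minority))
    x = minority[i]
    dists = np.linalg.norm(minority - x, axis=1)
    dists[i] = np.inf                      # exclude the sample itself
    neighbour = minority[np.argmin(dists)]
    lam = rng.random()                     # interpolation weight in [0, 1)
    return x + lam * (neighbour - x)

rng = np.random.default_rng(0)
# Toy minority class: three points lying on the line y = x.
minority = np.array([[0.0, 0.0], [1.0, 1.0], [4.0, 4.0]])
synthetic = np.array([smote_like_sample(minority, rng) for _ in range(100)])
```

Every synthetic point falls on a straight segment between two existing minority samples, which is why purely interpolation-based oversampling cannot reproduce non-linear structure in the data.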

Techniques other than SMOTE can be used to find intrusions [21]. This reveals an opportunity to experiment with novel methods such as CTGAN. CTGAN is a valuable option for oversampling unbalanced data because of the high quality of its output: it applies encoding-decoding algorithms to handle categorical and textual data, and it learns the overall data distribution so that correlated features are maintained. This helps to create synthetic records that are comparable to the original dataset. The deep learning architecture of CTGAN can capture high-dimensional data and non-linear relationships between features. CTGAN generates synthetic tabular data and handles mixed data types well. The architecture of CTGAN can be described in four layers. The first layer consists of a generator and a discriminator: the generator is responsible for producing realistic data samples, and the discriminator distinguishes between real and fake data samples. The second layer uses conditional generation, which controls the generation of specific categories in categorical attributes and ensures that rare classes are represented properly. After this, the conditional vector is appended to the generator and discriminator output. It amplifies the noise by learning the distribution on majority samples. The third layer normalizes the samples using a variational Gaussian mixture model, so that complex distributions are preserved. Finally, the last layer ensures that each mini-batch during training contains an equal distribution of classes, so that underrepresented classes are not ignored by the model. CTGANs handle categorical data well, use mode-specific normalization that improves numerical scaling, scale to larger tabular datasets, and are capable of generating high-quality synthetic samples that retain the statistical properties of the original data. These advantages allow for investigating innovative data science techniques that illustrate the ability of deep generative models to enrich tabular data.
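How conditional sampling gives rare categories more weight during training can be sketched as follows. The sketch assumes the log-frequency weighting used in CTGAN's training-by-sampling scheme as we understand it; the helper name `log_frequency_pmf`, the `+ 1` smoothing term, and the toy counts are illustrative assumptions, not values from this study.

```python
import math
from collections import Counter

def log_frequency_pmf(values):
    """Probability of picking each category as the training condition,
    with mass proportional to log(count + 1) instead of the raw count."""
    counts = Counter(values)
    weights = {v: math.log(c + 1) for v, c in counts.items()}
    total = sum(weights.values())
    return {v: w / total for v, w in weights.items()}

# Toy column: 9000 'normal' rows and 1000 'attack' rows (raw attack share 0.10).
labels = ["normal"] * 9000 + ["attack"] * 1000
pmf = log_frequency_pmf(labels)
raw_share = labels.count("attack") / len(labels)
```

Under this weighting the rare category is selected far more often than its raw share would suggest, while the frequent category still remains the more likely choice.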

Previous work [22] focused on feature extraction using CNN, RNN, and Autoencoder models, with an ensemble classifier composed of RF and SVM that used soft voting to obtain the final accuracy, whereas [23] aimed to reveal the features that contribute to the final outcome using Shapley additive explanations, with feature selection carried out using the SelectKBest() method. In both works, it was observed that the datasets were not distributed in equal proportions, i.e., the data were imbalanced. To overcome this issue, we propose the use of CTGAN to balance the dataset. Domains such as health care and finance already employ CTGAN for this purpose, and these applications show its ability to handle complex tabular and highly imbalanced data distributions. In intrusion detection, CTGAN is still an emerging technology with limited real-world integration. The contribution of this research lies in applying CTGAN to the widely used NSL_KDD and UNSW-NB15 datasets and the Malware dataset, showing its usage in the cyber security domain. The contributions of the study are threefold:

- (1) Validation of CTGAN in creating realistic minority class samples in IDS dataset.

- (2) Integration of CTGAN along with the CNN classifier to enhance the detection performance.

- (3) Comparative analysis of the proposed method with traditional oversampling techniques showing superior accuracy and class balance.

The paper is structured as follows. Section 1 discusses advances in computer network security, the importance of feature engineering for balancing data, and existing research findings; it also lists the drawbacks of SMOTE techniques and the features of CTGAN that help to address them. Section 2 reviews existing works on data balancing and intrusion detection using SMOTE. Section 3 describes the CTGAN-based methodology used to balance the datasets. Outcomes are evaluated in Section 4. Conclusions and future work are presented in Section 5.

2. Literature

Jingrong Mo et al. [1] designed the ENS_CLSTM model to extract spatial and temporal features using CNN and Bi-LSTM, respectively; the selected features are then fed to a DNN model. They compared the performance of the model on the NSL-KDD, CICIDS, UNSW-NB15, and ISCX-IDS2012 datasets and reported 99.99% accuracy on NSL-KDD, CICIDS, and UNSW-NB15, and 99.74% accuracy on ISCX-IDS2012. Oluwadamilare Harazeem Abdulganiyu et al. [4] proposed the XIDINTFL-VAE framework, which generates synthetic data so that the model can learn and classify better by boosting the minority class. It utilizes class-wise focal loss (CWFL) and a variational autoencoder with XGBoost to solve data imbalance issues effectively. It attained a 94.74% F1-score, a 99.67% accuracy rate, and a moderate recall of 89.41%. The work has performance limitations: depending on the parameter selection, CWFL requires substantial computing resources. Yue Yang et al. [5] explored new feature engineering methodologies to solve the problems originating from imbalanced data. They proposed a novel methodology for network intrusion detection built on reconstruction and feature matching to provide multi-class classification. The technique incorporates three main phases: Adaptive Scaling, Reconstruction and Feature Compression, and Feature Matching. Data gathered at the edge are processed through these phases. Many researchers have focused on using various feature engineering techniques to address data imbalance, but they did not consider metrics that can evaluate the performance of these sampling methods.

Asaad Balla et al. [6] worked on the Morris power and CICIDS2017 datasets, employing various resampling methods such as ADASYN, SMOTE, random sampling, near miss, and OSS. The balanced dataset was then used to train a CNN-LSTM binary classification model for a SCADA system. The results show the impact of the imbalanced dataset on DL-based SCADA IDSs. Ahmed Abdelkhalek [7] introduced resampling methods other than SMOTE, such as the Adaptive Synthetic and Tomek Links (AYATL) algorithms. The author conducted experiments with various DL models (MLP, CNN, DNN, CNN-BLSTM) for binary classification using the NSL-KDD dataset and reported that employing ADASYN + Tomek Links to balance minority class data improves NIDS performance compared to the DL models alone. Thi-Thu-Huong Le et al. [8] proposed ensemble classification using hybrid oversampling and under-sampling methods. SMOTE and BorderlineSMOTE were the oversampling methods, and Tomek Links and Edited Nearest Neighbours were the under-sampling methods. The CHAD2020 and IoTID20 datasets were used to conduct experiments. The classifiers used were CatBoost, LightGBM, and XGBoost. BorderlineSMOTE Tomek + XGBoost outperformed the other combinations, with an accuracy of 98%. SMOTE alternatives have also been used to balance data and reduce over-fitting; for example, Anil V Turukmane et al. [9] worked on advanced SMOTE along with techniques for feature extraction and optimization, namely M-SvD and ONgO, respectively. The pre-processed data are fed to the M-MultiSVM, whose parameters are tuned using the mud-ring algorithm, attaining accuracy levels of 99.89% and 97.535% for the CICIDS and UNSW-NB15 datasets. Mohammed K et al. [20] introduced SMOTE_ENN along with the Parzen Estimator algorithm as the parameter optimizer and concluded that RF with HyperOpt achieved 99.82% in 46.9 s.

Akdeas Oktanae Widodo et al. [24] applied log normalization to stabilize the data and Recursive Feature Elimination (RFE) to select features, addressing issues of data imbalance. They also used SMOTE to balance the NSL_KDD and CIC-IDS2017 corpora. RF and XGBoost were employed to compare performance on the imbalanced and balanced datasets. The accuracy was similar with and without SMOTE, but improvements were found in other metrics such as precision, F1-score, and recall. Fawzia Omer Albasheer et al. [25] used SMOTE to augment the minority data, and the data were then processed with ENN to remove outliers. They proposed the Jaya optimization technique to reduce the dimensionality of UNSW_NB15 and NSL_KDD. SMOTE with Edited Nearest Neighbours (SMOTE-ENN) was used to balance these datasets. Decision Tree, Bagging, Random Forest, J48, and extra tree classifiers were used, and the authors concluded that the extra tree classifier achieved the highest accuracy of 99.13% and 99.94% for UNSW_NB15 and NSL_KDD, respectively. Eid et al. [26] utilized SMOTE for balancing the IIoT-specific WUSTL-IIOT-2021 dataset and UNSW_NB15. The CNN classifier was tuned using systematic hyperparameter optimization. The accuracy of the proposed model is 99.9%. Talukder et al. [27] applied SMOTE-TomekLink to the WSN-DS dataset. Light gradient boosting (LGB), Decision Tree, Extreme boosting, Multilayer Perceptron, Random Forest, and K-Nearest Neighbour algorithms were applied. Random Forest achieved an accuracy of 99.78% for binary and 99.92% for multiclass classification.

Edosa Osa et al. [28] used a DNN with ReLU as the activation function and the Adam optimizer to adapt the learning rate. The CICIDS 2017 dataset was balanced using SMOTE and Random Sampling. This achieved an accuracy of 99.68%. Pramanick et al. [29] used the binary bat algorithm for feature selection and utilized SMOTE-ENN for balancing the UNSW_NB15 and NSL_KDD datasets. Missing values were replaced with the mean value of the attribute, and duplicate records were dropped. A label encoder was used to convert categorical values into numerical values. XGBoost, KNN, Random Forest, and LightGBM classifiers were used for prediction. RF outperformed the other classifiers, with an accuracy of 99.7% for NSL_KDD and 97.3% for UNSW_NB15. Five distinct methods, including cost-sensitive learning, random under-sampling and random over-sampling, SMOTE, bagging, and pasting using the LR algorithm, were described by Eid et al. [30] in another attempt to balance the WUSTL-IIOT-2021 dataset. Additionally, a comparison study was conducted employing RF, DT, KNN, SVM, LR, and NB for binary and multiclass classification with and without SMOTE. SMOTE, bagging and pasting, and random under-sampling all reached 99.67% accuracy. DT and RF outperformed the other algorithms when SMOTE was used, with a maximum accuracy of 99.98%.

T. Anitha Kumari et al. [31] assessed an ensemble model along with borderline SMOTE, which generates synthetic data near the boundary between the minority class and its nearby samples. This method employed a variety of tools and attained a performance of 99.8% compared to standard methodologies. Bidyapati Thiyam et al. [32] presented a casual inference imbalanced ratio and showed that this metric offers an improvement in the classification achieved by the ADASYN-IHT and Boruta-ROC methods. Intrusion detection and classification models are not limited to the computer network of an organization; they can also be applied to the Internet of Things (IoT). The data imbalance problem in the IoT domain can be addressed using SMOTE along with other methods, such as CIDH-ODLID, proposed by Manohar Srinivasan et al. [33]. This model used the snake optimization algorithm to select hyperparameters for the ESN, and improved recall, precision, F1-score, and accuracy were reported. Shiyu Wang et al. [34] focused on temporal and spatial feature extraction using Res-TranBiLSTM (ResNet, Transformer, and BiLSTM). SMOTE-ENN was used to balance NSL_KDD, MQTTset, and CICIDS 2017. The accuracy obtained by the proposed model is 99.56%, 99.15%, and 90.99% for the MQTTset, CICIDS 2017, and NSL_KDD datasets, respectively. Balaji et al. [35] put forward a Dynamic Distributed Generative Adversarial Network (DDGAN) for intrusion detection. SMOTE was used to balance the HAR dataset, Modified PCA was used for feature reduction, and Improved Firefly Optimization (IFO) was used for feature selection. For classification, a hybrid deep learning-based convolutional neural network with an adaptive neuro-fuzzy inference system was employed, achieving an accuracy of 94%. Damtew et al. [36] focused on improving the accuracy of extreme minority classes by using collaborative feature selection for feature selection and Synthetic Multi-Minority-Oversampling (SMMO) for balancing the NSL_KDD dataset. The classifiers used were J48, BayesNet, Random Forest, and AdaBoostM1. The accuracy of the suggested model is 99.84%. Sahar Soliman et al. [37] proposed a model that used SMOTE to solve the imbalance problem, employed the SVD method to extract optimal features, and compared the results obtained from various ML and DL algorithms applied to an IoT dataset. They concluded that ML models have a lower error rate than DL models. The Internet of Things is an emerging technology that enables things to communicate with each other over the internet. Another work addressing imbalance in such networks was presented by Omar Elnakib et al. [38], who proposed an enhanced anomaly-based ID deep learning multiclass classification model (EIDM). The authors performed a comparative study of deep learning models and EIDM using the CICIDS2017 dataset and concluded that EIDM achieved improved accuracy compared to the other methods. Haizhou Wang et al. [39] presented a work that uses transfer learning to address the challenges of data imbalance in cyber security, specifically focusing on return-oriented programming payloads. They obtained a moderate false positive rate of 0.0290 and an F1-score of 0.975 across three different domains. Mantas Bacevicius et al. [40] performed experiments on various algorithms such as softmax regression, Decision Tree variants like ID3 and CART, and ANN models such as deep learning and the multi-layer perceptron. They also considered the explainable AI (XAI) methods LIME and SHAP for feature extraction. The assessment concluded that the DT variant CART outperformed the others, with an F1-score of 0.998. Tao Wu et al. [41] proposed the use of K-means with SMOTE to balance the dataset. The experiment was conducted on the NSL_KDD dataset. With an enhanced RF classifier, the model obtained an accuracy of 78.47%. Gan et al. [42] performed intrusion detection on the NSL_KDD dataset using a CNN algorithm. SMOTE was used to balance the dataset. Log loss and a gradient coordination mechanism help to improve the model’s performance. The proposed model achieved an accuracy of 98.73% and 94.55% for binary and multiclass classification, respectively. Mohammad Arafah et al. [43] used a denoising autoencoder and a Wasserstein generative adversarial network. The AE-WGAN extracts highly representative features and generates realistic synthetic attacks. The experiments were carried out on NSL-KDD and CICIDS-2017. Accuracy, precision, recall, and F1-score were used to evaluate the model. The model achieved an accuracy of 99.99%. Hancheng Long et al. [44] explored BOA-ACRF for handling data imbalance. ACGAN was used to generate numeric data samples, whereas BOA was used to find the optimal parameters of the model. CIC-IDS-2017, CIC-UNSW-NB15, and NSL-KDD were the datasets used. Accuracy, precision, recall, and F1-score were used to assess the performance of the model. The RF classifier achieved higher accuracy when the proposed technique was used for data balancing.

Qinglei Yao et al. [45] used the CWGAN-GP technique to balance the NSL-KDD and UNSW-NB15 datasets. The proposed technique finds the characteristics of the data and adds additional data to transform unsupervised learning into supervised learning. The proposed model achieved an accuracy of 99%. Hayette Zeghida et al. [46] experimented with balancing the MQTT dataset using GAN-based data augmentation for intrusion detection. CNN-RNN, CNN-LSTM, and CNN-GRU were the classifiers used. All the classifiers achieved an accuracy of 99.99%. Shu Feng et al. [47] explored the use of CGFL to resolve the challenges of data distribution. The datasets used were CIC-IDS2017, NSL-KDD, and CSE-CIC-IDS2018. The ResNet20 and EfficientNet-B0 classifiers were fed with the balanced datasets. ResNet20 outperformed EfficientNet-B0, achieving an accuracy of 96.89% on NSL-KDD, 92.19% on CIC-IDS2017, and 89.08% on CSE-CIC-IDS2018. Wai Yie Leong et al. [48] performed a comprehensive technical review of AI-based fraud detection methods. The AI techniques were applied in the e-commerce sector, where accuracy, real-time fraud prevention, and operational efficiency were improved. Using AI algorithms for intrusion detection can help to earn the trust of customers.

Table 1 and Table 2 show that the SMOTE and ADASYN techniques are widely used for dataset balancing. These methods produce synthetic samples by interpolation within minority classes. Their major drawbacks are that they capture only linear relationships, since they are based on an assumption of linearity; they do not work with diverse data types; and they fail to handle outlier data points. These methods also carry a higher risk of overfitting the model. Table 3 lists other available methods that can be used for data balancing, and Table 4 shows the strengths and weaknesses of existing models. These drawbacks can be addressed by CTGAN. It handles tabular datasets comprising mixed data types, generates realistic records of high quality, and uses deep learning architectures to learn the data distribution. Machine learning pipelines could be enhanced by using CTGAN, as it fills the gaps left by traditional methods such as SMOTE and offers a more flexible and advanced way of balancing datasets.

Table 1.

Existing works on SMOTE for data balancing.

Table 2.

Existing works on ADASYN for data balance.

Table 3.

Existing works on other methods for data balance.

Table 4.

Performance of existing works.

3. Proposed Work

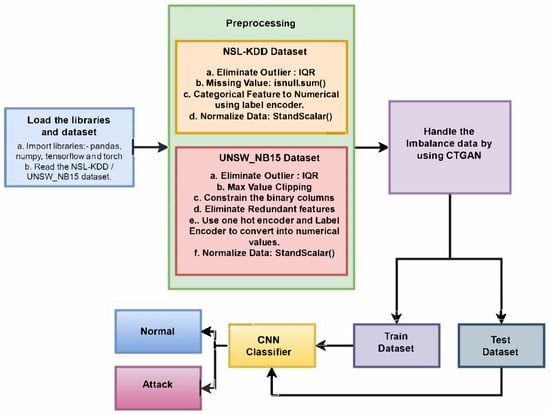

The proposed framework comprises the following steps: pre-processing, data balancing, and classification. CNN was chosen as the primary classifier because it effectively learns and generalizes over high-dimensional structured datasets such as NSL-KDD and UNSW-NB15. CNNs are well suited to structured data because they capture local dependencies and spatial hierarchies even when the dataset is not image-based. They automatically extract relevant patterns, making the model efficient at learning complex intrusion patterns. Combining CNN with CTGAN to handle data imbalance enhances the robustness of the intrusion detection system and improves performance on minority classes. CTGAN is used to balance the records, and CNN is used for classification. The proposed work is shown in Figure 1. Within this framework, issues such as pre-processing complexity and class imbalance can be addressed. The NSL_KDD and UNSW_NB15 datasets are used to train and evaluate the presented work; both are freely available on Kaggle.

Figure 1.

Proposed system architecture with NSL_KDD and UNSW_NB15 datasets.

NSL_KDD dataset: This is a widely used dataset containing both regular and malicious class labels. The malicious class is sub-categorized into four attack types, i.e., DoS, scanning or probing, User to Root, and Remote to Local. The dataset consists of 42 attributes, out of which 24 are of integer data type, 15 are of float data type, and 4 are of object type, as shown in Table 5. The total number of records is 125,972. The KDDTrain+ file is used to train the model, and the KDDTest+ file is used to test it.

Table 5.

Data type details for the NSL_KDD dataset.

UNSW_NB15 dataset: This dataset was generated with the IXIA PerfectStorm tool. It covers nine types of attacks: Analysis, Backdoors, DoS, Exploits, Generic, Reconnaissance, Shellcode, Worms, and Fuzzers. There are 49 features in the dataset, all of which were considered for training and testing. Here, 175,341 records were used to train the model, whereas 82,332 records were used to test it. Data type details are given in Table 6.

Table 6.

Data type details for the UNSW_NB15 dataset.

3.1. Data Pre-Processing and Feature Engineering

NSL_KDD Dataset: The Interquartile Range (IQR) approach was used to handle outliers in the dataset, as outliers can have a major impact on model accuracy. The IQR is calculated as the difference between each feature’s 75th percentile (Q3) and 25th percentile (Q1). Data points that fall outside the range from Q1 − 3*IQR to Q3 + 3*IQR were eliminated. Next, missing values were checked using the isnull().sum() function. No missing values were found; hence, no explicit imputation method such as mean or median imputation was used. The numerical feature values differ greatly in scale, so the data must be normalized such that all numerical features fall in the same range. To achieve this, StandardScaler is applied to standardize each feature to a mean of 0 and a standard deviation of 1. The outcome feature takes values such as ‘normal’, ‘neptune’, ‘back’, ‘warezclient’, ‘ipsweep’, ‘land’, ‘portsweep’, ‘phf’, ‘teardrop’, ‘nmap’, ‘imap’, ‘satan’, ‘smurf’, ‘pod’, ‘guess_passwd’, ‘warezmaster’, ‘ftp_write’, ‘multihop’, ‘rootkit’, ‘buffer_overflow’, ‘loadmodule’, ‘spy’, and ‘perl’. This feature is converted to a binary label: the value ‘normal’ is mapped to 0, and all other values are mapped to 1. Label encoding is used to convert the protocol_type, service, and flag features into numerical values. The label encoder was chosen for its ease of use and because, unlike one-hot encoding, it does not add dimensions during transformation. Once the data were pre-processed, the class distribution was checked and the counts of the majority and minority classes were noted: class 0 accounted for 53.45% of the records (around 67,342 samples) and class 1 for 46.54% (around 58,630 samples). Since the dataset was imbalanced, we applied CTGAN to balance it. CTGAN learns feature interdependencies and then creates realistic synthetic samples that maintain the feature distributions and relationships of the original dataset, whereas SMOTE techniques depend on interpolation. After applying CTGAN, class 0 had 128,825 samples (51.14%) and class 1 had 123,119 samples (48.86%). At this point, the two classes were almost equal.
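The NSL_KDD pre-processing steps described above can be sketched with pandas and scikit-learn. This is a minimal illustration under stated assumptions, not the code used in the study: the helper names `iqr_filter` and `preprocess`, the column name `label`, and the toy data frame are hypothetical.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder, StandardScaler

def iqr_filter(df: pd.DataFrame, columns: list, k: float = 3.0) -> pd.DataFrame:
    """Keep rows whose values lie within [Q1 - k*IQR, Q3 + k*IQR] for every column."""
    q1 = df[columns].quantile(0.25)
    q3 = df[columns].quantile(0.75)
    iqr = q3 - q1
    inside = ((df[columns] >= q1 - k * iqr) & (df[columns] <= q3 + k * iqr)).all(axis=1)
    return df[inside]

def preprocess(df, numeric_cols, categorical_cols, label_col="label"):
    df = iqr_filter(df, numeric_cols).copy()
    # Binary target: 'normal' -> 0, every attack name -> 1.
    df[label_col] = (df[label_col] != "normal").astype(int)
    # Label-encode categorical features (no extra dimensions are added).
    for col in categorical_cols:
        df[col] = LabelEncoder().fit_transform(df[col])
    # Standardize numeric features to zero mean and unit standard deviation.
    df[numeric_cols] = StandardScaler().fit_transform(df[numeric_cols])
    return df

toy = pd.DataFrame({
    "duration": [0.0, 1.0, 2.0, 1.0, 500.0, 3.0, 2.0, 1.0],
    "src_bytes": [100.0, 120.0, 90.0, 110.0, 105.0, 95.0, 115.0, 100.0],
    "protocol_type": ["tcp", "udp", "tcp", "icmp", "tcp", "tcp", "udp", "tcp"],
    "label": ["normal", "neptune", "normal", "smurf", "normal", "normal", "satan", "normal"],
})
clean = preprocess(toy, ["duration", "src_bytes"], ["protocol_type"])
```

In the toy frame, the row with a duration of 500.0 lies outside the 3*IQR range and is removed before encoding and scaling.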

UNSW_NB15 Dataset: Outliers can affect the model’s performance; hence, it was important to address this issue using the IQR method, which was applied to the “dur” attribute. The attribute’s lower bound is −2.004, its upper bound is 2.67, its maximum value is 59.99, and its minimum value is 0. The number of outliers detected was 9889. After outlier elimination, max-value clipping was applied to “spkts”, “sloss”, and “dloss”: values of spkts greater than 787.2 were replaced by 787.2, sloss was capped at 172.8, and dloss was capped at 1027.2. Binary columns such as “is_ftp_login” and “ct_ftp_cmd” were constrained to ensure proper encoding. Redundant attributes such as “swin”, “stcpb”, “dtcpb”, and “dwin” were dropped. The “proto” feature was converted into numerical values using a one-hot encoder, and the “service” and “state” features were converted using the label encoder. Highly correlated features such as “dpkts” and “is_ftp_login” were dropped. StandardScaler was used to normalize the data. After pre-processing, the class distribution was checked: class 1 had 119,341 samples (68.06% of the data), and class 0 had 56,000 samples (31.94%). CTGAN was then used to balance the dataset. After applying CTGAN, class 1 had 189,842 samples (67.78%) and class 0 had 90,702 samples (32.22%).
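The clipping, column dropping, and one-hot encoding steps for UNSW_NB15 could look roughly like the following pandas sketch. The caps and column names are taken from the text; the helper name `prepare_unsw` and the toy rows are hypothetical.

```python
import pandas as pd

CAPS = {"spkts": 787.2, "sloss": 172.8, "dloss": 1027.2}
REDUNDANT = ["swin", "stcpb", "dtcpb", "dwin"]

def prepare_unsw(df: pd.DataFrame) -> pd.DataFrame:
    out = df.copy()
    # Replace values above each cap with the cap itself.
    for col, upper in CAPS.items():
        out[col] = out[col].clip(upper=upper)
    # Drop redundant attributes if they are present.
    out = out.drop(columns=REDUNDANT, errors="ignore")
    # One-hot encode the protocol feature.
    return pd.get_dummies(out, columns=["proto"])

toy = pd.DataFrame({
    "spkts": [10.0, 900.0],
    "sloss": [0.0, 200.0],
    "dloss": [5.0, 1027.2],
    "swin": [255, 255],
    "proto": ["tcp", "udp"],
})
prepared = prepare_unsw(toy)
```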

3.2. Classification Model

To discriminate between attack and benign network traffic, Convolutional Neural Networks (CNNs) were trained independently on the NSL_KDD and UNSW_NB15 datasets. The same CNN architecture was used for both datasets: two convolution layers, each followed by max pooling, then flattening and fully connected layers. The first convolution layer, with 64 filters, a kernel size of 3, and ReLU as the activation function, captures low-level features. A max pooling layer with a pool size of 2 then reduces the dimensionality. A second convolution layer, with 128 filters, a kernel size of 3, and ReLU as the activation function, enhances feature extraction and is followed by a second max pooling layer. The flatten layer converts the 2D feature maps into a one-dimensional vector, which is fed into the fully connected layers. A fully connected layer with 64 neurons and ReLU as the activation function learns an abstract representation. The model was compiled with the Adam optimizer at a learning rate of 0.001 and a binary cross-entropy loss function. It was trained with a batch size of 32 over 10 epochs. Accuracy and loss are measured at each epoch and play an important role in evaluating the model. If the validation loss stops improving, early stopping is triggered to avoid overfitting.
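A model with this configuration could be written in Keras roughly as follows. This is a sketch under assumptions rather than the authors' implementation: the text does not state the convolution type, so one-dimensional convolutions over the feature vector are assumed, and the input width of 41 features, the early-stopping patience of 3, and the function name `build_cnn` are illustrative.

```python
from tensorflow import keras
from tensorflow.keras import layers

def build_cnn(n_features: int) -> keras.Model:
    """Two Conv1D blocks, flatten, dense(64), and a sigmoid output for binary labels."""
    model = keras.Sequential([
        keras.Input(shape=(n_features, 1)),   # each record is a 1-channel sequence
        layers.Conv1D(64, kernel_size=3, activation="relu"),
        layers.MaxPooling1D(pool_size=2),
        layers.Conv1D(128, kernel_size=3, activation="relu"),
        layers.MaxPooling1D(pool_size=2),
        layers.Flatten(),
        layers.Dense(64, activation="relu"),
        layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=0.001),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model

model = build_cnn(n_features=41)

# Stop training when validation loss stops improving (patience is an assumption).
early_stop = keras.callbacks.EarlyStopping(
    monitor="val_loss", patience=3, restore_best_weights=True
)
# Training call, given arrays X_train of shape (n, 41, 1) and y_train of shape (n,):
# model.fit(X_train, y_train, validation_split=0.2,
#           epochs=10, batch_size=32, callbacks=[early_stop])
```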

3.3. Algorithm for NSL_KDD Dataset

Step 1: Load the libraries and dataset. Import the necessary libraries such as pandas, numpy, tensorflow and torch for CTGAN. Read the NSL_KDD dataset.

Step 2: Pre-process the dataset. Eliminate the outliers using the IQR technique. Check for missing values using isnull().sum(). Apply the StandardScaler() method to normalize the data. The class label is binarized, with values of zero and one assigned to normal and all other records, respectively. Categorical features are transformed to numerical values using a label encoder.

Step 3: Data balancing. First check the class distribution and count the occurrences of each class. Apply CTGAN to balance the data using synthetic records of minority samples. To balance the data, combine the synthetic records and original records.

Step 4: Data partition. The input corpus is partitioned into the training set and testing set.

Step 5: Training phase. The CNN model is trained for 10 epochs with a batch size of 32. Binary cross-entropy is used as the loss function. The Adam optimizer is employed with a learning rate of 0.001. The CNN architecture consists of the following layers:

- (1) The first layer is a convolution layer with 64 filters, a kernel size of 3, and ReLU as the activation function.

- (2) The second layer is a max pooling layer with a pool size of 2.

- (3) The third layer is a convolution layer with 128 filters, a kernel size of 3, and ReLU as the activation function.

- (4) The fourth layer is a max pooling layer with a pool size of 2.

- (5) The fifth layer is the flatten layer.

- (6) The sixth layer is fully connected, with 64 neurons and ReLU as the activation function.

- (7) The last layer is the output layer, which performs binary classification with a single neuron and the sigmoid activation function.

Step 6: Prediction of testing data set.

Step 7: Evaluation phase. The model is assessed by metrics such as precision, accuracy, recall and F1-score.
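The seven-layer architecture listed in Step 5 can be traced layer by layer to see how the feature dimension shrinks and where the trainable parameters sit. The sketch below assumes one-dimensional convolutions over the feature vector with a single input channel, “valid” padding, stride 1 and non-overlapping pooling; none of these settings are stated in the text. The input length of 41 is only an illustrative placeholder, and `trace_cnn` and its helpers are hypothetical names.

```python
def conv1d(length, channels_in, filters, kernel):
    """Output length and trainable parameters of a 'valid', stride-1 Conv1D."""
    params = (kernel * channels_in + 1) * filters  # weights plus one bias per filter
    return length - kernel + 1, params


def max_pool(length, pool):
    """Output length of non-overlapping max pooling (no trainable parameters)."""
    return length // pool


def dense(units_in, units_out):
    """Trainable parameters of a fully connected layer (weights plus biases)."""
    return (units_in + 1) * units_out


def trace_cnn(input_length, channels=1):
    """Return a list of (layer, output_shape, parameters) for the seven layers."""
    rows = []
    length, p = conv1d(input_length, channels, filters=64, kernel=3)
    rows.append(("conv1 + ReLU", (length, 64), p))
    length = max_pool(length, 2)
    rows.append(("max_pool1", (length, 64), 0))
    length, p = conv1d(length, 64, filters=128, kernel=3)
    rows.append(("conv2 + ReLU", (length, 128), p))
    length = max_pool(length, 2)
    rows.append(("max_pool2", (length, 128), 0))
    flat = length * 128
    rows.append(("flatten", (flat,), 0))
    rows.append(("dense + ReLU", (64,), dense(flat, 64)))
    rows.append(("output + sigmoid", (1,), dense(64, 1)))
    return rows


# 41 is a placeholder input length, not a value taken from the paper.
for name, shape, params in trace_cnn(41):
    print(f"{name:18s} {str(shape):12s} {params}")
```

Under these assumptions most parameters end up in the first fully connected layer, because the flattened vector is much wider than either convolution kernel.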

3.4. Algorithm for UNSW_NB15 Dataset

Step 1: Load the libraries and dataset. Import the necessary libraries such as pandas, numpy, tensorflow and torch for CTGAN. Read the UNSW_NB15 dataset.

Step 2: Pre-process the dataset. Eliminate the outliers using the IQR technique. Apply max value clipping to “spkts”, “sloss” and “dloss”. Constrain the binary columns such as “is_ftp_login” and “ct_ftp_cmd”. Drop redundant attributes such as “swin”, “stcpb”, “dtcpb” and “dwin”. Convert the “proto” feature into numerical values using one-hot encoding, and the “service” and “state” features using a label encoder. Drop highly correlated features such as “dpkts” and “is_ftp_login”. Apply the StandardScaler() method to normalize the data.

Step 3: Data balancing. First check the class distribution and count the occurrences of each class. We apply CTGAN to balance the data using synthetic records of minority samples. To balance the data, combine the synthetic records and original records.

Step 4: Data partition. Divide the input corpus into training and testing sets.

Step 5: Train the CNN model. The CNN model is trained for 10 epochs with a batch size of 32. The loss function is binary cross-entropy. The Adam optimizer is used with a learning rate of 0.001. The CNN architecture consists of the following layers:

- (1) The first layer is a convolution layer with 64 filters, a kernel size of 3, and ReLU as the activation function;

- (2) The second layer is a max pooling layer with a pool size of 2;

- (3) The third layer is a convolution layer with 128 filters, a kernel size of 3, and ReLU as the activation function;

- (4) The fourth layer is a max pooling layer with a pool size of 2;

- (5) The fifth layer is the flatten layer;

- (6) The sixth layer is fully connected, with 64 neurons and ReLU as the activation function;

- (7) The last layer is the output layer for binary classification, with a single neuron and sigmoid as the activation function.

Step 6: Predict the class of test records.

Step 7: Evaluate the model using metrics such as precision, accuracy, recall, and F1-score.
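The two encodings used in Step 2 differ in what they produce: one-hot encoding expands a categorical column into one binary column per category, while label encoding maps each category to a single integer. A minimal standard-library sketch follows. The helper names and the sample values are illustrative assumptions, and the categories are sorted alphabetically here, which mirrors how scikit-learn's `LabelEncoder` orders its classes.

```python
def label_encode(column):
    """Map each category to an integer index (categories sorted alphabetically)."""
    classes = sorted(set(column))
    index = {c: i for i, c in enumerate(classes)}
    return [index[v] for v in column], classes


def one_hot_encode(column):
    """Expand a categorical column into one 0/1 column per category."""
    codes, classes = label_encode(column)
    rows = [[1 if code == i else 0 for i in range(len(classes))] for code in codes]
    return rows, classes


# Invented values standing in for the "proto" and "state" columns.
proto = ["tcp", "udp", "tcp", "arp"]
state = ["FIN", "INT", "CON", "FIN"]

print(one_hot_encode(proto))
print(label_encode(state))
```

Label encoding keeps the feature count unchanged but imposes an arbitrary order on the categories, whereas one-hot encoding avoids that order at the cost of extra columns.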

The structures of the NSL_KDD and UNSW_NB15 datasets are different: the NSL_KDD dataset needs only basic encoding and a normalization technique. On the other hand, UNSW_NB15 contains diverse data types, and hence requires additional preprocessing techniques such as the clipping of extreme values, feature pruning, etc. This dataset-specific preprocessing supports better model performance and efficiency, as shown in Table 7.

Table 7.

Preprocessing techniques—NSL-KDD vs. UNSW-NB15.

3.5. Performance Evaluation

The CNN model was trained on the UNSW_NB15 and NSL_KDD datasets and evaluated using a variety of metrics, such as the ROC curve, accuracy, F1-score, precision, recall (sensitivity), and specificity. These metrics determine the model’s capability to distinguish between legitimate and malicious traffic, as well as the way it handles the trade-off between false positives and false negatives.

- 1. Accuracy: Accuracy tells how well a model correctly predicts the test records. It is calculated as the number of correct predictions divided by the total number of predictions.

- 2. Precision: Precision indicates how many of the records predicted as positive are actually positive. It is calculated as the number of records correctly classified as positive divided by the sum of the correctly classified positive records and the falsely classified positive records.

- 3. Recall: Recall indicates how many of the actual positive records the model identifies. It is the ratio of the correctly classified positive records to the sum of the correctly classified positive records and the positive records classified as negative.

- 4. F1-score: The F1-score measures the model’s performance by combining precision and recall as their harmonic mean.

- 5. Specificity: This is the model’s capacity to accurately identify negative (normal) records. It is obtained by dividing the number of correctly classified negative records by the sum of the correctly classified negative records and the falsely classified positive records.

- 6. ROC curve and AUC: The ROC curve plots the true positive rate against the false positive rate across classification thresholds. The AUC is the area under this curve and reflects how often positive records are ranked higher than negative records.
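The threshold-based metrics listed above all follow from the four confusion-matrix counts. The sketch below shows the standard definitions, taking attack records as the positive class; the function name `classification_metrics` and the counts in the example are illustrative assumptions, not values from the experiments.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute the standard binary metrics from confusion-matrix counts.

    tp/fn: attack records predicted as attack/normal.
    tn/fp: normal records predicted as normal/attack.
    """
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)          # also called sensitivity
    specificity = tn / (tn + fp)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


# Invented counts, used only to exercise the formulas.
for name, value in classification_metrics(tp=90, fp=10, tn=80, fn=20).items():
    print(f"{name:12s} {value:.4f}")
```

Precision and specificity share the false positive count, while recall depends on the false negatives, which is why a model can score well on one pair and poorly on the other.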

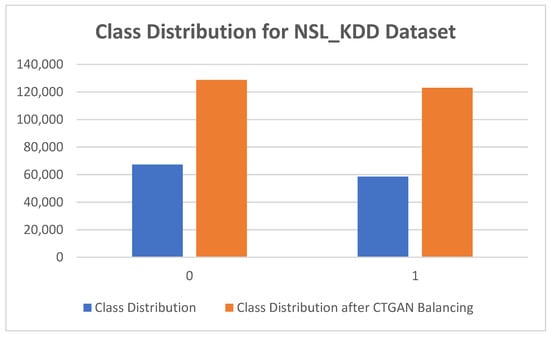

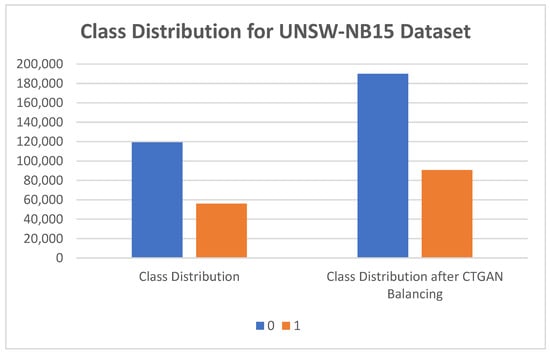

The imbalance of minority classes in the datasets was handled by CTGAN, which generated synthetic samples. The class distribution was visualized before and after balancing using a bar chart to show the effect of synthetic oversampling. After data balancing, the CNN was trained on the dataset and its performance was evaluated. More advanced checks of the synthetic data, such as statistical distribution comparison, t-SNE visualization and duplicate detection using Euclidean distance, were not conducted. The primary focus of the research was to assess the practical impact of CTGAN-generated data using performance metrics.

4. Results

As we can see from Table 8 and Table 9, the NSL_KDD and UNSW_NB15 data suffered from class imbalance. Most models cannot accurately predict the minority class under such conditions. To resolve this, CTGAN was used. Before data balancing, the NSL_KDD dataset comprised 67,342 records in class 0 and 58,630 records in class 1. After class balancing, class 0 contained 128,825 records and class 1 contained 123,119 records, as depicted in Table 8 and Figure 2.

Table 8.

Class distribution for NSL_KDD dataset.

Table 9.

Class distribution for UNSW_NB15 dataset.

Figure 2.

Class distribution for NSL_KDD dataset.

From Table 9 and Figure 3, we can see that the UNSW_NB15 dataset was imbalanced, as class 1 contained 119,341 records and class 0 had 56,000 records. After applying CTGAN, class 1 contained 189,842 records and class 0 contained 90,702 records.
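The reported record counts can be turned into class shares and into the number of records added per class with a few lines of arithmetic. The sketch below uses the NSL_KDD counts given in this section and the UNSW_NB15 counts as reported in Section 3.1 (119,341 records in class 1 and 56,000 in class 0). The helper names `share` and `records_added` are hypothetical, and reading the difference between the counts as synthetic records is an assumption.

```python
def share(counts):
    """Percentage of records per class, rounded to two decimals."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 2) for label, n in counts.items()}


def records_added(before, after):
    """Difference between the per-class counts after and before balancing."""
    return {label: after[label] - before[label] for label in before}


nsl_before = {"class 0": 67_342, "class 1": 58_630}
nsl_after = {"class 0": 128_825, "class 1": 123_119}
unsw_before = {"class 1": 119_341, "class 0": 56_000}
unsw_after = {"class 1": 189_842, "class 0": 90_702}

print("NSL_KDD share before:  ", share(nsl_before))
print("NSL_KDD records added: ", records_added(nsl_before, nsl_after))
print("UNSW_NB15 share before:", share(unsw_before))
print("UNSW_NB15 records added:", records_added(unsw_before, unsw_after))
```

On these counts, both classes grow in each dataset, so the augmentation enlarges the whole training corpus and not only the smaller class.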

Figure 3.

Class distribution for UNSW_NB15 dataset.

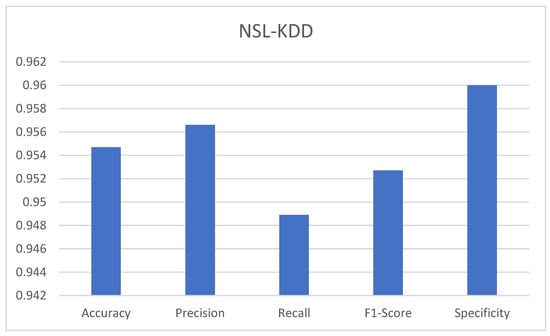

Table 10 presents the results of the CNN model on both datasets considered in the experiment and underlines its efficacy in predicting the correct class of the test records. Figure 4 shows that the model achieved 95.47% accuracy on the NSL_KDD dataset. The model misclassifies only about 4.53% of cases, where it may classify normal records as attacks, and vice versa. The precision of 95.66% indicates that 95.66% of the records predicted as attacks were actual attacks, which corresponds to a low false positive rate. A recall of 94.89% indicates that the model has a low false negative rate. The F1-score of 95.27% confirms a balance between precision and recall. The specificity of 96% indicates that the model correctly identifies actual normal records 96% of the time. The model is therefore highly effective in distinguishing between attack and normal classes.

Table 10.

Performance metrics of CNN models on NSL_KDD and UNSW_NB15 datasets.

Figure 4.

Performance metrics of CNN models on NSL_KDD dataset.

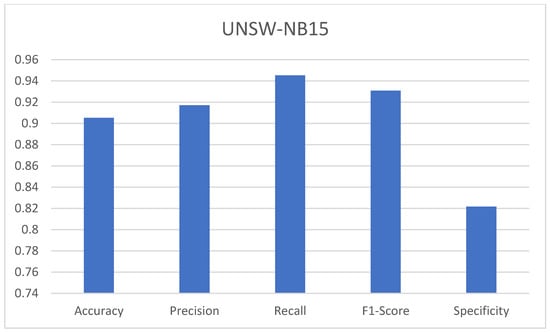

Figure 5 shows the results obtained on the UNSW_NB15 dataset. The accuracy of 90.52% indicates that the model misclassifies around 9.48% of the records. The precision of 91.71% means that 91.71% of the records predicted as attacks were actual attacks. The recall of 94.52% indicates that the model correctly identified 94.52% of actual attacks. The F1-score of 93.09% implies a good balance between precision and recall. The specificity of 82.16% means that the model correctly identified 82.16% of normal records.
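Because the F1-score is the harmonic mean of precision and recall, the reported F1 values can be cross-checked from the reported precision and recall alone. The short sketch below does this for both datasets; `f1_from` is a hypothetical helper name, and the inputs are the percentages quoted in the text.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) as reported for each dataset.
reported = {
    "NSL_KDD": (95.66, 94.89),
    "UNSW_NB15": (91.71, 94.52),
}

for dataset, (precision, recall) in reported.items():
    print(dataset, round(f1_from(precision, recall), 2))
```

The recomputed values agree with the reported F1-scores of 95.27% and 93.09%, so the three metrics are mutually consistent for both datasets.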

Figure 5.

Performance metrics of CNN models on UNSW_NB15 dataset.

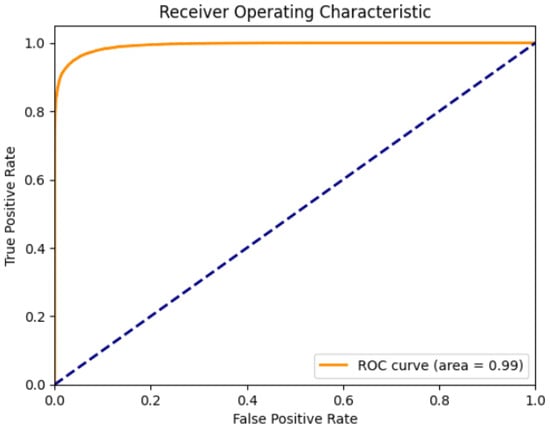

Figure 6 shows the ROC curve for the NSL_KDD dataset, plotted with the false positive rate on the x-axis and the true positive rate on the y-axis. This curve conveys how well the model predicts unseen records. The AUC is 0.99, which indicates that the model can successfully differentiate between normal and attack records. The graph shows a sharp early climb, which indicates that the model attains a high true positive rate at a low false positive rate.
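One way to read an AUC value is as the probability that a randomly chosen attack record receives a higher score than a randomly chosen normal record. The sketch below computes the AUC directly from that pairwise definition; it is an illustration on invented scores, not the procedure used to produce the figures, and `roc_auc` is a hypothetical helper.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    A pair counts 1 if the positive record has the higher score,
    0.5 if the scores are tied, and 0 otherwise.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Invented labels (1 = attack, 0 = normal) and predicted scores.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))
```

The pairwise form compares every positive with every negative, so it is only practical for small samples; library implementations obtain the same value by sorting the scores.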

Figure 6.

ROC and AUC for NSL_KDD dataset.

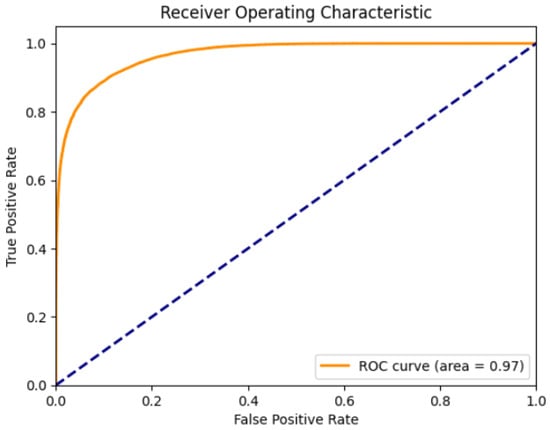

Similarly, Figure 7 shows the ROC curve for the UNSW_NB15 dataset. The false positive rate is low and the true positive rate is high, suggesting that the model can accurately classify test records as normal or malicious. The AUC is 0.97.

Figure 7.

ROC and AUC for the UNSW_NB15 dataset.

CTGAN offers benefits, but also comes with certain limitations. CTGAN is sensitive to hyperparameter settings, such as the learning rate, the batch size and the balance between generator and discriminator. Inappropriate hyperparameter tuning may produce synthetic samples on which the model fails to generalize well. Extensive tuning and early stopping helped in successfully executing the research. Although widely used, the NSL_KDD and UNSW_NB15 datasets contain outdated attacks. Hence, the generalizability of the model to current network environments is limited.

5. Conclusions and Future Work

Imbalanced data affect the model accuracy and increase the false positive rate, so it is important to address this problem. Most research makes use of SMOTE to balance the dataset. However, SMOTE cannot capture correlated attributes when the dataset is high-dimensional and its feature distribution is complex. To overcome the drawbacks of SMOTE and to balance the dataset, CTGAN is used. It is a sophisticated method for creating high-quality synthetic data. The CNN classifier is used to predict the test records of both balanced datasets. The accuracy, precision, recall, F1-score, and specificity were 95.47%, 95.66%, 94.89%, 95.27%, and 96%, respectively, for the NSL_KDD dataset, and 90.52%, 91.71%, 94.52%, 93.09%, and 82.16% for the UNSW_NB15 dataset. The results show that the minority classes have been balanced by CTGAN, and the model can accurately predict the normal and attack classes. Hyperparameter tuning plays a vital role in boosting the performance of CTGAN. The process of generating synthetic data is computationally intensive. The datasets used for the research do not represent modern or real-time attack scenarios.

There is scope to improve the way Conditional Tabular Generative Adversarial Networks (CTGANs) are integrated with sophisticated classifiers, expanding on this research and improving the identification of changing intrusion patterns. The representation of minority classes may be enhanced by investigating hybrid models that integrate CTGAN with additional generative methods, such as diffusion models or variational autoencoders (VAEs). Greater transparency and reliability could also be provided by incorporating explainable AI techniques, which are crucial for realistic deployment. The evaluation of the scalability and resilience of CTGAN-based models when applied to bigger, more varied datasets, such as real-time network traffic data, could be the main focus of future efforts. The practical applicability of this approach may be increased by addressing the difficulties presented by dynamic imbalances in real-time datasets and modifying CTGAN to manage such complications. Lightweight GAN variants, which are better suited to real-time applications, could also be investigated, together with adaptive tuning techniques and the validation of models on diverse and updated datasets, including live network traffic. Furthermore, putting domain adaptation strategies into practice to derive robust performance in various network settings may improve this approach’s effectiveness in a range of cyber security applications.

Author Contributions

Conceptualization, S.A. and T.P.; methodology, S.A. and T.P.; software, S.A. and T.P.; validation, S.A. and W.Y.L.; formal analysis, S.A. and T.P.; investigation, T.P.; resources, T.P.; data curation, S.A. and T.P.; writing (original draft preparation), S.A. and T.P.; writing (review and editing), S.A., T.P. and W.Y.L.; visualization, S.A. and T.P.; supervision, S.A. and W.Y.L.; project administration, S.A. and T.P.; funding acquisition, W.Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available in the public domain. NSL-KDD Dataset: Available at https://www.unb.ca/cic/datasets/nsl.html (accessed on 15 March 2025), developed by the Canadian Institute for Cybersecurity (CIC), University of New Brunswick. UNSW-NB15 Dataset: Available at https://research.unsw.edu.au/projects/unsw-nb15-dataset (accessed on 15 March 2025), developed by the Cyber Range Lab of the Australian Centre for Cyber Security (ACCS), University of New South Wales. These datasets were obtained from the above sources and used under the terms provided by the respective institutions.

Conflicts of Interest

The authors declare no conflicts of interest.



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).