Abstract

The Intel® Trust Domain Extensions (TDX) encrypt guest memory and minimize host interactions to provide hardware-enforced isolation for sensitive virtual machines (VMs). Although TDX improves security against a malicious hypervisor, software vulnerabilities in the guest OS continue to pose a serious risk. We propose a comprehensive TDX Guest Fuzzing Framework that systematically explores the guest's code paths that handle untrusted inputs. Our method first applies static analysis to identify possible attack surfaces, where the guest reads data from the host, and then uses a customized coverage-guided fuzzer to target those paths with random input mutations. To achieve high throughput, we also use snapshot-based virtual machine execution, which returns the guest to its pre-interaction state at the end of each fuzz iteration. Using a QEMU/KVM-based TDX emulator and a TDX-enabled Linux kernel, we show how our framework reveals previously undiscovered vulnerabilities in device initialization procedures, hypercall error handling, and random number seeding logic. We demonstrate that a large number of vulnerabilities occur when developers implicitly rely on values supplied by the hypervisor rather than thoroughly verifying them. Through our coverage-guided fuzzing campaigns, we discovered several memory corruption and concurrency weaknesses in the TDX guest OS, ranging from nested #VE handler deadlocks to buffer overflows in paravirtual device initialization to faulty randomness-seeding logic. Exploiting these vulnerabilities may compromise TDX's hardware-based memory isolation or enable denial-of-service attacks. By connecting theoretical completeness arguments for coverage-guided fuzzing with real-world results on TDX-specific code, this study highlights the urgent need for ongoing, automated testing in confidential computing environments.
Thus, our results demonstrate that, although TDX offers a robust hardware barrier, comprehensive input validation and equally stringent software defenses are essential to preserving overall security.

MSC:

68M25

1. Introduction

Intel® Trust Domain Extensions (TDX) is a hardware-assisted confidential computing technology that isolates entire virtual machines, called trust domains (TDs), from an untrusted host system. Intel introduced TDX to secure complete virtual machines rather than individual processes, aiming to protect TDs even from a malicious or compromised hypervisor (Virtual Machine Manager, VMM) [1]. By design, TDX encloses a guest OS and its applications in a hardware-protected environment with encrypted memory and a dedicated trust engine, so that the host cannot inspect or tamper with the TD's state. TDX thus promises confidentiality and integrity for cloud VM workloads even on untrusted infrastructure [2].

Despite these stronger guarantees, TDX is not immune to vulnerabilities [2]. Early security evaluations revealed that its increased complexity still leaves room for flaws. For instance, researchers have shown that, under certain conditions, a co-resident host process can infer a TDX guest's activity via shared hardware performance counters, effectively breaching the TD's isolation [2,3]. This side-channel exploit leveraged core resource contention and indicated that TDX's protections, while improved over those of SGX, are not absolute [3]. By mid-2024, a study of public Common Vulnerabilities and Exposures (CVEs) found that nine TDX-related CVEs had already been reported (versus 49 for AMD's analogous SEV technology), with the vast majority (81%) being host-to-guest attack vectors that exploit improper input validation or firmware bugs [4]. These statistics underscore that the primary risk in TDX lies in vulnerabilities that allow a malicious host or VMM to breach a TD's security through the defined interfaces between them.

While Intel TDX has benefited from one-time security audits and a fortified development lifecycle, there remains a gap in ongoing, automated vulnerability discovery for TDX guest environments. Manual audits and formal verification efforts can miss subtle implementation bugs, and side-channel analyses address only certain classes of leakage. What is needed is a systematic fuzz testing framework for TDX guests: a toolset that continuously bombards the TD's interfaces with random and crafted inputs in order to trigger and detect latent security flaws. Fuzzing has proved extraordinarily successful at finding software vulnerabilities in many domains, from user applications to operating system kernels. For example, fuzzing frameworks for Intel SGX (which protects user-mode enclaves) achieved high code coverage and uncovered numerous bugs in enclave programs (162 bugs across 14 SGX applications in one study), including memory corruption and TEE-specific logic flaws [5]. This success motivates applying fuzzing to TDX, where the stakes are even higher, because a single exploitable bug in the interaction between the TD and the host could negate TDX's security benefits.

However, fuzzing a TDX guest poses unique challenges. The TDX design establishes a new trust boundary: the guest OS is not fully visible or accessible to the hypervisor, which makes traditional hypervisor-based instrumentation difficult. The guest communicates with the outside world via limited channels (special CPU instructions and shared memory buffers), and TDX aims to minimize these channels. Additionally, a TDX guest's code (especially the OS kernel) is adapted to run in an isolated mode with many features disabled or paravirtualized for security [6]. This constrained environment complicates both injecting test inputs and observing guest behavior. Furthermore, hardware support for TDX is relatively new and not widely available, so developing a fuzzing framework may require an emulated or simulated TDX environment that runs on commodity hardware [7]. These considerations define a new research problem: How can we design and implement an effective fuzzing framework for Intel TDX guests that overcomes the isolation barriers to reveal security vulnerabilities, and how well does such a framework perform in theory and practice?

In this paper, we present an Intel TDX Guest Fuzzing Framework that enables automated vulnerability discovery in TDX-protected virtual machines. Our contributions are as follows. We propose a novel fuzzing architecture for TDX guest kernels that combines static analysis to identify attack surfaces with a dynamic fuzzing engine that targets those surfaces. We develop formal proofs that underpin the framework's approach, establishing its soundness in detecting certain classes of vulnerabilities under the TDX threat model. We describe a concrete methodology for implementing the fuzzer, including a custom TDX emulation environment built on QEMU/KVM and specialized hooks that inject fuzz inputs into a running TD guest and capture its behavior. We then design an empirical evaluation that uses a TDX-enabled Linux guest kernel in emulation to validate the framework. We leverage kAFL (a kernel fuzzer) and Intel TDX simulation capabilities to generate and execute test cases, measuring code coverage and discovered bugs. We report and analyze the results, demonstrating that the framework can indeed find security issues in a TDX guest, such as memory safety violations and logic flaws in how the guest handles untrusted host data. We compare the theoretical predictions (e.g., coverage guarantees and required test counts) with the empirical outcomes of our experiments. Finally, we discuss the significance of these findings for TDX security, the limitations of our current framework (e.g., its inability to directly catch certain side channels), and future research directions for automated testing of confidential VMs.

Although TDX strengthens security against a malicious hypervisor, software vulnerabilities within the guest OS remain a critical risk. In particular, host-supplied parameters, such as hypercall return codes, virtual device descriptors, and paravirtual event data, can still trigger memory corruption or logic flaws inside the protected domain if they are insufficiently validated. Unlike existing kernel fuzzing frameworks, which have full visibility into and control over the entire OS memory, our approach must operate within TDX's restricted I/O and instrumentation environment. Consequently, we introduce specialized hooking mechanisms and TDX-aware snapshot management that allow for targeted mutation of host–guest channels without violating TDX's protection model, thus uncovering categories of flaws overlooked by conventional fuzzers.

Our work addresses a critical need for proactive security assessment of Intel TDX-protected guests. By bridging theoretical analysis with practical fuzzing experiments, we aim to broaden the understanding of TDX's resilience and provide a tool that can harden TDX deployments. In contrast to off-the-shelf kernel fuzzers, such as syzkaller, or existing AMD SEV-based fuzzing approaches, our framework introduces TDX-specific hooks, targeted snapshot strategies for restricted I/O channels, and concurrency checks involving nested virtualization exceptions. Because these hooks are designed explicitly around TDX's hardware restrictions, they enable fine-grained testing of the guest interfaces under the TDX threat model, which generic kernel fuzzers do not provide. Our framework combines automated static analysis (to detect code segments that consume untrusted host data) with a coverage-driven fuzzer tailored for TDX. This integrated pipeline ensures that fuzzing sessions focus on genuinely risky code regions, improving both coverage and the discovery of TDX-specific vulnerabilities.

We also design and implement TDX-aware snapshot strategies that rapidly reset the guest to a point just before paravirtual interactions, allowing for high-throughput fuzzing of concurrency events such as nested #VE injections. This is a novel adaptation of snapshot-based kernel testing that targets race conditions and rare event sequences unique to confidential VMs. We provide rigorous theorems showing that any unvalidated host-supplied parameter gives a malicious host a way to breach guest security, and that coverage-based fuzzing can, given enough time, discover all such reachable vulnerabilities in TDX guest kernels. This theoretical grounding, extended to TDX concurrency, is distinctive in showing probabilistic completeness under hardware-enforced isolation. While our primary target is Intel TDX, the underlying methods (snapshot-based concurrency fuzzing, TDX-aware hooking, and theoretical completeness arguments) can be adapted to other hardware-isolated VMs (e.g., AMD SEV-SNP). This transferable design further highlights the novelty and broader impact of our integrated approach.

The remainder of this paper is organized as follows. In Section 2, we present the theoretical foundations of TDX guest fuzzing, including the formal theorems that model vulnerability conditions and the probabilistic effectiveness of coverage-guided fuzzing. Section 3 details the design and components of our Intel TDX Guest Fuzzing Framework, describing how we adapted existing fuzzing strategies to respect TDX isolation constraints and how we integrate static analysis with dynamic testing. In Section 4, we outline the experimental setup and present the empirical results obtained from applying our framework to a TDX-enabled Linux guest, highlighting the discovered security vulnerabilities and analyzing their correlation with our theoretical models. Finally, Section 5 concludes the paper, discussing the implications of our findings, the limitations of our current approach, and potential directions for future research on automated testing in confidential computing environments.

2. Theoretical Foundations and Proofs

We present the theoretical underpinnings of our TDX Guest Fuzzing Framework, showing both why vulnerabilities arise in a TDX guest and why a coverage-guided fuzzing strategy can, with sufficient effort, discover them. Although coverage-based completeness theorems are well established in classical fuzzing, TDX's distinct constraints, such as restricted visibility from the hypervisor, specialized #VE handlers, and paravirtual device channels, warrant re-examining these principles. The theorems below show how known coverage arguments hold (or require adaptation) when the host cannot directly manipulate guest memory and must rely on well-defined TDX communication interfaces. We begin with the standard threat model for confidential VMs (CVMs) running under Intel TDX [4], focusing on functional correctness and memory safety issues rather than side-channel leaks. We then state and prove three principal theorems. Together, they justify targeting host-supplied inputs with a fuzzing campaign and expecting that, over time, a coverage-guided fuzzer will uncover latent flaws in a TDX guest that are reachable through host-controlled inputs.

2.1. Threat Model and Definitions

Under Intel TDX, the guest operating system and its user-level processes (collectively, the TD) are protected by hardware-enforced memory encryption and isolation. The threat model assumes a malicious or compromised hypervisor (the host). Although the host cannot directly read TD memory, it retains control over various inputs the guest consumes, such as hypercall return values, the contents of shared buffers, the timing of interrupts, and device descriptors. These data streams form the essential interface between the host and the TD.

The TDX hardware is assumed to be correct, free of hidden backdoors, and resistant to direct manipulation. However, if the guest software mishandles host-provided data (for instance, by failing to validate pointer ranges or misusing length fields), the host can drive the guest into an “invalid” state. Following [6,8,9], we define a security vulnerability as any condition that allows a sequence of host-chosen inputs to push the guest into violating TDX's confidentiality, integrity, or availability assumptions. This definition includes, but is not limited to, buffer overflows, unchecked pointer dereferences, and logic mistakes that disable or bypass kernel security features.

Let S be the set of all possible guest states, with I ⊂ S denoting the subset of secure states. A TDX guest meets its security requirement if it remains within I regardless of how the untrusted host manipulates the system calls, hypercalls, or device data provided to the guest. If there exists an input sequence {x_1, x_2, …, x_k} such that processing these values leads the guest to a state s_final ∉ I, we say that a vulnerability exists. In the following theorems, we consider how such vulnerabilities originate (Theorem 1), how fuzzing can reveal them (Theorem 2), and what it means for a guest to be fully hardened (Theorem 3).
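As a concrete reading of these definitions, the following minimal Python sketch models a guest as a transition function over states and the secure set I as a predicate; an input sequence witnesses a vulnerability if it drives the guest to some s_final ∉ I. The toy state (an 8-slot buffer plus a corruption flag) and the names GuestState, step, is_secure, and is_vulnerability are hypothetical illustrations, not part of the framework.

```python
from dataclasses import dataclass, replace
from typing import Callable, Sequence


@dataclass(frozen=True)
class GuestState:
    """Hypothetical toy guest state: a fixed-size buffer plus a sticky corruption flag."""
    buf_len: int = 8
    corrupted: bool = False


def step(x: int, s: GuestState) -> GuestState:
    """Transition f: X x S -> S. The guest writes at host-supplied index x without checking it."""
    out_of_bounds = not (0 <= x < s.buf_len)
    return replace(s, corrupted=s.corrupted or out_of_bounds)


def is_secure(s: GuestState) -> bool:
    """Membership test for the secure set I: no write has ever left the buffer."""
    return not s.corrupted


def run(inputs: Sequence[int], s_init: GuestState,
        f: Callable[[int, GuestState], GuestState] = step) -> GuestState:
    """Process the host-chosen sequence x_1, ..., x_k and return s_final."""
    s = s_init
    for x in inputs:
        s = f(x, s)
    return s


def is_vulnerability(inputs: Sequence[int], s_init: GuestState) -> bool:
    """The sequence witnesses a vulnerability if s_final lies outside I."""
    return not is_secure(run(inputs, s_init))


if __name__ == "__main__":
    print(is_vulnerability([1, 2, 3], GuestState()))  # False: every write stays in bounds
    print(is_vulnerability([9, 1], GuestState()))     # True: index 9 overflows the 8-slot buffer
```

Making the corruption flag sticky means that an early out-of-bounds write cannot be "undone" by later in-bounds inputs, matching the intuition that a state outside I stays compromised.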

These theorems, drawn from classic principles of random testing, state-space exploration, and kernel security modeling, illuminate the essence of our approach to TDX fuzzing. By linking unvalidated inputs directly to potential exploits (Theorem 1), showing that systematic fuzzing can eventually reach all such exploits (Theorem 2), and clarifying that perfect input checks eradicate them altogether (Theorem 3), we provide a clear rationale for why a coverage-based fuzzing framework is not only sensible but effectively mandated in TDX guest development. The next sections show how we implement these insights by constructing a practical fuzzing system that targets host–guest boundaries within TDX-protected environments.

2.2. Theorem 1: Unchecked Host Inputs Lead to Guest Vulnerabilities

Theorem 1 shows that any unvalidated host input is, in principle, a vulnerability trigger. Because the host can supply unexpected values, the guest must validate every piece of data that crosses the host boundary. This is not unique to TDX, but it is heightened by TDX's threat model, which assumes a hostile rather than a cooperative hypervisor.

Statement: If a TD guest kernel uses a host-supplied input x in a security-critical function f(x) without adequate validation, then there exists at least one input value x* that forces the guest into an insecure state. In our empirical campaigns, the PCI MSI-X vector overflow and the virtio-net arithmetic overflow both exemplify Theorem 1: once we removed all checks on hypervisor-provided integer fields, the fuzzer quickly uncovered out-of-bounds scenarios. This illustrates that the general theorem holds under TDX conditions, where such inputs can arrive only through well-defined hypercalls or paravirtual channels.

Formal Argument: Prior studies [9,10] have modeled TDX guest security by enumerating invariants that the kernel must maintain. Let I be the set of secure states, and let f: X × S → S be the transition function describing how the guest evolves when an input x ∈ X is processed from an initial state s_init. Concretely, X might be the set of integer values returned by a hypercall, or the set of byte strings read from a shared memory buffer. If the kernel does not validate x, we treat f as unconstrained by domain checks for that variable. If, for example, x is used as an array index, the malicious host can supply an x* larger than the array length, causing the guest to perform an out-of-bounds memory access. This can lead to reading or writing memory that the kernel should not access, possibly corrupting critical data structures or leaking their contents. This is a memory access vulnerability as classified in the Intel TDX guest hardening guidelines [11].

- Assumption of No Input Checking. Let g: X → {true, false} be a validation function that would restrict x to acceptable bounds. If the kernel lacks such a check, effectively g ≡ true for all x, meaning no constraint is applied.

- Existence of Extreme Values. Because X is large (in practice, 32-bit or 64-bit integers, or arbitrary byte arrays), the host can supply values significantly outside normal usage. Let x* be one such extreme or adversarial value.

- Transition to an Insecure State. Apply the transition function to obtain s_final = f(x*, s_init). Because no check was performed, the logic may index an array out of bounds or treat an invalid pointer as legitimate, leading to memory corruption or misconfigured security fields. Hence, for a suitably chosen x*, s_final ∉ I.

To illustrate with a numeric example: let x be used as a buffer index. If the kernel never checks x ≤ B for some bound B, the host can set x* > B. The resulting memory write moves beyond the buffer, violating memory safety. Formally,

s_final = f(x*, s_init), where f: X × S → S is the transition function defined above.

Because the kernel fails to enforce x* ≤ B, the postcondition for secure states is broken, which exposes a vulnerability. Thus, Theorem 1 holds: unvalidated host data enables a malicious host to move the guest out of I.
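The argument can be exercised directly. The sketch below assumes a 16-slot buffer followed by adjacent kernel data (both sizes hypothetical), implements a handler with no validation (g ≡ true), and searches a handful of boundary and extreme host values for a witness x*. Because the buffer here is indexed 0 to B − 1, the first violating value is x* = B rather than some x* > B; the difference is only a matter of indexing convention.

```python
from typing import Iterable, Optional

BUF_LEN = 16   # hypothetical bound B: the buffer holds indices 0 .. B-1
ADJACENT = 16  # hypothetical neighbouring kernel data placed right after the buffer


class GuestFault(Exception):
    """Models a fault caused by a wild access outside the modelled memory."""


def fresh_memory() -> bytearray:
    """Buffer [0, BUF_LEN) followed by ADJACENT bytes of other kernel data, all zero."""
    return bytearray(BUF_LEN + ADJACENT)


def handle_unchecked(mem: bytearray, x: int) -> None:
    """Guest code that uses host-supplied x as an index with no validation (g == true)."""
    if not 0 <= x < len(mem):
        raise GuestFault(f"wild write at offset {x}")
    mem[x] = 0xFF  # for BUF_LEN <= x this silently overwrites the adjacent data


def is_secure(mem: bytearray) -> bool:
    """Post-condition for I: nothing outside the buffer was modified."""
    return not any(mem[BUF_LEN:])


def find_violating_input(candidates: Iterable[int]) -> Optional[int]:
    """Return the first host value x* that drives the guest out of I, or None."""
    for x in candidates:
        mem = fresh_memory()
        try:
            handle_unchecked(mem, x)
        except GuestFault:
            return x  # a fault is also a state outside I
        if not is_secure(mem):
            return x
    return None


if __name__ == "__main__":
    extremes = [0, 1, BUF_LEN - 1, BUF_LEN, 2**32 - 1]  # boundary and extreme host values
    print(find_violating_input(extremes))  # 16, i.e. x* = B
```

Writes just past the buffer corrupt the neighbouring bytes, while wild values far outside the modelled region raise GuestFault; the sketch treats both outcomes as leaving I.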

2.3. Theorem 2: Probabilistic Completeness of Coverage-Guided Fuzzing

Theorem 2 formalizes the well-known coverage-based completeness principle for fuzzing, now applied under TDX concurrency constraints. Rather than reiterating the classical arguments, we focus on how TDX's restricted memory model and paravirtual channels influence real-world fuzzing outcomes, as shown by our concurrency bug findings (Section 4.2). This bridging of theory and TDX practice highlights the need to adapt coverage logic to limited host visibility.

Previous research has explained how fuzzing can reach all vulnerable execution paths with probability 1 over infinite trials, in line with Theorem 2 [12]. Other research has studied probabilistic fuzzing models for vulnerability detection and analyzed how fuzzing effectiveness evolves as the number of test iterations grows without bound [13]. We adapt these results to the TDX environment.

Statement: For a finite TDX guest program with a finite set of feasible paths P and a subset of vulnerable paths V ⊆ P, a coverage-guided fuzzer that randomly mutates inputs over unbounded time, such that every path in P has a nonzero probability of being triggered on each trial, will with probability 1 eventually execute every path in P. Hence, it will also reach every path in V.

In TDX environments, however, concurrency factors such as nested #VE injections introduce additional execution interleavings that classical fuzzing theorems do not explicitly account for. We extend the standard path-based argument by treating each possible #VE injection point as a distinct state in our state space, so that concurrency-driven bug triggers are likewise reached with probability 1 over infinite trials. This extension requires the number of injection points and the nesting depth to be bounded, so that the extended state space remains finite and the argument below carries over unchanged.
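The finiteness condition can be made concrete. The following sketch assumes a finite set of injection points and a maximum nesting depth D (both hypothetical parameters that the text does not fix) and enumerates the extended state space of (path, #VE schedule) pairs. Its size is |P| · Σ_{d=0}^{D} k^d for k injection points, which is finite for any bounded D.

```python
from itertools import product
from typing import Hashable, List, Sequence, Tuple


def extended_states(paths: Sequence[Hashable],
                    injection_points: Sequence[Hashable],
                    max_nesting: int) -> List[Tuple[Hashable, Tuple[Hashable, ...]]]:
    """Enumerate (path, schedule) pairs. A schedule is a tuple of up to max_nesting
    #VE injection points; the empty tuple means no #VE is injected on that run."""
    schedules: List[Tuple[Hashable, ...]] = [()]
    for depth in range(1, max_nesting + 1):
        # every ordered sequence of `depth` injection points (nested #VEs)
        schedules.extend(product(injection_points, repeat=depth))
    return [(p, sched) for p in paths for sched in schedules]


if __name__ == "__main__":
    states = extended_states(["p1", "p2", "p3"], ["ve_a", "ve_b"], max_nesting=2)
    print(len(states))  # 3 * (1 + 2 + 4) = 21
```

The count grows exponentially in the nesting depth, which is why snapshotting just before paravirtual interactions matters in practice even though the theoretical argument only needs finiteness.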

This theorem is an application of the theory of random testing and can be modeled with a Markov chain argument. Prior work has modeled coverage-guided fuzzing using Markov chain analysis, examining transition probabilities between execution states in AFL/kAFL [14]. We model the fuzzing process as a state-space exploration in which each unique program path is a “state” the fuzzer might visit. A coverage-guided fuzzer such as kAFL generates inputs, observes which new paths are triggered, and biases future generation toward inputs that hit new or rare paths [15]. For the theoretical analysis, we initially assume that the fuzzer tries inputs in a somewhat random manner across the space of all possible inputs. If the input space is infinite, we require that the fuzzing strategy have a nonzero probability of producing any given input within some finite number of steps, which is a reasonable assumption for mutation-based fuzzers that randomly flip bits and thus perform a stochastic search over inputs.

Model Setup: Consider a TDX guest kernel as a finite program with a finite set of execution paths P = {p_1, …, p_n}. Each p_i is determined by branching conditions that ultimately depend on the host-provided input sequence. Let V ⊆ P denote the set of paths that lead the guest into an insecure state. We examine a fuzzing campaign running in discrete trials, each of which generates a fresh input and executes the guest from a chosen snapshot.

- Fuzzing as a Markov Chain. Let {X_t}_{t ≥ 1} be a stochastic process, where X_t is the path taken at trial t. Suppose that each newly generated input is partly random, with a nonzero probability of producing any bit pattern over repeated trials. Coverage-guided fuzzers (e.g., kAFL) bias these probabilities toward exploring paths not yet visited, but from a theoretical standpoint, it suffices that no path has probability zero of eventually being tried.

- Probability of Covering a Single Path. For path p_j, let p_j > 0 also denote (with slight abuse of notation) the probability, possibly small, that a random input triggers that path. Over N independent attempts, the probability that p_j is never hit is (1 − p_j)^N. As N → ∞, we have

$$\lim_{N \to \infty} (1 - p_j)^N = 0.$$

- Probability of Covering All Vulnerable Paths. We now consider the intersection of these events. Let F(N) ⊆ P denote the set of paths covered within the first N trials, and define

$$\Pr[V \subseteq F(N)] = \Pr(\text{every } p_v \in V \text{ is covered by trial } N).$$

Under a simplifying independence assumption,

$$\Pr[V \subseteq F(N)] = \prod_{p_v \in V} \left(1 - (1 - p_v)^N\right).$$

For a finite set V, each factor in the product converges to 1 as N → ∞. Therefore,

$$\lim_{N \to \infty} \Pr[V \subseteq F(N)] = 1.$$

Independence between paths is not essential: by the union bound, Pr[V ⊄ F(N)] ≤ Σ_{p_v ∈ V} (1 − p_v)^N, which also tends to 0 because V is finite.

- Expected Time to Discovery. The number of trials until p_v is first hit is geometrically distributed, so its expectation is 1/p_v, which may be large when p_v is extremely small. However, coverage guidance effectively raises p_v by promoting inputs that approach the path's conditions. Such heuristics accelerate exploration, but the theoretical limit remains the same: given infinite time, all vulnerable paths in a finite program will be reached.
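The quantities in this argument are easy to evaluate numerically. The sketch below computes the miss probability (1 − p)^N, the independence-based product, the union-bound lower bound, and the number of trials needed to reach a target confidence c, N ≥ ln(1 − c)/ln(1 − p). The per-path probabilities are inputs the caller must assume; nothing here estimates them from a real campaign.

```python
import math
from typing import Sequence


def prob_path_missed(p: float, n: int) -> float:
    """(1 - p)^N: probability that a path with per-trial hit probability p is never hit."""
    return (1.0 - p) ** n


def prob_all_covered(ps: Sequence[float], n: int) -> float:
    """Product over p_v in V of 1 - (1 - p_v)^N (independence assumption)."""
    result = 1.0
    for p in ps:
        result *= 1.0 - prob_path_missed(p, n)
    return result


def union_lower_bound(ps: Sequence[float], n: int) -> float:
    """1 - sum of (1 - p_v)^N: a lower bound on P[V in F(N)] that needs no independence."""
    return max(0.0, 1.0 - sum(prob_path_missed(p, n) for p in ps))


def trials_for_confidence(p: float, confidence: float) -> int:
    """Smallest integer N (up to floating-point rounding) with 1 - (1 - p)^N >= confidence."""
    # log1p keeps ln(1 - p) accurate when p is tiny
    return math.ceil(math.log1p(-confidence) / math.log1p(-p))


if __name__ == "__main__":
    print(trials_for_confidence(1e-6, 0.99))  # about 4.6 million trials
    print(prob_all_covered([1e-3, 1e-4], 100_000))
```

For a path with p_v = 10⁻⁶, reaching 99% confidence takes about 4.6 million trials, compared with an expected 10⁶ trials until the first hit, which illustrates why raising p_v through guidance matters more than raw throughput alone.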

The difference with coverage-guided fuzzing is that, in practice, the fuzzer dynamically adjusts to maximize coverage, significantly increasing the probabilities p_j for not-yet-seen (especially hard-to-reach) paths [14]. In effect, a fuzzer such as kAFL gravitates toward low-frequency paths by design, assigning them higher energy or selection bias. This bias can be seen as altering the Markov chain of path exploration so that its stationary distribution is more uniform over P (or even weighted toward rare paths) rather than heavily favoring a few high-frequency paths [16]. Thus, coverage guidance improves the rate of discovery but not the theoretical limit, which, as shown above, already includes 100% coverage of reachable paths. This theorem assumes that the input space is adequately explored; in reality, extremely complex input conditions (path constraints) may make p_j effectively zero for some paths within any feasible time budget. In theory, however, given unlimited mutational diversity and time, some inputs will eventually satisfy those conditions. Therefore, our fuzzing framework, which uses coverage guidance, can be expected to find any bug that manifests on some program path within the guest, as long as that path is reachable through some combination of inputs and system events.
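To illustrate how coverage feedback raises the effective p_v, the following toy simulation (a sketch, not kAFL's actual scheduling) targets a hypothetical 4-byte guard that a guest parser checks before entering a deep path. Blind generation must guess all 32 bits at once (p_v = 2⁻³²), whereas a fuzzer that keeps any mutant covering one more byte comparison only has to solve four 1-in-1024 steps (the right byte position out of four and the right value out of 256). Modelling each byte comparison as a separate branch is an assumption, similar in spirit to compiler passes that split multi-byte comparisons.

```python
import random
from typing import Optional

# Hypothetical 4-byte guard a guest parser might check before entering a deep path.
MAGIC = bytes([0x54, 0x44, 0x58, 0x21])  # b"TDX!"


def coverage(inp: bytes) -> int:
    """Number of guard bytes matched in order; models one covered branch per byte."""
    hits = 0
    for got, want in zip(inp, MAGIC):
        if got != want:
            break
        hits += 1
    return hits


def mutate(inp: bytes, rng: random.Random) -> bytes:
    """Single random byte replacement, a minimal mutation operator."""
    buf = bytearray(inp)
    buf[rng.randrange(len(buf))] = rng.randrange(256)
    return bytes(buf)


def guided_fuzz(max_trials: int, seed: int = 0) -> Optional[int]:
    """Keep a mutant only if it increases coverage; return the trial that reaches the path."""
    rng = random.Random(seed)
    best = bytes(len(MAGIC))
    best_cov = coverage(best)
    for trial in range(1, max_trials + 1):
        cand = mutate(best, rng)
        cov = coverage(cand)
        if cov > best_cov:
            best, best_cov = cand, cov
        if best_cov == len(MAGIC):
            return trial
    return None


# Blind random generation must guess all 32 bits at once.
BLIND_P = 1 / 256 ** len(MAGIC)

if __name__ == "__main__":
    print(guided_fuzz(200_000))  # typically a few thousand trials
    print(1 / BLIND_P)           # about 4.3e9 expected trials for blind generation
```

The guided search needs about 4 × 1024 ≈ 4,100 trials in expectation, versus about 4.3 × 10⁹ for blind generation; the limit in Theorem 2 is the same for both, only the rate differs.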

This theorem provides a reassuring guarantee: if a vulnerability exists in the TD guest and our fuzzer runs long enough, we are virtually certain to discover it. The practical caveat is “long enough”: the number of trials N required may be astronomically large for certain deep or complex vulnerabilities. This is where the heuristics and engineering of the fuzzer (guidance, input structure, etc.) come into play to make discovery feasible on human timescales. Nevertheless, Theorem 2 justifies our use of fuzzing as a sound approach to vulnerability coverage that complements static analysis. It formalizes the intuition that fuzzing is an (educated) random walk through the program's state space that will eventually stumble upon any reachable bug [17]. Theorem 2 assures us that a coverage-based fuzzing approach can discover every reachable, exploitable flaw in a TDX guest kernel, provided that the relevant path is actually exercised by some set of possible inputs. This does not remove real constraints on time or computational resources, but it establishes that the method is probabilistically complete in the limit.

2.4. Theorem 3: Security of the Guest Under Complete Mediation

Previous research has discussed fuzzing as a method for validating input robustness and has shown that fuzzing should yield no failures in a well-hardened guest [18]. Other research has used fuzzing to test input validation, demonstrating that security guarantees can be formally validated [19]. The latest research focuses on TDX security mechanisms and their effectiveness, and examines how fuzzing validates security robustness [19]. Theorem 3 states that a TDX guest can be fully secure if (and only if) all host inputs undergo correct validation, meaning that the kernel never processes out-of-domain values. In that case, fuzzing cannot uncover any vulnerabilities, because none exist from a functional standpoint. This sets a guiding principle for TDX guest developers: approach a configuration in which untrusted input is systematically bounded, sanitized, or rejected, thereby meeting the high bar of TDX isolation.

Statement: If every host-supplied input is validated, and the TDX module's isolation is correct, then a malicious host cannot force the guest into an insecure state. Consequently, a fuzzing campaign will produce no failing input, even over a sufficiently thorough test period. While this principle aligns with standard input validation doctrine, TDX adds another layer of protection through memory encryption and restricted host access. However, as our results show, relying solely on TDX hardware boundaries was insufficient to prevent vulnerabilities such as KASLR entropy misuse, which still originated from unvalidated host inputs. This underscores that Theorem 3 remains pertinent: perfect input mediation is essential, even under TDX, because the hardware alone does not guarantee that the guest's assumptions are correct.

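Formal Rationale: This theorem complements Theorem 1. Theorem 1 says that a single unchecked input is enough to yield a potential vulnerability. Here, we assume the opposite: no input is used unsafely. (Logically, Theorem 3 is the inverse of Theorem 1 rather than its contrapositive, so it does not follow from Theorem 1 alone; it additionally relies on correct validation and correct TDX module isolation.) That is, for every piece of data x from the host, the kernel applies a validation function g: X → {true, false}.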

If g(x) = false, the kernel discards or properly sanitizes x. If g(x) = true, x is guaranteed to meet domain constraints. Formally, we can write the following:

- Global Validation Condition. ∀x ∈ X, g(x) = true ⟹ x ∈ D, where D ⊆ X is the “safe domain”. For all x ∉ D, we have g(x) = false, and the kernel’s code path either aborts or ensures no adverse effect on security invariants.

- Resulting Secure States. Because no invalid x can propagate into sensitive computations, each transition f(x, s) with x ∈ D and s ∈ I remains within I, and rejected inputs have no adverse effect on security invariants. Thus, a malicious host has no advantage: every input is either blocked or sanitized.

- Fuzzing Implications. Under these conditions, coverage-based fuzzing cannot produce an out-of-bounds or otherwise destructive scenario, because the kernel's checks promptly reject the offending input. Over an extended fuzzing session, we would therefore observe no crashes or memory corruption; the fuzzing logs would remain clean, signifying that TDX's isolation holds. This ideal scenario is precisely the end goal of TDX guest hardening: if the fuzzer cannot break the kernel (for functional vulnerabilities), we have strong empirical evidence that few, if any, unchecked paths exist [18,19].
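Complete mediation can likewise be checked empirically on a toy handler. In the sketch below (all names and the bound are hypothetical), g accepts exactly the safe domain D = [0, B), out-of-domain values are rejected before any state change, and a random campaign that mixes arbitrary 64-bit values with values near the domain boundary counts violations of the secure-state predicate. A zero count from a finite campaign is evidence, not proof.

```python
import random

BUF_LEN = 16  # hypothetical bound defining the safe domain D = [0, BUF_LEN)


def g(x: int) -> bool:
    """Validation function g: X -> {true, false}; true exactly on the safe domain D."""
    return 0 <= x < BUF_LEN


def handle_validated(mem: bytearray, x: int) -> bool:
    """Guest handler under complete mediation: out-of-domain inputs are rejected."""
    if not g(x):
        return False  # reject before any state change
    mem[x] = 0xFF     # reached only for x in D
    return True


def fuzz_campaign(trials: int, seed: int = 1) -> int:
    """Feed random host values and count secure-state violations (expected to stay 0)."""
    rng = random.Random(seed)
    violations = 0
    for _ in range(trials):
        mem = bytearray(2 * BUF_LEN)  # buffer followed by adjacent kernel data
        if rng.random() < 0.5:
            x = rng.getrandbits(64)             # arbitrary 64-bit host value
        else:
            x = rng.randrange(-4, 2 * BUF_LEN)  # values near the domain boundary
        handle_validated(mem, x)
        if any(mem[BUF_LEN:]):  # secure-state predicate for I
            violations += 1
    return violations


if __name__ == "__main__":
    print(fuzz_campaign(20_000))  # 0
```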

Theorem 3 implies that a “perfectly hardened” TDX guest requires strict validation of every host-provided parameter. Although total coverage in fuzzing can be elusive, an extended period with zero discovered bugs increases confidence that the kernel indeed enforces such complete mediation at all security boundaries.

3. The TDX Guest Fuzzing Framework

In this section, we present the Intel TDX Guest Fuzzing Framework. The goal is to systematically uncover security flaws in a TDX guest by launching a broad set of test inputs that emulate untrusted host data [20]. Our method combines static analysis, which identifies code paths that handle host-provided values, with a dynamic fuzzing engine that targets those surfaces. Unlike conventional kernel fuzzers (e.g., syzkaller, kAFL), which can directly instrument or intercept system calls in a fully transparent manner, our TDX-specific design requires (1) hooking hypercalls and paravirtualized device queues at carefully controlled entry points, (2) preserving TDX isolation properties in snapshot management, and (3) maintaining a trust boundary that prevents direct host modification of guest memory. These adaptations provide new ways to fuzz privileged TDX guest pathways while meeting TDX hardware assumptions.

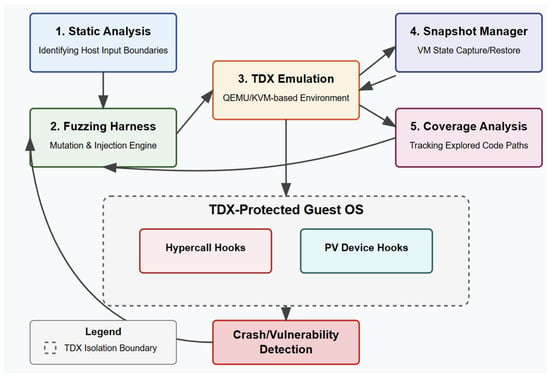

The framework includes four main components: (1) a static analysis stage that identifies code consuming host-supplied data [4]; (2) a fuzzing harness that injects randomized test inputs and quickly resets the guest after each run [21]; (3) a TDX emulation layer that behaves like TDX hardware; and (4) a monitoring system that captures crashes or anomalies for subsequent analysis. Each component is essential for exploring the relevant kernel pathways and detecting hidden vulnerabilities.

Figure 1 provides a high-level flow diagram of our TDX Guest Fuzzing Framework, illustrating how each component (static analysis, fuzz input generation, snapshot manager, execution, and crash reporting) integrates into a cohesive workflow.

Figure 1. TDX Guest Fuzzing Framework.

First, we locate potential attack surfaces by scanning the TD guest code for untrusted inputs. For TDX guest OSes, Intel recommends systematically flagging points where data crosses from the host into the TD [7]. We follow a similar strategy, using a static analyzer such as Smatch with a custom rule, check_host_input, to identify suspicious data flows, such as when the kernel reads values via TDVMCALL, processes shared memory buffers, or interprets hypervisor-provided device metadata [7]. The static analyzer highlights lines where these variables are used as array lengths, pointers, or parameters in security checks. Although false positives and false negatives can occur, our subsequent fuzzing helps confirm which paths are genuinely at risk.
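The idea behind a check_host_input-style rule can be sketched as a small forward taint pass. The sketch below is not Smatch's implementation or API: it runs over a hypothetical three-address representation of straight-line code, marks values obtained from the host (for example, TDVMCALL results) as tainted, propagates taint through copies, clears it after an explicit bounds check, and reports statements that use a still-tainted value as an index.

```python
from typing import List, Set, Tuple

# Hypothetical three-address statements:
#   ("host_read", dst, source)   value obtained from the host (e.g. a TDVMCALL result)
#   ("assign", dst, src)         copy; propagates taint
#   ("bounds_check", var)        comparison that validates var
#   ("index", array, var)        var used as an array index or length
Stmt = Tuple[str, ...]


def check_host_input(stmts: List[Stmt]) -> List[int]:
    """Return 1-based statement numbers where unvalidated host data is used as an index."""
    tainted: Set[str] = set()
    findings: List[int] = []
    for lineno, stmt in enumerate(stmts, start=1):
        op = stmt[0]
        if op == "host_read":
            tainted.add(stmt[1])
        elif op == "assign":
            dst, src = stmt[1], stmt[2]
            if src in tainted:
                tainted.add(dst)
            else:
                tainted.discard(dst)  # overwritten with trusted data
        elif op == "bounds_check":
            tainted.discard(stmt[1])
        elif op == "index" and stmt[2] in tainted:
            findings.append(lineno)
    return findings


EXAMPLE: List[Stmt] = [
    ("host_read", "nvec", "tdvmcall"),  # 1: value returned by the host
    ("assign", "n", "nvec"),            # 2: taint flows to n
    ("index", "table", "n"),            # 3: flagged, n not yet validated
    ("bounds_check", "n"),              # 4: validation clears the taint on n
    ("index", "table", "n"),            # 5: not flagged
]

if __name__ == "__main__":
    print(check_host_input(EXAMPLE))  # [3]
```

On the example, only statement 3 is flagged: n inherits taint from the TDVMCALL result nvec and is used as an index before the check in statement 4. A real analyzer must also handle branches, aliasing, and inter-procedural flows, which is where the false positives and false negatives mentioned above arise.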

Next, we address the challenge of running TDX-like guests on standard hardware. At the time of our work, TDX-capable CPUs were not broadly available, so we built a software emulator for TDX functionality in QEMU/KVM [20,22]. This emulator closely models TDX architectural constraints, but real TDX hardware may exhibit subtle or additional behaviors that the emulation does not capture. The minimal TDX module emulator intercepts TDX-specific operations (for instance, creating or shutting down trust domains) and mimics how TDX hardware enforces isolation [7]. When the guest kernel executes an operation that TDX forbids (e.g., accessing certain MSRs), the emulator injects a virtualization exception (#VE). The approach is not secure in a production sense, because the host can still view guest memory. However, it captures TDX semantics closely enough for fuzz testing and lets us observe coverage and intercept crashes at the hypervisor level [7].
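The emulator's core decision reduces to choosing between executing a trapped guest operation and injecting a #VE. The sketch below illustrates that decision for MSR accesses. The policy table, its placeholder MSR indices, and the function names are all hypothetical. They do not reflect the real TDX MSR policy or the emulator's actual code.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* What the (hypothetical) emulator does with a trapped guest access. */
enum emu_action { EMU_EXECUTE, EMU_INJECT_VE };

/* Hypothetical policy: MSR indices treated as TDX-forbidden. The values
 * are placeholders, not the architectural TDX policy. */
static const uint32_t forbidden_msrs[] = { 0x1000, 0x1001, 0x2000 };

static bool msr_is_forbidden(uint32_t msr)
{
    for (size_t i = 0; i < sizeof(forbidden_msrs) / sizeof(forbidden_msrs[0]); i++)
        if (forbidden_msrs[i] == msr)
            return true;
    return false;
}

/* Decide how to handle a trapped RDMSR/WRMSR. Forbidden accesses are
 * turned into a #VE for the guest's handler, and the injection is
 * counted so it can be reported with coverage and crash data. */
enum emu_action emulate_msr_access(uint32_t msr, unsigned *ve_count)
{
    if (msr_is_forbidden(msr)) {
        (*ve_count)++;
        return EMU_INJECT_VE;
    }
    return EMU_EXECUTE;
}
```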

Our harness relies on in-guest hooks that intercept TDX hypercall returns and paravirtual device buffers, substituting fuzzed data at the point of consumption. This allows all host-driven parameters, including virtio rings and TDVMCALL return codes, to be mutated without violating TDX isolation guarantees. By focusing on these boundaries, we maximize coverage of TDX-specific code paths and concurrency events.
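A consumption-point hook of this kind can be sketched as follows. This is a hypothetical illustration, not the framework's actual tdx_fuzz hook. The `struct fuzz_input` layout and `fuzz_hook_u64` are assumed names. The hook returns the host's value unchanged unless fuzzing is enabled and enough input remains, in which case the next 8 bytes of the fuzz buffer replace it.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical per-iteration fuzz input visible to the in-guest agent. */
struct fuzz_input {
    const uint8_t *data;
    size_t len;
    size_t pos;        /* bytes already consumed in this iteration */
    int enabled;       /* 0 = pass host values through unchanged */
};

/* Called where the guest consumes a host-supplied 64-bit value (for
 * example, a TDVMCALL output register). Successive calls consume
 * successive 8-byte chunks, so one input can drive several hypercalls. */
uint64_t fuzz_hook_u64(struct fuzz_input *in, uint64_t host_value)
{
    uint64_t v;
    if (!in->enabled || in->len - in->pos < sizeof(v))
        return host_value;                 /* nothing to substitute */
    memcpy(&v, in->data + in->pos, sizeof(v)); /* alignment-safe read */
    in->pos += sizeof(v);
    return v;
}
```

Placing the substitution inside the guest means the host never writes guest-private memory. The fuzz bytes only replace values that the host could legitimately have returned anyway.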

Because kernel fuzzing is computationally heavy, we use snapshot-based techniques to quickly revert the VM state for each fuzz iteration. This practice is well established in the kernel fuzzing literature [23] and yields high throughput by avoiding full reboots. We place snapshots just before the guest handles TDX-critical inputs, such as hypercall results or device descriptors, to accelerate exploration of TDX-specific paths.
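The snapshot-restore cycle can be sketched with a toy model in which "guest state" is a small struct and a snapshot is a copy of it. Every name here (`struct vm_state`, `toy_target`, `snapshot_fuzz_loop`) is hypothetical. A real snapshot covers all VM memory, vCPU registers, and device state, and the mutation line is only a deterministic placeholder for the fuzzer's mutator.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Toy stand-in for guest state: some memory plus one register. */
struct vm_state {
    uint8_t mem[256];
    uint64_t rip;
};

/* A target runs the "guest" on one input; a nonzero return means crash. */
typedef int (*target_fn)(struct vm_state *vm, uint8_t input);

/* Toy target: dirties guest state, crashes on input 0, and reports a
 * different failure if it sees state left over from a previous run. */
int toy_target(struct vm_state *vm, uint8_t input)
{
    if (vm->mem[0] != 0 || vm->rip != 0)
        return 2;                 /* stale state: restore did not happen */
    vm->mem[0] = input;
    vm->rip += 1;
    return input == 0 ? 1 : 0;
}

/* Run `iters` iterations, each from the same snapshot, and return the
 * number of iterations that reported a crash. */
size_t snapshot_fuzz_loop(struct vm_state *vm, target_fn run, size_t iters)
{
    struct vm_state snapshot;
    size_t crashes = 0;
    memcpy(&snapshot, vm, sizeof(snapshot));       /* take snapshot once */
    for (size_t i = 0; i < iters; i++) {
        memcpy(vm, &snapshot, sizeof(*vm));        /* restore, no reboot */
        uint8_t input = (uint8_t)(i * 37u + 11u);  /* placeholder mutation */
        if (run(vm, input) != 0)
            crashes++;
    }
    memcpy(vm, &snapshot, sizeof(*vm));            /* leave VM at snapshot */
    return crashes;
}
```

Because 37 is odd, the placeholder inputs for 256 iterations cover every byte value exactly once. The toy target therefore crashes exactly once, and no "stale state" failures occur as long as every iteration restores the snapshot.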

Although user-space solutions, like syzkaller, can generate system calls, we found it simpler to write small programs and kernel modules to induce the behaviors we wanted to fuzz. For example, to test the virtio-blk I/O paths, we run a script that performs disk reads until the guest driver requests data from the host buffer. At that moment, we trigger a snapshot that transitions control to a specialized fuzzer (KF/x style), which injects random content into the DMA region. The kernel processes the data, potentially revealing memory errors or logic flaws in the driver. This layered approach, kAFL for broad coverage and KF/x for deeper driver fuzzing, proved effective for discovering bugs in both hypercall handlers and paravirtual device code.

As the fuzzer runs, it relies on coverage instrumentation to measure how many code paths are exercised. We compile the TDX guest kernel with coverage instrumentation (e.g., KCOV) so that each newly visited path is recorded and used to steer future mutations. This approach follows standard kernel fuzzing logic, but our hooks specifically target the TDX transitions and #VE triggers. Coverage data helps confirm that concurrency and paravirtual driver paths are adequately tested, even under restricted memory visibility.
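The feedback step can be sketched as an AFL-like bitmap keyed on basic-block addresses. KCOV itself only exports a raw trace of program counters, so the hashing into a bitmap and the "new coverage" decision shown here are assumptions about how such a trace might be consumed, not the framework's code. Hash collisions can hide a genuinely new block, which is an accepted trade-off in bitmap-based fuzzers.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MAP_SIZE (1u << 16)

/* Global record of blocks seen in any previous run. */
static uint8_t seen_map[MAP_SIZE];

/* Map a basic-block address (e.g., a PC from a KCOV trace) to a slot. */
static size_t block_slot(uint64_t pc)
{
    return (size_t)((pc ^ (pc >> 16)) % MAP_SIZE);
}

/* Merge one run's PC trace into the global map and report whether any
 * previously unseen block was hit; such inputs are kept in the corpus
 * and mutated further. */
bool merge_trace(const uint64_t *pcs, size_t n)
{
    bool fresh = false;
    for (size_t i = 0; i < n; i++) {
        size_t s = block_slot(pcs[i]);
        if (!seen_map[s]) {
            seen_map[s] = 1;
            fresh = true;
        }
    }
    return fresh;
}
```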

We configure the guest kernel with KASAN, kernel panic reporting, and #VE diagnostics to capture memory-safety errors. Injecting mutated inputs in a TDX context diverges from typical kernel fuzzing in two ways. First, TDX prevents the hypervisor from directly manipulating guest memory, so we implemented an in-guest hooking layer that intercepts hypercall returns and paravirtual device buffers at the exact boundary where the guest consumes them. Second, we designed a TDX-aware snapshot scheme that does not violate TDX encryption constraints and maintains correct TDX state across fuzzing iterations. These two mechanisms are essential for fuzzing TDX guests and distinguish our approach from standard snapshot-based fuzzers.

Robust crash detection is vital. Because we fuzz the kernel, a bug may manifest as a page fault, a kernel panic, or a KASAN report of a memory access violation. When the guest hits such an error, it invokes tdx_fuzz_event, a function that signals the hypervisor to save logs and store the input that caused the crash. Each unique failing input is therefore preserved for offline analysis, so root causes can be isolated quickly. We then replay the input to confirm that it triggers the same bug, which filters out spurious or nondeterministic faults.
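The replay filter can be expressed as a small classification over the crash signatures observed when the saved input is re-run. The sketch assumes each replay yields a 64-bit signature, with 0 meaning "no crash". The enum and function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

enum repro_verdict { REPRO_STABLE, REPRO_FLAKY, REPRO_NONE };

/* Compare the signature of the original crash with those seen on n
 * replays of the same input. STABLE: every replay hit the same bug;
 * FLAKY: only some did (likely timing-dependent); NONE: none did. */
enum repro_verdict classify_replays(uint64_t original_sig,
                                    const uint64_t *replay_sigs, size_t n)
{
    size_t matches = 0;
    if (n == 0)
        return REPRO_NONE;          /* nothing replayed, nothing confirmed */
    for (size_t i = 0; i < n; i++)
        if (replay_sigs[i] == original_sig)
            matches++;
    if (matches == n)
        return REPRO_STABLE;
    return matches == 0 ? REPRO_NONE : REPRO_FLAKY;
}
```

In a workflow like the one described, STABLE inputs go straight to triage. FLAKY ones are kept but flagged as possible races rather than discarded.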

While coverage and crash detection form the backbone of the framework, we must guard against false positives. Kernel logs or warnings that do not compromise correctness are not treated as vulnerabilities. We rely primarily on critical fault conditions (general protection faults, double faults, and panics) to identify security-relevant errors. KASAN instrumentation further refines detection by catching memory corruption before it propagates into silent data overwrites. Our harness, combined with the TDX emulator, thus provides a comprehensive view of how malicious host inputs might break the TD’s protections. Note, however, that our proof of concept ran in an emulated TDX environment. Emulation provides full observability for debugging and allows faster iteration. However, it does not replicate the hardware-level enforcement and microarchitectural nuances of genuine TDX CPUs. Validating the approach on real TDX processors is therefore an essential next step toward confirming the framework’s completeness and fidelity under true hardware isolation.

Controlling input generation is crucial. By default, kAFL mutates the corpus with random bit flips and arithmetic increments, guided by coverage feedback. During boot-time fuzzing, a single input buffer often supplies several sequential hypercall return values, so the fuzzer can manipulate the data returned by each call. Similarly, for device-level fuzzing, each iteration modifies the contents of a DMA buffer. The fuzzer has no semantic knowledge of TDX or device protocols. Even so, coverage feedback tends to drive it toward the boundary values and corner cases that lead to crashes. Static analysis results also help interpret fuzzing outcomes: knowing which kernel functions are likely sensitive to size fields, alignment constraints, or pointer references speeds up post-crash diagnosis.
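The two default mutation families mentioned above can be sketched as follows. This is an illustration in the style of AFL-like fuzzers, not kAFL's implementation. The xorshift32 generator, the ±1..35 delta range, and the function names are assumptions chosen for a small, reproducible example.

```c
#include <stddef.h>
#include <stdint.h>

/* Small deterministic PRNG (xorshift32). The seed must be nonzero,
 * because a zero state stays zero forever. */
static uint32_t xorshift32(uint32_t *s)
{
    uint32_t x = *s;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    return *s = x;
}

/* Bit-flip mutation: flip exactly one randomly chosen bit of the buffer. */
void mutate_bitflip(uint8_t *buf, size_t len, uint32_t *seed)
{
    if (len == 0)
        return;
    size_t bit = xorshift32(seed) % (len * 8);
    buf[bit / 8] ^= (uint8_t)(1u << (bit % 8));
}

/* Arithmetic mutation: add a nonzero delta in [-35, 35] to one random
 * byte; the result wraps modulo 256. */
void mutate_arith(uint8_t *buf, size_t len, uint32_t *seed)
{
    if (len == 0)
        return;
    size_t pos = xorshift32(seed) % len;
    int delta = (int)(xorshift32(seed) % 35) + 1;   /* 1..35 */
    if (xorshift32(seed) & 1)
        delta = -delta;
    buf[pos] = (uint8_t)(buf[pos] + delta);
}
```

Applied to a buffer of packed hypercall return values, a single bit flip in a length field can jump it from a plausible value to an extreme one. This is the kind of boundary value that exposed the bugs reported in Section 4.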

Together, these elements create a robust environment for testing the TDX guest kernels. By isolating all host-driven interfaces, injecting fuzz inputs at those boundaries, and resetting the VM state for each test iteration, we exercise the code under realistic TDX constraints. Moreover, the instrumentation ensures that newly explored paths are recognized and exploited, increasing the likelihood of uncovering rare but dangerous bugs. In the following section, we detail our experimental design and results, including the specific vulnerabilities identified when applying this framework to a TDX-enabled Linux kernel.

Another critical advantage of the TDX emulator approach is that we can easily observe memory and control flow from the host side. In actual TDX hardware, the hypervisor cannot inspect guest memory. However, for fuzz testing, this visibility is valuable: we can track precise coverage metrics and intercept faulting instructions. That transparency speeds up vulnerability triage and the generation of patches. While it means the environment is not strictly identical to real TDX hardware, prior work has indicated that functional testing in an emulator reliably exposes the same kernel logic flaws [20,22].

A final note on performance: snapshot-based fuzzing generally improved iteration rates by orders of magnitude compared to a full reboot cycle. For instance, early-boot fuzzing can achieve hundreds of tests per second, whereas a complete boot might take a second or longer, limiting throughput to a few runs per second. Given that coverage-based fuzzing often requires millions of trials to explore rare execution paths, the ability to jump directly to a crucial checkpoint is pivotal [23]. We likewise employ partial snapshot harnesses for mid-boot driver initialization or user-space transitions, enabling incremental coverage of distinct phases. This multi-phase approach ensures that seldom-invoked code, such as certain device fallback routines or error paths, is adequately exercised.
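To make the throughput gap concrete, the wall-clock time $T$ to execute $N$ trials at a rate of $r$ executions per second is $T = N/r$. The numbers below are illustrative round values taken from the ranges above (a few hundred executions per second with snapshots, about one with full reboots), not additional measurements:

$$
T = \frac{N}{r}, \qquad
\frac{10^{6}}{250\ \text{s}^{-1}} = 4\,000\ \text{s} \approx 1.1\ \text{h}, \qquad
\frac{10^{6}}{1\ \text{s}^{-1}} = 10^{6}\ \text{s} \approx 11.6\ \text{days}.
$$

A million-trial campaign thus fits in about an hour with snapshots, but would take well over a week with full reboots.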

Our TDX Guest Fuzzing Framework uses static analysis to highlight lines where untrusted data enters the guest kernel. It then applies snapshot-based fuzzing in a TDX-like environment to exercise those lines with mutated inputs. Hooks embedded in the kernel let us override or mutate host-sourced data as it arrives, replicating adversarial scenarios. Together, coverage feedback, crash detection, and environment instrumentation provide a powerful mechanism for uncovering flaws that could undermine TDX isolation. Next, we demonstrate the framework's efficacy through a concrete evaluation on a TDX-enabled Linux guest. The evaluation reveals multiple security-critical bugs and confirms the need for robust input validation in confidential VMs.

The framework not only addresses TDX-specific constraints, such as the prohibition on direct host modification of guest memory, but also generalizes to other confidential computing environments where lower-layer inputs cannot be trusted. Although we used Intel TDX emulation here, a similar approach could be applied to AMD SEV-SNP or other hardware-isolated guests. In all cases, fuzzing the boundary between guest and host shows that even advanced encryption features are insufficient if the software wrongly assumes that unverified parameters are benign. By closing those gaps, we reinforce TDX’s promise of secure, confidential VMs.

4. Empirical Evaluation and Results

To validate the effectiveness of our fuzzing framework, we conducted an empirical evaluation using a representative Intel TDX guest setup in a controlled environment. The evaluation had two main objectives: (1) to demonstrate that the framework can successfully run a TDX guest and perform high-throughput fuzz testing; and (2) to assess how many and what kinds of vulnerabilities (if any) the fuzzer can discover in the TDX guest. We also aimed to compare the empirical outcomes (like coverage achieved and bugs found) with our theoretical expectations.

4.1. Experimental Setup

This section describes the experimental setup: hardware configuration, software stack, measurement strategy, and fuzzing workflow. Table 1 collects every constant parameter of the testbed in one place. These are the Ice Lake host CPU and its memory budget, the custom host Linux kernel, the QEMU build that includes the TDX emulator, and the guest kernel with KCOV and KASAN instrumentation. The table also lists the size of the encrypted guest RAM, the compiler toolchain, the number of parallel kAFL workers, and the snapshot positions that anchor each fuzzing campaign. This compact view lets the reader confirm that the same baseline held for every experiment. Differences in results therefore stem from changes in the fuzzing target rather than from hidden shifts in the environment.

Table 1.

Experiment Setup Parameters.

We configured the test environment on a commodity server with an Intel Ice Lake CPU that lacks TDX hardware support. The host OS was Ubuntu 22.04 with a custom-compiled Linux 5.15 kernel that includes our TDX KVM module emulator. We launched VMs with QEMU 6.2, modified with TDX support patches. The guest under test was a Linux 5.15 kernel configured in TDX guest mode. It was built with Intel TDX guest support (config option X86_TDX_GUEST enabled), which turns off or alters various drivers and features for TDX. Examples include no direct access to certain MSRs and paravirtual drivers that expect the TDX protocol. We applied our instrumentation patches to this guest kernel, adding the tdx_fuzz hook and the other kAFL agent code described in the methodology. We also enabled GCC coverage flags for the guest kernel to support kAFL’s feedback and turned on KASAN for memory error detection.

The fuzzing campaigns were orchestrated from the host. We used kAFL v0.3, Intel Labs’ open-source coverage-guided fuzzer, together with a custom harness for KF/x-style fuzzing of DMA buffers where needed [7]. kAFL was given four host CPU cores to run parallel fuzzing workers, each controlling its own VM snapshot (kAFL supports multi-core fuzzing to increase throughput). We limited the guest VM to two vCPUs and 1 GB of memory to keep snapshots and runtime light. The TDX module emulator was loaded into the host kernel. It was configured so that any VM launched with a special flag (a custom QEMU machine type, “tdx-test”) was routed through the emulator hooks.

We defined several fuzzing campaigns for different target interfaces as follows:

Campaign A—Boot Hypercalls. This campaign targeted the hypercalls (TDVMCALL instructions) invoked during early boot, e.g., to retrieve CPU information or other metadata from the VMM. We took the snapshot just before the first TDVMCALL and fuzzed until all early-boot calls had completed.

Campaign B—Device Initialization. This campaign targeted device inputs (virtio rings) during boot, focusing on the console (virtio-serial) and network (virtio-net) devices that the kernel initializes. We triggered their initialization and took a snapshot at the moment of the first DMA read.

Campaign C—Virtio-Block I/O. We let the kernel boot fully with a virtio-blk disk attached, then ran a simple file-read program in guest user space to generate disk I/O. We took a snapshot when the block driver was about to read a disk block from shared memory.

Campaign D—Miscellaneous runtime. This campaign exercised the TDX-specific hypercall for random number seeding: TDX guests can request randomness from the hypervisor via a TDVMCALL. We fuzzed the returned values to see whether, for example, extremely low entropy or specific bit patterns cause problems. This may also affect KASLR if the random seed influences it. We also included a test for the clock/paravirtual time interface.

Each campaign ran for 12 to 48 h, totaling roughly a week of fuzzing time. For each run, we logged the total number of iterations, which ranged from 2.1 million (Campaign D) to 4.2 million (Campaign B). We also recorded the average execution speed in executions per second (eps) and took incremental coverage snapshots every two hours. Coverage was tracked with KCOV in block coverage mode, which logs each basic block encountered during execution. We chose block coverage because it makes it simple to correlate code segments with test inputs, and we periodically exported KCOV’s counters to a host-side log.

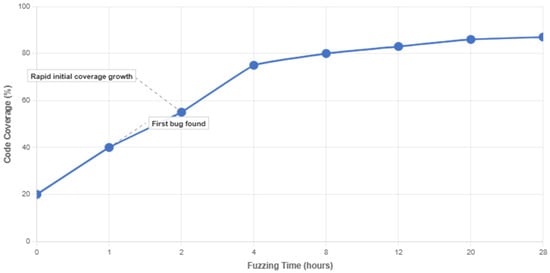

The fuzzer logs cumulative coverage (the number of unique code branches hit) and the number of unique crashing inputs found. We also monitored each run manually to ensure that it progressed smoothly. When a particular input caused the VM to hang, we occasionally adjusted timeouts or snapshot positions. Figure 2 shows the coverage progression for Campaign C, sampled every two hours with KCOV’s basic-block instrumentation. Coverage rose quickly from 20% to 65% within the first four hours, then gradually increased to 90% by hour 28. This plateau reflects the diminishing returns typical of kernel fuzzing: early random mutations quickly reach straightforward paths (and the bugs on them), while rare corner-case paths require more targeted or extended fuzzing. For reference, we ran an unmodified kAFL build in the same QEMU-based environment (without TDX-specific hooking). It reached approximately 10% lower coverage in TDX-centric drivers, likely because it lacked hypercall interception and specialized snapshot points. Moreover, that baseline triggered no concurrency-related #VE injection bugs, suggesting that generic fuzzers cannot easily reach our TDX-oriented pathways.

Figure 2.

Example Coverage Progression Over Time for Campaign C.

As shown, the fuzzer rapidly achieves an initial coverage plateau, followed by incremental improvements in exploring rare or error-handling code paths. Coverage eventually approached 90%, reflecting diminishing returns typically seen in extended fuzzing sessions.

The first metric is coverage: the fraction of instrumented basic blocks or branches in the guest kernel that the fuzzer exercised. We wanted to determine whether fuzzing, together with our harnesses, could reach all the code areas that static analysis identified as handling host input. We tracked coverage over time to follow the fuzzer’s progress. The second metric is the number of distinct crash signatures. Distinctness is determined by hashing the crash location (the instruction pointer and, where available, the call stack), so the same bug encountered multiple times is not over-counted. This metric indicates how many unique vulnerabilities were discovered. The third metric is execution speed, measured as the average number of fuzz iterations (VM runs) per second in each campaign. It depends on snapshot length and on the complexity of the executed code, and it indicates how practical fuzzing is in terms of time. Beyond these metrics, we manually analyzed each crash to verify that it was a real security issue rather than an artifact or a harmless fault. When static analysis predicted an issue that fuzzing did not find, we noted it, since it could be a static-analysis false positive or simply hard to reach. For each real bug, we assessed its security impact, for example whether it could allow the host to breach confidentiality or cause denial of service.
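Crash deduplication by signature can be sketched as hashing the faulting instruction pointer together with the top few return addresses of the call stack. The choice of 64-bit FNV-1a, the `depth` parameter, and the function names are assumptions for illustration; any stable hash works. Limiting the depth keeps one bug reached through slightly different deep call chains from being counted twice.

```c
#include <stddef.h>
#include <stdint.h>

#define FNV_OFFSET 0xcbf29ce484222325ull   /* 64-bit FNV-1a offset basis */
#define FNV_PRIME  0x100000001b3ull        /* 64-bit FNV prime */

/* Fold the 8 bytes of v (least significant first) into hash h. */
static uint64_t fnv1a_u64(uint64_t h, uint64_t v)
{
    for (int i = 0; i < 8; i++) {
        h ^= (v >> (8 * i)) & 0xff;
        h *= FNV_PRIME;
    }
    return h;
}

/* Signature = hash of the faulting IP plus at most `depth` frames. */
uint64_t crash_signature(uint64_t ip, const uint64_t *stack,
                         size_t n, size_t depth)
{
    uint64_t h = fnv1a_u64(FNV_OFFSET, ip);
    for (size_t i = 0; i < n && i < depth; i++)
        h = fnv1a_u64(h, stack[i]);
    return h;
}

/* Return 1 if sig has not been seen before (recording it while space
 * remains in `seen`), or 0 if it is a duplicate. */
int record_unique(uint64_t *seen, size_t *count, size_t cap, uint64_t sig)
{
    for (size_t i = 0; i < *count; i++)
        if (seen[i] == sig)
            return 0;
    if (*count < cap)
        seen[(*count)++] = sig;
    return 1;
}
```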

Figure 3 traces the experiment workflow graphically from snapshot restore through input mutation, guest execution, crash oracle evaluation, and state reversion, clarifying the cyclic nature of high-throughput fuzzing and highlighting the exact points where coverage data are harvested and where KASAN or panic reports trigger crash logging.

Figure 3.

Experimental Procedures Flow.

4.2. Experiment Results

Our experimental evaluation aimed to verify that the Intel TDX Guest Fuzzing Framework can effectively uncover security vulnerabilities in a TDX-enabled guest operating system. We conducted all experiments on a standard Intel server that lacks native TDX hardware extensions, so we built a custom TDX emulation module into KVM. This emulator reproduced the key TDX isolation elements, allowing the guest to operate under conditions that closely mirror a real TDX deployment. We used QEMU to launch a Linux kernel (version 5.15) configured for TDX guest support. The kernel was compiled with coverage instrumentation and with KASAN (Kernel Address Sanitizer) to detect memory safety violations at runtime. Our fuzzing engine was kAFL, an AFL-style fuzzer specialized for kernel fuzzing. We augmented it with snapshot-based testing to drastically reduce the overhead of repeated boots.

We divided the experiments into four main campaigns that cover a broad range of TDX-relevant host–guest interactions. Campaign A focused on early-boot hypercalls, typically issued when the guest requests system metadata (e.g., CPU information, platform configuration) via TDVMCALL instructions during initialization. Campaign B targeted device initialization sequences, particularly for the virtio-serial and virtio-net drivers. These drivers process configuration data from shared memory buffers that the host is assumed to control. Campaign C shifted attention to the virtio-blk driver at runtime, where the guest reads virtual disk contents supplied by the host. Finally, Campaign D probed TDX-specific hypercalls for services such as random number seeding and paravirtual time interfaces.

Crucial to achieving high throughput was our snapshot-based methodology. Rather than rebooting the guest for every test input, we captured a “clean” system state immediately before the relevant interaction (for example, just before the first hypercall or right before device probing) and repeatedly restored from that snapshot. This strategy allowed for hundreds of executions per second in some scenarios, compared to the single-digit rates observed when fully rebooting the guest. In Campaign A, for instance, once the kernel reached a deterministic point where it was about to issue the initial TDVMCALL, the snapshot system saved the memory and CPU registers. Each fuzz iteration mutated the hypercall return data (or associated parameters), then ran the kernel until it finished the boot stage. Afterward, the VM state reverted to the same snapshot for the next iteration. Such an approach significantly boosted the number of tests executed in a 24-h period, reaching millions of distinct input trials and covering code paths deeply embedded in early initialization routines.

Performance Metrics and Coverage: Across all campaigns, we tracked three key metrics: (1) fuzz execution throughput (tests per second); (2) code coverage, measured via branch-level instrumentation; and (3) the number of unique crashes or anomalies. Snapshot-based fuzzing yielded particularly high iteration rates in Campaign A, typically 200 to 300 executions per second. In Campaigns B and C, throughput was lower, on the order of 50 to 100 executions per second, because device initialization and runtime I/O handling are more complex. These rates were nevertheless sufficient to probe a very large number of input combinations over multi-hour or multi-day sessions.

By logging which instrumented branches were executed in the guest kernel, we obtained a consistent measure of coverage progression. After 12 to 48 h of fuzzing per campaign, we typically reached 80% to 90% of the basic blocks in functions that static analysis identified as handling untrusted host inputs. These included code that parses hypercall return structures, processes shared memory buffers in virtio drivers, and handles TDX-specific virtualization exceptions (#VE). The remaining unreached blocks were largely tied to hardware features we had disabled or did not emulate (e.g., GPU pass-through, advanced debugging registers, or specialized cryptographic launch routines). Coverage rose quickly during the first few hours of each campaign. It then plateaued as the fuzzer exhausted the most straightforward paths and relied on random mutations to reach more obscure states.

We monitored coverage until it stabilized and recorded all crashes for offline analysis. To quantify the findings, we followed a statistical approach similar to standard kernel fuzzing benchmarks. For each bug, we computed the average time to first crash by averaging its first-discovery timestamps across the campaigns that triggered it. We also computed the distribution of fuzz iteration counts at the moment each unique crash first appeared. These measurements show how quickly the fuzzer discovered vulnerabilities. They are consistent with snapshot-based testing yielding faster coverage gains than full-boot fuzzing.
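The averaging step described above can be written as a small helper. This sketch assumes that each campaign reports the elapsed hours at which it first hit a given bug, with a negative value meaning the campaign never triggered it. The function name and this encoding are hypothetical.

```c
#include <stddef.h>

/* Mean time to first crash for one bug. first_hit_hours[i] is the
 * elapsed time (h) at which campaign i first triggered the bug, or a
 * negative value if it never did. Returns the mean over the campaigns
 * that triggered the bug, or -1.0 if none did. */
double mean_time_to_first_crash(const double *first_hit_hours, size_t n)
{
    double sum = 0.0;
    size_t hits = 0;
    for (size_t i = 0; i < n; i++) {
        if (first_hit_hours[i] >= 0.0) {
            sum += first_hit_hours[i];
            hits++;
        }
    }
    return hits ? sum / (double)hits : -1.0;
}
```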

Vulnerabilities Found: Over the course of these campaigns, we identified five distinct vulnerabilities. Each corresponds either to a lapse in validating data from the untrusted host or to a logic flaw triggered by rare event sequences. In particular, the concurrency-related double-#VE bug shows that a coverage model accounting for event-based transitions (as highlighted in Theorem 2) holds in practice. The fuzzer eventually triggered the double-#VE sequence after roughly 1.2 million test iterations. This illustrates that TDX-specific concurrency paths, though rare, are still reachable, consistent with the probabilistic completeness argument.

- Out-of-Bounds Write in Virtual PCI Configuration. During device initialization, the guest read a hypervisor-supplied value specifying the number of MSI-X interrupt vectors. A maliciously large value led the guest to allocate an array without proper bounds checking and then overflow it while writing to it. The fuzzer triggered this after ~400k inputs in Campaign B by supplying a large MSI-X vector count via a hypercall return. We patched the kernel’s PCI driver to validate vector bounds before allocation. This flaw directly violates TDX assumptions: the guest must not trust enumerated hardware parameters that originate in the host.

- Null Pointer Dereference on Hypercall Error Code. In the TDVMCALL for random number seeding, the guest’s error-handling logic expected only standard return codes. After ~250k inputs in Campaign D, the fuzzer supplied an unexpected error code that bypassed these assumptions. The fallback path then dereferenced a null pointer and crashed the kernel. Our fix defaults to a safe error routine. Although the bug does not compromise confidentiality, a malicious host could trigger it repeatedly to deny service to the TD guest.

- Virtio-Net Arithmetic Overflow. In the virtio-net fuzz campaign, the fuzzer mutated the packet descriptor fields that specify how many segments make up a single network packet. With extreme values in these fields, an arithmetic overflow in the length calculation let the code accept a packet larger than the allocated buffer, leading to a memory overrun. The bug was found after around 600k inputs in Campaign C, and the patch enforces strict upper bounds on segment counts and lengths. Notably, static analysis initially missed this issue, underscoring the value of dynamic testing for arithmetic edge cases.

- KASLR Entropy Misuse. A non-crashing, logic-based vulnerability emerged in the Linux TDX guest’s KASLR implementation. When a specific hypercall flag was set, host-provided data could influence part of the entropy used to randomize the kernel base address. A hostile host could therefore guess or reduce the effective randomness, facilitating exploitation of other memory safety bugs. The issue was detected after ~700k inputs in Campaign A, once extended runs mutated the seed portion of the hypercall data. We fixed it by removing host-influenced bits from the entropy pool. Although it is not a memory corruption, it compromised a key security control within the guest.

- Double #VE Injection Deadlock. The kernel was already handling a #VE triggered by an unsupported operation when another #VE was injected at a particular point. This caused a deadlock that locked up the guest. The bug emerged during concurrency tests after ~1.2 million inputs in Campaign A. The patch revised the nested exception handler to avoid taking locks in an unsafe context. Although the bug does not directly give the host access to secret data, this state machine error could be abused for repeated denial-of-service attacks, particularly in cloud scenarios where availability is paramount.
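The validation that fixes the first and third findings can be sketched as two small checks. These are not the actual kernel patches. `DRV_MAX_MSIX_VECTORS`, `msix_count_ok`, and `segments_fit` are hypothetical names, and the limit is an illustrative value. The segment check accumulates in 32-bit arithmetic on purpose, to show how an unchecked sum of host-supplied lengths can wrap to a small value that falsely appears to fit.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical driver limit on MSI-X vectors (not the kernel's value). */
#define DRV_MAX_MSIX_VECTORS 64u

/* Validate a host-reported MSI-X vector count before sizing an array. */
bool msix_count_ok(uint32_t host_count)
{
    return host_count > 0 && host_count <= DRV_MAX_MSIX_VECTORS;
}

/* Sum host-supplied segment lengths without wrap-around and check the
 * total against the buffer actually allocated. Rejects too many
 * segments, any addition that would overflow, and oversized totals. */
bool segments_fit(const uint32_t *seg_len, size_t nseg,
                  size_t max_segs, uint32_t buf_size)
{
    uint32_t total = 0;
    if (nseg > max_segs)
        return false;
    for (size_t i = 0; i < nseg; i++) {
        if (seg_len[i] > UINT32_MAX - total)   /* would wrap: reject */
            return false;
        total += seg_len[i];
    }
    return total <= buf_size;
}
```

Without the overflow check, lengths of 0xFFFFFFF0 and 0x20 would sum to 0x10 in 32-bit arithmetic and pass a 4 KiB size check. With the check, the pair is rejected before any copy takes place.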

Each of these vulnerabilities was confirmed with reproducible test inputs, showing that they were genuine software flaws rather than fuzzing artifacts. After discovery, we performed additional manual audits to assess exploitability. The memory corruption bugs are the most serious, especially the out-of-bounds write in MSI-X vector handling and the virtio-net buffer overrun. They could feasibly allow a determined adversary with hypervisor control to manipulate guest OS memory, potentially leading to full guest compromise and negating TDX’s isolation guarantee. The other flaws were mainly denial-of-service or logic issues, which are still security-critical in high-availability cloud contexts.

Impact of Patching and Retesting: After fixing each vulnerability, we re-ran the fuzzing campaigns under identical conditions for another 24 h each, and no new crashes were reported. Coverage remained comparable to the earlier peaks. This indicates that the previously vulnerable code paths were still exercised but no longer triggered erroneous behavior. In some cases, such as the virtio-net arithmetic overflow fix, we verified that the revised driver logic now rejects impossible segment lengths. These results align with Theorem 3 of our theoretical analysis: when every host-sourced input is comprehensively validated, the host can no longer coerce the guest into an insecure state through those inputs.

Observations on Snapshot Utility: Across all campaigns, snapshot-based fuzzing was essential to achieving the coverage necessary to find subtle bugs, especially those lurking in complex initialization routines or requiring specific event sequences. For example, the #VE reentrancy bug arose only when the fuzzer rapidly replayed sequences of exceptions with slight mutations in timing and payload. Without snapshot restoration, replicating these conditions consistently would have been impractical. By contrast, the simpler buffer overflow vulnerabilities were often detected early—sometimes within the first few hours—because even random large values caused immediate crashes in the unprotected code.

False Positives, Misconfigurations, and Limitations: We encountered few false positives. Our instrumentation was configured to treat kernel panics, BUG traps, and KASAN alerts as genuine crashes. Typical benign warnings or unsupported feature logs did not halt fuzzing. However, one challenge was nondeterministic kernel hangs, occasionally triggered by race conditions. We mitigated this by setting timeouts for each test run and re-checking any “hang” input to confirm consistent behavior. Another limitation is that some specialized code, such as GPU passthrough or advanced performance monitoring, lay outside our test scope, leaving coverage partial in those areas. Furthermore, since our TDX environment was emulated, certain microarchitectural facets—particularly side-channel leak vectors—remained untested. Addressing side-channels would require separate instrumentation, as no functional crash is induced when data is leaked via timing or resource contention.

Table 2 summarizes the results of the four fuzzing campaigns. Values reproduce the ranges and counts stated in the text; a dash indicates that the text gives no explicit figure.

Table 2.

Campaign Results Summary.

4.3. Comparison with Existing Kernel Fuzzers

Conventional kernel fuzzers, such as syzkaller and kAFL, have been highly successful at uncovering critical security flaws in mainstream Linux kernels. They often rely on direct host access to inject system calls into the running kernel, or they instrument kernel code globally. Under Intel TDX, however, the host is considered untrusted and cannot freely observe or modify guest memory. This is a significant obstacle for frameworks that expect full control over the kernel address space. As a result, fuzzing strategies that assume direct memory access or straightforward hypercall injection do not carry over cleanly to TDX-protected environments. Unless such tools are substantially revised to respect TDX isolation guarantees, they may fail to test code paths that depend on the interplay between TDX hardware controls and paravirtual device interfaces.

AMD SEV fuzzing efforts share a conceptual focus on encrypted memory. Intel TDX, however, introduces additional layers of complexity that call for more specialized fuzzing. Under TDX, virtualization exceptions are handled through distinct routines, and the guest OS must use paravirtual device drivers that differ from those used in SEV contexts. Any existing SEV fuzzing solution would therefore need significant adaptation before it could reliably reach TDX hypercall pathways or reproduce concurrency states triggered by nested virtualization exceptions. Our framework addresses these TDX-specific pathways by embedding hooks that capture and mutate hypercall return values. The hooks respect TDX encryption constraints and do not violate the hardware boundary.

We also incorporate a snapshot-based concurrency testing feature designed to detect race conditions and nested exceptions within the TDX. Unlike syzkaller, which typically injects system calls from a user-space vantage point, our approach reverts the entire TDX guest to a snapshot just before it processes host-supplied data through paravirtual queues or hypercalls. This rapid restore mechanism allows repeated injection of adversarial input patterns under precise timing conditions, thereby facilitating the discovery of concurrency-driven bugs that might remain elusive under ordinary fuzzing. Conventional kernel fuzzers can miss such scenarios when the host is barred from stepping into the guest memory space or controlling the exception injection at the TDX module level.
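The snapshot-and-revert loop can be illustrated with a toy model in which the guest state is an ordinary Python object. The sketch is hypothetical: `GuestState`, `boot_to_interaction_point`, `handle_host_input`, and the planted bug are invented for illustration, and `copy.deepcopy` stands in for the VM-level snapshot and restore that a real TDX emulator would perform. What it shows is the control flow: take one snapshot immediately before host-supplied data is consumed, then restore it at the start of every iteration so each input runs against an identical pre-interaction state.

```python
import copy
import random
from dataclasses import dataclass, field


@dataclass
class GuestState:
    """Toy stand-in for TDX guest memory and driver state (hypothetical)."""
    rx_buffer: list = field(default_factory=lambda: [0] * 16)
    packets_seen: int = 0


class GuestCrash(Exception):
    """Models a guest fault such as an out-of-bounds write."""


def boot_to_interaction_point() -> GuestState:
    """Hypothetical: a real harness would boot the TD until its first read of host data."""
    return GuestState()


def handle_host_input(state: GuestState, host_len: int) -> None:
    """Toy driver path that trusts a host-supplied length (the planted bug)."""
    state.packets_seen += 1
    for i in range(host_len):  # host_len is never checked against the buffer size
        if i >= len(state.rx_buffer):
            raise GuestCrash(f"out-of-bounds write at index {i} (host_len={host_len})")
        state.rx_buffer[i] = 0xAA


def fuzz(iterations: int, seed: int = 0) -> list:
    """Restore the snapshot, inject one adversarial length, record any crash."""
    rng = random.Random(seed)
    snapshot = boot_to_interaction_point()  # taken once, just before host data is consumed
    crashes = []
    for _ in range(iterations):
        state = copy.deepcopy(snapshot)  # restore: identical pre-interaction state each time
        host_len = rng.randrange(0, 64)  # adversarial host-supplied value
        try:
            handle_host_input(state, host_len)
        except GuestCrash as exc:
            crashes.append((host_len, str(exc)))
    return crashes
```

For example, `fuzz(500, seed=3)` returns a list of (length, message) pairs whose lengths all exceed 16, the toy buffer size. Because the state is restored before every input, each crash is attributable to a single input rather than to state accumulated across iterations.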

Our framework further addresses restricted visibility by embedding instrumentation within the TDX guest itself. Since the TDX forbids the host from inspecting guest memory, we rely on an in-guest hooking model that intercepts critical transitions and relays coverage data back through safe channels. This approach stands in contrast to off-the-shelf solutions that log kernel behavior from the hypervisor’s perspective. By shifting the instrumentation inside the protected environment, we can collect granular coverage information without undermining the TDX’s confidentiality guarantees.
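In-guest instrumentation commonly records edge coverage in a compact bitmap, so that only the bitmap, rather than guest memory in general, has to leave the protected environment. The sketch below assumes the well-known AFL-style edge hashing scheme; the text above does not state which coverage encoding the framework uses, and the class names `EdgeCoverage` and `CorpusCoverage`, the block IDs, and the map size are illustrative.

```python
MAP_SIZE = 1 << 16  # bitmap size commonly used by AFL-style instrumentation


class EdgeCoverage:
    """In-guest side (hypothetical): AFL-style edge index = cur_block ^ prev."""

    def __init__(self):
        self.reset()

    def reset(self):
        self.bitmap = bytearray(MAP_SIZE)  # per-run hit counters, saturating at 255
        self.prev = 0

    def hit(self, block_id: int):
        """Called at each instrumented basic block with a compile-time random ID."""
        idx = (block_id ^ self.prev) % MAP_SIZE
        if self.bitmap[idx] < 255:
            self.bitmap[idx] += 1
        self.prev = block_id >> 1  # shift so edges A->B and B->A map to different entries


class CorpusCoverage:
    """Fuzzer side (hypothetical): which bitmap entries have ever been hit."""

    def __init__(self):
        self.seen = set()

    def merge(self, bitmap: bytearray) -> set:
        """Return the entries that are new in this run; non-empty means keep the input."""
        hit = {i for i, count in enumerate(bitmap) if count}
        new = hit - self.seen
        self.seen |= new
        return new
```

Shifting `prev` right by one keeps the edges A→B and B→A distinct, and a non-empty result from `merge` is the usual signal for adding the current input to the corpus.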

In our experiments, we observed that a minimally adapted kAFL instance achieved less code coverage of the TDX-specific kernel routines and failed to trigger several concurrency-related bugs that hinged on nested virtualization exceptions. Our snapshots and the TDX-aware hooking approach provided a deeper exploration of sensitive code paths and uncovered vulnerabilities that would likely remain hidden if we treated the TDX guest as a conventional kernel. These results suggest that focusing on the TDX’s unique hardware constraints and paravirtual channels produces a more thorough vulnerability assessment compared to simply repurposing existing fuzzers without architectural modifications.

Table 3 compares the proposed Intel TDX Guest Fuzzing Framework with three widely cited baselines. Qualitative scores follow the language already used in the manuscript: high means the criterion is fully met, medium indicates partial support, and low highlights a clear shortfall. The narrative evidence for each score appears in the surrounding discussion of Section 4.3, for example the limited visibility of syzkaller and kAFL under an untrusted host and the reduced coverage that a minimally adapted kAFL achieved on the TDX-specific code paths.

Table 3. Fuzzing Frameworks Benchmark.

Table 3 shows at a glance that only the proposed framework satisfies both high-fidelity isolation and deep coverage while sustaining competitive throughput, albeit at the cost of moderate integration effort.

5. Discussion

Our findings align with the standard fuzzing principle that coverage-guided approaches discover vulnerabilities given sufficient time. However, the concurrency and limited I/O channel constraints of the TDX add complications to these classical results, as illustrated by the discovery of the double-#VE bug and our paravirtual driver overflows. The concurrency extension in Theorem 2 predicted that such nested event sequences would eventually arise, which we confirmed at around the one-millionth input iteration. Similarly, Theorems 1 and 3 are corroborated by contrasting the out-of-bounds writes (which occurred under unchecked host inputs) with post-patch fuzzing sessions, in which no new vulnerabilities surfaced once thorough input mediation was applied.

In particular, our results reinforce the position of [7] and others urging continuous fuzzing of the TDX guest boundary. They also highlight an area where some earlier or less comprehensive TDX analyses may not fully appreciate the danger posed by paravirtualized drivers and multi-event concurrency. By combining snapshot-based fuzzing, coverage instrumentation, and static analysis, we systematically uncovered and mitigated these latent risks. TDX guest developers and cloud providers deploying confidential VMs should therefore adopt ongoing fuzzing campaigns and rigorous input checks to achieve the stronger security assurances the TDX aims to provide.

The vulnerabilities revealed through our experiments underscore a familiar pattern in secure virtualization: relying on hardware-based isolation alone is insufficient when system software incorporates unvalidated, host-provided data. This observation strongly supports the conclusions of [1,2], who note that the Intel TDX’s memory encryption features cannot, by themselves, guarantee that malicious host inputs will be harmless. In line with these studies, we show how the TDX’s boundary stops raw host reads and writes into encrypted pages but still leaves open channels for hypercalls, virtual device descriptors, and other paravirtual interfaces—any of which become adversarial entry points if not carefully validated.

Our data are also consistent with recent large-scale empirical analyses of confidential VMs (e.g., [4]), which argue that the majority of vulnerabilities revolve around improper input checks. However, unlike generic fuzzing tools that might only drive system calls at a high level, we systematically fuzzed the TDX hypercalls, the paravirtual interrupts, and the TDX-specific device buffers. This required implementing specialized snapshot and hooking mechanisms that let us bypass the usual assumptions about host-accessible kernel instrumentation, thereby uncovering concurrency errors (like nested #VE scenarios) that syzkaller or a naive kAFL port might miss. We found that unverified data from virtual devices (e.g., virtio-net) or configuration space (virtual PCI) are prime examples of these issues, a result that resonates with [6,7], who stressed that TDX guests must not assume a cooperative host. Contrary to earlier works that might have overemphasized the TDX hardware resiliency (for example, incomplete discussions of the guest’s paravirtual drivers in some older vendor whitepapers), our results show that, once the paravirtual interface is compromised, the TDX’s encryption boundary is effectively bypassed.
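The kind of input mediation that blocks such issues can be shown on a simplified device descriptor. In the sketch below, the descriptor layout, `SHARED_BUF_SIZE`, and both function names are hypothetical; the point is only the pattern of taking a private copy of host-written bytes once, then checking every host-controlled field against guest-owned bounds before use, so that the host cannot change a value between the check and its use.

```python
import struct

SHARED_BUF_SIZE = 4096  # hypothetical size of the guest's shared bounce buffer
DESC_FORMAT = "<QIH"    # hypothetical descriptor: offset (u64), length (u32), flags (u16)
DESC_SIZE = struct.calcsize(DESC_FORMAT)


class InvalidDescriptor(ValueError):
    pass


def read_descriptor_unchecked(shared: bytes):
    """Trusts the host: returns whatever offset and length the host wrote."""
    offset, length, _flags = struct.unpack_from(DESC_FORMAT, shared, 0)
    return offset, length


def read_descriptor_checked(shared: bytes):
    """Copy the host-written bytes once, then validate before any use."""
    raw = bytes(shared[:DESC_SIZE])  # private copy: later host writes cannot change it
    if len(raw) != DESC_SIZE:
        raise InvalidDescriptor("truncated descriptor")
    offset, length, _flags = struct.unpack(DESC_FORMAT, raw)
    if length == 0 or length > SHARED_BUF_SIZE:
        raise InvalidDescriptor(f"bad length {length}")
    # Subtraction form: in C, computing offset + length could wrap around.
    if offset > SHARED_BUF_SIZE - length:
        raise InvalidDescriptor(f"offset {offset} + length {length} exceeds buffer")
    return offset, length
```

Python integers do not overflow, so the sketch only mirrors the shape of the check; in a C driver the subtraction form is what keeps a huge host-supplied offset from wrapping past the bound.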

Our findings highlight several TDX-specific insights that go beyond typical kernel fuzzing. First, concurrency handling with nested #VEs requires explicit snapshot hooks to ensure these states are explored. Second, paravirtual device fuzzing under the TDX necessitates in-guest instrumentation, since the host cannot freely observe guest memory. Focusing the discussion on these unique constraints removes the need to reiterate conventional coverage arguments, enabling us to pinpoint design choices that truly differ from standard kernel fuzzing.

A notable takeaway is the prevalence of device-related issues. The authors of [3,11] underline how malicious interrupt patterns or finely controlled instruction sequences can undermine the TDX, yet our study reveals a simpler route: a single unchecked parameter in a paravirtual driver can yield memory corruption or denial-of-service. In that sense, our findings provide an alternate exploit vector, based on naive driver assumptions rather than elaborate single-stepping or timing manipulations, thus aligning with [9], who caution that user-level virtualization or paravirtualization amplifies the risk from a hostile host.

Our discovery of a deadlock from double #VE injection highlights the importance of multi-step or concurrency-focused fuzzing, echoing concerns voiced by [20,22] that complex event interactions can expose new categories of vulnerabilities in encrypted VMs. Traditional single-input fuzzing might miss these scenarios, but snapshot-based approaches let us systematically vary event order and timing. This result is in line with the multi-event or concurrency fuzzing approaches advocated by [24], even though many existing fuzzers focus primarily on single-input coverage. By persisting an internal VM state across iterations and injecting multiple #VE events at carefully timed intervals, we effectively tested “rare paths”, a strategy supported by [14,16] for achieving deeper code coverage.
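Systematically varying event order and timing can be modeled as enumerating the point at which a second #VE is injected relative to the first handler’s progress. The deterministic sketch below is a toy model, not the kernel’s actual #VE path: the handler is reduced to a list of named steps, it takes a non-reentrant lock (an assumption made purely for illustration), and a nested #VE that arrives while that lock is held is reported as a deadlock. Each schedule starts from fresh state, mirroring a snapshot restore.

```python
from itertools import product

# Toy #VE handler: the lock is held from "lock" until "unlock" has executed.
HANDLER_STEPS = ["enter", "lock", "decode", "emulate", "unlock", "exit"]


def run_schedule(inject_at: int, nested: bool) -> str:
    """Replay one handler run, injecting a second #VE before step `inject_at`.

    nested=False models single-event fuzzing. Returns "ok" or "deadlock".
    """
    lock_held = False
    for i, step in enumerate(HANDLER_STEPS):
        if nested and i == inject_at and lock_held:
            # The nested handler tries to take the same non-reentrant lock.
            return "deadlock"
        # Otherwise the nested handler (if any) runs to completion and releases the lock.
        if step == "lock":
            lock_held = True
        elif step == "unlock":
            lock_held = False
    return "ok"


def explore() -> list:
    """Enumerate every injection point and report the ones that deadlock."""
    failures = []
    for inject_at, nested in product(range(len(HANDLER_STEPS)), [False, True]):
        if run_schedule(inject_at, nested) == "deadlock":
            failures.append(inject_at)
    return failures
```

In this model `explore()` reports injection points 2, 3, and 4, exactly the window in which the lock is held, while every schedule without a nested event completes normally, which is why single-event fuzzing never observes the deadlock.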

Combining static analysis with fuzz testing proved especially effective, corroborating claims from [5,13] that neither static nor dynamic techniques suffice in isolation. Our static checks flagged potential vulnerabilities based on host-provided parameters, while fuzzing discovered arithmetic and logic flaws that the static analyzer overlooked, such as the integer overflow in packet length calculations. This synergy is consistent with prior observations by [17], who note that purely static approaches might miss subtle runtime conditions, whereas fuzzing can stumble upon them by systematically exploring unusual values.
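A packet-length integer overflow illustrates why fuzzing complements static checks: the bounds check looks correct in isolation but is evaluated on a value that has already wrapped. The sketch below emulates 16-bit unsigned arithmetic in Python with a mask; the field names, `MAX_FRAME`, and the header-plus-payload formula are hypothetical and do not reproduce the actual driver code.

```python
U16 = 0xFFFF
MAX_FRAME = 1514  # hypothetical receive buffer size in bytes


def total_len_u16(hdr_len: int, payload_len: int) -> int:
    """Emulates `u16 total = hdr_len + payload_len;` in C, including wraparound."""
    return (hdr_len + payload_len) & U16


def check_vulnerable(hdr_len: int, payload_len: int) -> bool:
    """Bounds check on the wrapped sum: host-chosen values can wrap it below MAX_FRAME."""
    return total_len_u16(hdr_len, payload_len) <= MAX_FRAME


def check_fixed(hdr_len: int, payload_len: int) -> bool:
    """Validate each host-supplied field before combining them, so no wrap can occur."""
    if hdr_len > MAX_FRAME or payload_len > MAX_FRAME - hdr_len:
        return False
    return True
```

With `hdr_len = 14` and `payload_len = 0xFFF8`, the 16-bit sum wraps to 6, so `check_vulnerable` accepts a frame whose payload alone is 65,528 bytes, while `check_fixed` rejects it. A fuzzer that mutates the length field finds such a value quickly, whereas a static checker that sees a bounds check on the sum may consider the path safe.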

Beyond the guest kernel, a complete TDX security assessment would include scrutinizing the TDX module itself (the “reverse direction” also raised by [10]). If the TDCALL interfaces, the TDX module, or the underlying CPU microcode contain flaws, a malicious guest might escalate privileges far beyond the TDX’s design assumptions. By focusing primarily on malicious host input, our research complements these module-level evaluations: we support the notion, endorsed by [8], that TDX security is a stack-wide concern, from hardware microcode through the guest software.

A conspicuous omission in our framework is side-channel leakage detection, in line with the limitations noted by [3], who demonstrate that microarchitectural channels can undermine the TDX from another angle. Additionally, we have so far validated our framework only in an emulated TDX environment rather than on a production TDX-capable CPU. While our emulator follows the TDX specifications, minor differences or additional microcode behaviors may arise on real hardware. Testing on genuine TDX processors, once fully available, remains a vital next step to confirm that the fuzzing methodology and the discovered bugs translate directly to real-world deployments. Although Wang et al. [15] and Xu et al. [18] proposed advanced fuzzing-based approaches, these methods typically rely on measuring timing anomalies, which our framework does not do. Hence, while our findings support the common security notion that “functional” vulnerabilities must be addressed first, our study does not discount the equally pressing need for specialized side-channel or microarchitectural defenses.

The interplay of theory and practice in our results parallels the coverage arguments of [12,13,17], all of whom posit that coverage-guided fuzzing can, given sufficient resources, expose essentially all reachable bugs. Our experience confirms that, once we identified an untrusted input path, repeated snapshot-based fuzzing reliably triggered the flaw, often quickly. We do, however, agree with [4,6], who emphasize the difficulty of achieving truly exhaustive validation in production-scale OS kernels. Even so, achieving near-complete coverage of all untrusted-data paths in a TDX guest remains a critical step.

We provide a reproduction package that includes instructions and scripts for replicating our fuzzing approach. This repository (see reproduction_package/) offers a ready-to-run environment: the tdx_guest_kernel directory holds the instrumented kernel source; tdx_emulator provides our modified KVM module; fuzzing contains Python 3.11.2 scripts for configuring and launching coverage-guided campaigns; and dataset stores sample coverage logs and crash traces. Each discovered vulnerability is illustrated with JSON-based crash dumps (crashes/crash_x.json) and patch references. We also supply an in-depth guide, result_repro_guide.md, detailing the precise steps to replay the vulnerabilities under each campaign scenario.

Limitations and Extensions of the Model

Our theorems formalize a notion of functional correctness in the presence of untrusted host inputs. The framework does not encompass side-channel or microarchitectural leaks, since such channels neither trigger a direct crash nor obviously manipulate the TD’s functional state. The host can gather timing information or manipulate resource usage to observe subtle differences; Theorem 2 gives no guarantee of detecting such leaks, because coverage-based fuzzing explores control flow rather than measuring timing deltas.

Likewise, these theorems assume that the kernel’s code is finite and statically bounded, so that $P$ is a finite set of paths. Though real-world kernels can be extremely large, the conceptual finiteness remains valid, as each compile-time code path is finite. Complexity arises when drivers are dynamically loaded or the input domain is enormous, but the essential argument stands as follows: so long as every path has nonzero probability of being traversed, coverage-based fuzzing with unbounded trials will eventually trigger it.
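The asymptotic argument can be made quantitative with a standard calculation. Assume, as a simplification not stated in the theorems themselves, that fuzzing trials are independent and that every path $\pi$ in the finite path set $P$ is traversed in any single trial with probability at least $p_{\min} > 0$. Using $1 - x \le e^{-x}$ and a union bound over $P$:

```latex
\begin{aligned}
\Pr[\pi \text{ missed after } n \text{ trials}] &\le (1 - p_{\min})^{n} \le e^{-n p_{\min}} \xrightarrow[n \to \infty]{} 0, \\
\Pr[\exists\, \pi \in P \text{ missed after } n \text{ trials}] &\le |P|\, e^{-n p_{\min}}, \\
|P|\, e^{-n p_{\min}} \le \delta &\iff n \ge \frac{\ln(|P| / \delta)}{p_{\min}}.
\end{aligned}
```

The last line shows why completeness holds only asymptotically in practice: for a single hypothetical path with $p_{\min} = 10^{-6}$ and $\delta = 0.01$, the bound asks for $n \ge 10^{6} \ln 100 \approx 4.6 \times 10^{6}$ trials.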

Moreover, Theorem 2’s notion of “probability 1 coverage” is an asymptotic statement. Practitioners must combine it with heuristics that shorten the time to discover vulnerabilities. For example, structure-aware fuzzing can drastically improve the coverage of complicated input fields. In TDX contexts, hooking hypercalls with specialized mutation strategies (e.g., focusing on plausible device configurations) can make certain vulnerabilities appear earlier. The underlying conclusion—that coverage-based fuzzing is complete in theory—remains, but practical fuzzing often requires domain-tailored enhancements.
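Structure-aware mutation for plausible device configurations can be sketched as mutating one typed field at a time instead of flipping raw bytes, so that most generated inputs still pass early sanity checks and reach deeper code. The configuration layout below (`num_queues`, `queue_size`, `mtu`), its “plausible” value lists, and the 0.7 split are hypothetical and are not taken from any specific virtio or PCI specification.

```python
import random
import struct

# Hypothetical device-config layout: three little-endian u16 fields.
CONFIG_FORMAT = "<HHH"
FIELDS = ["num_queues", "queue_size", "mtu"]

# Per-field values a plausible (but adversarial) host might report.
PLAUSIBLE = {
    "num_queues": [1, 2, 4, 8, 64],
    "queue_size": [64, 128, 256, 1024, 32768],
    "mtu": [68, 576, 1500, 9000],
}
EDGE_CASES = [0, 1, 0x7FFF, 0x8000, 0xFFFF]


def mutate_config(raw: bytes, rng: random.Random) -> bytes:
    """Change at most one field, keep the others, and re-pack in the same layout."""
    values = dict(zip(FIELDS, struct.unpack(CONFIG_FORMAT, raw)))
    name = rng.choice(FIELDS)
    if rng.random() < 0.7:
        values[name] = rng.choice(PLAUSIBLE[name])  # mostly stay near valid configs
    else:
        values[name] = rng.choice(EDGE_CASES)  # sometimes probe boundaries
    return struct.pack(CONFIG_FORMAT, *(values[f] for f in FIELDS))
```

Because the output always has the same layout and only one field moves per mutation, a crash can be traced to a specific field, while the occasional edge case still exercises boundary handling that purely plausible values would never reach.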