Abstract

The complex, temporally variant singular value decomposition (SVD) problem is proposed and investigated in this paper. Firstly, the original problem is transformed into an equation system. Then, by using the real zeroing neurodynamics (ZN) method, matrix vectorization, Kronecker product, vectorized transpose matrix, and dimensionality reduction technique, a dynamical model, termed the continuous-time SVD (CTSVD) model, is derived and investigated. Furthermore, a new 11-point Zhang et al. discretization (ZeaD) formula with fifth-order precision is proposed and studied. In addition, with the use of the 11-point and other ZeaD formulas, five discrete-time SVD (DTSVD) algorithms are further acquired. Meanwhile, theoretical analyses and numerical experimental results substantiate the correctness and convergence of the proposed CTSVD model and DTSVD algorithms.

Keywords:

singular value decomposition (SVD); temporally variant matrix; zeroing neurodynamics (ZN); Zhang et al. discretization (ZeaD) formula

MSC:

65L05; 68T05

1. Introduction

As a kind of fundamental matrix decomposition in linear algebra, singular value decomposition (SVD) plays an important role in modern scientific research and engineering applications [1,2,3,4,5,6,7,8]. For instance, in [1], SVD was used for the security protection of medical images. In ref. [2], a recommender system algorithm using SVD and Gower’s Ranking was proposed. In ref. [3], a method of SVD-based parameter identification for discrete-time stochastic systems with unknown exogenous inputs was presented. A k-SVD based compressive sensing method for visual chaotic image encryption was discussed in [4]. A robust speech steganography using the differential SVD method was provided in [5]. In ref. [6], an adaptive signal denoising method based on SVD for the fault diagnosis of rolling bearings was analyzed and discussed. An SVD method was proposed and applied for a temporally variant symmetric matrix in the real number domain [7]. In ref. [8], a decomposition model for SVD in the real number domain was presented and investigated.

In recent years, a dynamical method termed the zeroing neurodynamics (ZN) method has been proposed and widely used for solving temporally variant problems [9,10,11,12,13,14,15,16,17,18,19,20,21,22], such as nonlinear system control [9], predictive computations [10], robot control [11,12,13,14], matrix inversion [15,16], nonlinear optimization [17,18,19], matrix inequality [20], and QR decomposition [21,22]. Generally, when the ZN method is used to solve the temporally variant problem, the error function is defined first and then the value of the error function is forced to converge to zero by the design formula, so a dynamical model, also called a continuous-time solution model, is obtained. Moreover, to facilitate the implementation of modern electronic hardware, the dynamical model needs to be discretized into a discrete algorithm. For that purpose, a new class of finite difference formulas, termed the Zhang et al. discretization (ZeaD) formula [23,24,25,26], has been proposed and used. For example, in [23], a four-point ZeaD formula was derived and applied to matrix inversion. In [24,25], the ZeaD formula was presented and used for manipulator motion generation and nonlinear optimization, respectively. A model for robot control was proposed in [26] by using the eight-point ZeaD formula.

Unlike [7,8], this paper is dedicated to solving the problem of the SVD of temporally variant matrices in the complex number domain. In other words, the SVD method proposed in this paper primarily targets complex-valued matrices. To the best of our knowledge, the problem of complex, temporally variant SVD, which is formulated and studied in this paper, has not yet been investigated by other researchers. In order to address this difficult problem, an equation system is presented first. Then, by using the real ZN method, i.e., applying the ZN method in the real number domain, six error functions are defined. Additionally, the linear design formula is applied, as well as the matrix vectorization, Kronecker product, vectorized transpose matrix, and dimensionality reduction technique, and a dynamical model, i.e., a continuous-time SVD (CTSVD) model, is derived for solving complex temporally variant SVD. Meanwhile, a new 11-point ZeaD formula is proposed. Moreover, five discrete-time SVD (DTSVD) algorithms are further obtained by using the 11-point and other ZeaD formulas.

For better readability, the remaining contents of this paper are organized into four sections. The problem formulation and related preparation are provided in Section 2. In Section 3, the CTSVD model and the new 11-point ZeaD formula as well as five DTSVD algorithms are derived and investigated. Meanwhile, the corresponding theoretical analyses are given in this section. In Section 4, the numerical experiments are described and the results are displayed. The concluding remarks are given in Section 5.

Additionally, the main contributions of this paper are listed below.

- The complex, temporally variant SVD problem is formulated and studied in this paper for the first time.

- A new 11-point ZeaD formula with fifth-order precision is proposed and investigated.

- A new CTSVD model and five DTSVD algorithms are derived, supported by theoretical analyses and verified by numerical experiments.

2. Problem Formulation and Preparation

Generally, the temporally variant SVD problem in the complex number domain is described as

$$A(t) = U(t)\,\Sigma(t)\,V^{*}(t), \tag{1}$$

in which $A(t)$ is a given smooth temporally variant matrix, $U(t)$ and $V(t)$ are unknown temporally variant unitary matrices, $\Sigma(t)$ is an unknown non-negative diagonal matrix, and superscript ∗ is the conjugate transpose operation of a matrix. According to the definitions of unitary matrix and diagonal matrix, the following equations are obtained:

where and are identity matrices; represents the th element of . Since a complex number is expressed as the sum of its real and imaginary parts, we obtain

with superscript T representing the transpose operator of a matrix and i denoting the pure imaginary unit. Consequently, the following equations are derived:

that is,

Since the real and imaginary parts of both sides of the above equations are equal, we further have

Evidently, the original problem (1) is a description in complex form and (2) is a description in real form. Therefore, we obtain the solution of (1) by solving (2). That is to say, if we acquire the solution of (2), when , the SVD of is achieved.
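To make the passage from the complex form (1) to the real form (2) concrete, the following minimal sketch checks one of the constraints, unitarity, in both forms. It assumes that the real form is obtained by writing a unitary matrix as U = U_re + i U_im and separating real and imaginary parts, so that the complex condition splits into U_re^T U_re + U_im^T U_im = I and U_re^T U_im − U_im^T U_re = 0. The names `U_re`, `U_im`, the test matrix, and the helper functions are illustrative choices, not the paper's notation.

```python
import math

def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def combine(A, B, sign):
    """Return A + sign * B entrywise."""
    return [[a + sign * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def max_abs_diff(A, B):
    return max(abs(a - b) for ra, rb in zip(A, B) for a, b in zip(ra, rb))

# Assumed 2x2 unitary test matrix: U = (1/sqrt(2)) [[1, i], [i, 1]].
s = 1.0 / math.sqrt(2.0)
U = [[complex(s, 0), complex(0, s)], [complex(0, s), complex(s, 0)]]
U_re = [[z.real for z in row] for row in U]
U_im = [[z.imag for z in row] for row in U]
I2 = [[1.0, 0.0], [0.0, 1.0]]
Z2 = [[0.0, 0.0], [0.0, 0.0]]

# Complex form: U^* U = I, with ^* the conjugate transpose.
U_star = [[z.conjugate() for z in row] for row in transpose(U)]
complex_err = max_abs_diff(matmul(U_star, U), I2)

# Real form: real and imaginary parts of U^* U, using real arithmetic only.
real_part = combine(matmul(transpose(U_re), U_re),
                    matmul(transpose(U_im), U_im), +1)
imag_part = combine(matmul(transpose(U_re), U_im),
                    matmul(transpose(U_im), U_re), -1)
real_err = max(max_abs_diff(real_part, I2), max_abs_diff(imag_part, Z2))

print(complex_err, real_err)
```

Both residuals vanish up to rounding, which illustrates why solving the real-valued system is sufficient for recovering the complex-valued factors.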

3. Dynamical Model and Algorithms

In this section, the CTSVD model is first obtained by the real ZN method and then a new 11-point ZeaD formula is proposed. By using the 11-point and other ZeaD formulas, five DTSVD algorithms are derived and investigated. Meanwhile, the corresponding theoretical analyses are provided to ensure the correctness of the proposed model and algorithms.

3.1. CTSVD Model and Theoretical Analyses

To facilitate description and comprehension, the following definitions and lemmas [8,21,22,27,28], including matrix vectorization, Kronecker product, vectorized transpose matrix, and dimensionality reduction technique, are presented.

Definition 1

(Vectorization of matrix [27,28]). The mathematical symbol vec(·) denotes the large column vector formed by concatenating all the columns of a matrix. If A is an $m \times n$ matrix, then the vectorization of matrix A is vec(A) $= [a_{11}, \ldots, a_{m1}, a_{12}, \ldots, a_{m2}, \ldots, a_{1n}, \ldots, a_{mn}]^{T}$.

Definition 2

(Kronecker product [27,28]). A⊗B is the Kronecker product of A and B. If A is an $m \times n$ matrix and B is a $p \times q$ matrix, then A⊗B is an $mp \times nq$ matrix and equals the block matrix $[a_{ij}B]$.

Definition 3

(Vectorized transpose matrix [8,21,22,27,28]). For a transpose situation, is the orthogonal permutation matrix whose th element is 1 if or 0 otherwise.
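As an illustration of Definition 3, the sketch below builds a permutation matrix that maps vec(A) to vec(A^T) for a small matrix. The index rule used here is the standard one for column-major vectorization and is an assumption; the function name `vectorized_transpose_matrix` and the symbol `K` are hypothetical and may not match the paper's notation.

```python
def vec(M):
    """Stack the columns of M into one flat list (column-major order)."""
    return [M[i][j] for j in range(len(M[0])) for i in range(len(M))]

def transpose(M):
    return [list(col) for col in zip(*M)]

def matvec(M, v):
    return [sum(a * x for a, x in zip(row, v)) for row in M]

def vectorized_transpose_matrix(m, n):
    """mn x mn permutation matrix K with K vec(A) = vec(A^T) for m x n A."""
    K = [[0] * (m * n) for _ in range(m * n)]
    for i in range(m):
        for j in range(n):
            # A[i][j] sits at index j*m + i in vec(A) and i*n + j in vec(A^T).
            K[i * n + j][j * m + i] = 1
    return K

A = [[1, 2, 3], [4, 5, 6]]            # 2 x 3 example
K = vectorized_transpose_matrix(2, 3)
lhs = matvec(K, vec(A))               # K applied to vec(A)
rhs = vec(transpose(A))               # vec of the transpose
print(lhs, rhs)
```

Because every row and every column of `K` contains exactly one entry equal to 1, `K` is a permutation matrix and therefore orthogonal.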

Definition 4

([8,27]). The mathematical symbol diag(·) denotes the vector consisting of the diagonal elements of a matrix.

Lemma 1

([21,22]). With A, F, G, and H representing matrices of compatible dimensions (so that the product FGH is defined), the vectorized form of A = FGH is vec(A) = ($H^{T}$⊗F) vec(G).
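The identity in Lemma 1 can be checked numerically on small integer matrices. The following sketch implements vec(·) and the Kronecker product from Definitions 1 and 2 in plain Python; the matrices `F`, `G`, and `H` are arbitrary test data chosen for illustration.

```python
def vec(M):
    """Stack the columns of M into one flat list (column-major order)."""
    return [M[i][j] for j in range(len(M[0])) for i in range(len(M))]

def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def matvec(M, v):
    return [sum(a * x for a, x in zip(row, v)) for row in M]

def kron(A, B):
    """Kronecker product: block matrix whose (i, j) block is A[i][j] * B."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

F = [[1, 2, 3], [4, 5, 6]]        # 2 x 3
G = [[1, 0], [2, -1], [0, 3]]     # 3 x 2
H = [[2, 1], [0, 1]]              # 2 x 2

lhs = vec(matmul(matmul(F, G), H))           # vec(F G H)
rhs = matvec(kron(transpose(H), F), vec(G))  # (H^T kron F) vec(G)
print(lhs, rhs)
```

The practical value of the lemma is that an unknown matrix sandwiched between known factors becomes an ordinary unknown vector multiplied by a known coefficient matrix.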

Lemma 2

(Dimensionality reduction [8]). At any time instant , an constant matrix is constructed for an temporally variant diagonal matrix , such that vec() = diag() holds true.
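A minimal sketch of the idea behind Lemma 2 follows. It assumes the constant matrix is a 0/1 selection matrix that places each diagonal entry at its position in the column-major vectorization; the name `L`, the helper `diag_selection_matrix`, and the rectangular test matrix are hypothetical illustrations rather than the paper's construction.

```python
def vec(M):
    """Stack the columns of M into one flat list (column-major order)."""
    return [M[i][j] for j in range(len(M[0])) for i in range(len(M))]

def matvec(M, v):
    return [sum(a * x for a, x in zip(row, v)) for row in M]

def diag_selection_matrix(m, n):
    """Constant mn x r matrix L (r = min(m, n)) with L diag(S) = vec(S)
    for every m x n diagonal matrix S."""
    r = min(m, n)
    L = [[0] * r for _ in range(m * n)]
    for j in range(r):
        L[j * m + j][j] = 1   # entry (j, j) sits at index j*m + j of vec(S)
    return L

S = [[3.0, 0.0, 0.0], [0.0, 1.5, 0.0]]   # 2 x 3 diagonal example
d = [S[j][j] for j in range(min(len(S), len(S[0])))]
L = diag_selection_matrix(2, 3)

lhs = vec(S)
rhs = matvec(L, d)
print(lhs, rhs)
```

In this example the six entries of vec(S) are represented by only two unknowns, which is the reduction in problem size that the lemma provides.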

In order to solve (2), based on the real ZN method, six error functions are defined, shown below:

Next, the linear design formula is applied to zero out through . Specifically, by substituting the previous equations into the linear design formula [10,11,12,13] , with denoting the time derivative of , , we obtain

Let and ; then, we have

Then, by using matrix vectorization, Kronecker product, vectorized transpose matrix, and the results of Lemma 1, we let , , , , , , , , , , , and . Meanwhile, by applying the results of Lemma 2, we have . Therefore, the following matrix equation is derived.

in which

and , , , , , , , , , , , , , , , , and . Further, let

and

Thus, the CTSVD model is obtained as

where superscript + denotes the pseudoinverse of a matrix; , , and if , , else .

Theorem 1.

Proof.

Firstly, in order to obtain the solution of (2), by using the real ZN method, six error functions are defined, shown in (3). Next, the linear design formula is applied to zero out the values of these error functions, i.e., through . Note that the theoretical solution of the linear design formula is , with denoting the initial value of . Therefore, when and time , we have . Specifically, by employing the Laplace transformation for the th element of , with initial condition , we have

i.e.,

By using the final value theorem of Laplace transformation, we further have

Evidently, the solution of (4) satisfies (2) when and time . We let with , and (5) is obtained. Then, by using matrix vectorization, Kronecker product, vectorized transpose matrix, dimensionality reduction technique, and the results of Lemma 1, (6) is further obtained. Meanwhile, in (6), by using the results of Lemma 2, we zero out the non-diagonal elements of , i.e., . Thus, the solution of (6) satisfies (2). Additionally, (7) is another form of (6). The proof is therefore completed. □
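The Laplace-transform argument used in the proof can be written out for a single scalar entry of an error function. The derivation below is a sketch under assumed notation: $e(t)$ denotes one entry of an error function, $e_0$ its initial value, and $\eta > 0$ the design parameter; these symbols are chosen here for illustration and may differ from those in the paper.

```latex
\begin{align*}
\dot{e}(t) &= -\eta\, e(t), \qquad e(0) = e_0, \\
s\,E(s) - e_0 &= -\eta\, E(s)
  \;\Longrightarrow\; E(s) = \frac{e_0}{s + \eta}, \\
\lim_{t \to \infty} e(t) &= \lim_{s \to 0} s\,E(s)
  = \lim_{s \to 0} \frac{s\, e_0}{s + \eta} = 0 .
\end{align*}
```

The final value theorem applies because the only pole of $E(s)$ lies at $s = -\eta$ in the open left half-plane. Inverting the transform gives $e(t) = e_0 \exp(-\eta t)$, so each entry decays exponentially at rate $\eta$.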

Note that the larger the value of parameter is, the faster the convergence speed of the CTSVD model becomes [29]. However, the value of cannot be infinitely large, as it depends on the hardware limitations of the digital system. In practical applications, can be adjusted according to specific requirements. In the process of SVD, every element on the diagonal of must be non-negative. Therefore, if has negative elements on the diagonal, we change the sign of the corresponding columns of or rows of , such that only has non-negative elements [7,8].
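The sign correction described above can be sketched as follows. The example flips the sign of a column of the left factor wherever the corresponding diagonal entry is negative and confirms that the product is unchanged. The matrices and the helper `make_nonnegative` are hypothetical test data and names, and only the column-flipping variant is shown.

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def conj_transpose(M):
    return [[z.conjugate() for z in col] for col in zip(*M)]

def make_nonnegative(U, S):
    """Flip the sign of column j of U wherever S[j][j] < 0; U S is unchanged."""
    U = [row[:] for row in U]
    S = [row[:] for row in S]
    for j in range(min(len(S), len(S[0]))):
        if S[j][j] < 0:
            S[j][j] = -S[j][j]
            for row in U:
                row[j] = -row[j]
    return U, S

s = 2 ** -0.5
U = [[complex(s, 0), complex(0, s)], [complex(0, s), complex(s, 0)]]
S = [[2.0, 0.0], [0.0, -1.0]]      # second diagonal entry is negative
V = [[1 + 0j, 0j], [0j, 1 + 0j]]

A_before = matmul(matmul(U, S), conj_transpose(V))
U_fixed, S_fixed = make_nonnegative(U, S)
A_after = matmul(matmul(U_fixed, S_fixed), conj_transpose(V))

diff = max(abs(a - b) for ra, rb in zip(A_before, A_after)
           for a, b in zip(ra, rb))
print(S_fixed, diff)
```

Negating a column of a unitary matrix keeps it unitary, so the corrected factors still form a valid decomposition.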

3.2. DTSVD Algorithms and Theoretical Analyses

In this section, a new 11-point ZeaD formula is proposed and studied. With the use of the 11-point and other ZeaD formulas, five DTSVD algorithms are further presented and investigated.

3.2.1. 11-Point and Other ZeaD Formulas

The 11-point ZeaD formula is given in Theorem 2.

Theorem 2.

With denoting the computational assignment operation, denoting , and denoting the sufficiently-small sampling gap, respectively, the 11-point ZeaD formula is expressed as

and its truncation error is .

Proof.

By applying Taylor expansion [30,31], the following ten equations are derived:

and

therein, , , , , , , and . ; , , , , , and are the first-, second-, third-, fourth-, fifth-, and sixth-order derivatives, separately; symbol ! is the factorial operator; correspondingly lies in the intervals , , , , , , , , , and . Let (9), (10), (11), (12), (13), (14), (15), (16), (17), and (18) separately multiply 1, −27/50, −3/5, −3/10, 3/25, 6/25, 7/50, −93/490, −7/160, and 7/150. By adding them together, we have

Specifically, the error term of (19) is

Then, (19) can be further rewritten as

Thus, (8) is obtained as

and its truncation error is . The proof is therefore completed. □
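The Taylor-expansion argument can be tried out on a much smaller formula. The sketch below uses a four-point one-step-ahead difference formula, (2x_{k+1} − 3x_k + 2x_{k−1} − x_{k−2})/(2τ), whose second-order truncation error follows from the same kind of expansion. This formula is an assumption chosen for illustration; it is not the 11-point formula (8), and it is not claimed to coincide with any numbered formula in this paper.

```python
import math

def four_point_derivative(x, t, tau):
    """One-step-ahead estimate of x'(t) from x(t+tau), x(t), x(t-tau),
    and x(t-2*tau)."""
    return (2 * x(t + tau) - 3 * x(t) + 2 * x(t - tau)
            - x(t - 2 * tau)) / (2 * tau)

t0 = 1.0
errors = {}
for tau in (0.1, 0.01):
    approx = four_point_derivative(math.sin, t0, tau)
    errors[tau] = abs(approx - math.cos(t0))   # exact derivative of sin is cos

# For a second-order formula, shrinking tau by 10 should shrink the
# error by a factor of roughly 100.
ratio = errors[0.1] / errors[0.01]
print(errors, ratio)
```

The same experiment applied to a formula with a higher-order truncation error would show a correspondingly larger error ratio, until rounding errors dominate.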

It is worth noting that compared with other ZeaD formulas, the 11-point ZeaD formula proposed in this paper is currently the ZeaD formula with the highest computational precision known. Therefore, for this study, we chose this formula as the main research object. For the purpose of comparison, four ZeaD formulas [23,24,26] with different points and precisions, i.e., two-, four-, six-, and eight-point ZeaD formulas, were adopted and are listed below:

and

Note that (21) and (23) are acquired by setting parameters and in references [23,26], respectively; the Euler forward formula (20) (also termed the two-point ZeaD formula) is seen as the first and also the simplest of the ZeaD formulas.

3.2.2. DTSVD Algorithms

By using (20), (21), (22), (23), and (8) to discretize the previous dynamical model, i.e., the CTSVD model (7), the DTSVD-1, DTSVD-2, DTSVD-3, DTSVD-4, and DTSVD-5 algorithms are respectively acquired:

and

therein, step-size , , , , and .

Moreover, the correctness and precision of the previous algorithms are guaranteed by the following theorem.

Theorem 3.

Proof.

With denoting the Frobenius-norm of a matrix, let us consider the proof of (28) at first. The corresponding characteristic polynomial [30,32] of (28) is expressed as below:

The ten roots of the above polynomial (retaining six digits after the decimal point) are

and their corresponding moduli are

where i is the pure imaginary unit and is the modulus of a number. Since the moduli of all these roots are less than or equal to one, with the root of modulus one being simple, according to Result 1 in Appendix A, the DTSVD algorithm (28) is 0-stable. Evidently, (28) has a truncation error of . Thus, from Results 2 through 4 in Appendix A, we know that (28) is consistent and convergent, and it converges with the order of its truncation error (i.e., ). The proofs of (24), (25), (26), and (27) are omitted since they are similar to that of (28). The proof is therefore completed. □
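The root condition of Result 1 can be demonstrated on a small case. The sketch below checks it for the cubic 2ς³ − 3ς² + 2ς − 1, the characteristic polynomial of a four-point formula assumed here purely for illustration; it is not the tenth-degree polynomial of (28), whose coefficients are not reproduced in this sketch.

```python
import cmath

def p(z):
    """Assumed example polynomial 2 z^3 - 3 z^2 + 2 z - 1."""
    return 2 * z**3 - 3 * z**2 + 2 * z - 1

# The cubic factors as (z - 1)(2 z^2 - z + 1), so its roots are known
# in closed form: 1 and (1 +/- i sqrt(7)) / 4.
roots = [1 + 0j, (1 + cmath.sqrt(-7)) / 4, (1 - cmath.sqrt(-7)) / 4]
residuals = [abs(p(r)) for r in roots]
moduli = [abs(r) for r in roots]

# Root condition: all moduli <= 1, and any root of modulus one is simple.
on_circle = [r for r in roots if abs(abs(r) - 1) < 1e-12]
zero_stable = max(moduli) <= 1 + 1e-12 and len(on_circle) == 1

print(moduli, zero_stable)
```

Here the complex-conjugate pair has modulus 1/√2 and the only root on the unit circle is the simple root 1, so the condition is satisfied.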

Furthermore, in order to facilitate readers’ understanding and programming implementation, the pseudocode of the DTSVD-5 algorithm (28) is presented as follows.

| Pseudocode of DTSVD-5 (28) algorithm. |

| Input: and |

| 1: Set task duration , sampling gap , design parameter , step-size , |

| generate random initial value and . |

| 2: For |

| 3: Compute , , , and . |

| 4: If |

| 5: Compute via Euler backward formula. |

| 6: else |

| 7: Compute via (28). |

| 8: End |

| Output: , , , , and . |
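The loop structure of the pseudocode can be illustrated on a scalar toy problem. The sketch below is not the DTSVD-5 algorithm: it applies the ZN design to the scalar equation a(t)x(t) = 1 and discretizes it with the Euler forward formula, which corresponds in spirit to the simplest (two-point) case. The test function a(t) = 2 + sin(t), the symbols `tau` (sampling gap), `eta` (design parameter), and `h = tau * eta` (step-size), and all numerical values are assumptions made for illustration.

```python
import math

def run_euler_zn(tau, h=0.3, duration=10.0):
    """Euler-discretized ZN iteration for a(t) * x(t) = 1.

    Returns the largest residual |a_k x_k - 1| over the second half
    of the run, i.e., after the initial transient has died out."""
    a = lambda t: 2.0 + math.sin(t)
    a_dot = lambda t: math.cos(t)
    steps = int(round(duration / tau))
    x = 0.1                      # deliberately poor initial value
    worst = 0.0
    for k in range(steps):
        t = k * tau
        residual = a(t) * x - 1.0
        if k >= steps // 2:
            worst = max(worst, abs(residual))
        # ZN design: d/dt (a x - 1) = -eta (a x - 1), solved for x_dot and
        # advanced with x_{k+1} = x_k + tau * x_dot_k, where h = tau * eta.
        x = x - (tau * a_dot(t) * x + h * residual) / a(t)
    return worst

err_coarse = run_euler_zn(tau=0.01)
err_fine = run_euler_zn(tau=0.001)
ratio = err_coarse / err_fine
print(err_coarse, err_fine, ratio)
```

Reducing the sampling gap by a factor of 10 reduces the steady-state residual by a factor of roughly 100 in this toy setting, which is the second-order behavior expected of an Euler-type discretization.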

4. Numerical Experiments

In this section, numerical experiments with three examples are described to substantiate the precision and performance of the proposed dynamical model and algorithms. Additionally, the task duration for all numerical experiments was set as s uniformly and the initial values were randomly generated from the interval . Moreover, we observed the residual errors as well as , , to measure the computational accuracy of the proposed model and algorithms, respectively. Meanwhile, in order to more intuitively reflect the overall accuracy of complex, temporally variant SVD, the residual errors of the original problem (1), i.e., as well as , were also computed and displayed.

Note that the DTSVD-1 (24), -2 (25), -3 (26), -4 (27), and -5 (28) algorithms required one, three, five, seven, and ten initial values, respectively, before they could be used. Since only the first initial value was generated randomly, the Euler method was adopted to compute the remaining initial values, except for the DTSVD-1 (24) algorithm, which needed only one initial value.

4.1. Example Description

Three examples to be discussed are given below.

Example 1.

Example 2.

Example 3.

Evidently, by using formula , the original form of is obtained easily. Additionally, the corresponding dimensions of in these three examples are , , and , respectively.

4.2. Experimental Results of the CTSVD Model

The ODE (ordinary differential equation) solver [31] in MATLAB R2024a was first applied to execute the CTSVD model (7). Specifically, we used the ODE function “ode45” in this research, and the solving results are displayed in Figures 1–9. Note that in Figures 1–9, the solution trajectories and residual error trajectories corresponding to different initial values are represented in different colors.

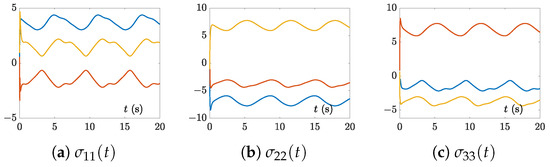

Figure 1.

Solution trajectories of synthesized by the CTSVD model (7) with three random initial values and for Example 1.

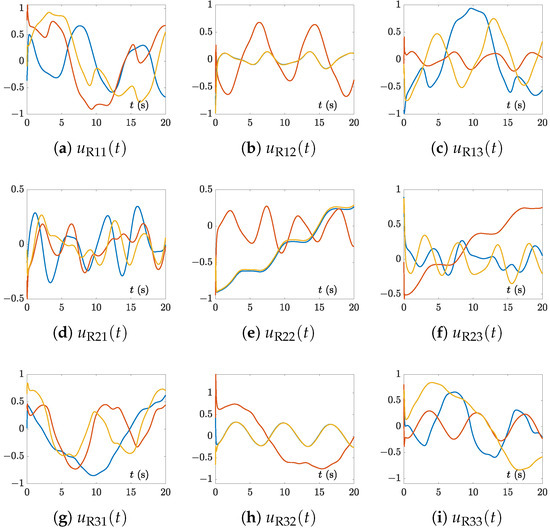

Figure 2.

Solution trajectories of synthesized by the CTSVD model (7) with three random initial values and for Example 1.

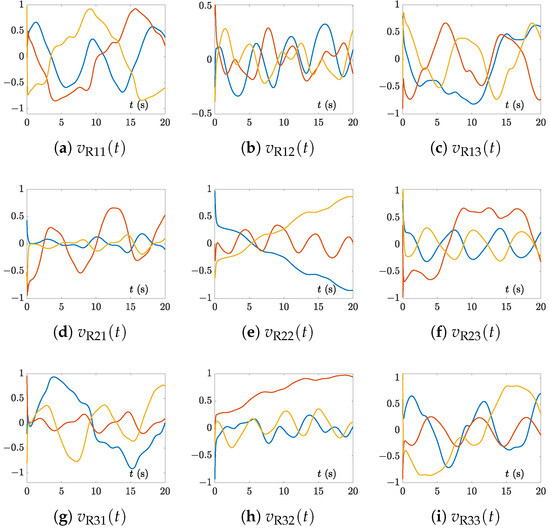

Figure 3.

Solution trajectories of synthesized by the CTSVD model (7) with three random initial values and for Example 1.

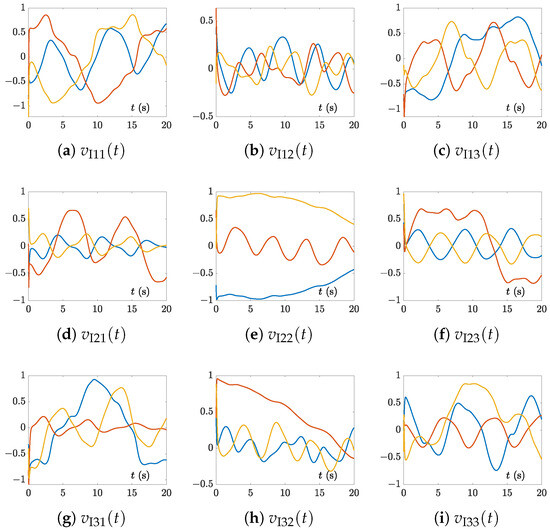

Figure 4.

Solution trajectories of synthesized by the CTSVD model (7) with three random initial values and for Example 1.

Figure 5.

Solution trajectories of synthesized by the CTSVD model (7) with three random initial values and for Example 1.

Figure 6.

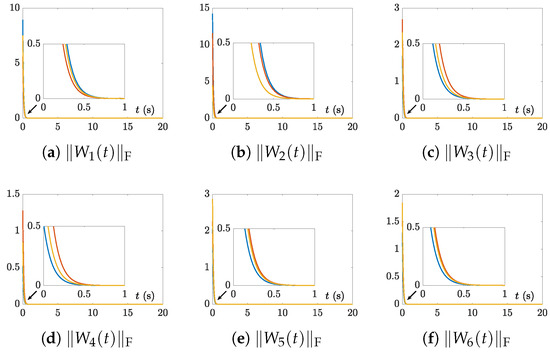

Residual errors of the CTSVD model (7) with three random initial values and for Example 1.

Figure 7.

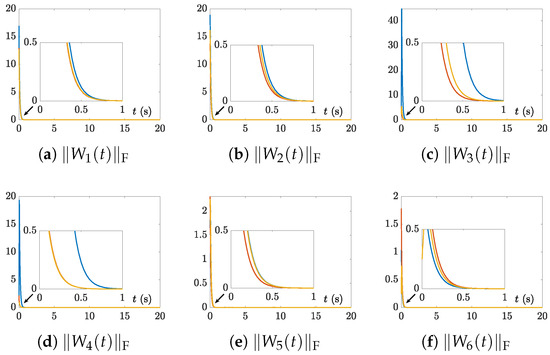

Residual errors of the CTSVD model (7) with three random initial values and for Example 2.

Figure 8.

Residual errors of the CTSVD model (7) with three random initial values and for Example 3.

The solution trajectories of synthesized by the CTSVD model (7) with three random initial values and corresponding to Example 1 are shown in Figure 1. It is worth noting that was a real-valued diagonal matrix, that is, its non-diagonal elements were zero, and the imaginary parts of its diagonal elements were also zero. Therefore, in the three subgraphs (a), (b), and (c) of Figure 1, only the real part trajectories of the diagonal elements of , i.e., , , and , are shown.

In addition, the solution trajectories of , , , and , synthesized by the CTSVD model (7) with three random initial values and corresponding to Example 1 are shown in Figure 2, Figure 3, Figure 4 and Figure 5. Evidently, from these figures, we can see that the real and imaginary values of all elements of matrices and changed over time, that is, they were temporally variant. Meanwhile, due to the page limitation and similarity, the solution trajectories synthesized by the CTSVD model (7) corresponding to Examples 2 and 3 are omitted.

From Figure 6, we see that the residual errors of the CTSVD model (7) corresponding to Example 1, with three random initial values and , converged to near-zero within one second. Specifically, it can be seen from the subgraphs (a), (b), (c), (d), (e), and (f) in Figure 6 that the values of the residual errors of the CTSVD model (7), i.e., , , , , , and , converged rapidly to near-zero, which means that the values of the error functions, i.e., , , , , , and , quickly became near-zero. This indicates that the linear design formula successfully zeros out the error functions’ values, and this simultaneously verifies the correctness of the results of Theorem 1.

Similarly, as shown in Figure 7 and Figure 8, the residual errors of the CTSVD model (7) corresponding to Examples 2 and 3, with the same parameters as Example 1, also rapidly converged to near-zero. Moreover, as seen from Figure 9, the residual errors of original problem (1) for Examples 1–3 also converged to near-zero rapidly. Therefore, the results of Theorem 1 were confirmed to be correct again.

4.3. Experimental Results of DTSVD Algorithms

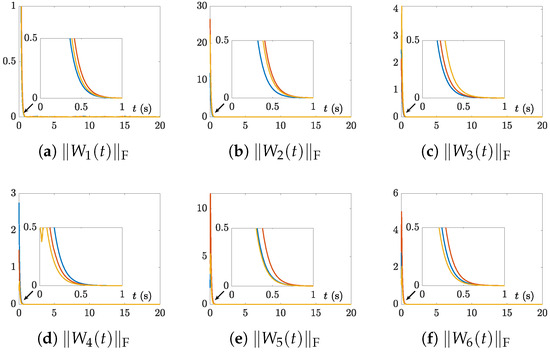

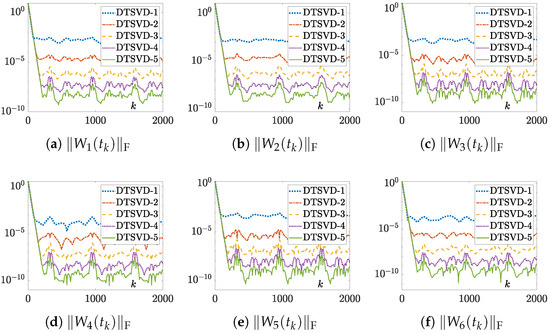

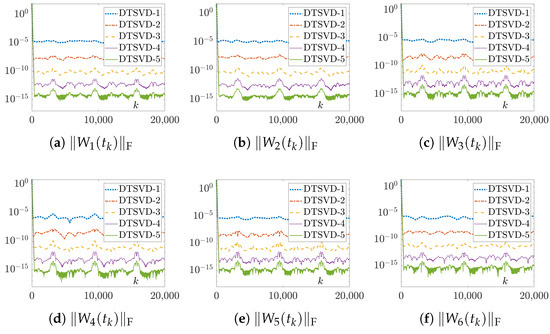

The results of the numerical experiments for the DTSVD-1 (24), -2 (25), -3 (26), -4 (27), and -5 (28) algorithms, with random initial values, , and s or s, are respectively displayed in Figure 10, Figure 11 and Figure 12, and the corresponding data are listed in Table 1, Table 2 and Table 3. Note that due to space limitations, the solution trajectory figures, as well as the residual error figures for Examples 2 and 3, synthesized by the DTSVD algorithms are not provided.

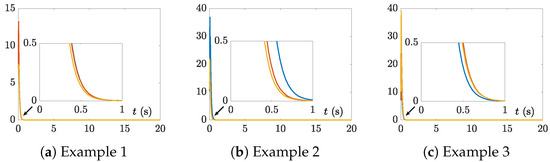

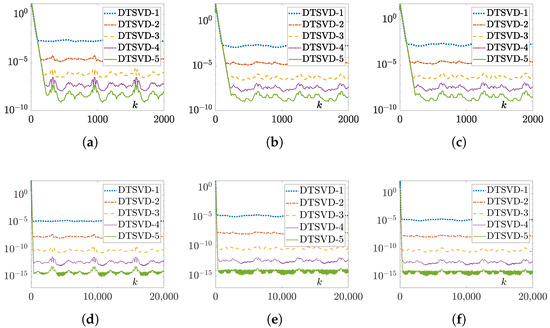

Figure 12.

Residual errors of the original problem (1), i.e., , corresponding to the DTSVD-1 (24), -2 (25), -3 (26), -4 (27), and -5 (28) algorithms with random initial values and for Examples 1–3. (a) Example 1 with s. (b) Example 2 with s. (c) Example 3 with s. (d) Example 1 with s. (e) Example 2 with s. (f) Example 3 with s.

By comparing Figure 10 and Figure 11, we can observe that the residual error trajectories synthesized by the DTSVD-1 (24), -2 (25), -3 (26), -4 (27), and -5 (28) algorithms for Example 1 changed regularly, which was consistent with the results of Theorem 3. From the subgraphs (a), (b), (c), (d), (e), and (f) in Figure 10 as well as Figure 11, we can observe that the values of the residual errors, i.e., , , , , , and , for the five DTSVD algorithms all converged rapidly to near-zero. Among these algorithms, the DTSVD-5 (28) algorithm had the fastest convergence speed and highest computational precision. In addition, as illustrated in Figure 10 and Figure 11, the values of the error functions, i.e., , , , , , and , rapidly approached zero, indicating the effectiveness of the proposed DTSVD algorithms.

Furthermore, according to the data in Table 1 and Table 2, we know that the residual errors of DTSVD-1 (24), -2 (25), -3 (26), -4 (27), and -5 (28) algorithms changed in the manner of , , , , and , respectively, which verifies the results of Theorem 3. Meanwhile, as shown in Figure 12 and Table 3, the residual errors of the original problem (1) corresponding to the DTSVD-1 (24), -2 (25), -3 (26), -4 (27), and -5 (28) algorithms were also proportional to , , , , and , respectively. Thus, Theorem 3’s results were reconfirmed.

5. Conclusions

In this research, the problem of complex, temporally variant SVD was formulated and investigated. To solve this difficult problem, an equation system was provided first. The dynamical model, i.e., the CTSVD model, was derived and proposed by applying the real ZN method, matrix vectorization, Kronecker product, vectorized transpose matrix, and dimensionality reduction technique. Meanwhile, a new 11-point ZeaD formula was proposed and studied. Furthermore, five DTSVD algorithms were obtained, with the use of the 11-point and other ZeaD formulas. It is worth mentioning that the proposed DTSVD-5 algorithm had the highest computational accuracy among the five DTSVD algorithms, i.e., precision. Finally, theoretical analyses and numerical experimental results verified the feasibility and effectiveness of the proposed dynamical model and algorithms. Our future research aims to apply the proposed SVD model and algorithms to real-world applications, such as signal processing, communications, and machine learning. Additionally, we will consider non-ideal conditions, including the existence of matrix singularities or noise interference.

Author Contributions

Conceptualization, X.K. and Y.Z.; Data curation, J.C., X.K. and Y.Z.; Formal analysis, J.C., X.K. and Y.Z.; Funding acquisition, J.C. and Y.Z.; Investigation, J.C., X.K. and Y.Z.; Methodology, J.C., X.K. and Y.Z.; Project administration, X.K. and Y.Z.; Resources, J.C., X.K. and Y.Z.; Software, J.C.; Supervision, X.K. and Y.Z.; Validation, J.C., X.K. and Y.Z.; Visualization, J.C., X.K. and Y.Z.; Writing—original draft, J.C.; Writing—review and editing, J.C., X.K. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was aided by the National Natural Science Foundation of China under grant 62376290, the Natural Science Foundation of Guangdong Province under grant 2024A1515011016, and the School Level Scientific Research Project of Youjiang Medical University for Nationalities under grant yy2020gcky037.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors wish to express their sincere thanks to Sun Yat-sen University for its support and assistance in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this paper:

| SVD | Singular value decomposition |

| ZN | Zeroing neurodynamics |

| CTSVD | Continuous-time SVD |

| ZeaD | Zhang et al. discretization |

| DTSVD | Discrete-time SVD |

| ODE | Ordinary differential equation |

Appendix A

In the Appendix, the following four results [30,32] for an N-step method are provided.

Result 1: A linear N-step method can be checked for 0-stability by determining the roots of its characteristic polynomial . If all roots denoted by of the polynomial satisfy , with being simple, then the N-step method is 0-stable (i.e., has 0-stability).

Result 2: A linear N-step method is said to be consistent (i.e., has consistency) of order w if the truncation error for the exact solution is of order , where .

Result 3: A linear N-step method is convergent, i.e., , for all , as if and only if the method is 0-stable and consistent. That is, 0-stability plus consistency means convergence, which is also known as the Dahlquist equivalence theorem.

Result 4: A 0-stable consistent method converges with the order of its truncation error.

References

1. Ye, C.; Tan, S.; Wang, J.; Shi, L.; Zuo, Q.; Xiong, B. Double security level protection based on chaotic maps and SVD for medical images. Mathematics 2025, 13, 182.

2. Saifudin, I.; Widiyaningtyas, T.; Zaeni, I.; Aminuddin, A. SVD-GoRank: Recommender system algorithm using SVD and Gower’s ranking. IEEE Access 2025, 13, 19796–19827.

3. Tsyganov, A.; Tsyganova, Y. SVD-based parameter identification of discrete-time stochastic systems with unknown exogenous inputs. Mathematics 2024, 12, 1006.

4. Xie, Z.; Sun, J.; Tang, Y.; Tang, X.; Simpson, O.; Sun, Y. A k-SVD based compressive sensing method for visual chaotic image encryption. Mathematics 2023, 11, 1658.

5. Xue, Y.; Mu, K.; Wang, Y.; Chen, Y.; Zhong, P.; Wen, J. Robust speech steganography using differential SVD. IEEE Access 2019, 7, 153724–153733.

6. Wang, B.; Ding, C. An adaptive signal denoising method based on reweighted SVD for the fault diagnosis of rolling bearings. Sensors 2025, 25, 2470.

7. Baumann, M.; Helmke, U. Singular value decomposition of time-varying matrices. Future Gen. Comput. Syst. 2003, 19, 353–361.

8. Chen, J.; Zhang, Y. Online singular value decomposition of time-varying matrix via zeroing neural dynamics. Neurocomputing 2020, 383, 314–323.

9. Huang, M.; Zhang, Y. Zhang neuro-PID control for generalized bi-variable function projective synchronization of nonautonomous nonlinear systems with various perturbations. Mathematics 2024, 12, 2715.

10. Uhlig, F. Zhang neural networks: An introduction to predictive computations for discretized time-varying matrix problems. Numer. Math. 2024, 156, 691–739.

11. Guo, P.; Zhang, Y.; Li, S. Reciprocal-kind Zhang neurodynamics method for temporal-dependent Sylvester equation and robot manipulator motion planning. IEEE Trans. Neural Netw. Learn. Syst. 2025, 1–15.

12. Jerbi, H.; Alshammari, O.; Ben, A.; Kchaou, M.; Simos, T.; Mourtas, S.; Katsikis, V. Hermitian solutions of the quaternion algebraic Riccati equations through zeroing neural networks with application to quadrotor control. Mathematics 2024, 12, 15.

13. Jin, L.; Zhang, G.; Wang, Y.; Li, S. RNN-based quadratic programming scheme for tennis-training robots with flexible capabilities. IEEE Trans. Syst. Man Cybern. 2023, 53, 838–847.

14. Yang, Y.; Li, X.; Wang, X.; Liu, M.; Yin, J.; Li, W.; Voyles, R.; Ma, X. A strictly predefined-time convergent and anti-noise fractional-order zeroing neural network for solving time-variant quadratic programming in kinematic robot control. Neural Netw. 2025, 186, 107279.

15. Hua, C.; Cao, X.; Liao, B. Real-time solutions for dynamic complex matrix inversion and chaotic control using ODE-based neural computing methods. Comput. Intell. 2025, 41, e70042.

16. Zhang, B.; Zheng, Y.; Li, S.; Chen, X.; Mao, Y.; Pham, D. Inverse-free hybrid spatial-temporal derivative neural network for time-varying matrix Moore-Penrose inverse and its circuit schematic. IEEE Trans. Circuits-II 2025, 72, 499–503.

17. Yan, D.; Li, C.; Wu, J.; Deng, J.; Zhang, Z.; Yu, J.; Liu, P. A novel error-based adaptive feedback zeroing neural network for solving time-varying quadratic programming problems. Mathematics 2024, 12, 2090.

18. Peng, Z.; Huang, Y.; Xu, H. A novel high-efficiency variable parameter double integration ZNN model for time-varying Sylvester equations. Mathematics 2025, 13, 706.

19. Uhlig, F. Adapted AZNN methods for time-varying and static matrix problems. Electron. J. Linear Algebra 2023, 39, 164–180.

20. Guo, D.; Lin, X. Li-function activated Zhang neural network for online solution of time-varying linear matrix inequality. Neural Process. Lett. 2020, 52, 713–726.

21. Katsikis, V.N.; Mourtas, S.D.; Stanimirovic, P.S.; Zhang, Y. Solving complex-valued time-varying linear matrix equations via QR decomposition with applications to robotic motion tracking and on angle-of-arrival localization. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 3415–3424.

22. Chen, J.; Kang, X.; Zhang, Y. Continuous and discrete ZND models with aid of eleven instants for complex QR decomposition of time-varying matrices. Mathematics 2023, 11, 3354.

23. Hu, C.; Kang, X.; Zhang, Y. Three-step general discrete-time Zhang neural network design and application to time-variant matrix inversion. Neurocomputing 2018, 306, 108–118.

24. Liu, K.; Liu, Y.; Zhang, Y.; Wei, L.; Sun, Z.; Jin, L. Five-step discrete-time noise-tolerant zeroing neural network model for time-varying matrix inversion with application to manipulator motion generation. Eng. Appl. Artif. Intel. 2021, 103, 104306.

25. Sun, M.; Wang, Y. General five-step discrete-time Zhang neural network for time-varying nonlinear optimization. Bull. Malays. Math. Sci. Soc. 2020, 43, 1741–1760.

26. Chen, J.; Zhang, Y. Discrete-time ZND models solving ALRMPC via eight-instant general and other formulas of ZeaD. IEEE Access 2019, 7, 125909–125918.

27. Brookes, M. The Matrix Reference Manual; Imperial College London Staff Page. 2020. Available online: http://www.ee.imperial.ac.uk/hp/staff/dmb/matrix/intro.html (accessed on 31 March 2025).

28. Horn, R.A.; Johnson, C.R. Topics in Matrix Analysis; Cambridge University Press: New York, NY, USA, 1991.

29. Zhang, Y.; Yang, R.; Li, S. Reciprocal Zhang dynamics (RZD) handling TVUDLMVE (time-varying under-determined linear matrix-vector equation). In Proceedings of the 37th Chinese Control and Decision Conference (CCDC), Xiamen, China, 16–19 May 2025; pp. 5794–5800.

30. Griffiths, D.F.; Higham, D.J. Numerical Methods for Ordinary Differential Equations: Initial Value Problems; Springer: London, UK, 2010.

31. Mathews, J.H.; Fink, K.D. Numerical Methods Using MATLAB; Prentice-Hall: Englewood Cliffs, NJ, USA, 2004.

32. Suli, E.; Mayers, D.F. An Introduction to Numerical Analysis; Cambridge University Press: Oxford, UK, 2003.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).