1. Introduction

In contemporary interactions with governmental bodies, financial institutions, healthcare facilities, and commercial enterprises, confirming an individual’s identity is a crucial first step in safeguarding access, ensuring trust, and enhancing operational security. As these interactions increasingly move online in today’s digital economy, secure and trustworthy identity verification has become critical. Traditional verification methods, such as submitting photocopies of identification documents, are no longer sufficient because they are highly vulnerable to identity theft and fraud. Beyond the risk of fraud, these conventional approaches also suffer from several operational limitations. Manual identity verification is often time-consuming and labor-intensive, leading to significant delays in service delivery. For example, a report by [1] found that manual onboarding processes can take up to 10 times longer than digital alternatives, directly affecting user satisfaction and operational efficiency. In addition, reliance on physical documents raises administrative costs and introduces the risk of human error during document verification. According to a study by Deloitte [2], organizations incur an average cost of USD 20 to USD 30 per customer for manual identity verification. Moreover, traditional verification systems lack scalability and are ill-suited to the demands of modern digital platforms, especially in remote onboarding scenarios. With the rapid growth of online financial services and government digital transformation initiatives, the inability of conventional methods to support seamless remote identity verification has become a significant barrier to service expansion. The World Bank’s Identification for Development (ID4D) initiative also highlights that nearly 1 billion people globally remain without formal identification, further emphasizing the need for more accessible and efficient digital identity solutions. In response to these challenges, this research introduces a robust identity verification framework that combines super-resolution preprocessing, a convolutional neural network (CNN), and Monte Carlo dropout-based Bayesian uncertainty estimation to improve facial recognition within electronic know your customer (e-KYC) processes.

Traditionally, identity verification has taken a variety of forms, including access card exchanges for facility entry, the presentation of government-issued documentation, and the submission of identification copies for banking or legal transactions. However, the diversity of verification protocols across sectors has led to a proliferation of disconnected systems, resulting in fragmented data repositories, inconsistent user experiences, and a lack of standardized mechanisms that allow verified identities to be reused across transactions over the long term. As a consequence, even returning clients frequently have to resubmit documents, while falsified or misrepresented credentials continue to enable fraudulent activities with widespread ramifications. To address such challenges, national-level digital transformation efforts have been launched to enhance the security, efficiency, and interoperability of digital identity systems. Anchored in the National Strategy (2018–2037) [3], Thailand’s digital development agenda emphasizes cross-sectoral technological integration, particularly in digital industries, artificial intelligence (AI), and secure data infrastructure. As part of this strategy, the Ministry of Digital Economy and Society (MDES) issued Ministerial Order No. 75/2560 [4], which mandates the creation of a committee tasked with developing and standardizing a national digital identity ecosystem. The primary aim of this initiative is to mitigate systemic inefficiencies, reduce redundancy, and establish robust verification protocols applicable to both the public and private sectors. Subsequently, the Electronic Transactions Development Agency (ETDA) was appointed to formulate strategic frameworks and technical guidelines for identity verification and authentication. These efforts culminated in the identity assurance level (IAL) and the authenticator assurance level (AAL), which serve as standardized metrics for the strength and reliability of digital identity systems [5,6]. These levels are based on the NIST Special Publication 800-63 digital identity guidelines (SP 800-63A for enrollment and identity proofing, and SP 800-63B for authentication) and are intended to ensure that verification processes are implemented with consistent rigor, scalability, and security, thereby aligning domestic policies with global digital identity assurance standards. In tandem with these regulatory advancements, academic research has increasingly explored technological methods capable of achieving these objectives. Central to this work is the application of deep learning algorithms, particularly convolutional neural networks (CNNs), to facial recognition and biometric verification. CNNs have demonstrated considerable success in extracting hierarchical features from facial images and performing accurate identity classification. Such image-based methods have become integral to electronic know your customer (e-KYC) systems, which aim to digitally establish and verify user identities in a secure and automated manner.

Despite these advancements, practical implementations continue to face several challenges. In real-world scenarios, facial images are often low in resolution, affected by suboptimal lighting, or captured at different points in time, all of which significantly degrade recognition performance. Additionally, conventional deep learning models often operate as deterministic black boxes, offering limited insight into the confidence or reliability of their predictions, which is a particular concern in critical domains such as finance or national security. To bridge this gap, the field is increasingly turning to statistical machine learning approaches, particularly those rooted in Bayesian inference, to enhance the robustness, interpretability, and reliability of identity verification systems. Bayesian methods provide a mathematically grounded framework for quantifying uncertainty in model predictions, enabling a system not only to classify but also to evaluate its confidence in those classifications. This capacity becomes crucial when working with noisy or degraded image inputs, as it allows more cautious decision making and better risk management in downstream processes [7,8]. Recent techniques such as Monte Carlo dropout, Bayesian neural networks, and variational inference allow deep learning models to approximate posterior distributions over weights or predictions, thereby providing insight into both epistemic (model-related) and aleatoric (data-related) uncertainty. These techniques also support the calibration of prediction scores, helping system designers and decision makers judge when a prediction should be trusted and when it should be verified by alternative means. Although numerous studies have applied deep learning techniques, particularly CNNs, to facial image-based identity verification, several critical limitations remain, which justify the need for this research. A primary concern is the quality of input images, which in real-world scenarios are often low-resolution, poorly lit, or captured under non-ideal conditions, significantly degrading the performance of conventional CNN models. Moreover, most existing approaches operate as opaque “black box” systems that offer no insight into the confidence of their predictions. This lack of interpretability poses a considerable risk in high-stakes applications such as financial services or national-level identity verification. Furthermore, prior research has seldom incorporated mechanisms for quantifying uncertainty, either in the data or in the model, which is essential for cautious and risk-aware decision making, particularly under ambiguous or noisy input conditions. In response to these challenges, this study proposes a novel framework that integrates CNNs with Bayesian techniques to develop an identity verification model that is not only accurate but also transparent and capable of estimating the reliability of its own predictions. This approach aims to support secure, interpretable, and scalable identity verification within e-KYC systems, in alignment with international standards for digital trust and assurance.
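To make the epistemic/aleatoric distinction concrete, one widely used decomposition (a general technique, not one stated by this study) splits the entropy of the averaged Monte Carlo prediction into the mean per-pass entropy, an aleatoric proxy, and the mutual information between the prediction and the sampled weights, an epistemic proxy: H[p̄] = (1/T) Σₜ H[pₜ] + I. The Python sketch below assumes only that T softmax vectors from stochastic forward passes are available; the function names are hypothetical.

```python
import math
from typing import List, Tuple


def entropy(p: List[float]) -> float:
    """Shannon entropy (natural log) of a categorical distribution."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0.0)


def decompose_uncertainty(mc_probs: List[List[float]]) -> Tuple[float, float, float]:
    """Split predictive uncertainty from T stochastic forward passes.

    mc_probs[t][k] is the softmax probability of class k on pass t.
    Returns (total, aleatoric, epistemic) where
      total     = H[mean_t p_t]      (predictive entropy)
      aleatoric = mean_t H[p_t]      (expected entropy)
      epistemic = total - aleatoric  (mutual information, >= 0 up to rounding)
    """
    T = len(mc_probs)
    K = len(mc_probs[0])
    mean_p = [sum(p[k] for p in mc_probs) / T for k in range(K)]
    total = entropy(mean_p)
    aleatoric = sum(entropy(p) for p in mc_probs) / T
    return total, aleatoric, total - aleatoric
```

When all passes agree, the epistemic term is close to zero even if each pass is individually uncertain; when passes confidently disagree (for example, half favor "match" and half "non-match"), the epistemic term dominates, which is the situation in which a verification system might defer to an alternative check.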
While previous studies have demonstrated the effectiveness of CNNs in facial recognition and identity verification tasks, most have been developed and evaluated in controlled environments, limiting their generalizability to real-world applications. These models often assume consistent lighting, high-resolution imagery, and minimal temporal variation, which is rarely the case in practice, especially within e-KYC frameworks where images may be acquired from mobile devices under diverse conditions. This discrepancy reveals a fundamental gap in robustness and adaptability. Furthermore, despite achieving high classification accuracy, existing CNN-based models typically function as deterministic systems that offer no estimate of predictive uncertainty. This black-box nature poses significant risks when such systems are deployed in security-critical domains such as finance, healthcare, or national governance, where decisions must be justifiable, auditable, and trustworthy. The lack of interpretable, confidence-aware outputs hinders their integration into systems that must comply with identity assurance standards such as the IAL (Identity Assurance Level) and AAL (Authenticator Assurance Level). In addition, while recent advances in Bayesian deep learning provide promising tools for quantifying model uncertainty, these techniques have not yet been adequately applied to identity verification, particularly in e-KYC contexts. Most prior works focus on either model performance metrics or algorithmic novelty but do not address the practical need for reliability estimation, risk calibration, or system-level interpretability under suboptimal input conditions.

This research seeks to fill these gaps by introducing a hybrid approach that combines the feature extraction power of CNNs with Bayesian inference methods to produce a system capable of both high accuracy and reliable uncertainty estimation. By focusing on real-world image quality variations and aligning with international identity verification standards, the proposed framework addresses current limitations and contributes a robust, interpretable, and regulation-ready solution for digital identity systems. Specifically, the objective is to develop a CNN-based identity verification framework optimized for e-KYC applications, while exploring the integration of Bayesian-inspired techniques to improve its practical robustness. The proposed system is evaluated on facial images captured under varying conditions, including low resolution and time-disjoint comparisons, to simulate realistic use cases. Special emphasis is placed on the model’s ability to provide interpretable outputs and reliable confidence measures, which are essential for compliance with IAL and AAL verification requirements. By combining advanced image processing, deep learning, and Bayesian statistical methods, this study aims to contribute a scalable, secure, and intelligent solution for digital identity verification. In doing so, it supports both the national digital strategy and the broader goal of alignment with international standards, while advancing the theoretical understanding of uncertainty-aware machine learning in real-world imaging applications.

Research questions (RQs). This study seeks to answer the following research questions:

RQ1: How can identity verification systems effectively improve recognition accuracy when dealing with low-resolution and degraded facial images, as commonly encountered in real-world e-KYC environments?

RQ2: How does the integration of uncertainty estimation methods, such as Monte Carlo Dropout, contribute to enhancing the reliability and transparency of identity verification decisions?

RQ3: Is the proposed identity verification framework compliant with international standards for digital identity assurance, specifically the Identity Assurance Level (IAL) and Authenticator Assurance Level (AAL)?

These research questions frame the development and evaluation of the proposed identity verification framework, ensuring both technical robustness and practical applicability in regulated digital environments.

The main contribution of this article is the development of a robust and interpretable identity verification framework that combines convolutional neural networks (CNNs) with Bayesian inference techniques, specifically tailored for real-world e-KYC applications. This hybrid approach addresses several critical limitations observed in the existing literature, including the lack of resilience to poor quality input images and the absence of uncertainty estimation in conventional deep learning models. The proposed system is capable of processing facial images captured under suboptimal conditions, while also quantifying both epistemic and aleatoric uncertainties, enabling it to provide confidence-aware predictions. These features are particularly relevant for applications that require high levels of trust, auditability, and compliance with international standards such as the Identity Assurance Level (IAL) and Authenticator Assurance Level (AAL). Furthermore, the model is designed with scalability and practical deployment in mind, offering a solution that not only advances technical performance but also meets the operational demands of national digital identity systems.

2. Related Works

In this research, the researchers conducted an extensive investigation and data-gathering effort to formulate a framework and guidelines for examining customer recognition processes through electronic channels, with particular emphasis on identity authentication. The study relies primarily on deep learning, specifically convolutional neural network (CNN) algorithms. Recent studies have extensively investigated identity verification techniques, particularly the challenges posed by low-resolution facial images and identity authentication on electronic platforms. While many of these works provide valuable insights, gaps remain in their practical application to real-world e-KYC scenarios.

Ouyang et al. [8] explored the use of CNNs to improve facial recognition for low-quality images. Their approach involved first extracting features from high-resolution images and then upscaling low-quality images to 56 × 56 pixels before applying the same feature extraction technique. Although their method achieved impressive accuracy rates of 98.2%, 99.1%, and 99.5% for low-resolution images sized 20 × 20, 24 × 24, and 36 × 36 pixels, respectively, it primarily focused on feature extraction without addressing decision confidence or uncertainty estimation.

Gal and Ghahramani [9] reinterpret dropout as an approximation to Bayesian inference, enabling neural networks to estimate uncertainty. By applying dropout during both training and testing (MC Dropout), models can capture predictive variance without architectural changes. This method offers a practical and efficient approach to uncertainty modeling in deep learning.
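The mechanism can be illustrated with a minimal sketch that assumes a hypothetical single-layer softmax classifier rather than the CNN used in this study. Dropout is left active at inference, the same input is passed through the model T times with fresh dropout masks, and the predictive distribution is approximated by the average p(y | x) ≈ (1/T) Σₜ p(y | x, ŵₜ). The per-class standard deviation across passes is reported as a simple spread measure. Only the Python standard library is used; all names and parameter values are illustrative.

```python
import math
import random
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)  # subtract the max logit for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def stochastic_forward(x: Sequence[float], W: Sequence[Sequence[float]],
                       b: Sequence[float], p_drop: float,
                       rng: random.Random) -> List[float]:
    """One forward pass with a fresh dropout mask on the inputs (inverted dropout)."""
    keep = 1.0 - p_drop
    # Kept units are rescaled by 1/keep so the expected activation is unchanged.
    x_d = [xi / keep if rng.random() < keep else 0.0 for xi in x]
    logits = [sum(w * xi for w, xi in zip(row, x_d)) + bk for row, bk in zip(W, b)]
    return softmax(logits)


def mc_dropout_predict(x: Sequence[float], W: Sequence[Sequence[float]],
                       b: Sequence[float], p_drop: float = 0.5, T: int = 50,
                       seed: int = 0) -> Tuple[List[float], List[float]]:
    """Average T stochastic passes; dropout stays ON at test time (MC dropout)."""
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    rng = random.Random(seed)
    passes = [stochastic_forward(x, W, b, p_drop, rng) for _ in range(T)]
    mean_p = [mean(p[k] for p in passes) for k in range(len(b))]
    std_p = [pstdev([p[k] for p in passes]) for k in range(len(b))]
    return mean_p, std_p
```

With p_drop = 0 every pass is identical and the spread is zero, recovering an ordinary deterministic classifier; with dropout enabled, disagreement among the sampled sub-networks shows up as a non-zero spread that can be passed to a downstream decision rule.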

Chen et al. [10] focused on age-invariant face recognition using deep learning with SVM classifiers, applying their models to datasets such as FGNET, MORPH, and CACD. While their results showed promising accuracy across diverse age groups, their solution was limited by its reliance on high-quality inputs and lacked robustness under degraded imaging conditions often encountered in real-world e-KYC applications.

Similarly, Singh et al. [11] proposed a face recognition framework utilizing the synthesis via hierarchical sparse representation (SHSR) algorithm. Their work focused on comparing high- and low-resolution image sets using multiple rounds of sparse representation. While the SHSR algorithm improved performance under varying resolutions, it did not integrate uncertainty modeling or regulatory compliance considerations, which are crucial for practical e-KYC deployment.

Li et al. [12] introduced a comparative face recognition approach using various deep learning architectures, including Siamese networks, Matchnet, and six-channel networks. Their framework involved aggressively downsampling facial images before applying super-resolution techniques for final comparison. Although this approach improved visual similarity matching, it lacked mechanisms to assess prediction reliability, which is critical in high-stakes verification scenarios.

Iqbal et al. [13] proposed an age group classification framework based on facial wrinkle and skin texture analysis, introducing the directional age-primitive pattern (DAPP) algorithm. Although their method effectively classified age groups, it did not address the broader challenges of identity verification under low-quality imaging environments or the need for compliance with digital identity standards.

The collated information served as the cornerstone for the development of theoretical constructs and a comprehensive review of pertinent research works. These theoretical constructs and research insights laid the groundwork for the establishment of systematic guidelines and methodological approaches for the scrutiny of customer recognition processes via electronic platforms [14,15,16,17,18,19,20,21,22,23,24].

While each of these studies contributes valuable approaches to specific technical challenges, none have comprehensively addressed the combined issues of low-resolution image processing, uncertainty estimation, and compliance with identity assurance frameworks. This research builds upon those foundations to propose a more holistic and practically deployable solution for secure and trustworthy identity verification in electronic environments.

Recent advancements in facial recognition, super-resolution techniques, and uncertainty estimation have directly influenced the development of more robust and reliable identity verification systems. Zhang et al. [25] introduced an attention-guided multi-scale interaction network for face super-resolution, which significantly enhances facial detail restoration from low-resolution inputs. This advancement supports our proposed framework’s objective of improving recognition accuracy when dealing with degraded image quality.

Wijaya et al. [26] proposed a GAN-based reconstruction technique aimed at enhancing the quality of low-resolution and degraded facial images prior to identity recognition. Their approach focused on restoring critical facial details such as facial contours, eyes, nose, and mouth through a specially designed GAN architecture that incorporates perceptual and adversarial loss functions to improve the realism and sharpness of reconstructed images. Experimental results demonstrated significant improvements in recognition accuracy, with an accuracy gain of over 12% compared to traditional interpolation methods like Bicubic and earlier super-resolution models such as SRCNN. The proposed method also showed high robustness against common image degradations including noise, blur, and geometric distortions. While their approach successfully improved the visual quality and recognition performance of facial images, it did not incorporate uncertainty estimation mechanisms. As a result, the system lacks the ability to provide confidence-aware predictions, which are essential for decision making in high-stakes environments such as e-KYC and financial services. In contrast, our proposed framework integrates not only super-resolution and recognition capabilities but also incorporates Monte Carlo dropout for predictive uncertainty estimation, addressing both accuracy and reliability concerns in practical deployment scenarios.

In the area of predictive uncertainty, Chen et al. [10] proposed a Bayesian identity cap method to deliver calibrated uncertainty estimates in facial recognition systems, making decision making more transparent and risk-aware. Similarly, Verma et al. [27] implemented a transfer learning approach that builds on the DenseNet-121 convolutional neural network to detect diabetic retinopathy. The authors apply Bayesian approximation techniques, including Monte Carlo dropout, to represent the posterior predictive distribution, enabling uncertainty evaluation of model predictions. Their experiments show that the Bayesian-augmented DenseNet-121 outperforms state-of-the-art models in test accuracy, reaching 97.68% for the Monte Carlo dropout model. Moreover, the comprehensive survey by Gawlikowski et al. [28] emphasizes that integrating uncertainty estimation with deep neural networks is crucial for developing trustworthy AI systems, particularly in applications involving security and identity verification. This supports the design philosophy of our proposed model, which combines image enhancement, predictive uncertainty estimation, and compliance with international digital identity standards to deliver a scalable, secure, and explainable solution for real-world e-KYC environments.

2.1. Conceptual Framework and Principles of Customer Recognition Process

According to the Bank of Thailand Notices No. 7/2559 and No. 31/2562, which set out the criteria for accepting deposits or receiving funds from the public, customer recognition encompasses identity verification and authentication. These processes can be carried out through two primary modalities: face-to-face encounters and non-face-to-face interactions via electronic channels. The operational procedures for each modality are as follows. In face-to-face encounters, the customer first provides personal identification information to a bank representative, presents identity documents, and undergoes physical signature verification; authorized personnel then examine the identity documents to verify their accuracy and authenticity; finally, the individual’s biometric information is compared with the data presented in the identity documents for authentication. In non-face-to-face transactions, the customer interacts electronically with the service provider’s system: the customer enters personal information and submits copies of identity documents electronically; the electronic device captures facial images together with images of the presented identity documents; and the captured facial images are then compared with the images on the identity documents for authentication. Hence, customer recognition via electronic channels enables customers and service providers to conduct identity verification and authentication independently through electronic devices or computer systems equipped with customer recognition services.

2.2. Guidelines for the Use of Digital IDs in Thailand

In accordance with the guidelines of the Electronic Transactions Development Agency (ETDA), under the supervision of the Ministry of Digital Economy and Society of Thailand, a working group was established to study and propose recommendations on the use of digital IDs in the country. These recommendations, documented in [3,4], serve as guidelines for registration, identity verification, and authentication. The registration and identity verification guideline provides recommendations for individuals registering their identities for service usage, categorized by level of trustworthiness. The identity assurance levels IAL1, IAL2, and IAL3 each have three sub-levels, with varying degrees of stringency and requirements for identity verification. The authentication guideline offers guidance on verifying and managing identity assertions according to the trust level of the authentication mechanisms used. The authenticator assurance levels AAL1, AAL2, and AAL3, with two sub-levels for AAL2, define the stringency and requirements for identity authentication. The registration and identity verification guideline, which is suitable for adaptation in simulating customer identification through electronic channels, serves as the basis for this study. In this research, identity assurance level 2.1 (IAL2.1) is explored and used to develop a process termed “physical comparison”. This process is enhanced with deep learning, employing convolutional neural network (CNN) models that learn from clear and blurred facial images of individuals.

Several deep learning-based super-resolution techniques have been proposed in recent years, including SRCNN, VDSR, EDSR, and ESRGAN. These methods differ in complexity, performance, and suitability for specific application domains. As summarized in Table 1, while GAN-based methods such as ESRGAN generate high perceptual quality, they may produce hallucinated features and are thus less appropriate for identity-sensitive tasks such as e-KYC. In contrast, SRCNN offers a balance between computational efficiency and enhancement quality, making it suitable for low-resolution facial image scenarios where real-time processing and system transparency are essential. Consequently, this study adopts an SRCNN-inspired approach for upscaling input images prior to classification, as compared in Table 1.
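The efficiency argument for SRCNN can be checked with simple arithmetic. The sketch below assumes the original 9-1-5 SRCNN configuration of Dong et al. (a 9 × 9 layer with 64 filters, a 1 × 1 layer with 32 filters, and a 5 × 5 reconstruction layer on a single luminance channel); the exact configuration of this study’s SRCNN-inspired module is not stated, so these values are assumptions, and the helper names are hypothetical.

```python
from typing import List, Tuple

# (kernel_size, out_channels) per layer; assumed 9-1-5 SRCNN with 64 and 32 feature maps
SRCNN_915: List[Tuple[int, int]] = [(9, 64), (1, 32), (5, 1)]


def conv_param_count(layers: List[Tuple[int, int]], in_channels: int = 1,
                     with_bias: bool = True) -> int:
    """Count learnable parameters in a plain stack of 2D convolution layers."""
    total, c_in = 0, in_channels
    for k, c_out in layers:
        total += k * k * c_in * c_out  # convolution weights
        if with_bias:
            total += c_out             # one bias per output channel
        c_in = c_out
    return total


def valid_output_size(n: int, layers: List[Tuple[int, int]]) -> int:
    """Spatial size after unpadded ('valid') stride-1 convolutions."""
    for k, _ in layers:
        n = n - k + 1
    return n


print(conv_param_count(SRCNN_915, with_bias=False))  # 8032
print(conv_param_count(SRCNN_915))                   # 8129
print(valid_output_size(33, SRCNN_915))              # 21
```

Because every layer is applied at the full output resolution, the weight count also approximates the number of multiply-accumulate operations per output pixel (about 8,000), which is what makes this family of models attractive for real-time, on-device preprocessing.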

The novelty of this research lies in the development of a unified and practical framework that addresses key limitations in existing e-KYC identity verification systems. Unlike conventional approaches that focus solely on achieving high classification accuracy under controlled conditions, this study introduces an innovative integration of super-resolution preprocessing, lightweight deep learning, and Bayesian uncertainty estimation within a single architecture. This combination has not been previously explored in the context of digital identity verification, particularly for scenarios involving low-quality facial images captured in real-world environments.

A distinctive aspect of this work is the use of a super-resolution convolutional neural network (SRCNN) to enhance degraded facial images prior to classification. This approach effectively mitigates common issues associated with low-resolution and blurred images, which frequently occur in mobile-based e-KYC processes. Furthermore, the incorporation of Bayesian-inspired uncertainty estimation adds a critical layer of interpretability and risk-awareness, enabling the system to provide confidence scores alongside predictions, which is an essential feature for compliance-driven applications in finance, government, and enterprise security. In addition to technical advancements, the proposed framework is explicitly designed to align with international standards such as the Identity Assurance Level (IAL) and Authenticator Assurance Level (AAL), ensuring that the solution not only performs well but also adheres to regulatory and security requirements. The lightweight nature of the architecture further enhances its practicality, allowing for efficient deployment on resource-constrained devices without compromising accuracy or reliability. This research presents a novel contribution by bridging the gap between deep learning innovation and practical, standards-compliant identity verification. It delivers a robust, explainable, and scalable solution capable of handling real-world data imperfections while supporting transparent and secure decision making in modern e-KYC systems.
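One way the confidence scores described above could feed a compliance-oriented workflow is a three-way gate: accept, reject, or escalate to manual review. The sketch is hypothetical; the thresholds are placeholders chosen for illustration, not values from this study or from the IAL/AAL guidelines, and would need calibration on validation data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    outcome: str        # "accept", "reject" or "manual_review"
    match_prob: float   # e.g. mean match probability over MC dropout passes
    epistemic: float    # e.g. mutual information across passes


def gate(match_prob: float, epistemic: float,
         accept_at: float = 0.90, reject_at: float = 0.10,
         max_epistemic: float = 0.05) -> Decision:
    """Hypothetical uncertainty-aware decision rule; all thresholds are placeholders."""
    if not 0.0 <= match_prob <= 1.0:
        raise ValueError("match_prob must be a probability")
    if epistemic > max_epistemic:
        # The sampled models disagree: defer regardless of the score.
        return Decision("manual_review", match_prob, epistemic)
    if match_prob >= accept_at:
        return Decision("accept", match_prob, epistemic)
    if match_prob <= reject_at:
        return Decision("reject", match_prob, epistemic)
    # Model is self-consistent but the score is ambiguous: also defer.
    return Decision("manual_review", match_prob, epistemic)
```

Checking the uncertainty condition first means a high score produced by an unsure model is never accepted automatically, which is the behavior an auditable e-KYC pipeline would usually want.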

3. Proposed Method

This study explores a comprehensive framework for customer identification via electronic, non-face-to-face methods. These procedures are conducted through digital platforms either provided by service entities or self-operated by individuals. The identification process is closely tied to registration protocols and involves stringent verification steps that emphasize document authenticity and image-based identity matching, as outlined in Section 2.1.

Central to this verification process is the comparison of facial features extracted from live or uploaded images against reference photographs embedded in official identification documents, most commonly national ID cards. In line with this objective, prior literature reveals numerous research efforts focusing on the evolution of artificial neural network architectures to address the challenges posed by image variability in real-world identity verification contexts. Of particular relevance are studies examining the performance of dual-network architectures designed to compare facial images that differ in sharpness or clarity, a scenario frequently encountered when contrasting live captures against ID card photographs. This is further supplemented by research into super-resolution techniques that aim to enhance image quality and preserve the critical facial features necessary for reliable comparison. Motivated by these insights, this study introduces a novel convolutional neural network (CNN) model tailored to perform comparative analysis between facial images exhibiting varying levels of resolution and sharpness, as illustrated in Figure 1. The proposed model is not only optimized for visual feature extraction but is also designed to integrate uncertainty-aware components, such as softmax-based confidence scoring or Monte Carlo dropout, enabling a more robust and interpretable decision-making process during facial comparisons.

To train and validate this model, a carefully curated dataset of facial images is required. The well-established CASIA-WebFace dataset is selected for this purpose due to its wide demographic coverage and sufficient variation in facial expressions, lighting conditions, and resolution levels. The dataset comprises 494,414 images of size 250 × 250 pixels, capturing a diverse set of individuals. As shown in Table 2, these images are categorized by key demographic attributes, including dark-skinned females, non-dark-skinned females, dark-skinned males, and non-dark-skinned males. This classification not only supports the development of a balanced training set but also facilitates the subsequent evaluation of model performance across different demographic groups, thereby addressing potential bias and supporting fairness in biometric identity verification. Moreover, by aligning the proposed approach with the principles of statistical machine learning, this study aims to advance current e-KYC systems towards greater robustness, fairness, and uncertainty-awareness, all of which are essential in real-world identity verification scenarios involving diverse and potentially degraded image inputs.
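The demographic categories in Table 2 can be used to keep every split balanced. The following is a minimal sketch of a stratified split, assuming a hypothetical record format of (image path, group label) and illustrative 80/10/10 ratios; the paper does not report its split procedure or ratios, so both are assumptions.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# Hypothetical record format: (image_path, demographic_group)
Sample = Tuple[str, str]


def stratified_split(samples: Sequence[Sample],
                     ratios: Tuple[float, float, float] = (0.8, 0.1, 0.1),
                     seed: int = 42) -> Dict[str, List[Sample]]:
    """Shuffle within each demographic group, then cut every group with the
    same ratios so that each split keeps the overall group proportions."""
    by_group: Dict[str, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_group[sample[1]].append(sample)
    rng = random.Random(seed)           # fixed seed for a reproducible split
    splits: Dict[str, List[Sample]] = {"train": [], "val": [], "test": []}
    for group in sorted(by_group):      # sorted for a deterministic iteration order
        items = list(by_group[group])
        rng.shuffle(items)
        n_train = int(len(items) * ratios[0])
        n_val = int(len(items) * ratios[1])
        splits["train"].extend(items[:n_train])
        splits["val"].extend(items[n_train:n_train + n_val])
        splits["test"].extend(items[n_train + n_val:])  # remainder goes to test
    return splits
```

This splits images, not people; if evaluation must be identity-disjoint, the same idea applies with individuals (rather than images) as the unit being shuffled and assigned within each group.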

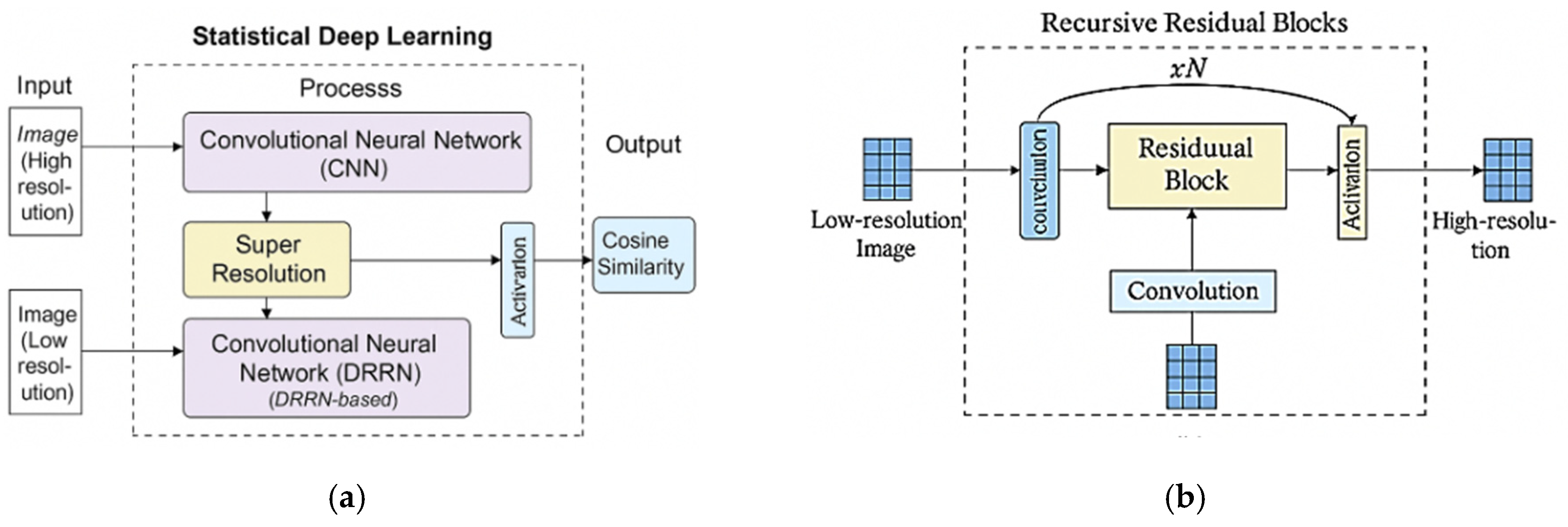

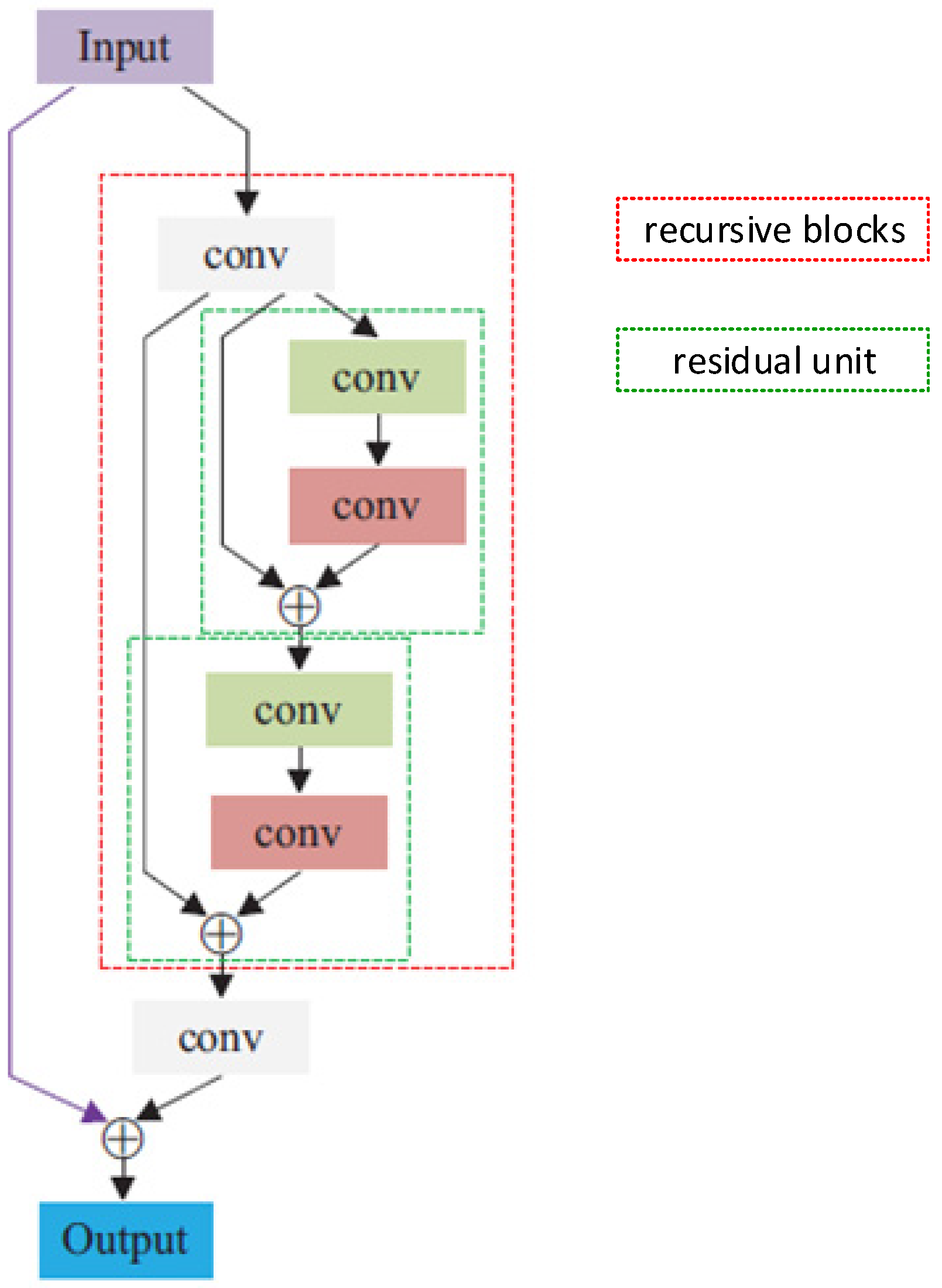

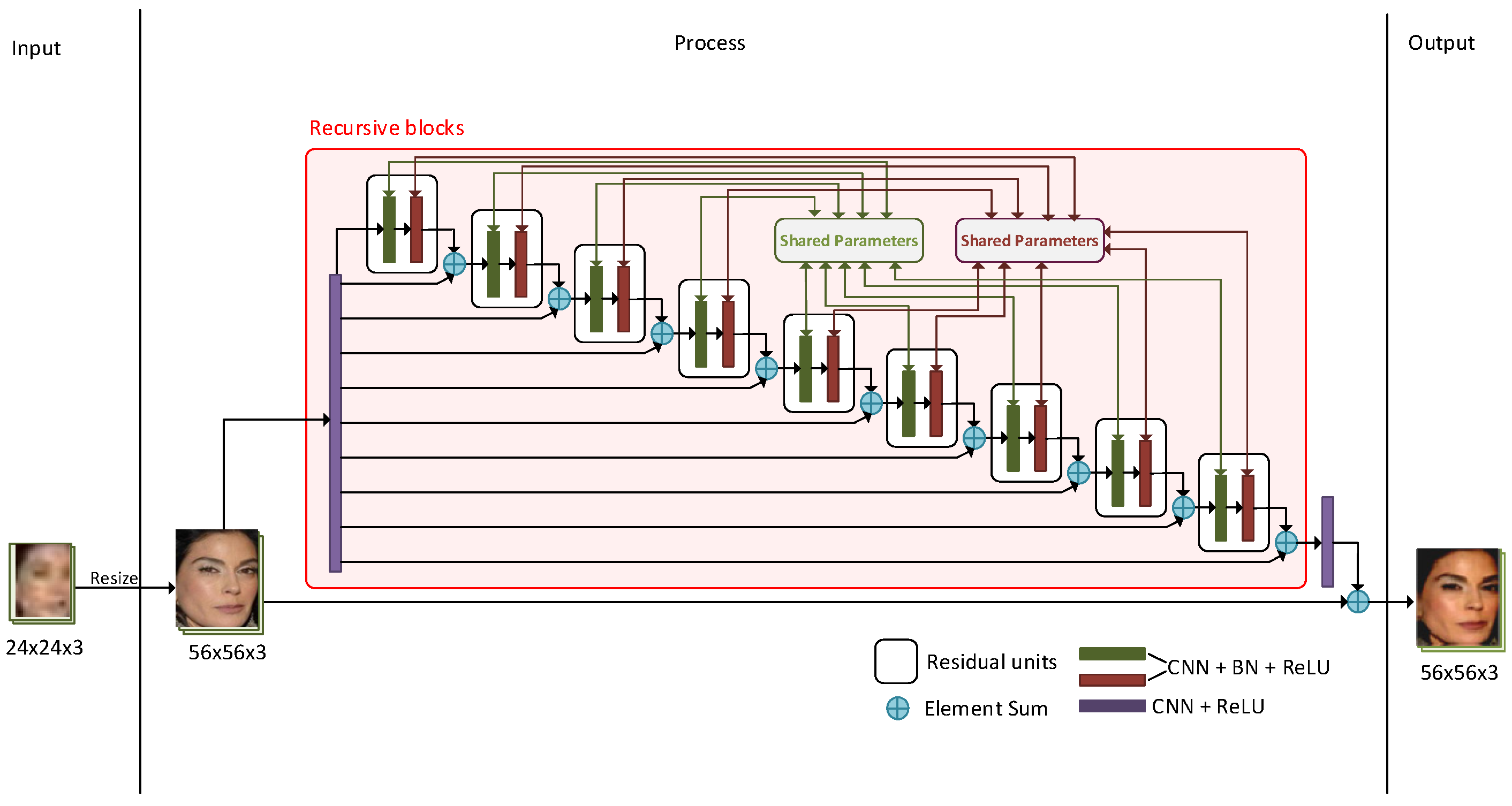

As shown in Figure 1a, the overall architecture of the proposed identity verification framework integrates super-resolution preprocessing, convolutional neural networks (CNNs) based on the deep recursive residual network (DRRN), and Monte Carlo dropout for uncertainty estimation. This framework enhances low-resolution facial images, extracts deep facial features, and supports reliable identity verification through confidence-aware predictions. Figure 1b shows the detailed structure of the recursive residual block employed in the DRRN architecture. The block uses multiple convolutional layers with residual connections and activation functions, applied recursively to progressively refine image resolution and improve feature representation.

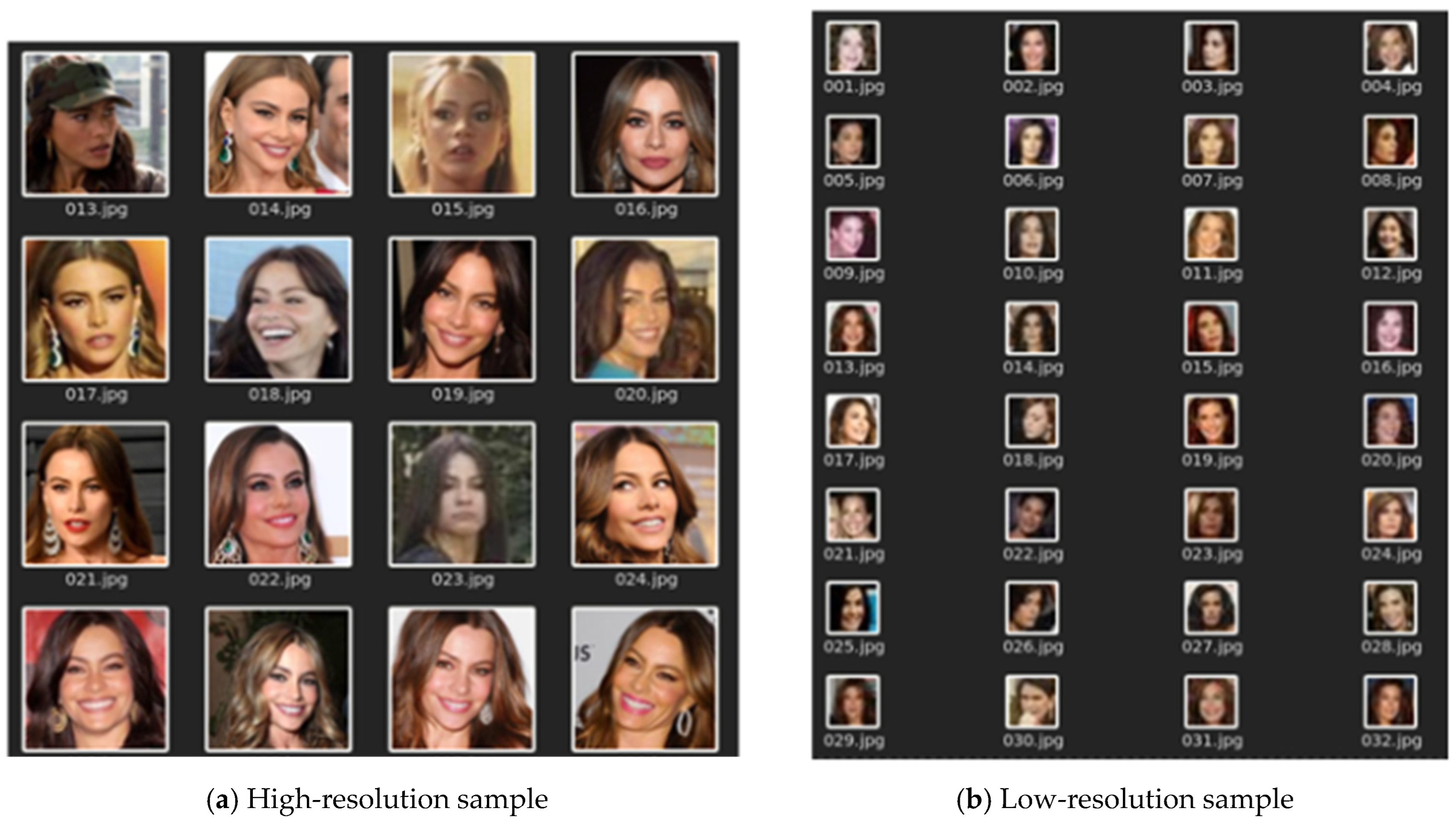

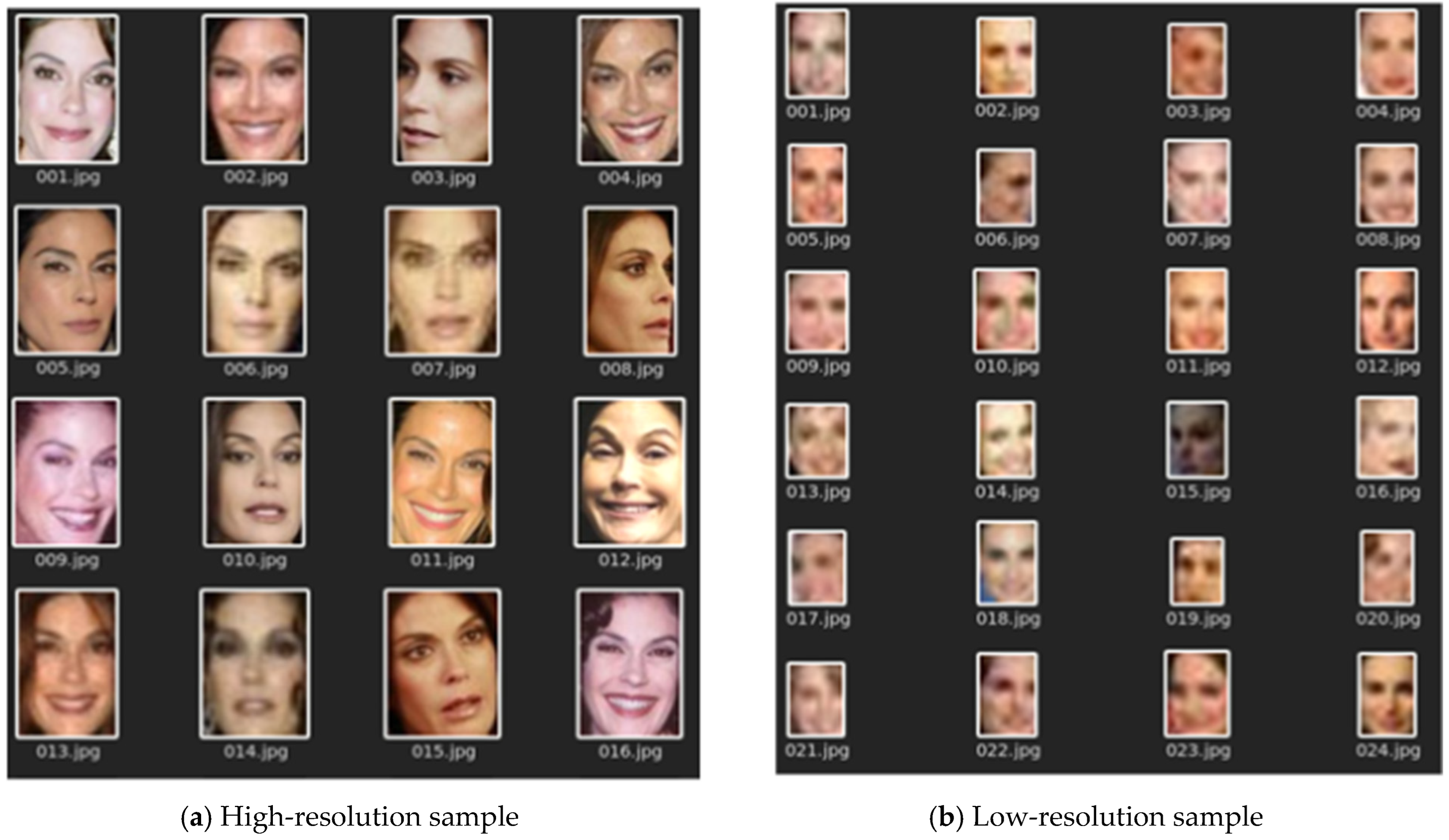

Subsequently, the facial image dataset was duplicated into two subsets to separately train the convolutional neural network high-resolution branch (CNNHRB) and the low-resolution branch (CNNLRB), yielding a total of 58,350 images. For the CNNLRB subset, each facial image was resized to 24 × 24 pixels using the cv2.resize() function from the OpenCV library. Bilinear interpolation was chosen for this step because it balances the preservation of visual detail against computational cost, which makes it well suited to low-resolution learning scenarios, as illustrated in Figure 2. Before training, the images of both branches were passed through a face detection algorithm. This step isolates the relevant facial regions and removes unnecessary background information, reducing the overall computational load and accelerating training, as shown in Figure 3.
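The sketch below illustrates the downsampling step. It resizes a single-channel image, given as a list of rows, to 24 × 24 using bilinear interpolation. It is pure Python so that it runs without OpenCV. It uses the half-pixel-centre coordinate mapping that OpenCV also applies for cv2.INTER_LINEAR, but it is a didactic stand-in, not the authors’ code, and it is not guaranteed to match OpenCV’s output bit for bit. An RGB image would be processed one channel at a time. The formal description below specifies bicubic interpolation instead. In OpenCV, the two choices correspond to passing cv2.INTER_LINEAR or cv2.INTER_CUBIC as the interpolation argument of cv2.resize().

```python
def bilinear_resize(img, out_h=24, out_w=24):
    """Resize a 2-D grayscale image (list of equal-length rows) bilinearly.

    Uses the half-pixel-centre mapping src = (dst + 0.5) * scale - 0.5,
    clamped to the valid pixel range.
    """
    in_h, in_w = len(img), len(img[0])
    scale_y, scale_x = in_h / out_h, in_w / out_w
    out = []
    for y in range(out_h):
        # Map the centre of output row y back into input coordinates.
        sy = min(max((y + 0.5) * scale_y - 0.5, 0.0), in_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, in_h - 1)
        wy = sy - y0
        row = []
        for x in range(out_w):
            sx = min(max((x + 0.5) * scale_x - 0.5, 0.0), in_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, in_w - 1)
            wx = sx - x0
            # Interpolate horizontally on the two neighbouring rows, then vertically.
            top = img[y0][x0] * (1 - wx) + img[y0][x1] * wx
            bottom = img[y1][x0] * (1 - wx) + img[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


# Example: a 250 x 250 horizontal gradient downsampled to 24 x 24.
hr = [[float(x) for x in range(250)] for _ in range(250)]
lr = bilinear_resize(hr)
print(len(lr), len(lr[0]))  # 24 24
```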

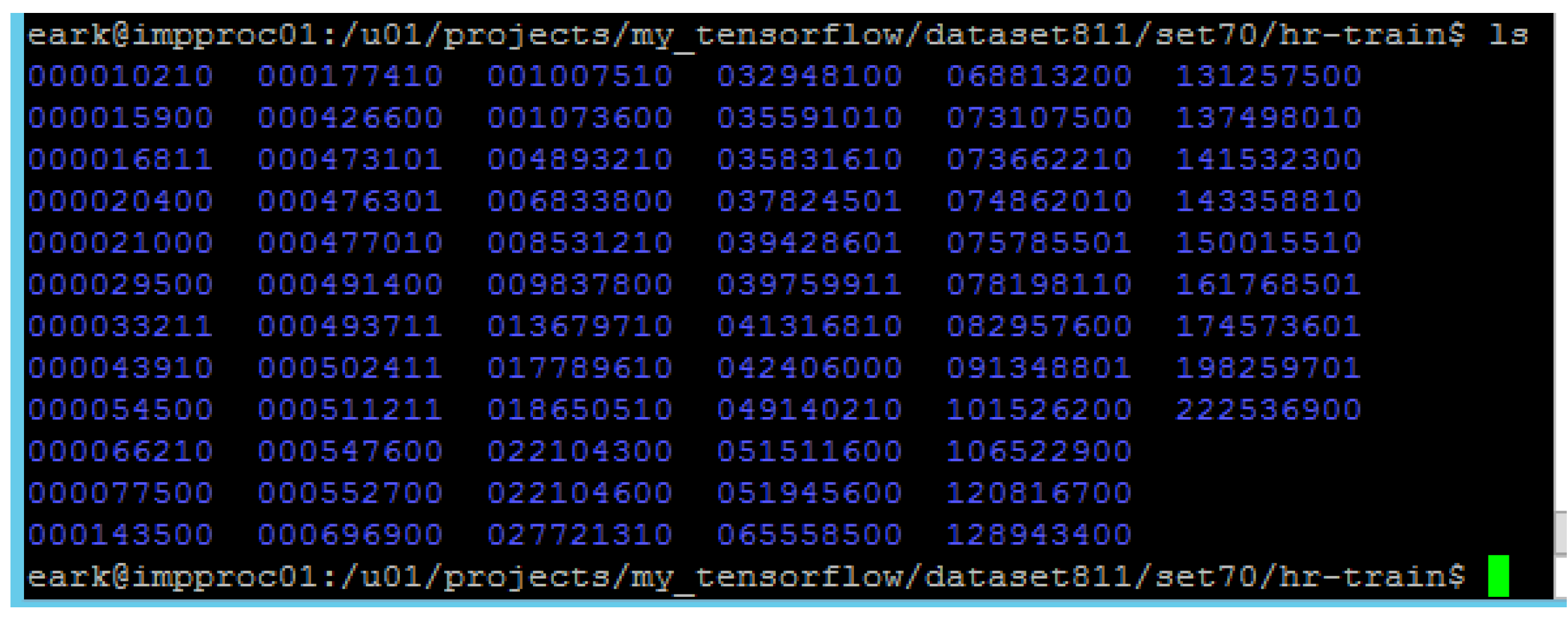

After face detection, the facial images were organized into training, validation, and test datasets. Each dataset comprised two sets of data, one for training the convolutional neural network high-resolution branch (CNNHRB) and one for the convolutional neural network low-resolution branch (CNNLRB). The facial image datasets were stored on a computer system for neural network training in six main folders: hr-train, hr-val, hr-test, lr-train, lr-val, and lr-test, as illustrated in Figure 4. Within each main folder, four sub-folders store the detected facial image samples, organized on a per-individual basis. Each individual was labeled with an eight-digit file name, as depicted in Figure 5. The last two digits of the file name denote the individual’s gender and skin tone, as outlined in Table 2.

This section outlines the methodology for preparing, processing, and structuring facial image data to train a dual-branch convolutional neural network (CNN) for identity verification. The design uses both high-resolution (HR) and low-resolution (LR) image inputs and supports the integration of Bayesian uncertainty estimation to improve model reliability and robustness. All steps of the following equations are detailed in Appendix A.

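Let $\mathcal{D} = \{I_i\}_{i=1}^{N}$ be the set of original RGB facial images, where each image $I_i \in \mathbb{R}^{250 \times 250 \times 3}$ and $N$ is the number of images. The dataset is duplicated into two subsets, $\mathcal{D}_{HR}$ and $\mathcal{D}_{LR}$.

To obtain $\mathcal{D}_{LR}$, each image was downsampled to 24 × 24 pixels using the cv2.resize() function from the OpenCV library. Bicubic interpolation was applied to preserve critical facial features while maintaining computational efficiency:

$$\mathcal{D}_{HR} = \mathcal{D}, \qquad \mathcal{D}_{LR} = \left\{ \operatorname{resize}(I_i, 24 \times 24) : I_i \in \mathcal{D} \right\}.$$

This results in a combined dataset of $|\mathcal{D}_{HR}| + |\mathcal{D}_{LR}| = 2N = 58{,}350$ facial images.

To reduce computational complexity during training, all images undergo facial detection using a predefined algorithm $\phi$, which extracts the facial region from each image. The processed datasets become

$$\tilde{\mathcal{D}}_{HR} = \left\{ \phi(I) : I \in \mathcal{D}_{HR} \right\}, \qquad \tilde{\mathcal{D}}_{LR} = \left\{ \phi(I) : I \in \mathcal{D}_{LR} \right\}.$$

Each of the processed HR and LR datasets is partitioned into training, validation, and test sets:

$$\tilde{\mathcal{D}}_{k} = \mathcal{D}_{k}^{\text{train}} \cup \mathcal{D}_{k}^{\text{val}} \cup \mathcal{D}_{k}^{\text{test}}, \qquad k \in \{HR, LR\},$$

where the three subsets are pairwise disjoint.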
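The partition can be sketched as a seeded random split of each branch’s image list. This is a minimal sketch under stated assumptions:

- The function name split_dataset is hypothetical.
- The split is drawn per image rather than per identity.
- The default ratios follow the 70/15/15 proportions stated in Section 4.2.

Each branch holds 58,350 / 2 = 29,175 images. The ratios are kept as parameters because the per-branch counts reported later (23,341 training images and 2,917 test images) correspond to roughly 80% and 10% of that total.

```python
import random


def split_dataset(items, train=0.70, val=0.15, seed=42):
    """Shuffle a list and split it into train/val/test subsets.

    The test subset receives whatever remains after the train and
    validation fractions are taken, so no item is dropped.
    """
    if not 0 < train + val < 1:
        raise ValueError("train + val must lie strictly between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG: reproducible, no global state
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


# Hypothetical file identifiers for one branch (29,175 images per branch).
files = [f"img_{i:05d}.jpg" for i in range(29_175)]
hr_train, hr_val, hr_test = split_dataset(files)
print(len(hr_train), len(hr_val), len(hr_test))  # 20422 4376 4377
```

A seeded shuffle produces a permutation that depends only on the list length and the seed. Splitting the HR and LR lists with the same seed therefore keeps each HR image and its LR counterpart in the same subset, provided both lists are in the same order.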

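The images are stored in six main folders: hr-train, hr-val, hr-test, lr-train, lr-val, and lr-test.

Each main folder contains four sub-folders categorized by individual identity. Each image file is labeled with an eight-digit code:

$$\text{label} = \text{ID} \,\Vert\, \text{Code},$$

where $\Vert$ denotes concatenation, ID denotes a unique six-digit person identifier, and Code is the two-digit suffix that encodes gender and skin tone, as outlined in Table 3.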
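The following is a minimal sketch of decoding this naming scheme. It assumes the eight-digit name consists of the person ID in its first six digits followed by the two-digit Code. The function name parse_label is hypothetical. The mapping from Code values to gender and skin tone is deliberately left out, because that mapping is defined in the referenced table rather than here.

```python
def parse_label(name):
    """Split an eight-digit label into (person_id, code).

    person_id: first six digits (unique individual identifier)
    code:      last two digits (encodes gender and skin tone)
    """
    stem = name.split(".")[0]  # tolerate an extension such as '.jpg'
    if len(stem) != 8 or not stem.isdigit():
        raise ValueError(f"expected an eight-digit label, got {name!r}")
    return stem[:6], stem[6:]


print(parse_label("00012301"))  # ('000123', '01')
```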

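Two separate CNNs are trained for the HR and LR image branches, respectively. Each network maps an input image to a latent feature vector in $\mathbb{R}^{d}$, that is, $f_{HR}: \mathcal{X}_{HR} \to \mathbb{R}^{d}$ and $f_{LR}: \mathcal{X}_{LR} \to \mathbb{R}^{d}$. Here $\mathcal{X}_{HR}$ and $\mathcal{X}_{LR}$ denote the HR and LR input image spaces, and $d = 512$ is the output size of the final dense layer in Algorithm 2.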

After feature vectors are extracted from two facial images, one high-resolution (HR) and one low-resolution (LR), using the feature extraction function f, the similarity between the two images is evaluated using a similarity function F. We adopt cosine similarity as the metric to quantify how close the two vectors are in the embedding space. The cosine similarity is defined as

$$F(\mathbf{v}_{HR}, \mathbf{v}_{LR}) = \frac{\mathbf{v}_{HR} \cdot \mathbf{v}_{LR}}{\lVert \mathbf{v}_{HR} \rVert_2 \, \lVert \mathbf{v}_{LR} \rVert_2},$$

where

- $\mathbf{v}_{HR} = f(I_{HR})$ and $\mathbf{v}_{LR} = f(I_{LR})$ are the feature vectors extracted from the HR and LR images, respectively;
- · denotes the dot product of the two vectors;
- ∥⋅∥2 is the L2 norm, used to normalize the vectors.

The resulting similarity score ranges from −1 to 1:

- a value close to 1 indicates a high degree of similarity (i.e., likely the same person);
- a value near 0 or negative suggests low similarity (i.e., different individuals).

To make a final decision, a threshold τ is introduced. The identity verification outcome is determined as

$$\text{outcome} = \begin{cases} \text{match (same identity)}, & F(\mathbf{v}_{HR}, \mathbf{v}_{LR}) \ge \tau, \\ \text{no match (different identities)}, & \text{otherwise.} \end{cases}$$

Using cosine similarity allows the model to effectively compare facial representations from images of varying resolutions. Because cosine similarity is scale-invariant, it relies on the direction of the feature vectors rather than their magnitude. This makes it particularly suitable for real-world scenarios where image quality and capture conditions may vary significantly.
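The similarity function and the threshold rule can be sketched in a few lines of plain Python. The names cosine_similarity and verify, and the example threshold τ = 0.90, are illustrative assumptions rather than the authors’ implementation. The example also demonstrates the scale invariance discussed above.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def verify(v_hr, v_lr, tau=0.90):
    """Return True (same identity) when the similarity reaches the threshold tau."""
    return cosine_similarity(v_hr, v_lr) >= tau


v_hr = [0.2, 0.9, 0.4]
v_lr = [0.4, 1.8, 0.8]  # same direction, twice the magnitude
print(round(cosine_similarity(v_hr, v_lr), 6))  # 1.0
print(verify(v_hr, [0.9, -0.2, 0.1]))           # False
```

Doubling a vector leaves the score unchanged, which is why differences in feature magnitude between the two branches do not affect the decision.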

To enhance the robustness and interpretability of the system, Bayesian-inspired methods such as Monte Carlo dropout can be employed during inference to estimate predictive uncertainty.

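Let $s_t$ denote the similarity score from the $t$-th of $T$ forward passes with dropout enabled. The predictive mean and variance are computed as

$$\hat{\mu} = \frac{1}{T} \sum_{t=1}^{T} s_t, \qquad \hat{\sigma}^2 = \frac{1}{T} \sum_{t=1}^{T} \left( s_t - \hat{\mu} \right)^2.$$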

This approach provides a confidence estimate that can be used to filter low-certainty predictions or trigger secondary verification processes, thus improving the system’s reliability.
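The following is a minimal, framework-free sketch of Monte Carlo dropout inference. It uses a toy linear embedding layer, and the following values are hypothetical: the weight matrix, the dropout rate p = 0.25, the number of passes T = 50, and the variance cut-off. Dropout stays active at inference, so the T stochastic forward passes yield the scores $s_t$. Their mean drives the threshold decision, and their variance decides whether the case is deferred to a secondary check.

```python
import math
import random
import statistics


def embed(x, weights, p, rng):
    """Toy embedding layer: inverted dropout on the inputs, then a linear map."""
    keep = 1.0 - p
    dropped = [xi / keep if rng.random() < keep else 0.0 for xi in x]
    return [sum(w * d for w, d in zip(row, dropped)) for row in weights]


def cosine(u, v):
    """Cosine similarity; returns 0.0 if dropout zeroed an entire embedding."""
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def mc_dropout_similarity(x_hr, x_lr, w_hr, w_lr, p=0.25, T=50, seed=0):
    """Predictive mean and (population) variance of T scores with dropout kept on."""
    rng = random.Random(seed)
    scores = [cosine(embed(x_hr, w_hr, p, rng), embed(x_lr, w_lr, p, rng))
              for _ in range(T)]
    return statistics.fmean(scores), statistics.pvariance(scores)


def decide(mean, var, tau=0.90, max_var=0.01):
    """Defer to a secondary check when uncertain; otherwise threshold the mean."""
    if var > max_var:
        return "secondary-check"
    return "match" if mean >= tau else "no-match"


W = [[0.5, -0.2, 0.1, 0.3],
     [0.1, 0.4, -0.3, 0.2],
     [-0.2, 0.1, 0.6, 0.1]]  # hypothetical weights: 4 features -> 3-D embedding
mean, var = mc_dropout_similarity([1.0, 0.5, 0.2, 0.8], [0.9, 0.6, 0.1, 0.7], W, W)
print(f"mean={mean:.3f} var={var:.4f} -> {decide(mean, var)}")
```

With p = 0, every pass is identical, so the variance collapses to zero and the procedure reduces to the deterministic rule above.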

3.1. HRFECNN and LRFECNN Design

In this research, HRFECNN and LRFECNN neural networks were developed to examine the similarity of facial images with different levels of clarity. The LRFECNN neural network incorporates the very-deep super-resolution (VDSR) process to enhance the resolution of low-resolution facial images. In addition, the deep recursive residual network (DRRN) method was chosen to augment the clarity enhancement process in this study. The neural network architectures are illustrated in Figure 6 and further detailed in Algorithm 1. Given the input image data X and the enhancement model F, the predicted enhanced image $\hat{Y}$ can be expressed by the following equation:

$$\hat{Y} = F(X) + X,$$

where F(X) represents the result obtained by passing the image X through the enhancement neural network, and $\hat{Y}$ is the image predicted by the model. The network only has to predict the detail F(X) that is added to X. As a result, $\hat{Y}$ gains detail relative to X without the image being resized in this process. In this formulation, the input is carried to the output through an identity skip connection. It is known as global residual learning and is commonly used in VDSR- and DRRN-style enhancement networks.

| Algorithm 1. Enhancement neural network with DRRN [17]. |

Input: image data X

Output: enhanced image $\hat{Y}$

1. Initialize the enhancement neural network model F with the deep recursive residual network architecture.
2. Pass the input image data X through the enhancement neural network F to obtain the enhanced image features F(X).
3. Calculate the enhanced image by adding the enhanced image features F(X) to the input image data X, i.e., $\hat{Y} = F(X) + X$.
4. Return the enhanced image $\hat{Y}$.

|
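The global residual step of Algorithm 1 can be sketched without any deep-learning framework. Here the learned network F is replaced by a hypothetical fixed stand-in: a scaled 3 × 3 Laplacian high-frequency estimate. The example therefore demonstrates only the structure $\hat{Y} = F(X) + X$, in which F predicts a correction that is added back to the input at the same spatial size. It is not the DRRN itself.

```python
def laplacian_residual(img, strength=0.5):
    """Stand-in for F(X): a scaled 3x3 Laplacian with edge-replicated borders."""
    h, w = len(img), len(img[0])

    def px(y, x):
        # Replicate edge pixels for positions outside the image.
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    return [[strength * (4 * px(y, x) - px(y - 1, x) - px(y + 1, x)
                         - px(y, x - 1) - px(y, x + 1))
             for x in range(w)] for y in range(h)]


def enhance(x, residual_fn):
    """Global residual learning: Y_hat = F(X) + X, same spatial size as X."""
    r = residual_fn(x)
    return [[xi + ri for xi, ri in zip(x_row, r_row)] for x_row, r_row in zip(x, r)]


x = [[0, 0, 0, 0],
     [0, 10, 10, 0],
     [0, 10, 10, 0],
     [0, 0, 0, 0]]
y_hat = enhance(x, laplacian_residual)
print(y_hat[1][1])  # 20.0: the edge pixel is brightened relative to its dark neighbours
```

If the residual function returns zeros, the input passes through unchanged. This is why residual learning lets the network concentrate on the missing detail rather than on reproducing the whole image.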

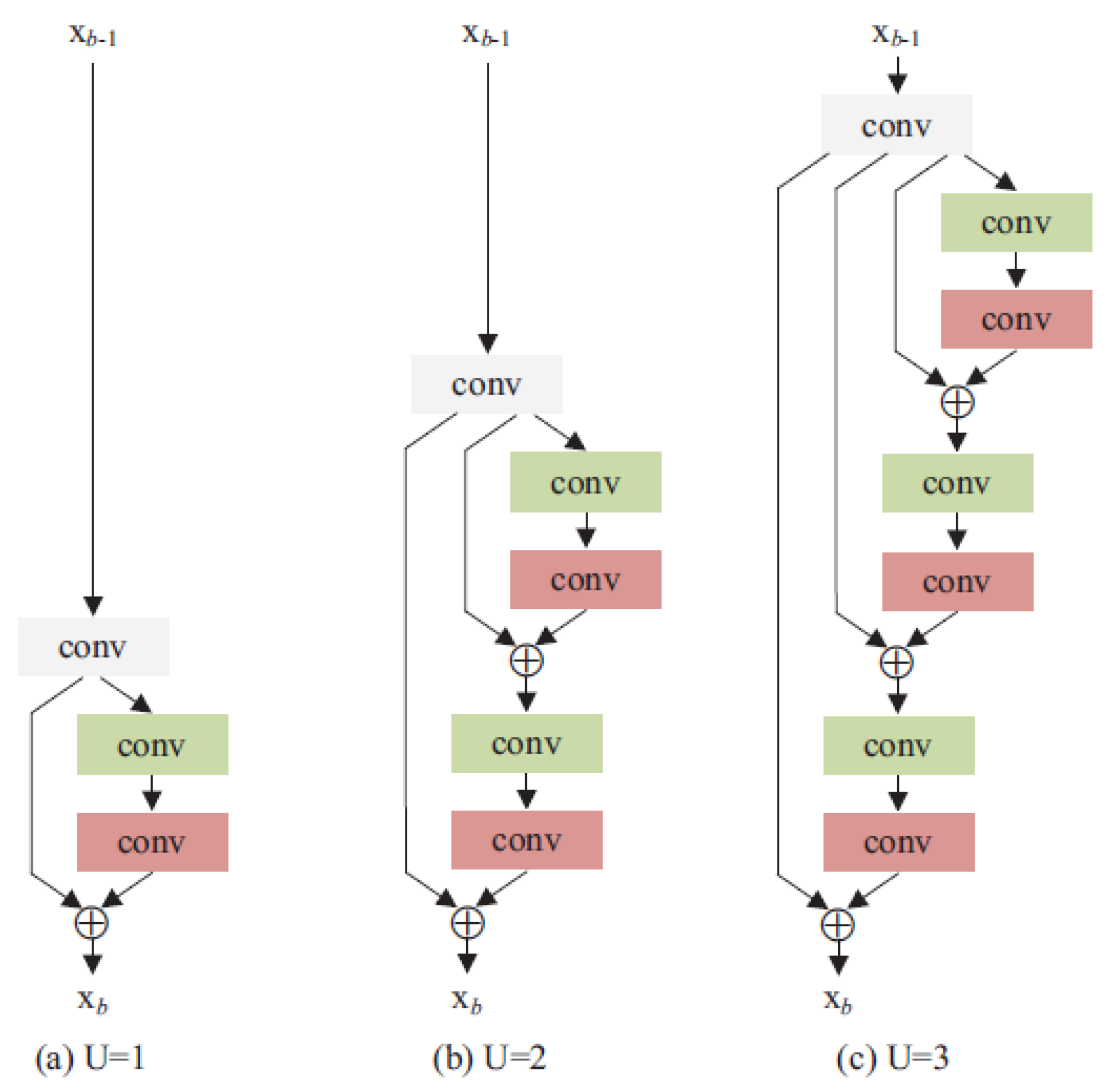

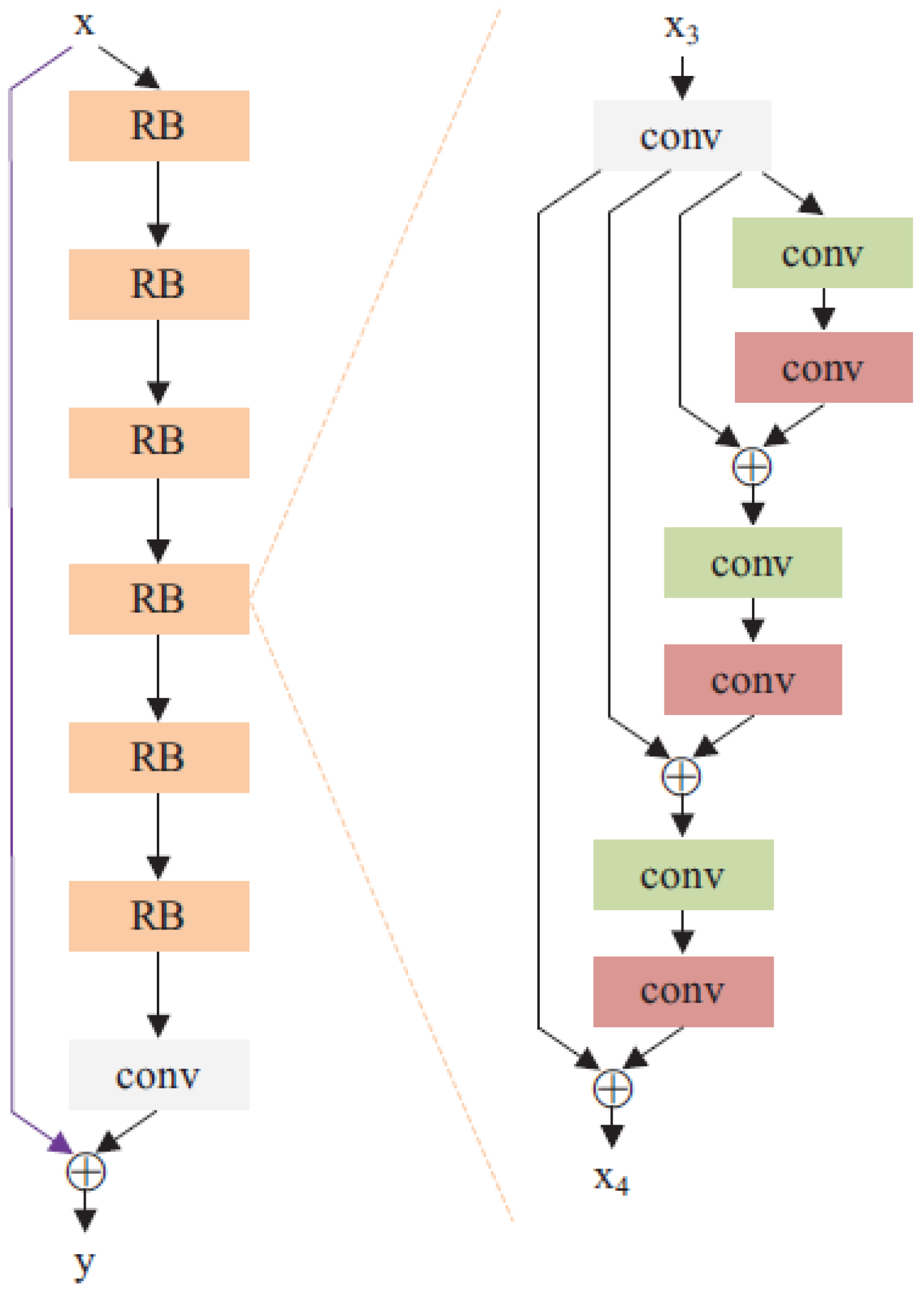

In the depicted enhancement neural network with the deep recursive residual network (DRRN) architecture, two components allow the processing depth to be adjusted: the residual units, shown within the green-bordered frame, and the recursive blocks, shown within the red-bordered frame. Each recursive block can contain an arbitrary number of residual units, as illustrated in Figure 7. Likewise, the DRRN framework for enhancing image sharpness can stack an arbitrary number of recursive blocks, as shown in Figure 8.

Accordingly, in this research we present the convolutional neural network high-resolution branch (CNNHRB) for processing high-quality facial images. The CNNHRB builds on the architecture of the high-resolution facial enhancement convolutional neural network (HRFECNN), with adjusted hyperparameters such as stride and padding, as well as modified pooling layers. We also introduce the convolutional neural network low-resolution branch (CNNLRB), which integrates the deep recursive residual network (DRRN) for sharpness enhancement into the convolutional neural network architecture. The outputs of the CNNHRB and CNNLRB networks are then compared using cosine similarity to evaluate facial similarity, as depicted in Figure 9.

To represent the convolutional neural network high-resolution branch (CNNHRB) and the convolutional neural network low-resolution branch (CNNLRB) mathematically, we can define a series of equations that describe the transformations applied to the input images through the layers of the networks.

CNNHRB (high-resolution branch)

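Let $X_{HR}$ denote the high-resolution input image. The CNNHRB processes this image through a series of convolutional layers, activation functions, pooling layers, and possibly other layers such as batch normalization. The output of CNNHRB, $\mathbf{v}_{HR}$, is then used for similarity computation.

1. Convolutional layer.

$$H_1 = f\left(W_1 * X_{HR} + b_1\right)$$

2. Pooling layer.

$$P_1 = \operatorname{pool}(H_1)$$

3. Subsequent layers.

$$H_n = f\left(W_n * P_{n-1} + b_n\right), \quad P_n = \operatorname{pool}(H_n), \quad n = 2, \dots, N, \qquad \mathbf{v}_{HR} = g(P_N)$$

where

- $W_n$ and $b_n$ are the weights and biases of the n-th layer;
- f is the activation function (e.g., ReLU);
- $*$ denotes the convolution operation;
- pool denotes the pooling operation (e.g., max pooling);
- g denotes the final flatten and fully connected layers that produce the feature vector.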
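The sketch below makes the first two steps concrete. It applies one convolution with bias, a ReLU activation, and 2 × 2 max pooling to a single-channel input in pure Python. The kernel, bias, and input values are hypothetical. As in deep-learning frameworks, the "convolution" is implemented as cross-correlation, here without padding.

```python
def conv2d_valid(x, kernel, bias):
    """Single-channel 'valid' cross-correlation (what CNN libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(kernel[i][j] * x[y + i][xx + j] for i in range(kh) for j in range(kw)) + bias
             for xx in range(len(x[0]) - kw + 1)]
            for y in range(len(x) - kh + 1)]


def relu(fmap):
    """Element-wise activation f(z) = max(0, z)."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """Non-overlapping 2x2 max pooling (an odd trailing row/column is dropped)."""
    return [[max(fmap[y][x], fmap[y][x + 1], fmap[y + 1][x], fmap[y + 1][x + 1])
             for x in range(0, len(fmap[0]) - 1, 2)]
            for y in range(0, len(fmap) - 1, 2)]


x = [[1, 2, 0, 1, 3],
     [0, 1, 2, 3, 1],
     [1, 0, 1, 2, 2],
     [2, 1, 0, 1, 0],
     [0, 2, 1, 0, 1]]
kernel = [[1, 0], [0, -1]]                     # hypothetical 2x2 weights W_1
h1 = relu(conv2d_valid(x, kernel, bias=0.5))  # H_1 = f(W_1 * X + b_1)
p1 = max_pool2x2(h1)                          # P_1 = pool(H_1)
print(p1)  # [[0.5, 1.5], [0.5, 2.5]]
```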

CNNLRB (low-resolution branch with DRRN)

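Let $X_{LR}$ denote the low-resolution input image. The CNNLRB includes an enhancement step using DRRN to improve image resolution before further processing. The enhanced image $\hat{X}_{LR}$ is then passed through convolutional layers similar to those of CNNHRB. The output of CNNLRB, $\mathbf{v}_{LR}$, is used for similarity computation.

1. Entry convolution of the i-th recursive block, with $B_0 = X_{LR}$.

$$H_i^{(0)} = f\left(W_i^{\text{in}} * B_{i-1} + b_i^{\text{in}}\right)$$

2. Recursive block (DRRN).

$$H_i^{(u)} = \mathcal{R}\left(H_i^{(u-1)}\right) + H_i^{(0)}, \quad u = 1, \dots, U, \qquad B_i = H_i^{(U)}, \qquad \hat{X}_{LR} = W_{rec} * B_K + b_{rec} + X_{LR}$$

3. Subsequent convolutional layers.

$$H_n = f\left(W_n * P_{n-1} + b_n\right), \quad P_n = \operatorname{pool}(H_n), \quad n = 1, \dots, N, \quad P_0 = \hat{X}_{LR}, \qquad \mathbf{v}_{LR} = g(P_N)$$

where

- $\mathcal{R}$ represents the residual units within a recursive block;
- $B_i$ is the output of the i-th recursive block, and K is the number of recursive blocks;
- $W_{rec}$ and $b_{rec}$ are the weights and bias of the reconstruction layer;
- g denotes the final flatten and fully connected layers.

The weights and biases of $\mathcal{R}$ are shared across the residual units of each recursive block.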

Cosine Similarity

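After obtaining the outputs $\mathbf{v}_{HR}$ of CNNHRB and $\mathbf{v}_{LR}$ of CNNLRB, the cosine similarity between these two feature vectors is computed as follows:

$$\cos\left(\mathbf{v}_{HR}, \mathbf{v}_{LR}\right) = \frac{\mathbf{v}_{HR} \cdot \mathbf{v}_{LR}}{\lVert \mathbf{v}_{HR} \rVert \, \lVert \mathbf{v}_{LR} \rVert},$$

where · denotes the dot product, and ∥·∥ denotes the Euclidean norm.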

Similarity Measurement Justification

In selecting the similarity metric for identity verification, we considered several alternatives, including Euclidean distance and Jaccard similarity. Cosine similarity was ultimately chosen because it performed best in scenarios where image resolution and quality vary significantly. Unlike distance-based measures, cosine similarity depends on the orientation rather than the magnitude of the feature vectors. This makes it insensitive to changes in feature magnitude caused by variations in lighting, scale, and image clarity. Experimental results confirmed that cosine similarity consistently outperformed the other metrics, achieving a maximum accuracy of 99.7%, particularly when comparing low-resolution images derived from identity documents with high-resolution live captures.

Neural Network Structures of CNNHRB and CNNLRB

The CNNHRB (high-resolution branch) and CNNLRB (low-resolution branch) neural networks comprise four convolutional blocks, each described as follows.

Convolution 1 (Conv1)

The primary difference between CNNHRB and CNNLRB lies in this stage, where CNNLRB incorporates a DRRN (deep recursive residual network) to enhance the resolution of the facial images before further processing. Conv1 consists of three convolutional layers, each with 64 filters, and employs the PReLU (parametric ReLU) activation function.

Convolution 2 (Conv2)

This block comprises five convolutional layers, each with 128 filters, and utilizes the PReLU activation function.

Convolution 3 (Conv3)

Conv3 includes nine convolutional layers, each with 256 filters, and employs the PReLU activation function.

Convolution 4 (Conv4)

This block consists of three convolutional layers, each with 256 filters, and uses the PReLU activation function.
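The block list can be summarized as data and checked with a short shape trace. The sketch rests on two labeled assumptions. First, every convolution uses "same" padding, so only the 2 × 2 max pooling listed in Algorithm 2 changes the spatial size. Second, the CNNLRB backbone receives a 24 × 24 input, since the DRRN enhancement step does not resize the image. The actual padding and stride settings are not stated in this section.

```python
# (block name, number of conv layers, filters per layer), as listed above.
BLOCKS = [("Conv1", 3, 64), ("Conv2", 5, 128), ("Conv3", 9, 256), ("Conv4", 3, 256)]


def trace_shapes(height, width, channels=3):
    """Print the feature-map shape after each block.

    Assumes 'same'-padded convolutions followed by one 2x2 max pooling
    (floor division) per block.
    """
    shape = (height, width, channels)
    for name, n_conv, filters in BLOCKS:
        shape = (shape[0] // 2, shape[1] // 2, filters)
        print(f"{name}: {n_conv} conv layers -> {shape}")
    return shape


final = trace_shapes(24, 24)
print("total conv layers:", sum(n for _, n, _ in BLOCKS))  # 20
```

Under these assumptions, a 24 × 24 input reaches 1 × 1 × 256 after Conv4. The flattened vector fed to the 512-unit dense layer therefore has 256 elements.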

Structure of the deep recursive residual network (DRRN)

The DRRN used to enhance the resolution in CNNLRB is configured as DRRN B1U9, i.e., one recursive block (B = 1) containing nine residual units (U = 9), as illustrated in Figure 10.
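For reference, the original DRRN paper (Tai et al., CVPR 2017) gives the network depth as d = (1 + 2U)B + 1 for B recursive blocks with U residual units each. Each block contributes one entry convolution plus two convolutions per residual unit, and one final reconstruction convolution is added. Because the residual units inside a block share weights, the number of distinct convolution layers is only 3B + 1. The helper below, whose name is hypothetical, evaluates both counts.

```python
def drrn_layer_counts(blocks, units):
    """Return (depth, distinct conv layers) for a DRRN with B blocks of U residual units.

    depth    = (1 + 2U)B + 1  (entry conv + 2 convs per unit in each block, + reconstruction)
    distinct = 3B + 1         (residual-unit weights are shared within each block)
    """
    depth = (1 + 2 * units) * blocks + 1
    distinct = 3 * blocks + 1
    return depth, distinct


print(drrn_layer_counts(1, 9))   # (20, 4) -> DRRN B1U9, the configuration used here
print(drrn_layer_counts(1, 25))  # (52, 4) -> DRRN B1U25
```

The B1U9 configuration is thus 20 layers deep while holding the weights of only four distinct convolution layers.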

The DRRN architecture is designed to recursively refine low-quality images by passing them through multiple layers that capture and enhance fine details. By combining these convolutional blocks with the DRRN, the CNNHRB and CNNLRB networks are structured to process facial images of varying resolutions for facial recognition. The procedural workflow and optimization strategy for these networks are described in Algorithm 2.

| Algorithm 2. Shared convolutional blocks in CNNHRB and CNNLRB. |

Function block_conv1x

Purpose: Implements the first convolutional block (Conv1).

Input: input_tensor (Object)

Process:

1. Apply three convolutional layers with 64 filters each.
2. Apply the PReLU activation function after each convolutional layer.
3. Apply dropout with a rate of 0.25 after the first and second convolutional layers.
4. Apply batch normalization after the convolutional layers.
5. Apply max pooling with a pool size of 2 × 2.

Output: Returns the processed convolution object.

Function block_conv2x

Purpose: Implements the second convolutional block (Conv2).

Input: input_tensor (Object)

Process:

1. Apply five convolutional layers with 128 filters each.
2. Apply the PReLU activation function after each convolutional layer.
3. Apply dropout with a rate of 0.25 after the second and fourth convolutional layers.
4. Apply batch normalization after the convolutional layers.
5. Apply max pooling with a pool size of 2 × 2.

Output: Returns the processed convolution object.

Function block_conv3x

Purpose: Implements the third convolutional block (Conv3).

Input: input_tensor (Object)

Process:

1. Apply nine convolutional layers with 256 filters each.
2. Apply the PReLU activation function after each convolutional layer.
3. Apply dropout with a rate of 0.25 after every third convolutional layer.
4. Apply batch normalization after the convolutional layers.
5. Apply max pooling with a pool size of 2 × 2.

Output: Returns the processed convolution object.

Function block_conv4x

Purpose: Implements the fourth convolutional block (Conv4).

Input: input_tensor (Object)

Process:

1. Apply three convolutional layers with 256 filters each.
2. Apply the PReLU activation function after each convolutional layer.
3. Apply dropout with a rate of 0.25 after the first and second convolutional layers.
4. Apply batch normalization after the convolutional layers.
5. Apply max pooling with a pool size of 2 × 2.

Output: Returns the processed convolution object.

Function cnnhrlr_branch

Purpose: Implements the CNNHRB and CNNLRB branches.

Input: input_tensor (Object)

Process:

1. Call block_conv1x with input_tensor as the argument.
2. Call block_conv2x with the output from step 1 as the argument.
3. Call block_conv3x with the output from step 2 as the argument.
4. Call block_conv4x with the output from step 3 as the argument.
5. Flatten the output from step 4.
6. Add a fully connected (dense) layer with 512 units.
7. Apply the softmax activation function to the output of the dense layer.

Output: Returns the final output vector of size 512.

|
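The layer ordering of Algorithm 2 can be written out as a plain list of layer descriptors. This makes the dropout placement explicit and easy to check. The sketch is framework-free, and the descriptor strings are hypothetical. It fixes two points the algorithm leaves open by assumption:

- batch normalization is applied once per block, after the last convolution;
- "after every third convolutional layer" in Conv3 means after layers 3, 6, and 9.

```python
# (function name, conv layers, filters, 1-based conv indices followed by dropout)
BLOCK_SPECS = [
    ("block_conv1x", 3, 64, {1, 2}),
    ("block_conv2x", 5, 128, {2, 4}),
    ("block_conv3x", 9, 256, {3, 6, 9}),
    ("block_conv4x", 3, 256, {1, 2}),
]


def build_block(n_conv, filters, dropout_after, rate=0.25):
    """Return the ordered layer descriptors of one convolutional block."""
    layers = []
    for i in range(1, n_conv + 1):
        layers += [f"conv({filters})", "prelu"]
        if i in dropout_after:
            layers.append(f"dropout({rate})")
    layers += ["batch_norm", "max_pool(2x2)"]  # assumed: one BN per block
    return layers


def cnnhrlr_branch():
    """Chain the four blocks, then flatten -> dense(512) -> softmax."""
    layers = []
    for _, n_conv, filters, dropout_after in BLOCK_SPECS:
        layers += build_block(n_conv, filters, dropout_after)
    layers += ["flatten", "dense(512)", "softmax"]
    return layers


spec = cnnhrlr_branch()
print(sum(name.startswith("conv") for name in spec), spec.count("dropout(0.25)"))  # 20 9
```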

Development of the DRRN Sharpness Enhancement Network

The deep recursive residual network (DRRN) plays a crucial role in enhancing the sharpness of low-resolution face images. It improves their clarity before they are passed to the convolutional neural network low-resolution branch (CNNLRB) for subsequent processing. The procedure is detailed in Algorithm 3.

| Algorithm 3. Sharpness enhancement neural network (DRRN). |

Function ConvolutionLayer

Input parameters:

- input_tensor: an object representing the input tensor.
- filters: an integer specifying the number of filters (128 for intermediate layers, 1 for the final layer).

Output: Returns the resulting convolution object.

Function ResidualUnit

Input parameters:

- input_tensor: an object representing the input tensor.

Output: Returns the resulting convolution object.

Function RecursiveBlock

Input parameters:

- input_tensor: an object representing the input tensor.
- r_unit: an integer specifying the number of residual units.

Process:

1. Initialize the tensor as input_tensor.
2. For each of the r_unit residual units, call ResidualUnit with the current tensor and update the tensor with the resulting convolution object.

Output: Returns the resulting convolution object.

Function drrn_branch

Input parameters:

- input_tensor: an object representing the input tensor.
- r_unit: an integer specifying the number of residual units.
- r_block: an integer specifying the number of recursive blocks.

Process:

1. Initialize the tensor as input_tensor.
2. For each of the r_block recursive blocks, call RecursiveBlock with the current tensor and r_unit, and update the tensor with the resulting convolution object.
3. Apply a fully connected layer to the resulting tensor.

Output: Returns the final tensor object.

Function cos_sim_model

Input parameters:

- class_sample: the number of sample classes.
- model_type: the type of neural network (1 for CNNLRB without enhancement, 2 for CNNLRB with DRRN, 3 for CNNLRB with VDSR).

Process:

1. Depending on model_type, select the corresponding CNNLRB model.
2. Use the model to compute the output vectors for each sample in class_sample.
3. Calculate the cosine similarity between the resulting vectors.

Output: Returns the cosine similarity values.

|
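The recursion in Algorithm 3 can be illustrated with a deliberately tiny, one-dimensional stand-in. Scalar-channel 3-tap convolutions replace the 3 × 3, 128-filter layers, and all weights are hypothetical. The sketch does reflect the DRRN structure described earlier:

- each recursive block has its own entry convolution;
- its residual units reuse one shared pair of convolution weights and add back the block’s entry output;
- a final reconstruction convolution is added to the input (global residual).

Algorithm 3 describes this last step as a fully connected layer. The sketch is not the authors’ implementation.

```python
def conv1d(x, w, b=0.0):
    """3-tap 'same' convolution with zero padding (stand-in for a 3x3 conv layer)."""
    padded = [0.0] + list(x) + [0.0]
    return [w[0] * padded[i] + w[1] * padded[i + 1] + w[2] * padded[i + 2] + b
            for i in range(len(x))]


def relu(x):
    return [max(0.0, v) for v in x]


def recursive_block(x, entry_w, shared_w1, shared_w2, r_unit):
    """One recursive block: entry conv, then r_unit residual units sharing weights."""
    h0 = conv1d(relu(x), entry_w)
    h = h0
    for _ in range(r_unit):
        # Residual unit: two pre-activated convs reusing the SAME weights each time,
        # plus the identity path from the block's entry output h0.
        r = conv1d(relu(conv1d(relu(h), shared_w1)), shared_w2)
        h = [ri + h0i for ri, h0i in zip(r, h0)]
    return h


def drrn_branch(x, blocks, recon_w, r_unit):
    """Stack recursive blocks, reconstruct, and add the input back (global residual)."""
    h = x
    for entry_w, w1, w2 in blocks:
        h = recursive_block(h, entry_w, w1, w2, r_unit)
    residual = conv1d(relu(h), recon_w)
    return [xi + ri for xi, ri in zip(x, residual)]


signal = [0.1, 0.4, 0.9, 0.4, 0.1]
block = ([0.2, 0.6, 0.2], [0.0, 0.1, 0.0], [0.0, -0.1, 0.0])  # hypothetical weights
out = drrn_branch(signal, [block], recon_w=[0.0, 0.5, 0.0], r_unit=9)  # B1U9
print(len(out) == len(signal))  # True: the enhanced output keeps the input size
```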

Development of Utility Functions

Utility functions play a crucial role in preparing data and setting appropriate parameters for the processing and training of neural networks. A detailed explanation of the development of these utility functions is provided in Algorithm 4.

| Algorithm 4. Utility Functions |

The get_class_sample function creates the datasets for training and validating the neural network according to the developed neural network structure. It takes the parameters sample_resolution and batch_size, which define the resolution type of the sample images and the batch size for processing by the neural network.

Inputs:

- sample_resolution: the resolution type of the sample images (e.g., ‘high’, ‘low’).
- batch_size: the batch size for neural network processing.

Outputs:

- class_samples: number of face sample groups.
- train_samples: training dataset.
- val_samples: validation dataset.
- train_dir: directory path for training data.
- val_dir: directory path for validation data.
- img_height: image height.
- img_width: image width.

Process:

1. Initialize directories and parameters.
2. Load and split the data.
3. Prepare batches: create batches of train_samples and val_samples based on batch_size.
4. Count samples: count the number of class samples, training samples, and validation samples.
5. Return class_samples, train_samples, val_samples, train_dir, val_dir, img_height, and img_width.

|
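The following is a minimal standard-library sketch of get_class_sample. It assumes a layout in which root/hr-train, root/hr-val, root/lr-train, and root/lr-val each contain one sub-directory per individual, holding that person’s images. The folder prefixes follow the folder names given earlier. The image sizes are assumptions: 24 × 24 for the low-resolution branch, as stated in the text, and a placeholder 250 × 250 for the high-resolution branch. The batches are lists of (path, class) pairs rather than framework data loaders.

```python
import os

# Assumed sizes: 24 x 24 is stated for the LR branch; the HR value is a placeholder.
IMAGE_SIZES = {"low": (24, 24), "high": (250, 250)}
FOLDER_PREFIX = {"low": "lr", "high": "hr"}


def _list_images(split_dir):
    """Return sorted (path, class_name) pairs for files under split_dir/<class>/."""
    pairs = []
    for cls in sorted(os.listdir(split_dir)):
        cls_dir = os.path.join(split_dir, cls)
        if os.path.isdir(cls_dir):
            pairs += [(os.path.join(cls_dir, f), cls) for f in sorted(os.listdir(cls_dir))]
    return pairs


def _make_batches(items, batch_size):
    """Chunk a list into consecutive batches; the last batch may be shorter."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


def get_class_sample(root, sample_resolution, batch_size):
    """Hypothetical stdlib version of the utility described in Algorithm 4."""
    prefix = FOLDER_PREFIX[sample_resolution]
    train_dir = os.path.join(root, f"{prefix}-train")
    val_dir = os.path.join(root, f"{prefix}-val")
    train_items = _list_images(train_dir)
    val_items = _list_images(val_dir)
    class_samples = len({cls for _, cls in train_items})  # one class per individual
    img_height, img_width = IMAGE_SIZES[sample_resolution]
    train_samples = _make_batches(train_items, batch_size)
    val_samples = _make_batches(val_items, batch_size)
    return (class_samples, train_samples, val_samples,
            train_dir, val_dir, img_height, img_width)
```

With the batch size of 64 used in the experiments, get_class_sample(root, "low", 64) would return the 70 individuals as classes together with batched file lists from lr-train and lr-val.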

3.2. Training the Neural Network

The developed neural networks were then trained. Training used facial images of 70 individuals, totaling 23,341 images per network for CNNHRB and CNNLRB. The model summaries, including the parameter counts of CNNHRB and CNNLRB, were recorded during training. The approximate number of parameters for each network is shown in the following table.

In this study, we trained two types of neural networks, a high-resolution network (CNNHRB) and a low-resolution network (CNNLRB), and compared the feature vectors they produce using cosine similarity. The overall methodology is outlined in Table 4. To begin training, we used a dataset of classified images, which allowed the networks to learn the features and patterns present in the images. After training, we obtained the two networks: CNNHRB for high-resolution images and CNNLRB for low-resolution images. Images with similar characteristics were then compared using these two networks, with the similarity computed as

$$S(X_{HR}, X_{LR}) = \frac{\mathbf{v}_{HR} \cdot \mathbf{v}_{LR}}{\lVert \mathbf{v}_{HR} \rVert \, \lVert \mathbf{v}_{LR} \rVert},$$

where $\mathbf{v}_{HR} = \text{CNNHRB}(X_{HR})$ and $\mathbf{v}_{LR} = \text{CNNLRB}(X_{LR})$ are the feature vectors of the high-resolution image $X_{HR}$ and the low-resolution image $X_{LR}$, respectively.

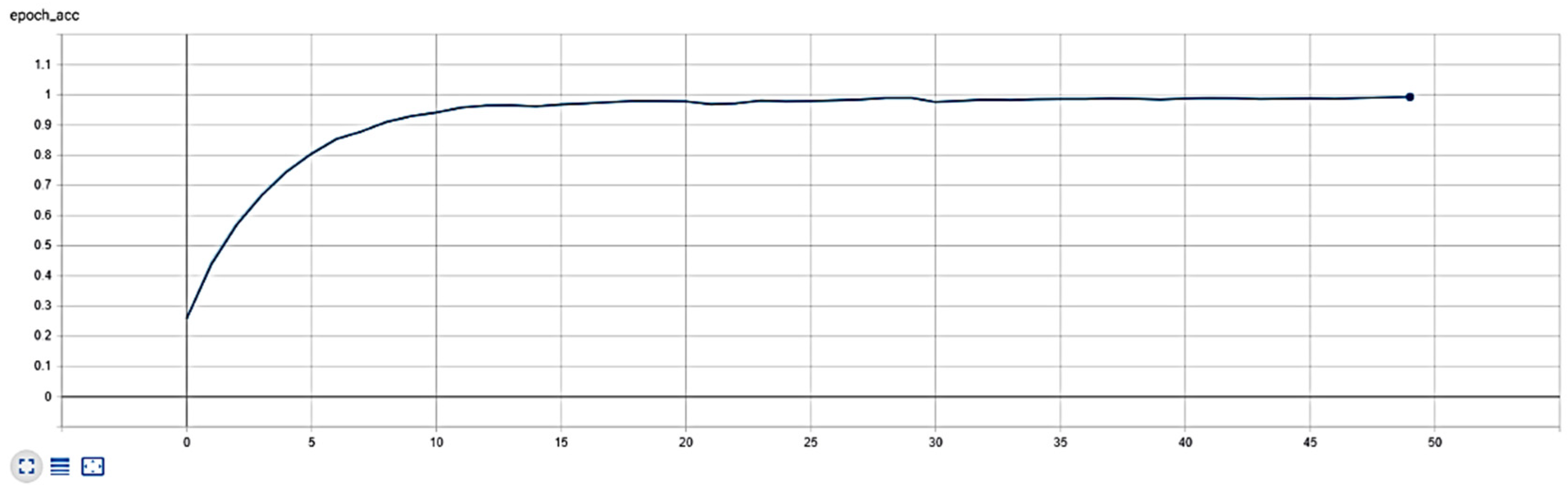

The cosine similarity results obtained after training are shown in Figure 11.

The researchers conducted comprehensive training and performance evaluations on the proposed low-resolution neural network (CNNLRB), which integrates a deep recursive residual network (DRRN) as an enhancement module for image resolution. To systematically assess the effectiveness of enhancement techniques, the DRRN-based CNNLRB was benchmarked against two alternative configurations: a baseline CNNLRB without enhancement, and a CNNLRB incorporating a very deep super-resolution (VDSR) network. Each variant was trained on a labeled facial image dataset, enabling the networks to learn the discriminative features and spatial patterns necessary for accurate identity verification. The evaluation focused on multiple performance metrics, including classification accuracy, image reconstruction fidelity, and computational efficiency. Furthermore, in alignment with statistical machine learning paradigms, the models were evaluated not only based on predictive performance but also on their robustness to input variability and their ability to generalize across image degradations. To explore model reliability under practical uncertainty, inference results were analyzed using uncertainty-aware techniques such as prediction confidence and the consistency of cosine similarity scores across test samples. Among the three models, the DRRN-enhanced CNNLRB demonstrated superior performance across all benchmarks. Notably, it maintained higher fidelity in reconstructed facial features and exhibited greater stability in its similarity predictions—qualities that are critical in biometric systems involving degraded or low-resolution imagery. These findings underscore the importance of integrating advanced neural architectures with statistical robustness principles. 
In particular, they highlight the potential of DRRN-based networks to significantly improve the reliability and accuracy of low-resolution facial image recognition systems, especially when deployed in real-world applications involving noisy or uncertain visual inputs.

The proposed framework integrates stringent verification procedures aligned with widely recognized standards, including the Identity Assurance Levels (IAL) and Authenticator Assurance Levels (AAL) defined in NIST SP 800-63. To support document authenticity and identity accuracy, the system performs the following actions:

- Facial feature comparison using dedicated CNN branches for high- and low-resolution images.
- Super-resolution enhancement using SRCNN, VDSR, and DRRN models to improve the clarity of low-resolution document images.
- Bayesian-inspired uncertainty estimation via Monte Carlo dropout, providing predictive confidence levels alongside verification results.

This layered approach enhances security, supports risk-aware decision-making, and facilitates regulatory compliance in sensitive applications such as financial services and national identity systems.

4. Experimental Results

The experiments used the CASIA-WebFace dataset, a publicly available benchmark commonly used in face recognition research. The dataset includes 494,414 facial images with a resolution of 250 × 250 pixels, covering a wide demographic range. Its total size is approximately 3.8 GB, making it suitable for training deep learning models that generalize well across diverse populations. The dataset is available on Kaggle under the name CASIA-WebFace.

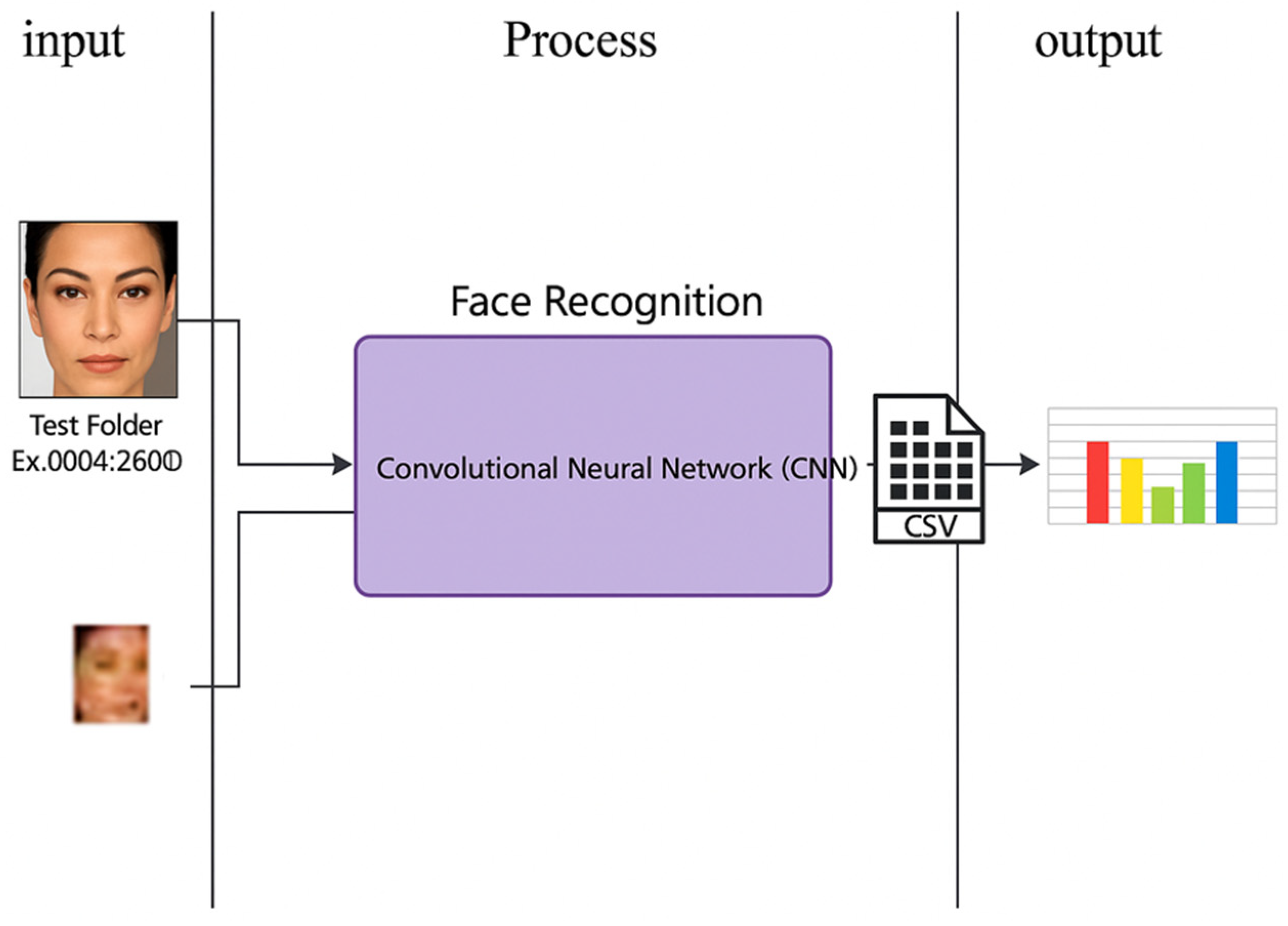

This study focuses on the enhancement and development of neural networks for comparing high-resolution facial images with low-resolution facial images. The comparison simulates matching a person’s appearance against photographic evidence in the identity verification procedures of electronic know your customer (e-KYC) mechanisms. In this context, the appearance is represented by high-resolution facial images, whereas the photographic evidence is represented by low-resolution facial images. For the evaluation, one low-resolution facial image from each of the 70 sample subjects was selected to represent the photographic evidence. The facial recognition process was then tested using the developed neural networks on a dataset of 2917 facial images from these 70 subjects. The hypothesis tested concerned the accuracy of facial comparison and how it varies with factors such as skin tone. The evaluation aimed to determine the effectiveness and accuracy of the developed neural networks in recognizing and matching facial images under varying resolutions and skin tones, thereby assessing their potential for real-world e-KYC processes. The testing procedure is illustrated in Figure 12.

All experiments were conducted using Python 3.9 and TensorFlow 2.11 on a workstation equipped with an NVIDIA RTX 3090 GPU (NVIDIA, Santa Clara, CA, USA) and running Ubuntu 20.04 LTS. The deep learning models were trained using a batch size of 64 and an Adam optimizer with a learning rate of 0.0001. This configuration ensured efficient training performance while maintaining model accuracy. The training process for the proposed dual-branch CNN architecture took approximately 3.5 h for 100 epochs on the aforementioned hardware setup. This training time includes both the high-resolution and low-resolution image branches and reflects the full end-to-end learning process from feature extraction to similarity computation.

In the facial recognition testing procedure described above, the levels of similarity or accuracy were categorized into three thresholds: 85%, 90%, and 95%. The performance of three different neural networks was compared: a network without any super-resolution enhancement, a network enhanced with very-deep super-resolution (VDSR), and a network enhanced with deep recursive residual network (DRRN). The facial recognition tests were conducted and the results were recorded in CSV files. Each entry in these files included the following attributes: file name, accuracy level, gender code, skin tone code, and test result code. The collected data from these tests were then processed to evaluate the accuracy of each neural network.
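The per-group accuracy computation from these CSV files can be sketched with the standard library. The column names (file_name, threshold, gender_code, skin_tone_code, result_code) are hypothetical placeholders. The convention that a result code of "1" marks a correct recognition is also assumed, since the exact coding is defined in the tables rather than here.

```python
import csv
import io
from collections import defaultdict


def accuracy_by_group(csv_text, group_key="skin_tone_code"):
    """Return {(threshold, group): accuracy} from rows of recognition results."""
    hits, totals = defaultdict(int), defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = (row["threshold"], row[group_key])
        totals[key] += 1
        hits[key] += row["result_code"] == "1"  # assumed: "1" = correct match
    return {key: hits[key] / totals[key] for key in totals}


sample = """file_name,threshold,gender_code,skin_tone_code,result_code
a.jpg,0.85,1,1,1
b.jpg,0.85,2,1,0
c.jpg,0.85,1,2,1
d.jpg,0.90,2,2,1
"""
print(accuracy_by_group(sample))
# {('0.85', '1'): 0.5, ('0.85', '2'): 1.0, ('0.90', '2'): 1.0}
```

Passing group_key="gender_code" gives the per-gender breakdown. For the real files, the text would be read with open(path, newline="") before parsing.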

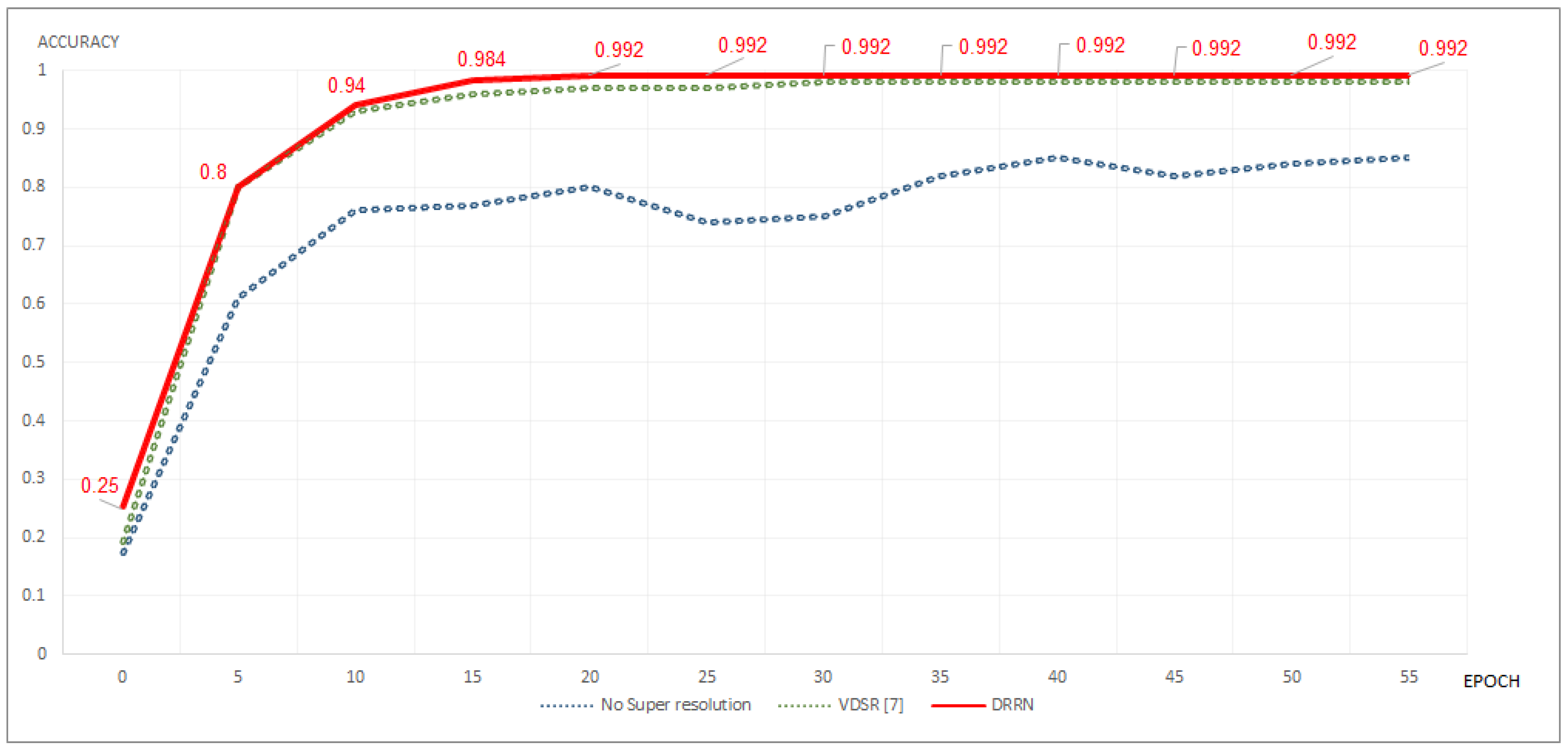

4.1. Comparison of Similarity Between Neural Network Structures

This section details the measurement of similarity among the three neural network structures: the baseline network without super-resolution enhancement, the network enhanced with very-deep super-resolution (VDSR) [8], and the network enhanced with the deep recursive residual network (DRRN). The maximum accuracy rates achieved by these three configurations were 85.6%, 98.8%, and 99.2%, respectively, as illustrated in Figure 13. The evaluation compared the structural similarities and performance metrics of each network type under various conditions to determine the most effective configuration for facial recognition accuracy. The results show substantial accuracy improvements from super-resolution techniques, particularly VDSR and DRRN, highlighting the potential benefits of these methods in practical facial recognition applications.

The graph shows the similarities of the three neural network structures: the baseline network without super-resolution enhancement (dotted blue line), the VDSR-enhanced network (dotted green line), and the DRRN-enhanced network (red line) (Figure 13).

4.2. Results of the Facial Recognition Process

In this section, we present the results of the facial recognition process, particularly within the context of identity verification in an electronic know your customer (e-KYC) procedure. This involves simulating the comparison between a high-resolution image (representing a clear, detailed appearance) and a low-resolution image (representing a blurred photo from identification documents). The raw data from ten experimental trials of the facial recognition process were used to calculate the average similarity or accuracy for each neural network configuration. The neural networks tested included the baseline model without super-resolution enhancement, the model with very-deep super-resolution (VDSR) enhancement, and the model with deep recursive residual network (DRRN) enhancement. The results of these comparisons, detailing the predicted accuracy for each neural network, are presented in Table 5. This analysis aims to identify which neural network configuration offers the highest accuracy in matching high-resolution images with their low-resolution counterparts, thereby enhancing the reliability of the e-KYC process.

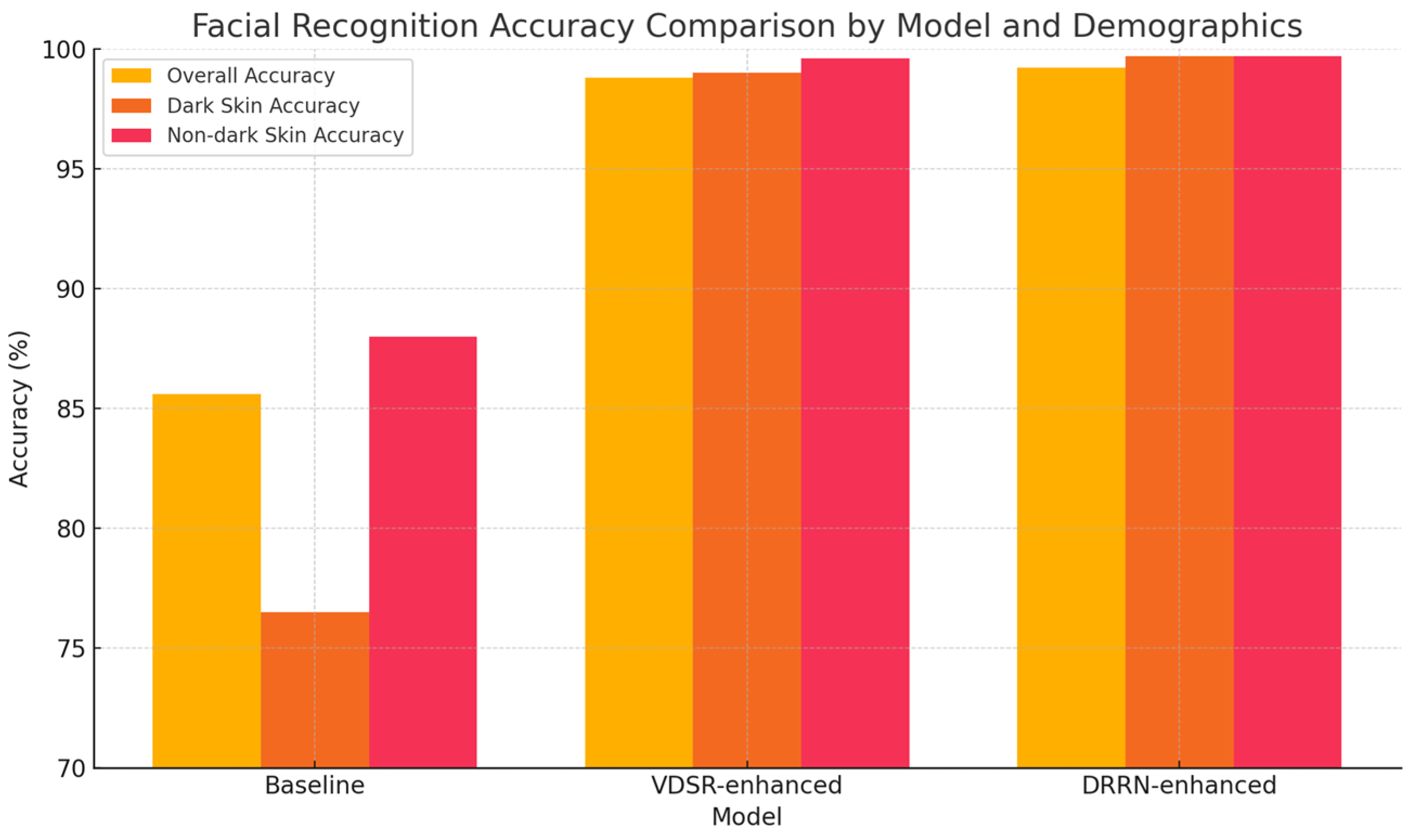

Based on the raw test data from 10 rounds of facial recognition trials, we calculated the average similarity or accuracy rates of each neural network, categorized by the skin tone of the facial samples. The tests were conducted on a sample set of 560 facial images with darker skin tones, comprising 324 images of female subjects and 276 images of male subjects. The comparison of the predictive accuracy of each neural network is detailed in Table 5. In this study, the neural networks were evaluated for their performance in recognizing faces with darker skin tones. The experimental setup involved multiple rounds of testing to ensure the reliability of the results. Each neural network’s accuracy was determined by averaging the outcomes across the ten trials. This approach helps in understanding the efficacy of the models under varying conditions and highlights any discrepancies in performance based on gender within the same skin tone category.

Table 6 presents a comprehensive comparison, showing how each neural network performed in terms of accuracy. This detailed analysis allows us to discern which neural network offers the best performance for facial recognition tasks involving individuals with darker skin tones. The results are instrumental in refining the models and improving their applicability in diverse real-world scenarios, ensuring fairness and accuracy across different demographic groups.

The test set of non-dark skin tone faces consisted of 2357 images, divided into 1248 images of female subjects and 1109 images of male subjects. The results of the comparison of the prediction accuracy of each neural network are shown in Table 6. In this study, neural networks were evaluated for their performance in recognizing faces with non-dark skin tones. The experimental setup included multiple testing rounds to ensure the reliability of the results. The accuracy of each neural network was calculated by averaging the results from all tests. This method allowed us to understand the performance of the models under various conditions and to highlight the differences in performance by gender within the same skin tone group.

Table 7 provides a detailed comparison of how each neural network performed in terms of accuracy. This thorough analysis helps us identify which neural network delivers the best performance for face recognition involving individuals with non-dark skin tones. These results are crucial for refining models and enhancing their applicability in diverse real-world scenarios, ensuring fairness and accuracy across different demographic groups.

Based on the comparative analysis presented in Table 7, the proposed technique achieves higher accuracy than existing studies. We attribute this improvement primarily to the use of a larger and more diverse dataset than those employed in previous research. Following standard machine learning practice, the dataset was divided into three subsets: 70% for training, 15% for validation, and 15% for testing. These proportions were applied consistently to both the high-resolution and low-resolution image branches, and the same data splitting strategy was used for all methods compared in Table 7 to ensure a fair comparison.
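The 70/15/15 protocol can be sketched as follows. This is a minimal illustration, assuming each high-resolution image and its low-resolution counterpart share a sample ID; the function name, the fixed seed, and the toy data are hypothetical. Splitting IDs rather than files keeps each HR/LR pair in the same subset, and reusing the seed reproduces the identical partition for every compared method.

```python
import random


def split_dataset(sample_ids, train_frac=0.70, val_frac=0.15, seed=42):
    """Shuffle sample IDs once and cut them into train/validation/test lists.

    Whatever remains after the train and validation cuts (here 15%) becomes
    the test set, so every ID lands in exactly one subset.
    """
    ids = sorted(sample_ids)             # deterministic starting order
    rng = random.Random(seed)            # fixed seed -> identical split for every method
    rng.shuffle(ids)
    n_train = round(len(ids) * train_frac)
    n_val = round(len(ids) * val_frac)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]
    return train, val, test


# Hypothetical paired branches: one sample ID indexes both the high-resolution
# and the low-resolution version of the same face image.
hr_images = {i: f"hr_{i}.png" for i in range(100)}
lr_images = {i: f"lr_{i}.png" for i in range(100)}

train_ids, val_ids, test_ids = split_dataset(hr_images.keys())
train_hr = [hr_images[i] for i in train_ids]
train_lr = [lr_images[i] for i in train_ids]   # same IDs -> aligned HR/LR pairs
print(len(train_ids), len(val_ids), len(test_ids))  # 70 15 15
```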

Our proposed model outperforms the other works, achieving an accuracy of 99.7%, which highlights the effectiveness and robustness of our approach. The comprehensive dataset used in our research improves the model's ability to generalize and helps it perform well across the different conditions and variations present in the data.

Table 8 compares the proposed DRRN model with recent state-of-the-art methods for image classification. Despite using a smaller input size (24 × 24), DRRN achieves the highest accuracy at 99.3%, outperforming all other models. This highlights its efficiency and superior performance, especially in low-resolution scenarios.

Figure 14 presents a comparative statistical analysis of the facial recognition accuracy achieved by three neural network configurations (baseline, VDSR-enhanced, and DRRN-enhanced) tested under varying image resolutions and skin tone categories. The DRRN-enhanced network consistently achieves the highest accuracy, particularly in low-resolution scenarios (24 × 24 pixels), reaching up to 99.7% even for dark-skinned individuals. These results illustrate key principles of statistical machine learning, including data-driven model evaluation, robustness to demographic and input variability, and high-resolution reconstruction from degraded inputs. By reinforcing the reliability and generalizability of neural network predictions under real-world constraints, the findings also support the development of uncertainty-aware biometric systems, in line with the objectives of Bayesian methods in imaging applications.

To assess the impact of super-resolution preprocessing on model performance, we conducted an ablation study comparing identity classification accuracy with and without the super-resolution module. As illustrated in Figure 15, the inclusion of the SRCNN-based enhancement step improved the classification accuracy from 97.2% to 99.3%. This result demonstrates the practical benefit of integrating lightweight image enhancement techniques into e-KYC systems, especially in environments where image resolution and quality are limited. The improvement supports the hypothesis that super-resolution can compensate for degraded input quality and enhance feature extraction in downstream tasks.

In addition to standard evaluation metrics such as accuracy, precision, recall, and F1-score, we incorporated the area under the ROC curve (AUC) to provide a more comprehensive assessment of classification performance. The AUC metric is particularly important in identity verification tasks, as it reflects the model's ability to distinguish between positive and negative classes across varying decision thresholds. As presented in Table 9, the proposed CNN combined with SRCNN-based super-resolution achieved the highest AUC of 99.5%, outperforming other state-of-the-art deep learning models, including FaceNet (98.4%), ResNet50 (97.8%), and MobileNetV2 (97.0%). This indicates superior robustness and discriminative capability, especially in handling the low-quality facial images typical of e-KYC scenarios. Moreover, while achieving the best AUC and overall classification performance, the proposed model maintains a low inference time and a small model size, making it well suited to deployment in resource-constrained environments. These results indicate that integrating super-resolution not only enhances feature quality but also improves the model's ability to generalize across uncertain or degraded inputs, an essential requirement for secure and reliable digital identity verification systems.
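AUC can be read as the probability that a randomly chosen genuine pair scores higher than a randomly chosen impostor pair. The pure-Python sketch below computes it directly from that definition (the Mann-Whitney formulation), counting ties as half; the scores are toy values, not outputs of the models in Table 9.

```python
def roc_auc(labels, scores):
    """AUC = P(score of a random positive > score of a random negative).

    Ties count as half a win. This O(P*N) pairwise loop is fine for a sketch;
    a rank-based implementation scales better on large evaluation sets.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy genuine (1) / impostor (0) similarity scores, for illustration only.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.95, 0.80, 0.40, 0.60, 0.30, 0.10]
print(roc_auc(labels, scores))  # 8/9, about 0.889
```

Because AUC depends only on the ordering of scores, it summarizes performance over every possible decision threshold rather than at a single operating point.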

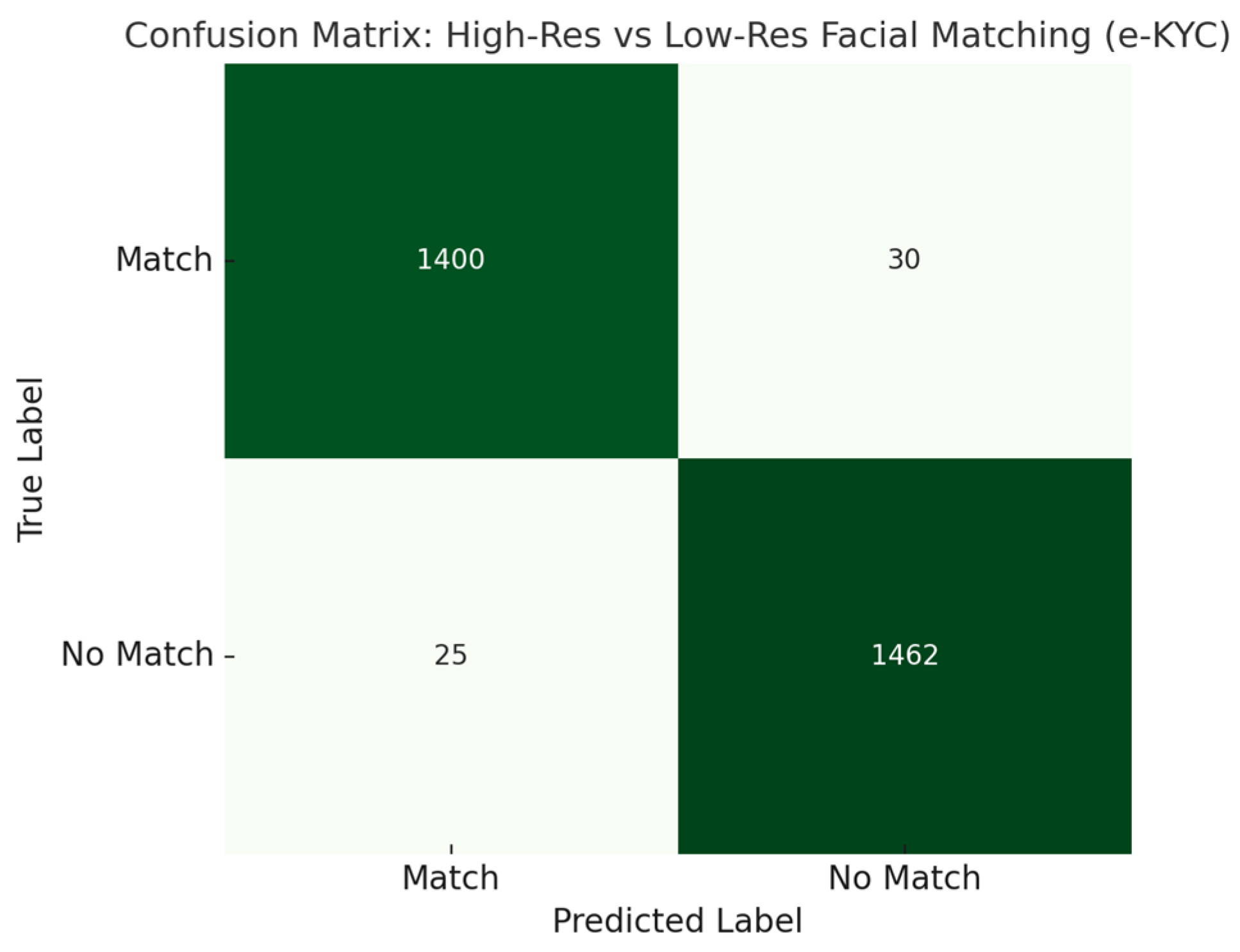

Figure 16 presents the confusion matrix illustrating the performance of the proposed neural network in the task of high-resolution vs. low-resolution facial matching within the context of electronic know your customer (e-KYC) procedures. The evaluation was conducted on a dataset comprising 2917 facial images collected from 70 subjects, where high-resolution images represent registered identity data and low-resolution images simulate photographic evidence typically captured in real-world verification scenarios. The matrix indicates that the model correctly identified 1400 true positive cases, where the low-resolution facial image was accurately matched to its corresponding high-resolution counterpart. Additionally, the model achieved 1462 true negatives, successfully distinguishing non-matching pairs.
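The metrics reported throughout this section follow directly from the four confusion-matrix counts. Figure 16 reports the true positives and true negatives; precision, recall, and F1 additionally require the false-positive and false-negative counts. The helper below is a hypothetical sketch, and the counts in the usage line are toy values rather than the study's results.

```python
def confusion_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall (sensitivity), specificity and F1 from raw counts."""
    total = tp + tn + fp + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    # F1 = 2PR / (P + R), written in the equivalent count form 2TP / (2TP + FP + FN).
    f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


# Toy counts for illustration only (not the values behind Figure 16).
m = confusion_metrics(tp=90, tn=85, fp=10, fn=15)
print(m["accuracy"], m["precision"])  # 0.875 0.9
```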

The

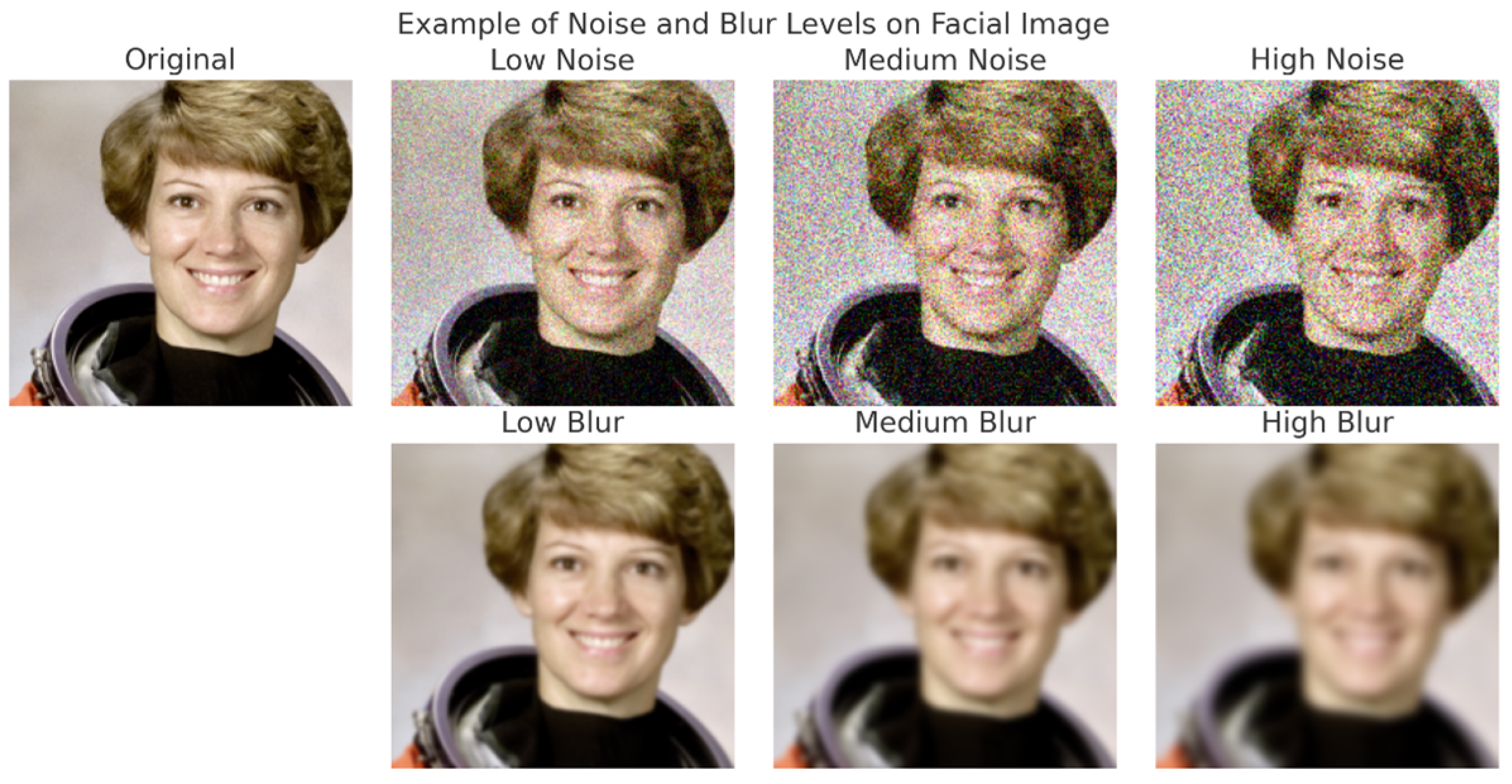

Figure 17 demonstrates how varying levels of noise and blur can degrade facial image quality. The top row begins with the original, undistorted image, followed by three versions affected by increasing levels of noise ranging from mild graininess to severe pixel disruption. The bottom row shows the same progression, but for blur: starting with a slightly softened version, then advancing to moderate and high blur levels where facial details become progressively harder to distinguish. This visualization underscores the impact of common image degradations on visual clarity and potentially on recognition performance. To address concerns regarding the model’s resilience to degraded image quality, a comprehensive robustness evaluation was conducted on the proposed CNN + SRCNN framework. This evaluation simulates real-world scenarios where facial images in e-KYC processes may be affected by varying levels of Gaussian noise and blur, which are common due to inconsistent capture environments and device limitations. The model was tested across different conditions, including normal images and images augmented with low, medium, and high levels of noise and blur. As presented in

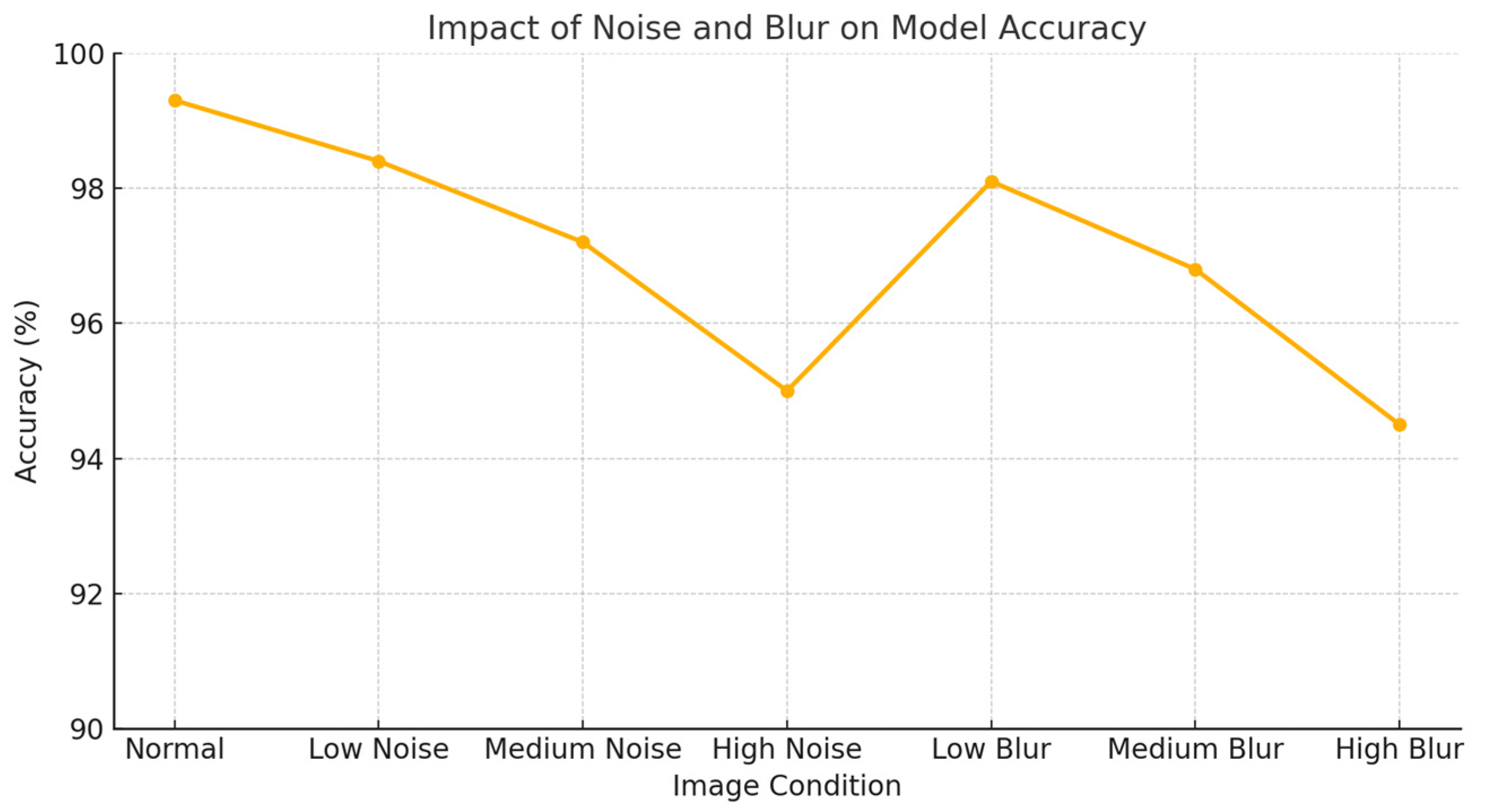

Table 10, the proposed method demonstrates strong robustness under mild-to-moderate image degradation. The accuracy decreased from 99.3% on normal images to 98.4% with low noise, and further dropped to 97.2% and 95.0% under medium and high noise conditions, respectively. A similar trend was observed with blur, where the accuracy reduced to 98.1%, 96.8%, and 94.5% as blur severity increased. Despite these reductions, the model maintained high AUC values, consistently above 94%, indicating reliable discriminatory capability even under challenging conditions. Furthermore, precision, recall, and F1-score remained within acceptable ranges, reflecting balanced performance in handling both false positives and false negatives. These results highlight the effectiveness of incorporating super-resolution techniques, which help recover critical facial features from degraded images, and Bayesian-inspired uncertainty estimation, which allows the system to flag low-confidence predictions. This dual approach enhances both the reliability and safety of identity verification in e-KYC applications. While the model performs well under typical and moderately degraded conditions, performance under severe noise and blur still shows noticeable declines. This suggests opportunities for future work, such as integrating advanced denoising algorithms or adaptive image enhancement methods to further improve the robustness. In this paper, the proposed framework proves to be resilient and reliable across a range of image quality scenarios, making it highly suitable for deployment in practical environments where image imperfections are inevitable.
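The degradation protocol can be illustrated with a NumPy sketch. The noise and blur strengths below (`NOISE_LEVELS`, `BLUR_LEVELS`) are assumed values chosen for illustration, since the exact parameters behind the low, medium, and high settings are not given here, and the helper names are hypothetical. Images are assumed to be grayscale with intensities in [0, 1].

```python
import numpy as np

# Assumed degradation strengths (not the study's exact settings).
NOISE_LEVELS = {"low": 0.02, "medium": 0.05, "high": 0.10}   # noise std, intensity units
BLUR_LEVELS = {"low": 0.5, "medium": 1.0, "high": 2.0}        # Gaussian sigma, pixels


def add_gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise and clip back to the valid [0, 1] range."""
    return np.clip(img + rng.normal(0.0, sigma, size=img.shape), 0.0, 1.0)


def gaussian_kernel1d(sigma):
    """Normalized 1-D Gaussian kernel truncated at roughly 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D image with edge padding (output keeps its shape)."""
    k = gaussian_kernel1d(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    # Filter every row, then every column; 'valid' mode trims the padding off again.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


rng = np.random.default_rng(0)
face = rng.random((24, 24))   # stand-in for a normalized 24 x 24 face crop
variants = {f"noise_{name}": add_gaussian_noise(face, s, rng) for name, s in NOISE_LEVELS.items()}
variants.update({f"blur_{name}": gaussian_blur(face, s) for name, s in BLUR_LEVELS.items()})
```

Each entry of `variants` would then be passed to the trained classifier to obtain one accuracy figure per degradation condition.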

Figure 18 illustrates the impact of varying levels of Gaussian noise and blur on the model's accuracy in facial image-based identity verification. Under normal conditions, the proposed CNN + SRCNN framework achieves a baseline accuracy of 99.3%, exceeding the thresholds typically expected of high-assurance identity verification systems. As image quality degrades due to noise or blur, accuracy decreases gradually; however, even under medium degradation, the model maintains accuracy above 96%, in line with the operational benchmarks commonly referenced for digital identity frameworks such as Identity Assurance Level 2 (IAL-2) and Authenticator Assurance Level 2 (AAL-2), defined in NIST SP 800-63. These guidelines emphasize not only high accuracy but also reliable authentication under varying real-world conditions. Under high noise and high blur, accuracy falls to 95.0% and 94.5%, respectively; these values remain acceptable for many practical e-KYC applications, particularly where additional verification layers (e.g., document checks or multi-factor authentication) complement biometric verification. The results underscore the effectiveness of super-resolution preprocessing in mitigating the adverse effects of image degradation. Furthermore, the model's uncertainty estimation mechanism flags low-confidence predictions, supporting the risk management and decision transparency that the IAL/AAL guidelines prioritize. The framework thus remains robust and consistent with these digital identity guidelines, reliably supporting secure and compliant e-KYC operations even in the presence of moderate image imperfections. Future improvements may target extreme degradation scenarios to move toward higher assurance levels (IAL-3/AAL-3), where stricter requirements apply.
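Monte Carlo dropout, the uncertainty mechanism used in this framework, keeps dropout active at inference and averages several stochastic forward passes; how much those passes disagree indicates how confident the model is. The sketch below uses a hypothetical two-layer NumPy classifier with random weights. The dropout rate, the number of passes (30), and the 0.9 confidence threshold are assumptions, not the paper's settings.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)          # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def mc_dropout_predict(x, w1, w2, p_drop=0.2, n_samples=30, rng=None):
    """Average n_samples forward passes with dropout left ON at inference time.

    Returns the mean class probabilities (N, C) and the predictive entropy (N,).
    """
    rng = np.random.default_rng() if rng is None else rng
    passes = []
    for _ in range(n_samples):
        h = np.maximum(x @ w1, 0.0)                # ReLU hidden layer
        keep = rng.random(h.shape) >= p_drop       # fresh Bernoulli keep-mask each pass
        h = h * keep / (1.0 - p_drop)              # inverted-dropout rescaling
        passes.append(softmax(h @ w2))
    probs = np.stack(passes)                       # (T, N, C)
    mean = probs.mean(axis=0)
    entropy = -(mean * np.log(mean + 1e-12)).sum(axis=-1)
    return mean, entropy


def flag_low_confidence(mean_probs, threshold=0.9):
    """True where the averaged top-class probability falls below the threshold."""
    return mean_probs.max(axis=-1) < threshold


# Hypothetical toy classifier: 16-dim features -> 32 hidden units -> 2 classes
# (match / non-match). Weights are random, purely for illustration.
rng = np.random.default_rng(0)
w1 = rng.normal(size=(16, 32))
w2 = rng.normal(size=(32, 2))
x = rng.normal(size=(5, 16))

mean, entropy = mc_dropout_predict(x, w1, w2, rng=rng)
flags = flag_low_confidence(mean)   # flagged cases could, e.g., be sent for manual review
```

Thresholding the averaged top-class probability is only one possible policy; the predictive entropy returned alongside it is an alternative signal that rises as the stochastic passes disagree.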

All experiments were conducted using an NVIDIA RTX 3090 GPU, with model training executed over 100 epochs in approximately 3.5 h. The inference time and model size for various models were recorded to assess their suitability for real-time e-KYC deployment.

As shown in Table 11, the proposed model achieves a favorable trade-off between inference speed and memory footprint, making it well suited to resource-constrained devices in e-KYC applications.
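Inference time and model size of the kind compared in Table 11 can be measured with a small harness like the one below. It is a hypothetical sketch: the parameter count and `toy_predict` are stand-ins, and the size estimate assumes 32-bit floating-point weights stored without compression.

```python
import statistics
import time


def model_size_mib(n_params, bytes_per_param=4):
    """Approximate weight storage in MiB (float32 weights use 4 bytes each)."""
    return n_params * bytes_per_param / (1024 ** 2)


def median_latency_ms(predict_fn, sample, warmup=5, runs=50):
    """Median wall-clock latency of predict_fn(sample), in milliseconds."""
    for _ in range(warmup):                 # exclude one-off start-up costs
        predict_fn(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        predict_fn(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def toy_predict(batch):
    """Hypothetical stand-in for a model's forward pass."""
    return [v * 2.0 for v in batch]


print(round(model_size_mib(1_000_000), 2))   # 3.81
latency = median_latency_ms(toy_predict, list(range(576)))  # 576 = 24 x 24 pixels
```

The median is less sensitive than the mean to occasional scheduling spikes. On a GPU, kernels run asynchronously, so the device must be synchronized (for example with `torch.cuda.synchronize()` in PyTorch) before each timer read.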

The selection of a 24 × 24 pixel resolution for training and processing facial images in this study is the result of both practical considerations and empirical evaluation. This resolution was carefully chosen to balance the challenges posed by real-world low-quality data and the computational efficiency required for deployment in real-time identity verification systems.

In practical e-KYC applications, facial images are often acquired under poor imaging conditions, including scanned identity documents, low-resolution surveillance footage, and mobile devices with limited camera capabilities. An analysis of real-world datasets revealed that most facial images fall within the range of 20 × 20 to 36 × 36 pixels. The chosen resolution of 24 × 24 pixels represents a practical compromise within this range: it retains the essential facial features needed for recognition while keeping computational processing efficient.
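A 24 × 24 input is typically produced by downsampling a larger face crop, and SRCNN-style super-resolution then upsamples it back to the target size before refinement. The NumPy sketch below shows that resizing step. The 112 × 112 source size is hypothetical, and bilinear interpolation stands in for the bicubic interpolation conventionally used with SRCNN, only to keep the sketch short.

```python
import numpy as np


def resize_bilinear(img, out_h, out_w):
    """Bilinear resize of a 2-D array using pixel-centre alignment."""
    in_h, in_w = img.shape
    # Map each output pixel centre back into input coordinates.
    ys = np.clip((np.arange(out_h) + 0.5) * in_h / out_h - 0.5, 0, in_h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * in_w / out_w - 0.5, 0, in_w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None]                 # vertical interpolation weights
    wx = (xs - x0)[None, :]                 # horizontal interpolation weights
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


rng = np.random.default_rng(0)
face = rng.random((112, 112))                    # hypothetical grayscale face crop
lr_face = resize_bilinear(face, 24, 24)          # 24 x 24 low-resolution input
sr_input = resize_bilinear(lr_face, 112, 112)    # upsampled again before SR refinement
```

Every output pixel is a weighted average of at most four neighbouring input pixels, so the downsampled image cannot introduce intensities outside the original range.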

2. Supporting Evidence from Literature

Ouyang et al. [10] demonstrated that facial recognition systems utilizing 24 × 24 pixel images achieved an accuracy of 99.1% when combined with super-resolution techniques. Li et al. [28] also compared lower resolutions (21 × 15 and 16 × 12 pixels) with 24 × 24 pixels and found that the latter produced the most stable and accurate results across multiple test scenarios.

3. Ablation Study Results

An ablation study was conducted to evaluate the impact of input resolution on system performance; the results are summarized in Table 12. While the 32 × 32 pixel resolution produced marginally higher accuracy, it increased computational cost by approximately 35%. The 24 × 24 resolution achieved nearly the same accuracy with a much lower processing burden, making it the more practical choice for real-time applications.

4. Alignment with Standard Benchmarks

The chosen resolution also aligns with commonly used facial recognition benchmarks such as CASIA-WebFace and Labeled Faces in the Wild (LFW). When combined with super-resolution enhancement, this resolution provides sufficient facial detail to support reliable identity verification even under suboptimal input conditions.

The empirical results and supporting literature confirm that a 24 × 24 pixel resolution offers the best trade-off between recognition accuracy, computational efficiency, and real-world applicability. This decision ensures that the proposed identity verification framework remains both technically robust and practically deployable in low-resource and high-volume environments.

5. Dataset Used for Training and Evaluation

The experiments in this study utilized the CASIA-WebFace dataset, a widely recognized benchmark in facial recognition research. The details are as follows.

6. Comparison with State-of-the-Art Methods

The proposed framework achieved the highest accuracy of 99.3%, while also providing uncertainty-aware predictions through Monte Carlo dropout.

Although models like FaceNet performed well in terms of accuracy, they lack built-in uncertainty estimation, which is critical for making transparent and risk-aware decisions in sensitive applications like e-KYC.

Additionally, the proposed model strikes a balance between high accuracy and reasonable inference time, making it suitable for real-time deployment.

As summarized in Table 13, the proposed framework demonstrates significant performance gains over existing methods, owing particularly to its ability to handle low-resolution images effectively and to provide confidence-aware predictions, which enhance both reliability and transparency in identity verification systems.

To evaluate the robustness of the proposed framework, additional quantitative experiments were performed under various image degradation conditions, including noise, blur, low contrast, and compression artifacts. The experimental results are presented in Table 14 below.