SFSIN: A Lightweight Model for Remote Sensing Image Super-Resolution with Strip-like Feature Superpixel Interaction Network

Abstract

1. Introduction

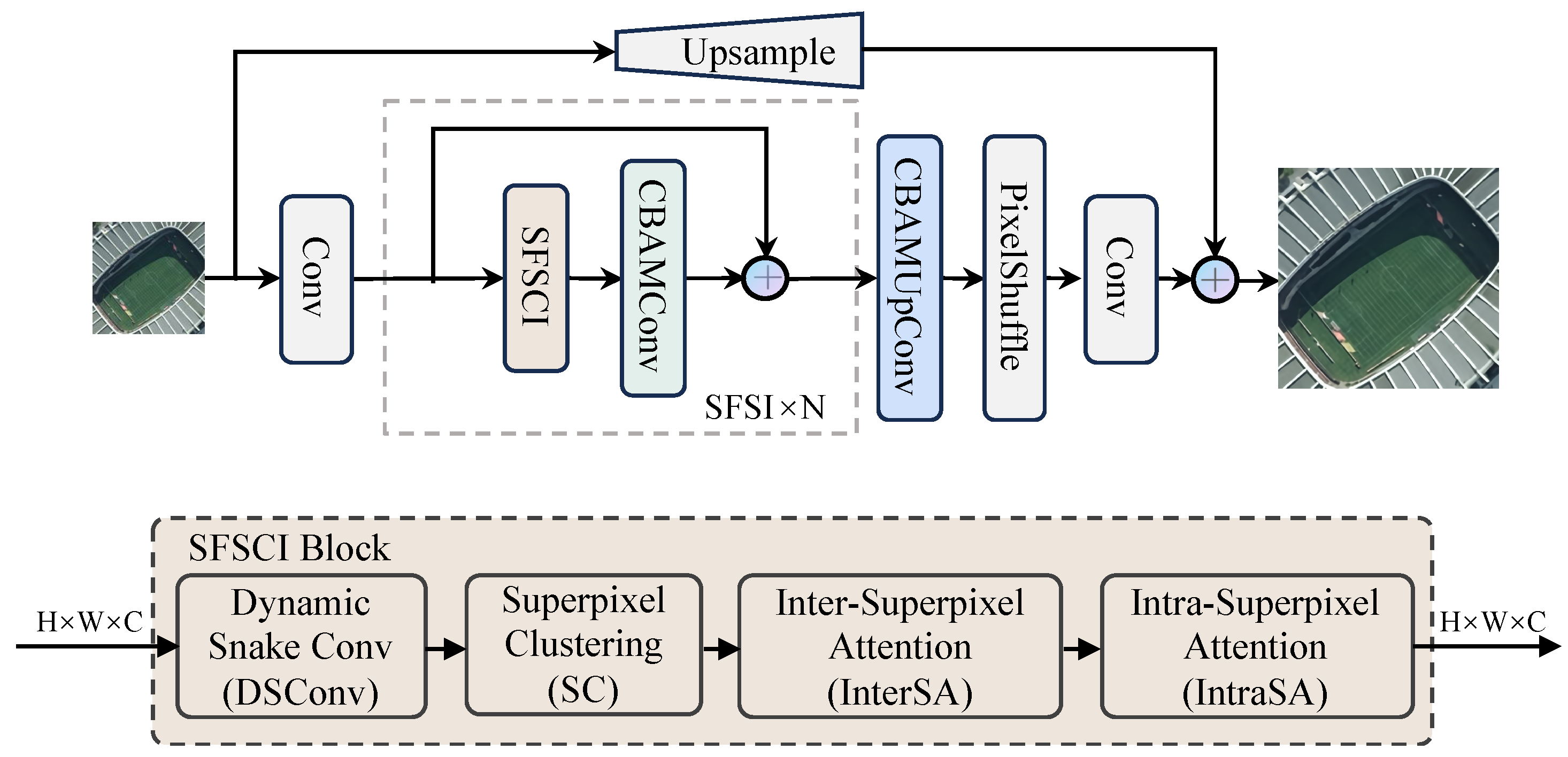

- We propose SFSIN, a lightweight hybrid CNN–Transformer network that extracts strip-like features in remote sensing images (RSIs) using dynamic snake convolution (DSConv) and strip-like feature superpixel interaction (SFSI) modules while keeping the parameter count low.

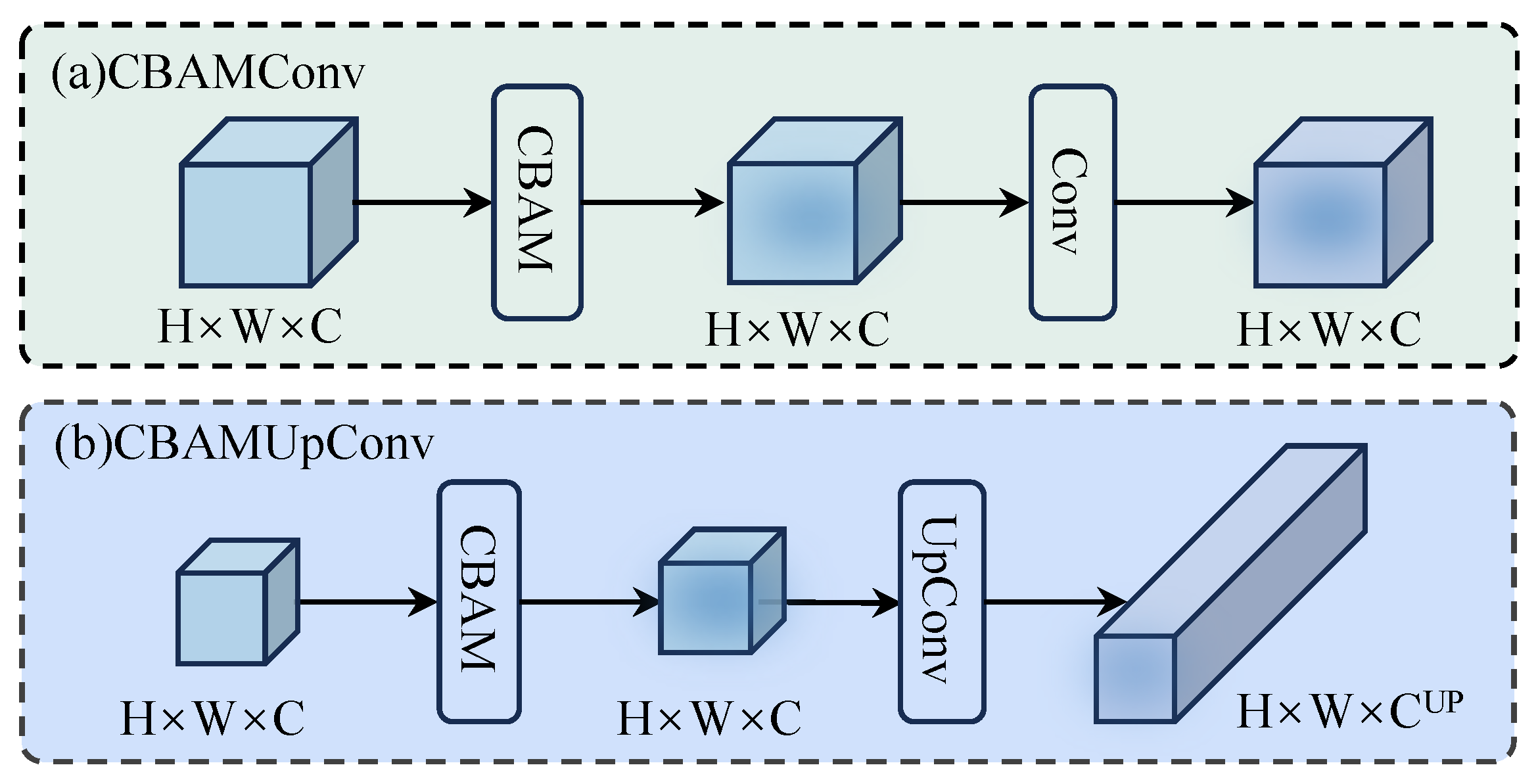

- We introduce the CBAMUpConv module, which integrates upsampling convolution with spatial and channel attention mechanisms, improving reconstruction performance while keeping computational cost low.
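
The idea behind CBAMUpConv, attention applied around an upsampling convolution, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the layer order (a 1×1 channel-expanding convolution, then CBAM-style channel and spatial attention, then pixel-shuffle upsampling), the function names, and all weight shapes are assumptions made for illustration only.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w1, w2):
    """CBAM-style channel attention on a (C, H, W) feature map.

    w1 (C//ratio, C) and w2 (C, C//ratio) form the shared two-layer MLP.
    """
    avg = x.mean(axis=(1, 2))                      # global average pool, (C,)
    mx = x.max(axis=(1, 2))                        # global max pool, (C,)
    mlp = lambda v: w2 @ np.maximum(w1 @ v, 0.0)   # shared MLP with ReLU
    scale = sigmoid(mlp(avg) + mlp(mx))            # per-channel weights, (C,)
    return x * scale[:, None, None]

def spatial_attention(x, k):
    """CBAM-style spatial attention; k is a (2, kh, kw) kernel with odd kh, kw."""
    desc = np.stack([x.mean(axis=0), x.max(axis=0)])   # (2, H, W) descriptors
    kh, kw = k.shape[1:]
    pad = np.pad(desc, ((0, 0), (kh // 2, kh // 2), (kw // 2, kw // 2)))
    h, w = x.shape[1:]
    out = np.zeros((h, w))
    for i in range(kh):                                # zero-padded 2D convolution
        for j in range(kw):
            out += (pad[:, i:i + h, j:j + w] * k[:, i, j][:, None, None]).sum(axis=0)
    return x * sigmoid(out)[None]

def pixel_shuffle(x, r):
    """Rearrange (C*r*r, H, W) into (C, H*r, W*r), as in sub-pixel convolution."""
    c, h, w = x.shape
    c_out = c // (r * r)
    x = x.reshape(c_out, r, r, h, w)
    x = x.transpose(0, 3, 1, 4, 2)                     # (c_out, h, r, w, r)
    return x.reshape(c_out, h * r, w * r)

def cbam_upconv(x, w_expand, w1, w2, k, r):
    """Hypothetical CBAMUpConv: 1x1 conv to C_out*r*r channels, attention, shuffle."""
    y = np.einsum("oc,chw->ohw", w_expand, x)          # 1x1 convolution (assumed)
    y = channel_attention(y, w1, w2)
    y = spatial_attention(y, k)
    return pixel_shuffle(y, r)

# Example: upsample an 8-channel 6x6 feature map to a 3-channel 12x12 output.
rng = np.random.default_rng(0)
features = rng.standard_normal((8, 6, 6))
upsampled = cbam_upconv(
    features,
    w_expand=rng.standard_normal((12, 8)),   # 12 = 3 output channels * 2 * 2
    w1=rng.standard_normal((3, 12)),
    w2=rng.standard_normal((12, 3)),
    k=rng.standard_normal((2, 7, 7)),
    r=2,
)
print(upsampled.shape)  # (3, 12, 12)
```

The attention stages only rescale the features, so the extra parameters in this sketch come from the small shared MLP and the single spatial kernel.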

2. Related Work

2.1. Conventional Methods for SR

2.2. CNN-Based Models for SR

2.3. Transformer-Based Models for SR

2.4. Lightweight Models for SR

3. Methodology

3.1. Architecture

3.2. Strip-like Feature Superpixel Cross and Intra Interaction Module

3.2.1. Dynamic Snake Convolution

3.2.2. Superpixel Clustering

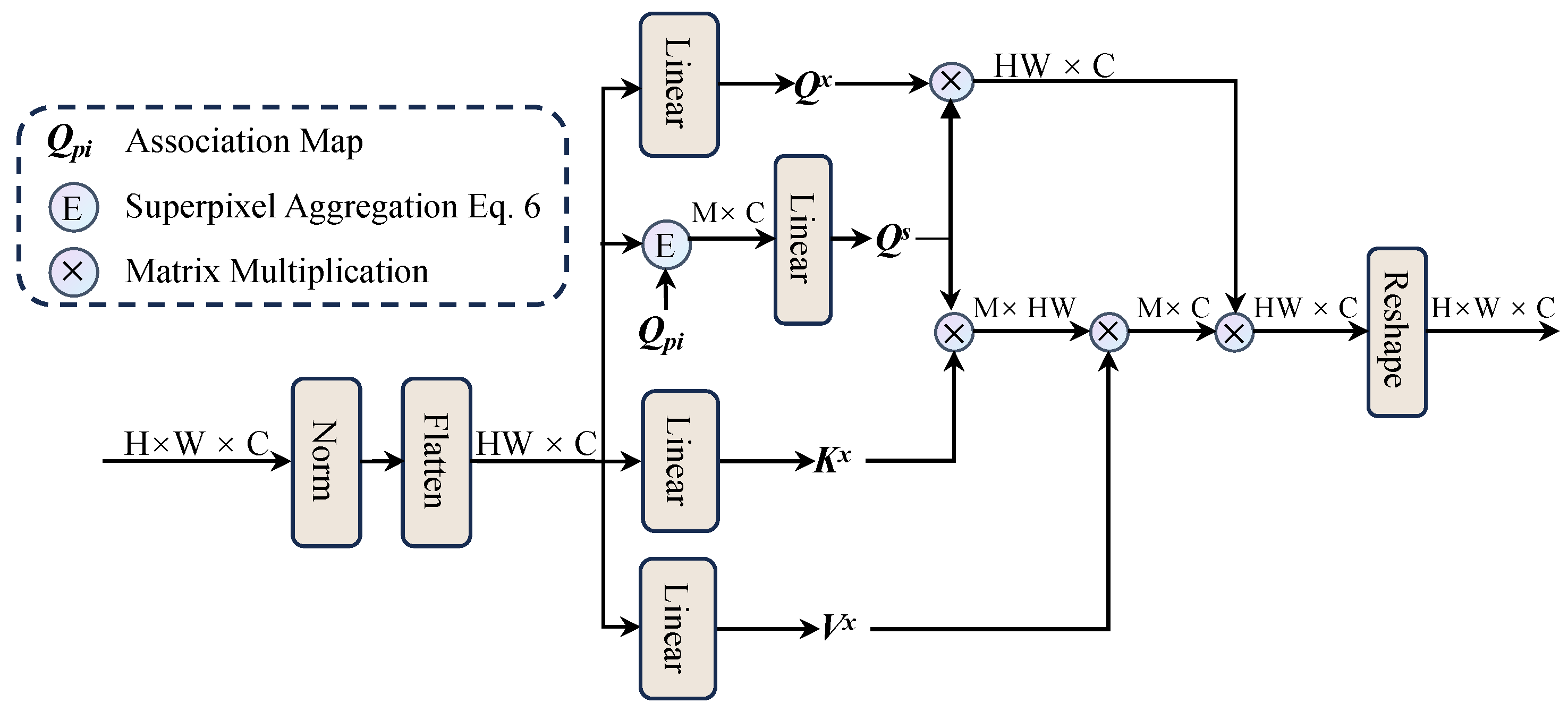

3.2.3. Inter-Superpixel Attention

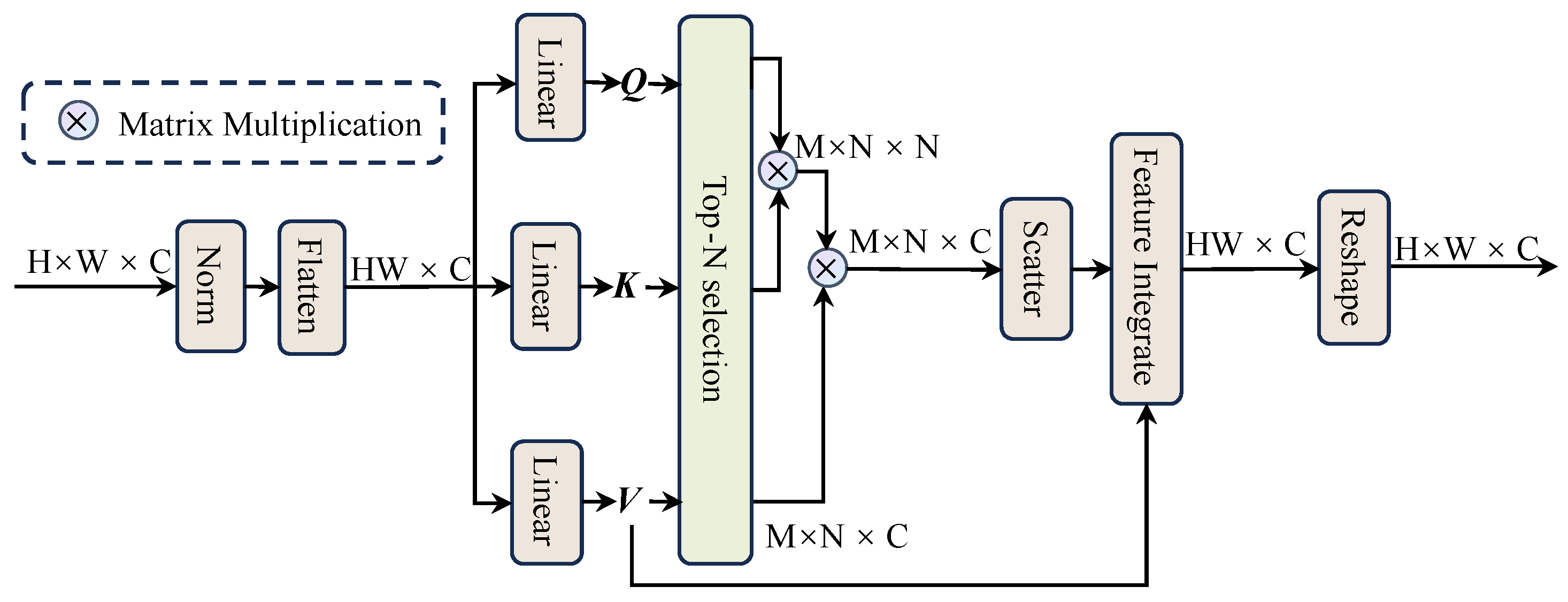

3.2.4. Intra-Superpixel Attention

3.3. Convolutional Block Attention Module with Upsampling Convolution

4. Experiments

4.1. Experimental Settings

4.1.1. Dataset and Evaluation

4.1.2. Implementation Details

4.1.3. Training Settings

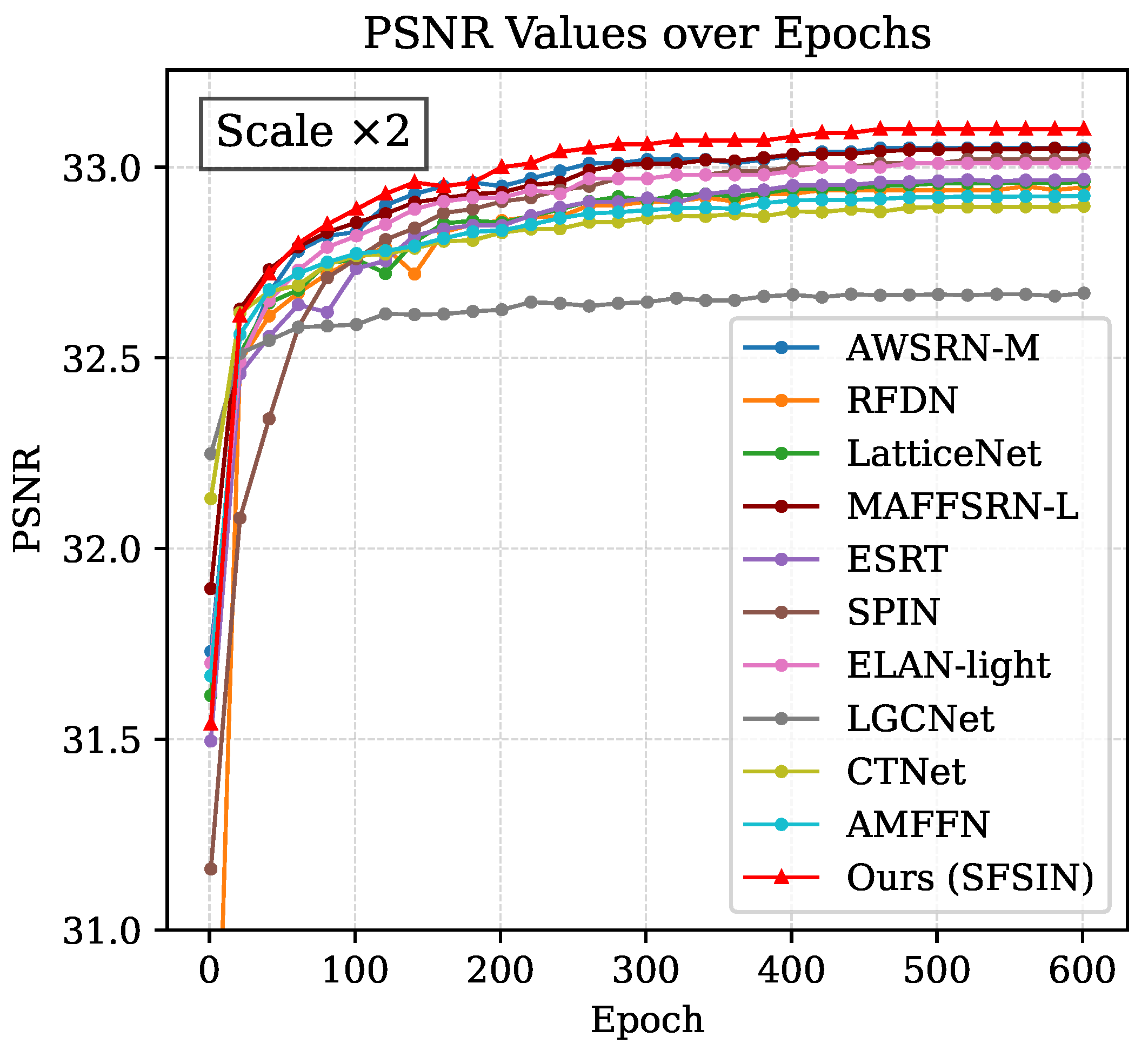

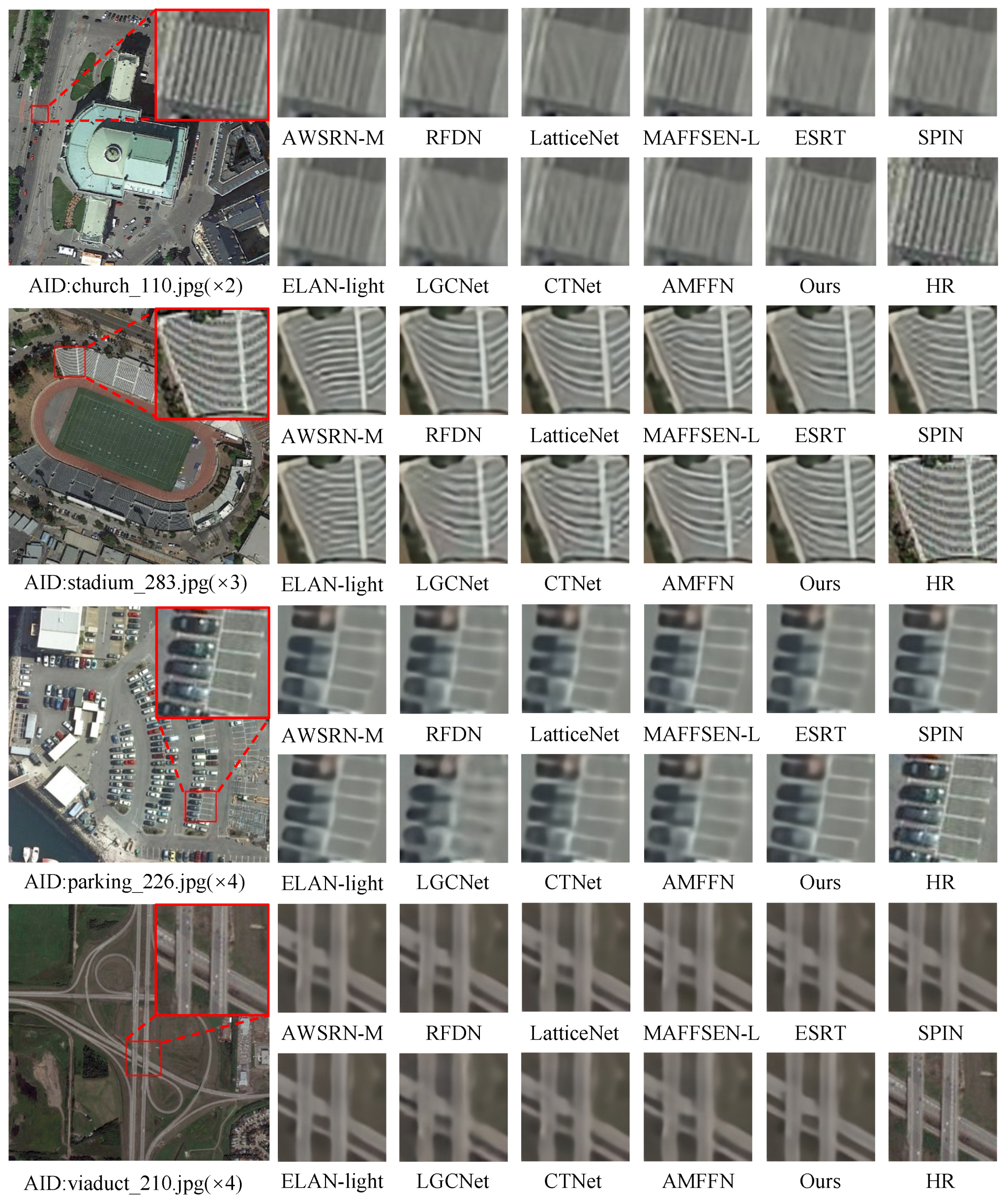

4.2. Comparison with Other Lightweight Methods

4.2.1. Quantitative Evaluation

4.2.2. Visual Quality Analysis

4.3. Ablation Studies

4.3.1. Impact of DSConv

4.3.2. Impact of SC, InterSA, and IntraSA

4.3.3. Impact of CBAMConv and CBAMUpConv

5. Conclusion and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Turner, W.; Spector, S.; Gardiner, N.; Fladeland, M.; Sterling, E.; Steininger, M. Remote sensing for biodiversity science and conservation. Trends Ecol. Evol. 2003, 18, 306–314.
2. Herold, M.; Liu, X.; Clarke, K.C. Spatial metrics and image texture for mapping urban land use. Photogramm. Eng. Remote Sens. 2003, 69, 991–1001.
3. Thenkabail, P.S.; Lyon, J.G.; Huete, A. Advances in hyperspectral remote sensing of vegetation and agricultural crops. In Fundamentals, Sensor Systems, Spectral Libraries, and Data Mining for Vegetation; CRC Press: Boca Raton, FL, USA, 2018; pp. 3–37.
4. Joyce, K.E.; Belliss, S.E.; Samsonov, S.V.; McNeill, S.J.; Glassey, P.J. A review of the status of satellite remote sensing and image processing techniques for mapping natural hazards and disasters. Prog. Phys. Geogr. 2009, 33, 183–207.
5. Shen, H.; Zhang, L.; Huang, B.; Li, P. A MAP approach for joint motion estimation, segmentation, and super resolution. IEEE Trans. Image Process. 2007, 16, 479–490.
6. Köhler, T.; Huang, X.; Schebesch, F.; Aichert, A.; Maier, A.; Hornegger, J. Robust multiframe super-resolution employing iteratively re-weighted minimization. IEEE Trans. Comput. Imaging 2016, 2, 42–58.
7. Dosovitskiy, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.
8. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image restoration using swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1833–1844.
9. Chen, H.; Wang, Y.; Guo, T.; Xu, C.; Deng, Y.; Liu, Z.; Ma, S.; Xu, C.; Xu, C.; Gao, W. Pre-trained image processing transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 12299–12310.
10. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.
11. Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.
12. Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.
13. Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2472–2481.
14. Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301.
15. Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 391–407.
16. Liu, Z.; Sun, M.; Zhou, T.; Huang, G.; Darrell, T. Rethinking the value of network pruning. arXiv 2018, arXiv:1810.05270.
17. Yu, F. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.
18. Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.
19. Qi, Y.; He, Y.; Qi, X.; Zhang, Y.; Yang, G. Dynamic snake convolution based on topological geometric constraints for tubular structure segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 4–6 October 2023; pp. 6070–6079.
20. Jampani, V.; Sun, D.; Liu, M.Y.; Yang, M.H.; Kautz, J. Superpixel sampling networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 352–368.
21. Li, X.; Dong, J.; Tang, J.; Pan, J. DLGSANet: Lightweight dynamic local and global self-attention networks for image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 4–6 October 2023; pp. 12792–12801.
22. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.
23. Zhang, L.; Wu, X. An edge-guided image interpolation algorithm via directional filtering and data fusion. IEEE Trans. Image Process. 2006, 15, 2226–2238.
24. Hung, K.W.; Siu, W.C. Robust soft-decision interpolation using weighted least squares. IEEE Trans. Image Process. 2011, 21, 1061–1069.
25. Lu, X.; Yuan, H.; Yuan, Y.; Yan, P.; Li, L.; Li, X. Local learning-based image super-resolution. In Proceedings of the 2011 IEEE 13th International Workshop on Multimedia Signal Processing, Hangzhou, China, 17–19 October 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1–5.
26. Kim, K.I.; Kwon, Y. Single-image super-resolution using sparse regression and natural image prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1127–1133.
27. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
28. Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.
29. Wang, J.; Yang, W.; Guo, H.; Zhang, R.; Xia, G.S. Tiny object detection in aerial images. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 3791–3798.
30. Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.
31. Chen, K.; Chen, B.; Liu, C.; Li, W.; Zou, Z.; Shi, Z. RSMamba: Remote sensing image classification with state space model. IEEE Geosci. Remote Sens. Lett. 2024, 21, 8002605.
32. Liebel, L.; Körner, M. Single-image super resolution for multispectral remote sensing data using convolutional neural networks. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 883–890.
33. Lei, S.; Shi, Z.; Zou, Z. Super-resolution for remote sensing images via local–global combined network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1243–1247.
34. Xu, W.; Guangluan, X.; Wang, Y.; Sun, X.; Lin, D.; Yirong, W. High quality remote sensing image super-resolution using deep memory connected network. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 8889–8892.
35. Ma, W.; Pan, Z.; Guo, J.; Lei, B. Achieving super-resolution remote sensing images via the wavelet transform combined with the recursive res-net. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3512–3527.
36. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.
37. Fang, J.; Lin, H.; Chen, X.; Zeng, K. A hybrid network of CNN and transformer for lightweight image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1103–1112.
38. Lei, S.; Shi, Z.; Mo, W. Transformer-based multistage enhancement for remote sensing image super-resolution. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–11.
39. He, J.; Yuan, Q.; Li, J.; Xiao, Y.; Liu, X.; Zou, Y. DsTer: A dense spectral transformer for remote sensing spectral super-resolution. Int. J. Appl. Earth Obs. Geoinf. 2022, 109, 102773.
40. Tu, J.; Mei, G.; Ma, Z.; Piccialli, F. SWCGAN: Generative adversarial network combining swin transformer and CNN for remote sensing image super-resolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5662–5673.
41. Shang, J.; Gao, M.; Li, Q.; Pan, J.; Zou, G.; Jeon, G. Hybrid-scale hierarchical transformer for remote sensing image super-resolution. Remote Sens. 2023, 15, 3442.
42. Liu, J.; Tang, J.; Wu, G. Residual feature distillation network for lightweight image super-resolution. In Proceedings of the Computer Vision–ECCV 2020 Workshops, Glasgow, UK, 23–28 August 2020; Proceedings, Part III 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 41–55.
43. Ahn, N.; Kang, B.; Sohn, K.A. Fast, accurate, and lightweight super-resolution with cascading residual network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 252–268.
44. Lu, Z.; Li, J.; Liu, H.; Huang, C.; Zhang, L.; Zeng, T. Transformer for single image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 457–466.
45. Zhang, X.; Zeng, H.; Guo, S.; Zhang, L. Efficient long-range attention network for image super-resolution. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 649–667.
46. Zhang, A.; Ren, W.; Liu, Y.; Cao, X. Lightweight image super-resolution with superpixel token interaction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 12728–12737.
47. Wang, X.; Wu, Y.; Ming, Y.; Lv, H. Remote sensing imagery super resolution based on adaptive multi-scale feature fusion network. Sensors 2020, 20, 1142.
48. Vaswani, A. Attention is all you need. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017.
49. Wang, S.; Zhou, T.; Lu, Y.; Di, H. Contextual transformation network for lightweight remote-sensing image super-resolution. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–13.
50. Xia, G.S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L.; Lu, X. AID: A benchmark data set for performance evaluation of aerial scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.
51. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.
52. Wang, C.; Li, Z.; Shi, J. Lightweight image super-resolution with adaptive weighted learning network. arXiv 2019, arXiv:1904.02358.
53. Luo, X.; Xie, Y.; Zhang, Y.; Qu, Y.; Li, C.; Fu, Y. LatticeNet: Towards lightweight image super-resolution with lattice block. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXII 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 272–289.
54. Muqeet, A.; Hwang, J.; Yang, S.; Kang, J.; Kim, Y.; Bae, S.H. Multi-attention based ultra lightweight image super-resolution. In Proceedings of the Computer Vision–ECCV 2020 Workshops, Glasgow, UK, 23–28 August 2020; Proceedings, Part III 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 103–118.

| Method | ×2 Params (k) | ×2 PSNR | ×2 SSIM | ×2 Time (ms) | ×3 Params (k) | ×3 PSNR | ×3 SSIM | ×3 Time (ms) | ×4 Params (k) | ×4 PSNR | ×4 SSIM | ×4 Time (ms) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AWSRN-M [52] | 1,064 | 33.05 | 0.8703 | 45.2 | 1,143 | 30.22 | 0.7835 | 48.9 | 1,254 | 28.55 | 0.7188 | 52.7 |
| RFDN [42] | 626 | 32.95 | 0.8676 | 35.8 | 633 | 30.14 | 0.7801 | 38.6 | 643 | 28.48 | 0.7157 | 41.3 |
| LatticeNet [53] | 756 | 32.96 | 0.8679 | 38.2 | 765 | 30.16 | 0.7810 | 40.5 | 777 | 28.48 | 0.7157 | 43.2 |
| MAFFSRN-L [54] | 791 | 33.05 | 0.8703 | 38.9 | 807 | 30.20 | 0.7826 | 42.3 | 830 | 28.54 | 0.7184 | 45.8 |
| ESRT [44] | 678 | 32.97 | 0.8680 | 36.5 | 770 | 30.12 | 0.7787 | 39.8 | 752 | 28.47 | 0.7143 | 42.1 |
| SPIN [46] | 497 | 33.02 | 0.8693 | 29.7 | 569 | 30.21 | 0.7828 | 32.5 | 555 | 28.54 | 0.7188 | 34.9 |
| ELAN-light [45] | 582 | 33.01 | 0.8693 | 33.2 | 590 | 30.19 | 0.7826 | 35.7 | 601 | 28.52 | 0.7183 | 38.4 |
| LGCNet [33] | 193 | 32.67 | 0.8612 | 18.7 | 193 | 29.82 | 0.7685 | 20.5 | 193 | 28.17 | 0.7023 | 22.3 |
| CTNet [49] | 402 | 32.90 | 0.8667 | 25.4 | 402 | 30.06 | 0.7779 | 27.8 | 413 | 28.42 | 0.7135 | 30.1 |
| AMFFN [47] | 298 | 32.93 | 0.8671 | 22.3 | 305 | 30.09 | 0.7784 | 24.6 | 314 | 28.43 | 0.7135 | 26.9 |
| Ours (SFSIN-S) | 642 | 33.08 | 0.8708 | 28.1 | 714 | 30.23 | 0.7837 | 31.2 | 700 | 28.58 | 0.7205 | 33.6 |
| Ours (SFSIN) | 784 | 33.10 | 0.8715 | 32.5 | 856 | 30.25 | 0.7844 | 35.8 | 842 | 28.57 | 0.7203 | 36.2 |
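
The PSNR gaps between methods in the comparison table are a few hundredths of a unit, so it helps to recall how the metric is defined. The sketch below uses the standard definition, PSNR = 10·log10(peak² / MSE); the peak value of 255 (8-bit images) and the helper names are assumptions, and the paper's exact evaluation protocol is not given in this section.

```python
import math

def psnr(reference, estimate, peak=255.0):
    """PSNR in dB between two equally sized flat sequences of pixel values."""
    if len(reference) != len(estimate):
        raise ValueError("inputs must have the same length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)

def mse_ratio(delta_db):
    """Factor by which MSE shrinks when PSNR rises by delta_db decibels."""
    return 10.0 ** (delta_db / 10.0)

# A gain of 0.05 dB corresponds to an MSE that is lower by roughly 1%.
print(mse_ratio(0.05))
```

Because the scale is logarithmic, differences of this size correspond to small relative changes in mean squared error.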

| DSConv | CBAM-Conv | CBAM-UpConv | ×2 Params (k) | ×2 PSNR | ×2 SSIM | ×3 Params (k) | ×3 PSNR | ×3 SSIM | ×4 Params (k) | ×4 PSNR | ×4 SSIM |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | | | 497 | 33.02 | 0.8693 | 569 | 30.21 | 0.7828 | 555 | 28.54 | 0.7188 |
| 1 | | | 781 | 33.06 | 0.8704 | 853 | 30.21 | 0.7831 | 839 | 28.55 | 0.7194 |
| 0 | √ | √ | 500 | 33.05 | 0.8699 | 572 | 30.21 | 0.7832 | 558 | 28.55 | 0.7196 |
| 1 | √ | | 783 | 33.08 | 0.8708 | 855 | 30.23 | 0.7836 | 841 | 28.55 | 0.7195 |
| 1 | √ | √ | 586 | 33.09 | 0.8710 | 588 | 30.24 | 0.7840 | 594 | 28.56 | 0.7200 |
| 2 | √ | √ | 620 | 33.10 | 0.8712 | 633 | 30.24 | 0.7842 | 629 | 28.56 | 0.7200 |
| 3 | √ | √ | 660 | 33.11 | 0.8713 | 668 | 30.25 | 0.7843 | 665 | 28.57 | 0.7203 |
| 4 | √ | √ | 701 | 33.11 | 0.8714 | 704 | 30.25 | 0.7843 | 700 | 28.58 | 0.7205 |
| 5 | √ | √ | 730 | 33.11 | 0.8714 | 738 | 30.25 | 0.7843 | 736 | 28.57 | 0.7204 |
| 6 | √ | √ | 752 | 33.12 | 0.8715 | 769 | 30.26 | 0.7844 | 771 | 28.58 | 0.7207 |
| 7 | √ | √ | 784 | 33.11 | 0.8714 | 805 | 30.25 | 0.7843 | 807 | 28.57 | 0.7204 |
| 8 | √ | √ | 813 | 33.10 | 0.8715 | 836 | 30.25 | 0.7844 | 842 | 28.57 | 0.7203 |

| Configuration | ×2 Params (k) | ×2 PSNR | ×2 SSIM | ×3 Params (k) | ×3 PSNR | ×3 SSIM | ×4 Params (k) | ×4 PSNR | ×4 SSIM |
|---|---|---|---|---|---|---|---|---|---|
| Full SFSCI | 784 | 33.10 | 0.8715 | 856 | 30.25 | 0.7844 | 842 | 28.57 | 0.7203 |
| Without SC | 760 | 32.89 | 0.8672 | 832 | 30.04 | 0.7801 | 818 | 28.36 | 0.7160 |
| Without InterSA | 772 | 32.92 | 0.8681 | 844 | 30.07 | 0.7810 | 830 | 28.39 | 0.7169 |
| Without IntraSA | 768 | 32.95 | 0.8690 | 840 | 30.10 | 0.7829 | 826 | 28.42 | 0.7178 |
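
To read the SC, InterSA, and IntraSA ablation at a glance, the PSNR drop of each reduced configuration relative to the full model can be computed directly from the reported values. The short script below is only a reading aid; the dictionary layout and the helper name `psnr_drop` are illustrative, while the numbers are copied from the table.

```python
# PSNR values copied from the ablation table (keys name the upscaling factor).
full = {"x2": 33.10, "x3": 30.25, "x4": 28.57}
ablations = {
    "Without SC": {"x2": 32.89, "x3": 30.04, "x4": 28.36},
    "Without InterSA": {"x2": 32.92, "x3": 30.07, "x4": 28.39},
    "Without IntraSA": {"x2": 32.95, "x3": 30.10, "x4": 28.42},
}

def psnr_drop(full_model, variant):
    """PSNR lost at each scale when a component is removed, rounded to 2 decimals."""
    return {scale: round(full_model[scale] - variant[scale], 2) for scale in full_model}

drops = {name: psnr_drop(full, values) for name, values in ablations.items()}
for name, per_scale in drops.items():
    print(name, per_scale)
```

In the reported numbers, removing SC costs 0.21, removing InterSA costs 0.18, and removing IntraSA costs 0.15 at every scale, so SC contributes the most of the three components.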

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lyu, Y.; Liu, Y.; Zhao, Q.; Hao, Z.; Song, X. SFSIN: A Lightweight Model for Remote Sensing Image Super-Resolution with Strip-like Feature Superpixel Interaction Network. Mathematics 2025, 13, 1720. https://doi.org/10.3390/math13111720
