Abstract

This article presents a high-performance-computing differential-evolution-based hyperparameter optimization automated workflow (AutoDEHypO), which is deployed on a petascale supercomputer and utilizes multiple GPUs to execute a specialized fitness function for machine learning (ML). The workflow is designed for operational analytics of energy efficiency. In this differential evolution (DE) optimization use case, we analyze how energy efficiently the DE algorithm performs with different DE strategies and ML models. The workflow analysis considers key factors such as DE strategies and automated use case configurations, such as an ML model architecture and dataset, while monitoring both the achieved accuracy and the utilization of computing resources, such as the elapsed time and consumed energy. While the efficiency of a chosen DE strategy is assessed based on a multi-label supervised ML accuracy, operational data about the consumption of resources of individual completed jobs obtained from a Slurm database are reported. To demonstrate the impact on energy efficiency, using our analysis workflow, we visualize the obtained operational data and aggregate them with statistical tests that compare and group the energy efficiency of the DE strategies applied in the ML models.

Keywords:

high-performance computing; operational data analytics; energy efficiency; machine learning; AutoML; differential evolution; optimization MSC:

68W50; 68M20; 68T07; 68U10

1. Introduction

High-performance computing (HPC) continues to advance and to provide important infrastructure and a backbone for the scientific community, industry, and beyond, making efficient utilization highly important for both environmental sustainability and cost efficiency [1,2]. Despite the increasing availability of automated ML (AutoML) frameworks, most existing tools prioritize and maximize the performance (accuracy) and neglect other important objectives such as energy efficiency (EE) and resource usage and optimization, which are key factors in large-scale HPC environments and beyond [3,4]. Therefore, the enforcement of sustainable and energy-efficient solutions, including the utilization and optimization of computational resources, now plays a key role in reducing operational financial costs and minimizing environmental impacts [1,3]. Due to the broad accessibility of computational resources for HPC, the scientific community with the myriad of application areas and diverse expertise from different fields, can deploy and run their workflows [5,6]. As their needs, demands, and complexity grow, running data-intensive and parallel workflows involves both different and heterogeneous architectures and the resources of state-of-the-art systems [6,7,8]. Such heterogeneous architectures introduce challenges in workload scheduling, as there are many unknowns and uncertainties [2,9]. However, despite the optimization capabilities and advances, most AutoML frameworks do not incorporate schedulers such as Slurm, nor do they provide support for energy monitoring, as their main focus is on improving ML model performance (accuracy) and ignore constraints such as EE, utilization, and resource allocation [3,4,10]. To address these challenges, adjusted and tailored frameworks that support sustainable and cost-efficient HPC environments are needed. Therefore, this article presents a high-performance-computing (HPC) differential-evolution-based automatic hyperparameter optimization workflow (AutoDEHypO) for energy efficiency and operational data analytics using multiple graphics processing units (GPUs), and this is deployed on the petascale EuroHPC supercomputer Vega [11]. Our proposed method is capable of determining how energy-efficient the differential evolution (DE) algorithm and ML models perform. The proposed AutoDEHypO workflow considers both their achieved accuracy and the utilization of computing resources, such as elapsed time and consumed energy; it collects runtime data through Slurm within the HPC environment, and the job allocation may impact the obtained results. Moreover, resource consumption leads to inefficient workloads, waste of computational resources, the consumption of a project quota, longer queue times, and postponement of scientific research [12]. Since Slurm dynamically schedules jobs to available, unutilized, or idle nodes within the cluster queue, proper adjustments in job submission may lead to faster allocation and faster attainment of the required job results [13]. We aim to develop the ability to operate within the constraints that Slurm users have and determine whether statistical deviations will be significant. Thus, we prepared our environment for the deployment of the AutoDEHypO workflow, where we ensured consistent resource allocation across the submitted job scripts with a Slurm script (SBATCH). Furthermore, this setup includes supervised machine learning (ML) and multi-label classification and allows for applicable optimization for aspects of hyperparameters that could be affected [14]. We chose the recent parallel implementation of the DE algorithm [15], which parallelizes the population operations in an algorithm that was initially introduced by Storn and Price in 1995 [16]. While DE provides a favorable trade-off and balance between accuracy and energy efficiency (EE) in the context of HPC [17], it additionally offers several advantages over other optimization algorithms, such as efficient and faster convergence, suitability and adaptability in a variety of different environments and optimization problems, global optimization, parallelization, scalability, energy optimization, and the possibility for combination and complementarity with different algorithms and techniques due to available implementations and integrations [16,18,19,20,21]. Furthermore, DE is suitable for the given optimization problem, as it can be used for optimizing nonlinearities in data, such as ML-related input/output data within the workflow, and it can be adapted to other use cases and problems [19]. Data are gathered from system scheduling and historical data; resource allocation and job execution times make it difficult to provide prediction and optimization [16,19,20,21]. In the evaluation phase of our experiment, we used basic ML metrics [14]. During the deployment of individual jobs on multiple GPUs in our experiment, we examined the efficiency of a chosen ML model and DE algorithm according to the accuracy [14]. DE functions and strategies show distinct behavior, and this has not yet been extensively measured or optimized within the context of EE and system resource management in HPC environments [20,22,23]. Data on the consumption of resources of individual jobs were obtained from the Slurm database of completed jobs, with energy consumption data being reported for the whole node by Intelligent Platform Management Interface (IPMI) sensors [13,24]. Based on the results of the computations, aggregated statistics were calculated, along with corresponding post hoc procedures and visualizations, to evaluate the efficiency of the combined ML model and the applied DE strategy.

1.1. Problem Statement and Objective

This work addresses the challenge of optimizing machine learning (ML) models in high-performance computing environments by balancing ML accuracy, energy consumption, and resource utilization. To address these challenges, we propose AutoDEHypO, a differential-evolution-based workflow that is specifically designed for energy-monitored hyperparameter optimization. The specific problem stated for this study consists of considering some key limitations, such as inefficient utilization of computational resources during job allocation, extended queue times, and lack of integration with HPC environments and schedulers such as Slurm. The limitations of existing workflows and frameworks are that they mostly focus on a single objective, such as ML performance or integration within HPC environments, and do not necessarily contribute to the sustainability and cost-effectiveness of HPC environments [25,26]. Therefore, we are interested in analysis of the consumption of resources and when the consumed energy and other resources can lead to different ML performance and configurations.

1.2. Main Contributions

The main contributions of this article are as follows:

- We propose AutoDEHypO, a high-performance-computing differential-evolution-based automatic hyperparameter workflow designed to optimize the performance of ML models for energy efficiency and operational data analytics in HPC environments.

- We deploy the AutoDEHypO workflow on the EuroHPC Vega system, utilizing multiple GPUs and Slurm scheduling and submission to execute a specialized fitness function for ML.

- We applied and evaluated this workflow on supervised ML and multi-label classification using the CIFAR10 and CIFAR100 datasets [27].

- We collected runtime data through Slurm within the HPC production environment.

- We evaluated the efficiency of a chosen ML model and DE algorithm strategies according to the ML accuracy and energy efficiency, dependent on ML model architecture, datasets, and resource consumption within the HPC environment.

- We performed aggregated statistical analyses, along with the corresponding post hoc procedures, and validated the collected data using visualizations by evaluating efficiency of combined ML models and applied DE strategies.

- We identified significant differences in key metrics and laid the ground for future work on sustainability and cost-effectiveness using AutoDEHypO.

2. Related Work and Existing Methods

The integration of machine learning (ML) with monitoring and operational data analytics (MODA) is a step towards enhancing operational efficiency, and DE is being utilized to run DE fitness functions and enhance model performance through hyperparameter optimization and other techniques [28]. Furthermore, automated machine learning (AutoML) automates workflows and reduces manual effort [12]; here, the effectiveness of an ML model is evaluated using fundamental ML evaluation metrics, non-parametric tests, post hoc procedures, and visualizations [14]. Despite the improvements, there is still a trade-off between accuracy and energy efficiency [14]. Moreover, checkpoint, restart, and predictive models provide additional robustness and project quotas for allocated computational resources. The following subsections, therefore, describe these topics in the order in which they were mentioned here.

2.1. Machine Learning

Machine learning (ML) is used to discover patterns, make predictions, provide automation, and generate useful knowledge from datasets [29]. Several types of such discoveries have been made, through methods such as classification, regression, segmentation, clustering, error detection, sequence analysis, and others [29]. The design of layers (how they are connected and structured) represents the architecture of a model, while the trained version (system) represents an ML model itself [29]. Furthermore, in the context of learning, there is supervised machine learning and unsupervised machine learning [29]. In ML, patterns are identified within a dataset, and thus the model wants to connect attributes and classes—for example, through classification—with a focus on similarities on the one hand; on the other hand, unsupervised machine learning focuses on identifying underlying structures without a target attribute, such as segmentation [29].

In ML, optimization algorithms are used to improve results—specifically, losses within a selected space [14,30]. With the help of an ML algorithm, hyperparameters can be adjusted and optimized even before the learning phase [14]. The hyperparameters of ML are the configuration variables of ML methods [14]. Some of the most widely used optimization methods are grid search, random search, cross-validation, and Bayesian optimization [30,31]. The execution time of a manual search can be significantly longer due to the complexity of ML models and neural networks within their limits, and as a result, such a search uses significantly more computational resources [8,32].

A convolutional neural network (CNN) is suitable for processing patterns within images, processing video content, performing face recognition, processing medical data, and more. A CNN uses a filter, converts pixels into numerical values in the first layer, and processes or teaches them to recognize and analyze data with the help of subsequent layers [33].

Recurrent neural networks (RNNs) use a mechanism for storing information from previous states and process and interpret it, making them more suitable for processing when the data are structured and in some kind of sequence. They are used in areas such as natural language processing (NLP) and speech and handwriting recognition [34].

The complexity and efficiency of the model may be defined by the architecture design and structure of the NN, the layers, and their connectivity, including the number of layers, the framework, the model size, the optimization that is applied, the input data, and more [8,35]. The efficiency and emergence of smaller ML models, which are also referred to as language models [36], may play a key role, as they are easier to manage and provide flexibility, allowing faster implementation of changes with fewer computational resources [37,38].

Multi-label classification is a type of supervised machine learning that enables each instance to be associated with multiple labels, and it differs from conventional single-label classification, where each instance can be associated with a single label [14,39]. Fine-tuning and other optimization techniques can be applied to hyperparameters, which will have an effect and improve the performance of a model [14,30]. Evaluation of progress and performance is performed using a metric (M). There are some fundamental ML metrics, such as the accuracy, precision, recall, and F-measure; in addition, metrics can be aggregated with possible prediction outcomes, e.g., total (T), true positive (), true negative (), false positive (), and false negative (), as presented in [14].

- Accuracy (A) [14]:

- Precision (P) [14]:

- Recall (R) [14]:

- F-Measure () [14]:

- Metric Aggregation [14]:

2.2. Monitoring and Operational Data Analytics

Monitoring and operational data analytics (MODA) in high-performance computing can be applied to broad standards and practices for the integration, collection, storage, processing, monitoring, visualization, and analysis of system data [28]. MODA is used with the goal of helping end users make informed decisions about information collected on their system through hardware power monitoring and management tools such as IPMI, RAPL, and others [40]. These tools provide information such as sensor data, usage of generic resources, job status, memory, wall time, job and node utilization, power consumption per job, nodes, blades, racks, clusters, data centers, cluster occupancy, billing, and more to be leveraged in many ways [13,28]. Furthermore, MODA can be applied and extended to either an active or a passive setup, which depends on the requirements, potential benefits, and contributions that it may offer [40]. An active setup actively influences the runtime operations, while on the other hand, a passive setup passively monitors, gathers, and collects data without affecting the runtime operations [40]. To enhance the impact of ML and gather insights into data, MODA may be used to monitor energy consumption, improve job efficiency, and improve the ML design for EE by providing real-time feedback mechanisms for adaptive and dynamic changes within ML operations, ML optimization suggestions such as predictions in ML models, or even operational insights to resolve issues by proactively predicting potential trends and patterns within ML operations [9,40,41]. Such an analysis could identify trade-offs and bring the right balance, resulting in potential reductions in the consumption of resources and bringing sustainability and EE into view [28]. Furthermore, MODA offers multiple opportunities and can potentially contribute to EE due to the possibility of monitoring energy and detecting significant differences, preventing potential issues and anomalies, finding potential root causes, detecting security vulnerabilities and malware, and providing improvements in performance, reliability, throughput, and beyond [42]. Using HPC Vega [11], the topics of MODA, including energy monitoring, operational efficiency, and optimization of HPC workloads, were investigated and recently addressed in [43].

2.3. Differential Evolution

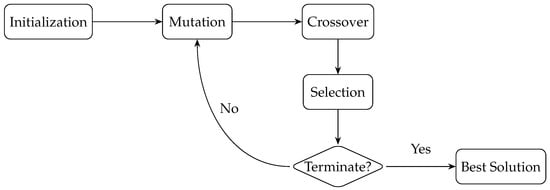

The differential evolution (DE) algorithm is a population algorithm and was introduced by Storn and Price in 1995 [44]. DE has been used successfully in the field of optimization of numerical functions and in applications to real problems within different domains [16]. The original DE has a main evolutionary loop through which, with the help of the key and basic operators presented in Figure 1, such as initialization, mutation, crossover, and selection, the final result can be gradually improved soon after each generation by repeating these operators until the end [45]. From its introduction to the present, interest in DE has increased year on year [16,44]. Thus, DE has been developed, improved, and applied to a variety of real-world problems within multidisciplinary domain areas, such as electrical and energy systems, artificial neural networks, manufacturing and pperation research, robotics and advanced systems, pattern recognition, image processing, bioinformatics and biomedicine, electrical engineering, and others, as presented in several articles [16,46,47,48,49]. Those mechanisms can be used to make the selection process easier [50]. DE provides a favorable trade-off and balance between accuracy and energy efficiency within HPC environments and beyond [17]. Additionally, DE offers several advantages over other optimization algorithms, such as efficient and faster convergence and suitability and adaptiveness in a variety of different environments and optimization problems; often, it provides better results than other algorithms [18,51,52,53]. Furthermore, DE can be used for nonlinear, nondifferentiable, and multi-objective problems [16]. It provides robustness in complex and multidimensional spaces, global optimization in complex spaces, parallelization, scalability in high-dimensional problems, and energy optimization and distribution without compromising throughput [16,18]. It can be integrated with penalty functions and other methods to handle constraints, and it can be combined and used to complement different algorithms and techniques due to its easy implementation and integration [16,21]. Last but not least, it is being extensively researched, and constant improvements to the algorithm are being made [16,18,21].

Figure 1.

This figure shows the basic steps of differential evolution, including initialization, crossover, mutation, selection, and condition (loop). Until the stopping condition is met, i.e., max generations or convergence, the mutation, crossover, and selection processes are repeated over generations; then, the best solution that is found is provided [54].

2.3.1. Differential Evolution Operators

In DE [20], the following operators are used as highlighted in Figure 1.

First, the search space is initialized, and all search variables are randomly populated in the search space for a population of vectors defined with a dimension; then, the parameter vectors may change over different iterations [16]. The sequential number of the current generation [16] is denoted by t, the optimization search dimension size is denoted with d, and denotes a population vector index [16].

Within this initialization stage the vector must be composed of the following components within interval limits for minimum and maximum [16]:

During mutation, after the first step is completed, the algorithm selects donor and mutated vectors for each population individual [55]. The following strategies are used most often (interval from 1 to ), where denotes the target vector, denotes the mutant vector, denotes the best individual in the population, denotes random indexes that are mutually different and different from i, F denotes the mutation scaling factor, and t denotes the current generation [16,55,56]

- DE/rand/1: A random vector is chosen as the basis, and a one-sided weighted difference (vector) composed of two other random vectors is added to it.

- DE/best/1: The current best random vector is used as the basis, and an additional random difference (vector) is added to it.

- DE/current-to-best/1: For an individual vector to mutate with the best vector, one random difference (vector) is added.

- DE/rand/2: A random vector is chosen as the basis, and two independent differences (vectors) of four random vectors are added to it.

- DE/best/2: The current best random vector is used as the basis, and two random differences (vectors) of four random vectors are added to it.

In the crossover phase, the donor vector crosses with the targeted vector [16]. The two most commonly used methods of crossing are exponential and binomial [16], and there are a few other combinations of mutation and crossover strategies in the original DE algorithm [20,55], namely, the DE/rand/1/exp, DE/current-to-best/1/exp, DE/best/1/exp, DE/current-to-best/1/bin, DE/rand/2/exp, and DE/best/2/exp strategies. Exponential crossover works by choosing a random number within the size of the dimension {1, 2, …, d}. The target vector is then crossed with the donor vector [16]. The binomial crossover generates a number from interval for each dimension randomly and compares it to crossover value [16], in order to exchange components, or also exchange the component at random index .

In selection, survival to the next generation for both vectors, i.e., the target and the trial vector, is determined [16]. If the condition is met, the target vector is simply replaced according to the strategy chosen for the next generation; otherwise, it remains and is further evolved within the next generation [16].

2.3.2. Improvements to the Differential Evolution Algorithm and Energy Efficiency

Since the introduction and throughout development beyond the original DE algorithm [20], its main foundational concepts were addressed and subsequently extended [57]. Especially from the efficiency perspective, extended versions and improvements have been proposed over the years [16,21]. Numerous improved algorithms have been developed and presented annually at conferences, such as at the Institute of Electrical and Electronics Engineers (IEEE) Congress on Evolutionary Computation (CEC), the Genetic and Evolutionary Computation Conference (GECCO), Parallel Problem Solving From Nature (PPSN), and competitions [58]. DE competitions are organized mainly as part of the CEC [58] and GECCO. After each competition, a review of the algorithms is conducted, and the results are included in a technical report [58]. Therefore, from such efficiency perspective defined at these competitions or other benchmarks, there are newer DE methods with benchmarks, such as L-SHADE [59] and DISH [60] with parameter control benchmarking [61]. Several related works have used DE for energy efficiency (EE), demonstrating applicability within the context of high-performance computing (HPC) and beyond [23,62], including that DE has been utilized in testing benchmarking EE with large dimensions for some CEC functions in [63]. Notably, DE can be used as a tuning platform to utilize and optimize energy-efficient and energy-aware workloads [64].

Other authors have presented a self-adaptive DE method with mutation and crossover operators and its design for NN optimization [65]. Agarwal et al. presented a differential-evolution-based approach to compress and accelerate a convolution neural network model, where the compression of various ML models improved their accuracy on different datasets, including CIFAR10 and CIFAR100 [66]. The compact differential evolution (cDE) algorithm was presented by Mininno et al. for constrained edge environments and provides support in robotics where there are limited computational resources [62]. A memetic DE (MDE) algorithm for EE with a job scheduling mechanism and possibilities for parallelization and scaling was also presented by Xueqi et al. [67]. A differential-evolution-based EE system for minimizing the consumption of resources in unmanned aerial vehicles (UAVs) and IoT devices was recently presented by Abdel-Basset et al. [68]. DE was also applied to autonomous underwater vehicles (AUVs) for underwater glider path planning (UGPP) with the objective of collecting research data within unexplored areas, which presents additional challenges; this provides robustness for such missions by addressing energy optimization for underwater glider vehicles [69,70]. Jannsen et al. also recently presented a comparative study reviewing the potential of the DE algorithm on GPUs due to its advantage of parallelism and more [71].

2.4. Comparison of Hyperparameter Optimization Methods

Differential evolution (DE) can be compared with other hyperparameter optimization methods, such as random search (RS), grid search (GS), and Bayesian optimization (BO) [25,26]. Under constraints of limited time and computational resources, neither GS nor RS proves to be ideal [25,26] and both methods tend to be inefficient, especially in the context of complex ML models [25]. Due to the exhaustive nature of GS, it suffers when dealing with a larger number of dimensions as they increase [25]. As dimensionality increases, GS evaluates a large number of configurations, which leads to the consumption of many computational resources [25]. RS, while simpler and more robust, typically requires a larger set of evaluations to achieve competitive results, regardless of resource constraints [25]. RS selects configurations at random, potentially overlooking promising regions of the search space and resigned to optimal results [25]. BO uses surrogate models, which are very efficient in low-dimensional spaces and black-box problems [25]. Unlike RS and GS, BO does not try every possibility within a given space, insted it uses predictions of outcomes where the acquired knowledge is collected and updated [25,26]. BO is a very useful method for the desire for scalability and with increasing dimensions BO loses performance rapidly with increasing dimensionality [25,26]. Additionally, in connection with DE and other optimization algorithms, a novel deep-learning expansion called Deep-BIAS was introduced and presented by van Stein et al. [72] Furthermore, Explainable AI (XAI) for evolutionary computation (EC) and the current state-of-the-art [73] introducing techniques for global sensitivity analysis in evolutionary optimization with focus on effectiveness is presented [74]. Such comparison strengthens the basis of DE as the optimizer user (within this article), especially in scenarios where the evaluation of ML models is computationally demanding, where parallelism and scalability within robust complex search and multidimensional spaces are required, as well as its implementation in real problems in various fields, including ML as presented in Section 2.3.2.

2.5. Automated Machine Learning

Automated machine learning (AutoML) is the automation of workflows within an ML process and can be applied from the beginning to the end (end-to-end) [12]. The main focus is to avoid unnecessary repeatable steps [75]. The AutoML process itself is multi-step, so we select the data source, select the data, clean and preprocess the data, execute machine learning, algorithm selection, and parameter optimization, and interpret and discover knowledge [29]. This is also reflected in the growth in the number of published works in the last 10 years, e.g., [3,4,26,75,76]. Due to the significant and continuous progress in the field, ML represents a popular branch of computer science and beyond [75]. It has been successfully applied within a myriad of scientific domains [39], including to exploit the potential of infrastructures such as HPC, rids, cloud and edge computing, and beyond [34,77]. Moreover, the complexity and rapid growth in the field have also created a large gap in efforts to transition to more sustainable approaches and strategies [78,79]. Improvements in optimization through Red AI and a proof of concept based on the Scikit-learn library were presented by Castellanos-Nieves et al. [80], with another work presenting an evaluation of various applied and potentially suitable algorithms with a focus on Green AI [81]. Furthermore, Red AI is mostly focused on conventional approaches, such utilizing many computational resources with the relearning of ML models, maximizing the acquired ML accuracy, and neglecting EE [4,10]. However, in recent years, as presented in recent work, advances and evolutions in the fields of ML and AutoML show the necessary contribution of the strategies and concepts of Green AI when considering EE, as well as what the trade-offs of this approach are on the path toward a more sustainable landscape and Green AI [3,4]. Geissler et al. introduced a novel energy-aware hyperparameter optimization approach (Spend More to Save More (SM2)) based on the early rejection of inefficient hyperparameter configurations to save ML training time and resource consumption [78]. While recent studies addressed EE in connection with AutoML, they were not applied to HPC or the cloud, where the utilization of resources is crucial [78]. Evolutionary algorithms have also been applied within or as optimizers as an addition to AutoML, e.g., an improved optimization framework using evolution algorithms such as DE [51], self-adaptive control parameter randomization with DE [82], the application of DE to an energy-aware auto-tuning platform [64], and an auto-selection mechanism with the application of DE [50]. Vakhnin et al. presented a novel multi-objective hybrid evolution-based tuning framework for accurately forecasting power consumption [83], and the application of DE provided better results than those of other algorithms [51,52,53]. Benchmark studies were presented by Gomes et al. [53], in addition to those for other state-of-the-art algorithms [75,84]. These were mostly tailored to specific application domains, and they lacked integration and successful deployment with an HPC or cloud environment, as well as the inclusion of scheduling systems such as Slurm [3]. Therefore, there has been significant advancement in the field of sustainability in multidisciplinary application domains within HPC environments. Researchers and developers have the option of choosing between areas related to their expertise and the task at hand according to their preferences. A collection of AutoML tools and toolkits is available, leveraging a myriad of technologies [85]. Common AutoML tools include AutoKeras [86], Auto-sklearn [87], H20 [88], TPOT [89], TensorFlow [90], Auto-PyTorch [91], and others [12,85]. Furthermore, profiling and benchmark tools are available to evaluate and optimize algorithms in both CPU and GPU architectures, e.g., COCO (COmparing Continuous Optimizers) [92,93], IOH [94,95], jMetal [84,96], irace [97], Optuna [98], BIAS [72], and, last but not least, Ray Tune [99]. Despite their optimization capabilities, none of the listed AutoML frameworks provide native support for energy efficiency [3]. Therefore, external tools such as PyJoules [100], Carbontracker [101], and others can be used for measuring, logging, and tracking energy consumption and emissions during execution [102]. However, Slurm incorporates MODA to gather energy data from hardware interfaces such as RAPL, IPMI, NVML, and others [13]. This data could be used in optimization processes, as part of objective function constraints, or to provide feedback for early stopping when limits are exceeded [9,29]. Taking this into account, AutoML could optimize ML model performance and provide a balance between ML accuracy and energy efficiency, as a step toward sustainability [1].

2.6. Image Datasets

The Canadian Institute for Advanced Research (CIFAR) dataset collection includes publicly available (https://www.cs.toronto.edu/∼kriz/cifar.html, accessed on 19 November 2024) datasets such as CIFAR10 and CIFAR100 [27]. The collection contains 60,000 colored images (32 × 32 × 3 pixels), of which 50,000 are for training and 10,000 are for testing; CIFAR10 contains 10 classes, while CIFAR100 has 100 [27].

The Modified National Institute of Standards and Technology (MNIST) database is a large publicly available (https://yann.lecun.com/exdb/mnist/, accessed on 19 November 2024) database that includes grayscale centered images of handwritten digits [103]. The database contains images of 28 × 28 pixels, comprising 60,000 training images and 10,000 testing images [103]. Furthermore, additional versions have been published, such as Extended MNIST (EMNIST) and Fashion MNISH, which contains 70,000 images (28 × 28 pixels); other customized collections are also available [103].

ImageNet is an image database collection that is publicly available online at address https://www.image-net.org/, accessed on 19 November 2024, and it includes colored images (64 × 64 × 3 pixels) in 1000 object classes, comprising 1,281,167 training images, 50,000 validation images, and 100,000 testing images [104]. In addition, versions with more or fewer images have also been published subsequently.

2.7. Checkpoint and Restart

Checkpoint and Restart (CR) is a mechanism that allows the running workload to be saved [105]. The saved checkpoint may be later restarted, resulting in restoration, a potentially reduced elapsed time, less consumed energy, and the possibility of debugging issues that may appear during the execution of a workload [105,106]. This mechanism provides fault tolerance and resilience to the interruption of hardware, networks, and software, as well as other potential issues [105,107]. CR may be achieved through various technologies on CPU and GPU architectures, including containerized environments [108,109]. Taking EE into account and the additional storage that is needed to store captured checkpoints, if these are properly applied, users may restore their work in the event of a crash, failure, or wall time limitation, saving potential lost time due to redundant computation and power consumption [106,108]. Additionally, users can migrate their workloads to less consuming nodes (additional partitions or constraints), save states before nodes go into sleep or idle mode at higher energy peaks, and restore when necessary, thus saving the state and throttling the CPU/GPU frequencies using dynamic voltage and frequency scaling (DVFS), restoring with lower frequencies, performing cloud bursting, and more [106,108]. While this mechanism is often sufficient, this is not the case for randomized environments and long-running jobs if everything is not captured correctly [105]. Furthermore, this additionally brings increased complexity and potential challenges, resulting in incomplete, inconsistent, and non-deterministic captured states; scaling may introduce overhead (GPUs), divergence from an original state, violations, and misled reproducibility. External systems may behave differently upon restart, in addition to other issues [105,110,111]. We can apply CR inside the basic DE loop presented in Algorithm 1, where we can continue from the last successfully calculated generation [105]. To ensure complete reproducibility, it is necessary to save after a certain number of generation cycles (10) [105]. The checkpoint must contain the current number of generations itself; we also save the current population number, fitness values, and configurations, such as seeds [105].

| Algorithm 1 Differential Evolution for Machine Learning Hyperparameter Optimization |

Require: ML hyperparameter optimization problem fitness function , minimum and maximum of the search space of ML hyperparameters S for function , DE parameters: population size , mutation differential weight F, crossover rate , number of generations G.

|

2.8. Predictive Modeling

Resource allocation in HPC, such as the allocation of time, memory, central processing unit (CPU) cores, GPUs, and other parameters, is submitted through Slurm [13]. Limited knowledge in a research field, optimization, and beyond results in the underestimation of the required resources, which leads to job failure related to wall time or a lack of memory (OOM), resulting in a waste of computational resources, the consumption of the project quota for the allocation of resources, and the postponement of scientific research [2,11,13]. Furthermore, an ML model can be used for the prediction of consumed resources, such as time and energy, based on historical data that can be gathered from the Slurm accounting database through sacct command [2,13]. A prediction model is a step towards energy efficiency, sustainability, and the prevention of job failures; predictions can be based on historical data and fair shares [13]. The job size, age, and failure of some jobs and the consumed resources may cause a fair share score of a user to decrease [13]. Therefore, users with a higher fair share score receive a higher priority in the queue [13]. This results in reduced resources consumption with the lower queue time due to fair share policies [13]. Chu et al. presented the emerging challenges in generic and ML workloads within HPC environments and their correlations with job failures, energy consumption, and other analytics [2,13]. Such solutions based on CPU and GPU workflows have already been presented in other articles [112,113,114].

3. Proposed Methodology: AutoDEHypO

This section presents the proposed methodology and deployment based on AutoDEHypO, a workflow specifically designed for energy efficiency and operational data analytics in HPC environments.

3.1. Experimental Environment

Computing nodes on an HPC Vega partition with graphics accelerators (GPUs) were used [11]. The partition has 60 nodes, each node has 4× NVIDIA Ampere A100, 2× AMD Rome 7H12, 512 GB RAM, 2× HDR dual-port mezzanines, and 1× 1.92TB M.2 SSD [11]. Red Hat Enterprise Linux 8.10 OS, Slurm 24.05.5 Workload Manager, SingularityPRO version 4.1.6, NVIDIA driver 565.57.01, and CUDA 12.7 were installed on the computing nodes [11]. Additionally, a containerized environment based on Pytorch version 2.1.2 and the required libraries [91]. Training and evaluation were conducted on the publicly available CIFAR10 and CIFAR100 datasets [27]. Storage and dataset access were managed through large-capacity storage based on Ceph [11]. Due to the constraints within HPC environments, the execution time of a single run (i.e., 300 times calling the function ) is such that all times together (the total time of all observations) do not exceed the allocated allocation. So far, we have not limited energy consumption but only monitored it. Additional details of the experimental environment can be found in Table 1.

Table 1.

The experimental environment outlines ML model training parameters, and essential configuration to ensure hyperparameter optimization setup and reproducibility of this setup.

3.2. AutoDEHypO

We prepared our environment for the deployment of the AutoDEHypO workflow using supervised machine learning through pre-trained ML models (ResNet18, VGG11, ConvNeXtSmall, and DenseNet121) that are already available within the PyTorch framework; this included building a custom Singularity container for the PyTorch [91] framework with the required libraries. The composed code in the Python programming language takes care of loading a dataset and setting up a Distributed Data Parallel (DDP) that facilitates model parallelization and distribution, while the NVIDIA Collective Communications Library (NCCL) is used in the training phase for faster and more efficient inter-node back-end communication among multiple GPUs, as this ensures and enables efficient scaling among multiple GPUs. A set of methods was used to prepare, develop, and execute AutoDEHypO, including classification, hyperparameter optimization, metric evaluation, resource monitoring, and aggregated statistical analysis of the experimental results.

3.3. Differential-Evolution-Based Hyperparameter Optimization

A basic framework of the DE Algorithm 1 was used from a recent implementation that supports parallelization [15] for the optimization of hyperparameters, as it establishes a basis while minimizing complexity and allows future modifications and improvements as the experiment unfolds [21].

3.4. Job Scheduling, Training, Evaluation, and Visualization

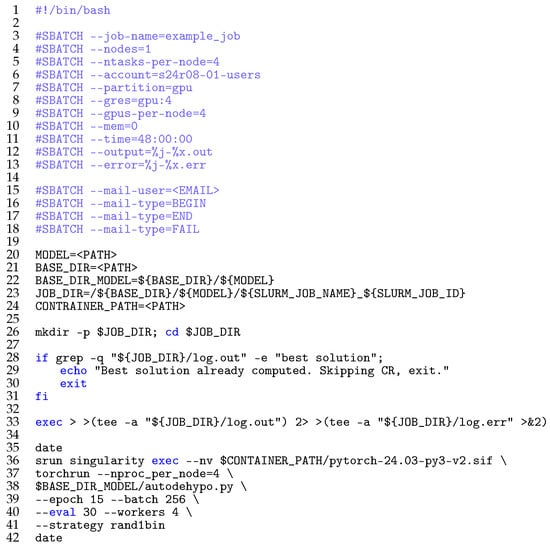

The jobs are submitted through Slurm workload manager, as seen in Figure 2 [11,13]. The input SBATCH contains the required resources within the SBATCH script, the PyTorch container is invoked without modifying the underlying PyTorch code, and the code written in Python is executed. An example of the SBATCH script that can be wrapped in a conditional loop in the command line interface (CLI) or script can be seen in Figure 3.

Figure 2.

Basic workflow of job submission through the Slurm workload manager.

Figure 3.

SBATCH script for job submission.

Furthermore, the workflow is used for training, evaluation, metrics, the storage of data in the appropriate data frame, and graph plotting based on newly acquired data, as presented in Figure 4. Finally, the dataset is loaded, and the basic steps of DE presented in Figure 1 are performed to evaluate and return the most suitable parameters for the training and evaluation phase. When the job is completed, we evaluate the performance metrics, which are saved in a data frame along with visualizations. Thus, we wanted to check the adequacy of the optimization of the hyperparameter space; i.e., we examined the number of epochs and iterations, weights, learning rate (LR), batch size, and optimizers. Given that it has been implemented and deployed, the AutoDEHypO workflow leverages the potential of utilizing multiple GPUs to run DE fitness functions for ML, as presented in Figure 4. In the evaluation phase, basic ML metrics were used (Section 2.1). To obtain, measure, and compare the utilization of computer resources of individual jobs, such as the elapsed time and energy consumption, data from Slurm were used [13].

Figure 4.

Schematic overview of the analytics of the potential efficiency for the AutoDEHypO workflow.

3.5. Checkpoint and Restart, Collected Logs, and Fault Tolerance

Logging of the standard output and standard error output is enabled and generated in the event of an error. Email notifications are also set up to provide information on if a job is queued, started, completed, or failed [13]. This is important for certain cases where anomalies occur during initialization, such as when jobs need to be handled separately. The output files can be taken into account within the AutoDEHypO runtime, which can detect failures in settings, such as issues with NCCL or undetected GPUs within the initialization phase. One can look at these detections, from setting failures to the fact that CR uses feedback mechanisms to check which runs may need to be restarted (Figure 3 line 27). Furthermore, at the beginning of this experiment, we did not know how many resources were needed, and with the CR mechanism, we could accordingly adjust the input Slurm parameters and the need for the computational resource requirements, such as the job state, consumed energy, memory allocation, number of nodes, number of cores, number of cores per node, number of cores per CPU, number of tasks, number of GPUs, number of GPUs per node, and others. The Slurm command requeue allows the resubmission of a failed job when we can avoid and exclude any problematic nodes or, in the opposite case, when we do not want the submission, and a manual check is required after a certain number of—for example, 2—unsuccessful restarts; then, we can use the opposite command: no-requeue. This allows us to detect such errors in certain cases and perform an automatic restart of the job. Manual intervention is still required for cross-checking, and, if necessary, the job is resubmitted to the cluster queue, as shown in Figure 4. Due to the limitations of the experiment, the elapsed time and utilization of computer resources also needed to be taken into account.

4. Experimental Results

The experiments are divided into two phases of benchmarking. Within the first phase, we examine the efficiency of ML models and their parameters. The latter is then used in the second phase as a continuation of the experiments, to which we apply an analysis of the DE mutation strategies presented in Equations (8)–(12). The experiment had to be limited due to resource constraints such as time and the quota of computation resources granted within the development project on the largest Slovenian supercomputer, EuroHPC Vega. The ML models ResNet18, VGG11, ConvNeXtSmall, and DenseNet121 were used with public datasets. For the datasets, we chose CIFAR10 and CIFAR100, as they contain smaller images, thus providing a smaller size than other datasets, allowing them to be included, preprocessed, and trained [27]. The energy consumption is presented in Mega Joules (MJ) [13]. In order to obtain an assessment of the performance of the ML models and algorithms, aggregated statistics were calculated for the obtained results.

Taking into account that resource allocation may impact the obtained results, we ensured that all submitted job scripts had the same resource allocation [2]. Although it would have been possible to restrict our runs to a specific node using an additional Slurm option, this is not the intended use of HPC and was, hence, kept as a constraint. Therefore, jobs and runs were freely distributed across different nodes. During the initiation and execution of the experiment, we faced a few challenges on the software level that we could not avoid encountering, such as the wall time, which was set within the startup script, being too short, as well as a few others. The experiment was also run on the NVIDIA drivers and the Compute Unified Device Architecture (CUDA) Toolkit, which were updated at the beginning of the experiment, as we encountered a set of GPU nodes with incorrect configurations, which were resolved immediately, as well as hardware failures. This necessitated the replacement of DIMM modules and network cards, as well as the cleaning and resetting of network cards, GPUs, and other devices. Hardware problems in which GPUs are not available or are undetected lead to a failure of a large set of submitted jobs in less than 30 s on critical nodes due to failed internal communication through the NCCL back-end. In the event of such an error, a standard error output was generated, and an email notification was successfully received. Furthermore, the deployed CR was included as a common checkpoint for the ML model, therefore demonstrating how we saved important time with AutoDEHypO, with which we enabled resubmission. We received data on failed jobs, such as the time stamp, job state, job name, and job ID, that needed to be resubmitted. An example of the standard error output in the event of a runtime error is given in the following.

- RuntimeError:

- ProcessGroupNCCL is only supported with GPUs, no GPUs found!

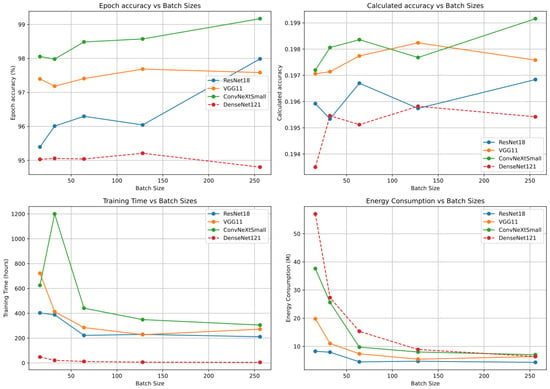

The results obtained from the ResNet18, VGG11, ConvNeXtSmall, and DenseNet121 ML models on the CIFAR10 dataset are listed in Table 2, Table 3, Table 4 and Table 5, respectively. These tables present the batch size used and the results obtained, including the maximum achieved accuracy on the test batch, the best learning rate found, the best accuracy achieved, the CPU time consumed, the elapsed time, and the energy consumed in a Slurm job. Figure 5 provides an example of a subset for the ML performance of the ResNet18, VGG11, ConvNeXtSmall, and DenseNet121 ML models on the CIFAR10 dataset, and Figure 6 does so for the CIFAR100 dataset.

Table 2.

Results obtained in 15 epochs using the ResNet18 ML model on CIFAR10.

Table 3.

Results obtained in 15 epochs using the VGG11 ML model on CIFAR10.

Table 4.

Results obtained in 15 epochs using the ConvNeXtSmall ML model on CIFAR10.

Table 5.

Results obtained in 15 epochs using the DenseNet121 ML model on CIFAR10.

Figure 5.

Plots of the initially obtained results of the ML metrics on the CIFAR10 dataset.

Figure 6.

Plots of the initially obtained results of the ML metrics on the CIFAR100 dataset.

The results obtained from the ML models on the CIFAR100 dataset in the first initial phase are presented in Table 6 for ResNet18, for VGG11 in Table 7, for ConvNeXtSmall in Table 8, and for DenseNet121 in Table 9, respectively.

Table 6.

Results obtained in 15 epochs using the ResNet18 ML model on CIFAR100.

Table 7.

Results obtained in 15 epochs using the VGG11 ML model on CIFAR100.

Table 8.

Results obtained in 15 epochs using the ConvNeXtSmall ML model on CIFAR100.

Table 9.

Results obtained in 15 epochs using the DenseNet121 ML model on CIFAR100.

4.1. Obtained Results

Based on the reported data provided and using our methodology, we observed results in Table 2, Table 3, Table 4 and Table 5 for CIFAR10 and in Table 6, Table 7, Table 8 and Table 9. These tables show a minimum achieved accuracy on test trial and their corresponding impact, highlighting their significance, where the lowest observed accuracy is 62.56%, which corresponds to the calculated ML accuracy of 0.1724. The highest achieved accuracy is 99.17%, with the calculated ML accuracy of 0.19916. Furthermore, for consumed energy metric, we obtained a minimum reported value of 4.43 M (ResNet18) and a maximum value of 59.36 M (DenseNet121). We also observed the elapsed time metric, ranging from the fastest completed job of 03:38:37, to the longest job with wall time 2 d 00:00:17 that exceeded the maximum allowed job execution time within the submitted partition. The results obtained in the first phase of the experiment possibly indicated that using a batch size of 256 in a set of ML models produced the most suitable and efficient results.

We observed that the DenseNet121 model possibly consumed more energy on both datasets than the less complex ML models, such as ResNet18, as presented in Figure 5 and Figure 6. Individual ML models in combination with certain DE strategies possibly performed better and more consistently, while some possibly consumed more energy, e.g., with the exponential DE strategies applied to DenseNet121 or ResNet18, and vice versa, with binomial models possibly consuming more resources in ConvNeXSmall and VGG11. The second phase of the experiment proceeded with a performance comparison of the binomial and exponential DE strategies with each ML model. In this phase, 10 independent runs were executed, as this could more generally determine whether there were significant differences DE-strategy-wise or ML-model-wise, and confirm if there was an impact on key metrics. A mutation was applied randomly with a factor (F) presented in Table 1. The population size (P) and the maximum possible number of generations (G) were appropriately determined due to the project allocation and resource constraints, otherwise we would exceed the current allowable resource consumption within the project allocation. The convergences of metric of accuracy is plotted unified through runtimes in Figure 7 for those runs that obtained median accuracy among each of the 10 independent runs of a DE strategy for a ML model. As observed, the effects of the DE strategies DE/rand/1/bin, DE/best/1/bin, DE/current-to-best/1/bin, DE/rand/2/bin, DE/best/2/bin, as well as the exponential strategies, such as DE/rand/1/exp, DE/best/1/exp, DE/current-to-best/1/exp, DE/rand/2/exp, and DE/best/2/exp [55], possibly varies across different ML model architectures, such as ResNet18, VGG11, ConvNeXtSmall, and DenseNet121, and the CIFAR10 and CIFAR100 datasets [27]. As the results of the tables indicate, is rejected because at least one DE strategy shows a significant difference, and specific DE strategies may even perform better. Therefore, to analyse which and for how much overall when aggregated, we discuss in the next subsection.

Figure 7.

Accuracy convergences through runtime, for the median runs according to accuracy metric.

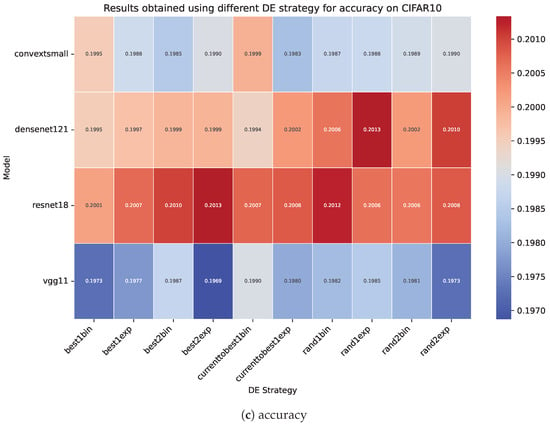

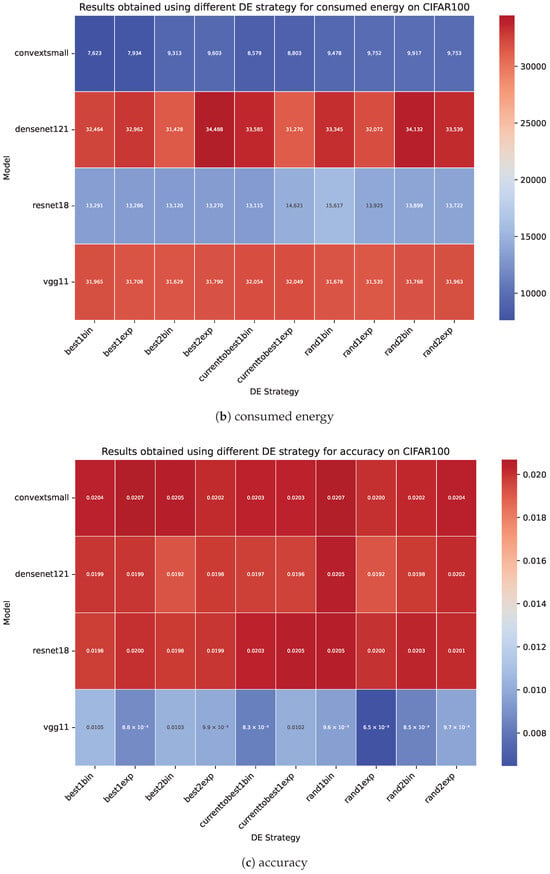

Figure 8 shows the statistical measure of the mean for each metric in the initial phase, and it is divided into three subplots (a) elapsed time, (b) consumed energy, and (c) accuracy for the DE strategies; these values were measured in 10 runs on the CIFAR10 dataset and are grouped by ML model. Figure 9 shows the same means of the selected DE strategies measured on the CIFAR100 dataset. Moreover, if we have a well-tuned ML model with appropriate weights, and the hyperparameters are poorly tuned, we possibly achieve worse ML performance over key metrics. Furthermore, the results obtained from the ResNet18, VGG11, ConvNeXtSmall, and DenseNet121 ML models with a batch size of 256 on both datasets show that, possibly, more efficient DE strategies consumed fewer resources, as the longer execution time possibly resulted in an increase the consumption of resources, and vice versa. A shorter execution time possibly resulted in lower resource consumption. The results on the CIFAR10 dataset are presented in Table A1, Table A2, Table A3 and Table A4, while the results on the CIFAR100 dataset are presented in Table A5, Table A6, Table A7 and Table A8.

Figure 8.

The statistical measures of the mean for the elapsed time (a), consumed energy (b), and accuracy (c) from the results obtained from 10 runs of 15 epochs of ML models that were hyperoptimized using the different DE strategies presented in Table A1, Table A2, Table A3 and Table A4. The x-axis represents the evaluated DE strategies, while the y-axis shows the ML models used.

Figure 9.

The statistical measures of the mean for the elapsed time (a), consumed energy (b), and accuracy (c) from the results obtained from 10 runs of 15 epochs of ML models that were hyperoptimized using the different DE strategies presented in Table A5, Table A6, Table A7 and Table A8. The x-axis represents the evaluated DE strategies, while the y-axis shows the ML models used.

4.2. Discussion of the Aggregated Statistics

Aggregate statistical analysis using non-parametric Friedman tests and corresponding procedures for the computational results of the DE strategies was performed on the ResNet18, VGG11, ConvNeXtSmall, and DenseNet121 ML models and on the CIFAR10 and CIFAR100 datasets [27,115]. Control tests were performed on the metrics of elapsed time, consumed energy, and accuracy using custom extraction scripts and publicly available code for statistics [116]. The p-value threshold was set to 0.05, and significant differences were detected and marked (†). This analysis was conducted to determine whether the DE strategies, ML models, and their architectures or a combination thereof had a statistically significant impact and if there were significant differences in key metrics for the computational efficiency. The results of the statistical analysis are presented in Table 10, Table 11, Table 12, Table 13, Table 14, Table 15, Table 16, Table 17 and Table 18.

Table 10.

Statistical analysis of elapsed time in the DE strategies using a non-parametric Friedman test with the maximum rank distribution and corresponding post hoc procedures (rejected, as marked in bold and with † sign, at a statistical value below the threshold of 0.005555555555555556 for Bonferroni–Dunn, 0.05 for Holm and Hommel, 0.025 for Hochberg and Rom, 0.050000000000000044 for Holland and Finner, and 2.9216395384872545 × 10−17 for Li, respectively).

Table 11.

Statistical analysis of energy consumed in the DE strategies using the non-parametric Friedman test with the minimum rank distribution and corresponding post hoc procedures (rejected, as marked in bold and with † sign, at a statistical value below the threshold at 0.005555555555555556 for Bonferroni–Dunn, 0.016666666666666666 for Holm and Hommel, 0.0125 for Hochberg, 0.016952427508441503 for Holland, 0.013109375000000001 for Rom, 0.044570249746389234 for Finner, and 0.011483473500591115 for Li, respectively).

Table 12.

Statistical analysis of the accuracy in the DE strategies using the non-parametric Friedman test with the minimum rank distribution and corresponding post hoc procedures (rejected, as marked in bold and with † sign, at a statistical value below the threshold at 0.005555555555555556 for Bonferroni–Dunn, Holm, and Hommel, 0.005683044988048058 for Holland and Finner, and 7.7532015972022 ×10−4 for Li, respectively).

Table 13.

Statistical analysis of the elapsed time in the DE strategies using the non-parametric Friedman test with the maximum rank distribution and corresponding post hoc procedures (rejected, as marked in bold and with † sign, at a statistical value below the threshold of 0.005555555555555556 for Bonferroni–Dunn, Holm, and Hommel, 0.005683044988048058 for Holland and Finner, and 0.015161939437253264 for Li, respectively).

Table 14.

Statistical analysis of the energy consumed in the DE strategies using the non-parametric Friedman test with the minimum rank distribution and corresponding post hoc procedures (rejected, as marked in bold and with † sign, at a statistical value below the threshold of 0.005555555555555556 for Bonferroni–Dunn, Holm, and Hommel, 0.005683044988048058 for Holland and Finner, and 0.014435245666076669 for Li, respectively).

Table 15.

Statistical analysis of the accuracy in the DE strategies using the non-parametric Friedman test with the minimum rank distribution and corresponding post hoc procedures (rejected, as marked in bold and with † sign, at a statistical value below the threshold of 0.005555555555555556 for Bonferroni–Dunn and Hochberg, 0.00625 for Holm and Hommel, 0.006391150954545011 for Holland, 0.005843911024153359 for Rom, 0.011333792975759982 for Finner, and 0.03180542233195395 for Li, respectively).

Table 16.

Statistical analysis of the elapsed time in the DE strategies using the non-parametric Friedman test with the maximum rank distribution and corresponding post hoc procedures (rejected, as marked in bold and with † sign, at a statistical value below the threshold of 0.005555555555555556 for Bonferroni–Dunn, 0.0125 for Holm and Hommel, 0.01 for Hochberg, 0.012741455098566168 for Holland, 0.010515350115740741 for Rom, 0.039109465610866256 for Finner, and 0.010841964200380961 for Li, respectively).

Table 17.

Statistical analysis of the energy consumed in the DE strategies using the non-parametric Friedman test with the maximum rank distribution and corresponding post hoc procedures (rejected, as marked in bold and with † sign, at a statistical value below the threshold of 0.005555555555555556 for Bonferroni–Dunn, 0.0125 for Hochberg, 0.016666666666666666 for Holm and Hommel, 0.016952427508441503 for Holland, 0.013109375000000001 for Rom, 0.044570249746389234 for Finner, and 0.011898018581242242 for Li, respectively).

Table 18.

Statistical analysis of the accuracy in the DE strategies using the non-parametric Friedman test with the minimum rank distribution and corresponding post hoc procedures (rejected, as marked in bold and with † sign, at a statistical value below the threshold of 0.005555555555555556 for Bonferroni–Dunn, Holm and Hommel, 0.005683044988048058 for Holland, 0.005683044988048058 for Finner, and 0.0010964059917403937 for Li, respectively).

Table 10 presents a statistical analysis of the elapsed time in the DE strategies using a non-parametric Friedman test, where the maximum rank highlights the advantage across the DE strategies, along with the corresponding post hoc procedures. The elapsed time in the DE strategies is significantly better than that of rand2exp on CIFAR10 when using AutoDEHypO according to the post hoc procedures of Holm, Hochberg, Hommel, Holland, and Finner. The Rom procedure shows a significant difference across DE strategies in comparison with rand2exp, with the exception of rand1exp. The Li procedure shows a significant difference across DE strategies in comparison with rand2exp, with the exception of rand2bin. Therefore, AutoDEHypO suggests that on CIFAR10, with the metric of elapsed time, the DE strategies best1bin, best1exp, currenttobest1bin, currenttobest1exp, best2exp, best2bin, rand1bin, rand1exp, and rand2bin are more suitable than rand2exp.

Table 11 presents a statistical analysis of the consumed energy in DE strategies using the non-parametric Friedman test, where the minimum rank highlights the advantage across DE strategies and corresponding post hoc procedures. The consumed energy in the DE strategies rand2bin, rand2exp, best2bin, rand1bin, best2exp, rand1exp, and currenttobest1exp is significantly better than in best1bin on CIFAR10 with AutoDEHypO according to the post hoc procedures of Holm, Hochberg, Hommel, Holland, and Rom. The Finner procedure shows that the DE strategies rand2bin, rand2exp, and best2bin are significantly better than rand2bin. Furthermore, rand1exp is significantly better than rand2bin according to the Holm, Hochberg, Hommel, and Holland procedures. The Li procedure shows significant difference across DE strategies in comparison with best1bin, with the exception of best1exp. Therefore, AutoDEHypO suggests that on CIFAR10, for the metric of consumed energy, the DE strategies rand2bin, rand2exp, best2bin, rand1bin, best2exp, rand1exp, and currenttobest1exp are more suitable than currenttobest1bin, best1exp, and best1bin.

Table 12 presents a statistical analysis of the accuracy in the DE strategies using the non-parametric Friedman test, where the highest rank highlights the advantage across DE strategies and corresponding post hoc procedures. The accuracy in the DE strategy rand1exp is significantly better than that in best1exp on CIFAR10 with AutoDEHypO according to the post hoc procedures of Holm, Holland, and Finner. Therefore, AutoDEHypO suggests that on CIFAR10, for the metric of accuracy, the DE strategy rand1exp is more suitable than best1exp, rand2bin, rand1bin, currenttobest1bin, rand2exp, best2bin, currenttobest1exp, best1bin, and best2exp.

Table 13 presents a statistical analysis of the elapsed time in the DE strategies using the non-parametric Friedman test, where the maximum rank highlights the advantage across DE strategies and corresponding post hoc procedures. The elapsed time in the DE strategy best1exp is significantly better than that in rand2bin on CIFAR100 with AutoDEHypO according to the post hoc procedures of Holm, Holland, and Finner. The Li procedure shows significant differences across DE strategies in comparison with rand2bin, with the exception of rand2exp. Therefore, AutoDEHypO suggests that on CIFAR100, for the metric of elapsed time, the DE strategy best1exp is more suitable than rand2bin, best2bin, best1bin, currenttobest1bin, currenttobest1exp, rand1bin, rand1exp, best2exp, and rand2exp.

Table 14 presents a statistical analysis of the energy consumed in the DE strategies using the non-parametric Friedman test, where the minimum rank highlights the advantage across DE strategies and corresponding post hoc procedures. The energy consumed in the DE strategy rand2bin is significantly better than that in best1bin on CIFAR100 with AutoDEHypO according to the post hoc procedures of Holm, Hommel, Holland, and Finner. The Li procedure shows significant differences across DE strategies in comparison with best1bin, with the exception of best1exp. Therefore, AutoDEHypO suggests that on CIFAR100, for the metric of consumed energy, the DE strategy rand2bin is more suitable than best1bin, rand1bin, rand2exp, best2exp, currenttobest1exp, rand1exp, currenttobest1bin, best2bin, and best1exp.

Table 15 presents a statistical analysis of the accuracy in the DE strategies using the non-parametric Friedman test, where the highest rank highlights the advantage across DE strategies and corresponding post hoc procedures. The accuracy in the DE strategy rand1bin is significantly better than that in rand1exp on CIFAR100 with AutoDEHypO according to the post hoc procedures of Holm, Hommel, Holland, Rom and Finner. The DE strategy best2bin is significantly better than rand1exp according to the procedures of Holm, Hommel, and Holland. The Li procedure shows significant differences across DE strategies in comparison with rand1exp, with the exception of best2bin. Therefore, AutoDEHypO suggests that on CIFAR100, for the metric of accuracy, the DE strategies rand1bin and rand2exp are more suitable than rand1exp, currenttobest1exp, currenttobest1bin, rand2bin, best1bin, best2exp, best1exp, and best2bin.

Table 16 presents a statistical analysis of the elapsed time in the DE strategies using the non-parametric Friedman test, where the maximum rank highlights the advantage across DE strategies and corresponding post hoc procedures. The elapsed time in the DE strategies best1exp and best1bin is significantly better than that in rand2bin on CIFAR10 and CIFAR100 with AutoDEHypO according to the post hoc procedures of Holm, Hochberg, Holland, Rom, and Finner. Additionally, the DE strategies currenttobest1bin, best2bin, and currenttobest1exp are significantly better than rand2bin according to the procedures of Holm, Hochberg, Hommel, Holland, and Rom. best2exp is significantly better than rand2bin according to the procedures of Holm, Hochberg, Hommel, and Holland. The Li procedure shows significant differences across DE strategies in comparison with rand2bin, with the exception of rand2exp. Therefore, AutoDEHypO suggests that on CIFAR10 and CIFAR100, for the metric of elapsed time, the DE strategies best1exp, best1bin, currenttobest1bin, best2bin, currenttobest1exp, and best2exp are more suitable than rand1bin, rand1exp, and rand2exp.

Table 17 presents a statistical analysis of the energy consumed in the DE strategies using the non-parametric Friedman test, where the maximum rank highlights the advantage across DE strategies and corresponding post hoc procedures. The energy consumed in the DE strategies best1bin, best1exp, currenttobest1bin, currenttobest1exp, best2bin, rand1exp, and best2exp is significantly better than that in rand2bin on CIFAR10 and CIFAR100 with AutoDEHypO according to the post hoc procedures of Holm, Hochberg, Hommel, Holland, and Rom. According to Finner’s post hoc procedure, the DE strategies best1bin, best1exp, and currenttobest1bin are significantly better than rand2bin. The Li procedure shows significant differences across DE strategies in comparison with rand2bin, with the exception of rand2exp. Therefore, AutoDEHypO suggests that on CIFAR10 and CIFAR100, for the metric of consumed energy, the DE strategies best1bin, best1exp, currenttobest1bin, currenttobest1exp, best2bin, rand1exp, and best2exp are more suitable than rand2bin, rand1bin, and rand2exp.

Table 18 presents a statistical analysis of the accuracy in the DE strategies using the non-parametric Friedman test, where the highest rank highlights the advantage across DE strategies and corresponding post hoc procedures. The accuracy in the DE strategy rand1bin is significantly better than that in best1exp on CIFAR10 and CIFAR100 with AutoDEHypO according to the post hoc procedures of Holm, Hommel, Holland, and Finner. The Li procedure shows significant differences across DE strategies in comparison with best1exp, with the exception of best2exp. Therefore, AutoDEHypO suggests that on CIFAR10 and CIFAR100, for the metric of accuracy, the DE strategy rand1bin is more suitable than best1exp, currenttobest1bin, rand2exp, rand2bin, currenttobest1exp, rand1exp, best1bin, best2bin, and best2exp.

As shown in Table 10, Table 11 and Table 12, according to Holm, Hochberg, Hommel, Holland, and Finner, on CIFAR10, several DE strategies perform significantly better than rand2exp, with additional confirmation from the Rom and Li procedures, except for rand2bin. The DE strategy best1exp runs significantly better than rand2bin, with confirmation from Li (with the exception of rand2exp). Furthermore, the energy consumption in the DE strategies rand2bin, rand2exp, best2bin, rand1bin, best2exp, rand1exp, and currenttobest1exp is significantly better than that in best1bin, with confirmation from Li, except for rand2exp. Table 16, Table 17 and Table 18 present results on both CIFAR10 and CIFAR100, where best1bin, best1exp, currenttobest1bin, currenttobest1exp, best2bin, rand1exp, and best2exp perform significantly better than rand2bin in terms of metrics according to the Li procedure, with exception of rand2exp. In terms of accuracy, best1exp outperforms rand1exp on CIFAR10. As shown in Table 13, Table 14 and Table 15, on CIFAR100, rand1bin achieves significantly better accuracy than that of rand1exp, with confirmation from Li, with the exception of best2bin. Across both datasets, rand1bin also demonstrates significantly better accuracy than that of best1exp, except for best2exp, according to the Li procedure.

Table 19 presents an overview of the outcomes of the statistical analysis and the aggregated number (counts) of confirmed out of 450, 184 (40.8%) significant differences in the DE strategies counting each post hoc procedure across the tree key metrics. These outcomes show and confirm significant differences in elapsed time, energy efficiency, and accuracy across the DE strategies. In addition to the elapsed time and accuracy, our operational data analytics also confirm the impact on energy efficiency when selecting DE strategies.

Table 19.

Overview of the outcomes of the statistical analysis for the metrics of elapsed time, energy efficiency, and accuracy in the DE strategies using the non-parametric Friedman test on both the CIFAR10 and CIFAR00 datasets.

As ML models depend on the input data characteristics and on the computational complexity of ML architecture designs, it is expected that some ML models are more suitable. To check this, we tested the suitability of some ML models by detecting differences in elapsed time, consumed energy, and accuracy. The aggregated test outcomes are seen in Table 20, where, for all three key metrics (elapsed time, consumed energy, and accuracy), it is evident from the Friedman rankings that the ResNet18 and ConvNextSmall ML models are ranked higher than the other two ML models, DenseNet121 and VGG11. These ranks are significantly different for the accuracy metric in the case of VGG11. For the metrics of elapsed time and consumed energy, in the case of VGG11, this is also significant. Moreover, significant differences from ML model DenseNet121 are also detected, i.e., these two (DenseNet121 and VGG11) require significantly more energy and time than ResNet18 and ConvNextSmall.

Table 20.

Friedman rankings detected differences in elapsed time, consumed energy, and accuracy aggregated across ML models. The individual rankings demonstrate significant differences using the Holm/Hochberg/Hommel, Holland, Rom, Finner, and Li procedures, with a few exceptions for the post hoc procedures (the Rom and Li procedures for time and energy detect these differences only when comparing VGG11 and DenseNet121 against ResNet18 and ConvNextSmall, i.e., for 41 (91.1%) out of 45 rankings in the post hoc procedures, we successfully detected significant differences).

Furthermore, significant differences between four ML models for 10 DE strategies (denoted as k) evaluations per each run result in 20 combinations (denoted as N), with critical value of from the the two-tailed Bonferroni-Dunn test for k is studentized range statistic at the significance level threshold of on their Friedman average ranks. To compare their ranks we calculated critical difference (CD) [117] of approximately . Additionally, we calculated confidence intervals (CI) based on CD, which detected significant difference in 11 (61.11%) out of 18 pairwise comparisons. The results are presented in Table 21.

Table 21.

Ranks and confidence intervals for key metrics elapsed time, consumed energy, and accuracy across ML Models ResNet18, ConvNextSmall, DenseNet121, and VGG11 on CIFAR10 and CIFAR100 datasets.

5. Conclusions and Future Work

In this article, we presented the deployment of a high-performance differential-evolution-based hyperparameter optimization workflow (AutoDEHypO) for energy efficiency and operational data analytics on multiple GPUs, where a DE algorithm with different DE strategies is demonstrated and applied in hyperparameter optimization while considering key factors such as the ML model, dataset, and DE strategy. The challenge of the optimization of ML models in HPC environments was addressed by balancing the ML accuracy, energy consumption, and resource utilization. Practical limitations such as project time constraints and the computational resources allocated to this project on a part of the national share of HPC Vega may have influenced the experiment through aspects such as node availability, high cluster utilization, shared node scheduling, elapsed time and partition (wall time) limitations, network congestion, job failures, undetected GPUs, and limited granularity in energy measurement at the node level. The utilization of computing resources had to be carefully considered, and the evaluation metrics and their scope must be taken into account. AutoDEHypO detected significant differences in the utilization of HPC resources in terms of elapsed time, energy efficiency, and ML accuracy across DE strategies. Furthermore, AutoDEHypO overcomes several limitations of existing workflows, which mostly focus on ML performance, inefficient utilization of computational resources during job allocation, and extended queue times; therefore, there is a lack of integration with HPC environments and schedulers such as Slurm and limited support for sustainability and cost-effectiveness. In addition to tracking the elapsed time and accuracy, our AutoDEHypO uses operational data analytics to determine how energy efficiently DE algorithms and DE strategies perform and confirms the impact on energy efficiency when selecting DE strategies. The analytics of DE strategies influenced the consumption of resources and indicated when the consumption of energy and other resources led to different ML performance and configurations. Furthermore, the statistical analysis of the key metrics of elapsed time, consumed energy, and accuracy demonstrated significant differences in DE strategies and ML models by using the non-parametric Friedman test and corresponding post hoc procedures for CIFAR10 and CIFAR100 datasets.

Specifically, in 10 independent runs, the DE binomial mutation strategies were applied and out of 450 comparisons, 184 (40.8%) detected significant differences between strategies. The effect of used DE strategies DE/rand/1/bin, DE/best/1/bin, DE/current-to-best/1/bin, DE/rand/2/bin, DE/best/2/bin, and exponential such as DE/rand/1/exp, DE/best/1/exp, DE/current-to-best/1/exp, DE/rand/2/exp, and DE/best/2/exp varied across differentent ML model architectures such as ResNet18, VGG11, ConvNeXtSmall and DenseNet121, on datasets CIFAR10 and CIFAR100.

Additionally, as an important outcome, the ML model comparisons show that there are 41 (91.1%) out of 45 rankings in the post hoc procedures, where AutoDEHypO successfully detected significant differences in all three key metrics in the case of the VGG11 ML model; for the metrics of elapsed time and consumed energy, there were further significant differences detected in the DenseNet121 and VGG11 models when compared with the better ML models of ConvNextSmall and ResNet18. Furthermore, the calculated confidence intervals based on critical difference of approximately , detected significant differences in 11 (61.11%) out of 18 pairwise comparisons.

Despite the insights and contributions provided by this article, there are a few limitations to acknowledge. This workflow is designed and optimized for deployment on a single cluster. Generalizing this workflow to another HPC environment may be challenging due to differences in architecture, scheduling policies, and energy monitoring solutions. Furthermore, within the proposed workflow, we used a basic DE algorithm implementation and limited this research to a few ML models, two datasets (CIFAR10 and CIFAR100), a single DE algorithm, 10 DE strategies, and limited runs of each epoch. While this setup was sufficient for initial exploration and experimentation, further research can be conducted. Moreover, this workflow can be adopted for other ML models, such as neural networks with adjustments in their architectures, different DE algorithms and DE strategies, population sizes, and different datasets, such as ImageNet, MNIST, and others; last but not least, more computational resources can be used. Possibilities have emerged for the evaluation and validation of other algorithms and AutoML frameworks to provide a baseline comparison with state-of-the-art AutoML frameworks and beyond. The necessary validation and comparison for a different environment could take place within an additional experiment; this could include a comparison of the final results. System malfunctions may also be resolved through fault-tolerance mechanisms such as Checkpoint and Restart (CR) without affecting the obtained results, allowing one to continue after unexpected interruptions. Additionally, we can apply CR inside the DE loop, where we can continue from the last successfully saved and calculated generation. Furthermore, a real-time feedback mechanism for adaptive and dynamic changes within ML operations has not yet been implemented and may contribute to the workflow; this may be researched in the future. The prediction model and decision making based on historical data can contribute to ML performance, optimization, and energy efficiency. These improvements will not only strengthen the practical applicability of this workflow but also contribute to sustainability, and a reduction in the environmental footprint.

Author Contributions

Conceptualization, T.P. and A.Z.; methodology, T.P. and A.Z.; software, T.P. and A.Z.; validation, T.P. and A.Z.; formal analysis, T.P. and A.Z.; investigation, T.P. and A.Z.; resources, T.P. and A.Z.; data curation, T.P. and A.Z.; writing—original draft preparation, T.P. and A.Z.; writing—review and editing, T.P. and A.Z.; visualization, T.P. and A.Z.; supervision, T.P. and A.Z.; project administration, T.P. and A.Z.; funding acquisition, T.P. and A.Z. All authors have read and agreed to the published version of the manuscript.

Funding