Abstract

Mobile Crowdsensing (MCS) leverages smart devices in sensing networks to gather data. Because data collection consumes resources such as device power and network bandwidth, many users are reluctant to participate in MCS. It is therefore essential to design an effective incentive mechanism that encourages user participation and ensures the provision of high-quality data. Most current incentive mechanisms compensate users with monetary rewards, which leads users to demand higher prices to protect their profits; under the platform's budget constraint, only a limited number of users can then be selected. To address this issue, we propose a lottery-based incentive mechanism. The mechanism analyzes users' bids to design a winning probability model and a budget allocation model that incentivize users to lower their prices and improve their data quality. Within a given budget, the platform can thus engage more users in tasks and obtain higher-quality data. Compared with the ABSEE and BBOM mechanisms, the lottery incentive mechanism improves user participation by approximately 47–74% and platform profits by 14–66%.

MSC:

68W15; 91B32

1. Introduction

Mobile crowdsensing addresses the sensing requirements of the surrounding environment through mobile devices [1]. A diverse array of mobile crowdsensing systems has been developed and integrated into daily life, serving purposes such as environmental monitoring [2], real-time traffic condition assessment [3,4], and noise pollution monitoring [5]. In these scenarios, participants must expend resources, such as device power and network bandwidth, to support data collection and transmission during sensing tasks [6]. Because users expend resources, platforms often need to offer greater compensation, which constrains the number of users that can be engaged within a fixed budget. Moreover, providing high-quality data requires users to consume more resources, which reduces their potential profits. As a result, users may compromise data quality during task execution to minimize resource consumption. Data quality is pivotal for the platform's services. Substandard data not only fail to benefit the platform but also undermine overall data accuracy, impairing information extraction and analysis and ultimately producing unreliable outcomes [7]. Consequently, attracting users and obtaining high-quality data within budget constraints is crucial for the successful application of crowdsensing.

Existing incentive mechanisms fall into two categories: non-monetary incentive mechanisms [8,9,10,11,12,13,14,15,16,17,18,19,20,21,22] and monetary incentive mechanisms [6,23,24,25,26,27,28]. Most studies adopt monetary mechanisms because they apply to a wide range of practical scenarios and provide effective incentives [29]. These mechanisms compensate the resource consumption of mobile devices with direct monetary rewards [6,23,24,25,26,27,28], often determined by the quality of the submitted data. Non-monetary mechanisms motivate participants through means other than money, such as reputation-based incentives and social network mechanisms. Reputation mechanisms motivate participants according to their historical reputation: by accumulating reputation values, participants enhance their status, which produces the incentive effect. Social network mechanisms disseminate tasks through social networks and recruit selected workers to complete them. However, these studies still rely on fixed rewards to compensate users for resource consumption, which limits the number of users the platform can select under its budget constraint.

To address these problems, this paper proposes a lottery-based incentive mechanism that motivates user participation and improves sensing data quality. The mechanism reconstructs the user utility function and establishes a winning probability model and a budget allocation scheme. It further examines how winning probabilities and budget allocations influence users' data quality strategies, motivating users to improve data quality voluntarily in order to secure greater rewards. Consequently, within a fixed budget, the lottery-based incentive mechanism not only enables the platform to select more users but also encourages them to improve their data quality, thereby increasing platform profits.

The rest of the paper is organized as follows: Section 2 reviews related work on incentive mechanisms in crowdsensing. Section 3 describes the lottery-based incentive mechanism in detail. Section 4 validates the proposed model through simulation experiments and compares it with traditional incentive mechanisms.

2. Related Work

Because this paper designs an incentive mechanism for data quality and user participation, this review also focuses on these two aspects.

2.1. Non-Monetary Incentive Mechanism for Data Quality and User Participation

Non-monetary incentive mechanisms generally select users using reputation records [8,9,10,11,12,13,14,15] or social network mechanisms [16,17,18,19,20,21,22]. The online incentive mechanisms in [11,12] integrate users' historical reputation records with evaluation models to select users and thereby improve data quality. Reference [10] proposes a crowdsensing task selection algorithm and reward allocation incentive mechanism based on the Reputation Evaluation model (CTSRE), which uses reputation-weighted reward allocation to encourage users to participate actively. Ref. [13] designs an incentive mechanism based on historical reputation that attracts high-quality users through a more fine-grained reputation evaluation scheme, thereby achieving fairer reward distribution. Ref. [14] combines a model for predicting user task quality with historical reputation to calculate each user's direct reputation and evaluate his or her importance in the sensing team. In [15], low-cost, high-quality users are selected to perform tasks; their reputations are then updated and their rewards are paid according to the evaluated quality of the data they provide, which encourages them to keep providing high-quality data. Under the mechanisms of [12,13,14,15], however, new users without a historical reputation may find it difficult to obtain tasks or may receive lower rewards, which can dampen their enthusiasm and reduce both system activity and the rate at which new users join.

In [19], to address insufficient participation in MCS systems with a limited number of workers, social networks are used to recruit workers by combining epidemic models with the task propagation and completion processes. To deal with malicious workers in crowdsensing social networks, ref. [20] proposes the ZPV-SRE framework to improve the utility of the platform. Ref. [21] proposes a multi-task, multi-issuer mobile crowdsensing mechanism based on Stackelberg game theory to promote user participation. Ref. [22] increases users' willingness to complete tasks by leveraging the social relationships between users and designs rewards based on data quality. In [19,20,21,22], however, even workers invited through social relationships remain highly heterogeneous in their willingness to participate: they differ in task interests, trust, and sensitivity to rewards, so a single propagation mechanism can hardly mobilize all of them.

2.2. Monetary Incentive Mechanism for Data Quality and User Participation

Ref. [6] proposes a dynamic payment control mechanism for uncertain tasks and privacy-sensitive bidding, which builds a lightweight auction model that drives high-quality data submission at minimal social cost. However, because payments are adjusted dynamically, workers may observe payment trends and game the system (for example, by deliberately delaying bids or falsifying costs), which reduces system stability and can even cause payment fluctuations or manipulation. Ref. [23] proposes a two-stage, hybrid sensing-driven cost–quality collaborative payment framework that recruits opportunistic vehicles through a reverse auction and combines this with a participatory vehicle trajectory scheduling algorithm based on SAC reinforcement learning to dynamically optimize sensing coverage quality and fairness. However, because the reverse auction and trajectory scheduling are two sequential stages whose recruitment and scheduling strategies are optimized independently, the framework may settle in a local optimum: vehicles that are cheap but hard to schedule may be selected in the auction stage, which greatly complicates the subsequent trajectory scheduling. Ref. [24] proposes a full-stage, quality-driven dynamic payment control mechanism that dynamically screens users likely to submit high-quality data in the long term and adjusts payment weights according to quality assessment results, so that high-quality contributors obtain excess utility. However, once the system in [24] identifies certain users as high-quality, they may gradually reduce their effort while continuing to enjoy overpayment based on their historical reputation. Ref. [25] uses the drift-plus-penalty (DPP) technique of Lyapunov optimization to handle fairness requirements, ensuring continuous user participation and high-quality data. In real systems, however, payment must be minimized, quality maximized, and fairness ensured simultaneously, which makes the design of the penalty function very complicated.

Ref. [26] proposes a quality-driven online task bundling incentive mechanism that combines task profits and reward accounts to increase participants' willingness and obtain high-quality data. Under [26], however, users eager to complete bundled tasks quickly and collect the reward may take shortcuts and submit low-quality or sloppy data. Ref. [27] proposes a deep learning prediction scheme based on sparse MCS for predicting unsensed data: it first restores the current complete sensing map with deep matrix factorization and then captures spatiotemporal correlations to predict the unsensed data, thereby improving data quality. However, when there are very few initial participants, the map restored by matrix factorization is of poor quality, and the subsequent spatiotemporal prediction built on erroneous recovered data further amplifies the error, causing the predictions to deviate from reality. When data are unevenly distributed across the crowdsensing area, existing algorithms ignore differences in information between blocks and still require the central server to sample uniformly from each block stored on the mobile devices, which lowers overall reconstruction accuracy. To solve this problem, ref. [28] proposes an adaptive sampling allocation strategy that analyzes the statistical information of each block in depth and helps the central server adaptively determine the number of measurements to collect from each block, thereby improving data quality. Although this strategy adapts better to uneven data distributions than uniform sampling, it still suffers from large initial statistical errors and slow dynamic adaptation.

The above references have studied incentive mechanisms based on data quality and user participation. Still, most of them motivate user participation by offering fixed rewards to compensate for the resource consumption incurred during task execution. This results in a limited number of users being selected under platform budget constraints, which may compromise the quality of sensing data. To solve these issues, this paper designs a lottery-based incentive mechanism to convince users to lower their prices so that the platform can recruit more users to participate in the task with a specific budget. At the same time, it treats users’ data quality as a key factor in reward allocation, motivating users who prefer high-risk, high-reward outcomes to further enhance their data quality.

Section 3 analyzes how the objective winning probability and the budget allocation affect users' data quality strategies and pricing, establishes the model's winning probability and budget allocation schemes, and presents the specific process by which the mechanism motivates users to participate in tasks and improve data quality.

3. The Lottery-Based Incentive Mechanism

3.1. Physical Model

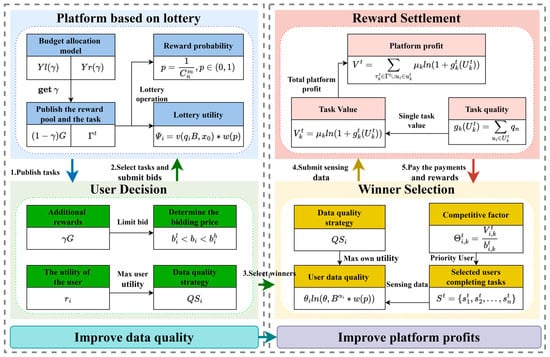

Figure 1 shows the physical model and execution process of mobile crowdsensing with a lottery-based incentive mechanism. The mobile crowdsensing system consists of two main parts: (i) the platform, which issues tasks, selects participants, and rewards them for their data; and (ii) the participants, who use their mobile devices to execute tasks.

Figure 1. The physical model.

Figure 1 also shows the basic flow of the mechanism; one round runs from task publication to reward payment. In step 1, the platform publishes the set of tasks for the t-th round, and the users present in that round form the user set. In step 2, each user selects tasks according to its own conditions, the task locations, and the reward rules, and submits a bid; the bids of all users in the t-th round form the bid set. In step 3, the platform selects users to perform the tasks, and the selected users form the winner set of round t. Participants consume resources while performing tasks, and the users' costs in the t-th round form the cost set. In step 4, the platform pays rewards, whose total never exceeds the budget; the users' utilities in the t-th round form the utility set. The remaining basic parameters of this paper are listed in Table 1.

Table 1. Parameter table.

3.2. Design of the Lottery-Based Incentive Mechanism

3.2.1. Mapping of the Lottery Model

This section develops a lottery model that introduces lotteries into the mobile crowdsensing system. To aid understanding, Table 2 maps a real-life lottery onto the lottery model in mobile crowdsensing.

Table 2. Mapping between a real-life lottery and the lottery model in crowdsensing.

In a real-world lottery, participants typically choose m numbers from a pool of n to form a ticket. In a lottery-based mobile crowdsensing system, the platform first publishes the crowdsensing task, including the task content, the bonus pool allocation rules, and the bonus pool size. Users select tasks of interest based on their own situation, the task locations, and the reward rules. Each user then specifies a data quality strategy, i.e., decides how many resources to invest in improving data quality, determines a bid from this strategy and the utility model, and submits it to the platform. The user then performs the task. After the task is completed, the platform issues the user a set of lottery numbers and draws the prize according to the lottery rules; a user holding the matching numbers receives the reward. Figure 2 translates this mapping into a system flow.

Figure 2. Logic model of the lottery incentive mechanism.

Figure 2 presents the logical process of the lottery-based data quality incentive mechanism for mobile crowdsensing. In step 1, the platform publishes the crowdsensing task and divides the budget between direct rewards and the bonus pool; Theorem 3 gives the details of this division. In step 2, users form their data quality strategies, determine their bids from the utility model, and submit both to the platform; at this point, the platform evaluates how the winning probability affects the lower limit of users' bids. In step 3, after users submit their data quality strategies and bids, the platform determines the winner set from them, giving priority to tasks with high value weights. In step 4, the winners submit their sensing data. In step 5, the platform pays out the rewards and bonuses and calculates the total platform utility from the data quality that users submitted.

3.2.2. Bonus Pool and User Utility

Definition 1 (objective reward probability).

While participating in a task, a user has a chance of winning a reward. The probability that the number issued by the platform matches the winning lottery number is the user's objective reward probability p, given by Formula (1), where $C_n^m$ is the number of combinations of m numbers chosen from n:

$$p = \frac{1}{C_n^m}, \qquad C_n^m = \frac{n!}{m!\,(n-m)!} \quad (1)$$
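Formula (1) can be checked with a short simulation. The sketch below assumes our reading of Definition 1: the user holds one ticket of m distinct numbers and the platform draws m of n numbers uniformly at random. It computes p exactly with `math.comb` and compares it with a Monte Carlo estimate; `objective_probability` and `simulate_match_rate` are hypothetical helper names, not part of the paper.

```python
import math
import random


def objective_probability(n: int, m: int) -> float:
    """Formula (1): one ticket of m numbers out of n wins with probability 1 / C(n, m)."""
    return 1 / math.comb(n, m)


def simulate_match_rate(n: int, m: int, trials: int, seed: int = 0) -> float:
    """Estimate the match probability by repeatedly drawing m of n numbers."""
    rng = random.Random(seed)
    numbers = range(1, n + 1)
    ticket = set(rng.sample(numbers, m))  # the user's lottery numbers
    # Each draw is a fresh set of m numbers; count draws that equal the ticket.
    hits = sum(set(rng.sample(numbers, m)) == ticket for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    print(objective_probability(10, 3))  # C(10, 3) = 120
    print(simulate_match_rate(6, 2, 100_000), objective_probability(6, 2))
```

Because p is fixed by the integers n and m, the platform controls the objective reward probability only through the lottery rules it announces.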

The platform's budget is denoted by G and is split into two parts: one part is assigned to the bonus pool, and the other is used to pay users for completing tasks. Formula (2) relates the budget to the bonus pool, where γ ∈ (0, 1) is the budget allocation coefficient, i.e., the proportion of the total budget allocated to the bonus pool; the bonus pool therefore amounts to γG.

With budget allocation coefficient γ, the amount γG of the budget G forms the bonus pool, and the remaining (1 − γ)G is used to select users to perform tasks and pay them rewards equal to their bids. Because users differ in experience and equipment, each user has an ability value, and users with different abilities incur different costs to provide the same data quality. This paper therefore models each user's cost as a function of its data quality over a bounded quality domain, as shown in Formula (3).

A user's utility is the reward it receives for participating in tasks minus the cost of performing them.

In a traditional crowdsensing system, a user that is selected to perform a sensing task becomes a winner, and its utility is the price paid by the platform minus the cost of performing the task; a user that is not selected has zero utility. The utility of user $u_i$ in a traditional crowdsensing system is therefore given by Formula (4), where $W$ is the set of winners, $b_i$ is the reward the user expects to obtain for performing a task (its bid), and $c_i$ is its cost:

$$U_i = \begin{cases} b_i - c_i, & u_i \in W \\ 0, & u_i \notin W \end{cases} \quad (4)$$

Definition 2 (lottery utility).

In a lottery-based crowdsensing system, the platform provides a set of lottery numbers after the user completes a task. Therefore, in addition to the traditional utility, a user obtains utility from the lottery numbers, which is called the lottery utility.

In a lottery crowdsensing system, a user's utility is therefore the sum of its traditional utility and its lottery utility, as given in Formula (5).

To incentivize users to improve data quality, the platform ties rewards to the data quality users provide and issues rewards accordingly. A user's lottery utility is the product of the subjective value of the reward and the subjective probability of winning, a formulation also used in [30,31,32]. The expression is shown in Formula (6).

In Formula (6), the subjective value is given by the value function of loss aversion theory, which evaluates the possible gain of an event relative to a reference point [33,34].

The probability weighting function in prospect theory describes how humans subjectively perceive objective probabilities when making risky decisions [35]. An event with objective probability p is perceived with subjective probability w(p). For low-probability events, the subjective probability tends to exceed the objective probability; for high-probability events, it is typically lower. The expression is shown in Formula (7):

$$w(p) = \frac{p^{\delta}}{\left(p^{\delta} + (1-p)^{\delta}\right)^{1/\delta}} \quad (7)$$

Here, δ is the probability weighting coefficient. It takes the value 0.61 for gains and 0.69 for losses.
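As a sketch, assuming Formula (7) has the standard Tversky–Kahneman form w(p) = p^δ / (p^δ + (1 − p)^δ)^(1/δ) with δ = 0.61 for gains, the function below evaluates the subjective probability and makes the over- and underweighting visible; `probability_weight` is a hypothetical name.

```python
def probability_weight(p: float, delta: float = 0.61) -> float:
    """Assumed Tversky-Kahneman weighting w(p) for an objective probability p in (0, 1)."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie in (0, 1)")
    numerator = p ** delta
    denominator = (p ** delta + (1.0 - p) ** delta) ** (1.0 / delta)
    return numerator / denominator


if __name__ == "__main__":
    # Small probabilities come out larger than p, large ones smaller than p.
    for p in (0.01, 0.1, 0.5, 0.9):
        print(f"p = {p:.2f} -> w(p) = {probability_weight(p):.4f}")
```

The overweighting of small p is what makes a low-probability lottery attractive to users; the monotonicity of w(p) on (0, 1) is the property used later in the proof of Theorem 2.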

According to the value function of loss aversion theory in behavioral economics [36,37], Formula (8) can be obtained.

In Formula (8), the exponent of the value function is the risk attitude coefficient of user $u_i$; a higher value indicates that $u_i$ has a greater risk appetite. According to loss aversion theory, its average value is 0.88. Because both the subjective value of a positive reward and the subjective probability of winning are positive, the lottery brings the user additional utility. Substituting Formula (8) into Formula (5) yields Formula (9).
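To illustrate why the lottery adds utility, the sketch below assumes that the gain branch of Formula (8) is v(x) = x^α with α = 0.88, that Formula (7) has the Tversky–Kahneman form, and that Formula (6) multiplies the two. It compares the resulting lottery utility with the objective expected prize; all function names are hypothetical.

```python
import math


def probability_weight(p: float, delta: float = 0.61) -> float:
    """Subjective probability w(p) in the assumed Tversky-Kahneman form."""
    return p ** delta / (p ** delta + (1.0 - p) ** delta) ** (1.0 / delta)


def value(x: float, alpha: float = 0.88) -> float:
    """Assumed gain branch of the value function, v(x) = x ** alpha for x >= 0."""
    return x ** alpha


def lottery_utility(p: float, prize: float, alpha: float = 0.88) -> float:
    """Formula (6) sketch: subjective value of the prize times subjective probability."""
    return probability_weight(p) * value(prize, alpha)


if __name__ == "__main__":
    p = 1 / math.comb(10, 3)  # objective probability from Formula (1)
    prize = 100.0
    print("objective expected prize:", p * prize)
    print("lottery utility:", lottery_utility(p, prize))
```

With p = 1/120 and a prize of 100, the concave value function shrinks the prize, but the overweighting of the small probability dominates, so the lottery utility exceeds the objective expected prize.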

Once their utility model is determined, users decide their data quality strategies and desired rewards (bids) according to their requirements.

3.2.3. Users' Data Quality Strategy and Bids

According to Formula (9), a user who improves its data quality receives a larger reward upon winning, but the cost of providing that quality also increases. There is therefore a data quality strategy that maximizes the user's utility, as stated in Theorem 1.

Theorem 1.

The user has a data quality strategy that maximizes its utility.

Proof of Theorem 1.

After Formula (3) is substituted into Formula (9), the user's utility becomes a function of data quality. Setting its partial derivative with respect to data quality to zero yields an equation containing both a power function and an exponential function, i.e., a transcendental equation. Transcendental equations generally have no closed-form solutions and can only be solved approximately by numerical substitution and iterative methods such as Newton's method, which do not yield an explicit expression in terms of the independent variable. To obtain an explicit expression for the solution, the average value 0.88 of the risk attitude coefficient is substituted into the calculation, and one term of the utility is replaced by an approximation valid for this value. Differentiating the utility with respect to data quality again and setting the derivative to zero then gives a single extreme value point. The derivative is positive to the left of this point and negative to its right, so the point is a maximum. Three cases follow from the domain of data quality. If the extreme value point lies to the left of the domain, user utility decreases monotonically on the domain and is maximized at its lower endpoint. If the extreme value point lies to the right of the domain, user utility increases monotonically on the domain and is maximized at its upper endpoint. If the extreme value point lies within the domain, user utility first increases and then decreases and is maximized at the extreme value point. □

In summary, each user has a data quality strategy that maximizes its utility; Theorem 1 establishes its existence, and its explicit expression is given in Formula (10).

According to Formula (10), the bonus pool affects the user's data quality strategy: the larger the bonus pool, the higher the user's optimal data quality.
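The three-case argument in the proof of Theorem 1 can be mirrored numerically. Because Formula (3) is not reproduced here, the sketch assumes a hypothetical utility f(q) = A·q^α − e^(βq), where A > 0 lumps together the subjective winning probability and the reward scale (so a larger bonus pool means a larger A) and e^(βq) stands in for the cost of quality q. For 0 < α < 1, f'(q) is strictly decreasing, so we use bisection on f'(q) = 0 instead of Newton's method; bisection is guaranteed to converge once the root is bracketed. `optimal_quality` is a hypothetical name.

```python
import math


def optimal_quality(A: float, alpha: float, beta: float,
                    q_lo: float, q_hi: float, tol: float = 1e-10) -> float:
    """Maximize the hypothetical utility f(q) = A*q**alpha - exp(beta*q) on [q_lo, q_hi].

    Requires A > 0, 0 < alpha < 1, beta > 0 and 0 < q_lo < q_hi, so that
    f'(q) is strictly decreasing and f has at most one stationary point.
    """
    def df(q: float) -> float:
        return A * alpha * q ** (alpha - 1) - beta * math.exp(beta * q)

    if df(q_lo) <= 0:   # stationary point at or left of the domain: f decreases
        return q_lo
    if df(q_hi) >= 0:   # stationary point at or right of the domain: f increases
        return q_hi
    lo, hi = q_lo, q_hi  # stationary point inside the domain: bisect on f'(q) = 0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if df(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    print(optimal_quality(2.0, 0.88, 1.0, 0.1, 5.0))
    print(optimal_quality(4.0, 0.88, 1.0, 0.1, 5.0))  # larger "bonus" scale A
```

Doubling A moves the optimum to a higher quality, which is the qualitative effect of a larger bonus pool stated after Formula (10).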

After determining its data quality strategy, the user bids on the tasks of interest, submitting the chosen data quality strategy and the reward it desires for performing the task. The user bids a price that keeps its utility greater than zero, so its price must satisfy Formula (11).

Rearranging Formula (11) gives the condition on the user's price in Formula (12).

The bound in Formula (12) is the lowest price the user can accept, called the lower limit of price. At the same time, when bidding for a task, the user must consider its chance of being selected as a winner, so its price also has an upper limit, which is related to the cost of performing the task. The upper limit of price satisfies Formula (13).

In Formula (13), the upper limit of price is the highest price a user can require, and the limiting factor is the degree to which the platform allows users to increase their bids. The platform evaluates the quality of the data users provide, infers their costs, and compares these with their bids; if a user's bid exceeds the permitted range, no compensation is paid. A user's price must satisfy both the upper and lower limits and must also be greater than zero, which gives the price range in Formula (14).
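A minimal sketch of the price range in Formula (14), under stated assumptions: the lower limit is the cost minus the lottery utility (our reading of Formulas (11) and (12)), the lottery utility uses the assumed Tversky–Kahneman weighting and v(x) = x^0.88, and the upper limit is taken to be (1 + ε)·cost with ε the limiting factor. That last form of Formula (13) is a hypothetical placeholder, as are the function names.

```python
def probability_weight(p: float, delta: float = 0.61) -> float:
    """Subjective probability w(p) in the assumed Tversky-Kahneman form."""
    return p ** delta / (p ** delta + (1.0 - p) ** delta) ** (1.0 / delta)


def price_range(cost: float, prize: float, p: float, epsilon: float,
                alpha: float = 0.88) -> tuple:
    """Return (lower, upper) limits of a user's bid.

    lower: cost minus the lottery utility, floored at 0 because the price
           must be positive (so the lower bound itself is exclusive).
    upper: hypothetical form (1 + epsilon) * cost for Formula (13).
    """
    lottery_utility = probability_weight(p) * prize ** alpha
    lower = max(cost - lottery_utility, 0.0)
    upper = (1.0 + epsilon) * cost
    return lower, upper


if __name__ == "__main__":
    for p in (1 / 120, 1 / 20):
        print(p, price_range(cost=10.0, prize=100.0, p=p, epsilon=0.2))
```

With these illustrative numbers, raising p from 1/120 to 1/20 lowers the lower limit while leaving the upper limit unchanged, which is the effect Theorem 2 formalizes.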

According to Formula (12), the subjective reward probability affects the lower limit of the user's price, and the subjective probability is a function of the objective probability. Section 3.2.4 therefore discusses the effect of the objective reward probability on the lower limit of price.

3.2.4. The Effect of Objective Reward Probability on the Lower Bound of Price

In a traditional crowdsensing system, the user's price must satisfy Formula (15), i.e., it cannot be lower than the cost of performing the task.

In the lottery crowdsensing system, the lower limit of price is given by Formula (12). Theorem 2 shows how the objective reward probability affects this lower limit by comparing the lower limits of a user's price at two objective reward probabilities, $p_1$ and $p_2$.

Theorem 2.

For a given bonus pool, if $p_1 < p_2$, the lower limit of price at $p_1$ is higher than that at $p_2$. That is, the higher the objective reward probability, the lower the lower limit of the price.

Proof of Theorem 2.

In the lottery-based mechanism, the lower limit of price in Formula (12) is the cost minus the lottery utility. For a given bonus pool, proving that the lower limit at $p_1$ exceeds that at $p_2$ therefore reduces to proving that the lottery utility at $p_1$ is smaller than that at $p_2$. Because the subjective value of the reward is positive and does not depend on p, it suffices to prove that the probability weighting function w(p) of Formula (7) is monotonically increasing on (0, 1). Writing w(p) as the quotient of its numerator and denominator and differentiating, the denominator of the derivative is positive for p ∈ (0, 1), so only the sign of the numerator needs to be checked. Substituting the expressions from Formula (7) reduces this to an inequality that holds for p ∈ (0, 1) with the weighting coefficients used here. Therefore, w(p) is monotonically increasing, and the lower limit of price at $p_1$ is higher than at $p_2$. □

Theorem 2 proves that the higher the objective reward probability, the lower the lower limit of the user's bid. By introducing probabilistic rewards, the lottery-based mechanism therefore reduces users' bids, allowing the platform to recruit more participants under a fixed budget and thereby improving user engagement and platform profits. However, the objective reward probability has an upper limit: if the platform has N users in a round, the expected number of lottery winners in that round must not exceed one, as shown in Formula (16):

$$N p \le 1 \quad (16)$$

According to Formula (1), the objective reward probability is determined by the number of combinations of n and m, so Formula (16) can be rewritten as Formula (17), where N is the number of users in the round:

$$\frac{N}{C_n^m} \le 1 \quad (17)$$

Because the objective reward probability depends on the integers n and m through Formula (1), it takes discrete rather than continuous values as n and m vary. When publishing the reward rules, the platform must therefore announce n and m, which determine the objective reward probability.
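Combining Theorem 2 (a higher p lowers users' price floor) with Formula (17), a platform would pick the largest feasible p, i.e., the smallest C(n, m) that is still at least N. The brute-force sketch below does this; the cap `n_max` on the size of the number pool is a hypothetical parameter (without any cap, n = N and m = 1 trivially give p = 1/N), and `choose_lottery` is a hypothetical name.

```python
import math


def choose_lottery(num_users: int, n_max: int):
    """Pick (n, m, p) with the largest p = 1 / C(n, m) such that num_users * p <= 1.

    Formula (17) is equivalent to C(n, m) >= num_users. Ties are broken by
    smaller n, then smaller m, because the loops visit pairs in that order.
    """
    best = None
    for n in range(1, n_max + 1):
        for m in range(1, n + 1):
            c = math.comb(n, m)
            if c >= num_users and (best is None or c < best[0]):
                best = (c, n, m)
    if best is None:
        raise ValueError("no (n, m) with n <= n_max satisfies Formula (17)")
    c, n, m = best
    return n, m, 1 / c


if __name__ == "__main__":
    n, m, p = choose_lottery(num_users=100, n_max=20)
    print(n, m, p, "expected winners:", 100 * p)
```

For 100 users and at most 20 numbers, the smallest admissible combination count is C(15, 2) = 105, so the expected number of winners stays just below one; the gap between 100 and 105 reflects the discreteness of p noted above.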

Once the platform fixes the reward probability, the expected lottery utility attracts more users to participate in the task. At the same time, however, some users may receive rewards despite providing low-quality data. It is therefore necessary to discuss how to select users and how to allocate the platform's budget.

3.2.5. Winner Selection

In the t-th round, the quality of each task is given by Formula (18); higher values indicate higher task quality.

Formula (18) sums over the set of users who bid on the task, and each term is the data quality provided by one user in that set; the task quality is thus the total data quality provided by all these users. Drawing on the definition of task value in [37], the value of a task completed in the t-th round is given by Formula (19); higher values indicate higher data quality for the task.

Here, the task weight reflects the task's importance: the higher the weight, the more valuable it is to complete the task. Task value is logarithmic in task quality, which captures diminishing marginal utility. Because task quality sums over the participating users, task value increases with both the number of participating users and the quality of the data they provide.

The platform's profit is the sum of the values of all completed tasks, as shown in Formula (20).
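Formulas (18) to (20) can be sketched together. The paper states only that task value is logarithmic in task quality; the concrete form weight·ln(1 + quality) below is a hypothetical choice that keeps the value at zero when no data are submitted. The dictionary layout and function names are also assumptions.

```python
import math


def task_quality(qualities):
    """Formula (18): task quality is the sum of the data quality of its users."""
    return sum(qualities)


def task_value(weight, qualities):
    """Hypothetical log form of Formula (19): weight * ln(1 + task quality)."""
    return weight * math.log1p(task_quality(qualities))


def platform_profit(tasks):
    """Formula (20): profit is the sum of the values of all completed tasks.

    `tasks` maps a task id to (weight, list of data quality values).
    """
    return sum(task_value(w, qs) for w, qs in tasks.values())


if __name__ == "__main__":
    tasks = {"t1": (2.0, [0.5, 0.7]), "t2": (1.0, [0.9])}
    print(platform_profit(tasks))
    # Diminishing marginal value: each extra unit of quality adds less.
    print(task_value(1.0, [1.0]) - task_value(1.0, []),
          task_value(1.0, [1.0, 1.0]) - task_value(1.0, [1.0]))
```

The second printed pair shows the diminishing marginal utility: the first unit of quality adds ln 2 to the task value, the second only ln 3 − ln 2.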

For each task in the t-th round, consider the set of users who bid on the task and the set of prices they offer. Combining Formulas (18) and (19), the value created by these users for the task is given by Formula (21).

In Formula (21), each term depends on the data quality a user provides when completing the task; the higher the data quality, the higher the value created.

The ratio of the value created by a user to its bid price is defined as the user's competitiveness factor, given in Formula (22).

According to Formula (22), the more value a user creates and the lower its price, the higher its competitiveness factor. Among the users who bid on a task, the platform gives priority to those with high competitiveness factors; the users it selects form the winner set.
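A minimal greedy sketch of winner selection, assuming that each bid is ranked by its competitiveness factor (value created divided by price, Formula (22)), that winners are paid their bids, and that the platform keeps accepting bids while the direct-payment budget (1 − γ)G covers them. Treating every (user, task) bid independently and skipping bids that no longer fit are our assumptions; `Bid` and `select_winners` are hypothetical names.

```python
from typing import List, NamedTuple, Tuple


class Bid(NamedTuple):
    user: str
    task: str
    value: float   # value the user creates for the task (Formula (21))
    price: float   # the user's bid


def select_winners(bids: List[Bid], budget: float, gamma: float) -> Tuple[List[str], float]:
    """Rank bids by competitiveness value/price and accept them while the
    direct-payment budget (1 - gamma) * budget allows; return winners and total paid."""
    remaining = (1.0 - gamma) * budget
    winners, paid = [], 0.0
    for bid in sorted(bids, key=lambda b: b.value / b.price, reverse=True):
        if bid.price <= remaining:  # winners are paid exactly their bids
            winners.append(bid.user)
            remaining -= bid.price
            paid += bid.price
    return winners, paid


if __name__ == "__main__":
    bids = [Bid("u1", "t1", 30.0, 10.0), Bid("u2", "t1", 20.0, 20.0),
            Bid("u3", "t2", 50.0, 25.0), Bid("u4", "t2", 35.0, 40.0),
            Bid("u5", "t2", 10.0, 50.0)]
    print(select_winners(bids, budget=100.0, gamma=0.2))
```

In this example, 80 of the 100 units fund direct payments: u1, u3, and u2 are accepted in order of competitiveness, after which u4's bid of 40 no longer fits the remaining 25. Lower bids, which the lottery encourages, therefore let more users fit into the same budget.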

3.2.6. Budget Allocation

The larger the budget (1 − γ)G used for user selection, the more users can be recruited and the higher the platform's profit. At the same time, according to the platform profit function in Formula (20), the platform's profit increases with the data quality users provide, and according to Theorem 1, data quality increases with the lottery reward. A larger bonus pool therefore encourages more users to participate and to provide higher-quality data, which also increases platform profit. Since both components can increase the platform's profit, the remaining question is how to split the budget between them to maximize profit.

With γ denoting the fraction of the budget allocated to the bonus pool, γG is used as the lottery reward and (1 − γ)G is used to reward users directly. At the global level, we consider the total number of tasks performed in a round and the mean values of the task weights, the users' risk attitudes, and the users' ability values. Theorem 3 then gives the budget allocation coefficient γ that relatively maximizes the platform's profit.

Theorem 3.

Under suitable parameter conditions, there exists an allocation coefficient γ ∈ (0, 1) that satisfies the first-order condition of the platform profit function and at which the platform's profit attains a relative maximum.

Proof of Theorem 3.

To relate the budget allocation coefficient γ to the platform profit, we express the variables of the platform profit function in terms of γ. Let K denote the total number of tasks performed in a round. Combining Formulas (19) and (20), the profit is the sum over these tasks of each task's weight times the logarithmic value of its quality. Let b denote the average bid of users. With (1 − γ)G of the budget available for selecting participants, the platform recruits on average (1 − γ)G/b users, i.e., (1 − γ)G/(bK) users per task. Substituting this number of participants into Formula (18), the task quality is the number of participants per task multiplied by the data quality each provides. Users provide data quality according to the strategy of Theorem 1; keeping the part of Formula (10) that depends on γ expresses the data quality, and hence the task quality and the platform profit, as functions of γ. Taking the partial derivative of the profit with respect to γ and setting it to zero, the factors that are always greater than zero can be divided out, which leaves an equation equating two functions of γ. The solution of this equation is the allocation coefficient at which the platform profit is relatively maximized. □

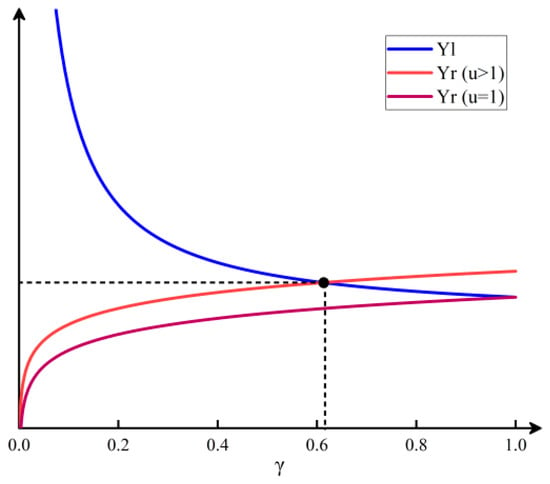

Figure 3 shows the graphs of the function with and . The two functions intersect at when ; when , they intersect at . To the left of the intersection point, is greater than and the derivative is greater than zero; to the right of it, is less than and the derivative is less than zero. Therefore, there exists a γ at which the derivative is zero, and the platform profits are relatively maximized there.
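The sign-change argument can be checked on a toy model. The functions below are hypothetical stand-ins, not the paper's Formulas (18)–(20): per-user data quality is assumed to rise with γ as q(γ) = √γ (mirroring the trend observed in Section 4.1.1), and the number of selected users is assumed proportional to the selection budget (1 − γ). Their product has a positive derivative to the left of an interior point and a negative one to the right, so a ternary search over [0, 1] recovers the maximizer. The budget of 500 and average bid of 2.0 are illustrative values.

```python
import math


def toy_quality(gamma: float) -> float:
    """Hypothetical per-user data quality, increasing in gamma."""
    return math.sqrt(gamma)


def toy_participants(gamma: float, budget: float = 500.0, avg_bid: float = 2.0) -> float:
    """Hypothetical participant count, proportional to the selection budget."""
    return (1.0 - gamma) * budget / avg_bid


def toy_profit(gamma: float) -> float:
    """Toy platform profit: participants times quality (illustrative only)."""
    return toy_participants(gamma) * toy_quality(gamma)


def ternary_search_max(f, lo: float, hi: float, iters: int = 200) -> float:
    """Locate the maximizer of a unimodal function f on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if f(m1) < f(m2):
            lo = m1  # maximizer lies to the right of m1
        else:
            hi = m2  # maximizer lies to the left of m2
    return (lo + hi) / 2


gamma_star = ternary_search_max(toy_profit, 0.0, 1.0)
print(f"toy maximizer: {gamma_star:.4f}")  # analytic optimum of this toy model is 1/3
```

For this toy profit 250(1 − γ)√γ, setting the derivative to zero gives γ = 1/3; the location of the optimum in the real mechanism depends on the actual quality and participation functions, which is why Section 4 estimates it by simulation.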

Figure 3.

Function images of and .

Theorem 3 proves the existence of a budget allocation coefficient that maximizes the platform’s profit. Next, simulation experiments will be conducted to analyze the values of the budget allocation coefficient under different environments and their impact on the platform’s profits.

4. Simulation Experiments

This section describes the simulation of the proposed lottery incentive mechanism, implemented with the Repast simulation tool, and compares it with the ABSEE mechanism [36] and the BBOM mechanism [37] in terms of user participation and platform profits. In the design of the ABSEE mechanism, data quality determines the platform utility; by specifying winner selection rules and payment determination rules, ABSEE accurately estimates the quality perceived from users and thereby achieves high platform utility. The BBOM mechanism is a budget-feasible dual-objective incentive mechanism derived through a series of problem transformations and function optimizations in a dual-objective optimization setting, which improves the platform utility.

Section 4.1.1 discusses the effect of the allocation coefficient on data quality, Section 4.1.2 its effect on user participation, and Section 4.1.3 the effects on platform profits of the allocation coefficient combined with the platform budget and with the user number. To ensure fairness, the lottery incentive mechanism, ABSEE, and BBOM are evaluated in the same experimental environment with the same basic parameters, which are listed in Table 3.

Table 3.

Experimental parameter settings.

4.1. Discussion of Coefficients

Before comparing the mechanisms, we examine how several coefficients affect the results and use these observations to determine the optimal ranges of these coefficients.

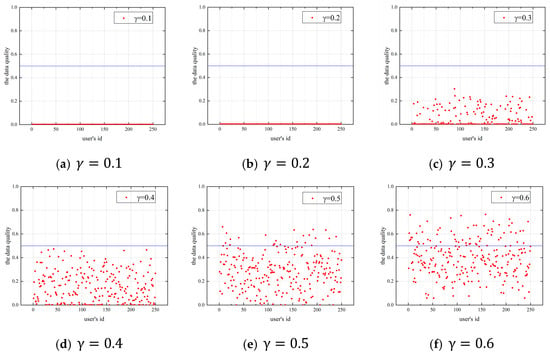

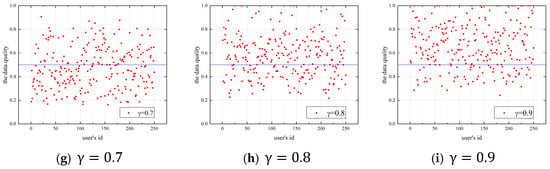

4.1.1. Impact of the Budget Allocation Coefficient on Users’ Data Quality

Figure 4 shows how the budget allocation coefficient γ, varied from 0.1 to 0.9, affects users' data quality under the lottery incentive mechanism, with 250 users and a platform budget of 500. The horizontal axis is the user ID and the vertical axis is the user's data quality; the blue line marks a data quality of 0.5 as a reference, making changes in data quality easier to see.

Figure 4.

Effect of the budget allocation coefficient on data quality, (a) γ = 0.1, (b) γ = 0.2, (c) γ = 0.3, (d) γ = 0.4, (e) γ = 0.5, (f) γ = 0.6, (g) γ = 0.7, (h) γ = 0.8, (i) γ = 0.9.

As shown in Figure 4a,b, most users' data quality is 0 when the allocation coefficient is 0.1 or 0.2. Because the platform allocates only a small share of its budget to the bonus pool, the pool is too small to attract users; they choose not to participate in the task, so their data quality is 0. As the allocation coefficient, and hence the bonus pool, increases, users with high ability values and risk preferences begin to participate in the task, as shown in Figure 4c,d.

In Figure 4e–g, where γ is 0.5–0.7, the bonus pool is large enough to attract most users to participate in the task. The bonus pool grows with γ, and a user's reward is positively correlated with the data quality the user provides. Hence, the data quality strategy chosen by users increases with the allocation coefficient γ.

As shown in Figure 4h,i, users provide higher-quality data when the budget allocation coefficient is 0.8–0.9. However, because the platform's budget is fixed, allocating more to the bonus pool leaves less for selecting users; that is, the budget allocation coefficient governs both the bonus pool and the budget for selecting participants. The larger the budget for selecting participants, the more users the platform can select and the more users participate in the task. The following section discusses the effect of the allocation coefficient on the number of participants in the task.

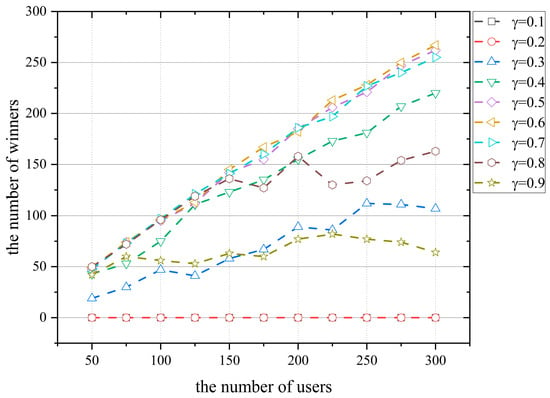

4.1.2. Impact of the Budget Allocation Coefficient on User Participation

This section examines the effect of the budget allocation coefficient on user participation. Figure 5 shows how the allocation coefficient affects the number of participants in the task under the lottery incentive mechanism when the platform budget is 500.

Figure 5.

Effect of the budget allocation coefficient on the number of winners.

Figure 5 shows that the number of winners is 0 when the allocation coefficient is 0.1 or 0.2; for larger coefficients, the number of winners generally increases with the number of users on the platform. Because users differ in their risk attitude coefficients and ability values, the lottery reward is not equally attractive to all of them. As the number of users increases, so does the number of users attracted by the lottery reward, and hence the number of winners.

As shown in Figure 5, with 200 users, the numbers of winners for budget allocation coefficients of 0.7, 0.8, and 0.9 are 198, 123, and 58, respectively; that is, in this range the number of winners decreases as the allocation coefficient increases. This is because, as the bonus pool grows, the platform's budget for selecting users shrinks, so the platform can select fewer users.

In general, as the allocation coefficient γ increases, the number of winners and user participation first increase and then decrease, peaking when the allocation coefficient is 0.6–0.7.

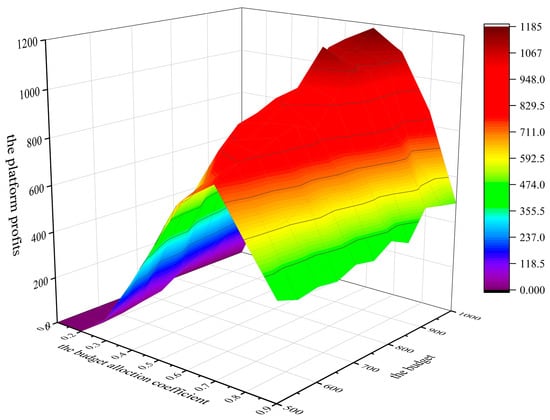

4.1.3. Impact of the Platform Budget and User Number on Platform Profits

Sections 4.1.1 and 4.1.2 discuss the impact of the allocation coefficient on data quality and user participation. For a given budget, allocating more to the bonus pool raises data quality and thus platform profits, whereas allocating more to selecting participants lets the platform select more users, which also raises platform profits. Because both portions come from the same budget, the allocation coefficient γ determines the trade-off between these two effects. This section discusses the impact of the allocation coefficient γ, the platform budget, and the user number on platform profits.

Figure 6 shows the effect of allocation coefficient and platform budget on platform profits when the number of platform users is 250.

Figure 6.

The effect of the allocation coefficient and platform budget on platform profits.

Figure 6 shows that when the budget allocation coefficient is 0.7, the platform profits are 772, 972, and 1096 for platform budgets of 500, 800, and 1000, respectively; that is, for a constant budget allocation coefficient, the platform profits increase with the platform budget. With γ fixed, a larger platform budget enlarges the lottery reward used to attract users, which raises the data quality users provide, and also enlarges the budget for selecting users, which raises user participation. Since the platform's profits depend on both the data quality provided by users and user participation, they increase with the platform budget.

Figure 6 also shows that, for a platform budget of 1000 and budget allocation coefficients of 0.4, 0.5, 0.6, 0.7, and 0.8, the platform profits are 935, 1119, 1183, 1096, and 861, respectively. When the coefficient is 0.4–0.6, increasing it allocates more reward to the bonus pool, so users provide higher data quality, while the budget for selecting users is still sufficient to select most users; hence the platform profits increase. When the coefficient is 0.7–0.8, users still provide higher data quality as the coefficient increases, but the remaining budget for selecting users is insufficient, so fewer users participate in the task and the platform profits decrease. This is consistent with Theorem 3, proved in Section 3.2.6. At a budget of 1000, the platform profits peak at 1183 with a budget allocation coefficient of 0.6.
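The rise-then-fall pattern can be read directly off the reported numbers. The Python snippet below uses only the five profit values stated above for a platform budget of 1000; it picks the best-performing coefficient among the tested values and shows the sign change in successive differences, a discrete analogue of the derivative argument in Theorem 3. It does not estimate the true optimum between grid points.

```python
# Platform profits reported for Figure 6 at a platform budget of 1000 (250 users).
reported_profits = {0.4: 935, 0.5: 1119, 0.6: 1183, 0.7: 1096, 0.8: 861}

# Coefficient with the highest reported profit among the tested values.
best_gamma = max(reported_profits, key=reported_profits.get)
print(best_gamma, reported_profits[best_gamma])

# Successive differences: positive while profits rise, negative once they fall.
gammas = sorted(reported_profits)
diffs = [reported_profits[b] - reported_profits[a] for a, b in zip(gammas, gammas[1:])]
print(diffs)  # [184, 64, -87, -235]
```

The single change of sign between 0.6 and 0.7 matches the interior maximum predicted by Theorem 3.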

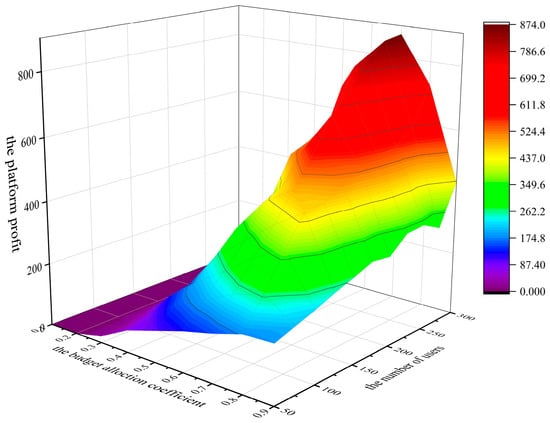

Figure 7 shows the impact of the allocation coefficient and the user number on platform profits when the budget is 500. With a budget allocation coefficient of 0.6 and 100, 200, and 300 users on the platform, the platform profits are 258, 545, and 801, respectively. Thus, for a fixed budget allocation coefficient, platform profits increase with the number of users. When the platform budget and the allocation coefficient are fixed, the bonus pool amount is fixed; because users differ in their risk attitude coefficients and ability values, a larger user population contains more users who are attracted by the lottery reward. Therefore, more users participate in tasks, and the platform's profits increase accordingly.

Figure 7.

Effect of the budget allocation coefficient and user number on platform profits.

In Figure 7, when the number of users is 250, the platform profits first increase and then decrease as the allocation coefficient grows. As described in Sections 4.1.1 and 4.1.2, when the budget allocation coefficient reaches 0.8, users provide higher-quality data, but the platform has a smaller budget for selecting users, so fewer users are chosen to participate in the task and the platform profits decrease. This is consistent with Theorem 3, proved in Section 3.2.6.

This section has examined the effect on platform profits of the budget allocation coefficient combined with the platform budget and with the user number. As shown in Figures 6 and 7, a budget allocation coefficient of 0.6–0.7 relatively maximizes the platform profits.

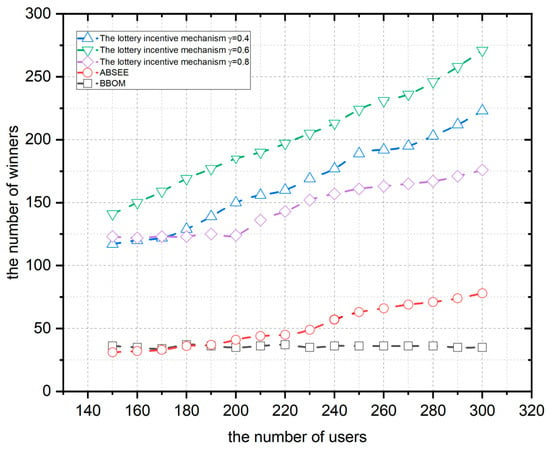

4.2. Experimental Comparison

In this section, the lottery incentive mechanism is compared with the ABSEE and BBOM mechanisms in terms of user participation and platform profits. Figure 8 shows the number of winners under the lottery incentive mechanism, ABSEE, and BBOM for a platform budget of 500 and 150–300 users. User participation is defined as the ratio of the number of winners to the number of users.

Figure 8.

Comparison of user participation.

As shown in Figure 8, the number of winners increases with the number of users for both the lottery incentive mechanism and ABSEE, whereas it fluctuates for BBOM. Because of BBOM's marginal value calculation method, its number of winners does not keep rising as the number of participants increases but fluctuates depending on the specific circumstances. Even with a poorly performing allocation coefficient, the lottery incentive mechanism outperforms ABSEE and BBOM. For example, with 200 users and budget allocation coefficients of 0.4 and 0.8, the numbers of winners are 125 and 151, corresponding to user participation of 0.625 and 0.755, which are still higher than those of ABSEE (0.2) and BBOM (0.175).
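Because user participation is defined as the ratio of winners to users, the figures quoted above can be reproduced with simple arithmetic. The Python sketch below uses only numbers stated in this paragraph; the function name `user_participation` is illustrative.

```python
def user_participation(num_winners: int, num_users: int) -> float:
    """User participation as defined in Section 4.2: winners divided by users."""
    if num_users <= 0:
        raise ValueError("num_users must be positive")
    return num_winners / num_users


# Values reported for 200 users and a platform budget of 500.
lottery = {0.4: user_participation(125, 200), 0.8: user_participation(151, 200)}
baselines = {"ABSEE": 0.2, "BBOM": 0.175}

for gamma, rate in lottery.items():
    # Absolute gap in participation between the lottery mechanism and each baseline.
    gaps = {name: round(rate - base, 3) for name, base in baselines.items()}
    print(f"gamma={gamma}: participation={rate:.3f}, gap vs baselines={gaps}")
```

Even the weaker of the two lottery settings (γ = 0.4) exceeds both baselines by more than 0.4 in absolute participation.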

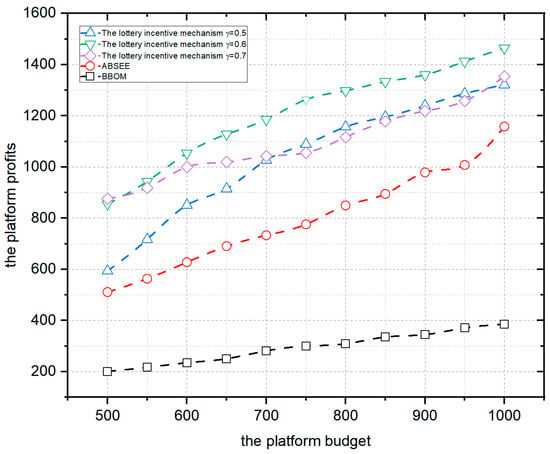

Figure 9 compares the platform profits of the lottery incentive mechanism, ABSEE, and BBOM with 300 users and platform budgets of 500–1000. The horizontal axis is the platform budget, and the vertical axis is the platform profits.

Figure 9.

Comparison of platform profits.

As shown in Figure 9, the platform profits of the lottery incentive mechanism, the ABSEE mechanism, and the BBOM mechanism all increase with the platform budget. As described in Section 4.1.3, a larger platform budget increases both the bonus pool and the budget for selecting participants, which raises user participation and the quality of submitted data and thus the platform profits. The lottery incentive mechanism outperforms both ABSEE and BBOM. For example, with 300 users, a platform budget of 800, and a budget allocation coefficient of 0.7, the lottery incentive mechanism achieves platform profits of 1116, compared with 848 for ABSEE and 309 for BBOM under the same conditions.

5. Conclusions

Inspired by real-life lotteries, this paper designs a lottery-based incentive mechanism. By analyzing user bids, it designs the winning probability and budget allocation of the lottery model to encourage users to lower their bids and participate in tasks under a given budget. The reward allocation is also designed to encourage users to improve their data quality spontaneously. The experiments on the impact of the budget allocation coefficient on user data quality and user participation show that a higher coefficient yields higher user data quality, and that user participation is highest when the coefficient is between 0.6 and 0.7. Compared with ABSEE and BBOM in the same experimental environment, the lottery-based incentive mechanism improves user participation by about 47–74% and platform profits by about 14–66%. In future work, incorporating more accurate and low-cost data quality assessment methods is an important direction for further improving system performance.

Author Contributions

X.H. and S.S. designed the project and drafted the manuscript. Z.L. and J.L. wrote the code and performed the analysis. All authors participated in finalizing the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Tong, F.; Zhou, Y.; Wang, K.; Cheng, G.; Niu, J.; He, S. A privacy-preserving incentive mechanism for mobile crowdsensing based on blockchain. IEEE Trans. Dependable Secur. Comput. 2024, 21, 5071–5085.

2. Wang, H.; Tao, J.; Chi, D.; Gao, Y.; Wang, Z.; Zou, D.; Xu, Y. A preference-driven malicious platform detection mechanism for users in mobile crowdsensing. IEEE Trans. Inf. Forensics Secur. 2024, 19, 2720–2731.

3. Silva, M.; Signoretti, G.; Oliveira, J.; Silva, I.; Costa, D.G. A crowdsensing platform for monitoring of vehicular emissions: A smart city perspective. Future Internet 2019, 11, 13.

4. Ye, S.; Zhao, L.; Xie, W. Crowd bus sensing: Resolving conflicts between the ground truth and map apps. IEEE Trans. Mob. Comput. 2022, 23, 1097–1111.

5. Marche, C.; Perra, L.; Nitti, M. Crowdsensing and Trusted Digital Twins for Environmental Noise Monitoring. In Proceedings of the 2024 IEEE International Mediterranean Conference on Communications and Networking (MeditCom), Madrid, Spain, 8–11 July 2024; pp. 535–540.

6. Jiang, X.; Ying, C.; Li, L.; Düdder, B.; Wu, H.; Jin, H.; Luo, Y. Incentive Mechanism for Uncertain Tasks under Differential Privacy. IEEE Trans. Serv. Comput. 2024, 17, 977–989.

7. Liu, J.; Shao, J.; Sheng, M.; Xu, Y.; Taleb, T.; Shiratori, N. Mobile crowdsensing ecosystem with combinatorial multi-armed bandit-based dynamic truth discovery. IEEE Trans. Mob. Comput. 2024, 23, 13095–13113.

8. Tang, X.; Liu, J.; Li, K.; Tu, W.; Xu, X.; Xiong, N.N. IIM-ARE: An Effective Interactive Incentive Mechanism based on Adaptive Reputation Evaluation for Mobile Crowd Sensing. IEEE Internet Things J. 2025.

9. Yang, H.; Yang, C.; Wu, Q.; Yang, W. Reputation Based Privacy-Preserving in Location-Dependent Crowdsensing for Vehicles. In Proceedings of the 2024 International Conference on Networking and Network Applications (NaNA), Yinchuan, China, 9–12 August 2024; pp. 236–241.

10. Li, Q.; Cao, H.; Wang, S.; Zhao, X. A reputation-based multi-user task selection incentive mechanism for crowdsensing. IEEE Access 2020, 8, 74887–74900.

11. Zhang, J.; Li, X.; Shi, Z.; Zhu, C. A reputation-based and privacy-preserving incentive scheme for mobile crowd sensing: A deep reinforcement learning approach. Wirel. Netw. 2024, 30, 4685–4698.

12. Cui, H.; Liao, J.; Yu, Z.; Xie, Y.; Liu, X.; Guo, B. Trust assessment for mobile crowdsensing via device fingerprinting. ISA Trans. 2023; in press.

13. Ding, L.; Tong, F.; Xing, F. IMFGR: Incentive Mechanism With Fine-Grained Reputation for Federated Learning in Mobile Crowdsensing. In Proceedings of the 2024 International Conference on Artificial Intelligence of Things and Systems (AIoTSys), Hangzhou, China, 17–19 October 2024; pp. 1–8.

14. Liu, H.; Zhang, C.; Chen, X.; Tai, W. Optimizing Collaborative Crowdsensing: A Graph Theoretical Approach to Team Recruitment and Fair Incentive Distribution. Sensors 2024, 24, 2983.

15. Cai, X.; Zhou, L.; Li, F.; Fu, Y.; Zhao, P.; Li, C.; Yu, F.R. An Incentive Mechanism for Vehicular Crowdsensing with Security Protection and Data Quality Assurance. IEEE Trans. Veh. Technol. 2023; in press.

16. Ji, G.; Zhang, B.; Zhang, G.; Li, C. Online incentive mechanisms for socially-aware and socially-unaware mobile crowdsensing. IEEE Trans. Mob. Comput. 2023, 23, 6227–6242.

17. Wang, P.; Li, Z.; Long, S.; Wang, J.; Tan, Z.; Liu, H. Recruitment from social networks for the cold start problem in mobile crowdsourcing. IEEE Internet Things J. 2024, 11, 30536–30550.

18. Gao, Y.; Liu, W.; Guo, J.; Gao, X.; Chen, G. A dual-embedding based DQN for worker recruitment in spatial crowdsourcing with social network. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, Washington, DC, USA, 14–18 July 2024; pp. 1670–1679.

19. Wang, Z.; Huang, Y.; Wang, X.; Ren, J.; Wang, Q.; Wu, L. Socialrecruiter: Dynamic incentive mechanism for mobile crowdsourcing worker recruitment with social networks. IEEE Trans. Mob. Comput. 2020, 20, 2055–2066.

20. Wang, P.; Long, S.; Liu, H.; Jiang, K.; Deng, Q.; Li, Z. Propagation verification under social relationship privacy awareness in mobile crowdsourcing. IEEE Trans. Mob. Comput. 2024, 23, 12461–12476.

21. Esmaeilyfard, R.; Moghisi, M. An incentive mechanism design for multitask and multipublisher mobile crowdsensing environment. J. Supercomput. 2023, 79, 5248–5275.

22. Gao, H.; An, J.; Zhou, C.; Li, L. Quality-Aware Incentive Mechanism for Social Mobile Crowd Sensing. IEEE Commun. Lett. 2022, 27, 263–267.

23. Wang, Z.; Cao, Y.; Zhou, H.; Wu, L.; Wang, W.; Min, G. Fairness-aware two-stage hybrid sensing method in vehicular crowdsensing. IEEE Trans. Mob. Comput. 2024, 23, 11971–11988.

24. Zhang, M.; Li, X.; Miao, Y.; Luo, B.; Ma, S.; Choo, K.-K.R.; Deng, R.H. Oasis: Online all-phase quality-aware incentive mechanism for MCS. IEEE Trans. Serv. Comput. 2024, 17, 589–603.

25. Montori, F.; Bedogni, L. Privacy preservation for spatio-temporal data in Mobile Crowdsensing scenarios. Pervasive Mob. Comput. 2023, 90, 101755.

26. Yu, R.; Oguti, A.M.; Ochora, D.R.; Li, S. Towards a privacy-preserving smart contract-based data aggregation and quality-driven incentive mechanism for mobile crowdsensing. J. Netw. Comput. Appl. 2022, 207, 103483.

27. Wang, E.; Zhang, M.; Cheng, X.; Yang, Y.; Liu, W.; Yu, H.; Wang, L.; Zhang, J. Deep Learning-Enabled Sparse Industrial Crowdsensing and Prediction. IEEE Trans. Ind. Inform. 2021, 17, 6170–6181.

28. Liu, X.; Zhou, S.; Peng, J.; Yu, J.; He, Y.; Zhang, W. Adaptive sampling allocation for distributed data storage in compressive CrowdSensing. IEEE Internet Things J. 2023, 11, 12022–12032.

29. Wu, E.; Peng, Z. Research Progress on Incentive Mechanisms in Mobile Crowdsensing. IEEE Internet Things J. 2024, 11, 24621–24633.

30. Li, D.; Li, C.; Deng, X.; Liu, H.; Liu, J. Familiar paths are the best: Incentive mechanism based on path-dependence considering space-time coverage in crowdsensing. IEEE Trans. Mob. Comput. 2024, 23, 9304–9323.

31. Liao, G.; Chen, X.; Huang, J. Prospect theoretic analysis of privacy-preserving mechanism. IEEE/ACM Trans. Netw. 2019, 28, 71–83.

32. Sun, L.; Zhan, W.; Hu, Y.; Tomizuka, M. Interpretable modelling of driving behaviors in interactive driving scenarios based on cumulative prospect theory. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019.

33. Bickel, W.K.; Green, L.; Vuchinich, R.E. Behavioral economics. J. Exp. Anal. Behav. 1995, 64, 257.

34. Kahneman, D.; Tversky, A. Prospect theory: An analysis of decision under risk. In Handbook of the Fundamentals of Financial Decision Making: Part I; MacLean, L., Ziemba, W., Eds.; World Scientific: Singapore, 2013; pp. 99–127.

35. Prelec, D. The probability weighting function. Econometrica 1998, 66, 497–527.

36. Song, B.; Shah-Mansouri, H.; Wong, V.W. Quality of sensing aware budget feasible mechanism for mobile crowdsensing. IEEE Trans. Wirel. Commun. 2017, 16, 3619–3631.

37. Zhou, Y.; Tong, F.; He, S. Bi-objective incentive mechanism for mobile crowdsensing with budget/cost constraint. IEEE Trans. Mob. Comput. 2022, 23, 223–237.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).