1. Introduction

Emotions play an essential role in human daily life, profoundly influencing cognition, communication, and decision-making processes [1]. Among various physiological signals, electroencephalography (EEG) has emerged as a promising modality for emotion recognition due to its high temporal resolution, non-invasiveness, and real-time monitoring capabilities [2].

The applications of EEG-based emotion recognition span multiple domains. In automotive systems, emotion-aware driver assistance is considered a potential approach to enhance road safety [3]. Within clinical neurology, identifying emotional responses to specific stimuli can facilitate the diagnosis of affective disorders such as post-traumatic stress disorder (PTSD) [4] and depression [5]. Therapeutic interventions including robot-assisted and music-assisted therapies also benefit from emotion detection. In information retrieval, emotion recognition enables affective computing applications such as implicit tagging of multimedia content [6]. Healthcare applications include early detection of negative emotional states during social media consumption [7]. The gaming industry utilizes emotion recognition to adapt game dynamics [8], while virtual reality systems leverage emotional states to enhance educational outcomes [9].

Although EEG-based emotion recognition holds great promise, many challenges remain in moving the technology from the laboratory to daily life. Traditional research-grade EEG equipment provides high-quality signals; however, it requires complex setup procedures, is uncomfortable to wear, and offers limited mobility [10]. Compared with research-grade devices, commercial wireless EEG devices offer improved portability and cost-effectiveness for personal applications [11]. Nevertheless, even the most affordable single-channel devices remain expensive for personal use (e.g., NeuroSky MindWave at USD 199) [12].

Moreover, commercial systems exhibit technical limitations. Compared with research-grade equipment, they may trade signal quality for cost-effectiveness. For instance, a commercial device may show an increased bandwidth in the α band (8–13 Hz): the Fp1 channel power spectrum of the MindWave is generally similar to that of medical systems, but the bandwidth is slightly increased [10]. Other researchers found that mismatch negativity (MMN) waveforms captured by an Emotiv device exhibited a lower signal-to-noise ratio, and that the waveforms from half of the subjects differed from those recorded with medical-grade devices [13].

These potential signal quality issues make emotion recognition with commercial EEG devices considerably more challenging. To reconcile high performance with low cost and portability, we developed a robust emotion feature extractor for the proposed device that compensates for the performance degradation typically observed when conventional methods are applied to portable systems. Our main contributions include the following:

A modular wireless EEG acquisition system supporting Wi-Fi communication with adjustable sampling rates (250–1000 Hz), compatible with both dry/wet electrodes, featuring plug-and-play operation, and built at a components cost of under USD 35.

A robust self-supervised feature extractor (EmoAdapt), which derives discriminative features from general EEG features by integrating contrastive learning and masked prediction tasks, thereby reducing the influence of EEG signal quality on recognition performance.

Experiments showing that the system, through the synergy of hardware and software, achieves effective emotion recognition, with a maximum cross-session accuracy of 60.2% and a Macro-F1 score of 59.4% on the proposed platform. Compared with conventional feature-based approaches, it improves accuracy by up to 20.4% using a multilayer perceptron (MLP) classifier in our experiment.

2. Related Works

EEG-based emotion recognition systems require tight integration between signal acquisition hardware and data processing algorithms. In this section, we review related work in both domains. We begin by introducing common methods for recognizing emotions from EEG signals. Then, we review EEG acquisition systems, including commercial-grade devices and self-designed platforms.

2.1. EEG-Based Emotion Features

EEG features for emotion computing are primarily categorized into time-domain, frequency-domain, and time–frequency-domain features. Time-domain features are relatively easy to extract and mainly include event-related potentials [14], higher-order zero-crossing analysis [15], and Hjorth parameters [16]. Frequency-domain features are the most widely used in emotion computing because of their high correlation with psychological activities. Through a Fourier transform, signals are generally decomposed into five frequency bands: δ (1–3 Hz), θ (4–7 Hz), α (8–13 Hz), β (14–30 Hz), and γ (31–50 Hz). From these bands, frequency-domain features are extracted, mainly power spectral density (PSD), differential entropy (DE), differential asymmetry (DASM), rational asymmetry (RASM), and energy spectrum (ES); PSD and DE features have proven particularly effective in emotion recognition systems [17,18,19]. Because the Fourier transform operates across the entire time domain, time–frequency-domain features are employed to capture both global and local signal characteristics. In addition to conventional features, numerous techniques have been proposed to further improve feature performance. Techniques such as feature smoothing and linear dynamic systems (LDS) [20] are typically applied to the features of an entire trial to remove emotion-irrelevant components. Domain adaptation (DA) methods learn domain-invariant features, thereby improving emotion recognition across sessions or subjects [17]. However, none of the conventional feature extraction methods mentioned above can effectively address the challenges associated with low-SNR signals acquired by resource-constrained EEG systems.
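As a rough illustration of the band-wise features described above, the following Python sketch computes the band-limited variance (band power) of a single-channel signal with a naive DFT and converts it to differential entropy using the closed form for a Gaussian signal, 0.5 ln(2πeσ²). It is a minimal sketch under stated assumptions, not the feature pipeline used in this work: the function names are hypothetical, the band edges are those listed above, and a practical implementation would rely on an FFT library with windowing or band-pass filtering.

```python
import cmath
import math

# Band edges in Hz, as listed in the text.
BANDS = {"delta": (1, 3), "theta": (4, 7), "alpha": (8, 13),
         "beta": (14, 30), "gamma": (31, 50)}


def band_variances(x, fs):
    """Return the variance contributed by each band (naive DFT, Parseval)."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]
    out = {}
    for name, (lo, hi) in BANDS.items():
        var = 0.0
        # Visit only positive-frequency bins that fall inside the band.
        for k in range(1, n // 2):
            f = k * fs / n
            if lo <= f <= hi:
                coeff = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                            for i, v in enumerate(centered))
                # Factor 2 accounts for the mirrored negative-frequency bin.
                var += 2.0 * abs(coeff) ** 2 / (n * n)
        out[name] = var
    return out


def differential_entropy(variance):
    """DE of a Gaussian signal with the given variance (must be > 0)."""
    return 0.5 * math.log(2.0 * math.pi * math.e * variance)


# Usage: a unit-amplitude 10 Hz sine sampled at 128 Hz for one second.
fs = 128
signal = [math.sin(2 * math.pi * 10 * i / fs) for i in range(fs)]
powers = band_variances(signal, fs)
alpha_de = differential_entropy(powers["alpha"])
```

In this toy case nearly all of the variance falls in the α band, so only its DE is evaluated; bands with zero power would need a small floor before taking the logarithm.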

2.2. Self-Supervised Learning

As a branch of unsupervised learning, self-supervised learning automatically creates pseudo-labels based on the inherent properties of the signals themselves. It has been widely applied in fields such as computer vision, natural language processing (NLP), and bioinformatics, and has recently emerged as an effective approach for automatic feature extraction. This approach offers two advantages: it effectively exploits vast quantities of unlabeled data while reducing the generalization degradation caused by label noise. Self-supervised learning can generally be divided into two major categories: contrastive learning and masked prediction tasks. The goal of contrastive learning is to learn representations that distinguish similar samples from dissimilar ones. The most common approach is to construct positive and negative sample pairs through data augmentation. The SimCLR framework was extended to time-series data to train a channel-level feature extractor, with data augmentation performed through channel recombination and dataset fusion [21]. In another approach, signal transformations were used for data augmentation, and a CNN-based pretext task was constructed [22]. Additionally, contrastive learning with constructed positive and negative samples was employed to study the EEG characteristics of sleep [23].

The core objective of the masked prediction task is to reconstruct the masked portions of the input data. This approach was notably implemented in Masked Autoencoders (MAEs) [24], which applied self-supervised learning to partially observed image patches using Vision Transformers (ViTs). Unlike SimMIM [25], where the full set of image patches is used for reconstruction, MAE employs a high masking rate (over 75%), resulting in a better ability to recover missing information.
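To make the masking step concrete, the sketch below splits a one-dimensional signal into fixed-length patches and selects a random subset to hide at a given masking ratio. It only illustrates the general idea of masked prediction on a time series; it is not the MAE, SimMIM, or EmoAdapt implementation, and the function names, patch length, and seed are assumptions chosen for the example.

```python
import random


def split_patches(signal, patch_len):
    """Cut a signal into non-overlapping patches, dropping any remainder."""
    usable = len(signal) - len(signal) % patch_len
    return [signal[i:i + patch_len] for i in range(0, usable, patch_len)]


def random_patch_mask(num_patches, mask_ratio=0.75, seed=None):
    """Return a list of booleans; True marks a patch hidden from the encoder."""
    rng = random.Random(seed)
    num_masked = int(round(num_patches * mask_ratio))
    masked = set(rng.sample(range(num_patches), num_masked))
    return [i in masked for i in range(num_patches)]


# Usage: 16 patches of 8 samples each, 75% of them masked.
signal = list(range(128))
patches = split_patches(signal, 8)
mask = random_patch_mask(len(patches), mask_ratio=0.75, seed=0)
visible = [p for p, hidden in zip(patches, mask) if not hidden]
```

A reconstruction model would then be trained to predict the hidden patches from the visible ones.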

Recent advancements aim to integrate contrastive learning principles with masked prediction tasks. For instance, spatial offset cropping was introduced as an augmentation strategy, where an enhanced view of the input was compared with the predicted view from an online feature decoder [26]. This dual-path framework enabled the simultaneous capture of both global structural features and discriminative local patterns. A self-supervised learning framework combining contrastive learning and masked prediction tasks was proposed for the classification of sleep stages under single-channel EEG, incorporating a MAMBA-based temporal context module [27].

2.3. Commercial EEG Acquisition System

In recent years, the demand for portable EEG acquisition devices has grown substantially, leading to the development of a variety of commercial-grade systems. These devices are primarily designed for use in research, healthcare, and consumer applications, with an emphasis on portability, ease of use, and accessibility.

The OpenBCI Ganglion is a four-channel, open-source EEG acquisition system known for its versatility. It is particularly favored by researchers and developers due to its customizability. The system supports both dry and wet electrodes and includes a graphical user interface (GUI) for streamlined setup and configuration [28]. The EPOC Insight is a wireless EEG headset developed by Emotiv, specifically designed for cognitive research and brain-computer interface (BCI) applications. It features five EEG channels and is optimized for user convenience, requiring minimal setup time [29]. The Muse S is a consumer-focused EEG device aimed at meditation and sleep monitoring. It incorporates multiple sensors, including EEG, photoplethysmography (PPG), and an accelerometer, making it a multifunctional platform for wellness applications. However, the device has limitations in terms of dynamic range and resolution [30]. The NeuroSky MindWave is another signal acquisition device designed for research and educational purposes [31]. A comparative summary of these and other commercial systems is provided in Table 1 and Table 2.

Commercial EEG devices are highly portable, user-friendly, and equipped with wireless connectivity, making them ideal for non-laboratory applications. However, fixed electrode configurations, high costs, and limited customizability remain significant barriers to advanced research and widespread adoption.

2.4. Self-Designed EEG Acquisition System

Many researchers have recognized the limitations of existing EEG acquisition systems and have proposed various custom-designed solutions. Based on the implementation of the analog front end (AFE) in the signal acquisition chain, these designs can be categorized as follows.

2.4.1. Designs Using Discrete General-Purpose Operational Amplifiers

These systems utilize standard-precision operational amplifiers to construct front-end signal conditioning circuits for amplification, filtering, and biasing. Analog-to-digital conversion is subsequently performed using general-purpose ADC chips.

A flexible and portable wireless EEG headband was developed using discrete op-amps like the OPA314 and OPA333 (Texas Instruments, Dallas, TX, USA) [37]. Integrated on a flexible PCB, that system supported eight channels for signal acquisition, quantization, and motion artifact detection, with electrodes directly mounted on the board. It was suitable for mobile monitoring of the prefrontal and temporal lobes. Another study analyzed and optimized a previously proposed EEG acquisition circuit, achieving performance comparable to that of commercial systems [38]. Additionally, a wearable three-channel EEG sensor for depression diagnosis was introduced [39]. That system employed flexible, non-invasive electrodes to acquire frontal EEG signals and incorporated the Ant Lion Optimization (ALO) algorithm, demonstrating a high signal-to-noise ratio (SNR) and classification accuracy.

The primary advantages of this approach are design flexibility and customizable sub-circuits, allowing more controlled signal quality. However, this method typically involves more complex circuitry, reduced integration, and higher implementation costs.

2.4.2. Designs Based on Dedicated Bio-AFE Chips

These designs can be categorized into two types: those with integrated ADCs and those without.

The first type, with an integrated ADC, includes well-established solutions such as Texas Instruments’ ADS1298 and ADS1299, widely adopted in both academic and engineering contexts. A versatile smart glasses-based platform called “GAPSES” was proposed, utilizing the ADS1298 for EEG and EOG acquisition [40]. Targeted at the temporal lobes, this platform incorporates the GAP9 (GreenWaves Technologies, Grenoble, France) processor, which enhances edge-side processing for applications like eye movement detection and steady-state visual evoked potential (SSVEP) analysis. Another innovative solution, EEG-Linx, is a modular wireless EEG sensor network (WESN) platform featuring miniaturized ADS1299-based nodes with a DRL-free design [41]. This system supports synchronized, wireless multi-node EEG acquisition and has been validated with SSVEP and auditory steady-state response (ASSR) tasks, demonstrating signal quality comparable to commercial devices for flexible and unobtrusive EEG monitoring. Additionally, some researchers introduced an IoT-enabled, wearable multimodal monitoring system (IEMS) for neurological intensive care units (NICUs) [42]. That system monitored EEG, regional cerebral oxygen saturation (rSO₂), temperature, ECG, PPG, and bioimpedance (Bio-Z), supporting remote diagnosis and clinical decision-making, with hospital-based validation confirming its robustness under severe interference. Lastly, a portable EEG acquisition system was developed using the LHE7909 (Legendsemi, Suzhou, China) and ESP32-S3 (Espressif, Shanghai, China), specifically targeting motor imagery tasks [43], with experimental results demonstrating the reliability of the acquired EEG signals.

These solutions offer high integration, incorporating features such as built-in programmable gain amplifiers (PGAs), driven right leg (DRL) circuits, lead-off detection, digital filtering, and digitized outputs. These functions simplify downstream data acquisition. However, the trade-off lies in slightly higher cost and reduced design flexibility.

A representative example of the second type, without an integrated ADC, is Analog Devices’ AD8232 (Wilmington, MA, USA), which integrates an amplifier, band-pass filter, and DRL driver but outputs analog signals, requiring an external ADC. Researchers have developed an open-source biopotential sensor based on the AD8232, capable of acquiring EEG, ECG, and EMG signals [44]. That approach balances integration with flexibility. However, the requirement for an external ADC increases PCB area and reduces system compactness.

2.4.3. Highly Integrated Bio-SoC Solutions

Recent products have achieved even greater levels of system integration. For example, Nanochap’s NNC-EPC001 (Hangzhou, China) is a RISC-V-based System on Chip (SoC) that integrates EEG, ECG, EMG, and PPG analog front ends. It features a complete ExG signal chain (including PGAs, a 24-bit ADC, AC lead-off detection, and a DRL circuit) along with PPG transceivers and an on-chip Heart APP for real-time heart rate detection [45]. Although no published studies have yet employed this SoC for EEG acquisition, it shows promise for future development.

The aforementioned EEG acquisition systems exhibit strong performance and innovative designs, but they often involve trade-offs between cost, complexity, integration level, and flexibility. In particular, high-performance systems based on commercial bio-AFE chips may offer excellent signal quality but are generally expensive and less customizable, while discrete designs provide flexibility at the cost of compactness and integration. To address these limitations, this work proposes a low-cost, modular EEG acquisition system that balances signal quality, hardware simplicity, and system flexibility. Details of the system design are presented in the following section.

3. Proposed EEG Acquisition System

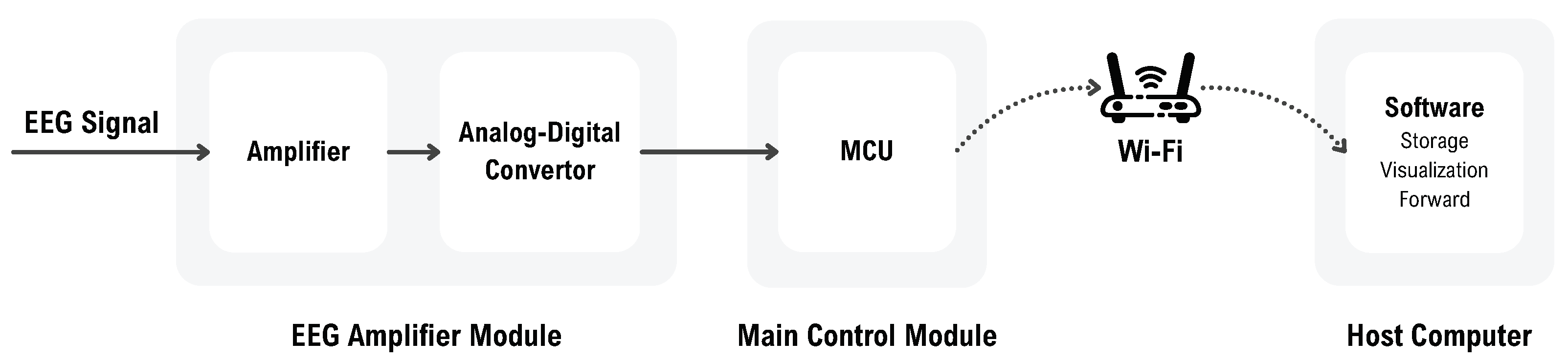

This section presents the proposed acquisition system. As illustrated in Figure 1, the overall workflow begins with the EEG amplifier, which amplifies the signal and performs analog-to-digital conversion. The main control module then reads the digitized data, packages it, and transmits it to the host computer via Wi-Fi. The software on the host computer handles data reception, storage, and visualization and forwards it to the algorithmic processing stage. The system is described from three perspectives: hardware development, embedded system development, and software development.

3.1. Hardware Development

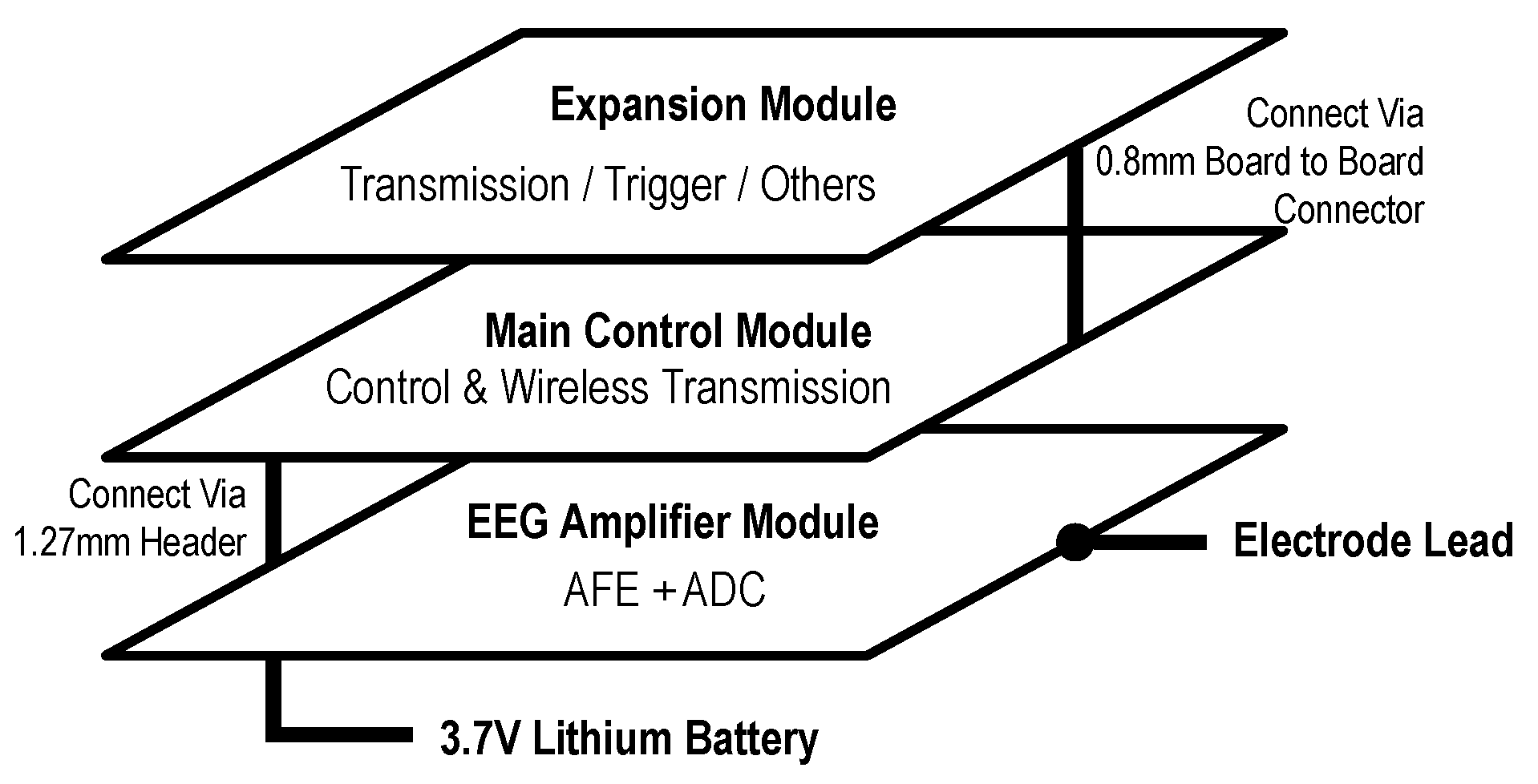

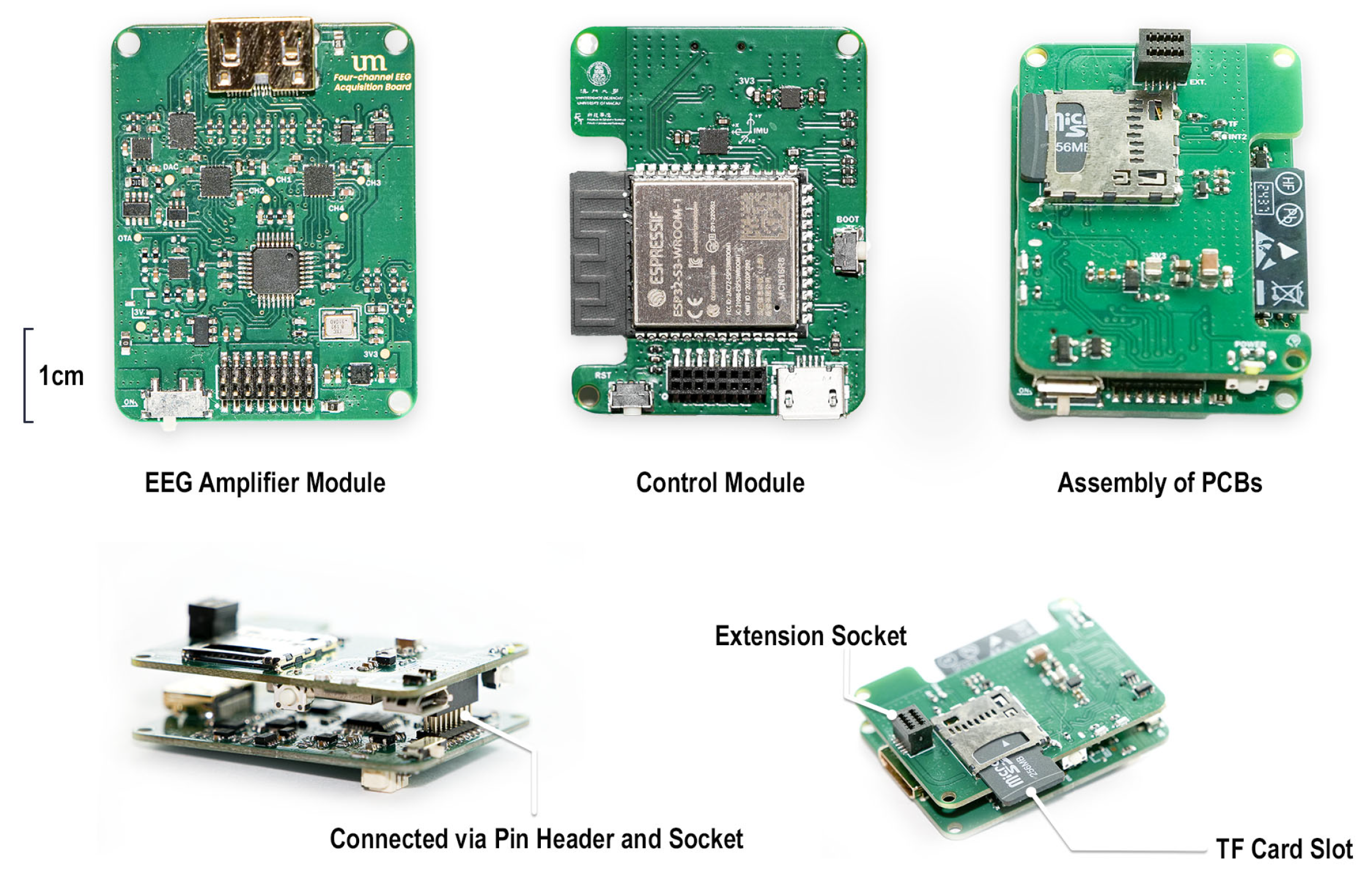

As illustrated in Figure 2, a modular hardware architecture was adopted, consisting of three primary components: the EEG amplifier module, the main control module, and the expansion module. This design approach enhanced flexibility and scalability while maintaining cost-efficiency.

3.1.1. EEG Amplifier Module

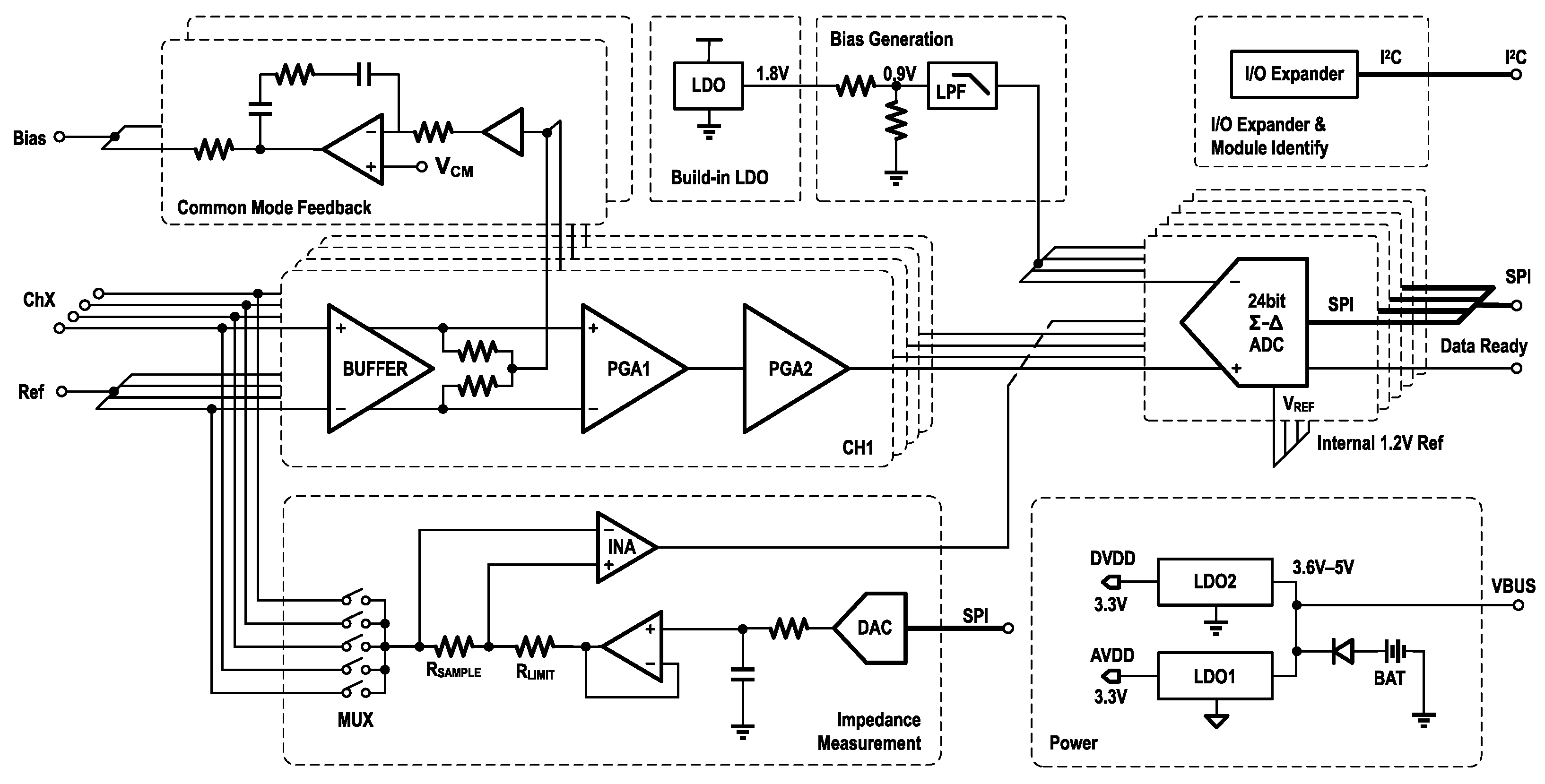

The EEG amplifier module serves as the core component of the system. It functions as a generalized amplifier, responsible not only for amplifying weak EEG signals but also for converting them into digital form for subsequent processing. In the proposed design, the module primarily consists of two EEG-specific amplifiers and an ADC.

Figure 3 shows the schematic diagram of the entire module. The signal input, common-mode feedback, and built-in LDO are all part of the internal circuitry of the analog front end. The Bias Generation circuit generates a shifting voltage to align the amplifier output with the ADC input, and below it are several other auxiliary circuit modules.

Amplifier

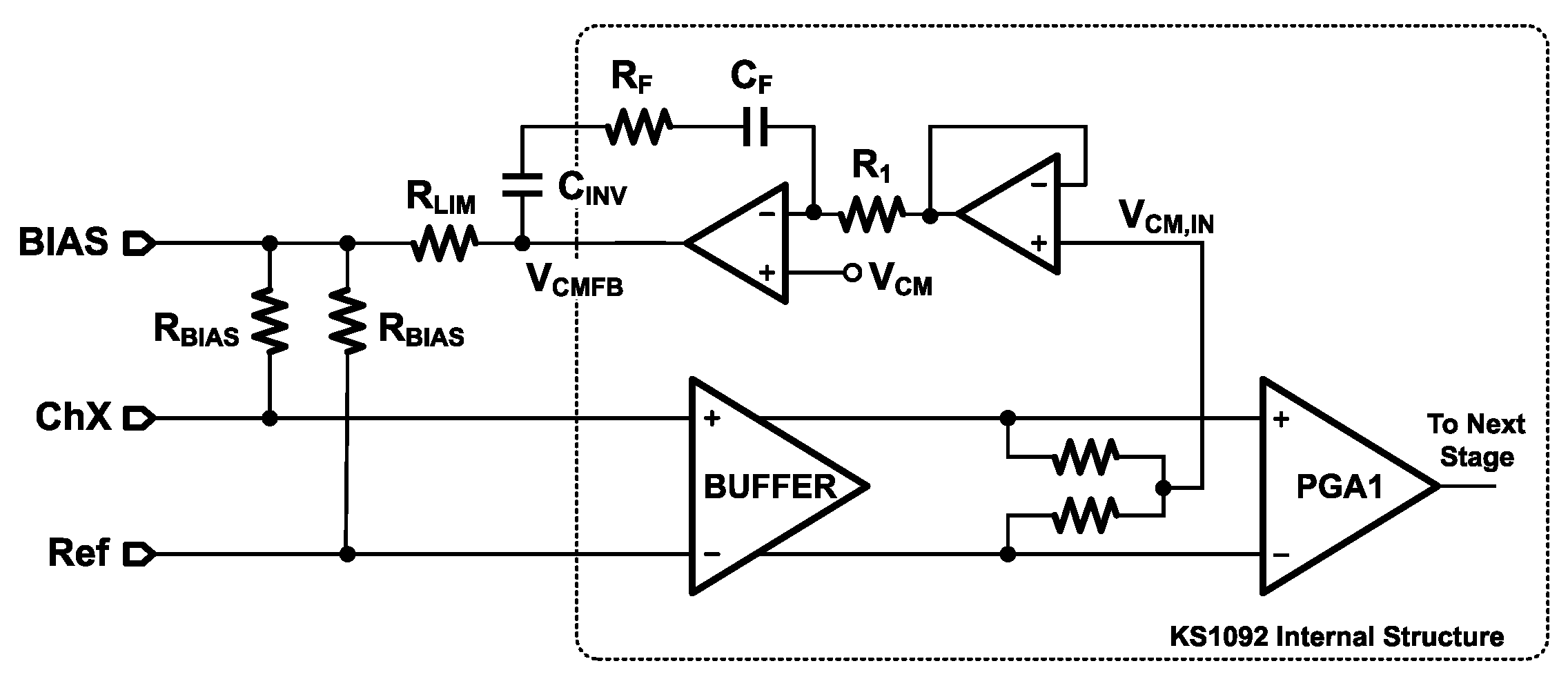

EEG signals typically exist in the microvolt range, making them unsuitable for direct analog-to-digital conversion without amplification. To address this, the KS1092, a lightweight, dual-channel bio-amplifier developed by Kingsense (Shenzhen, China), was employed in this design [46]. The KS1092 integrates amplification, filtering, and biasing functions and includes two cascaded programmable gain amplifiers (PGAs), offering a total gain range from 360 to 2760. It amplifies weak EEG signals to a usable voltage range of 0–1.8 V, powered by an internal 1.8 V low-dropout (LDO) regulator. A built-in band-pass filter restricts the output bandwidth to 0.5–200 Hz. Without external biasing resistors, the amplifier achieves an input impedance of up to 5 GΩ, supporting both dry and wet electrodes. The amplifier provides a common-mode rejection ratio (CMRR) of 100 dB, enhancing resistance to external interference. Furthermore, a built-in right-leg driving (RLD) circuit provides common-mode feedback to suppress noise such as power-line interference.
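The gain range and output swing quoted above determine how large an input signal the amplifier can handle before clipping. The short Python sketch below works through that arithmetic. It assumes the output is centered at 0.9 V, the midpoint of the 0–1.8 V range, and ignores filter roll-off and electrode offsets; the function names are hypothetical.

```python
GAIN_MIN, GAIN_MAX = 360, 2760      # total gain range stated in the text
V_OUT_MIN, V_OUT_MAX = 0.0, 1.8     # usable output range in volts


def output_amplitude(v_in, gain):
    """Amplified signal amplitude in volts for an input amplitude in volts."""
    return v_in * gain


def max_input_amplitude(gain, v_mid=0.9):
    """Largest input amplitude (volts) that stays inside the output range.

    v_mid is the assumed DC level of the output (midpoint of the range).
    """
    headroom = min(V_OUT_MAX - v_mid, v_mid - V_OUT_MIN)
    return headroom / gain


# Usage: a 100 microvolt EEG component at the two gain extremes.
low_gain_out = output_amplitude(100e-6, GAIN_MIN)    # about 0.036 V
high_gain_out = output_amplitude(100e-6, GAIN_MAX)   # about 0.276 V
limit_low = max_input_amplitude(GAIN_MIN)            # about 2.5 mV
limit_high = max_input_amplitude(GAIN_MAX)           # about 0.33 mV
```

Under these assumptions, typical microvolt-level EEG stays well within range even at the highest gain, while millivolt-level artifacts could saturate the output.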

As shown in Figure 4, an 18 MΩ bias resistor stabilizes the input DC operating point at approximately 0.9 V, ensuring proper internal operation and improved common-mode noise suppression. To limit the current injected into the human body, a 10 kΩ resistor is connected in series at the output. The high level of integration in the KS1092 enables the entire acquisition circuit to be implemented with only five passive components. Additional features include Fast Restore (FR) and lead-off detection (LDF). The FR function temporarily creates a low-resistance path to the output, accelerating stabilization after step inputs, which is particularly useful given the low cut-off frequency of the high-pass filter. The LDF function monitors electrode connectivity by detecting power-line interference, although the KS1092 lacks an internal AC excitation source and therefore does not support real-time quantitative impedance measurements.

ADC

In the biomedical field, EEG signal acquisition commonly employs either successive-approximation register (SAR) or delta-sigma (ΔΣ) ADCs [47]. SAR ADCs typically offer higher speed and lower power consumption than ΔΣ ADCs. On the other hand, ΔΣ ADCs provide superior resolution due to oversampling and noise-shaping techniques. By operating at high clock frequencies, ΔΣ ADCs can effectively convert time resolution into analog resolution, thereby enhancing signal quality. The downside is that they consume more power and have slower conversion speeds, resulting in some latency. For multi-channel EEG acquisition, channel synchronization is another consideration. Based on their sampling strategy, ADCs can be classified as either synchronous or asynchronous. Synchronous-sampling ADCs include multiple ADC cores and enable simultaneous sampling across all channels. In contrast, asynchronous-sampling ADCs utilize a single core along with a multiplexer (MUX) to switch between channels. For asynchronous-sampling ΔΣ ADCs, the longer conversion time makes the delay between channels more pronounced.

To ensure simultaneous acquisition, signal quality, and cost-effectiveness, this work employed the ADS131M08 from Texas Instruments, a synchronous 8-channel, 24-bit ADC with a maximum sampling rate of 32 kSPS. The device supports both differential and single-ended input configurations. The ADS131M08 includes an internal 1.2 V reference voltage and also supports an external reference input within the range of 1.1 to 1.3 V. Its input voltage range is limited to ±1.2 V, which does not fully accommodate the KS1092’s output range of 0–1.8 V. To address this mismatch, a 0.9 V reference voltage was generated by connecting two identical resistors in series to divide the 1.8 V output of the KS1092’s internal LDO. The accuracy of the clock source directly affects the precision of the ADC’s sampling rate. To ensure timing stability and minimize susceptibility to interference, an 8.192 MHz active crystal oscillator with a frequency tolerance of ±10 ppm was selected as the ADC’s clock source, instead of a standard passive crystal. The external clock enters the ADC and passes through a pre-divider, generating the modulator clock $f_{MOD} = f_{CLKIN}/2$. The output data rate is defined as $f_{DATA} = f_{MOD}/\mathrm{OSR}$, where OSR denotes the oversampling ratio. Thus, for an output rate of 500 Hz, an OSR of 8192 can still be achieved, ensuring high signal quality.
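The relationship between the clock, the oversampling ratio, and the output data rate can be checked with a few lines of Python. The sketch assumes that the pre-divider halves the external clock, which is what the quoted figures (8.192 MHz clock, 500 Hz output, OSR of 8192) imply; the constant and function names are hypothetical.

```python
F_CLKIN_HZ = 8_192_000   # external oscillator frequency stated in the text
PRE_DIVIDER = 2          # assumption: ratio implied by the quoted figures


def output_data_rate(osr, f_clkin_hz=F_CLKIN_HZ):
    """Output data rate in Hz for a given oversampling ratio."""
    f_mod = f_clkin_hz / PRE_DIVIDER
    return f_mod / osr


def required_osr(f_data_hz, f_clkin_hz=F_CLKIN_HZ):
    """Oversampling ratio needed to reach a target output data rate."""
    f_mod = f_clkin_hz / PRE_DIVIDER
    osr = f_mod / f_data_hz
    if osr != int(osr):
        raise ValueError("rate is not an integer division of the modulator clock")
    return int(osr)


# Usage: the adjustable sampling rates mentioned for the system.
osr_table = {rate: required_osr(rate) for rate in (250, 500, 1000)}
```

With these assumptions, the 250, 500, and 1000 Hz settings correspond to oversampling ratios of 16384, 8192, and 4096, and an OSR of 128 reproduces the 32 kSPS maximum.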

The ADS131M08 incorporates a Global-Chop function. This function samples the positive input three times, then swaps the inputs and samples the negative input three times. The final result is calculated from these six samples. This reduces the impact of the input offset voltage of the internal PGA and thereby enhances the accuracy of low-frequency signal acquisition. A dedicated “Data Ready” pin generates a falling-edge pulse upon completion of each data conversion. By connecting this pin to a microcontroller’s GPIO, an external interrupt can be used to trigger data retrieval via SPI, eliminating the need for a timer and reducing processing overhead.

Impedance Measurement

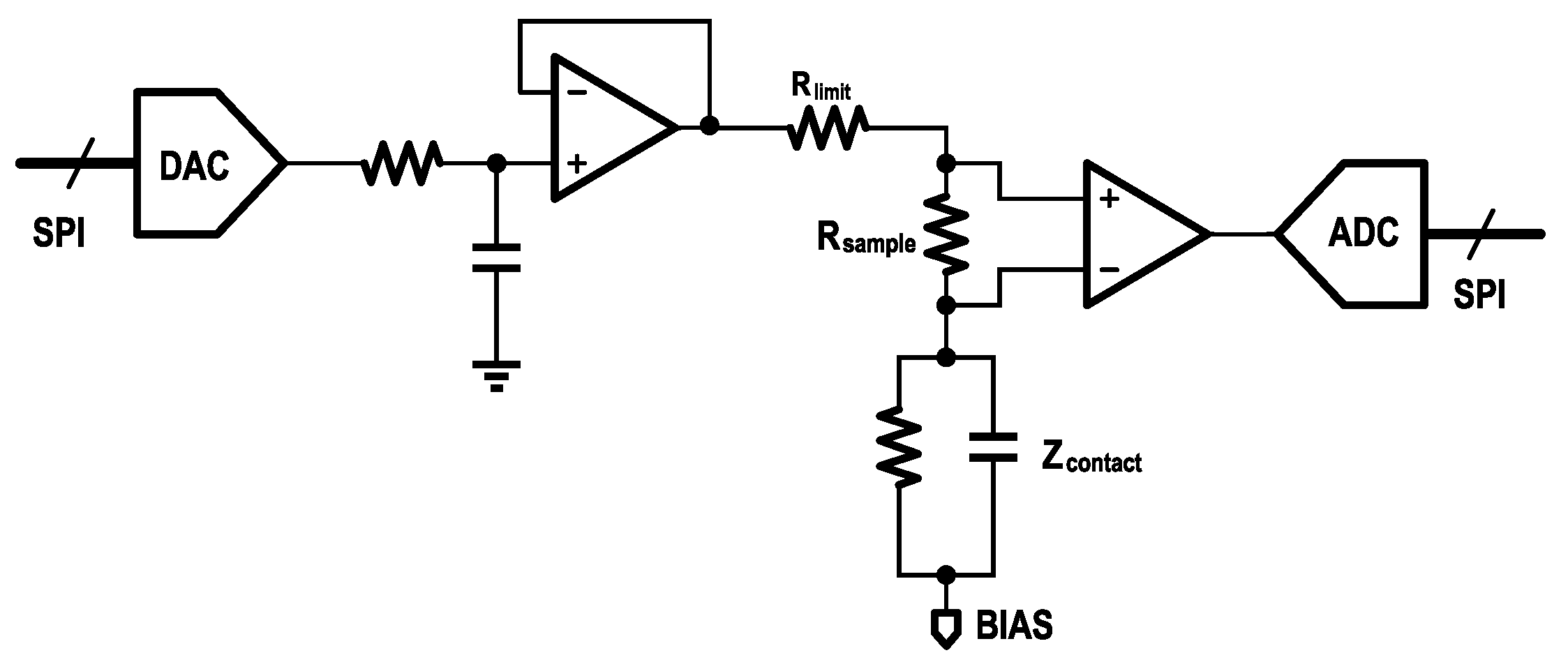

As noted previously, the KS1092 does not include a built-in AC excitation source and therefore cannot perform quantitative contact impedance measurements. To overcome this limitation, an external impedance measurement circuit was added to the EEG amplifier module as shown in Figure 5. The circuit comprises a digital-to-analog converter (DAC7311, Texas Instruments), which generates an external AC signal. This signal is injected into the body through the target electrode using an analog switch (TMUX1309, Texas Instruments). The resulting current flowing through the electrode is converted into a voltage signal by a precision resistor. An instrumentation amplifier (INA350, Texas Instruments) is used to amplify the voltage signal, and the amplified signal is then sampled by the ADC.

Finally, the contact impedance can be calculated based on the measured voltage using (2):

$$I = \frac{V_{out}}{G \, R_{s}}, \qquad Z_{total} = \frac{V_{DAC}}{I}, \qquad Z_{c} = Z_{total} - R_{lim} - R_{s} \tag{2}$$

where $Z_{total}$ represents the total impedance in the loop, and $Z_{c}$ denotes the contact impedance. $R_{lim}$ is the current-limiting resistor, and $R_{s}$ is the sampling resistor. $V_{DAC}$ is the amplitude of the DAC output signal, $I$ is the amplitude of the injected current, $V_{out}$ is the output voltage amplitude of the instrumentation amplifier (INA), and $G$ is the gain of the INA.
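The calculation can be sketched in Python as follows. This is an illustration rather than the authors' firmware: it assumes a simple series loop in which the DAC drives the current-limiting resistor, the electrode contact, and the sampling resistor, and it treats all quantities as amplitudes at the excitation frequency. The parameter names and the component values in the usage example are hypothetical.

```python
def contact_impedance(v_dac, v_out, gain, r_sense, r_limit):
    """Estimate contact impedance (ohms) from the amplified sense voltage.

    Assumes a series loop: DAC -> r_limit -> contact -> r_sense.
    """
    if v_out <= 0:
        raise ValueError("no measurable current; electrode may be disconnected")
    i_inj = v_out / (gain * r_sense)   # current through the sampling resistor
    z_total = v_dac / i_inj            # total loop impedance
    return z_total - r_limit - r_sense


# Usage with made-up values: 1 V excitation, INA gain of 10,
# 1 kOhm sampling resistor, 10 kOhm current-limiting resistor.
true_contact = 50_000.0
current = 1.0 / (10_000.0 + true_contact + 1_000.0)
measured_v_out = current * 1_000.0 * 10
estimate = contact_impedance(1.0, measured_v_out, 10, 1_000.0, 10_000.0)
```

The usage example generates the sense voltage from a known contact impedance and checks that the function recovers it.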

Power and I/O Configuration

The EEG amplifier module was sensitive to noise. Given that its power consumption was low, an LDO chip, the TPS7A2023 (Texas Instruments), was used to generate 3.3 V as AVDD, while another LDO chip, the LP5912 (Texas Instruments), generated 3.3 V as DVDD. Both chips have high PSRR and low noise. To minimize interference from digital components, the analog and digital grounds were separated to improve analog signal integrity.

The AFE required multiple configurable I/O ports. Connecting them directly to the main control module would occupy too many I/O ports and increase the size of the connector. Therefore, an I²C-based I/O expander was employed, allowing the control of 16 I/O ports using only two lines. Additionally, that module supported customizable address configuration. With this feature, it was only necessary to scan the addresses in the main control module after startup. This enabled the identification of the current EEG amplifier module and achieved plug-and-play functionality for different EEG amplifier modules.
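The address-scan idea behind the plug-and-play behavior can be sketched as follows. The example is written in Python for readability and is not the firmware used in this work: the address-to-module table is invented for illustration, and `probe` stands in for whatever bus call reports whether a device acknowledges a given address.

```python
# Hypothetical mapping from expander address to amplifier module type.
MODULE_TABLE = {
    0x20: "2-channel EEG amplifier",
    0x21: "8-channel EEG amplifier",
}

# Valid 7-bit addresses outside the reserved ranges.
SCAN_RANGE = range(0x08, 0x78)


def identify_modules(probe, addresses=SCAN_RANGE):
    """Return {address: module name} for every address that acknowledges.

    probe(address) must return True when a device responds at that address.
    """
    found = {}
    for address in addresses:
        if probe(address):
            found[address] = MODULE_TABLE.get(address, "unknown module")
    return found


# Usage with a fake bus on which only address 0x21 responds.
def fake_probe(address):
    return address == 0x21


detected = identify_modules(fake_probe)
```

After the scan, the controller can load the default parameters that match the detected module type.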

3.1.2. Main Control Module

The main control module was built around the Espressif ESP32-S3 (Shanghai, China), a high-performance dual-core microcontroller that integrates Wi-Fi and BLE capabilities. Its dual-core architecture enables concurrent execution of Wi-Fi communication and data acquisition or processing tasks. The module also incorporates a 6-axis MEMS MotionTracking sensor (ICM42688, TDK InvenSense, San Jose, CA, USA) for real-time orientation monitoring, and a TF card slot to support offline data storage and backup. Given the wireless transmission demands of the ESP32-S3, it represented the system’s primary power consumer. Supplying 3.3 V via an LDO from a 3.7 V battery was inefficient and reduced battery life; therefore, a buck DC-DC converter was used to efficiently step down input voltages (3.6–5 V) to 3.3 V for the ESP32-S3. Meanwhile, the lower-power IMU and TF card were powered through an LDO to ensure a stable 3.3 V supply. A Micro-USB port was also provided for direct PC connection, enabling convenient debugging and data acquisition. A schematic of the main control module is shown in Figure 6.

3.1.3. Expansion Module

The ESP32-S3 supports IEEE 802.11b/g/n protocols, commonly referred to as Wi-Fi 4, and operates in the 2.4 GHz ISM band, which is constrained by a limited number of non-overlapping channels (specifically channels 1, 6, and 11) and high device density. In high-concurrency scenarios, these constraints can lead to network congestion and packet loss. Under such conditions, the TCP retransmission mechanism may further exacerbate transmission delays and increase system load. Moreover, inadequate router performance or an excessive number of connected devices can contribute to persistent packet loss.

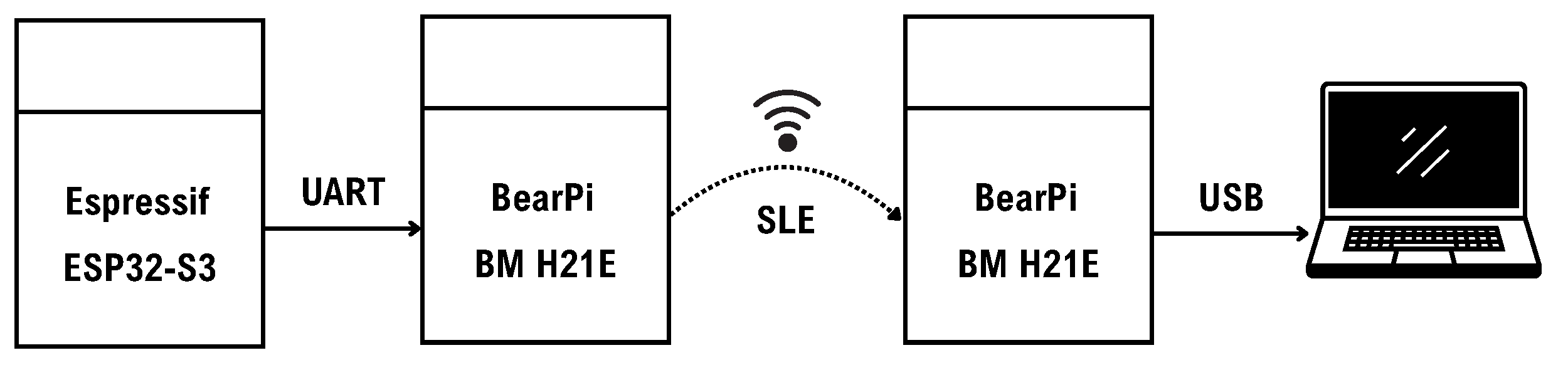

To address these limitations, we developed an SLE expansion module based on the BearPi-BM H21E (Nanjing, China) [48], as illustrated in Figure 7. The SLE module obtains data from the main controller via UART and sends them to the receiver on the computer. The receiver is connected to the computer via USB. This module supports the SparkLink Low Energy (SLE) protocol, a low-power sub-protocol within the NearLink framework proposed by Huawei. Compared to Wi-Fi and BLE, SLE provides more stable connectivity, lower latency, and higher reliability [49], making it a viable alternative transmission channel. The expansion module includes reserved interfaces for power, UART, SPI, and other specific functions, enabling future integration of additional physiological signal modules such as PPG, ECG, or GSR, as required.

3.1.4. Power Supply

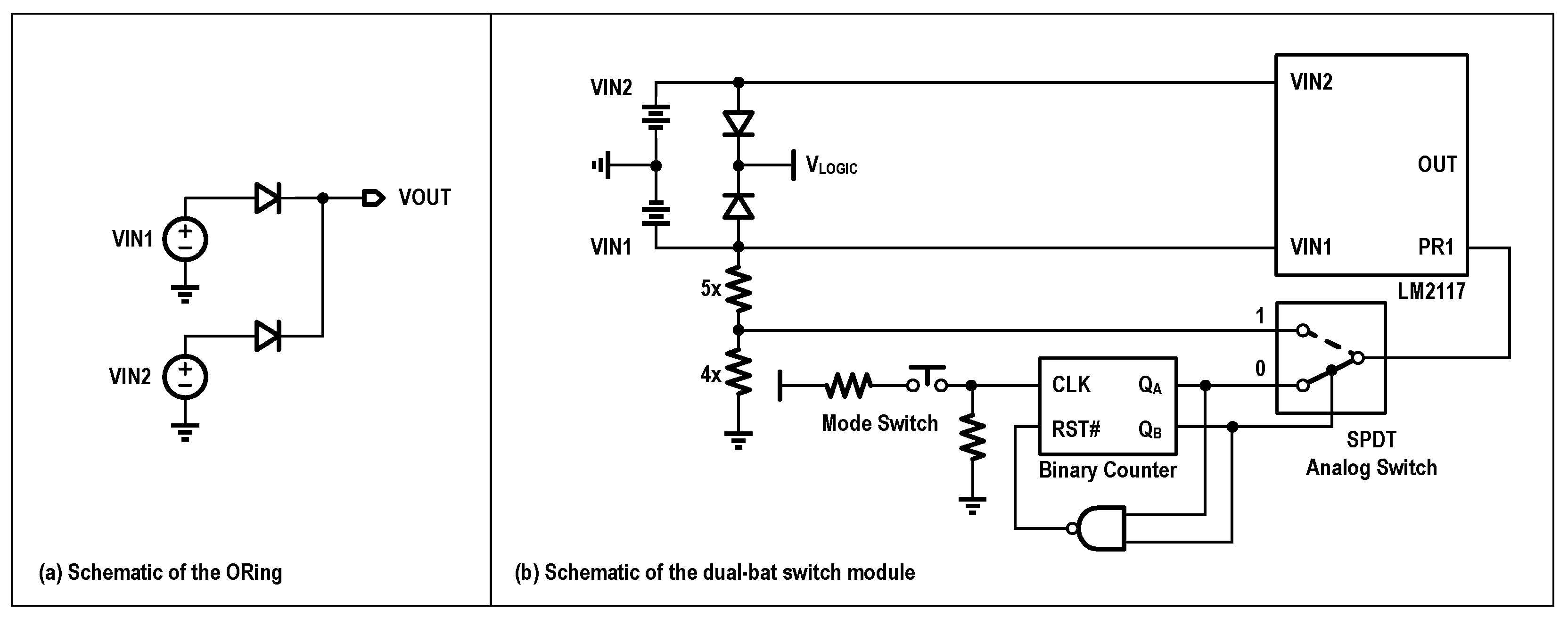

Because the system transmits over Wi-Fi, its power consumption is significantly higher than that of a Bluetooth-based design. The system operates on a 2000 mAh, 3.7 V lithium battery, supporting over 7 h of endurance. When connected to a computer via Micro-USB, it uses the 5 V supply as the main power source. An ORing configuration was implemented using the LM66100 ideal diode IC from Texas Instruments, enabling multiple power supply options. With ORing, the connected source with the higher voltage supplies power to the entire system.

Moreover, to extend acquisition time, a dual-battery power supply module was developed that supports manual and automatic switching between batteries, allowing for battery replacement without power interruption. As shown in Figure 8, the key component is the TPS2117 (Texas Instruments), which functions as a power multiplexer with manual and priority switching capabilities. Control signals are generated via a button on the board, based on a binary counter, enabling the switching between three modes: manual use of VIN1, manual use of VIN2, and priority use of VIN1 (switch to VIN2 when VIN1 < 3.6 V). The QA port generates a signal for switching between manual and automatic modes, while the QB port is used to select between the two batteries in manual mode.

3.1.5. PCB Design

Both the EEG amplifier and control boards adopted a 4-layer stack-up. The top and bottom layers were designated for signal routing, the second layer was a dedicated ground plane, and the third layer served as the power plane. To ensure lower noise, the path for low-frequency, weak analog signals like EEG should be kept short and wide. The PCB is shown in Figure 9. All modules shared the same PCB size, 42 mm × 32 mm, and the thickness was 1.0 mm.

3.1.6. Cost Description

The component prices for the EEG amplifier and the main control module are shown in Table 3. As shown in Table 4, the price of a minimum operational system is less than USD 35.

3.2. Embedded System Development

The embedded development of the system was based on PlatformIO (Core 6.1.18). We employed a hybrid framework of ESP-IDF (version 5.1.2) and Arduino (version 2.0.14), integrating Arduino as a component of ESP-IDF to streamline the development process.

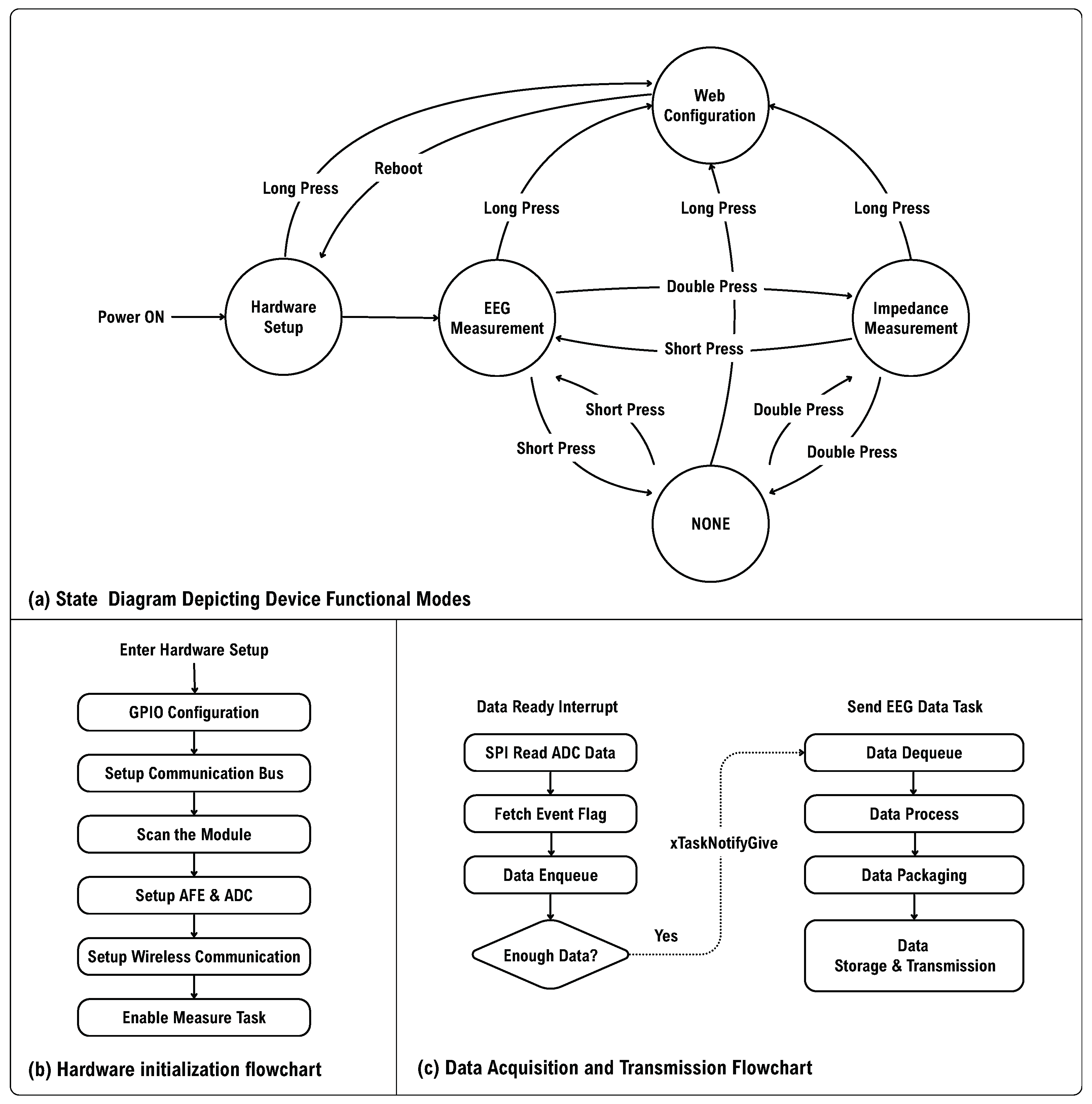

As shown in Figure 10a, upon powering up, the MCU enters the “Hardware Setup” mode. It configures the relevant pins and scans the I²C addresses to identify the current EEG amplifier module. The parameters of the EEG amplifier module are then initialized based on the default settings stored in the Non-Volatile Storage (NVS). This process includes enabling the AFE, setting the gain, configuring the ADC sampling rate, activating Global-Chop, and establishing the cut-off frequency for the high-pass filter. Next, the MCU connects to a host computer via Wi-Fi, where the host sends the necessary configuration information. The controller retrieves the current time using the SNTP protocol to initialize storage on the TF card.

After completing the hardware initialization, the device automatically enters the EEG measurement mode. Data reading is triggered by the Data Ready (DR) pin, which raises an external interrupt and ultimately drives the task that sends EEG data. Once the interrupt is received, the device immediately reads the ADC data over SPI together with any pending event data. To reduce power consumption and network load, samples are not transmitted individually; instead, they are accumulated in a queue. When the number of samples in the queue reaches a predefined threshold, the interrupt service routine (ISR) notifies the EEG transmission task. Upon receiving this notification, the transmission task dequeues the data, parses and packages them, and then stores them on the TF card or sends them to the host computer.
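The accumulate-then-notify pattern described above can be modeled in a few lines. The sketch below is a single-threaded Python model for illustration only; on the device the two methods would correspond to an interrupt handler and an RTOS task, and the class name, method names, and threshold are assumptions.

```python
from collections import deque


class SampleBatcher:
    """Accumulates samples and signals when a full batch is ready."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.queue = deque()

    def on_data_ready(self, sample):
        """Stand-in for the ISR: enqueue one sample.

        Returns True when the transmission task should be notified.
        """
        self.queue.append(sample)
        return len(self.queue) >= self.threshold

    def take_batch(self):
        """Stand-in for the transmission task: dequeue one batch."""
        count = min(self.threshold, len(self.queue))
        return [self.queue.popleft() for _ in range(count)]


# Usage: notify after every fourth sample.
batcher = SampleBatcher(threshold=4)
packets = []
for sample in range(10):
    if batcher.on_data_ready(sample):
        packets.append(batcher.take_batch())
```

Ten samples with a threshold of four yield two complete packets, with the remaining two samples waiting in the queue for the next batch.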

In emotion recognition paradigms, viewing stimulus materials is essential for inducing emotions. To analyze the EEG data, it is necessary to know the start and end times of the stimuli, so tagging the start and end of each stimulus is crucial. To ensure reliable transmission of event data, data transmission and event/command transmission are handled separately. The following sections introduce data transmission and event transmission in turn.

3.2.1. Data Transmission

To ensure reliable data transmission, many Wi-Fi-based acquisition systems utilize the TCP protocol or higher-level protocols built on TCP. For example, TCP is employed to transmit data in the JSON format [

50]. In the EEG field, a commonly used higher-level protocol is the Lab Streaming Layer (LSL) protocol. This real-time data streaming and middleware system is primarily designed for multimodal data synchronization and transmission in neuroscience. The LSL protocol encompasses both TCP and UDP, with data transmission primarily relying on TCP [

51]. For instance, a system developed based on LSL demonstrated its capabilities in multimodal biosensing [

52]. Furthermore, the LSL protocol employs the Precision Time Protocol (PTP), which provides high synchronization accuracy and low latency for multimodal data [

53]. However, since the proposed system operates outside of controlled laboratory conditions, strict low-latency synchronization is not a primary requirement. While LSL is effective for synchronization, it introduces additional overhead. Moreover, real-world network environments may be unstable, making reliable data arrival more critical than synchronization precision.

Basic TCP socket-based transmission minimizes overhead but poses risks such as packet loss. In addition, because TCP delivers a byte stream whose segmentation is constrained by the Maximum Segment Size (MSS), issues such as packet sticking (several application-level packets merged or split at the receiver) may occur, adversely affecting downstream data processing. To address these challenges, the Message Queuing Telemetry Transport (MQTT) protocol, which operates over TCP, was selected for data transmission. It is a lightweight messaging protocol based on a publish/subscribe architecture, enabling efficient message publishing and subscription. Its Quality of Service (QoS) mechanism improves transmission reliability over TCP. MQTT defines three QoS levels: 0, 1, and 2. The differences between them are shown in

Table 5.

The Quality of Service (QoS) level was configured to 1, ensuring that at least one copy of each data packet reached the host computer to minimize the risk of data loss. However, this setting may result in the delivery of duplicate packets. Although configuring QoS to level 2 would guarantee exactly one delivery, it would impose a significant load on the embedded device [

54]. Therefore, QoS level 1 was selected as a trade-off between reliability and system overhead. To improve transmission efficiency, data were transmitted in raw binary format instead of using the JSON format. Each data packet included a header and a tail for framing purposes. An incremental packet ID was appended to each packet, along with an internal timestamp and other relevant metadata. These additions facilitated accurate and efficient data analysis on the host computer.
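The framing described above can be illustrated with a minimal sketch. The byte layout is an assumption made for illustration only: the marker values `HEADER` and `TAIL`, the field widths in `META`, and the function names `pack_packet` and `unpack_packet` are hypothetical and are not taken from the device firmware.

```python
import struct

# Hypothetical layout (an assumption, not the device's actual format); little-endian.
HEADER = b"\xAA\x55"            # assumed 2-byte start marker
TAIL = b"\x0D\x0A"              # assumed 2-byte end marker
META = struct.Struct("<IQH")    # packet ID (uint32), timestamp in us (uint64), sample count (uint16)


def pack_packet(packet_id, timestamp_us, samples):
    """Frame one batch of signed 32-bit ADC samples as raw binary."""
    body = struct.pack(f"<{len(samples)}i", *samples)
    return HEADER + META.pack(packet_id, timestamp_us, len(samples)) + body + TAIL


def unpack_packet(frame):
    """Check the framing and return (packet_id, timestamp_us, samples)."""
    if not (frame.startswith(HEADER) and frame.endswith(TAIL)):
        raise ValueError("bad framing")
    packet_id, timestamp_us, count = META.unpack_from(frame, len(HEADER))
    start = len(HEADER) + META.size
    if len(frame) != start + 4 * count + len(TAIL):
        raise ValueError("length mismatch")
    samples = list(struct.unpack_from(f"<{count}i", frame, start))
    return packet_id, timestamp_us, samples
```

With this layout, a packet carrying three samples occupies 30 bytes, whereas a JSON encoding of the same fields would typically be several times larger.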

3.2.2. Event and Command Transmission

As previously discussed, while the MQTT protocol provides reliable data transmission, it presents certain limitations in real-time control scenarios [

55]. A key drawback is the absence of a native prioritization mechanism. As a result, when control commands or critical events are transmitted concurrently with high-throughput EEG data via MQTT, the system may experience delayed responses, compromising temporal sensitivity and real-time performance.

To address this limitation, a dedicated TCP socket-based communication channel was implemented for event and command transmission. The corresponding processing thread on the embedded system was assigned a higher priority than MQTT-related tasks, ensuring timely handling of high-importance messages, particularly under conditions of heavy network load. Upon receiving a command, the device returned an acknowledgment (ACK) signal to the host; if the ACK was not received within a predefined timeout period, the host initiated automatic retransmission until a specified maximum number of attempts was reached. In scenarios requiring stricter responsiveness guarantees, deploying an auxiliary processing module, rather than handling all tasks on a single SoC, may be necessary to maintain real-time performance.
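The host-side acknowledgment and retransmission logic can be sketched as follows. This is an illustrative sketch only: the function name `send_with_ack`, the injected callables `send` and `wait_for_ack`, and the default timeout and attempt count are hypothetical placeholders rather than values used in the actual system.

```python
def send_with_ack(send, wait_for_ack, command, timeout_s=0.5, max_attempts=3):
    """Send `command` and retransmit until an ACK arrives or attempts run out.

    `send(command)` transmits the command and `wait_for_ack(timeout_s)` returns
    True if an ACK arrived within the timeout. Both are injected callables
    (hypothetical), which keeps the retry logic independent of the socket layer.
    Returns the number of attempts used; raises TimeoutError on failure.
    """
    for attempt in range(1, max_attempts + 1):
        send(command)
        if wait_for_ack(timeout_s):
            return attempt
    raise TimeoutError(f"no ACK after {max_attempts} attempts")
```

Injecting the two callables allows the retry policy to be exercised without a live device, for example by simulating a lost first ACK.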

3.3. Software Development

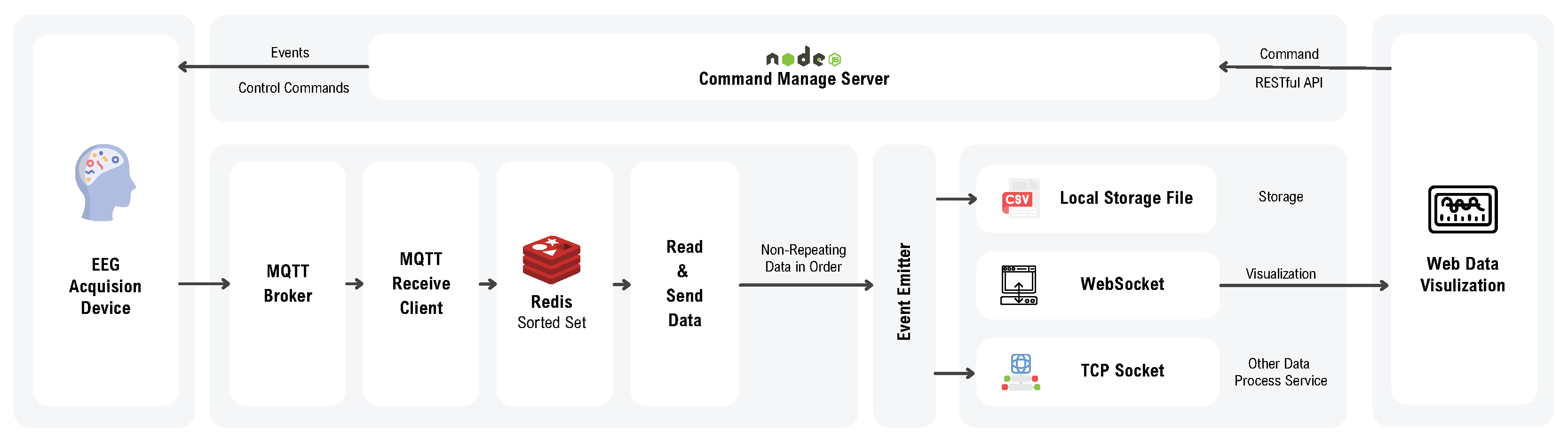

The proposed data acquisition system was equipped with a corresponding software platform. The block diagram of the software is illustrated in

Figure 11. The software was developed based on JavaScript (ES6+) and Node.js (18.20.0).

The software design encompassed both the backend service architecture and the graphical user interface (GUI) development.

3.3.1. Backend Service

The input layer began with an MQTT Broker that handles message brokering, followed by a server-side MQTT client that subscribed to all data topics published by the device. As previously noted, the system used QoS level 1, which may result in duplicate message delivery. Additionally, network congestion could cause packets to arrive out of order. To mitigate these issues, a buffering mechanism was implemented using Redis’s (5.0) sorted set data structure. Redis, a high-performance NoSQL database, enables each data element to be assigned a unique score—in this case, the packet ID—which allows for both deduplication and reordering prior to downstream processing.
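The deduplication and reordering behavior can be sketched with an in-memory stand-in for the sorted set. The class `ReorderBuffer` below is hypothetical and does not use Redis; it only mirrors the idea of keying packets by their ID. A production buffer would also need a timeout to skip packets that never arrive, which is omitted here.

```python
class ReorderBuffer:
    """Deduplicate packets by ID and release them in ascending ID order."""

    def __init__(self, first_id=0):
        self.next_id = first_id   # next packet ID expected downstream
        self.pending = {}         # packet ID -> payload, held until gaps are filled

    def push(self, packet_id, payload):
        """Store a packet and return the payloads that are now deliverable in order."""
        if packet_id < self.next_id or packet_id in self.pending:
            return []             # duplicate (e.g., a QoS 1 redelivery): drop it
        self.pending[packet_id] = payload
        ready = []
        while self.next_id in self.pending:
            ready.append(self.pending.pop(self.next_id))
            self.next_id += 1
        return ready
```

For example, pushing packets 0, 2, 2, 1 releases packet 0 immediately, holds packet 2, discards its duplicate, and releases packets 1 and 2 together once packet 1 arrives.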

The forwarding layer employed Node.js’s EventEmitter to broadcast the processed data to all registered output services. Current output options include local file storage and Redis-based storage. Due to browser security restrictions that prevent direct use of TCP sockets, data transmission to the graphical user interface was conducted via the WebSocket protocol. Additionally, a TCP socket forwarding module was provided to enable external servers to access real-time data streams for advanced analysis.

A command management server operated above the data pipeline to coordinate the configuration of the input, forwarding, and output services. It also handled command issuance to the device, including instructions to start or stop measurements and to tag specific events.

3.3.2. Web-Based GUI

The GUI of the software platform was developed using modern Web technologies. A screenshot of the waveform display interface is shown in

Figure 12. The front-end was implemented with Vue 3 (3.3.4), while waveform rendering was handled using the open-source library P5.js (1.11.4). The interface allows users to configure both the displayed time window and amplitude range to suit various visualization needs.

4. Methodology

This section presents EmoAdapt, a self-supervised learning framework designed to extract discriminative EEG features under real-world acquisition constraints. By integrating contrastive learning and masked learning, EmoAdapt constructs robust and generalizable representations from variable and noisy EEG data.

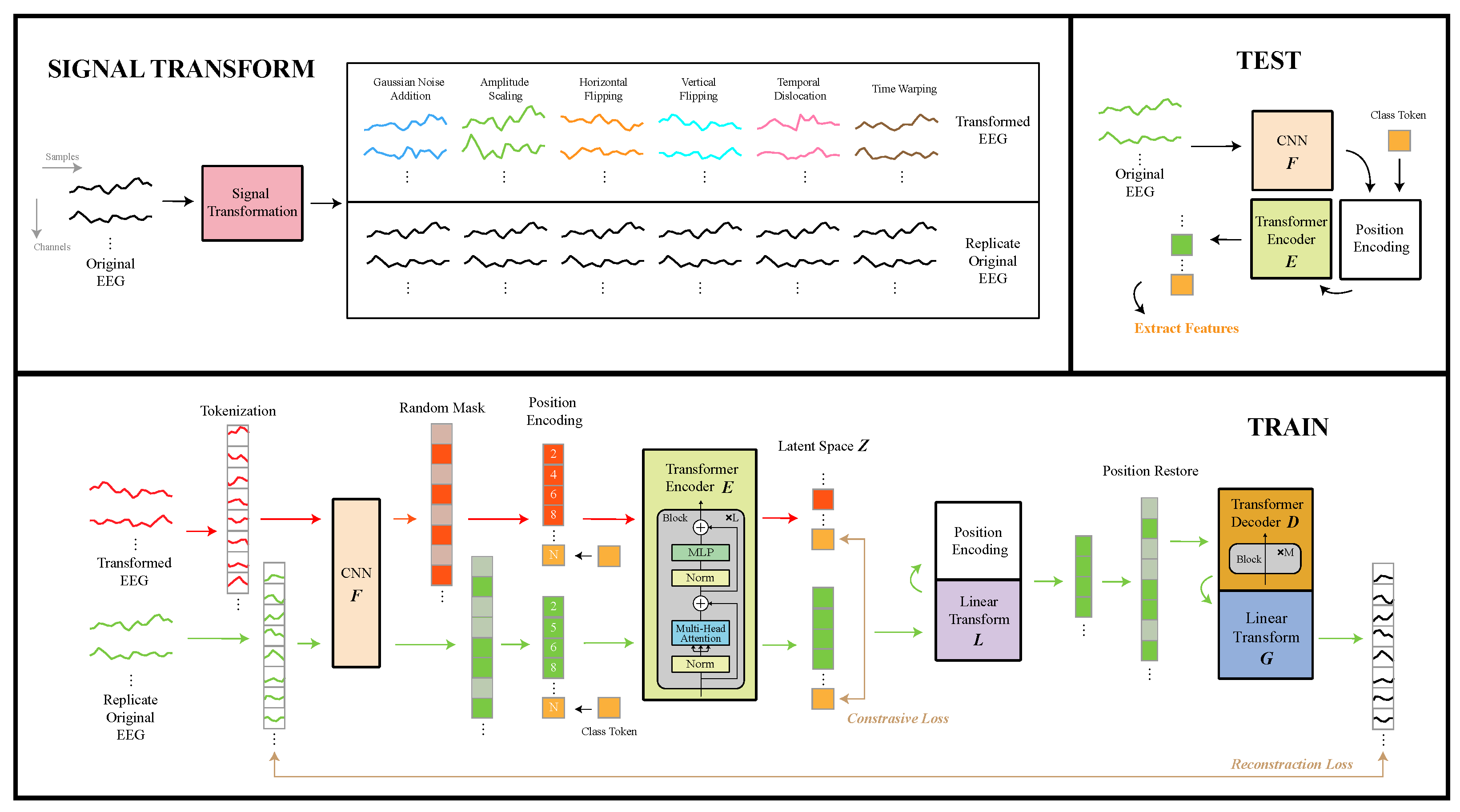

Unlike conventional masked image modeling (MIM)-based approaches [

27], EmoAdapt generates augmented EEG signals via domain-specific transformations and contrasts them with the original signals to learn invariant features. To further enhance discriminability, a masked learning task is introduced to capture localized patterns critical for emotion recognition. Additionally, differential masking rates are applied to the augmented and original signals, promoting hierarchical learning of local-to-global representations. This design combines contrastive learning, which aligns general features, with masked prediction, which learns local and local-to-global representations, to obtain robust and comprehensive features. The components are elaborated in detail below.

4.1. Self-Supervised Feature Extractor

As illustrated in

Figure 13, the feature extractor in EmoAdapt comprises four main components: signal transformation, tokenization with 1D convolutional neural network (1D-CNN), transformer encoder, and transformer decoder.

4.1.1. Signal Transformation

Signal transformation was designed to modify the input signals while preserving as much of the local and global EEG features as possible. By deliberately strengthening the influence of interference factors, it pushed the model to extract interference-independent features. For our signal transformation approach, we evaluated various signal augmentation techniques. Given the requirements of emotion recognition tasks and the channel limitation of our device, time-domain data augmentation was preferred over frequency-domain and spatial-domain methods, as temporal features are more relevant to emotion computation and also more susceptible to signal quality. Referring to typical time-domain data augmentation techniques, our signal transformation framework incorporated the following operations: Gaussian noise injection, amplitude scaling, horizontal flipping, vertical flipping, temporal dislocation, and time warping.

Given an original EEG data point $X \in \mathbb{R}^{N \times C \times S}$, where $N$ is the number of trials, $C$ is the number of channels, and $S$ is the number of time samples, we define six signal transformation methods:

1. Gaussian noise addition:

$$X' = X + \varepsilon, \quad \varepsilon \sim \mathcal{N}(0, \sigma^2)$$

where $\varepsilon$ is the additive noise following the Gaussian distribution with a mean of zero and a standard deviation of $\sigma$.

2. Amplitude scaling:

$$X' = \alpha X$$

where $\alpha$ is the amplitude scaling factor.

3. Horizontal flipping (sign inversion):

$$X' = -X$$

4. Vertical flipping (time reversal):

$$X'_{n,c,s} = X_{n,c,S+1-s}$$

where $s \in \{1, \dots, S\}$, $n$ is the trial index, and $c$ is the channel index.

5. Temporal dislocation:

(a) Split each trial into $n$ segments along the $S$ dimension. Denote the $j$th segment as $x_j$, where $j \in \{1, \dots, n\}$.

(b) Randomly permute the segments.

(c) Concatenate to form the new sample:

$$X' = \left[x_{\pi(1)}, x_{\pi(2)}, \dots, x_{\pi(n)}\right]$$

where $\pi$ is the random permutation (bijective function) that shuffles the order of the vectors.

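6. Time warping:

(a) Split each trial into $m$ segments along the $S$ dimension. Denote the $j$th segment as $x_j$, where $j \in \{1, \dots, m\}$.

(b) Each segment $x_j$ is zoomed in/out by a random time scaling factor $r_j$, drawn independently across segments:

$$r_j \sim U(r_{\min}, r_{\max})$$

where $r_j$ follows a uniform distribution with lower scaling limit $r_{\min}$ and upper scaling limit $r_{\max}$.

Linear interpolation is applied to each segment $x_j$, transforming the segment length from the original $L$ to the new length $L'_j = \lfloor r_j L \rfloor$, resulting in the interpolated segment $\tilde{x}_j$:

$$\tilde{x}_j[i] = (1 - w_i)\, x_j[\lfloor p_i \rfloor] + w_i\, x_j[\lceil p_i \rceil], \quad w_i = p_i - \lfloor p_i \rfloor$$

where $p_i = i \,(L - 1)/(L'_j - 1)$, and $i \in \{0, \dots, L'_j - 1\}$ is the segment vector index.

$\lfloor \cdot \rfloor$ (floor operation): greatest integer $\leq$ the argument.

$\lceil \cdot \rceil$ (ceiling operation): smallest integer $\geq$ the argument.

(c) The scaled segments are reconnected to form the signal $\tilde{X}$. To align the new signal length with the original length, the time warping signal $X'$ is finally obtained by resampling $\tilde{X}$ to the original length $S$:

$$X' = \mathrm{Resample}(\tilde{X}, S)$$

where signal $\tilde{X} \in \mathbb{R}^{N \times C \times \tilde{S}}$ with $\tilde{S} = \sum_{j=1}^{m} L'_j$, and the time warping signal $X' \in \mathbb{R}^{N \times C \times S}$.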
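The six transformations can be sketched for a single-channel signal using only the Python standard library. This is a minimal illustration rather than the authors' implementation: all function names are hypothetical, segments are assumed to be of nearly equal length, and the rounding of warped segment lengths is an assumption.

```python
import random


def add_gaussian_noise(x, sigma, rng):
    return [v + rng.gauss(0.0, sigma) for v in x]


def scale_amplitude(x, alpha):
    return [alpha * v for v in x]


def invert_sign(x):          # "horizontal flipping" in the text
    return [-v for v in x]


def reverse_time(x):         # "vertical flipping" in the text
    return x[::-1]


def split(x, n):
    """Split x into n contiguous, nearly equal segments (requires n <= len(x))."""
    bounds = [round(j * len(x) / n) for j in range(n + 1)]
    return [x[bounds[j]:bounds[j + 1]] for j in range(n)]


def dislocate(x, n, rng):
    """Temporal dislocation: shuffle the order of n segments."""
    segments = split(x, n)
    rng.shuffle(segments)
    return [v for seg in segments for v in seg]


def resample_linear(x, new_len):
    """Linearly interpolate x onto new_len evenly spaced positions."""
    if new_len == 1 or len(x) == 1:
        return [x[0]] * new_len
    out = []
    for i in range(new_len):
        pos = i * (len(x) - 1) / (new_len - 1)
        lo = int(pos)                    # lower neighbour (floor)
        hi = min(lo + 1, len(x) - 1)     # upper neighbour, clamped to the last index
        w = pos - lo
        out.append((1 - w) * x[lo] + w * x[hi])
    return out


def time_warp(x, m, r_min, r_max, rng):
    """Stretch or compress m segments independently, then restore the original length."""
    warped = []
    for seg in split(x, m):
        r = rng.uniform(r_min, r_max)
        warped.extend(resample_linear(seg, max(1, round(r * len(seg)))))
    return resample_linear(warped, len(x))
```

Passing an explicit `random.Random` instance as `rng` keeps the augmentations reproducible across runs.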

4.1.2. Tokenization and 1D CNN

Tokenization began with a sliding-window operation on the original EEG signals and the transformed EEG signals. Specifically, the sliding window was applied for each channel individually, where each windowed segment generated one token. All resulting tokens across channels were concatenated to form the set of tokens . The order of token concatenation was inconsequential at that stage, as position embeddings were subsequently applied to preserve temporal and spatial relationships. The sliding window had a size of 1 s and a sliding step of 0.2 s throughout all experiments.

The sliding window approach served two critical purposes: First, it generated an expanded set of tokens to facilitate effective masked prediction tasks. Second, by generating tokens derived from different channels at varying temporal positions, the model could learn cross-channel correlations and temporal dependencies during masked prediction tasks.
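The per-channel sliding window can be sketched as follows, assuming the 200 Hz sampling rate, 5 s segments, and four channels described in the channel selection and data processing sections. The function name `sliding_window_tokens` and the tuple layout are hypothetical.

```python
def sliding_window_tokens(signal, fs=200, window_s=1.0, step_s=0.2):
    """Cut a channels x samples signal into per-channel windows (tokens).

    Returns a list of (channel_index, start_sample, window) tuples.
    """
    win, step = round(window_s * fs), round(step_s * fs)
    tokens = []
    for ch, samples in enumerate(signal):
        for start in range(0, len(samples) - win + 1, step):
            tokens.append((ch, start, samples[start:start + win]))
    return tokens
```

Under these assumptions, a 5 s segment of 1000 samples yields (1000 − 200) / 40 + 1 = 21 windows per channel, that is, 84 tokens for four channels.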

Unlike conventional visual or textual data, EEG signals possess spatiotemporal characteristics that require specialized feature extraction. When general Masked Autoencoders (MAEs) [

24] are applied directly to segmented EEG data, they fail to adequately capture temporal characteristics within segments. This indicates that the nearly linear projection used in ViT and MAE is insufficient for embedding EEG signal tokens. To address this limitation, we incorporated a 1D CNN for temporal feature extraction, denoted as

, for each token.

The 1D CNN was based on a multi-scale 1D ResNet framework, whose residual connections ease gradient flow during backpropagation and consequently improve parameter updates. The architecture drew inspiration from [27], with modifications to the max pooling, average pooling, and fully connected layers to meet affective computing requirements. The network employed a shared convolutional layer comprising a 1D convolution with kernel size 7, batch normalization, ReLU activation, and max pooling. The shared layer was followed by three parallel feature extractors with distinct kernel sizes (3, 5, and 7). Each extractor contained three identical convolutional blocks (1D convolution → batch normalization → ELU activation), with residual connections and average pooling applied after the final block. The multi-scale features from all extractors were concatenated and processed through fully connected layers to produce the output vector. Note that this output directly served as the token embeddings used in subsequent modules.

4.1.3. Transformer Encoder

The transformer encoder $f_{\mathrm{enc}}$ mapped token embeddings into a latent space $Z$; its structure followed the Masked Autoencoders of [24], excluding the linear projection. Given the extracted features $E \in \mathbb{R}^{N \times D}$, where $N$ represents the total number of tokens and $D$ the embedding dimension, position encoding was first performed by adding the position embedding $P_{\mathrm{enc}}$ to each token. Notably, the positional encoding encapsulated partial temporal information and spatial features of the EEG through the token sequence. Random masking was applied to the token embeddings according to a predetermined masking ratio $r$, on both the original EEG branch ($r_o$ = 0.8) and the transformed EEG branch ($r_t$ = 0.5). The unmasked tokens $E_u \in \mathbb{R}^{N_u \times D}$ were then collected and concatenated with a position-encoded class token $C$, which was a learnable token used to aggregate the information of all other tokens to form a global feature representation. This combined representation was processed through four series-connected transformer encoder blocks (encoder heads = 8, embed dim = 768) to obtain the latent space $Z$:

$$Z = f_{\mathrm{enc}}\left(C \oplus E_u\right)$$

where $N_u$ denotes the number of remaining tokens after masking, and ⊕ represents the concatenation operation. The latent class token $Z_C$ served as the feature representation for downstream emotion recognition tasks.

Notably, we applied distinct masking ratios to original ($r_o$) and transformed ($r_t$) EEG signals, with $r_o > r_t$ to facilitate local-to-global representation learning. Only the networks up to and including the transformer encoder were utilized during the downstream task evaluation.
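The effect of the two masking ratios on the number of visible tokens can be sketched as follows. The function name `random_mask` is hypothetical, and truncating the number of kept tokens to an integer is an assumption about how the ratio is applied.

```python
import random


def random_mask(num_tokens, mask_ratio, rng):
    """Return the sorted indices of the tokens kept (unmasked) for the encoder."""
    num_keep = max(1, int(num_tokens * (1.0 - mask_ratio)))  # truncation is an assumption
    return sorted(rng.sample(range(num_tokens), num_keep))
```

For a sequence of 84 tokens, for instance, a ratio of 0.8 leaves 16 visible tokens on the original branch, while a ratio of 0.5 leaves 42 on the transformed branch, so the two branches observe the signal at different levels of context.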

4.1.4. Transformer Decoder

The transformer decoder $f_{\mathrm{dec}}$ reconstructed the EEG signal from the latent representations $Z$; only the latent representations of the original signal were included. The transformer decoder had a similar structure to the transformer encoder, but it employed three series-connected transformer blocks (encoder heads = 8, embed dim = 768) and included an additional linear projection layer. The linear projection $W_{\mathrm{proj}}$ was applied to the latent representations $Z$, aiming for a reduction in the token dimensionality. Then, the projected latent representations were concatenated with additional mask tokens $M$ to restore the original token sequence structure with number $N$. This combined representation underwent positional encoding before being processed by the transformer decoder. The final reconstructed EEG signal $Y$ was obtained through layer normalization and a linear projection operation $W_{\mathrm{out}}$ applied to the transformer decoder’s output:

$$Y = W_{\mathrm{out}}\left(\mathrm{LN}\left(f_{\mathrm{dec}}\left(\mathcal{R}\left(W_{\mathrm{proj}}(Z) \oplus M\right) + P_{\mathrm{dec}}\right)\right)\right)$$

where $\mathcal{R}(\cdot)$ denotes the reorganized operation. It should be noted that the positional embeddings of the transformer encoder and decoder ($P_{\mathrm{enc}}$ and $P_{\mathrm{dec}}$) are different.

4.2. Training Task

The pretext objectives can be divided into signal reconstruction and contrastive learning. For the signal reconstruction task, we computed the loss only on masked tokens, between the decoder’s predictions and the original EEG signals, ensuring that $Y$ approximated $X$ as closely as possible. Given the high variability in raw EEG signals, the traditional Mean Squared Error (MSE) may suffer from instability due to differences in data scale and magnitude. To address this, we employed the Normalized Mean Squared Error (NMSE) loss for more robust optimization. The reconstruction loss was as follows:

$$\mathcal{L}_{\mathrm{rec}} = \frac{1}{N_m} \sum_{i=1}^{N_m} \left\| y_i - \frac{x_i - \mu_i}{\sigma_i} \right\|_2^2$$

where $N_m$ represents the number of masked tokens, $y_i$ and $x_i$ are the reconstructed and original signals of the $i$th masked token, and $\mu_i$ and $\sigma_i$ represent the mean and standard deviation of each token, respectively.
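A normalized reconstruction loss of this kind can be sketched as follows: each masked target token is standardized by its own mean and standard deviation before the squared error is computed. The function name `nmse_loss`, the small constant `eps`, and averaging (rather than summing) over the samples of a token are assumptions made for illustration.

```python
import statistics


def nmse_loss(pred_tokens, target_tokens, masked_idx, eps=1e-6):
    """Mean squared error against per-token standardized targets, masked tokens only."""
    total = 0.0
    for i in masked_idx:
        target = target_tokens[i]
        mu = statistics.fmean(target)
        sigma = statistics.pstdev(target)
        # Standardize the target token; eps guards against flat (zero-variance) tokens.
        norm = [(v - mu) / (sigma + eps) for v in target]
        total += statistics.fmean((p - t) ** 2 for p, t in zip(pred_tokens[i], norm))
    return total / len(masked_idx)
```

Because every target token is rescaled to zero mean and unit variance, tokens with large amplitudes no longer dominate the loss.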

The objective of contrastive learning is to maximize the similarity between identical instances while minimizing the similarity between different instances. Given the original EEG signal and its transformed pair, we extracted two latent class tokens, denoted as $z^{o}$ and $z^{t}$, respectively.

Based on these tokens, we constructed positive pairs by matching tokens from the same batch position index, while negative pairs were formed by pairing tokens from different indices. The similarity between these token pairs was then quantified using the Normalized Temperature-scaled Cross-Entropy loss (NT-Xent) [56].

The final contrastive loss $\mathcal{L}_{\mathrm{con}}$ was computed as the mean of the bidirectional NT-Xent losses between the original and transformed token representations:

$$\ell(u, v) = -\frac{1}{B}\sum_{i=1}^{B} \log \frac{\exp\left(\mathrm{sim}(u_i, v_i)/\tau\right)}{\sum_{k=1}^{B} \exp\left(\mathrm{sim}(u_i, v_k)/\tau\right) + \sum_{k=1}^{B} \mathbb{1}_{[k \neq i]} \exp\left(\mathrm{sim}(u_i, u_k)/\tau\right)}$$

$$\mathcal{L}_{\mathrm{con}} = \frac{1}{2}\left[\ell(z^{o}, z^{t}) + \ell(z^{t}, z^{o})\right]$$

where $\mathrm{sim}(\cdot,\cdot)$ represents the cosine similarity, $\tau$ is a temperature parameter scaling the similarity distribution (0.05 by default), $B$ is the batch size, and $\mathbb{1}_{[k \neq i]}$ is an indicator function evaluating to 1 when $k \neq i$.
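A bidirectional NT-Xent loss can be sketched in plain Python as follows. This follows a common SimCLR-style formulation in which, for each anchor, all other tokens of both views act as negatives; the exact set of negatives used in EmoAdapt may differ, and the function names are hypothetical.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nt_xent_one_way(anchors, positives, tau=0.05):
    """NT-Xent with anchors[i] matched to positives[i]; all other tokens are negatives."""
    batch = len(anchors)
    loss = 0.0
    for i in range(batch):
        pos = math.exp(cosine(anchors[i], positives[i]) / tau)
        # Cross-view terms (include the positive pair itself).
        denom = sum(math.exp(cosine(anchors[i], positives[k]) / tau) for k in range(batch))
        # Same-view terms, excluding the anchor's similarity with itself (k != i).
        denom += sum(math.exp(cosine(anchors[i], anchors[k]) / tau)
                     for k in range(batch) if k != i)
        loss += -math.log(pos / denom)
    return loss / batch


def contrastive_loss(z_orig, z_trans, tau=0.05):
    """Mean of the two directions: original -> transformed and transformed -> original."""
    return 0.5 * (nt_xent_one_way(z_orig, z_trans, tau) + nt_xent_one_way(z_trans, z_orig, tau))
```

With a low temperature such as 0.05, correctly aligned pairs drive the loss close to zero, whereas mismatched pairs are penalized heavily.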

In the training process, the masked prediction task was performed concurrently with contrastive learning. To balance the contributions of reconstruction loss and contrastive loss, we introduced a hyperparameter

. This configuration allowed the overall loss magnitude to be controlled by the learning rate, while the ratio between reconstruction loss and contrastive loss could be adjusted through

. The final compound loss function was

4.3. Classification Task

The proposed method did not focus on the design of downstream classifiers, as a wide variety of established models are already available for emotion recognition. Machine learning-based methods include Linear Discriminant Analysis (LDA) [

57], Bayesian methods [

58], SVM [

59], MLP, KNN [

60], and Random Forest [

61]. Deep learning-based approaches comprise Deep Belief Networks (DBNs) [

20], CNNs [

62,

63,

64], CNN-LSTM hybrid models [

65], Graph Neural Networks (GNNs) [

66], and CNN–transformer hybrid [

67]. In addition to conventional classifiers, domain adaptation (DA) techniques are more suitable for cross-subject tasks, given their growing prominence in contemporary research [

17,

18].

ML algorithms generally achieve lower accuracy than the latest DL algorithms, though their robustness enables consistent performance across diverse scenarios. Thus, ML methods remain more prevalent in most emotion recognition systems and commercial applications. In the following experiment, we employed multiple ML algorithms for downstream task training and testing, and four commonly used ML methods were selected: SVM, MLP, KNN, and RF.

Model performance was evaluated using accuracy (ACC) and Macro-F1 (MF1) scores. ACC reflects the overall prediction correctness of the model, while MF1 simultaneously considers both precision and recall across all classes, providing a balanced measure especially valuable for imbalanced datasets. Formulas for ACC and MF1 are as follows:

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{MF1} = \frac{1}{N} \sum_{i=1}^{N} \frac{2\,TP_i}{2\,TP_i + FP_i + FN_i}$$

where $TP$ and $TN$ denote the True Positives and True Negatives, $FP$ and $FN$ denote the False Positives and False Negatives, the subscript $i$ indicates the counts for class $i$, and $N$ is the total number of classes.
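Both metrics can be computed directly from label lists, as in the sketch below. The function names are hypothetical, and accuracy is taken here as the fraction of correctly predicted samples, which is the usual multi-class reading of overall prediction correctness.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    scores = []
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)  # F1 = 2TP / (2TP + FP + FN)
    return sum(scores) / len(scores)
```

Because every class contributes equally to the average, a classifier that neglects one emotion category is penalized in MF1 even when its overall accuracy remains high.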

5. Experiment and Materials

This section outlines the datasets employed for algorithm validation, the experimental protocol for data acquisition, and all data processing procedures.

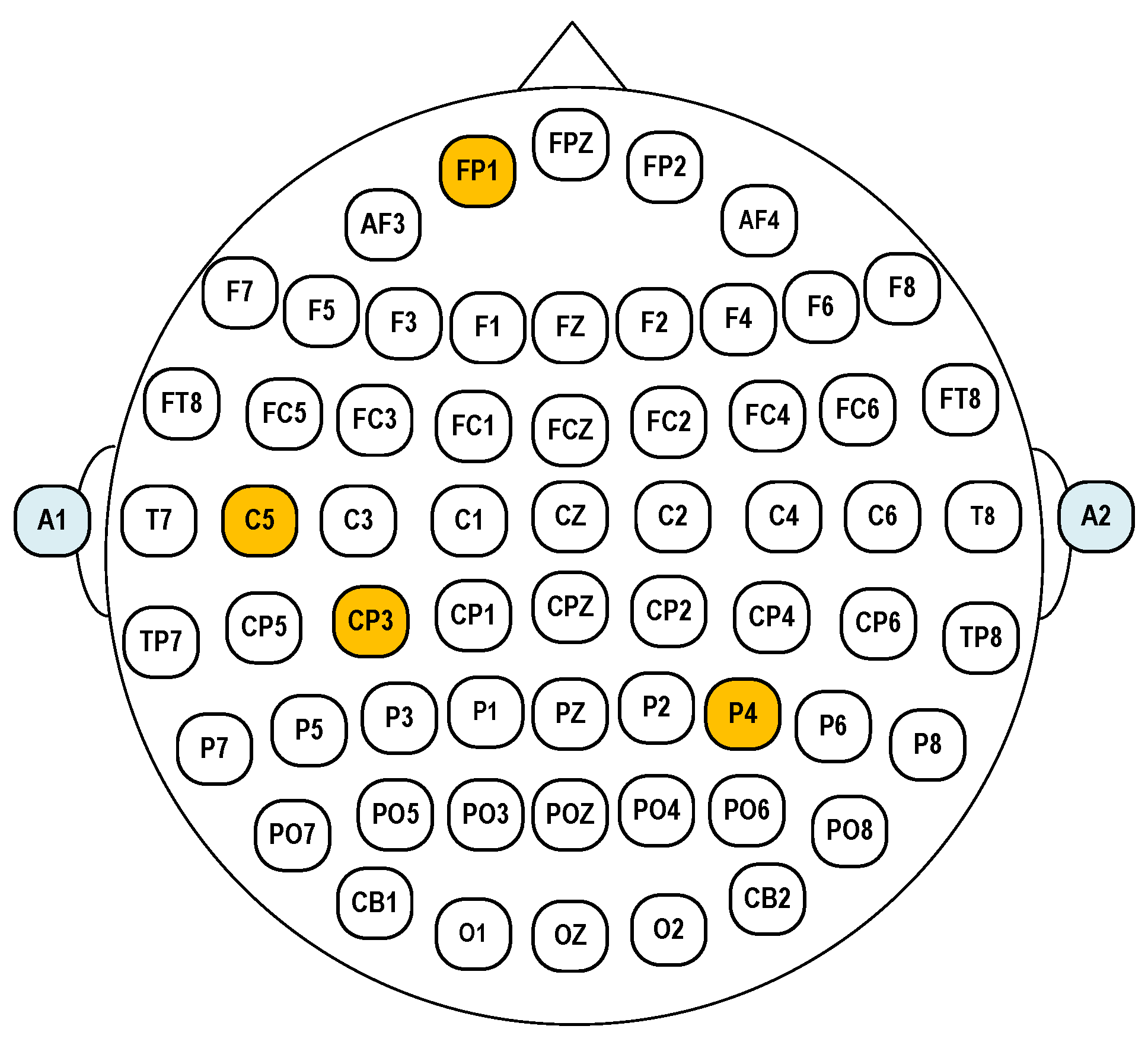

5.1. Channel Selection

For few-channel emotion recognition devices, electrode selection is crucial. Since emotions typically involve activity across different brain regions, sparse electrode configurations often fail to adequately capture emotional dynamics. Based on prior research on optimal electrode placement, the temporal lobe regions are generally prioritized [

20]. However, to better capture the comprehensive spatial patterns of brain activity, we positioned two additional electrodes over the prefrontal and parietal regions. The final electrode configuration consisted of Fp1, C5, Cp3, and P4. The reference and bias electrodes were placed at the left ear (A1) and right ear (A2), respectively. The layout of the selected EEG electrodes based on the 10–20 system is shown in

Figure 14.

5.2. SEED Dataset

The SEED dataset was collected from 15 participants (7 males and 8 females), each undergoing three sessions one week apart, resulting in a total of 45 sessions [

68]. In each session, participants watched 15 film clips (5 positive, 5 negative, and 5 neutral) presented in a well-organized sequence to prevent consecutive clips of the same emotion. Each trial began with a 5 s hint, followed by a 4 min film clip, a 45 s self-assessment where participants reported their emotional responses via a questionnaire, and a 15 s rest period. The dataset was designed for a three-class emotion classification task (positive, negative, neutral), with participants instructed to provide immediate and genuine feedback after each clip to ensure reliable emotional labeling.

5.3. Experiment Protocol

To ensure the comparability and reliability of our experiment, we employed the same emotional induction materials as the SEED dataset, consisting of 15 Chinese film clips categorized into positive, neutral, and negative. The experiment involved two healthy subjects with no visual impairments, each seated comfortably in front of a computer. The experiment comprised a total of 15 trials, with each trial beginning with a 5 s cue before the video playback, followed by a 20 s rest period after each clip. The presentation order of the film clips was kept the same as that of the SEED dataset.

5.4. Data Processing

For the SEED dataset, we utilized the provided preprocessed data, which had been downsampled to 200 Hz and filtered with a 0–75 Hz low-pass filter after segmentation. The processed data were then cut into fixed 5 s windows without overlap to simulate a real online task; the 5 s length was selected to balance prediction accuracy and feedback frequency. Labels were assigned to the windows based on the video sequence, and the windows were concatenated along the first dimension, resulting in labels of shape (windows) and data of shape (windows × channels × 1000), where 1000 samples correspond to 5 s at 200 Hz. Each experiment was saved as a separate file. Our collected data underwent the same processing steps as the SEED dataset, including downsampling to 200 Hz, applying a 0–75 Hz low-pass filter, and segmentation with a fixed 5 s window length, with each experiment also saved as an individual file.
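The windowing step can be sketched as follows. The function name `segment_trial` is hypothetical, and dropping an incomplete tail shorter than one window is an assumption, since the handling of leftover samples is not specified.

```python
def segment_trial(trial, label, fs=200, window_s=5):
    """Split one channels x samples trial into non-overlapping windows.

    Returns (windows, labels). Each window is channels x (fs * window_s);
    an incomplete tail shorter than one window is dropped (an assumption).
    """
    win = fs * window_s
    n_windows = len(trial[0]) // win
    windows = [[ch[k * win:(k + 1) * win] for ch in trial] for k in range(n_windows)]
    return windows, [label] * n_windows
```

Concatenating the windows of all trials along the first dimension then gives data of shape (windows × channels × 1000) with one label per window.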

6. Results

6.1. Signal Acquisition Validation

To verify the functionality of the proposed EEG acquisition system, a series of signal recording experiments were conducted under typical usage scenarios.

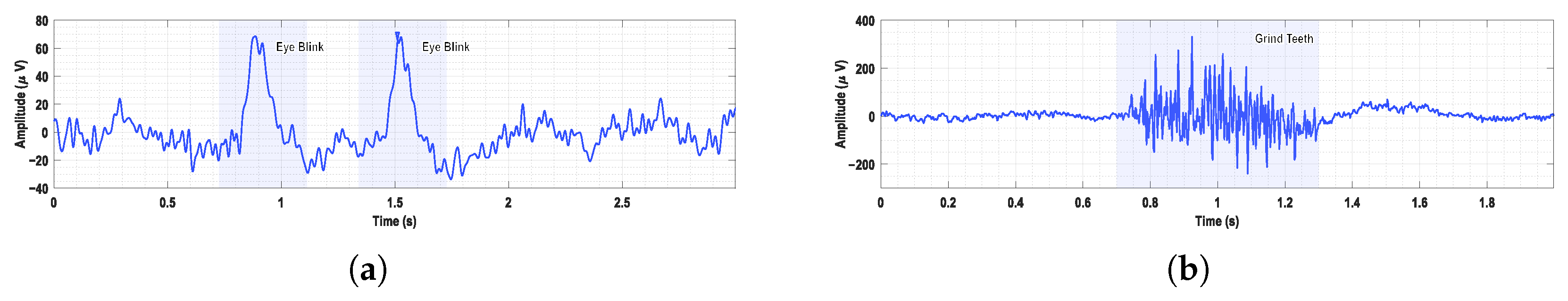

Figure 15 shows two representative types of bioelectrical signals recorded in addition to EEG. Clear eye-blink artifacts are visible in the EEG traces. Distinct EMG activity was observed during voluntary teeth clenching, reflecting effective muscle signal acquisition.

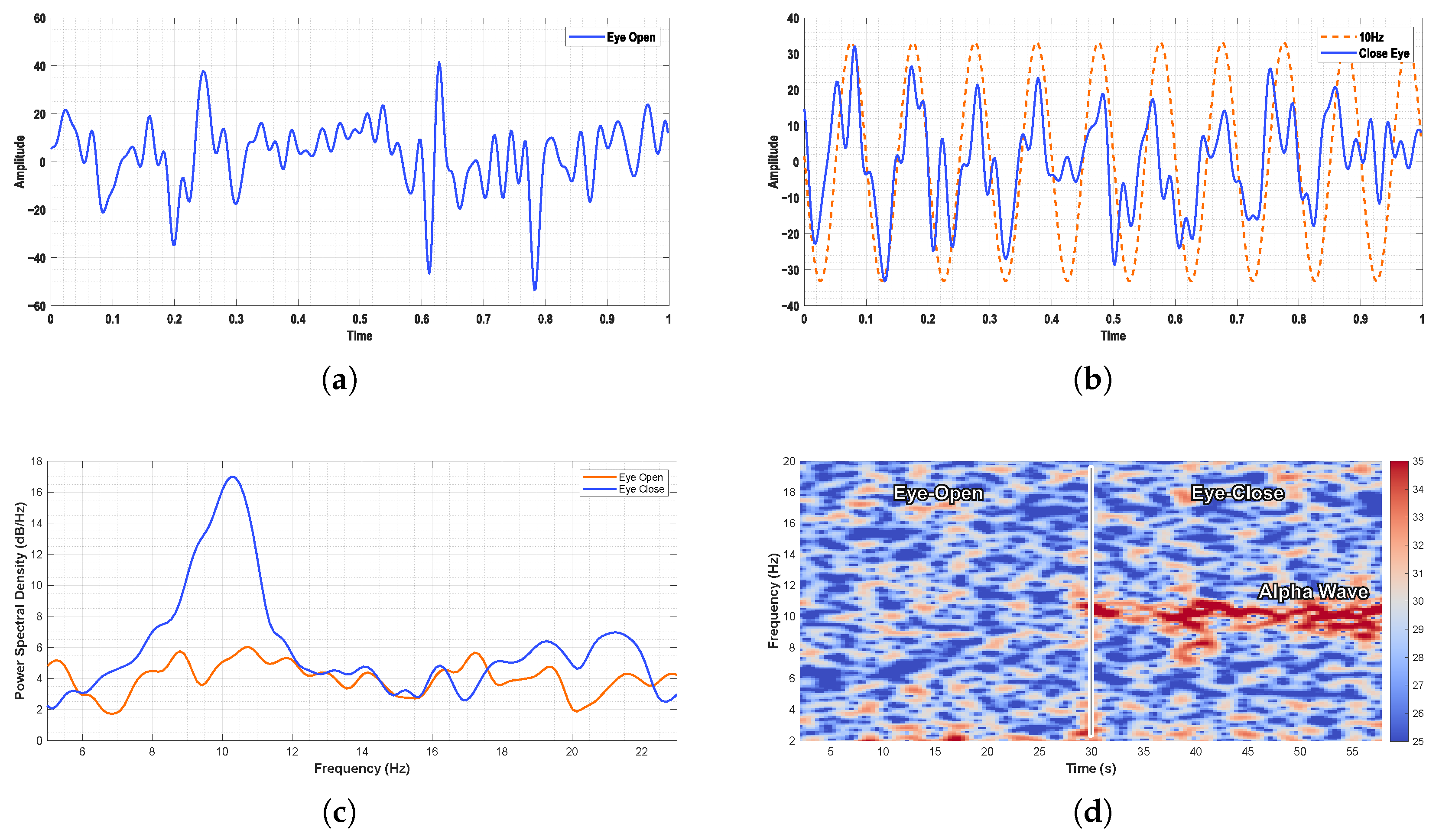

For EEG recordings, the alpha-band activity was clearly observed in the occipital region during the eyes-closed state.

Figure 16 illustrates the PSD and time–frequency analysis of EEG signals under eyes-open and eyes-closed conditions. A pronounced increase in alpha-band power was evident during the eyes-closed state, consistent with well-established physiological patterns.

6.2. Methodology Validation

6.2.1. Feature Baselines

Power spectral density (PSD) and differential entropy (DE) features are commonly employed in emotion recognition systems, with their effectiveness well documented in previous studies. Both PSD and DE features are typically extracted across five standard frequency bands after applying a Hamming window: $\delta$: 1–3 Hz, $\theta$: 4–7 Hz, $\alpha$: 8–13 Hz, $\beta$: 14–30 Hz, and $\gamma$: 31–50 Hz. It should be noted that the DE feature, originally an extension of Shannon entropy under Gaussian distribution, considers the variance of EEG time series signals. However, since this variance equals the average energy within the frequency band, DE can alternatively be computed through a PSD. Time-domain DE extraction is given by [68]:

$$h(X) = -\int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}} \log\left(\frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}\right) dx = \frac{1}{2}\log\left(2\pi e \sigma^2\right)$$

where EEG is the time sequence $X$ and $X \sim \mathcal{N}(\mu, \sigma^2)$.

For both PSD and DE features in our experiment, we applied the FFT with a fixed 1 s window corresponding to 200 samples, without overlapping the windows.

In addition to frequency-domain features, we incorporated time-domain features considering potential online system applications. We selected computationally efficient features including the following:

Power (P): reflects signal intensity, calculated as the ratio of the sum of squared signal samples to the number of samples.

Line length (L): measures waveform dimensional changes, influenced by both frequency and amplitude variations, computed as the sum of absolute differences between consecutive samples.

Root-mean-square (RMS): obtained by taking the square root of the mean squared EEG signal samples.

First difference (D1): calculated as the sum of differences between sample pairs divided by .

Second difference (D2): computed similarly to D1 but based on samples.
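Several of these features follow directly from their verbal definitions, as sketched below for a single-channel segment. The function names are hypothetical; the DE function uses the closed form for a Gaussian-distributed signal and assumes the segment has already been band-pass filtered to the band of interest. D1 and D2 are omitted from the sketch.

```python
import math


def power(x):
    """Sum of squared samples divided by the number of samples."""
    return sum(v * v for v in x) / len(x)


def line_length(x):
    """Sum of absolute differences between consecutive samples."""
    return sum(abs(b - a) for a, b in zip(x, x[1:]))


def rms(x):
    """Square root of the mean squared sample value."""
    return math.sqrt(power(x))


def differential_entropy(x):
    """DE under a Gaussian assumption: 0.5 * log(2 * pi * e * variance)."""
    mean = sum(x) / len(x)
    variance = sum((v - mean) ** 2 for v in x) / len(x)
    return 0.5 * math.log(2 * math.pi * math.e * variance)
```

Each function makes a single pass over the segment, which keeps the cost low enough for online use.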

6.2.2. Model Baselines

Multiple recent and classical machine learning and deep learning methods were compared in the experiments, and they were tuned to their best performance.

Feature-based machine learning baselines and their parameters were as follows:

Random Forest (RF): the number of estimators was set to 100 for both traditional and SSL-based features.

Multilayer perceptron (MLP):

- –

SSL-based features: hidden layer size = 1000, initial learning rate = , ReLU activation.

- –

Traditional features: hidden layer size = 100, initial learning rate = , ReLU activation.

k-Nearest Neighbors (k-NN): the number of neighbors (k) was set to 50 for both feature types.

Support Vector Machine (SVM):

- –

SSL-based features: regularization parameter , RBF kernel.

- –

Traditional features: , RBF kernel.

Deep learning baselines included the following:

ERTNet is a CNN–transformer model. Feature maps are extracted through temporal and spatial convolutions, and high-level spatiotemporal features are integrated using a transformer [

67].

EEGNet is a classical EEG-based deep learning model built on depthwise and separable convolutions [

63].

ShallowConvNet and DeepConvNet are two CNNs of different depths designed based on EEG [

64].

Tsception is a multi-scale convolutional neural network consisting of dynamic temporal layers, asymmetric spatial layers, and a high-level fusion layer [

62].

6.2.3. Validation on SEED Dataset

In the cross-session evaluation, we ensured that the SSL model and downstream machine learning training utilized identical sessions for the training set, while the test set was exclusively composed of data from a distinct session. Specifically, the SEED dataset comprised three sessions, each containing data from 15 subjects. Initially, we trained the unsupervised model using all subjects from one session for 40 epochs. Subsequently, the identical session’s data were employed for downstream emotion classification training. Upon completion of these two training phases, the model was evaluated on the remaining two sessions separately, with the ACC and MF1 being recorded. To enhance robustness, four distinct machine learning models were trained and tested in parallel. After iterating this process across all three sessions, the results were averaged to yield the SSL features’ performance reported in

Table 6. ML models were trained and tested in the same way for baseline features and SSL features; that is, the features output by the SSL model were simply replaced with the baseline features for downstream training and testing. For the DL methods, the models were trained on one session and independently evaluated on the other two sessions.
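The cross-session protocol amounts to six train/test evaluations whose scores are averaged, which can be sketched as follows. The function names are hypothetical.

```python
def cross_session_splits(sessions=(1, 2, 3)):
    """Return (train_session, test_session) pairs: train on one, test on each other one."""
    return [(train, test) for train in sessions for test in sessions if test != train]


def average_scores(scores):
    """Average the per-split scores into the single value reported per classifier."""
    return sum(scores) / len(scores)
```

With three sessions, each session serves once as the training set and is tested on the two remaining sessions, giving six scores per classifier and feature type.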

The experimental results demonstrated that the SSL features extracted by the SSL model outperformed the baseline features on the RF, MLP, and SVM in both ACC and MF1, and they also outperformed all DL methods. Notably, the SVM classifier delivered the highest performance with SSL features, attaining an average accuracy of 0.5471 and an MF1 of 0.5447. Furthermore, SSL features exhibited a smaller performance variance among different ML algorithms, with maximum deviations of only 0.034 (ACC and MF1) across models; this showed the robustness of our proposed algorithm.

However, we observed that the SSL features achieved comparable ACC to DE features when using KNN, while outperforming DE features in terms of MF1. The improvements in SSL features over traditional methods remained modest, with the highest gains observed for the MLP (0.058 in ACC and 0.066 in MF1). Although our algorithm demonstrated greater robustness than traditional features on the SEED dataset, the overall performance improvement was limited.

6.2.4. Validation on Experiment Dataset

Following the validation on the SEED dataset, we further investigated the performance of the SSL model on our proposed portable device through a cross-session experiment. In our cross-session test, we intentionally employed a cross-dataset SSL feature extractor to evaluate the robustness and generalization capability. Specifically, the SSL feature extractor was trained on session 3 from the SEED dataset, while downstream ML classifiers were trained and tested based on the experiment’s data in a cross-session manner. Our validation results indicated that this cross-dataset approach caused a performance reduction of 1–3% compared to conventional cross-session SSL training.

Despite this slight performance trade-off, we deliberately adopted the cross-dataset paradigm for two key reasons: to prove the robustness of our algorithm to the changes in the dataset and to highlight its low sensitivity to the EEG signal quality.

For the ML baselines’ comparison, we exclusively employed DE features, as they demonstrated better performance compared to PSD and time-domain features in prior cross-session validation.

Experimental results from

Table 7 demonstrate that our proposed method outperformed ML baselines based on DE features across both ACC and MF1. The most significant improvement was observed with the MLP, achieving a 20.4% increase in ACC and a 20.9% enhancement in MF1. The RF classifier yielded the highest performance under the SSL features (ACC: 60.2%; MF1: 59.4%). The DL baselines showed comparable performance to DE-based ML methods in that experiment. Tsception, which had the best performance among DL methods, exhibited similar results to the DE-based Random Forest, with differences in both ACC and MF1 being less than 1%. However, our proposed approach based on RF outperformed Tsception and DE-based RF by around 5% ACC and 6% MF1. Through a comparative analysis between our experiment and the previous experiment on the SEED dataset, our method demonstrated significantly greater performance improvements on our dataset compared to other baseline approaches. For instance, while the SSL feature-based SVM showed only a 1% ACC improvement over DE features on the SEED dataset, our experiments revealed a substantial 9% enhancement. Similarly, the MLP achieved a 20.4% ACC improvement in our study, significantly higher than the 5.8% observed on the SEED dataset. The Random Forest classifier showed a 4.8% ACC increase (compared to 0.24% on the SEED dataset), and KNN demonstrated a 9.9% improvement (versus −0.17% on the SEED dataset).

These results indicated that our model was more effective on data from our proposed device than on the SEED dataset, which aligned with our initial hypotheses. These findings support the claim that our model performs particularly well on devices with potential signal quality issues.
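For readers who want to reproduce the two reported metrics, the following is a minimal sketch in plain Python. It assumes that MF1 denotes the macro-averaged F1 score, i.e., the unweighted mean of per-class F1 values; the function name `accuracy_and_macro_f1` is illustrative only.

```python
from typing import Sequence, Tuple


def accuracy_and_macro_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Return (ACC, macro-F1) for integer class labels."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")

    pairs = list(zip(y_true, y_pred))
    acc = sum(t == p for t, p in pairs) / len(pairs)

    f1_scores = []
    # Every class that occurs in either the labels or the predictions counts once.
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        # F1 = 2TP / (2TP + FP + FN); the denominator is non-zero for any listed class.
        f1_scores.append(2 * tp / (2 * tp + fp + fn))

    return acc, sum(f1_scores) / len(f1_scores)
```

Because the macro average weights each emotion class equally, it is less forgiving than ACC when a classifier neglects one class.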

6.2.5. Ablation Study

In this section, we conducted ablation experiments to validate the effectiveness of each component in our model; the results can be found in Table 8. As described in Section 4 (Methodology), we introduced contrastive learning through an additional branch, where different mask rates were applied to the original EEG and transformed EEG branches, enabling the model to learn from local-to-global representations.

To investigate the impact of key components, we performed three ablation studies:

1. Non-ST (signal transformation): we removed the signal transformation component to examine the effectiveness of contrastive learning.

We adjusted the mask rate of the transformed EEG branch to evaluate the importance of local-to-global learning. Specifically, we implemented two variants:

2. Non-DMR (differential mask rate): the mask rate of the transformed EEG branch was set equal to that of the original EEG branch, which was fixed at 0.8 during all experiments.

3. Non-STM (spatio-temporal masking): the mask rate of the transformed EEG branch was set to zero (no masking).
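The three variants can be summarized as configurations of the two branches. The sketch below is illustrative only: `BranchConfig`, `VARIANTS`, and `random_mask` are hypothetical names, and the transformed-branch mask rate of the full model (`FULL_TRANSFORMED_RATE`) is a placeholder because this section does not state it. How the second branch is fed when the signal transformation is removed is also not specified here.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BranchConfig:
    use_signal_transform: bool    # False corresponds to the Non-ST variant
    original_mask_rate: float     # fixed at 0.8 in all experiments (from the text)
    transformed_mask_rate: float  # varied by the Non-DMR and Non-STM variants


# Hypothetical placeholder: the value used by the full model is not given in this section.
FULL_TRANSFORMED_RATE = 0.5

VARIANTS = {
    "Full": BranchConfig(True, 0.8, FULL_TRANSFORMED_RATE),
    "Non-ST": BranchConfig(False, 0.8, FULL_TRANSFORMED_RATE),
    "Non-DMR": BranchConfig(True, 0.8, 0.8),  # same rate on both branches
    "Non-STM": BranchConfig(True, 0.8, 0.0),  # transformed branch left unmasked
}


def random_mask(num_patches: int, mask_rate: float, rng: random.Random) -> List[bool]:
    """Return a mask where True marks a masked patch; round(num_patches * mask_rate) are masked."""
    if not 0.0 <= mask_rate <= 1.0:
        raise ValueError("mask_rate must lie in [0, 1]")
    num_masked = round(num_patches * mask_rate)
    masked = set(rng.sample(range(num_patches), num_masked))
    return [i in masked for i in range(num_patches)]
```

With a rate of 0.8 the branch sees only a small local portion of the input, while a rate of zero exposes the whole signal, which is one way to read the "local-to-global" contrast that Non-DMR and Non-STM probe.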

6.2.6. Visualization

To comprehensively evaluate the performance enhancement of SSL features over conventional features, we employed t-distributed stochastic neighbor embedding (t-SNE) for dimensionality reduction and visualization. The t-SNE projection demonstrated an expansion of inter-class separability, indicating that the distinctions between different emotion categories became more pronounced in the SSL feature space. Additionally, intra-class variability was substantially minimized, resulting in tighter clustering of samples belonging to the same emotional category. As illustrated in Figure 17, the SSL features exhibited more compact intra-class distributions and clearer inter-class separation boundaries compared to conventional DE features.

Figure 17a,b show the data from session 3 of the 15th subject in the SEED dataset. The three emotion classes form non-overlapping clusters in the t-SNE space in Figure 17a. However, the DE features demonstrated limited performance on devices with potential signal quality issues, as illustrated in Figure 17c. On our acquisition equipment, the DE features failed to discriminate among the three emotional classes, showing complete overlap in their representations. In contrast, our proposed method achieved effective separation of the three emotional categories in the t-SNE space, with significantly reduced overlap.

Notably, we observed a partial overlap between a small subset of neutral and happy emotion samples in Figure 17b, which likely reflected inherent label noise caused by imperfect emotional elicitation during data collection rather than deficiencies in the SSL model itself. This observation underscores the model’s ability to capture subtle emotional nuances that may not be perfectly aligned with the ground-truth labels.
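The visual impression of tighter clusters and wider gaps can also be expressed as a single number. The sketch below computes a simple ratio of mean inter-centroid distance to mean distance of each sample from its own class centroid. This ratio is an illustrative assumption, not a metric reported in this work, and `separability_ratio` is a hypothetical name. Since t-SNE does not preserve global distances, such a score is better computed on the original feature vectors than on the 2-D projection.

```python
import math
from collections import defaultdict
from typing import Sequence


def separability_ratio(points: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean inter-centroid distance divided by mean point-to-own-centroid distance."""
    groups = defaultdict(list)
    for point, label in zip(points, labels):
        groups[label].append(point)
    if len(groups) < 2:
        raise ValueError("at least two classes are required")

    # Per-class centroid: coordinate-wise mean of the class members.
    centroids = {
        label: [sum(col) / len(members) for col in zip(*members)]
        for label, members in groups.items()
    }

    intra = sum(math.dist(p, centroids[y]) for p, y in zip(points, labels)) / len(points)

    keys = sorted(centroids)
    pairs = [(a, b) for i, a in enumerate(keys) for b in keys[i + 1:]]
    inter = sum(math.dist(centroids[a], centroids[b]) for a, b in pairs) / len(pairs)

    # Larger values mean compact classes that lie far apart.
    return inter / intra if intra > 0 else math.inf
```

A higher ratio for SSL features than for DE features on the same recordings would be consistent with the qualitative picture described above.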

7. Discussion

As shown in Table 1, the amplifier selected in this work operated at a lower voltage while providing higher gain compared to several established commercial devices. While this configuration reduced the burden on the ADC, it constrained the dynamic range, limiting the input to

. To mitigate this, the system is typically operated at a lower gain setting to accommodate a wider dynamic range. Additionally, stable electrode attachment is critical to prevent motion-induced voltage spikes that may cause amplifier saturation. Currently, input noise, CMRR, and other parameters are not yet optimized and will be addressed in future iterations. However, our prototype system incorporating the proposed algorithm successfully achieved the intended emotion analysis objectives, demonstrating encouraging initial performance.

To address these signal quality limitations, we introduced the self-supervised feature extractor EmoAdapt. As demonstrated in Table 6 and Table 7, EmoAdapt significantly alleviated the performance degradation due to signal noise. However, it still encountered challenges related to cross-subject feature distribution discrepancies, particularly for new users for whom model fine-tuning is not possible. Future work will explore extending the framework to a semi-supervised paradigm, where partial label information is incorporated into the NT-Xent loss to better align feature centroids between positive and negative pairs.
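For reference, the standard unsupervised NT-Xent loss (normalized temperature-scaled cross-entropy) can be sketched in plain Python as follows. This is the generic formulation over paired views, not necessarily the exact variant used in EmoAdapt, and it does not include the label-aware extension proposed as future work. The function name `nt_xent_loss` and the default temperature of 0.5 are assumptions made for illustration.

```python
import math
from typing import Sequence


def _cosine(u: Sequence[float], v: Sequence[float]) -> float:
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("embeddings must be non-zero vectors")
    return sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)


def nt_xent_loss(
    view_a: Sequence[Sequence[float]],
    view_b: Sequence[Sequence[float]],
    temperature: float = 0.5,  # assumed value, not taken from the paper
) -> float:
    """Average NT-Xent loss over all 2N anchors; view_a[i] and view_b[i] form a positive pair."""
    n = len(view_a)
    if n == 0 or n != len(view_b):
        raise ValueError("both views must contain the same non-zero number of embeddings")

    z = list(view_a) + list(view_b)
    total = 0.0
    for i in range(2 * n):
        j = (i + n) % (2 * n)  # index of the positive partner of anchor i
        positive = _cosine(z[i], z[j]) / temperature
        # The denominator runs over every other embedding in the batch, positive included.
        log_denominator = math.log(
            sum(math.exp(_cosine(z[i], z[k]) / temperature) for k in range(2 * n) if k != i)
        )
        total += log_denominator - positive
    return total / (2 * n)
```

A semi-supervised extension along the lines described above would change which indices count as positives for each anchor, for example by also treating same-label samples as positives when labels are available.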

Currently, the system is a single-modal, low-channel EEG acquisition device. Extensive studies have demonstrated that combining EEG with other modalities can enhance emotion recognition performance. For instance, Yang et al. proposed an AI edge computation platform for emotion recognition using wearable physiological sensors [69]. Using EEG and ECG/PPG signals for emotion classification, they achieved accuracies of 94.3% and 76.8%, respectively. That platform integrated a RISC-V CPU, an accelerator, and an FPGA, enabling real-time applications and local neural network training. Future enhancements may involve exploring more complex network architectures and multimodal signal fusion strategies.

Similarly, Kim et al. introduced a wearable system capable of detecting emotional transitions and identifying causal relationships in daily scenarios [70]. Additionally, Saffaryazdi et al. combined facial micro-expressions, EEG, GSR, PPG, and other multimodal signals for emotion recognition [71]. Unlike macro-expressions, micro-expressions provide more nuanced cues, contributing to greater accuracy. Their experimental results indicated that combining multiple modalities and fusing their outputs could improve emotion recognition.

Motivated by these insights, future development of the proposed system could exploit its reserved expansion interfaces to enable multimodal signal acquisition. Nevertheless, given the system’s emphasis on cost-effectiveness, it is essential to critically assess whether the integration of additional modalities yields performance improvements that justify the increased hardware complexity and cost. If such gains do not scale proportionally, the incorporation of additional sensing modalities may not be economically justified.

In this paper, we proposed a modular concept for the system, allowing for easy replacement of the analog front-end (AFE). So far, we have only validated the feasibility of this concept using the KS1092 AFE, and no side-by-side comparison with state-of-the-art equipment has been conducted. In future work, we can therefore design various AFE modules based on this system and evaluate their performance individually. Additionally, we can establish an objective cost-effectiveness metric based on the test results to help future researchers and engineers select an AFE during system design.

8. Conclusions

This study presented a complete, portable, and cost-effective EEG-based emotion recognition system that integrated modular hardware with a robust self-supervised learning framework. The hardware, developed with a total cost under USD 30, supported both wet and dry electrodes, wireless data transmission, onboard storage, adjustable gain and sampling rates, and real-time visualization through a web interface, making it suitable for real-world deployment. On the algorithmic side, we proposed EmoAdapt, a self-supervised feature extractor designed to enhance emotion-related EEG feature learning. Evaluated on both public (SEED) and in-house datasets under cross-session, cross-subject, and cross-dataset scenarios, EmoAdapt consistently outperformed baseline methods in terms of feature robustness and discriminability, particularly under the low-signal-quality conditions typical of portable acquisition. The proposed system demonstrated a well-balanced design between hardware affordability and algorithmic performance, offering a scalable and accessible solution for practical affective-computing applications.

Author Contributions

Conceptualization, H.L. (Hao Luo), H.L. (Haobo Li), C.-I.I. and F.W.; Methodology, H.L. (Haobo Li) and W.T.; Software, H.L. (Hao Luo) and Y.Y.; Validation, H.L. (Hao Luo) and H.L. (Haobo Li); Formal analysis, H.L. (Haobo Li) and Y.Y.; Investigation, H.L. (Hao Luo) and W.T.; Resources, C.-I.I. and F.W.; Data curation, H.L. (Haobo Li); Writing—original draft, H.L. (Hao Luo), H.L. (Haobo Li); Writing—review & editing, W.T., Y.Y., C.-I.I. and F.W.; Visualization, H.L. (Hao Luo) and H.L. (Haobo Li); Supervision, C.-I.I. and F.W.; Project administration, C.-I.I. and F.W.; Funding acquisition, C.-I.I. and F.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded in part by The Science and Technology Development Fund, Macau SAR (File no. 0085/2023/AMJ), by University of Macau (File no. MYRG2022-00197-FST, MYRG-GRG2024-00285-FST, MYRG-CRG2024-00048-FST-ICI), and by Guangdong Basic and Applied Basic Research Foundation (Grant No. 2023A1515010844).

Data Availability Statement

Acknowledgments

Thanks are due to Jerry Zhang for offering technical support on KS1092.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Alarcao, S.M.; Fonseca, M.J. Emotions recognition using EEG signals: A survey. IEEE Trans. Affect. Comput. 2017, 10, 374–393.

- Li, X.; Zhang, Y.; Tiwari, P.; Song, D.; Hu, B.; Yang, M.; Zhao, Z.; Kumar, N.; Marttinen, P. EEG based emotion recognition: A tutorial and review. ACM Comput. Surv. 2022, 55, 1–57.

- Halim, Z.; Rehan, M. On identification of driving-induced stress using electroencephalogram signals: A framework based on wearable safety-critical scheme and machine learning. Inf. Fusion 2020, 53, 66–79.

- Rozgic, V.; Vazquez-Reina, A.; Crystal, M.; Srivastava, A.; Tan, V.; Berka, C. Multi-modal prediction of PTSD and stress indicators. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; IEEE: New York, NY, USA, 2014; pp. 3636–3640.

- Cai, H.; Qu, Z.; Li, Z.; Zhang, Y.; Hu, X.; Hu, B. Feature-level fusion approaches based on multimodal EEG data for depression recognition. Inf. Fusion 2020, 59, 127–138.

- Moshfeghi, Y.; Jose, J.M. An effective implicit relevance feedback technique using affective, physiological and behavioural features. In Proceedings of the 36th International ACM SIGIR Conference on Research and Development in Information Retrieval, Dublin, Ireland, 28 July–1 August 2013; pp. 133–142.

- Nguyen, T.H.; Chung, W.Y. Negative news recognition during social media news consumption using EEG. IEEE Access 2019, 7, 133227–133236.

- Alakus, T.B.; Gonen, M.; Turkoglu, I. Database for an emotion recognition system based on EEG signals and various computer games–GAMEEMO. Biomed. Signal Process. Control 2020, 60, 101951.

- Vesisenaho, M.; Juntunen, M.; Fagerlund, J.; Miakush, I.; Parviainen, T. Virtual reality in education: Focus on the role of emotions and physiological reactivity. J. Virtual Worlds Res. 2019, 12, 1.

- Ratti, E.; Waninger, S.; Berka, C.; Ruffini, G.; Verma, A. Comparison of medical and consumer wireless EEG systems for use in clinical trials. Front. Hum. Neurosci. 2017, 11, 398.

- Maskeliunas, R.; Damasevicius, R.; Martisius, I.; Vasiljevas, M. Consumer-grade EEG devices: Are they usable for control tasks? PeerJ 2016, 4, e1746.

- Dadebayev, D.; Goh, W.W.; Tan, E.X. EEG-based emotion recognition: Review of commercial EEG devices and machine learning techniques. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 4385–4401.

- Badcock, N.A.; Mousikou, P.; Mahajan, Y.; De Lissa, P.; Thie, J.; McArthur, G. Validation of the Emotiv EPOC® EEG gaming system for measuring research quality auditory ERPs. PeerJ 2013, 1, e38.

- Song, T.; Zheng, W.; Song, P.; Cui, Z. EEG emotion recognition using dynamical graph convolutional neural networks. IEEE Trans. Affect. Comput. 2018, 11, 532–541.

- Petrantonakis, P.C.; Hadjileontiadis, L.J. Emotion recognition from EEG using higher order crossings. IEEE Trans. Inf. Technol. Biomed. 2009, 14, 186–197.

- Oh, S.H.; Lee, Y.R.; Kim, H.N. A novel EEG feature extraction method using Hjorth parameter. Int. J. Electron. Electr. Eng. 2014, 2, 106–110.

- Yang, Y.; Wang, Z.; Tao, W.; Liu, X.; Jia, Z.; Wang, B.; Wan, F. Spectral-spatial attention alignment for multi-source domain adaptation in EEG-based emotion recognition. IEEE Trans. Affect. Comput. 2024, 15, 2012–2024.

- Yang, Y.; Wang, Z.; Song, Y.; Jia, Z.; Wang, B.; Jung, T.P.; Wan, F. Exploiting the Intrinsic Neighborhood Semantic Structure for Domain Adaptation in EEG-based Emotion Recognition. IEEE Trans. Affect. Comput. 2025, 1–13.

- Shi, L.C.; Jiao, Y.Y.; Lu, B.L. Differential entropy feature for EEG-based vigilance estimation. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; IEEE: New York, NY, USA, 2013; pp. 6627–6630.