Hybrid LSTM–Transformer Architecture with Multi-Scale Feature Fusion for High-Accuracy Gold Futures Price Forecasting

Abstract

1. Introduction

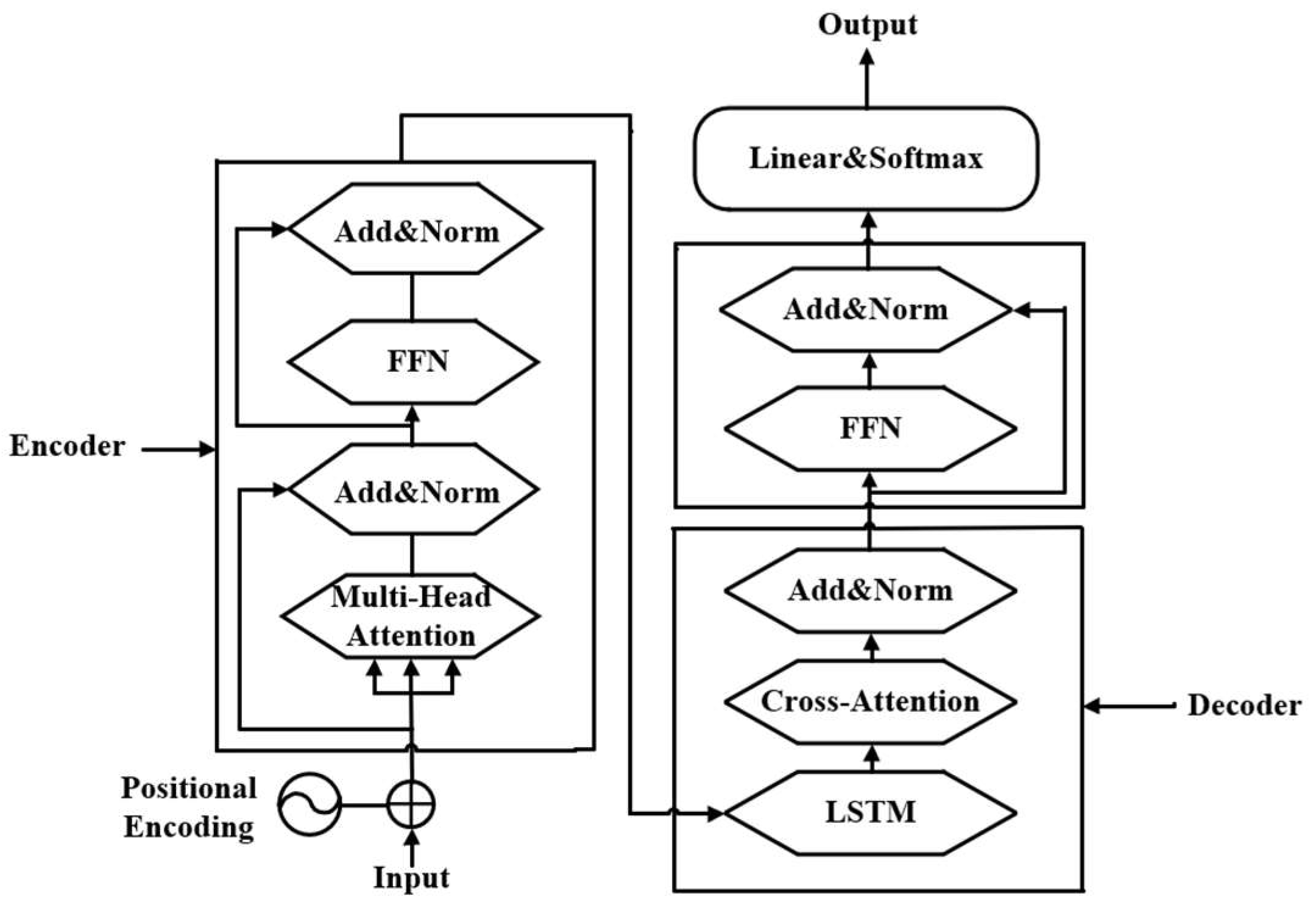

- Bidirectional LSTM–Transformer Interaction Architecture: Cross-attention couples the two branches so that global and local features inform each other, instead of processing temporal dependencies and market sentiment separately as conventional models do. During the 2023 Red Sea shipping crisis, this architecture detected the surge in safe-haven demand 2.3 trading days earlier than a traditional LSTM.

- Dynamic Hierarchical Partition Framework (DHPF): Gold prices respond to four classes of drivers: macro policies, micro trading behaviors, external correlations, and event shocks. DHPF stratifies the data along four matching dimensions: price trend (mean filtering), volatility (GARCH modeling), external correlation (Granger causality tests), and event intensity (sentiment analysis). This stratification mitigates overfitting caused by heterogeneous data distributions.

- Dual-Loop Adaptive Mechanism: An endogenous loop updates parameters by gradient backpropagation on prediction errors, while an exogenous loop perceives the environment through real-time monitoring of market volatility indices and VIX linkages. Together, the two closed loops significantly reduce the volatility of prediction errors under extreme scenarios and outperform static-parameter models.
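The cross-attention coupling named in the first contribution can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the learned projection matrices, multi-head structure, and layer sizes are omitted, and the names `lstm_states`, `transformer_states`, and `cross_attention`, as well as the toy numbers, are hypothetical. It only shows how each branch can query the other with scaled dot-product attention before the two views are fused.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention: every query row attends over all key/value rows."""
    scale = math.sqrt(len(keys[0]))
    outputs, weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Weighted average of the value rows, one output column at a time.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights


# Hypothetical toy inputs: 3 time steps, 2 features per branch.
lstm_states = [[0.1, 0.3], [0.4, 0.2], [0.9, 0.7]]         # local, recurrent features
transformer_states = [[0.2, 0.1], [0.5, 0.5], [0.8, 0.6]]  # global, self-attention features

# Direction 1: LSTM states query the Transformer states.
lstm_enriched, w_l2t = cross_attention(lstm_states, transformer_states, transformer_states)
# Direction 2: Transformer states query the LSTM states.
trans_enriched, w_t2l = cross_attention(transformer_states, lstm_states, lstm_states)

# Fuse the two enriched views by concatenating them per time step.
fused = [a + b for a, b in zip(lstm_enriched, trans_enriched)]
print(len(fused), len(fused[0]))
```

Concatenation is only one possible fusion choice; a gated sum or a further feed-forward layer would fit the same interface.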

2. Literature Review

2.1. Research Status

2.2. Literature Summary

3. Analysis of the Influencing Factors of Gold Futures Trading Price

3.1. Global Equity Market Indicators

3.2. Fixed-Income and Money Market Indicators

3.3. Digital Asset Indicators

3.4. Alternative Asset Indicators

3.5. Commodity Indicators

3.6. Special Indicators

4. Model Overview

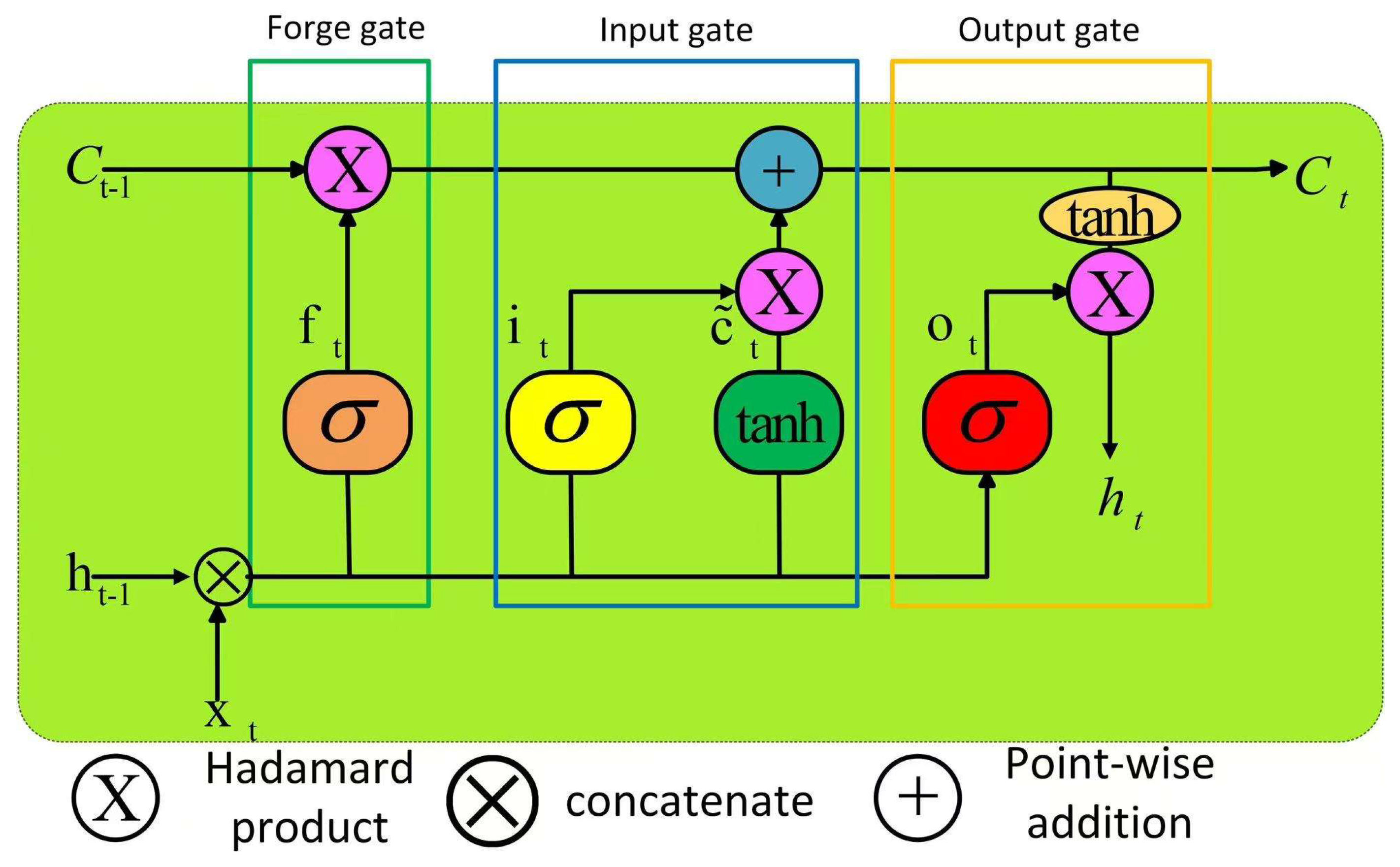

4.1. Long Short-Term Memory (LSTM) Model

4.2. Transformer Architecture

4.3. LSTM-Transformer Architecture

4.4. Evaluation Metrics

5. Empirical Analysis

5.1. Systematic Indicator Framework and Data Preparation

5.2. Descriptive Statistics and Data Preprocessing

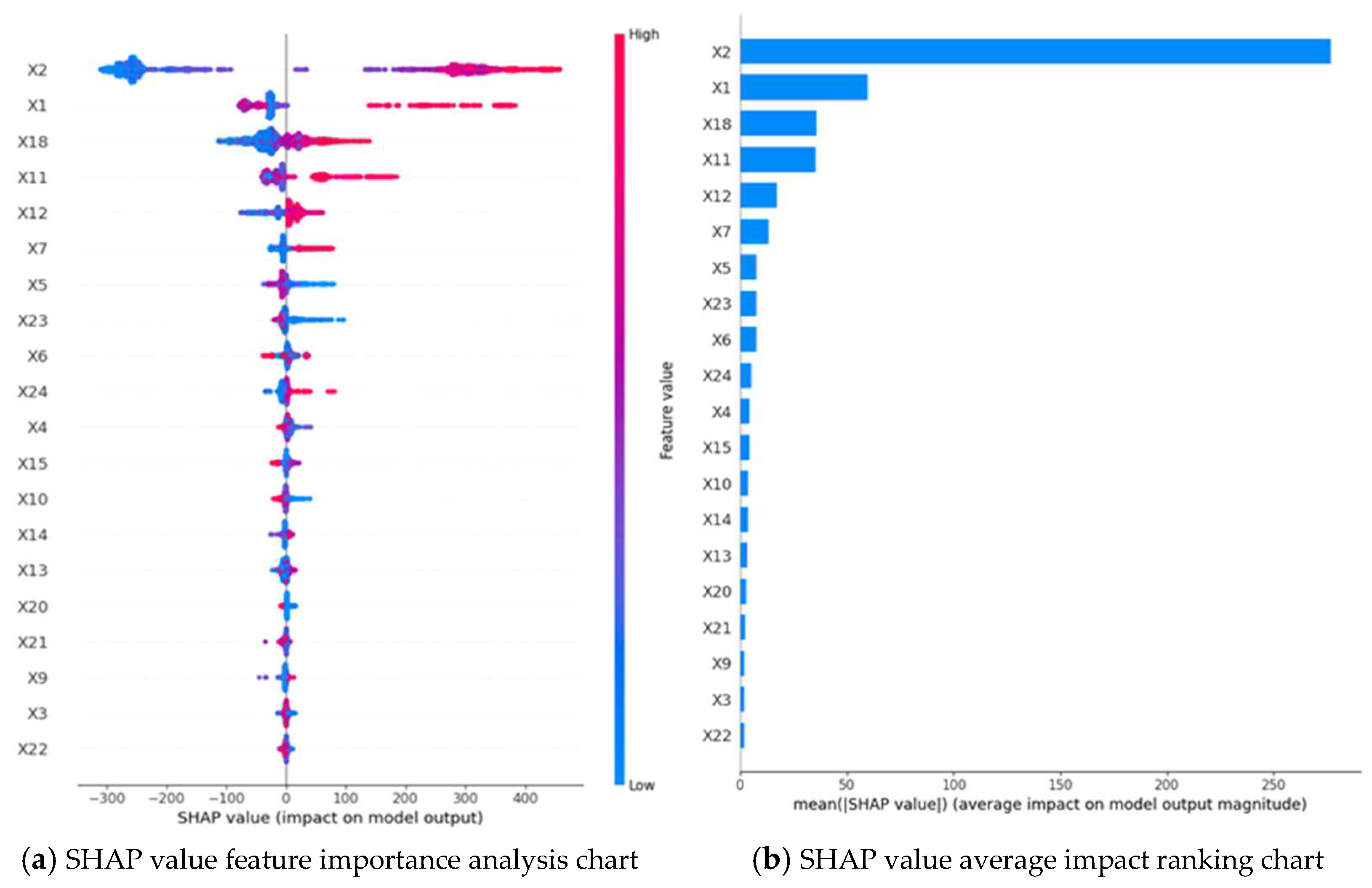

5.3. Feature Selection

- (1) Instance-Level Interpretability: Reveals feature contribution patterns for specific predictions.

- (2) Directionality: Explicitly identifies whether feature influences are positive or negative.

- (3) Interaction Effects: Quantifies combined feature impacts through conditional expectations.
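These three properties are characteristic of Shapley-value attribution. The list above does not name the method, so the following is a sketch under that assumption rather than the authors' procedure. It computes exact Shapley values for a hypothetical three-feature `toy_model` against an all-zero `baseline`. The result is per-instance, signed, and splits the interaction term between the two interacting features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one instance (exponential cost; small feature counts only)."""
    n = len(x)

    def value(subset):
        # Features in `subset` take the instance value, the rest take the baseline value.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return predict(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for k in range(n):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for s in combinations(others, k):
                # Marginal contribution of feature i when added to coalition s.
                total += weight * (value(set(s) | {i}) - value(set(s)))
        phi.append(total)
    return phi


def toy_model(z):
    """Hypothetical model: two linear terms plus one interaction; feature 2 is unused."""
    return 2.0 * z[0] - 1.5 * z[1] + 0.5 * z[0] * z[1]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

The attributions sum to `toy_model(x) - toy_model(baseline)`, and the unused third feature receives zero, which is the kind of signal a feature-selection step can act on.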

5.4. Model Architecture and Training Parameters

5.5. Comparative Analysis of Prediction Results

6. Conclusions

6.1. Model Innovations

6.2. Model Prediction Performance

6.3. Discussion and Future Directions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Category | Subcategory | Indicator | Indicator Code |
|---|---|---|---|
| Target Variable | | Shanghai Futures Exchange Gold Futures Closing Price | Y |
| Global Equity Market Indicators | | S&P 500 Closing Price | X1 |
| Global Equity Market Indicators | | NASDAQ Composite Closing Price | X2 |
| Global Equity Market Indicators | | SSE Composite Index Closing Price | X3 |
| Global Equity Market Indicators | | CSI 1000 Index Closing Price | X4 |
| Fixed-Income and Money Market Indicators | | Chinese Government Bond Yield (1 Year) | X5 |
| Fixed-Income and Money Market Indicators | | US Treasury Bond Yield (1 Year) | X6 |
| Fixed-Income and Money Market Indicators | | UK Treasury Bond Yield (1 Year) | X7 |
| Fixed-Income and Money Market Indicators | | Shanghai Interbank Offered Rate (Shibor) | X8 |
| Fixed-Income and Money Market Indicators | | Hong Kong Interbank Offered Rate (Hibor) | X9 |
| Fixed-Income and Money Market Indicators | | US Dollar Index (DXY) | X10 |
| Fixed-Income and Money Market Indicators | | USD to CNY Exchange Rate (Central Parity) | X11 |
| Fixed-Income and Money Market Indicators | | Euro to CNY Exchange Rate (Central Parity) | X12 |
| Digital Asset Indicators | | Bitcoin Closing Price | X13 |
| Digital Asset Indicators | | Ethereum Closing Price | X14 |
| Digital Asset Indicators | | Litecoin Closing Price | X15 |
| Alternative Asset Indicators | | Guolianan SSE Commodity Stock ETF | X16 |
| Alternative Asset Indicators | | Premia China Real Estate USD Bond ETF | X17 |
| Commodity Indicators | Metals | Silver Futures Closing Price | X18 |
| Commodity Indicators | Metals | Copper Futures Closing Price | X19 |
| Commodity Indicators | Energy | Guotai Zhongzheng Coal ETF | X20 |
| Commodity Indicators | Energy | WTI Crude Oil Futures Closing Price | X21 |
| Commodity Indicators | Energy | Brent Crude Oil Futures Closing Price | X22 |
| Commodity Indicators | Energy | Natural Gas Futures Closing Price | X23 |
| Special Indicators | | CBOE Volatility Index (VIX) | X24 |
| Special Indicators | | Beijing Air Quality Index (AQI) | X25 |

| Variables | Mean | Standard Deviation | Minimum | Median | Maximum | Skewness | Kurtosis |
|---|---|---|---|---|---|---|---|
| X9 | 1.119 | 1.549 | 0.033 | 0.180 | 6.504 | 1.370 | 0.523 |
| X17 | 39.064 | 15.678 | 8.4 | 49.6 | 50.5 | −0.937 | −0.968 |
| X8 | 1.975 | 0.531 | 0.441 | 1.97 | 3.464 | 0.006 | −0.321 |
| X24 | 18.249 | 7.242 | 9.14 | 16.32 | 82.69 | 2.578 | 12.805 |
| X21 | 61.866 | 17.482 | 11.57 | 59.97 | 119.78 | 0.427 | 0.101 |
| X3 | 3193.107 | 338.664 | 2464.36 | 3166.98 | 5166.35 | 1.545 | 5.855 |
| X5 | 2.397 | 0.555 | 0.931 | 2.327 | 3.803 | 0.261 | −0.125 |
| X4 | 6849.781 | 1513.336 | 4149.44 | 6657.23 | 15,006.34 | 1.274 | 3.495 |
| X14 | 1081.769 | 1223.952 | 6.7 | 382.41 | 4808.38 | 0.974 | −0.274 |
| X22 | 66.424 | 18.446 | 19.33 | 65.54 | 127.98 | 0.308 | −0.003 |
| X25 | 91.697 | 56.189 | 16 | 83 | 500 | 2.561 | 11.559 |
| X20 | 1.196 | 0.3537 | 0.896 | 1.014 | 2.688 | 2.329 | 4.593 |
| X16 | 1.809 | 0.796 | 0.74 | 1.705 | 4.546 | 0.851 | 0.412 |
| X23 | 3.173 | 1.393 | 1.544 | 2.809 | 9.647 | 2.389 | 5.827 |
| X12 | 7.536 | 0.347 | 6.485 | 7.642 | 8.288 | −0.738 | −0.223 |
| X13 | 20,134.933 | 22,200.168 | 164.9 | 9683.7 | 106,138.9 | 1.244 | 0.845 |
| X18 | 20.163 | 4.804 | 11.772 | 18.116 | 35.041 | 0.681 | −0.561 |
| X2 | 9854.966 | 4120.017 | 4266.84 | 8520.64 | 20,173.89 | 0.447 | −0.970 |
| X11 | 6.737 | 0.306 | 6.108 | 6.766 | 7.256 | −0.278 | −0.992 |
| X10 | 98.071 | 4.901 | 88.59 | 97.25 | 114.11 | 0.488 | −0.277 |
| X6 | 1.962 | 1.797 | 0.043 | 1.518 | 5.519 | 0.711 | −0.911 |
| X1 | 3356.39 | 1084.265 | 1829.1 | 3005.5 | 6090.27 | 0.538 | −0.733 |
| X7 | 1.386 | 1.742 | −0.164 | 0.528 | 5.529 | 1.212 | −0.237 |
| X15 | 71.337 | 60.679 | 3.5 | 62.38 | 377.37 | 1.345 | 2.495 |
| X19 | 3.232 | 0.779 | 1.943 | 3.032 | 5.106 | 0.330 | −1.158 |
| Y | 1605.187 | 394.131 | 1049.6 | 1528.1 | 2800.8 | 0.728 | −0.109 |
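The columns of the table above can be reproduced for any single series with a short standard-library sketch. The exact estimators used in the paper are not stated, so the choices here are assumptions: the standard deviation uses the sample (n − 1) form, and skewness and kurtosis use population moments, with kurtosis reported as excess kurtosis (normal distribution = 0), which is consistent with the negative values in the table. The `describe` helper and the sample series are hypothetical.

```python
import statistics


def describe(values):
    """Summary statistics matching the table's columns for one numeric series."""
    n = len(values)
    mean = statistics.fmean(values)
    # Central moments (population form, divisor n).
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return {
        "mean": mean,
        "std": statistics.stdev(values),   # sample standard deviation (n - 1)
        "min": min(values),
        "median": statistics.median(values),
        "max": max(values),
        "skewness": m3 / m2 ** 1.5,
        "kurtosis": m4 / m2 ** 2 - 3.0,    # excess kurtosis
    }


symmetric = describe([1.0, 2.0, 3.0, 4.0, 5.0])
right_skewed = describe([1.0, 1.0, 1.0, 1.0, 10.0])
print(symmetric)
print(right_skewed["skewness"] > 0)
```

Large positive skewness and kurtosis, as reported for X24 (VIX) and X25 (AQI), indicate heavy right tails, which is one reason such series are usually scaled or transformed before training.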

| Model | MAE (USD/oz) | MSE (×10³) | RMSE (USD/oz) | MAPE (%) | R² | Computation Time (s) |
|---|---|---|---|---|---|---|
| LSTM Architecture | 800.18 | 984.88 | 992.41 | 5.75 | 0.8430 | 38.8 |
| Transformer Architecture | 1042.89 | 1708.69 | 1307.17 | 7.46 | 0.7763 | 31.7 |
| PatchTST | 107.10 | 24.34 | 156.01 | 4.78 | 0.7105 | 47.2 |
| CNN-LSTM | 609.37 | 517.27 | 719.21 | 3.65 | 0.8259 | 42.2 |
| TCN–Informer | 92.45 | 14.13 | 118.89 | 4.53 | 0.8318 | 36.4 |
| CNN–GRU–Attention | 88.96 | 11.62 | 107.78 | 4.18 | 0.8463 | 88.4 |
| LSTM–Transformer Architecture | 421.01 | 279.06 | 528.26 | 3.18 | 0.9618 | 86.2 |
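The five accuracy columns in the table above follow standard definitions, which can be sketched in plain Python. The `regression_metrics` helper and the toy price series are hypothetical and are not taken from the paper's data. The sketch assumes MAPE is expressed in percent and that R² is the usual coefficient of determination.

```python
import math


def regression_metrics(y_true, y_pred):
    """MAE, MSE, RMSE, MAPE (%) and R^2 for two equal-length numeric sequences."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    # MAPE is undefined when any true value is zero; prices are assumed strictly positive.
    mape = 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - (mse * n) / ss_tot
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "MAPE": mape, "R2": r2}


# Hypothetical toy series, not the paper's data.
actual = [100.0, 200.0, 300.0, 400.0]
predicted = [110.0, 190.0, 310.0, 390.0]
metrics = regression_metrics(actual, predicted)
print(metrics)
```

Because RMSE is the square root of MSE, the two columns can be cross-checked against each other once the ×10³ scaling of the MSE column is taken into account.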


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Zhao, Y.; Guo, Y.; Wang, X. Hybrid LSTM–Transformer Architecture with Multi-Scale Feature Fusion for High-Accuracy Gold Futures Price Forecasting. Mathematics 2025, 13, 1551. https://doi.org/10.3390/math13101551