Abstract

The attention mechanism performs well for the Neural Machine Translation (NMT) task, but it depends heavily on the context vectors generated by the attention network to predict target words. This reliance raises the issue of long-term dependencies. Moreover, predicates are very commonly combined with postpositions in sentences, and the same predicate may have different meanings when combined with different postpositions, which poses an additional challenge for NMT. In this work, we observe that the embedding vectors of different target tokens can be classified by part-of-speech; we therefore analyze the Content-Adaptive Recurrent Unit (CARU), a unit related to Natural Language Processing (NLP), and apply it to our attention model (CAAtt) and embedding layer (CAEmbed). By encoding the source sentence together with the current decoded feature through the CARU, CAAtt achieves content-adaptive translation representations, whose attention weights are further enhanced by our proposed normalization. Furthermore, CAEmbed aims to alleviate long-term dependencies in the target language through a partial recurrent design that performs feature extraction from a local perspective. Experiments on the WMT14, WMT17, and Multi30k translation tasks show that the proposed model improves BLEU scores and convergence over the plain attention-based NMT model. We also investigate the attention weights generated by the proposed approaches, which indicate that different combinations of adpositions can lead to different interpretations. Specifically, this work provides local attention to some specific phrases translated in our experiments. The results demonstrate that our approach is effective in improving performance and achieving a more reasonable attention distribution compared to the state-of-the-art models.

Keywords: neural network; Neural Machine Translation (NMT); Natural Language Processing (NLP); attention mechanism; Content-Adaptive Recurrent Unit (CARU)

MSC: 68T07; 68T50

1. Introduction

Neural Machine Translation (NMT) has garnered considerable attention in recent years as it allows a single, large, end-to-end trainable neural network to perform translation [1,2]. The majority of proposed NMT models belong to the encoder-decoder family, which incorporates an encoder and a decoder for each language, or utilizes language-specific encoders that process sentences and present comparable outputs [3,4]. The encoder network reads the source sentence and transforms it into a sentence-level vector. The decoder is then responsible for generating the translation based on the encoded vector. The whole system, comprising the encoder and decoder of a language pair, is jointly trained to maximize the likelihood of an accurate translation for a given source sentence. A key advance in neural machine translation is the attention mechanism [5]. This mechanism, including Transformer [6] and Vanilla Attention [7], acts as an information bridge between the encoder and decoder. By dynamically detecting the source words pertinent to predicting the forthcoming target word, it generates a context vector. Intuitively, different target words align to different source words, which results in varied context vectors during decoding. These context vectors must be discriminative enough to predict the target words accurately, or the same target words may be generated repeatedly. However, this is typically not the case in practice, even when the attended source words are relevant. We note that the context vectors are highly similar to each other, with only minor variations in each dimension across decoding steps. This indicates that the Vanilla Attention mechanism does not accurately differentiate between various translation predictions. We believe that the explanation lies in the configuration of the attention mechanism, which provides a weighted sum of source representations (hidden features from the encoder) that are invariant across decoding steps.

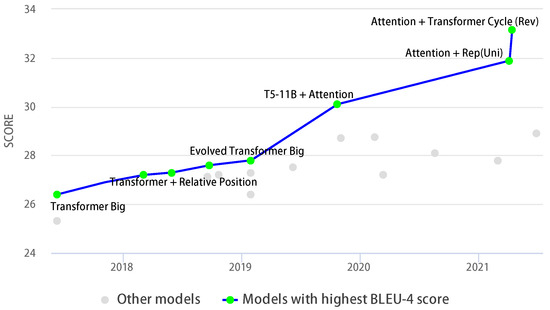

Figure 1 illustrates the improvement in translation quality over the past few years, as measured by the BLEU score [8] (BLEU-4 is the most commonly used metric for evaluating machine translation systems), along with the corresponding NMT models. Applying the attention approach greatly improves performance under high-resource conditions (the WMT family of datasets, most often WMT14 and WMT16, is among the most popular benchmarks for machine translation systems). Therefore, we aim to explore the practicality of extending attention models to enhance the capacity of the context vectors. This promising approach can enhance the translation performance of the model by boosting its discriminative capability.

Figure 1.

BLEU-4 scores for various NMT models. The “Attention” architectures have contributed to major improvements in machine translation. The quality of the attention-related models outperformed the other models, with only the attention approach scoring greater than 30.0 (as measured by case-insensitive BLEU-4).

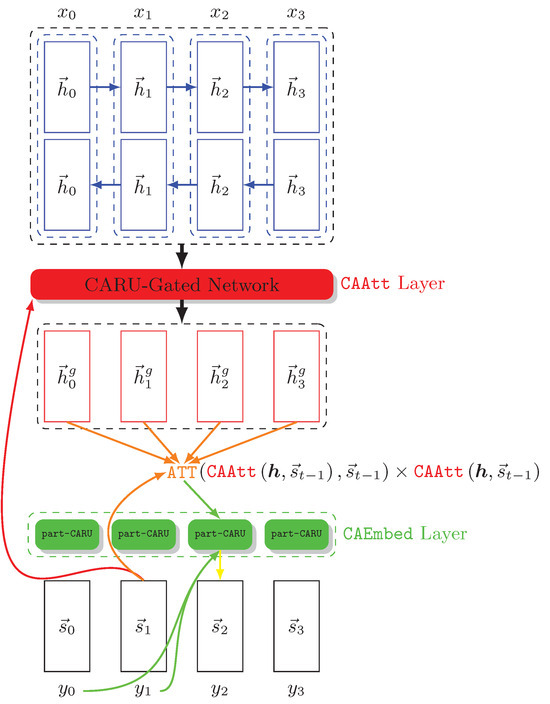

In this work, we introduce a new CARU-gated attention layer (CAAtt) and a CARU-Embedding (CAEmbed) layer for decoded word embedding in NMT. The overall framework of our model, illustrated in Figure 2, presents the structural and feature connections between the CAAtt and CAEmbed layers. In particular, CAAtt expands the Vanilla Attention network by inserting a gating layer based on the concept of the Content-Adaptive Recurrent Unit (CARU) [2]. CARU utilizes the original source representation as its history and the corresponding previous decoder state as its current input. In this way, CAAtt can focus on creating source representations that take translation effects into account. This enhances the discriminative power of the context vector, making it more effective in predicting the subsequent target term. By considering the impact of translation, the model can better understand the context and make more accurate predictions. Afterward, CAEmbed enhances word embedding by combining it with part-CARU. This integration involves processing only the short-term hidden state(s) in a partial loop. This technique optimizes the key information present in the embedded vector. Specifically, it aims to reduce the reliance on punctuation and increase the adaptation of relevant keywords. This approach proves to be particularly advantageous for non-English sentences, where the structure and grammar can differ significantly from English. It is also beneficial for languages that utilize postpositions extensively. Through the combination of CAAtt and CAEmbed, the translation process can be improved, leading to more accurate and effective results.

Figure 2.

An overview of the Vanilla Attention network with proposed layers of CAAtt and CAEmbed. The source and target sides are denoted by blue and yellow, and the green and orange colors represent the (embedded) information flow for target word prediction and attention, respectively. The red color indicates the CARU-gated layer. “” represents the procedure for calculating attention weights. refers to the previous decoder state, corresponding to the current step t. More details about the equations can be found in Section 4 and Section 5.

Considering that CARU has the ability to control/adjust the feature flow between weight and current hidden state using its content-adaptive gate and update gate, we propose an adaptation of CAAtt that takes into account the previous decoder’s state as short-term history and the original source representation as the input feature. Furthermore, to enhance word prediction accuracy, a context-search of part-CARU has been incorporated. Both proposed layers are straightforward and efficient for the training and decoding of NMT. The validation is conducted on both the Multi30k and WMT14 datasets to assess their performance in English–German translation tasks. The experimental results report that the proposed models outperform the attention-based plain NMT significantly. The generated attention weights and context vectors were also scrutinized, demonstrating that the attention weights are more precise and context vectors are more discriminative.

The proposed model enhances the widely used attention networks and also improves its convergence speed and performance. Our contributions are summarised as follows:

- We investigate the features of the context vectors produced by the Vanilla Attention model to analyze their ability to discriminate between discourse and part-of-speech, and find that the underlying cause is that decoding-invariant source representations, such as the weight of punctuation, tend to dilute the information in the entire sentence. We develop a CARU-gated attention (CAAtt) layer that dynamically adjusts and refines the source representation based on partial translations.

- In order to increase the convergence speed, we introduce a normalization method and involve it in the calculation of the attention weights. We also analyze its performance and give a complete derivation procedure. Compared to Softmax, it provides stronger gradients when there are numerous categories being predicted.

- Besides, the inference of the current predicate is also highly correlated with the next word. In particular, various combinations of adposition can lead to different interpretations. We introduce an alternative layer (CAEmbed) consisting of embedding and the proposed part-CARU. It aims at weighting the current embedded vector in order to enhance the consistency of target sentences.

- Several experiments on English–German translation tasks have been conducted. The results show that our model outperforms the baseline in dealing with phrases, generating accurate attention weights, expanding the variability of the context vector, and improving translation quality.

2. Related Work

In the early years, Sequence-to-Sequence (Seq2Seq) learning models were originally proposed without an attention mechanism, relying mainly on an encoder to project all semantic features from the source side into a fixed-length vector [3,9,10]. Refs. [5,11] indicate that a fixed-length vector is insufficient for representing natural phrases. To address this, they introduced the attention mechanism for neural machine translation, which enables an automatic search for the part of the source sentence relevant to the next target word to be predicted. In practice, attention-based models have demonstrated substantial improvements, outperforming other models in machine translation tasks [12]. Also, ref. [13] investigates several effective approaches to attentional weighting functions, applying local and global attention models in one-shot modality, and then [14,15] enhance the attention-based NMT model by incorporating information from multiple modalities. Refs. [16,17] introduce a coverage vector to keep track of the history of attentional features, which allows the attentional network to attend more carefully when representing the source sentences, and also a coverage model to track the coverage status using a full-coverage embedded vector [18,19]. In addition, refs. [20,21] introduce the self-attention approach, which reduces the number of sequential computations through short paths between distant words in entire paragraphs. They conclude that these short paths are particularly useful for learning strong semantic feature extractors. To address the long-term issue between sentences, ref. [22] brings it into recursion along the context vector to help adjust future attention. Ref. [23] extends the (cross-)attention mechanism with a recurrent connection to allow direct access to previous alignment decisions, which incorporates several structural biases to improve the attention-based model by involving Markov conditions, fertility, and consistency in the direction of translation [24,25]. Refs. [26,27] propose deep attention based on low-level attentional information that can automatically determine the refinement of attentional weights in a layer-wise manner. More recently, refs. [28,29] propose a self-attention deep NMT model with a text mining approach to identify the vulnerability category from paragraphs. All these models tend to investigate the variability of context vectors by generating more accurate attention weights. Instead, our approach enables dynamic adjustment and refinement of source representations based on partial translations. This is distinct from existing literature and can be integrated to enhance the discriminative power of context vectors. Additionally, the autoregressive model [30] is another closely related approach, which introduces a better way to train the NMT model by using the regression of features on themselves and directly supervising the attention weights of the NMT using well-trained word alignment. Also, ref. [31] treats the source representation as a memory/feature and models it when dealing with different time series over a long time span, providing significant flexibility in dealing with the decoder and the various time-series patterns of that memory during translation. Moreover, Deep Reinforcement Learning (DRL) algorithms can also be applied to NLP [32]; for example, Q-Learning has demonstrated significant potential for NMT [33,34].

Inspired by the above approach, our proposed model treats the source representation as a memory/feature and simulates the interaction between the decoder and this memory through read and write operations during the translation process. This work aims to highlight that employing the proposed composition of CARU (CAAtt and CAEmbed) in our model can improve the efficiency of training and decoding, rather than merely addressing the interactive attention content from the read operation. CAAtt can be seen as an extension of Vanilla Attention, offering the advantage of alleviating gradient vanishing or exploding problems during training [35,36]. It also simplifies LSTM [37,38] and presents a variant design for GRU [39,40], enabling efficient computation. Additionally, the gate mechanism in CAAtt is built on CARU. CARU is a recurrent unit that utilizes a content-adaptive gate and an update gate to manage the importance of information from the hidden states and the current input feature, respectively [2]. Within NMT, CARU has been proposed as both an encoder and decoder function for the Seq2Seq model [2], and the option of using a gate design as an alternative to the attention mechanism has also been presented [41,42]. To our knowledge, the use of CARU as a gate mechanism has not been investigated previously.

3. Background

Similar to the basic Seq2Seq structure, the Vanilla Attention mechanism introduces a context vector/module between the encoder and decoder. This context vector aims to collect the output of all units as input features to compute the probability distribution of the source language (embedded) words for each word that the decoder aims to predict. By applying this mechanism, the aim is to discover the relationship between the encoder and decoder. This connection enables the decoder to capture global information to a certain extent, instead of inferring only from the current hidden state. The attention module identifies the source words pertinent to the succeeding target word and assigns them high attention weights while calculating the context vector by:

where denotes the alignment function that uses a feedforward neural network to calculate the attention weights of encoder states with the previous decoder state . For a target word instance, it first reviews overall encoder states to compare the target and source word with the aim of computing a score for each state of the encoder. Next, a Softmax function normalizes all scores and produces a probability distribution conditional on the target word for determination, as follows:

where the relevance score is estimated via an alignment equation proposed by [5]: , here is the activation function of hyperbolic tangent. Intuitively, the higher the attention weights, the more important the index (determined by ) is for predicting the next word. Therefore, according to the Softmax function that ensures the sum of the elements of vector is equal to 1, the attention model produces the final context vector by weighting the encoder states h directly by:

Although this model outperforms the others, we find that (2) employs the Softmax function, meaning that the Vanilla Attention mechanism also inherits the shortcomings of Softmax during training [43,44]: convergence in the later stages becomes very slow, and the resulting context vectors are very similar to each other, making them insufficiently discriminative. We aim to improve the prediction and enhance the convergence in the following sections.
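The attention step described in this section can be sketched in a few lines of plain Python. The sketch assumes the standard additive alignment of [5], in which each score is computed as v · tanh(W s + U h_i) from the previous decoder state s and an encoder state h_i. The names `W`, `U`, `v`, the toy dimensions, and all numeric values are illustrative assumptions, not trained parameters.

```python
import math

def softmax(scores):
    """Numerically stable Softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(mat, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in mat]

def additive_attention(enc_states, prev_dec_state, W, U, v):
    """Return (attention weights, context vector) for one decoding step."""
    ws = matvec(W, prev_dec_state)
    scores = []
    for h in enc_states:
        uh = matvec(U, h)
        hidden = [math.tanh(a + b) for a, b in zip(ws, uh)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    alpha = softmax(scores)  # weights are positive and sum to 1
    dim = len(enc_states[0])
    # Context vector: attention-weighted sum of the encoder states.
    context = [sum(a * h[d] for a, h in zip(alpha, enc_states)) for d in range(dim)]
    return alpha, context

# Toy example with three encoder states of dimension 2 (hypothetical values).
enc_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
prev_dec_state = [0.5, -0.5]
W = [[1.0, 0.0], [0.0, 1.0]]
U = [[1.0, 0.0], [0.0, 1.0]]
v = [1.0, 1.0]
alpha, context = additive_attention(enc_states, prev_dec_state, W, U, v)
```

Because the encoder states are fixed for the whole sentence, only the weights `alpha` change between decoding steps, which is the invariance discussed above.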

4. CARU-Gated Attention

The Vanilla Attention mechanism is used to overcome the NMT problem by allowing the network to return to the input sequence instead of encoding all the information into a fixed-length vector, but it still has some shortcomings we mentioned before. This mechanism allows the embedded vector to access its internal memory/feature, which is the hidden states generated by the encoder. In this interpretation, the network chooses to retrieve something from the current feature of this encoder state, rather than considering whether and how much it should be attended. With this in mind, we attempt to investigate the reasons behind this by analyzing the regression of the context vector in (3). Achieving a one-to-one mapping between two different languages in a translation process is difficult, not just for NMT, but also for SMT. Source words often align with multiple target words, resulting in different sentence structures and sequences. This dilutes attention weights due to the decoding steps between the source word and its corresponding target word position. According to (1), the context vector comes up with decoder state , which is temporarily activated in the -th recurrent step, and the attention weights is completely dominated by encoder states h by (2). In practice, the attention mechanism in NMT learns in an unsupervised manner without explicit prior knowledge about alignment, and there is always a lot of redundant information dragging the alignment, such as punctuation and conjunctions that often dilute the information of the encoder states h. Theoretically, this redundant information can be identified from their part-of-speech (also determined by pre-processing). We can thus make use of these attempts to reduce the proportion/weight of redundant information in the encoder, thus making the major words/features more obvious for subsequent consideration.

In order to achieve this improvement, CARU’s approach is well worth referring to in our design. In each decoding step, these redundant features are mitigated by the proposed CAAtt layer before the encoder states are input to the Vanilla Attention model. There are two objectives that this proposed gate layer must achieve:

- It must dynamically adjust the weight of each embedded word at each recurrent step, so that the adjusted encoder state is more meaningful and represents the translation context clearly, allowing the decoder to extract useful context vectors for attention.

- To preserve the intention of (1), the feature size and dimension produced by this layer must equal those of the encoder state (although the length is allowed to change); otherwise, data sparsity problems arise, making training unstable and hard to converge.

In view of these considerations, we propose the CARU-gated layer, which dynamically adapts h according to the previous decoder state by employing a gate-controlled network, expressed as:

and the proposed layer then evolves by (1) to:

For the language/context vectors, humans/learning models can immediately capture the meaning or pattern if they have learned it before, respectively. Corresponding to NLP, for a standard sentence, its subject, verb, and object should receive more attention before other auxiliary information is considered. This process can be seen as a weighted assignment of words in a sentence. Thus, by employing CAAtt, the previous decoder state is coupled to the encoder states h in order to determine its weight within , which also can be seen as a tagging task in the proposed layer.

4.1. Gating Architecture

The features of CARU aim to alleviate the long-term dependence problem through its content-adaptive gate, which weights the received states according to their categories (such as part-of-speech). We study the gate control and investigate the data flow of CARU, attempting to treat the previous decoder state as an input feature and refine it by recurring all encoder state h.

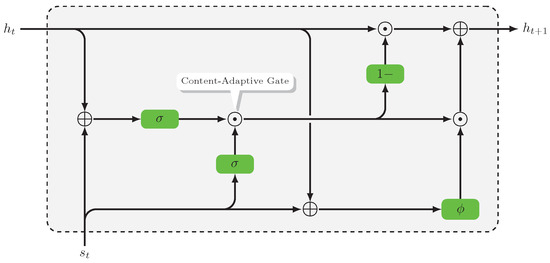

As presented in Figure 3, for , CAAtt directly returns Initially, next for , a complete architecture is given by:

where ⊙ denotes the Hadamard product, and and denote the activation function of sigmoid and hyperbolic tangent, respectively. It can be found that there are two data flows ( and ) aimed to perform the linear combination of the current encoder state and previous decoder state parallelly. (5b) is referred to as the design of an update gate, which results in having been connected to the end of the combination of weights with encoder states. Besides, (5c) determines to what extent the original source information can be used to combine partial translations. In particular, (5d) is the CARU feature of the context-adaptive gate that defines how much of the original source information can be retained. The proposed CAAtt also incorporates the advantage of CARU that the in (5d) can be considered as a tagging task that connects the relation between the weight and part-of-speech. For instance, the word-weight should be close to zero if the representing a punctuation (such as “full stop”), implied as:

where the result of the content-adaptive gate will be close to zero regardless of the content weight , which means that the produced state will also converge to the encoder state . It shows that low-weight words have less influence on the output. Finally, the use of linear interpolation between and ensures that the refined source representations satisfy the requirement above-mentioned: It can alleviate the complex interactions between source sentences and partial translations, allowing CAAtt to efficiently control the matching and data flow between them.

Figure 3.

An overview of gating architecture in CARU.
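The gating behaviour discussed in this subsection can be illustrated with a minimal sketch. It loosely follows the general CARU formulation of [2] (a projected input, a tanh candidate, a sigmoid update gate, and a content-adaptive gate formed as their element-wise product), and it is not a reproduction of (5a)–(5d). The parameter names, the identity weights, and the generic roles `state` and `feature` are assumptions chosen only to show that a word-weight near zero leaves the retained state almost unchanged.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def linear(W, b, x):
    """Affine map W x + b for a matrix given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def caru_gate(state, feature, p):
    """One CARU-style gated update (illustrative; parameter names are assumed)."""
    x = linear(p["W_x"], p["b_x"], feature)            # projected input feature
    hn = linear(p["W_n"], p["b_n"], state)
    n = [math.tanh(a + b) for a, b in zip(hn, x)]      # candidate state
    hz = linear(p["W_z"], p["b_z"], state)
    fz = linear(p["W_fz"], p["b_fz"], feature)
    z = [sigmoid(a + b) for a, b in zip(hz, fz)]       # update gate
    l = [sigmoid(xi) * zi for xi, zi in zip(x, z)]     # content-adaptive gate
    # Linear interpolation between the retained state and the candidate.
    return [(1.0 - li) * s + li * ni for li, s, ni in zip(l, state, n)]

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def make_params(n, input_bias):
    """Hypothetical parameters: identity weights, one tunable input bias."""
    zeros = [0.0] * n
    return {"W_x": identity(n), "b_x": [input_bias] * n,
            "W_n": identity(n), "b_n": zeros,
            "W_z": identity(n), "b_z": zeros,
            "W_fz": identity(n), "b_fz": zeros}

state = [0.5, -0.5]
feature = [0.3, -0.2]
# A strongly negative projection drives the word-weight sigmoid(x) towards zero.
closed_out = caru_gate(state, feature, make_params(2, input_bias=-20.0))
open_out = caru_gate(state, feature, make_params(2, input_bias=0.0))
```

With the gate nearly closed, `closed_out` stays within rounding distance of `state`, whereas `open_out` moves towards the candidate. This mirrors the punctuation example above, where a low-weight word has little influence on the output.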

4.2. Attention Weights Normalisation

Once the ATT architecture is confirmed, we further aim to improve the normalization of the attention weights. As mentioned at the end of Section 3, the attention weights are generally obtained through a Softmax function, but convergence is slow in practice. Therefore, we seek a better normalization function to enhance the convergence of this component:

Our derivation begins by investigating the form of the -normalisation, for any , we have:

where k from 1 to N, and N denotes the number of categories, respecting the partial derivation,

Note that the becomes the Softmax function if . In contrast, the normalisation method we propose here is with . Since our goal is to enhance the convergence, we must find a condition that satisfies with , as follows:

By definition, ensures that its range belongs to , and the range of the proposed is also belong to . We simplify these inequalities and obtain,

Rewrite as:

It thus can be found that (7) leads to the necessary condition as:

By substituting and , the final inequality become:

Since is a monotonically increasing function, it requires that in order to satisfy the above inequalities. In fact, it is worth mentioning that N denotes the number of categories, which means that N is always greater than 1. Therefore, by using our normalisation method with necessary condition , the enhancement of convergence becomes more and more obvious with the increasing gradient.

Theoretically, Softmax normalization tends to project features into a probability domain, which is sufficient for extracting target features for the classification problem. However, for the computing of attention weights, the purpose of weighting is not only to determine the maximum value, but other vectors also require to be calculated with an appropriate weight in order to merge them into the context vector. Intuitively, corresponding to (6), the classification problem aims at increasing the gradient as long as , while in attention weights, it must be taken into account that the gradient is also increased in the case . In order to verify them, we can substitute into (6), as follows:

It is obvious that N always increases their gradient as long as whether i is equal to j or not. Based on the above justification, the proposed normalization method has the ability to integrate into the attention weights , and outperforms the Softmax function as (2). Moreover, with respect to (6), it is worth mentioning that the result of is always equal to zero, which means that the normalization procedure (whether proposed, Softmax or others) only contributes to the convergence within the attention weights, allowing for faster training, but providing no additional gradient to the final accuracy of the overall network.
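The effect of the normalisation base on the gradient can be checked numerically with a small sketch. It assumes the generalised normalisation takes the form a^{x_i} / Σ_k a^{x_k}, which reduces to Softmax at a = e and whose partial derivatives carry a factor ln(a). Both this form and the choice a = N in the demo are assumptions made for illustration, and the scores are arbitrary.

```python
import math

def base_normalize(scores, base):
    """Compute a^{x_i} / sum_k a^{x_k} stably; base = e gives Softmax."""
    log_a = math.log(base)
    m = max(scores)
    pows = [math.exp((s - m) * log_a) for s in scores]
    total = sum(pows)
    return [p / total for p in pows]

def jacobian(scores, base):
    """Analytic d sigma_i / d x_j = ln(a) * sigma_i * (delta_ij - sigma_j)."""
    sig = base_normalize(scores, base)
    log_a = math.log(base)
    n = len(scores)
    return [[log_a * sig[i] * ((1.0 if i == j else 0.0) - sig[j])
             for j in range(n)] for i in range(n)]

scores = [0.2, -0.1, 0.4, 0.0]          # arbitrary relevance scores
N = len(scores)                          # number of categories
soft = base_normalize(scores, math.e)    # ordinary Softmax
prop = base_normalize(scores, N)         # assumed choice: base equal to N
J_prop = jacobian(scores, N)
# Summing the derivatives over i for a fixed j gives zero for any base.
col_sums = [sum(J_prop[i][j] for i in range(N)) for j in range(N)]
```

The column sums vanish for every base, which agrees with the remark above that the normalisation only redistributes gradient within the attention weights. The factor ln(a) exceeds 1 only when a > e.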

5. Partial CARU for Embedding

In natural language, it is very common to combine predicates with postpositions in sentences, and the same predicate may have different meanings when combined with various postpositions, such as “look after” and “look for”. In addition, some specific phrases carry idiomatic meanings, like “get axed” and “break a leg”, meaning “retrenched” and “good luck”, respectively, and their literal meaning is irrelevant to the expressed meaning. There are also grammatically unsound phrases such as “state of the art” and “long time no see”, which people still use from time to time. Moreover, in NMT pre-processing, word tokenization always decomposes phrases into separate words to regularise the input data. Therefore, when a phrase such as “look for” is to be translated, NMT usually substitutes words of similar meaning, like “find”, “search”, “discover”, etc. The resulting sentence is acceptable in terms of comprehension but unfavorable in terms of the BLEU score. It is very challenging for NMT to fully grasp the phrase. Given this, we introduce CAEmbed, a novel additive layer for context-embedding, stacked between the attention network and the decoder states (corresponding to Figure 2). It aims to coordinate the inference of the current predicate in order to improve accuracy by analyzing the previous decoder state(s).
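The penalty that a paraphrase incurs under BLEU can be made concrete with the clipped n-gram precision on which the metric is built [8]. The following sketch uses hypothetical sentences and computes only the unigram and bigram precisions, not the full BLEU-4 score with its brevity penalty.

```python
from collections import Counter

def ngrams(tokens, n):
    """All contiguous n-grams of a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def modified_precision(candidate, reference, n):
    """Clipped n-gram precision, the building block of BLEU."""
    cand = Counter(ngrams(candidate, n))
    ref = Counter(ngrams(reference, n))
    if not cand:
        return 0.0
    clipped = sum(min(count, ref[g]) for g, count in cand.items())
    return clipped / sum(cand.values())

# Hypothetical example: the paraphrase replaces "look for" with "find".
reference = "she will look for her keys".split()
paraphrase = "she will find her keys".split()

p1 = modified_precision(paraphrase, reference, 1)  # 4 of 5 unigrams match
p2 = modified_precision(paraphrase, reference, 2)  # 2 of 4 bigrams match
```

Although the paraphrase preserves the meaning, half of its bigrams no longer match the reference, so the higher-order precisions that dominate BLEU-4 fall quickly.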

Conditional Probability Expressions

Briefly, the fundamental work of the partial recurrent design is to predict a given sequence of previously finite words in a probabilistic approach, where the length of the sequence to be recurred is controlled by p. The CAEmbed probability of each word on the target side can be expressed as below, starting from :

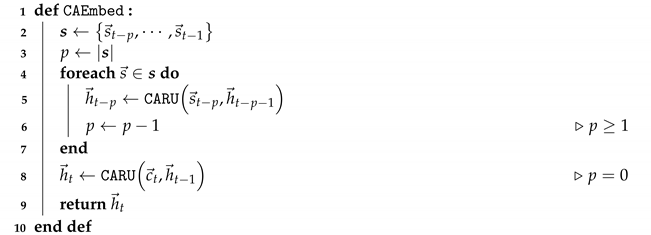

It can be discovered that the conditional probability of the current is only affected by vectors, unlike the benchmark NMT approach that always takes into account all previous , which often leads to the long-term issue. Therefore, the probabilities obtained from (8) can achieve more accurate results than others. Besides, the context vector must be entered as the last input factor because it is encoded by the attention model and it also contains quite important information about the hidden state. We arrange it as the last input in order to prevent it from being diluted by other input features that follow. Intuitively, the proposed layer of CAEmbed consists of one CARU that partially encodes the previous p embedded vector and the context vector generated by the attention model. A complete algorithm (Algorithm 1) of CAEmbed is as follows:

Algorithm 1: Pseudo code of CAEmbed layer architecture, with regard to Figure 2.

As presented in Algorithm 1, the CAEmbed layer received the previous p features, which perform a partial convolution aiming to achieve local feature extraction and also alleviate the long-term dependency problem. Since there are p recurrently in the CAEmbed, the time complexity can be roughly approximated by during encoding. Besides, there is one CAEmbed layer stacked in our approach with respect to Figure 2. The memory is mainly required by three linear layers within the CARU, that is , and , the space complexity thus can be considered as . In practice, performing partial sentence recurrent can alleviate the pressure on the unit compared to entire sentence recurrent, and part-CARU has good compatibility with phrases. In practice, the number of words in a phrase is usually 2, thus we prefer a value of 1 for p, which means the CAEmbed only performs analysis of the current and . Recall that CARU has the function of part-of-speech analysis, which is also useful in postposition analysis. This is because, in any language, postpositions are always followed by/connected to nouns or verbs. According to this rule, CARU can ignore another word through the word-weight generated by the content-adaptive gate (corresponding to Section 4.1). Furthermore, RNN-based models always face the problem of data transfer and storage, and are also challenged by the problem of long-term dependency during training. As CAEmbed performs only short loops in the middle of the sentence instead of receiving the whole sequence. This approach enables the processing of predicates with local attention, releasing the processes of (long-term) data transfer between recursive layers, which effectively alleviates data transfer and storage overhead.
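The locality of the partial recurrent design can be sketched as follows. This is not Algorithm 1: the recurrent cell is a toy stand-in rather than CARU, and the zero initial state and the window of the current embedding plus its p predecessors are assumptions. The sketch only shows the order of inputs (the context vector enters last) and the fact that words outside the window cannot affect the result.

```python
import math

def toy_cell(state, feature):
    """Stand-in recurrent cell (NOT CARU): element-wise tanh mixing."""
    return [math.tanh(0.5 * s + f) for s, f in zip(state, feature)]

def partial_recurrence(embedded, t, p, context, cell=toy_cell):
    """Encode embedded[t-p .. t], then the context vector, in that order."""
    start = max(0, t - p)
    state = [0.0] * len(context)          # assumed zero initial state
    for vec in embedded[start:t + 1]:     # short loop over the local window only
        state = cell(state, vec)
    return cell(state, context)           # the context vector is fed last

# Hypothetical embedded target words (dimension 2) and context vector.
embedded = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5], [-0.2, 0.4]]
context = [0.05, -0.05]

out = partial_recurrence(embedded, t=3, p=1, context=context)
# Changing a word outside the window (index 0) leaves the output unchanged.
far_changed = [[9.0, 9.0]] + embedded[1:]
out_far = partial_recurrence(far_changed, t=3, p=1, context=context)
# Changing a word inside the window (index 2) does alter the output.
near_changed = embedded[:2] + [[1.0, 1.0]] + embedded[3:]
out_near = partial_recurrence(near_changed, t=3, p=1, context=context)
```

With p = 1 the loop runs over two embeddings and the context vector regardless of sentence length, which is why the cost per step does not grow with the sequence.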

6. Experimental Results and Discussion

The experiments are conducted on the WMT14 and WMT17 English–German datasets for our translation task simulations. WMT14 (https://www.statmt.org/wmt14/translation-task.html, accessed on 15 February 2024) provides 1.5M sentence pairs, which include 116M English words and 110M German words for training. According to the configuration introduced in [13], we select newstest2013 and newstest2017 as the validation set and test set, respectively. Next, WMT17 (https://www.statmt.org/wmt17/translation-task.html, accessed on 15 February 2024) provides 5.58M sentence pairs, which include 141M English words and 135M German words for training, and we use the concatenation of newstest2014 and newstest2015 as the validation set and newstest2017 as the test set. In practice, our pre-processing performs word tokenization, Truecasing optimization [45], Byte-Pair Encoding (BPE) segmentation [46], and sequence length restriction for data preparation. Our model accepts sentences of up to 100 words as input sequences, and we discard tokens with frequencies lower than 4 when building the NMT vocabulary in order to limit the vocabulary size of the source and target languages to 50M tokens.
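The length restriction and frequency cut-off described above can be sketched in plain Python. The function names, the special tokens, and the toy corpus are hypothetical; only the two thresholds (at most 100 words per sentence, minimum token frequency of 4) come from the text, and the actual pipeline relies on TorchText rather than this code.

```python
from collections import Counter

def filter_pairs(pairs, max_len=100):
    """Keep pairs whose source and target both have at most max_len tokens."""
    return [(s, t) for s, t in pairs if len(s) <= max_len and len(t) <= max_len]

def build_vocab(sentences, min_freq=4,
                specials=("<unk>", "<pad>", "<bos>", "<eos>")):
    """Map tokens seen at least min_freq times to indices (specials first)."""
    counts = Counter(tok for sent in sentences for tok in sent)
    kept = sorted((tok for tok, c in counts.items() if c >= min_freq),
                  key=lambda tok: (-counts[tok], tok))
    return {tok: i for i, tok in enumerate(list(specials) + kept)}

# Toy corpus: "the" occurs 6 times, "cat" 4 times, "dog" only twice.
corpus = [["the", "cat"]] * 4 + [["the", "dog"]] * 2
vocab = build_vocab(corpus)

pairs = [(["a"] * 100, ["b"] * 20), (["a"] * 101, ["b"] * 20)]
kept_pairs = filter_pairs(pairs)
```

Tokens below the threshold, such as "dog" here, would be mapped to the unknown token at lookup time.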

In order to implement our models, all NMT models are executed by multiple GPUs (there are four Nvidia Quadro RTX 5000 with a total GB of video memory in one machine) with a distributed training strategy. We implement all models based on the scientific deep learning framework PyTorch [47] with the NLP-related library TorchText (https://github.com/pytorch/text, accessed on 15 February 2024), which provides the toolkit for these pre-processing stages mentioned before, so they can reach our requirements for preparing the experiments. Besides, we allocate the hidden state of feature size to the both recurrent encoder and decoder. All source and target words are represented by the dimensional embedding vector. All trainable parameters are randomly initialized according to a normal distribution with mean and standard deviation defaulted to and , and employed the advanced gradient-related optimizer Adam [48] method with a learning rate of . Besides, we also devise a weight scheduler callback to ensure balanced performance across multiple-layer translation. These callbacks dynamically adjust the loss weight for the gradient produced during backpropagation at each training epoch. Such a strategy helps the model prioritize categories that are currently underperforming, thereby enhancing overall translation performance. All experiments use the same dataset in each test, with a batch size of 100 per iteration set and the same configuration with the same number of neural nodes. In addition, there is a scheduler for adjusting the learning rate, which reduces the learning rate when the loss becomes stagnant and then stops training when the learning rate is reduced to . We set our best model parameters according to the maximum BLEU-4 scores on the validation set.
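The learning-rate schedule described above (reduce when the loss stagnates, stop once the rate falls to a floor) can be sketched without any framework. The class name and every numeric value below (initial rate, reduction factor, patience, floor) are hypothetical placeholders, not the settings used in the experiments. PyTorch offers comparable behaviour through `torch.optim.lr_scheduler.ReduceLROnPlateau`.

```python
class PlateauScheduler:
    """Reduce the learning rate on a stagnant loss; signal when to stop."""

    def __init__(self, lr, factor=0.5, patience=2, min_lr=1e-6):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, loss):
        """Update from one epoch's loss; return False when training should stop."""
        if loss < self.best:
            self.best = loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                self.lr *= self.factor   # loss is stagnant: shrink the rate
                self.bad_epochs = 0
        return self.lr > self.min_lr

# Hypothetical run: the loss improves once and then stays flat.
sched = PlateauScheduler(lr=1e-3, factor=0.5, patience=1, min_lr=2e-4)
losses = [1.0, 0.8] + [0.8] * 20
epochs_run = 0
for loss in losses:
    epochs_run += 1
    if not sched.step(loss):
        break
```

In this run the rate is halved three times (after two stagnant epochs each) before it drops below the floor and training stops.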

In addition to the benchmark attention models Transformer [6] and RNNSearch [5], we also compare our proposed model with other state-of-the-art models: GRU-Inv [41] is an advanced GRU-gated attention model; Transformer Cycle [49] consists of three main strategies and shares parameters in a universal transformer model; Attention+Rep [50] introduces a lightweight computational model by using a random sample of tokens as input to the perturbation; T5-11B [51] proposes a unified framework for converting all text-based language problems into a text-to-text format with numerous parameters in the attention model; and Q-Learning [52] makes use of the reinforcement learning approach to achieve NMT. Note that we have re-implemented these models and fine-tuned their settings to give them the best possible scores for comparison purposes.

The experimental results are shown in Table 1. The results trained using the CAAtt network are better than the benchmark results of the Transformer and RNNSearch models, and the combination of CAAtt and CAEmbed further outperforms the state-of-the-art models in terms of BLEU-4 scores. It can be seen that the improvement of is not significant; instead, its error range is smaller than the others. Additionally, since both T5-11B and our work are based on the attention mechanism, both achieve good results on general tasks. In practice, since the proposed CAEmbed layer performs only a short recurrence in the middle of a sentence, instead of receiving the whole sequence, it can perform well in predicate translation. In contrast, T5-11B is trained on a large dataset and can cover more predicates with postpositions in the dataset, thus achieving results similar to those obtained in this work. Overall, the proposed composite model of CAAtt and CAEmbed with approach yields an average BLEU-4 score of 32.09 and 34.31 on WMT14 and WMT17, respectively (note that BLEU-4 scores are evaluated case-insensitively because of our Truecasing pre-processing). This is reasonable because CAAtt aims to improve the encoding process of the attention mechanism, while CAEmbed aims to refine the content adaptation of word embedding and decoding; together they enhance convergence and make the hidden states more discriminative.
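For readers unfamiliar with the metric, the following is a minimal sketch of a case-insensitive BLEU-4 score for one candidate against one reference. It assumes whitespace tokenization, a single reference, and no smoothing; the scores reported in the paper are presumably corpus-level and produced by a standard evaluation tool, so this sketch illustrates the metric rather than reproducing the evaluation.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu4(candidate, reference):
    """Case-insensitive sentence-level BLEU-4, single reference, no smoothing."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, 5):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        total = sum(cand_counts.values())
        # Clipped matches: an n-gram counts at most as often as in the reference.
        matched = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        if total == 0 or matched == 0:
            return 0.0
        log_precisions.append(math.log(matched / total))
    # Brevity penalty for candidates that are not longer than the reference.
    brevity = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return brevity * math.exp(sum(log_precisions) / 4)
```

Lower-casing both sides before counting is what makes the score case-insensitive.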

Table 1.

BLEU-4 scores with error ranges obtained from various translation models.

6.1. Discrimination of Phrases

We present a qualitative analysis of how CAEmbed highlights the results of the proposed CAAtt attention mechanism through visualization of the partial recurrence. The translations from the original attention are compared with the optimized results obtained with the CAEmbed layer. We study the ratio of correctly translated phrases during post-processing to see how our approach improves the translation quality. For verification, we select sentences from the WMT14 (De to En) validation set that are more difficult to translate correctly, based on the length and structure of the sentences, the use of phrases, and the number of punctuation marks. We translate them using the proposed network with and without the CAEmbed layer and with various state-of-the-art models, and highlight the phrases in the target sentences produced by these well-trained models.

As shown in Table 2, we observe that only our proposed model and Transformer Cycle correctly translate the phrase (looked up); the other results provide alternatives (looked for, checked) with the same meaning, but none of them has the same tense as the reference phrase. In fact, the contexts of the references all use the past tense, so to keep the tense consistent, we believe that the tense of this translated sentence should also be in the past tense, rather than using the present tense of the reference. Furthermore, as sentence length increases, the errors are mainly in the latter part of the sentence. The attention mechanism becomes more difficult to align with the source, which leads to more translation errors and eventually damages the translation quality: it is acceptable to replace “condition” and “association” with “situation” and “society”, respectively, but omitting “association” is unacceptable, and “disease” is even more of a mistranslation. The incorrect attention weights assigned by the original attention module are appropriately alleviated by CAAtt, because it connects the decoded features to the attention layer and can therefore compensate and provide sufficient feedback to the recurrent network, corresponding to the red arrow in Figure 2.

Table 2.

Selected example of translations generated by different models. We underline the phrases and bold the interesting parts for investigation. The BLEU-4 scores reflect the better performance of the proposed model.

This can be seen in the example of attention weights for the attentional reinforcement and correction of a phrase in Table 3. It is clear that the weights of punctuation are quite small, always less than 0.02, and the next smallest are those of conjunctions, between 0.02 and 0.04. A lower weight means that the translated result can still roughly express the original meaning of the sentence even if these tokens are lost during the translation process. The next notable ones are adjectives and nouns, with about and over 0.2. This means that they must not be lost in the translation process, otherwise the meaning of the sentence will be incomplete. The above are the attention weights obtained from the proposed CAAtt network. Besides, the weights of the phrase “looked up” have been enhanced by the CAEmbed layer. As a result, the number and order of words between the source and target sentences are allowed to differ due to the attention mechanism. In the proposed network, the current translated word is connected to CAAtt as a feedback feature and acts on the CARU gate of CAAtt through (5a) to (5c), thus improving the match between the source and target words and allowing a more concise and accurate allocation of attention. In practice, we find that the transition from the initial weights to the final well-trained weights is stable and continuous, with the major words obtaining more attention weight as the training iterations increase.

Table 3.

Calculation of attention weights for the proposed CAAtt network based on the example sentence for the attentional reinforcement and correction of a phrase.

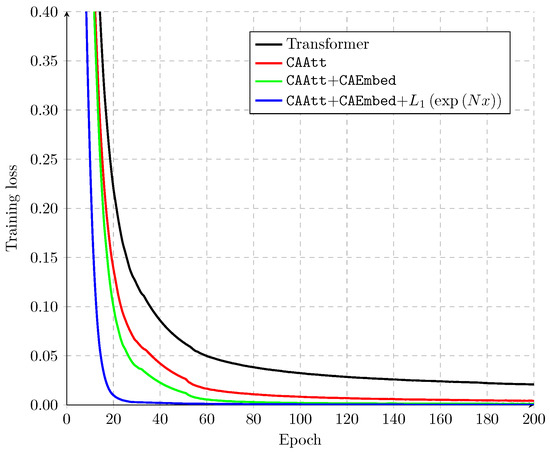

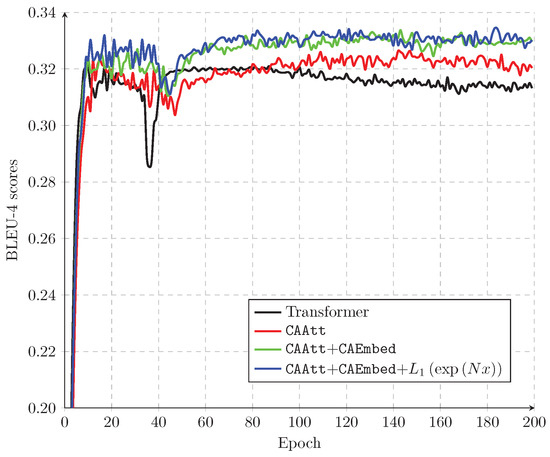

6.2. Convergence Performance

Next, we analyze the performance of the proposed normalization method described in Section 4.2 within the framework of the CAAtt network. Since the purpose of the Softmax classifier is to normalize the features to minimize the overall loss, increasing the number of categories N generates more gradients as feedback for training, motivating it to approach the target quickly. Therefore, better performance can be obtained as the number of categories N increases. To validate the performance of the proposed normalization approach, we apply it to NMT experiments on the Multi30k [53] dataset, whose smaller size makes differences in convergence speed easier to observe.
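To make the remark about gradients and the number of categories N concrete, the sketch below computes the standard Softmax and the gradient of the cross-entropy loss with respect to the logits, which has one component per category. It shows only the plain Softmax baseline; the proposed normalization of Section 4.2 is not reproduced here, and the function names are chosen for this example.

```python
import math


def softmax(logits):
    """Numerically stable Softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy_grad(logits, target):
    """Gradient of -log softmax(logits)[target] w.r.t. the logits: p - onehot."""
    p = softmax(logits)
    return [pi - (1.0 if i == target else 0.0) for i, pi in enumerate(p)]
```

With N categories the gradient vector has N entries that sum to zero: the target logit is pushed up and every other logit is pushed down in proportion to its probability.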

As indicated in Figure 4 and Figure 5, the proposed model achieves a better convergence speed and BLEU-4 score. Compared with the selected Transformer model, our network provides a stable and higher score. In general, the problem of missing phrases is not well addressed because the attention mechanism tends to induce n-gram problems in the translation process. The CAEmbed layer alleviates the absence of translated phrase tokens, so that the attention weights tend to produce more phrase content in the translation. Note that in Figure 5, the blue and green curves, which are trained with and without our proposed normalization, respectively, obtain very close scores. Besides, all the experiments can be well-trained within 200 epochs, and our proposed network reaches its best scores within 100 epochs and maintains them until the end of training. Also, as can be seen in Figure 4, applying can significantly enhance the convergence speed and can generate more gradients for the feedback of discriminative context vectors, making NMT suffer less from the n-gram problems and thus completing the training quickly.

Figure 4.

Convergence tests are performed on the Multi30k using various methods.

Figure 5.

BLEU-4 scores obtained on Multi30k using various methods.

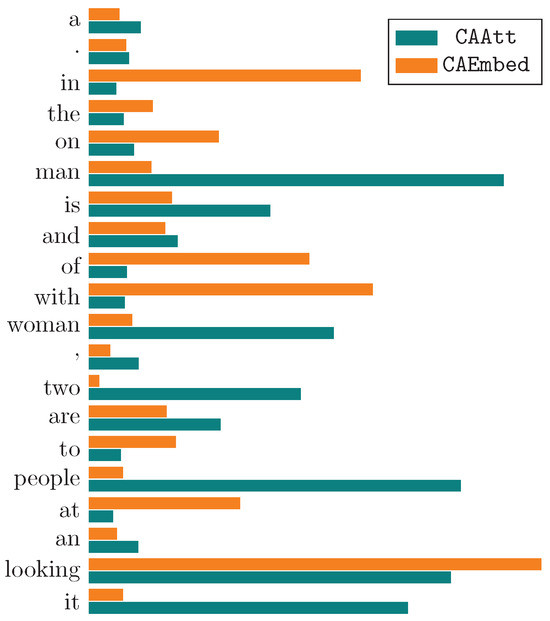

6.3. Content-Adaptive Gate Weighting

To find the underlying reason, we investigate the weights generated by the content-adaptive gates of CARU, with respect to (5d) within the CAAtt and CAEmbed, aiming to understand which parts of speech this gate prefers to penalize or promote. We collect the 20 tokens that appeared most frequently during vocabulary construction in pre-processing; the attended tokens should have higher weight scores after the inner product. We also exclude special tokens such as “” or “”, which are used for the compatibility of model training but are not meaningful in our evaluation.

As indicated in Figure 6, CAAtt can be seen as evolving from a task based on NLP labeling, and the results of CAEmbed tend toward a phrase detection function. Observing the results of CAAtt, as mentioned in Section 6.1, the lower-weight tokens are stop words, such as “a/an” and “the”, and punctuation, which can be uninformative for the NLP task. In contrast, noun, verb, and adjective tokens are consistently given a higher weight, and their features contain the major information that must be translated. Besides, CAEmbed adjusts the weight distribution of frequently highlighted tokens to a more reasonable proportion. As a result, phrase-related tokens, such as “looking”, “to”, and “with”, are further highlighted.
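The token selection used for this analysis can be sketched as follows: count token frequencies, skip the special tokens, and average the gate weight observed for each of the most frequent tokens. The input format (a stream of token and gate-weight pairs) and the special token names are assumptions made for this example; the source does not specify them.

```python
from collections import Counter, defaultdict

SPECIAL_TOKENS = {"<sos>", "<eos>", "<pad>", "<unk>"}  # hypothetical names


def top_token_gate_weights(records, k=20):
    """Mean gate weight of the k most frequent non-special tokens.

    records: iterable of (token, gate_weight) pairs.
    Returns a list of (token, mean_weight), most frequent token first.
    """
    counts = Counter()
    sums = defaultdict(float)
    for tok, w in records:
        if tok in SPECIAL_TOKENS:
            continue
        counts[tok] += 1
        sums[tok] += w
    return [(tok, sums[tok] / n) for tok, n in counts.most_common(k)]
```

Sorting by frequency rather than by weight matches the description above, where the 20 most frequent tokens are chosen first and their weights are inspected afterwards.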

Figure 6.

The weights of the top-20 tokens generated by CARU’s content-adaptive gate in CAAtt and CAEmbed, respectively.

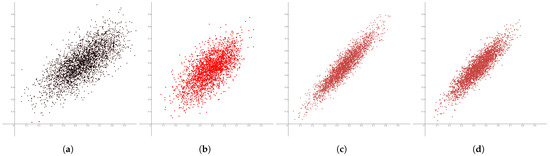

6.4. Attention Weights Interpretability

Finally, we investigate the interpretability of the weights of the proposed layers under the following assumption: if the context is more relevant, then the learned attention weights should be consistent with the natural measure of feature importance, and the proposed attention distribution will strongly change the output distribution of the model. Given this assumption, we explore the relative importance when the distribution of attention weights is enhanced. We use i to denote the token with the highest attention weight in a sentence and draw another token uniformly from the same sentence; by comparing the importance of i with that of the randomly drawn token, we assess how i influences the output distribution of the proposed model. Concretely, we measure the difference of two Jensen–Shannon (JS) divergences [54]:

where is the divergence of the network’s reference output distribution p and the output distribution q. Intuitively, the concentration in the output distribution of removing should be obvious if is truly the most important token p, and the obtained should be obtained as a positive. With respect to Figure 4 and Figure 5, based on the proposed attention network with Multi30k dataset, we plot the against .
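As a rough illustration of this measurement, the sketch below computes the Jensen–Shannon divergence between two discrete distributions and the difference of two such divergences. It assumes that the compared quantity is JS(p, q_i) minus JS(p, q_r), where q_i and q_r denote the output distributions after removing the top-attended token i and the randomly drawn token r; this form and the function names are assumptions for the example, not a transcription of the paper's equation.

```python
import math


def kl(p, q):
    """Kullback-Leibler divergence KL(p || q) in nats; terms with p_i = 0 vanish."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js(p, q):
    """Jensen-Shannon divergence: mean KL of p and q to their midpoint."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def delta_js(p, q_without_i, q_without_r):
    """Assumed form: JS(p, q_i) - JS(p, q_r); positive when i matters more than r."""
    return js(p, q_without_i) - js(p, q_without_r)
```

The divergence is symmetric, is zero for identical distributions, and is bounded by ln 2 when natural logarithms are used.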

As illustrated in Figure 7, it can be found that these results are near the diagonal line because the ideal case is . For this reason, we define the loss form as , which is the sum of the distances from each point to the diagonal. For the Transformer attention result in Figure 7a, a fragmented distribution is presented, with only close to 0.5 can obtain an acceptable for training, but the loss is considerable, which means that improvement is still needed. For the improved attention of the proposed method, the results show that the refined network can help to correctly align the most intuitive token i. This can be found in Figure 7b, where the CAAtt results tend to project onto the diagonal, while also following a normal distribution along the diagonal. Next, Figure 7c,d present the distribution results of applying the CAEmbed layer, which significantly enhances the concentration of the results on the diagonal, indicating phrases related to attention weights. The above analysis shows that they can bring interpretable attention in a more intuitive way. Finally, the proposed CAAtt + CAEmbed + framework is more powerful to optimize attention mechanism in NMT tasks in terms of training time and BLEU scores.
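The idea of scoring a scatter plot by its distance to the diagonal can be sketched as below. The sketch assumes the diagonal is the line y = x and uses the perpendicular distance |x - y| / sqrt(2) for each point; the exact loss form used in the paper is not recoverable from the text, so this is one plausible reading rather than the authors' definition.

```python
import math


def diagonal_loss(points):
    """Sum of perpendicular distances from each (x, y) point to the line y = x."""
    return sum(abs(x - y) / math.sqrt(2) for x, y in points)
```

Under this reading, a cloud of points lying exactly on the diagonal scores zero, and a more fragmented distribution yields a larger value.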

Figure 7.

The visualization of divergence of the attention distribution against between the most important token i and the randomly drawn token r. The results from left to right correspond to the networks of (a) Transformer, (b) CAAtt, (c) CAAtt + CAEmbed and (d) CAAtt + CAEmbed + , respectively.

7. Conclusions

In this work, we introduce an advanced network architecture comprising the CARU-based Content-Adaptive Attention (CAAtt) and the CARU-based Embedding (CAEmbed) layer for Neural Machine Translation (NMT) and word embedding tasks. In CAAtt, we propose a novel approach that connects decoded embedding features and generates new source representations based on content-adaptive gates contributed by CARU. This enables the attention mechanism to focus on the current context of target sentences, enhancing translation quality. To further improve training convergence, we introduce new normalization methods that enhance the gradient of attention weights during processing. These methods, particularly normalization, significantly speed up training convergence. Additionally, CAEmbed uses CARU to refine embeddings through a partial recurrent layer, which performs a short recurrence within the middle of sentences instead of receiving the whole sequence, allowing it to handle the predicate with local attention and avoid long-term data transfer between recurrent layers. This enhances the source representation associated with previously decoded states, especially for phrases. Our in-depth analysis reveals that our model effectively addresses long-term dependencies between sentences, further improving translation quality. Experimental results demonstrate that CAAtt and CAEmbed outperform state-of-the-art models, leading to improved BLEU scores. The proposed normalization methods also contribute to faster convergence during training. These findings indicate that attention mechanism architectures can be greatly enhanced using our proposed methods, resulting in a noticeable increase in accuracy.

In our forthcoming study, we will refine the tone of NMT outputs to suit various contextual expressions. The goal is to provide translations that are more natural, fluent, contextual, and objective. Such adjustments help to enhance the quality of the translation, making it more akin to human expression. For example, a more formal and objective tone is preferred in business documents, while a more casual and relaxed tone is suitable for informal chat dialogues.

Author Contributions

Conceptualization, K.-H.C. and S.-K.I.; methodology, K.-H.C. and S.-K.I.; software, K.-H.C.; validation, S.-K.I.; formal analysis, K.-H.C. and S.-K.I.; investigation, K.-H.C.; resources, K.-H.C. and S.-K.I.; data curation, K.-H.C. and S.-K.I.; writing—original draft preparation, K.-H.C.; writing—review and editing, S.-K.I.; visualization, K.-H.C.; supervision, S.-K.I.; project administration, S.-K.I.; funding acquisition, S.-K.I. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Macao Polytechnic University (Research Project RP/FCA-06/2023).

Data Availability Statement

Data are contained within the article.

Acknowledgments

We would like to thank Laurie Cuthbert for English language editing.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Wang, X.; Lu, Z.; Tu, Z.; Li, H.; Xiong, D.; Zhang, M. Neural Machine Translation Advised by Statistical Machine Translation. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

2. Chan, K.H.; Ke, W.; Im, S.K. CARU: A Content-Adaptive Recurrent Unit for the Transition of Hidden State in NLP. In Neural Information Processing; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 693–703.

3. Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to Sequence Learning with Neural Networks. arXiv 2014, arXiv:1409.3215.

4. Li, J.; Xiong, D.; Tu, Z.; Zhu, M.; Zhang, M.; Zhou, G. Modeling Source Syntax for Neural Machine Translation. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 688–697.

5. Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2014, arXiv:1409.0473.

6. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

7. Liu, J.; Zhang, Y. Attention Modeling for Targeted Sentiment. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics, Valencia, Spain, 3–7 April 2017.

8. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics—ACL ’02, Philadelphia, PA, USA, 7–12 July 2002.

9. Cho, K.; van Merrienboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014.

10. Wang, X.X.; Zhu, C.H.; Li, S.; Zhao, T.J.; Zheng, D.Q. Neural machine translation research based on the semantic vector of the tri-lingual parallel corpus. In Proceedings of the 2016 International Conference on Machine Learning and Cybernetics (ICMLC), Jeju, Republic of Korea, 10–13 July 2016.

11. Garg, S.; Peitz, S.; Nallasamy, U.; Paulik, M. Jointly Learning to Align and Translate with Transformer Models. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–9 November 2019.

12. Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

13. Luong, T.; Pham, H.; Manning, C.D. Effective Approaches to Attention-based Neural Machine Translation. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1412–1421.

14. Fan, H.; Zhang, X.; Xu, Y.; Fang, J.; Zhang, S.; Zhao, X.; Yu, J. Transformer-based multimodal feature enhancement networks for multimodal depression detection integrating video, audio and remote photoplethysmograph signals. Inf. Fusion 2024, 104, 102161.

15. Huang, P.Y.; Liu, F.; Shiang, S.R.; Oh, J.; Dyer, C. Attention-based Multimodal Neural Machine Translation. In Proceedings of the First Conference on Machine Translation: Volume 2, Shared Task Papers, Berlin, Germany, 11–12 August 2016; pp. 639–645.

16. Tu, Z.; Lu, Z.; Liu, Y.; Liu, X.; Li, H. Modeling Coverage for Neural Machine Translation. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 76–85.

17. Kazimi, M.B.; Costa-jussà, M.R. Coverage for Character Based Neural Machine Translation. Proces. Del Leng. Nat. 2017, 59, 99–106.

18. Cheng, R.; Chen, D.; Ma, X.; Cheng, Y.; Cheng, H. Intelligent Quantitative Safety Monitoring Approach for ATP Using LSSVM and Probabilistic Model Checking Considering Imperfect Fault Coverage. IEEE Trans. Intell. Transp. Syst. 2023; Early Access.

19. Mi, H.; Sankaran, B.; Wang, Z.; Ittycheriah, A. Coverage Embedding Models for Neural Machine Translation. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 955–960.

20. Douzon, T.; Duffner, S.; Garcia, C.; Espinas, J. Long-Range Transformer Architectures for Document Understanding. In Document Analysis and Recognition—ICDAR 2023 Workshops; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2023; pp. 47–64.

21. Tang, G.; Müller, M.; Rios, A.; Sennrich, R. Why Self-Attention? A Targeted Evaluation of Neural Machine Translation Architectures. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 4263–4272.

22. Yang, Z.; Hu, Z.; Deng, Y.; Dyer, C.; Smola, A. Neural Machine Translation with Recurrent Attention Modeling. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics, Valencia, Spain, 3–7 April 2017; pp. 383–387.

23. Mondal, S.K.; Zhang, H.; Kabir, H.D.; Ni, K.; Dai, H.N. Machine translation and its evaluation: A study. Artif. Intell. Rev. 2023, 56, 10137–10226.

24. Cohn, T.; Hoang, C.D.V.; Vymolova, E.; Yao, K.; Dyer, C.; Haffari, G. Incorporating Structural Alignment Biases into an Attentional Neural Translation Model. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016.

25. Rosendahl, J.; Herold, C.; Petrick, F.; Ney, H. Recurrent Attention for the Transformer. In Proceedings of the Second Workshop on Insights from Negative Results in NLP, Online and Punta Cana, Dominican Republic, 1 November 2021; pp. 62–66.

26. Yazar, B.K.; Şahın, D.Ö.; Kiliç, E. Low-Resource Neural Machine Translation: A Systematic Literature Review. IEEE Access 2023, 11, 131775–131813.

27. Zhang, B.; Xiong, D.; Su, J. Neural Machine Translation with Deep Attention. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 154–163.

28. Vishnu, P.R.; Vinod, P.; Yerima, S.Y. A Deep Learning Approach for Classifying Vulnerability Descriptions Using Self Attention Based Neural Network. J. Netw. Syst. Manag. 2021, 30, 9.

29. Sethi, N.; Dev, A.; Bansal, P.; Sharma, D.K.; Gupta, D. Enhancing Low-Resource Sanskrit-Hindi Translation through Deep Learning with Ayurvedic Text. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023.

30. Shan, Y.; Feng, Y.; Shao, C. Modeling Coverage for Non-Autoregressive Neural Machine Translation. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021.

31. Zhou, L.; Zhang, J.; Zong, C. Improving Autoregressive NMT with Non-Autoregressive Model. In Proceedings of the First Workshop on Automatic Simultaneous Translation, Seattle, WA, USA, 9–10 July 2020.

32. Wu, L.; Tian, F.; Qin, T.; Lai, J.; Liu, T.Y. A study of reinforcement learning for neural machine translation. arXiv 2018, arXiv:1808.08866.

33. Aurand, J.; Cutlip, S.; Lei, H.; Lang, K.; Phillips, S. Deep Q-Learning for Decentralized Multi-Agent Inspection of a Tumbling Target. J. Spacecr. Rocket. 2024, 1–14.

34. Kumari, D.; Ekbal, A.; Haque, R.; Bhattacharyya, P.; Way, A. Reinforced NMT for sentiment and content preservation in low-resource scenario. Trans. Asian Low-Resour. Lang. Inf. Process. 2021, 20, 1–27.

35. Bengio, Y.; Simard, P.; Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994, 5, 157–166.

36. Trinh, T.H.; Dai, A.M.; Luong, M.T.; Le, Q.V. Learning Longer-term Dependencies in RNNs with Auxiliary Losses. arXiv 2018, arXiv:1803.00144.

37. Houdt, G.V.; Mosquera, C.; Nápoles, G. A review on the long short-term memory model. Artif. Intell. Rev. 2020, 53, 5929–5955.

38. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

39. Cho, K.; van Merrienboer, B.; Bahdanau, D.; Bengio, Y. On the Properties of Neural Machine Translation: Encoder–Decoder Approaches. In Proceedings of the SSST-8, Eighth Workshop on Syntax, Semantics and Structure in Statistical Translation, Doha, Qatar, 25 October 2014.

40. Dey, R.; Salem, F.M. Gate-variants of Gated Recurrent Unit (GRU) neural networks. In Proceedings of the 2017 IEEE 60th International Midwest Symposium on Circuits and Systems (MWSCAS), Boston, MA, USA, 6–9 August 2017.

41. Zhang, B.; Xiong, D.; Xie, J.; Su, J. Neural Machine Translation With GRU-Gated Attention Model. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 4688–4698.

42. Cao, Q.; Xiong, D. Encoding Gated Translation Memory into Neural Machine Translation. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018.

43. Chan, K.H.; Im, S.K.; Ke, W. Multiple classifier for concatenate-designed neural network. Neural Comput. Appl. 2021, 34, 1359–1372.

44. Ranjan, R.; Castillo, C.D.; Chellappa, R. L2-constrained Softmax Loss for Discriminative Face Verification. arXiv 2017, arXiv:1703.09507.

45. Lita, L.V.; Ittycheriah, A.; Roukos, S.; Kambhatla, N. tRuEcasIng. In Proceedings of the 41st Annual Meeting on Association for Computational Linguistics—ACL’03, Sapporo, Japan, 7–12 July 2003.

46. Sennrich, R.; Haddow, B.; Birch, A. Neural Machine Translation of Rare Words with Subword Units. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016.

47. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2019; Volume 32.

48. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

49. Takase, S.; Kiyono, S. Lessons on Parameter Sharing across Layers in Transformers. arXiv 2021, arXiv:2104.06022.

50. Takase, S.; Kiyono, S. Rethinking Perturbations in Encoder-Decoders for Fast Training. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2021, Online, 6–11 June 2021.

51. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. arXiv 2019, arXiv:1910.10683.

52. Kumar, G.; Foster, G.; Cherry, C.; Krikun, M. Reinforcement learning based curriculum optimization for neural machine translation. arXiv 2019, arXiv:1903.00041.

53. Elliott, D.; Frank, S.; Sima’an, K.; Specia, L. Multi30K: Multilingual English-German Image Descriptions. In Proceedings of the 5th Workshop on Vision and Language, Berlin, Germany, 12 August 2016.

54. Fuglede, B.; Topsoe, F. Jensen-Shannon divergence and Hilbert space embedding. In Proceedings of the International Symposium on Information Theory, ISIT 2004, Chicago, IL, USA, 27 June–2 July 2004.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).