Oscillator Simulation with Deep Neural Networks

Abstract

1. Introduction

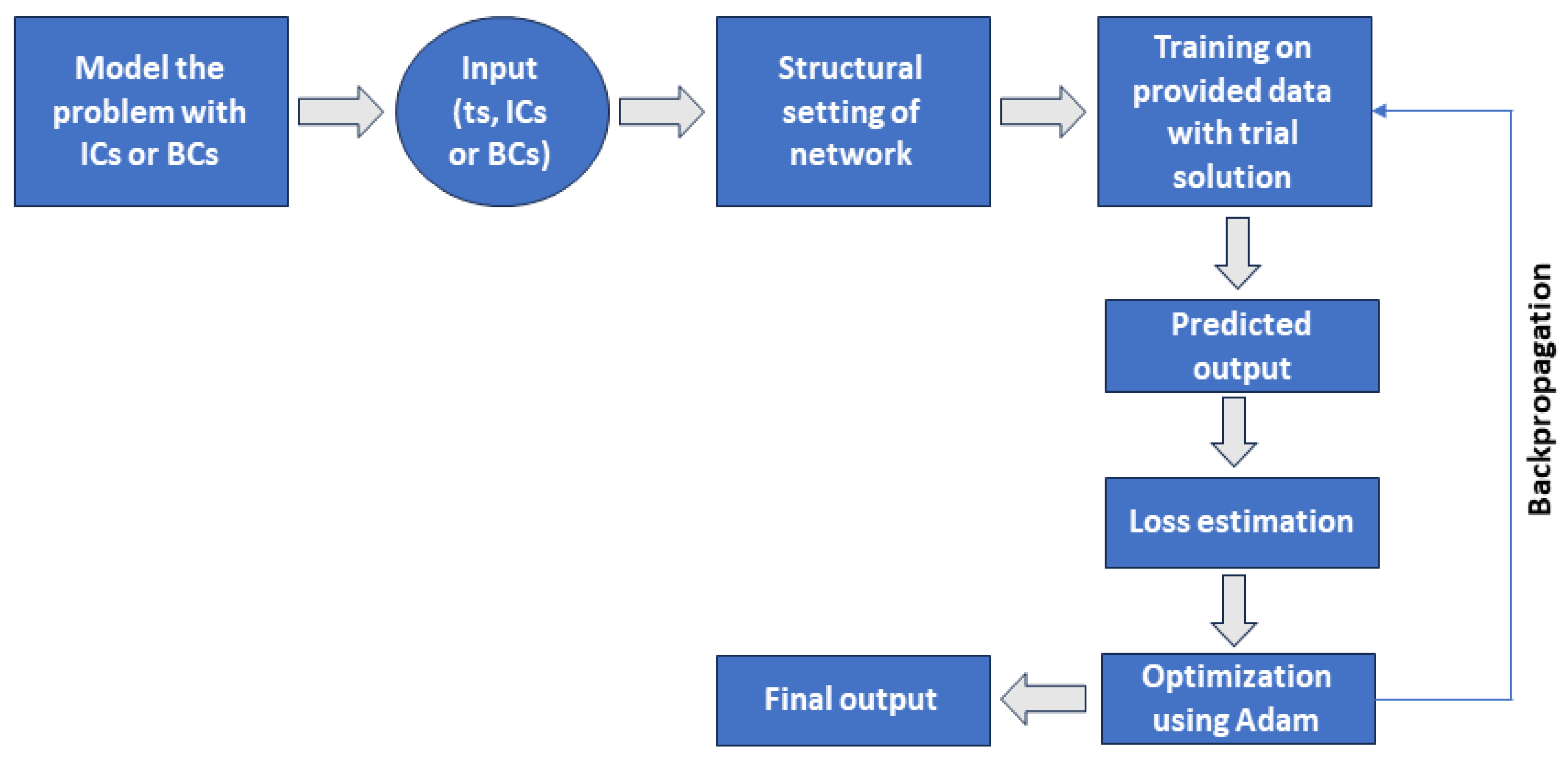

2. Structural Configuration and Method

- I. Preparation: Provide a comprehensive dataset (X, Y), consisting of input features X and the corresponding dependent-variable values Y, so that the FCNN is trained on diverse input signals and operating conditions. The dataset spans a wide range of amplitudes, frequencies, and nonlinearities. Split it into training and testing sets to validate the performance of the model.

- II. Model initialization: Initialize the weights and biases of the FCNN and set the hyperparameters, including the learning rate and number of epochs. The number of input units matches the number of input features, and the number of output units matches the number of dependent variables. Our network therefore has one input unit, because each DE presented in Section 3.1 and Section 3.2 has a single independent variable, and one output unit, because each DE has a single dependent variable. The network has three hidden layers, which makes it a deep neural network. Each hidden layer contains 16 neurons (64 for Oscillator 1, as listed in the parameter table).

- III. Forward propagation: In each epoch, perform forward propagation to obtain the model output, applying the activation function to introduce nonlinearity. We chose SinActv, the sine activation, because it is continuous and smooth, which gives smooth transitions and continuous gradients during training. This smoothness supports effective optimization and helps avoid problems such as vanishing or exploding gradients, which can impede training. It can also accelerate convergence.

- IV. Loss estimation: Compute the loss between the true and predicted outputs on the training set and accumulate it over the epoch. To measure the residuals, we use the L2 loss, i.e., the mean of the squared differences between the true and predicted values, $L = \frac{1}{N}\sum_{i=1}^{N}(y_i - \hat{y}_i)^2$.

- V. Backpropagation: Compute the gradients of the loss with respect to the weights and biases by propagating them backward through the network, layer by layer, and use them to update the weights and biases.

- VI. Optimization: Update the parameters with an optimization algorithm to minimize the loss computed during training. Training techniques such as regularization and adaptive optimization are used to improve the performance and generalization of the FCNN. We use the Adam algorithm [45] with a learning rate of 0.001. In each epoch, the network is trained on a new set of distinct points, generated by adding Gaussian noise [46] to evenly spaced points on the t domain.

- VII. Model assessment: After training for the specified number of epochs, evaluate the model on the testing set by computing the average loss and assessing its accuracy.

- VIII. Prediction: Once training is complete, pass new input values to the trained network to predict the dependent variable for unseen data.
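The data-handling parts of Steps I, IV, and VI can be illustrated with a short NumPy sketch. It assumes a time domain [0, 10], 100 points, a noise standard deviation of 0.05, a 20% test split, and a stand-in target cos(t); these values and the helper names `perturbed_points`, `train_test_split`, and `l2_loss` are hypothetical and not taken from the paper. Clipping the perturbed points back into the domain is also our own choice.

```python
import numpy as np


def perturbed_points(t_min, t_max, n, noise_std, rng):
    """Evenly spaced points on [t_min, t_max] plus Gaussian noise (Step VI).

    Calling this once per epoch yields a fresh set of distinct training points.
    Points are clipped so they stay inside the domain (an assumption).
    """
    base = np.linspace(t_min, t_max, n)
    noisy = base + rng.normal(0.0, noise_std, size=n)
    return np.clip(noisy, t_min, t_max)


def train_test_split(x, y, test_fraction, rng):
    """Randomly split paired arrays into training and testing sets (Step I)."""
    idx = rng.permutation(len(x))
    n_test = int(round(test_fraction * len(x)))
    test, train = idx[:n_test], idx[n_test:]
    return (x[train], y[train]), (x[test], y[test])


def l2_loss(y_true, y_pred):
    """L2 loss of Step IV: mean of the squared differences."""
    return float(np.mean((y_true - y_pred) ** 2))


rng = np.random.default_rng(0)
t = perturbed_points(0.0, 10.0, 100, noise_std=0.05, rng=rng)
y = np.cos(t)  # hypothetical stand-in target, not one of the paper's oscillators
(t_train, y_train), (t_test, y_test) = train_test_split(t, y, 0.2, rng)
print(len(t_train), len(t_test))  # 80 20
```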

Algorithm 1: FCNN Training and Prediction.
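Algorithm 1 can be sketched end to end in plain NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses the 1-16-16-16-1 architecture of Step II with a sine activation (standing in for SinActv) on the hidden layers, but fits a hypothetical target x(t) = cos(t) on [0, 2π] by supervised L2 regression rather than solving either oscillator, and runs only 2,000 epochs. The Xavier-style initialization and the perturbation scale of 0.1 times the grid spacing are our own choices, and all function names are illustrative.

```python
import numpy as np


def init_params(layer_sizes, rng):
    """Step II: Xavier-style initialization, one [W, b] pair per dense layer."""
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        W = rng.normal(0.0, np.sqrt(2.0 / (n_in + n_out)), size=(n_in, n_out))
        params.append([W, np.zeros(n_out)])
    return params


def forward(params, x):
    """Step III: sine activation on hidden layers, linear output layer.

    Returns the prediction plus cached pre-activations and layer inputs.
    """
    pre, acts = [], [x]
    a = x
    for k, (W, b) in enumerate(params):
        z = a @ W + b
        pre.append(z)
        a = z if k == len(params) - 1 else np.sin(z)
        acts.append(a)
    return a, pre, acts


def backward(params, pre, acts, y):
    """Step V: gradients of mean((y_hat - y)**2) with respect to every W and b."""
    grads = [None] * len(params)
    delta = 2.0 * (acts[-1] - y) / y.size  # dL/dz at the linear output layer
    for k in reversed(range(len(params))):
        W = params[k][0]
        grads[k] = [acts[k].T @ delta, delta.sum(axis=0)]
        if k > 0:
            delta = (delta @ W.T) * np.cos(pre[k - 1])  # chain rule through sin
    return grads


class Adam:
    """Step VI: Adam update with the standard defaults beta1=0.9, beta2=0.999."""

    def __init__(self, params, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [[np.zeros_like(p) for p in layer] for layer in params]
        self.v = [[np.zeros_like(p) for p in layer] for layer in params]
        self.t = 0

    def step(self, params, grads):
        self.t += 1
        for k, layer in enumerate(params):
            for j in range(len(layer)):
                g = grads[k][j]
                self.m[k][j] = self.beta1 * self.m[k][j] + (1 - self.beta1) * g
                self.v[k][j] = self.beta2 * self.v[k][j] + (1 - self.beta2) * g**2
                m_hat = self.m[k][j] / (1 - self.beta1**self.t)
                v_hat = self.v[k][j] / (1 - self.beta2**self.t)
                layer[j] -= self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


def train(layer_sizes=(1, 16, 16, 16, 1), epochs=2000, lr=1e-3, n_points=64, seed=0):
    """Steps II-VI: initialize, then train on freshly perturbed points each epoch."""
    rng = np.random.default_rng(seed)
    params = init_params(layer_sizes, rng)
    opt = Adam(params, lr=lr)
    grid = np.linspace(0.0, 2.0 * np.pi, n_points)
    spacing = grid[1] - grid[0]
    history = []
    for _ in range(epochs):
        t = np.clip(grid + rng.normal(0.0, 0.1 * spacing, n_points), 0.0, 2.0 * np.pi)
        x, y = t[:, None], np.cos(t)[:, None]  # hypothetical target x(t) = cos(t)
        y_hat, pre, acts = forward(params, x)
        history.append(float(np.mean((y_hat - y) ** 2)))  # Step IV: L2 loss
        opt.step(params, backward(params, pre, acts, y))   # Steps V and VI
    return params, history


params, history = train()
# Step VIII: predict at new inputs not used during training
t_new = np.linspace(0.0, 2.0 * np.pi, 7)[:, None]
prediction, _, _ = forward(params, t_new)
print(f"L2 loss: first epoch {history[0]:.4f}, last epoch {history[-1]:.4f}")
```

Backpropagation is written by hand here for transparency; an automatic-differentiation framework would compute the same gradients with less code.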

3. Application: Mathematical Models

3.1. Van der Pol Equation (Oscillator 1)

3.2. Mathieu Equation (Oscillator 2)

4. Results

4.1. Behavior of Oscillations for Parametric Excitation (Oscillator 1)

4.2. Behavior of Oscillations for External Excitation (Oscillator 1)

4.3. Effects of Parameters on the Oscillations of Oscillator 2

5. Discussion

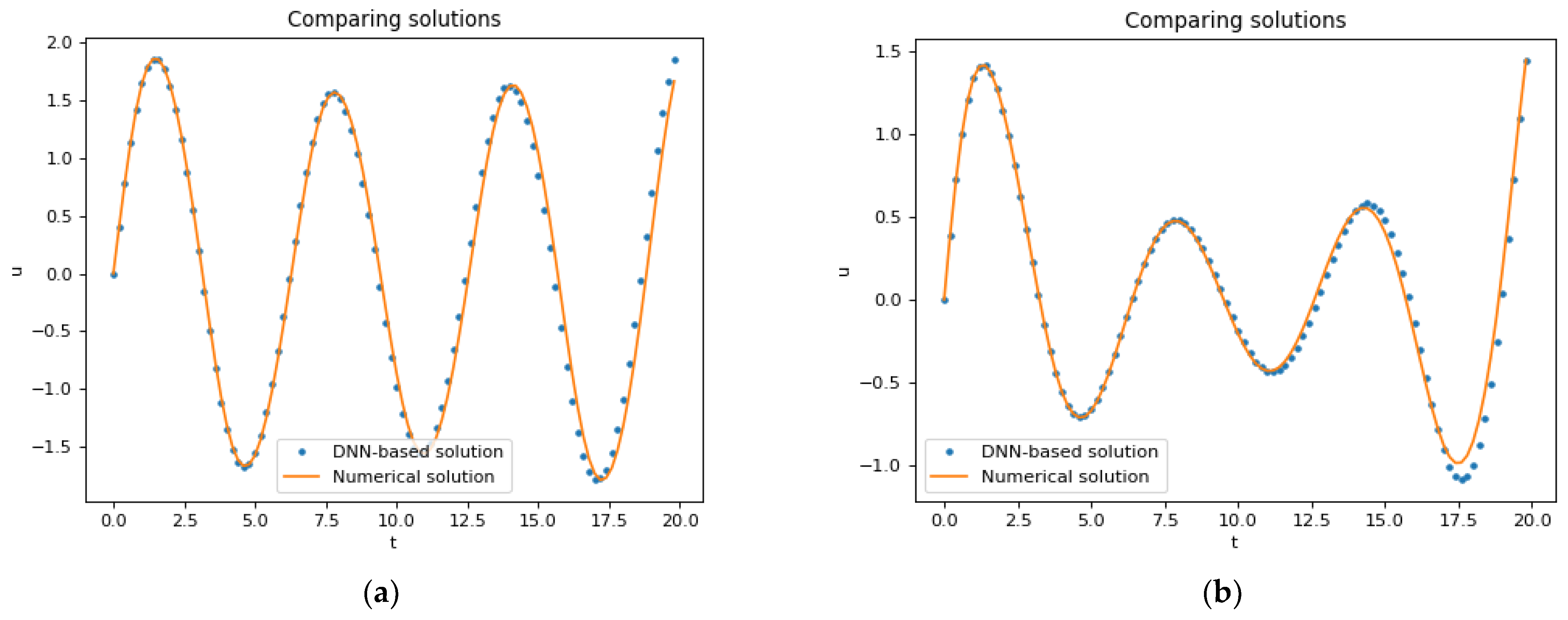

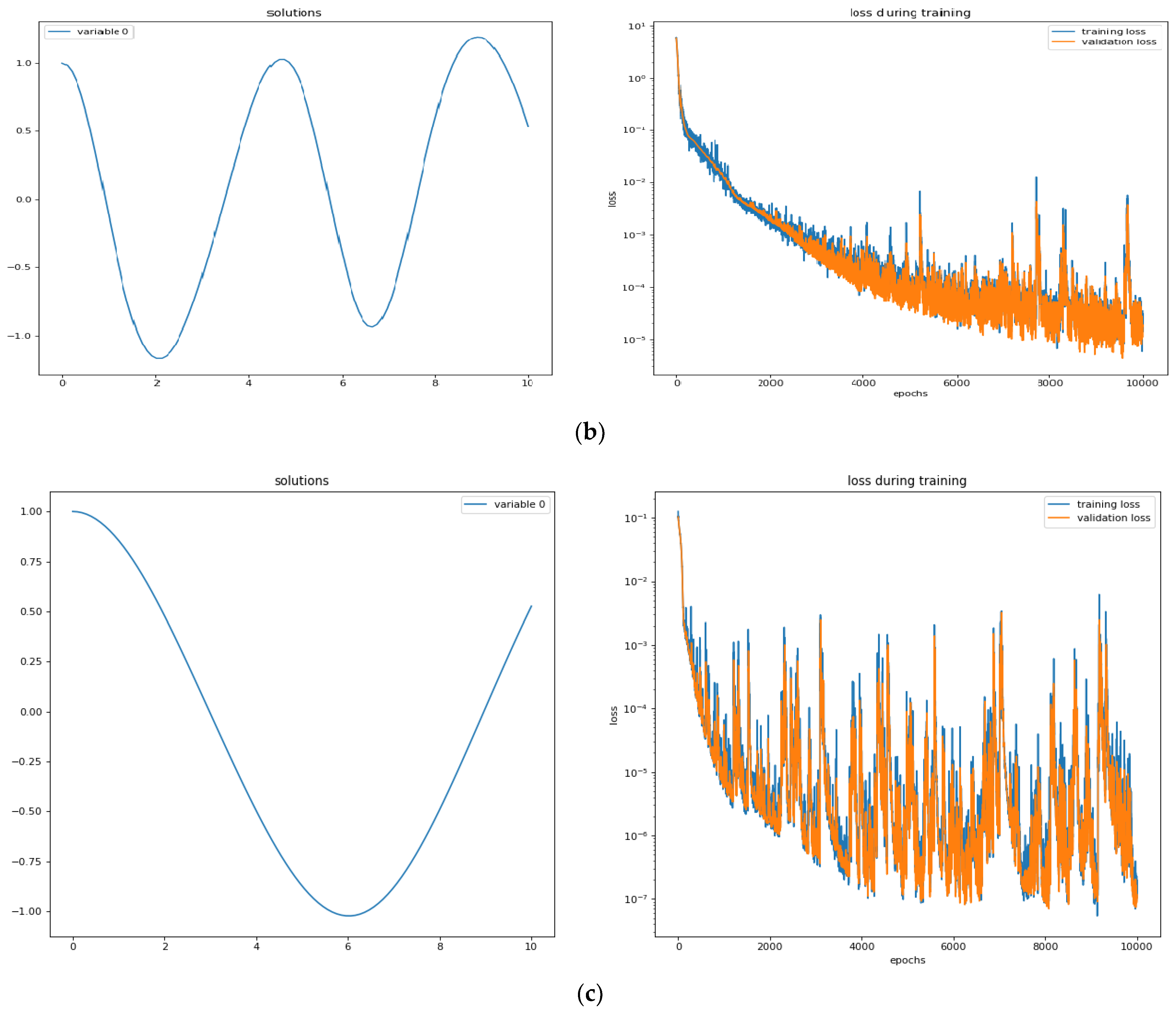

- We successfully applied the proposed algorithm, which uses an FCNN as the fundamental architecture of the DNN, to approximate the solutions of differential equations governing linear and nonlinear oscillators. The accurate predictions obtained, even in nonlinear and dynamic scenarios, highlight the robustness of DNNs in handling complex, real-world problems.

- Trained DNN models can generalize well to unseen data patterns, making them useful for predicting responses in scenarios not encountered during training.

- The proposed algorithm performs best with an appropriate network configuration: suitable numbers of units in the input, output, and hidden layers, SinActv as the activation function, and Adam as the optimizer.

- The loss is evaluated during both training and validation. The loss values in the graphical results remain small in both phases, indicating that the network performs efficiently during training and validation.

- To validate the proposed methodology, we compared the DNN-based solutions with those of the LSODA algorithm [47], which switches automatically between Adams methods for nonstiff problems and backward differentiation formulas (BDF) for stiff problems. The comparison shows the discrepancy between the two methodologies.
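A reference solution of the kind used in this comparison can be generated with SciPy's `solve_ivp`, which exposes LSODA through `method="LSODA"`. The sketch below assumes the unforced van der Pol equation $\ddot{x} - \mu(1 - x^2)\dot{x} + x = 0$ with hypothetical values μ = 1, x(0) = 2, and ẋ(0) = 0 on t ∈ [0, 20]; the forced and parametrically excited variants studied in the paper are not reproduced. The discrepancy measure, the maximum pointwise absolute difference in `max_abs_discrepancy`, is likewise an illustrative choice.

```python
import numpy as np
from scipy.integrate import solve_ivp


def van_der_pol(t, state, mu):
    """Unforced van der Pol oscillator x'' - mu (1 - x^2) x' + x = 0 as a first-order system."""
    x, v = state
    return [v, mu * (1.0 - x**2) * v - x]


def lsoda_reference(mu, x0, v0, t_eval):
    """Reference displacement x(t) from SciPy's LSODA integrator."""
    sol = solve_ivp(van_der_pol, (t_eval[0], t_eval[-1]), [x0, v0],
                    method="LSODA", t_eval=t_eval, args=(mu,),
                    rtol=1e-8, atol=1e-10)
    if not sol.success:
        raise RuntimeError(sol.message)
    return sol.y[0]


def max_abs_discrepancy(x_pred, x_ref):
    """Largest pointwise gap between a prediction and the reference solution."""
    return float(np.max(np.abs(np.asarray(x_pred) - np.asarray(x_ref))))


t_eval = np.linspace(0.0, 20.0, 401)
x_ref = lsoda_reference(mu=1.0, x0=2.0, v0=0.0, t_eval=t_eval)
```

Given hypothetical network predictions `x_dnn` on the same `t_eval`, `max_abs_discrepancy(x_dnn, x_ref)` summarizes the gap in one number. Setting μ = 0 reduces the equation to a simple harmonic oscillator with exact solution x(t) = 2 cos t, a convenient sanity check for the reference solver.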

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Qian, E.; Kramer, B.; Peherstorfer, B.; Willcox, K. Lift & learn: Physics-informed machine learning for large-scale nonlinear dynamical systems. Phys. D Nonlinear Phenom. 2020, 406, 132401.

- Van den Bosch, P.P.J.; van der Klauw, A.C. Modeling, Identification and Simulation of Dynamical Systems; CRC Press: Boca Raton, FL, USA, 2020.

- Abohamer, M.; Awrejcewicz, J.; Amer, T. Modeling of the vibration and stability of a dynamical system coupled with an energy harvesting device. Alex. Eng. J. 2023, 63, 377–397.

- Zhao, Y.; Jiang, C.; Vega, M.A.; Todd, M.D.; Hu, Z. Surrogate modeling of nonlinear dynamic systems: A comparative study. J. Comput. Inf. Sci. Eng. 2023, 23, 011001.

- Bukhari, A.H.; Sulaiman, M.; Raja, M.A.Z.; Islam, S.; Shoaib, M.; Kumam, P. Design of a hybrid NAR-RBFs neural network for nonlinear dusty plasma system. Alex. Eng. J. 2020, 59, 3325–3345.

- Ul Rahman, J.; Makhdoom, F.; Ali, A.; Danish, S. Mathematical modeling and simulation of biophysics systems using neural network. Int. J. Mod. Phys. B 2023, 2450066.

- Ul Rahman, J.; Danish, S.; Lu, D. Deep Neural Network-Based Simulation of Sel’kov Model in Glycolysis: A Comprehensive Analysis. Mathematics 2023, 11, 3216.

- Onder, I.; Secer, A.; Ozisik, M.; Bayram, M. On the optical soliton solutions of Kundu–Mukherjee–Naskar equation via two different analytical methods. Optik 2022, 257, 168761.

- Goswami, A.; Singh, J.; Kumar, D.; Gupta, S. An efficient analytical technique for fractional partial differential equations occurring in ion acoustic waves in plasma. J. Ocean. Eng. Sci. 2019, 4, 85–99.

- Iqbal, N.; Chughtai, M.T.; Ullah, R. Fractional Study of the Non-Linear Burgers’ Equations via a Semi-Analytical Technique. Fractal Fract. 2023, 7, 103.

- Rahman, J.U.; Mannan, A.; Ghoneim, M.E.; Yassen, M.F.; Haider, J.A. Insight into the study of some nonlinear evolution problems: Applications based on Variation Iteration Method with Laplace. Int. J. Mod. Phys. B 2023, 37, 2350030.

- Huang, Y.; Sun, S.; Duan, X.; Chen, Z. A study on deep neural networks framework. In Proceedings of the 2016 IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Xi’an, China, 3–5 October 2016.

- Manogaran, M.; Louzazni, M. Analysis of artificial neural network: Architecture, types, and forecasting applications. J. Electr. Comput. Eng. 2022, 2022, 5416722.

- Rackauckas, C.; Ma, Y.; Martensen, J.; Warner, C.; Zubov, K.; Supekar, R.; Skinner, D.; Ramadhan, A.; Edelman, A. Universal differential equations for scientific machine learning. arXiv 2020, arXiv:2001.04385.

- Lagaris, I.E.; Likas, A.; Fotiadis, D.I. Artificial neural networks for solving ordinary and partial differential equations. IEEE Trans. Neural Netw. 1998, 9, 987–1000.

- Tsoulos, I.G.; Gavrilis, D.; Glavas, E. Solving differential equations with constructed neural networks. Neurocomputing 2009, 72, 2385–2391.

- Tsoulos, I.G.; Gavrilis, D.; Glavas, E. Neural network construction and training using grammatical evolution. Neurocomputing 2008, 72, 269–277.

- O’Neill, M.; Ryan, C. Grammatical evolution. In IEEE Transactions on Evolutionary Computation; IEEE: Piscataway, NJ, USA, 2001; Volume 5, pp. 349–358.

- Martelli, A.; Ravenscroft, A.M.; Holden, S.; McGuire, P. Python in a Nutshell; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2023.

- Hopkins, E. Machine learning tools, algorithms, and techniques. J. Self-Gov. Manag. Econ. 2022, 10, 43–55.

- Herzen, J.; Lässig, F.; Piazzetta, S.G.; Neuer, T.; Tafti, L.; Raille, G.; Van Pottelbergh, T.; Pasieka, M.; Skrodzki, A.; Huguenin, N.; et al. Darts: User-friendly modern machine learning for time series. J. Mach. Learn. Res. 2022, 23, 5442–5447.

- Nascimento, R.G.; Fricke, K.; Viana, F.A. A tutorial on solving ordinary differential equations using Python and hybrid physics-informed neural network. Eng. Appl. Artif. Intell. 2020, 96, 103996.

- Chen, F.; Sondak, D.; Protopapas, P.; Mattheakis, M.; Liu, S.; Agarwal, D.; Di Giovanni, M. Neurodiffeq: A python package for solving differential equations with neural networks. J. Open Source Softw. 2020, 5, 1931.

- Habiba, M.; Pearlmutter, B.A. Continuous Convolutional Neural Networks: Coupled Neural PDE and ODE. In 2021 International Conference on Electrical, Computer and Energy Technologies (ICECET); IEEE: Piscataway, NJ, USA, 2021.

- Afzali, F.; Kharazmi, E.; Feeny, B.F. Resonances of a forced van der Pol equation with parametric damping. Nonlinear Dyn. 2023, 111, 5269–5285.

- Kovacic, I.; Rand, R.; Sah, S.M. Mathieu’s equation and its generalizations: Overview of stability charts and their features. Appl. Mech. Rev. 2018, 70, 020802.

- Rehman, S.; Hussain, A.; Rahman, J.U.; Anjum, N.; Munir, T. Modified Laplace based variational iteration method for the mechanical vibrations and its applications. Acta Mech. Autom. 2022, 16, 98–102.

- Yang, X.-S. Introduction to Computational Mathematics; World Scientific Publishing Company: Singapore, 2014.

- El-Dib, Y.O.; Elgazery, N.S. Damped Mathieu equation with a modulation property of the homotopy perturbation method. Sound Vib. 2022, 56, 21–36.

- Luo, Z.; Bo, Y.; Sadaf, S.M.; Liu, X. Van der Pol oscillator based on NbO2 volatile memristor: A simulation analysis. J. Appl. Phys. 2022, 131, 054501.

- Gambella, C.; Ghaddar, B.; Naoum-Sawaya, J. Optimization problems for machine learning: A survey. Eur. J. Oper. Res. 2021, 290, 807–828.

- Janocha, K.; Czarnecki, W.M. On loss functions for deep neural networks in classification. arXiv 2017, arXiv:1702.05659.

- Wang, Q.; Ma, Y.; Zhao, K.; Tian, Y. A comprehensive survey of loss functions in machine learning. Ann. Data Sci. 2020, 9, 187–212.

- Qi, J.; Du, J.; Siniscalchi, S.M.; Ma, X.; Lee, C.-H. On mean absolute error for deep neural network based vector-to-vector regression. IEEE Signal Process. Lett. 2020, 27, 1485–1489.

- Liu, L.; Li, P.; Chu, M.; Zhai, Z. L2-Loss nonparallel bounded support vector machine for robust classification and its DCD-type solver. Appl. Soft Comput. 2022, 126, 109125.

- Ul Rahman, J.; Ali, A.; Ur Rehman, M.; Kazmi, R. A unit softmax with Laplacian smoothing stochastic gradient descent for deep convolutional neural networks. In Intelligent Technologies and Applications: Second International Conference, INTAP 2019, Bahawalpur, Pakistan, 6–8 November 2019, Revised Selected Papers 2; Springer: Singapore, 2020.

- Liu, X.; Zhou, J.; Qian, H. Short-term wind power forecasting by stacked recurrent neural networks with parametric sine activation function. Electr. Power Syst. Res. 2021, 192, 107011.

- Ul Rahman, J.; Makhdoom, F.; Lu, D. Amplifying Sine Unit: An Oscillatory Activation Function for Deep Neural Networks to Recover Nonlinear Oscillations Efficiently. arXiv 2023, arXiv:2304.09759.

- Dubey, S.R.; Singh, S.K.; Chaudhuri, B.B. Activation functions in deep learning: A comprehensive survey and benchmark. Neurocomputing 2022, 503, 92–108.

- Do, N.-T.; Pham, Q.-H. Vibration and dynamic control of piezoelectric functionally graded porous plates in the thermal environment using FEM and Shi’s TSDT. Case Stud. Therm. Eng. 2023, 47, 103105.

- Rashid, U.; Ullah, N.; Khalifa, H.A.E.-W.; Lu, D. Bioconvection modified nanoliquid flow in crown cavity contained with the impact of gyrotactic microorganism. Case Stud. Therm. Eng. 2023, 47, 103052.

- Naveed, A.; Rahman, J.U.; He, J.-H.; Alam, M.N.; Suleman, M. An efficient analytical approach for the periodicity of nano/microelectromechanical systems’ oscillators. Math. Probl. Eng. 2022, 2022, 9712199.

- Zhang, Y.; Lee, J.; Wainwright, M.; Jordan, M.I. On the learnability of fully-connected neural networks. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, PMLR, Fort Lauderdale, FL, USA, 20–22 April 2017.

- Yu, X.; Wang, Y.; Liang, J. A self-adaptive gradient descent search algorithm for fully-connected neural networks. Neurocomputing 2022, 478, 70–80.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- El Helou, M.; Süsstrunk, S. Blind universal Bayesian image denoising with Gaussian noise level learning. IEEE Trans. Image Process. 2020, 29, 4885–4897.

- Hindmarsh, A.C.; Petzold, L.R. Livermore Solver for Ordinary Differential Equations (LSODA) for Stiff or Non–Stiff System; Nuclear Energy Agency (NEA) of the Organisation for Economic Co-operation and Development (OECD): Paris, France, 2005.

- Rushka, M.; Freericks, J.K. A completely algebraic solution of the simple harmonic oscillator. Am. J. Phys. 2020, 88, 976–985.

| Parameters | Oscillator 1 | Oscillator 2 |
|---|---|---|
| Input units | 1 | 1 |
| Hidden units (per hidden layer) | (64, 64, 64) | (16, 16, 16) |
| Output units | 1 | 1 |
| Learning rate | 0.001 | 0.001 |
| Activation function | SinActv | SinActv |
| Number of epochs | 20,000 | 10,000 |
| Loss function | L2 | L2 |
| Optimizer | Adam | Adam |
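The hyperparameter table can also be read as a configuration object, which makes the size of each network explicit. In this sketch the dataclass `FCNNConfig` and its field names are illustrative; the parameter count simply adds one weight matrix and one bias vector for every fully connected layer, giving 593 trainable parameters for Oscillator 2 and 8,513 for Oscillator 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FCNNConfig:
    """Hyperparameters from the table (field names are illustrative)."""
    hidden: tuple
    epochs: int
    n_in: int = 1
    n_out: int = 1
    lr: float = 0.001
    activation: str = "SinActv"
    loss: str = "L2"
    optimizer: str = "Adam"

    def n_trainable(self):
        """Weights plus biases of a dense n_in -> hidden layers -> n_out network."""
        sizes = (self.n_in, *self.hidden, self.n_out)
        return sum(a * b + b for a, b in zip(sizes[:-1], sizes[1:]))


OSCILLATOR_1 = FCNNConfig(hidden=(64, 64, 64), epochs=20_000)
OSCILLATOR_2 = FCNNConfig(hidden=(16, 16, 16), epochs=10_000)

print(OSCILLATOR_1.n_trainable())  # 8513
print(OSCILLATOR_2.n_trainable())  # 593
```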

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rahman, J.U.; Danish, S.; Lu, D. Oscillator Simulation with Deep Neural Networks. Mathematics 2024, 12, 959. https://doi.org/10.3390/math12070959
