Research on Improved Differential Evolution Particle Swarm Hybrid Optimization Method and Its Application in Camera Calibration

Abstract

1. Introduction

2. Camera Imaging Model

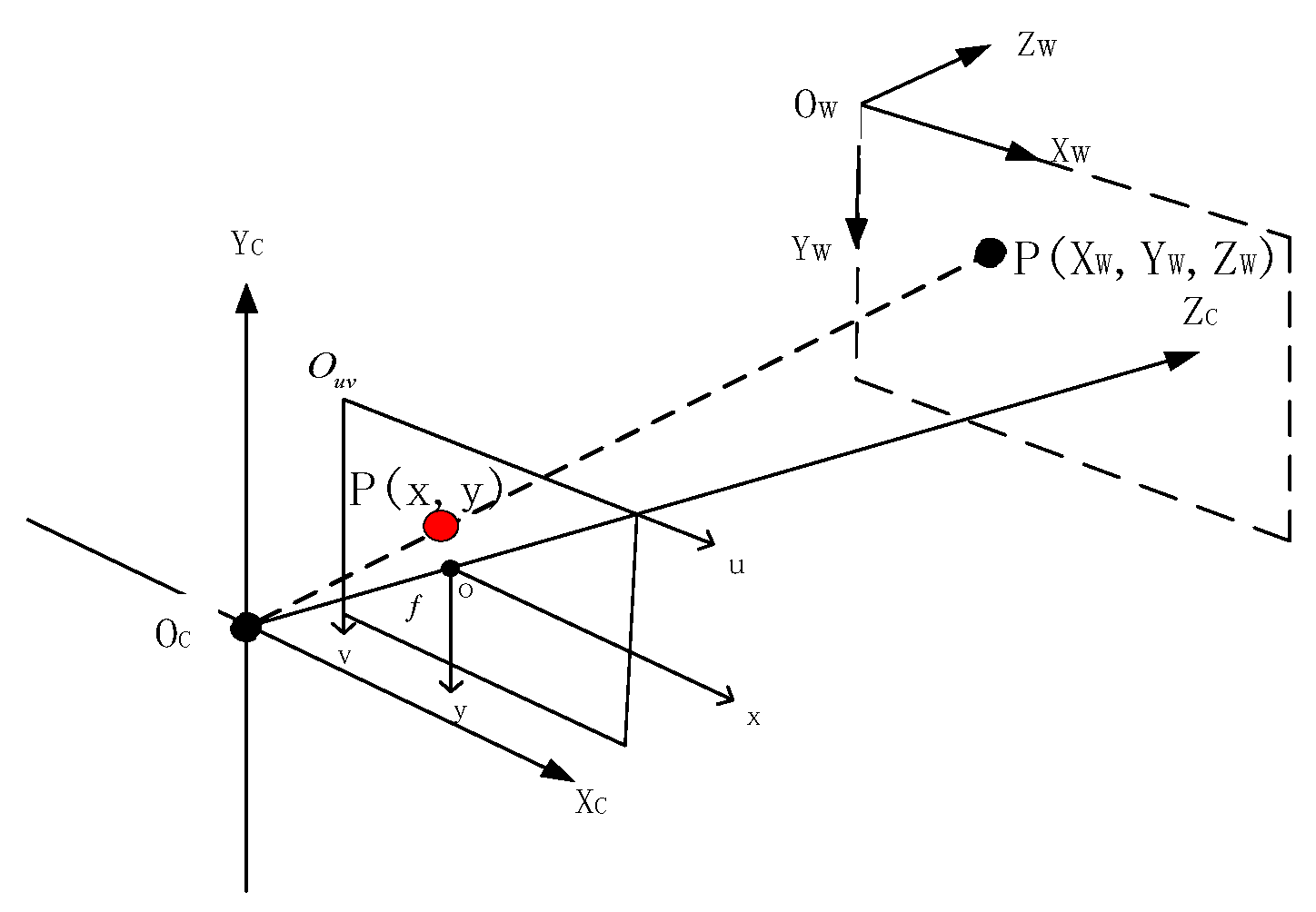

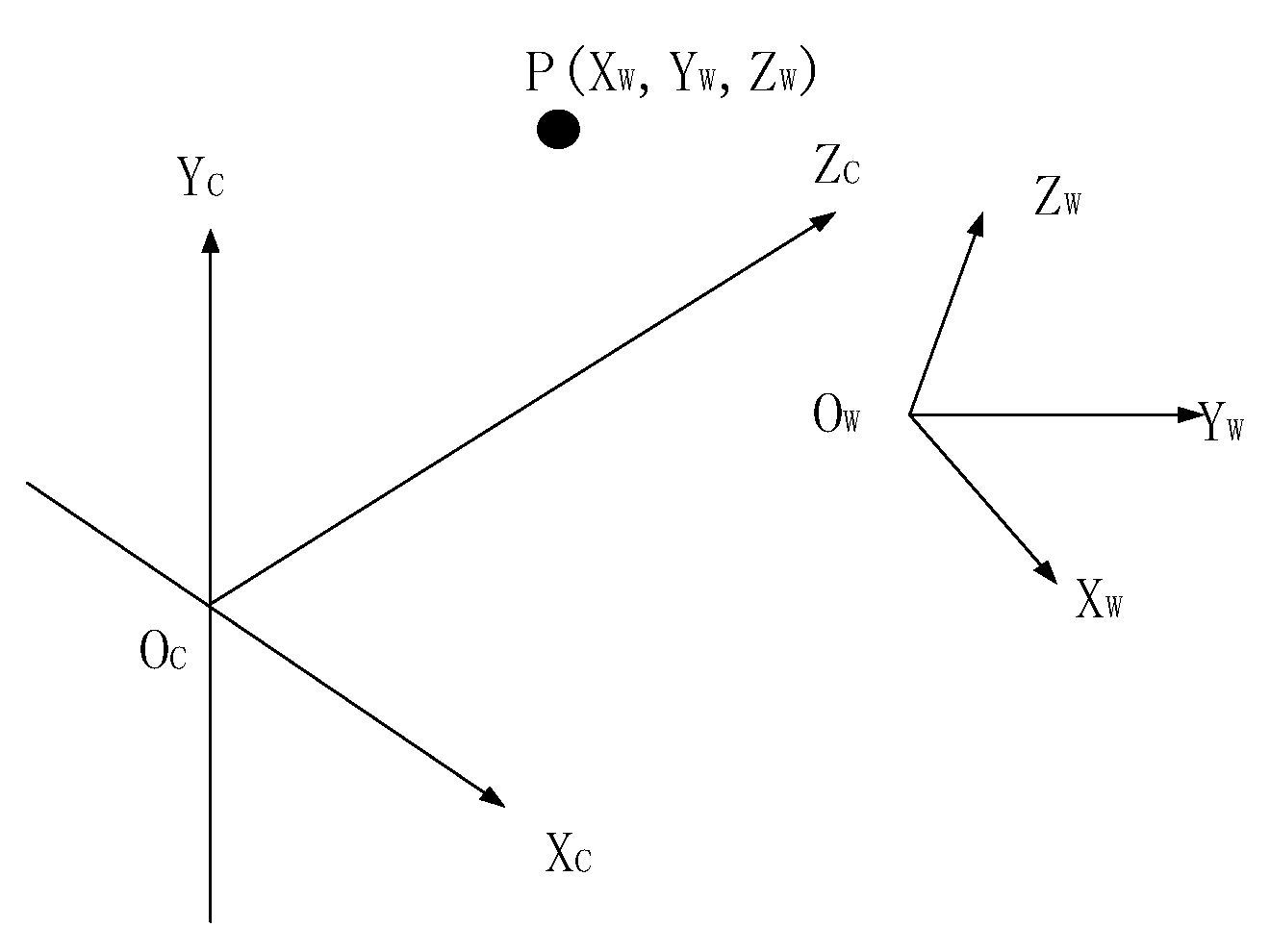

2.1. Coordinate System and Its Transformation Relation

- (1)

- Coordinate system

- The world coordinate system: , it describes the position of the measured object in the three-dimensional world. The origin coordinates can be determined according to the specific requirements, and the unit is in meters .

- Camera Coordinate System: , the original coordinate system is positioned at the optical center, with the -axis and -axis running parallel to the two edges of the image plane. The -axis coincides with the optical axis, and the unit of measurement is meters .

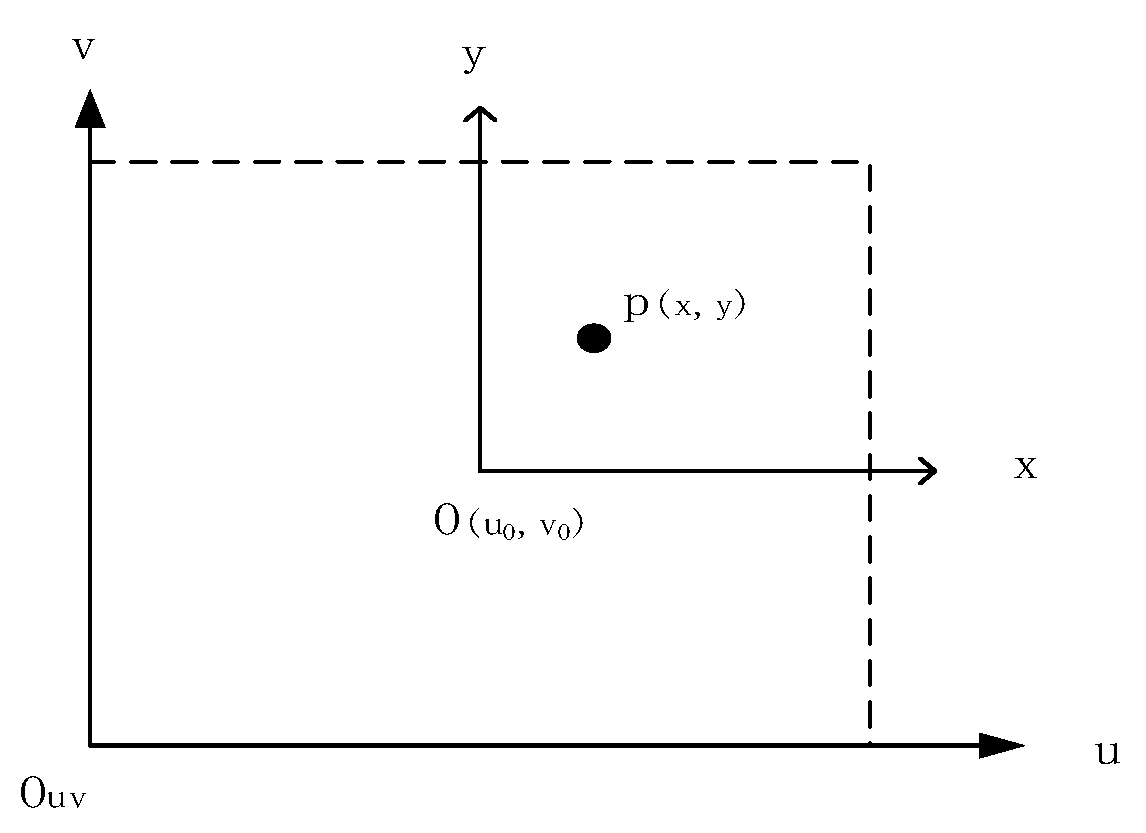

- Pixel Coordinate System: , the upper left corner is designated as the origin of the coordinate system for the image plane. The -axis of the pixel coordinate system extends horizontally from left to right, while the -axis extends vertically from top to bottom. The unit of measurement is pixels.

- The coordinate system for the image, referred to as the Image Coordinate System, is defined by the central point of the image plane. The -axis and -axis are aligned in parallel with the -axis and -axis of the pixel coordinate system, respectively. The unit of measurement employed is millimeters.

- (2)

- Coordinate system transformation relationship

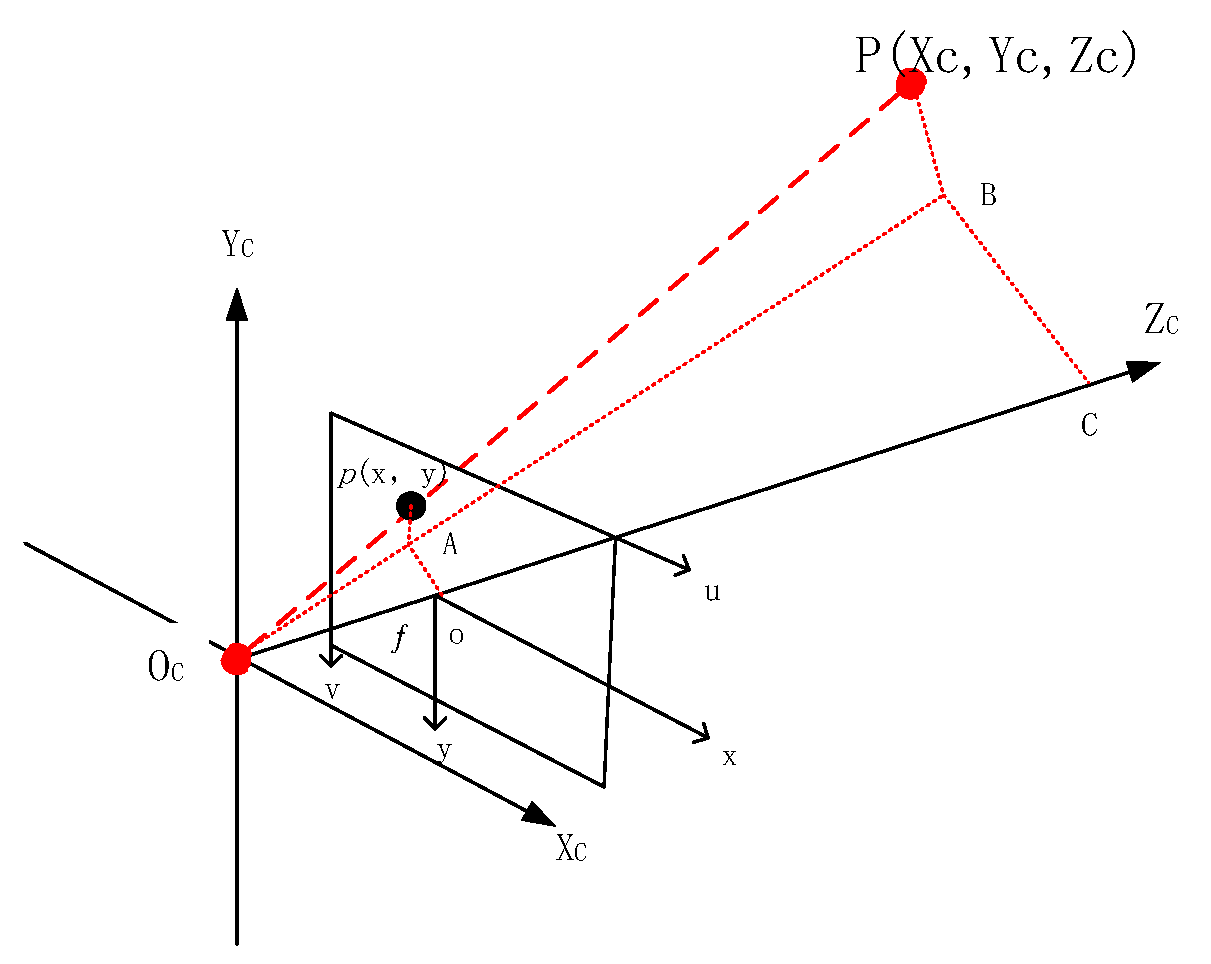
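The chain of transformations between the four coordinate systems can be illustrated with a minimal sketch of an ideal (distortion-free) pinhole projection. The function name `project_point` and the symbols `R`, `t`, `f`, `dx`, `dy`, `u0`, and `v0` are assumptions introduced for illustration, since the original symbols are not recoverable from the text above. The numeric values are made up and are not calibration results from this paper.

```python
import numpy as np

def project_point(p_world, R, t, f, dx, dy, u0, v0):
    """Map a 3-D world point (meters) to pixel coordinates with an ideal pinhole model.

    Assumed symbols: R, t = rotation and translation (world -> camera),
    f = focal length in mm, dx, dy = pixel pitch in mm/pixel,
    (u0, v0) = pixel coordinates of the image-plane center.
    """
    # World -> camera: rigid-body transformation.
    xc, yc, zc = R @ np.asarray(p_world, dtype=float) + t
    # Camera -> image plane: perspective division scaled by the focal length (mm).
    x_img = f * xc / zc
    y_img = f * yc / zc
    # Image plane -> pixels: convert mm to pixels and move the origin
    # from the image center to the upper-left corner.
    u = x_img / dx + u0
    v = y_img / dy + v0
    return np.array([u, v])

# Illustrative values only: camera frame aligned with the world frame.
R = np.eye(3)
t = np.zeros(3)
uv = project_point([0.1, -0.05, 2.0], R, t, f=4.0, dx=0.004, dy=0.004, u0=640.0, v0=360.0)
print(uv)
```

Keeping the three stages separate mirrors the list above: a rigid transform in meters, a perspective projection onto the image plane in millimeters, and a scale-and-shift into pixels.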

2.2. Camera Imaging Model

- (1) Linear camera model

- (2) Nonlinear distortion model

2.3. Distortion Correction

- (1)

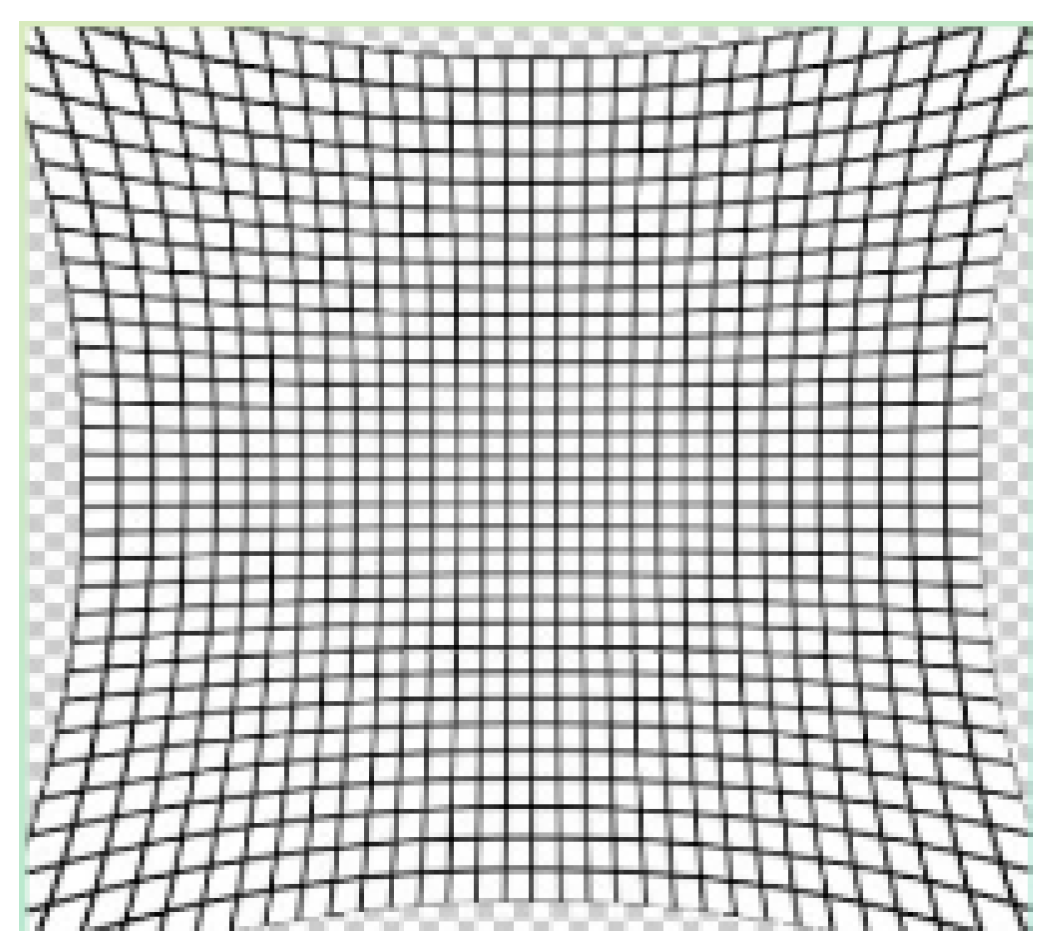

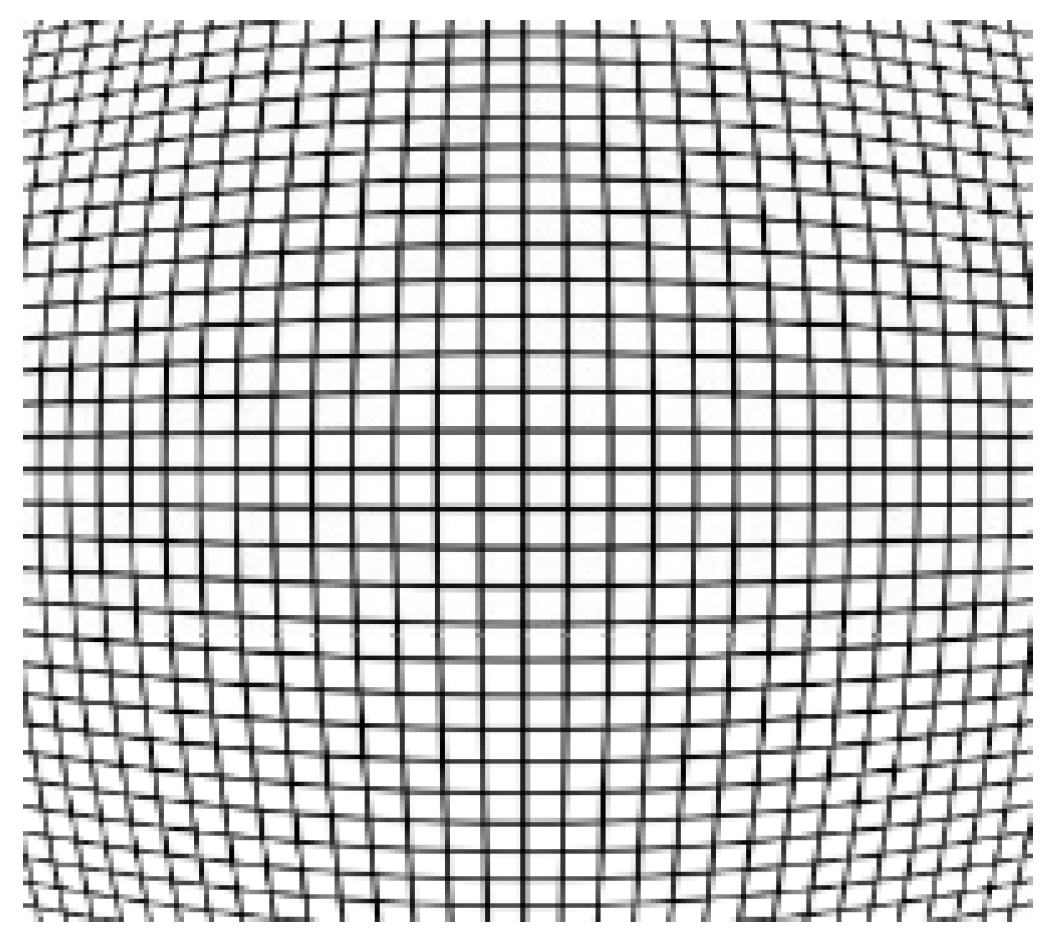

- Pincushion distortion: The magnification rate in the peripheral regions of the field of view is much larger than that near the optical axis center, commonly found in telephoto lenses [19].

- (2)

- Barrel distortion: In contrast to pincushion distortion, the magnification rate near the optical axis center is much larger than that in the peripheral regions [20].

- (3)

- Linear distortion: When the optical axis is not orthogonal to the vertical plane of objects being photographed, the convergence of the far side that should be parallel to the near side occurs at different angles, resulting in distortion. This distortion is essentially a form of perspective transformation, meaning that at certain angles, any lens will produce similar distortions [21].
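The difference between pincushion and barrel distortion can be sketched with the widely used polynomial radial model, in which a point at normalized radius r is scaled by 1 + k1·r² + k2·r⁴. The function `radial_distort` and the coefficient names `k1` and `k2` are assumptions for illustration and may not match the exact model used in this paper. Under this convention a negative `k1` pulls peripheral points inward (barrel), and a positive `k1` pushes them outward (pincushion).

```python
import numpy as np

def radial_distort(x, y, k1, k2=0.0):
    """Apply a two-term polynomial radial distortion to normalized image coordinates."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale

# A point toward the edge of the field of view (normalized coordinates, illustrative).
x, y = 0.5, 0.5
xb, yb = radial_distort(x, y, k1=-0.2)  # negative k1: barrel, point moves inward
xp, yp = radial_distort(x, y, k1=0.2)   # positive k1: pincushion, point moves outward

print(np.hypot(xb, yb), np.hypot(x, y), np.hypot(xp, yp))
```

Distortion correction inverts this mapping, which generally has no closed form and is usually solved iteratively or through a lookup table.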

3. Camera Calibration

3.1. Camera Calibration Algorithm

3.2. Binocular Stereo Calibration Method

4. Camera Parameter Optimization Algorithm

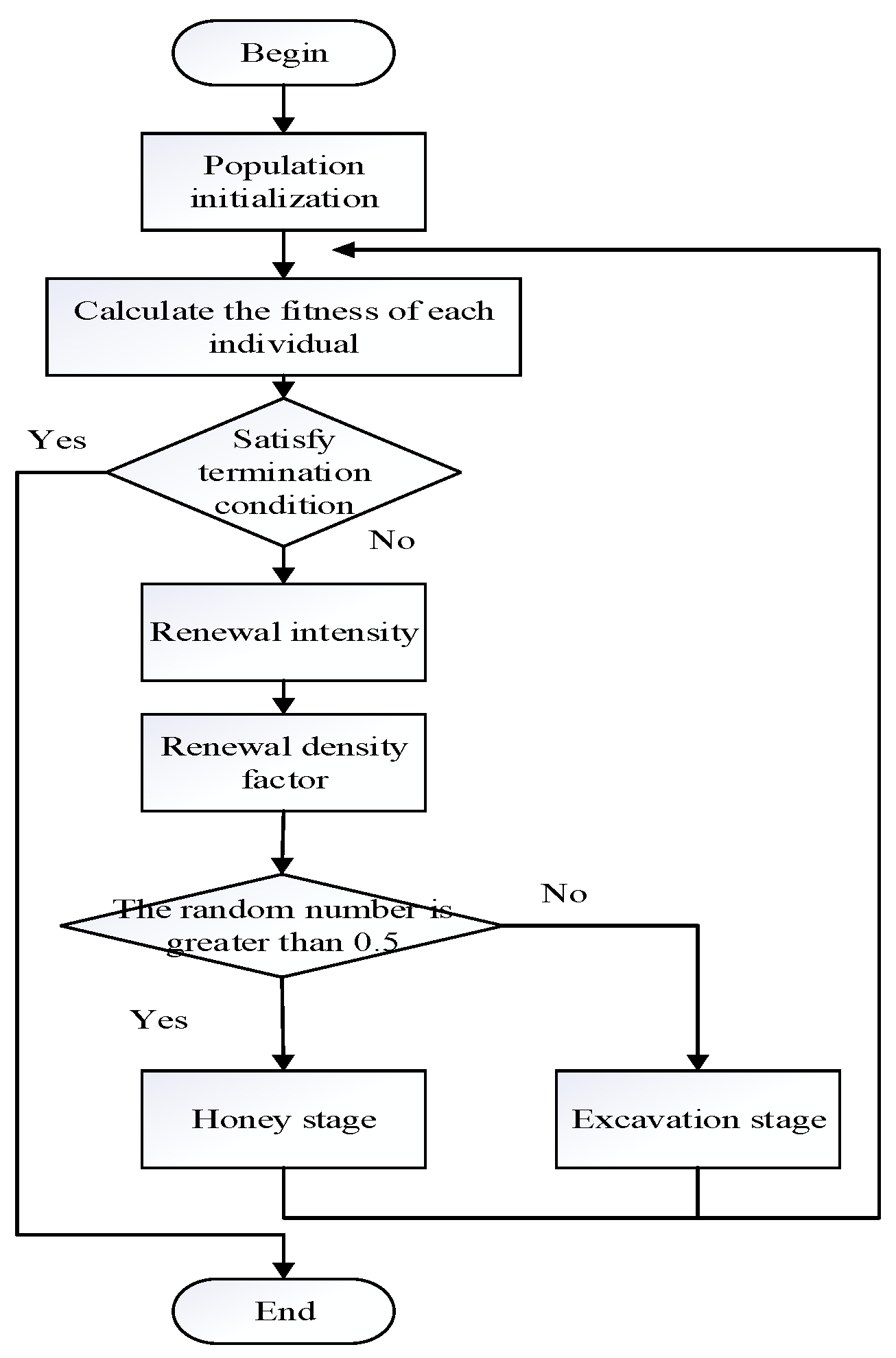

4.1. Honey Badger Algorithm

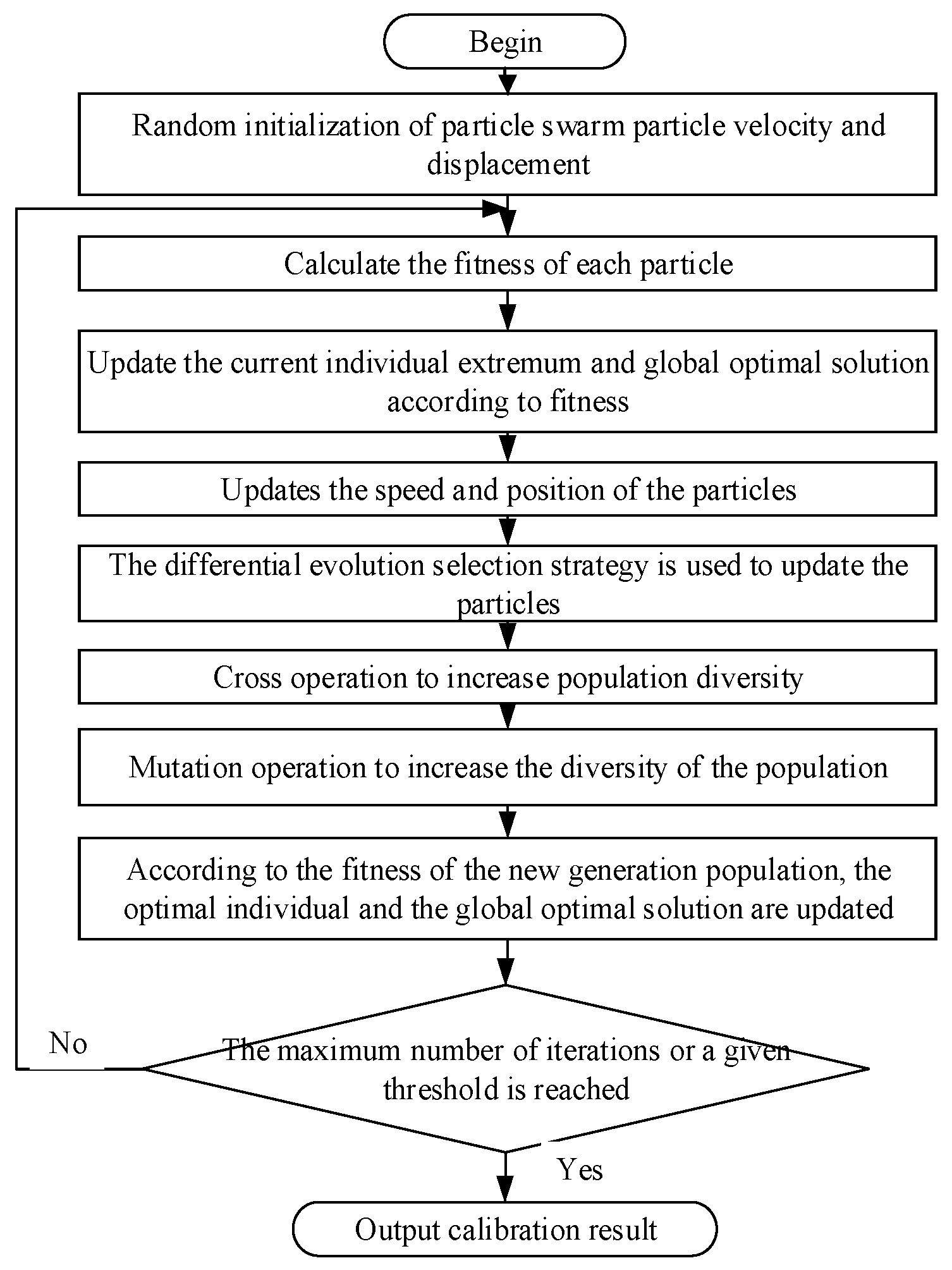

4.2. Improved Differential Evolution Particle Swarm Algorithm

4.2.1. Principle of Differential Evolution Algorithm

- (1) Initialization of the population

- (2) Mutation operation

- (3) Crossover operation

- (4) Selection operation
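The four steps listed above (initialization, mutation or "variation", crossover, and selection) can be sketched with the classic DE/rand/1/bin scheme. This is a minimal illustration of standard differential evolution, not the improved hybrid algorithm proposed in this paper. The function name, the control parameters `F` and `CR`, the population size, and the sphere test function are all assumptions chosen for the example.

```python
import numpy as np

def differential_evolution(objective, bounds, pop_size=20, F=0.5, CR=0.9,
                           generations=200, seed=0):
    """Minimize `objective` with the classic DE/rand/1/bin scheme (illustrative)."""
    rng = np.random.default_rng(seed)
    bounds = np.asarray(bounds, dtype=float)
    low, high = bounds[:, 0], bounds[:, 1]
    dim = len(bounds)

    # (1) Initialization: uniform random individuals inside the bounds.
    pop = low + rng.random((pop_size, dim)) * (high - low)
    fitness = np.array([objective(ind) for ind in pop])

    for _ in range(generations):
        new_pop, new_fit = pop.copy(), fitness.copy()
        for i in range(pop_size):
            # (2) Mutation: base vector plus a scaled difference of two others,
            # all three distinct from each other and from individual i.
            candidates = [j for j in range(pop_size) if j != i]
            r1, r2, r3 = rng.choice(candidates, size=3, replace=False)
            mutant = np.clip(pop[r1] + F * (pop[r2] - pop[r3]), low, high)

            # (3) Crossover: binomial mixing; one forced index guarantees
            # that the trial vector inherits at least one mutant component.
            mask = rng.random(dim) < CR
            mask[rng.integers(dim)] = True
            trial = np.where(mask, mutant, pop[i])

            # (4) Selection: greedy, the trial replaces the target if not worse.
            f_trial = objective(trial)
            if f_trial <= fitness[i]:
                new_pop[i], new_fit[i] = trial, f_trial
        pop, fitness = new_pop, new_fit

    best = int(np.argmin(fitness))
    return pop[best], fitness[best]

# Hypothetical test objective: the sphere function, minimized at the origin.
def sphere(x):
    return float(np.sum(x ** 2))

best_x, best_f = differential_evolution(sphere, [(-5.0, 5.0)] * 3)
print(best_x, best_f)
```

In a calibration setting the objective would instead be a reprojection-error measure over the camera parameters, with bounds placed around an initial estimate.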

4.2.2. Improved Differential Evolution Particle Swarm Hybrid Optimization Algorithm Design

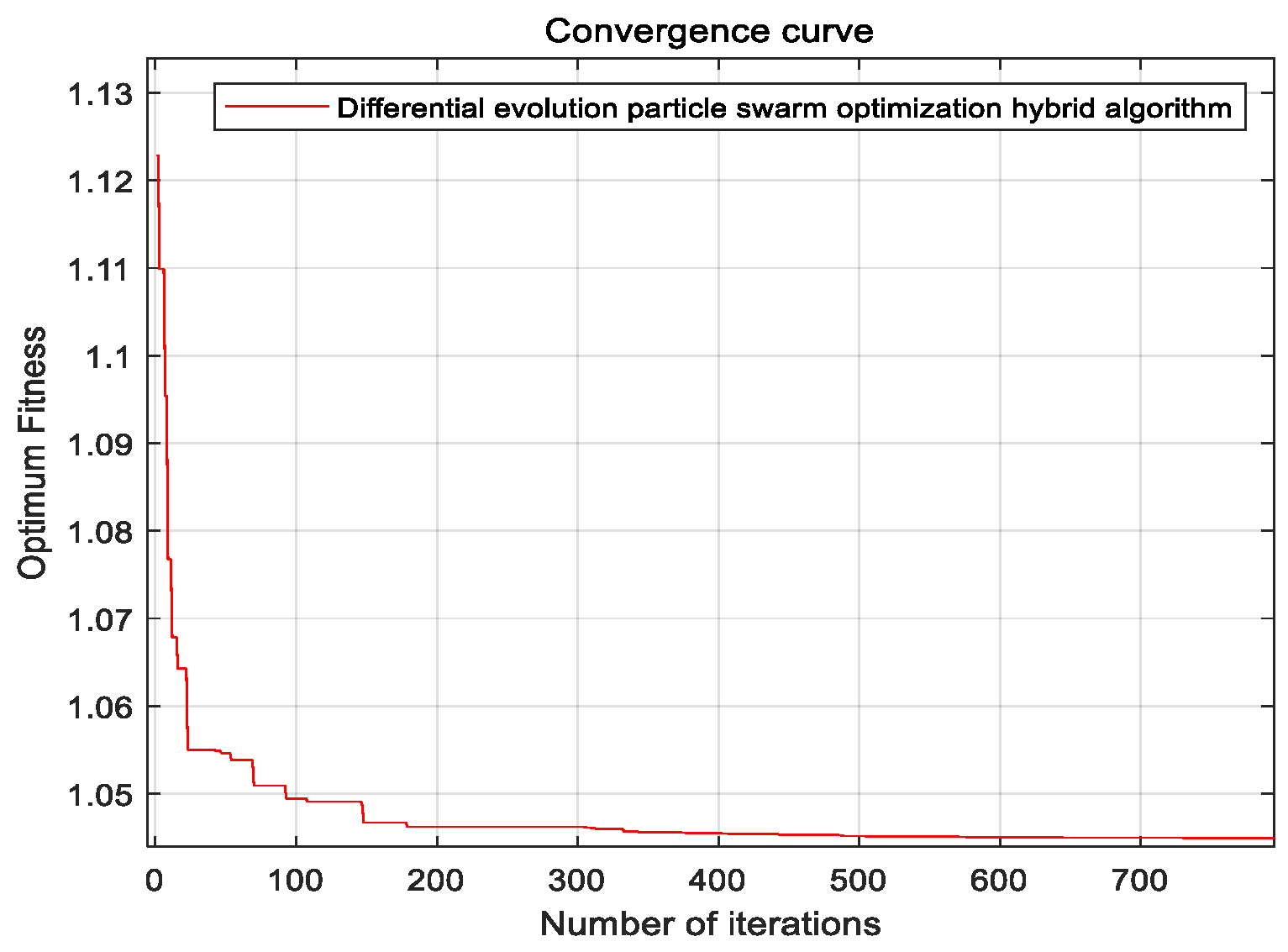

5. Experimental Comparison and Result Analysis

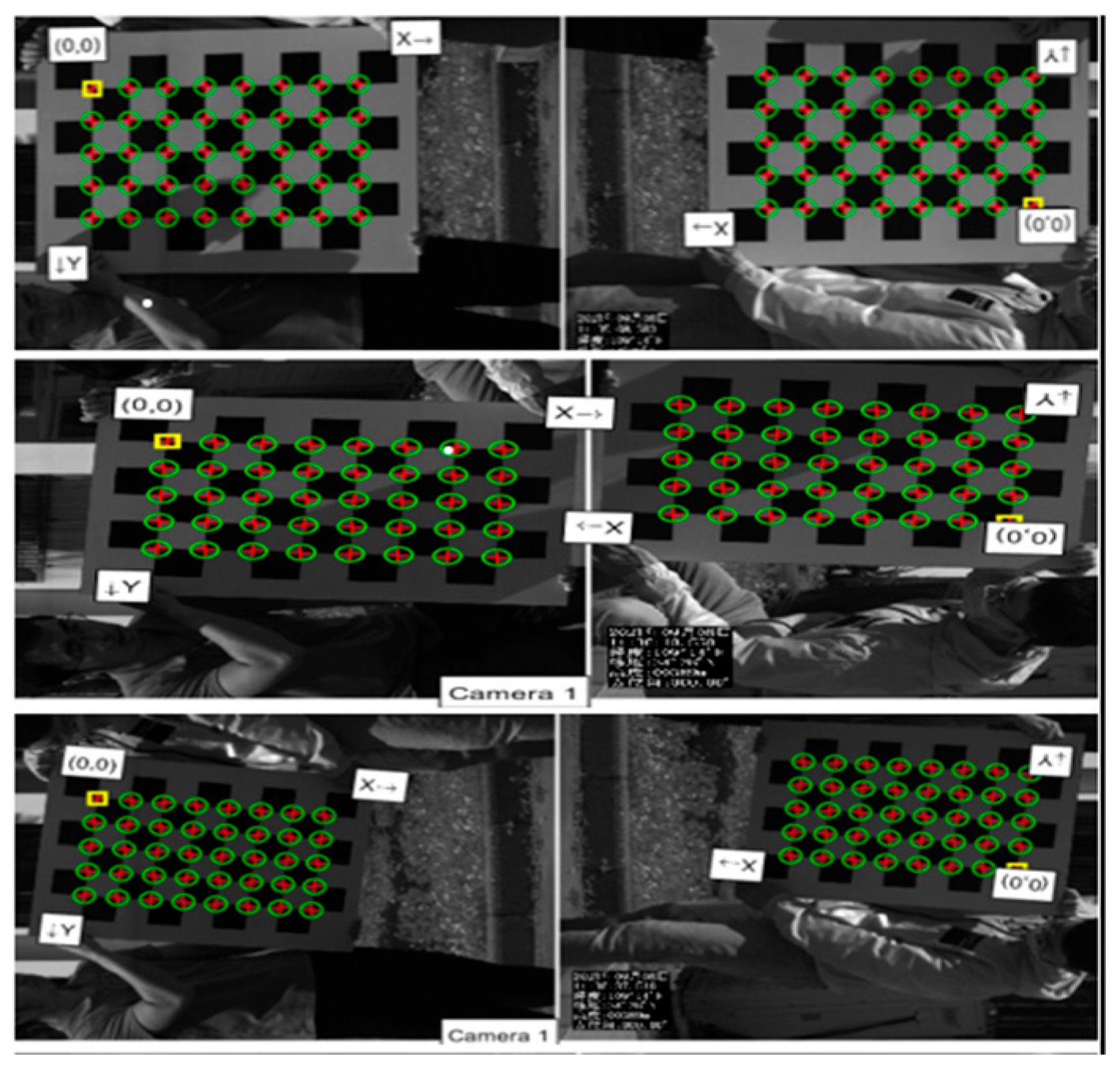

5.1. Procedure of Test

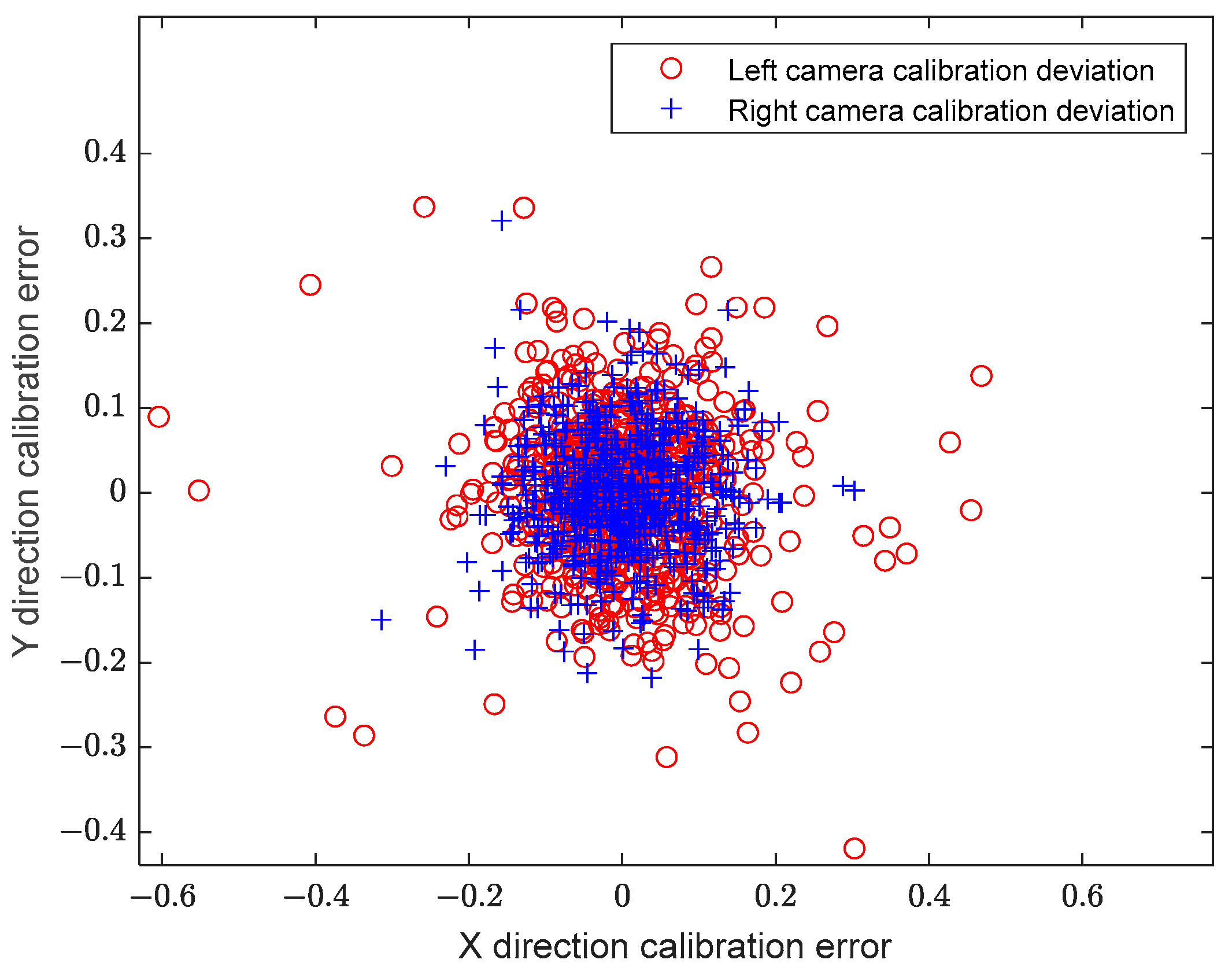

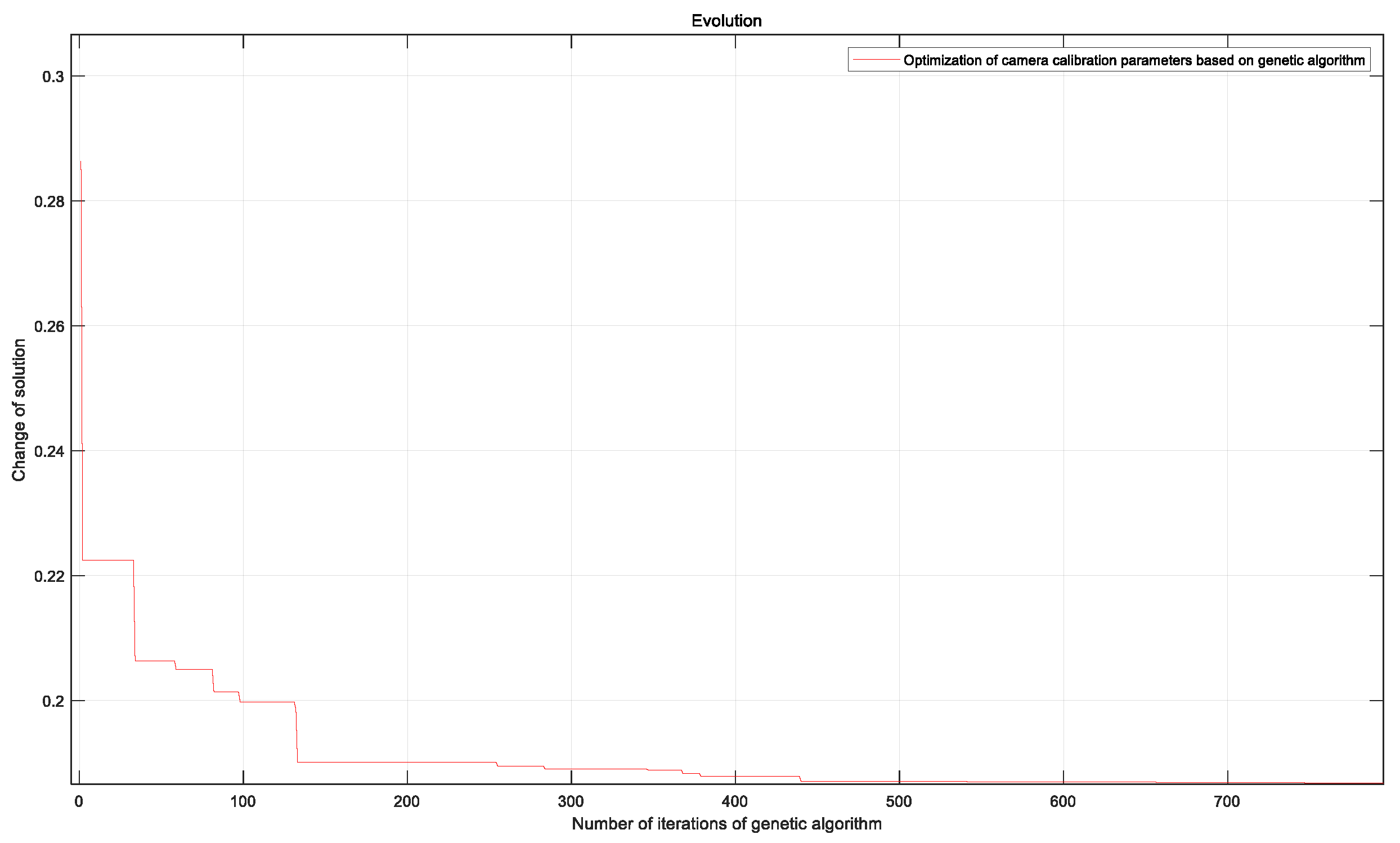

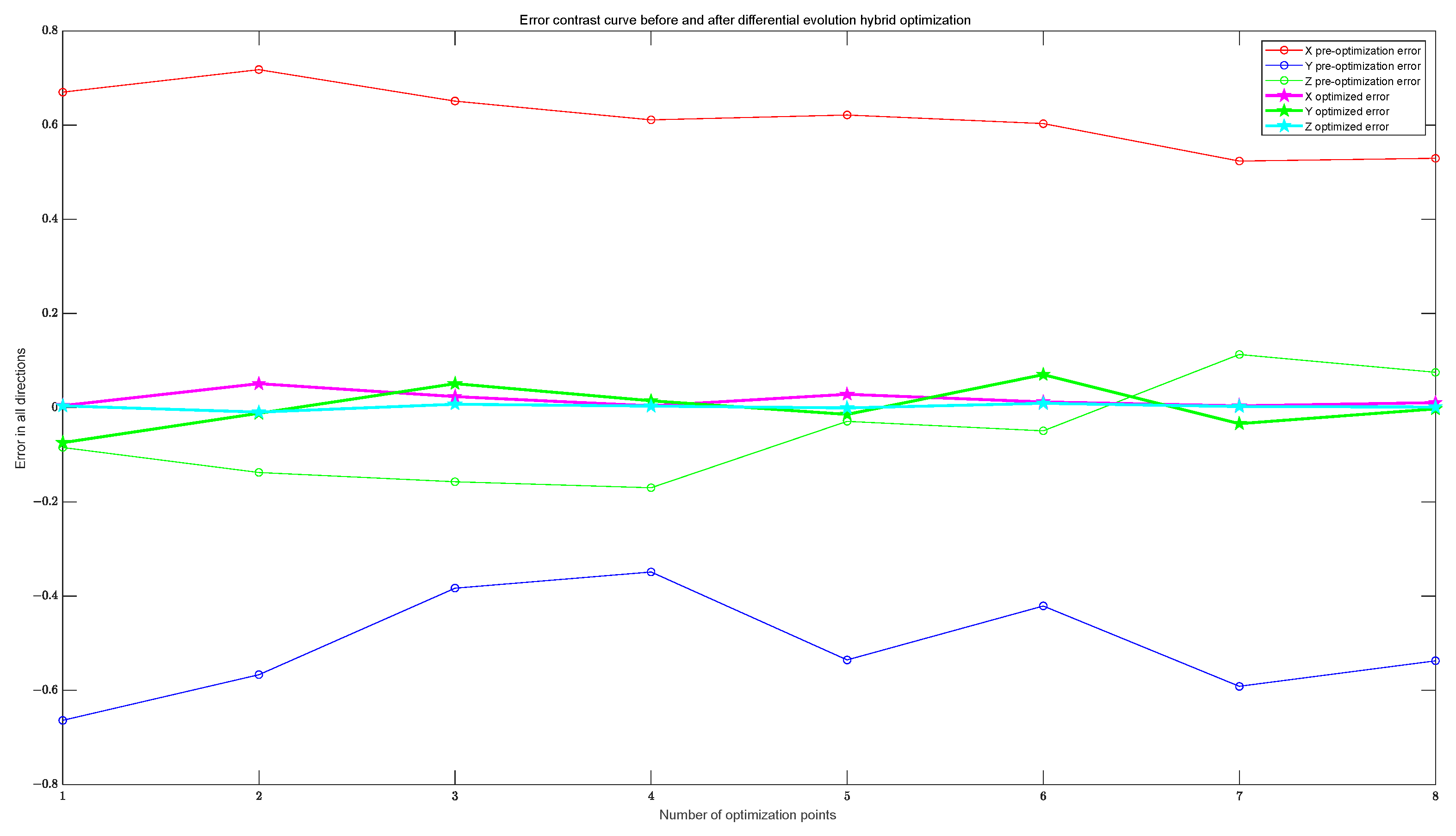

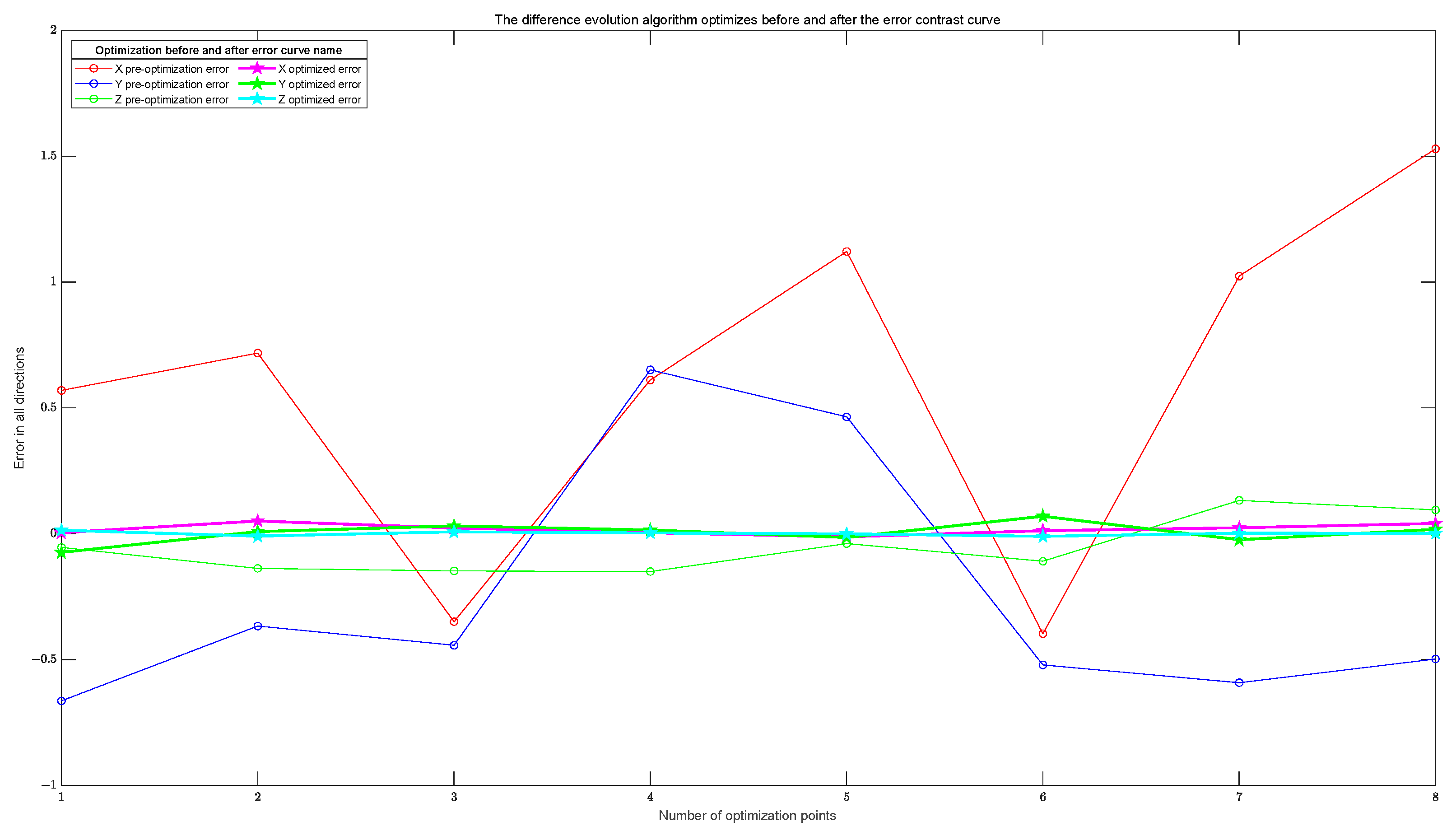

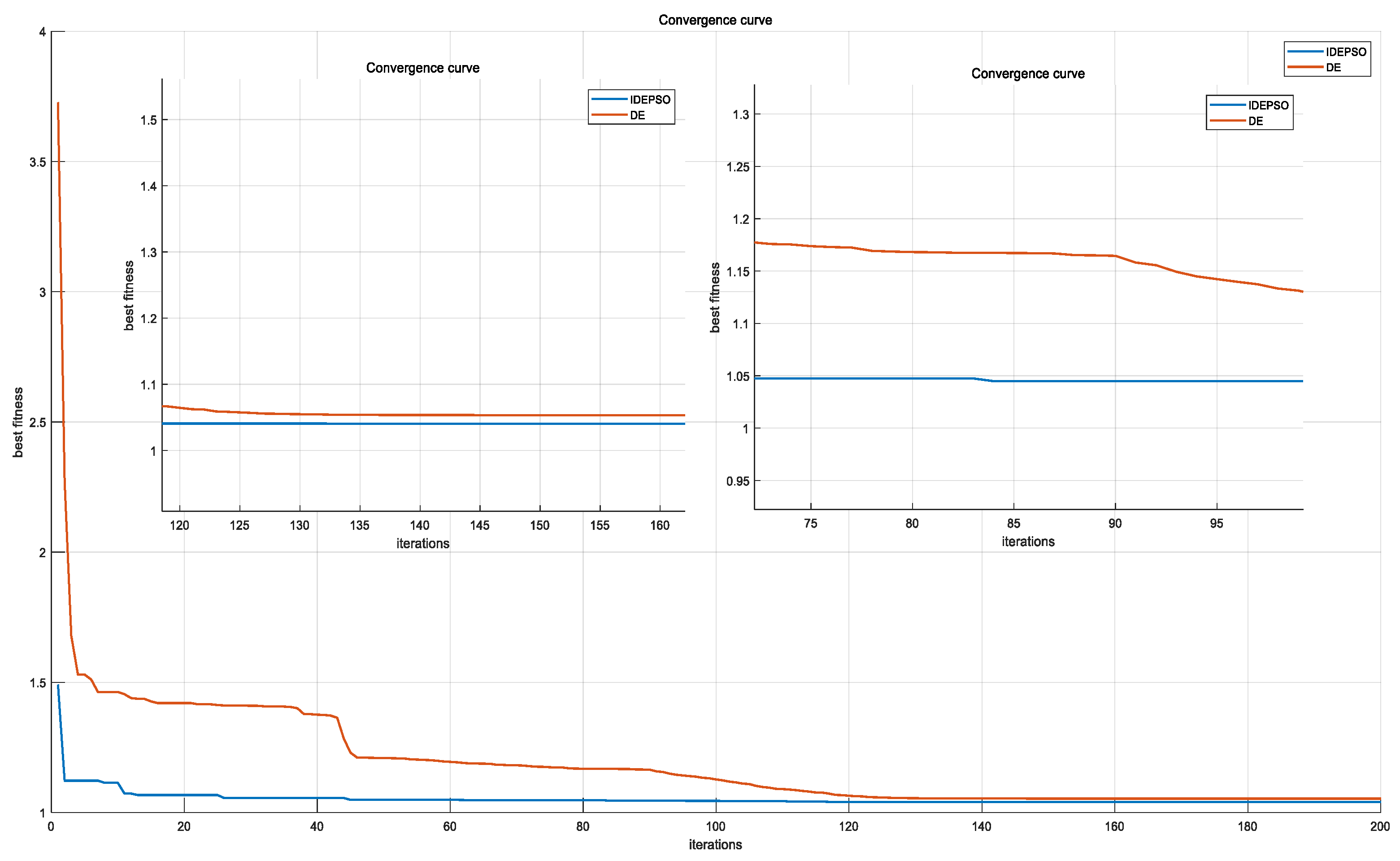

5.2. Results and Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Han, C.Z.; Zhu, H.Y. Multi-Source Information Fusion; Tsinghua University Press: Beijing, China, 2010; pp. 1–18.

- Ma, T.L.; Wang, X.M. Variational Bayesian STCKF for systems with uncertain model errors. Control Decis. 2016, 31, 2255–2260.

- Feng, X.X.; Chi, L.J.; Wang, Q. An improved generalized labeled multi-Bernoulli filter for maneuvering extended target tracking. Control Decis. 2019, 43, 2143–2149.

- Rui, Z.; Yu, F.; Bin, D.; Jiang, Z. Multi-UAV cooperative target tracking with bounded noise for connectivity preservation. Front. Inf. Technol. Electron. Eng. 2020, 21, 1413–1534.

- Sarkka, S. Recursive Noise Adaptive Kalman Filtering by Variational Bayesian Approximations. IEEE Trans. Autom. Control 2009, 54, 596–600.

- Xu, H.; Xie, W.C.; Yuan, H.D. Maneuvering Target Tracking Algorithm Based on the Adaptive Augmented State Interacting Multiple Model. J. Electron. Inf. Technol. 2020, 42, 2749–2757.

- Liang, Y.; Jia, Y.G.; Pan, Q. Parameter Identification in Switching Multiple Model Estimation and Adaptive Interacting Multiple Model Estimator. Control Theory Appl. 2001, 18, 653–656.

- Jiang, W.; Wang, Z. Calibration of visual model for space manipulator with a hybrid LM–GA algorithm. Mech. Syst. Signal Process. 2016, 66–67, 399–409.

- Liu, J.Y.; Wang, C.P.; Wang, W. SMC-CMeMBer filter based on pairwise Markov chains. Syst. Eng. Electron. 2019, 41, 1686–1691.

- Huang, D.; Leung, H. Maximum Likelihood State Estimation of Semi-Markovian Switching System in Non-Gaussian Measurement Noise. IEEE Trans. Aerosp. Electron. Syst. 2010, 46, 133–146.

- Wang, Z.; Shen, X.; Zhu, Y. Ellipsoidal Fusion Estimation for Multisensor Dynamic Systems with Bounded Noises. IEEE Trans. Autom. Control 2019, 64, 4725–4732.

- Sun, W.; Zhao, X.Y. Maneuvering target tracking with the extended set-membership filter and information geometry. J. Terahertz Sci. Electron. Inf. Technol. 2018, 16, 786–790.

- Mahler, R. General Bayes filtering of quantized measurements. In Proceedings of the 14th International Conference on Information Fusion, Chicago, IL, USA, 5–8 July 2011; pp. 1–6.

- Ristic, B. Bayesian Estimation with Imprecise Likelihoods: Random Set Approach. IEEE Signal Process. Lett. 2011, 18, 395–398.

- Hanebeck, U.D.; Horn, J. Fusing information simultaneously corrupted by uncertainties with known bounds and random noise with known distribution. Inf. Fusion 2000, 1, 55–63.

- Duan, Z.; Jilkov, V.P.; Li, X.R. State estimation with quantized measurements: Approximate MMSE approach. In Proceedings of the 11th International Conference on Information Fusion, Cologne, Germany, 30 June–3 July 2008; pp. 1–6.

- Miranda, E. A survey of the theory of coherent lower previsions. Int. J. Approx. Reason. 2008, 48, 628–658.

- Benavoli, A.; Zaffalon, M.; Miranda, E. Robust Filtering Through Coherent Lower Previsions. IEEE Trans. Autom. Control 2011, 56, 1567–1581.

- Peter, W. Statistical Reasoning with Imprecise Probabilities; Chapman and Hall: New York, NY, USA, 1991; pp. 1–35.

- Noack, B.; Klumpp, V.; Hanebeck, U.D. State estimation with sets of densities considering stochastic and systematic errors. In Proceedings of the 12th International Conference on Information Fusion, Seattle, WA, USA, 6–9 July 2009; pp. 1–6.

- Klumpp, V.; Noack, B.; Baum, M. Combined set-theoretic and stochastic estimation: A comparison of the SSI and the CS filter. In Proceedings of the 13th International Conference on Information Fusion, Edinburgh, UK, 26–29 July 2010; pp. 1–7.

- Gning, A.; Mihaylova, L.; Abdallah, F. Mixture of uniform probability density functions for nonlinear state estimation using interval analysis. In Proceedings of the 13th International Conference on Information Fusion, Edinburgh, UK, 26–29 July 2010; pp. 1–6.

- Henningsson, T. Recursive state estimation for linear systems with mixed stochastic and set-bounded disturbances. In Proceedings of the 47th IEEE Conference on Decision and Control, Cancun, Mexico, 9–11 December 2008; pp. 1–6.

- Jiang, T.; Qian, F.C.; Yang, H.Z. A New Combined Filtering Algorithm for Systems with Dual Uncertainties. Acta Autom. Sin. 2016, 42, 535–544.

- Matthew, J.B. Variational Algorithms for Approximate Bayesian Inference; University of Cambridge: London, UK, 1998; pp. 20–32.

| Camera Parameter Matrix | Distortion Parameter Matrix |
|---|---|

| Label | Average Error (Pixel) | Label | Average Error (Pixel) | Label | Average Error (Pixel) |
|---|---|---|---|---|---|
| 1 | 0.172649 | 6 | 0.138521 | 11 | 0.189421 |
| 2 | 0.157981 | 7 | 0.137715 | 12 | 0.212029 |
| 3 | 0.081947 | 8 | 0.141941 | 13 | 0.118467 |
| 4 | 0.105237 | 9 | 0.166069 | 14 | 0.193648 |
| 5 | 0.160528 | 10 | 0.233748 | 15 | 0.189421 |

| Rotation Matrix | Translation Vector |
|---|---|

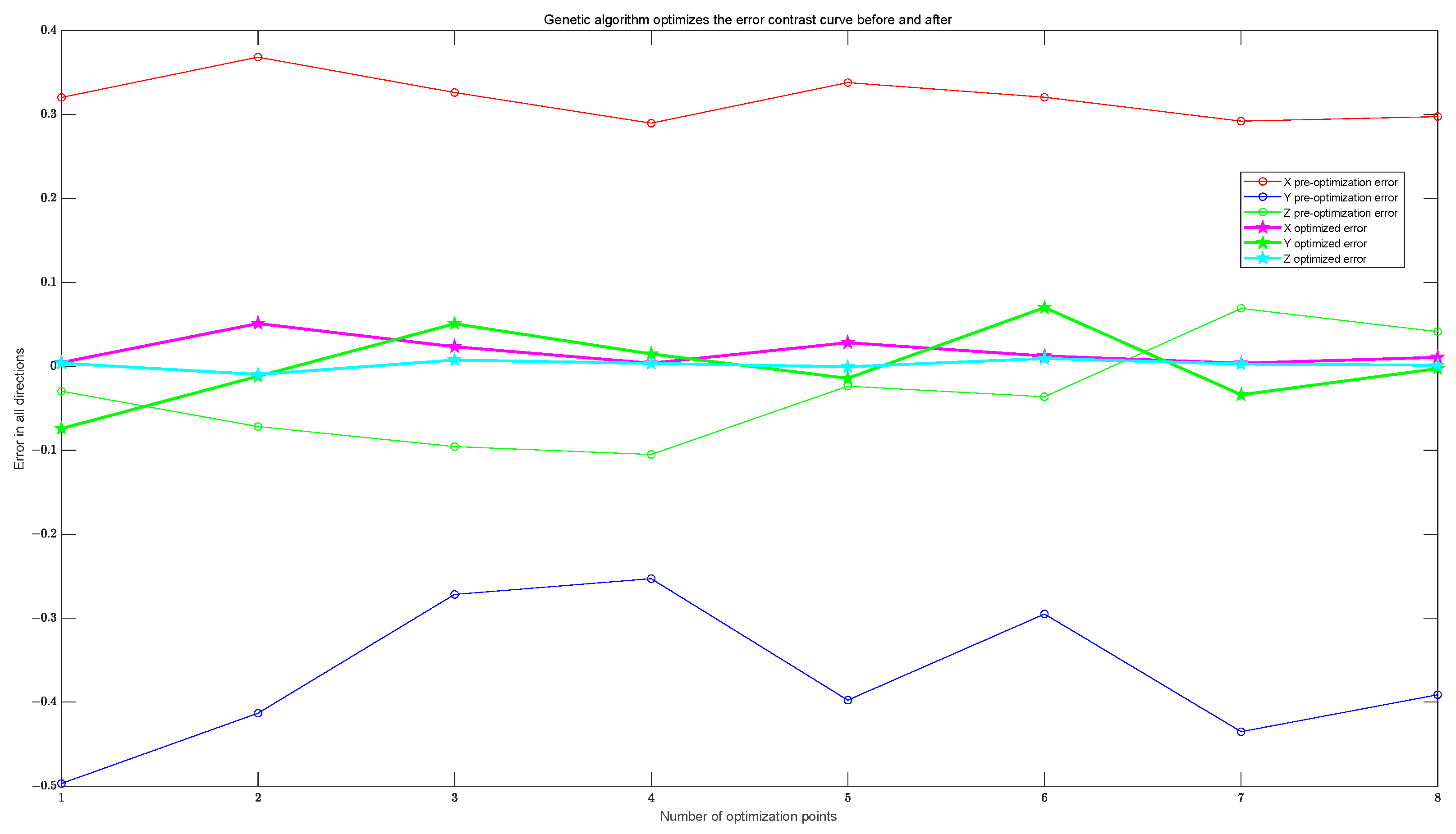

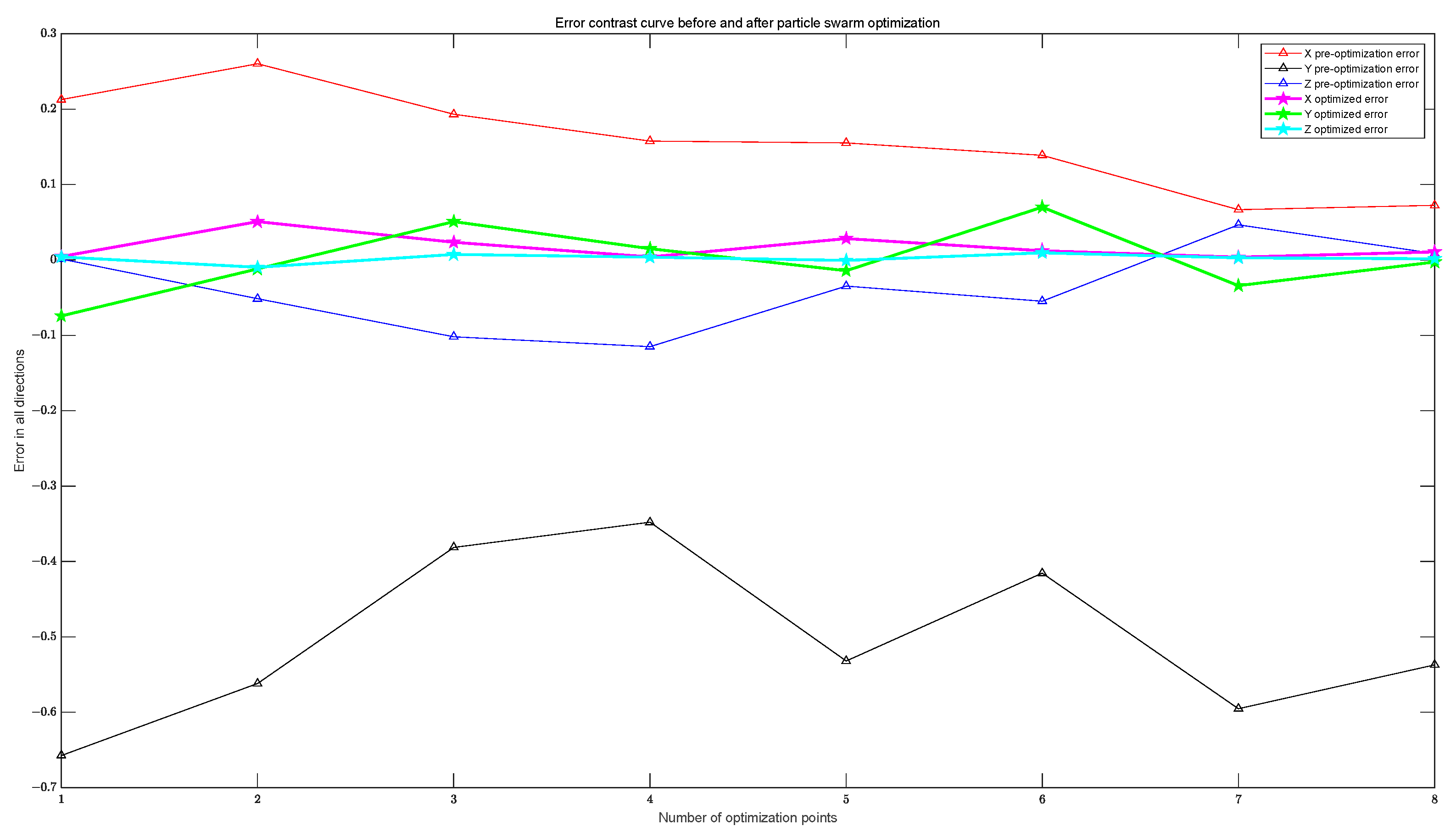

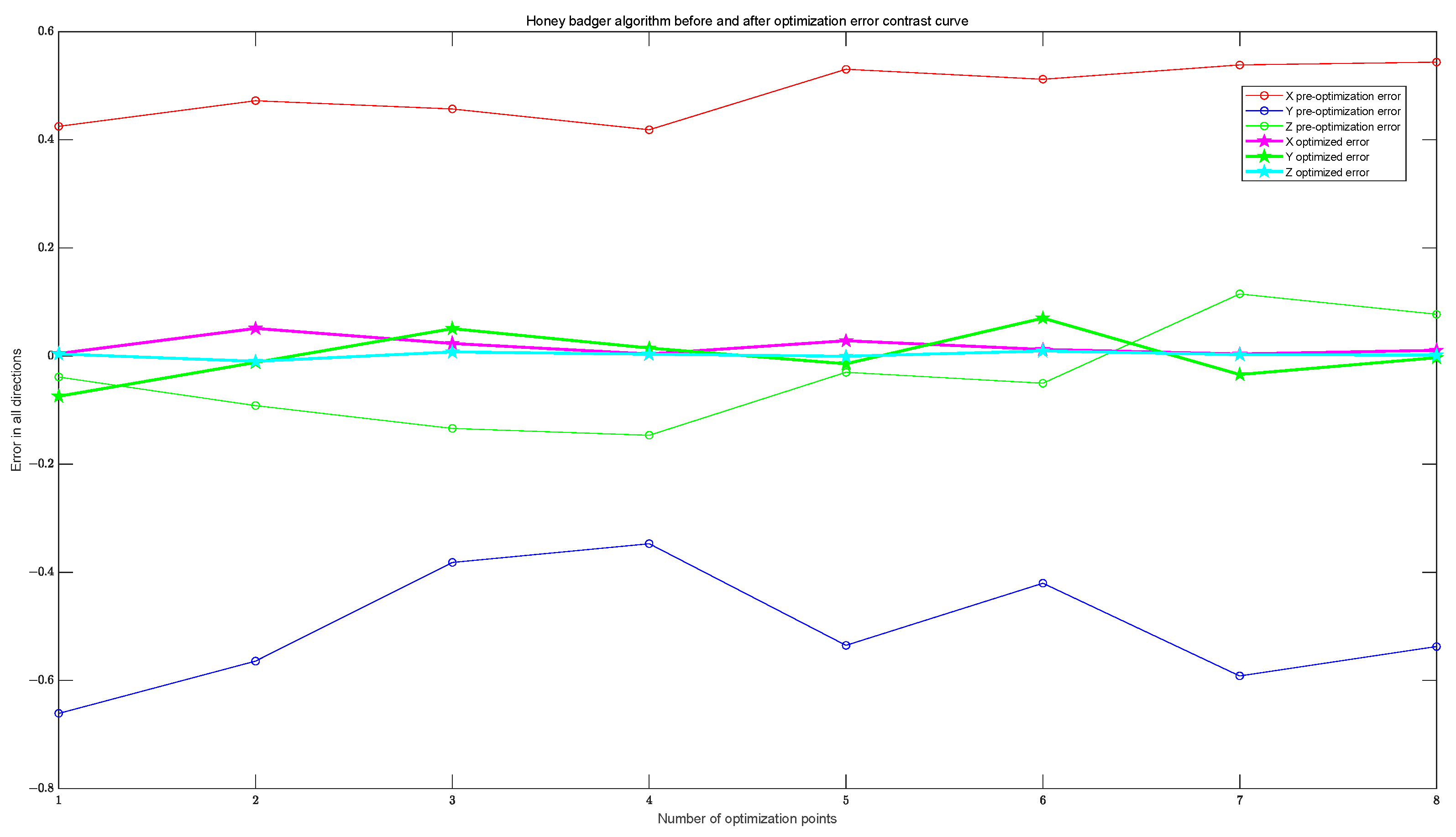

| Argument | GA | PSO | HBA | IDEPSO | DE |
|---|---|---|---|---|---|
| | 1156.7861 | 1154.4027 | 1156.4027 | 1157.8783 | 1157.4027 |
| | 1154.2004 | 1155.0684 | 1155.0685 | 1153.6961 | 1152.0685 |
| | 662.7546 | 660.9385 | 660.9385 | 663.02028 | 662.9385 |
| | 387.9115 | 389.3181 | 389.1970 | 387.9177 | 387.8497 |
| | −0.2451 | −0.26817 | −0.26614 | −0.245762 | −0.245485 |
| | −0.044766 | −0.06452 | −0.06452 | −0.044699 | −0.044525 |
| | −0.00049006 | −0.000713 | −0.000313 | −0.0004727 | −0.0005139 |
| | 5.6293 × 10⁻⁵ | 4.1408 × 10⁻⁵ | 8.1408 × 10⁻⁵ | 4.5905 × 10⁻⁵ | 6.1408 × 10⁻⁵ |
| | 0.045762 | 0.041302 | 0.045302 | 0.045686 | 0.0456797 |

| RMSE | X | Y | Z |
|---|---|---|---|
| GA | 0.6189 | 0.5162 | 0.1124 |
| PSO | 0.4895 | 0.5137 | 0.0947 |
| HBA | 0.3201 | 0.5150 | 0.0657 |
| IDEPSO | 0.1690 | 0.3780 | 0.0638 |
| DE | 0.8758 | 0.5138 | 0.1151 |
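For reference, a per-axis RMSE of the kind reported in the table above can be computed as in the following sketch. The helper name `rmse_per_axis` and the sample points are hypothetical. They illustrate the formula only and are not data from this paper.

```python
import numpy as np

def rmse_per_axis(reconstructed, reference):
    """Root-mean-square error along each coordinate axis (X, Y, Z)."""
    diff = np.asarray(reconstructed, dtype=float) - np.asarray(reference, dtype=float)
    # Square the errors, average over all points, then take the root per axis.
    return np.sqrt(np.mean(diff ** 2, axis=0))

# Made-up reference points and reconstructions with an error only along X.
reference = np.array([[0.0, 0.0, 1.0],
                      [1.0, 0.0, 1.0],
                      [0.0, 1.0, 1.0]])
offsets = np.array([[0.3, 0.0, 0.0],
                    [-0.3, 0.0, 0.0],
                    [0.3, 0.0, 0.0]])
rmse = rmse_per_axis(reference + offsets, reference)
print(rmse)
```

Because the errors are squared before averaging, positive and negative deviations do not cancel, and a few large errors weigh more heavily than many small ones.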

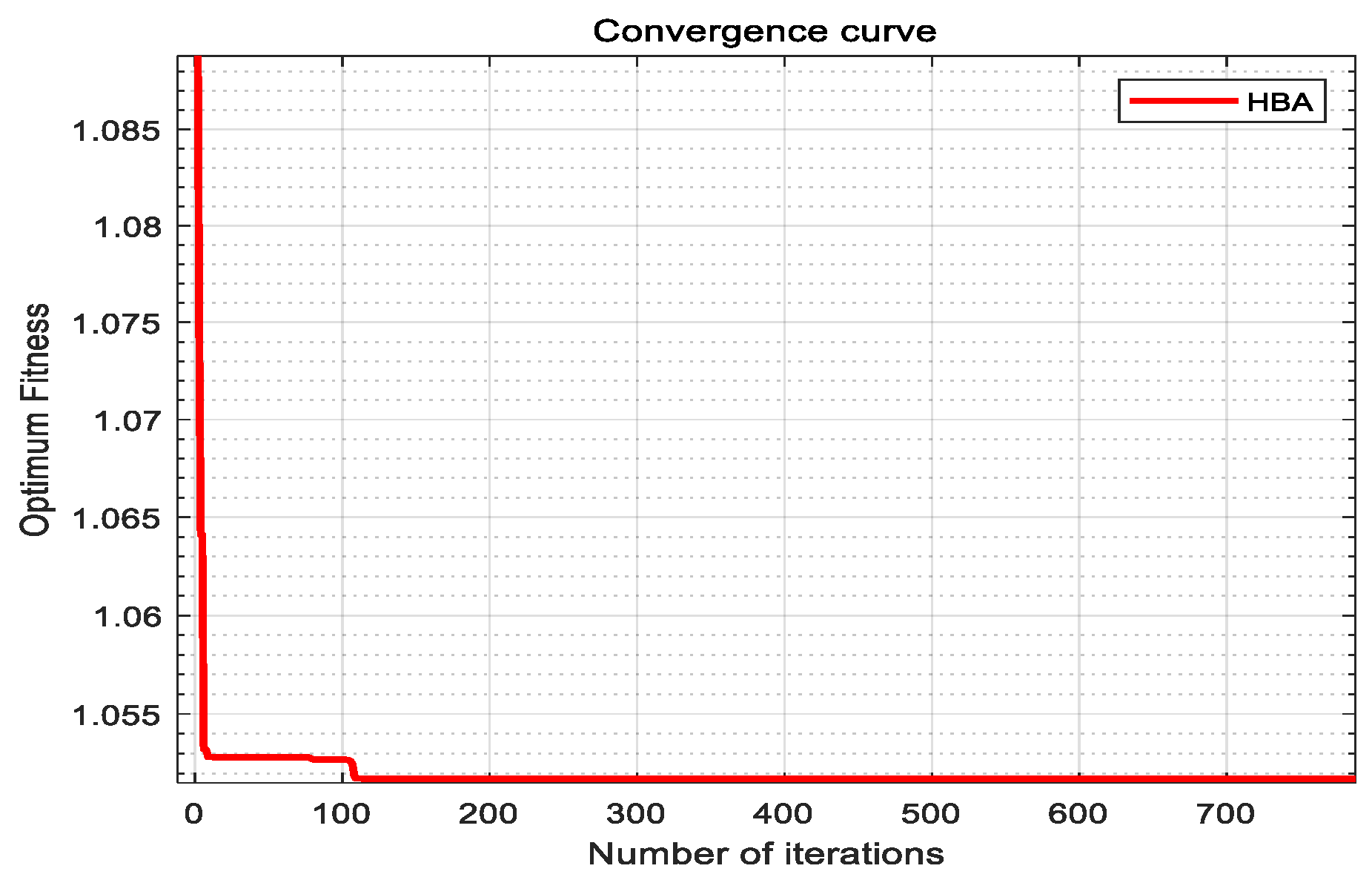

| | DE | IDEPSO |
|---|---|---|
| Fitness value | 1.04488 | 1.04068 |
| Number of iterations when stabilizing | 128 | 84 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sha, X.; Qian, F.; He, H. Research on Improved Differential Evolution Particle Swarm Hybrid Optimization Method and Its Application in Camera Calibration. Mathematics 2024, 12, 870. https://doi.org/10.3390/math12060870
