Abstract

Sentiment analysis has increasingly gained significance in commercial settings, driven by the rising impact of reviews on purchase decision-making in recent years. This research conducts a thorough examination of the suitability of machine learning and deep learning approaches for sentiment analysis, using Romanian reviews as a case study, with the aim of gaining insights into their practical utility. A comprehensive, multi-level analysis is performed, covering the document, sentence, and aspect levels. The main contributions of the paper refer to the in-depth exploration of multiple sentiment analysis models at three different textual levels and the subsequent improvements brought with respect to these standard models. Additionally, a balanced dataset of Romanian reviews from twelve product categories is introduced. The results indicate that, at the document level, supervised deep learning techniques yield the best outcomes (specifically, a convolutional neural network model that obtains an AUC value of 0.93 for binary classification and a weighted average F1-score of 0.77 in a multi-class setting with 5 target classes), albeit with increased resource consumption. Favorable results are achieved at the sentence level, as well, despite the heightened complexity of sentiment identification. In this case, the best-performing model is logistic regression, for which a weighted average F1-score of 0.77 is obtained in a multi-class polarity classification task with three classes. Finally, at the aspect level, promising outcomes are observed in both aspect term extraction and aspect category detection tasks, in the form of coherent and easily interpretable word clusters, encouraging further exploration in the context of aspect-based sentiment analysis for the Romanian language.

Keywords:

sentiment analysis; latent semantic indexing; machine learning; deep learning; CNN; dense embedding layer; aspect term extraction; aspect category detection; Romanian language

MSC:

68T50

1. Introduction

The increased prevalence of digital communication in recent years has amplified the importance of automatically extracting and assessing sentiment in textual data. Organizations and researchers are exploring models with this capability, which allow them to gain insights into customer preferences and pinpoint emerging trends. An especially relevant application domain for sentiment analysis (SA) research revolves around the examination of consumer product reviews, which have evolved into an integral component of the purchasing process. Given that reviews inherently consist of opinions and evaluations of products and often employ subjective language, there is significant potential for sentiment identification at multiple textual levels. This includes the assessment of overall product evaluations (document-level SA), finer-grained analysis that aims to capture shifts in sentiment within a document (sentence-level SA), and the exploration of targeted sentiment, which involves identifying pairs of product features and the specific sentiments expressed in relation to these features (aspect-level SA).

This work presents an extensive examination of SA approaches for texts in Romanian, proposing an in-depth analysis at the document, sentence, and aspect levels, with the objective of filling a gap in the existing literature, which lacks multi-level investigation of datasets that hold commercial value for the Romanian language. Thus, the primary goal of this study is to assess the appropriateness of current machine learning and deep learning models for sentiment analysis in the context of the Romanian language, in order to acquire a comprehensive understanding of their viability for practical implementation in various business scenarios.

The original contributions of our study are as follows: (1) an in-depth exploration of SA models’ performance at multiple textual levels for Romanian-language documents; (2) the introduction of a balanced dataset of Romanian reviews (structured in twelve different product categories), with five automatically assigned labels; and (3) improvements that we bring with respect to the standard models.

Below, we summarize the research questions we aim to answer within this paper.

- RQ1: Is latent semantic indexing (LSI) in conjunction with conventional machine learning classifiers suitable for sentiment analysis of documents written in Romanian?

- RQ2: Can deep-learned embedding-based approaches improve the performance of document- and/or sentence-level sentiment analysis, as opposed to classical natural language processing (NLP) embedding-based deep learning approaches?

- RQ3: What is the relevance of different textual representations in the task of sentence polarity classification, and what impact do additional preprocessing steps have in this task?

- RQ4: In terms of aspect extraction, is it feasible for a clustering methodology relying on learned word embeddings to delineate groups of words capable of serving as aspect categories identified within a given corpus of documents?

- RQ5: How can the aspect categories discussed within a document be identified, if an aspect category is given through a set of words?

The rest of this paper is structured as follows. Section 2 includes a succinct description of the tasks addressed in this paper. A literature review on sentiment analysis models for the Romanian language and other related aspects is provided in Section 3. Section 4 is dedicated to the description of the methodology employed, while Section 5 presents the results we obtained. Additionally, we include a comparison of our approach with existing works in the literature and an analysis of the obtained results in Section 6. The last section, Section 7, contains conclusions and directions for future work.

2. Sentiment Analysis

Sentiment analysis is the area of research concerned with the computational study of people’s opinions, sentiments, emotions, moods, and attitudes [1], and it involves a number of different tasks and perspectives. In this section, we include descriptions of the specific tasks from the sentiment analysis domain we have addressed in the present study.

2.1. Document-Level Sentiment Analysis (DLSA)

At the document level, sentiment analysis systems are concerned with identifying the overall sentiment from a given text. The assumption this task is based on is that a single opinion is expressed in the entire document. The advantage of simplicity in the definition of the problem has encouraged a substantial amount of work, especially in the early stages of exploration within the field.

In a machine learning and deep learning context, the DLSA task can be viewed as a classic text classification problem, in which the classes are represented by the sentiments/polarities [1]. The task can be formalized as a binary classification problem, in which the two classes are represented by the positive and negative polarities. There are various multi-class formulations of the sentiment analysis task in the literature. Most commonly, a third neutral class is considered besides the positive and negative ones to define a three-class classification problem [2,3]. In cases in which finer-grained sentiment labels are available, the targeted classes are, usually, strongly negative, negative, neutral, positive, and strongly positive [4,5,6]. In this context, any features or models used in the traditional text classification tasks may be applied, or new, explicit sentiment-oriented features, such as the occurrence of words from a sentiment lexicon, may be introduced.

However, a main disadvantage of DLSA refers to the assumption that a document, regardless of its length, contains a single opinion, and, consequently, a single overarching sentiment is expressed. Evidently, this does not always hold. Thus, researchers have progressively shifted their focus towards more fine-grained types of analysis.

2.2. Sentence-Level Sentiment Analysis (SLSA)

The objective of sentence-level sentiment analysis is to ascertain the sentiment conveyed in a specific sentence [7].

The motivation behind SLSA stems from the recognition that a single document can contain diverse opinions with varying polarities. This is particularly evident in texts like reviews, where users may make positive evaluations and negative evaluations in the same review. For example, a review with an average number of stars in a defined rating system is almost guaranteed to comprise both. Additionally, it is not uncommon for reviews to include neutral and objective statements of fact. This task thus serves as a connection between DLSA and aspect-level sentiment analysis. It aims to offer a more comprehensive view of the sentiment expressed in a document, without the intention of identifying the exact entities and aspects that the sentiment is directed towards. When considering the level of complexity, it can be observed that, although sentences may be regarded as short documents (and, thus, the problem can be formalized in an identical manner as for DLSA), they possess significantly less content compared to full-length documents. Consequently, the process of categorization becomes more challenging [8].

2.3. Aspect-Based Sentiment Analysis (ABSA)

While it is crucial to obtain an understanding of user opinion through analysis at the document level, and decompose it further into a study at the sentence level, in reviews, users often make evaluations with respect to different aspects of a given product, where an aspect refers to a characteristic, behavior, or trait of a product or entity [9]. For instance, for mobile phones, aspect categories of interest to users, generally, are battery life, photo and video quality, sound, and performance. Thus, creating a system that provides a summary of opinion polarity with regard to each of these aspects would be of great use for both users, who could benefit from customized recommendations aligned with their preferences and priorities, and for businesses, who could pinpoint areas of improvement in their products or services and make targeted changes to enhance product quality and customer satisfaction.

Aspect-based sentiment analysis is defined as the problem of identifying aspect and sentiment elements from a given text (usually a sentence) and the dependencies between them, either separately or simultaneously [10]. There are four fundamental elements of ABSA: aspect terms (words or expressions that are explicitly included in the given text, and that refer to an aspect that is the target of an opinion), aspect categories (a unique aspect of the given entity that usually belongs to a small list of predefined characteristics that are of interest), opinion terms (expressions through which a sentiment is conveyed towards the targeted aspect), and sentiment polarity (generally, positive, negative, or neutral).

Separate tasks can be defined to identify each of these elements and their dependencies: aspect term extraction (ATE), aspect category detection (ACD), opinion term extraction (OTE), and aspect sentiment classification (ASC). The ATE task aims to identify the explicit expressions used to refer to aspects that are evaluated in a text [10]. If formulated as a supervised classification task, then the goal is to label the tokens of a sentence as referring to an aspect or not. Since this implies the existence of annotated data, which is scarce for most languages besides English, a significant number of works employ unsupervised approaches. In recent years, this type of approach has involved the use of word embeddings in various self-supervised techniques enhanced with attention mechanisms to learn vector representations of aspects [11,12].

As for ACD, which aims to identify the discussed aspect categories for a given sentence, most state-of-the-art approaches formalize the task as a supervised text classification problem where a generally small set of predefined, domain-specific aspect categories represent the classes [13]. Unsupervised formulations often involve two steps: first extracting candidate aspect terms (the ATE task), and then grouping or mapping these terms to corresponding aspect categories. Manual assignment of labels to the obtained groups is a common practice in such approaches [11,14], but recent works [12,15] have proposed various methods to automate the process.

3. Related Work

This section presents an overview of recent SA approaches found in the literature, structured according to the distinct levels of sentiment analysis addressed by our study (document, sentence, and aspect) and focusing on those targeting the Romanian language.

With respect to sentiment analysis (and NLP tasks, in general), Romanian is known as an under-resourced language, with few comprehensive, publicly available datasets, corpora, or dedicated tools. As indicated by the LiRo benchmark and leaderboard platform [16], LaRoSeDa [17] is, to date, the only publicly available large corpus for sentiment analysis in Romanian. It consists of 15,000 positive and negative product reviews, extracted from an electronic commerce platform, that have been automatically labeled based on the number of associated stars. Although perfectly balanced (half of the reviews being positive and the other half negative), the dataset is highly polarized, with the great majority of positive reviews rated five stars and most of the negative ones rated one star. Moreover, the authors admit that the labeling process is sub-optimal (as star ratings do not always faithfully reflect the polarity of a review), mentioning manual labeling or noise removal as future improvement tasks.

Regarding models, in recent years, transformer-based ones (both multi- and mono-lingual) have become the de facto standard within the NLP domain. BERT (bidirectional encoder representations from transformers) has been adopted as the baseline for transformer models, providing state-of-the-art results for various NLP tasks. For Romanian sentiment analysis, there are multi-lingual models (mBERT [18], XLM-RoBERTa [19]) and dedicated BERT models available (Romanian BERT [20], RoBERT [21]), with the ones in the latter category performing better, due to their training on comprehensive language-specific datasets. In addition, approaches aimed at achieving higher performance on domain-specific analysis (such as JurBERT [22]) or at adapting the large-scale pretrained Romanian BERTs to computationally constrained environments (such as DistilBERT [23] or ALR-BERT [24]) have also been reported. When it comes to speed and efficiency, the multi-lingual, lightweight fastText [25] (also covering Romanian) is a popular alternative to multi-lingual BERTs, although the latter are better suited to complex, data-intensive tasks.

In addition to the previously mentioned approaches, several research papers (detailed in the following) have reported the usage, improvement, or comparison of various classical and deep learning models, with the purpose of achieving similar or better results for SA in Romanian. Our investigation shows that most of the existing work has targeted the document level, with only a few studies explicitly covering the sentence and aspect levels.

3.1. DLSA for Romanian

The papers mentioned in this subsection report experimenting with either only classical machine learning (ML) approaches, only deep learning (DL) ones, or both.

Within the first category, the work of Burlăcioiu et al. [26] aims to capture users’ perceptions with respect to telecommunications and energy services, by analyzing 50,000 scraped reviews of mobile applications, offered by Romanian providers in these fields. They compare the results of five well-known SA models (logistic regression (LR), decision trees (DT), k-nearest neighbors (kNN), support vector machines (SVM), and naïve Bayes (NB)) on a balanced, automatically labeled version of the dataset, using term frequency–inverse document frequency (TF-IDF) encoding [27]. The best accuracy is obtained by employing DT and SVM (79.5% on average for the two models), with the former achieving better time performance. Russu et al. [28] provide a solution for sentiment analysis at the document and aspect levels, considering unstructured documents written in Romanian. They employ two different methods for sentiment polarity classification: one using SentiWordnet [29] as a lexical resource, and one based on the use of the Bing search engine. The experiments are conducted on a perfectly balanced corpus, consisting of 1000 movie reviews written in Romanian (500 positive and 500 negative), manually extracted from several blogs and websites. The documents have been manually labeled, based on the individual scores assigned by the user (in the range [1–10]). To identify document-level polarity, the authors experiment with random forest (RF), kNN, NB, and SVM, the maximum precision values obtained being 81.8% (using SentiWordnet) and 79.2% (using Bing queries).

Regarding DL approaches, the authors of LaRoSeDa, Tache et al. [17], propose using self-organizing maps (SOM), instead of the classical k-means algorithm, for clustering word embeddings generated by either word2vec [30] or Romanian BERT. The top accuracy rate reported on test data is 90.90%, by employing BERT-bag of word embedding (BERT-BOWE). Echim et al. [31] aim to optimize well-known NLP models (convolutional neural network (CNN), long short-term memory (LSTM), bi-LSTM, gated recurrent unit (GRU), Bi-GRU) with the aid of capsule networks and adversarial training, the new approaches being used for satire detection and sentiment analysis in Romanian. For the latter task, they use the LaRoSeDa dataset, the best accuracy (99.08%) being obtained using the Bi-GRU model with RoBERT encoding and dataset augmentation.

In the category of works that combine classical ML and DL approaches, Neagu et al. [32] aim to build a multi-class classifier (negative/positive/neutral) for inferring the polarity of Romanian tweets in a video-surveillance context. By using both classical (Bernoulli NB, SVM, RF, LR) and deep learning approaches (deep neural network (DNN), CNN, LSTM), together with different types of encodings (TF-IDF/doc2vec for classical ML and DNN, word2vec for CNN and LSTM), they argue that, by adapting the NLP pipeline to the specificity of the data, good results can be achieved even in the absence of a comprehensive Romanian dataset (their dataset consists of 15,000 tweets, translated from English). The best accuracy among these models (78%) is obtained using Bernoulli NB with TF-IDF encoding, while the state-of-the-art value (81%) is provided by the multi-lingual BERT, albeit with a training time penalty. Istrati and Ciobotaru [33] report on creating a framework aimed at brand monitoring and evaluation, based on the analysis of Romanian tweets, that includes a binary SA classifier trained and tested on a corpus labeled by the authors. The data are preprocessed using four proposed pipelines, the resulting sets being used to train and test various ML models, both classical and modern. The best accuracy and F1-scores are achieved by a neural network with fastText [25], which is the model chosen for the framework classifier. Coita et al. [34] use SA in order to assess the attitude of Romanian taxpayers towards the fiscal system. In this respect, they try to predict the polarity of each of the answers provided by around 700 respondents to a 3-item questionnaire, using a BERT model pretrained and tested on a corpus of around 38,000 movie and product reviews in Romanian. BERT is chosen because it provides the highest accuracy (98%) among the compared models, which also include a recurrent neural network (RNN) and three classical ML approaches: LR, DT, and SVM.

3.2. SLSA for Romanian

Buzea et al. [35] introduce a novel sentence-level SA approach for Romanian, using a semi-supervised ML system based on a taxonomy of words that express emotions. Three classes of emotions are taken into account (positive, negative, and neutral). The obtained results are compared to those provided by classical ML algorithms, such as DT, SVM, and NB. Experiments are conducted using a corpus of around 26,000 manually annotated news items from Romanian online publishers and more than 42,000 labeled words from the Romanian dictionary. In terms of F1-score, the proposed system outperforms the three classical algorithms for the neutral and negative classes, while for the positive class, the highest metric value is achieved by DT.

Using a custom-made application, Roşca and Ariciu [36] aim to evaluate the performance of the Azure Sentiment Analysis service at sentence level for five languages, including Romanian. With this purpose, they generate 100 sentences per language, half positive and the other half negative. Although the service performs SA using three sentiment classes (positive, negative, and neutral), their evaluation only considers the first two, assuming any neutral label as incorrect. Classification accuracy is computed for three types of sentences: shorter than 100 characters, in the range of 100–250 characters, and longer. The reported accuracies are 83% for the first and last categories and 90% for the middle one.

3.3. Aspect Term Extraction (ATE) and Aspect Category Detection (ACD)

The only work that proposes a complete ABSA system for the Romanian language is that of Russu et al. [28], who also aim to identify sentiment at the document level, as described in Section 3.1. In this paper, the authors use seven syntactic rules to identify aspect terms and opinion words in a set of movie reviews. The polarity associated with the discovered entity is computed either using SentiWordnet or a search engine, using a set of seed words.

In this context, we provide a succinct description of unsupervised approaches for the ATE and ACD tasks, which are the two ABSA tasks we address in this paper.

For the task of aspect term extraction, early unsupervised approaches were generally based on rules [37,38,39]. For instance, Hu and Liu [37] use an association mining approach to identify product features and a WordNet-based approach to predict the orientation of opinion sentences. Other works propose analyzing the syntactic structure of a sentence at the word or phrase level to identify aspects and aspect-word/sentiment-word relations [39]. Such rule-based approaches are also frequently employed for aspect category detection. Hai et al. [40] attempt to find features (aspects) expressed implicitly in text through a two-step co-occurrence association rule mining approach. In the first phase, the co-occurrence is computed for opinion words and explicit features, extracted from a set of cell phone reviews in Chinese, and they refer to verbs and adjectives, and nouns and noun phrases, respectively. Additional constraints based on syntactic dependencies are applied for the extraction. In the second step, a k-means clustering algorithm is applied to the identified rule consequences, which are the explicit aspects, to generate more robust rules that can be then used for implicit aspect identification. Schouten et al. [41] propose a similar co-occurrence-based approach, but their unsupervised model uses a set of seed words for the considered aspect categories.

Another type of unsupervised approach to these tasks is represented by variants of classic topic modeling techniques. Titov and McDonald [42], for example, propose a multi-grain topic model (MG-LDA), which aims to capture two types of topics, global and local, and pinpoint rateable aspects to be modeled by the latter, the local topics. Brody and Elhadad propose the use of a standard LDA algorithm, but treat each sentence as a separate document to guide the model towards aspects of interest to the user, rather than global topics present in the corpus [43]. A topic modeling approach is also proposed by García-Pablos et al. [44], but it is a hybrid one, also making use of word embeddings and a Maximum Entropy classifier to tackle ABSA tasks.

In terms of neural models, He et al. [11] rely on word embeddings in the context of an attention-based approach, through which aspect embeddings are learned by a neural network similar to an auto-encoder. Tulkens and van Cranenburgh [15] propose a simple two-step technique for aspect extraction, which first selects candidate aspects in the form of nouns with the help of a part-of-speech (PoS) tagger, and then employs contrastive attention to select aspects.

While there are approaches that rely mainly on clustering techniques, they are less frequent. An example of a clustering-based approach is that of Ghadery et al. [45], who use k-means clustering on representations of sentences obtained by averaging word2vec embeddings and a soft cosine similarity measure, to determine the similarity between a sentence and an aspect category, represented by a set of seed words.
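To make the idea of matching a sentence against an aspect category given as seed words more concrete, the following is a minimal sketch, not the method of [45]. It assumes plain cosine similarity over averaged word vectors instead of the soft cosine measure, omits the clustering step, and uses hypothetical two-dimensional toy embeddings and category names chosen only for illustration.

```python
import math


def average_vector(words, embeddings):
    """Average the vectors of the words that have an embedding; None if none do."""
    vectors = [embeddings[w] for w in words if w in embeddings]
    if not vectors:
        return None
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine(u, v):
    """Plain cosine similarity (an assumption; [45] uses a soft cosine measure)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def closest_category(sentence_words, seed_words_by_category, embeddings):
    """Return the category whose averaged seed vector is most similar to the sentence."""
    sentence_vec = average_vector(sentence_words, embeddings)
    if sentence_vec is None:
        return None
    best_category, best_score = None, float("-inf")
    for category, seeds in seed_words_by_category.items():
        category_vec = average_vector(seeds, embeddings)
        if category_vec is None:
            continue
        score = cosine(sentence_vec, category_vec)
        if score > best_score:
            best_category, best_score = category, score
    return best_category


# Hypothetical toy embeddings and seed words, for illustration only.
toy_embeddings = {
    "baterie": [1.0, 0.0],
    "autonomie": [0.9, 0.1],
    "ecran": [0.0, 1.0],
    "display": [0.1, 0.9],
}
toy_seeds = {"battery": ["baterie"], "screen": ["ecran"]}
print(closest_category(["autonomie"], toy_seeds, toy_embeddings))
```

In practice, the vectors would come from a trained embedding model, and a similarity threshold could be added so that sentences unrelated to every category remain unassigned.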

As far as word clustering is concerned, the identification of semantically meaningful groups in a vocabulary has been a topic of interest for decades. Recent approaches either focus on using word clustering to detect topics in a document [46,47,48], or use it as a technique to enhance the performance of classifiers by means of improved document representations [17]. Sia et al. [46] explore the ability of embedding-based word clusters to summarize relevant topics from a corpus of documents. Different types of word embeddings are examined, both contextualized and non-contextualized, along with a number of hard (k-means, spherical k-means, k-medoids) and soft (Gaussian mixture models and von Mises–Fisher Models) clustering techniques to identify topics in documents. CluWords, the model proposed in [47], is shown to advance the state-of-the-art in topic modeling by exploiting neighborhoods in the embedding space to obtain sets of similar terms (i.e., meta-words/CluWords), which, in turn, are used in document representations with a novel TF-IDF strategy designed specifically for weighting the meta-words.

4. Methodology

4.1. Case Study

This section describes the dataset used in our study, a new dataset comprising reviews written in Romanian. We start by providing a brief summary of the data collection process and our motivation in creating the RoProductReviews dataset, and then we present a detailed description of its content, highlighting its suitability for the proposed tasks.

4.1.1. Data Collection

The reviews that make up the RoProductReviews dataset were manually collected from a highly popular Romanian e-commerce website. Specifically, the gathered information consists of the text of the review, the title, and the associated number of stars, which ranges between 1 and 5, and can be viewed as a numerical representation of customers’ satisfaction with the reviewed product. In this context, a 1-star rating represents complete dissatisfaction, while a 5-star rating indicates complete satisfaction with the product. Reviews were collected for a total of 12 product categories of electronics and appliances. Two criteria guided the selection of reviews: the number of associated stars and the length of the text. The first was used to obtain a dataset that is balanced overall with respect to positive and negative sentiment, as we planned to use supervised learning techniques for the task of sentiment analysis. The second was applied with the ABSA task in mind: reviews with longer texts were sought out alongside short, one-sentence reviews, since longer reviews generally include discussions about specific aspects of the product.

Through this data collection process, we built a balanced dataset with reviews written between 2014 and 2023 that is representative of the various modes of expression encountered in e-commerce product evaluations. To prevent the introduction of bias, ten individuals with diverse backgrounds collected the data. Clear guidelines outlining the purpose and intended structure of the dataset were provided to ensure consistency.

4.1.2. Dataset Description

Table 1 presents the number of reviews in the dataset associated with each number of stars, as well as the number of sentences they consist of. Additionally, the total number of tokens, the number of unique tokens, and unique lemmas are included for each category, as well as the average sentence length, computed as the average number of words in a sentence.

Table 1. RoProductReviews statistics.
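As an illustration of how the statistics summarized in Table 1 could be derived, the sketch below counts sentences, tokens, and unique tokens, and computes the average sentence length as the number of words per sentence. The regular-expression sentence splitting and tokenization are simplifying assumptions, not the tooling used to build the table, and the lemma count is left out because it requires a lemmatizer.

```python
import re


def corpus_statistics(reviews):
    """Compute simple corpus statistics for a list of review texts."""
    sentence_count = 0
    tokens = []
    for review in reviews:
        # Naive sentence segmentation on terminal punctuation (an assumption).
        sentences = [s for s in re.split(r"[.!?]+", review) if s.strip()]
        sentence_count += len(sentences)
        for sentence in sentences:
            # Naive word tokenization; \w also matches Romanian diacritics.
            tokens.extend(w.lower() for w in re.findall(r"\w+", sentence))
    return {
        "reviews": len(reviews),
        "sentences": sentence_count,
        "tokens": len(tokens),
        "unique_tokens": len(set(tokens)),
        "avg_sentence_length": len(tokens) / sentence_count if sentence_count else 0.0,
    }


print(corpus_statistics(["Nu recomand.", "Tableta excelentă. Super!"]))
```

Applying the same function to the reviews of each star rating, or of each product category, yields one row of statistics per group.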

The RoProductReviews dataset is utilized in its entirety, as presented in Table 1, for the document-level sentiment analysis tasks. The classification labels for this dataset consist of either the assigned number of stars (for multi-class classification) or a positive/negative label derived from aggregating the higher- and lower-rated reviews, respectively (i.e., reviews with ratings of 1 and 2 stars are considered negative, while reviews associated with 4 and 5 stars are deemed positive; reviews with a 3-star rating are discarded in this setting). Although there is a possibility that the labels as obtained do not always faithfully reflect the sentiment expressed in the review [17], we consider them sufficient in terms of the intended experiments at the document level.
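The label derivation described above can be sketched as follows. The function names and the example ratings are hypothetical and serve only to illustrate the rule: 1 and 2 stars map to negative, 4 and 5 stars map to positive, and 3-star reviews are dropped in the binary setting.

```python
from typing import List, Optional, Tuple


def star_to_binary_label(stars: int) -> Optional[str]:
    """Map a 1-5 star rating to a polarity label; None marks a discarded 3-star review."""
    if stars not in (1, 2, 3, 4, 5):
        raise ValueError(f"star rating must be between 1 and 5, got {stars}")
    if stars <= 2:
        return "negative"
    if stars >= 4:
        return "positive"
    return None


def build_binary_dataset(reviews: List[Tuple[str, int]]) -> List[Tuple[str, str]]:
    """Keep (text, label) pairs for every review except the 3-star ones."""
    dataset = []
    for text, stars in reviews:
        label = star_to_binary_label(stars)
        if label is not None:
            dataset.append((text, label))
    return dataset


# Hypothetical ratings attached to short example texts, for illustration only.
sample = [("Nu recomand", 1), ("Super", 5), ("Merge, dar se încălzește", 3)]
print(build_binary_dataset(sample))
```

For the multi-class setting, the star rating itself is used as the label, so no mapping is needed.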

Nevertheless, when it comes to classifying sentiment at the sentence level, the rating assigned to the review that contains the sentences is an inaccurate predictor of the sentiment being communicated. Hence, a manual annotation procedure was utilized for a specific subset of RoProductReviews. A total of 2067 short reviews, consisting of single sentences, were annotated by 5 annotators who were only presented with the text of the review, but not the number of stars associated with it. A sentiment label was assigned if it was agreed upon by the majority; otherwise, the instance was discarded. Limitations exist in the annotation process, primarily inherent to sentiment annotation. Specifically, we emphasize the challenge of accurately identifying sentiment in extremely short sentences lacking explicit sentiment words or featuring ambiguous language. Additionally, annotators may delineate between neutral, positive, and negative sentiment differently, resulting in conflicting label assignments for the same sentence. To address these limitations, the annotation process incorporates majority voting, mitigating the impact of these challenges.
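A minimal sketch of the majority-voting step is given below. It assumes that "majority" means a label chosen by more than half of the annotators, which is one possible reading of the procedure; under this reading, a 2-2-1 split among five annotators leads to the instance being discarded.

```python
from collections import Counter
from typing import Optional, Sequence


def majority_label(votes: Sequence[str]) -> Optional[str]:
    """Return the label chosen by more than half of the annotators, else None."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    # Strict majority is an assumption about how agreement was defined.
    return label if count > len(votes) / 2 else None


print(majority_label(["positive", "positive", "positive", "neutral", "negative"]))
print(majority_label(["positive", "positive", "negative", "negative", "neutral"]))
```

The first call yields a label because three of five annotators agree, while the second returns `None`, which corresponds to a discarded instance.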

The reviews were chosen due to the fact that they did not require any additional processing in terms of sentence segmentation. The annotators utilized a labeling system that consisted of three categories: negative, neutral, and positive. As a consequence, a subset consisting of 804 reviews (sentences) annotated with the label negative was obtained. Additionally, there were 171 reviews annotated with the label neutral and 1092 reviews annotated with the label positive. A series of examples from this subset of RoProductReviews is included in Table 2.

Table 2. Examples of manually annotated one-sentence reviews.

Generally, RoProductReviews is characterized by a relatively equitable distribution among the various rating categories, with the exception of the 2-star category, which shows a lower level of representation. This under-representation of reviews in the 2-star category can be attributed to data availability constraints. During the data collection process, there was a noticeable scarcity of 2-star ratings, with a significant portion of unfavorable reviews being assigned a 1-star rating. It is plausible that customers expressing adverse sentiments find it difficult to acknowledge positive aspects of the reviewed product, an acknowledgment that would otherwise lead to a milder form of negative evaluation, namely, a 2-star rating.

Regarding sentence length, we can observe that sentences in the rating categories that do not indicate complete satisfaction or dissatisfaction with the reviewed product (i.e., 2-, 3-, and 4-star categories) tend to be longer. This is intuitive, as in these cases, customers are more likely to provide detailed accounts of both the strengths and weaknesses of the product to justify their assigned rating. This is especially evident in reviews associated with 3 stars, an evaluation customers generally make after careful analysis of a series of positive and negative aspects of the reviewed product. Alternatively, 1-star and 5-star reviews may only consist of short sentences such as “Nu recomand/Don’t recommend”, “Calitate proasta/Bad quality”, “Slab/Weak” and “Super/Super”, “Tableta excelentă/Excellent tablet”, “Multumit de achizitie/Content with my purchase”, respectively.

Table 3 presents analogous statistics, this time segmented by product category. The dataset exhibits diversity in terms of the number of reviews gathered for each category. For instance, there is a nearly threefold difference in the number of reviews collected for smartphones compared to routers. This diversity is essential for creating a realistic evaluation scenario for various sentiment analysis models directed toward specific product categories (e.g., aspect-based sentiment analysis), reflecting the real-world scenario where certain product types enjoy more popularity and consequently accumulate more reviews than others.

Table 3.

RoProductReviews dataset description per category.

We note that, despite this imbalance across product categories, the distribution of reviews in each star rating category is preserved. With a few exceptions (monitor, tablet, smartphone), the sets are almost perfectly balanced in this respect.

Additionally, we present a series of statistics that further support the use of the RoProductReviews dataset for the sentiment analysis tasks addressed in this study. Specifically, to provide context for the aspect identification task, which relies on the identification and grouping of nouns, we computed the part-of-speech distribution within each product category with the help of the NLP-Cube Part of Speech Tagging Tool [49]. We found that nouns represent approximately 20% of all tokens for every category. The proportion of adjectives ranges from 0.04 to 0.06, with vacuum cleaner reviews having the smallest proportion and monitors the highest. In contrast, vacuum cleaner reviews are the richest in terms of verbs (0.14), while reviews about peripherals, like monitors and keyboards, have the smallest proportion of verbs, along with routers (0.11). Similarly, small differences are observed with respect to adverbs: the highest proportion of adverbs can be found in headphones reviews (0.12), with routers at the other end (0.09). The notable presence of nouns in reviews provides a favorable foundation for our proposed approach to aspect identification, which relies on noun clustering, but underscores the necessity of devising an effective method for discerning the most relevant nouns. As for adjectives, a part of speech traditionally linked with sentiment, we note that their relatively low presence may be due to users often expressing sentiment with regard to products by stating what works and what does not (e.g., I can’t run multiple applications simultaneously), or by providing domain-specific clues (e.g., the refresh rate is 144 Hz, and it shows). Nonetheless, out of all adjectives, between 38% and 50% are valenced across categories (as identified by the lexicon RoEmoLex [50]), which lends credit to the possibility of exploring dependency-based approaches to associating sentiment with the aspect terms discovered through nouns. Interestingly, around 20–25% of verbs in each category are also found in the sentiment lexicon, while only about 13–17% of nouns and 5–6% of adverbs are used to express sentiment directly.

In view of this analysis, we consider that the proposed dataset is suitable for a case study that aims to examine the appropriateness of different machine learning and deep learning models for sentiment analysis for the Romanian language.

4.2. Theoretical Models

This section formalizes the sentiment analysis tasks at each level. These tasks target $D$, a collection of documents that, in our case study, refers to the RoProductReviews dataset. Each $d \in D$, where $d = (w_1, w_2, \ldots, w_N)$, represents a document from the collection comprising N words, and $w_i$, with $1 \le i \le N$, is a word in the document. Let $V$ be the vocabulary used in this collection, defined as:

$$V = \bigcup_{d \in D} \{ w \mid w \in d \} \quad (1)$$

Additionally, we denote by $D_c \subseteq D$ the collection of documents in a given product category c.

4.2.1. Document-Level Sentiment Analysis

The task of sentiment analysis at the document level assumes that the document (for example, a movie review or, as in our case, a product review) expresses an opinion regarding a specific (single) entity e. In this context, document sentiment classification aims to determine the overall sentiment s expressed related to the entity e, which can be positive or negative (in binary classification). The sentiment options, however, can be extended to a range, in our case, the five stars ranging from 1 (strongly negative) to 5 (strongly positive), leading to a multi-class classification problem [1].

4.2.2. Sentence-Level Sentiment Analysis

Sentence-level sentiment analysis assumes that each sentence expresses a single opinion, oriented towards a single known entity e. Therefore, the goal of classification at the sentence level is to identify the sentiment s expressed in a given sentence regarding the entity e. Since reviews, by definition, express opinions about a product or service, it is expected that at least one of the sentences in a document expresses a positive or negative opinion. This is why document-level analysis can ignore the neutral class, but sentence-level analysis cannot: a sentence within a review can be objective, which means that it does not express any sentiment or opinion and is therefore neutral [1].

4.2.3. Aspect Term Extraction and Aspect Category Detection

We address the aspect term extraction task through an examination of word embeddings and their properties in the learned vector space. We build on previous research that indicates that aspects are explicitly referred to in texts through nouns [15,37,40], and employ a clustering algorithm to obtain groups of similar words, particularly nouns, that are interpreted as aspect categories. This analysis serves as an initial step for addressing the ABSA task, which currently lacks extensive exploration in the context of the Romanian language. We also provide a method to estimate the presence of an aspect in a document (sentence/review), thus addressing the aspect category detection task and highlighting its potential for application at both the document and sentence levels.

Let $N_c$ be the set of nouns used in a category c. A clustering algorithm is applied on the set $E_c = \{ m(w) \mid w \in N_c \}$, which contains the embeddings obtained through the embedding model $m$ for the nouns used throughout documents in category c. A partition $P = \{C_1, C_2, \ldots, C_k\}$ of the set $E_c$ is thus generated, with each $C_i$ a set of similar embeddings, where for similarity, a suitable metric is chosen.

The sets $C_i$ represent candidate aspect categories and their members, candidate aspect terms. To obtain the most relevant aspects from each product category, we apply the following heuristic: we eliminate from consideration the sets $C_i$ for which $|C_i| < 3$ or $|C_i| > 10$, where $|C_i|$ represents the number of elements in set $C_i$. We based this decision on the potential interpretability of such word groups: fewer than three words might not provide sufficient information for identifying an overarching aspect category, while a group of more than ten words will most likely contain semantically miscellaneous terms, especially when considering the restricted vocabulary of only nouns. Then, we rank the remaining sets to obtain the most representative groups with respect to the considered product category. Each set $C_i$ is associated with a score that considers the overall frequency of the nouns in set $C_i$ in the considered reviews from a given product category c. We also experimented with a ranking based on the number of documents covered by the words in the obtained sets, and obtained similar results. Then, according to the ranking given by one of these scores, the top t percent of groups are considered the most relevant aspect categories, as these are the most frequently discussed in the given category. A short, descriptive label is assigned manually to each of these clusters based on its content.
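
The size filter and frequency-based ranking described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `select_aspect_clusters` is hypothetical, and scoring a cluster by the sum of its nouns' corpus frequencies is an assumption about the exact form of the score.

```python
from collections import Counter

def select_aspect_clusters(clusters, reviews, top_percent=5.0,
                           min_size=3, max_size=10):
    """Filter noun clusters by size and keep the most frequent ones.

    clusters: list of lists of nouns (candidate aspect categories).
    reviews: list of token lists from one product category.
    The score (sum of noun frequencies) is an assumed form of the
    frequency-based ranking described in the text.
    """
    freq = Counter(tok for review in reviews for tok in review)
    # Keep only clusters with between min_size and max_size members.
    kept = [c for c in clusters if min_size <= len(c) <= max_size]
    # Rank by the total corpus frequency of the member nouns.
    ranked = sorted(kept, key=lambda c: sum(freq[w] for w in c), reverse=True)
    # Keep the top t percent, but at least one cluster when any survive.
    n_top = max(1, round(len(ranked) * top_percent / 100)) if ranked else 0
    return ranked[:n_top]

clusters = [["baterie", "incarcare", "autonomie"],
            ["ecran", "display"],            # too small, filtered out
            ["pret", "cost", "bani"]]
reviews = [["baterie", "autonomie", "pret"], ["baterie", "incarcare"], ["ecran"]]
top = select_aspect_clusters(clusters, reviews, top_percent=50)
```

Replacing the frequency sum with a count of the reviews that contain at least one cluster member would give the document-coverage variant mentioned in the text.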

4.3. Data Representation

4.3.1. Preliminaries: Data Preparation and Preprocessing

This section describes the preprocessing steps taken for each of the proposed analyses.

In all cases, a preprocessing step was performed, which involved the transformation of the text to lowercase and the removal of URL links. For stop word removal, the list provided with the advertools library version 0.13.5 (https://github.com/eliasdabbas/advertools (accessed on 20 January 2024)) was used, from which the words that may express opinions or sentiments, such as bine (well), bună (good), or frumos (beautiful), were removed.
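
A minimal sketch of this common preprocessing step is given below. The stop word set is a hypothetical miniature list used only for illustration (the study uses the list shipped with advertools), and the URL pattern is an assumption about what counts as a link.

```python
import re

# Assumed URL pattern: anything starting with http(s):// or www.
URL_PATTERN = re.compile(r"https?://\S+|www\.\S+")

# Hypothetical miniature stop word list, for illustration only.
STOP_WORDS = {"si", "de", "la", "este", "bine", "bună", "frumos"}
# Opinion-bearing words are taken out of the stop word list, as in the text.
SENTIMENT_WORDS = {"bine", "bună", "frumos"}
FILTERED_STOP_WORDS = STOP_WORDS - SENTIMENT_WORDS

def preprocess(text, remove_stop_words=False):
    """Lowercase the text, drop URLs, and optionally drop stop words."""
    text = URL_PATTERN.sub(" ", text.lower())
    tokens = text.split()
    if remove_stop_words:
        tokens = [t for t in tokens if t not in FILTERED_STOP_WORDS]
    return " ".join(tokens)
```

Punctuation is deliberately left untouched here, so punctuation-based emoticons such as ":)" survive, in line with the document-level and sentence-level setups.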

In the approach at the document level, the title of the review was concatenated at the beginning of the review text to be classified. Moreover, the stop words were not removed, to avoid loss of information relevant to the model and to be able to perform a baseline comparison. Also, punctuation was not removed because there were several emoticons which were punctuation based (and not Unicode characters).

For the sentence-level approach, the title of the review was not taken into consideration, as it usually contains two or three words, summarizing the review without forming a sentence. Similar to the document-level approach, the punctuation was not removed, due to the possible existence of text emoticons. As for the stop words, experiments were run both with and without removing them, to assess their impact on the model performance.

In terms of analysis at the aspect level, a number of preprocessing steps were followed. Punctuation, stop words and URLs were also removed for this task, as they represented elements that either could not represent aspect terms or could not contribute to the definition of aspect categories. Additionally, lemmatization of the tokens was performed. Part-of-speech tagging was the last step in our preprocessing process, the result of which was only used at the clustering stage to identify the nouns in a given set of reviews.

4.3.2. TF-IDF Representation

Term frequency–inverse document frequency is a commonly used algorithm that transforms text into numeric representations (embeddings) to be used with machine learning algorithms. As its name suggests, this method combines two concepts: term frequency (TF), the number of times a term w (word) appears in a document d, and document frequency (DF), the number of documents in which a term appears. For the SLSA case, we consider each sentence to be a document and, thus, compute the frequency with which a specific term appears in a sentence and the number of sentences that contain that specific term.

Term frequency can be simply defined as the number of times the term appears in a document, while inverse document frequency (IDF) works by computing the commonness of the term among the documents contained in the corpus.

By using the inverse document frequency, infrequent terms have a higher impact, leading to the conclusion that the importance of a term is inversely proportional to its corpus frequency. While the TF part of the TF-IDF algorithm contains information about a term’s frequency, the IDF results in information about the rarity of a specific term.
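
To make the weighting concrete, the sketch below computes TF-IDF with raw term counts and the unsmoothed variant idf = log(n / df). This particular variant is an assumption made for illustration; library implementations (for example, scikit-learn) typically apply smoothing and normalization, so their values differ.

```python
import math
from collections import Counter

def tf_idf(documents):
    """documents: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(documents)
    # Document frequency: number of documents containing each term.
    df = Counter(term for doc in documents for term in set(doc))
    weights = []
    for doc in documents:
        tf = Counter(doc)  # raw term counts within the document
        weights.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return weights

docs = [["slab", "produs"], ["produs", "excelent"], ["produs", "slab", "slab"]]
w = tf_idf(docs)
```

In this toy corpus, "produs" appears in every document and receives weight 0, while the rarer "excelent" receives the largest single-occurrence weight, which illustrates the inverse relation between corpus frequency and importance.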

4.3.3. LSI Representation

In addition to the TF-IDF representation described in the previous subsection, we also propose the examination of the relevance of features extracted by latent semantic indexing (LSI) [51] in a sentiment classification task for the Romanian language.

LSI is a count-based model for representing variable-length texts (in our case, documents and sentences containing reviews written in Romanian) as fixed-length numeric vectors. It builds a matrix of occurrences of words in documents and then uses singular-value decomposition to reduce the number of words while keeping the similarity structure between documents.
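
For reference, the standard formulation of this reduction is sketched below. The notation is an assumption introduced here: $A$ is the term-document matrix, $k$ is the number of retained latent dimensions, and $\hat{d}$ is the reduced representation of a document column $d$.

```latex
A \approx A_k = U_k \Sigma_k V_k^{\top},
\qquad
\hat{d} = \Sigma_k^{-1} U_k^{\top} d
```

Here $U_k$ and $V_k$ contain the first $k$ left and right singular vectors and $\Sigma_k$ the $k$ largest singular values, so every document, regardless of its length, is mapped to a vector with $k$ components.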

Therefore, each document is represented as a vector composed of numerical values corresponding to a set of features extracted from the review text directly using LSI.

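- $d_{LSI} = (d_1, d_2, \ldots, d_m)$, where $d_i$ ($1 \le i \le m$) denotes the value of the i-th feature computed for the document $d$ in the documents dataset by using the LSI-based embedding, and $m$ is the length of the embedding.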

As far as the experimental setup is concerned, for extracting the LSI-based embeddings for the documents, we used the implementation offered by Gensim [52]. We opted for as the length of the embedding and for as the number of latent dimensions that represents the number of topics in the given corpus. For the SLSA task, the was reduced to 10, since most of the sentences contain less than 30 terms even before reduction.

4.3.4. Deep-Learned Representation

An alternative to count-based feature extraction for machine learning approaches is represented by using neural models that can automatically generate features for the considered tasks.

In deep learning approaches, specific word-embedding techniques have been developed, which are actually based on neural network layers and dense vectors [30]. In our experiments, we used dense embedding in conjunction with four deep learning networks: CNN, global average pooling (GAP), GRU, and LSTM.

As far as the experimental setup is concerned, after following the general preprocessing step described in Section 4.3.1, we used word number encoding, considering a vocabulary of 15,000 words, and a padding for each review to 500 words. These encoding parameters were chosen after performing a search of best parameters based on the characteristics of our dataset and literature findings. The embedding is performed in the first dense embedding layer of each model. The text document is encoded using a word-embedding dense layer, which is then processed by the network layers. Formally, given $d$, a text document embedded with a model of token sequences (in which a token could be a word or a letter), with N terms in the document, we have $d = (e_1, e_2, \ldots, e_N)$, where $e_i \in \mathbb{R}^M$ is a token embedding of size M. Next, the embedding is submitted to linear transformations (for the CNN model), average region functions (in the GAP model), or memory units and gates (in recurrent neural networks, such as LSTM and GRU).
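
The word number encoding and padding step can be illustrated with the sketch below. The helper names, the reserved ids (0 for padding, 1 for out-of-vocabulary words), and the choice to pad at the end of the sequence are assumptions for illustration; the text only fixes the vocabulary size (15,000) and the padded length (500).

```python
from collections import Counter

def build_vocabulary(texts, max_words=15000):
    """Map the most frequent words to integer ids; 0 = padding, 1 = unknown word."""
    counts = Counter(word for text in texts for word in text.split())
    most_common = counts.most_common(max_words - 2)
    return {word: idx + 2 for idx, (word, _) in enumerate(most_common)}

def encode_and_pad(text, vocabulary, max_len=500):
    """Replace words by their ids, truncate to max_len, and right-pad with zeros."""
    ids = [vocabulary.get(word, 1) for word in text.split()]
    ids = ids[:max_len]
    return ids + [0] * (max_len - len(ids))

vocab = build_vocabulary(["foarte bun", "foarte slab"])
encoded = encode_and_pad("foarte bun necunoscut", vocab, max_len=5)
```

Each resulting integer sequence has a fixed length, which is what allows an embedding layer to turn a review into an N × M matrix of token embeddings.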

4.3.5. Word Representations

As far as word representations are concerned, word2vec [30] embeddings are used, a type of representation learned through a neural network from a text corpus. The word2vec model, $m$, is an embedding model that maps each word $w$ to a vector representation (embedding) that has size $s$: $m(w) = (w_1, w_2, \ldots, w_s)$, where $w_i$ denotes the value of the i-th feature computed for the word w by the model $m$.

For the proposed tasks, the word2vec model was trained on the corpus of all reviews, with a number of preprocessing steps employed, as described in Section 4.3.1. Next, word embeddings for all lemmas in the vocabulary were learned. We experimentally determined the size of 150 for the word vectors to be the best performing.

4.4. Models

4.4.1. Supervised Classification

To assess the relevance of the TF-IDF and LSI-based embeddings when it comes to the automatic polarity classification for reviews written in Romanian, we trained and evaluated multiple standard machine learning classification models, such as SVM, RF, LR, NB, voted perceptron (VP), and multilayer perceptron (MLP).

The models used in deep learning approaches were configured using a dense embedding base layer, which assumes 500 as the embedding dimension, on top of which the particular model layers are added. The CNN model has a 1D convolution layer, a 1D global max pooling layer, and a hidden dense layer with 24 output units. For GRU, the hidden layers consist of a bidirectional GRU layer and a dense layer with 24 output units, while LSTM contains a bidirectional LSTM layer and a dense layer with 24 output units. The GAP model contains an average pooling layer and a dense one with 24 output units. The output dense layer (which is the same for all models) has one unit in the case of binary classification and five output units for multi-class classification. The activation function [53] for the hidden dense layer is the rectified linear unit (ReLU). For binary classification, the activation of the output dense layer is the sigmoid function, and the models are compiled using binary cross-entropy as the loss function and the adaptive moment estimation optimizer Adam [54]. In the case of multi-class classification with 3 or 5 classes, the models are compiled using the sparse categorical cross-entropy loss function, and for the output dense layer, we use the softmax function.
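
The pairing of output activation and loss can be made concrete with a small, framework-free sketch. It assumes the usual combinations (a sigmoid output with binary cross-entropy, a softmax output with sparse categorical cross-entropy) and is written in plain Python for readability; it is not the Keras implementation used in the experiments.

```python
import math

def sigmoid(z):
    """Squash a single logit into a probability for the positive class."""
    return 1.0 / (1.0 + math.exp(-z))

def binary_cross_entropy(y_true, p):
    """Loss for one example with label y_true in {0, 1} and predicted probability p."""
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))

def softmax(logits):
    """Turn one logit per class into a probability distribution."""
    m = max(logits)  # subtracting the maximum improves numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_categorical_cross_entropy(class_index, probs):
    """Loss for one example whose true class is given as an integer index."""
    return -math.log(probs[class_index])
```

The "sparse" variant takes the true class as an integer (for example, the star rating minus one) rather than as a one-hot vector, which is convenient for the five-class rating task.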

Each training session of a model was performed for at most 30 epochs, with early stopping after five epochs without any improvement on the loss function. The implementation was performed using the scikit-learn version 1.3.1 (https://scikit-learn.org/stable/ (accessed on 20 January 2024)) and keras version 2.14.0 (https://keras.io/ (accessed on 20 January 2024)) Python packages.
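
The stopping rule can be sketched independently of any framework. In the illustration below, the list of per-epoch losses stands in for a real training loop, and the function name is hypothetical; only the limits of 30 epochs and a patience of five epochs come from the text.

```python
def train_with_early_stopping(losses, max_epochs=30, patience=5):
    """Return (epochs actually run, best loss) for a sequence of per-epoch losses."""
    best = float("inf")
    epochs_without_improvement = 0
    epochs_run = 0
    for loss in losses[:max_epochs]:
        epochs_run += 1
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # no improvement for `patience` consecutive epochs
    return epochs_run, best

# Three improving epochs followed by a plateau: training stops after epoch 8.
result = train_with_early_stopping([1.0, 0.8, 0.7] + [0.75] * 10)
```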

4.4.2. Unsupervised Analysis

As clustering techniques, we employ k-means and SOM. For similar tasks, k-means is the most frequently encountered [46,55], but SOM has shown better performance in recent studies [56]. Therefore, we aimed to examine the suitability of the two techniques on a Romanian-language dataset. For both, the initial number of nodes/clusters was set at 200, a value that was experimentally determined to generate the best results for our dataset. For k-means, the implementation from the scikit-learn library version 1.3.1 was used, with no additional parameters. For SOM, we used the implementation from the NeuPy version 0.6.5 (http://neupy.com/pages/home.html (accessed on 20 January 2024)) Python package, with the learning radius set at 1 and the step at 0.25. The distance used was cosine. The top 5% of the obtained aspect clusters are considered representative ($t = 5$).

4.5. Evaluation

4.5.1. Methodology

In order to reliably evaluate the performance of the proposed approaches, we performed 10 repetitions of 5-fold cross-validation in all the experiments carried out on our dataset.

During the cross-validation process, the confusion matrix for the classification task was computed for each testing subset. Based on the values from the confusion matrix, multiple performance metrics, as described in Section 4.5.2, were computed. For each metric, the values were averaged during the cross-validation process, and the 95% confidence interval (CI) of the mean values was calculated.
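
A minimal sketch of this evaluation protocol is shown below. Two points are assumptions rather than details confirmed by the text: the splits are plain shuffled folds (no stratification), and the confidence interval uses the normal approximation with the factor 1.96.

```python
import math
import random
import statistics

def repeated_kfold_indices(n_samples, n_splits=5, n_repeats=10, seed=0):
    """Yield (train_idx, test_idx) for each fold of each repetition."""
    rng = random.Random(seed)
    for _ in range(n_repeats):
        indices = list(range(n_samples))
        rng.shuffle(indices)
        # Fold k receives every n_splits-th shuffled index, starting at k.
        folds = [indices[i::n_splits] for i in range(n_splits)]
        for k in range(n_splits):
            test_idx = folds[k]
            train_idx = [i for j, fold in enumerate(folds) if j != k for i in fold]
            yield train_idx, test_idx

def mean_with_ci95(values):
    """Mean and half-width of a normal-approximation 95% confidence interval."""
    mean = statistics.mean(values)
    half_width = 1.96 * statistics.stdev(values) / math.sqrt(len(values))
    return mean, half_width

splits = list(repeated_kfold_indices(20))
mean, half_width = mean_with_ci95([0.90, 0.92, 0.94])
```

With 10 repetitions of 5 folds, each metric is averaged over 50 test subsets, and the interval is reported as the mean plus or minus the half-width.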

4.5.2. Performance Indicators

Supervised classification. According to the state of the art, the most used performance metrics in sentiment analysis are accuracy ($Acc$), F1-score ($F1$), precision ($Prec$), recall ($Rec$), specificity ($Spec$), and area under the ROC curve (AUC). These can be calculated individually for every class in the dataset or as an arithmetic or weighted average for the entire model. To compute each metric, we require the resulting confusion matrix, a matrix that, in supervised learning, evaluates the performance of a model by comparing the actual class of an entry with the predicted class. In this sense, for a class k, we denote with $TP_k$ the true positives of class k and with $TN_k$ the true negatives of class k. $TP_k$ is defined as the number of instances from class k correctly classified in class k, and $TN_k$ is defined as the number of instances that are not in class k and have been correctly classified as a different class from k. $FP_k$ denotes the false positives, meaning the number of instances that are not in class k but have been classified as being class k, and $FN_k$ denotes the false negatives, meaning the number of instances that are in fact in class k but have been incorrectly classified to be a different class from k. In Equation (2), we define the accuracy of a class k, denoted by $Acc_k$. We present the definition for precision for a class k, denoted as $Prec_k$, in Equation (3). In Equation (4), the formula for computing the recall for a class k, denoted by $Rec_k$, is presented. The specificity for a class k, denoted as $Spec_k$, is computed as in Equation (5).
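
For reference, the standard one-vs-rest definitions of these per-class metrics are given below, together with the usual F1-score. The symbol names ($Acc_k$, $Prec_k$, $Rec_k$, $Spec_k$, $F1_k$, and the counts $TP_k$, $TN_k$, $FP_k$, $FN_k$) are assumed notation for the true/false positives and negatives of class k.

```latex
\begin{align}
Acc_k  &= \frac{TP_k + TN_k}{TP_k + TN_k + FP_k + FN_k} \\
Prec_k &= \frac{TP_k}{TP_k + FP_k} \\
Rec_k  &= \frac{TP_k}{TP_k + FN_k} \\
Spec_k &= \frac{TN_k}{TN_k + FP_k} \\
F1_k   &= \frac{2 \cdot Prec_k \cdot Rec_k}{Prec_k + Rec_k}
\end{align}
```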

The area under the ROC curve is generally employed for classification approaches that yield a single value, which is then converted into a class label using a threshold. For each threshold value, the point ($1 - Spec$, $Rec$) is represented on a plot, and the AUC value is computed as the area under this curve. For the approaches where the direct output of the classifier is the class label, there is only one such point, which is linked to the (0, 0) and (1, 1) points. The AUC measure represents the area under the trapezoid and is computed as in Equation (6).
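
The single-point case can be worked out explicitly. Writing $FPR = 1 - Spec$ and $TPR = Rec$ (assumed notation), the area under the polyline through (0, 0), ($FPR$, $TPR$), and (1, 1) is a triangle plus a trapezoid:

```latex
\begin{align}
AUC &= \tfrac{1}{2}\, FPR \cdot TPR + \tfrac{1}{2}\,(1 - FPR)(TPR + 1) \\
    &= \tfrac{1}{2}\,(TPR + 1 - FPR) \\
    &= \frac{Rec + Spec}{2}
\end{align}
```

Under this construction, the AUC of a label-only classifier is the mean of its recall and specificity.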

All the previously mentioned performance evaluation measures range from 0 to 1. For better classifiers, larger values are expected.

For a binary classification in sentiment analysis, we have two classes (the positive class and the negative class); thus, we use positive predictive value (PPV) to denote the precision of the positive class and negative predictive value (NPV) to denote the precision of the negative class. In the general case of multi-class classification with $K$ classes, having calculated the performance indicators for each class with the above formulas, we define the overall weighted average for each performance metric as in Equation (8).

$$P_{weighted} = \sum_{k=1}^{K} w_k \cdot P_k \quad (8)$$

where $P_k$ is the performance indicator for class k, and $w_k$ is the weight of class k. The weight of a class k is computed as $w_k = n_k / n$, with $n_k$ equal to the number of instances from class k in the dataset and $n$ the total number of instances for all classes in the dataset.
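
The per-class indicators and their weighted average can be derived from a confusion matrix as sketched below. The function names and the dictionary layout are hypothetical; the class weight is the share of instances belonging to each class, as described above.

```python
def per_class_metrics(cm):
    """cm[i][j] = number of instances of true class i predicted as class j."""
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    metrics = []
    for k in range(n_classes):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                                # class k, predicted otherwise
        fp = sum(cm[i][k] for i in range(n_classes)) - tp   # other classes, predicted k
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics.append({"precision": precision, "recall": recall,
                        "specificity": specificity, "f1": f1,
                        "support": tp + fn})
    return metrics

def weighted_average(metrics, name):
    """Average a per-class indicator, weighting each class by its share of instances."""
    total = sum(m["support"] for m in metrics)
    return sum(m[name] * m["support"] / total for m in metrics)

cm = [[8, 2, 0],
      [1, 5, 4],
      [0, 1, 9]]
m = per_class_metrics(cm)
```

A useful sanity check is that the weighted average recall equals the overall accuracy, here 22/30.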

Unsupervised analysis. For the proposed unsupervised analysis, we used two evaluation measures, namely normalized pointwise mutual information (NPMI) [57] and a WordNet-based similarity measure. NPMI is the normalized variant of pointwise mutual information, a measure commonly used to evaluate association. This normalized variant has the advantage of a range of values with fixed interpretation.

In Equation (9), the formula for computing the NPMI for two words is shown, where $p(w_i)$ and $p(w_j)$ represent the probabilities of occurrence of words $w_i$ and $w_j$, respectively, and $p(w_i, w_j)$ is the probability of the co-occurrence of the two. For an aspect cluster $C$, containing words denoted by $w_i$, $1 \le i \le |C|$, the NPMI value is computed as an average over the NPMI values obtained for every pair ($w_i$, $w_j$), $i \neq j$.
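
The standard NPMI definition, ln(p(x, y) / (p(x) p(y))) divided by −ln p(x, y), and its average over a cluster can be sketched as follows. Estimating probabilities as the fraction of documents containing a word (or both words) is an assumption made for this illustration, as are the function names.

```python
import math
from itertools import combinations

def npmi(p_x, p_y, p_xy):
    """Normalized PMI of two words from their occurrence probabilities."""
    if p_xy == 0:
        return -1.0  # limit value: the words never co-occur
    if p_xy == 1:
        return 1.0   # both words occur in every document
    return math.log(p_xy / (p_x * p_y)) / -math.log(p_xy)

def cluster_npmi(words, documents):
    """Average NPMI over all unordered word pairs; documents are sets of tokens."""
    n = len(documents)

    def prob(*ws):
        # Fraction of documents that contain all the given words.
        return sum(all(w in d for w in ws) for d in documents) / n

    pairs = list(combinations(words, 2))
    return sum(npmi(prob(a), prob(b), prob(a, b)) for a, b in pairs) / len(pairs)

docs = [{"baterie", "autonomie"}, {"baterie", "autonomie"}, {"ecran"}, {"pret"}]
```

In this toy corpus, "baterie" and "autonomie" always appear together and score 1, whereas "baterie" and "ecran" never co-occur and score −1.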

While it was defined in the context of collocation extraction, the NPMI measure has also been used in topic modeling literature to evaluate topic coherence [46,47], as it was found to reflect human judgment [58].

The NPMI bases the assessment on the co-occurrence of terms, while the proposed WordNet-based measure takes advantage of the hierarchy of noun and noun phrases in WordNet, in which is-a (hyponymy/hypernymy) relations, as well as part-of associations, are recorded. We are especially interested in the hierarchy determined by the is-a relationships between nouns, as we need to evaluate the ability of determined groups of nouns (aspect terms) to describe a more general concept (aspect category). Thus, we used a measure that describes how closely related two words are in this hierarchical structure of the WordNet lexical database: the Leacock and Chodorow (LCH) similarity [59]. We compute this metric as in Equation (10), using the Romanian WordNet (RoWordNet [60]).

In Equation (10), the Leacock–Chodorow similarity is computed between the first senses of the two terms $w_i$ and $w_j$, which are encapsulated in RoWordNet synsets. Thus, we denote by $len(w_i, w_j)$ the shortest path length between the concepts represented by $w_i$ and $w_j$ in the WordNet hierarchy, while $D_{max}$ represents the maximum taxonomy depth.
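
For reference, the Leacock–Chodorow similarity is commonly defined as shown below, where $len(w_i, w_j)$ and $D_{max}$ are assumed names for the shortest path length between the two concepts and the maximum taxonomy depth, respectively.

```latex
LCH(w_i, w_j) = -\log \frac{len(w_i, w_j)}{2 \cdot D_{max}}
```

Shorter paths between two concepts yield larger values, so terms that sit close together in the is-a hierarchy are scored as more similar.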

The NPMI measure has the advantage of evaluating performance on an unseen test set, providing a realistic measure of the proposed approach. However, we argue that, while NPMI may be an informative measure with respect to the coherence of topics, which are defined as sets of words that co-occur, it is less suitable for measuring the coherence of groups of words meant to be interpreted as aspect terms that define an aspect category. Usually, when discussing an aspect of a product, the number of aspect terms from a given category used in the same sentence, and even review, is limited; in fact, these aspect terms are often used interchangeably. For NPMI, the range of values is $[-1, 1]$, with values of −1 characterizing words that occur separately, but not together, and values of 1 describing words that only occur together. As for LCH, the range of values is $(0, \log(2 \cdot 16)]$, where 16 is the maximum RoWordNet depth in the hypernymy tree. Considering that the maximum is reached when the two terms have the same sense, a higher value for the LCH measure signifies increased relatedness between the concepts represented by the two terms.

5. Results

In this section, we present the results of our study, which aims to investigate the efficacy of machine learning techniques in sentiment analysis, specifically applied to a dataset of Romanian reviews. Results are provided for the three textual levels we addressed: document (as detailed in Section 5.1), sentence (outlined in Section 5.2), and aspect level (discussed in Section 5.3).

5.1. Document Level

The first embedding we evaluated in the context of sentiment analysis when using the RoProductReviews dataset is the one based on LSI.

The classifiers employed in evaluating the relevance of the LSI-based embedding for sentiment analysis were SVM, RF, LR, and a neural-network-based model (VP [61] for binary classification and MLP for multi-class classification).

The results obtained when classifying the RoProductReviews reviews on two classes of polarity, positive and negative, when representing the reviews as LSI-based embeddings, are given in Table 4. For each of the four models, we present the mean value and confidence interval calculated for each performance metric used in evaluation, methodology that was described in Section 4.5. We have obtained AUC values up to and F1-score values up to . The best-performing classification model is LR, which is immediately followed by VP, for which AUC and F1-score values of were obtained.

Table 4.

Results obtained for LSI-based binary classification with the RoProductReviews dataset. The highest value for each performance indicator is marked in bold.

The performances obtained in the case of multi-class classification are given in Table 5. The conclusion that has been drawn for binary classification, regarding the relative performance of the classifiers, holds, the best-performing classifier remaining logistic regression. LR obtained a weighted average F1-score value of , while the second-best classifier is still the artificial neural network model, in particular the MLP that replaced the VP used for binary classification. A weighted average F1-score value of was obtained by the MLP classifier.

Table 5.

Results obtained for LSI-based multi-class classification with the RoProductReviews dataset. The highest value for each performance indicator is marked in bold.

Table 6 shows the results obtained for binary classification on the RoProductReviews dataset using the deep learning models, while Table 7 presents the results for multi-class classification with five classes.

Table 6.

Binary classification using deep learning models with the RoProductReviews dataset. The highest value for each performance indicator is marked in bold.

Table 7.

Multi-class classification using deep learning models with the RoProductReviews dataset. The highest value for each performance indicator is marked in bold.

The best results in the case of binary classification are obtained by the CNN model, with accuracy 0.930, average precision 0.931, recall 0.934, and F1-score 0.930. The other three models perform similarly to one another, with accuracy 0.918 for LSTM, 0.920 for GRU, and 0.918 for GAP. We generally notice a slightly higher precision and F1-score for the positive class than for the negative class (for example, GAP precision PPV is 0.929, and LSTM precision NPV is 0.908), which may be due to the slight imbalance of the dataset (2509 negative reviews and 2615 positive reviews). However, the difference is small, so it could also result from the random cross-validation experimental setup.

For multi-class classification, the best overall results are also obtained by the CNN model (accuracy 0.767, precision 0.769), followed by GRU (accuracy 0.739, precision 0.743), then LSTM (accuracy 0.722, precision 0.727), and the worst performance is obtained by GAP (accuracy 0.652, precision 0.669).

In terms of performance metric indicators per class, the best result is obtained by all models for Class 5, corresponding to five stars, with the highest value of 0.854 for precision using CNN. The next best value yielded by all models is for Class 1, corresponding to one-star evaluations, with values up to 0.800 for precision using CNN. The worst performance is obtained for class 2-star, for which the highest value is 0.692 for precision with CNN, and the lowest is 0.527 for precision with GAP. This result could be somewhat influenced by the slight imbalance of dataset classes (only 1152 instances of two-star reviews, while there are 1336 reviews with five stars). Moreover, the higher results for the classes with five stars and one star could be explained by the fact that they are the extremes of the rating scale. This means that the sentiment conveyed in the class 5-star and class 1-star reviews is more intense and clearly expressed as positive (when the customer is clearly satisfied) or negative (expressing customer dissatisfaction).

Consequently, the classifiers may also find it easier to identify sentiment patterns in these two rating categories, while for the classes with two, three, and four stars, the reviews may present reasons both in favor of and against the reviewed product, thus a mix of sentiment.

In terms of computation time, the CNN model required the least time for training and repeated cross-validation (approximately 8 h), as opposed to the other models, which required between 31 and 48 h on the same hardware device. However, although CNN proves to be the best choice among the deep learning models in this case, execution time remains an important limitation: it was much higher than that of classical approaches, for example, those based on LSI embedding and machine learning classifiers such as NB, RF, or SVM.

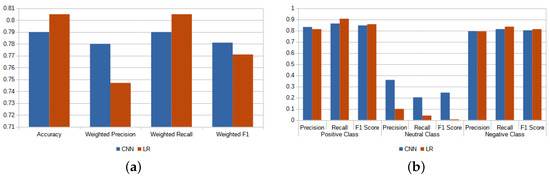

In the following, we have compared the results obtained by the LR model, which proved to be the best-performing classifier on the RoProductReviews dataset, with those obtained using CNN, which proved to be the best-performing of the deep learning models.

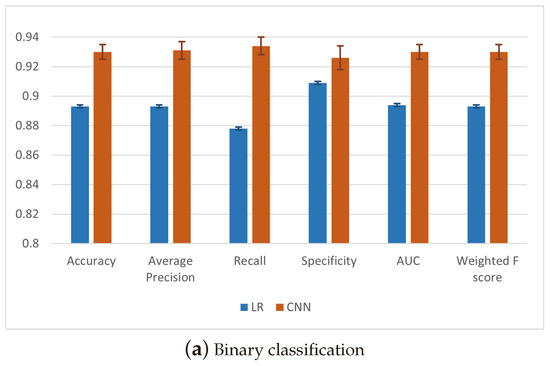

The comparison for binary classification is visually presented in Figure 1a, while Figure 1b depicts the comparison for multi-class classification, where the 95% confidence intervals of the weighted average performance indicator values for the five classes were considered. As Figure 1a,b show, CNN leads to consistently better performance.

Figure 1.

Comparison between CNN and LR for binary and multi-class classification on the RoProductReviews dataset.

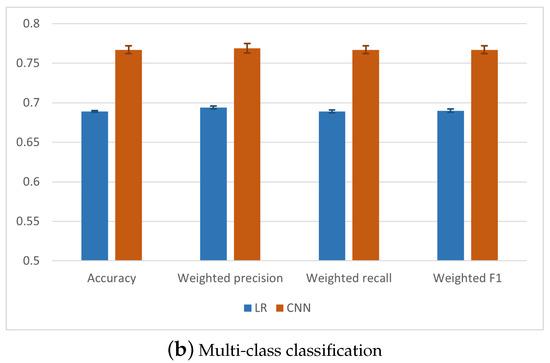

We have also comparatively analyzed the results at the class level for both binary and multi-class classification. The comparison at the class level is shown in Figure 2a,b and, as can be observed, it reinforces the conclusion that CNN behaves consistently better when classifying product reviews written in Romanian into polarity classes.

Figure 2.

Comparison between CNN and LR for binary and multi-class classification on the RoProductReviews dataset at class level.

While for the binary classification CNN performs similarly for both positive and negative classes, LR presents small differences in performance for each class and measure. However, for the multi-class classification, there is a consistent behavior of the two models in which class 5 stars presents the best results, while class 2 stars presents the worst result. This shows that specific characteristics of the dataset are most probably responsible for the confusion in classification, namely the smaller number of reviews for two stars (1152) in comparison with the other classes.

5.2. Sentence Level

Table 8 and Table 9 show the classification results obtained at sentence level for the RoProductReviews dataset, using the TF-IDF and LSI representations presented in Section 4.3, both by removing and not removing stop words, configurations which are denoted as “without”, and “with”, respectively. Specifically, Table 8 contains the results obtained for the TF-IDF representation and Table 9, the results obtained for the LSI representation. These experiments were performed with three goals in mind: (1) establishing whether the removal of stop words influences the classification results, (2) deciding which representation works best for the sentences from the RoProductReviews dataset, and (3) choosing the algorithm that is best suited for sentence-level sentiment classification.

Table 8.

Results obtained for TF-IDF-based sentence-level classification with the RoProductReviews dataset. The highest value for each performance indicator is marked in bold.

Table 9.

Results obtained for LSI-based sentence-level classification with the RoProductReviews dataset. The highest value for each performance indicator is marked in bold.

In order to answer the first question, we have analyzed the results from each table individually, reaching a conclusion for each representation. For TF-IDF, almost all the averaged performance indicators (accuracy, precision, recall, and F1-score) are higher when stop words are not removed, the only exception being precision for NB. However, since the difference in that case is only 0.4%, this exception does not change the overall conclusion: for the TF-IDF representation, the removal of stop words negatively influences the classification results. Although stop words are, by definition, insignificant for determining the sentiment expressed in a sentence, sentences, as opposed to documents, contain only brief opinions; removing stop words shortens them even further, which leads to decreased classification performance.

The same conclusion holds for the LSI representation as well: all the averaged performance metrics are higher when not removing stop words, for all the classifiers used in the experiments. Thus, we can answer the first question: the removal of stop words does influence the classification results, in a negative manner.

Having established that better results are obtained without removing the stop words, in order to answer the second question we compare only the results obtained for the TF-IDF and LSI representations when keeping the stop words, presented in the same tables (Table 8 and Table 9, respectively). For all the averaged performance indicators, all algorithms yield higher values for the TF-IDF representation, with the exception of NB. Yet, in that case, the difference in accuracy and weighted recall between the two representations is 0.1%, while the difference in F1-score is 0.2%. Taking all of these into consideration, we can state that the TF-IDF representation is better suited for all the algorithms employed in the experiments. This conclusion can be explained by the nature of the representations themselves: LSI reduces the dimensionality of the TF-IDF representation and, since sentences can be viewed as very short documents, this reduction leads to a loss of relevant information.
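For reference, the relation between the two representations can be written in its standard textbook form. The symbols below ($N$, $m$, $k$, $\mathrm{tf}$, $\mathrm{df}$) are notational assumptions rather than the notation of Section 4.3, and the exact weighting variant used in the experiments may differ.

```latex
% TF-IDF weight of term t in sentence s, over a collection of N sentences
w_{t,s} = \mathrm{tf}(t, s) \cdot \log \frac{N}{\mathrm{df}(t)}

% LSI: rank-k truncated SVD of the m x N term-sentence TF-IDF matrix X
X \approx X_k = U_k \Sigma_k V_k^{\top}, \qquad k \ll \min(m, N)
```

Each sentence is then described by a $k$-dimensional vector instead of an $m$-dimensional one. A short sentence has only a handful of non-zero TF-IDF weights, so the projection has little redundancy to compress, which is consistent with the loss of information noted above.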

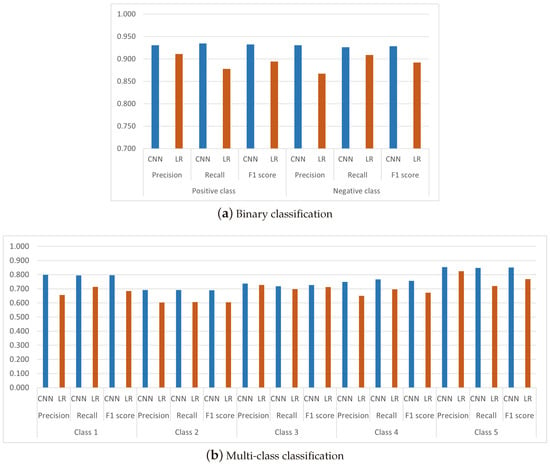

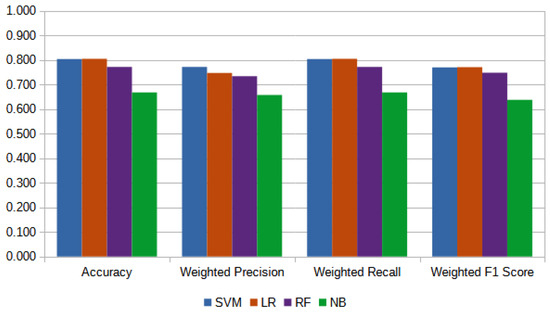

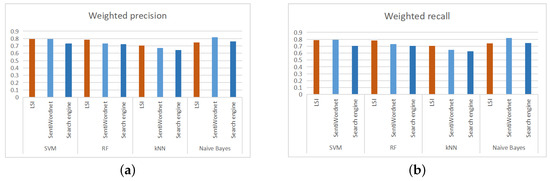

Finally, so as to choose the algorithm that is best suited for SLSA on the RoProductReviews dataset, we compare the performance indicators obtained with the TF-IDF representation for SVM, LR, RF, and NB. Figure 3 presents these values, gathered from Table 8 and Table 9. The results for each category are not included in Figure 3, because we consider the averaged performance indicators to suffice for the intended comparison; however, the values for these metrics can be found in the respective tables. Therefore, considering these performance indicators, LR obtains the highest values for accuracy, weighted recall, and weighted F1-score, while SVM leads to the highest weighted precision value. Yet, since the difference in weighted precision between the two algorithms is 0.025, we can conclude that LR is the best-suited algorithm for the task of sentiment analysis at the sentence level, which coincides with the conclusion drawn for the document level. Therefore, we can state that sentiment analysis for Romanian can be performed at both the document and sentence levels using the LR algorithm.

Figure 3.

Sentence-level classification with the RoProductReviews dataset.

Looking at the results obtained for each class, as presented in Table 8, the scores for the neutral class are very low, which can be explained by the unbalanced dataset. To address this problem, a number of neutral sentences comparable to that of the positive and negative sentences should be used for training the algorithms.
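The interplay between per-class scores and support-weighted averages can be illustrated with a minimal sketch. It assumes the usual definition of weighted averaging (each class score weighted by its number of true instances); the labels are toy data, not taken from RoProductReviews, and the helper names are hypothetical.

```python
from collections import Counter

def per_class_scores(y_true, y_pred):
    """Return {label: (precision, recall, f1, support)} for every label in y_true."""
    support = Counter(y_true)
    scores = {}
    for label in sorted(support):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        predicted = sum(1 for p in y_pred if p == label)
        precision = tp / predicted if predicted else 0.0
        recall = tp / support[label]
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[label] = (precision, recall, f1, support[label])
    return scores

def weighted_average(scores):
    """Support-weighted mean of precision, recall, and F1 over all classes."""
    total = sum(s[3] for s in scores.values())
    return tuple(sum(s[i] * s[3] for s in scores.values()) / total for i in range(3))

# Toy labels (illustrative only): 10 positive, 8 negative, 2 neutral sentences.
y_true = ["pos"] * 10 + ["neg"] * 8 + ["neu"] * 2
# The classifier never predicts "neu"; both neutral sentences are misclassified.
y_pred = ["pos"] * 9 + ["neg"] + ["neg"] * 7 + ["pos"] + ["pos", "neg"]

scores = per_class_scores(y_true, y_pred)
w_precision, w_recall, w_f1 = weighted_average(scores)

for label, (p, r, f, n) in scores.items():
    print(f"{label}: precision={p:.2f} recall={r:.2f} f1={f:.2f} support={n}")
print(f"weighted: precision={w_precision:.2f} recall={w_recall:.2f} f1={w_f1:.2f}")
```

In this toy setting the neutral class scores zero on every indicator, yet the weighted averages remain fairly high because the class contributes little support. This is why the per-class values need to be inspected alongside the averaged ones.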

In conclusion, based on the results presented in Table 8 and Table 9, the task of SLSA on the RoProductReviews dataset is best performed by applying the LR algorithm to the TF-IDF representation of the sentences, without removing the stop words; the corresponding accuracy, weighted precision, weighted recall, and weighted F1-score are those reported in Table 8.

Given that CNN is the most effective model at the document level, the deep learning technique applied at the sentence level also combines embedding encoding with a CNN, on the RoProductReviews subset, for multi-class classification with three classes. The results, in terms of accuracy, weighted precision, weighted recall, and weighted F1-score, are presented in Table 10 and are comparable to those of the other approaches presented previously for SLSA. In comparison with the results obtained for LR, the deep learning approach leads to better weighted precision and weighted F1-score, while the classical ML algorithm obtains higher accuracy and weighted recall. Figure 4a presents this comparison for easier analysis. Since the differences are very small (0.015 in accuracy and 0.015 in weighted recall in favor of LR, and 0.033 in weighted precision and 0.01 in weighted F1-score in favor of CNN), a clear conclusion cannot be drawn: either of these two algorithms can be used to perform the task of SLSA.

Table 10.

Multi-class classification using deep learning models at sentence level with three classes: negative, neutral, and positive. The highest value for each performance indicator is marked in bold.

Figure 4.

Comparison between CNN and LR for sentence-level classification with the RoProductReviews dataset: (a) overall and (b) with respect to class.

However, there are large differences in the performance indicators per class. The dataset is very unbalanced (1092 instances for the positive class, 171 for the neutral class, and 804 for the negative class), which limits the deep learning model’s learning. As such, the neutral class performs very poorly (its lowest value is for recall), while the positive class performs best (its highest value is for the F1-score). In comparison to LR, as presented in Figure 4b, CNN performs better for the neutral class and obtains better precision for the positive and negative classes, while LR outperforms CNN in terms of recall and F1-score for both the positive and negative classes.
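The degree of imbalance can be made explicit from the class counts given above. The following is only an arithmetic sketch; the variable names are chosen for illustration.

```python
# Class counts reported for the sentence-level subset.
counts = {"positive": 1092, "neutral": 171, "negative": 804}

total = sum(counts.values())
shares = {label: n / total for label, n in counts.items()}

for label, share in shares.items():
    print(f"{label:>8}: {counts[label]:>4} sentences ({share:.1%})")
```

The neutral class accounts for roughly 8% of the sentences, less than a sixth of the size of the positive class.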

5.3. Aspect Level

5.3.1. Aspect Term Extraction

In general, in aspect-based sentiment analysis, aspects are specific to a product type. Users may be interested in the battery life, photo/video quality, and performance of a phone, but pay more attention to memory in the case of an external hard drive and to coverage in the case of a wireless router. Naturally, there are common aspects that can be evaluated for multiple product types, owing to the overlap in the category taxonomy itself. For instance, processing speed can be evaluated on all electronics with a processing unit, as can sound quality on devices that support audio input and output. In this paper, we attempt to discover the most important aspects of a product category from our dataset using two clustering approaches.

Table 11 shows the results obtained for each of the two clustering algorithms employed, SOM and k-means, in terms of a mean over the random states and the 95% CI, for each of the product categories in the dataset. In terms of NPMI, in 8 out of 12 cases, the SOM algorithm provides better results, with k-means clusters achieving a higher score for fitness bracelets, headphones, and monitor product categories, though by small margins. For smartwatches, the generated clusters obtain the same score for both algorithms. If the clusters are evaluated using the LCH metric, for 7 out of 12 product categories, the SOM algorithm partitions the considered words better.
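As a minimal sketch of how a mean and a 95% CI over random states can be obtained, the snippet below uses a normal approximation. This is an assumption: the interval construction behind Table 11 is not restated here, and with only a few runs a Student-t interval would be wider. The scores are hypothetical.

```python
from statistics import NormalDist, mean, stdev

def mean_with_ci(values, confidence=0.95):
    """Return (mean, half_width) of a normal-approximation confidence interval."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    half_width = z * stdev(values) / len(values) ** 0.5
    return mean(values), half_width

# Hypothetical NPMI scores from five runs with different random states.
npmi_runs = [-0.12, -0.10, -0.11, -0.13, -0.09]
avg, ci = mean_with_ci(npmi_runs)
print(f"NPMI = {avg:.3f} ± {ci:.3f}")
```

Reporting the half-width next to the mean makes it possible to judge whether a small margin between SOM and k-means exceeds the run-to-run variation.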

Table 11.

Results for aspect term extraction and grouping in terms of NPMI and LCH. An average over the random states is provided along with the value for the 95% CI.

Overall, the low NPMI scores could be explained by the nature of the word groups. For some aspect categories, aspect terms might be used interchangeably rather than co-occur in the same review. For instance, when evaluating the price of a product, users most often limit themselves to saying either “A lot of money, but it’s worth it” or “A good price for what it offers”, but not both in the same review, as the sentences have very similar meanings. Therefore, the nouns money and price may co-occur less frequently than in other types of text that discuss a financial topic. By contrast, when evaluating the functionalities of a smartwatch, one could talk, in the same text span, about health monitoring using a number of different words: for instance, somn/sleep monitoring, puls/heart rate measuring, tensiune/blood pressure.
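The effect described above can be reproduced with a minimal sketch of NPMI computed from review-level co-occurrence. The standard definition, $\mathrm{NPMI}(a,b) = \log\frac{p(a,b)}{p(a)\,p(b)} \,/\, (-\log p(a,b))$, is assumed, with cluster scores averaged over word pairs. The co-occurrence window and the aggregation used in the paper may differ, and the toy reviews (including the lemmas pret and ban) are illustrative only.

```python
import math
from itertools import combinations

def npmi(word_a, word_b, documents):
    """Normalized PMI of two words from document-level co-occurrence counts."""
    n = len(documents)
    p_a = sum(word_a in doc for doc in documents) / n
    p_b = sum(word_b in doc for doc in documents) / n
    p_ab = sum(word_a in doc and word_b in doc for doc in documents) / n
    if p_ab == 0:
        return -1.0  # the words never co-occur
    if p_ab == 1:
        return 1.0   # the words occur together in every document
    return math.log(p_ab / (p_a * p_b)) / -math.log(p_ab)

def cluster_npmi(words, documents):
    """Average NPMI over all word pairs of a cluster."""
    pairs = list(combinations(words, 2))
    return sum(npmi(a, b, documents) for a, b in pairs) / len(pairs)

# Toy reviews as sets of noun lemmas (illustrative, not from the dataset).
reviews = [
    {"pret", "calitate"},
    {"ban", "calitate"},
    {"pret", "ecran"},
    {"ban", "baterie"},
    {"somn", "puls", "tensiune"},
    {"somn", "puls"},
]

price_score = cluster_npmi(["pret", "ban"], reviews)
health_score = cluster_npmi(["somn", "puls", "tensiune"], reviews)
print(f"price cluster: {price_score:.2f}, health cluster: {health_score:.2f}")
```

In this toy corpus the price terms never share a review, so their cluster receives the minimum score despite being near-synonyms, whereas the health-monitoring terms co-occur and score well.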

The LCH score, on the other hand, might deal well with the first case, identifying price and money as similar concepts, but lacks the ability to contextually assess the relatedness of groups like the second example (e.g., sleep, heart rate, blood pressure).
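For reference, the Leacock-Chodorow similarity between two concepts is commonly defined as below. The symbols are notational assumptions: $\mathrm{len}(c_1, c_2)$ denotes the length of the shortest path between the two concepts in the taxonomy of the lexical resource, and $D$ the maximum depth of that taxonomy.

```latex
\mathrm{LCH}(c_1, c_2) = -\log \frac{\mathrm{len}(c_1, c_2)}{2D}
```

Because the score depends only on taxonomic distance, it rewards near-synonyms such as price and money regardless of how they are used in the reviews, but it has no access to corpus context that would relate sleep, heart rate, and blood pressure.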

In the following, we present more detailed results for a selected product category, laptop. Appendix A includes results for another product category, monitors, to showcase the ability of the approach to identify relevant aspect categories for different product types.

The noun groups obtained in one example run for the category laptop are presented in Table 12, which shows the eight aspect clusters that were obtained. As can be seen, these noun clusters are relatively easy to interpret. The first cluster was assigned the label durability/reliability, as its words refer either to time (perioadă/period, timp/time, an/year, lună/month) or to the use of the product (utilizare, folosire/usage), with potential issues (pană/breakdown, problemă/problem). Temporal words also form another cluster, but, in this case, it is more likely that the battery life of the laptop is discussed, since the referenced periods of time are shorter: saptaman, saptamană/week, oră/hour. This differentiation between temporal words used in the battery life and durability aspect clusters indicates that using word2vec embeddings trained on the review corpus allows the clustering process to capture associations that go beyond classic semantic categories (e.g., grouping together words that refer to time). The ability of the learned representations to encode the usage patterns of the corpus they are trained on aids the formation of groups that are easy to interpret as aspect categories.

Table 12.

Example clusters obtained using SOM for product type laptop.

Another cluster can be interpreted as referring to price or value for money, while a further one can be assigned the label connectivity based on words such as mufă/socket, adaptor/adapter, receiver, wireless, USB. One more cluster is equally easy to interpret, as it contains nouns that almost exclusively refer to the display aspect category. As far as the operating system and software components clusters are concerned, we highlight the distinction between the two aspects, both of which can provide insights into the laptop’s hardware, software, and performance. The terms in the former are relevant when discussing the laptop’s compatibility with various software and operating systems and its ability to access websites and web content effectively, while the terms in the latter lean towards descriptions of internal components and the system configuration, often discussed in laptop reviews to evaluate performance, upgradability, and security features.

The remaining cluster is somewhat less obvious. The terms included in this group are frequently used either to express an evaluation of the quality of a product (terms calitate/quality, pro), or to address general aspects (e.g., “În rest, n-au fost probleme”/“Otherwise, there were no issues”, “În rest, e ok”/“Otherwise, it’s fine”).

This, in turn, highlights one of the limitations of the proposed approach, namely the use of automatic PoS tagging, which may, at times, erroneously identify words as nouns, either because of homonymy (for instance, “bun” can be either an adjective, meaning good, or a noun, meaning asset) or because of more informal constructions such as super ok, super tare (super nice), super mulțumit (super content), which the tagger may have difficulty processing correctly.

5.3.2. Aspect Category Detection

In this subsection, we present results for the aspect category detection task, using the aspect clusters presented in Section 5.3.1 for the product type laptop to identify their presence in a review.
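One simple way to illustrate detection from clusters is a term-overlap rule, sketched below. This is an assumption for illustration, not necessarily the matching procedure used in the paper: a category is taken to be present when at least `min_hits` of its cluster terms appear among the lemmas of a review. The function name, the threshold, and the example review are hypothetical; the cluster terms are abbreviated from those quoted in Section 5.3.1.

```python
def detect_aspect_categories(review_lemmas, aspect_clusters, min_hits=1):
    """Return {label: matched terms} for clusters with at least min_hits terms in the review."""
    tokens = set(review_lemmas)
    found = {}
    for label, terms in aspect_clusters.items():
        hits = sorted(tokens & terms)
        if len(hits) >= min_hits:
            found[label] = hits
    return found

# Abbreviated clusters, using terms and labels quoted in Section 5.3.1.
clusters = {
    "durability/reliability": {
        "perioadă", "timp", "an", "lună", "utilizare", "folosire", "pană", "problemă",
    },
    "connectivity": {"mufă", "adaptor", "receiver", "wireless", "USB"},
}

# Hypothetical review, assumed to be already tokenized and lemmatized.
review = ["după", "un", "an", "de", "utilizare", "mufă", "USB", "nu", "mai", "merge"]

detected = detect_aspect_categories(review, clusters)
for label, hits in detected.items():
    print(f"{label}: {', '.join(hits)}")
```

A rule of this kind only captures explicit mentions; aspects that are discussed implicitly, without any cluster term, would go undetected.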

Table 13 provides a series of example reviews from the product category laptop, chosen to reflect the diversity of expression in the corpus, both in terms of the length of reviews, and in terms of the explicit and implicit discussion of aspects.

Table 13.

Aspect category detection results with respect to a set of reviews from product category laptop.