4.1. The Rationality of Fuzzy Information Granularities in an MsMIS

Definition 17. Assume that is an MsMIS. Given , let and . Then, we have:

(1) , .

(2) If , then and .

(3) , .

(4) , .

Proof of Definition 17. (1) Let , . Hence, the proof is obtained.

(2) Since , it is easy to prove that holds. As T and S are dual w.r.t. N, also holds.

(3) Obvious.

(4) See and . □

Definition 17 exhibits the relationships between and , and between and , respectively. This means that and can be defined through and , respectively. Therefore, it can be used as an axiom to characterize the elements of and .
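The proof above relies on T and S being dual w.r.t. N, i.e., S(a, b) = N(T(N(a), N(b))). The short sketch below checks this identity numerically on a grid, assuming the standard negation N(a) = 1 - a; the pairs (min, max) and (product, probabilistic sum) are textbook examples, and the helper names are hypothetical.

```python
import itertools

def neg(a: float) -> float:
    """Standard fuzzy negation N(a) = 1 - a."""
    return 1.0 - a

def t_min(a: float, b: float) -> float:   # minimum t-norm
    return min(a, b)

def s_max(a: float, b: float) -> float:   # maximum t-conorm
    return max(a, b)

def t_prod(a: float, b: float) -> float:  # product t-norm
    return a * b

def s_prob(a: float, b: float) -> float:  # probabilistic sum t-conorm
    return a + b - a * b

def is_dual(t, s, grid, tol=1e-12) -> bool:
    """True if S(a, b) == N(T(N(a), N(b))) (up to tol) for every pair in grid."""
    return all(abs(s(a, b) - neg(t(neg(a), neg(b)))) <= tol
               for a, b in itertools.product(grid, repeat=2))

grid = [i / 10 for i in range(11)]
print(is_dual(t_min, s_max, grid))    # True
print(is_dual(t_prod, s_prob, grid))  # True
print(is_dual(t_min, s_prob, grid))   # False: min and the probabilistic sum are not dual
```

A tolerance is used because 1 - (1 - a) is not always bit-identical to a in floating point.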

Proposition 2. Assume that is an MsMIS. Given , if is a fuzzy T-equivalence relation on U, for any and , then the following conditions are equivalent:

(1) is reflexive;

(2) ;

(3) .

Proof of Proposition 2. It is obvious that and .

“”: , pick ; then , which is a contradiction, so .

“”: if , then . If , then . Hence, is a contradiction. Therefore, □

From Proposition 2, if is reflexive, we have and . As a result, and can be referred to as fuzzy information granularities with and as their centers, respectively.
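To make the idea of a granule "centred" at x concrete, the sketch below assumes the granule forms commonly used in granular fuzzy rough sets, [x_λ]_T(y) = T(R(x, y), λ) and (x_λ)_S(y) = S(N(R(x, y)), λ), with T = min, S = max and N(a) = 1 - a. These forms and the function names are assumptions for illustration, not a transcription of the paper's definitions. With a reflexive R, both granules take exactly the value λ at their centre.

```python
def granule_t(R, x, lam, t=min):
    """Assumed T-granule centred at x: [x_lam]_T(y) = T(R(x, y), lam)."""
    return [t(r, lam) for r in R[x]]

def granule_s(R, x, lam, s=max):
    """Assumed S-granule centred at x: (x_lam)_S(y) = S(N(R(x, y)), lam), N(a) = 1 - a."""
    return [s(1.0 - r, lam) for r in R[x]]

def is_reflexive(R) -> bool:
    return all(R[i][i] == 1.0 for i in range(len(R)))

R = [[1.0, 0.8, 0.4],
     [0.8, 1.0, 0.4],
     [0.4, 0.4, 1.0]]
lam = 0.6

# Reflexive R: both granules take exactly the value lam at their centre x.
print(is_reflexive(R), [granule_t(R, x, lam)[x] for x in range(3)],
      [granule_s(R, x, lam)[x] for x in range(3)])

# Non-reflexive R (R(0, 0) = 0.5 < lam): the T-granule no longer reaches lam at x = 0.
R_bad = [row[:] for row in R]
R_bad[0][0] = 0.5
print(is_reflexive(R_bad), granule_t(R_bad, 0, lam)[0])
```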

Proposition 3. Assume that is an MsMIS. Given , if is a fuzzy T-equivalence relation on U, for any , then the following conditions are equivalent:

(1) is symmetric;

(2) ;

(3) .

Proof of Proposition 3. Since and , and can be easily proved. □
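Under the same assumed granule form with T = min, symmetry of R amounts to the "exchange" property [x_λ](y) = [y_λ](x). The hypothetical helpers below test both sides; the grid of λ values includes λ = 1, where the granule reduces to the row R(x, ·), so an asymmetric relation is always detected.

```python
def granule_t(R, x, lam):
    """Assumed T-granule with T = min: [x_lam](y) = min(R(x, y), lam)."""
    return [min(r, lam) for r in R[x]]

def is_symmetric(R) -> bool:
    n = len(R)
    return all(R[i][j] == R[j][i] for i in range(n) for j in range(n))

def granules_symmetric(R, lams) -> bool:
    """True if [x_lam](y) == [y_lam](x) for all x, y and every lam in lams."""
    n = len(R)
    return all(granule_t(R, x, lam)[y] == granule_t(R, y, lam)[x]
               for lam in lams for x in range(n) for y in range(n))

lams = [i / 10 for i in range(11)]  # includes lam = 1, where [x_1](y) = R(x, y)
R_sym = [[1.0, 0.8], [0.8, 1.0]]
R_asym = [[1.0, 0.8], [0.3, 1.0]]
print(is_symmetric(R_sym), granules_symmetric(R_sym, lams))    # True True
print(is_symmetric(R_asym), granules_symmetric(R_asym, lams))  # False False
```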

Proposition 4. Assume that is an MsMIS. Given , if is a fuzzy T-equivalence relation on U, for any , then the following conditions are equivalent:

(1) is T-transitive;

(2) ;

(3) .

Proof of Proposition 4. “”: . Hence, .

“”: It is easily proved by T-transitivity.

“”: . Hence, we have the conclusion: .

“”: It can be easily proved by T-transitivity and duality. □
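T-transitivity, T(R(x, y), R(y, z)) ≤ R(x, z) for all x, y, z, is the property Proposition 4 builds on, and together with reflexivity and symmetry it makes R a fuzzy T-equivalence relation. A brute-force O(n³) check is sketched below for the min and Łukasiewicz t-norms; the relations are made-up examples, and `R_bad` shows a relation that is Łukasiewicz-transitive but not min-transitive.

```python
from itertools import product

def t_min(a: float, b: float) -> float:
    return min(a, b)

def t_luk(a: float, b: float) -> float:
    """Lukasiewicz t-norm: max(0, a + b - 1)."""
    return max(0.0, a + b - 1.0)

def is_t_transitive(R, t, tol=1e-12) -> bool:
    """T(R(x, y), R(y, z)) <= R(x, z) for all x, y, z (tol absorbs float rounding)."""
    n = len(R)
    return all(t(R[x][y], R[y][z]) <= R[x][z] + tol
               for x, y, z in product(range(n), repeat=3))

def is_t_equivalence(R, t) -> bool:
    """Reflexive, symmetric and T-transitive."""
    n = len(R)
    reflexive = all(R[i][i] == 1.0 for i in range(n))
    symmetric = all(R[i][j] == R[j][i] for i in range(n) for j in range(n))
    return reflexive and symmetric and is_t_transitive(R, t)

R = [[1.0, 0.8, 0.4],
     [0.8, 1.0, 0.4],
     [0.4, 0.4, 1.0]]
R_bad = [[1.0, 0.8, 0.6],
         [0.8, 1.0, 0.7],
         [0.6, 0.7, 1.0]]
print(is_t_equivalence(R, t_min))                                # True
print(is_t_transitive(R_bad, t_min), is_t_transitive(R_bad, t_luk))  # False True
```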

Theorem 1. Assume that is an MsMIS. Given , if is a fuzzy T-equivalence relation on U, for any and , then

(1) , , .

(2) , , .

Proof of Theorem 1. As is reflexive, it can be easily proved. □

Proposition 5. Assume that is an MsMIS. Given , if is a fuzzy T-equivalence relation on U, then we have:

(1) If , and , then and .

(2) and are the maximal element in and the minimal element in , respectively.

(3) , .

(4) where ; where .

(5) ⇒; ⇒.

Proof of Proposition 5. (1) If , then . According to Theorem 1(1), holds. Conversely, suppose and . Then . Hence, . Similarly, . In the same way, we can prove that .

(2) Suppose ; then , which implies . Suppose ; then , which implies .

(3) Suppose ; then . Further, . Hence, , which implies . Suppose ; then . Hence, . This implies .

(4) By the T-transitivity of , we have . This implies . Hence, , . Similarly, we can prove that where .

(5) It is straightforward. □

4.2. The Lower and Upper Approximations of Fuzzy Sets Are Constructed Using Information Granularities

In this subsection, we incorporate fuzzy information granularities into FRSs from the perspective of granular computing. First, fuzzy information granularities are employed to construct the lower and upper approximations of fuzzy sets. These approximations naturally extend the crisp approximations of classical rough sets and are consistent with those of FRSs.

Definition 18. Assume that is an MsMIS. Given , if is a fuzzy T-equivalence relation on U, for any and , then , where is referred to as the T-lower approximation of X and is referred to as the S-upper approximation of X. We call () granular -fuzzy rough sets.

Theorem 2. Assume that is an MsMIS. Given , if is a fuzzy T-equivalence relation on U, for any , then

(1) ;

(2) .

Proof of Theorem 2. We first need to prove . According to Definitions 16 and 18, , let ; then . By the T-transitivity of , we have . Hence, . Therefore, we have

, then . Otherwise, , which is a contradiction. This implies that is the maximal element in . Conversely, , if for any such that , we have and . Since is the maximal element in , . Therefore, .

. □
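The granular construction can be checked numerically. The sketch below assumes T = min, granules [y_λ](z) = min(R(y, z), λ), and the Gödel implicator θ (the R-implicator of min). Under these assumptions, for a reflexive, min-transitive R, the union of all granules contained in X coincides pointwise with the usual FRS lower approximation inf_y θ(R(x, y), X(y)). This is offered as an illustration of the kind of equality Theorem 2 establishes, not as its exact statement; all names are hypothetical.

```python
def godel_imp(a: float, b: float) -> float:
    """R-implicator of the min t-norm (Goedel): 1 if a <= b else b."""
    return 1.0 if a <= b else b

def lower_formula(R, X):
    """Pointwise FRS lower approximation: inf_y theta(R(x, y), X(y))."""
    n = len(X)
    return [min(godel_imp(R[x][y], X[y]) for y in range(n)) for x in range(n)]

def lower_granular(R, X):
    """Union of all min-granules [y_lam](z) = min(R(y, z), lam) contained in X."""
    n = len(X)
    # For min, the largest admissible lam is always 1 or some X(z), so these
    # candidates are enough; lam = 0 is always admissible.
    candidates = set(X) | {0.0, 1.0}
    result = [0.0] * n
    for y in range(n):
        # Granules grow with lam, so only the largest granule fitting inside X matters.
        lam = max(l for l in candidates
                  if all(min(R[y][z], l) <= X[z] for z in range(n)))
        for z in range(n):
            result[z] = max(result[z], min(R[y][z], lam))
    return result

# A reflexive, symmetric, min-transitive relation on U = {0, 1, 2}.
R = [[1.0, 0.8, 0.4],
     [0.8, 1.0, 0.4],
     [0.4, 0.4, 1.0]]
X = [0.9, 0.6, 0.7]
print(lower_formula(R, X))   # [0.6, 0.6, 0.7]
print(lower_granular(R, X))  # [0.6, 0.6, 0.7]
```

The granular version never evaluates θ; it only searches for the largest λ whose granule fits under X, which is why agreement with the formula is a meaningful check.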

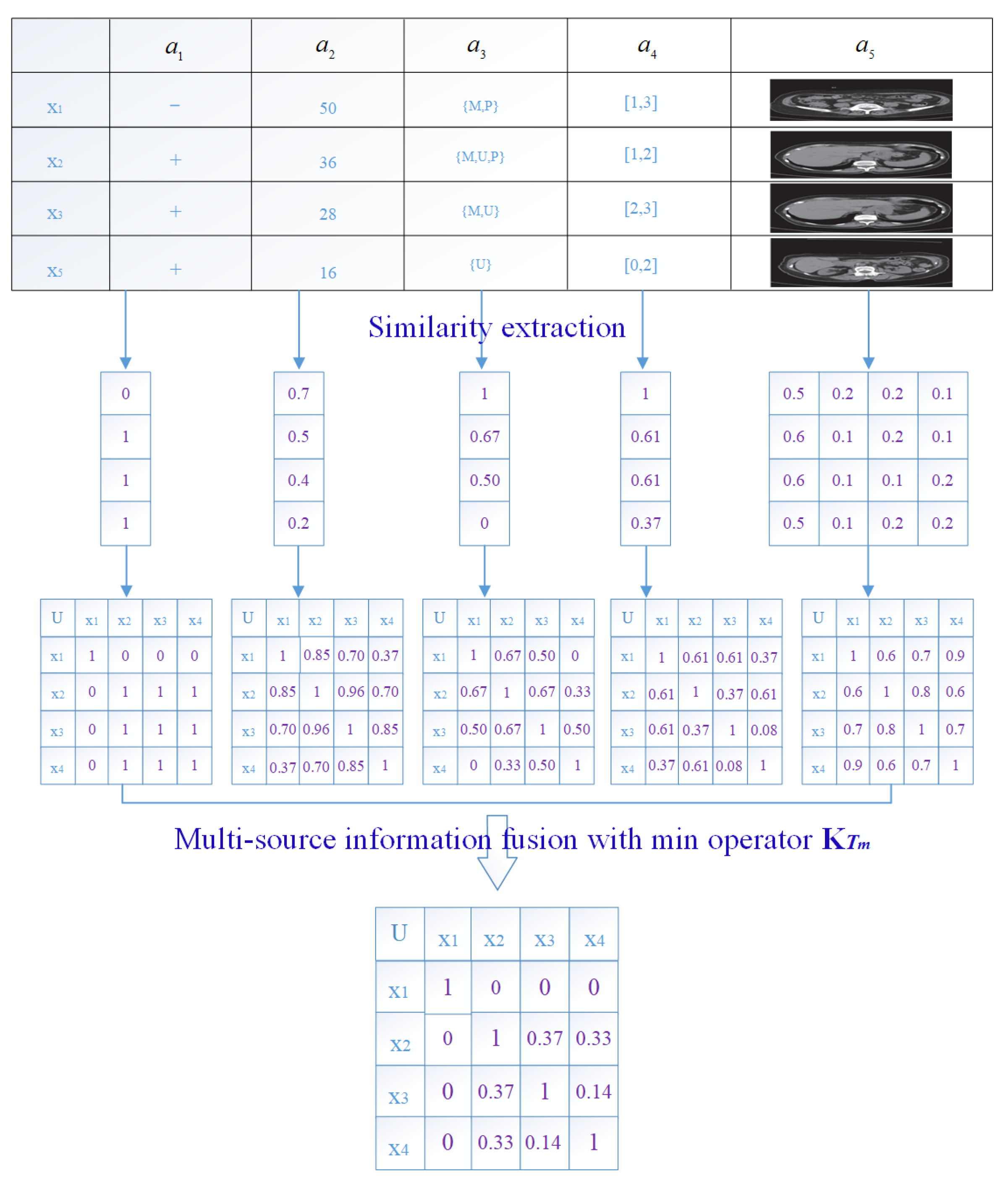

Example 3. By Example 2, a fuzzy T-equivalence relation with respect to the min operator, , is denoted by . Then, for any , let . By Definition 15, , . Assume that . We have and . , for each , we have:

; ;

; .

From Example 3, consists of the union of these four fuzzy granularities (, , , and ), i.e.,

Definition 19. Assume that is an MsMIS. Given , if is a fuzzy T-equivalence relation on U, for any and , then , where is referred to as the θ-lower approximation of X and is referred to as the σ-upper approximation of X. We call () granular -fuzzy rough sets.

Theorem 3. Assume that is an MsMIS. Given , if is a fuzzy T-equivalence relation on U, for any , then

(1) ;

(2) .

Proof of Theorem 3. (1) For .

(2) For

□
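For comparison, the sketch below computes the standard FRS θ-lower and σ-upper approximations, (R_θ X)(x) = inf_y θ(R(x, y), X(y)) and (R^σ X)(x) = sup_y σ(N(R(x, y)), X(y)), assuming θ is the Gödel implicator, σ is its co-implicator dual under N(a) = 1 - a, and that these are the pointwise forms Theorem 3 relates to Definition 19. It also checks the duality R^σ X = N(R_θ(N X)) and, since R is reflexive, the inclusions R_θ X ⊆ X ⊆ R^σ X.

```python
def theta(a: float, b: float) -> float:
    """Goedel R-implicator of min: 1 if a <= b else b."""
    return 1.0 if a <= b else b

def sigma(a: float, b: float) -> float:
    """Co-implicator dual to theta under N(a) = 1 - a: 0 if b <= a else b."""
    return 0.0 if b <= a else b

def theta_lower(R, X):
    """(R_theta X)(x) = inf_y theta(R(x, y), X(y))."""
    n = len(X)
    return [min(theta(R[x][y], X[y]) for y in range(n)) for x in range(n)]

def sigma_upper(R, X):
    """(R^sigma X)(x) = sup_y sigma(N(R(x, y)), X(y))."""
    n = len(X)
    return [max(sigma(1.0 - R[x][y], X[y]) for y in range(n)) for x in range(n)]

R = [[1.0, 0.8, 0.4],
     [0.8, 1.0, 0.4],
     [0.4, 0.4, 1.0]]
X = [0.9, 0.6, 0.7]

low, up = theta_lower(R, X), sigma_upper(R, X)
# Duality check: R^sigma X = N(R_theta(N X)), up to float rounding.
dual = [1.0 - v for v in theta_lower(R, [1.0 - v for v in X])]
print(low, up, dual)
```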

4.3. Continuation of the Classic Attribute Reduction in the FRS Model

Definition 20. Let be an MsMIS. Given , , and is the fuzzy T-equivalence relation in P calculated by , for any , the fuzzy positive region of D w.r.t. P is defined by , where .

Definition 21. Let be an MsMIS. Given , and is the fuzzy T-equivalence relation in P calculated by , for any , the quality of approximating classification is denoted as , where and .

The fuzzy approximation quality is a fuzzy extension of the Pawlak rough set approximation quality. Since the lower approximation reflects the degree to which a sample necessarily belongs to its decision class in the fuzzy approximation space, the fuzzy approximation quality reflects the average degree to which samples necessarily belong to their decision classes in the fuzzy approximation space induced by the features. The larger this value, the more certain the classification in the fuzzy approximation space, the stronger the ability to approximate multiple decisions, and the greater the dependency of the decision on the attribute subset. Therefore, the approximation quality is also referred to as the degree of dependence of the decision on the condition attributes.
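The "average degree" reading of the approximation quality can be sketched as follows. This assumes the common forms POS_P(D)(x) = sup_k (R_P D_k)(x) over crisp decision classes D_k and γ_P(D) = Σ_x POS_P(D)(x) / |U|, with the lower approximation computed through an R-implicator. The implicator choice, the relation and the labels are illustrative assumptions. With the Gödel implicator and crisp classes the positive region is itself crisp; the Łukasiewicz implicator yields graded memberships.

```python
def godel(a: float, b: float) -> float:
    """R-implicator of min: 1 if a <= b else b."""
    return 1.0 if a <= b else b

def lukasiewicz(a: float, b: float) -> float:
    """R-implicator of the Lukasiewicz t-norm: min(1, 1 - a + b)."""
    return min(1.0, 1.0 - a + b)

def lower(R, X, imp):
    """(R X)(x) = inf_y imp(R(x, y), X(y))."""
    n = len(X)
    return [min(imp(R[x][y], X[y]) for y in range(n)) for x in range(n)]

def positive_region(R, labels, imp):
    """POS(x) = sup over crisp decision classes D_k of (R D_k)(x)."""
    n = len(labels)
    pos = [0.0] * n
    for k in sorted(set(labels)):
        d_k = [1.0 if labels[y] == k else 0.0 for y in range(n)]
        pos = [max(p, v) for p, v in zip(pos, lower(R, d_k, imp))]
    return pos

def approximation_quality(R, labels, imp):
    """gamma = (sum_x POS(x)) / |U|: the average positive-region membership."""
    pos = positive_region(R, labels, imp)
    return sum(pos) / len(pos)

R = [[1.0, 0.8, 0.0, 0.3],
     [0.8, 1.0, 0.0, 0.3],
     [0.0, 0.0, 1.0, 0.0],
     [0.3, 0.3, 0.0, 1.0]]
labels = [0, 0, 1, 1]
print(positive_region(R, labels, godel), approximation_quality(R, labels, godel))
print(positive_region(R, labels, lukasiewicz), approximation_quality(R, labels, lukasiewicz))
```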

Theorem 4. Let be an MsMIS. Given , . and are the fuzzy T-equivalence relations calculated by the kernel fusion function in feature spaces P and Q, respectively. For any , we have

(1) ;

(2) ;

(3) ;

(4) ;

(5) .

Proof of Theorem 4. (1) Obvious.

(2) Let and . Since , then . We have , and . Hence, . The same argument applies to the other T-norms. Hence, for any , we can easily obtain and .

(3) See .

(4) According to Definition 20 and , the proof follows from the monotonicity of the lower approximation.

(5) According to Definition 21 and , the proof is straightforward. □

Theorem 4 shows that the approximation quality is monotonically non-decreasing as attributes are added. Adding attributes can only refine the granulation structure of the approximation space, never coarsen it, so the approximation ability cannot decrease, and the approximation of the classification decision may become more accurate.
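The monotonicity in Theorem 4 can be observed on a toy data set. Because the kernel fusion function is not reproduced here, the sketch substitutes a hypothetical stand-in: per-attribute relations R_a(x, y) = 1 - |a(x) - a(y)| on attributes normalised to [0, 1], fused by taking the minimum over the attributes in P. Such relations are Łukasiewicz-transitive, so the Łukasiewicz implicator is used. The data and labels are made up.

```python
from itertools import combinations

def luk_imp(a: float, b: float) -> float:
    """Lukasiewicz R-implicator: min(1, 1 - a + b)."""
    return min(1.0, 1.0 - a + b)

def relation(data, attrs):
    """Hypothetical fusion: R_P(x, y) = min over a in P of 1 - |a(x) - a(y)|.
    Assumes every attribute is already normalised to [0, 1]."""
    n = len(data)
    return [[min((1.0 - abs(data[x][a] - data[y][a]) for a in attrs), default=1.0)
             for y in range(n)] for x in range(n)]

def quality(data, labels, attrs):
    """gamma_P(D): average positive-region membership over all samples."""
    R = relation(data, attrs)
    n = len(labels)
    # R is reflexive, so only x's own decision class contributes to POS(x):
    # POS(x) = inf over y with a different label of I(R(x, y), 0).
    pos = [min((luk_imp(R[x][y], 0.0) for y in range(n) if labels[y] != labels[x]),
               default=1.0) for x in range(n)]
    return sum(pos) / n

data = [[0.1, 0.9], [0.2, 0.1], [0.8, 0.8], [0.9, 0.2]]
labels = [0, 0, 1, 1]

subsets = [s for k in range(3) for s in combinations(range(2), k)]
for P in subsets:
    print(P, round(quality(data, labels, P), 3))

# Theorem 4 (monotonicity): P subset of Q implies gamma_P(D) <= gamma_Q(D).
monotone = all(quality(data, labels, P) <= quality(data, labels, Q) + 1e-12
               for P in subsets for Q in subsets if set(P) <= set(Q))
print(monotone)
```

Min-fusion makes R_Q ≤ R_P whenever P ⊆ Q, which is exactly the property the proof of Theorem 4 uses; other fusion operators with that property would behave the same way.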

Definition 22. Let be an MsMIS, and . For any , and , if , then a is redundant in P w.r.t. D; otherwise, a is indispensable.

Definition 23. Let be an MsMIS, and . For any , and , P is a relative reduct to D if it satisfies:

(1) ;

(2) , a is indispensable in P to decision attribute D.

Definitions 22 and 23 follow the classical attribute reduction approach. Its advantage is that the indispensability of each attribute is determined through the approximation quality. It continues the classical rough-set approach to attribute reduction and applies equally within FRS models.
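One simple way to search for a relative reduct in the sense of Definitions 22 and 23 is backward elimination: start from all condition attributes and drop an attribute whenever removing it keeps γ at its full-set value. By the monotonicity of Theorem 4, every attribute that survives one pass is indispensable. This is a sketch of a generic search strategy, not necessarily the procedure used in this paper. It reuses the hypothetical min-fused relation 1 - |a(x) - a(y)| and the Łukasiewicz implicator; attribute 2 in the toy data duplicates attribute 0, so one of them should be redundant.

```python
def luk_imp(a: float, b: float) -> float:
    """Lukasiewicz R-implicator: min(1, 1 - a + b)."""
    return min(1.0, 1.0 - a + b)

def quality(data, labels, attrs):
    """gamma_P(D) with the hypothetical min-fused relation 1 - |a(x) - a(y)|."""
    n = len(labels)

    def R(x, y):
        return min((1.0 - abs(data[x][a] - data[y][a]) for a in attrs), default=1.0)

    pos = [min((luk_imp(R(x, y), 0.0) for y in range(n) if labels[y] != labels[x]),
               default=1.0) for x in range(n)]
    return sum(pos) / n

def backward_reduct(data, labels, tol=1e-9):
    """Drop attribute a whenever removing it keeps gamma at gamma_C(D) (Definition 22);
    by monotonicity every attribute left at the end is indispensable (Definition 23)."""
    full = list(range(len(data[0])))
    target = quality(data, labels, full)
    P = full[:]
    for a in full:
        trial = [b for b in P if b != a]
        if quality(data, labels, trial) >= target - tol:
            P = trial  # a is redundant with respect to the current P
    return P

data = [[0.1, 0.9, 0.1], [0.2, 0.1, 0.2], [0.8, 0.8, 0.8], [0.9, 0.2, 0.9]]
labels = [0, 0, 1, 1]
P = backward_reduct(data, labels)
print(P)  # [1, 2]
print(all(quality(data, labels, [b for b in P if b != a])
          < quality(data, labels, P) - 1e-9 for a in P))  # True
```

The result depends on the order in which attributes are tried: scanning attribute 2 before attribute 0 would keep attribute 0 instead, which is why reducts are generally not unique.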