Large Language Model-Assisted Reinforcement Learning for Hybrid Disassembly Line Problem

Abstract

1. Introduction

- (1)

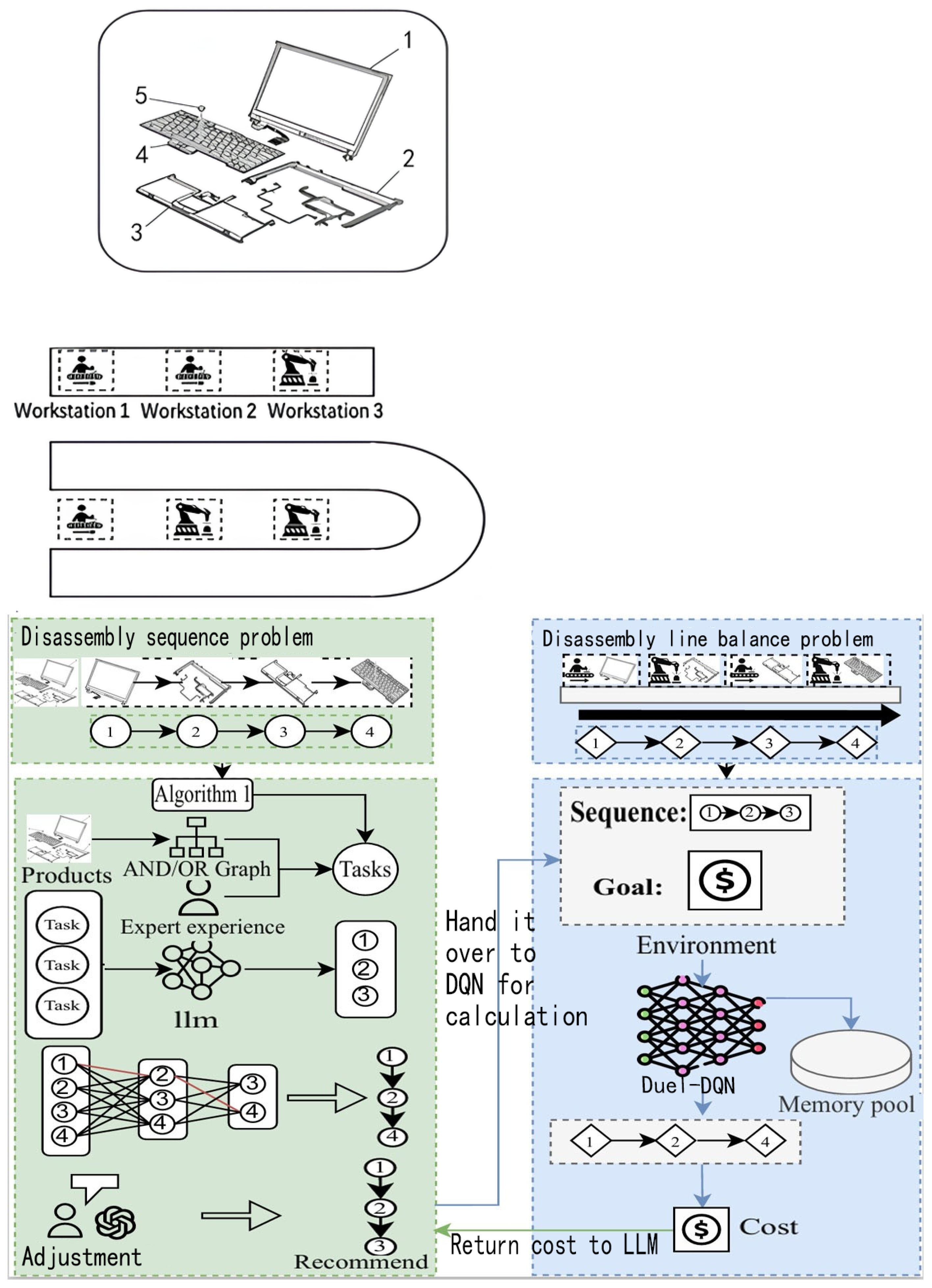

- It proposes a hybrid disassembly line environment that combines both linear and U-shaped disassembly lines to enhance compatibility with workstation postures, optimizing profitability through a mathematical framework for the HDLBP.

- (2)

- It recommends a strategy that decomposes the HDLBP into the DSP and the DLBP, applying the LLM and Duel-DQN algorithms, respectively.

- (3)

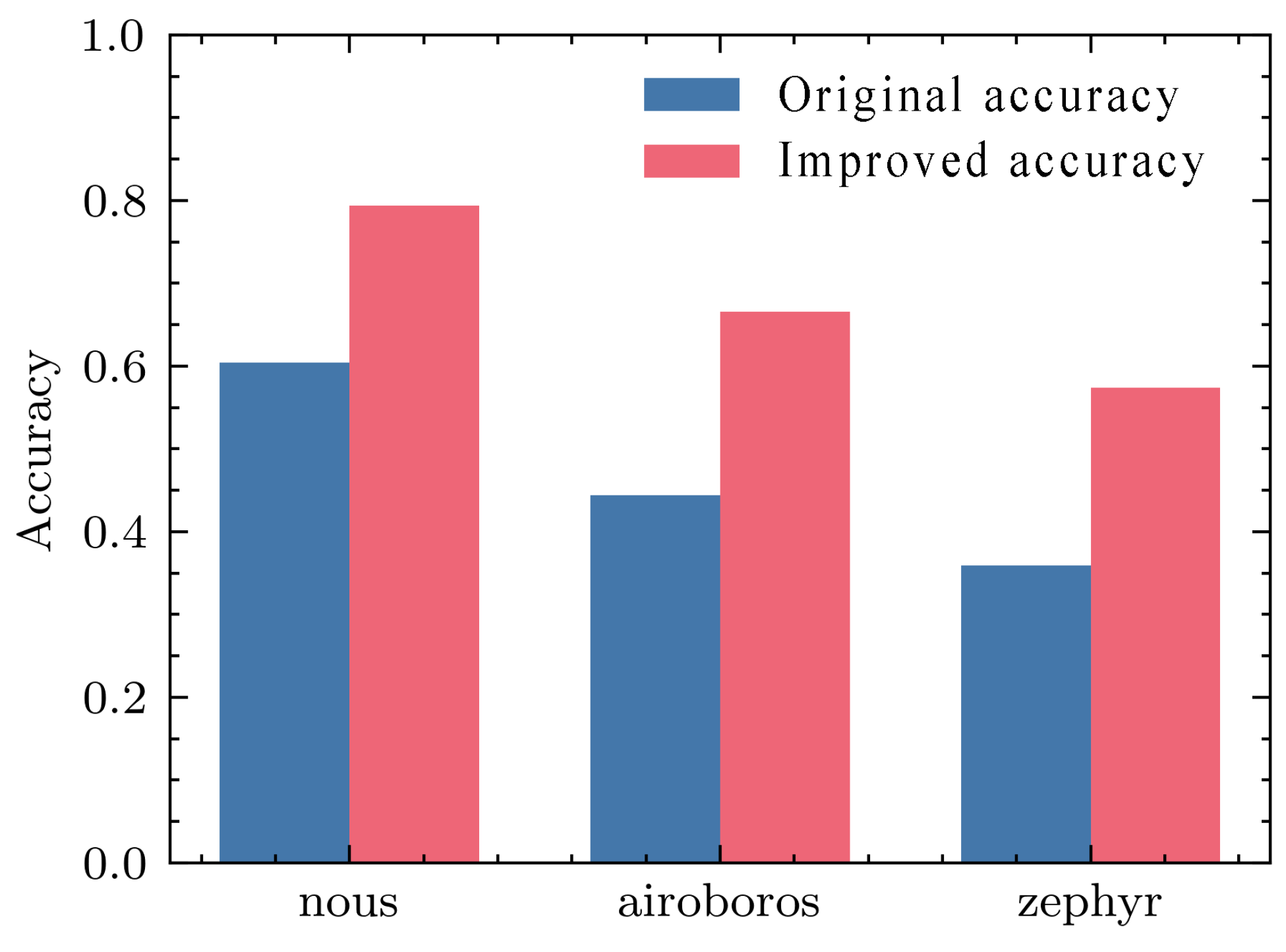

- It identifies the optimal LLM model through comparisons with various alternatives, validating its feasibility and improvement rates against non-LLM algorithms.

2. Problem Description

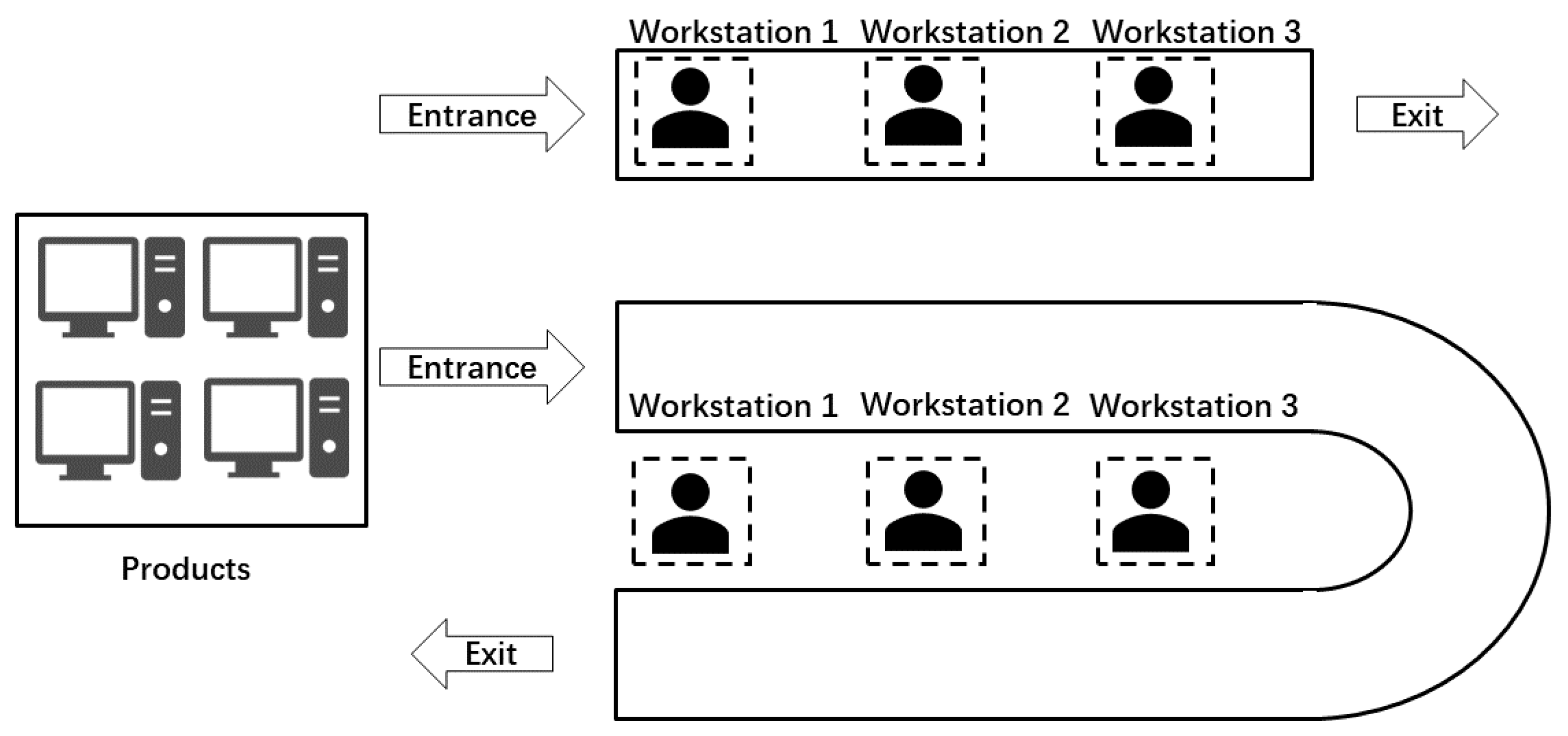

2.1. Problem Statement

- (1)

- Product parameters are well defined, including the cost associated with the product’s disassembly, resultant cost, and the AND/OR graph representation.

- (2)

- The hybrid disassembly line bifurcates into two distinct configurations: a linear line and a U-shaped line.

- (3)

- Each workstation within the system is allocated a predetermined fixed cycle time.

- (4)

- The requisite disassembly posture for each subassembly within the product is established and aligns with the disassembly posture allocated to the workstation.

- (5)

- The configuration of the posture remains invariant, as do the disassembly posts allocated to the product.

- (6)

- Parameters pertinent to the disassembly line are delineated, including, but not limited to, the operational cost of the workstation and the cost associated with initiating the disassembly line.

- (7)

- To the greatest extent feasible, the distribution of tasks among the workstations on the disassembly line should be equitable to ensure uniform labor intensity among workers.

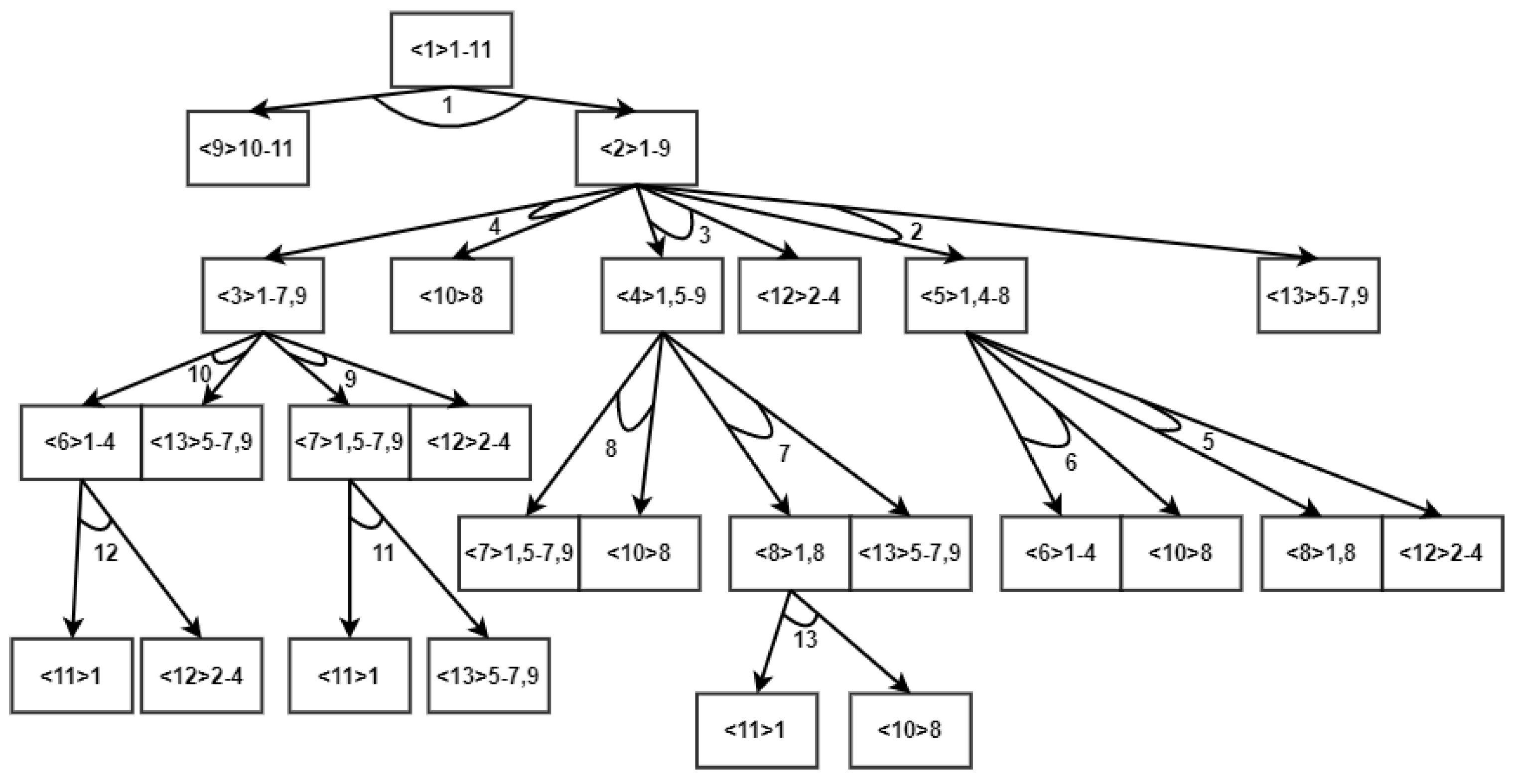

2.2. AND/OR Graph

2.3. Mathematical Model

2.3.1. Disassembly Matrix

2.3.2. Precedence Matrix

2.3.3. Incidence Matrix

2.3.4. Constraint Model

- (1)

- Notations:

| Set of all end-of-life products, = {1, 2, …, P}. | |

| Set of all components/parts in product p, . | |

| Set of all tasks in product p, . | |

| Set of all disassembly lines, | |

| Set of all U-shaped workstations, | |

| Set of edges of U-shaped workstations, | |

| Set of the relationship between components i and task j in product p. | |

| Set of tasks that conflict with task j in product p. | |

| Set of immediate tasks for task j in product p. | |

| P | Number of production. |

| Number of components/parts in production p. | |

| Number of tasks in production p. | |

| Number of linear workstations | |

| Number of U-shaped workstations. | |

| Time to execute the j-th task of the p-th product. | |

| Unit time cost of executing the j-th task of the p-th product. | |

| Unit time cost of opening of the l-th disassembly line. | |

| Fixed cost of opening of the w-th workstation in l-th | |

| Posture to execute the j-th task of the p-th product. | |

| Configuration of opening of the w-th workstation in the l-th disassembly line. |

- (2)

- Decision variables

- (3)

- Minimize Cost Model (CS Model):

- (4)

- Linear Disassembly Line Constraint:

- (5)

- U-shaped Disassembly Line Constraint:

- (6)

- Hybrid Disassembly Line Constraint:

3. Proposed Algorithm

3.1. Environment

3.2. Large Language Model

3.2.1. Prompt Engineering

3.2.2. Zero-Shot Prompting

- The problem is a branch of the disassembly line balancing problem.

- The problem has two disassembly lines: one linear and one U-shaped.

- The problem requires solving for multiple products, each represented by its own AND/OR graph.

- The objectives that the LLM needs to accomplish.

3.2.3. Prompt Generation Algorithm

| Algorithm 1 Prompt Word Generation Algorithm |

|

Generate results:Q: Imagine you are in an environment similar to a disassembly line balancing problem. There are two disassembly lines: one linear and one U-shaped. There is one product: a PC.The PC has a total of 13 disassembly tasks: Task 1 initiates the disassembly process, followed by three options: Task 2, Task 3, or Task 4. If Task 2 is chosen, the next task is either Task 5 or Task 6. If Task 3 is chosen, the next task is either Task 7 or Task 8. If Task 4 is chosen, you can proceed to either Task 9 or Task 10. Task 7 leads to Task 13, Task 9 leads to Task 11, and Task 10 leads to Task 12.In the format “I Choose [x] for [product name] in [disassembly line name]”, where [x] is a disassembly sequence representing your choices. For example, [x] is [1, 2, 3, 4, 5].I will now assign the PC to the linear disassembly line. Please provide a possible disassembly plan for the product. Afterward, I will give you the cost of the plan, and you may adjust it to minimize cost as much as possible.A: I Choose [1,3,7,13] for PC in linear disassembly line.

| Algorithm 2 Disassembly sequence extraction algorithm |

|

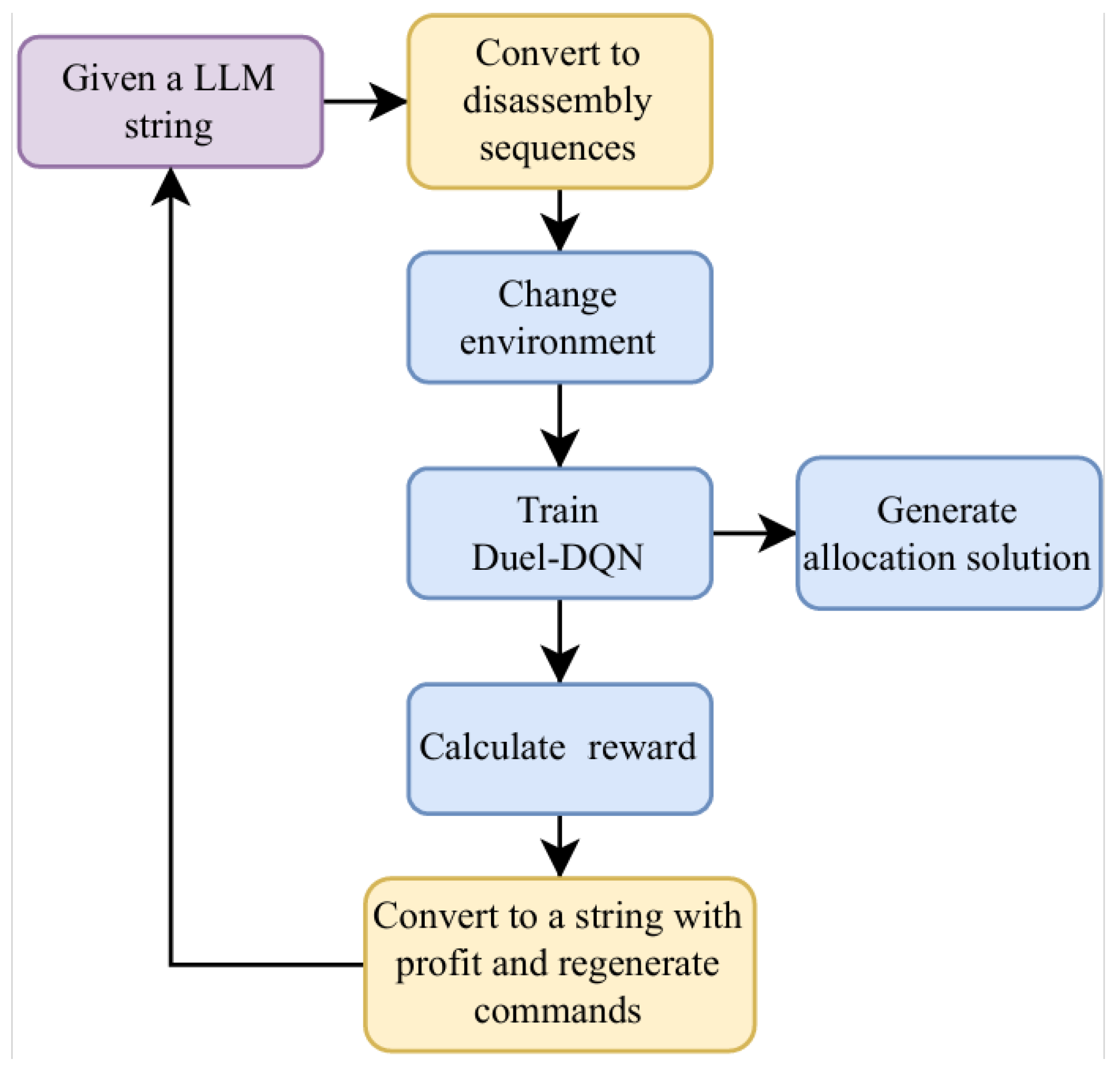

3.2.4. Combining with Duel-DQN

3.3. Duel-DQN Algorithm

3.3.1. State Design

3.3.2. Action Design

3.3.3. Reward Design

3.3.4. Algorithm Process

| Algorithm 3 Train algorithm |

|

4. Experiment and Results

4.1. Test Instances

4.2. Hyperparameter Tuning

4.3. Adjustment of Prompt Words

For Nous-Capybara-7:Q: What are the disassembly steps for the products PC and washing machine?Q: Can you find a disassembly sequence for the product PC and a disassembly sequence for the product washing machine?A: I apologize …Product PC: 1. Initiate the disassembly process. 2. …From Task 9, choose between Task 10 and Task 12 …Q: What are the disassembly steps for the product PC?Q: What are the disassembly steps for the product washing machine?Q: Provide the disassembly line information.Q: Please choose one for me …A: I choose [1, 3, 7, 11] for PC and choose [1, 3, 8, 9] for washing machine.Q: OK, please make another choice.A: I choose [1, 2, 5, 10, 11] for the washing machine and [1, 4, 9, 12] for the PC.

For Zephyr-7B-:Q: I need you to provide …A: For product PC …plan could be [1, 4, 9] …For product washing machine, a possible disassembly plan could be [1, 2, 5] …Q: Now I have 2 disassembly lines, please choose one for me, just say “I choose x for PC in line 1 and choose y for washing machine in line 2”. For example, you can say “I choose [1, 3, 7, 11] for PC in line 1 and choose [1, 3, 8, 9] for washing machine in line 2”. You need to choose tasks until you can’t continue executing them. For example …A: …choose Line 1 with the sequence [1, 3, 7, 11] for product PC. On the other hand …choose Line 2 with the sequences [1, 3, 8, 9] for product washing machine …

For Airboros-13b-gpt4-1.4:Q: Task 1 initiates the disassembly process.A: According to the information provided …Q: After Task 1, you have three options: proceed to Task 2, Task 3, or Task 4.…Q: Please generate a sequence of tasks in the format [x] with any number of tasks. x represents the sequence of PC.A: [1, 3, 7, 13].Q: I have disassembly line 1 and disassembly line 2, please choose one for PC. You can say “disassembly line x”A: disassembly line 1.

| Algorithm 4 Correction Algorithm |

|

4.4. Solving Process

For LLMQ: environment description:…Q: In the format “I Choose [x] for [product name] in [disassembly line name]”, where [x] is a disassembly sequence representing your choices. For example, [x] is [1, 2, 3, 4, 5].A: I choose [1, 2, 6] for PC in linear disassembly.

For LLMQ: The sequence in linear disassembly line [1, 2, 6]’s cost is 120, please choose another one.A: I choose [1, 3, 7, 13] for PC in linear disassembly.

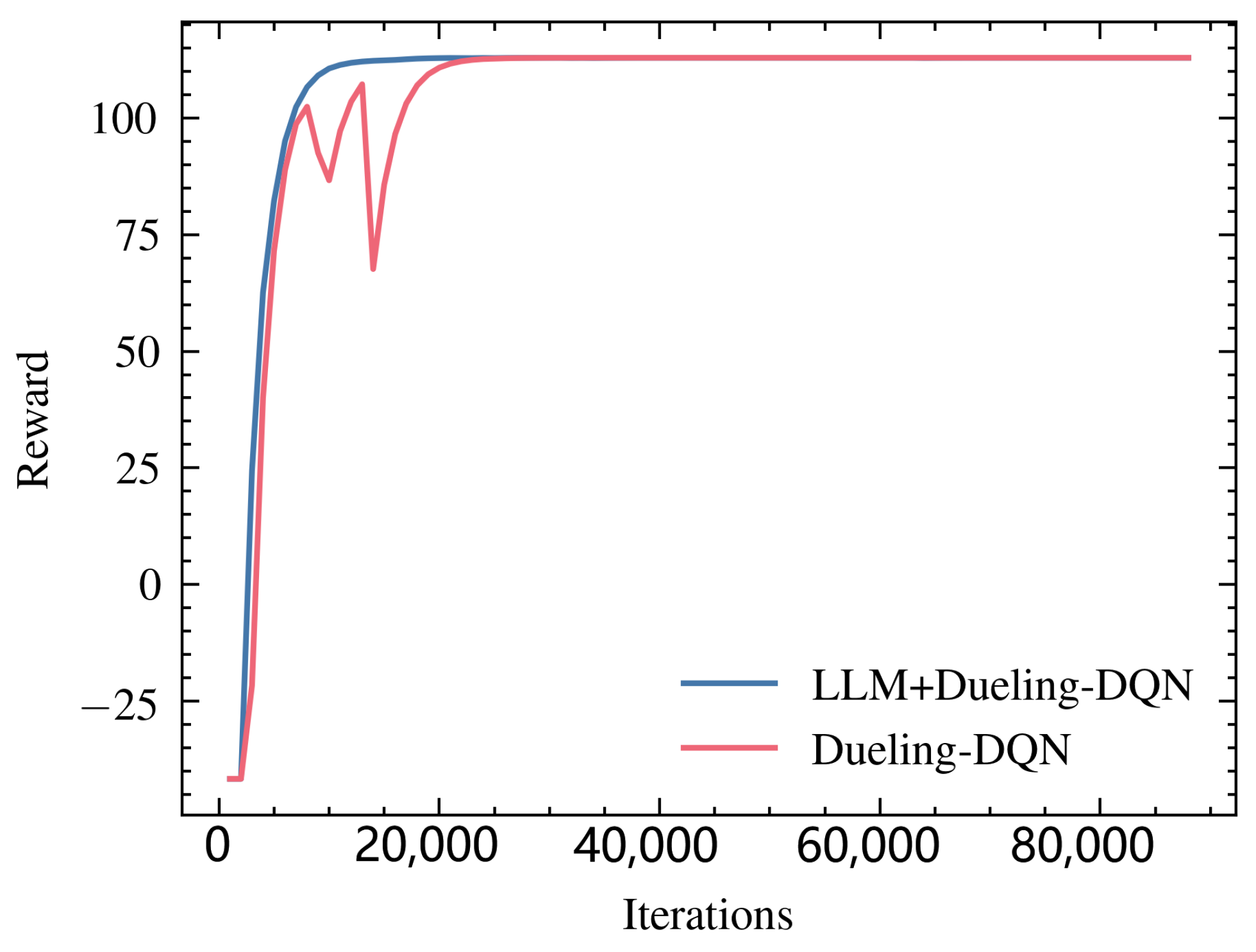

4.5. Efficiency Comparison

4.6. Feasibility Analysis

5. Conclusions and Future Research

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| LLM | Large Language Mode |

| DQN | Deep Q-Network |

| Duel-DQN | Dueling Deep Q-Network |

| DSP | Disassembly Sequence Problem |

| DLBP | Disassembly Line Balancing Problem |

| HDLBP | Hybrid Disassembly Line Balancing Problem |

References

- Guo, X.W.; Zhou, M.C.; Abusorrah, A.; Alsokhiry, F.; Sedraoui, K. Disassembly sequence planning: A survey. IEEE/CAA J. Autom. Sinica 2021, 8, 1308–1324. [Google Scholar] [CrossRef]

- Güler, E.; Kalayci, C.B.; Ilgin, M.A.; Özceylan, E.; Güngör, A. Advances in partial disassembly line balancing: A state-of-the-art review. Comput. Ind. Eng. 2024, 188, 109898. [Google Scholar] [CrossRef]

- Wang, K.; Li, X.; Gao, L.; Garg, A. Partial disassembly line balancing for energy consumption and profit under uncertainty. Robot. Comput.-Integr. Manuf. 2019, 59, 235–251. [Google Scholar] [CrossRef]

- Li, Z.; Janardhanan, M.N. Modelling and solving profit-oriented u- shaped partial disassembly line balancing problem. Expert Syst. Appl. 2021, 183, 115431. [Google Scholar] [CrossRef]

- Tang, Y. Learning-based disassembly process planner for uncertainty management. IEEE Trans. Syst. Man-Cybern.-Part Syst. Humans 2008, 39, 134–143. [Google Scholar] [CrossRef]

- Zheng, F.; He, J.; Chu, F.; Liu, M. A new distribution-free model for disassembly line balancing problem with stochastic task processing times. Int. J. Prod. Res. 2018, 56, 7341–7353. [Google Scholar] [CrossRef]

- Tuncel, E.; Zeid, A.; Kamarthi, S. Solving large scale disassembly line balancing problem with uncertainty using reinforcement learning. J. Intell. Manuf. 2014, 25, 647–659. [Google Scholar] [CrossRef]

- Liu, Q.; Liu, Z.; Xu, W.; Tang, Q.; Pham, D.T. Human-robot collaboration in disassembly for sustainable manufacturing. Int. J. Prod. Res. 2019, 57, 4027–4044. [Google Scholar]

- Tang, Y.; Zhou, M.; Gao, M. Fuzzy-petri-net-based disassembly plan- ning considering human factors. IEEE Trans. Syst. Man-Cybern.-Part Syst. Hum. 2006, 36, 718–726. [Google Scholar] [CrossRef]

- Li, K.; Liu, Q.; Xu, W.; Liu, J.; Zhou, Z.; Feng, H. Sequence planning considering human fatigue for human-robot collaboration in disassembly. Procedia CIRP 2019, 83, 95–104. [Google Scholar] [CrossRef]

- Guo, X.; Wei, T.; Wang, J.; Liu, S.; Qin, S.; Qi, L. Multiobjective u-shaped disassembly line balancing problem considering human fatigue index and an efficient solution. IEEE Trans.-Comput. Soc. Syst. 2023, 10, 2061–2073. [Google Scholar] [CrossRef]

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A survey of large language models. arXiv 2023, arXiv:2303.18223. [Google Scholar]

- Braberman, V.A.; Bonomo-Braberman, F.; Charalambous, Y.; Colonna, J.G.; Cordeiro, L.C.; de Freitas, R. Tasks People Prompt: A Taxonomy of LLM Downstream Tasks in Software Verification and Falsification Approaches. arXiv 2024, arXiv:2404.09384. [Google Scholar]

- Liu, S.; Chen, C.; Qu, X.; Tang, K.; Ong, Y.-S. Large language models as evolutionary optimizers. arXiv 2023, arXiv:2310.19046. [Google Scholar]

- Guo, X.; Bi, Z.; Wang, J.; Qin, S.; Liu, S.; Qi, L. Reinforcement learning for disassembly system optimization problems: A survey. Int. J. Netw. Dyn. Intell. 2023, 2, 1–14. [Google Scholar] [CrossRef]

- Mete, S.; Serin, F. A reinforcement learning approach for disassembly line balancing problem. In Proceedings of the 2021 International Conference on Information Technology (ICIT), Amman, Jordan, 14–15 July 2021; pp. 424–427. [Google Scholar] [CrossRef]

- Zhang, R.; Lv, Q.; Li, J.; Bao, J.; Liu, T.; Liu, S. A reinforcement learning method for human-robot collaboration in assembly tasks. Robot.-Comput.-Integr. Manuf. 2022, 73, 102227. [Google Scholar] [CrossRef]

- Mei, K.; Fang, Y. Multi-robotic disassembly line balancing using deep reinforcement learning. In Proceedings of the International Manufacturing Science and Engineering Conference, Virtual, 21–25 June 2021; American Society of Mechanical Engineers (ASME): New York, NY, USA, 2021; Volume 85079. [Google Scholar]

- Liu, Z.; Liu, Q.; Wang, L.; Xu, W.; Zhou, Z. Task-level decision- making for dynamic and stochastic human-robot collaboration based on dual agents deep reinforcement learning. Int. J. Adv. Manuf. Technol. 2021, 115, 3533–3552. [Google Scholar] [CrossRef]

- Zhao, X.; Li, C.; Tang, Y.; Cui, J. Reinforcement learning-based selec- tive disassembly sequence planning for the end-of-life products with structure uncertainty. IEEE Robot. Autom. Lett. 2021, 6, 7807–7814. [Google Scholar] [CrossRef]

- Wang, Z.; Schaul, T.; Hessel, M.; Hasselt, H.; Lanctot, M.; Freitas, N. Dueling network architectures for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, PMLR, New York, NY, USA, 19–24 June 2016; pp. 1995–2003. [Google Scholar]

- Tian, G.D.; Ren, Y.P.; Feng, Y.X.; Zhou, M.C.; Zhang, H.H.; Tan, J.R. Modeling and planning for dual-objective selective disassembly using and/or graph and discrete artificial bee colony. IEEE Trans. Ind. Inform. 2018, 15, 2456–2468. [Google Scholar] [CrossRef]

- Wang, J.; Liu, Z.; Zhao, L.; Wu, Z.; Ma, C.; Yu, S.; Dai, H.; Yang, Q.; Liu, Y.; Zhang, S.; et al. Review of large vision models and visual prompt engineering. Meta-Radiology 2023, 1, 100047. [Google Scholar] [CrossRef]

- Li, Y. A practical survey on zero-shot prompt design for in-context learning. arXiv 2023, arXiv:2309.13205. [Google Scholar]

- Tang, Y.; Zhou, M.C. A systematic approach to design and operation of disassembly lines. IEEE Trans. Autom. Sci. Eng. 2006, 3, 324–329. [Google Scholar] [CrossRef]

- Ilgin, M.A.; Gupta, S.M. Recovery of sensor embedded washing machines using a multi-kanban controlled disassembly line. Robot.-Comput.-Integr. Manuf. 2011, 27, 318–334. [Google Scholar] [CrossRef]

- Wang, K.P.; Li, X.Y.; Gao, L. A multi-objective discrete flower pollination algorithm for stochastic two-sided partial disassembly line balancing problem. Comput. Ind. Eng. 2019, 130, 634–649. [Google Scholar] [CrossRef]

- Wang, K.; Li, X.; Gao, L.; Li, P.; Sutherland, J.W. A discrete artificial bee colony algorithm for multiobjective disassembly line balancing of end-of-life products. IEEE Trans. Cybern. 2022, 52, 7415–7426. [Google Scholar] [CrossRef] [PubMed]

- Tunstall, L.; Beeching, E.; Lambert, N.; Rajani, N.; Rasul, K.; Belkada, Y.; Huang, S.; von Werra, L.; Fourrier, C.; Habib, N.; et al. Zephyr: Direct distillation of lm alignment. arXiv 2023, arXiv:2310.16944. [Google Scholar]

- Du, Z.; Qian, Y.; Liu, X.; Ding, M.; Qiu, J.; Yang, Z.; Tang, J. Glm: Gen- eral language model pretraining with autoregressive blank infilling. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; Volume 1, pp. 320–335. [Google Scholar]

- Fu, Y.P.; Ma, X.M.; Gao, K.Z.; Li, Z.W.; Dong, H.Y. Multi-objective home health care routing and scheduling with sharing service via a problem-specific knowledge based artificial bee colony algorithm. IEEE Trans. Intell. Transp. Syst. 2024, 25, 1706–1719. [Google Scholar] [CrossRef]

- Zhao, Z.; Bian, Z.; Liang, J.; Liu, S.; Zhou, M. Scheduling and Logistics Optimization for Batch Manufacturing Processes With Temperature Constraints and Alternative Thermal Devices. IEEE Trans. Ind. Inform. 2024, 20, 11930–11939. [Google Scholar] [CrossRef]

- Zhao, Z.; Li, X.; Liu, S.; Zhou, M.; Yang, X. Multi-Mobile-Robot Transport and Production Integrated System Optimization. IEEE Trans. Autom. Sci. Eng. 2024, 1–12. [Google Scholar] [CrossRef]

- Zhao, Z.; Li, S.; Liu, S.; Zhou, M.; Li, X.; Yang, X. Lexicographic Dual-Objective Path Finding in Multi-Agent Systems. IEEE Trans. Autom. Sci. Eng. 2024, 1–11. [Google Scholar] [CrossRef]

| Case ID | Product | |||

|---|---|---|---|---|

| PC | Washing Machine | Radio | Mechanical Module | |

| 1 | 1 | 1 | 1 | 1 |

| 2 | 2 | 2 | 3 | 3 |

| 3 | 5 | 5 | 6 | 5 |

| 4 | 10 | 10 | 10 | 10 |

| Temperature | Does It Meet the Expected Answer? | Notes |

|---|---|---|

| 0.1 | Generally Yes | Always execute Task 13 after executing Task 12 |

| 0.2 | No | Sometimes LLM forgets ‘or’ relationships |

| 0.3 | Yes | Need to inform the end of the task |

| 0.4 | Yes | Need to further emphasize task relationships |

| 0.5 | Yes | Need multiple rounds of dialogue |

| 0.7 | No | Unable to generate fixed format answer |

| 1.0 | No | Excessive randomness generated |

| >1.0 | No | Excessive randomness generated |

| Total Complete Disassembly Seq. | Number of Seq. Used | |

|---|---|---|

| Duel-DQN | LLM | |

| 6 | 6 | 6 |

| 60 | 42 | 60 |

| 360 | 141 | 104 |

| 600 | 279 | 127 |

| Case ID | Cost | ||

|---|---|---|---|

| CPLEX | Duel-DQN | Duel-DQN with LLM | |

| 1 | 292 | 366 | 315 |

| 2 | 853 | 1030 | 926 |

| 3 | 1304 | 1316 | 1308 |

| 4 | 1948 | 1840 | 1663 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guo, X.; Jiao, C.; Ji, P.; Wang, J.; Qin, S.; Hu, B.; Qi, L.; Lang, X. Large Language Model-Assisted Reinforcement Learning for Hybrid Disassembly Line Problem. Mathematics 2024, 12, 4000. https://doi.org/10.3390/math12244000

Guo X, Jiao C, Ji P, Wang J, Qin S, Hu B, Qi L, Lang X. Large Language Model-Assisted Reinforcement Learning for Hybrid Disassembly Line Problem. Mathematics. 2024; 12(24):4000. https://doi.org/10.3390/math12244000

Chicago/Turabian StyleGuo, Xiwang, Chi Jiao, Peng Ji, Jiacun Wang, Shujin Qin, Bin Hu, Liang Qi, and Xianming Lang. 2024. "Large Language Model-Assisted Reinforcement Learning for Hybrid Disassembly Line Problem" Mathematics 12, no. 24: 4000. https://doi.org/10.3390/math12244000

APA StyleGuo, X., Jiao, C., Ji, P., Wang, J., Qin, S., Hu, B., Qi, L., & Lang, X. (2024). Large Language Model-Assisted Reinforcement Learning for Hybrid Disassembly Line Problem. Mathematics, 12(24), 4000. https://doi.org/10.3390/math12244000