1. Introduction

In graph theory, the Laplacian matrix, also called the Kirchhoff matrix, is a matrix representation of a graph that has played a key role in many applications (see [1,2]). It can be viewed as a discrete analog of the Laplacian operator in multivariable calculus. In fact, it is a matrix form of the negative discrete Laplace operator on a graph approximating the negative continuous Laplacian obtained by the finite difference method. The Laplacian matrix also arises in the analysis of random walks and electrical networks on graphs [3] and, in particular, in the computation of resistance distances. Other application fields of Laplacian matrices include graph isomorphism problems, numerical methods for differential equations, physical chemistry, organic chemistry, biochemistry, computer science, and design of statistical experiments. Further references on these applications can be found in [4,5].

As far as we know, there is no study concerning accurate computations with Laplacian matrices. As for the conditioning of Laplacian matrices, we can mention [6]. In this paper, we present an efficient method to compute an LDU decomposition of the Laplacian matrix of a connected graph with high relative accuracy. The first application of this result is the computation of the number of spanning trees of a connected graph with high relative accuracy. The second application deals with the computation of the eigenvalues of a Laplacian matrix with high relative accuracy.

It is well known that the number of connected components of a graph coincides with the multiplicity of 0 as an eigenvalue of the Laplacian matrix and that the Laplacian matrix is a symmetric positive semidefinite matrix. In a connected graph, the second smallest eigenvalue of the Laplacian matrix provides the algebraic connectivity of the graph and has many important applications. So, it is convenient to accurately approximate this minimal positive eigenvalue of the Laplacian matrix. Furthermore, the lowest k eigenvalues and their corresponding eigenvectors have a direct application to data science in spectral clustering (see, for example, [7]). However, to date, the accurate computation of the eigenvalues of a matrix has only been obtained for matrices satisfying very restrictive conditions. We show in this paper that, for Laplacian matrices of connected graphs, their eigenvalues can be computed with an efficient method with high relative accuracy. This application of the minimal positive eigenvalue of the Laplacian matrix is an example of the application of the spectrum of the Laplacian matrix to the graph structure, which corresponds to the area of spectral graph theory [8]. Many other applications could be mentioned and, for them, our accurate and efficient method could be used.

High relative accuracy (HRA) is a very desirable property because, if an algorithm is performed to HRA in floating-point arithmetic, then the relative errors in the computations have the order of the unit round-off (or machine precision). In fact, a real value $x \neq 0$ is said to be computed to HRA whenever the computed $\tilde{x}$ satisfies

$$\frac{|x - \tilde{x}|}{|x|} < K u,$$

where $u$ is the unit round-off (or machine precision), and $K > 0$ is a constant that does not depend on the arithmetic precision. It is known that an algorithm computes with HRA when it only uses products, quotients, additions of numbers with the same sign, or subtractions of initial data (cf. [9]); that is, the only forbidden operation is the subtraction of numbers (which are not initial data) with the same sign. Up to now, HRA linear algebra computations have only been guaranteed for a few classes of matrices and using an adequate parametrization of the matrices. Among them, we can mention some subclasses of totally positive matrices (see, for instance, [10,11,12,13]) or matrices related to diagonal dominance (cf. [14,15,16]). In this last case, rank-revealing decompositions, which will be presented below, have played a crucial role.
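The effect of the forbidden operation can be seen in a small floating-point experiment. The following Python sketch is only an illustration (the value of `x` and the helper `relative_error` are assumptions made here, not taken from the paper): it evaluates the same quantity once through a subtraction of computed numbers with the same sign and once without any subtraction, and measures both relative errors against an exact rational reference.

```python
from fractions import Fraction


def relative_error(computed: float, exact: Fraction) -> float:
    """Relative error of a float against an exact rational reference."""
    return float(abs(Fraction(computed) - exact) / abs(exact))


# Hypothetical data: a tiny positive quantity, exactly representable in binary.
x = 2.0 ** -60

# Subtraction of computed (non-initial) data with the same sign:
# 1.0 + x rounds to 1.0, so the difference cancels completely.
with_cancellation = (1.0 + x) - 1.0

# Subtraction-free evaluation of the same mathematical quantity.
subtraction_free = x

exact = Fraction(x)
err_cancel = relative_error(with_cancellation, exact)
err_sf = relative_error(subtraction_free, exact)
```

In IEEE double precision, the first evaluation loses every significant digit, whereas the second one is exact.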

Given an $m \times n$ matrix $A$, let $X$ be $m \times r$, $D$ be $r \times r$, and $Y$ be $n \times r$ matrices. We say that $A = XDY^T$ is a rank-revealing decomposition (RRD) if $X$ and $Y$ are well conditioned, and $D$ is a nonsingular diagonal matrix. The interest in obtaining an RRD of a matrix comes from the fact that it can be used to compute its singular values (and so, its eigenvalues when it is symmetric) efficiently and with HRA using the algorithm introduced in Section 3 of [9]. Here, we present an efficient algorithm to obtain an RRD of a Laplacian matrix of a connected graph.

The paper is organized as follows: Section 2 presents the basic definitions, notations, and results. Section 3 provides the efficient HRA method to obtain an LDU decomposition of a Laplacian matrix of a connected graph, and we also include the corresponding algorithm in pseudocode. The main result is applied to compute the number of spanning trees of a connected graph with high relative accuracy, which is illustrated with an example from chemistry in Section 3.1. In Section 3.2, the main result is applied to the computation of the eigenvalues of a Laplacian matrix of a connected graph with high relative accuracy using an RRD of the matrix. The concept of the Laplacian matrix extends naturally to a graph with non-negative weights on the edges (cf. [2,17]). Section 4 extends the result of Section 3 to these graphs. Section 5 includes numerical examples to illustrate the accuracy of the proposed methods. Finally, Section 6 summarizes the main conclusions of the paper.

2. Basic Definitions, Notations, and Results

We say that a real matrix $A = (a_{ij})_{1 \le i,j \le n}$ is a Z-matrix if all its off-diagonal entries are nonpositive, i.e., $a_{ij} \le 0$ for all $i, j$ such that $i \neq j$.

We say that $A = (a_{ij})_{1 \le i,j \le n}$ is a (row) diagonally dominant (DD) matrix if

$$|a_{ii}| \ge \sum_{j \neq i} |a_{ij}|, \qquad i = 1, \dots, n,$$

and we say that $A$ is a column DD matrix if $A^T$ is a row DD matrix. Let us recall that the singular value decomposition (SVD) of a real $m \times n$ matrix $A$ is the factorization $A = U \Sigma V^T$, where $U$ and $V$ are orthogonal matrices, and $\Sigma$ is non-negative and diagonal. The diagonal entries of $\Sigma$ are the singular values of $A$. For the particular case that $A$ is symmetric positive semidefinite, the singular values are equal to the eigenvalues.

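Let us denote by $Q_{k,n}$ the set of strictly increasing sequences of $k$ integers chosen from $\{1, \dots, n\}$. Let $\alpha$ and $\beta$ be sequences of $Q_{k,n}$ and $Q_{l,n}$, respectively. Then, $A[\alpha|\beta]$ denotes the $k \times l$ submatrix of $A$ formed using the rows numbered by $\alpha$ and the columns numbered by $\beta$. If $\alpha = \beta$, we use the abbreviated notation $A[\alpha]$.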

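Given a simple graph $G = (V, E)$ with $n$ vertices $V = \{v_1, \dots, v_n\}$ and a set of edges $E$ connecting different pairs of nodes, we build the adjacency matrix $A_G$ of $G$ as

$$(A_G)_{ij} = \begin{cases} 1, & \text{if } \{v_i, v_j\} \in E, \\ 0, & \text{otherwise}. \end{cases}$$

The degree $\deg(v_i)$ is defined as the number of neighbors of the node $v_i$. The degree matrix $D_G$ is a diagonal matrix such that $(D_G)_{ii} = \deg(v_i)$. Then, the Laplacian matrix is defined as $L_G := D_G - A_G$. Hence, its entries $l_{ij}$ are given by

$$l_{ij} = \begin{cases} \deg(v_i), & \text{if } i = j, \\ -1, & \text{if } i \neq j \text{ and } \{v_i, v_j\} \in E, \\ 0, & \text{otherwise}. \end{cases}$$

This special structure of the Laplacian matrix translates into many interesting properties. The Laplacian spectra provide a lot of information about the graph and have many applications. For instance,

$$l_{ii} = -\sum_{j \neq i} l_{ij}, \qquad i = 1, \dots, n. \tag{3}$$

Hence, $L_G e = 0$, where $e = (1, \dots, 1)^T$, and so it has zero as an eigenvalue. Moreover, the multiplicity of $0$ as an eigenvalue shows the number of connected components of the graph. The second smallest eigenvalue, $\lambda_2$ (the first nonzero eigenvalue), is known as the Fiedler eigenvalue and gives information about the connectivity of the graph [8]. Moreover, the lowest $k$ eigenvalues and their corresponding eigenvectors have a direct application to data science in spectral clustering (see, for example, [7]).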
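As an illustration of these definitions, the following Python sketch builds the Laplacian matrix of a small graph from its edge list and checks that every row sums to zero. The function name `laplacian`, the 0-based vertex numbering, and the path graph used as input are assumptions made for this example.

```python
def laplacian(n, edges):
    """Return the Laplacian (degree matrix minus adjacency matrix) of a simple graph.

    Vertices are numbered 0..n-1 and `edges` is an iterable of pairs (i, j).
    """
    lap = [[0] * n for _ in range(n)]
    for i, j in edges:
        if i == j:
            raise ValueError("a simple graph has no loops")
        lap[i][j] = lap[j][i] = -1  # off-diagonal entries: -1 for each edge
    for i in range(n):
        # Diagonal entry = degree = minus the sum of the off-diagonal row entries.
        lap[i][i] = -sum(lap[i][j] for j in range(n) if j != i)
    return lap


# Path graph 0 - 1 - 2 (a hypothetical example).
path = laplacian(3, [(0, 1), (1, 2)])
row_sums = [sum(row) for row in path]
```

The zero row sums are exactly the property that the elimination in Section 3 exploits to avoid subtractions.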

In this article, we consider the computation of an LDU factorization of the Laplacian matrix to HRA. Then, we will show some applications of this factorization, such as the computation of the number of spanning trees of $G$, $\tau(G)$, and the derivation of a rank-revealing decomposition that can be used to obtain the eigenvalues of $L_G$ to HRA. In particular, property (3) will be key to achieving accurate computations with this class of matrices. Given a DD Z-matrix with non-negative diagonal entries, a parametrization that can be used to achieve accurate computations is given by its off-diagonal entries and the row sums. Taking these parameters as input, we can then use a modified version of Gaussian elimination to compute an LDU factorization with HRA that serves as an RRD. The RRD was recalled in the Introduction, where we also highlighted that it can be used to compute singular values efficiently and with HRA using the algorithm introduced in Section 3 of [9]. The RRD obtained in this paper uses unit triangular DD matrices. In order to illustrate that these matrices are very well conditioned, let us recall the bound below for the condition number of such matrices introduced in [15]. First, recall that, given a nonsingular matrix $A$, we can consider the condition number

$$\kappa_\infty(A) := \|A\|_\infty \, \|A^{-1}\|_\infty.$$

Proposition 1 (Proposition 2.1 of [15]). Let $T$ be an $n \times n$ unit triangular matrix diagonally dominant by columns (resp. rows). Then, the elements of $T^{-1}$ are bounded in absolute value by 1 and $\kappa_\infty(T) \le n^2$ (respectively, $\kappa_1(T) := \|T\|_1 \, \|T^{-1}\|_1 \le n^2$).

In the main result of this manuscript, we will show that it is possible to obtain an LDU decomposition of a Laplacian matrix with HRA using an adapted version of Gaussian elimination without pivoting. This LDU decomposition can be used to compute the number of spanning trees of a graph with HRA and obtain an RRD. In ref. [14], pivoting was used to derive RRD for DD M-matrices. Recall that a nonsingular Z-matrix $A$ is an M-matrix if $A^{-1}$ is non-negative. Applications of M-matrices and related classes can be found in [18,19] and references therein. Our approach does not use pivoting and leads to a factorization $LDL^T$ with $L$ lower triangular and column DD (and $L^T$ row DD), in contrast to the factorization obtained in [14] with complete symmetric pivoting, where the triangular factor only satisfies that its diagonal entries have absolute values greater than or equal to the absolute values of the entries of their rows. Moreover, avoiding pivoting reduces the computational cost, and it also has the additional important advantage that, when the Laplacian matrix is banded, its banded structure is preserved.

There is a sufficient condition to assure the high relative accuracy of an algorithm, the condition of no inaccurate cancellations (NICs) (cf. [20]): the algorithm only uses multiplications, divisions, sums of real numbers of the same sign, and subtractions of initial data. So, an algorithm that avoids subtractions (with the exception of subtractions of initial data) can be carried out with high relative accuracy. An algorithm that avoids all subtractions is called subtraction-free (SF), and it also satisfies the NIC condition. Hence, SF algorithms assure high relative accuracy.

3. Main Result and Some Consequences

We start this section by presenting the main result of the paper. We give an efficient method with HRA to obtain an LDU decomposition of a Laplacian matrix of a connected graph. The corresponding algorithm is included later. After that, we will show two applications of this factorization to achieve accurate computations with the Laplacian matrices.

Theorem 1. Let $L_G$ be the $n \times n$ Laplacian matrix of a connected graph. Then, we can compute its LDU decomposition $L_G = LDL^T$ with an SF algorithm (and so, with HRA) of at most $\mathcal{O}(n^3)$ elementary operations, where $L$ is column DD, and $D$ is non-negative.

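Proof. Let $A := L_G = (a_{ij})_{1 \le i,j \le n}$ be a Laplacian matrix of a connected graph of $n$ nodes. By Lemma 13.1.1 of [2], $\operatorname{rank}(A) = n-1$. Let us apply Gaussian elimination to $A$. Since $A$ has the special sign structure of a Z-matrix, we can adapt Gaussian elimination to be an SF algorithm. Let us recall that Gaussian elimination is an algorithm that produces zeros below the main diagonal of a matrix. It consists of $n-1$ steps when applied to an $n \times n$ matrix, producing matrices $A^{(t)} = (a_{ij}^{(t)})_{1 \le i,j \le n}$, $t = 1, \dots, n$:

$$A = A^{(1)} \longrightarrow A^{(2)} \longrightarrow \cdots \longrightarrow A^{(n)} = U,$$

where $U$ is an upper triangular matrix. While the pivots $a_{tt}^{(t)}$ are nonzero, the matrix $A^{(t+1)}$ can be obtained from $A^{(t)}$ in the following way: We produce zeros at the $t$-th column by subtracting multiples of the $t$-th row from the rows below it as follows:

$$a_{ij}^{(t+1)} = \begin{cases} a_{ij}^{(t)}, & 1 \le i \le t, \\[4pt] a_{ij}^{(t)} - \dfrac{a_{it}^{(t)}}{a_{tt}^{(t)}} \, a_{tj}^{(t)}, & t < i \le n. \end{cases}$$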

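Observe that the first pivot $a_{11}^{(1)} = a_{11}$ is nonzero and thus positive by (3) because $A$ is a Z-matrix. In fact, if $a_{11} = 0$ then, by (3), $\sum_{j=2}^{n} a_{1j} = 0$, which implies that $a_{1j} = 0$ for all $j$ since $A$ is a Z-matrix. This contradicts that $A$ is the Laplacian of a connected graph, since the first vertex would then be isolated.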

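Let $k$ be the first index such that $a_{kk}^{(k)} = 0$. Let us see that all submatrices $A^{(t)}[t, \dots, n]$ with $t \le k$ preserve the property (3):

$$a_{ii}^{(t)} = -\sum_{j = t,\; j \neq i}^{n} a_{ij}^{(t)}, \qquad i = t, \dots, n. \tag{4}$$

Property (3) is equivalent to $Ae = 0$, where $e = (1, \dots, 1)^T$. After $t$ ($t < k$) steps of Gaussian elimination, $e$ is again the solution of the equivalent linear system with coefficient matrix $A^{(t+1)}$: $A^{(t+1)} e = 0$. So, since the rows $t, \dots, n$ of $A^{(t)}$ have zeros in their first $t-1$ columns, the row sums of $A^{(t)}[t, \dots, n]$ vanish for $t \le k$ and then the diagonal entries of $A^{(t)}[t, \dots, n]$ satisfy (4).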

Let us now see that we can obtain the matrices $A^{(t)}$ with an SF algorithm.

For the first step of Gaussian elimination, we subtract multiples of the first row of $A^{(1)}$ from the rows below it to produce zeros in the first column. All the off-diagonal entries of $A^{(2)}[2, \dots, n]$ are computed as the sum of two nonpositive numbers so that they can be obtained without subtractions, and they are again nonpositive. Only the diagonal entries would be computed as a true subtraction. However, these entries can be computed without subtractions using (4) for $t = 2$ and we also infer that they are non-negative. In particular, we can compute the second diagonal entry after performing the first elimination step as $a_{22}^{(2)} = -\sum_{j=3}^{n} a_{2j}^{(2)}$, and if it is nonzero, then we use it as a pivot for the next elimination step. Analogously, all off-diagonal entries of the matrices $A^{(t)}[t, \dots, n]$ are nonpositive. Hence, by (4), all the pivots $a_{tt}^{(t)}$ are given by

$$a_{tt}^{(t)} = -\sum_{j = t+1}^{n} a_{tj}^{(t)}, \tag{5}$$

so they are non-negative and can be computed with HRA.

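Let us now assume that $k < n$, and we shall arrive at a contradiction. If $k < n$, then $a_{kk}^{(k)} = 0$ and $a_{tt}^{(t)} > 0$ for $t < k$. By (4) for $t = k$, and taking into account that the off-diagonal entries of $A^{(k)}[k, \dots, n]$ are nonpositive, we have that the first row of $A^{(k)}[k, \dots, n]$ is null and, since it is well known that all matrices $A^{(t)}[t, \dots, n]$ ($t \le k$) inherit the symmetry of $A$, the first column of $A^{(k)}[k, \dots, n]$ is also null. Then, we can continue the Gaussian elimination with the pivot $a_{k+1,k+1}^{(k+1)}$, which must be nonzero because, otherwise, $A^{(k+1)}[k+1, \dots, n]$ would also have a null first row and column. Then, $\operatorname{rank}(A) \le n-2$, a contradiction with $\operatorname{rank}(A) = n-1$. Analogously, all remaining pivots satisfy $a_{tt}^{(t)} \neq 0$ for $t = k+1, \dots, n-1$ and (4) also holds for all $t \le n$. But (4) for $t = n$ implies that $a_{nn}^{(n)} = 0$ and, since we also have $a_{kk}^{(k)} = 0$, we again obtain a contradiction with $\operatorname{rank}(A) = n-1$. In conclusion, since $\operatorname{rank}(A) = n-1$, $k = n$.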

Taking into account that $A$ is a symmetric matrix, the result of Gaussian elimination is therefore a factorization $A = LDL^T$, where $L$ is a lower triangular matrix with unit diagonal, and $D$ is a diagonal matrix with first $n-1$ positive diagonal entries and a last zero diagonal entry. Since the $t$-th row of the upper triangular matrix $U = DL^T$ is formed by $t-1$ zeros and the entries $a_{tt}^{(t)}, a_{t,t+1}^{(t)}, \dots, a_{tn}^{(t)}$, we deduce from the positivity of $a_{tt}^{(t)}$ ($t < n$) and the nonpositivity of the off-diagonal entries that $L^T$ is a Z-matrix. Formula (5) for $t = 1, \dots, n-1$ also implies that the sum of the entries of each of the first $n-1$ rows of $L^T$ is zero. So, $L$ is column DD. □
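To make the elimination concrete, here is a small worked instance (an illustrative example chosen here, not taken from the paper): the path graph on three vertices, whose Laplacian is eliminated using only its off-diagonal entries and formula (5) for the pivots.

```latex
\begin{align*}
A^{(1)} &= \begin{pmatrix} 1 & -1 & 0 \\ -1 & 2 & -1 \\ 0 & -1 & 1 \end{pmatrix},
 & a_{11}^{(1)} &= -\bigl(a_{12}^{(1)} + a_{13}^{(1)}\bigr) = 1, \\
a_{23}^{(2)} &= a_{23}^{(1)} - \frac{a_{21}^{(1)}}{a_{11}^{(1)}}\, a_{13}^{(1)} = -1 - (-1)\cdot 0 = -1,
 & a_{22}^{(2)} &= -a_{23}^{(2)} = 1, \\
a_{33}^{(3)} &= 0
 & &\text{(empty sum in (5) for } t = 3\text{)},
\end{align*}
\[
A = LDL^{T}, \qquad
L = \begin{pmatrix} 1 & 0 & 0 \\ -1 & 1 & 0 \\ 0 & -1 & 1 \end{pmatrix}, \qquad
D = \operatorname{diag}(1,\, 1,\, 0).
\]
```

Each column of $L$ has off-diagonal entries whose absolute values sum to at most 1, so $L$ is column DD, and the product of the nonzero pivots is 1, in agreement with the fact that a path has exactly one spanning tree.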

In Algorithm 1, we present the pseudocode for the SF Gaussian elimination that computes the matrices $L$ and $D$ for the LDU decomposition $L_G = LDL^T$.

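Algorithm 1 Adapted Gaussian elimination

Require: The off-diagonal entries of $L_G$: $a_{ij}$ ($i \neq j$)

Ensure: $L$ and $D$ to HRA

$L := (a_{ij})_{1 \le i,j \le n}$

for $t = 1, \dots, n-1$ do

$l_{tt} := -\sum_{j=t+1}^{n} l_{tj}$

for $i = t+1, \dots, n$ do

$m := l_{it} / l_{tt}$

for $j = t+1, \dots, n$ do

If $i \neq j$ then $l_{ij} := l_{ij} - m \, l_{tj}$

end for

end for

end for

$l_{nn} := 0$

$L := \operatorname{tril}(L)$ ▷ Taking the lower triangular part of L

for $j = 1, \dots, n-1$ do

$l_{ij} := l_{ij} / l_{jj}$ for $i = j+1, \dots, n$

end for

$D := \operatorname{diag}(l_{11}, \dots, l_{nn})$ ▷ Diagonal matrix with the diagonal entries of L

$l_{ii} := 1$ for $i = 1, \dots, n$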
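A minimal Python sketch of such a subtraction-free elimination is given below. It follows the steps described in the proof of Theorem 1 rather than reproducing the authors' Matlab code; the function name `sf_ldlt`, the dense list-of-lists storage, the 0-based indexing, and the error raised for a zero pivot are assumptions of this sketch.

```python
from fractions import Fraction


def sf_ldlt(offdiag):
    """Subtraction-free LDL^T factorization of a connected graph Laplacian.

    `offdiag` is a symmetric n x n list of lists whose off-diagonal entries
    (all <= 0) are the only data read; its diagonal is ignored.
    Returns (L, d): L is unit lower triangular, d is the list of pivots
    (the last pivot is zero).
    """
    n = len(offdiag)
    a = [list(row) for row in offdiag]
    for t in range(n - 1):
        # Pivot from the zero row-sum property: minus a sum of nonpositive numbers.
        a[t][t] = -sum(a[t][j] for j in range(t + 1, n))
        if a[t][t] == 0:
            raise ValueError("zero pivot: the graph is not connected")
        for i in range(t + 1, n):
            m = a[i][t] / a[t][t]  # multiplier, m <= 0
            for j in range(t + 1, n):
                if i != j:
                    # (-m) >= 0 and a[t][j] <= 0: a sum of two nonpositive numbers.
                    a[i][j] += (-m) * a[t][j]
    a[n - 1][n - 1] = 0  # last pivot: empty sum in formula (5)
    d = [a[t][t] for t in range(n)]
    L = [[0] * n for _ in range(n)]
    for j in range(n):
        L[j][j] = 1
        for i in range(j + 1, n):
            # Column j below the diagonal holds row j of U by symmetry.
            L[i][j] = a[i][j] / d[j]
    return L, d


# Off-diagonal entries of the Laplacian of the 4-cycle 0-1-2-3-0 (hypothetical input).
edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
offdiag = [[Fraction(0)] * 4 for _ in range(4)]
for i, j in edges:
    offdiag[i][j] = offdiag[j][i] = Fraction(-1)
L, d = sf_ldlt(offdiag)
```

Exact rational arithmetic is used in the usage example only to make the result easy to inspect; the same function accepts floating-point off-diagonal entries.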

From the LDU decomposition, we can easily obtain the number of spanning trees of G based on the well-known matrix-tree theorem.

Theorem 2 (Theorem 13.2.1 in [2]). Let $G$ be a graph with a Laplacian matrix $L_G$. If $u$ is an arbitrary vertex of $G$ numbered by $i$, then $\det L_G[1, \dots, i-1, i+1, \dots, n]$ is equal to the number of spanning trees of $G$, $\tau(G)$.

This number has been used in [21] to compute the number of spanning trees of a polycyclic graph in the context of Chemistry.

Remark 1. Let us notice that $\tau(G)$ can be obtained using Theorem 2 by computing the determinant of the matrix obtained after removing the last column and row of $L_G$. Since we know how to compute the $LDL^T$ decomposition of $L_G$ with high relative accuracy by Theorem 1, we can obtain this number with HRA from the matrix $D$ of that decomposition as the product of the nonzero diagonal entries: since $L$ is unit lower triangular, $\det L_G[1, \dots, n-1] = \prod_{i=1}^{n-1} d_{ii}$.
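The remark can be checked on small graphs whose number of spanning trees is known. The sketch below is an illustration under stated assumptions: the function name `spanning_trees`, the 0-based edge lists, and the use of exact rational arithmetic are choices made here, and the two test graphs (a 4-cycle and the complete graph on four vertices) are not taken from the paper.

```python
from fractions import Fraction


def spanning_trees(n, edges):
    """Number of spanning trees of a connected simple graph with vertices 0..n-1.

    The pivots are accumulated with the subtraction-free formula: each pivot is
    minus the sum of the (nonpositive) entries to its right.
    """
    a = [[Fraction(0)] * n for _ in range(n)]
    for i, j in edges:
        a[i][j] = a[j][i] = Fraction(-1)
    count = Fraction(1)
    for t in range(n - 1):
        pivot = -sum(a[t][j] for j in range(t + 1, n))
        if pivot == 0:
            raise ValueError("zero pivot: the graph is not connected")
        count *= pivot  # product of the first n-1 (nonzero) pivots
        for i in range(t + 1, n):
            m = a[i][t] / pivot
            for j in range(t + 1, n):
                if i != j:
                    a[i][j] += (-m) * a[t][j]
    return int(count)


cycle4 = [(0, 1), (1, 2), (2, 3), (3, 0)]
complete4 = [(i, j) for i in range(4) for j in range(i + 1, 4)]
trees_cycle = spanning_trees(4, cycle4)
trees_complete = spanning_trees(4, complete4)
```

A cycle on four vertices has 4 spanning trees (remove any one edge), and Cayley's formula gives $4^{2} = 16$ for the complete graph on four vertices, which is what the products of pivots return.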

3.1. Example

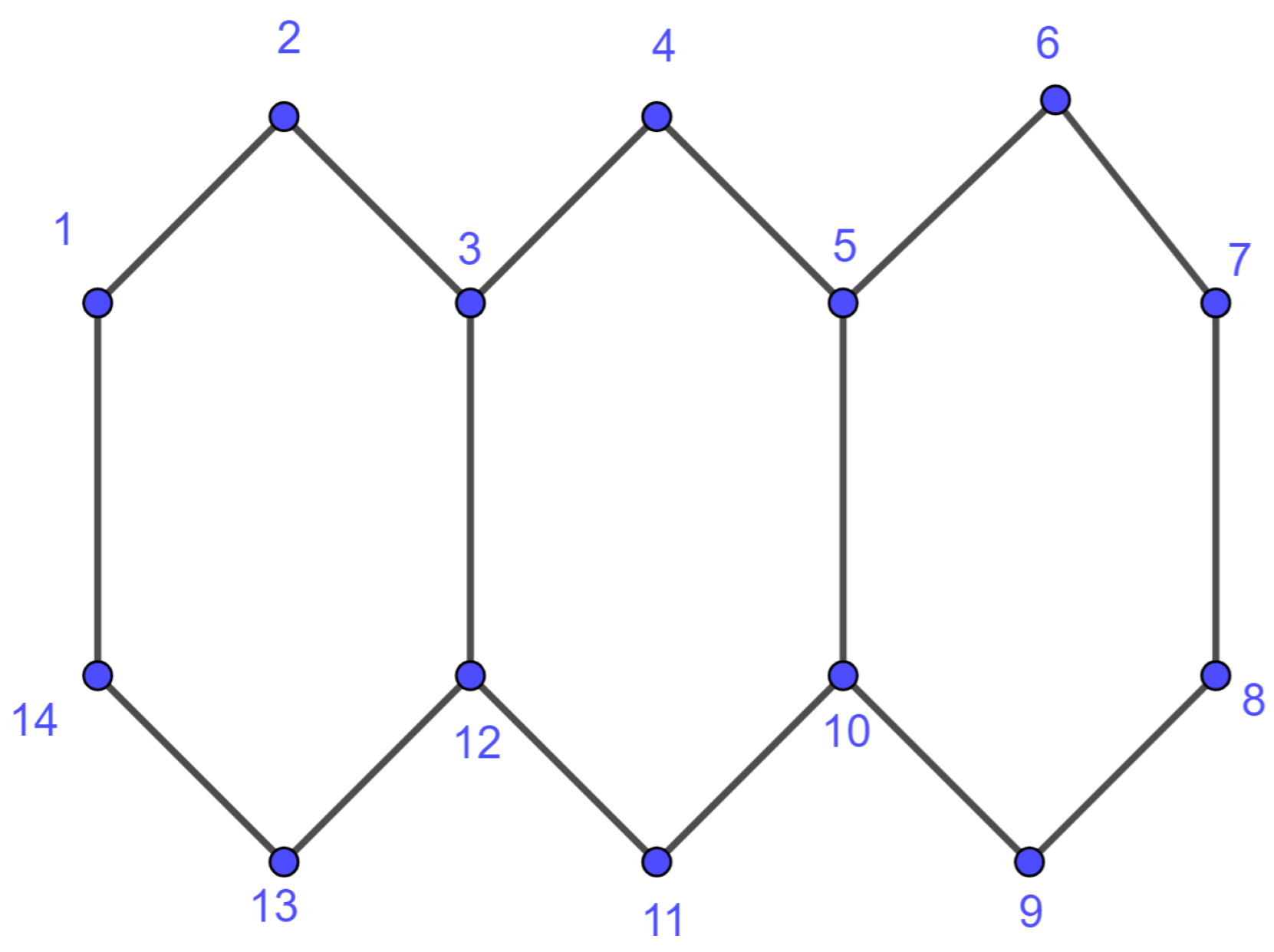

In this subsection, we illustrate the Laplacian matrix and its LDU factorization with a well-known example from chemistry. We considered the polycyclic graph associated with a molecule of anthracene, shown in Figure 1.

We numbered the nodes from 1 to 14. Then, we built the matrix $L_G$ associated with $G$ and applied Algorithm 1 to $L_G$. The result is the decomposition $L_G = LDL^T$.

From this factorization, we can compute $\tau(G)$ as the product of the nonzero diagonal entries of $D$. Hence, $\tau(G) = 204$. From $L$ and $D$, we can also obtain a rank-revealing decomposition by erasing the last column of $L$ and the last row and column of $D$, as we will remark in the next subsection.
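The count for this example can be reproduced with a short script. The sketch below is an illustration only: the vertex numbering (0 to 13 along the perimeter of the three fused hexagons, plus the two shared bonds) is a hypothetical choice that need not match the numbering of Figure 1, and the function name `spanning_trees` and the exact rational arithmetic are assumptions of this example. The number of spanning trees does not depend on the numbering.

```python
from fractions import Fraction


def spanning_trees(n, edges):
    """Product of the first n-1 pivots of the subtraction-free elimination."""
    a = [[Fraction(0)] * n for _ in range(n)]
    for i, j in edges:
        a[i][j] = a[j][i] = Fraction(-1)
    count = Fraction(1)
    for t in range(n - 1):
        pivot = -sum(a[t][j] for j in range(t + 1, n))
        if pivot == 0:
            raise ValueError("zero pivot: the graph is not connected")
        count *= pivot
        for i in range(t + 1, n):
            m = a[i][t] / pivot
            for j in range(t + 1, n):
                if i != j:
                    a[i][j] += (-m) * a[t][j]
    return int(count)


# Carbon skeleton of anthracene: a 14-cycle (the perimeter) plus the two bonds
# shared by neighboring hexagons. Rings: 0-1-2-3-4-5, 5-6-7-12-13-0, 7-8-9-10-11-12.
perimeter = [(v, (v + 1) % 14) for v in range(14)]
shared_bonds = [(0, 5), (7, 12)]
anthracene = perimeter + shared_bonds

tau = spanning_trees(14, anthracene)
```

With 14 vertices and 16 edges, the script returns 204 spanning trees.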

3.2. Rank-Revealing Decomposition

From the accurate LDU decomposition of $L_G$ obtained in Theorem 1, we can obtain an RRD for this matrix. This representation of a Laplacian matrix can be used to achieve HRA when we compute its singular values and eigenvalues, as recalled in the Introduction.

Corollary 1. Let $L_G$ be the $n \times n$ Laplacian matrix of a connected graph. Then, we can compute its rank-revealing decomposition $L_G = X \tilde{D} X^T$ with an SF algorithm of at most $\mathcal{O}(n^3)$ elementary operations, where $X$ is column DD. Moreover, all the eigenvalues of $L_G$ can be computed to HRA with an algorithm of complexity of at most $\mathcal{O}(n^3)$ elementary operations.

Proof. By Theorem 1, we obtain the factorization $L_G = LDL^T$ with HRA. Let us notice that this factorization implies that $L_G$ is a positive semidefinite matrix since $D$ is a non-negative diagonal matrix. Hence, its singular values coincide with its eigenvalues. Let us define the matrices $X := L[1, \dots, n \,|\, 1, \dots, n-1]$ and $\tilde{D} := D[1, \dots, n-1]$; thus, we obtain the decomposition $L_G = X \tilde{D} X^T$, where $X$ is a DD matrix by columns. This fact implies that $X$ is very well conditioned (cf. Proposition 1). Since our adaptation of Gaussian elimination was SF, $X \tilde{D} X^T$ gives an RRD that can be taken as input to compute the singular values with HRA by applying the algorithm presented in [9]. □
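The construction used in this proof can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' code: the helper names (`sf_ldlt`, `rrd_from_ldlt`, `is_column_dd`, `reconstruct`), the 4-cycle used as input, and the exact rational arithmetic are choices made here. The sketch drops the last column of $L$ and the zero pivot, then checks that the two factors reproduce the Laplacian and that the rectangular factor is diagonally dominant by columns.

```python
from fractions import Fraction


def sf_ldlt(offdiag):
    """Subtraction-free LDL^T of a connected graph Laplacian (diagonal not read)."""
    n = len(offdiag)
    a = [list(row) for row in offdiag]
    for t in range(n - 1):
        a[t][t] = -sum(a[t][j] for j in range(t + 1, n))
        if a[t][t] == 0:
            raise ValueError("zero pivot: the graph is not connected")
        for i in range(t + 1, n):
            m = a[i][t] / a[t][t]
            for j in range(t + 1, n):
                if i != j:
                    a[i][j] += (-m) * a[t][j]
    a[n - 1][n - 1] = 0
    d = [a[t][t] for t in range(n)]
    L = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for j in range(n - 1):
        for i in range(j + 1, n):
            L[i][j] = a[i][j] / d[j]
    return L, d


def rrd_from_ldlt(L, d):
    """Drop the last column of L and the last (zero) pivot."""
    return [row[:-1] for row in L], d[:-1]


def is_column_dd(X):
    """Check |x_jj| >= sum of |x_ij| over i != j for every column j."""
    rows, cols = len(X), len(X[0])
    return all(
        abs(X[j][j]) >= sum(abs(X[i][j]) for i in range(rows) if i != j)
        for j in range(cols)
    )


def reconstruct(X, dt):
    """Form the product X * diag(dt) * X^T."""
    n = len(X)
    return [[sum(X[i][k] * dt[k] * X[j][k] for k in range(len(dt)))
             for j in range(n)] for i in range(n)]


# Hypothetical input: the 4-cycle 0-1-2-3-0.
offdiag = [[Fraction(0)] * 4 for _ in range(4)]
for i, j in [(0, 1), (1, 2), (2, 3), (3, 0)]:
    offdiag[i][j] = offdiag[j][i] = Fraction(-1)

L, d = sf_ldlt(offdiag)
X, d_tilde = rrd_from_ldlt(L, d)
rebuilt = reconstruct(X, d_tilde)
column_dd = is_column_dd(X)
```

In a floating-point setting, the pair of factors would then be passed to an HRA singular value algorithm such as the one cited in [9]; that step is not sketched here.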

5. Numerical Experiments

In this section, we present some examples that illustrate the results of this article. In Theorem 1 and Corollary 1, we have seen that we can compute an RRD of a Laplacian matrix with HRA. We extended this result to more classes of matrices in Proposition 2. Now, we will consider some ill-conditioned examples belonging to this class.

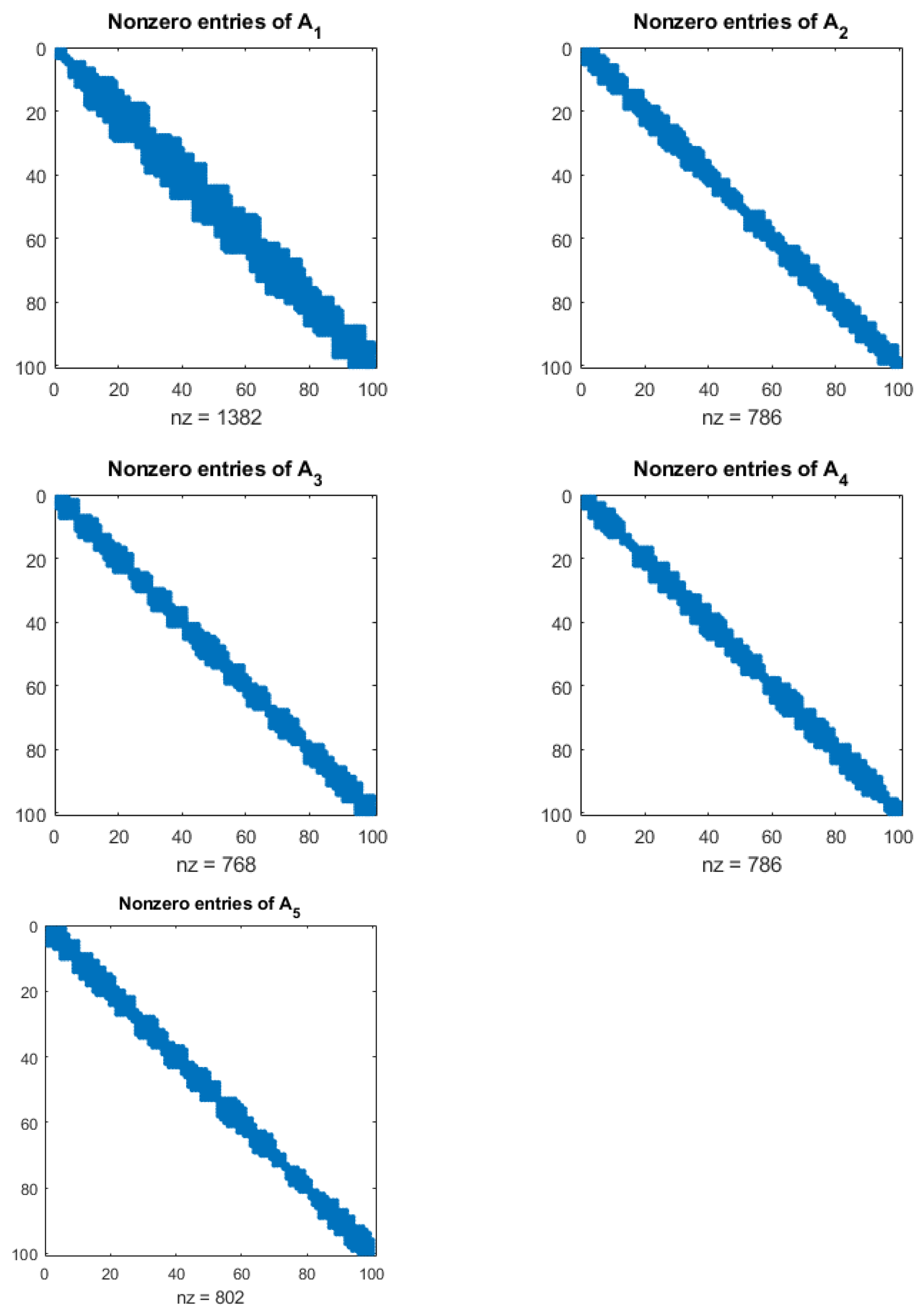

We generated some

banded matrices that satisfy the hypotheses of Proposition 2, i.e., they are the Laplacian of a weighted undirected graph. We called these matrices

for

. These matrices have 100 columns and 100 rows, and most of their entries are zero. The nonzero entries always appear in the diagonal entries, and the off-diagonal entries are closer to the diagonal. In order to decide how many off-diagonal entries below the main diagonal are different from zero, we chose a random integer

between 1 and 5 for every column. For example, the first column of the matrix

has the first two off-diagonal entries different from zero, i.e.,

was chosen as 2. Then, we would do the same for the second column, the third column, etc. The only extra condition used is that, whenever the number

chosen for the

i-th column is equal or smaller than

, we would set it to

instead. In the case of the matrix

, the second, third and fourth columns have one off-diagonal entry below the main diagonal different from zero. Then, the fifth row has

, and hence, four entries below the main diagonal were chosen to be different from zero. For all the examples, we used this procedure to decide the nonzero entries below the main diagonal, and then we ensured that the matrices were symmetric. By doing so, we obtained the block structure of nonzero entries, which is illustrated in Figure 2. The quantities nz appearing in these graphics refer to the number of nonzero entries of the matrices. For each of these examples, we generated random integers

between 1 and 100 for the off-diagonal nonzero entries of the lower part. Finally, we built the symmetrical matrix

such that

In order to test the advantages of using our proposed HRA method, we used Matlab to implement both classical Gaussian elimination without pivoting and an adaptation of Gaussian elimination following the pseudocode presented in Algorithm 1. We used both methods to compute the same LDU decomposition of each of these matrices. Then, we computed this decomposition in Mathematica using a 100-digit precision. In Table 1, we show the largest componentwise relative errors for the approximations of the matrix $L$ computed with both Matlab methods, considering the results from Mathematica as exact.
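The difference between the two eliminations can already be seen on a tiny weighted graph. The following Python sketch is not the experiment reported in Table 1: the weighted path on three vertices, the weights `1.0` and `1e-20`, and the function names `classical_pivots` and `sf_pivots` are assumptions made for illustration. The classical elimination reads the diagonal of the Laplacian, which cannot represent the small weight once it is added to the large one, whereas the subtraction-free variant only reads the off-diagonal entries.

```python
def classical_pivots(a):
    """Pivots of plain Gaussian elimination without pivoting (stops at a zero pivot)."""
    a = [list(row) for row in a]
    n = len(a)
    pivots = []
    for t in range(n):
        pivots.append(a[t][t])
        if a[t][t] == 0:
            break
        for i in range(t + 1, n):
            m = a[i][t] / a[t][t]
            for j in range(t + 1, n):
                a[i][j] -= m * a[t][j]  # true subtraction on the diagonal
    return pivots


def sf_pivots(a):
    """Pivots of the subtraction-free elimination; the diagonal of `a` is never read."""
    a = [list(row) for row in a]
    n = len(a)
    pivots = []
    for t in range(n):
        pivot = -sum(a[t][j] for j in range(t + 1, n))
        pivots.append(pivot)
        if pivot == 0:
            break
        for i in range(t + 1, n):
            m = a[i][t] / pivot
            for j in range(t + 1, n):
                if i != j:
                    a[i][j] += (-m) * a[t][j]  # sum of two nonpositive numbers
    return pivots


# Weighted path 0 - 1 - 2 with hypothetical edge weights w1 and w2.
w1, w2 = 1.0, 1e-20
lap = [
    [w1, -w1, 0.0],
    [-w1, w1 + w2, -w2],  # w1 + w2 rounds to 1.0 in double precision
    [0.0, -w2, w2],
]
classical = classical_pivots(lap)
subtraction_free = sf_pivots(lap)
```

The exact second pivot is `w2`. The classical elimination returns zero for it and breaks down, while the subtraction-free version recovers it without any rounding error.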

Let us remark that even though the original matrix is quite ill conditioned, the matrix $L$ computed by this method is well conditioned, as expected. In Table 1, we also compare the conditioning of the matrix $L$ with the condition number of the original matrix. For the estimation of the condition number of the original matrix, we considered the submatrix obtained by deleting the last row and column since the matrix is singular, and the multiplicity of the eigenvalue $0$ is one. We can see that the condition number of $L$ satisfies the bound given in Proposition 1.