1. Introduction

Metaheuristic algorithms (MAs) nowadays represent the standard approach to complex engineering optimization problems. The popularity of these algorithms is demonstrated by the wide variety of applications of MAs to various fields of science, engineering and technology. For example, some areas that can be mentioned include static and dynamic structural optimization [

1,

2], mechanical characterization of materials and structural identification (also including damage identification) [

3,

4,

5], vehicle routing optimization [

6], optimization of solar devices [

7], forest fire mapping [

8], urban water demand [

9], 3D printing process optimization [

10], identification of COVID-19 infection and cancer classification [

11,

12] image processing including feature extraction/selection [

13].

Unlike gradient-based optimizers, MAs are stochastic algorithms that do not use gradient information to perturb design variables. In metaheuristic optimization, new trial solutions are randomly generated according to the inspiring principle of the selected algorithm. The trial solutions generated in each iteration attempt to improve the best record obtained so far. Exploration and exploitation are the two typical phases of metaheuristic search. In exploration, optimization variables are perturbed to a great extent to try to quickly identify the best regions of the design space, while exploitation performs local searches in a set of selected neighborhoods of the most promising solutions. Exploration governs the optimization search in the early iterations, while exploitation dominates as soon as the optimizer converges toward the global optimum.

Several classifications of MAs have been proposed in the literature. The first distinction is between single-solution algorithms where the optimizer updates the position of only one search agent and population-based algorithms where the optimizer operates on a population of candidate designs or search agents. However, the single-point search is limited to a few classical algorithms, such as simulated annealing and tabu search. Hence, the vast majority of MAs are population-based optimizers and their classification relies on the inspiring principle that drives the metaheuristic search. In this regard, MAs can be roughly divided into four categories: (i) evolutionary algorithms; (ii) science-based algorithms; (iii) human-based algorithms; (iv) swarm intelligence-based algorithms.

Evolutionary algorithms imitate evolution theory and evolutionary processes. Genetic algorithms (GAs) [

14,

15], differential evolution (DE) [

16,

17], evolutionary programming (EP) [

18], evolution strategies (ES) [

19], biogeography-based optimization (BBO) [

20] and black widow optimization (BWO) [

21] fall in this category. GAs and DE algorithms certainly are the most popular evolutionary algorithms. Their success is demonstrated by the about 7000 citations gathered by Refs. [

14,

15] and the 23,030 citations gathered by Ref. [

16] in the Scopus database over about 35 years (a total of about 860 citations/year up to October 2024). GAs are based on Darwin’s concepts of natural selection. Selection, crossover and mutation operators are used for creating a new generation of designs starting from the parent designs stored in the previous iteration. DE includes four basic steps: random initialization of the population, mutation, recombination and selection. GAs and DE mainly differ in the selection process for generating the next generation of designs that will be stored in the population.

Science-based MAs mimic the laws of physics, chemistry, astronomy, astrophysics and mathematics. Simulated annealing (SA) [

22,

23], charged system search (CSS) [

24], magnetic charged system search (MCSS) [

25], ray optimization (RO) [

26], colliding bodies optimization (CBO) [

27], water evaporation optimization (WAO) [

28], thermal exchange optimization (TEO) [

29], equilibrium optimizer (EO) [

30] and light spectrum optimizer (LSO) [

31] are examples of physics-based MAs. Generally speaking, the above-mentioned methods tend to reach the equilibrium condition of mechanical, electro-magnetic or thermal systems under external perturbations. Optics-based methods such as RO and LSO utilize the concepts of refraction and dispersion of the light to set directions for exploring the search space. SA certainly is the most popular science-based metaheuristic algorithm considering the about 33,940 citations gathered by Ref. [

22] in the Scopus database over 42 years (about 810 citations/year up to October 2024). SA mimics the annealing process in liquid or solid materials that reach the lowest energy level (a globally stable condition) as temperature decreases. The SA search strategy is rather simple: (i) a new trial solution replaces the current best record if it improves it; (ii) otherwise, a probabilistic criterion indicates if solution may improve the current best record in the next iteration. The “temperature” parameter is used in SA for computing probability and is progressively updated as the optimization progresses. SA has an inherent hill-climbing capacity given by search strategy (ii) that allows local minima to be eventually bypassed in the next iterations.

The artificial chemical reaction optimization algorithm (ACROA) [

32], gas Brownian motion optimization (GBMO) [

33] and Henry gas solubility optimization (HGSO) [

34] are examples of chemistry-based MAs that also rely on important physics concepts such as Brownian motion and Henry’s law. ACROA simulates interactions between chemical reactants: the positions of search agents correspond to concentrations and potentials of reactants and they are no longer perturbed when no more reactions can take place. GBMO utilizes the law of motion, the Brownian motion of gasses and turbulent rotational motion to search for an optimal solution; search agents correspond to molecules and their performance is measured by their positions. HGSO mimics the state of equilibrium of a gas mixture in a liquid; search agents correspond to gasses and their optimal positions correspond to the equilibrium distribution of gasses in the mixture. HSGO is the most popular algorithm in this sub-category considering the about 780 citations (up to October 2024) gathered by Ref. [

34] five years after its release.

Big bang–big crunch optimization (BB-BC) [

35], the gravitational search algorithm (GSA) [

36], galaxy-based search algorithm (GBSA) [

37], black hole algorithm [

38], astrophysics-inspired grey wolf algorithm [

39] and supernova optimizer [

40] are examples of MAs that mimic astronomical or astrophysical phenomena, such as the expansion (big bang)–contraction (big crunch) cycles that lead to the formation of new star–planetary systems, the spreading of stellar material following supernovae explosions, gravitational interactions between masses, interactions between black holes and starsand the movement of spiral galaxy arms, which also introduces the concept of elliptical orbit in the hunting process of wolves. GSA and BB-BC are the most popular algorithms in this sub-category, considering the about 6030 and 1280 citations, respectively, gathered by Refs. [

35,

36] in the Scopus database over almost two decades (a total of about 415 citations per year).

The sine cosine algorithm (SCA) [

41], the Runge–Kutta optimizer (RUN) [

42] and the arithmetic optimization algorithm (AOA) [

43] are inspired by mathematics. In the SCA, candidate solutions fluctuate outwards or towards the best solution using a mathematical model based on sine and cosine functions. RUN combines a slope calculation scheme based on the Runge–Kutta method with an enhanced solution quality mechanism to increase the quality of trial designs. The AOA uses the four basic arithmetic operators (i.e., addition, subtraction, multiplication, division) to perturb the design variables of the current best record: in particular, multiplication and division drive the exploration phase, while addition and subtraction drive the exploitation phase. The AOA and RUN, respectively, were associated with about 1830 and 705 citations in Scopus after only 3 years from their release (a total of 845 citations/year). The SCA also achieved a considerable number of citations, about 4040 over 8 years with an average of about 505 citations/year up to October 2024.

Human-based MAs mimic human activities (e.g., talking, walking, playing, exploring new places/situations), behaviors (e.g., natural tendency to look for the best and avoid the worst, parental cares, teaching–learning, decision processes) and social sciences (including politics). Tabu search (TS) [

44,

45] was the first human-based MA and was developed about 40 years ago. TS is inspired by the ancestral concept that sacred things cannot be touched: hence, in the optimization process, local search is performed in the neighborhood of candidate solutions and solutions that do not improve the design are stored in a database so that the optimizer will not explore them and their neighborhoods further. Harmony search optimization (HS), which simulates the music improvisation process of jazz players [

46,

47], teaching–learning-based optimization (TLBO) [

48], which simulates the teaching–learning mechanisms in a classroom and JAYA [

49] using just one single equation to perturb the optimization variables to approach the current best record and escape from the worst candidate solution, are the most popular human-based MAs according to the number of citations reported in the Scopus database. In particular, TLBO achieved the highest average number of citations/year (about 300) and was cited in about 3920 papers since its release in 2011 as of October 2024. TLBO is followed by JAYA (about 250 citations/year for about 2000 citations gathered over 8 years and a huge number of variants and hybrid schemes) and HS (about 235 citations/year for 5450 citations over 23 years).

The learning process is an important source of inspiration in human-based MAs. This is demonstrated by the group teaching optimization algorithm (GTOA) [

50], another teaching–learning-based algorithm where students (i.e., candidate solutions) are divided into groups according to defined rules; the teacher (current best record) adopts specific teaching methods to improve the knowledge of each group. The mother optimization algorithm (MOA) [

51] mimics the human interaction between a mother and her children, simulating the mother’s care of her children in education, advice and upbringing. The preschool education optimization algorithm (PEOA) [

52] simulates the phases of children’s preschool education, including (i) the gradual growth of the preschool teacher’s educational influence, (ii) individual knowledge development guided by the teacher and (iii) individual increases in knowledge and self-awareness. The learning cooking algorithm (LCA) [

53] mimics the cooking learning activity of humans: children learn from their mothers, and children and mothers learn from a chef. The decision making behavior of humans is instead simulated by the collective decision optimization algorithm (CDOA) [

54]: candidate solutions are generated by operators reproducing the different phases of the decision process that can be experience-based, others-based, group thinking-based, leader-based and innovation-based.

The imperialist competitive algorithm (ICA) [

55] and the political optimizer (PO) [

56] are based on politics, another important human activity. The ICA simulates the international relationships between countries: search agents represent countries that are categorized into colonies and imperialist states; powerful empires take possession of the former colonies of weak empires. Imperialistic competitions direct the search process toward the powerful imperialist or the optimum points. PO simulates all the major phases of politics (i.e., constituency allocation, party switching, election campaign, inter-party election and parliamentary affairs); the population is divided into political parties and constituencies, thus facilitating each candidate to update its position with respect to the party leader and the constituency winner. Learning behaviors of the politicians from the previous election are also accounted for in the optimization process.

Swarm intelligence-based algorithms reproduce the social/individual behavior of animals (insects, terrestrial animals, birds, and aquatic animals) in reproduction, food search, hunting, migration, etc. Most of the newly published MAs belong to this category. Particle swarm optimization (PSO) [

57,

58,

59], developed in 1995, is the most popular MA overall: in particular, the seminal studies [

57,

58] gathered about 73,550 citations over 29 years in the Scopus database; the average citation rate is about 2535 articles/year, more than three times higher than for SA. PSO simulates interactions between individuals of bird/fish swarms. If one leading individual or a group of leaders see a desirable path to go through (for food, protection, etc.), the rest of swarm quickly follows the leader(s), even in the absence of direct connections. In the optimization process, a population of candidate designs (the particles) is generated. Particles move through the search space and their positions and velocities are updated based on the position of the leader(s) and the best positions of individual particles in each iteration until the optimum solution is reached.

Insect behavior has also inspired MA experts to a great extent. Ant system and colony optimization (AS, ACO) [

60,

61,

62], which mimic the cooperative search technique in the foraging behavior of real-life ant colonies, artificial bee colony (ABC) [

63], which simulates the nectar search carried out by bees, the firefly algorithm (FFA) [

64,

65], which simulates the social behavior of fireflies and their bioluminescent communication, and ant lion optimizer (ALO) [

66], which simulates the hunting mechanism of ant lions, fall in this sub-category. The interest of the optimization community in insect-based MAs is confirmed by the high number of citations achieved by the algorithms mentioned above: about 11,320 for AS, ACO (i.e., 405 citations/year since 1996), 6335 for ABC (i.e., 370 citations/year since 2007), 2720 for ALO (i.e., about 300 citations/year since 2015) and 2895 for FFA (i.e., 207 citations/year since 2010) up to October 2024.

The grey wolf optimizer (GWO) [

67], coyote optimization algorithm (COA) [

68], snake optimizer (SO) [

69] and snow leopard optimization algorithm (SLOA) [

70] are MAs simulating the behavior of terrestrial animals. GWO and COA, respectively, mimic the hunting behavior of grey wolves and coyotes; SO mimics the mating behavior of snakes. SLOA is somehow more general than GWO, COA and SO as it perturbs optimization variables by means of operators simulating a wide variety of behaviors of a snow leopard, including travel routes and movement, hunting, reproduction and mortality. GWO is one of the most popular MAs considering the about 13,700 citations reported in Scopus since its release in 2014 up to October 2024: the average number of citations per year is about 1370, the second best amongst all MAs after PSO.

Cuckoo search (CS) [

71,

72], the crow search algorithm (CSA) [

73], starling murmuration optimizer (SMO) [

74] and bat algorithm (BA) [

75,

76] are MAs that mimic the behavior of birds and bats. While BA updates the population of candidate designs simulating the echolocation behavior of bats, CS reproduces the parasitic behavior of some cuckoo species that mix their eggs with those of other birds to guarantee the survival of their chicks. CSA simulates crows’ behavior concerning how they hide their excess food and retrieve it when the food is needed. SMO explores the search space by reproducing the flying behavior of starlings: exploration is carried out by means of the separating and diving search strategies while exploitation relies on the whirling strategy. CS is the most popular MA in this sub-category: the Scopus database reports about 8570 citations for Refs. [

71,

72] over 15 years with about 570 citations/year up to October 2024. CS is followed by BA (about 6030 citations for Refs. [

75,

76] since 2010 with about 430 citations/year) and CSA (about 1765 citations since 2016 with about 220 citations/year).

Among MAs inspired by aquatic animals, the following methods have to be mentioned. The dolphin echolocation algorithm (DE) [

77] simulates the hunting strategy of dolphins based on the echolocation of prey. The whale optimization algorithm (WOA) [

78] mimics the social behavior of humpback whales and, in particular, their bubble-net hunting strategy. The salp swarm algorithm (SSA) [

79] simulates swarming and foraging behaviors of ocean salps. The marine predators algorithm (MPA) [

80] updates design variables by simulating random walk movements (essentially Brownian motion and Lévy flight) of ocean predators. The giant trevally optimizer (GTO) [

81] mimics hunting strategies of giant trevally marine fish, including Lévy flight movement. WOA is the most popular algorithm in this sub-category: the Scopus database reports about 9790 citations since 2016 with an average rate of about 1225 citations/year. WOA is followed by SSA, which gathered about 3920 citations since 2017 with about 560 citations/year, and by MPA, which gathered about 1600 citations since 2020 with about 400 citations/year up to October 2024.

A large number of improved/enhanced variants for existing MAs, hybrid algorithms combining MAs with gradient-based optimizers, and hybrid algorithms combining two or more MAs have been developed by optimization experts (see, for example, Refs. [

82,

83,

84,

85,

86,

87,

88,

89,

90,

91,

92,

93,

94,

95,

96,

97,

98,

99,

100,

101,

102], published in the last two decades). High-performance MAs were often selected as component algorithms in developing hybrid formulations. The common denominator of all those studies was to find the best balance between exploration and exploitation phases that hopefully resulted in the following: (i) the optimizer’s ability to avoid local minima (i.e., hill-climbing capability, especially for algorithms including SA-based operators), keeping the diversity in the population, and avoiding stagnation and premature convergence to false optima; (ii) a reduction in the number of function evaluations (structural analyses, analyses) required in the optimization process; (iii) high robustness in terms of low dispersion on optimized cost function values; (iv) a reduction in the number of internal parameters as well as in the level of heuristics entailed by the search process. In general, these tasks were accomplished by (i) combining highly explorative methods with highly exploitative methods; (ii) forcing the optimizer to switch again to exploration if the exploitation phase started prematurely or the design did not improve for a certain number of iterations in the exploitation phase; (iii) introducing new perturbation strategies (for example, chaotic perturbation of the design; mutation operators to avoid stagnation and increase the diversity of the population; Lévy flight/movements in swarm-based methods, etc.) for optimization variables tailored to the specific MA under consideration.

Continuous increases in computing power have greatly favored the development of new metaheuristic algorithms (including enhanced variants and hybrid formulations). However, some aspects should carefully be considered in metaheuristic optimization: (i) according to the No Free Lunch theorem [

103,

104], no metaheuristic algorithm can always outperform all other MAs in all optimization problems; (ii) MAs often require a very large number of function evaluations (analyses) for completing the optimization process, even 1–2 orders of magnitude higher than their classical gradient-based optimizer counterparts; (iii) the easiness of implementation, which is typically underlined as a definite strength point of Mas, is very often nullified by the complexity of algorithmic variants or hybrid algorithms combining multiple methods; and (iv) sophisticated MA formulations often include many internal parameters that may be difficult to tune.

It should be noted that newly developed MAs often add very little to the optimization field and their appeal quickly vanishes just a few years after their release. This is confirmed by the literature survey presented in this introduction. The “classical” MAs developed prior to 2014, such as GA, DE, SA, PSO, ACO, CS, BA, HS, GSA, BBBC and GWO, gathered a much higher number of citations/year (up to 2535 until October 2024) than the MAs developed in 2015-2024, except for WOA, SCA, AOA/RUN, SSA, MPA, ALO, JAYA and CSA (up to 1225 citations/year). For this reason, the present study focused on improving available MAs rather than formulating a new MA from scratch. The main goal was to prove that a very efficient hybrid metaheuristic algorithm can be built by simply combining the basic formulations of two well-established MAs without complicating the formulation of the hybrid optimizer both in terms of number of strategies/operators used for perturbing the design variables and number of internal parameters (including parameters that regulate the switching from one optimizer to another).

In view of the arguments above, a very simple hybrid metaheuristic algorithm (SHGWJA, where the acronym stands for Simple Hybrid Grey Wolf JAYA) able to solve engineering optimization problems with less computational effort than the other currently available MAs was developed by combining two classical population-based MAs, namely the Grey Wolf Optimizer (GWO) and the Jaya Algorithm (JAYA). GWO and JAYA were selected in this study as the component algorithms of the new optimizer SHGWJA because of their simple formulations without internal parameters, and in view of the high interest of metaheuristic optimization experts in these two methods, proven by the various applications and many algorithmic variants documented in the literature. These motivations are detailed as follows.

- (1)

GWO, originally developed by Mirjalili et al. [

67] in 2014, is the second most cited metaheuristic algorithm after PSO in terms of citations/year. However, GWO has a much simpler formulation than PSO and, unlike PSO, does not require any setting of internal parameters. GWO mimics the hierarchy of leadership and group hunting of the grey wolves in nature: the optimization search is driven by the three best individuals that catch the prey and attract the rest of the hunters. Applications of GWO to various fields of science, engineering and technology are reviewed in Refs. [

105,

106].

- (2)

JAYA, originally developed by Rao [

49] in 2016, is one of the most cited MAs released in the last decade. It utilizes the most straightforward search scheme ever presented in the metaheuristic optimization literature for a population-based MA: to approach the population’s best solution and move away from the worst solution, thus achieving significant convergence capability. Hence, optimization variables are perturbed by JAYA using only one equation, thus minimizing the computational complexity of the search process. In spite of its inherent simplicity, JAYA is a powerful metaheuristic algorithm that has been proven able to efficiently solve a wide variety of optimization problems (see, for example, the reviews presented in [

107,

108]). An interesting argument made in [

107] may explain JAYA’s versatility. JAYA combines the basic features of evolutionary algorithms, in terms of the fittest individual’s survivability (such a feature is, however, common to all population-based MAs), and swarm-based algorithms where the swarm normally follow the leader during the search for an optimal solution. This hybrid nature, in addition to the inherent algorithmic simplicity, makes JAYA an ideal candidate to be selected as a component of new hybrid metaheuristic algorithms.

- (3)

Both GWO and JAYA do not have internal parameters that have to be set by the user, except the parameters common to all population-based MAs, such as population size and the limit on the number of optimization iterations.

- (4)

Both algorithms have good exploration capabilities but have weaknesses in the exploitation phase. The challenge is to combine their exploration capabilities to directly approach the global optimum. Elitist strategies may facilitate the exploitation phase, which only has to focus on a limited number of potentially optimal solutions to be refined.

Here, GWO and JAYA were simply merged, and the JAYA perturbation strategy was applied to improve the positions of the leading wolves, thus avoiding the search stagnation that may occur in GWO if the three best individuals of the population remain the same for many iterations. The original formulations of GWO and JAYA combined into the hybrid SHGWJA algorithm were slightly modified to maximize the search capability of the new algorithm and reduce the number of function evaluations required by the GWO and JAYA engines. However, while algorithmic variants of GWO and JAYA (including hybrid optimizers) usually include specific operators/schemes to increase the diversity of the population or/and improve the exploitation capability (this, however, increases the computational complexity of the optimizer) [

105,

106,

107,

108], SHGWJA adopts a very straightforward elitist approach. In fact, SHGWJA always attempts to improve the current best design of the population regardless of having generated new trial solutions in the exploration phase or exploitation phase. Trial solutions that are unlikely to improve the current best record stored in the population are directly rejected without evaluating constraints. This allows SHGWJA to reduce the computational cost of the optimization process by always directing the search process towards the best regions of the search space, and eliminating any unnecessary exploitation of trial solutions that cannot improve the current best record.

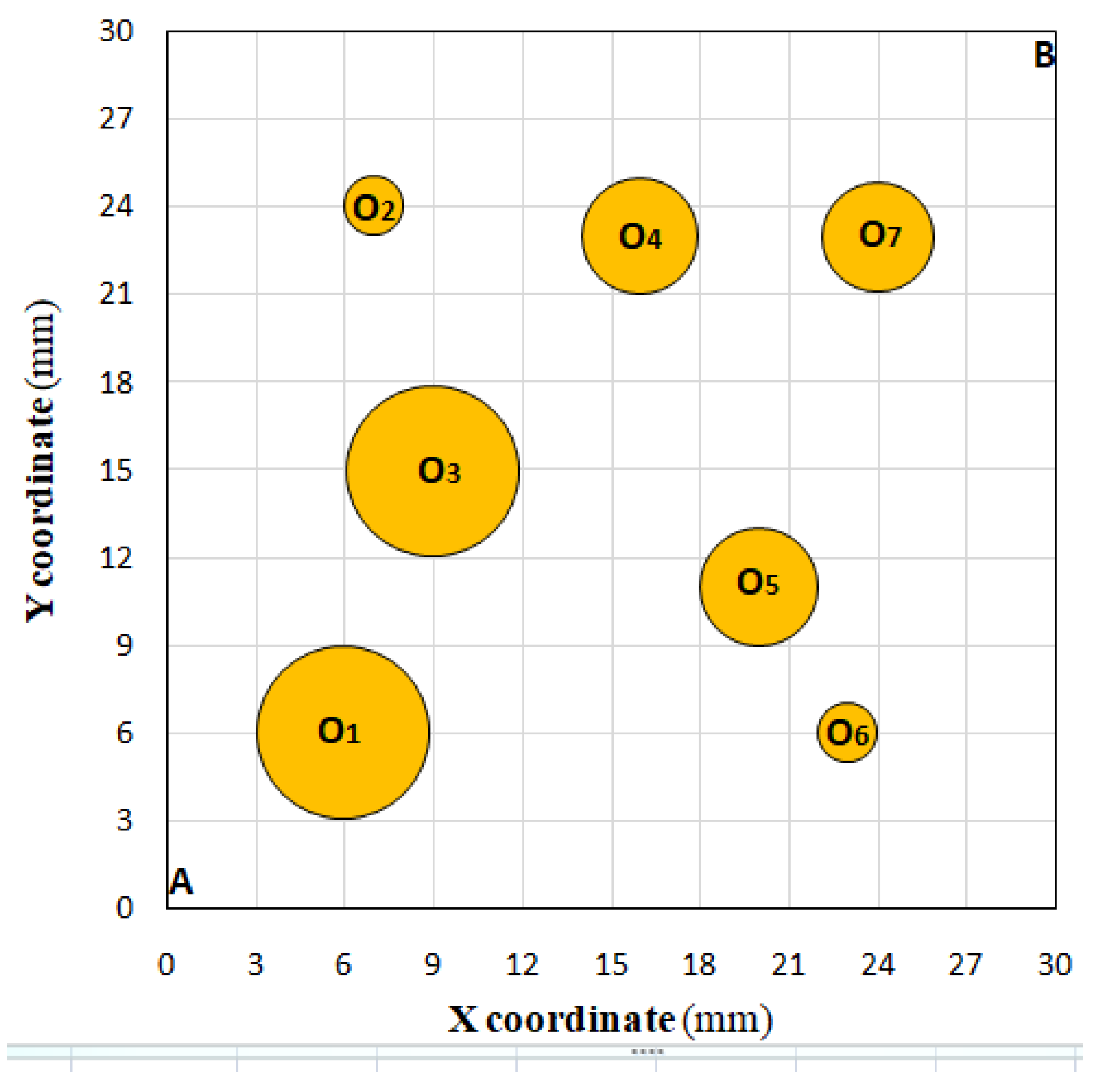

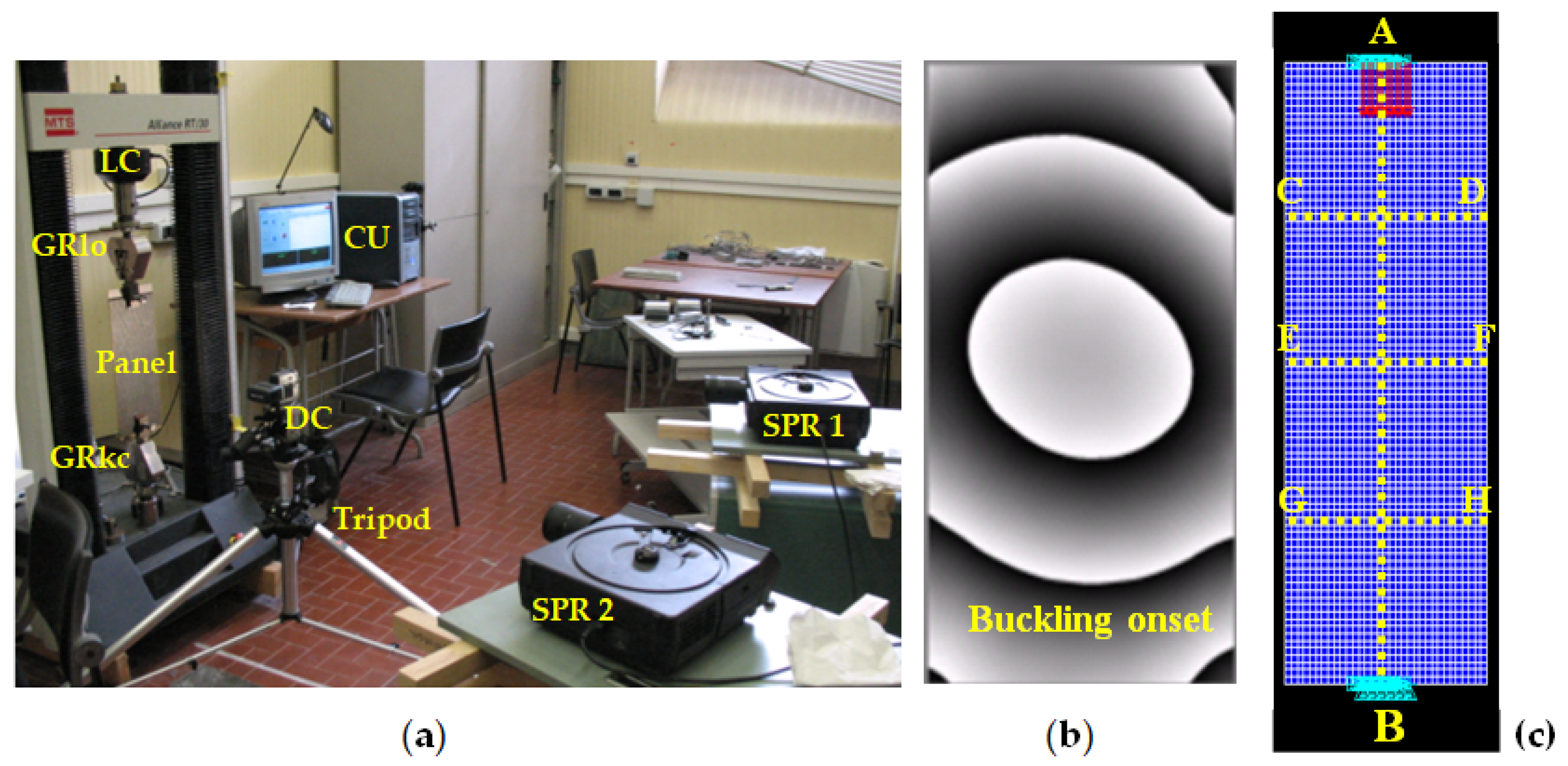

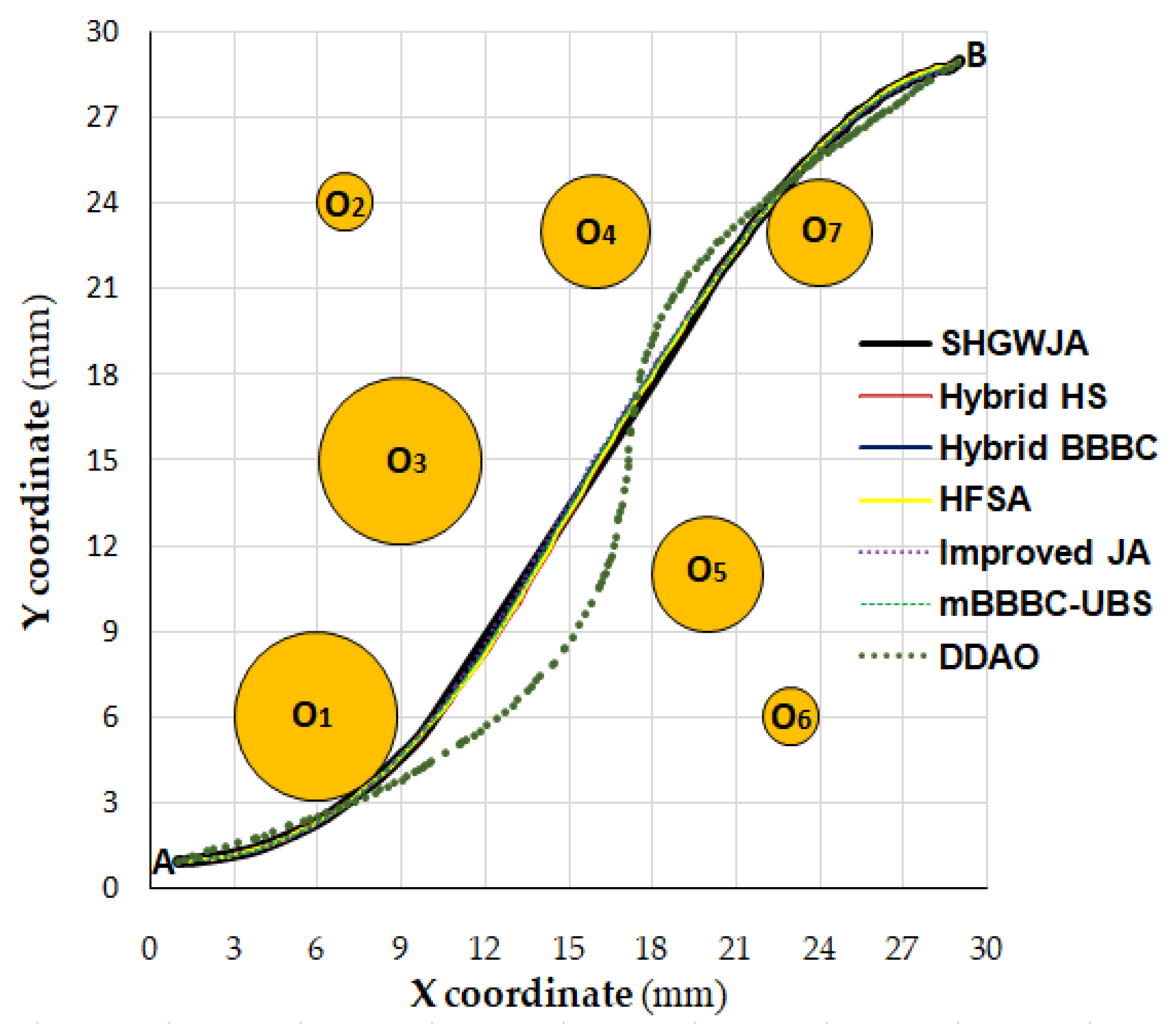

The proposed SHGWJA was successfully tested in seven “real-world” engineering problems selected from civil engineering, mechanical engineering and robotics. The selected test problems, including up to 14 optimization variables and 721 nonlinear constraints, regarded: (i) shape optimization of a concrete gravity dam (volume minimization); (ii) optimal design of a tension/compression spring (weight minimization); (iii) optimal design of a welded beam (minimization of fabrication cost); (iv) optimal design of a pressure vessel (minimization of forming, material, and welding costs); (v) optimal design of an industrial refrigeration system; (vi) 2D path planning (minimization of trajectory length); (vii) mechanical characterization of a flat composite panel under axial compression (by matching experimental data and finite element simulations). Test problems (ii) through (v) were included in the CEC 2020 (IEEE Congress on Evolutionary Computing) test suite for constrained mechanical engineering problems. Example (vii) is a typical highly nonlinear inverse problem in the fields of aeronautical engineering and mechanics of materials. Besides the seven “real-world” engineering problems listed above, two classical mathematical optimization problems (i.e., Rosenbrock’s banana function and Rastrigin’s function) with up to 1000 variables were solved in this study to evaluate the scalability of the proposed algorithm.

For all test cases, the optimization results obtained by SHGWJA were compared with those quoted in the literature for the best-performing algorithms. SHGWJA was compared with at least 10 other MAs, each of which had been reported in the literature to have outperformed in turn up to 35 other MAs. Comparisons with high performance algorithms and CEC competitions winners such as LSHADE and IMODE variants as well as with other MAs that were reported to outperform CEC winners (i.e., MPA and GTO among others) also are presented in the article.

The rest of the article is structured as follows.

Section 2 recalls formulations of GWO and JAYA, while

Section 3 describes the new hybrid optimization algorithm SHGWJA developed in this research. Test problems and implementation details are presented in

Section 4, while optimization results are presented and discussed in

Section 5. In the last section, the main findings are summarized, and directions of future research are outlined.

3. The SHGJA Algorithm

The simple hybrid metaheuristic algorithm SHGWJA developed in this research combined the GWO and JAYA methods to improve search by means of elitist strategies. The new algorithm is very simple and efficiently explores the search space. This allows for limiting the number of function evaluations. The new algorithm is now described in detail. A population of

NPOP candidate designs (i.e., wolves) is randomly generated as follows:

where NDV is the number of optimization variables;

and

, respectively, are the lower and upper bounds of jth variable;

is a random number extracted in the interval (0, 1).

Considering the classical statement of the optimization problem where the goal is to minimize the function

W(

) of NDV variables (stored in the design vector

), subjected to

NCON inequality/equality constraint functions of the form G

k(

) ≤ 0, the penalized cost function

Wp(

) is defined as follows:

where

p is the penalty coefficient. The penalty function

is defined as follows:

The penalized cost function Wp() obviously coincides with the cost function W() if the trial solution is feasible. Candidate solutions are sorted with respect to penalized cost function values: the current best record corresponds to the lowest value of .

The best three search agents achieving the lowest penalized cost values are set as the wolves α, β and δ. Let , and be the corresponding design vectors. It obviously holds that Wp() < Wp() < Wp(). The α wolf is also set as the current best record and the corresponding penalized cost function Wp() is set as Wp,opt.

Each individual

of the population is provisionally updated with the classical GWO Equations (1) through (7). As in [

67], the components of the

vector linearly decrease from 2 to 0 as the optimization goes by in SHGWJA. Let

denote the new trial solution obtained by perturbing the generic individual

stored in the population in the previous iteration.

Each new trial design generated in Step 1 via classical GWO with Equations (1) through (7) is evaluated by SHGWJA using two new operators. In GWO implementations, each new design is compared with its counterpart solution previously stored in the population. If the new design is better than the old design it is stored in the updated population in the current iteration, replacing the old design. However, this task requires a new evaluation of the constraint functions for each new .

In order to reduce the computational cost, SHGWJA implements an elitist strategy, retaining only the trial solutions that are likely to improve the current best record. Hence, SHGWJA initially compares only the cost function W() of the new trial solution with 1.1 times the cost function W() of the current best record. If the new trial design does not improve the current best record—i.e., if W() > 1.1W() holds— it is not necessary to process it further and the old design is provisionally maintained also in the new population.

The 1.1

W(

) threshold has proven to be effective in all test problems solved in this study. Such a behavior may be explained in the following way. In the exploration phase, the optimizer assigns large movements to design variables and the probability to improve design is high: hence, the

W(

) > 1.1

W(

) scenario will not be likely to occur. In the exploitation phase, the optimizer should bypass local minima to find the global optimum: like SA, the optimizer may accept candidate solutions slightly worse than

. Looking at the probabilistic acceptance/rejection criterion used by advanced SA formulations [

84,

93], it can be seen that the threshold level of acceptance probability is 0.9 if the γ ratio between the cost function increment recorded for the trial solution

with respect to the current best record

and the annealing temperature

T is 0.1; hence, the probability of provisionally accepting some design worse than

and improving it in the next iterations is 90%. Since the initial value of the

T temperature set in SA corresponds to the expected optimum cost (or the cost value of the current best record), it may be reasonably assumed that trial solutions up to 10% worse than

would become better than

in the next iterations.

However, the new design

is generated by classical GWO to try to approach the position of the best three individuals, the wolves

α,

β and

δ. To avoid stagnation, the

design is perturbed using a JAYA-based scheme if the classical GWO generation was unsuccessful and the trial solution

did not satisfy the condition

W(

) ≤ 1.1

W(

). That is, the following holds:

If the classical GWO generation was successful and trial design

satisfied the condition

W(

) ≤ 1.1

W(

), the JAYA scheme is applied directly to

as follows:

In Equations (12) and (13), and are two vectors including NDV random numbers in the interval [0, 1]. The absolute values of optimization variables (like the values in Equation (8)) are taken for each component Xj,i or Xj,i,tr of vectors or , respectively.

The cost function W(()′) is evaluated also for the new trial design defined by Equations (12) or (13). W(()′) is compared with 1.1W(). The modified trial design ()′ is always directly rejected if it certainly does not improve the current best record, that is, if the condition W(()′) > 1.1W() is satisfied. If W(()′) ≤ 1.1W() and W() ≤ 1.1W(), W(()′) and W() are compared. ()′ is used to update the population if W(()′) ≤ 1.1W() or is used if W(()′) > W().

Equation (12) is related to exploration. In fact, it tries to involve the whole population in the formation of the new trial design ()′ because the α, β and δ wolves could not move the other search agents (i.e., ) to better positions of search space near the prey (i.e., ) with respect to the previous iteration. Since the main goal of the population renewal task is to improve each individual , SHGWJA tries searching on descent direction with respect to (the cost function certainly improves, moving from a generic individual towards the current best record) and escapes from the worst individual of population , which certainly may not improve .

Equation (13) is instead related to exploitation because it operates on a good trial solution dictated by wolves α, β and δ. This design is very likely to be close to or even to improve it. The δ wolf is temporarily selected as the worst individual of the population. This forces SHGWJA to locally search in a region of design space containing high-quality solutions like the three best individuals of the population.

When all have been updated by SHGWJA via the classical GWO scheme based on Equations (1) through (7) or the JAYA-based schemes of Equations (12) and (13), and their quality has been evaluated using the elitist strategy W() ≤ 1.1W(), the population is re-sorted and the NPOP individuals are ranked with respect to penalty function values. Should Wp() or Wp(()′) be greater than Wp(), all new trial solutions generated/refined for are rejected and the old design is retained in the population.

SHGWJA sets the best three individuals of the new population as wolves α, β and δ with , and design vectors, respectively. The best and worst solutions are set as and , respectively.

The present algorithm attempts to avoid stagnation by checking the ranking of wolves

α,

β and

δ with an elitist criterion. This is achieved each time that the wolves’ positions are not updated in the current iteration. The elitist criterion adopted by SHGWJA relies on the concept of descent direction.

and

are obviously descent directions with respect to the positions

and

of wolves

β and

δ. Hence, SHGWJA perturbs

and

along the descent directions

and

. The positions of

β and

δ wolves are “mirrored” with respect to

as follows:

In Equation (14), the random numbers ηmirr,β and ηmirr,δ are extracted in the (0, 1) interval. They limit step sizes to reduce the probability of generating infeasible positions. The best three positions amongst , , , and are set as the α, β and δ wolves in the next iteration. The two worst positions are compared with the rest of the population to see if they can replace and the second worst design of old population. The latter check also covers the scenario where and could not improve any of the α, β and δ wolves.

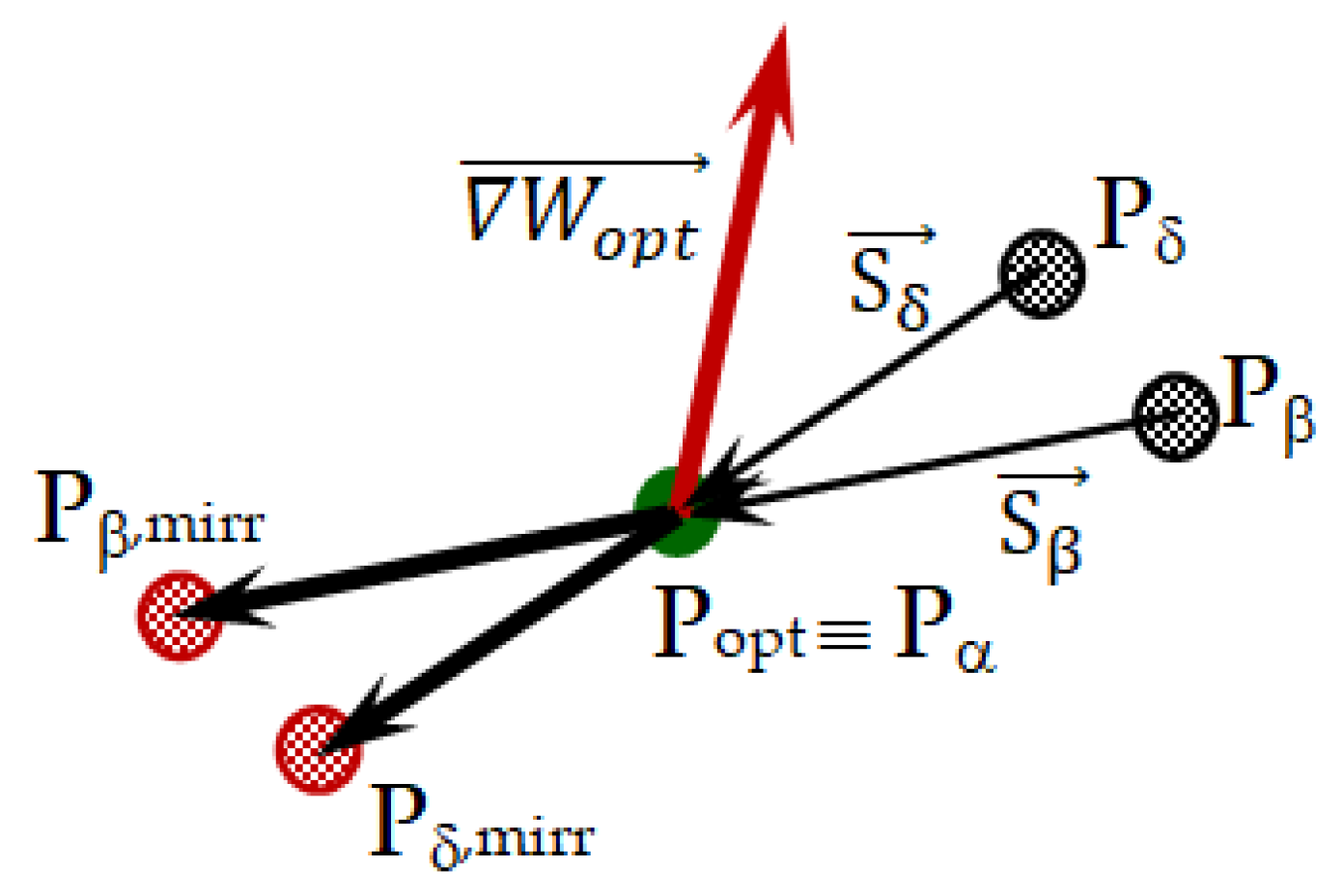

Figure 1 illustrates the rationale of the mirroring strategy adopted by SHGWJA. The figure shows the following: (i) the original positions of the

α,

β and

δ wolves (respectively, points P

opt≡P

α, P

β and P

δ of the search space); (ii) the positions of mirror wolves

βmirr and

δmirr (respectively, points P

β,mirr and P

δ,mirr of search space); (iii) the cost function gradient vector

evaluated at

, that is, wolf

α. The “mirror” wolves

βmirr and

δmirr— i.e.,

and

— defined by Equation (14) lie on descent directions and may even improve

(i.e., the position of wolf α). In fact, the conditions

and

hold, where “

” denotes the scalar product between two vectors. Since

is in all likelihood a steeper descent direction than

, the

δ wolf may have a higher probability than wolf

β of replacing wolf

α even though wolf

β occupies a better position than wolf

δ in the search space. The consequence of this elitist approach is that SHGWJA must perform a new exploration of the search space instead of attempting to exploit trial solutions that did not improve the design in the last iteration. Furthermore, the replacement of the two worst designs of population improves the average quality of search agents and increases the probability of defining higher-quality trial solutions in the next iteration.

The standard deviations of design variables and cost function values of search agents decrease as the search approaches the global optimum. Therefore, SHGWJA normalizes standard deviations with respect to the average design

and the average cost function

. The convergence criterion used by SHGWJA is the following:

where the convergence limit

is equal to 10

−7. Equation (15) is based on the following rationale. The classical convergence criteria of optimization algorithms compare the cost function values with

as well as the best solutions set as

obtained in the last iterations, and stop the search process if both these quantities do not change more than the fixed convergence limit. Accounting for variation in the best solution

allows for avoiding local minima if the optimizer enters a large region of the design space containing many competitive solutions with the same cost function values. However, this approach may not be effective in population-based algorithms where all candidate designs stored in the population should cooperate in searching for the optimum. For example, it may occur that the optimizer updates only sub-optimal solutions and leaves

unchanged over many iterations. Should this occur, the optimizer would stop the search process while there is still a significant level of diversity in the population, which is a typical scenario of the exploration phase dominating the early stage of the optimization process. Equation (15) assumes instead that all individuals are in the best region of the search space which hosts the global optimum and cooperate in the exploitation phase: hence, diversity must decrease and search agents must aggregate in the neighborhood of

. For this reason, in Equation (15), the standard deviation of search agents’ positions with respect to the average solution is normalized to the average solution to quantify population diversity. As all solutions come very close to

, they coincide with their average and the search process finally converges. The standard deviation of solutions is hence normalized with respect to the average solution, thus having a dimensionless convergence parameter. The same approach is followed for cost function values by normalizing their standard deviation with respect to the average cost: competitive solutions almost coincide with the optimum only when are effectively close to it, that is, when the search process is near its end.

Steps 1 through 3 are repeated until SHGWJA converges to the global optimum.

SHGWJA terminates the optimization process and writes output data in the results file.

Algorithm 1 presents the SHGWJA pseudo-code.

Figure 2 shows the flow chart of the proposed hybrid optimizer.

| Algorithm 1 Pseudo-code of SHGWJA |

| START SHGWJA. |

Set population size NPOP and generate randomly a population of NPOP candidate designs (i = 1,…,NPOP) using Equation (9). For i = 1,…,NPOP Compute cost function and constraints of the given optimization problem for each candidate design . Compute penalized cost function for each candidate design using Equations (10) and (11). end for Sort population by values in ascending order. Set the best three individuals with the lowest values as wolves α, β and δ. Let , and the design vectors for wolves α, β and δ, respectively. Set the α wolf as current best record ≡ with penalized cost Wp,opt = Wp(). For i = 1,…,NPOP Step 1. Use classical GWO Equations (1)–(7) to provisionally update each individual of the population to Step 2. Evaluate trial design and/or additional/new trial design If W() ≤ 1.1W() Keep trial design and define additional trial design using the JAYA strategy of Equation (13). else Reject trial design and define the new trial design using the JAYA strategy of Equation (12). end if If > 1.1W() & W() > 1.1W() Reject also and keep individual in the new population. end if If ≤ 1.1W() & W() ≤ 1.1W() & < W() Use trial design to update the population. end if If ≤ 1.1W() & W() ≤ 1.1W() & > W() Use trial design to update the population. end if If Wp() or < Wp() Keep or in the updated population. else Discharge or and keep also in the updated population. end if end for Step 3. Re-sort population, define new wolves α, β and δ, update and Sort the updated population by the values of in ascending order Update the positions , and of wolves α, β and δ, respectively. Use the elitist mirror strategy of Equation (14) to avoid the stagnation of wolves α, β and δ. Update, if necessary, positions , and or at least the worst two individuals of the population. Set the α wolf as the current best record ≡ with penalized cost Wp,opt = Wp(). Step 4. Check for convergence If the convergence criterion stated by Equation (15) is satisfied Terminate the optimization process. Output the optimal design and optimal cost value W(). else Continue the optimization process. Go to line 6. end if

|

| END SHGWJA. |

In summary, SHGWJA is a grey wolf-based optimizer, which updates the population by checking whether the α, β, δ wolves may effectively improve the current best record. The JAYA operators (indicated in red on the flowchart) and the elitist strategies included in SHGWJA enhance the exploration and exploitation phases, forcing the algorithm to increase the population diversity and select high-quality trial solutions without performing too many function evaluations. The classical GWO algorithmic structure is modified by the elitist strategy W() ≤ 1.1W(), by the JAYA-based strategies stated in Equations (12) and (13) to generate high-quality trial designs, and by the mirroring strategy stated in Equation (14) to increase the population diversity over the whole optimization search. These four operators introduced in the GWO formulation can be defined “simple modifications” because they are very easy to implement.

It should be noted that since classical GWO and JAYA formulations do not include any specific operator taking care of the exploitation phase, they may suffer from a limited exploitation capability. Conversely, SHGWJA performs exploration or exploitation based on the current trend in the optimization history, and, in particular, on the quality of the currently generated trial solution. If a trial solution does not improve the current best record, SHGWJA performs exploration to search for higher-quality trial solutions over the whole search space (JAYA strategy of Equation (12)). If a trial solution is good, SHGWJA performs exploitation to further improve the current best record (JAYA strategy of Equation (13)). These phases alternate over the whole optimization history and, hence, are dynamically balanced. Furthermore, SHGWJA continuously explores the search space to avoid stagnation of the α, β and δ wolves.

Since JAYA operators modify the trial solutions generated by GWO, the proposed algorithm is a “high-level” hybrid optimizer where both components concur to form the new design. Interestingly, SHGWJA does not require any new internal parameters with respect to classical GWO and JAYA. This feature is not very common in metaheuristic optimization because hybrid algorithms usually utilize new heuristic internal parameters to switch the search process from one component optimizer to another.

Another interesting issue is the selection of the population size NPOP. Increasing the population size in metaheuristic optimization may lead to performing too many function evaluations. This occurs because the total number of evaluations is usually determined as the product of population size NPOP with the limit number of iterations. Using a larger population size may improve the exploration capability but computational cost may significantly increase. However, a large population is not necessary if the optimizer can always generate high-quality designs that continuously improve the current best record or the currently perturbed search agents. Furthermore, grey wolves hunt in nature in groups including at most 10–20 individuals (a family pack is typically formed by 5–11 animals; however, the pack can be composed by up to 2–3 families). For this reason, all SHGWJA optimizations carried out here for engineering design problems were performed with a population of 10 individuals. Sensitivity analysis confirmed the validity of this setting also for mathematical optimization where the population size was increased to 30 or 50, consistent with the referenced studies on other MAs.

The last issue is the computational (i.e., time) complexity of the proposed SHGWJA method. In general, this parameter is obtained by summing over the complexity of different algorithm steps, such as the search agent initialization, optimization variables perturbation and population sorting. The computational complexity of classical GWO over

Niter iterations performed is O(

NPOP ×

NDV +

Niter ×

NPOP × (

NDV + log

NPOP)). For each optimization iteration of SHGWJA, the following occurs: (i) the elitist strategy

W(

) ≤ 1.1

W(

) introduces

NPOP new operations; (ii) each JAYA-based strategy (Equations (12) and (13)) introduces

NPOP ×

NDV new operations; (iii) the mirroring strategy of Equation (14) introduces 2

NDV new operations. In summary, SHGWJA performs in each iteration

NPOP + (

NPOP + 2) ×

NDV more operations than classical GWO. For example, for the largest test problem solved in this study with

NDV = 14 and

NPOP = 10, SHGWJA performed in each iteration at most 10 + (12 × 14) = 178 more operations than classical GWO. In the worst-case scenario where all operators are used for all agents, the computational complexity of SHGWJA increases to O(140 +

Niter × 328) from only O(140 +

Niter × 150) for classical GWO. The levels of computational complexity reported in the literature for very efficient GWO/JAYA variants such as GGWO [

105] and EHRJAYA [

98] are O(140 +

Niter × 150) and O(

Niter × 160), respectively, significantly lower than for SHGWJA. However, the higher number of operations performed by SHGWJA in each iteration are always finalized to generate high-quality trial designs, thus allowing the present algorithm to better explore/exploit the search space than its competitors.

5. Results and Discussion

5.1. Mathematical Optimization

Table 1 compares the optimization results obtained by SHGWJA and the other 31 MAs for the Rosenbrock problem. For each selected combination (

NDV;

NPOP), the table lists (when available) the best, average and worst optimized values, the corresponding standard deviation on the optimized cost (always indicated as ST Dev in all result tables), and the required function evaluations (NFE) for all optimizers. The limit number of function evaluations for SHGWJA was set equal to 15,000, which is the smallest number of function evaluations indicated in the literature for practically all SHGWJA’s competitors.

It can be seen from the table that SHGWJA always converged to the lowest values of the cost function, ranging from 1.000·10−15 for the parameter settings (NDV = 30; NPOP = 30) and (NDV = 30; NPOP = 50) commonly used in the literature to 5.179·10−13 for (NDV = 10; NPOP = 10). Remarkably, SHGWJA’s performance was practically insensitive to the selected combination of problem dimensionality and population size. In particular, the present algorithm always converged to a best solution very close to the target optimum cost of 0 within 15,000 function evaluations: the optimized cost obtained in the best runs of all (NDV;NPOP) settings never exceeded 5.179·10−13, while the standard deviation on the optimized cost never exceeded 2.669·10−12. Interestingly, for all (NDV;NPOP) settings, the average optimized cost and standard deviation on the optimized cost obtained by HSGWJA dropped to 10−28 when the convergence limit in Equation (14) was reduced to 10−15 and the computational budget was increased to 30,000 function evaluations, the same as for LCA and much less than for MOA (50,000), to obtain their reported null standard deviations.

The above results indicate that SHGWJA was the best optimizer overall. In fact, only the mother optimization algorithm (MOA) [

51] and the learning cooking algorithm (LCA) [

53] reached the 0 target solution in all optimization runs, but this required, respectively, 50,000 and 30,000 function evaluations. However, for the (

NDV = 30;

NPOP = 30) setting used by LCA, the best/average/worst optimized costs and standard deviation reached by SHGWJA within at most 15,000 function evaluations ranged between 1.001·10

−15 and 7.545·10

−14, very close numerically to the 0 target value. Furthermore, for

NPOP = 50 set in MOA optimizations, SHGWJA obtained its best values: 10

−15 for

NDV = 30 and 100 with corresponding averages between 1.1·10

−15 and 1.1·10

−14, again very close to 0. The convergence curves provided in [

51] for MOA and in [

53] for LCA have low resolution and cannot be compared directly with those recorded for the present algorithm. However, SHGWJA’s intermediate solutions reached the cost of 10

−7 within only 1000–1500 function evaluations in all optimization runs. Such behavior was fully consistent with the trends shown in [

51,

53].

The performance of SHGWJA was comparable to the hybrid harmony search (hybrid HS), hybrid big bang–big crunch (hybrid BBBC) and hybrid fast simulated annealing (HFSA) algorithms developed in [

93]. In fact, the average optimized cost resulting from

Table 1 for SHGWJA is 1.1·10

−12, while the average optimized costs of hybrid HS/BBBC/SA were always above 1.197·10

−11. The standard deviation on the optimized cost was on average 1.478·10

−12 vs. the 7.4·10

−12 average deviation of hybrid HS/BBBC/SA. However, the algorithms of Ref. [

93] were run with a convergence limit

of 10

−15. The inspection of convergence curves reveals that hybrid HS/BBBC/SA actually stopped to improve the cost function already at about 80% of the search process in spite of their tighter convergence limit. This was due to the use of gradient information in the generation of new trial solutions. Conversely, SHGWJA kept reducing the cost function until the very last iterations of its search process.

SHGWJA also outperformed the two particle swarm optimization variants based on global best (GPSO) or including the presence of an aging leader with challengers (ALC-PSO) [

114]. In fact, these PSO variants completed their best optimization runs within less than 6500 function evaluations but their best optimized cost was at most 3.7·10

−7 vs. 1.001·10

−15 to 4.789·10

−13 obtained by SHGWJA for the same number of optimization variables (30) and similar population sizes (10 and 30). Furthermore, the average optimized cost and standard deviation of PSO variants, respectively, ranged between 7.6 and 11.7, and 6.7 and 15 vs. only 2.832·10

−12 obtained by SHGWJA in the worst case.

Limiting our analysis to the classical setting (

NDV = 30;

NPOP = 30 ± 10) used in the metaheuristic optimization of unimodal functions and the very small computational budget of 15,000 function evaluations, the algorithms ranked as follows in terms of average optimized cost: SHGWJA, mEO, ALCO-PSO, GPSO, hSM-SA, AOA, EO, IAOA and LSHADE-SPACMA. When the computational budget increased to 30,000 function evaluations with (

NDV;

NPOP) ≤ 50, the ranking changed as follows: SHGWJA, MOA and LCA, SAMP-JAYA, GTO, IMPA, PEOA, mEO, ALC-PSO, EGTO, GPSO, hSM-SA, MGWO-III, SLOA, RUN, AOA, EO, MPA, CMA-ES, IAOA, LSHADE-SPACMA, LSHADE and LSHADE-CnEpSin. Increasing the population size to 70 and the number of function evaluations to 80,000 allowed the hybrid CJAYA-SQP algorithm (combining chaotic perturbation in JAYA’s exploration and sequential quadratic programming for exploitation) [

95] to rank ninth between mEO and ALC-PSO; the hybrid NDWPSO algorithm (combining PSO, differential evolution and whale optimization) [

115] ranked right before the high-performance algorithm LSHADE [

117]. Interestingly, all JAYA variants except the steady-state JAYA (SJAYA) [

110]) ranked in the top 10 algorithms, while high-performance algorithms like the LSHADE variants [

117,

118,

119] and the basic formulation of the marine predators algorithm (MPA) [

80] were not very efficient in the Rosenbrock problem. This confirms the advantage in selecting JAYA as a component algorithm for hybrid metaheuristic optimizers.

As mentioned before, SHGWJA was insensitive to parameter settings while all other algorithms showed a marked decay in performance as the number of optimization variables increased. For example, fixing the population size at 30 and varying the problem dimension from 30 to 1000, the average optimized cost went up to almost 1000 except for the giant trevally optimizer (GTO) [

81] and equilibrium optimizer with a mutation strategy (mEO) [

92] that limited this increase to 3.57·10

−6 for

NDV = 1000 and 6.2 for

NDV = 500, respectively. For

NDV = 1000, SHGWJA ranked first followed by hybrid HS/BBBC/SA and GTO with at most 2.53·10

−8 average optimized costs; mEO had an average optimized cost still below 0.5; CJAYA-SQP, MGWO-III [

39] (an astrophysics-based GWO variant), EO, leopard snow optimizer (LSO) [

91] and SaDN [

94] (hybridized differential evolution naked mole-rat) obtained average optimized costs between 13 and 99.

Table 2 presents the optimization results obtained by SHGWJA and its 29 MA competitors in the Rastrigin problem. The data arrangement is the same as for

Table 1. The limit number of function evaluations for SHGWJA was also set to 15,000 for this multimodal problem. SHGWJA’s performance was again insensitive to the setting of

NDV and

NPOP. SHGWJA’s competitors were also much less sensitive to parameter settings than in the case of Rosenbrock’s problem. Such a behavior was somehow expected considering that the convergence of Rastrigin’s function to the global optimum becomes “easier” as the problem dimensionality increases.

It can be seen from

Table 2 that most of the algorithms (including the astrophysics-based GWO variant MGWO-III [

39], giant trevally optimizer (GTO) [

81], improved marine predators algorithm (IMPA) [

101], and improved arithmetic optimization algorithm (IOAO) [

96]) converged to the target global optimum of 0 with a null average and standard deviation already for the lowest number of optimization variables. SHGWJA performed very well also in this multimodal optimization problem, converging to the best cost of 1.776·10

−15 in eight cases, 2.842·10

−14 in one case, and from 1.492·10

−13 to 2.934·10

−13 in the remaining two cases, always very close to the 0 target value. Furthermore, SHGWJA achieved a 0 standard deviation in three cases with all independent optimization runs converging to 1.776·10

−15, and practically null standard deviation values ranging between 5.13·10

−16 and 9.175·10

−16 in the other five cases where the best value was 1.776·10

−15. The average optimized cost and average standard deviation on the optimized cost obtained by SHGWJA for all (

NDV;

NPOP) combinations, respectively, were 3.171·10

−13 and 6.606·10

−13, hence, 1–2 orders of magnitude smaller than their counterpart values recorded for hybrid HS/BBBC/SA. Again, the hybrid HS/BBBC/SA algorithms of Ref. [

93] stopped to significantly improve the cost function well before the end of the optimization iterations.

Interestingly, SHGWJA obtained cost function values and corresponding standard deviation values on the order of 10−28 by setting the convergence limit in Equation (14) to 10−15 and increasing the computational budget to 25,000 function evaluations, that is, (i) the same as for MPA, EGTO, SaDN and MSCA, (ii) less than for MGWO-III, LCA and GTO (30,000), (iii) much less than for LSO, MOA, PEOA, SLOA and IMPA (50,000), and CJAYA-SQP (80,000) to obtain their reported null standard deviations.

SHGWJA always required on average less than 14,000 function evaluations (i.e., the computational cost of NDWPSO, the fastest algorithm to reach the 0 target solution with a 0 standard deviation) to successfully complete the optimization process when the problem dimensionality was at most 100. The fastest optimization runs of SHGWJA converging to 1.776·10

−15 were always completed within 12,877 function evaluations, less than the 14,000 evaluations required by NDWPSO for the single setting (

NDV = 50;

NPOP = 70); a more detailed inspection of convergence curves revealed that SHGWJA’s intermediate solutions always reached the cost of 10

−7 within about 1000 function evaluations, practically the same behavior observed for NDWPSO. The I-JAYA algorithm (augmenting classical JAYA with fuzzy clustering competitive learning, experience learning and Cauchy mutation) rapidly reduced within only 15,700 function evaluations the cost function value to the 10

−10 target value set in [

113]. ALC-PSO also performed well in terms of optimized cost, which was reduced to 7.105·10

−15 but required almost five times more function evaluations than SHGWJA (i.e., 74,206 vs. at most 15,000).

Since SHGWJA practically converged to the target optimum cost of 0 with a null or nearly null average optimized cost and standard deviation on the optimized cost within the lowest number of function evaluations in all cases, it should be considered the best optimizer also for Rastrigin’s problem. The MAs listed in

Table 2 that failed to reach 0 average cost and 0 standard deviation by more than 10

−7 obviously occupy the worst seven positions in the algorithm ranking as follows: AOA, MPA, LSHADE, LSHADE-SPACMA, SAMP-JAYA, LSHADE-cnEpSin and CMA-ES. All of these MAs were executed with

NDV = 30 except LSHADE-SPACMA, which was run with

NDV = 50.

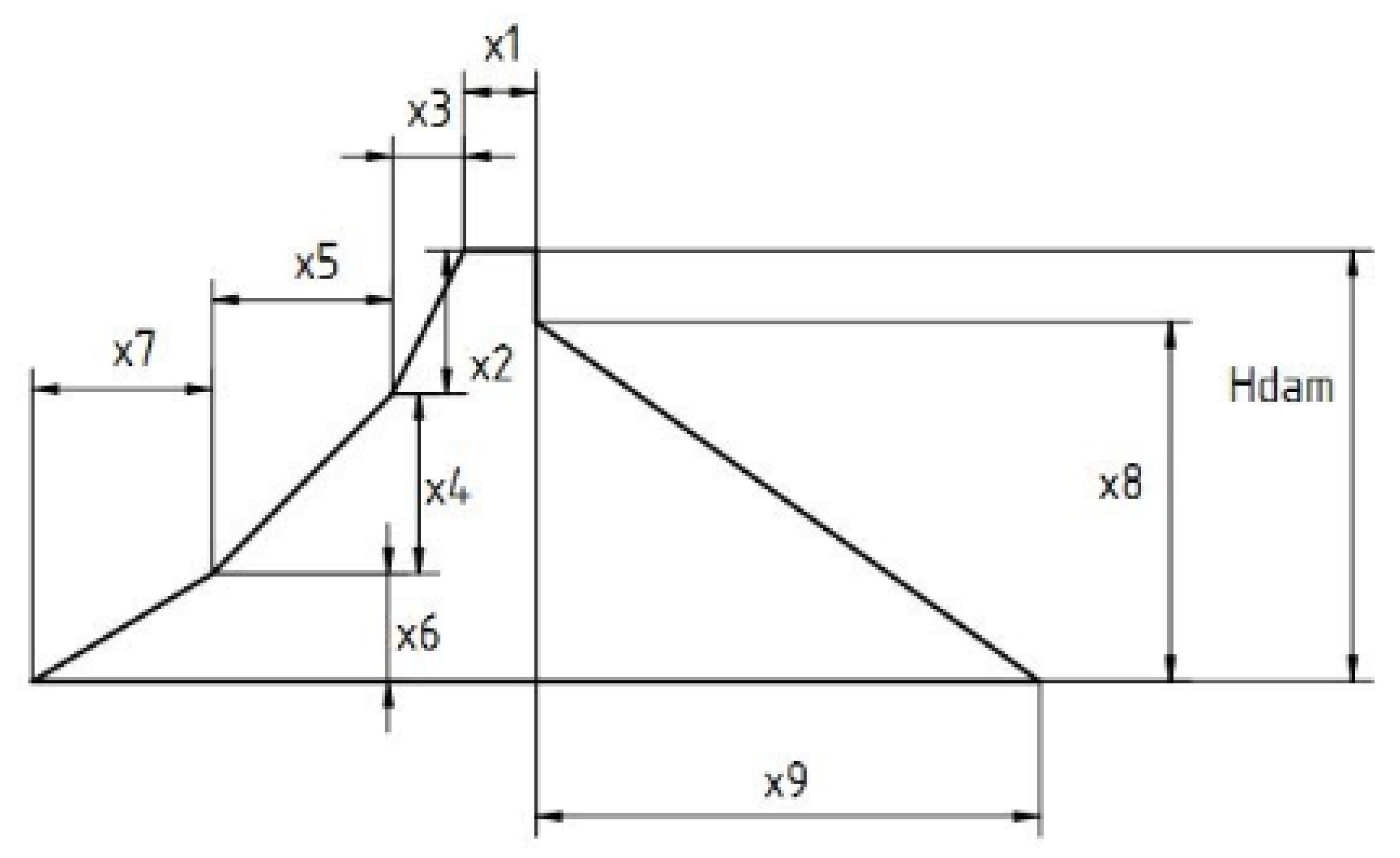

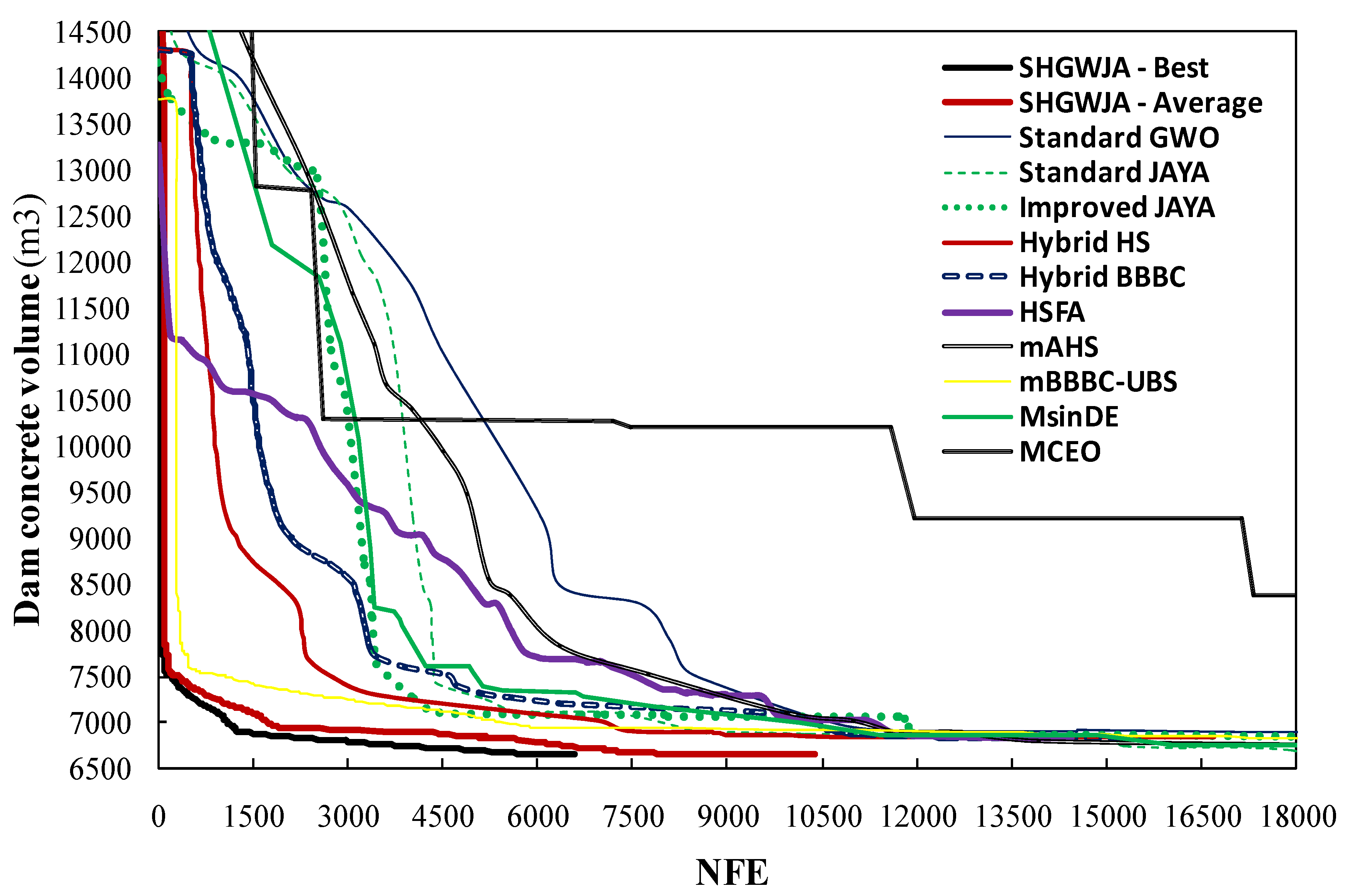

5.2. Shape Optimization of Concrete Gravity Dam

Table 3 presents the optimization results for the concrete gravity dam design problem. The table also reports the average number of function evaluations

NFE (structural analyses), the corresponding standard deviation, and the number of function evaluations required by the fastest optimization run for SHGWJA and some of its competitors.

The multi-level cross entropy optimizer (MCEO) [

120] and the flying squirrel optimizer (FSO) [

121] reduced the concrete volume per unit width of 10,502.1 m

3 of the real structure to only 7448.774 m

3. The hybrid harmony search, big bang–big crunch and simulated annealing algorithms developed in [

93] found a similar solution to the improved JA [

93] (a JAYA variant including line search to reduce the number of function evaluations entailed by the optimization) and significantly reduced the dam’s concrete volume to about 6831 m

3. However, hybrid HS/BBBC/SA completed the optimization process within less function evaluations and converged to practically feasible designs while JAYA’s solution violated constraints by 4.06%. The 32% constraint violation reported for MCEO and FSO solutions refers to the fact that the constraint on the dam’s height

X2 +

X4 +

X6 = 150 m (see

Section 4.2) was not considered in [

120,

121].

It can be seen from

Table 3 that the simple hybrid algorithm SHGWJA proposed in this study was the best optimizer overall. In fact, it could reduce the dam’s concrete volume by 2.68% with respect to the best solutions quoted in [

93], reaching the optimal volume of 6647.874 m

3. This optimal solution practically satisfied the design constraints, achieving a very low violation of 1.284·10

−3%. The number of function evaluations performed in the SHGWJA optimizations was on average 50% lower than for the metaheuristic algorithms of Ref. [

93] (i.e., only 10,388 analyses vs. 14,560 to 16,200 analyses) and 70% for MCEO [

120], which required 35,000 function evaluations. FSO was reported in [

121] to be considerably faster than particle swarm optimization and genetic algorithms, thus achieving a similar converge speed to MCEO.

Standard JAYA converged to the same optimal solution as SHGWJA, 6647.874 m3, but required 2.5 times the average number of function evaluations of SHGWJA (i.e., the JAYA’s computational budget was set to 25,000 function evaluations while SHGWJA converged within only 10,388 evaluations) and almost four times the function evaluations of the fastest SHGWJA’s optimization run (i.e., 25,000 vs. only 6584). Standard GWO obtained a slightly worse solution than SHGWJA and standard JAYA in terms of concrete dam volume (6654.927 m3 vs. 6647.874 m3). The GWO’s solution was also practically feasible but constraint violation increased to 7.209·10−3 vs. only 1.284·10−3% recorded for SHGWJA and standard JAYA. Furthermore, the computational cost of GWO was about five times higher than for SHGWJA (i.e., 50,000 analyses vs. only 10,388 analyses). This confirms the validity of the hybrid search scheme used by SHGWJA.

The modified sinusoidal differential evolution (MsinDE) (derived from [

124]), the modified big bang–big crunch with upper bound strategy (mBBBC–UBS) (derived from [

123]), and modified harmony search optimization with adaptive parameter updating (mAHS) (derived from [

122]), respectively, ranked third, fifth and sixth in terms of optimized volumes that were very close to the global optimum found by SHGWJA and standard JAYA, ranging from 6652.513 to 6658.024 m

3 vs. only 6647.874 m

3. It should be noted that the SHGWJA’s elitist strategy

W(

) ≤ 1.1

W(

) implemented also in mAHS, mBBBC–UBS and MsinDE was very effective because it allowed these algorithms to improve the best solution quoted in the literature and to significantly reduce the gap with respect to the best optimizer. In particular, the optimized volumes by mAHS, mBBBC–UBS and MsinDE were at most 0.15% larger than the SHGWJA’s optimum volume (i.e., only 10.150 m

3 gap vs. 6647.874 m

3) vs. the 0.89% seen for the original algorithms with respect to hybrid BBBC, the best optimizer in Ref. [

93]. Furthermore, the optimized designs obtained by mAHS, mBBBC–UBS and MsinDE in their best runs were always practically feasible: in fact, constraint violation was, respectively, 1.311·10

−3%, 3.376·10

−3% and 3.653·10

−3% vs. 1.437·10

−3%, 6.733·10

−3% and 6.598·10

−3%, as reported in [

93]. The computational cost of the best optimization runs was reduced compared to [

93], saving about 10,000 function evaluations in the case of mAHS. However, mBBBC–UBS, mAHS and MsinDE still required on average more than three times the function evaluations required by SHGWJA (i.e., from 31,690 to 35,208 analyses vs. only 10,388 analyses) and their best optimization runs required 4.9 to 5.7 times more function evaluations than the present algorithm (i.e., from 32,004 to 37,545 analyses vs. only 6584 analyses).

Remarkably, SHGWJA achieved a 100% rate of success converging to the same optimal solution of 6647.874 m

3 in all independent optimization runs with a null standard deviation on the optimized cost. None of the other algorithms referred to in

Table 3 could obtain in all of their optimization runs better designs than the best volume of 6830.235 m

3 quoted in [

93] for hybrid BBBC. The standard deviation on the number of function evaluations required by SHGWJA was about 35% of the average number of function evaluations. These results prove the robustness of SHGWJA.

Further information on SHGWJA’s convergence behavior and robustness can be gathered from

Figure 9, which compares the optimization history of the proposed algorithm and its competitors. The curves relative to best and average optimization runs of SHGWJA are shown in the figure. Since SHGWJA always converged to the global optimum of 6647.874 m

3 in all optimization runs, its best run also corresponds to the fastest one. For the sake of clarity, the plot is limited to the first 18,000 function evaluations. The initial cost function value for the best individual of all algorithms ranged from 13,265.397 m

3 to 17,351.728 m

3, well above the global optimum of 6647.874 m

3. The better convergence behavior of SHGWJA with respect to its competitors is clear from the very beginning of the search process. In fact, the optimized cost found on average by SHGWJA for a feasible intermediate design generated within the 6584 function evaluations of the best optimization run was only 0.933% larger than the target global optimum. Interestingly, in its best run, SHGWJA could generate a feasible intermediate design just 1% worse than the global optimum at approximately 69% of the optimization process. The mBBBC-UBS algorithm was the only optimizer close enough to SHGWJA in terms of convergence speed for the first 630 function evaluations and for 5400 to 6600 function evaluations.

Table 4 lists the optimized designs obtained by SHGWJA and its main competitors. SHGWJA and standard JAYA designs coincided as these algorithms converged to the same optimal solution and were just slightly different from the optimized designs of standard GWO and MsinDE. The new optimal solution yielding 6647.874 m

3 concrete volume was very similar to the optimal solutions of the algorithms of Ref. [

93]: all variables changed by at most 5% except X

3, which was fixed to its lower bound by SHGWJA or close to the lower bound by GWO and MsinDE.

Figure 10 compares the dam’s optimized shapes (coordinates are expressed in meters) as found by SHGWJA and MsinDE, with the dam configurations reported in [

93,

120,

121]. Since standard JAYA found the same optimum design as SHGWJA, while standard GWO, mAHS, mBBBC–UBS and MsinDE obtained very similar configurations to SHGWJA, only the plot referred to MsinDE (the best optimizer after SHGWJA and standard JAYA) is shown in the figure for the sake of clarity.

Remarkably, the dam safety factors against sliding and overturning corresponding to the SHGWJA’s optimal solution became 6.318 and 3.515, respectively, vs. their counterpart values of 6.213 and 3.453 indicated in [

93]. Hence, reducing the concrete volume of the dam from 6831 m

3 to 6647.874 m

3 not only resulted in a more convenient design but also allowed a higher level of structural safety to be achieved. This proves the suitability of the proposed SHGWJA algorithm for civil engineering design problems.

5.3. Optimal Design of Tension/Compression Spring

Table 5 presents the optimization results obtained by SHGWJA and its competitors in the spring design problem. Statistical data on the optimization runs (i.e., best cost, average cost, worst cost and standard deviation optimized cost for the independent optimization runs), number of function evaluations

NFE and optimal design are listed in the table for all the algorithms compared in this study. The values of the standard deviation on optimized cost listed in the table for the referenced algorithms are exactly those reported in the literature.

It can be seen that the SHGWJA algorithm proposed in this study performed very well: its best solution, 0.0126665, practically coincided with the optimized costs of MGWO-III —the astrophysics-based GWO variant of Ref. [

39] (0.0126662) -, the preschool education optimization algorithm (PEOA) [

52] (0.012667), and the modified sine cosine algorithm (MSCA) [

99] (0.012668). SHGWJA’s optimized cost was only 0.0121% larger than the 0.012665 global optimum obtained by the JAYA variants EHRJAYA [

98], SAMP-JAYA [

111] and EJAYA [

112], the mother optimization algorithm (MOA) [

51], the marine predators algorithm (MPA) [

80], the hybridized differential evolution naked mole-rat algorithm (SaDN) [

94] and improved multi-operator differential evolution (IMODE) [

125]. The gaze cues learning-based grey wolf optimizer (GGWO) ([

105], the currently known best GWO variant), light spectrum optimizer (LSO) [

31], starling murmuration optimizer (SMO) [

74], hybrid EGTO algorithm combining marine predators and gorilla troops optimizer [

102] and queuing search algorithm (QSA, which mimics the human activities in queuing and was the best amongst the nine MAs compared in [

127]) converged to 0.0126652, the same as for EHRJAYA, MOA, MPA, SaDN and IMODE up to the fifth significant digit. The high-performance algorithm success history-based adaptive differential evolution with gradient-based repair (En(L)SHADE) was reported in [

126] to obtain an optimized cost of 1.27·10

−2, corresponding to the round up of the 0.0126652 cost.

The arithmetic optimization algorithm (AOA) [

43] and its improved variant IAOA [

96] found the smallest optimized costs, respectively, of 0.012124 and 0.012018, but the corresponding optimal designs were infeasible, violating the second constraint equation—g

2(

) in Equation (19)—by 8.05% and 10.8%. All other algorithms compared in

Table 3 obtained practically feasible solutions, violating constraints by less than 0.143%. The learning cooking algorithm (LCA) was reported in [

53] to converge within 30,000 function evaluations to the optimized cost of 0.0125 for the corresponding solution

(0.05566; 0.4591; 7.194). However, the actual value of cost function computed by giving in input

to Equation (19) is 0.013107, much higher than the 0.012665 optimum quoted in

Table 5.

The rate of success of SHGWJA was 100% also for this test problem: in fact, the present algorithm found very close designs to the global optimum in all independent optimization runs. SHGWJA obtained the seventh-lowest standard deviation on optimized cost out of the 29 MAs compared in this study (21 listed in

Table 3 and the other 8 from Ref. [

127], while no information was available for LSO and its 31 competitors, compared in [

31]), thus ranking in the first quartile. The standard deviation on optimized cost was only 0.0876% of the best design found by SHGWJA. Furthermore, the average optimized cost and worst optimized cost by SHGWJA, respectively, were at most 0.134% and 0.213% larger than the 0.012665 target value. Statistical dispersion on the optimized cost was very similar in its counterparts, that is, in MGWO-III, EHRJAYA and PEOA. However, EHRJAYA exhibited a larger average optimal cost than SHGWJA, while the worst solutions of MGWO-III and PEOA achieved a larger cost than that of SHGWJA.

As far computational cost is concerned, SHGWJA was on average the third fastest optimizer overall after EHRJAYA and LSO. However, the fastest optimization run of SHGWJA converging to the best solution of 0.0126665 was completed within only 2247 function evaluations, practically the same as for EHRJAYA, while the LSO’s convergence curve indicated in [

31] for the best optimization run covered 5000 function evaluations. Among other JAYA variants, SAMP-JAYA was just slightly slower than SHGWJA (6861 function evaluations vs. only 6347). Furthermore, the present algorithm was on average about (i) 4.7 times faster than MGWO-III, LCA, PEOA, SO, SMO and IMODE, (ii) 4 times faster than MPA, EGTO and MSCA, (iii) 3.6 times faster than GGWO, (iv) 2.8 times faster than QSA and the other algorithms of Ref. [

127], and (v) 2.4 times faster than EJAYA, EO, AOA, IAOA, SaDN and hSM-SA. It should be noted that

Table 5 compares the average number of function evaluations required by SHGWJA either with the fixed computational budget of all optimization runs or the computational cost of the best optimization run. Furthermore, SHGWJA was robust enough in terms of computational cost: the standard deviation on the number of function evaluations was about 35.4% of the average number of evaluations.

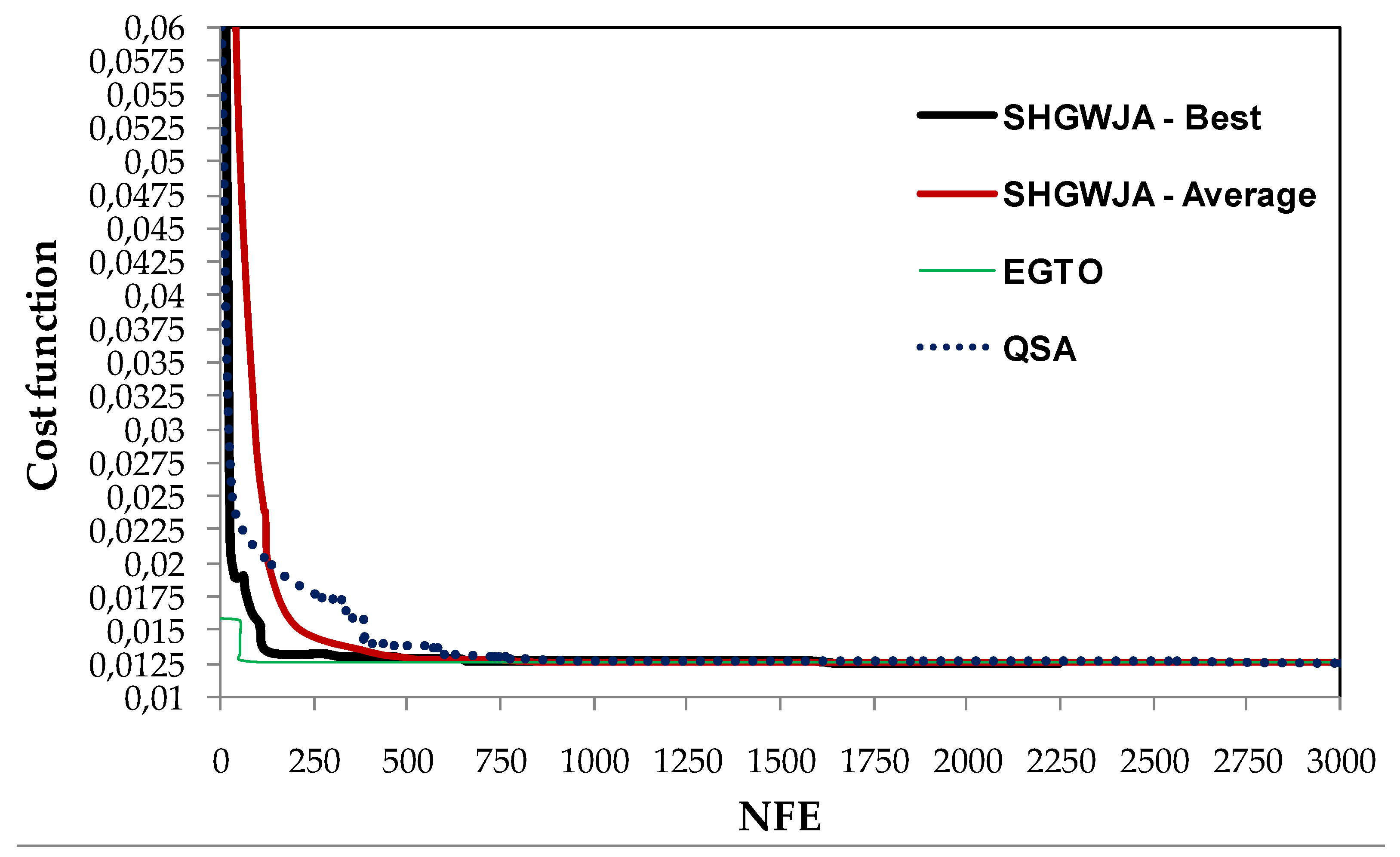

Figure 11 shows a more detailed comparison of the optimization history of SHGWJA with those of EGTO [

102] and QSA [

127]. For the sake of clarity, the plot is limited to the first 3000 function evaluations. It can be seen that the convergence curves of the best and average optimization runs recorded for the present algorithm practically coincided after only 700 function evaluations, that is, at about 31% of the optimization history of the best run. Interestingly, EGTO started its optimization search from a much better initial design than those of SHGWJA and EGTO: the initial cost evaluated for its best individual was only 0.016 vs. 0.06 for QSA, 0.1 for the best SHGWJA run and 0.1528 for the average SHGWJA run. In spite of this, the present algorithm recovered the gap with respect to EGTO already in the early optimization cycles and the convergence curves of EGTO and the best SHGWJA’s run crossed each other after 650 function evaluations. Furthermore, SHGWJA generated on average better feasible intermediate designs than QSA after only 135 function evaluations, keeping this ability over the first 750 function evaluations. After about 2500 function evaluations, the cost function values of 0.012673 and 0.012697, respectively, achieved by EGTO and QSA in their best optimization runs were still higher than the best cost of 0.0126665, finally reached by SHGWJA after only 2250 function evaluations.

The data presented in this section demonstrate without a shadow of a doubt the computational efficiency of SHGWJA. In fact, the present algorithm generated very competitive results as compared to the 21 state-of-the art MAs, each of which was in turn proven in the literature to outperform 5 to 34 other metaheuristic algorithms and their variants.

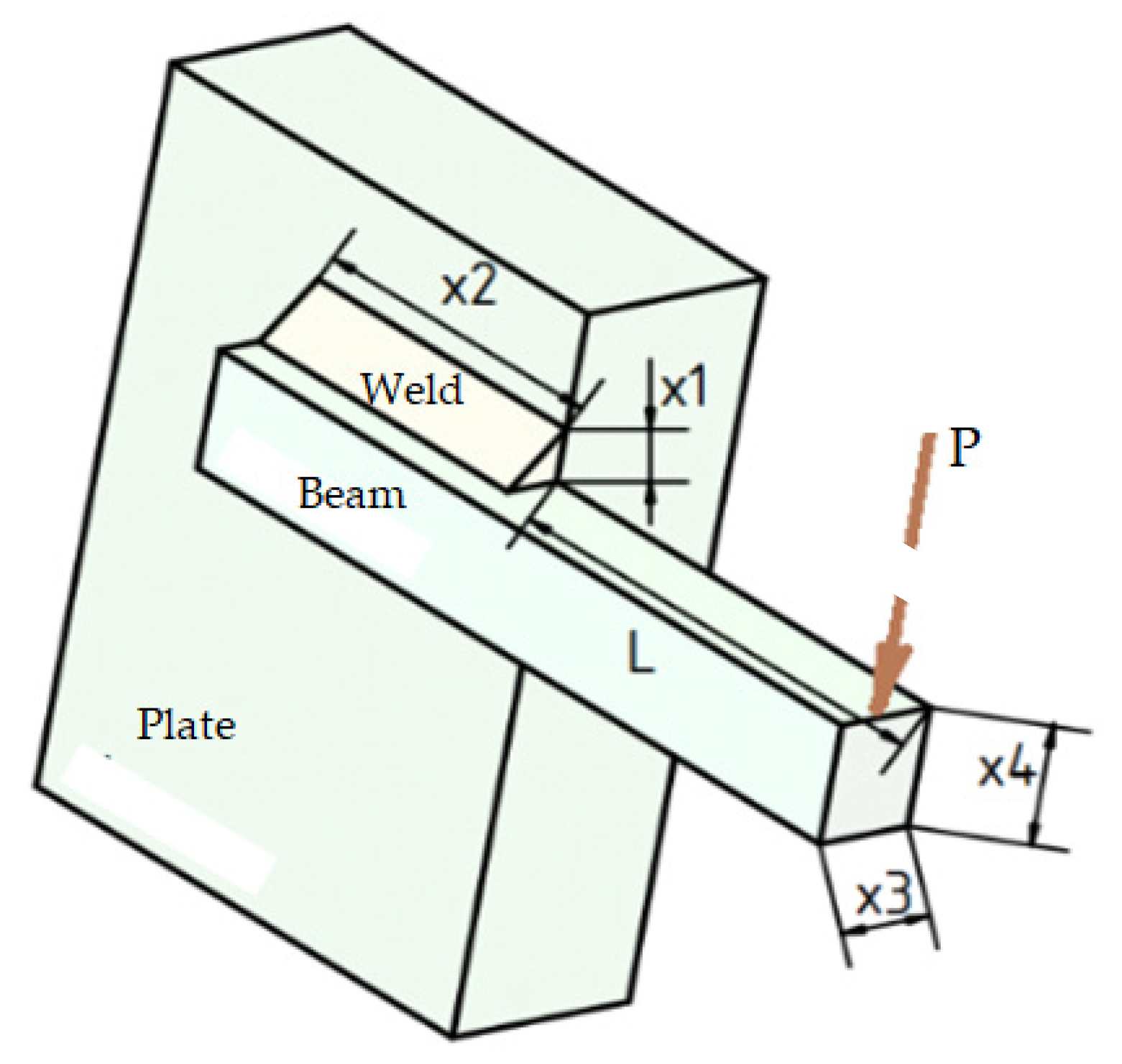

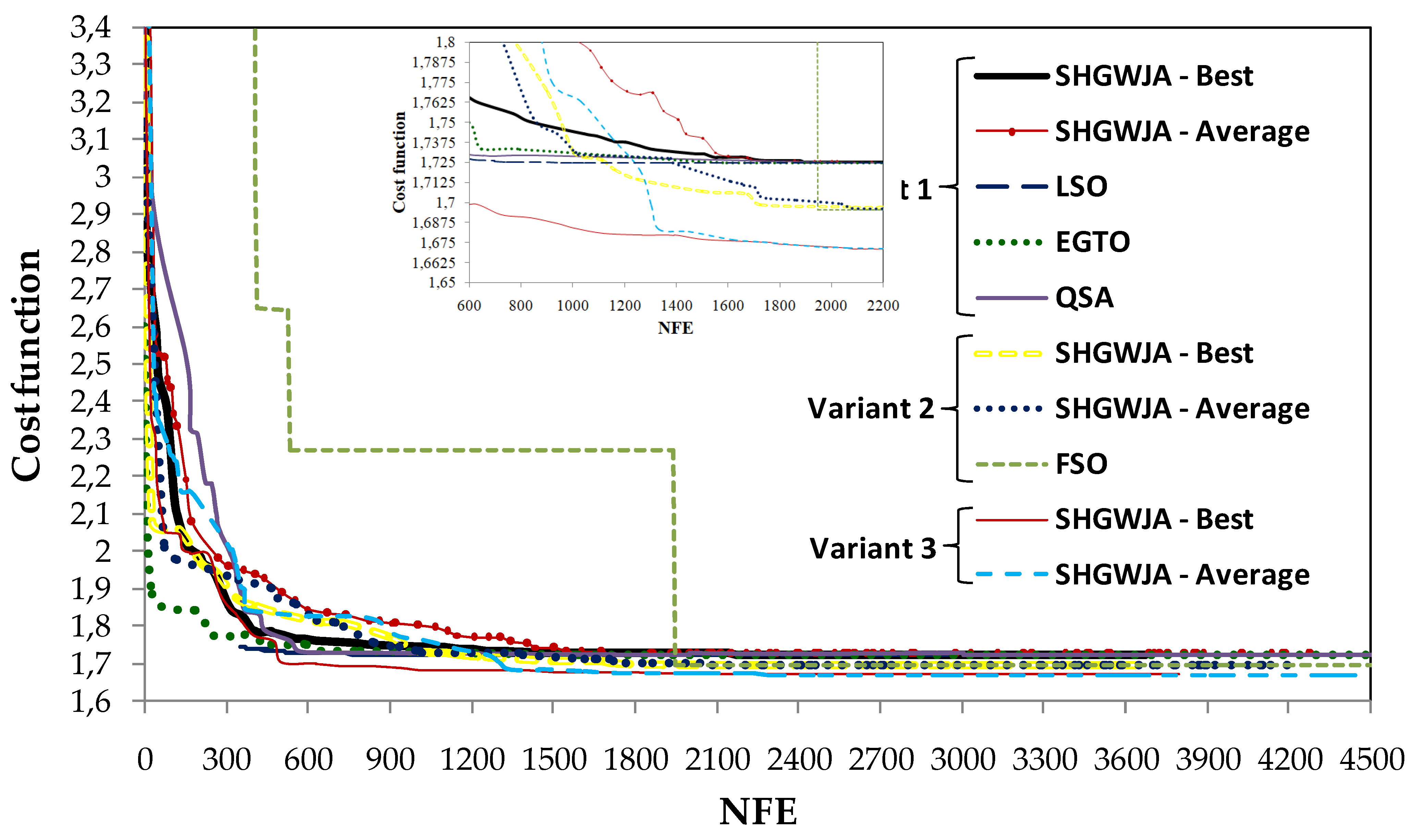

5.4. Optimal Design of Welded Beam

Table 6 presents the optimization results for the welded beam design problem. The values of the standard deviation on optimized cost listed in the table for the referenced algorithms are exactly those reported in the literature. It can be seen that the proposed SHGWJA method was the best optimizer overall. In fact, the optimum cost of 1.724852 corresponds to the target solution of the classical formulation (see

Section 4.4) and practically coincided with the optimized cost values obtained by all SHGWJA competitors except for the hybrid JAYA variant EHRJAYA [

98], arithmetic optimization algorithm (AOA) [

43], hybridized differential evolution naked mole-rat algorithm (SaDN) [

94], improved multi-operator differential evolution (IMODE) [

125], and Taguchi method integrated harmony search algorithm (TIHHSA) [

132]. In particular, IMODE and SaDN converged to the largest costs, respectively, about 63.8% and 14.6% higher than the optimum cost of 1.72485. The Taguchi method integrated harmony search algorithm (TIHHSA) [

132] converged to an optimized cost of 1.74026, close enough to the global optimum reached by SHGWJA.

The arithmetic optimization algorithm (AOA) [

43] converged to an optimized solution yielding a slightly lower cost than SHGWJA: 1.7164 vs. 1.72485. However, this solution violates the shear stress constraint of the classical problem formulation—g

1(

) in Equation (20)—by 25.3%. All other algorithms of

Table 6 solving the classical problem formulation converged to feasible designs.

SHGWJA found the target optimum cost of 1.6702 in all optimization runs (zero standard deviation), as well as in problem variant 3 of the CEC 2020 library, including J = 2