1. Introduction

Many students experience difficulties and challenges in tertiary-level mathematics courses [1]. When students enter their first year of university, they face a series of transition shocks as they move from a setting in which concepts have an empirical and intuitive foundation to one in which concepts are clarified by formal definitions and properties are reconstructed through logical inference [2,3,4]. This transition goes beyond changes in content to include changes in pedagogical approaches, learning methodologies, and assessment modalities [5]. Undoubtedly, these factors contribute to the high failure rates observed in foundational mathematics [6]. Many countries and regions have reported that students perform poorly in first-year mathematics courses. They note that unacceptably high failure rates in these courses have prompted many students to leave mathematics-intensive programs, delay their progression from one academic level to the next, or drop out of university [7,8,9]. In China, the quality of first-year mathematics courses is also the subject of heated debate. Factors such as students’ learning approaches and attitudes, teachers’ instructional methods, and the design of institutional textbooks have been implicated in students’ poor performance [10,11,12,13]. Moreover, as part of efforts to build a high-quality mathematics education system in China, formative assessment (FA) and assessment for learning (AFL) have received increasing attention in recent years [14,15,16]. However, few studies have used systematic empirical approaches, especially to examine how students experience and perceive their mathematics teachers’ FA and AFL practices.

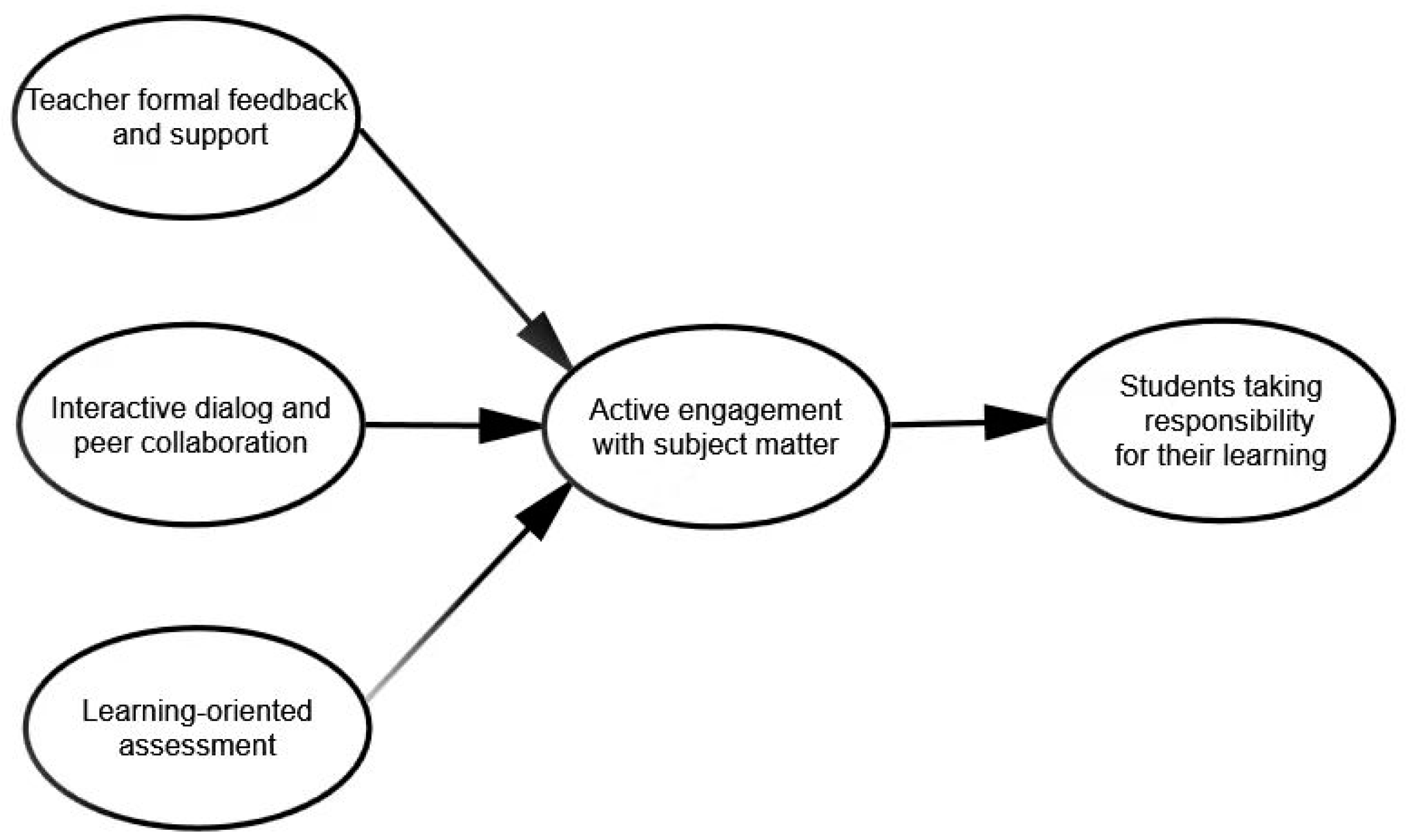

Specifically, formative assessment (FA) and assessment for learning (AFL) signify a departure from the conventional paradigm that perceives learning as the mere accumulation and production of knowledge. Instead, they align with a contemporary paradigm that views learning as a dynamic process of discovering and constructing knowledge [17]. It should be noted that AFL emphasizes the learning and teaching process, whereas FA emphasizes the function of particular assessments [18]. In addition, pupils exercise agency and autonomy in AFL, whereas in FA they are often seen as passive recipients of their teachers’ assessments [19]. For example, Black et al. point out a case that is FA but not AFL [20]. In that case, teachers collect information from periodic tests and assessments of their current students for long-term curriculum improvement, so the beneficiaries are pupils at some future stage. In other words, in AFL teaching cannot exist independently of learning, a principle Wiliam describes as “keeping learning on track” [21]. In university mathematics courses, many theorems and proofs must be taught in a limited amount of time. How teachers use feedback, interaction, and assessment is therefore a key component of effective classroom instruction. Students’ perceptions of these activities and strategies can affect their mathematics performance, course engagement, and learning approaches [22,23]. AFL advocates several related pedagogical strategies, namely formal feedback from tutors, dialogic teaching and peer interaction, appropriate assessment tasks, and opportunities for understanding [24]. In this paper, we prefer the term AFL because it incorporates a broader range of activities and strategies consistent with advanced pedagogical approaches, rather than approaching assessment from a purely FA perspective [19].

An extensive body of literature provides evidence that AFL practices are embedded in the pedagogy of many teachers in higher education [25,26,27]. However, after synthesizing the relevant literature, Carless noted that research and development work congruent with AFL was little known in China [28], let alone in mathematics education in higher education. This study investigated students’ perceptions of their mathematics teachers’ AFL practices, including feedback, interaction, and assessment. It focused on how students’ perceptions of specific AFL approaches affected their active engagement with the subject matter, which in turn influenced their responsibility for learning in first-year mathematics courses. Our findings aim to fill the gap in knowledge of AFL in Chinese higher mathematics education. They also further examine the scope of AFL practices in recent mathematics curriculum reforms and provide a basis for instructional interventions in the teaching and learning process. In addition, some findings differ from those reported in Western contexts, offering new perspectives and cases for global AFL research.

The remainder of this article is organized as follows. In Section 2, we review the relevant literature to introduce the educational system and assessment practices in China, the development of AFL practices and strategies, and the conceptual model of this study. We then describe the research methodology in Section 3 and present the research findings in Section 4. Sections 5 and 6 discuss the findings and their implications, respectively. Finally, Section 7 summarizes the main findings as conclusions, and Section 8 discusses limitations and directions for future work.

3. Methodology

This study used a quantitative approach to investigate the factors that influence undergraduate students’ responsibility for learning in first-year mathematics courses. This approach allows different variables to be compared and yields rigorous and objective results [59]. In addition, the study examined the mediating effects among the relationships in the conceptual model. The Assessment for Learning Experience Inventory (AFLEI) questionnaire served as the primary data-collection instrument; its five factors are adapted from the AFL conceptual model [44]. Partial least-squares structural equation modeling (PLS-SEM) was used to analyze these data against the AFL conceptual model.

3.1. Sample of the Study

Second-year students at a “double-first-class” Chinese university were selected as respondents. These students had already completed the basic mathematics courses required by the university curriculum. Their experience of the AFL activities and strategies in first-year mathematics courses was also the most recent relative to the time of our survey. The questionnaire was distributed and collected online, and each student could respond only once. Using convenience sampling, we recruited a total of 168 second-year undergraduate students from non-mathematics majors. Their mean age was 18.99 years (SD = 2.20). The sample can be considered representative of a homogeneous population because all volunteers had completed the same credits and content in mathematics courses and had taken the same examinations [60]. In addition, a full year of learning in mathematics courses would help students compare their actual learning experiences more accurately with those described in the survey, making their responses more relevant.

3.2. Measuring Instrument

We used the AFLEI questionnaire to generate data on students’ perceptions of AFL activities and strategies in first-year mathematics courses [24,40,61]. The questionnaire was developed from the theory of AFL practices and strategies and from existing assessment experience questionnaires. Exploratory factor analyses (EFAs) showed a Kaiser–Meyer–Olkin (KMO) statistic of 0.88, exceeding the minimum adequacy value of 0.50, and a significant Bartlett’s test of sphericity (χ² = 1881.09, p < 0.001). Confirmatory factor analyses (CFAs) showed good model fit (SRMR = 0.07; CFI = 0.91). Together, the EFA and CFA results indicate that the AFLEI questionnaire has a strong psychometric basis. The final version of the AFLEI questionnaire has 18 items divided into five subscales [44]:

- teacher formal feedback and support;
- interactive dialog and peer collaboration;
- learning-oriented assessment;
- active engagement with subject matter;
- students taking responsibility for their learning.
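For reference, the two adequacy statistics reported above have standard textbook definitions, sketched below. These formulas are not given in the source, and the software used may apply minor variants. The symbols are:

- n: the number of respondents;
- p: the number of items;
- R: the p × p inter-item correlation matrix;
- r_ij: the off-diagonal correlations;
- a_ij: the corresponding partial (anti-image) correlations.

```latex
\chi^2_{\text{Bartlett}} = -\left[(n - 1) - \frac{2p + 5}{6}\right]\ln\lvert\mathbf{R}\rvert,
\qquad df = \frac{p(p - 1)}{2}

\mathrm{KMO} = \frac{\sum_{i \neq j} r_{ij}^{2}}
                    {\sum_{i \neq j} r_{ij}^{2} + \sum_{i \neq j} a_{ij}^{2}}
```

The source does not state how many items entered the EFA. If the test was run on the 18 final items (an assumption), it has 18 × 17 / 2 = 153 degrees of freedom. KMO approaches 1 when partial correlations are small relative to zero-order correlations, which is why values such as 0.88 are read as adequate.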

Table 1 shows each subscale of the AFLEI questionnaire, brief descriptions of each dimension, and sample items. The full English and Chinese translations of the questionnaire are available in Appendix A and Appendix B, respectively.

To avoid a neutral midpoint and to capture finer differences in attitudes, students rated their agreement with each item on a six-point Likert scale: strongly disagree, disagree, slightly disagree, slightly agree, agree, and strongly agree. Additional items asked students to report their gender, age, and major, and we undertook to keep this information confidential.

3.3. Data Collection and Analysis

For our empirical study, we prepared an electronic version of the AFLEI questionnaire and administered it in the fall of 2022. All collected data are provided in Table S1. An initial screening indicated that the data contained no outliers or missing values that would pose a challenge to subsequent analysis. We therefore calculated the scores for the five dimensions of the AFLEI questionnaire by averaging the corresponding item scores. The data were then analyzed in three steps using SPSS 26.0 and SmartPLS 4.0:

1. SPSS 26.0 was used for descriptive and preliminary analyses, including the internal consistency of the scales, the KMO statistic, and Bartlett’s test of sphericity.
2. The Shapiro–Wilk test was conducted to check the normality of the data.
3. SmartPLS 4.0 was used to examine how teacher formal feedback and support, interactive dialog and peer collaboration, learning-oriented assessment, and active engagement with the subject matter influence students’ taking responsibility for their learning in first-year mathematics courses.

Because the study had only 168 respondents and the sample distribution was non-normal, PLS-SEM is considered more appropriate than the traditional covariance-based structural equation modeling (CB-SEM) approach [62,63]. Compared with CB-SEM, PLS-SEM requires a smaller sample size and does not require the data to follow a normal distribution [64].
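The Python snippet below is a minimal sketch of the scoring step described above. It codes the six verbal response options as 1 to 6 and averages each respondent’s item scores within a dimension. The numeric coding, the function name `dimension_scores`, and the item IDs are assumptions for illustration. The actual item-to-subscale allocation is the one given in Table 1 and Appendix A.

```python
import statistics
from typing import Dict, List

# Six-point agreement scale, coded 1 (lowest) to 6 (highest).
# The numeric coding is an assumption; the source lists only the verbal labels.
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "slightly disagree": 3,
    "slightly agree": 4,
    "agree": 5,
    "strongly agree": 6,
}


def dimension_scores(response: Dict[str, str],
                     dimensions: Dict[str, List[str]]) -> Dict[str, float]:
    """Code one respondent's verbal answers and average them within each dimension."""
    coded = {item: LIKERT_CODES[label.strip().lower()] for item, label in response.items()}
    return {
        dim: statistics.fmean([coded[item] for item in items])
        for dim, items in dimensions.items()
    }


# Hypothetical item IDs and item-to-dimension mapping, for illustration only.
DIMENSIONS = {
    "active_engagement": ["item_a1", "item_a2", "item_a3"],
    "responsibility": ["item_r1", "item_r2", "item_r3"],
}

respondent = {
    "item_a1": "Agree", "item_a2": "Strongly agree", "item_a3": "Slightly agree",
    "item_r1": "Agree", "item_r2": "Agree", "item_r3": "Strongly agree",
}

print(dimension_scores(respondent, DIMENSIONS))
```

The same item averaging applied across respondents gives the per-item and per-dimension means reported in the Results.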

4. Results

The findings are presented in three parts. First, students’ general perceptions of AFL activities and strategies were inferred from their questionnaire responses. The score for each dimension was obtained by averaging each student’s responses to the items of that dimension, and the score for each item was the mean of all participants’ responses to it. Second, the composite reliability, convergent validity, and discriminant validity of the questionnaire were tested through the measurement model evaluation of the PLS-SEM. Finally, PLS-SEM bootstrapping was used to estimate the effects of different aspects of AFL activities and strategies on Chinese undergraduate students’ ability to take responsibility for their learning in first-year mathematics courses. The same bootstrapping procedure was used to estimate the mediating effects among the relationships in the AFL conceptual model.
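The bootstrapping step mentioned above works in three stages. It resamples respondents with replacement, re-estimates the coefficients on each resample, and reads a confidence interval off the resulting distribution. The Python sketch below illustrates the percentile version of this idea for a single standardized path with one predictor, where the standardized coefficient equals the Pearson correlation. It is a simplified illustration, not SmartPLS’s procedure, which re-estimates the full PLS path model on every subsample. The data are synthetic, and the function names and number of resamples are illustrative choices.

```python
import random
import statistics
from typing import List, Tuple


def pearson_r(x: List[float], y: List[float]) -> float:
    """Pearson correlation; equals the standardized slope when there is a single predictor."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def bootstrap_path_ci(x: List[float], y: List[float], n_boot: int = 5000,
                      alpha: float = 0.05, seed: int = 42) -> Tuple[float, float]:
    """Percentile bootstrap confidence interval for a single standardized path."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        # Resample respondents (cases) with replacement, keeping each x-y pair together.
        idx = [rng.randrange(n) for _ in range(n)]
        xs = [x[i] for i in idx]
        ys = [y[i] for i in idx]
        # Skip degenerate resamples in which a variable has no variance.
        if len(set(xs)) > 1 and len(set(ys)) > 1:
            estimates.append(pearson_r(xs, ys))
    estimates.sort()
    k = len(estimates)
    lower = estimates[int(alpha / 2 * k)]
    upper = estimates[int((1 - alpha / 2) * k) - 1]
    return lower, upper


# Synthetic scores for two constructs (not the study's data), n = 168.
gen = random.Random(0)
engagement = [gen.gauss(0, 1) for _ in range(168)]
responsibility = [0.7 * e + gen.gauss(0, 0.7) for e in engagement]

estimate = pearson_r(engagement, responsibility)
low, high = bootstrap_path_ci(engagement, responsibility, n_boot=2000)
print(f"estimate = {estimate:.3f}, 95% CI = [{low:.3f}, {high:.3f}]")
```

With only one predictor the bivariate correlation suffices. For an endogenous construct with several predictors, such as active engagement in this study, each resample would instead require re-estimating a multiple regression or the full PLS model.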

4.1. Students’ Perceptions of AFL Practices in First-Year Mathematics Courses

The results presented in Table 2 show no overwhelming agreement that the AFL activities and strategies students experienced in first-year mathematics courses were sufficient. This information suggests that more assessment tasks should be developed to improve teaching and learning at both the class and institutional levels. Specifically, the active engagement with the subject matter factor received a mean score of 4.73, the lowest of the five factors. Teacher formal feedback and support, interactive dialog and peer collaboration, and learning-oriented assessment received mean scores of 5.09, 4.95, and 5.00, respectively. These outcomes indicate that students recognized the utility of the AFL activities and strategies employed in first-year mathematics courses. On a more positive note, the factor of students taking responsibility for their learning achieved a mean score of 5.15, the highest of the five. This suggests relatively widespread agreement among students about the importance of managing and taking responsibility for their learning.

4.2. Measurement Model Evaluation

The Cronbach’s alpha reliability coefficients for the five subscales of the questionnaire were 0.938, 0.875, 0.885, 0.942, and 0.883. The Kaiser–Meyer–Olkin (KMO) statistic was 0.939, exceeding the minimum adequacy value of 0.50. Bartlett’s test of sphericity yielded a significant chi-square value of 3126.848 (p < 0.001). Together, these results indicate that the data were suitable for factor analysis [65]. As shown in Table 3, the lowest outer loading was 0.794, observed for the construct of interactive dialog and peer collaboration; this exceeds the recommended threshold of 0.708. Furthermore, all t-statistics of the outer loadings were greater than 2.58, the two-tailed critical value at the 0.01 level (all p < 0.001), indicating strong indicator reliability [66]. The Cronbach’s alpha and composite reliability (CR) values of all constructs were approximately 0.9 or higher, well above the critical value of 0.7. The model therefore had good internal consistency reliability [60]. Meanwhile, all variance inflation factor (VIF) values were below 10, and most were below 5, indicating that the model did not have a serious multicollinearity problem [67,68].
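The two internal-consistency indices reported above can be computed as follows. This sketch uses the standard formulas:

- Cronbach’s alpha is computed from item and total-score variances.
- Composite reliability (ρc) is computed from standardized outer loadings.

The scores and loadings are hypothetical, not taken from Table 3. SPSS and SmartPLS may differ in implementation details.

```python
import statistics
from typing import List


def cronbach_alpha(items: List[List[float]]) -> float:
    """Cronbach's alpha; `items` holds one list of respondent scores per item."""
    k = len(items)
    n_respondents = len(items[0])
    # Sum of the (sample) variances of the individual items.
    sum_item_var = sum(statistics.variance(col) for col in items)
    # Each respondent's total score across the k items.
    totals = [sum(col[r] for col in items) for r in range(n_respondents)]
    return k / (k - 1) * (1 - sum_item_var / statistics.variance(totals))


def composite_reliability(loadings: List[float]) -> float:
    """Composite reliability (rho_c) from standardized outer loadings."""
    s = sum(loadings)
    error_var = sum(1 - lam ** 2 for lam in loadings)  # indicator error variances
    return s ** 2 / (s ** 2 + error_var)


# Hypothetical scores for a three-item subscale (five respondents) and hypothetical loadings.
subscale = [
    [5, 6, 4, 5, 6],
    [5, 6, 4, 4, 6],
    [4, 6, 3, 5, 5],
]
print(round(cronbach_alpha(subscale), 3))
print(round(composite_reliability([0.80, 0.85, 0.90]), 3))
```

Both indices are compared with the 0.7 benchmark cited in the text. ρc uses the estimated loadings, whereas alpha implicitly treats all items as equally weighted.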

Although the sample size was small, the model comprised only five constructs, each measured by three or more items, and showed acceptable indicator and internal consistency reliability. Together, these conditions justified further validity analysis using PLS-SEM.

Table 4 reports convergent validity, measured by the average variance extracted (AVE), and discriminant validity, assessed with the Fornell–Larcker criterion. These address, respectively, how strongly the items of the same latent variable are correlated and how well the latent variables are differentiated from one another [60].
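For readers less familiar with these criteria, here is a minimal sketch:

- AVE is the mean of a construct’s squared standardized loadings; a value of at least 0.50 is the usual convergence benchmark.
- The Fornell–Larcker criterion holds when the square root of each construct’s AVE exceeds that construct’s correlations with every other construct.

The loadings and correlation below are hypothetical, and the function names are illustrative.

```python
import math
from itertools import combinations
from typing import Dict, FrozenSet, List


def average_variance_extracted(loadings: List[float]) -> float:
    """AVE: mean squared standardized loading of a construct's indicators."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def fornell_larcker_holds(ave: Dict[str, float],
                          corr: Dict[FrozenSet[str], float]) -> bool:
    """True if, for every pair of constructs, each sqrt(AVE) exceeds their absolute correlation."""
    for a, b in combinations(ave, 2):
        r = abs(corr[frozenset((a, b))])
        if math.sqrt(ave[a]) <= r or math.sqrt(ave[b]) <= r:
            return False
    return True


# Hypothetical loadings and latent-variable correlation, for illustration only.
ave = {
    "engagement": average_variance_extracted([0.85, 0.88, 0.90]),
    "responsibility": average_variance_extracted([0.80, 0.84, 0.87]),
}
corr = {frozenset(("engagement", "responsibility")): 0.65}
print({name: round(value, 3) for name, value in ave.items()}, fornell_larcker_holds(ave, corr))
```

In a full model, `corr` would hold one entry for each of the ten construct pairs.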

Indicator reliability, internal consistency reliability, convergent validity, and discriminant validity were thus all tested, and all values met the relevant criteria. The measurement model was therefore satisfactory, and the analysis proceeded to the next step.

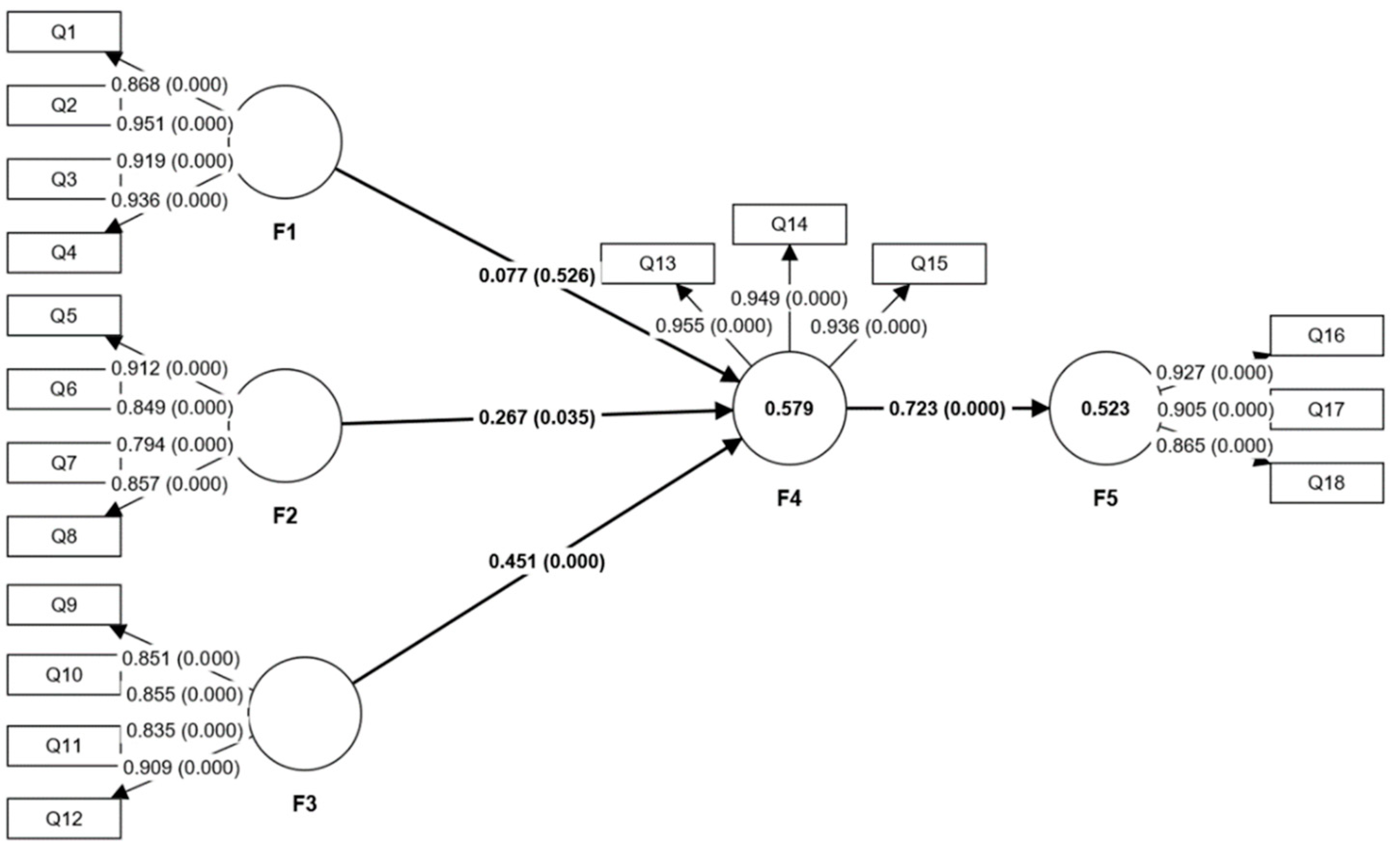

4.3. Structural Model Evaluation

Because the measurement model was found acceptable in the previous subsection, we proceeded to the structural model to examine the associations among the latent variables. First, we tested the overall fit of the model. According to Henseler et al. [69], “the overall goodness-of-fit of the model should be the starting point of model assessment”. The standardized root mean square residual (SRMR) and the normed fit index (NFI) are commonly used to evaluate the suitability and robustness of the model [70]. In this study, the SRMR value was 0.056, below the 0.08 threshold. The NFI value was 0.833, below 0.90 but still within an acceptable range [71]. The model was therefore considered adequate for empirical analysis, and the estimated PLS-SEM is shown in Figure 2.
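The two fit indices can be illustrated roughly as follows:

- SRMR is the root mean square of the differences between the observed and model-implied correlation matrices, taken over the lower triangle with the diagonal included.
- NFI compares the model’s chi-square with that of a null model, following the general Bentler–Bonett definition.

The matrices and chi-square values below are hypothetical. SmartPLS’s internal computation of the chi-square values may differ from this textbook form.

```python
import math
from typing import List


def srmr(observed: List[List[float]], implied: List[List[float]]) -> float:
    """Root mean square of residual correlations over the lower triangle (diagonal included)."""
    p = len(observed)
    residuals = [observed[i][j] - implied[i][j] for i in range(p) for j in range(i + 1)]
    return math.sqrt(sum(r ** 2 for r in residuals) / len(residuals))


def nfi(chi2_model: float, chi2_null: float) -> float:
    """Bentler-Bonett normed fit index: 1 - chi2_model / chi2_null."""
    return 1 - chi2_model / chi2_null


# Hypothetical 3 x 3 correlation matrices (observed vs. model-implied).
observed = [[1.00, 0.52, 0.41], [0.52, 1.00, 0.60], [0.41, 0.60, 1.00]]
implied = [[1.00, 0.48, 0.45], [0.48, 1.00, 0.58], [0.45, 0.58, 1.00]]
print(round(srmr(observed, implied), 4), nfi(30.0, 150.0))
```

Smaller SRMR values indicate closer reproduction of the observed correlations, hence the "below 0.08" rule used above. NFI approaches 1 as the model chi-square shrinks relative to the null model.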

Based on Figure 2, Table 5 summarizes the hypothesis testing. The first three hypotheses concern the associations between AFL activities and strategies and active engagement with the subject matter:

- Teachers’ formal feedback and support had no significant effect on students’ active engagement with the subject matter (β = 0.077, p = 0.526 > 0.05).
- Interactive dialog and peer collaboration significantly affected students’ active engagement with the subject matter (β = 0.267, p = 0.035 < 0.05). This implies that students found their coursework more interesting and relevant to the wider world when they had more opportunities for discussion and experienced dialogic teaching and learning through peer interaction.
- Learning-oriented assessment had a strong effect on students’ active engagement with the subject matter (β = 0.451, p < 0.001). This suggests that learning-oriented assessment, which encourages students to rehearse their subject knowledge, could enhance their enjoyment of their work.

Overall, learning-oriented assessment was the strongest influence on students’ active engagement with the subject matter. In addition, active engagement with the subject matter had a strong effect on students’ responsibility for their learning (β = 0.723, p < 0.001). This suggests that greater engagement in coursework corresponds to a greater sense of responsibility for learning.

Furthermore, the results indicated that two AFL factors had indirect positive effects on students’ ability to take responsibility for their learning in first-year mathematics courses: interactive dialog and peer collaboration (β = 0.193, p < 0.05) and learning-oriented assessment (β = 0.326, p < 0.001). In both cases, the effect was mediated by students’ active engagement with the subject matter.
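The reported indirect effects can be checked by hand. Assume, as the reported values suggest, that active engagement is the only predictor of responsibility for learning. Then the indirect effect of each AFL factor is the product of two paths: its path to engagement (a) and the engagement-to-responsibility path (b). The sketch below uses the coefficients reported in this subsection; the dictionary labels and function name are illustrative. The significance of an indirect effect comes from bootstrapping the product, not from this arithmetic.

```python
from typing import Dict

# Standardized path coefficients reported in this subsection (AFL factor -> active engagement).
A_PATHS: Dict[str, float] = {
    "teacher formal feedback and support": 0.077,  # not significant (p = 0.526)
    "interactive dialog and peer collaboration": 0.267,
    "learning-oriented assessment": 0.451,
}
B_PATH = 0.723  # active engagement with subject matter -> taking responsibility for learning


def indirect_effect(a: float, b: float) -> float:
    """Indirect effect of X on Y through a single mediator M (product of coefficients)."""
    return a * b


for factor, a in A_PATHS.items():
    print(f"{factor}: a*b = {indirect_effect(a, B_PATH):.3f}")
```

Running it gives 0.193 and 0.326 for the two significant factors, matching the reported indirect effects. Teacher formal feedback and support gives about 0.056, and its indirect effect is not reported as significant.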

5. Discussion

The survey showed that interactive dialog and peer collaboration, as well as learning-oriented assessment, were the main factors influencing undergraduate students’ active engagement with mathematical subject matter and their responsibility for learning. In particular, learning-oriented assessment was the strongest factor affecting undergraduate students’ course engagement and responsibility for learning. The following paragraphs discuss each hypothesis in detail.

Teacher formal feedback and support had no significant impact on undergraduate students’ active engagement with the subject matter. Some studies hold that feedback effectively triggers appropriate formative responses in students when it is perceived as supportive [72,73]. In contrast, this study found that not all feedback provided to learners contributes to their engagement with the subject matter. One explanation lies in the realities of China’s higher mathematics education system, where students greatly outnumber teachers. One teacher often responds to hundreds of students, which makes individual feedback and support almost impossible to provide in such large classes. In addition, Chinese university students tend to dislike teacher-centered pedagogy and prefer teaching styles that encourage creativity and enable collaborative work [74]. This suggests that mathematics teachers should focus on student-centered strategies when implementing feedback practices in mathematics classrooms. Institutional administrators should also assign more teachers or tutors to mathematics classes.

Interactive dialog and peer collaboration had a significant impact on undergraduate students’ active engagement with the subject matter. Most studies have focused on formative feedback and assessment. Research on students’ perceptions of interactive dialog and peer collaboration is still in its infancy, and very few such studies have been reported in international journals. Yet this factor is closely related to the nature of AFL as a knowledge-construction process involving interactive dialog [75]. This result helps address this gap. It is also consistent with previous studies showing that students who had the opportunity to experience dialogic teaching and learning through peer interaction were often active participants in the classroom [76,77,78]. Mathematics teachers and institutional administrators could therefore give their students more opportunities to discuss theorems and propositions through interaction and collaboration.

Learning-oriented assessment had the strongest impact on undergraduate students’ active engagement with the subject matter. Learning-oriented assessment is an approach that advocates varied assessment tasks that encourage students to test out ideas, practice relevant skills, and rehearse subject knowledge [79]. We speculate that two kinds of practice encourage students to engage actively with the subject matter:

- formative assessment practices, such as asking students at the end of an examination to reflect on their learning processes and on how to improve their learning;
- innovative assessment methods, such as portfolios and concept mapping, that examine students’ mastery of the learning content.

This underscores that mathematics teachers and institutional administrators need to design assessment tasks more carefully, avoiding rote learning and emphasizing flexibility.

Undergraduate students’ active engagement with the subject matter had a strong and highly significant impact on their taking responsibility for learning. Students who score high on the former factor tend to be more active and motivated in their coursework. Students who score high on the latter factor are often more reflective, self-managed, and self-regulated, and thus better able to take responsibility for their learning [44]. This finding is consistent with Kaplan’s review [80], which concluded that the purpose of engagement is central to self-regulated action. The review also noted its potential to guide the search for meaningful dimensions along which to typify self-regulation. This suggests that mathematics teachers and institutional administrators should relate mathematical problems to the wider world and make course content interesting to engage students in mathematics courses.

Further mediation analysis revealed that active engagement with the subject matter mediated the effects of two factors on students taking responsibility for their learning: interactive dialog and peer collaboration, and learning-oriented assessment. More specifically, both of these factors had statistically significant indirect effects on students taking responsibility for their learning, whereas teacher formal feedback and support did not. This finding is not in line with previous studies showing that feedback is effective in triggering students’ responsibility for learning [73]. Given the contextual constraints of the Chinese education system, the long-term pressure of high-stakes exams pushes students to seek feedback directly from their teachers. This hinders the development of creative thinking and initiative, which are seen as prerequisites for students to take responsibility for their learning. Efforts to promote students’ responsibility for learning should therefore focus both on how teachers deliver interactive activities and assessment tasks and on how they can improve their feedback.

Given the unique contribution of this study, one might expect these findings to generalize to all Chinese universities and benefit all students struggling in advanced mathematics courses. However, Chinese higher education has now moved from the mass stage to the universal stage. Students’ mathematics backgrounds and teachers’ qualifications may vary across universities, so our findings should be extrapolated with caution. Nevertheless, the current findings provide insights into how Chinese undergraduate students experience and use their mathematics teachers’ AFL practices. They also offer teachers and institutional administrators implications for improving the learning, teaching, and assessment environment.

7. Conclusions

This study examined how undergraduate students’ perceptions of their teachers’ AFL practices in first-year mathematics courses affect their sense of responsibility for learning in China. The results provide further evidence on the characteristics of AFL activities and strategies carried out in mathematics classrooms. They revealed several significant effects of these perceptions on students’ sense of responsibility for learning, most of which were consistent with the findings of previous studies. Specifically, interactive dialog and peer collaboration, as well as learning-oriented assessment, were found to positively predict students’ active engagement with the subject matter and their taking responsibility for their learning. These results echo the pattern of relationships reported in previous studies conducted in Western contexts [85,86,87]. Given the desirable associations between these factors, instructors are advised to provide more opportunities for student collaboration in their first-year mathematics courses. They are also advised to use more varied assessment approaches and content. These opportunities should focus on developing students’ generic skills, such as communication and interaction skills. Meanwhile, student assessment should emphasize its formative function to facilitate students’ mastery and understanding of knowledge.

Compared with the findings of previous studies, this study also revealed some notable inconsistencies regarding the role of teacher formal feedback and support. In contrast to the findings by Patel et al. [58], teacher formal feedback and support did not have a significant relationship with students’ active engagement with the subject matter or their sense of responsibility for learning. This result differs markedly from the findings of previous studies conducted in Western contexts [49,73], which prompts us to rethink the role of university teaching in China. This may be because university teaching in China has long been characterized by teacher-centeredness and traditional pedagogy [88]. As stated before, students are traditionally seen as recipients of teaching who need only follow their teacher’s instruction and submit to their teachers’ will [32]. Chinese university students have been found to hold negative attitudes toward this passive teaching [89]. Even so, the belief persists in China that students cannot learn independently without their teachers’ spoon-feeding, didactic approaches [90], and this belief reinforces students’ reluctance to self-regulate. Teachers should therefore make more effort to improve the way they provide individualized feedback so that students can take responsibility for their learning. Likewise, we recommend professional development aimed at improving teacher feedback literacy and feedback delivery. Previous research shows that promoting positive student perceptions of teacher assessment practices enhances feedback use and student learning gains [60].

8. Limitations and Directions for Future Research

Using the PLS-SEM approach, the present study has provided some preliminary findings that may help monitor and scaffold the construction of high-quality systems in China. Nevertheless, the study has some limitations, which also point to directions for future research:

- The sample of second-year students was small. To make the results more generalizable and representative, further research could be conducted with a larger sample at a more appropriate time.
- Due to length limitations, the study did not examine differences based on student demographics, such as gender, disciplinary background, and type of institution, which are important issues to address.
- The current research used only quantitative methods, which cannot fully explain the complexities associated with undergraduate students’ responsibility for learning. Further studies should use mixed methods, complementing quantitative with qualitative approaches, to explore the factors that influence students’ ability to take responsibility for their learning in first-year mathematics courses.