1. Introduction

Interest in fractional stochastic calculus is continuously growing, often motivated by the need to construct stochastic models that include memory effects. Indeed, the non-locality of fractional integrals and derivatives can be exploited to develop models with memory and/or some form of history dependence. In particular, fractional operators allow us to construct new processes as solutions of stochastic fractional differential equations, expressed in terms of fractional integrals. Recently, several models [1,2,3,4,5] have begun to rely on fractional stochastic differential equations (fSDEs). Generalized fractional integrals (see, for instance, [6,7]) turn out to be extremely useful in such a framework, because more specialized fractional integrals (or, equivalently, fractional integrals with specialized kernels [8]) allow for the mathematical characterization of fractional solution processes, so that more refined and realistic stochastic models can be built. This explains the importance of Prabhakar fractional calculus [9,10] from both mathematical and practical points of view. The Mittag–Leffler fractional integrals we use are special cases of Prabhakar fractional integrals [11]. For integrals and derivatives of Mittag–Leffler functions, see [12] and the references therein.

Here, in particular, our purpose is to show that Mittag–Leffler fractional integrals (rigorously defined in Section 2), i.e., integrals like

and Mittag–Leffler stochastic fractional processes (rigorously defined in Section 2), i.e., processes like

are involved in the construction of solution processes

and

of the following coupled equations:

with two fractional orders

for the involved Caputo derivatives [12,13], and with

representing a standard Brownian motion. Coefficients

are real numbers, whereas some regularity conditions will be assumed on the coefficient functions

as specified in Section 2 and Section 3.

We call the process

the Mittag–Leffler (ML) fractional process. Note that the process

is obtained by applying a fractional integration procedure to the process

For this reason, we also say that

is obtained by fractionally integrating the process

We provide representation formulae for such processes by means of Mittag–Leffler fractional integrals, together with expressions for their mean and covariance functions in terms of ML fractional integrals. Although several numerical procedures for solving SDEs are available and can be specialized to the equations considered above (see, for instance, [14,15] and the references therein), we are mainly interested in the characterization of the stochastic integrals that solve Equations (1) and (2).

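Firstly, in order to clarify the connection with classical fractional integrals and derivatives, we recall the definitions of the fractional operators used in this paper, i.e., the fractional Riemann–Liouville (RL) integral [12,16] of order $\alpha>0$:

$$
I^{\alpha}_{0+}f(t)=\frac{1}{\Gamma(\alpha)}\int_{0}^{t}(t-s)^{\alpha-1}f(s)\,ds,\qquad t>0, \tag{3}
$$

with $\Gamma$ the Euler Gamma function, i.e., $\Gamma(z)=\int_{0}^{\infty}x^{z-1}e^{-x}\,dx$ for $z>0$, and the Caputo fractional derivative [12,17], where, for all $n-1<\alpha<n$ with $n\in\mathbb{N}$,

$$
{}^{C}D^{\alpha}_{0+}f(t)=\frac{1}{\Gamma(n-\alpha)}\int_{0}^{t}(t-s)^{n-\alpha-1}f^{(n)}(s)\,ds=I^{n-\alpha}_{0+}f^{(n)}(t), \tag{4}
$$

with $f^{(n)}$ the $n$-th derivative of $f$.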
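As a quick numerical illustration of definition (3), the following Python sketch evaluates the RL integral of order $\alpha$ (written $I^{\alpha}_{0+}$ here) by the midpoint rule after the change of variable $u=(t-s)^{\alpha}$, which removes the weak singularity of the kernel at $s=t$. It is an illustrative helper, not the R code used by the authors; the name `rl_integral` and the default grid size are assumptions.

```python
import math


def rl_integral(f, alpha, t, n=20000):
    """Riemann-Liouville integral I^alpha f(t), alpha > 0, by the midpoint rule.

    The change of variable u = (t - s)**alpha turns
        (1/Gamma(alpha)) * int_0^t (t - s)**(alpha - 1) * f(s) ds
    into
        (1/Gamma(alpha + 1)) * int_0^(t**alpha) f(t - u**(1/alpha)) du,
    so the weak singularity of the kernel at s = t disappears.
    """
    if t <= 0.0:
        return 0.0
    upper = t ** alpha
    h = upper / n
    acc = 0.0
    for k in range(n):
        u = (k + 0.5) * h  # midpoint of the k-th cell in the u variable
        acc += f(t - u ** (1.0 / alpha))
    return acc * h / math.gamma(alpha + 1.0)
```

For instance, `rl_integral(lambda s: 1.0, 0.6, 2.0)` should agree with $t^{\alpha}/\Gamma(\alpha+1)$ at $t=2$, $\alpha=0.6$, since the RL integral of a constant equal to one is $t^{\alpha}/\Gamma(\alpha+1)$.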

Note that we use the following identifications of notation:

and

We aim to study two particular cases involving the above fractional integrals: the case in which the RL fractional integral is taken with respect to Brownian motion, and the case in which the integrand is a stochastic process. In both cases, the above integrals have to be understood in a

interpretation. We show that such processes arise as solutions of fractional differential equations.

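With this purpose, we also remark that, for $0<\alpha<1$, the Caputo fractional derivative ${}^{C}D^{\alpha}_{0+}$ is a left inverse of the RL integral $I^{\alpha}_{0+}$ of the same order (see [16]), i.e.,

$$
{}^{C}D^{\alpha}_{0+}\,I^{\alpha}_{0+}f(t)=f(t),
$$

due to the equality ${}^{C}D^{\alpha}_{0+}g(t)=\frac{d}{dt}I^{1-\alpha}_{0+}\left[g-g(0)\right](t)$, applied to $g=I^{\alpha}_{0+}f$ (for which $g(0)=0$ when $f$ is continuous), and the semigroup property of the RL integral with respect to the fractional order, $I^{a}_{0+}I^{b}_{0+}=I^{a+b}_{0+}$ for $a,b>0$, here with $a=1-\alpha$ and $b=\alpha$. Indeed, we take into account that (see [12] for details) we have

$$
\frac{d}{dt}I^{1}_{0+}f(t)=f(t),
$$

where $I^{1}_{0+}f(t)=\int_{0}^{t}f(s)\,ds$, i.e., (3) for $\alpha=1$, is the classical Riemann integral.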
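The inverse relation ${}^{C}D^{\alpha}_{0+}I^{\alpha}_{0+}f=f$ and the semigroup property recalled above can be checked exactly on power functions (writing $I^{\alpha}_{0+}$ for the RL integral and ${}^{C}D^{\alpha}_{0+}$ for the Caputo derivative), since $I^{\alpha}_{0+}t^{p}=\frac{\Gamma(p+1)}{\Gamma(p+1+\alpha)}t^{p+\alpha}$ for $p>-1$ and, for $0<\alpha<1$ and $q>0$, ${}^{C}D^{\alpha}_{0+}t^{q}=\frac{\Gamma(q+1)}{\Gamma(q+1-\alpha)}t^{q-\alpha}$, while constants are annihilated. The minimal sketch below represents $c\,t^{p}$ as a pair $(c,p)$; the helper names `rl_power` and `caputo_power` are hypothetical.

```python
import math

# Power functions c * t**p are mapped to power functions by both operators,
# so each operator can be applied exactly to a (coefficient, exponent) pair.


def rl_power(coef, p, alpha):
    """I^alpha [coef * t**p] for p > -1: coef * Gamma(p+1)/Gamma(p+1+alpha) * t**(p+alpha)."""
    return coef * math.gamma(p + 1.0) / math.gamma(p + 1.0 + alpha), p + alpha


def caputo_power(coef, q, alpha):
    """Caputo derivative of order 0 < alpha < 1 of coef * t**q, for q == 0 or q > 0.

    Constants are annihilated (returned as the zero function (0.0, 0.0));
    otherwise D^alpha t**q = Gamma(q+1)/Gamma(q+1-alpha) * t**(q-alpha).
    """
    if q == 0:
        return 0.0, 0.0
    return coef * math.gamma(q + 1.0) / math.gamma(q + 1.0 - alpha), q - alpha
```

Composing `caputo_power` after `rl_power` returns the original pair, whereas the reverse composition maps a constant to zero, in agreement with $I^{\alpha}_{0+}\,{}^{C}D^{\alpha}_{0+}f=f-f(0)$ for $0<\alpha<1$; this is why the inverse relation holds only in one order of composition.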

In deriving the expressions of the solution processes of Equations (1) and (2), we work with generalized fractional integrals [8]. More specifically, the solution processes will be obtained as special cases of Prabhakar fractional integrals, or generalized fractional Mittag–Leffler integrals (see [8,18,19]). We recall that the Prabhakar integral was first defined in [20] and then in [8]. Referring to the latter version, we essentially define it as follows:

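$$
\left(\mathbf{E}^{\gamma}_{\rho,\mu,\omega;0+}f\right)(t)=\int_{0}^{t}(t-s)^{\mu-1}E^{\gamma}_{\rho,\mu}\left[\omega(t-s)^{\rho}\right]f(s)\,ds. \tag{5}
$$

Here, $E^{\gamma}_{\rho,\mu}$ is the Prabhakar function, also known as the generalized three-parameter Mittag–Leffler function, which is given by

$$
E^{\gamma}_{\rho,\mu}(z)=\sum_{k=0}^{\infty}\frac{(\gamma)_{k}}{\Gamma(\rho k+\mu)}\,\frac{z^{k}}{k!}, \tag{6}
$$

for $z\in\mathbb{C}$, where $(\gamma)_{0}=1$ and $(\gamma)_{k}=\gamma(\gamma+1)\cdots(\gamma+k-1)$ is the Pochhammer symbol. The parameters $\rho,\mu,\gamma,\omega$ can be complex numbers, but we consider the real case with $\rho,\mu>0$ and $\gamma,\omega\in\mathbb{R}$. Moreover, note that (6) for $\gamma=1$ provides the two-parameter Mittag–Leffler function

$$
E_{\rho,\mu}(z)=\sum_{k=0}^{\infty}\frac{z^{k}}{\Gamma(\rho k+\mu)},
$$

and finally the one-parameter Mittag–Leffler function is $E_{\rho}(z)=E_{\rho,1}(z)$ (see, for details, [21]). The Prabhakar function and the corresponding integral (5), which generalize fractional integrals with a power kernel and their tempered versions [22], allowed the development of the so-called Prabhakar fractional calculus (see, for instance, [9,10]).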
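Definitions (5) and (6) can be made concrete with a short Python sketch. The helpers `prabhakar` and `prabhakar_integral` below are hypothetical names introduced here (they are neither the authors' R code nor functions of the `MittagLeffleR` package), and the arguments `rho`, `mu`, `gamma`, `omega` are our own labels for the order, the kernel exponent, the Pochhammer parameter and the scaling of the argument. The first helper sums the series (6) directly, which is adequate only for moderate $|z|$; the second applies the midpoint rule to (5) after the substitution $u=(t-s)^{\mu}$.

```python
import math


def prabhakar(rho, mu, gamma, z, terms=400, tol=1e-16):
    """Prabhakar function E^gamma_{rho,mu}(z) of (6) via its truncated power series.

    Assumes rho > 0, mu > 0 and moderate |z|; for large negative z the
    alternating series loses accuracy through cancellation.
    """
    total = 0.0
    coef = 1.0  # (gamma)_k * z**k / k!, updated by recurrence to avoid overflow
    for k in range(terms):
        term = coef * math.exp(-math.lgamma(rho * k + mu))  # divide by Gamma(rho*k + mu)
        total += term
        if k > 5 and abs(term) < tol * max(1.0, abs(total)):
            break
        coef *= (gamma + k) * z / (k + 1)
    return total


def prabhakar_integral(f, rho, mu, gamma, omega, t, n=4000):
    """Prabhakar integral (5) of f at time t > 0 by the midpoint rule.

    With u = (t - s)**mu one has (t - s)**(mu - 1) ds = du / mu and
    (t - s)**rho = u**(rho / mu), which removes the kernel singularity.
    """
    upper = t ** mu
    h = upper / n
    acc = 0.0
    for k in range(n):
        u = (k + 0.5) * h
        kernel = prabhakar(rho, mu, gamma, omega * u ** (rho / mu))
        acc += kernel * f(t - u ** (1.0 / mu))
    return acc * h / mu
```

Known special cases give quick checks: $E^{1}_{1,1}(z)=e^{z}$, $E^{1}_{2,1}(-x^{2})=\cos x$ and $E^{2}_{1,1}(z)=(1+z)e^{z}$; moreover, for $f\equiv1$, termwise integration gives $\int_{0}^{t}s^{\mu-1}E^{\gamma}_{\rho,\mu}(\omega s^{\rho})\,ds=t^{\mu}E^{\gamma}_{\rho,\mu+1}(\omega t^{\rho})$.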

The novelty of this contribution lies essentially in the characterization of the solution processes of Equations (1) and (2) in terms of special cases of Prabhakar integrals involving two-parameter Mittag–Leffler functions, which we simply call Mittag–Leffler fractional integrals. More specifically, we construct the process

by means of a stochastic version of such integrals, defined here as the Mittag–Leffler fractional integral with respect to Brownian motion, and we recognize it as a non-zero-mean generalized fractional Brownian motion. Then, the solution process

of (2) is written by means of a Mittag–Leffler fractional integral of

We also provide expressions for the covariance functions of these processes in terms of Mittag–Leffler fractional integrals. These computable expressions are used for numerical evaluations and comparisons in an application to neuronal modeling.

Indeed, our study of such processes was first motivated by the need to construct fractional models of neuronal activity that can be affected by memory effects and can evolve on different time scales (see [4]). More specifically, the correlated process

evolving on an

-time scale plays the role of the input to the process

(the voltage of the neuronal membrane), which integrates

on a

-time scale. For the dynamics of both processes, the Caputo fractional derivative is used because of the non-locality of this operator, which is suitable for preserving the evolution

of the process itself. Moreover, adopting a correlated input [23,24,25] in place of the traditional white noise allows us to account for a sort of memory effect [26] by means of the long-range dependence property [27]. We show the usefulness of our theoretical results by applying them to some specific neuronal models.

SUMMARY: In this manuscript, our contribution is to study the processes X and Y as solutions of the assigned fractional SDEs (1) and (2), and to provide explicit expressions for their mean and covariance functions. In particular, in Section 2, some essential theoretical assumptions and known results are specified. Then, in Theorems 1 and 2, we provide representation formulae for the processes X and Y, respectively, in terms of ML fractional integrals. The connection with some kinds of fractional Brownian motion, among which are tempered fractional Brownian motion [22] and other well-known processes, is highlighted. The long-range dependence property is preserved. Section 3 is devoted to the study of the process Y, which we call the fractionally integrated process of X. Besides the new expression of its covariance (in Theorem 2), we also provide an alternative expression for the covariance of Y in Corollary 2. In Section 4, some neuronal models are considered as special cases of fSDEs and are framed in this mathematical setting in order to provide useful applications of these results. In Section 5, some numerical and graphical evaluations are shown and discussed.

3. Fractionally Integrated Mittag–Leffler Fractional Processes

In this section, we address the characterization of the solution process of Equation (2). To this end, we focus on the following non-homogeneous linear fractional differential equation (fDe) on a bounded interval

:

where

is a function of

with

and

being the Caputo derivative as in (4), and with

,

being measurable and bounded real-valued functions on

.

Here, in the same functional space as in Section 2, we consider the case where

in (28) is a stochastic process with almost surely (a.s.) Hölder continuous sample paths. In this way, fDe (28) becomes a stochastic fDe, considered in a pathwise sense [2,4].
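To see the structure of the solution of an fDe of type (28) in the simplest setting, assume (as a simplification not made in the text) a constant coefficient $\lambda$, a deterministic forcing $g$, an order $0<\beta\le1$ and $y(0)=y_{0}$, i.e., ${}^{C}D^{\beta}y=\lambda y+g$. The classical variation-of-constants formula then reads $y(t)=y_{0}E_{\beta}(\lambda t^{\beta})+\int_{0}^{t}(t-s)^{\beta-1}E_{\beta,\beta}(\lambda(t-s)^{\beta})g(s)\,ds$, whose integral term is a Prabhakar-type integral with a two-parameter Mittag–Leffler kernel, i.e., an ML fractional integral. The sketch below evaluates it numerically; `ml` and `linear_fde_solution` are hypothetical helper names.

```python
import math


def ml(alpha, beta, z, terms=400, tol=1e-16):
    """Two-parameter Mittag-Leffler function E_{alpha,beta}(z) by its truncated
    power series; adequate for moderate |z| only."""
    total, zk = 0.0, 1.0
    for k in range(terms):
        term = zk * math.exp(-math.lgamma(alpha * k + beta))  # z**k / Gamma(alpha*k + beta)
        total += term
        if k > 5 and abs(term) < tol * max(1.0, abs(total)):
            break
        zk *= z
    return total


def linear_fde_solution(beta, lam, y0, g, t, n=4000):
    """Value at time t of the solution of the constant-coefficient linear fDe
        ^C D^beta y(t) = lam * y(t) + g(t),   y(0) = y0,   0 < beta <= 1,
    namely
        y(t) = y0 E_beta(lam t^beta)
               + int_0^t (t-s)^(beta-1) E_{beta,beta}(lam (t-s)^beta) g(s) ds.
    The integral is computed by the midpoint rule after u = (t - s)**beta.
    """
    homogeneous = y0 * ml(beta, 1.0, lam * t ** beta)
    upper = t ** beta
    h = upper / n
    acc = 0.0
    for k in range(n):
        u = (k + 0.5) * h
        acc += ml(beta, beta, lam * u) * g(t - u ** (1.0 / beta))
    return homogeneous + acc * h / beta
```

For $g\equiv c$ constant, the integral term equals $c\,t^{\beta}E_{\beta,\beta+1}(\lambda t^{\beta})=c\,(E_{\beta}(\lambda t^{\beta})-1)/\lambda$ for $\lambda\neq0$, which gives a closed-form check of the quadrature.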

The explicit form of the solution of fDe (28) in

, as proved in [3], is

Equation (2) is a stochastic version of the fractional differential Equation (28); indeed, by setting in (28)

and

, we obtain Equation (2). In this setting, the coefficient function

is stochastic and includes

, which has to be a process in a complete filtered probability space

with a.s. Hölder continuous paths, such that the corresponding stochastic solution process of (2) can be written as follows:

Finally, by using the definition of the ML fractional integral (14), we can rewrite the solution process

given in (30) as an ML fractionally integrated process, i.e.,

For the specific case of the system of Equations (1) and (2), taking into account that

is the process given in (17), we specify the solution process

by means of the ML fractional integral operators. Moreover, we specify its mean and covariance functions in the following theorem.

Theorem 2. In connection with Equation (1), under the assumptions of Section 2, Lemma 1 and Theorem 1, the solution process of Equation (2) is the following Gaussian process:

with mean

and covariance such that

where the deterministic and stochastic ML fractional integral operators are defined in (14) and (15), respectively. Moreover, the covariance for

is

Proof. By using the definition of the ML fractional integral (14), we can rewrite the solution process

given in (30) as an ML fractionally integrated process in such a way that we have

The representation Formula (32) is derived by substituting the representation Formula (17) of

in (36) and by considering that the expectation of

is zero. Hence, (33) also holds.

Furthermore, the covariance of

can be written as in (34) due to

and by taking into account (32).

Moreover, in order to prove expression (35) for the covariance of

and taking into account (34), we calculate the following expectation:

By applying Fubini’s theorem, we can also write

By setting

and by using the independence of stochastic integrals over non-overlapping intervals, by which

Finally, we obtain

by applying the Itô isometry. This completes the proof. □
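The Itô-isometry step can be illustrated numerically. Assume, as a hypothetical constant-coefficient example (the operator (15) itself is defined in Section 2 and not reproduced here), a zero-mean Wiener integral $Z(t)=\sigma\int_{0}^{t}k(t-r)\,dB(r)$ with ML kernel $k(r)=r^{\alpha-1}E_{\alpha,\alpha}(\lambda r^{\alpha})$. Then $\mathrm{Cov}(Z(t),Z(s))=\sigma^{2}\int_{0}^{t\wedge s}k(t-r)\,k(s-r)\,dr$, which the sketch below evaluates by the midpoint rule after a change of variable; `ml`, `kernel` and `ito_covariance` are illustrative names.

```python
import math


def ml(alpha, beta, z, terms=400, tol=1e-16):
    """Two-parameter Mittag-Leffler function E_{alpha,beta}(z), truncated series (moderate |z|)."""
    total, zk = 0.0, 1.0
    for k in range(terms):
        term = zk * math.exp(-math.lgamma(alpha * k + beta))
        total += term
        if k > 5 and abs(term) < tol * max(1.0, abs(total)):
            break
        zk *= z
    return total


def kernel(alpha, lam, r):
    """Hypothetical ML kernel k(r) = r**(alpha - 1) * E_{alpha,alpha}(lam * r**alpha), r > 0."""
    return r ** (alpha - 1.0) * ml(alpha, alpha, lam * r ** alpha)


def ito_covariance(alpha, lam, sigma, t, s, n=4000):
    """Cov(Z(t), Z(s)) for Z(t) = sigma * int_0^t k(t - r) dB(r), via the Ito isometry:
        sigma**2 * int_0^m k(m - r) k(M - r) dr,  m = min(t, s), M = max(t, s).
    Writing x = m - r and v = x**alpha absorbs the factor x**(alpha - 1) of k(x).
    For t == s the integrand still behaves like v**((alpha - 1)/alpha), which is
    integrable only for alpha > 1/2 (finite variance).
    """
    m, M = min(t, s), max(t, s)
    upper = m ** alpha
    h = upper / n
    acc = 0.0
    for j in range(n):
        v = (j + 0.5) * h
        x = v ** (1.0 / alpha)  # x = m - r
        acc += ml(alpha, alpha, lam * v) * kernel(alpha, lam, M - m + x)
    return sigma ** 2 * acc * h / alpha
```

For $\alpha=1$ the kernel is $e^{\lambda r}$ and, with $\lambda=-a<0$, the result should match the Ornstein–Uhlenbeck covariance $\frac{\sigma^{2}}{2a}\left(e^{-a|t-s|}-e^{-a(t+s)}\right)$; for $\lambda=0$ and $t=s$ it should approach the Riemann–Liouville variance $\sigma^{2}t^{2\alpha-1}/((2\alpha-1)\Gamma(\alpha)^{2})$, which is finite only for $\alpha>1/2$.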

Corollary 2. Under the previous assumptions about the functional space of the considered processes, i.e., the processes X and Y are in

, and assuming that the covariance of the process X is an integrable function, the covariance of the process Y can alternatively be obtained as follows:

which, in explicit form, is

Proof. From (31) and by taking into account (33), the covariance of

can be evaluated as follows:

where the right-hand side is equal to

By applying the two nested ML fractional integrals

to the explicit expression (24) of the covariance of

, we obtain

Finally, Equation (42) holds by substituting (24) in (45). □
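Corollary 2 rests on applying two nested ML fractional integrals to the covariance of X. Under the simplifying assumption (ours, not the text's) that the random part of Y is $\int_{0}^{t}k(t-u)X(u)\,du$ with $k(r)=r^{\beta-1}E_{\beta,\beta}(\lambda r^{\beta})$ and all other terms deterministic, Fubini's theorem gives $\mathrm{Cov}(Y(t),Y(s))=\int_{0}^{t}\int_{0}^{s}k(t-u)\,k(s-v)\,\mathrm{Cov}(X(u),X(v))\,dv\,du$. The sketch below evaluates this double integral with a tensor-product midpoint rule; `ml`, `nodes_and_weights` and `integrated_covariance` are hypothetical helper names, and `cov_x` is any covariance function supplied by the user.

```python
import math


def ml(alpha, beta, z, terms=400, tol=1e-16):
    """Two-parameter Mittag-Leffler function E_{alpha,beta}(z), truncated series (moderate |z|)."""
    total, zk = 0.0, 1.0
    for k in range(terms):
        term = zk * math.exp(-math.lgamma(alpha * k + beta))
        total += term
        if k > 5 and abs(term) < tol * max(1.0, abs(total)):
            break
        zk *= z
    return total


def nodes_and_weights(beta, lam, t, n):
    """Midpoint nodes s_i in (0, t) and weights w_i such that
        sum_i w_i * phi(s_i)  ~  int_0^t (t-s)^(beta-1) E_{beta,beta}(lam (t-s)^beta) phi(s) ds,
    obtained with the substitution u = (t - s)**beta."""
    h = t ** beta / n
    nodes, weights = [], []
    for k in range(n):
        u = (k + 0.5) * h
        nodes.append(t - u ** (1.0 / beta))
        weights.append(ml(beta, beta, lam * u) * h / beta)
    return nodes, weights


def integrated_covariance(beta, lam, cov_x, t, s, n=400):
    """Cov(Y(t), Y(s)) as a double (nested) ML fractional integral of
    cov_x(u, v) = Cov(X(u), X(v)), for Y(t) = int_0^t k(t - u) X(u) du."""
    pt, wt = nodes_and_weights(beta, lam, t, n)
    ps, ws = nodes_and_weights(beta, lam, s, n)
    total = 0.0
    for p, wp in zip(pt, wt):
        for q, wq in zip(ps, ws):
            total += wp * wq * cov_x(p, q)
    return total
```

As a check, with $\beta=1$, $\lambda=0$ and X a standard Brownian motion, $Y(t)=\int_{0}^{t}B(u)\,du$ has $\mathrm{Cov}(Y(t),Y(s))=s^{2}t/2-s^{3}/6$ for $s\le t$. The cost grows as $n^{2}$ evaluations of `cov_x`.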

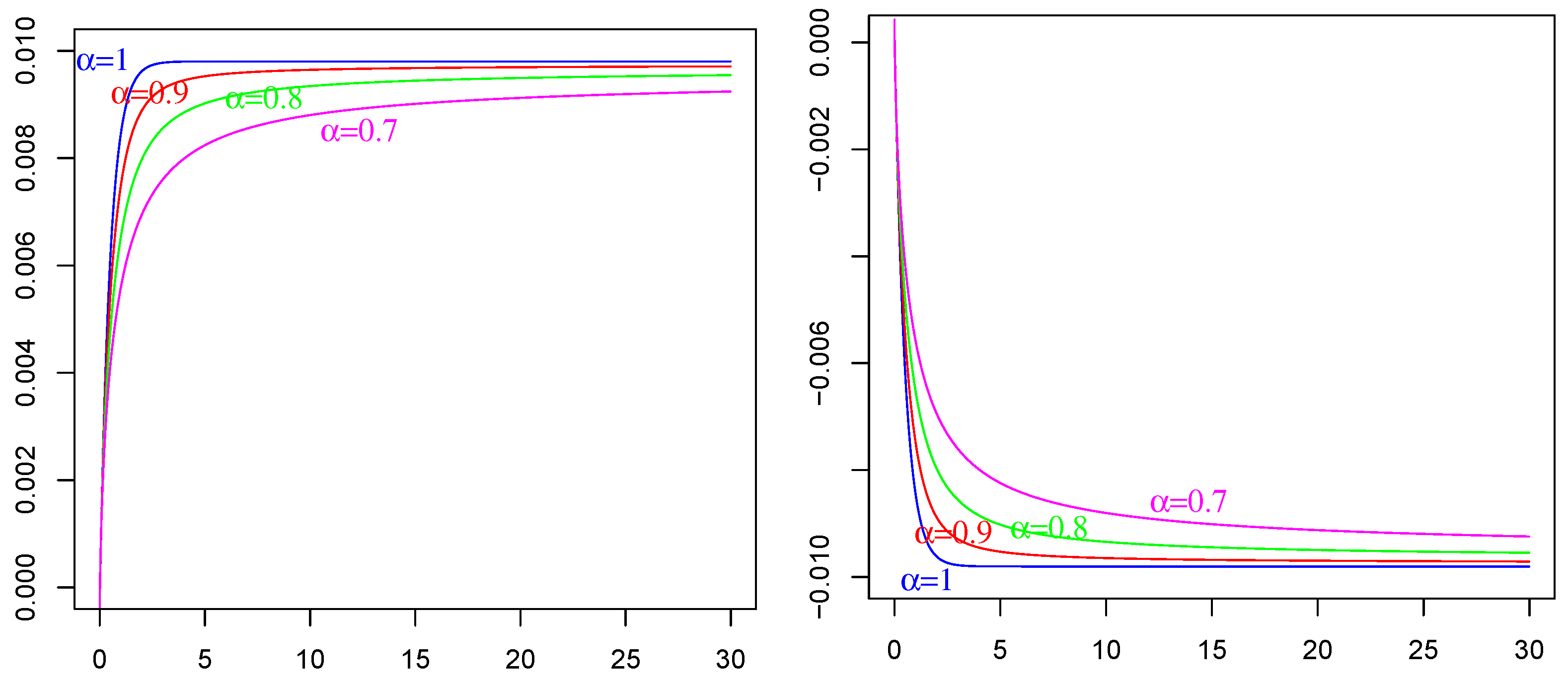

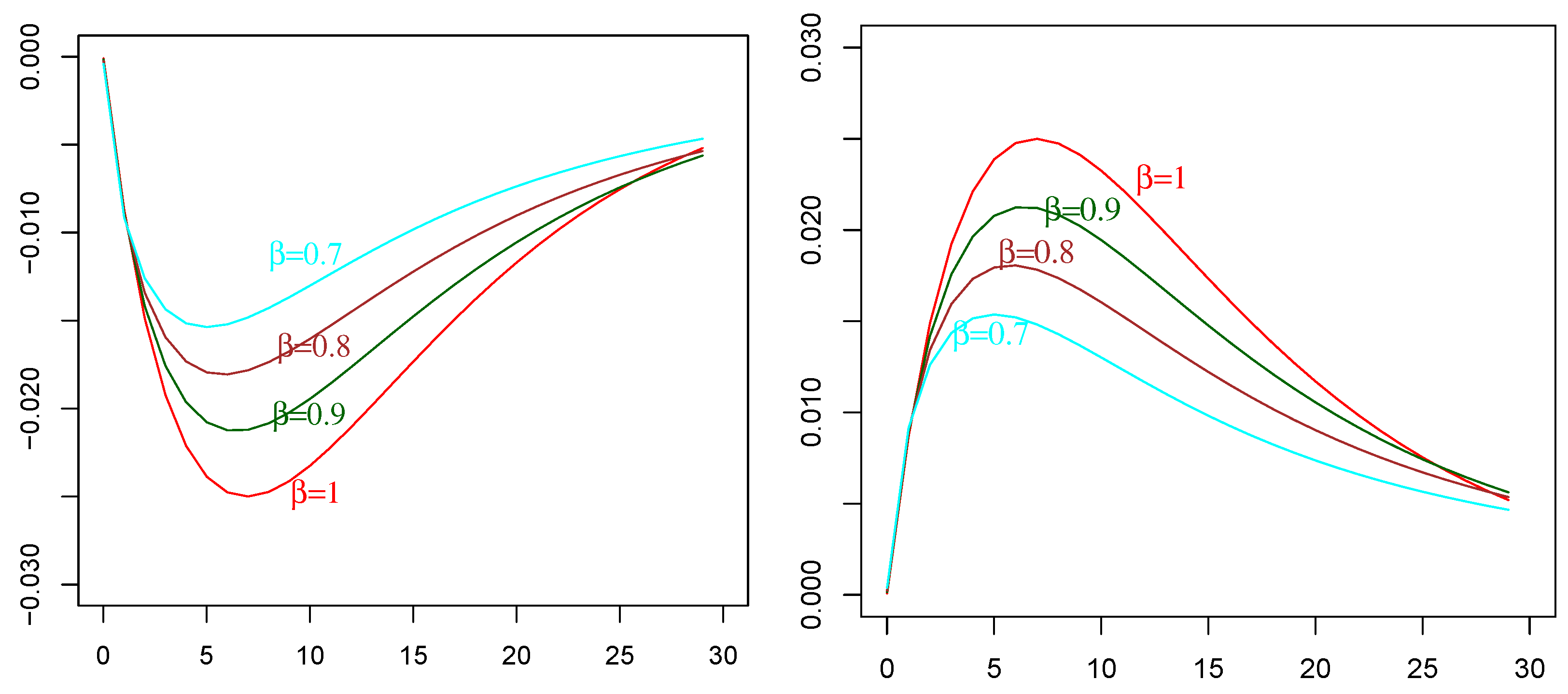

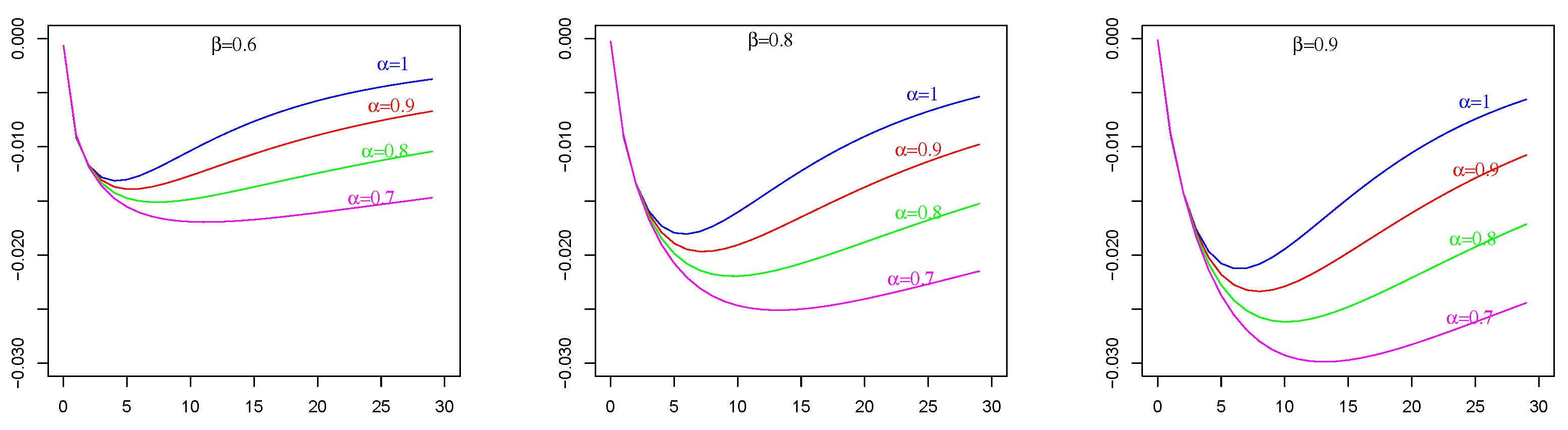

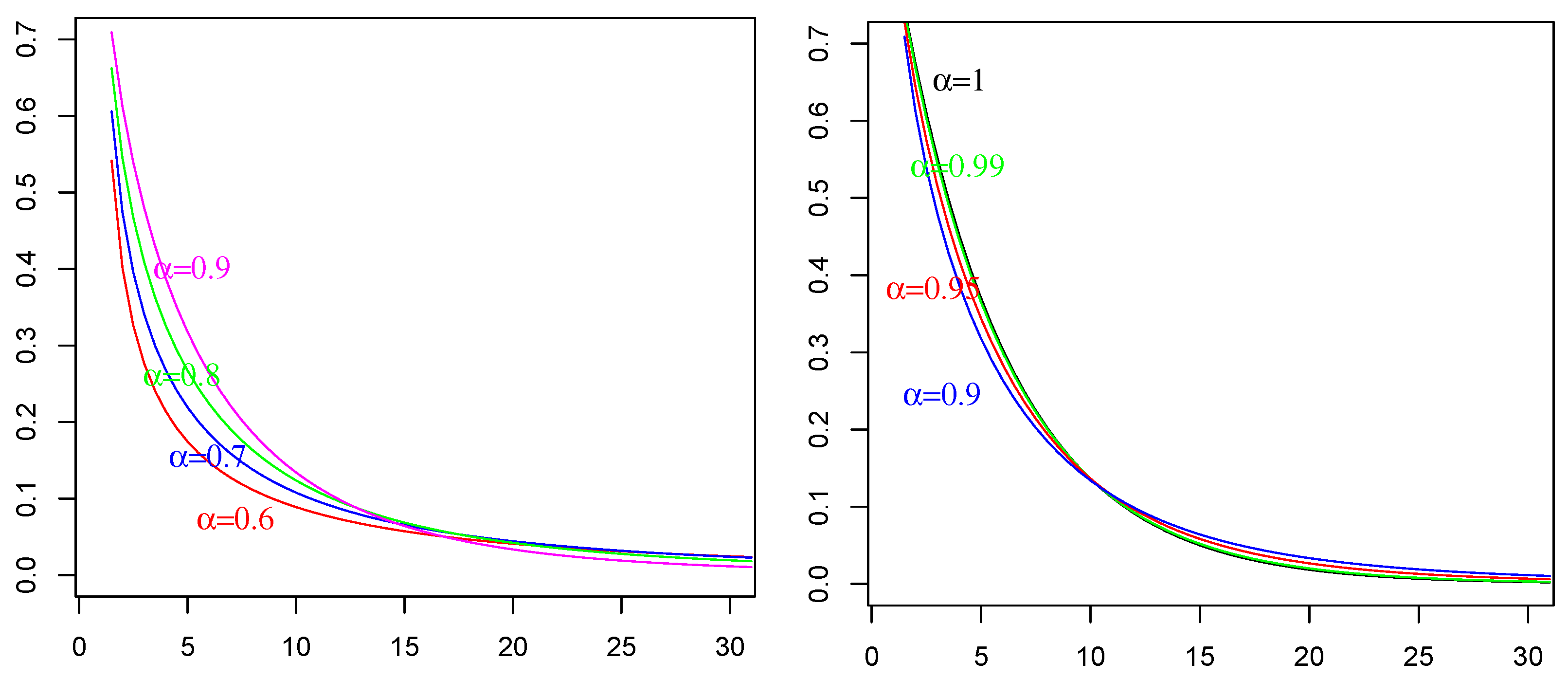

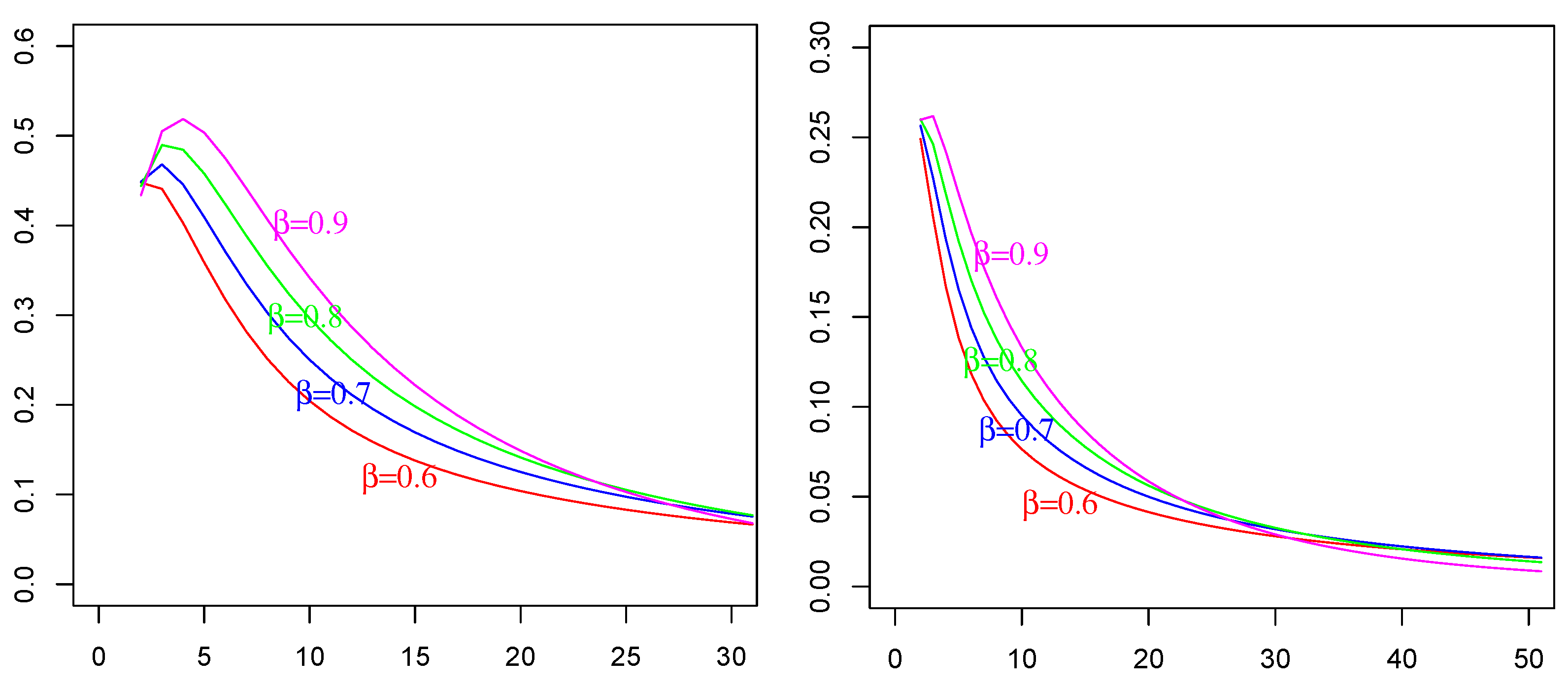

Remark 3. The two expressions (35) and (42) are different forms of the covariance of Y, obtained by means of different strategies based on different, but equivalent, representation formulas of the process Y, i.e., on Equations (32) and (31), respectively. Obviously, they take the same values. We verify the agreement of the numerical evaluations of (35) and (42) by means of an R code devised and implemented ad hoc; the MittagLeffleR package was used for the numerical evaluation of the involved Mittag–Leffler functions. See the comparative plots in Figure 1: the behavior is identical, up to a slight difference mainly due to the (here, non-refined) numerical approximation scripts. As an advantage of form (35), these numerical implementations showed that evaluating (42) took considerably more computational time than evaluating (35), sometimes more than twice as much.

Remark 4. In the special case of

, and referring to (1), X is an Ornstein–Uhlenbeck process with covariance as in (26), i.e.,

Hence, from (41) and by substituting (46) in (42), we have

Then, for

in (47), we have, in particular,

We remark that the corresponding expression obtained by means of (35) for

and

is specifically the following:

In Figure 1, we compare Formula (48) with Formula (49) in order to show their satisfactory agreement.

4. Applications in Neuronal Modeling

Under all the above assumptions, we consider neuronal models based on the coupled Equations (1) and (2). Specifically, we identify the coefficients of the considered fSDEs with specific features of the neuronal model, so that the results are meaningful and reliable in this context. The values and forms of the coefficients of the fractional SDEs considered here satisfy all the above theoretical assumptions, so that solutions exist; furthermore, we exploit the explicit expressions of these solutions provided here. Moreover, mathematical results on a compact set

are adequate for such models, because the neuronal dynamics are considered only up to a finite time instant T corresponding to the firing time of a neuron.

We mainly refer to [4], where three different fractional stochastic neuronal models were studied; see that work for an introduction to such models, their experimental motivations, and a specific discussion of their modeling advantages.

The prototype of such models is

with

being the correlated input process, including a constant electric current I (a synaptic current originating from the surrounding neurons or applied from outside), and

being the voltage of the neuronal membrane. Here, the SDE coefficients have specific meanings. Indeed,

stands for the membrane capacitance,

stands for the leak conductance,

stands for a “resting” value of the membrane potential,

is the white noise (with

a standard Brownian motion), and

is the intensity (or amplitude) of the noise. Furthermore,

is the characteristic time of the input process

, i.e., it is related to the mean time of decay towards the equilibrium level; it is also related to the so-called correlation time of

This model can be viewed as the fractional version of the neuronal model with leakage (see, for an introduction to neuronal models and firing activity, [33,34,35]; for different kinds of stimuli, [36]; for non-time-homogeneous neuronal models, [37]; for fractional neuronal models, [5,38,39,40]; and the references therein).

Note that the introduction of fractional derivatives with two different fractional orders allows us to preserve the memory of the time evolution of each process on different time scales. Indeed, the time scale of each process is regulated by the value of the corresponding fractional order, a useful property that adequately models the two different dynamics of the input and of the voltage.

The identification of the above neuronal model (50) with Equations (1) and (2) is easily obtained by setting, in Equation (1):

in Equation (2):

4.1. The Mean and Covariance of ML Fractional Input Process

By applying the above substitutions of the coefficients, we obtain from (17)

and the mean function from (18) is

and, from Equation (20), for

, the covariance is

which is specifically

Note that, in this model, from the representation Formula (17) adapted here to

, i.e., for (51), the input process is a generalized RL fBM with a non-zero mean and the long-range dependence property. In particular, it is a Gaussian process, and its mean is asymptotically driven by the additional current term I.
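The statement that the mean of the input is asymptotically driven by the current term can be made explicit in a simple case. Suppose, as a hypothetical form of the mean equation (the coefficients actually used are those set in Equation (1) for this model), that the input mean $m(t)$ solves ${}^{C}D^{\alpha}m(t)=-(m(t)-I)/\tau$ with $m(0)=m_{0}$. The variation-of-constants formula and the identity $zE_{\alpha,\alpha+1}(z)=E_{\alpha}(z)-1$ then give $m(t)=m_{0}E_{\alpha}(-t^{\alpha}/\tau)+I\,(1-E_{\alpha}(-t^{\alpha}/\tau))$, which tends to $I$ because $E_{\alpha}(-x)\to0$ as $x\to\infty$ for $0<\alpha\le1$. The sketch below computes both forms; `ml`, `input_mean` and `input_mean_vc` are hypothetical helper names.

```python
import math


def ml(alpha, beta, z, terms=400, tol=1e-16):
    """Two-parameter Mittag-Leffler function E_{alpha,beta}(z), truncated series (moderate |z|)."""
    total, zk = 0.0, 1.0
    for k in range(terms):
        term = zk * math.exp(-math.lgamma(alpha * k + beta))
        total += term
        if k > 5 and abs(term) < tol * max(1.0, abs(total)):
            break
        zk *= z
    return total


def input_mean(alpha, tau, current, m0, t):
    """Closed form m0*E_alpha(-t^alpha/tau) + current*(1 - E_alpha(-t^alpha/tau)) for the
    hypothetical mean equation ^C D^alpha m = -(m - current)/tau, m(0) = m0."""
    e = ml(alpha, 1.0, -t ** alpha / tau)
    return m0 * e + current * (1.0 - e)


def input_mean_vc(alpha, tau, current, m0, t):
    """Same mean from variation of constants with constant forcing current/tau:
        m0*E_alpha(z) + (current/tau) * t^alpha * E_{alpha,alpha+1}(z),  z = -t^alpha/tau."""
    z = -t ** alpha / tau
    return m0 * ml(alpha, 1.0, z) + (current / tau) * t ** alpha * ml(alpha, alpha + 1.0, z)
```

The series evaluation restricts the sketch to moderate values of $t^{\alpha}/\tau$; for large times an asymptotic expansion or a dedicated Mittag–Leffler routine would be needed.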

4.2. The Mean and Covariance of Fractionally Integrated ML Process

As for the voltage process

, from (32) we have

Alternatively, from (31), it is

with mean

and covariance such that

or, in more explicit form,

Compare formulae (53) and (58) with those in [4], which were given in an implicit form involving RL fractional integrals. The main result is Equation (58), since it provides a formula that is computable by numerical and symbolic algorithms. It is innovative from a theoretical point of view and, especially, for its applicability in the neuronal modeling context.

We also remark that other parameter settings yield further neuronal models. For instance, if

, the model (50) is an integrate-and-fire model [33] without leakage. Correspondingly, the process

is exactly the ML fractional integral of the input

, whose mean is the ML fractional integral of the input mean [4].

6. Some Concluding Remarks

Motivated by the wish to provide more refined neuronal models that include some memory effects, we studied the solution processes of a pair of coupled fractional stochastic differential equations (Equations (1) and (2)) involving Caputo fractional derivatives. Such processes are ML fractional processes, as specified in (21) and (30), respectively. In order to provide compact expressions for these processes and for their means and covariances, we introduced the ML fractional integral operators: a deterministic one, as in (14), and a stochastic one with respect to standard Brownian motion, as in (15). The main results are Theorems 1 and 2, in which the representation Formulas (17) and (32) are given for the processes X and Y, respectively, in terms of the ML fractional operators. Such representations turn out to be extremely useful for determining new computable expressions of the covariances, (20) for X and (35) for Y, and they also allow us to understand the roles and resulting effects of the processes in the coupled dynamics. This is particularly useful when physical values are assigned to all the involved functions, parameters, and processes, as demonstrated in the application to the neuronal model (50) in Section 4 and Section 5.

We remark that the studied processes are Gaussian and non-Markovian, with the long-range dependence property. The provided results allow us to devise simulation algorithms for these types of processes, such as those based on exact generation procedures for Gaussian random variables; this is now possible thanks to the explicit, computable form of the covariances provided here. (We recall, in particular, that many direct computations of ML fractional integrals in simple cases are available in [11,12].)

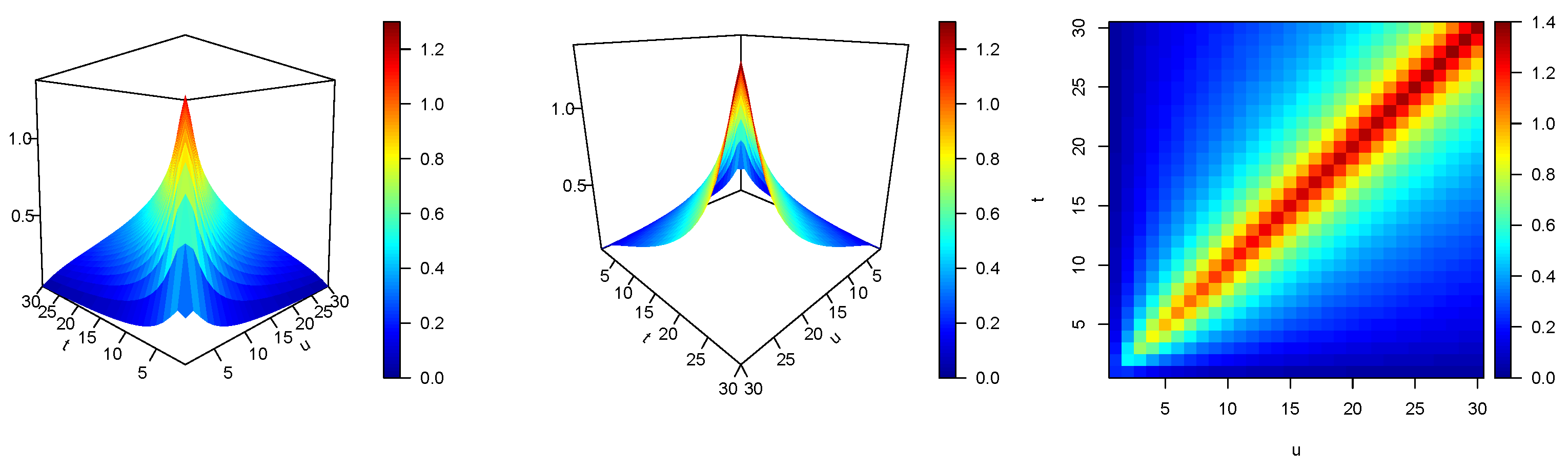

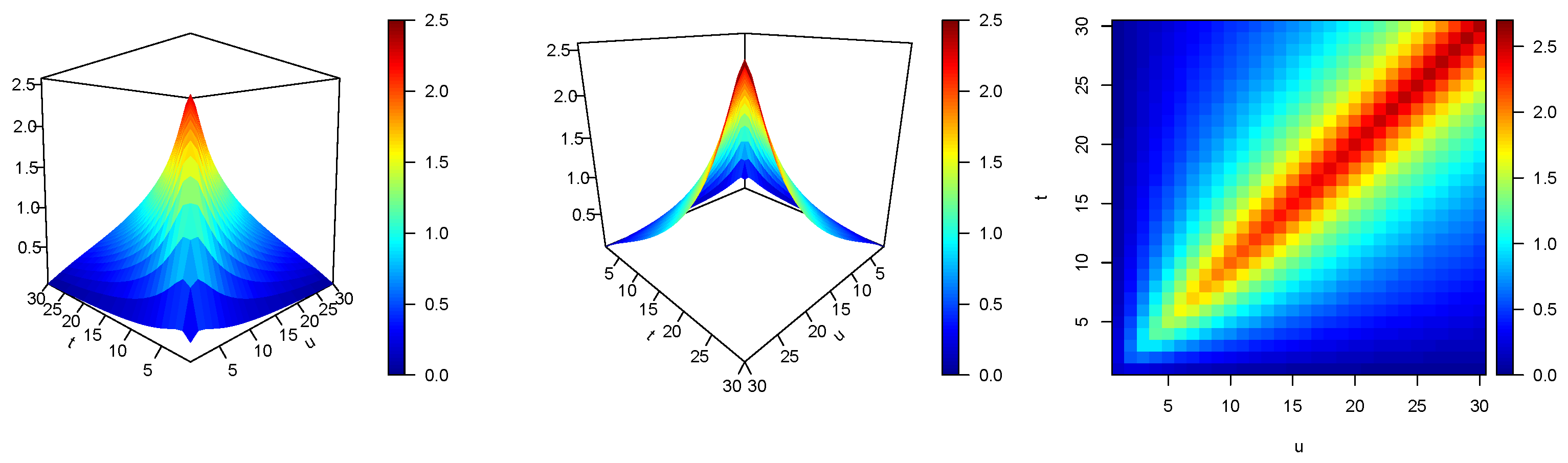

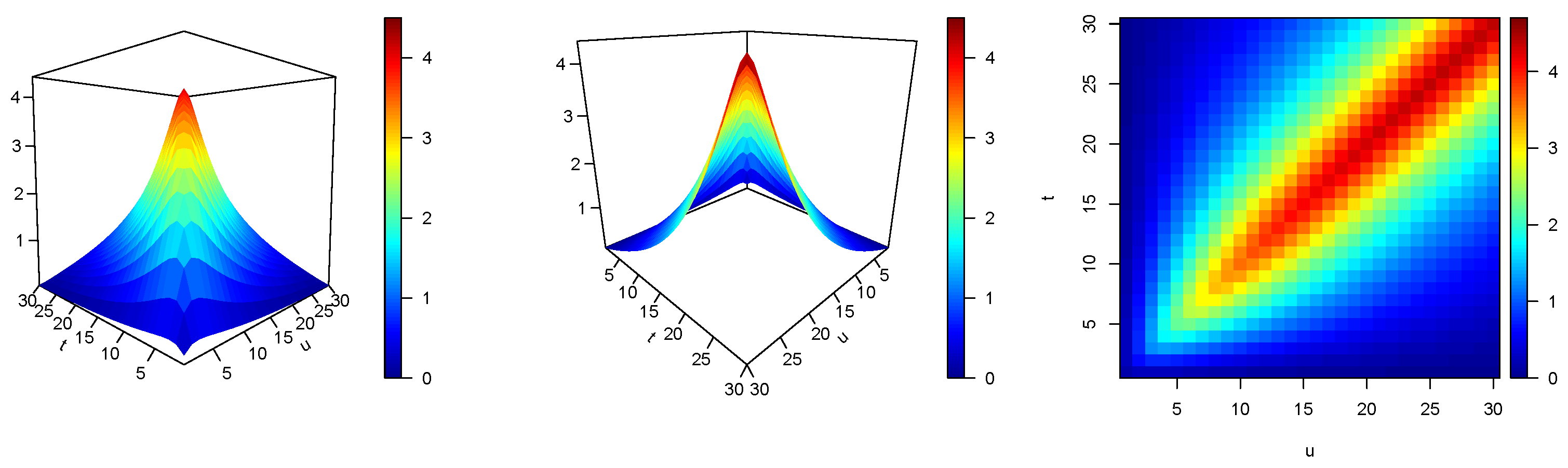

The figures show that numerical and quantitative analyses of the covariances of such processes are feasible, and such analyses should be carried out systematically. Moreover, a more rigorous and in-depth study of ML stochastic fractional integrals and processes is also needed, given the mathematical elegance and the practical interest of these operators. These research objectives will be pursued in our future work.