Abstract

We derive a double-optimal iterative algorithm (DOIA) in an m-degree matrix pencil Krylov subspace to solve a rectangular linear matrix equation. Expressing the iterative solution in a matrix pencil and using two optimization techniques, we determine the expansion coefficients explicitly by inverting a low-dimensional positive definite matrix. The DOIA is a fast, convergent iterative algorithm. Several properties of the DOIA and an estimate of its residual error are given to prove its absolute convergence. Numerical tests demonstrate the usefulness of the double-optimal solution (DOS) and the DOIA in solving square or nonsquare linear matrix equations and in inverting nonsingular square matrices. To speed up convergence, a restarted technique with frequency m, namely, DOIA(m), is proposed; it outperforms the DOIA. The pseudoinverse of a rectangular matrix can be computed using the DOIA and DOIA(m). The Moore–Penrose iterative algorithm (MPIA) and MPIA(m), based on a polynomial-type matrix pencil, and the optimized hyperpower iterative algorithm OHPIA(m) are also developed. They are efficient and accurate iterative methods for finding the pseudoinverse, especially MPIA(m) and OHPIA(m).

Keywords:

linear matrix equations; matrix pencil Krylov subspace method; double-optimal iterative algorithm; Moore–Penrose pseudoinverse; restarted DOIA; optimized hyperpower method

MSC:

65F10; 65F30

1. Introduction

Since the pioneering works [,], Krylov subspace methods have been studied and developed extensively for the iterative solution of systems of linear equations [,,,,,,], for example, the minimum residual algorithm [], the generalized minimal residual method (GMRES) [,], the quasiminimal residual method [], the biconjugate gradient method [], the conjugate gradient squared method [], and the biconjugate gradient stabilized method []. For more discussion of Krylov subspace methods, one can refer to the review papers [,,] and textbooks [,].

In this paper, we use the matrix pencil Krylov subspace method to derive some novel numerical algorithms to solve the following linear matrix equation:

where is an unknown matrix. We consider two possible cases:

We assume for consistency in Equation (1).

Solving matrix equations is one of the most important topics in computational mathematics and has been widely studied in many different fields. As shown by Jbilou et al. [], there are many methods for solving square linear matrix equations. Many iterative algorithms based on the block Krylov subspace method have been developed to solve matrix equations [,,]. For a multiple-right-hand-side system with A being nonsingular, the block Krylov subspace methods for computing in Equation (1) comprise an even larger body of literature [,,]. Some novel algorithms for solving Equation (1) with nonsquare A and square Z are given in [,,,]. Effective and fast solvers for matrix equations play a fundamental role in numerous fields of science. To reduce the computational complexity and increase the accuracy, more efficient methods are still needed.

One of the applications of Equation (1) is finding the solution to the following linear equation system:

Note that, to solve Equation (4), we need to find the left inverse of A, which is denoted by . When we take , Equation (1) is used to find the right inverse of A, which is denoted by . Mathematically, for nonsingular square matrices; however, from the numerical outputs, , especially for ill-conditioned matrices.

In order to find the left inverse of A, we can solve

and taking the transpose leads to

If we use to replace A, then we supply an optimal solution and optimal iterative scheme to seek ; inserting its transpose into Equation (4), we can obtain the solution of x with

There are many algorithms for finding or for nonsingular matrices. Liu et al. [] extended the conjugate gradient (CG)-type method to the matrix conjugate gradient method (MCGM), which performed well in finding the inverse matrix. Other iterative schemes include the Newton–Schulz method [], the Chebyshev method [], the Homeier method [], the method by Petkovic and Stanimirovic [], and some cubic and higher-order convergence methods [,,,].

We can apply Equation (1) to find a pseudoinverse, with dimensions , of a rectangular matrix A with dimensions :

where is a q-dimensional identity matrix. This is a special Case 2 in Equation (3) with and .

Because of their wide applications, the pseudoinverse and the Moore–Penrose inverse have been investigated and computed using many methods [,,,,,,,,,,,,]. Higher-order iterative schemes for the Moore–Penrose inverse were recently analyzed by Sayevand et al. [].

In order to compare different solvers for systems of nonlinear equations, some novel goodness and qualification criteria are defined in []. They include convergence order, number of function evaluations, number of iterations, CPU time, etc., for evaluating the quality of a proposed iterative method.

From Equation (1),

is an initial residual matrix, if an initial guess is given. Let

Then, Equation (1) is equivalent to

which is used to search for a descent direction X once an initial residual is given. It follows from Equations (9) and (10) that

is a residual matrix. Below, we develop a novel method to solve Equation (11), and hence Equation (1), if we replace B by F and Z by X. Since we cannot find the exact solutions of Equations (1) and (11) in general, the residual R in Equation (12) is not zero.

1.1. Notation

Throughout this paper, uppercase letters denote matrices, while lowercase letters denote vectors or scalars. If two matrices A and B have the same order, their inner product is defined by $A:B=\operatorname{tr}(A^{\mathrm T}B)$, where tr is the trace of a square matrix and the superscript T signifies the transpose. As a consequence, the Frobenius norm of A is given by $\|A\|=\sqrt{A:A}$. The component of a vector a is written as , while the component of a matrix A is written as . A single subscript k on a matrix means that it is the kth matrix in a matrix pencil.
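The inner product and norm just defined can be checked numerically. The following minimal Python sketch stores matrices as lists of rows and uses only the standard library; the helper names (`frob_inner`, `frob_norm`, and so on) are hypothetical. It computes A:B both as a sum of elementwise products and literally as tr(AᵀB), and the Frobenius norm as the square root of A:A.

```python
import math


def transpose(a):
    """Return A^T for a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    """Return the matrix product AB."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def trace(a):
    """Trace of a square matrix."""
    return sum(a[i][i] for i in range(len(a)))


def frob_inner(a, b):
    """A:B computed as the sum of elementwise products (same order required)."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("A and B must have the same order")
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def frob_norm(a):
    """Frobenius norm ||A|| = sqrt(A:A)."""
    return math.sqrt(frob_inner(a, a))


# Two 2 x 3 (nonsquare) matrices of the same order.
A = [[1, 2, 0], [3, 4, 1]]
B = [[5, 6, 1], [7, 8, 2]]
print(frob_inner(A, B), trace(matmul(transpose(A), B)), frob_norm(A))
```

Computing the inner product elementwise avoids forming the full product AᵀB, which matters when the matrices are large; the trace form is kept only to show that the two definitions agree.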

1.2. Main Contribution

In GMRES [], an m-dimensional matrix Krylov subspace is assumed:

The Petrov–Galerkin method determines X via an orthogonality condition:

The descent matrix can be obtained by minimizing the residual []:

Equation (14) is equivalent to the projection of B onto , and R is perpendicular to . To obtain a fast-convergent iterative algorithm, we must keep the orthogonality and simultaneously maximize the projection quantity:

The main contribution of this paper is to seek the best descent matrix X simultaneously satisfying (15) and (16). Several excellent properties, including the absolute convergence of the proposed iterative algorithm, are proven. Although the matrix Krylov subspace in Equation (13) is not suitable for the Case 2 problem, we treat both Case 1 and Case 2 problems of linear matrix equations in a unified manner. Our problem is to construct double-optimal iterative algorithms with an explicit expansion form to solve the linear matrix Equation (1), which involves inverting a low-dimensional positive definite matrix. This problem is more difficult than using (15) alone to derive GMRES-type iterative algorithms. For the pseudoinverse problem of a rectangular matrix, higher-order polynomial methods and hyperpower iterative algorithms are unified into the framework of a double-optimal matrix pencil method, with different matrix pencils.

1.3. Outline

We start from an m-degree matrix pencil Krylov subspace to express the solution to the linear matrix equation in Section 2; two cases of linear matrix equations are considered. In Section 3, two merit functions are optimized to determine the expansion coefficients. More importantly, an explicit double-optimal solution (DOS) to Equation (11) is derived, which requires inverting a low-dimensional positive definite matrix. In Section 4, we propose an iterative algorithm updated using the DOS method, which provides the best descent direction used in the double-optimal iterative algorithm (DOIA). A restarted version with frequency m, namely, DOIA(m), is proposed. The DOS can be viewed as a single-step DOIA. Linear matrix equations are solved by the DOS, DOIA, and DOIA(m) methods in Section 5 to display some advantages of the presented methodology for finding the approximate solution of Equation (1). The Moore–Penrose inverse of a rectangular matrix is addressed in Section 6, where two optimized methods are proposed, based on a new matrix pencil of polynomials and on a new modification of the hyperpower method. Numerical tests of the Moore–Penrose inverse of rectangular matrices are carried out in Section 7. Finally, conclusions are drawn in Section 8.

2. The Matrix Pencil Krylov Subspace Method

This section constructs suitable bases for the Case 1 and Case 2 problems of the linear matrix Equation (1). We denote the expansion space by . For Case 1,

while, for Case 2,

In , the matrices are orthonormalized by using the modified Gram–Schmidt process, such that , where : denotes the inner product of and .
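The orthonormalization step can be illustrated with a short sketch of modified Gram–Schmidt under the inner product A:B. This is a generic implementation written for illustration, not code from the source; the function names are hypothetical, and the tolerance used to drop numerically dependent matrices is an assumed value.

```python
import math


def frob_inner(a, b):
    """Frobenius inner product A:B = tr(A^T B)."""
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def modified_gram_schmidt(mats, tol=1e-12):
    """Orthonormalize a list of same-order matrices under A:B.

    Each projection coefficient is computed from the partially updated
    matrix w (modified rather than classical Gram-Schmidt). Matrices that
    become numerically zero, i.e., linearly dependent on earlier ones,
    are dropped (tol is an assumed threshold).
    """
    basis = []
    for mat in mats:
        w = [list(map(float, row)) for row in mat]
        for q in basis:
            c = frob_inner(w, q)
            w = [[wv - c * qv for wv, qv in zip(rw, rq)] for rw, rq in zip(w, q)]
        nrm = math.sqrt(frob_inner(w, w))
        if nrm > tol:
            basis.append([[v / nrm for v in row] for row in w])
    return basis


# The fourth matrix equals the sum of the first two, so it is dropped.
mats = [[[1, 0], [0, 1]], [[1, 1], [0, 1]], [[0, 1], [1, 0]], [[2, 1], [0, 2]]]
Q = modified_gram_schmidt(mats)
gram = [[frob_inner(qi, qj) for qj in Q] for qi in Q]  # should be the identity
```

The modified variant is usually preferred over the classical one because it loses orthogonality more slowly in floating-point arithmetic.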

In the space of , X in Equation (11) can be expanded as

where . The coefficients and are determined optimally from a combination of and the m matrices in a degree-m matrix pencil Krylov subspace.

Both Case 1 and Case 2 can be treated in a unified manner, as shown below, but with different and . is a matrix pencil consisting of m matrices in the matrix Krylov subspace, and is the product of the pencil with the vector . Because the latter is used frequently, we define as

In Equation (21), X is spanned by , and we may write it as . Hence, we call our expansion method a degree matrix-pencil Krylov subspace method. It is different from the fixed affine matrix Krylov subspace . The concept of fixed and varying affine Krylov subspaces was elaborated in [].
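As a small illustration, the sketch below assumes, as the text describes, that the product of a pencil U = [U_1, ..., U_m] with a vector v is the linear combination of the U_j weighted by the components v_j (our reading of Equation (22)). It also checks the linearity identity B:(Uv) = v · (U_1:B, ..., U_m:B), which is the kind of manipulation used in the proofs of Lemmas 1–4. The function names are hypothetical.

```python
def frob_inner(a, b):
    """Frobenius inner product A:B = tr(A^T B)."""
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def pencil_times_vector(pencil, v):
    """Return U v = sum_j v_j U_j for a pencil U = [U_1, ..., U_m] (assumed definition)."""
    if len(pencil) != len(v):
        raise ValueError("pencil and vector lengths differ")
    rows, cols = len(pencil[0]), len(pencil[0][0])
    out = [[0.0] * cols for _ in range(rows)]
    for coeff, mat in zip(v, pencil):
        for i in range(rows):
            for j in range(cols):
                out[i][j] += coeff * mat[i][j]
    return out


def pencil_inner(pencil, b):
    """Return the m-vector whose jth component is U_j : B."""
    return [frob_inner(mat, b) for mat in pencil]


U = [[[1, 0], [0, 0]], [[0, 1], [1, 0]]]
v = [2.0, 3.0]
B = [[1, 2], [3, 4]]
lhs = frob_inner(B, pencil_times_vector(U, v))              # B : (U v)
rhs = sum(vj * cj for vj, cj in zip(v, pencil_inner(U, B)))  # v . (U^T B)
```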

3. Double-Optimal Solution

In this section, we seek the best descent matrix X simultaneously satisfying (15) and (16). Several properties, including the orthogonality of the residual and the absolute convergence of the proposed iterative algorithm, are proven. We emphasize that the derived iterative algorithms have explicit expansion forms for solving the linear matrix Equation (11) and require only the inversion of a low-dimensional positive definite matrix.

3.1. Two Minimizations

To simplify the notation, let

we consider the orthogonal projection of B onto Y, measured by the error matrix:

The best approximation of X in Equation (11) is found when minimizes

or maximizes the orthogonal projection of B onto Y:

We can solve by an approximation of X from the above optimization technique, but is not exactly equal to zero, since involves the unknown matrix X, and Y is not exactly equal to B.

Let

which is the reciprocal of the merit function in Equation (26).
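The equivalence between minimizing the projection error and maximizing the projection quantity can be seen on a small example. The sketch assumes that the error matrix of Equation (24) has the standard orthogonal-projection form E = B − [(B:Y)/(Y:Y)] Y; under that assumption E:Y = 0, so ‖E‖² = ‖B‖² − (B:Y)²/‖Y‖², and a smaller error means a larger projection quantity. The function name `projection_error` is hypothetical.

```python
def frob_inner(a, b):
    """Frobenius inner product A:B = tr(A^T B)."""
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def projection_error(b, y):
    """Orthogonal projection of B onto the direction Y (Y nonzero).

    Returns (E, p) with E = B - ((B:Y)/(Y:Y)) Y and p = (B:Y)^2/(Y:Y).
    Because E:Y = 0, ||E||^2 = ||B||^2 - p.
    """
    by, yy = frob_inner(b, y), frob_inner(y, y)
    lam = by / yy
    e = [[bv - lam * yv for bv, yv in zip(rb, ry)] for rb, ry in zip(b, y)]
    return e, by * by / yy


B = [[1.0, 2.0], [3.0, 4.0]]
Y = [[1.0, 0.0], [0.0, 1.0]]
E, p = projection_error(B, Y)
# p does not depend on the scaling of Y: 3Y gives the same projection quantity.
_, p_scaled = projection_error(B, [[3.0, 0.0], [0.0, 3.0]])
```

The scale invariance explains why the projection quantity alone fixes only the direction of Y; a separate condition is needed to fix its magnitude.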

3.2. Two Main Theorems

The first theorem determines the expansion coefficients in Equation (21).

Theorem 1.

Proof.

With the help of Equation (29), in (32) can be written as

where the components of are given by

Taking the squared norms of Equation (29) yields

where is an matrix. We can derive

where

and is a symmetric positive definite matrix.

The minimality condition of f is

where

and are m-vectors, while with rank. Thus, the equation to determine is

We can observe from Equation (44) that is proportional to , which we write as

where is a multiplier to be determined from

Then, it follows from Equations (42), (43) and (45) that

where

Inserting Equation (48) into Equations (36) and (38), we have

Inserting Equations (50) and (51) into Equation (46) yields

Cancelling on both sides, we can derive

which renders

Inserting it into Equation (48), is obtained as follows:

Let

Inserting Equation (30) for into Equation (39), and comparing it to Equation (55), we have

By inserting in Equation (48) into Equation (21) and using , we can obtain

where

Upon letting

Equation (57) can be expressed as

Now can be determined by (33). Inserting Equation (62) into (33) yields

where

is derived according to Equations (31) and (58).

To prove Theorem 2, we need the following two lemmas.

Lemma 1.

In terms of the matrix-pencil in Equation (31), we have

Proof.

By using the definition in Equation (22), the left-hand side of Equation (67) can be written as

where and denote, respectively, the jth component of v and , and Equation (37) is used in the last equality. In vector form, the last term is just the right-hand side of Equation (67). Equation (68) can be proved in the same manner, i.e.,

This ends the proof of Lemma 1. □

Lemma 2.

In terms of the matrix-pencil in Equation (31), we have

Proof.

By using the definition in Equation (22), the left-hand side of Equation (69) can be written as

where and denote, respectively, the jth component of z and u, and Equation (55) is used in the last equality. In terms of vector form the last term is just the right-hand side of Equation (69). This ends the proof of Lemma 2. □

Theorem 2.

In Theorem 1, the two parameters and satisfy the following reciprocal relation:

Proof.

Taking the inner product of B with Equation (64) and using Lemma 1 leads to

With the aid of Equation (59), we have

Then, after multiplying the above equation by , and using Equation (65), we obtain

Next, we prove that Equation (73) is equal to . From Equation (64) and with the aid of Equations (40), (58) and (69), it follows that

where was used in view of Equation (49). Then by comparing Equations (73) and (74), we have proven Equation (70). This ends the proof of . Then by Equation (60), is proven. □

3.3. Estimating Residual Error

To estimate the residual error we begin with the following lemma.

Lemma 3.

In terms of the matrix-pencil in Equation (31), we have

Proof.

By using the definition in Equations (22) and (55), the left-hand side of Equation (75) can be written as

where and denote, respectively, the jth component of u and . In vector form, the last term is just the right-hand side of Equation (75). Equation (76) can be proved in the same manner, i.e.,

This ends the proof of Lemma 3. □

Lemma 4.

In terms of the matrix-pencil in Equation (31), we have

Proof.

By using the definition in Equation (22), we have

The two repeated indices i and j above are summed from 1 to m according to the Einstein summation convention []. Then, with the aid of Equation (40), we have

where was used. In terms of vector form the above equation leads to Equation (77). Similarly, we have

In terms of vector form the above two equations reduce to Equations (78) and (79). This ends the proof of Lemma 4. □

Theorem 3.

For , the residual error of the optimal solution in Equation (57) satisfies:

Proof.

According to Equation (70), we can refine X in Equation (34) to

where

Consider the squared residual:

where

is obtained from Equation (85), in which .

By using Lemmas 1, 3 and 4, it follows from Equation (88) that

Equation (90) is easily derived by taking the inner product of B with Equation (88):

and using Lemma 1. Taking the squared norms of Equation (88), we have

which, by Lemmas 3 and 4, simplifies to

This yields Equation (89).

Then, inserting the above two equations into Equation (87), we have

Consequently, inserting Equation (86) for into the above equation yields Equation (84).

□

As a consequence, we can prove that the two merit functions and are the same.

Theorem 4.

In the optimal solution of , the values of the two merit functions are the same, i.e.,

where . Moreover, we have

3.4. Orthogonal Projection

Equation (92) indicates that and have the same values when the double-optimality conditions are achieved. The key Equation (70) plays a dominant role here: it renders , so that the equality in Equation (92) holds. Equation (93) shows that the residual error decreases absolutely, and Equation (84) gives an estimate of the residual error.

Theorem 5.

For , can be orthogonally decomposed into

where and are orthogonal.

Proof.

It follows from Equation (88) that

From Lemma 3, we have

and from Lemma 4, we have

Subtracting the above two equations, we have

This ends the proof. □
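As a small worked consequence of Theorem 5 (our own illustration, not a formula quoted from the source), the orthogonal decomposition yields a Pythagorean identity for the residual. Here θ denotes the angle between B and Y measured with the inner product A:B, and Y is assumed to be nonzero; the derivation shows why the residual norm can never exceed the norm of B whenever the decomposition holds, and how an angle naturally enters a convergence-rate statement.

```latex
% Assumptions: B = Y + R with Y:R = 0 (Theorem 5), Y \neq 0, and \theta is
% the angle between B and Y under the inner product A:B = tr(A^T B).
\begin{aligned}
\|B\|^2 &= \|Y\|^2 + 2\,(Y:R) + \|R\|^2 = \|Y\|^2 + \|R\|^2,
\qquad B:Y = \|Y\|^2 + R:Y = \|Y\|^2,\\
\cos\theta &= \frac{B:Y}{\|B\|\,\|Y\|} = \frac{\|Y\|}{\|B\|}
\;\Longrightarrow\;
\|R\| = \sqrt{\|B\|^2 - \|Y\|^2} = \|B\|\sin\theta \le \|B\|.
\end{aligned}
```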

Furthermore, can be written as

with the aid of Equations (22) and (37). Now, we can introduce the projection operator by

which acts on B and results in . Accordingly, we can define the projection operator as

Theorem 6.

Proof.

From Equations (100) and (23), we have

Applying the projection operator to the above equation yields

We need to prove

From Equations (103) and (55), we have

On the other hand, from Equations (103), (22), (40), and (49), we have

Subtracting the above two equations, we can prove Equation (107). From Equations (103), (22), (40), (49) and (37), we have

The proof is complete. □

Corollary 1.

In Theorem 3,

hence,

Proof.

First, we need to prove

By using Equations (103) and (55), we have

which can be further written as

because is a projection operator. Thus, we have

because is also a projection operator, where I is an identity operator. In view of Equations (84) and (112), the result in Equation (113) is straightforward. □

Remark 1.

For the Case 1 problem, the conventional generalized minimal residual method (GMRES) [,] takes only the minimization in (15) into account. Therefore, in the GMRES. To accelerate the convergence of the iterative algorithm, the maximal projection in (16) must also be considered. Therefore, in the developed DOIA, both optimization problems (15) and (16) are considered, and and are derived explicitly and simultaneously.

4. Double-Optimal Iterative Algorithm

According to Theorem 1, the double-optimal iterative algorithm (DOIA) to solve Equation (1) reads as follows (Algorithm 1).

Algorithm 1 DOIA

1: Select m and give an initial value of
2: Do
3: (Case 1), (Case 2)
4: (Case 1), (Case 2)
5: (orthonormalization)
6:
7:
8:
9:
10:
11:
12:
13:
14:
15:
16: Enddo, if

4.1. Crucial Properties of DOIA

In this section, we prove the crucial properties of the DOIA, including the absolute convergence and the orthogonality of the residual.

Corollary 2.

In the DOIA, Theorem 4 guarantees that the residual decreases step by step, i.e.,

Proof.

Corollary 3.

In the DOIA, the convergence rate is given by

where θ is the intersection angle between and .

Proof.

Corollary 4.

In the DOIA, the residual is A-orthogonal to the descent direction , i.e.,

Proof.

The DOIA provides a good approximation of Equation (11) with a better descent direction in the matrix pencil of the matrix Krylov subspace. In this situation, we can prove the following corollary, which guarantees that the algorithm converges quickly to the true solution.

Corollary 5.

In the DOIA, two consecutive residual matrices and are orthogonal by

Proof.

From the last equation in the DOIA, we have

Taking the inner product with and using Lemma 2 yields

which can be rearranged in Equation (128). □

4.2. Restarted DOIA(m)

In the DOIA, the dimension m of the Krylov subspace is fixed. An alternative version of the DOIA, which varies m in the range , can perform well; by analogy with GMRES(m), it is named DOIA(m), where is the restart frequency (Algorithm 2).

Algorithm 2 DOIA(m)

1: Select and , and give
2: Do
3: Do ,
4: (Case 1), (Case 2)
5: (Case 1), (Case 2)
6: (orthonormalization)
7:
8:
9:
10:
11:
12:
13:
14:
15:
16:
17: Enddo of (3), if
18: Otherwise, , go to (2)
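The restart structure of such algorithms can be sketched in a few lines. The Python sketch below is a GMRES(m)-type stand-in for Case 1 (square A), not the DOIA(m) itself: the coefficient formulas of Theorem 1 and the Case 2 basis are not reproduced, and the coefficients are instead chosen by the minimal-residual condition (15) alone. Each cycle forms the residual, builds a modified Gram–Schmidt orthonormal basis of span{R, AR, ..., A^(m−1)R}, solves an m × m symmetric positive definite system, and updates Z. All function names and tolerances are hypothetical.

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def frob(a, b):
    """Frobenius inner product A:B."""
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def axpy(alpha, x, y):
    """Return Y + alpha * X."""
    return [[yv + alpha * xv for xv, yv in zip(rx, ry)] for rx, ry in zip(x, y)]


def solve(mat, rhs):
    """Solve mat c = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [r] for row, r in zip(mat, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            fac = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= fac * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (aug[i][n] - sum(aug[i][j] * x[j] for j in range(i + 1, n))) / aug[i][i]
    return x


def restarted_min_residual(a, f, z0, m, tol=1e-10, max_cycles=500):
    """Restarted minimal-residual iteration for AZ = F with square A (stand-in only)."""
    z = [list(map(float, row)) for row in z0]
    history = []
    for _ in range(max_cycles):
        r = axpy(-1.0, matmul(a, z), f)          # R = F - A Z
        rnorm = math.sqrt(frob(r, r))
        history.append(rnorm)
        if rnorm < tol:
            break
        basis, w = [], r
        for _ in range(m):
            for q in basis:                      # modified Gram-Schmidt
                w = axpy(-frob(w, q), q, w)
            nw = math.sqrt(frob(w, w))
            if nw < 1e-14 * rnorm:
                break                            # Krylov subspace exhausted
            basis.append([[v / nw for v in row] for row in w])
            w = matmul(a, basis[-1])
        ay = [matmul(a, q) for q in basis]
        d = [[frob(yi, yj) for yj in ay] for yi in ay]   # m x m SPD matrix
        c = solve(d, [frob(r, yi) for yi in ay])         # minimizes ||R - A X||
        for ck, q in zip(c, basis):
            z = axpy(ck, q, z)                   # Z <- Z + sum_k c_k U_k
    return z, history


A = [[4.0, 1.0], [2.0, 3.0]]
Z_exact = [[1.0, 2.0], [3.0, 4.0]]
F = matmul(A, Z_exact)
Z, hist = restarted_min_residual(A, F, [[0.0, 0.0], [0.0, 0.0]], m=2)
Z1, hist1 = restarted_min_residual(A, F, [[0.0, 0.0], [0.0, 0.0]], m=1, max_cycles=30)
```

Because X = 0 is always admissible in each cycle, the residual norm recorded in `hist` cannot increase, which mirrors the step-by-step decrease stated in Corollary 2 for the DOIA.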

5. Numerical Examples

To demonstrate the efficiency and accuracy of the presented iterative algorithms DOIA and DOIA(m), several examples were examined. All numerical computations were carried out in Fortran 77 with Microsoft Developer Studio on an Intel Core i7-3770 CPU (2.80 GHz) with 8 GB of memory. The precision was .

5.1. Example 1

We solve Equation (1) with

Matrix F can be computed by inserting the above matrices into Equation (1).

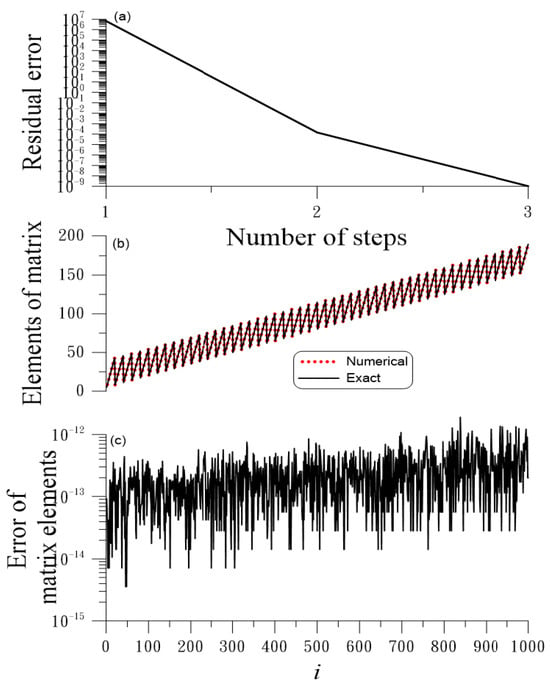

This problem belongs to Case 1. When we apply the iterative algorithm in Section 4 to solve this problem with and , we fix , and the convergence criterion is . As shown in Figure 1a, the DOIA converges very fast, in only three steps. To compare the numerical results with the exact solution, the matrix elements are vectorized row by row and numbered sequentially. As shown in Figure 1b, the numerical and exact solutions almost coincide, with the numerical error shown in Figure 1c. The maximum error (ME) is , and the residual error is .

Figure 1.

For Example 1 solved by the DOIA: (a) residual, (b) comparison of numerical and exact solutions, and (c) numerical error; (b,c) with the same x axis.

When the problem dimension is raised to and , the original DOIA does not converge within 100 steps. However, the restarted DOIA(m) with and requires only three steps under , obtaining a highly accurate solution with ME = and an error of . The CPU time is 1.06 s.

Table 1 compares the results obtained by the DOIA(m) for different n in Equations (131) and (132): for , we take , and ; for , we take , and .

Table 1.

For Example 1, comparing ME, iterations number (IN), and CPU time obtained by DOIA(m) for different n.

5.2. Example 2

In Equation (1), we consider a cyclic matrix A. The first row is given by , where . The algorithm is given as follows (Algorithm 3).

Algorithm 3 For cyclic matrix

1: Give N
2: Do
3: Do
4: If , then ; otherwise
5:
6: If , then
7: Enddo of j
8: Enddo of i

The nonsquare matrix A is obtained by taking the first q rows from S if or the first n columns from S if . Matrix Z in Equation (1) is given by
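A cyclic matrix of this kind, and the nonsquare matrix cut from it, can be sketched as follows. The sketch assumes the usual circulant convention (each row is the previous row shifted cyclically one place to the right), takes N = max(q, n), and uses an arbitrary first row; the specific first row of Example 2 is not reproduced, and the function names are hypothetical.

```python
def circulant(first_row):
    """N x N cyclic matrix: row i is the first row shifted right by i places."""
    n = len(first_row)
    return [[first_row[(j - i) % n] for j in range(n)] for i in range(n)]


def rectangular_from_cyclic(first_row, q, n):
    """Return the q x n matrix A cut from the N x N cyclic matrix S, N = max(q, n).

    If q < n, these are the first q rows of S; if n < q, the first n columns.
    """
    if len(first_row) != max(q, n):
        raise ValueError("first_row must have length max(q, n)")
    s = circulant(first_row)
    return [row[:n] for row in s[:q]]


S = circulant([1, 2, 3])
A_wide = rectangular_from_cyclic([1, 2, 3], 2, 3)   # first two rows
A_tall = rectangular_from_cyclic([1, 2, 3], 3, 2)   # first two columns
```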

where we fix , , and . Matrix F can be computed by inserting the above matrices into Equation (1).

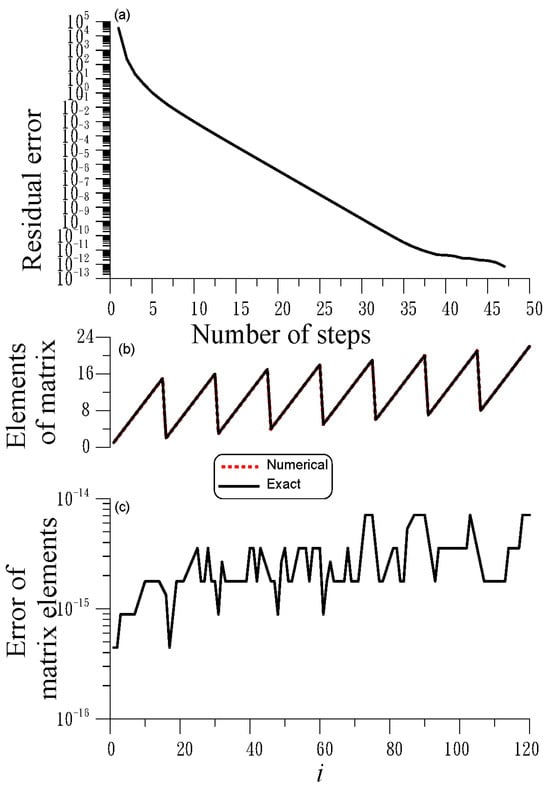

This problem belongs to Case 2. When we apply the iterative algorithm in Section 4 to find the solution, we fix , and the convergence criterion is . As shown in Figure 2a, the DOIA converges in 47 steps. As shown in Figure 2b, the numerical and exact solutions almost coincide, with the numerical error shown in Figure 2c. The ME is . The DOIA(m) with and obtains ME = in 43 steps.

Figure 2.

For Example 2 solved by the DOIA: (a) residual, (b) comparison of numerical and exact solutions, and (c) numerical error; (b,c) with the same x axis.

We consider the inverse matrix obtained in Case 2 with and . Let X be the inverse matrix of A; the Newton–Schulz iterative method is

For the initial value with , the Newton–Schulz iterative method can converge very quickly.
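For reference, the classical Newton–Schulz iteration X_{k+1} = X_k(2I − AX_k) can be sketched as below. The starting value X_0 = Aᵀ/(‖A‖₁‖A‖∞) is a standard choice that we assume here; it is not necessarily the initial value used in this example. The function name and stopping tolerance are hypothetical.

```python
import math


def matmul(a, b):
    """Return the matrix product AB (lists of rows)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def newton_schulz_inverse(a, tol=1e-12, max_iter=100):
    """Newton-Schulz iteration X_{k+1} = X_k (2I - A X_k) for a nonsingular square A.

    Assumed starting value: X_0 = A^T / (||A||_1 ||A||_inf), which makes the
    spectral radius of I - A X_0 smaller than 1, so the iteration converges
    quadratically. Returns (X, number of updates performed).
    """
    n = len(a)
    norm1 = max(sum(abs(a[i][j]) for i in range(n)) for j in range(n))
    norm_inf = max(sum(abs(v) for v in row) for row in a)
    x = [[a[j][i] / (norm1 * norm_inf) for j in range(n)] for i in range(n)]
    for k in range(max_iter):
        ax = matmul(a, x)
        r = [[(1.0 if i == j else 0.0) - ax[i][j] for j in range(n)] for i in range(n)]
        if math.sqrt(sum(v * v for row in r for v in row)) < tol:
            return x, k
        # 2I - A X_k = I + R_k with R_k = I - A X_k
        x = matmul(x, [[(1.0 if i == j else 0.0) + r[i][j] for j in range(n)]
                       for i in range(n)])
    return x, max_iter


A = [[4.0, 1.0], [2.0, 3.0]]
X, its = newton_schulz_inverse(A)
```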

We consider the cyclic matrix constructed by Algorithm 3 and apply the DOIA(m) to find the with the same initial value with . Table 2 compares the and iteration number (IN) obtained by the DOIA(m) and the Newton–Schulz iterative method (NSIM) with . The DOIA(m) finds a more accurate inverse matrix with a lower IN.

Table 2.

For Example 2, (, IN) obtained by DOIA(m) and the Newton–Schulz iterative method (NSIM) for different n.

For , the CPU time of DOIA(m) is 0.41 s. Even when n is raised to , the DOIA(m) with and is still applicable, obtaining in 149 steps with a CPU time of 6.85 s.

5.3. Example 3

We consider

We first compute and then the solution of the corresponding linear system. This problem belongs to Case 1.

We take and apply the DOS to find the inverse matrix of A by setting in Equation (11). The values of obtained from the DOS show that the inverse matrix is found with an accuracy of two orders of magnitude. The residual error is . In the solution to Equation (4), comparing the numerical solution with the exact solution , we find that the maximum error is . Since the DOS is just a single-step DOIA, we can apply the DOIA to improve the accuracy.

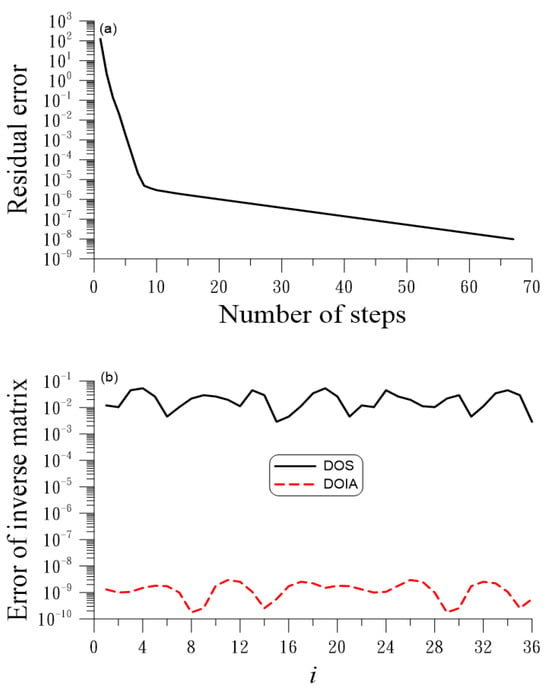

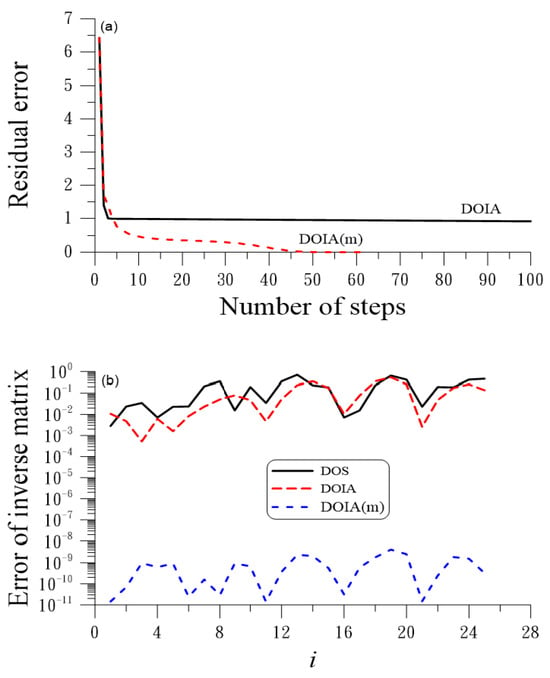

When we apply the DOIA to solve this problem with , the accuracy is raised to , while in the solution to Equation (4), ME = . Figure 3a shows the residual error obtained by the DOIA, which converges in 67 steps under ; Figure 3b compares the errors of the elements of the inverse matrix, using the components of . The DOIA is very effective and much more accurate than the DOS method, which is a single-step DOIA without iteration.

Figure 3.

For Example 3 solved by the DOS and DOIA, showing (a) residual and (b) comparison of error of inverse matrix.

The DOIA(m) with and converges in 28 steps under ; the accuracy is raised to , and ME = . The CPU time of the DOIA(m) is 0.33 s, and it converges faster than the DOIA.

To test the stability of the DOIA, we consider random noise with intensity s to disturb the coefficients in Equation (133). We take the same values of the parameters in the DOIA. Table 3 compares , ME, and iteration number (IN). Essentially, the noise does not influence the accuracy of ; however, ME and IN worsen when s increases.

Table 3.

For Example 3, , ME, and IN obtained by DOIA for different noise s values.

5.4. Example 4

To test the performance of the DOS in solving a linear equation system, we consider the following convex quadratic programming problem with an equality constraint:

where P is an matrix, C is an matrix, and is an vector, which means that Equation (135) provides linear constraints. According to Lagrange theory, we need to solve the linear system (4) with the following b and A:

For a definite solution, we take and with

The dimension of A is , where . This problem belongs to Case 1. When we take and employ the DOS, and meet . With , the solution is at with ; it is almost an exact solution obtained without any iteration, because the DOS can be viewed as a single-step DOIA.
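The Lagrange step that turns an equality-constrained quadratic program into a linear system of the form (4) can be sketched as follows. We assume problem (135) has the common form: minimize ½xᵀPx + cᵀx subject to Cx = d, whose stationarity conditions give the KKT system [[P, Cᵀ], [C, 0]] [x; λ] = [−c; d]. The data below are hypothetical, and the system is solved directly by Gaussian elimination rather than by the DOS; the function names are also hypothetical.

```python
def solve(mat, rhs):
    """Solve mat x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [r] for row, r in zip(mat, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            fac = aug[r][col] / aug[col][col]
            for j in range(col, n + 1):
                aug[r][j] -= fac * aug[col][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (aug[i][n] - sum(aug[i][j] * x[j] for j in range(i + 1, n))) / aug[i][i]
    return x


def kkt_solve(p, c, cmat, d):
    """Solve min 0.5 x^T P x + c^T x s.t. C x = d via the (assumed) KKT system
    [[P, C^T], [C, 0]] [x; lam] = [-c; d]. Returns (x, lam)."""
    n, k = len(p), len(cmat)
    top = [list(p[i]) + [cmat[r][i] for r in range(k)] for i in range(n)]
    bottom = [list(cmat[r]) + [0.0] * k for r in range(k)]
    sol = solve(top + bottom, [-ci for ci in c] + list(d))
    return sol[:n], sol[n:]


P = [[2.0, 0.0], [0.0, 2.0]]
c = [-2.0, -5.0]
C = [[1.0, 1.0]]
d = [1.0]
x, lam = kkt_solve(P, c, C, d)
```

The KKT matrix is symmetric but indefinite, which is why a pivoted elimination (or, in the source, a method that does not rely on positive definiteness of A) is used.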

5.5. Example 5: Solving Ill-Conditioned Hilbert Matrix Equation

The Hilbert matrix is highly ill-conditioned:

We consider an exact solution with , and is given by

For , the condition number is , and, for , the condition number is . It can be proved that the condition number of the Hilbert matrix grows asymptotically as []

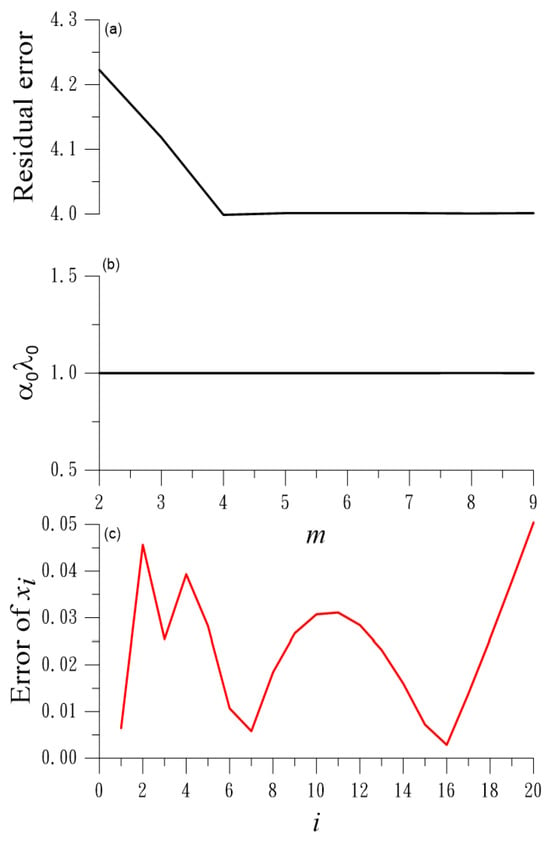

This problem belongs to Case 1. We solve it using the DOS. For , we let m run from 2 to 9 and pick the best m with the minimum error of

In Figure 4a, we plot the above residual with respect to m, where we can observe that the value of is almost equal to 1, as shown in Figure 4b. We take , and the maximum error of x is .

Figure 4.

For Example 5 solved by the DOS, showing (a) residual, (b) , and (c) error of ; (a,b) with the same x axis.

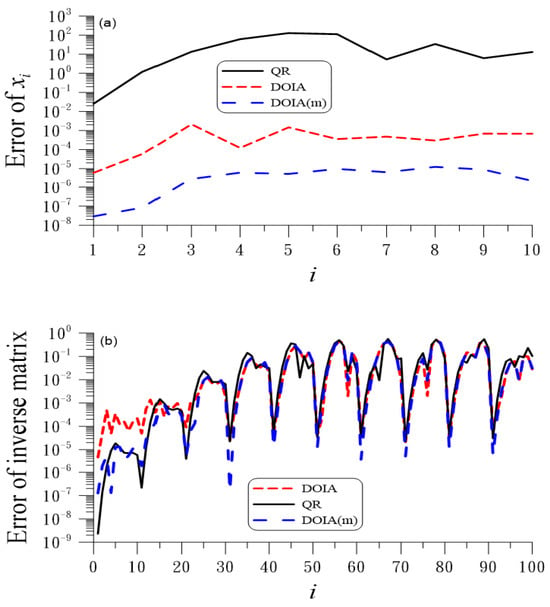

Next, we compute the inverse of a five-dimensional Hilbert matrix with by using the DOS method, where we take . Interestingly, is also symmetric. The residual error is . When we apply the DOIA to solve this problem with , the accuracy of can be raised to . Figure 5a shows the residual error obtained by the DOIA, which does not converge within 100 steps under . Figure 5b compares the errors of the elements of the inverse matrix by using the components of . Due to the highly ill-conditioned nature of the Hilbert matrix, the results obtained by the DOS and DOIA are quite different. ME = is obtained for .
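Because the inverse of the Hilbert matrix is known in closed form (a standard result quoted here, not taken from the source), the errors of numerically computed inverses can be measured against an exact reference. The sketch below uses exact rational arithmetic from Python's `fractions` module; it confirms that the exact inverse of the five-dimensional Hilbert matrix has integer entries and is symmetric. The function names are hypothetical.

```python
from fractions import Fraction
from math import comb


def hilbert(n):
    """n x n Hilbert matrix H_ij = 1/(i + j - 1), i, j = 1..n, as exact fractions."""
    return [[Fraction(1, i + j - 1) for j in range(1, n + 1)] for i in range(1, n + 1)]


def hilbert_inverse(n):
    """Exact integer inverse of the n x n Hilbert matrix (closed-form formula)."""
    return [[(-1) ** (i + j) * (i + j - 1)
             * comb(n + i - 1, n - j) * comb(n + j - 1, n - i)
             * comb(i + j - 2, i - 1) ** 2
             for j in range(1, n + 1)] for i in range(1, n + 1)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


H5, H5inv = hilbert(5), hilbert_inverse(5)
product = matmul(H5, H5inv)   # exactly the 5 x 5 identity
```

The entries of the exact inverse grow rapidly with n, which is one way to see the ill-conditioning discussed above.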

Figure 5.

For Example 5 solved by the DOS, DOIA, and DOIA(m), showing (a) residuals, (b) comparing error of inverse matrix.

The DOIA(m) with and converges in 62 steps under ; the accuracy is raised to , and ME = . Clearly, the DOIA(m) significantly improves the convergence speed and accuracy.

Then, we compare the numerical results with those obtained with the QR method. For , we apply the DOIA to solve this problem with , and the accuracy is , while that obtained with the QR method is . In Figure 6a, we compare the solutions of linear system (4), where the exact solution is supposed to be . Whereas the DOIA provides quite accurate solutions with ME = , the QR method fails, as the diagonal elements of matrix R are very small for the ill-conditioned Hilbert matrix. The errors of the inverse matrix obtained with these two methods are comparable, as shown in Figure 6b.

Figure 6.

For Example 5 solved by the DOIA, DOIA(m), and QR, comparing errors of (a) , and (b) inverse matrix.

The DOIA(m) with and does not converge within 100 steps under ; however, the accuracy can be raised to ME = .

We consider the Hilbert matrix in Equation (136) and apply the DOIA(m) to find with an initial value . Because the Newton–Schulz iterative method (NSIM) converges slowly, we set an upper bound of IN = 500. Table 4 compares the and IN obtained with the DOIA(m) with and the NSIM. The DOIA(m) finds an accurate inverse matrix in fewer iterations.

Table 4.

For Example 5, the and IN obtained by DOIA(m) and the Newton–Schulz iterative method (NSIM) for different n.

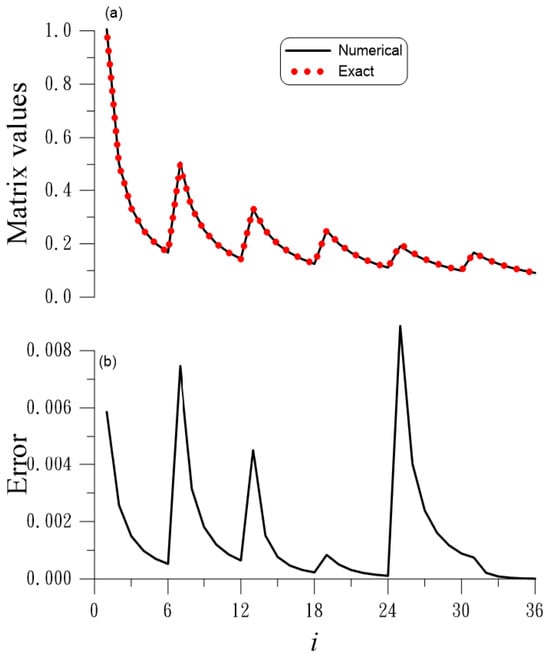

5.6. Example 6

In this example, we apply the DOS with to solve a six-dimensional matrix Equation (11) with the coefficient matrix A given in Equation (133), where the solution is the Hilbert matrix given in Equation (136) with . First, we find the right inverse of A from by using the DOS; the solution of the matrix equation is then given by . The numerical solution of the matrix elements with , numbered consecutively from left to right and top to bottom, is compared with the exact one in Figure 7a; the numerical error, shown in Figure 7b, is smaller than 0.008. The residual error is 0.0157, which is quite accurate.

Figure 7.

For Example 6 solved by the DOS: (a) comparing numerical and exact solutions, (b) numerical error of matrix elements; (a,b) with the same x axis.

The DOIA(m) with and converges in 28 steps under ; the accuracy is raised to , and ME = . The convergence can be accelerated to 14 steps by taking and ; the accuracy then decreases slightly, to ME = and .

5.7. Example 7

Consider the mixed boundary value problem of the Laplace equation:

The method of fundamental solutions is taken as

where

We consider

This problem is a special Case 2 with . We take and . ME = is obtained in 174 steps by the DOIA(m) with and . The CPU time is 0.65 s. It can also be treated as a special Case 1 with . We take . ME = is obtained within 300 steps by the DOIA(m) with and . The CPU time is 0.62 s. To improve the accuracy, a vector form of the DOIA(m) could be developed; this is a separate issue not reported here.

According to [], we apply the GMRES(m) with to solve this problem. ME = is obtained in 2250 steps, and the CPU time is 2.19 s.

6. Pseudoinverse of Rectangular Matrix

The Moore–Penrose pseudoinverse of A, denoted by , is the best-known generalized inverse of a rectangular matrix and satisfies the following Penrose equations:
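The four Penrose equations, in their standard form AXA = A, XAX = X, (AX)ᵀ = AX, and (XA)ᵀ = XA, can be turned into four numerical errors for any candidate X. The sketch below measures each residual in the Frobenius norm; that choice of norm is our assumption, and the function names are hypothetical.

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def fro_dist(a, b):
    """Frobenius norm of A - B."""
    return math.sqrt(sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)))


def penrose_errors(a, x):
    """Residuals of AXA = A, XAX = X, (AX)^T = AX, (XA)^T = XA (Frobenius norm)."""
    ax, xa = matmul(a, x), matmul(x, a)
    return (fro_dist(matmul(ax, a), a),
            fro_dist(matmul(xa, x), x),
            fro_dist(transpose(ax), ax),
            fro_dist(transpose(xa), xa))


A = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
X = [[2 / 3, -1 / 3], [-1 / 3, 2 / 3], [1 / 3, 1 / 3]]  # exact A^+ = A^T (A A^T)^{-1}
errors = penrose_errors(A, X)
```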

6.1. A New Matrix Pencil

We rewrite Equation (8) as

given an initial value , we seek the next-step solution X with

where . The new matrix pencil with degree m is given by

which consists of an m-degree polynomial of . The modified Gram–Schmidt process is employed to orthogonalize and normalize .

The iterative form of Equation (142) is

Notice that it includes several iterative algorithms as special cases: the Newton–Schulz method [], the Chebyshev method [], the Homeier method [], the PS method (PSM) by Petkovic and Stanimirovic [], and the KKRJ method []:

In Equation (144), two optimization methods are used to determine the coefficients .

The results in Theorem 1 are also applicable to problem (141). Hence, we propose the following iterative algorithm, namely, the Moore–Penrose iterative algorithm (MPIA).

MPIA is based on the DOIA developed in Section 4; it uses the new matrix pencil in Equation (143) with . The motivation for using in the matrix pencil of the MPIA is that it generalizes the methods in Equations (145)–(149) to an m-order method and provides a theoretical foundation for determining the expansion coefficients by the double-optimization technique (Algorithm 4).

Algorithm 4 MPIA

1: Select m and give
2: Do
3:
4: (orthonormalization)
5:
6:
7:
8:
9:
10:
11:
12:
13:
14: Enddo, if

The restarted version MPIA(m) can be constructed in the same way as DOIA(m).

6.2. Optimized Hyperpower Method

Pan et al. [] proposed the following hyperpower method:

see also [,]. Equation (150) includes the Newton–Schultz method [] and the Chebyshev method [] as special cases.
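A sketch of the hyperpower family, assuming its usual form $X_{k+1} = X_k(I + R_k + \cdots + R_k^{p-1})$ with $R_k = I - AX_k$ (Equation (150) itself is not reproduced here, so this form is an assumption). With $p = 2$ it reduces to the Newton–Schultz step $X_k(2I - AX_k)$, and with $p = 3$ to the Chebyshev step $X_k(3I - 3AX_k + (AX_k)^2)$. The name `hyperpower_pinv` and the starting value $X_0 = A^{\mathrm{T}}/\|A\|_2^2$ are illustrative.

```python
import numpy as np


def hyperpower_pinv(A, p=3, tol=1e-12, max_iter=200):
    """Hyperpower iteration of order p for the Moore-Penrose inverse:
    X_{k+1} = X_k (I + R_k + ... + R_k^{p-1}),  R_k = I - A X_k.
    """
    A = np.asarray(A, dtype=float)
    m = A.shape[0]
    I = np.eye(m)
    X = A.T / np.linalg.norm(A, 2) ** 2  # assumed starting value
    for k in range(1, max_iter + 1):
        R = I - A @ X
        # Horner's rule: S = I + R (I + R (I + ...)) = sum_{j<p} R^j
        S = I.copy()
        for _ in range(p - 1):
            S = I + R @ S
        X_new = X @ S
        if np.linalg.norm(X_new - X) <= tol * np.linalg.norm(X_new):
            return X_new, k
        X = X_new
    return X, max_iter
```

Evaluating the polynomial by Horner's rule costs $p + 1$ matrix products per step ($AX_k$, $p - 1$ products with $R_k$, and the final product with $X_k$).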

Letting , we rewrite Equation (150) as

we generalize it to

Equation (152) is used to find the optimized descent matrix X in the current-step residual equation:

Two optimization methods are used to determine the coefficients . The results in Theorem 1 are also applicable to problem (153). Hence, we propose the following iterative algorithm, namely, the optimized hyperpower iterative algorithm OHPIA(m), which incorporates the restarting technique. Because the matrix pencil consists of powers of the residual matrix, orthonormalization of is not recommended (Algorithm 5).
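To illustrate what optimizing the coefficients of the residual powers can buy, here is a simplified stand-in for one optimized hyperpower step. It is not the paper's OHPIA(m): instead of the two optimizations of Theorem 1, it chooses $c_0, \dots, c_{p-1}$ by a single least-squares minimization of $\|I - AX_{k+1}\|_F$ over $X_{k+1} = X_k\sum_j c_j R_k^j$. The function name `optimized_hyperpower_step` is hypothetical. In line with the remark above, the raw powers are not orthonormalized.

```python
import numpy as np


def optimized_hyperpower_step(A, X, p=3):
    """One hypothetical least-squares 'optimized hyperpower' step
    (a single-optimization stand-in, not the paper's OHPIA(m)).

    With R = I - A X, seek X_new = X (c_0 I + c_1 R + ... + c_{p-1} R^{p-1})
    and choose c to minimize ||I - A X_new||_F.
    """
    m = A.shape[0]
    I = np.eye(m)
    R = I - A @ X
    powers = [I]
    for _ in range(p - 1):
        powers.append(powers[-1] @ R)  # raw powers R^j, not orthonormalized
    # Column j of M is vec(A X R^j); solve min_c ||vec(I) - M c||_2.
    AX = A @ X
    M = np.column_stack([(AX @ P).ravel() for P in powers])
    c, *_ = np.linalg.lstsq(M, I.ravel(), rcond=None)
    X_new = X @ sum(cj * P for cj, P in zip(c, powers))
    return X_new, c
```

Because $c_j = 1$ for all $j$ reproduces the plain hyperpower step, the optimized residual can never be larger than the unoptimized one; repeating the step until $X$ stops changing gives a basic optimized hyperpower iteration.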

Algorithm 5 OHPIA(m)

1: Select and , and give
2: Do
3: Do ,
4:
5:
6:
7:
8:
9:
10:
11:
12:
13:
14:
15:
16:
17: Enddo of (3), if
18: Otherwise, , go to (2)

7. Numerical Testing of Rectangular Matrix

7.1. Example 8

We find the Moore–Penrose inverse of the following rank-deficient matrix []:

This problem belongs to Case 2. We apply the DOIA to find its Moore–Penrose inverse, whose exact solution is

Under the same convergence criterion as that used by Xia et al. [], the DOIA with converges very fast, in three steps. Compared with the exact solution in Equation (154), ME = is obtained.

In Table 5, we compare the DOIA ( and ) with other numerical methods specified by Xia et al. [], Petkovic and Stanimirovic [], and Kansal et al. [] to assess the performance of the DOIA, measured by the errors of the four Penrose equations (137)–(140) and the iteration number (IN).
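The four error measures can be computed with a helper such as the one below. The excerpt does not show which matrix norm the tables use, so the Frobenius norm here is an assumption, and `penrose_errors` is a hypothetical name.

```python
import numpy as np


def penrose_errors(A, X):
    """Frobenius norms of the residuals of the four Penrose equations
    for a real matrix A and a candidate pseudoinverse X (assumed norm)."""
    AX, XA = A @ X, X @ A
    return (
        np.linalg.norm(AX @ A - A),  # A X A = A
        np.linalg.norm(XA @ X - X),  # X A X = X
        np.linalg.norm(AX.T - AX),   # (A X)^T = A X
        np.linalg.norm(XA.T - XA),   # (X A)^T = X A
    )
```

For example, on an arbitrary rank-2 test matrix the errors of `np.linalg.pinv(A)` are at rounding level, whereas `X = A.T` satisfies only the two symmetry conditions.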

Table 5.

Computed results of a rank-deficient matrix in Example 8.

Recently, Kansal et al. [] proposed the iterative scheme in Equation (149). For this problem, we take . With , the iteration overflows; hence, we take .

In Table 5, Alg. abbreviates Algorithm, IP denotes the initial point, and IN is the iteration number. . The first two algorithms were reported by Xia et al. []. The DOIA converges much faster and is more accurate than the other algorithms.

In computing the pseudoinverse, the last two singular values, which are close to zero, can be kept from entering the matrix if m is small enough. For example, we take in Table 5, so that can be computed accurately; otherwise, a near-zero singular value would appear in the denominator and amplify the rounding error.

7.2. Example 9: Inverting the Ill-Conditioned Rectangular Hilbert Matrix

We want to find the Moore–Penrose inverse of the Hilbert matrix:

This problem belongs to Case 2 and is more difficult than the previous example. Here, we fix , . The numerical errors of the Penrose Equations (137)–(140) are compared in Table 6. In DOIA(m) and OHPIA(m), we take , and . DOIA(m) and OHPIA(m) converge faster than the methods in [,].
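The rectangular Hilbert matrix has entries $h_{ij} = 1/(i + j - 1)$. The sizes used in this example are not visible in the excerpt, so the sizes below are arbitrary; the sketch only shows how quickly the 2-norm condition number grows, which is what makes these matrices hard for iterative pseudoinverse solvers. The function name `hilbert` is illustrative.

```python
import numpy as np


def hilbert(m, n):
    """Rectangular m x n Hilbert matrix with entries 1 / (i + j - 1), i, j >= 1."""
    i = np.arange(1, m + 1)[:, None]
    j = np.arange(1, n + 1)[None, :]
    return 1.0 / (i + j - 1)


for m, n in [(6, 4), (10, 6), (14, 8)]:
    s = np.linalg.svd(hilbert(m, n), compute_uv=False)  # singular values, descending
    print(f"{m}x{n}: cond_2 = {s[0] / s[-1]:.2e}")
```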

Table 6.

Computed results of the Hilbert matrix with , in Example 9.

Next, we find the Moore–Penrose inverse of the Hilbert matrix with and . For MPIA, ; for MPIA(m). The method in [] does not converge with ; hence, it is not applicable to finding the inverse of a highly ill-conditioned Hilbert matrix with and (Table 7). Like the KKRJ, OHPIA(m) is weak for highly ill-conditioned Hilbert matrices; the errors of and rise to the order of .

Table 7.

Computed results of the Hilbert matrix with and .

7.3. Example 10

We find the Moore–Penrose inverse of the cyclic matrix in Example 2, as shown in Table 8, where we take and , for MPIA, and for MPIA(m) and OHPIA(m). Notably, OHPIA(m) performs much better than the other methods.

Table 8.

Computed results of the cyclic matrix with and in Example 10.

7.4. Example 11

We find the Moore–Penrose inverse of a full-rank matrix given by but with . We take and for MPIA(m); is taken for OHPIA(m). The method in Equation (145) is denoted as NSM. Table 9 compares the errors and IN of the different methods: MPIA(m) outperforms the existing methods, and OHPIA(m) performs even better than MPIA(m).

Table 9.

Computed results of a full-rank matrix in Example 11 with and .

7.5. Example 12

We find the Moore–Penrose inverse of a randomly generated real matrix of size with . We take and for the MPIA(m). Table 10 compares the errors and IN of the different methods.

Table 10.

Computed results of a random matrix in Example 12 with and .

8. Conclusions

In the m-degree matrix pencil Krylov subspace, an explicit solution (34) of the linear matrix equation was obtained by optimizing the two merit functions in (32) and (33). From it, we derive an optimal iterative algorithm, DOIA, to solve square or nonsquare linear matrix equation systems. The DOIA possesses an A-orthogonality property and is absolutely convergent, and it solves linear matrix equations with good computational efficiency and accuracy. The restarted version DOIA(m) is shown to speed up the convergence. The Moore–Penrose pseudoinverses of rectangular matrices are also computed by using the DOIA, DOIA(m), MPIA, and MPIA(m). The proposed polynomial pencil method includes the Newton–Schultz method, the Chebyshev method, the Homeier method, and the KKRJ method as special cases; importantly, in the proposed iterative MPIA and MPIA(m), the coefficients are optimized using two minimization techniques. We also propose a new modification of the hyperpower method, namely, the optimized hyperpower iterative algorithm OHPIA(m), which, through two optimizations, becomes the most powerful of the tested iterative algorithms for quickly computing the Moore–Penrose pseudoinverses of rectangular matrices. However, like KKRJ, OHPIA(m) is weak in inverting ill-conditioned rectangular matrices.

Varying the affine matrix Krylov subspace to find a better iterative solution of linear matrix equations through a dual optimization is a novel idea. Its limitations are that several matrix multiplications are needed to construct the projection operator P in the matrix Krylov subspace, and that inverting the m-dimensional matrix is computationally costly.

Author Contributions

Conceptualization, C.-S.L. and C.-W.C.; Methodology, C.-S.L. and C.-W.C.; Software, C.-S.L., C.-W.C. and C.-L.K.; Validation, C.-S.L., C.-W.C. and C.-L.K.; Formal analysis, C.-S.L. and C.-W.C.; Investigation, C.-S.L., C.-W.C. and C.-L.K.; Resources, C.-S.L. and C.-W.C.; Data curation, C.-S.L., C.-W.C. and C.-L.K.; Writing—original draft, C.-S.L. and C.-W.C.; Writing—review & editing, C.-W.C.; Visualization, C.-S.L., C.-W.C. and C.-L.K.; Supervision, C.-S.L. and C.-W.C.; Project administration, C.-W.C.; Funding acquisition, C.-W.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financially supported by the National Science and Technology Council [grant numbers: NSTC 112-2221-E-239-022].

Data Availability Statement

The data presented in this study are available on request from the corresponding authors.

Acknowledgments

The authors would like to express their thanks to the reviewers, who supplied feedback to improve the quality of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hestenes, M.R.; Stiefel, E.L. Methods of conjugate gradients for solving linear systems. J. Res. Nat. Bur. Stand. 1952, 49, 409–436.

- Lanczos, C. Solution of systems of linear equations by minimized iterations. J. Res. Nat. Bur. Stand. 1952, 49, 33–53.

- Liu, C.S. An optimal multi-vector iterative algorithm in a Krylov subspace for solving the ill-posed linear inverse problems. CMC Comput. Mater. Contin. 2013, 33, 175–198.

- Dongarra, J.; Sullivan, F. Guest editors’ introduction to the top 10 algorithms. Comput. Sci. Eng. 2000, 2, 22–23.

- Simoncini, V.; Szyld, D.B. Recent computational developments in Krylov subspace methods for linear systems. Numer. Linear Algebra Appl. 2007, 14, 1–59.

- Saad, Y.; Schultz, M.H. GMRES: A generalized minimal residual algorithm for solving nonsymmetric linear systems. SIAM J. Sci. Stat. Comput. 1986, 7, 856–869.

- Saad, Y. Krylov subspace methods for solving large unsymmetric linear systems. Math. Comput. 1981, 37, 105–126.

- Freund, R.W.; Nachtigal, N.M. QMR: A quasi-minimal residual method for non-Hermitian linear systems. Numer. Math. 1991, 60, 315–339.

- van Den Eshof, J.; Sleijpen, G.L.G. Inexact Krylov subspace methods for linear systems. SIAM J. Matrix Anal. Appl. 2004, 26, 125–153.

- Paige, C.C.; Saunders, M.A. Solution of sparse indefinite systems of linear equations. SIAM J. Numer. Anal. 1975, 12, 617–629.

- Fletcher, R. Conjugate gradient methods for indefinite systems. In Lecture Notes in Mathematics; Springer: Berlin/Heidelberg, Germany, 1976; Volume 506, pp. 73–89.

- Sonneveld, P. CGS: A fast Lanczos-type solver for nonsymmetric linear systems. SIAM J. Sci. Stat. Comput. 1989, 10, 36–52.

- van der Vorst, H.A. Bi-CGSTAB: A fast and smoothly converging variant of Bi-CG for the solution of nonsymmetric linear systems. SIAM J. Sci. Stat. Comput. 1992, 13, 631–644.

- Saad, Y.; van der Vorst, H.A. Iterative solution of linear systems in the 20th century. J. Comput. Appl. Math. 2000, 123, 1–33.

- Bouyghf, F.; Messaoudi, A.; Sadok, H. A unified approach to Krylov subspace methods for solving linear systems. Numer. Algorithms 2024, 96, 305–332.

- Saad, Y. Iterative Methods for Sparse Linear Systems, 2nd ed.; SIAM: Philadelphia, PA, USA, 2003.

- van der Vorst, H.A. Iterative Krylov Methods for Large Linear Systems; Cambridge University Press: New York, NY, USA, 2003.

- Jbilou, K.; Messaoudi, A.; Sadok, H. Global FOM and GMRES algorithms for matrix equations. Appl. Numer. Math. 1999, 31, 49–63.

- El Guennouni, A.; Jbilou, K.; Riquet, A.J. Block Krylov subspace methods for solving large Sylvester equations. Numer. Algorithms 2002, 29, 75–96.

- Frommer, A.; Lund, K.; Szyld, D.B. Block Krylov subspace methods for functions of matrices. Electron. Trans. Numer. Anal. 2017, 47, 100–126.

- Frommer, A.; Lund, K.; Szyld, D.B. Block Krylov subspace methods for functions of matrices II: Modified block FOM. SIAM J. Matrix Anal. Appl. 2020, 41, 804–837.

- El Guennouni, A.; Jbilou, K.; Riquet, A.J. The block Lanczos method for linear systems with multiple right-hand sides. Appl. Numer. Math. 2004, 51, 243–256.

- Kubínová, M.; Soodhalter, K.M. Admissible and attainable convergence behavior of block Arnoldi and GMRES. SIAM J. Matrix Anal. Appl. 2020, 41, 464–486.

- Lund, K. Adaptively restarted block Krylov subspace methods with low-synchronization skeletons. Numer. Algorithms 2023, 93, 731–764.

- Konghua, G.; Hu, X.; Zhang, L. A new iteration method for the matrix equation AX = B. Appl. Math. Comput. 2007, 187, 1434–1441.

- Meng, C.; Hu, X.; Zhang, L. The skew-symmetric orthogonal solutions of the matrix equation AX = B. Linear Algebra Appl. 2005, 402, 303–318.

- Peng, Z.; Hu, X. The reflexive and anti-reflexive solutions of the matrix equation AX = B. Linear Algebra Appl. 2003, 375, 147–155.

- Zhang, J.C.; Zhou, S.Z.; Hu, X. The (P,Q) generalized reflexive and anti-reflexive solutions of the matrix equation AX = B. Appl. Math. Comput. 2009, 209, 254–258.

- Liu, C.S.; Hong, H.K.; Atluri, S.N. Novel algorithms based on the conjugate gradient method for inverting ill-conditioned matrices, and a new regularization method to solve ill-posed linear systems. Comput. Model. Eng. Sci. 2010, 60, 279–308.

- Higham, N.J. Functions of Matrices: Theory and Computation; SIAM: Philadelphia, PA, USA, 2008.

- Amat, S.; Ezquerro, J.A.; Hernandez-Veron, M.A. Approximation of inverse operators by a new family of high-order iterative methods. Numer. Linear Algebra Appl. 2014, 21, 629.

- Homeier, H.H.H. On Newton-type methods with cubic convergence. J. Comput. Appl. Math. 2005, 176, 425–432.

- Petkovic, M.D.; Stanimirovic, P.S. Iterative method for computing Moore–Penrose inverse based on Penrose equations. J. Comput. Appl. Math. 2011, 235, 1604–1613.

- Dehdezi, E.K.; Karimi, S. GIBS: A general and efficient iterative method for computing the approximate inverse and Moore–Penrose inverse of sparse matrices based on the Schultz iterative method with applications. Linear Multilinear Algebra 2023, 71, 1905–1921.

- Cordero, A.; Soto-Quiros, P.; Torregrosa, J.R. A general class of arbitrary order iterative methods for computing generalized inverses. Appl. Math. Comput. 2021, 409, 126381.

- Kansal, M.; Kaur, M.; Rani, L.; Jantschi, L. A cubic class of iterative procedures for finding the generalized inverses. Mathematics 2023, 11, 3031.

- Cordero, A.; Segura, E.; Torregrosa, J.R.; Vassileva, M.P. Inverse matrix estimations by iterative methods with weight functions and their stability analysis. Appl. Math. Lett. 2024, 155, 109122.

- Petkovic, M.D.; Stanimirovic, P.S. Two improvements of the iterative method for computing Moore–Penrose inverse based on Penrose equations. J. Comput. Appl. Math. 2014, 267, 61–71.

- Katsikis, V.N.; Pappas, D.; Petralias, A. An improved method for the computation of the Moore–Penrose inverse matrix. Appl. Math. Comput. 2011, 217, 9828–9834.

- Stanimirovic, I.; Tasic, M. Computation of generalized inverse by using the LDL* decomposition. Appl. Math. Lett. 2012, 25, 526–531.

- Sheng, X.; Wang, T. An iterative method to compute Moore–Penrose inverse based on gradient maximal convergence rate. Filomat 2013, 27, 1269–1276.

- Toutounian, F.; Ataei, A. A new method for computing Moore–Penrose inverse matrices. J. Comput. Appl. Math. 2009, 228, 412–417.

- Soleimani, F.; Stanimirovic, P.S.; Soleymani, F. Some matrix iterations for computing generalized inverses and balancing chemical equations. Algorithms 2015, 8, 982–998.

- Baksalary, O.M.; Trenkler, G. The Moore–Penrose inverse: A hundred years on a frontline of physics research. Eur. Phys. J. 2021, 46, 9.

- Pavlikova, S.; Sevcovic, D. On the Moore–Penrose pseudo-inversion of block symmetric matrices and its application in the graph theory. Linear Algebra Appl. 2023, 673, 280–303.

- Sayevand, K.; Pourdarvish, A.; Machado, J.A.T.; Erfanifar, R. On the calculation of the Moore–Penrose and Drazin inverses: Application to fractional calculus. Mathematics 2021, 9, 2501.

- AL-Obaidi, R.H.; Darvishi, M.T. A comparative study on qualification criteria of nonlinear solvers with introducing some new ones. J. Math. 2022, 2022, 4327913.

- Liu, C.S.; Kuo, C.L.; Chang, C.W. Solving least-squares problems via a double-optimal algorithm and a variant of Karush–Kuhn–Tucker equation for over-determined system. Algorithms 2024, 17, 211.

- Einstein, A. The foundation of the general theory of relativity. Ann. Phys. 1916, 49, 769–822.

- Todd, J. The condition of finite segments of the Hilbert matrix. In The Solution of Systems of Linear Equations and the Determination of Eigenvalues; Taussky, O., Ed.; National Bureau of Standards: Applied Mathematics Series; National Bureau of Standards: Gaithersburg, MD, USA, 1954; Volume 39, pp. 109–116.

- Pan, Y.; Soleymani, F.; Zhao, L. An efficient computation of generalized inverse of a matrix. Appl. Math. Comput. 2018, 316, 89–101.

- Climent, J.J.; Thome, N.; Wei, Y. A geometrical approach on generalized inverses by Neumann-type series. Linear Algebra Appl. 2001, 332–334, 533–540.

- Soleymani, F.; Stanimirovic, P.S.; Haghani, F.K. On hyperpower family of iterations for computing outer inverses possessing high efficiencies. Linear Algebra Appl. 2015, 484, 477–495.

- Xia, Y.; Chen, T.; Shan, J. A novel iterative method for computing generalized inverse. Neural Comput. 2014, 26, 449–465.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).