A Modified Three-Term Conjugate Descent Derivative-Free Method for Constrained Nonlinear Monotone Equations and Signal Reconstruction Problems

Abstract

1. Introduction

2. Algorithm and Its Derivation

3. Theoretical Results

- i. For all k, there exists a step-length , satisfying (26), for some,

- ii. The step-length satisfies the following relation:

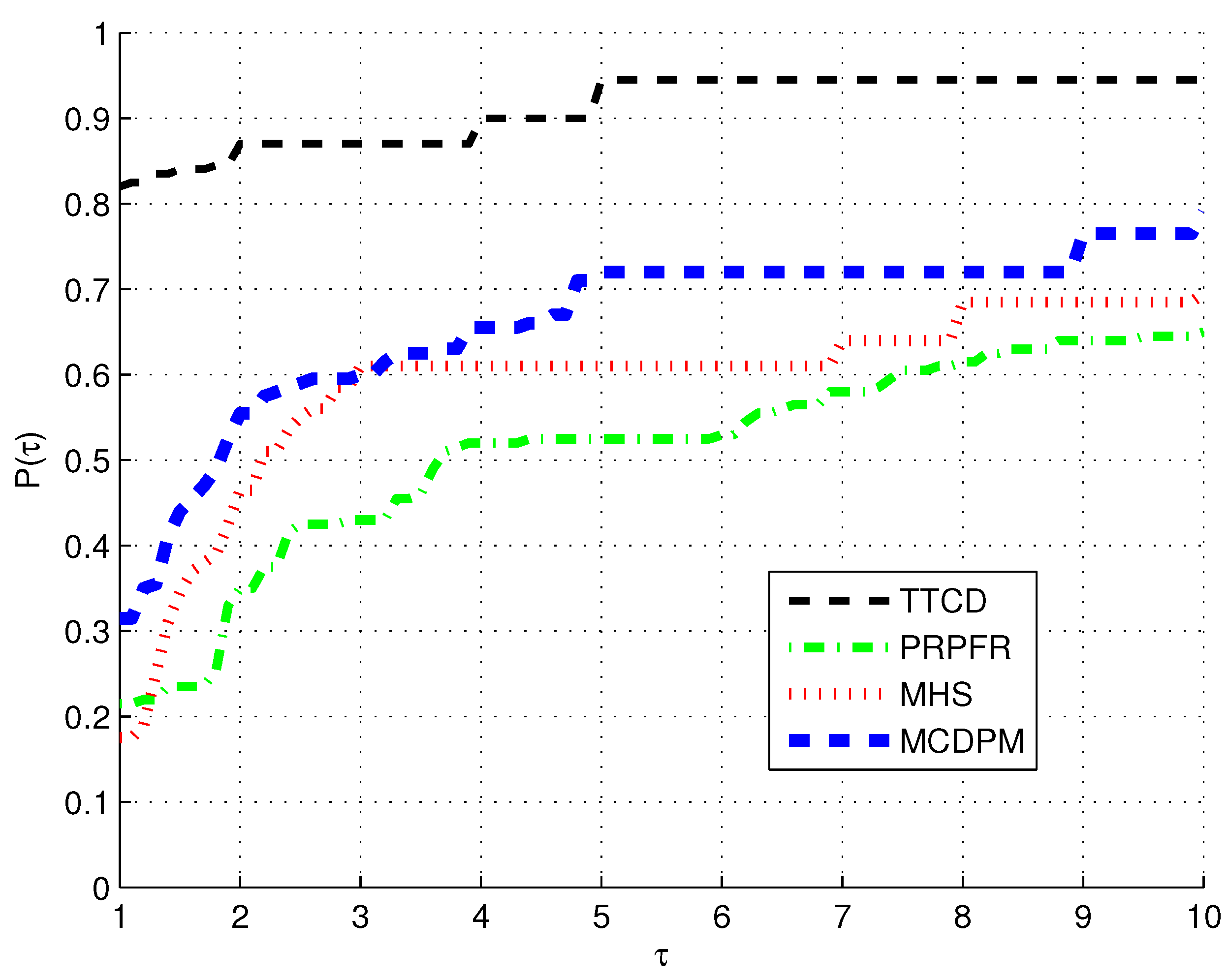

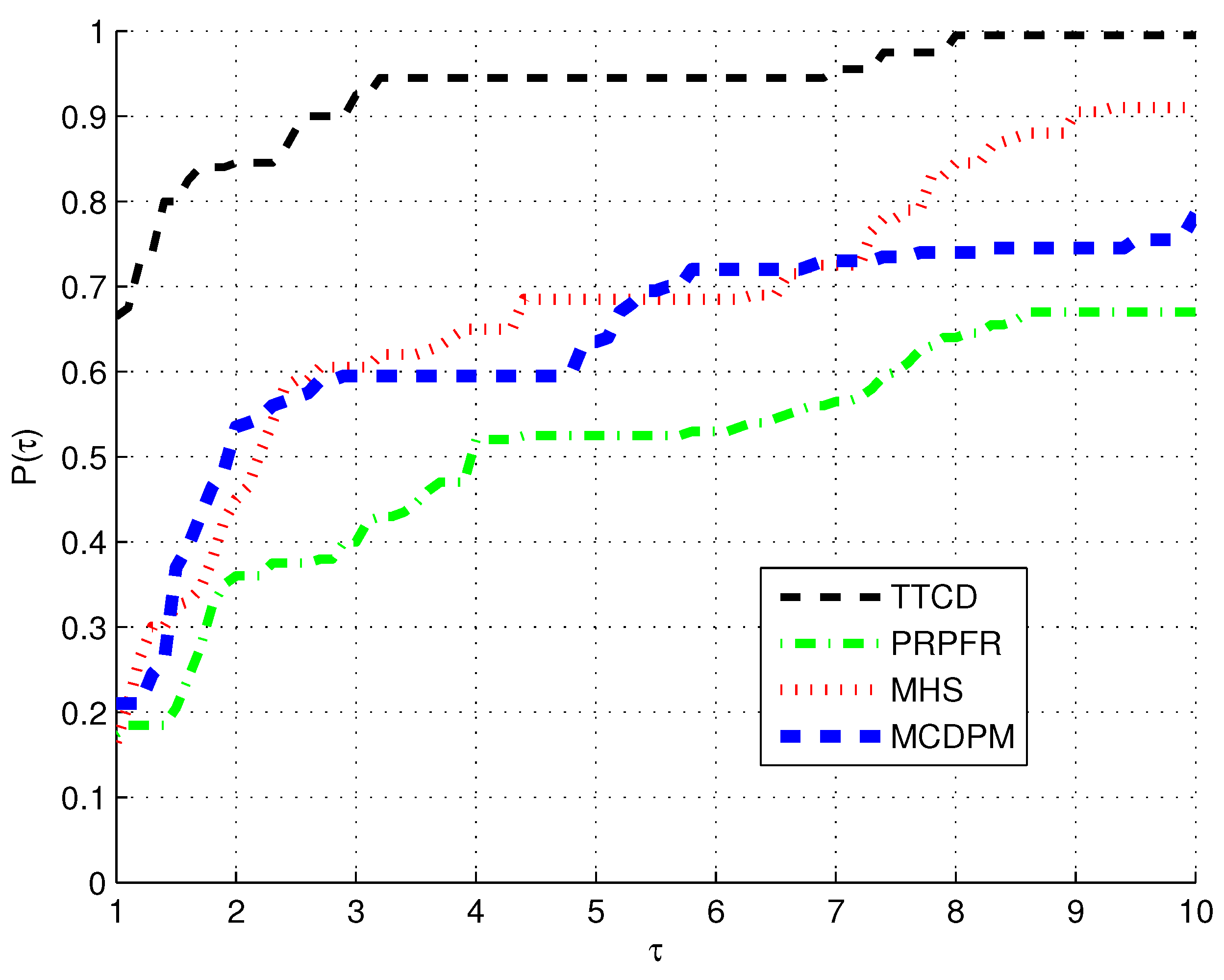

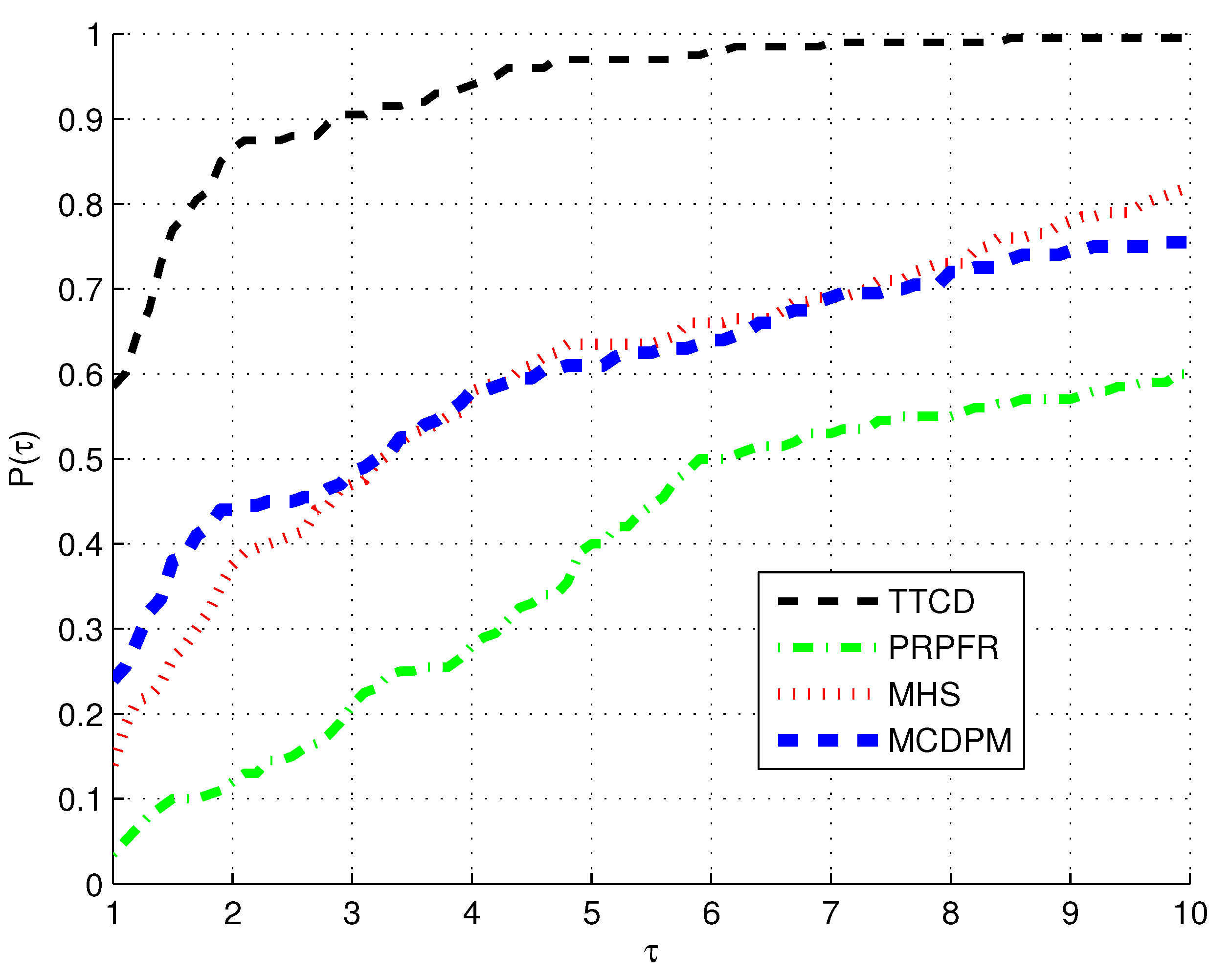

4. Results for the Experiments

- Five different initial points: .

- Five different dimensions: 1000, 5000, 10,000, 50,000, and 100,000.

- Eight problems (see Table 1 for details).

| S/N | Problem & Reference |
|---|---|
| 1 | Modified exponential function 2 [34] |
| 2 | Logarithmic function [34] |
| 3 | Problem 1 in [14] |
| 4 | Strictly convex function I [34] |
| 5 | Strictly convex function II [34] |
| 6 | Tridiagonal exponential function [35] |
| 7 | Nonsmooth function [36] |
| 8 | Problem 4 in [37] |

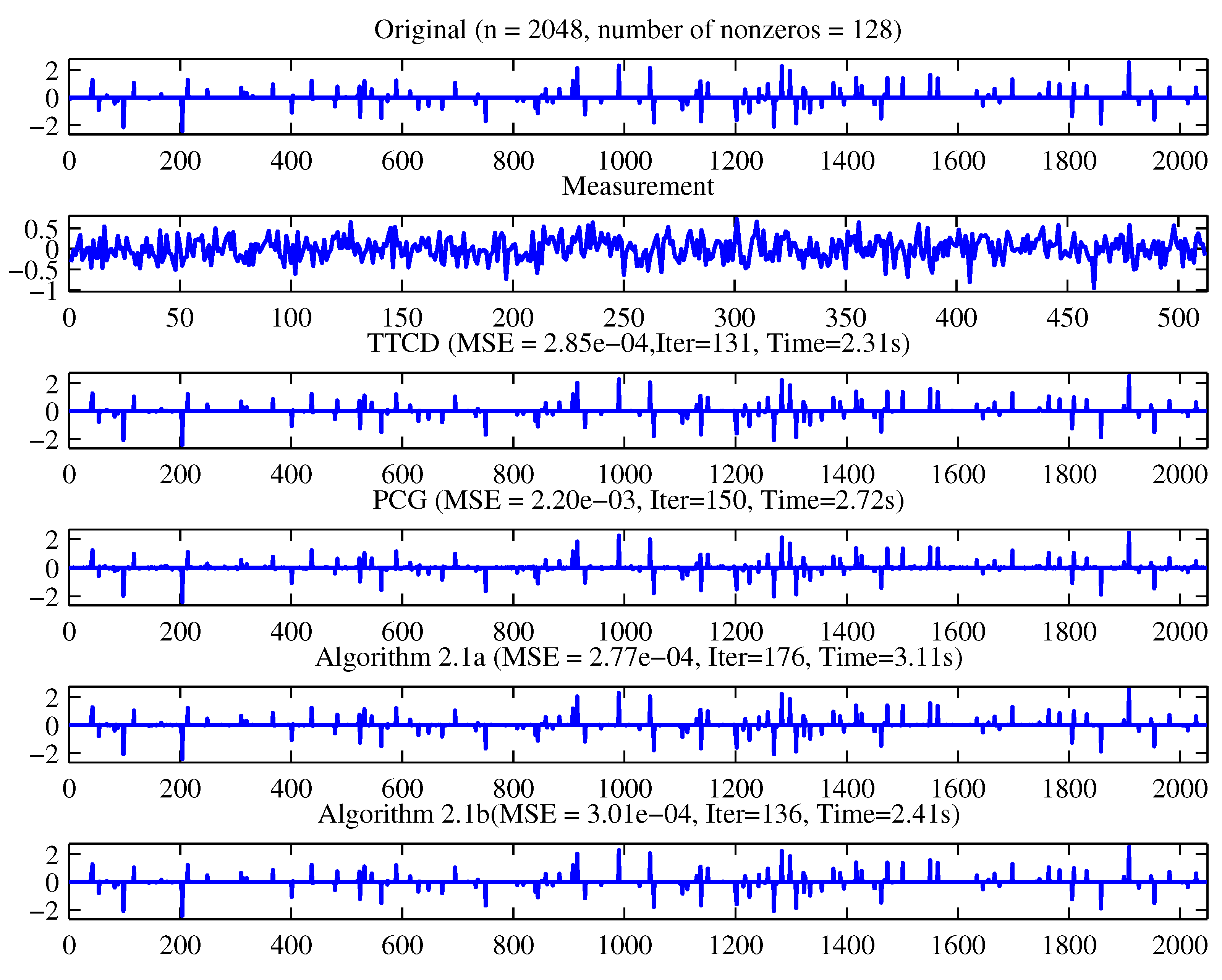

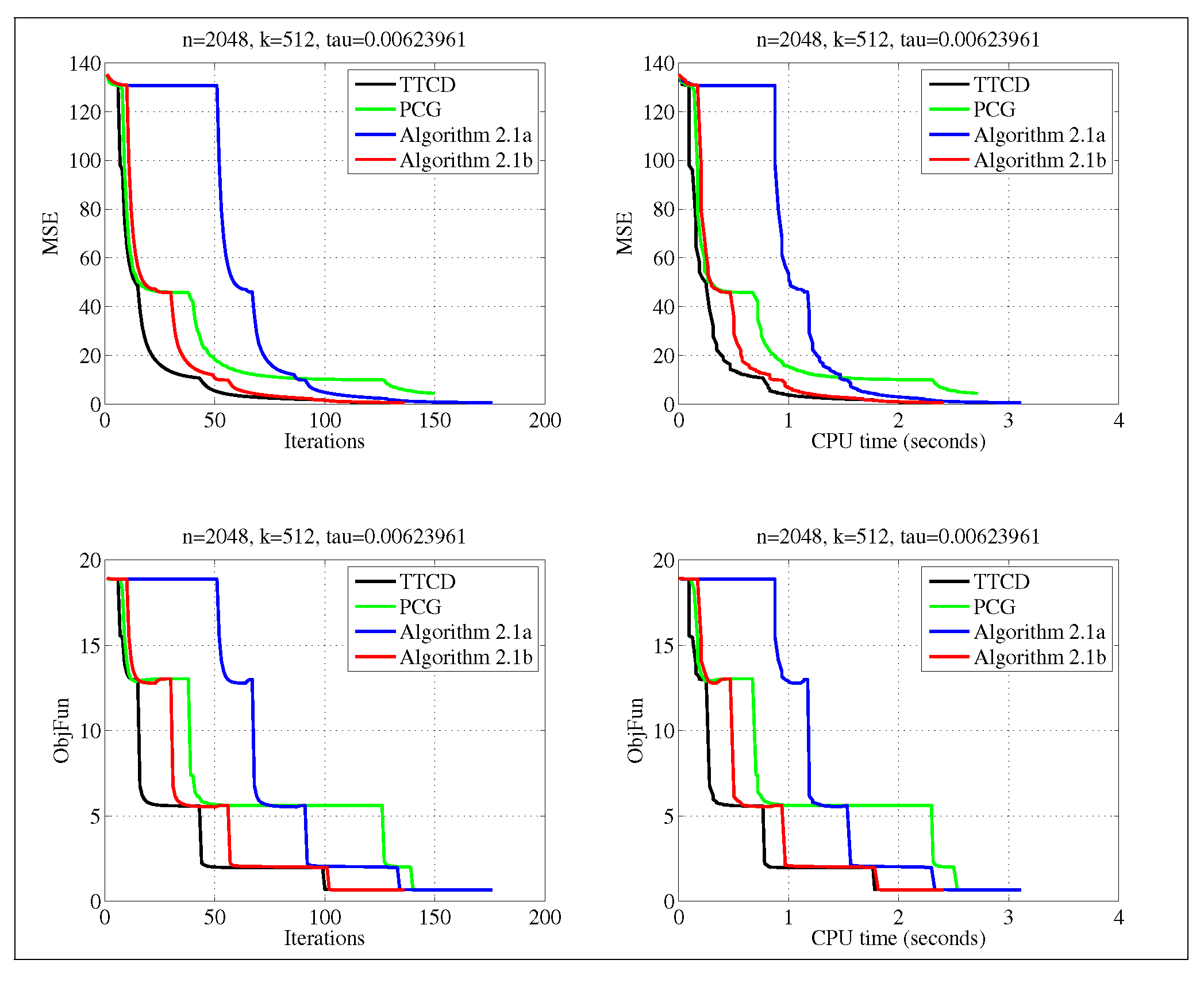

5. Signal Reconstruction

Algorithm 1: A modified three-term conjugate descent (TTCD) derivative-free algorithm

- Input. Choose , Set .

- Step 1. If then stop. Else, go to Step 2.

- Step 3. Compute the step-length where is the least non-negative integer satisfying the following inequality:

- Step 4. Let If and terminate. Else, compute

- Step 5. Set , repeat from Step 1.
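The steps of Algorithm 1 follow the familiar template of projection-based derivative-free methods: test the residual, pick a direction, backtrack on a step-length, then project. The Python sketch below illustrates that template only. Because the formulas of Algorithm 1 are not reproduced in this text, several pieces are assumptions rather than the TTCD method itself: the search direction is the plain residual direction `d = -F(x)`, the line-search test is the commonly used inequality `-F(x + alpha*d)^T d >= sigma*alpha*||d||^2`, the update is the standard hyperplane-projection step, and the names `projection_solver`, `project_nonneg`, the parameter values, and the test mapping `F(x) = exp(x) - 1` on the nonnegative orthant are all hypothetical choices made for illustration.

```python
import numpy as np


def project_nonneg(x):
    """Euclidean projection onto the nonnegative orthant (assumed feasible set)."""
    return np.maximum(x, 0.0)


def projection_solver(F, x0, project, tol=1e-6, sigma=1e-4, rho=0.5,
                      kappa=1.0, max_iter=1000, max_backtracks=60):
    """Generic projection-based derivative-free iteration (illustrative only).

    Returns the final iterate and the number of iterations performed.
    """
    x = project(np.asarray(x0, dtype=float))
    for k in range(max_iter):
        Fx = F(x)
        # Stopping test on the residual norm.
        if np.linalg.norm(Fx) <= tol:
            return x, k
        # Assumed placeholder direction; a TTCD direction would go here.
        d = -Fx
        # Backtracking: alpha = kappa * rho**i for the least integer i >= 0
        # that satisfies the assumed line-search inequality.
        alpha = kappa
        for _ in range(max_backtracks):
            z = x + alpha * d
            Fz = F(z)
            if -(Fz @ d) >= sigma * alpha * (d @ d):
                break
            alpha *= rho
        else:
            raise RuntimeError("line search did not find an acceptable step")
        # Accept the trial point if it is feasible and already a solution.
        if np.linalg.norm(Fz) <= tol and np.array_equal(project(z), z):
            return z, k + 1
        # Hyperplane-projection update followed by projection onto the set.
        zeta = (Fz @ (x - z)) / (Fz @ Fz)
        x = project(x - zeta * Fz)
    return x, max_iter


# Example run on an assumed monotone test mapping, F(x) = exp(x) - 1.
x_star, n_iter = projection_solver(np.expm1, np.full(1000, 0.5), project_nonneg)
print(n_iter, np.linalg.norm(np.expm1(x_star)))
```

Swapping in a different direction only requires changing the line that sets `d`; the line search and projection steps do not depend on how the direction is built, provided it satisfies a sufficient-descent condition.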

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wang, C.; Wang, Y. A superlinearly convergent projection method for constrained systems of nonlinear equations. J. Glob. Optim. 2009, 44, 283–296.
2. Chen, H.; Wang, Y.; Zhao, H. Finite convergence of a projected proximal point algorithm for the generalized variational inequalities. Oper. Res. Lett. 2012, 44, 303–305.
3. Dai, Z.; Dong, X.; Kang, J.; Hong, L. Forecasting stock market returns: New technical indicators and two-step economic constraint method. N. Am. J. Econ. Financ. 2020, 53, 101216.
4. Iusem, N.; Solodov, V. Newton-type methods with generalized distances for constrained optimization. Optimization 1997, 41, 257–278.
5. Dirkse, S.; Ferris, M. A collection of nonlinear mixed complementarity problems. Optim. Methods Softw. 1995, 5, 319–345.
6. Wang, Y.; Qi, L.; Luo, S.; Xu, Y. An alternative steepest direction method for the optimization in evaluating geometric discord. Pac. J. Optim. 2014, 10, 137–149.
7. Fukushima, M. Equivalent differentiable optimization problems and descent methods for asymmetric variational inequality problems. Math. Program. 1992, 53, 99–110.
8. Zhao, Y.; Li, D. Monotonicity of fixed point and normal mappings associated with variational inequality and its application. SIAM J. Optim. 2001, 11, 962–973.
9. Dennis, J.E.; Moré, J.J. A characterization of superlinear convergence and its application to quasi-Newton methods. Math. Comput. 1974, 28, 549–560.
10. Dennis, J.E., Jr.; Moré, J.J. Quasi-Newton methods, motivation and theory. SIAM Rev. 1977, 19, 46–89.
11. Ioannis, K.A. On a nonsmooth version of Newton’s method using locally Lipschitzian operators. Rend. Del Circ. Mat. Palermo 2007, 56, 5–16.
12. Guanglu, Z.; Chuan, T.K. Superlinear convergence of a Newton-type algorithm for monotone equations. J. Optim. Theory Appl. 2005, 125, 205–221.
13. Mohammad, H.; Waziri, M.Y. On Broyden-like update via some quadratures for solving nonlinear systems of equations. Turk. J. Math. 2015, 39, 335–345.
14. Zhou, W.J.; Li, H.D. A globally convergent BFGS method for nonlinear monotone equations without any merit functions. Math. Comput. 2008, 77, 2231–2240.
15. Donghui, L.; Fukushima, M. A globally and superlinearly convergent Gauss–Newton-based BFGS method for symmetric nonlinear equations. SIAM J. Numer. Anal. 1999, 37, 152–172.
16. Waziri, M.Y.; Yusuf, A.; Abubakar, A.B. Improved conjugate gradient method for nonlinear system of equations. Comput. Appl. Math. 2020, 39, 1–17.
17. Yusuf, A.; Adamu, A.K.; Lawal, L.; Ibrahim, A.K. A Hybrid Conjugate Gradient Algorithm for Nonlinear System of Equations through Conjugacy Condition. In Proceedings of the Artificial Intelligence and Applications, Wuhan, China, 18–20 November 2023.
18. Abubakar, A.B.; Kumam, P.; Mohammad, H.; Awwal, A.M. An Efficient Conjugate Gradient Method for Convex Constrained Monotone Nonlinear Equations with Applications. Mathematics 2019, 7, 767.
19. Zhifeng, D.; Huan, Z. A modified Hestenes-Stiefel-type derivative-free method for large-scale nonlinear monotone equations. Mathematics 2020, 8, 168.
20. Awwal, A.M.; Ishaku, A.; Halilu, A.S.; Stanimirović, P.S.; Pakkaranang, N.; Panyanak, B. Descent Derivative-Free Method Involving Symmetric Rank-One Update for Solving Convex Constrained Nonlinear Monotone Equations and Application to Image Recovery. Symmetry 2022, 14, 2375.
21. Abubakar, A.B.; Kumam, P.; Awwal, A.M.; Thounthong, P. A modified self-adaptive conjugate gradient method for solving convex constrained monotone nonlinear equations for signal recovery problems. Mathematics 2019, 7, 693.
22. Sabi’u, J.; Muangchoo, K.; Shah, A.; Abubakar, A.B.; Jolaoso, L.O. A modified PRP-CG type derivative-free algorithm with optimal choices for solving large-scale nonlinear symmetric equations. Symmetry 2021, 13, 234.
23. Awwal, A.M.; Wang, L.; Kumam, P.; Mohammad, H.; Watthayu, W. A projection Hestenes–Stiefel method with spectral parameter for nonlinear monotone equations and signal processing. Math. Comput. Appl. 2020, 25, 27.
24. Sulaiman, I.M.; Awwal, A.M.; Malik, M.; Pakkaranang, N.; Panyanak, B. A derivative-free MZPRP projection method for convex constrained nonlinear equations and its application in compressive sensing. Mathematics 2022, 10, 2884.
25. Sabi’u, J.; Aremu, K.O.; Althobaiti, A.; Shah, A. Scaled three-term conjugate gradient methods for solving monotone equations with application. Symmetry 2022, 14, 936.
26. Fletcher, R. Practical methods of optimization. In Unconstrained Optimization, 1st ed.; Wiley: New York, NY, USA, 1987; Volume 1.
27. Yan, Q.R.; Peng, X.Z.; Li, D.H. A globally convergent derivative-free method for solving large-scale nonlinear monotone equations. J. Comput. Appl. Math. 2010, 234, 649–657.
28. Solodov, M.; Svaiter, B. A globally convergent inexact Newton method for systems of monotone equations. In Reformulation: Nonsmooth, Piecewise Smooth, Semismooth and Smoothing Methods; Springer: Berlin/Heidelberg, Germany, 1998; pp. 355–369.
29. Koorapetse, M. A new three-term conjugate gradient-based projection method for solving large-scale nonlinear monotone equations. Math. Model. Anal. 2019, 24, 550–563.
30. Abubakar, A.B.; Kumam, P.; Ibrahim, A.H.; Chaipunya, P.; Rano, S.A. New hybrid three-term spectral-conjugate gradient method for finding solutions of nonlinear monotone operator equations with applications. Math. Comput. Simul. 2021, 201, 670–683.
31. Jie, G.; Zhong, W. A new three-term conjugate gradient algorithm with modified gradient-differences for solving unconstrained optimization problems. Methods 2023, 2, 12.
32. Aji, S.; Kumam, P.; Siricharoen, P.; Abubakar, A.B.; Yahaya, M.M. A Modified Conjugate Descent Projection Method for Monotone Nonlinear Equations and Image Restoration. IEEE Access 2020, 8, 158656–158665.
33. Yuan, G.; Li, T.; Hu, W. A conjugate gradient algorithm for large-scale nonlinear equations and image restoration problems. Appl. Numer. Math. 2020, 147, 129–141.
34. La Cruz, W.; Martínez, J.; Raydan, M. Spectral residual method without gradient information for solving large-scale nonlinear systems of equations. Math. Comput. 2006, 75, 1429–1448.
35. Bing, Y.; Lin, G. An efficient implementation of Merrill’s method for sparse or partially separable systems of nonlinear equations. SIAM J. Optim. 1991, 1, 206–221.
36. Abubakar, A.B.; Kumam, P.; Mohammad, H. A note on the spectral gradient projection method for nonlinear monotone equations with applications. Comput. Appl. Math. 2020, 39, 129.
37. Ding, Y.; Xiao, Y.H.; Li, J. A class of conjugate gradient methods for convex constrained monotone equations. Optimization 2017, 66, 2309–2328.
38. Dolan, E.D.; Moré, J.J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.
39. Figueiredo, M.A.T.; Nowak, R.D. An EM algorithm for wavelet-based image restoration. IEEE Trans. Image Process. 2003, 12, 906–916.
40. Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.
41. Figueiredo, M.A.T.; Nowak, R.D.; Wright, S.J. Gradient projection for sparse reconstruction: Application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 2007, 1, 586–597.
42. Van Den Berg, E.; Friedlander, M.P. Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 2008, 31, 890–912.
43. Birgin, E.G.; Martínez, J.M.; Raydan, M. Nonmonotone spectral projected gradient methods on convex sets. SIAM J. Optim. 2000, 10, 1196–1211.
44. Xiao, Y.; Wang, Q.; Hu, Q. Non-smooth equations based method for ℓ1-norm problems with applications to compressed sensing. Nonlinear Anal. Theory Methods Appl. 2011, 74, 3570–3577.
45. Pang, J.S. Inexact Newton methods for the nonlinear complementarity problem. Math. Program. 1986, 36, 54–71.
46. Liu, J.K.; Li, S.J. A projection method for convex constrained monotone nonlinear equations with applications. Comput. Math. Appl. 2015, 70, 2442–2453.
47. Gao, P.; He, C.; Liu, Y. An adaptive family of projection methods for constrained monotone nonlinear equations with applications. Appl. Math. Comput. 2019, 359, 1–16.

| Dimension | Initial Point | TTCD NOI | TTCD NFE | TTCD TIME | TTCD Norm | PRPFR NOI | PRPFR NFE | PRPFR TIME | PRPFR Norm | MHS NOI | MHS NFE | MHS TIME | MHS Norm | MCDPM NOI | MCDPM NFE | MCDPM TIME | MCDPM Norm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1000 | | 1 | 7 | 0.39476 | 0 | 29 | 87 | 0.46498 | 9.59 × 10 | 7 | 22 | 0.26428 | 9.24 × 10 | 18 | 74 | 0.036251 | 8.83 × 10 |
| | | 1 | 7 | 0.042189 | 0 | 31 | 94 | 0.14164 | 9.97 × 10 | 7 | 23 | 0.031263 | 7.02 × 10 | 9 | 38 | 0.021498 | 8.36 × 10 |
| | | 1 | 7 | 0.017869 | 0 | 34 | 102 | 0.057707 | 9.84 × 10 | 7 | 22 | 0.017649 | 3.88 × 10 | 1 | 6 | 0.07097 | 0.00 × 10 |
| | | 1 | 9 | 0.010377 | 0 | 31 | 93 | 0.058201 | 8.64 × 10 | 21 | 65 | 0.04509 | 6.29 × 10 | 1 | 6 | 0.050159 | 0.00 × 10 |
| | | 1 | 10 | 0.010773 | 0 | 1 | 4 | 0.008098 | 0 | 1 | 6 | 0.008276 | 0 | 17 | 70 | 0.37822 | 7.83 × 10 |
| 5000 | | 1 | 7 | 0.32858 | 0 | 28 | 85 | 4.3111 | 9.63 × 10 | 7 | 23 | 0.41693 | 9.97 × 10 | 17 | 69 | 0.6041 | 9.69 × 10 |
| | | 1 | 7 | 0.25391 | 0 | 31 | 93 | 2.1436 | 8.42 × 10 | 8 | 26 | 0.18344 | 2.12 × 10 | 10 | 41 | 0.72735 | 9.79 × 10 |
| | | 1 | 7 | 0.18581 | 0 | 33 | 100 | 1.0508 | 9.92 × 10 | 7 | 22 | 0.73022 | 7.23 × 10 | 1 | 6 | 0.1916 | 0.00 × 10 |
| | | 1 | 9 | 0.1402 | 0 | 31 | 94 | 1.0252 | 9.95 × 10 | 22 | 68 | 0.91742 | 6.76 × 10 | 1 | 6 | 0.025797 | 0.00 × 10 |
| | | 1 | 10 | 0.37567 | 0 | 1 | 4 | 0.31981 | 0 | 1 | 6 | 0.090721 | 0 | 17 | 70 | 0.15727 | 7.83 × 10 |
| 10,000 | | 1 | 7 | 0.16721 | 0 | 28 | 84 | 3.1653 | 9.29 × 10 | 8 | 26 | 1.0706 | 1.92 × 10 | 16 | 66 | 0.67978 | 9.62 × 10 |
| | | 1 | 7 | 0.22336 | 0 | 30 | 91 | 2.688 | 9.63 × 10 | 8 | 26 | 0.99103 | 2.99 × 10 | 10 | 42 | 0.59915 | 3.62 × 10 |
| | | 1 | 7 | 0.18297 | 0 | 33 | 99 | 1.156 | 9.54 × 10 | 7 | 22 | 0.7447 | 9.96 × 10 | 1 | 6 | 0.36702 | 0.00 × 10 |
| | | 1 | 9 | 0.18923 | 0 | 32 | 96 | 1.6268 | 8.56 × 10 | 22 | 68 | 1.813 | 9.55 × 10 | 1 | 6 | 0.24564 | 0.00 × 10 |
| | | 1 | 10 | 0.44587 | 0 | 1 | 4 | 0.29106 | 0 | 1 | 6 | 0.13975 | 0 | 17 | 70 | 0.69621 | 7.83 × 10 |
| 50,000 | | 1 | 7 | 0.26981 | 0 | 28 | 84 | 4.6798 | 7.80 × 10 | 8 | 26 | 1.2019 | 4.28 × 10 | 15 | 62 | 0.73916 | 8.85 × 10 |
| | | 1 | 7 | 0.38483 | 0 | 30 | 90 | 3.8824 | 8.36 × 10 | 8 | 26 | 1.1829 | 6.68 × 10 | 10 | 42 | 0.87964 | 8.11 × 10 |
| | | 1 | 7 | 0.33872 | 0 | 32 | 97 | 3.3427 | 9.79 × 10 | 8 | 26 | 1.3301 | 1.44 × 10 | 1 | 6 | 0.39671 | 0.00 × 10 |
| | | 1 | 9 | 0.38542 | 0 | 33 | 99 | 3.6148 | 7.81 × 10 | 23 | 72 | 3.1315 | 8.72 × 10 | 1 | 6 | 0.44635 | 0.00 × 10 |
| | | 1 | 10 | 0.37461 | 0 | 1 | 4 | 0.34298 | 0 | 1 | 6 | 0.23315 | 0 | 17 | 70 | 1.799 | 7.83 × 10 |
| 100,000 | | 1 | 7 | 0.46499 | 0 | 27 | 82 | 4.8191 | 9.68 × 10 | 8 | 26 | 1.2872 | 6.06 × 10 | 15 | 61 | 1.2556 | 8.80 × 10 |
| | | 1 | 7 | 0.4386 | 0 | 29 | 88 | 5.0801 | 9.95 × 10 | 8 | 26 | 1.0233 | 9.45 × 10 | 11 | 45 | 0.99889 | 5.96 × 10 |
| | | 1 | 7 | 0.42428 | 0 | 32 | 96 | 5.153 | 9.54 × 10 | 8 | 26 | 1.2453 | 2.03 × 10 | 17 | 70 | 2.3563 | 7.83 × 10 |
| | | 1 | 9 | 0.75986 | 0 | 33 | 99 | 4.7671 | 8.20 × 10 | 24 | 74 | 3.4883 | 7.01 × 10 | 15 | 61 | 2.5622 | 8.80 × 10 |
| | | 1 | 10 | 0.82569 | 0 | 1 | 4 | 0.30469 | 0 | 1 | 6 | 0.44519 | 0 | 11 | 45 | 1.8746 | 5.96 × 10 |

| Dimension | Initial Point | TTCD NOI | TTCD NFE | TTCD TIME | TTCD Norm | PRPFR NOI | PRPFR NFE | PRPFR TIME | PRPFR Norm | MHS NOI | MHS NFE | MHS TIME | MHS Norm | MCDPM NOI | MCDPM NFE | MCDPM TIME | MCDPM Norm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | 5 | 17 | 0.04688 | 2.69 × | 41 | 122 | 0.09586 | 8.12 × | 10 | 29 | 0.02615 | 6.78 × | 8 | 23 | 0.02071 | 8.24 × |
| 1000 | | 5 | 17 | 0.0957 | 2.69 × 10 | 41 | 122 | 0.086591 | 8.12 × 10 | 10 | 29 | 0.035475 | 6.78 × 10 | 8 | 23 | 0.020611 | 8.24 × 10 |
| | | 5 | 17 | 0.018214 | 3.77 × 10 | 42 | 125 | 0.088716 | 8.01 × 10 | 10 | 29 | 0.02479 | 8.75 × 10 | 8 | 23 | 0.020254 | 6.96 × 10 |
| | | 5 | 16 | 0.01802 | 2.52 × 10 | 35 | 104 | 0.07553 | 9.94 × 10 | 10 | 28 | 0.02561 | 4.63 × 10 | 8 | 24 | 0.025511 | 3.73 × 10 |
| | | 5 | 16 | 0.017993 | 3.74 × 10 | 36 | 106 | 0.079168 | 8.80 × 10 | 10 | 28 | 0.026581 | 5.12 × 10 | 10 | 30 | 0.031719 | 4.77 × 10 |
| | | 5 | 16 | 0.017177 | 4.40 × 10 | 41 | 120 | 0.080882 | 9.67 × 10 | 12 | 33 | 0.031571 | 4.69 × 10 | 10 | 30 | 0.025475 | 4.77 × 10 |
| 5000 | | 5 | 17 | 0.051785 | 5.33 × 10 | 43 | 129 | 3.7411 | 9.94 × 10 | 11 | 32 | 1.4018 | 4.20 × 10 | 8 | 24 | 0.80266 | 3.29 × 10 |
| | | 5 | 17 | 0.44454 | 7.48 × 10 | 44 | 132 | 1.2991 | 9.84 × 10 | 11 | 32 | 1.614 | 5.45 × 10 | 8 | 24 | 0.83206 | 2.91 × 10 |
| | | 5 | 16 | 0.5018 | 4.77 × 10 | 38 | 112 | 1.12 | 9.91 × 10 | 10 | 28 | 0.73226 | 9.91 × 10 | 8 | 24 | 0.93838 | 3.52 × 10 |
| | | 5 | 16 | 0.62636 | 6.82 × 10 | 39 | 116 | 3.0834 | 9.78 × 10 | 10 | 29 | 0.9578 | 9.47 × 10 | 11 | 32 | 1.0369 | 5.29 × 10 |
| | | 5 | 16 | 0.67811 | 7.11 × 10 | 44 | 129 | 1.3612 | 9.31 × 10 | 12 | 34 | 1.2389 | 7.14 × 10 | 11 | 32 | 0.18547 | 5.29 × 10 |
| 10,000 | | 3 | 9 | 0.31947 | 6.46 × 10 | 45 | 134 | 1.6464 | 8.54 × 10 | 11 | 32 | 0.97057 | 5.94 × 10 | 8 | 24 | 0.92705 | 4.58 × 10 |
| | | 6 | 19 | 0.90193 | 1.78 × 10 | 46 | 137 | 2.6507 | 8.46 × 10 | 11 | 32 | 1.2003 | 7.69 × 10 | 8 | 24 | 0.99062 | 4.07 × 10 |
| | | 5 | 16 | 0.74091 | 6.60 × 10 | 40 | 118 | 3.5742 | 8.06 × 10 | 10 | 29 | 1.286 | 9.76 × 10 | 8 | 24 | 1.0536 | 3.49 × 10 |
| | | 5 | 16 | 0.35192 | 9.38 × 10 | 41 | 121 | 1.9318 | 8.57 × 10 | 11 | 31 | 1.126 | 5.46 × 10 | 11 | 32 | 1.1206 | 7.47 × 10 |
| | | 5 | 16 | 0.6133 | 9.62 × 10 | 45 | 132 | 1.691 | 9.98 × 10 | 13 | 36 | 1.1217 | 4.03 × 10 | 11 | 32 | 1.1223 | 7.47 × 10 |
| 50,000 | | 5 | 17 | 0.9371 | 4.25 × 10 | 48 | 143 | 6.2426 | 8.38 × 10 | 11 | 33 | 1.6664 | 9.28 × 10 | 9 | 26 | 1.3262 | 5.06 × 10 |
| | | 6 | 19 | 0.86941 | 3.93 × 10 | 49 | 146 | 4.7769 | 8.30 × 10 | 12 | 35 | 1.6582 | 4.81 × 10 | 8 | 24 | 1.3916 | 9.03 × 10 |
| | | 5 | 17 | 1.075 | 4.28 × 10 | 42 | 125 | 4.8675 | 9.85 × 10 | 11 | 31 | 1.6966 | 8.69 × 10 | 8 | 24 | 1.26 | 3.47 × 10 |
| | | 5 | 17 | 0.96344 | 6.06 × 10 | 44 | 130 | 4.2812 | 8.54 × 10 | 11 | 32 | 1.5693 | 8.67 × 10 | 11 | 33 | 1.8148 | 3.50 × 10 |
| | | 5 | 17 | 1.0274 | 6.12 × 10 | 48 | 141 | 4.9917 | 9.78 × 10 | 13 | 36 | 2.0201 | 8.98 × 10 | 11 | 33 | 2.1212 | 3.50 × 10 |
| 100,000 | | 5 | 17 | 1.289 | 5.99 × 10 | 49 | 146 | 6.8958 | 9.01 × 10 | 12 | 35 | 2.4472 | 5.25 × 10 | 9 | 26 | 1.9055 | 7.14 × 10 |
| | | 6 | 19 | 1.3907 | 5.55 × 10 | 50 | 149 | 5.8892 | 8.92 × 10 | 12 | 35 | 2.4688 | 6.80 × 10 | 9 | 26 | 2.0498 | 6.38 × 10 |
| | | 5 | 17 | 1.2553 | 6.03 × 10 | 44 | 130 | 5.987 | 8.47 × 10 | 11 | 32 | 2.5203 | 8.60 × 10 | 8 | 24 | 1.6286 | 3.47 × 10 |
| | | 5 | 17 | 1.5648 | 8.53 × 10 | 45 | 133 | 6.2888 | 9.20 × 10 | 12 | 34 | 2.6034 | 4.91 × 10 | 11 | 33 | 2.7362 | 4.94 × 10 |
| | | 5 | 17 | 1.7077 | 8.60 × 10 | 49 | 145 | 6.7405 | 9.98 × 10 | 13 | 37 | 2.6897 | 8.88 × 10 | 11 | 33 | 2.756 | 4.94 × 10 |

| Dimension | Initial Point | TTCD NOI | TTCD NFE | TTCD TIME | TTCD Norm | PRPFR NOI | PRPFR NFE | PRPFR TIME | PRPFR Norm | MHS NOI | MHS NFE | MHS TIME | MHS Norm | MCDPM NOI | MCDPM NFE | MCDPM TIME | MCDPM Norm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1000 | | 4 | 13 | 0.013892 | 7.77 × 10 | 47 | 141 | 0.092229 | 8.32 × 10 | 10 | 31 | 0.023258 | 9.32 × 10 | 1 | 5 | 0.007193 | 0 |
| | | 5 | 15 | 0.034216 | 2.72 × 10 | 49 | 147 | 0.089783 | 9.57 × 10 | 11 | 33 | 0.024557 | 7.35 × 10 | 1 | 5 | 0.007293 | 0 |
| | | 4 | 12 | 0.013768 | 2.51 × 10 | 52 | 157 | 0.098241 | 9.73 × 10 | 12 | 36 | 0.029441 | 4.66 × 10 | 1 | 5 | 0.007014 | 0 |
| | | 11 | 35 | 0.025443 | 6.74 × 10 | 56 | 168 | 0.10204 | 8.28 × 10 | 11 | 33 | 0.025179 | 7.77 × 10 | 1 | 5 | 0.006528 | 0 |
| | | 11 | 35 | 0.02495 | 6.74 × 10 | 56 | 168 | 0.099741 | 8.28 × 10 | 13 | 39 | 0.033871 | 6.13 × 10 | 1 | 5 | 0.00867 | 0 |
| 5000 | | 5 | 15 | 0.042458 | 3.48 × 10 | 50 | 150 | 4.4435 | 8.16 × 10 | 11 | 33 | 0.80606 | 8.34 × 10 | 1 | 5 | 0.17726 | 0 |
| | | 5 | 15 | 0.04373 | 6.08 × 10 | 52 | 156 | 1.391 | 9.40 × 10 | 12 | 36 | 0.93636 | 4.60 × 10 | 1 | 5 | 0.14127 | 0 |
| | | 4 | 12 | 0.10784 | 5.62 × 10 | 55 | 166 | 1.5696 | 9.55 × 10 | 12 | 37 | 1.4107 | 7.29 × 10 | 1 | 5 | 0.13105 | 0 |
| | | 12 | 37 | 0.27214 | 5.43 × 10 | 59 | 177 | 1.3227 | 8.13 × 10 | 12 | 36 | 0.97416 | 4.87 × 10 | 1 | 5 | 0.24773 | 0 |
| | | 12 | 37 | 0.13843 | 5.43 × 10 | 59 | 177 | 3.1086 | 8.13 × 10 | 13 | 40 | 0.99392 | 9.60 × 10 | 1 | 5 | 0.14151 | 0 |
| 10,000 | | 5 | 15 | 0.21752 | 4.92 × 10 | 51 | 153 | 3.0496 | 8.77 × 10 | 11 | 34 | 1.017 | 8.26 × 10 | 1 | 5 | 0.15359 | 0 |
| | | 5 | 15 | 0.10992 | 8.60 × 10 | 53 | 160 | 1.6167 | 9.59 × 10 | 12 | 36 | 0.9133 | 6.51 × 10 | 1 | 5 | 0.14256 | 0 |
| | | 4 | 12 | 0.22389 | 7.95 × 10 | 57 | 171 | 3.9475 | 8.21 × 10 | 13 | 39 | 1.1891 | 4.12 × 10 | 1 | 5 | 0.12853 | 0 |
| | | 12 | 37 | 0.20661 | 7.67 × 10 | 60 | 180 | 2.9248 | 8.73 × 10 | 12 | 36 | 1.3815 | 6.88 × 10 | 1 | 5 | 0.18035 | 0 |
| | | 12 | 37 | 0.12426 | 7.67 × 10 | 60 | 180 | 2.337 | 8.73 × 10 | 14 | 42 | 0.85581 | 5.43 × 10 | 1 | 5 | 0.096199 | 0 |
| 50,000 | | 5 | 16 | 0.40974 | 2.20 × 10 | 54 | 162 | 6.0399 | 8.61 × 10 | 12 | 36 | 0.96663 | 7.38 × 10 | 1 | 5 | 0.13193 | 0 |
| | | 5 | 16 | 0.32448 | 3.85 × 10 | 56 | 168 | 5.0229 | 9.91 × 10 | 13 | 39 | 1.0361 | 4.08 × 10 | 1 | 5 | 0.22266 | 0 |
| | | 4 | 13 | 0.2727 | 3.56 × 10 | 60 | 180 | 5.7702 | 8.06 × 10 | 13 | 39 | 1.3291 | 9.22 × 10 | 1 | 5 | 0.20316 | 0 |
| | | 13 | 40 | 0.88417 | 3.98 × 10 | 63 | 189 | 5.8126 | 8.57 × 10 | 13 | 39 | 1.928 | 4.31 × 10 | 1 | 5 | 0.22315 | 0 |
| | | 13 | 40 | 1.2791 | 3.98 × 10 | 63 | 189 | 5.6972 | 8.57 × 10 | 14 | 43 | 2.0536 | 8.50 × 10 | 1 | 5 | 0.18485 | 0 |
| 100,000 | | 5 | 16 | 2.6019 | 3.11 × 10 | 55 | 165 | 7.2494 | 9.26 × 10 | 12 | 37 | 2.5901 | 7.31 × 10 | 1 | 5 | 0.30837 | 0 |
| | | 5 | 16 | 0.50677 | 5.44 × 10 | 58 | 174 | 6.256 | 8.10 × 10 | 13 | 39 | 2.1932 | 5.77 × 10 | 1 | 5 | 0.2416 | 0 |
| | | 4 | 13 | 0.82086 | 5.03 × 10 | 61 | 183 | 6.9018 | 8.66 × 10 | 13 | 40 | 2.0052 | 9.13 × 10 | 1 | 5 | 0.22573 | 0 |
| | | 13 | 40 | 1.8095 | 5.63 × 10 | 64 | 192 | 7.3801 | 9.21 × 10 | 13 | 39 | 2.3259 | 6.10 × 10 | 1 | 5 | 0.29503 | 0 |
| | | 13 | 40 | 1.6028 | 5.63 × 10 | 64 | 192 | 7.0349 | 9.21 × 10 | 15 | 45 | 2.8882 | 4.81 × 10 | 1 | 5 | 0.27045 | 0 |

| Dimension | Initial Point | TTCD NOI | TTCD NFE | TTCD TIME | TTCD Norm | PRPFR NOI | PRPFR NFE | PRPFR TIME | PRPFR Norm | MHS NOI | MHS NFE | MHS TIME | MHS Norm | MCDPM NOI | MCDPM NFE | MCDPM TIME | MCDPM Norm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1000 | | 1 | 2 | 0.014977 | 0 | 1 | 2 | 0.008553 | 0 | 1 | 2 | 0.008269 | 0 | 20 | 81 | 0.063565 | 9.90 × 10 |
| | | 1 | 2 | 0.026176 | 0 | 1 | 2 | 0.008659 | 0 | 1 | 2 | 0.008284 | 0 | 1 | 3 | 0.00756 | 0 |
| | | 1 | 2 | 0.010477 | 0 | 1 | 2 | 0.007917 | 0 | 1 | 2 | 0.009784 | 0 | 1 | 3 | 0.008726 | 0 |
| | | 1 | 1 | 0.009676 | 0 | 1 | 4 | 0.008573 | 0 | 1 | 1 | 0.007314 | 0 | 9 | 26 | 0.028904 | 6.19 × 10 |
| | | 1 | 1 | 0.009758 | 0 | 1 | 4 | 0.007923 | 0 | 1 | 1 | 0.007787 | 0 | 9 | 26 | 0.026248 | 6.19 × 10 |
| 5000 | | 1 | 2 | 0.15403 | 0 | 1 | 2 | 0.24411 | 0 | 1 | 2 | 0.079542 | 0 | 22 | 88 | 2.2723 | 7.13 × 10 |
| | | 1 | 2 | 0.0779 | 0 | 1 | 2 | 0.30731 | 0 | 1 | 2 | 0.093345 | 0 | 1 | 3 | 0.12692 | 0 |
| | | 1 | 2 | 0.12547 | 0 | 1 | 2 | 0.25837 | 0 | 1 | 2 | 0.12491 | 0 | 1 | 3 | 0.092858 | 0 |
| | | 1 | 1 | 0.022494 | 0 | 1 | 4 | 0.26094 | 0 | 1 | 1 | 0.017496 | 0 | 9 | 27 | 1.3101 | 2.76 × 10 |
| | | 1 | 1 | 0.036342 | 0 | 1 | 4 | 0.35534 | 0 | 1 | 1 | 0.012998 | 0 | 9 | 27 | 1.0175 | 2.76 × 10 |
| 10,000 | | 1 | 2 | 0.10049 | 0 | 1 | 2 | 0.27088 | 0 | 1 | 2 | 0.18825 | 0 | 22 | 89 | 1.3107 | 6.62 × 10 |
| | | 1 | 2 | 0.14111 | 0 | 1 | 2 | 0.34003 | 0 | 1 | 2 | 0.11943 | 0 | 1 | 3 | 0.15195 | 0 |
| | | 1 | 2 | 0.11487 | 0 | 1 | 2 | 0.56146 | 0 | 1 | 2 | 0.13215 | 0 | 1 | 3 | 0.13622 | 0 |
| | | 1 | 1 | 0.031735 | 0 | 1 | 4 | 0.33398 | 0 | 1 | 1 | 0.017289 | 0 | 9 | 27 | 1.0115 | 3.91 × 10 |
| | | 1 | 1 | 0.033518 | 0 | 1 | 4 | 0.40247 | 0 | 1 | 1 | 0.037098 | 0 | 9 | 27 | 1.1561 | 3.91 × 10 |
| 50,000 | | 1 | 2 | 0.16977 | 0 | 1 | 2 | 0.43538 | 0 | 1 | 2 | 0.23996 | 0 | 23 | 93 | 4.2309 | 6.81 × 10 |
| | | 1 | 2 | 0.23495 | 0 | 1 | 2 | 0.44127 | 0 | 1 | 2 | 0.17726 | 0 | 1 | 3 | 0.23854 | 0 |
| | | 1 | 2 | 0.26851 | 0 | 1 | 2 | 0.29747 | 0 | 1 | 2 | 0.23986 | 0 | 1 | 3 | 0.14383 | 0 |
| | | 1 | 1 | 0.06572 | 0 | 1 | 4 | 0.29184 | 0 | 1 | 1 | 0.056298 | 0 | 9 | 27 | 1.6538 | 8.74 × 10 |
| | | 1 | 1 | 0.09506 | 0 | 1 | 4 | 0.46869 | 0 | 1 | 1 | 0.083448 | 0 | 9 | 27 | 1.5979 | 8.74 × 10 |
| 100,000 | | 1 | 2 | 0.45274 | 0 | 1 | 2 | 0.54185 | 0 | 1 | 2 | 0.28671 | 0 | 23 | 93 | 5.6623 | 9.63 × 10 |
| | | 1 | 2 | 0.33082 | 0 | 1 | 2 | 0.41999 | 0 | 1 | 2 | 0.31384 | 0 | 1 | 3 | 0.29981 | 0 |
| | | 1 | 2 | 0.33148 | 0 | 1 | 2 | 0.43823 | 0 | 1 | 2 | 0.3507 | 0 | 1 | 3 | 0.24454 | 0 |
| | | 1 | 1 | 0.14723 | 0 | 1 | 4 | 0.42649 | 0 | 1 | 1 | 0.15859 | 0 | 10 | 29 | 2.4583 | 6.18 × 10 |
| | | 1 | 1 | 0.14368 | 0 | 1 | 4 | 0.46397 | 0 | 1 | 1 | 0.095907 | 0 | 10 | 29 | 2.7612 | 6.18 × 10 |

| Dimension | Initial Point | TTCD NOI | TTCD NFE | TTCD TIME | TTCD Norm | PRPFR NOI | PRPFR NFE | PRPFR TIME | PRPFR Norm | MHS NOI | MHS NFE | MHS TIME | MHS Norm | MCDPM NOI | MCDPM NFE | MCDPM TIME | MCDPM Norm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1000 | | 1 | 4 | 0.01906 | 0 | 46 | 139 | 0.076367 | 9.75 × 10 | 10 | 31 | 0.022036 | 7.77 × 10 | 21 | 85 | 0.13186 | 7.15 × 10 |
| | | 1 | 4 | 0.010846 | 0 | 49 | 147 | 0.077697 | 8.44 × 10 | 11 | 33 | 0.022087 | 5.05 × 10 | 21 | 85 | 0.034548 | 7.15 × 10 |
| | | 2 | 8 | 0.038847 | 0 | 51 | 154 | 0.078987 | 9.57 × 10 | 11 | 33 | 0.021167 | 4.15 × 10 | 1 | 6 | 0.016759 | 0.00 × 10 |
| | | 1 | 7 | 0.014736 | 0 | 53 | 159 | 0.080936 | 9.18 × 10 | 1 | 5 | 0.007763 | 0 | 1 | 5 | 0.020092 | 0.00 × 10 |
| | | 1 | 9 | 0.009923 | 0 | 52 | 156 | 0.080606 | 9.60 × 10 | 47 | 143 | 0.072079 | 9.29 × 10 | 1 | 5 | 0.013008 | 2.22 × 10 |
| 5000 | | 1 | 4 | 0.10086 | 0 | 49 | 148 | 2.6231 | 9.57 × 10 | 11 | 33 | 0.4742 | 6.95 × 10 | 22 | 89 | 0.79118 | 7.38 × 10 |
| | | 1 | 4 | 0.10232 | 0 | 52 | 156 | 2.9497 | 8.29 × 10 | 11 | 34 | 0.84249 | 7.90 × 10 | 22 | 89 | 0.6661 | 7.38 × 10 |
| | | 2 | 8 | 0.27552 | 0 | 54 | 162 | 1.5443 | 9.89 × 10 | 11 | 33 | 1.1456 | 9.28 × 10 | 1 | 6 | 0.24211 | 0.00 × 10 |
| | | 1 | 7 | 0.2915 | 0 | 56 | 168 | 2.6752 | 9.01 × 10 | 1 | 5 | 0.15603 | 0 | 1 | 5 | 0.026622 | 0.00 × 10 |
| | | 1 | 9 | 0.21487 | 0 | 55 | 165 | 1.441 | 9.42 × 10 | 50 | 152 | 1.6409 | 8.44 × 10 | 1 | 5 | 0.07096 | 2.22 × 10 |
| 10,000 | | 1 | 4 | 0.16575 | 0 | 51 | 153 | 3.1945 | 8.23 × 10 | 11 | 33 | 1.0745 | 9.83 × 10 | 23 | 92 | 0.20473 | 7.31 × 10 |
| | | 1 | 4 | 0.1488 | 0 | 53 | 159 | 1.894 | 8.91 × 10 | 12 | 36 | 0.61285 | 4.47 × 10 | 23 | 92 | 0.22369 | 7.31 × 10 |
| | | 2 | 8 | 0.33583 | 0 | 56 | 168 | 1.7796 | 8.08 × 10 | 11 | 34 | 1.154 | 9.19 × 10 | 1 | 6 | 0.26087 | 0.00 × 10 |
| | | 1 | 7 | 0.17316 | 0 | 57 | 171 | 3.7837 | 9.68 × 10 | 1 | 5 | 0.040828 | 0 | 1 | 5 | 0.054397 | 0.00 × 10 |
| | | 1 | 9 | 0.27009 | 0 | 56 | 169 | 3.1847 | 9.62 × 10 | 51 | 155 | 1.9967 | 8.85 × 10 | 1 | 5 | 0.22579 | 2.22 × 10 |
| 50,000 | | 1 | 4 | 0.19745 | 0 | 54 | 162 | 4.8643 | 8.08 × 10 | 12 | 36 | 1.2212 | 6.16 × 10 | 24 | 96 | 1.3266 | 7.52 × 10 |
| | | 1 | 4 | 0.15177 | 0 | 56 | 168 | 4.4589 | 8.75 × 10 | 12 | 36 | 1.4127 | 9.99 × 10 | 24 | 96 | 0.98972 | 7.52 × 10 |
| | | 2 | 8 | 0.38192 | 0 | 58 | 175 | 5.0083 | 9.91 × 10 | 12 | 36 | 1.6327 | 8.22 × 10 | 1 | 6 | 0.34723 | 0.00 × 10 |
| | | 1 | 7 | 0.34086 | 0 | 60 | 180 | 4.9077 | 9.50 × 10 | 1 | 5 | 0.18248 | 0 | 1 | 5 | 0.093625 | 0.00 × 10 |
| | | 1 | 9 | 0.37025 | 0 | 59 | 177 | 5.0955 | 9.94 × 10 | 54 | 164 | 5.3971 | 8.04 × 10 | 1 | 5 | 0.095985 | 2.22 × 10 |
| 100,000 | | 1 | 4 | 0.37103 | 0 | 55 | 165 | 6.3816 | 8.68 × 10 | 12 | 36 | 1.6237 | 8.71 × 10 | 24 | 97 | 2.2859 | 6.99 × 10 |
| | | 1 | 4 | 0.22823 | 0 | 57 | 171 | 5.7132 | 9.40 × 10 | 12 | 37 | 1.8371 | 9.89 × 10 | 24 | 97 | 1.6916 | 6.99 × 10 |
| | | 2 | 8 | 0.54986 | 0 | 60 | 180 | 5.8032 | 8.52 × 10 | 12 | 37 | 2.0415 | 8.13 × 10 | 1 | 5 | 0.22741 | 2.22 × 10 |
| | | 1 | 7 | 0.38999 | 0 | 61 | 184 | 6.7707 | 9.70 × 10 | 1 | 5 | 0.35288 | 0 | 24 | 97 | 4.0911 | 6.99 × 10 |
| | | 1 | 9 | 0.54538 | 0 | 61 | 183 | 5.3886 | 8.12 × 10 | 55 | 167 | 7.2288 | 8.43 × 10 | 24 | 97 | 3.5739 | 6.99 × 10 |

| Dimension | Initial Point | TTCD NOI | TTCD NFE | TTCD TIME | TTCD Norm | PRPFR NOI | PRPFR NFE | PRPFR TIME | PRPFR Norm | MHS NOI | MHS NFE | MHS TIME | MHS Norm | MCDPM NOI | MCDPM NFE | MCDPM TIME | MCDPM Norm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1000 | | 20 | 61 | 0.10016 | 6.95 × 10 | 59 | 177 | 0.13948 | 8.09 × 10 | — | — | — | — | 28 | 113 | 0.061562 | 6.96 × 10 |
| | | 20 | 61 | 0.044003 | 4.82 × 10 | 58 | 175 | 0.12307 | 9.72 × 10 | — | — | — | — | 1001 | 4007 | 2.1789 | 2.0736 |
| | | 20 | 63 | 0.078728 | 6.21 × 10 | 58 | 174 | 0.15125 | 9.02 × 10 | — | — | — | — | 1001 | 4007 | 2.2257 | 2.2094 |
| | | 26 | 84 | 0.095104 | 7.13 × 10 | 56 | 168 | 0.12881 | 8.57 × 10 | 28 | 85 | 0.057195 | 8.82 × 10 | 13 | 39 | 0.030615 | 3.53 × 10 |
| | | 43 | 137 | 0.1255 | 9.23 × 10 | 54 | 162 | 0.12107 | 8.75 × 10 | 49 | 149 | 0.085025 | 8.88 × 10 | 39 | 156 | 0.078792 | 8.55 × 10 |
| 5000 | | 23 | 72 | 1.8365 | 6.89 × 10 | 61 | 184 | 4.4772 | 9.93 × 10 | 467 | 1407 | 7.9621 | 9.99 × 10 | 1001 | 4012 | 15.1572 | 1.8695 |
| | | 24 | 74 | 2.0428 | 7.36 × 10 | 61 | 184 | 1.6081 | 9.55 × 10 | — | — | — | — | 1001 | 4007 | 8.4921 | 5.2384 |
| | | 22 | 68 | 0.90321 | 5.53 × 10 | 61 | 183 | 1.8118 | 8.86 × 10 | — | — | — | — | 1001 | 4007 | 5.5223 | 5.8303 |
| | | 28 | 90 | 1.3633 | 6.81 × 10 | 59 | 177 | 1.9934 | 8.42 × 10 | 31 | 94 | 0.14181 | 8.73 × 10 | 16 | 48 | 0.55779 | 4.96 × 10 |
| | | 31 | 94 | 0.14181 | 8.73 × 10 | 57 | 171 | 2.3279 | 8.60 × 10 | 54 | 164 | 0.93908 | 8.26 × 10 | 41 | 164 | 1.2763 | 9.01 × 10 |
| 10,000 | | 24 | 75 | 0.1859 | 9.83 × 10 | 63 | 189 | 2.2762 | 8.54 × 10 | — | — | — | — | 1001 | 4012 | 17.0512 | 2.9693 |
| | | 25 | 77 | 0.96436 | 5.38 × 10 | 63 | 189 | 2.4627 | 8.22 × 10 | — | — | — | — | 1001 | 4007 | 13.237 | 7.5161 |
| | | 22 | 69 | 1.6272 | 8.21 × 10 | 62 | 186 | 2.2821 | 9.52 × 10 | — | — | — | — | 1001 | 4007 | 13.1732 | 8.3369 |
| | | 29 | 93 | 2.7352 | 6.67 × 10 | 60 | 180 | 2.3916 | 9.05 × 10 | 32 | 98 | 0.25086 | 9.07 × 10 | 33 | 122 | 0.95242 | 8.42 × 10 |
| | | 58 | 183 | 2.9637 | 9.49 × 10 | 58 | 174 | 2.8124 | 9.24 × 10 | 56 | 170 | 1.1133 | 8.88 × 10 | 42 | 168 | 1.9567 | 9.88 × 10 |
| 50,000 | | 24 | 75 | 3.044 | 9.79 × 10 | 66 | 198 | 7.6742 | 8.38 × 10 | 83 | 249 | 7.2083 | 9.80 × 10 | 1001 | 4013 | 49.0995 | 2.3302 |
| | | 75 | 234 | 9.3715 | 8.53 × 10 | 66 | 198 | 4.4765 | 8.06 × 10 | — | — | — | — | 1001 | 4007 | 46.1181 | 16.9985 |
| | | 23 | 71 | 2.6601 | 4.91 × 10 | 65 | 195 | 5.5787 | 9.35 × 10 | — | — | — | — | 1001 | 4007 | 45.8501 | 18.8076 |
| | | 31 | 99 | 3.4197 | 9.01 × 10 | 63 | 189 | 5.8094 | 8.89 × 10 | 35 | 107 | 1.2342 | 8.98 × 10 | 1001 | 4004 | 46.1648 | 1.9526 |
| | | 78 | 244 | 8.8083 | 9.48 × 10 | 61 | 183 | 6.2206 | 9.07 × 10 | 61 | 185 | 3.6487 | 9.97 × 10 | 47 | 187 | 3.4618 | 8.88 × 10 |
| 100,000 | | 25 | 77 | 3.8554 | 3.88 × 10 | 67 | 201 | 9.3143 | 9.01 × 10 | 86 | 257 | 9.9301 | 8.88 × 10 | 1001 | 4013 | 89.2803 | 5.5249 |
| | | — | — | — | — | 67 | 201 | 8.4452 | 8.67 × 10 | 658 | 1980 | 48.1837 | 9.99 × 10 | 1001 | 4007 | 87.5767 | 24.0734 |
| | | 23 | 71 | 1.6217 | 6.80 × 10 | 66 | 199 | 8.0706 | 9.54 × 10 | — | — | — | — | 1001 | 4007 | 87.0774 | 26.6312 |
| | | 32 | 102 | 3.5771 | 9.87 × 10 | 64 | 192 | 8.1187 | 9.55 × 10 | 37 | 112 | 2.5342 | 6.74 × 10 | 1001 | 4004 | 87.3123 | 2.9699 |
| | | 76 | 239 | 10.7224 | 9.84 × 10 | 62 | 186 | 7.8989 | 9.75 × 10 | 64 | 194 | 6.2405 | 8.21 × 10 | 49 | 196 | 6.0736 | 8.31 × 10 |

| Dimension | Initial Point | TTCD NOI | TTCD NFE | TTCD TIME | TTCD Norm | PRPFR NOI | PRPFR NFE | PRPFR TIME | PRPFR Norm | MHS NOI | MHS NFE | MHS TIME | MHS Norm | MCDPM NOI | MCDPM NFE | MCDPM TIME | MCDPM Norm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1000 | | 9 | 30 | 0.041724 | 3.51 × 10 | 7 | 18 | 0.053759 | 9.06 × 10 | 13 | 39 | 0.042277 | 7.68 × 10 | 21 | 85 | 0.056556 | 7.81 × 10 |
| | | 9 | 30 | 0.032227 | 2.07 × 10 | 7 | 18 | 0.018074 | 9.06 × 10 | 13 | 39 | 0.036789 | 7.38 × 10 | 22 | 88 | 0.053854 | 8.33 × 10 |
| | | 7 | 25 | 0.025897 | 3.53 × 10 | 7 | 18 | 0.017956 | 9.06 × 10 | 13 | 39 | 0.035082 | 6.50 × 10 | 22 | 88 | 0.056923 | 8.64 × 10 |
| | | 7 | 24 | 0.035928 | 3.30 × 10 | 7 | 18 | 0.017267 | 9.06 × 10 | 12 | 37 | 0.045048 | 8.93 × 10 | 22 | 88 | 0.057559 | 7.12 × 10 |
| | | 5 | 19 | 0.074749 | 5.32 × 10 | 7 | 18 | 0.01742 | 9.06 × 10 | 12 | 36 | 0.036863 | 7.52 × 10 | 22 | 88 | 0.06216 | 7.12 × 10 |
| 5000 | | 6 | 21 | 0.073893 | 7.59 × 10 | 6 | 16 | 0.74203 | 6.77 × 10 | 14 | 42 | 1.5985 | 4.81 × 10 | 22 | 89 | 1.785 | 8.05 × 10 |
| | | 6 | 21 | 0.067512 | 7.61 × 10 | 6 | 16 | 0.75625 | 6.77 × 10 | 14 | 42 | 1.399 | 4.63 × 10 | 23 | 92 | 1.668 | 8.58 × 10 |
| | | 6 | 21 | 0.067476 | 7.57 × 10 | 6 | 16 | 1.1359 | 6.77 × 10 | 14 | 42 | 1.2057 | 4.08 × 10 | 23 | 92 | 0.96043 | 8.91 × 10 |
| | | 6 | 21 | 0.071118 | 5.83 × 10 | 6 | 16 | 0.40613 | 6.77 × 10 | 13 | 39 | 0.80464 | 8.00 × 10 | 23 | 92 | 1.3392 | 7.33 × 10 |
| | | 6 | 21 | 0.070343 | 3.94 × 10 | 6 | 16 | 0.78932 | 6.77 × 10 | 13 | 39 | 1.3359 | 4.72 × 10 | 23 | 92 | 1.7417 | 7.33 × 10 |
| 10,000 | | 7 | 24 | 0.13363 | 3.32 × 10 | 7 | 19 | 0.78323 | 5.87 × 10 | 14 | 42 | 1.5384 | 6.81 × 10 | 23 | 92 | 1.7332 | 7.97 × 10 |
| | | 7 | 24 | 0.14005 | 3.43 × 10 | 7 | 19 | 0.95083 | 5.87 × 10 | 14 | 42 | 1.5111 | 6.55 × 10 | 23 | 93 | 2.24 | 7.98 × 10 |
| | | 6 | 21 | 0.11446 | 8.67 × 10 | 7 | 19 | 1.5381 | 5.87 × 10 | 14 | 42 | 1.4081 | 5.77 × 10 | 23 | 93 | 1.4625 | 8.28 × 10 |
| | | 5 | 18 | 0.11136 | 9.31 × 10 | 7 | 19 | 1.0212 | 5.87 × 10 | 13 | 40 | 1.089 | 7.92 × 10 | 23 | 93 | 1.2064 | 6.81 × 10 |
| | | 5 | 18 | 0.13666 | 6.22 × 10 | 7 | 19 | 1.6565 | 5.87 × 10 | 13 | 39 | 1.3239 | 6.67 × 10 | 23 | 93 | 0.54021 | 6.81 × 10 |
| 50,000 | | 5 | 19 | 0.43768 | 2.43 × 10 | 12 | 35 | 1.442 | 8.08 × 10 | 15 | 45 | 1.7295 | 4.26 × 10 | 24 | 96 | 2.2795 | 8.19 × 10 |
| | | 5 | 19 | 0.41969 | 2.39 × 10 | 12 | 35 | 2.4552 | 8.08 × 10 | 15 | 45 | 1.8359 | 4.10 × 10 | 24 | 97 | 2.9665 | 8.20 × 10 |
| | | 5 | 19 | 0.42038 | 2.26 × 10 | 12 | 35 | 1.7098 | 8.08 × 10 | 14 | 43 | 1.892 | 9.03 × 10 | 24 | 97 | 2.8791 | 8.52 × 10 |
| | | 5 | 18 | 0.40879 | 9.62 × 10 | 12 | 35 | 1.9967 | 8.08 × 10 | 14 | 42 | 2.2515 | 7.09 × 10 | 24 | 97 | 3.5014 | 7.01 × 10 |
| | | 5 | 18 | 0.41376 | 5.67 × 10 | 12 | 35 | 2.3075 | 8.08 × 10 | 14 | 42 | 2.3739 | 4.18 × 10 | 24 | 97 | 3.3654 | 7.01 × 10 |
| 100,000 | | 5 | 19 | 0.7687 | 2.76 × 10 | 18 | 55 | 3.6832 | 9.84 × 10 | 15 | 45 | 2.8995 | 6.03 × 10 | 24 | 97 | 4.8971 | 7.61 × 10 |
| | | 5 | 19 | 1.0646 | 2.66 × 10 | 18 | 55 | 3.2224 | 9.84 × 10 | 15 | 45 | 2.8558 | 5.80 × 10 | 25 | 100 | 4.5997 | 8.12 × 10 |
| | | 5 | 19 | 0.82346 | 2.35 × 10 | 18 | 55 | 3.1908 | 9.84 × 10 | 15 | 45 | 2.585 | 5.11 × 10 | 25 | 100 | 4.8136 | 8.43 × 10 |
| | | 5 | 19 | 1.1497 | 1.31 × 10 | 18 | 55 | 3.2991 | 9.84 × 10 | 14 | 43 | 2.6058 | 7.01 × 10 | 24 | 97 | 4.8878 | 9.91 × 10 |
| | | 5 | 18 | 0.7911 | 8.01 × 10 | 18 | 55 | 3.4264 | 9.84 × 10 | 14 | 42 | 2.7503 | 5.91 × 10 | 24 | 97 | 4.6876 | 9.91 × 10 |

| Dimension | Initial Point | TTCD NOI | TTCD NFE | TTCD TIME | TTCD Norm | PRPFR NOI | PRPFR NFE | PRPFR TIME | PRPFR Norm | MHS NOI | MHS NFE | MHS TIME | MHS Norm | MCDPM NOI | MCDPM NFE | MCDPM TIME | MCDPM Norm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1000 | | 7 | 26 | 0.038192 | 4.69 × 10 | 13 | 40 | 0.034355 | 8.78 × 10 | 10 | 31 | 0.026314 | 3.45 × 10 | 17 | 70 | 0.074282 | 8.77 × 10 |
| | | 7 | 26 | 0.027651 | 4.12 × 10 | 13 | 39 | 0.025373 | 7.19 × 10 | 9 | 29 | 0.022346 | 7.25 × 10 | 11 | 46 | 0.025641 | 5.26 × 10 |
| | | 6 | 23 | 0.017775 | 1.42 × 10 | 13 | 39 | 0.024113 | 6.85 × 10 | 7 | 23 | 0.018538 | 6.02 × 10 | 11 | 47 | 0.026905 | 3.38 × 10 |
| | | 7 | 26 | 0.022134 | 5.98 × 10 | 15 | 45 | 0.026703 | 5.54 × 10 | 10 | 31 | 0.025627 | 8.05 × 10 | 12 | 51 | 0.02692 | 7.22 × 10 |
| | | 8 | 28 | 0.020408 | 3.31 × 10 | 15 | 45 | 0.02498 | 7.95 × 10 | 10 | 30 | 0.024156 | 3.84 × 10 | 11 | 47 | 0.026337 | 8.31 × 10 |
| 5000 | | 8 | 29 | 0.10079 | 1.45 × 10 | 14 | 42 | 1.8974 | 8.52 × 10 | 10 | 31 | 1.1397 | 7.72 × 10 | 11 | 46 | 1.0893 | 5.78 × 10 |
| | | 7 | 26 | 0.062245 | 9.22 × 10 | 14 | 42 | 0.94525 | 5.16 × 10 | 10 | 31 | 0.96032 | 5.28 × 10 | 15 | 63 | 0.93147 | 5.24 × 10 |
| | | 6 | 23 | 0.059951 | 3.18 × 10 | 14 | 42 | 1.1388 | 4.92 × 10 | 8 | 25 | 0.99529 | 4.39 × 10 | 12 | 51 | 0.8147 | 3.59 × 10 |
| | | 8 | 29 | 0.06846 | 1.85 × 10 | 15 | 46 | 0.81809 | 9.15 × 10 | 11 | 34 | 1.1985 | 3.44 × 10 | 11 | 46 | 0.96572 | 6.44 × 10 |
| | | 8 | 28 | 0.066197 | 7.40 × 10 | 16 | 48 | 1.1876 | 5.71 × 10 | 10 | 30 | 0.8707 | 8.60 × 10 | 11 | 46 | 0.95789 | 5.72 × 10 |
| 10,000 | | 8 | 29 | 0.10914 | 2.06 × 10 | 14 | 43 | 0.87715 | 8.91 × 10 | 10 | 32 | 0.89477 | 6.40 × 10 | 12 | 50 | 0.97286 | 5.16 × 10 |
| | | 8 | 29 | 0.12 | 1.81 × 10 | 14 | 42 | 1.2638 | 7.30 × 10 | 10 | 31 | 1.1462 | 7.47 × 10 | 14 | 58 | 1.1714 | 7.59 × 10 |
| | | 6 | 23 | 0.1001 | 4.50 × 10 | 14 | 42 | 1.5877 | 6.96 × 10 | 8 | 25 | 0.37139 | 6.21 × 10 | 13 | 55 | 1.0799 | 4.13 × 10 |
| | | 8 | 29 | 0.3701 | 2.62 × 10 | 16 | 48 | 1.922 | 5.62 × 10 | 11 | 34 | 1.0558 | 4.87 × 10 | 12 | 50 | 1.4228 | 7.62 × 10 |
| | | 9 | 31 | 0.12085 | 1.45 × 10 | 16 | 48 | 1.4143 | 8.07 × 10 | 10 | 31 | 0.23049 | 7.13 × 10 | 12 | 50 | 1.106 | 5.76 × 10 |
| 50,000 | | 8 | 29 | 0.49017 | 4.60 × 10 | 15 | 45 | 2.619 | 8.66 × 10 | 11 | 34 | 1.3596 | 4.67 × 10 | 12 | 51 | 1.8309 | 6.78 × 10 |
| | | 8 | 29 | 0.45313 | 4.04 × 10 | 15 | 45 | 2.5553 | 5.24 × 10 | 10 | 32 | 1.3002 | 9.80 × 10 | 19 | 78 | 2.0413 | 9.64 × 10 |
| | | 7 | 26 | 0.39436 | 1.39 × 10 | 15 | 45 | 2.1702 | 5.00 × 10 | 8 | 26 | 1.2699 | 8.14 × 10 | 16 | 67 | 1.8872 | 2.99 × 10 |
| | | 8 | 29 | 0.43932 | 5.85 × 10 | 16 | 49 | 2.5299 | 9.30 × 10 | 11 | 35 | 1.8906 | 6.39 × 10 | 12 | 51 | 1.5517 | 7.17 × 10 |
| | | 9 | 31 | 0.51474 | 3.24 × 10 | 17 | 51 | 1.6011 | 5.80 × 10 | 11 | 33 | 1.4739 | 5.20 × 10 | 12 | 50 | 1.858 | 6.12 × 10 |
| 100,000 | | 8 | 29 | 0.86788 | 6.50 × 10 | 15 | 46 | 2.6286 | 9.05 × 10 | 11 | 34 | 2.2841 | 6.60 × 10 | 13 | 55 | 2.6612 | 4.51 × 10 |
| | | 8 | 29 | 0.86763 | 5.71 × 10 | 15 | 45 | 2.8049 | 7.41 × 10 | 11 | 34 | 2.1174 | 4.52 × 10 | 13 | 55 | 2.8656 | 4.36 × 10 |
| | | 7 | 26 | 0.76683 | 1.97 × 10 | 15 | 45 | 2.2822 | 7.07 × 10 | 9 | 28 | 2.0024 | 3.75 × 10 | 18 | 75 | 3.9286 | 2.65 × 10 |
| | | 8 | 29 | 1.2493 | 8.28 × 10 | 17 | 51 | 3.2253 | 5.71 × 10 | 11 | 35 | 2.3621 | 9.03 × 10 | 16 | 66 | 3.2361 | 4.79 × 10 |
| | | 9 | 31 | 0.9051 | 4.59 × 10 | 17 | 51 | 2.0118 | 8.20 × 10 | 11 | 33 | 2.3617 | 7.35 × 10 | 16 | 66 | 2.6618 | 4.97 × 10 |

| S/N | TTCD TIME | TTCD IT | TTCD MSE | PCG TIME | PCG IT | PCG MSE | Algorithm 2.1a TIME | Algorithm 2.1a IT | Algorithm 2.1a MSE | Algorithm 2.1b TIME | Algorithm 2.1b IT | Algorithm 2.1b MSE |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 2.31 | 131 | 2.85 × 10 | 2.72 | 150 | 2.20 × 10 | 3.11 | 176 | 2.77 × 10 | 2.41 | 136 | 3.01 × 10 |

| 2 | 3.02 | 97 | 2.81 × 10 | 3.97 | 119 | 4.90 × 10 | 4.58 | 138 | 5.03 × 10 | 4.70 | 132 | 4.05 × 10 |

| 3 | 3.05 | 178 | 1.01 × 10 | 3.35 | 186 | 2.70 × 10 | 3.75 | 177 | 1.05 × 10 | 2.38 | 125 | 1.35 × 10 |

| 4 | 2.92 | 105 | 3.18 × 10 | 4.08 | 133 | 3.98 × 10 | 4.61 | 121 | 3.18 × 10 | 5.09 | 146 | 2.92 × 10 |

| 5 | 2.72 | 182 | 4.55 × 10 | 3.00 | 184 | 2.05 × 10 | 3.61 | 206 | 2.33 × 10 | 2.83 | 172 | 2.43 × 10 |

| 6 | 1.84 | 93 | 1.71 × 10 | 3.02 | 128 | 9.50 × 10 | 3.54 | 123 | 1.17 × 10 | 4.63 | 153 | 2.68 × 10 |

| 7 | 3.54 | 110 | 2.98 × 10 | 5.19 | 140 | 4.31 × 10 | 4.59 | 135 | 4.21 × 10 | 4.77 | 169 | 3.74 × 10 |

| 8 | 3.31 | 101 | 5.47 × 10 | 3.57 | 127 | 4.85 × 10 | 4.73 | 139 | 4.37 × 10 | 5.82 | 145 | 3.96 × 10 |

| 9 | 2.11 | 145 | 1.71 × 10 | 2.66 | 152 | 4.09 × 10 | 3.80 | 171 | 1.48 × 10 | 2.25 | 159 | 1.47 × 10 |

| 10 | 2.34 | 91 | 2.21 × 10 | 4.01 | 124 | 3.29 × 10 | 3.39 | 111 | 3.07 × 10 | 2.65 | 142 | 2.89 × 10 |

| Average | 2.716 | 123.3 | 8.432 × 10 | 3.557 | 144.3 | 1.433 × 10 | 3.971 | 149.7 | 9.792 × 10 | 3.753 | 147.9 | 1.099 × 10 |
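
The Average row of the signal reconstruction table can be checked directly from the ten experiments listed above it. The sketch below is only an illustration: the values are copied from the TIME and IT columns of that table, while the dictionary layout and the helper name `column_means` are assumptions introduced here and are not part of the original experiments. The MSE column is left out.

```python
# TIME and IT (iteration count) for the ten experiments, copied from the table above.
# The data layout and the helper name `column_means` are illustrative assumptions.
results = {
    "TTCD": {
        "time": [2.31, 3.02, 3.05, 2.92, 2.72, 1.84, 3.54, 3.31, 2.11, 2.34],
        "it": [131, 97, 178, 105, 182, 93, 110, 101, 145, 91],
    },
    "PCG": {
        "time": [2.72, 3.97, 3.35, 4.08, 3.00, 3.02, 5.19, 3.57, 2.66, 4.01],
        "it": [150, 119, 186, 133, 184, 128, 140, 127, 152, 124],
    },
    "Algorithm 2.1a": {
        "time": [3.11, 4.58, 3.75, 4.61, 3.61, 3.54, 4.59, 4.73, 3.80, 3.39],
        "it": [176, 138, 177, 121, 206, 123, 135, 139, 171, 111],
    },
    "Algorithm 2.1b": {
        "time": [2.41, 4.70, 2.38, 5.09, 2.83, 4.63, 4.77, 5.82, 2.25, 2.65],
        "it": [136, 132, 125, 146, 172, 153, 169, 145, 159, 142],
    },
}


def column_means(table):
    """Return the arithmetic mean of every column, rounded to three decimals."""
    return {
        method: {col: round(sum(vals) / len(vals), 3) for col, vals in cols.items()}
        for method, cols in table.items()
    }


averages = column_means(results)
for method, avg in averages.items():
    print(f"{method:15s} TIME={avg['time']:.3f}  IT={avg['it']:.1f}")
```

Under these assumptions the computed means agree with the Average row for all four methods, and TTCD has the smallest mean TIME and the smallest mean IT of the four.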


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yusuf, A.; Manjak, N.H.; Aphane, M. A Modified Three-Term Conjugate Descent Derivative-Free Method for Constrained Nonlinear Monotone Equations and Signal Reconstruction Problems. Mathematics 2024, 12, 1649. https://doi.org/10.3390/math12111649
