Abstract

In this paper, a Bayesian estimation procedure based on the mixed Gibbs sampling algorithm is proposed for a new Pareto-type distribution in the case of complete and type II censored samples. Simulation studies show that, for small samples, the proposed method consistently outperforms maximum likelihood estimation in terms of mean squared error. An analysis of two real datasets is also provided to illustrate the Bayesian estimation.

Keywords:

new Pareto-type distribution; Bayesian estimation; the mixed Gibbs sampling algorithm; Gibbs sampling algorithm; Metropolis–Hastings algorithm

MSC:

62H20; 62H30

1. Introduction

As an important statistical inference method, the Bayesian method has been widely applied and developed in statistics and artificial intelligence. Especially with the rise of big data and machine learning in the early 21st century, it has received increasing attention. Researchers have improved the efficiency and accuracy of Bayesian methods by developing new algorithms and tools, and have applied them in fields such as computer vision, natural language processing, and bioinformatics. Recent examples include an evolutionary nested sampling algorithm for Bayesian model updating and model selection [1], efficient sampling and structure learning of Bayesian networks [2], and a new Gibbs sampler for the Bayesian lasso [3].

The Pareto distribution [4] has a decreasing failure rate function and has been widely applied to reliability, personal income, stock price fluctuations, and other areas. The origin and some applications of the Pareto distribution can be found in Johnson et al. [5]. Bourguignon et al. [6] proposed a new Pareto-type (NP) distribution, which generalizes the Pareto distribution and is often used to analyze income and reliability data. Raof et al. [7] used the NP distribution to model the incomes of the upper-class group in Malaysia. Karakaya et al. [8] proposed a generalization of the NP distribution that incorporates a truncation parameter. Sarabia et al. [9] derived further properties of this distribution, together with simpler expressions for relevant inequality indices and risk measures. Nik et al. [10] estimated the parameters of the NP distribution from both the classical and the Bayesian perspective, computing the Bayesian estimates by importance sampling. Nik et al. [11] studied parameter estimation for progressively type II censored samples from the NP distribution, as well as prediction of the failure times of the units removed at the various censoring stages, using a Bayesian method based on Markov chain Monte Carlo (MCMC) to estimate the unknown parameters. Soliman [12] studied the estimation of the reliability function in a generalized life model and obtained Bayes estimates under a symmetric loss function (squared error loss) and asymmetric loss functions (LINEX loss and general entropy (GE) loss). These estimates, computed with Lindley's approximation, were compared with the maximum likelihood estimates (MLEs) for the Burr-XII model. Furthermore, Soliman [12] studied Bayesian estimation of the parameter of interest under the assumption that the other parameters are known. In practice, however, all of the parameters are often unknown.
Soliman [13] gave an approximate formula for Bayesian estimation; however, its derivation is complicated and difficult to follow. Soliman's work illustrates that, in practice, Bayesian methods must cope with high-dimensional and complex models and with large datasets. Improving the MCMC algorithm is therefore an effective way to increase the efficiency and accuracy of Bayesian computation.

The Gibbs sampling algorithm was originally proposed by Geman and Geman [14]. In recent years, with the development of big data analysis, artificial intelligence, and machine learning, Gibbs sampling has become an increasingly common tool for Bayesian estimation. Ahmed [15] proposed two methods for predicting the censored units in progressive type II censored samples, assuming that the lifetimes follow the two-parameter NP distribution. Point and interval estimates of the unknown NP parameters were obtained by maximum likelihood and Bayesian methods; since the Bayesian estimators cannot be expressed explicitly, Gibbs sampling and MCMC techniques were used for the Bayesian computation. Ibrahim et al. [16] proposed a discrete analogue of the Weibull G family and gave a Bayesian estimation procedure under the squared error loss function. Furthermore, they compared non-Bayesian estimation with Bayesian estimation based on Gibbs sampling and the Metropolis–Hastings algorithm.

For the NP distribution, Nik et al. [10] proposed several classical estimation methods and a Bayesian estimator based on importance sampling. However, the efficiency of importance sampling is often low in high-dimensional settings, so more efficient methods are desirable. In this work, we use the mixed Gibbs sampling algorithm to estimate the parameters of the NP distribution from complete and type II censored samples.

The Gibbs sampling algorithm can handle some complex Bayesian analysis problems directly and has become a useful tool in Bayesian statistics. By constructing a Markov chain whose equilibrium distribution is the posterior distribution and iterating until the chain reaches equilibrium, the Gibbs sampling algorithm produces samples from the posterior distribution. However, it also has shortcomings. For instance, under some prior distributions, the full conditional posterior distribution of a parameter may be difficult to sample from directly. The Gibbs sampling algorithm can therefore be combined with other sampling methods to keep the sampling efficient and stable. Such methods include the Metropolis algorithm, importance sampling, and accept–reject sampling; among them, the Metropolis algorithm is relatively simple and flexible. Hastings [17] generalized the Metropolis algorithm, and the result is known as the Metropolis–Hastings algorithm. However, the Metropolis–Hastings algorithm can be time consuming and inefficient in high-dimensional cases, and a proposal may be rejected after a costly computation. A combination of the Gibbs sampling algorithm and the Metropolis–Hastings algorithm, i.e., the mixed Gibbs sampling algorithm, can overcome these shortcomings of the Metropolis–Hastings algorithm while exploiting the respective advantages of both algorithms. Thus, we use the mixed Gibbs sampling algorithm to develop a Bayesian estimation procedure for the NP distribution with complete and type II censored samples.

The rest of this paper is organized as follows. Section 2 derives the MLEs of the NP distribution parameters. In Section 3, we propose a Bayesian estimation procedure for the NP distribution with complete and type II censored samples using the mixed Gibbs sampling algorithm. Simulation studies and real data analyses are presented in Section 4. Section 5 gives a brief discussion and conclusions.

2. Maximum Likelihood Estimation of NP Distribution

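Suppose X is a random variable that follows the NP distribution with a shape parameter α and a scale parameter β, that is, X ~ NP(α, β). The cumulative distribution function (CDF) of X is given as

$$F(x;\alpha,\beta)=1-\frac{2\beta^{\alpha}}{x^{\alpha}+\beta^{\alpha}},\qquad x\ge\beta,\ \alpha>0,\ \beta>0.\tag{1}$$

The probability density function (PDF) of X is

$$f(x;\alpha,\beta)=\frac{2\alpha\beta^{\alpha}x^{\alpha-1}}{\left(x^{\alpha}+\beta^{\alpha}\right)^{2}},\qquad x\ge\beta.\tag{2}$$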

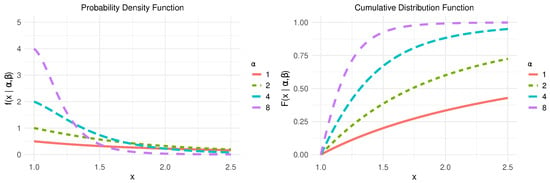

The PDF and CDF diagrams are shown in Figure 1.

Figure 1.

PDF and CDF of NP(α, β) for several values of α and β.
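As a quick numerical check of (1) and (2), the following Python sketch evaluates the CDF and PDF and confirms that the PDF integrates to the CDF. The function names `np_cdf` and `np_pdf` are illustrative, not from the paper. Both functions are written in terms of r = (β/x)^α, which lies in (0, 1] on the support and therefore avoids overflow for large α.

```python
import math


def np_cdf(x: float, alpha: float, beta: float) -> float:
    """CDF (1) of NP(alpha, beta): 1 - 2*beta^alpha / (x^alpha + beta^alpha) for x >= beta."""
    if x <= beta:
        return 0.0
    r = (beta / x) ** alpha  # in (0, 1] on the support, so no overflow for large alpha
    return 1.0 - 2.0 * r / (1.0 + r)


def np_pdf(x: float, alpha: float, beta: float) -> float:
    """PDF (2): 2*alpha*beta^alpha*x^(alpha-1) / (x^alpha + beta^alpha)^2 for x >= beta."""
    if x < beta:
        return 0.0
    r = (beta / x) ** alpha
    # Dividing numerator and denominator of (2) by x^(2*alpha) gives 2*alpha*r / (x*(1+r)^2)
    return 2.0 * alpha * r / (x * (1.0 + r) ** 2)


# Midpoint-rule check that the PDF integrates to the CDF on [beta, 3] for alpha = 2, beta = 1
steps = 20000
h = (3.0 - 1.0) / steps
area = sum(np_pdf(1.0 + (k + 0.5) * h, 2.0, 1.0) for k in range(steps)) * h
print(area, np_cdf(3.0, 2.0, 1.0))  # both close to 0.8
```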

It should be highlighted that, compared with the Pareto distribution and several of its generalizations, the NP distribution has the following advantages: (1) it can exhibit either an upside-down bathtub or a decreasing hazard rate function, depending on the values of its parameters; (2) it is mathematically simple, since its PDF and CDF have simple closed forms, in contrast to some generalizations of the Pareto distribution that involve special functions such as the log, beta, and gamma functions; and (3) it has only two parameters, unlike some generalizations of the Pareto distribution that have three or four. Accordingly, we assume in this work that the lifetime X of the product follows the NP distribution. The MLEs of the NP distribution parameters are derived below for comparison with the Bayesian estimates.
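Advantage (1) can be made concrete. Dividing (2) by 1 − F(x) = 2β^α/(x^α + β^α) gives the hazard rate h(x) = αx^(α−1)/(x^α + β^α), and h′(x) has the sign of (α − 1)β^α − x^α. On x ≥ β the hazard is therefore decreasing when α ≤ 2, and upside-down bathtub shaped with its peak at x = β(α − 1)^(1/α) when α > 2. The Python sketch below (function names are illustrative) checks this numerically on a grid.

```python
import math


def np_hazard(x: float, alpha: float, beta: float) -> float:
    """Hazard f(x) / (1 - F(x)) = alpha*x^(alpha-1) / (x^alpha + beta^alpha), for x >= beta."""
    r = (beta / x) ** alpha
    return alpha / (x * (1.0 + r))


def hazard_peak(alpha: float, beta: float) -> float:
    """Location of the hazard maximum on [beta, inf): interior mode if alpha > 2, else x = beta."""
    return beta * (alpha - 1.0) ** (1.0 / alpha) if alpha > 2.0 else beta


grid = [1.0 + k * 1e-4 for k in range(50001)]  # x in [1, 6] with beta = 1
for a in (1.5, 4.0):
    values = [np_hazard(x, a, 1.0) for x in grid]
    argmax = grid[max(range(len(grid)), key=values.__getitem__)]
    print(a, round(argmax, 3), round(hazard_peak(a, 1.0), 3))
```

For α = 1.5 the grid maximum sits at the left end x = β, while for α = 4 it matches the interior mode 3^(1/4).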

2.1. Complete Samples Case

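In the case of complete samples, suppose n products are put on a life test and the ordered failure times x_(1) ≤ x_(2) ≤ ⋯ ≤ x_(n) are observed. The log-likelihood function can be written as

$$\ell(\alpha,\beta)=n\ln2+n\ln\alpha+n\alpha\ln\beta+(\alpha-1)\sum_{i=1}^{n}\ln x_{(i)}-2\sum_{i=1}^{n}\ln\left(x_{(i)}^{\alpha}+\beta^{\alpha}\right),\qquad0<\beta\le x_{(1)}.$$

Since β ≤ x_(i) implies β^α/(x_(i)^α + β^α) ≤ 1/2 for every i,

$$\frac{\partial\ell}{\partial\beta}=\frac{\alpha}{\beta}\left[n-2\sum_{i=1}^{n}\frac{\beta^{\alpha}}{x_{(i)}^{\alpha}+\beta^{\alpha}}\right]\ge0,$$

so ℓ increases monotonically with β; that is, the larger the value of β, the larger the value of ℓ. Since β ≤ x_(1), the MLE of β is β̂ = x_(1). Thus, the MLE α̂ of α is the solution of Equation (3):

$$\frac{n}{\alpha}+n\ln\hat{\beta}+\sum_{i=1}^{n}\ln x_{(i)}-2\sum_{i=1}^{n}\frac{x_{(i)}^{\alpha}\ln x_{(i)}+\hat{\beta}^{\alpha}\ln\hat{\beta}}{x_{(i)}^{\alpha}+\hat{\beta}^{\alpha}}=0.\tag{3}$$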
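Equation (3) has no closed-form solution, but it is easy to solve numerically. Differentiating once more gives ∂²ℓ/∂α² = −n/α² − 2Σ x_(i)^α β^α (ln x_(i) − ln β)²/(x_(i)^α + β^α)² < 0, so the left-hand side of (3) is strictly decreasing in α and has exactly one root whenever the observations are not all equal; bisection is therefore sufficient. The sketch below is a minimal illustration with hypothetical function names, not the authors' R code. It rewrites each fraction in (3) with q = (β/x)^α ≤ 1 to avoid overflow.

```python
import math
import random


def score_alpha(alpha, x, beta):
    """Left-hand side of (3), using (x^a ln x + b^a ln b)/(x^a + b^a) = (ln x + q ln b)/(1 + q), q = (b/x)^a."""
    n = len(x)
    lb = math.log(beta)
    total = n / alpha + n * lb
    for xi in x:
        q = (beta / xi) ** alpha
        total += math.log(xi) - 2.0 * (math.log(xi) + q * lb) / (1.0 + q)
    return total


def mle_complete(x, tol=1e-12):
    """beta_hat = x_(1); alpha_hat is the unique root of (3), found by bisection (the score decreases in alpha)."""
    beta_hat = min(x)
    if max(x) == beta_hat:
        raise ValueError("alpha_hat is not defined when all observations are equal")
    lo, hi = 1e-8, 1.0
    while score_alpha(hi, x, beta_hat) > 0.0:  # expand the bracket until the score turns negative
        lo, hi = hi, 2.0 * hi
    while hi - lo > tol * hi:
        mid = 0.5 * (lo + hi)
        if score_alpha(mid, x, beta_hat) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi), beta_hat


# Demo on simulated NP(2, 1) data, drawn by inverse transform x = beta*((1+u)/(1-u))^(1/alpha)
rng = random.Random(1)
data = [((1.0 + u) / (1.0 - u)) ** 0.5 for u in (rng.random() for _ in range(5000))]
alpha_hat, beta_hat = mle_complete(data)
print(alpha_hat, beta_hat)
```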

2.2. Type II Censored Samples Case

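In the case of type II censored samples, suppose n products are put on a life test, the test stops once r products have failed, and the ordered failure times x_(1) ≤ x_(2) ≤ ⋯ ≤ x_(r) are observed. The unknown parameters are estimated by maximum likelihood below. To obtain the likelihood function, we need the probabilities of the following events.

(1) The probability that a product fails in the small interval (x_(i), x_(i) + Δx_(i)] is approximately f(x_(i))Δx_(i), i = 1, 2, …, r.

(2) The probability that the lifetimes of the remaining n − r products all exceed x_(r) is [1 − F(x_(r))]^(n−r).

Thus, the probability of the above observation is approximately

$$C\prod_{i=1}^{r}\left[f(x_{(i)})\,\Delta x_{(i)}\right]\left[1-F(x_{(r)})\right]^{n-r},\qquad C=\frac{n!}{(n-r)!},$$

where C is a constant. Ignoring constant factors (including the Δx_(i)) does not affect the MLEs of α and β, so the likelihood function can be taken as

$$L(\alpha,\beta)=\prod_{i=1}^{r}f(x_{(i)})\left[1-F(x_{(r)})\right]^{n-r}=2^{n}\alpha^{r}\beta^{n\alpha}\prod_{i=1}^{r}\frac{x_{(i)}^{\alpha-1}}{\left(x_{(i)}^{\alpha}+\beta^{\alpha}\right)^{2}}\left(x_{(r)}^{\alpha}+\beta^{\alpha}\right)^{-(n-r)}.$$

Thus, the log-likelihood function is

$$\ell(\alpha,\beta)=n\ln2+r\ln\alpha+n\alpha\ln\beta+(\alpha-1)\sum_{i=1}^{r}\ln x_{(i)}-2\sum_{i=1}^{r}\ln\left(x_{(i)}^{\alpha}+\beta^{\alpha}\right)-(n-r)\ln\left(x_{(r)}^{\alpha}+\beta^{\alpha}\right).$$

Similarly, since each fraction β^α/(x_(i)^α + β^α) is at most 1/2,

$$\frac{\partial\ell}{\partial\beta}=\frac{\alpha}{\beta}\left[n-2\sum_{i=1}^{r}\frac{\beta^{\alpha}}{x_{(i)}^{\alpha}+\beta^{\alpha}}-(n-r)\frac{\beta^{\alpha}}{x_{(r)}^{\alpha}+\beta^{\alpha}}\right]\ge0,$$

so ℓ increases in β and the MLE of β is β̂ = x_(1). Therefore, the MLE α̂ of α is the solution of Equation (5):

$$\frac{r}{\alpha}+n\ln\hat{\beta}+\sum_{i=1}^{r}\ln x_{(i)}-2\sum_{i=1}^{r}\frac{x_{(i)}^{\alpha}\ln x_{(i)}+\hat{\beta}^{\alpha}\ln\hat{\beta}}{x_{(i)}^{\alpha}+\hat{\beta}^{\alpha}}-(n-r)\frac{x_{(r)}^{\alpha}\ln x_{(r)}+\hat{\beta}^{\alpha}\ln\hat{\beta}}{x_{(r)}^{\alpha}+\hat{\beta}^{\alpha}}=0.\tag{5}$$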
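Equation (5) can be solved in the same way, since its left-hand side is again strictly decreasing in α. The sketch below (function names are illustrative, not from the paper) takes the r observed failure times and the number n of units on test; the n − r survivors enter only through x_(r).

```python
import math
import random


def score_alpha_censored(alpha, x_obs, n, beta):
    """Left-hand side of (5); x_obs holds the r smallest ordered lifetimes out of n units."""
    r = len(x_obs)
    lb = math.log(beta)

    def weighted_log(xi):
        # (x^a ln x + b^a ln b) / (x^a + b^a), rewritten with q = (b/x)^a <= 1 to avoid overflow
        q = (beta / xi) ** alpha
        return (math.log(xi) + q * lb) / (1.0 + q)

    total = r / alpha + n * lb
    total += sum(math.log(xi) - 2.0 * weighted_log(xi) for xi in x_obs)
    total -= (n - r) * weighted_log(x_obs[-1])  # the n - r survivors are censored at x_(r)
    return total


def mle_type2(x_obs, n, tol=1e-12):
    """beta_hat = x_(1); alpha_hat solves (5) by bisection."""
    x_obs = sorted(x_obs)
    beta_hat = x_obs[0]
    if x_obs[-1] == beta_hat:
        raise ValueError("need x_(r) > x_(1)")

    def score(a):
        return score_alpha_censored(a, x_obs, n, beta_hat)

    lo, hi = 1e-8, 1.0
    while score(hi) > 0.0:  # expand the bracket until the score turns negative
        lo, hi = hi, 2.0 * hi
    while hi - lo > tol * hi:
        mid = 0.5 * (lo + hi)
        if score(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi), beta_hat


rng = random.Random(7)
sample = sorted(((1.0 + u) / (1.0 - u)) ** 0.5 for u in (rng.random() for _ in range(4000)))  # NP(2, 1)
x_obs = sample[:2000]  # type II censoring: keep the r = 2000 smallest of n = 4000
alpha_hat, beta_hat = mle_type2(x_obs, n=4000)
print(alpha_hat, beta_hat)
```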

3. Bayesian Estimation of NP Distribution

The Bayesian estimations of the NP distribution under complete samples and type II censored samples are given below.

3.1. Complete Samples Case

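In the case of complete samples, suppose n products are put on a life test and the ordered failure times x = (x_(1), x_(2), …, x_(n)) are observed. When the parameters α and β are unknown, we assume a joint prior distribution π(α, β) for α and β, given in (6).

Then, by Bayes' theorem, the posterior distribution is

$$\pi(\alpha,\beta\mid\boldsymbol{x})\propto\pi(\alpha,\beta)\,\alpha^{n}\beta^{n\alpha}\prod_{i=1}^{n}\frac{x_{(i)}^{\alpha-1}}{\left(x_{(i)}^{\alpha}+\beta^{\alpha}\right)^{2}},\qquad\alpha>0,\ 0<\beta\le x_{(1)}.\tag{7}$$

Thus, the full conditional posterior distribution of α is

$$\pi(\alpha\mid\beta,\boldsymbol{x})\propto\pi(\alpha,\beta)\,\alpha^{n}\beta^{n\alpha}\prod_{i=1}^{n}\frac{x_{(i)}^{\alpha-1}}{\left(x_{(i)}^{\alpha}+\beta^{\alpha}\right)^{2}},\qquad\alpha>0,\tag{8}$$

and the full conditional posterior distribution of β is

$$\pi(\beta\mid\alpha,\boldsymbol{x})\propto\pi(\alpha,\beta)\,\beta^{n\alpha}\prod_{i=1}^{n}\left(x_{(i)}^{\alpha}+\beta^{\alpha}\right)^{-2},\qquad0<\beta\le x_{(1)}.\tag{9}$$

According to (8) and (9), neither π(α | β, x) nor π(β | α, x) is a standard distribution in the case of complete samples, so it is difficult to sample from them directly in practice. However, the mixed Gibbs sampling algorithm does not require the full conditional posterior distributions to have explicit standard forms, and it can be used with different prior distributions. In this work, we consider the prior distribution (6). For Bayesian estimation of the NP distribution parameters with complete samples, the iterative steps of the mixed Gibbs sampling algorithm are as follows.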

- Step 0

- Choose an initial value (α^(0), β^(0)) of (α, β). Denote the value at the ith iteration by (α^(i), β^(i)). Then, the value at the (i + 1)th iteration is obtained through Step 1 and Step 2 below.

- Step 1

- Obtain α^(i+1) from π(α | β^(i), x) in (8) using the Metropolis–Hastings algorithm, which consists of the following four sub-steps (1)–(4).

- (1)

- Draw a candidate α* from the normal distribution N(α_c, σ_α²), where α_c is the current state and σ_α is the standard deviation. If α* ≤ 0, draw a new candidate.

- (2)

- Calculate the acceptance probability

$$p_{\alpha}=\min\left\{1,\ \frac{\pi(\alpha^{*}\mid\beta^{(i)},\boldsymbol{x})}{\pi(\alpha_{c}\mid\beta^{(i)},\boldsymbol{x})}\right\}.\tag{10}$$

- (3)

- Generate a random number u from the uniform distribution U(0, 1), and update the state according to (11):

$$\alpha_{\text{new}}=\begin{cases}\alpha^{*}, & u\le p_{\alpha},\\ \alpha_{c}, & u>p_{\alpha}.\end{cases}\tag{11}$$

- (4)

- Set α_c = α_new, then return to Step 1(1).

The resulting sequence of α values forms a Markov chain. Step 1 ends when this chain reaches equilibrium, which yields α^(i+1).

- Step 2

- Obtain β^(i+1) from π(β | α^(i+1), x) in (9) using the Metropolis–Hastings algorithm.

- (1)

- Draw a candidate β* from N(β_c, σ_β²), where β_c is the current state and σ_β is the standard deviation. If β* ≤ 0 or β* > x_(1), draw a new candidate.

- (2)

- Calculate the acceptance probability

$$p_{\beta}=\min\left\{1,\ \frac{\pi(\beta^{*}\mid\alpha^{(i+1)},\boldsymbol{x})}{\pi(\beta_{c}\mid\alpha^{(i+1)},\boldsymbol{x})}\right\}.\tag{12}$$

- (3)

- Generate a random number u from U(0, 1), and update the state according to (13):

$$\beta_{\text{new}}=\begin{cases}\beta^{*}, & u\le p_{\beta},\\ \beta_{c}, & u>p_{\beta}.\end{cases}\tag{13}$$

- (4)

- Set β_c = β_new, then return to Step 2(1).

Similarly, the resulting sequence of β values forms a Markov chain, and β^(i+1) is obtained when this chain reaches equilibrium.

- Step 3

- Set i = i + 1, and repeat Step 1 and Step 2 in sequence until the Markov chains reach equilibrium.
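The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' R implementation: (i) the prior π(α, β) ∝ 1/(αβ) is a hypothetical stand-in for prior (6); (ii) each Gibbs sweep performs a single Metropolis–Hastings update for α and one for β (the usual Metropolis-within-Gibbs scheme) instead of running each sub-chain to equilibrium; (iii) the starting values and proposal scales are arbitrary. Because a candidate is redrawn until it falls in the support, the proposal is a truncated normal, so the sketch multiplies the Metropolis ratio by the Hastings correction Z(current)/Z(candidate), where Z(c) is the probability that an N(c, σ²) draw lands in the support. This factor is close to 1 unless the state lies within a few σ of a boundary.

```python
import math
import random


def np_loglik(alpha, beta, x):
    """Complete-sample NP log-likelihood without the n*ln(2) constant; -inf outside the support."""
    if alpha <= 0.0 or beta <= 0.0 or beta > min(x):
        return -math.inf
    ll = len(x) * (math.log(alpha) + alpha * math.log(beta))
    for xi in x:
        # ln(x^a + b^a) = a*ln(x) + ln(1 + (b/x)^a), numerically stable because b <= x
        ll += (alpha - 1.0) * math.log(xi) - 2.0 * (alpha * math.log(xi) + math.log1p((beta / xi) ** alpha))
    return ll


def log_prior(alpha, beta):
    """HYPOTHETICAL prior pi(alpha, beta) proportional to 1/(alpha*beta); replace with prior (6)."""
    return -math.log(alpha) - math.log(beta)


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def mh_update(current, sd, lower, upper, log_target, rng):
    """One MH update with an N(current, sd^2) proposal redrawn until it lies in (lower, upper]."""
    while True:
        candidate = rng.gauss(current, sd)
        if lower < candidate <= upper:
            break

    def kept_mass(c):
        # Probability that an N(c, sd^2) draw lands in (lower, upper]: the truncated proposal's normaliser
        return normal_cdf((upper - c) / sd) - normal_cdf((lower - c) / sd)

    log_ratio = (log_target(candidate) - log_target(current)
                 + math.log(kept_mass(current)) - math.log(kept_mass(candidate)))
    return candidate if rng.random() < math.exp(min(0.0, log_ratio)) else current


def mixed_gibbs_complete(x, n_iter=5000, alpha0=1.0, sd_alpha=0.3, seed=0):
    """Alternate the alpha update (Step 1) and the beta update (Step 2); return (alpha, beta) draws."""
    rng = random.Random(seed)
    x1 = min(x)
    alpha, beta = alpha0, 0.5 * x1  # hypothetical starting values
    sd_beta = 0.1 * x1              # hypothetical proposal scale for beta

    def log_post(a, b):
        ll = np_loglik(a, b, x)
        return ll if ll == -math.inf else ll + log_prior(a, b)

    draws = []
    for _ in range(n_iter):
        alpha = mh_update(alpha, sd_alpha, 0.0, math.inf, lambda a: log_post(a, beta), rng)
        beta = mh_update(beta, sd_beta, 0.0, x1, lambda b: log_post(alpha, b), rng)
        draws.append((alpha, beta))
    return draws


rng = random.Random(3)
data = [((1.0 + u) / (1.0 - u)) ** 0.5 for u in (rng.random() for _ in range(200))]  # NP(2, 1) sample
draws = mixed_gibbs_complete(data, n_iter=2000)[500:]  # discard a burn-in period
print(sum(a for a, _ in draws) / len(draws), sum(b for _, b in draws) / len(draws))
```

Running one update per parameter per sweep keeps every iteration cheap; the chain as a whole still targets the joint posterior (7), because each update leaves the corresponding full conditional invariant.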

It is worth noting that, once the Markov chain reaches equilibrium, its sample path can be used as a sample from the posterior distribution of (α, β), from which the Bayesian estimates of α and β are obtained. To ensure that the posterior samples are approximately independent, we check their sample autocorrelation coefficients.
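A minimal Python sketch of this autocorrelation check follows. The sample autocorrelation at lag k is ρ_k = Σ_t (θ_t − θ̄)(θ_{t+k} − θ̄) / Σ_t (θ_t − θ̄)², with the usual divisor n as in R's `acf`. The helper then reports the smallest lag at which |ρ_k| falls below a threshold, which could serve as a thinning interval. The function names and the 0.1 threshold are illustrative assumptions, not values from the paper.

```python
import random


def sample_acf(chain, max_lag=20):
    """Sample autocorrelations rho_0, ..., rho_max_lag of a scalar chain (divisor n, as in R's acf)."""
    n = len(chain)
    mean = sum(chain) / n
    dev = [v - mean for v in chain]
    c0 = sum(d * d for d in dev) / n
    return [sum(dev[t] * dev[t + k] for t in range(n - k)) / (n * c0) for k in range(max_lag + 1)]


def thinning_lag(chain, threshold=0.1, max_lag=50):
    """Smallest lag k >= 1 with |rho_k| below a HYPOTHETICAL threshold, or None if none up to max_lag."""
    rho = sample_acf(chain, max_lag)
    for k in range(1, max_lag + 1):
        if abs(rho[k]) < threshold:
            return k
    return None


# Example: an AR(1) chain with coefficient 0.8 needs thinning, independent draws do not
rng = random.Random(0)
ar, prev = [], 0.0
for _ in range(5000):
    prev = 0.8 * prev + rng.gauss(0.0, 1.0)
    ar.append(prev)
iid = [rng.gauss(0.0, 1.0) for _ in range(5000)]
print(thinning_lag(ar), thinning_lag(iid))
```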

3.2. Type II Censored Samples Case

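In the case of type II censored samples, suppose n products are put on a life test, the test stops once r products have failed, and the ordered failure times x = (x_(1), x_(2), …, x_(r)) are observed. We still take the joint prior distribution of (α, β) to be π(α, β) in (6); the posterior distribution is then

$$\pi(\alpha,\beta\mid\boldsymbol{x})\propto\pi(\alpha,\beta)\,\alpha^{r}\beta^{n\alpha}\prod_{i=1}^{r}\frac{x_{(i)}^{\alpha-1}}{\left(x_{(i)}^{\alpha}+\beta^{\alpha}\right)^{2}}\left(x_{(r)}^{\alpha}+\beta^{\alpha}\right)^{-(n-r)},\qquad\alpha>0,\ 0<\beta\le x_{(1)}.\tag{14}$$

Thus, the full conditional posterior distribution of α is

$$\pi(\alpha\mid\beta,\boldsymbol{x})\propto\pi(\alpha,\beta)\,\alpha^{r}\beta^{n\alpha}\prod_{i=1}^{r}\frac{x_{(i)}^{\alpha-1}}{\left(x_{(i)}^{\alpha}+\beta^{\alpha}\right)^{2}}\left(x_{(r)}^{\alpha}+\beta^{\alpha}\right)^{-(n-r)},\qquad\alpha>0,\tag{15}$$

and the full conditional posterior distribution of β is

$$\pi(\beta\mid\alpha,\boldsymbol{x})\propto\pi(\alpha,\beta)\,\beta^{n\alpha}\prod_{i=1}^{r}\left(x_{(i)}^{\alpha}+\beta^{\alpha}\right)^{-2}\left(x_{(r)}^{\alpha}+\beta^{\alpha}\right)^{-(n-r)},\qquad0<\beta\le x_{(1)}.\tag{16}$$

As can be seen from the above, in the case of type II censored samples neither (15) nor (16) is a standard distribution, so they are difficult to sample from directly. As discussed above, the mixed Gibbs sampling algorithm does not require the full conditional posterior distributions to have explicit standard forms, so we use it again, with the same prior distribution as in Section 3.1. The steps of the mixed Gibbs sampling algorithm for Bayesian estimation of the NP distribution parameters with type II censored samples are as follows.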

- Step 0

- Choose an initial value (α^(0), β^(0)) for the Gibbs sampler. For simplicity, the value at the ith iteration is still denoted by (α^(i), β^(i)). Then, Step 1 and Step 2 below give (α^(i+1), β^(i+1)).

- Step 1

- Obtain α^(i+1) from π(α | β^(i), x) in (15) using the Metropolis–Hastings algorithm.

- (1)

- Draw a candidate α* from N(α_c, σ_α²), where α_c is the current state and σ_α is the standard deviation. If α* ≤ 0, draw a new candidate.

- (2)

- Calculate the acceptance probability

$$p_{\alpha}=\min\left\{1,\ \frac{\pi(\alpha^{*}\mid\beta^{(i)},\boldsymbol{x})}{\pi(\alpha_{c}\mid\beta^{(i)},\boldsymbol{x})}\right\}.\tag{17}$$

- (3)

- Generate a random number u from the uniform distribution U(0, 1), and update the state according to (18):

$$\alpha_{\text{new}}=\begin{cases}\alpha^{*}, & u\le p_{\alpha},\\ \alpha_{c}, & u>p_{\alpha}.\end{cases}\tag{18}$$

- (4)

- Set α_c = α_new, then return to Step 1(1).

The resulting sequence of α values forms a Markov chain, and α^(i+1) is obtained when this chain reaches equilibrium.

- Step 2

- Obtain β^(i+1) from π(β | α^(i+1), x) in (16) using the Metropolis–Hastings algorithm.

- (1)

- Draw a candidate β* from N(β_c, σ_β²), where β_c is the current state and σ_β is the standard deviation. If β* ≤ 0 or β* > x_(1), draw a new candidate.

- (2)

- Calculate the acceptance probability

$$p_{\beta}=\min\left\{1,\ \frac{\pi(\beta^{*}\mid\alpha^{(i+1)},\boldsymbol{x})}{\pi(\beta_{c}\mid\alpha^{(i+1)},\boldsymbol{x})}\right\}.\tag{19}$$

- (3)

- Generate a random number u from U(0, 1), and update the state according to (20):

$$\beta_{\text{new}}=\begin{cases}\beta^{*}, & u\le p_{\beta},\\ \beta_{c}, & u>p_{\beta}.\end{cases}\tag{20}$$

- (4)

- Set β_c = β_new, then return to Step 2(1).

Similarly, the resulting sequence of β values forms a Markov chain, and β^(i+1) is obtained when this chain reaches equilibrium.

- Step 3

- Set i = i + 1, and repeat Step 1 and Step 2 in sequence until the Markov chains reach equilibrium.
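For type II censored samples, only the likelihood changes: the r observed failures contribute f(x_(i)), and the n − r survivors contribute [1 − F(x_(r))]^(n−r). The self-contained sketch below uses the same assumptions as a Metropolis-within-Gibbs illustration: a hypothetical prior π(α, β) ∝ 1/(αβ) in place of (6), one Metropolis–Hastings update per parameter per sweep, and arbitrary starting values and proposal scales. It is not the authors' implementation. With r = n, the censored log-likelihood reduces to the complete-sample one.

```python
import math
import random


def np_loglik_type2(alpha, beta, x_obs, n):
    """Type II censored NP log-likelihood, constants dropped; x_obs = sorted first r failure times of n units."""
    if alpha <= 0.0 or beta <= 0.0 or beta > x_obs[0]:
        return -math.inf
    r = len(x_obs)

    def log_sum(xi):
        # ln(x^a + b^a) = a*ln(x) + ln(1 + (b/x)^a), stable because b <= x
        return alpha * math.log(xi) + math.log1p((beta / xi) ** alpha)

    ll = r * math.log(alpha) + n * alpha * math.log(beta)
    ll += sum((alpha - 1.0) * math.log(xi) - 2.0 * log_sum(xi) for xi in x_obs)
    return ll - (n - r) * log_sum(x_obs[-1])  # n - r survivors censored at x_(r)


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def mh_update(current, sd, lower, upper, log_target, rng):
    """MH update with an N(current, sd^2) proposal redrawn into (lower, upper], with Hastings correction."""
    while True:
        candidate = rng.gauss(current, sd)
        if lower < candidate <= upper:
            break

    def kept_mass(c):
        return normal_cdf((upper - c) / sd) - normal_cdf((lower - c) / sd)

    log_ratio = (log_target(candidate) - log_target(current)
                 + math.log(kept_mass(current)) - math.log(kept_mass(candidate)))
    return candidate if rng.random() < math.exp(min(0.0, log_ratio)) else current


def mixed_gibbs_type2(x_obs, n, n_iter=2000, sd_alpha=0.3, seed=0):
    """Alternate updates targeting (15) and (16) under a HYPOTHETICAL 1/(alpha*beta) prior."""
    x_obs = sorted(x_obs)
    rng = random.Random(seed)
    x1 = x_obs[0]
    alpha, beta, sd_beta = 1.0, 0.5 * x1, 0.1 * x1  # hypothetical start values and proposal scale

    def log_post(a, b):
        ll = np_loglik_type2(a, b, x_obs, n)
        return ll if ll == -math.inf else ll - math.log(a) - math.log(b)

    draws = []
    for _ in range(n_iter):
        alpha = mh_update(alpha, sd_alpha, 0.0, math.inf, lambda a: log_post(a, beta), rng)
        beta = mh_update(beta, sd_beta, 0.0, x1, lambda b: log_post(alpha, b), rng)
        draws.append((alpha, beta))
    return draws


rng = random.Random(5)
full = sorted(((1.0 + u) / (1.0 - u)) ** 0.5 for u in (rng.random() for _ in range(400)))  # NP(2, 1)
observed = full[:200]  # type II censoring: keep the r = 200 smallest of n = 400
draws = mixed_gibbs_type2(observed, n=400)[500:]
print(sum(a for a, _ in draws) / len(draws))
```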

The Bayesian estimates of the target parameters are then obtained in the same way as in the complete samples case, so the details are omitted here.

4. Numerical Studies

All computations in this section were carried out in R 4.1.3.

4.1. Simulation Studies

Case 1. Let X denote the lifetime of a product, with X ~ NP(α, β), and consider three groups of values of α and β. A Monte Carlo simulation was carried out for both complete samples and 20% type II censored samples. In each simulation, 100 NP random variables were generated by the inverse transform method.
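Setting u = F(x) in (1) and solving for x gives x = β[(1 + u)/(1 − u)]^(1/α), so NP variates can be generated from U(0, 1) draws. A minimal Python sketch of this inverse transform method follows (the paper used R; the names below are illustrative).

```python
import random


def np_quantile(u: float, alpha: float, beta: float) -> float:
    """Inverse of CDF (1): solves u = 1 - 2*beta^alpha / (x^alpha + beta^alpha) for x."""
    return beta * ((1.0 + u) / (1.0 - u)) ** (1.0 / alpha)


def rnp(n: int, alpha: float, beta: float, rng: random.Random) -> list:
    """Draw n NP(alpha, beta) variates by the inverse transform method (u in [0, 1), so x is finite)."""
    return [np_quantile(rng.random(), alpha, beta) for _ in range(n)]


sample = rnp(20000, 2.0, 1.0, random.Random(42))
print(min(sample), sum(v <= 3.0 for v in sample) / len(sample))  # F(3) = 0.8 when alpha = 2, beta = 1
```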

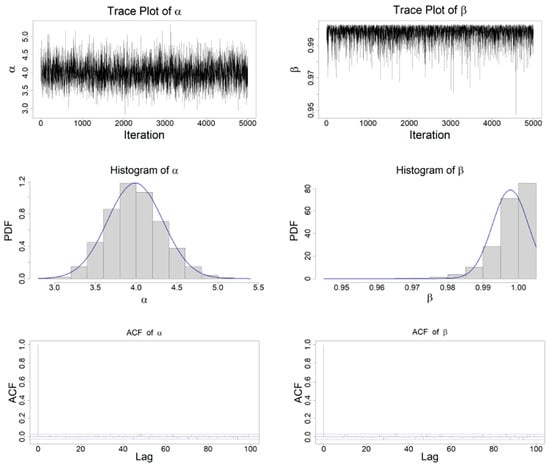

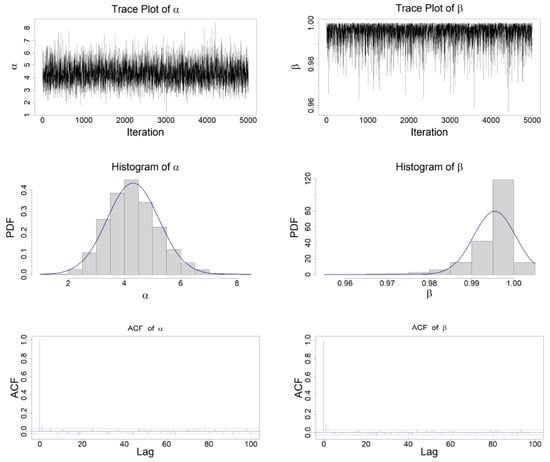

To illustrate the complete implementation of the mixed Gibbs sampling algorithm, we first present the Bayesian estimates and 95% credible intervals for the three groups of simulated parameters in both the complete samples case and the 20% type II censored samples case (see Table 1 and Table 2). The parameters are estimated from the samples under the squared error loss function and under the LINEX loss function (with loss parameter 1). Furthermore, when the parameters , we illustrate the mixed Gibbs sampling process in both the complete samples case and the 20% type II censored samples case, including trace plots, histograms, and autocorrelation plots of the corresponding parameters (see Figure 2 and Figure 3).
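For reference, these Bayes estimates can be computed directly from posterior draws. Under squared error loss the estimate is the posterior mean. Under the LINEX loss L(Δ) = exp(cΔ) − cΔ − 1 with Δ = θ̂ − θ, it is θ̂ = −(1/c) ln E[exp(−cθ) | x]. The equal-tailed 95% credible interval uses the 2.5% and 97.5% posterior quantiles. The sketch below assumes this LINEX sign convention, which is not restated in the paper, and also returns the posterior median (the estimate under absolute error loss). Function names are illustrative.

```python
import math


def quantile(sorted_draws, p):
    """Linear-interpolation quantile of sorted draws (the default 'type 7' rule in R)."""
    h = (len(sorted_draws) - 1) * p
    lo = math.floor(h)
    hi = min(lo + 1, len(sorted_draws) - 1)
    return sorted_draws[lo] + (h - lo) * (sorted_draws[hi] - sorted_draws[lo])


def bayes_summaries(draws, c=1.0, level=0.95):
    """Bayes point estimates under several losses, plus an equal-tailed credible interval."""
    s = sorted(draws)
    m = len(s)
    tail = (1.0 - level) / 2.0
    return {
        "squared_error": sum(s) / m,                                   # posterior mean
        "absolute_error": quantile(s, 0.5),                            # posterior median
        "linex": -math.log(sum(math.exp(-c * t) for t in s) / m) / c,  # -(1/c) ln E[exp(-c*theta)]
        "credible_interval": (quantile(s, tail), quantile(s, 1.0 - tail)),
    }


print(bayes_summaries([float(k) for k in range(101)]))
```

With c > 0, Jensen's inequality guarantees that the LINEX estimate never exceeds the posterior mean, which reflects the asymmetric penalty on overestimation.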

Table 1.

Bayesian estimates and 95% credible intervals for the parameters in the complete samples case under different loss functions.

Table 2.

Bayesian estimates and 95% credible intervals for the parameters in the 20% type II censored samples case under different loss functions.

Figure 2.

Trace plots, histograms, and ACFs of the NP distribution parameters in the complete samples case.

Figure 3.

Trace plots, histograms, and ACFs of the NP distribution parameters in the type II censored samples case.

As shown in Table 1 and Table 2, Bayesian estimation using the mixed Gibbs sampling algorithm produces satisfactory results for all three selected parameter groups. The estimates are close to the true values, indicating that the proposed method estimates the parameters accurately and reliably. In addition, the credible intervals cover the true values and are relatively short, indicating high precision.

From Figure 2 and Figure 3, the trace plots show that the sampled values of α and β fluctuate randomly around their means. Furthermore, the ergodic means of the parameters stabilize after about 200 iterations, so the mixed Gibbs sampling process can be regarded as having reached equilibrium at this point, and the subsequent draws can be treated as samples from the posterior distribution of the parameters. As a precaution, the first 500 iterations are discarded as burn-in, and a further 4500 iterations are run to obtain 4500 draws of each parameter. Additionally, the autocorrelation function shows that the autocorrelation coefficients of all samples are already close to 0 at lag 1. Therefore, the Bayesian estimates of the parameters are computed from these 4500 draws.
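The ergodic (running) mean used to judge equilibrium, together with the burn-in split, can be sketched as below. The `stabilized_after` rule reports the number of initial iterations after which the ergodic mean stays within a relative tolerance of its final value. It is a hypothetical heuristic for reading such plots, not the criterion used in the paper.

```python
def ergodic_mean(chain):
    """Running (ergodic) mean: the t-th entry averages the first t draws."""
    means, total = [], 0.0
    for t, value in enumerate(chain, start=1):
        total += value
        means.append(total / t)
    return means


def stabilized_after(chain, rel_tol=0.01):
    """Number of initial iterations after which every ergodic mean lies within rel_tol of the final one.

    HYPOTHETICAL heuristic for reading ergodic-mean plots, not a formal convergence test.
    """
    means = ergodic_mean(chain)
    final = means[-1]
    t = len(means)
    while t > 0 and abs(means[t - 1] - final) <= rel_tol * abs(final):
        t -= 1
    return t


def split_burn_in(chain, burn_in=500):
    """Discard the first burn_in draws (e.g., 500 of 5000 iterations, leaving 4500)."""
    return chain[burn_in:]


print(stabilized_after([5.0] * 3 + [1.0] * 997), len(split_burn_in(list(range(5000)))))
```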

Case 2. Assume that the lifetime X of a product follows the NP distribution with parameters . To compare the strengths and weaknesses of the two estimation methods for small sample sizes, 20 random variables from the NP distribution were generated in each simulation in the complete samples case. In the type II censored samples case, 100 random variables from the NP distribution were generated in each simulation, and the first 20% of the ordered failure times were retained. The mean values and mean squared errors (MSEs) of the MLE and the Bayesian estimate (BE) are given in Table 3. Simulations with large samples showed no significant difference between maximum likelihood estimation and Bayesian estimation, so those results are not reported here.
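The means and MSEs in Table 3 are Monte Carlo averages over replications. For estimates θ̂_1, …, θ̂_R of a true value θ, the mean is (1/R)Σθ̂_k and the MSE is (1/R)Σ(θ̂_k − θ)². A minimal sketch of the MLE side of this comparison with n = 20 follows. The true values α = 2, β = 1 and the replication counts are illustrative assumptions (the paper's settings are not reproduced here), and the Bayesian side would substitute a posterior sampler for `mle`. Because β̂ = x_(1) ≥ β in every replication, the MLE of β is always biased upward.

```python
import math
import random


def rnp(n, alpha, beta, rng):
    """Inverse transform draws: x = beta*((1+u)/(1-u))^(1/alpha), u ~ U(0, 1)."""
    return [beta * ((1.0 + u) / (1.0 - u)) ** (1.0 / alpha) for u in (rng.random() for _ in range(n))]


def mle(x):
    """beta_hat = x_(1); alpha_hat is the bisection root of the complete-sample score (3)."""
    b = min(x)
    lb = math.log(b)

    def score(a):
        total = len(x) / a + len(x) * lb
        for v in x:
            q = (b / v) ** a
            total += math.log(v) - 2.0 * (math.log(v) + q * lb) / (1.0 + q)
        return total

    lo, hi = 1e-8, 1.0
    while score(hi) > 0.0:
        lo, hi = hi, 2.0 * hi
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if score(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi), b


def mean_and_mse(estimates, true_value):
    m = sum(estimates) / len(estimates)
    return m, sum((e - true_value) ** 2 for e in estimates) / len(estimates)


def mle_study(alpha, beta, n=20, reps=1000, seed=0):
    """Mean and MSE of the MLEs over reps simulated NP(alpha, beta) samples of size n."""
    rng = random.Random(seed)
    fits = [mle(rnp(n, alpha, beta, rng)) for _ in range(reps)]
    return {"alpha": mean_and_mse([f[0] for f in fits], alpha),
            "beta": mean_and_mse([f[1] for f in fits], beta)}


print(mle_study(2.0, 1.0, reps=200))
```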

Table 3.

Mean and mean square error of Bayesian estimation and maximum likelihood estimation.

As shown in Table 3, the MSEs of the Bayesian estimates are consistently smaller than those of the MLEs. We can therefore infer that BE outperforms MLE for small samples. In summary, these findings indicate that, in this setting, Bayesian estimation with the mixed Gibbs sampling algorithm is more accurate and robust than MLE.

4.2. Real Data Analysis

We consider two reliability datasets analyzed by Bourguignon et al. [6]. The first dataset (DataSet I), given by Linhart and Zucchini [18], consists of 30 failure times of an aircraft's air conditioning system: 23, 261, 87, 7, 120, 14, 62, 47, 225, 71, 246, 21, 42, 20, 5, 12, 120, 11, 3, 14, 71, 11, 14, 11, 11, 90, 1, 16, 52, 95. The second dataset (DataSet II), from Murthy et al. [19], consists of the failure times of 20 mechanical components: 0.067, 0.068, 0.076, 0.081, 0.084, 0.085, 0.085, 0.086, 0.089, 0.098, 0.098, 0.114, 0.115, 0.121, 0.125, 0.131, 0.149, 0.160, 0.485, 0.485. Both datasets are positive. Since the NP distribution is supported on [β, ∞) with β > 0, it is a natural candidate for modeling such positive data, so it is reasonable to fit both datasets with the NP distribution. In this section, Bayesian estimates of the NP parameters for both datasets are obtained using the mixed Gibbs sampling algorithm.
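For convenience, the two datasets can be entered as below, together with a minimal complete-sample MLE sketch (β̂ = x_(1), α̂ from (3) by bisection) that can be used to cross-check the MLEs quoted from Bourguignon et al. [6]. The code is illustrative and not from the paper, and no fitted values are asserted here.

```python
import math

# DataSet I: failure times of an aircraft air conditioning system (Linhart and Zucchini [18])
DATASET_I = [23, 261, 87, 7, 120, 14, 62, 47, 225, 71, 246, 21, 42, 20, 5,
             12, 120, 11, 3, 14, 71, 11, 14, 11, 11, 90, 1, 16, 52, 95]
# DataSet II: failure times of 20 mechanical components (Murthy et al. [19])
DATASET_II = [0.067, 0.068, 0.076, 0.081, 0.084, 0.085, 0.085, 0.086, 0.089, 0.098,
              0.098, 0.114, 0.115, 0.121, 0.125, 0.131, 0.149, 0.160, 0.485, 0.485]


def np_score_alpha(alpha, x, beta):
    """Left-hand side of Equation (3) for a complete sample."""
    lb = math.log(beta)
    total = len(x) / alpha + len(x) * lb
    for xi in x:
        q = (beta / xi) ** alpha
        total += math.log(xi) - 2.0 * (math.log(xi) + q * lb) / (1.0 + q)
    return total


def np_mle(x):
    """Complete-sample MLEs: beta_hat = x_(1), alpha_hat from (3) by bisection."""
    beta_hat = min(x)
    lo, hi = 1e-8, 1.0
    while np_score_alpha(hi, x, beta_hat) > 0.0:
        lo, hi = hi, 2.0 * hi
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if np_score_alpha(mid, x, beta_hat) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi), beta_hat


for name, data in (("DataSet I", DATASET_I), ("DataSet II", DATASET_II)):
    print(name, len(data), np_mle(data))
```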

DataSet I. For DataSet I, Bourguignon et al. [6] provided the MLEs (i.e., , ) of the parameters α and β. We then conducted Bayesian analyses for both the type II censored data () and the complete data (), running 2000 sampling iterations in each case. Monitoring the ergodic means showed that they stabilized within 100 iterations, indicating that the mixed Gibbs sampling algorithm converged in both cases. To reduce the influence of the transient phase, the draws from iterations 201 to 2000 were used as the mixed Gibbs samples for Bayesian estimation (BE), and the posterior estimates of the distribution parameters were computed from them (see Table 4).

Table 4.

Numerical results of the real data analyses.

In Table 4, the posterior mean and posterior median are the Bayesian point estimates of the parameters under the squared error loss and the absolute error loss, respectively. The 95% credible intervals are given by the 2.5th and 97.5th percentiles of the posterior samples. The results in Table 4 indicate that Bayesian estimation using the mixed Gibbs sampling algorithm performs well for both complete data and type II censored data. Compared with frequentist estimation methods, Bayesian estimation handles small samples more effectively and, for reliability data with limited observations, provides a posterior distribution for each parameter rather than only a point estimate. Consequently, Bayesian estimation better reflects parameter uncertainty, particularly for small datasets.

DataSet II. For the complete DataSet II, we ran the Bayesian analysis for 2000 sampling iterations. The ergodic means of the parameters stabilized within 100 iterations, indicating that the mixed Gibbs sampling algorithm converged. To eliminate the influence of the transient phase, the draws from iterations 201 to 2000 were used as the mixed Gibbs samples for Bayesian estimation, and the posterior estimates of the distribution parameters were computed from them. The Bayesian estimates (BE) under squared error loss were then compared with the MLEs of Bourguignon et al. [6] (see Table 5).

Table 5.

Comparison of two estimation methods.

As Table 5 shows, the Bayesian estimates are very close to the MLEs, which further supports the stability and reliability of the mixed Gibbs sampling algorithm.

5. Discussion and Conclusions

Several studies have addressed the NP distribution, such as [10,11,15]. However, in practice, Bayesian methods may face challenges with high-dimensional and complex models and with large datasets. In contrast to importance sampling [10], which can be inefficient, and Lindley's approximation [12], which is complicated to derive, we proposed a Bayesian estimation procedure for the NP distribution based on the mixed Gibbs sampling algorithm. When the full conditional distributions in the Gibbs sampling steps lack an explicit standard form, sampling from them directly is difficult, and an alternative sampling method is needed. Since each Gibbs update can be viewed as a Metropolis–Hastings step whose acceptance probability is always 1, Gibbs updates and Metropolis–Hastings updates can be combined within one sampler when necessary.
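The claim that a Gibbs update is a special Metropolis–Hastings step can be checked in one line. Write θ = (θ_j, θ_{−j}), hold θ_{−j} fixed, and use the full conditional π(θ_j | θ_{−j}) as the proposal for θ_j. Since π(θ_j, θ_{−j}) = π(θ_j | θ_{−j}) π(θ_{−j}), the Metropolis–Hastings ratio is

```latex
\frac{\pi(\theta_j^{*},\theta_{-j})\,\pi(\theta_j\mid\theta_{-j})}
     {\pi(\theta_j,\theta_{-j})\,\pi(\theta_j^{*}\mid\theta_{-j})}
=\frac{\pi(\theta_j^{*}\mid\theta_{-j})\,\pi(\theta_{-j})\,\pi(\theta_j\mid\theta_{-j})}
      {\pi(\theta_j\mid\theta_{-j})\,\pi(\theta_{-j})\,\pi(\theta_j^{*}\mid\theta_{-j})}
=1,
```

so every such proposal is accepted. In the mixed sampler, the α and β updates, whose full conditionals (8) and (9), or (15) and (16), cannot be sampled directly, are replaced by Metropolis–Hastings updates that leave the same full conditionals invariant.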

In this paper, we proposed a Bayesian estimation procedure for the NP distribution with complete and type II censored samples using the mixed Gibbs sampling algorithm. The means and MSEs of the resulting parameter estimates were reported, and the stability and effectiveness of the algorithm were demonstrated through simulation studies and real data analyses. The results indicate that the mixed Gibbs sampling algorithm is stable, feasible, and highly precise.

In reliability tests of products, it is often difficult to obtain enough samples to assess product quality. Bayesian estimation can help obtain reliable results even with limited sample sizes. By incorporating prior information, it can improve the accuracy of parameter estimates and provide valuable information about parameter uncertainty, which is particularly important when sample data are scarce. Therefore, in product reliability tests with small sample sizes, Bayesian estimation can serve as an effective tool for evaluating and predicting product quality. In view of these merits, we expect the Bayesian method based on the mixed Gibbs sampling algorithm to become an important tool for Bayesian inference.

Author Contributions

Methodology, F.L. and S.W.; software, F.L. and S.W.; formal analysis, F.L., S.W. and M.Z.; writing—review and editing, F.L., S.W. and M.Z.; funding acquisition, M.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Philosophy and Social Science planning project of Anhui Province Foundation (Grant No. AHSKF2022D08).

Data Availability Statement

All relevant data are within the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Qian, F.; Zheng, W. An evolutionary nested sampling algorithm for Bayesian model updating and model selection using modal measurement. Eng. Struct. 2017, 140, 298–307.

- Kuipers, J.; Suter, P.; Moffa, G. Efficient Sampling and Structure Learning of Bayesian Networks. J. Comput. Graph. Stat. 2022, 31, 639–650.

- Alhamzawi, R.; Ali, H.T.M. A New Gibbs Sampler for Bayesian Lasso. Commun. Stat.-Simul. Comput. 2020, 49, 1855–1871.

- Pareto, V. Cours d’Économie Politique; F. Rouge: Lausanne, Switzerland, 1897.

- Johnson, N.L.; Kotz, S.; Balakrishnan, N. Continuous Univariate Distributions; John Wiley and Sons: London, UK, 1994; Volume 1, Chapter 18.

- Bourguignon, M.; Saulo, H.; Fernandez, R.N. A new Pareto-type distribution with applications in reliability and income data. Physica A 2016, 457, 166–175.

- Raof, A.S.A.; Haron, M.A.; Safari, A.; Siri, Z. Modeling the Incomes of the Upper-Class Group in Malaysia using New Pareto-Type Distribution. Sains Malays. 2022, 51, 3437–3448.

- Karakaya, K.; Akdoğan, Y.; Nik, A.S.; Kuş, C.; Asgharzadeh, A. A Generalization of New Pareto-Type Distribution. Ann. Data Sci. 2022, 2022, 1–15.

- Sarabia, J.M.; Jordá, V.; Prieto, F. On a new Pareto-type distribution with applications in the study of income inequality and risk analysis. Physica A 2019, 527, 121277.

- Nik, A.S.; Asgharzadeh, A.; Nadarajah, S. Comparisons of methods of estimation for a new Pareto-type distribution. Statistica 2019, 79, 291–319.

- Nik, A.S.; Asgharzadeh, A.; Raqab, M.Z. Estimation and prediction for a new Pareto-type distribution under progressive type-II censoring. Math. Comput. Simulat. 2021, 190, 508–530.

- Soliman, A.A. Reliability estimation in a generalized life-model with application to the Burr-XII. IEEE Trans. Reliab. 2002, 51, 337–343.

- Soliman, A.A. Estimation of parameters of life from progressively censored data using Burr-XII model. IEEE Trans. Reliab. 2005, 54, 34–42.

- Geman, S.; Geman, D. Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Trans. Pattern Anal. 1984, 6, 721–741.

- Ahmed, H.H. Best Prediction Method for Progressive Type-II Censored Samples under New Pareto Model with Applications. J. Math. 2021, 2021, 1355990.

- Ibrahim, M.; Ali, M.M.; Yousof, H.M. The Discrete Analogue of the Weibull G Family: Properties, Different Applications, Bayesian and Non-Bayesian Estimation Methods. Ann. Data Sci. 2023, 10, 1069–1106.

- Hastings, W.K. Monte Carlo Sampling Methods Using Markov Chains and Their Applications. Biometrika 1970, 57, 97–109.

- Linhart, H.; Zucchini, W. Model Selection; John Wiley and Sons: New York, NY, USA, 1986.

- Murthy, D.N.P.; Xie, M.; Jiang, R. Weibull Models; John Wiley and Sons: Hoboken, NJ, USA, 2004.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).