Abstract

The confluent hypergeometric beta distribution due to Gordy has been known since the 1990s, but not much is known about its mathematical properties. In this paper, we provide a comprehensive treatment of the mathematical properties of the confluent hypergeometric beta distribution. We derive shape properties of its probability density function and expressions for its cumulative distribution function, hazard rate function, reversed hazard rate function, moment generating function, characteristic function, moments, conditional moments, entropies, and stochastic orderings. We also derive procedures for maximum likelihood estimation and assess their finite sample performance. Most of the derived properties are new. Finally, we illustrate two real data applications of the confluent hypergeometric beta distribution.

MSC: 62E99

1. Introduction

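Ref. [1] introduced the confluent hypergeometric beta distribution given by the probability density function

$$f(x) = C x^{a-1} (1-x)^{b-1} \exp(-cx) \tag{1}$$

for $0 < x < 1$, $a > 0$, $b > 0$, and $-\infty < c < \infty$, where

$$\frac{1}{C} = B(a, b)\, {}_1F_1(a; a+b; -c),$$

where $B(\cdot, \cdot)$ and ${}_1F_1(\cdot; \cdot; \cdot)$ are the beta and confluent hypergeometric functions defined by

$$B(a, b) = \int_0^1 t^{a-1} (1-t)^{b-1} \, dt$$

and

$${}_1F_1(a; b; x) = \sum_{k=0}^{\infty} \frac{(a)_k}{(b)_k} \frac{x^k}{k!},$$

respectively, where $(e)_k = e(e+1)\cdots(e+k-1)$ denotes the ascending factorial. The beta function can also be expressed as $B(a, b) = \Gamma(a)\Gamma(b)/\Gamma(a+b)$, where $\Gamma(\cdot)$ denotes the gamma function defined by

$$\Gamma(a) = \int_0^{\infty} t^{a-1} \exp(-t) \, dt.$$

The standard beta distribution is the particular case of (1) for $c = 0$. If X is a confluent hypergeometric beta random variable with parameters $(a, b, c)$, then $1 - X$ is also a confluent hypergeometric beta random variable with parameters $(b, a, -c)$. Hence, X is symmetric if and only if $a = b$ and $c = 0$.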
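As a numerical sanity check, the density can be sketched in Python. The sketch assumes that (1) has the form $f(x) \propto x^{a-1}(1-x)^{b-1}\exp(-cx)$ with normalizing constant $B(a,b)\,{}_1F_1(a;a+b;-c)$. The function names `chb_logpdf` and `chb_pdf` are illustrative and do not come from [1].

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import betaln, hyp1f1
from scipy.stats import beta as beta_dist


def chb_logpdf(x, a, b, c):
    """Log of the assumed density x^(a-1) (1-x)^(b-1) exp(-c x) / [B(a,b) 1F1(a; a+b; -c)]."""
    x = np.asarray(x, dtype=float)
    log_norm = betaln(a, b) + np.log(hyp1f1(a, a + b, -c))
    return (a - 1.0) * np.log(x) + (b - 1.0) * np.log1p(-x) - c * x - log_norm


def chb_pdf(x, a, b, c):
    """Density on 0 < x < 1 for a > 0, b > 0 and real c."""
    return np.exp(chb_logpdf(x, a, b, c))


# The density should integrate to one over (0, 1).
total, _ = quad(chb_pdf, 0.0, 1.0, args=(2.0, 3.0, 1.5))

# Setting c = 0 should recover the standard beta density.
gap_beta = abs(chb_pdf(0.3, 2.0, 3.0, 0.0) - beta_dist.pdf(0.3, 2.0, 3.0))

# Reflection: the density of 1 - X should have parameters (b, a, -c).
gap_reflect = abs(chb_pdf(0.3, 2.0, 3.0, 1.5) - chb_pdf(0.7, 3.0, 2.0, -1.5))
```

Working on the log scale with `betaln` avoids overflow in the beta function for large a or b; the accuracy of `hyp1f1` for extreme arguments is not examined here.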

There have been only a few papers studying the confluent hypergeometric beta distribution. Ref. [2] proposed an exponentiated version of (1). Refs. [3,4] used (1) in Bayesian estimation. Ref. [5] proposed a six-parameter generalization of (1).

The aim of this paper is to provide a comprehensive account of mathematical properties of (1). The properties derived include the shape properties of the probability density function (Section 2), cumulative distribution function (Section 3), hazard rate and reversed hazard rate functions (Section 4), moment generating and characteristic functions (Section 5), moments (Section 6), conditional moments (Section 7), entropies (Section 8), and stochastic orderings (Section 9). We also derive procedures for maximum likelihood estimation (Section 10) and assess their finite sample performance (Section 11). Two real data applications of the confluent hypergeometric beta distribution are illustrated in Section 12. Some conclusions and future work are noted in Section 13.

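In addition to the special functions stated, the calculations of this paper involve the degenerate hypergeometric series of two variables defined by

$$\Phi_1(\alpha, \beta, \gamma; x, y) = \sum_{m=0}^{\infty} \sum_{n=0}^{\infty} \frac{(\alpha)_{m+n} (\beta)_m}{(\gamma)_{m+n}\, m!\, n!} x^m y^n.$$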

The properties of the special functions can be found in [6,7].

2. Shape

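The critical points of $f(x)$ are the roots of

$$\frac{d \log f(x)}{dx} = \frac{a-1}{x} - \frac{b-1}{1-x} - c = 0$$

or equivalently the roots of

$$A x^2 + B x + D = 0, \tag{2}$$

where $A = c$, $B = -(a + b + c - 2)$, and $D = a - 1$. Let $x_1$ and $x_2$ denote the roots of (2). If $c = 0$, then the only root is $x = (a-1)/(a+b-2)$.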
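The critical points can be located numerically. The sketch below assumes that (1) is proportional to $x^{a-1}(1-x)^{b-1}\exp(-cx)$, so that setting the derivative of the log density to zero and multiplying by $x(1-x)$ gives the quadratic $c x^2 - (a+b+c-2)x + (a-1) = 0$. The function name `chb_critical_points` is hypothetical, and each root is labelled by the sign of the second derivative of the log density.

```python
import numpy as np


def chb_critical_points(a, b, c):
    """Roots in (0, 1) of c x^2 - (a + b + c - 2) x + (a - 1) = 0, labelled by type."""
    roots = np.roots([c, -(a + b + c - 2.0), a - 1.0])  # leading zero coefficients are dropped
    real = np.sort(roots[np.abs(roots.imag) < 1e-12].real)
    points = []
    for x in real[(real > 0.0) & (real < 1.0)]:
        # Second derivative of log f(x); at a critical point its sign matches that of f''(x).
        curvature = -(a - 1.0) / x**2 - (b - 1.0) / (1.0 - x) ** 2
        if curvature < 0:
            kind = "mode"
        elif curvature > 0:
            kind = "antimode"
        else:
            kind = "undetermined"
        points.append((float(x), kind))
    return points


single_mode = chb_critical_points(2.0, 3.0, 0.0)     # c = 0: root at (a - 1) / (a + b - 2)
single_antimode = chb_critical_points(0.5, 0.5, 0.0)
mode_then_antimode = chb_critical_points(2.0, 0.5, 10.0)
```

Only interior critical points are reported; behaviour at the endpoints 0 and 1 (for example, an unbounded density when a < 1 or b < 1) has to be examined separately.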

The following shapes are possible for the probability density function:

- (a) if , and then has one mode followed by another mode.

- (b) if , and then has one antimode followed by another antimode.

- (c) if , and then has one mode followed by an antimode.

- (d) if , and then has an antimode followed by a mode.

- (e) if and then has a single mode.

- (f) if and then has a single antimode.

- (g) if and then has a single mode.

- (h) if and then has a single antimode.

- (i) if for all then is monotonically decreasing.

- (j) if for all then is monotonically increasing.

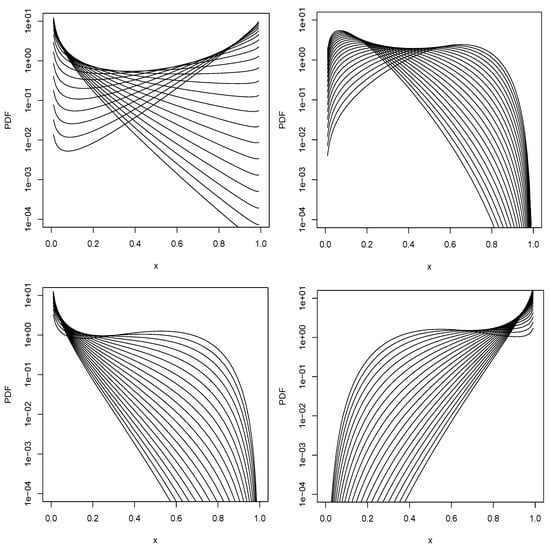

Possible shapes of are illustrated in Figure 1. Many of the shapes illustrated cannot be exhibited by a standard beta distribution.

Figure 1.

Shapes of (1) for , and (top left); , , and (top right); , , and (bottom left); , , and (bottom right). The curves from the top to bottom on the right hand side of each plot correspond to .

3. Cumulative Distribution Function

By equation (2.3.8.1) in volume 1 of [7], the cumulative distribution function of X is

The quantile function defined by is the root of

In particular, the median of X, , is the root of .
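Where the closed form is not needed, the cumulative distribution function and the quantile function can be approximated numerically. The sketch below integrates the unnormalized density by quadrature and solves $F(x) = p$ by Brent's method; it is a stand-in for, not an implementation of, the closed-form expression above. It assumes (1) is proportional to $x^{a-1}(1-x)^{b-1}\exp(-cx)$, and all function names are illustrative.

```python
import numpy as np
from scipy.integrate import quad
from scipy.optimize import brentq


def _kernel(x, a, b, c):
    """Unnormalized density x^(a-1) (1-x)^(b-1) exp(-c x)."""
    return x ** (a - 1.0) * (1.0 - x) ** (b - 1.0) * np.exp(-c * x)


def chb_cdf(x, a, b, c):
    """F(x) by numerical integration of the kernel over (0, x)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    num, _ = quad(_kernel, 0.0, x, args=(a, b, c))
    den, _ = quad(_kernel, 0.0, 1.0, args=(a, b, c))
    return num / den


def chb_quantile(p, a, b, c):
    """Root of F(x) = p on [0, 1], found by Brent's method."""
    return brentq(lambda x: chb_cdf(x, a, b, c) - p, 0.0, 1.0)


def chb_sample(n, a, b, c, rng):
    """Inversion method: apply the quantile function to uniform draws."""
    return np.array([chb_quantile(u, a, b, c) for u in rng.uniform(size=n)])


median = chb_quantile(0.5, 2.0, 3.0, 1.5)
draws = chb_sample(50, 2.0, 3.0, 1.5, np.random.default_rng(1))
```

Each quantile evaluation repeats the quadrature, so this approach is slow for large samples; caching the normalizing integral would be a natural refinement.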

4. Hazard Rate Functions

It is also immediate that the reversed hazard rate function of X is

5. Moment-Generating and Characteristic Functions

The moment-generating function of X is

The corresponding characteristic function is

where .
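A short numerical cross-check of the moment-generating function is sketched below. It assumes (1) is proportional to $x^{a-1}(1-x)^{b-1}\exp(-cx)$, in which case multiplying by $\exp(tx)$ only shifts c to c - t and gives $M(t) = {}_1F_1(a; a+b; t-c)/{}_1F_1(a; a+b; -c)$. The function names are hypothetical.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import hyp1f1


def chb_mgf(t, a, b, c):
    """E[exp(tX)] = 1F1(a; a+b; t-c) / 1F1(a; a+b; -c) under the assumed form of (1)."""
    return hyp1f1(a, a + b, t - c) / hyp1f1(a, a + b, -c)


def chb_mgf_quad(t, a, b, c):
    """The same expectation by direct numerical integration, as a cross-check."""

    def kernel(x, s):
        return x ** (a - 1.0) * (1.0 - x) ** (b - 1.0) * np.exp(-(c - s) * x)

    num, _ = quad(kernel, 0.0, 1.0, args=(t,))
    den, _ = quad(kernel, 0.0, 1.0, args=(0.0,))
    return num / den


gap = abs(chb_mgf(0.7, 2.0, 3.0, 1.5) - chb_mgf_quad(0.7, 2.0, 3.0, 1.5))
```

Because X is bounded, the moment-generating function exists for every real t. The characteristic function follows by replacing t with an imaginary argument, which is not coded here.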

6. Moments

The nth moment of X is

In particular, the first four moments are

and

The harmonic means of X are

and

Further,

and

The expressions for the nth moment were previously given in [4].
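The moments can be evaluated with standard special-function routines. The sketch assumes (1) is proportional to $x^{a-1}(1-x)^{b-1}\exp(-cx)$, so that $E(X^n) = B(a+n, b)\,{}_1F_1(a+n; a+b+n; -c) / [B(a, b)\,{}_1F_1(a; a+b; -c)]$ for $n > -a$. The names `chb_moment` and `chb_variance` are illustrative.

```python
import numpy as np
from scipy.special import betaln, hyp1f1


def chb_moment(n, a, b, c):
    """E[X^n] for n > -a, under the assumed form of (1)."""
    log_beta_ratio = betaln(a + n, b) - betaln(a, b)
    return np.exp(log_beta_ratio) * hyp1f1(a + n, a + b + n, -c) / hyp1f1(a, a + b, -c)


def chb_variance(a, b, c):
    """Var(X) = E[X^2] - (E[X])^2."""
    m1 = chb_moment(1, a, b, c)
    return chb_moment(2, a, b, c) - m1**2


mean_beta = chb_moment(1, 2.0, 3.0, 0.0)    # c = 0: beta mean a / (a + b)
var_beta = chb_variance(2.0, 3.0, 0.0)      # c = 0: a b / [(a + b)^2 (a + b + 1)]
inv_mean = chb_moment(-1, 2.0, 3.0, 0.0)    # c = 0: E[1/X] = (a + b - 1) / (a - 1)
mean_tilted = chb_moment(1, 2.0, 3.0, 1.5)  # c > 0 shifts mass towards zero
```

Negative orders such as n = -1 give the expectations that enter the harmonic mean, provided a + n > 0.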

7. Conditional Moments

By equation (2.3.8.1) in volume 1 of [7], the nth conditional moment of X is

In particular, the first four conditional moments are

and

Note that and are the mean residual life and variance residual functions, respectively.

8. Entropies

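The three most popular entropies are the geometric mean [8,9], Shannon entropy [10,11], and Rényi entropy [12], defined by

$$GM(X) = \exp\left\{E\left[\log X\right]\right\}, \qquad H(X) = E\left[-\log f(X)\right]$$

and

$$J_R(\gamma) = \frac{1}{1-\gamma} \log \int_0^1 f^{\gamma}(x) \, dx,$$

respectively, for $\gamma > 0$ and $\gamma \neq 1$.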
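For illustration, the Rényi entropy, taken here as $(1-\gamma)^{-1} \log \int_0^1 f^{\gamma}(x)\,dx$, can be computed in closed form and checked by quadrature. The sketch assumes (1) is proportional to $x^{a-1}(1-x)^{b-1}\exp(-cx)$, in which case $f^{\gamma}$ is again a kernel of the same family with parameters $\gamma(a-1)+1$, $\gamma(b-1)+1$, and $\gamma c$. The function names are hypothetical.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import betaln, hyp1f1


def chb_renyi(gamma, a, b, c):
    """Renyi entropy (1 / (1 - gamma)) * log(integral of f^gamma), gamma > 0, gamma != 1."""
    a_g = gamma * (a - 1.0) + 1.0  # must be positive for the integral to converge
    b_g = gamma * (b - 1.0) + 1.0  # must be positive for the integral to converge
    log_c = -(betaln(a, b) + np.log(hyp1f1(a, a + b, -c)))  # log normalizing constant
    log_int = gamma * log_c + betaln(a_g, b_g) + np.log(hyp1f1(a_g, a_g + b_g, -gamma * c))
    return log_int / (1.0 - gamma)


def chb_renyi_quad(gamma, a, b, c):
    """The same quantity by direct numerical integration, as a cross-check."""

    def kernel(x):
        return x ** (a - 1.0) * (1.0 - x) ** (b - 1.0) * np.exp(-c * x)

    norm, _ = quad(kernel, 0.0, 1.0)
    integral, _ = quad(lambda x: (kernel(x) / norm) ** gamma, 0.0, 1.0)
    return np.log(integral) / (1.0 - gamma)


gap = abs(chb_renyi(2.0, 2.0, 3.0, 1.5) - chb_renyi_quad(2.0, 2.0, 3.0, 1.5))
uniform_case = chb_renyi(0.5, 1.0, 1.0, 0.0)  # the uniform density has zero Renyi entropy
```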

For X having the confluent hypergeometric beta distribution,

and

Another popular entropy is the relative entropy [13]. If and are confluent hypergeometric beta random variables with parameters and , respectively, the relative entropy is defined by

Further measures of entropy are the geometric variances and the geometric covariance. Note that

and

Hence, the geometric variances are

and

Note also that

Hence, the geometric covariance is

9. Ordering

Let and be confluent hypergeometric beta random variables with parameters and , respectively. We say that is smaller than with respect to likelihood ratio ordering if is an increasing function of x. Note that

and

Thus,

Hence, is smaller than with respect to likelihood ratio ordering if and .
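The ordering condition can be checked numerically on a grid. The sketch below assumes the common convention that the first variable is smaller in the likelihood ratio order when $f_2(x)/f_1(x)$ is increasing, and assumes (1) is proportional to $x^{a-1}(1-x)^{b-1}\exp(-cx)$, so that the derivative of the log ratio is $(a_2-a_1)/x - (b_2-b_1)/(1-x) - (c_2-c_1)$. Both the direction of the ratio and the function names are assumptions rather than details confirmed by the text.

```python
import numpy as np


def log_ratio_slope(x, theta1, theta2):
    """d/dx log[f2(x) / f1(x)] for theta = (a, b, c) under the assumed form of (1)."""
    a1, b1, c1 = theta1
    a2, b2, c2 = theta2
    return (a2 - a1) / x - (b2 - b1) / (1.0 - x) - (c2 - c1)


def ratio_is_increasing(theta1, theta2, grid_size=999):
    """Grid check that f2 / f1 is nondecreasing on (0, 1)."""
    x = np.linspace(0.001, 0.999, grid_size)
    return bool(np.all(log_ratio_slope(x, theta1, theta2) >= 0.0))


ordered = ratio_is_increasing((2.0, 3.0, 1.0), (3.0, 2.0, 0.5))
reversed_pair = ratio_is_increasing((3.0, 2.0, 0.5), (2.0, 3.0, 1.0))
```

Under these assumptions every term of the slope is nonnegative when $a_2 \geq a_1$, $b_2 \leq b_1$, and $c_2 \leq c_1$, which is a sufficient condition for the ratio to be increasing. A grid check can only suggest, not prove, monotonicity.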

10. Maximum Likelihood Estimation

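Suppose $x_1, x_2, \ldots, x_n$ is a random sample from (1) with a, b, and c unknown. The joint loglikelihood function of a, b, and c is

$$\log L(a, b, c) = -n \log B(a, b) - n \log {}_1F_1(a; a+b; -c) + (a-1) \sum_{i=1}^{n} \log x_i + (b-1) \sum_{i=1}^{n} \log\left(1 - x_i\right) - c \sum_{i=1}^{n} x_i. \tag{12}$$

The maximum likelihood estimates of a, b, and c, say $\hat{a}$, $\hat{b}$, and $\hat{c}$, are the simultaneous solutions of $\partial \log L / \partial a = 0$, $\partial \log L / \partial b = 0$, and $\partial \log L / \partial c = 0$. In Sections 11 and 12, we obtained the maximum likelihood estimates by directly maximizing (12). A quasi-Newton algorithm was used for maximization; see the optim function in the R software [14] for details of the algorithm.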
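A minimal sketch of direct maximization is given below in Python, with SciPy's BFGS routine standing in for the R optim function used in the paper. It assumes (1) is proportional to $x^{a-1}(1-x)^{b-1}\exp(-cx)$. The log parameterization of a and b, the starting values, and the rejection sampler used to generate test data are choices made for this illustration only, and all function names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import betaln, hyp1f1


def chb_negloglik(theta, x):
    """Negative loglikelihood with theta = (log a, log b, c), which keeps a, b > 0."""
    a, b, c = np.exp(theta[0]), np.exp(theta[1]), theta[2]
    log_norm = betaln(a, b) + np.log(hyp1f1(a, a + b, -c))
    loglik = ((a - 1.0) * np.log(x).sum() + (b - 1.0) * np.log1p(-x).sum()
              - c * x.sum() - x.size * log_norm)
    return -loglik


def sample_chb(n, a, b, c, rng):
    """Rejection sampler, valid for c >= 0: propose Beta(a, b), accept with prob. exp(-c x)."""
    kept = []
    while len(kept) < n:
        proposal = rng.beta(a, b, size=n)
        accept = rng.random(n) < np.exp(-c * proposal)
        kept.extend(proposal[accept])
    return np.array(kept[:n])


rng = np.random.default_rng(0)
x = sample_chb(500, 2.0, 3.0, 1.0, rng)

start = np.zeros(3)  # a = b = 1, c = 0, i.e. the uniform density
fit = minimize(chb_negloglik, start, args=(x,), method="BFGS")
a_hat, b_hat, c_hat = np.exp(fit.x[0]), np.exp(fit.x[1]), fit.x[2]
```

Under the assumed form, (1) is an exponential family in $(a, b, c)$, so the loglikelihood is concave in the original parameters. The three parameters can nevertheless be strongly correlated in small samples, so convergence diagnostics such as `fit.success` deserve a look.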

Under certain regularity conditions, converges to a normal distribution with zero means and variance–covariance matrix

as $n \to \infty$. Using the expressions in Appendix A, we have

and

This result can be used for tests of hypotheses and interval estimation about . For example, approximate percent confidence intervals for a, b, and c are

and

respectively, where

and

where , , , , , and are the same as , , , , , and , respectively, with a, b, and c replaced by , , and , respectively.

11. Simulation Study

In this section, we assess the finite sample performance of the maximum likelihood estimators in Section 10 in terms of biases, mean squared errors, coverage probabilities, and coverage lengths. The following simulation study was used.

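- (a) Set initial values for a, b, and c;

- (b) Simulate a random sample of size n from (1) by the inversion method;

- (c) Compute the maximum likelihood estimates of a, b, and c as well as their standard errors for the sample in step (b);

- (d) Repeat steps (b) and (c) one thousand times, giving the estimates $\hat{a}_i$, $\hat{b}_i$, and $\hat{c}_i$ as well as their standard errors $\mathrm{se}(\hat{a}_i)$, $\mathrm{se}(\hat{b}_i)$, and $\mathrm{se}(\hat{c}_i)$ for $i = 1, 2, \ldots, 1000$;

- (e) Compute the biases of the estimators as $\frac{1}{1000} \sum_{i=1}^{1000} (\hat{a}_i - a)$, $\frac{1}{1000} \sum_{i=1}^{1000} (\hat{b}_i - b)$, and $\frac{1}{1000} \sum_{i=1}^{1000} (\hat{c}_i - c)$;

- (f) Compute the mean squared errors of the estimators as $\frac{1}{1000} \sum_{i=1}^{1000} (\hat{a}_i - a)^2$, $\frac{1}{1000} \sum_{i=1}^{1000} (\hat{b}_i - b)^2$, and $\frac{1}{1000} \sum_{i=1}^{1000} (\hat{c}_i - c)^2$;

- (g) Compute the 95 percent coverage probabilities of the estimators as $\frac{1}{1000} \sum_{i=1}^{1000} I\{\hat{a}_i - 1.96\,\mathrm{se}(\hat{a}_i) < a < \hat{a}_i + 1.96\,\mathrm{se}(\hat{a}_i)\}$, $\frac{1}{1000} \sum_{i=1}^{1000} I\{\hat{b}_i - 1.96\,\mathrm{se}(\hat{b}_i) < b < \hat{b}_i + 1.96\,\mathrm{se}(\hat{b}_i)\}$, and $\frac{1}{1000} \sum_{i=1}^{1000} I\{\hat{c}_i - 1.96\,\mathrm{se}(\hat{c}_i) < c < \hat{c}_i + 1.96\,\mathrm{se}(\hat{c}_i)\}$, where $I\{\cdot\}$ denotes the indicator function;

- (h) Compute the 95 percent coverage lengths of the estimators as $\frac{3.92}{1000} \sum_{i=1}^{1000} \mathrm{se}(\hat{a}_i)$, $\frac{3.92}{1000} \sum_{i=1}^{1000} \mathrm{se}(\hat{b}_i)$, and $\frac{3.92}{1000} \sum_{i=1}^{1000} \mathrm{se}(\hat{c}_i)$;

- (i) Repeat steps (b)–(h) for each sample size n considered.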
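Steps (e) to (h) reduce to a few array operations once the replicated estimates and standard errors are available. The sketch below is an illustration for a single parameter; it assumes Wald-type intervals with the normal quantile 1.96 and uses a hypothetical function name, `summarize_replicates`, with a toy input in place of actual simulation output.

```python
import numpy as np


def summarize_replicates(estimates, std_errors, truth, z=1.96):
    """Bias, mean squared error, coverage probability and coverage length for one parameter."""
    est = np.asarray(estimates, dtype=float)
    se = np.asarray(std_errors, dtype=float)
    lower, upper = est - z * se, est + z * se
    return {
        "bias": float(np.mean(est - truth)),
        "mse": float(np.mean((est - truth) ** 2)),
        "coverage_probability": float(np.mean((lower < truth) & (truth < upper))),
        "coverage_length": float(np.mean(upper - lower)),
    }


# Toy input: three replicates of an estimator whose true value is 2.
summary = summarize_replicates([1.0, 2.0, 3.0], [0.5, 0.5, 0.5], truth=2.0)
```

In a full study the function would be called once per parameter and per sample size, with the estimates and standard errors produced in steps (b) to (d).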

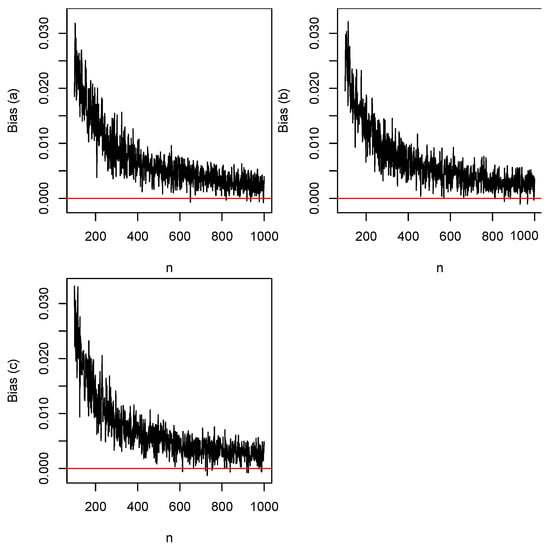

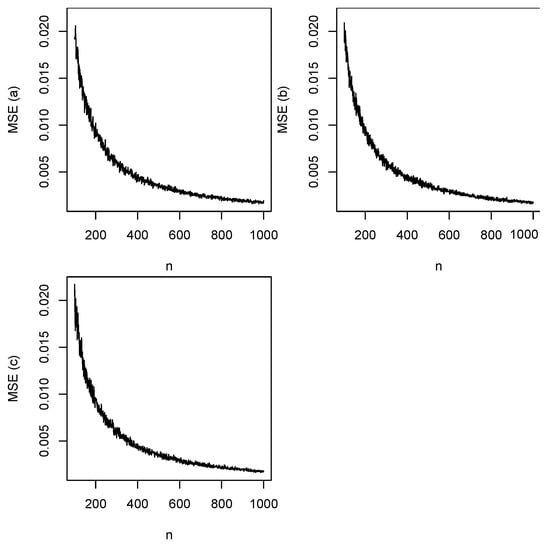

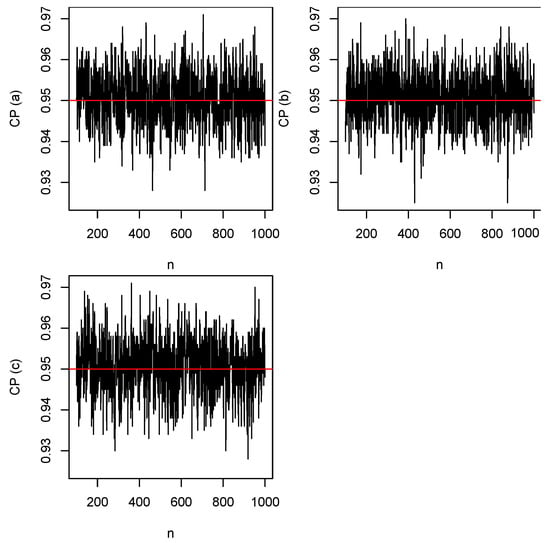

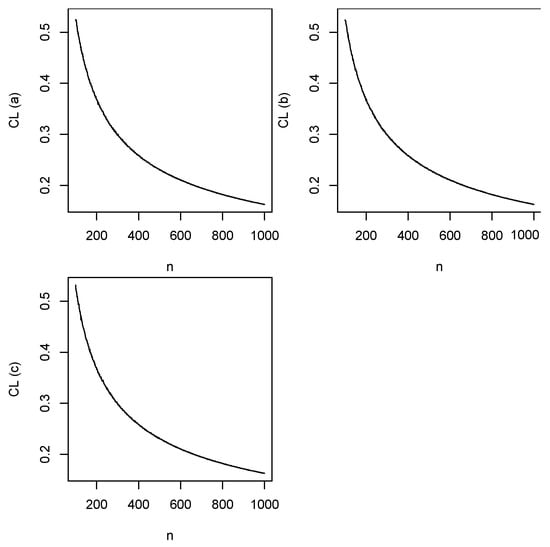

We took the initial values as , , and . Plots of the biases versus n are shown in Figure 2. The horizontal lines in this figure correspond to the biases being zero. Plots of the mean squared errors versus n are shown in Figure 3. Plots of the coverage probabilities versus n are shown in Figure 4. The horizontal lines in this figure correspond to the coverage probabilities being equal to 0.95. Plots of the coverage lengths versus n are shown in Figure 5.

Figure 2.

Biases of (top left), (top right), and (bottom left) versus n. The horizontal lines correspond to the biases being zero.

Figure 3.

Mean squared errors of (top left), (top right), and (bottom left) versus n.

Figure 4.

Coverage probabilities of (top left), (top right), and (bottom left) versus n. The horizontal lines correspond to the coverage probabilities being equal to 0.95.

Figure 5.

Coverage lengths of (top left), (top right), and (bottom left) versus n.

We can observe the following from the figures:

- (a) The biases are generally positive and decrease to zero with increasing n;

- (b) The mean squared errors generally decrease to zero with increasing n;

- (c) The coverage probabilities are around the nominal level even for n as small as 100;

- (d) The coverage lengths generally decrease to zero with increasing n.

The observations noted are for particular initial values of a, b, and c, but the same observations held for a wide range of other values of a, b, and c, including the ones corresponding to the different shapes in Figure 1. We chose not to present results for other choices in order to save space and to avoid repetitive discussion. In particular, the biases always decreased to zero with increasing n, the mean squared errors always decreased to zero with increasing n, the coverage probabilities were always around the nominal level even for n as small as 100, and the coverage lengths always decreased to zero with increasing n.

12. Real Data Applications

In this section, we compare the performance of the confluent hypergeometric beta distribution versus competing distributions using two real datasets. In Section 12.1, the confluent hypergeometric beta distribution is compared with the standard beta distribution. In Section 12.2, the confluent hypergeometric beta distribution is compared with [15]’s beta distribution specified by the probability density function

for , , , and . The method of maximum likelihood was used to fit all of the distributions.

12.1. United States Presidential Elections Data

The data used are the winner’s share of the electoral college vote for the United States presidential elections from 1824 to 2016. The actual data values are 0.3218, 0.6820, 0.7657, 0.5782, 0.7959, 0.6182, 0.5621, 0.8581, 0.5878, 0.5941, 0.9099, 0.7279, 0.8125, 0.5014, 0.5799, 0.5461, 0.5810, 0.6239, 0.6063, 0.6523, 0.7059, 0.6646, 0.8192, 0.5217, 0.7608, 0.7194, 0.8362, 0.8889, 0.9849, 0.8456, 0.8136, 0.5706, 0.8324, 0.8606, 0.5642, 0.9033, 0.5595, 0.9665, 0.5520, 0.9089, 0.9758, 0.7918, 0.6877, 0.7045, 0.5037, 0.5316, 0.6784, 0.6171, 0.5687.

The fit of the standard beta distribution gave and with , where the numbers within parentheses are standard errors. The fit of (1) gave , , and with . By the likelihood ratio test, (1) provides a significantly better fit than the standard beta distribution. In addition, the standard errors are smaller for the fit of (1).
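The likelihood ratio comparison can be sketched as follows, using the data listed above. The sketch assumes (1) is proportional to $x^{a-1}(1-x)^{b-1}\exp(-cx)$, fits both models with SciPy's BFGS routine rather than R's optim, and refers the statistic to a chi-square distribution with one degree of freedom because the beta model is the case c = 0. No output is quoted, since agreement with the estimates reported here is not guaranteed; the function name `negloglik` is illustrative.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import betaln, hyp1f1
from scipy.stats import chi2

x = np.array([
    0.3218, 0.6820, 0.7657, 0.5782, 0.7959, 0.6182, 0.5621, 0.8581, 0.5878, 0.5941,
    0.9099, 0.7279, 0.8125, 0.5014, 0.5799, 0.5461, 0.5810, 0.6239, 0.6063, 0.6523,
    0.7059, 0.6646, 0.8192, 0.5217, 0.7608, 0.7194, 0.8362, 0.8889, 0.9849, 0.8456,
    0.8136, 0.5706, 0.8324, 0.8606, 0.5642, 0.9033, 0.5595, 0.9665, 0.5520, 0.9089,
    0.9758, 0.7918, 0.6877, 0.7045, 0.5037, 0.5316, 0.6784, 0.6171, 0.5687,
])


def negloglik(theta, x):
    """theta = (log a, log b) gives the beta model; theta = (log a, log b, c) gives (1)."""
    a, b = np.exp(theta[0]), np.exp(theta[1])
    c = theta[2] if len(theta) == 3 else 0.0
    log_norm = betaln(a, b) + np.log(hyp1f1(a, a + b, -c))
    loglik = ((a - 1.0) * np.log(x).sum() + (b - 1.0) * np.log1p(-x).sum()
              - c * x.sum() - x.size * log_norm)
    return -loglik


# Null model: standard beta (c fixed at zero).
fit_beta = minimize(negloglik, np.zeros(2), args=(x,), method="BFGS")

# Alternative model: start from the beta fit so the loglikelihood cannot get worse.
start = np.append(fit_beta.x, 0.0)
fit_chb = minimize(negloglik, start, args=(x,), method="BFGS")

lr_stat = 2.0 * (fit_beta.fun - fit_chb.fun)
p_value = chi2.sf(lr_stat, df=1)
```

Starting the three-parameter fit at the two-parameter optimum is a design choice that keeps the statistic nonnegative up to rounding, which is what the nesting of the two models requires.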

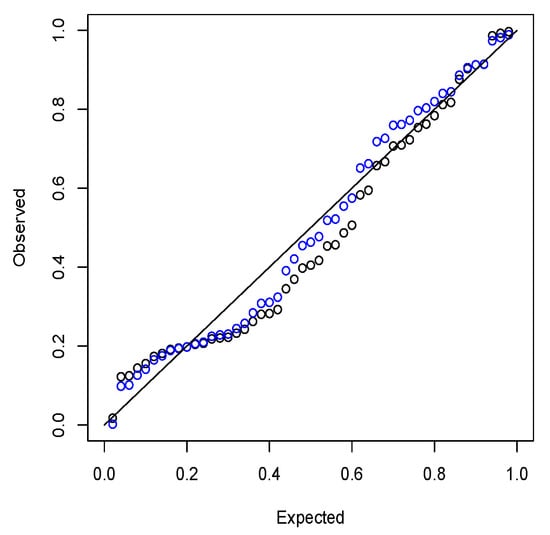

The better fit of (1) is confirmed by the probability plots shown in Figure 6. The plotted points for (1) are closer to the diagonal line. The sum of the absolute deviations between the expected and observed probabilities for the fit of the standard beta distribution is 2.455. The same for the fit of (1) is 1.857.

Figure 6.

Probability plots of the fits of the standard beta (black) and confluent hypergeometric beta (blue) distributions.

12.2. Brexit Data

The data used are the proportions voting “Remain” in 126 Brexit (EU referendum) polls conducted from January 2016 to the referendum date in June 2016. The actual data values are 0.52, 0.55, 0.49, 0.44, 0.54, 0.48, 0.41, 0.45, 0.42, 0.53, 0.45, 0.44, 0.44, 0.42, 0.42, 0.37, 0.46, 0.43, 0.39, 0.45, 0.44, 0.46, 0.40, 0.48, 0.42, 0.44, 0.45, 0.43, 0.43, 0.48, 0.41, 0.43, 0.40, 0.41, 0.42, 0.44, 0.51, 0.44, 0.44, 0.41, 0.41, 0.45, 0.55, 0.44, 0.44, 0.52, 0.55, 0.47, 0.43, 0.55, 0.38, 0.36, 0.38, 0.44, 0.42, 0.44, 0.43, 0.42, 0.49, 0.39, 0.41, 0.45, 0.43, 0.44, 0.51, 0.51, 0.49, 0.48, 0.43, 0.53, 0.38, 0.40, 0.39, 0.35, 0.45, 0.42, 0.40, 0.39, 0.44, 0.51, 0.39, 0.35, 0.41, 0.51, 0.45, 0.49, 0.40, 0.48, 0.41, 0.46, 0.47, 0.43, 0.45, 0.48, 0.49, 0.40, 0.40, 0.40, 0.39, 0.41, 0.39, 0.48, 0.48, 0.37, 0.38, 0.42, 0.51, 0.45, 0.40, 0.54, 0.36, 0.43, 0.49, 0.41, 0.36, 0.42, 0.38, 0.55, 0.44, 0.54, 0.41, 0.52, 0.42, 0.38, 0.42, 0.44.

The fit of [15]’s beta distribution gave , , and with . The fit of (1) gave , , and with . Distributions (1) and (13) are not nested, but they have the same number of parameters; hence, it is sufficient to compare the loglikelihood values. Clearly, (1) gives the larger value. In addition, the standard errors are smaller for the fit of (1).

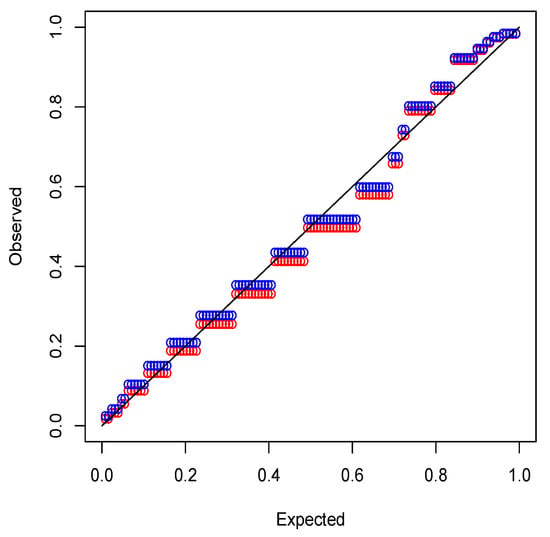

The better fit of (1) is confirmed by the probability plots shown in Figure 7. The plotted points for (1) are closer to the diagonal line except in the upper tail.

Figure 7.

Probability plots of the fits of [15]’s beta (red) and confluent hypergeometric beta (blue) distributions.

13. Conclusions

In this paper, we revisited the confluent hypergeometric beta distribution introduced by [1] and derived a range of its mathematical properties. Some of the derived properties are known, but most are new. In particular, the shape properties of the probability density function; the expressions for the cumulative distribution function, the hazard rate and reversed hazard rate functions, the moment generating and characteristic functions, the harmonic means, the variances of inverse confluent hypergeometric beta random variables, the conditional moments, the geometric mean, Shannon entropy, Rényi entropy, relative entropy, geometric variances and geometric covariance; the stochastic ordering properties; and the procedures for maximum likelihood estimation are all new. We also assessed the finite sample performance of the maximum likelihood estimators and illustrated two real data applications.

Future work will consider methods of estimation other than the method of maximum likelihood, including Bayesian method, method of moments, generalized method of moments, method of probability weighted moments, method of least squares, method of weighted least squares, method of maximum entropy, method of pseudo maximum likelihood, method of minimum chi-square, method of minimum norm, method of min–max, method of M-estimation, method of quantiles, method of ranked set sampling, and bootstrap method. Another aim is to study mathematical properties of composite beta distributions of the kind due to [16].

Author Contributions

Methodology, S.N.; Software, S.N. and M.K.; Formal analysis, M.K.; Writing—original draft, S.N.; Supervision, S.N. All authors have read and agreed to the published version of the manuscript.

Funding

This paper has received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are given as part of the manuscript. Code can be obtained from the corresponding author.

Acknowledgments

The authors would like to thank the Editor and the four referees for careful reading and comments which greatly improved the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Gordy, M.B. Computationally convenient distributional assumptions for common-value auctions. Comput. Econ. 1998, 12, 61–78.

2. Nadarajah, S. Exponentiated beta distributions. Comput. Math. Appl. 2005, 49, 1029–1035.

3. Li, Y.; Clyde, M.A. Mixtures of g-priors in generalized linear models. J. Am. Stat. Assoc. 2018, 113, 1828–1845.

4. Sarabia, M.J.; Shahtahmassebi, G. Bayesian estimation of incomplete data using conditionally specified priors. Commun. Stat. Simul. Comput. 2017, 46, 3419–3435.

5. Alshkaki, R.S.A. A six parameters beta distribution with application for modeling waiting time of Muslim early morning prayer. Ann. Data Sci. 2021, 8, 57–90.

6. Gradshteyn, I.S.; Ryzhik, I.M. Table of Integrals, Series, and Products, 6th ed.; Academic Press: San Diego, CA, USA, 2000.

7. Prudnikov, A.; Brychkov, Y.A.; Marichev, I.O. Integrals and Series; Gordon and Breach Science Publishers: Amsterdam, The Netherlands, 1986; Volumes 1–3.

8. Feng, C.; Wang, H.; Tu, X.M. Geometric mean of nonnegative random variable. Commun. Stat. Theory Methods 2013, 42, 2714–2717.

9. Vogel, R.M. The geometric mean? Commun. Stat. Theory Methods 2022, 51, 82–94.

10. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

11. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 623–656.

12. Rényi, A. On measures of information and entropy. In Proceedings of the 4th Berkeley Symposium on Mathematics, Statistics and Probability; Neyman, J., Ed.; University of California Press: Berkeley, CA, USA, 1960; Volume 1, pp. 547–561.

13. Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86.

14. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2023.

15. Libby, L.D.; Novick, R.M. Multivariate generalized beta-distributions with applications to utility assessment. J. Educ. Stat. 1982, 7, 271–294.

16. Zaevski, T.; Kyurkchiev, N. On some composite Kies families: Distributional properties and saturation in Hausdorff sense. Mod. Stoch. Theory Appl. 2023, 1–26.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).