Abstract

Linear mixed-effects models are widely used to analyze clustered, hierarchical, and longitudinal data. Model selection in linear mixed models is more challenging than in linear models because the parameter vector of a linear mixed model includes both fixed effects and variance component parameters. When selecting the variance components of the random effects, the variances must be non-negative, so the parameters may lie on the boundary of the parameter space. Therefore, classical model selection methods cannot be applied directly. In this article, we propose a modified BIC for model selection in linear mixed-effects models that handles the case in which the variance components lie on the boundary of the parameter space. In simulations, the modified BIC performed better than the regular BIC in most cases for linear mixed models. The modified BIC was also applied to a real dataset to choose the most-appropriate model.

Keywords: linear mixed models; BIC; model selection; chi-bar-squared distribution; complex data; statistical modeling

MSC: 62J05

1. Introduction

With the development of technology in recent times, larger and more complex datasets have become available. Statisticians and researchers are also developing different statistical models to extract valuable information from data to aid decision-making processes. Classical multiple linear regression models can be used to model the relationship between variables. However, one of the assumptions of linear regression is that the errors are independent. Therefore, when the observations are correlated, as with longitudinal data, clustered data, and hierarchical data, linear regression models are no longer appropriate. A more powerful class of models for correlated data is the class of mixed-effects models, which have been used in many fields of application. Recently, Sheng et al. [1] compared linear models with linear mixed-effects models and showed that estimators from the latter are more advantageous in terms of both efficiency and unbiasedness. This shows the importance of applying linear mixed-effects models in longitudinal settings.

The correlation between observations may appear when data are collected hierarchically; for example, students may be sampled from the same school, and schools may be sampled within the same district. Consequently, students in the same school have the same teachers and school environment, and therefore, the observations are not independent of one another. Observations may be taken from members of the same family, where each family is considered a group or a cluster. As the observations are dependent, we can consider this clustered data. Another type of correlated data pertains to observations from the same subjects collected over time, such as repeated blood pressure measurements over a patient’s treatment period—an example of longitudinal data. Patients (or subjects) may vary in the number and date of the collected measurements. Since observations are recorded from the same individual over time, it is reasonable to assume that subject-specific correlations exist in the trend of the response variable over time. We wish to model the pattern of the response variable over time within subjects and the variation in the time trends between subjects. Linear mixed-effects models are used to model correlated data, accounting for the variability within and between clusters in clustered data or the variability within and between repeated measurements in longitudinal data.

Model selection is an important procedure in statistical analysis, allowing the most-appropriate model to be chosen from a set of potential candidate models. A desired model is parsimonious and fits the data adequately, which improves two important aspects: interpretability and predictability. In linear mixed models, identifying significant random effects is a challenging step in model selection, as it involves testing whether or not the variance components of random effects are equal to zero. For example, suppose we want to test $H_0: \sigma_b^2 = 0$ against $H_1: \sigma_b^2 > 0$, where $\sigma_b^2$ denotes the variance of a single random effect and the parameter space of $\sigma_b^2$ is $[0, \infty)$. Under the null hypothesis, the tested value of the variance component parameter lies on the boundary of the parameter space. This violates one of the classical regularity conditions, namely that the true value of the parameter must be an interior point of the parameter space. Therefore, classical hypothesis tests such as the likelihood ratio, score, and Wald tests are no longer appropriate. We refer to this violation as the boundary issue (see Appendix A.3 for a graphical example). When the boundary issue occurs, the asymptotic null distribution of the likelihood ratio test statistic is not a chi-squared distribution. Chernoff [2], Self and Liang [3], Stram and Lee [4], Azadbakhsh et al. [5], and Baey et al. [6] pointed out that, under some conditions on the parameter space and the likelihood functions, the asymptotic null distribution of the likelihood ratio test statistic is a mixture of chi-squared distributions, also called a chi-bar-squared distribution. For instance, the asymptotic null distribution of the likelihood ratio test statistic for testing $H_0: \sigma_b^2 = 0$ against $H_1: \sigma_b^2 > 0$ is $0.5\chi^2_0 + 0.5\chi^2_1$, not $\chi^2_1$ [4]. The distribution of a chi-bar-squared random variable depends on its mixing weights (Appendix A.2). Dykstra [7] discussed conditions on the weight distribution to ensure asymptotic normality for chi-bar-squared distributions. Shapiro [8] provided expressions to calculate the exact weights used in the mixture of chi-squared distributions for some special cases. In general, however, determining the exact weights is challenging when the number of variance components tested under the null hypothesis is large, as the weights are not available in a tractable form (Baey et al. [6]).
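As a minimal sketch of why the boundary issue matters in practice, the Python snippet below compares the p-value obtained from the naive chi-squared reference distribution with one degree of freedom against the p-value obtained from the equal-weight mixture of chi-squared distributions with zero and one degrees of freedom reported by Stram and Lee [4] for a single variance component. The function names and the value of the test statistic are hypothetical and serve only as an illustration.

```python
import math


def chi2_1_sf(x):
    """Upper tail P(chi2_1 > x) for x >= 0, using P(Z^2 > x) = erfc(sqrt(x / 2))."""
    return math.erfc(math.sqrt(x / 2.0))


def mixture_pvalue(lrt):
    """p-value under 0.5 * chi2_0 + 0.5 * chi2_1 (chi2_0 is a point mass at zero)."""
    if lrt <= 0.0:
        return 1.0
    return 0.5 * chi2_1_sf(lrt)


lrt = 3.0  # hypothetical likelihood ratio test statistic
print("naive chi2_1 p-value:", chi2_1_sf(lrt))
print("mixture p-value:     ", mixture_pvalue(lrt))
```

For any positive statistic, the mixture p-value is half of the naive one, so ignoring the boundary makes the test for a variance component conservative.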

There are a number of information criteria, such as the Akaike information criterion (AIC) and the Bayesian information criterion (BIC), that were developed for model selection with linear mixed models by Vaida and Blanchard [9], Pauler [10], Jones [11], and Delattre and Poursat [12]. Other methods for identifying important fixed effects and random effects variance components, including shrinkage and permutation methods, were considered in Ibrahim et al. [13], Bondell et al. [14], Peng and Lu [15], and Drikvandi et al. [16].

The BIC is susceptible to the boundary issue. If we use the regular BIC in linear mixed models, that is, if we treat the problem as if there were no constraints on the model’s parameter vector, then the penalty term of the regular BIC includes all the components of the parameter vector. Therefore, the regular BIC overestimates the number of degrees of freedom of the linear mixed model (which we refer to as model complexity in this article) and does not take into account the fact that variance components are constrained and bounded below by 0. Consequently, the regular BIC tends to choose under-fitted linear mixed models. Several versions of the modified BIC have been proposed for model selection in linear mixed models [10,11,17]. However, to our knowledge, none of the current BICs can directly deal with the boundary issue.

The main objective of this article is to introduce a modified BIC for model selection when the true values of the variance component parameters lie on the boundary of the parameter space, allowing the most-appropriate model to be chosen from a set of candidate linear mixed models. The general idea of the proposed method is as follows. From the previous literature, we know that the asymptotic null distribution of the likelihood ratio test statistic for testing the nullity of several variances is a chi-bar-squared distribution (Baey et al. [6]). Based on this theoretical result, we take the expectation of the chi-bar-squared distribution and include it in the complexity of the model. When random effects are correlated, calculating the weights of the chi-bar-squared distribution is not straightforward, as the weights depend on a cone that contains the set of positive definite matrices. Describing the set of positive definite matrices explicitly using constraints on the components of the random effects covariance matrix is almost impossible. Thus, calculating the weights of the chi-bar-squared distribution for this case is not an easy task and has not been addressed in the literature. Our solution is to bound this cone by a larger cone, which has a much simpler structure and allows us to calculate the weights of the chi-bar-squared distribution.

The rest of the paper is arranged as follows. In Section 2, we develop the methodology. The simulation and application are provided in Section 3 and Section 4, respectively. We conclude with a brief discussion in Section 5.

2. Methodology

2.1. Model Setup and Definitions

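Consider the linear mixed model introduced in Laird and Ware [18]:

$$y_i = X_i \beta + Z_i b_i + \varepsilon_i, \tag{1}$$

for $i = 1, \dots, N$, where $y_i$ denotes the $n_i$-dimensional vector of response measurements for cluster i with $\sum_{i=1}^{N} n_i = n$; $\beta$ is a $p \times 1$ fixed effect parameter vector; $X_i$ is an $n_i \times p$ matrix of covariates for the fixed effects; $Z_i$ is an $n_i \times q$ matrix of covariates for the random effects; $b_i$ denotes the $q \times 1$ random effects vector of the $i$-th cluster; $b_i$ is assumed to follow a multivariate normal distribution $N_q(0, D)$, where $D$ is a $q \times q$ covariance matrix. $b_1, \dots, b_N$ are assumed to be independent. Fixed effects are used to model the population mean, while random effects are used to model between-cluster variation in the response. The vector of random errors $\varepsilon_i$ is assumed to follow a multivariate normal distribution, $N_{n_i}(0, \sigma^2 I_{n_i})$, where $I_{n_i}$ denotes the $n_i \times n_i$ identity matrix. It is assumed that $b_i$ and $\varepsilon_j$ are pairwise independent for $i, j = 1, \dots, N$. The marginal distribution of $y_i$ is $N_{n_i}(X_i \beta, V_i)$, where $V_i = Z_i D Z_i^\top + \sigma^2 I_{n_i}$.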
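To make the marginal covariance structure concrete, the following Python sketch builds the covariance matrix of the response vector of one cluster. It assumes the usual Laird and Ware form, in which the random effects contribute the product of the random effects design matrix, the random effects covariance matrix D, and the transposed design matrix, and the errors contribute a common variance on the diagonal. The function name `marginal_cov` and all numeric values are hypothetical.

```python
def marginal_cov(Z, D, sigma2):
    """Return V = Z D Z^T + sigma2 * I for one cluster (matrices as nested lists)."""
    n, q = len(Z), len(D)
    # ZD = Z @ D, an n x q matrix
    ZD = [[sum(Z[r][k] * D[k][c] for k in range(q)) for c in range(q)]
          for r in range(n)]
    # V = ZD @ Z^T, an n x n matrix
    V = [[sum(ZD[r][k] * Z[c][k] for k in range(q)) for c in range(n)]
         for r in range(n)]
    # Add the error variance on the diagonal
    for r in range(n):
        V[r][r] += sigma2
    return V


# Hypothetical cluster with three observations, a random intercept, and a random slope
Z = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
D = [[1.0, 0.0], [0.0, 0.25]]  # independent random effects (diagonal D)
V = marginal_cov(Z, D, sigma2=0.5)
for row in V:
    print(row)
```

The off-diagonal entries of the result are non-zero, which is how shared random effects induce correlation among observations in the same cluster.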

Let denote the vector of distinct variance and covariance components in matrix , and let . The vector of parameters for this model is . We assumed that the response vectors from N clusters are independent random observations. Given a clustered dataset, we wish to choose a linear mixed model that fits the data well and is also a parsimonious model.

Definition 1

(Definition of an approximating cone [2]). Let and . The set is said to be approximated by a cone A at if , for all and , for all , where , which is the distance between point and its projection onto any space . In this case, A is called the approximating cone of at and is said to be Chernoff-regular at .

Definition 2

(Definition of a tangent cone [19]). A tangent cone of a set at a point in is the set of limits of sequences , where are positive real numbers and and in converge to .

Definition 3

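(Definition of chi-bar-squared distribution [19]). Let $\mathcal{C} \subseteq \mathbb{R}^m$ be a closed convex cone, and let $Z \sim N_m(0, V)$, where $V$ is a positive definite matrix. $\bar{\chi}^2(V, \mathcal{C})$ is a random variable, which has the same distribution as $Z^\top V^{-1} Z - \min_{\theta \in \mathcal{C}} (Z - \theta)^\top V^{-1} (Z - \theta)$. Therefore, we write

$$\bar{\chi}^2(V, \mathcal{C}) = \sum_{i=0}^{m} w_i(m, V, \mathcal{C}) \, \chi^2_i,$$

where $w_i(m, V, \mathcal{C})$, $i = 0, \dots, m$, are some non-negative numbers and $\sum_{i=0}^{m} w_i(m, V, \mathcal{C}) = 1$.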
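The mixture representation can be illustrated with a short Python sketch that draws from a chi-bar-squared distribution once its weights are known: a number of degrees of freedom is first selected with probability equal to its weight, and a chi-squared variate with that many degrees of freedom is then generated. The weights used here are hypothetical placeholders, and the function names are not taken from any package.

```python
import random


def chibar_mean(weights):
    """Mean of the mixture: sum over i of i * w_i, since E[chi2_i] = i."""
    return sum(i * w for i, w in enumerate(weights))


def chibar_sample(weights, rng):
    """Draw one value: pick the degrees of freedom i with probability w_i,
    then sum i squared standard normal variates (zero when i = 0)."""
    df = rng.choices(range(len(weights)), weights=weights)[0]
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(df))


weights = [0.25, 0.5, 0.25]  # hypothetical weights for 0, 1, and 2 degrees of freedom
rng = random.Random(2023)
draws = [chibar_sample(weights, rng) for _ in range(20000)]
print("theoretical mean:", chibar_mean(weights))
print("simulated mean:  ", sum(draws) / len(draws))
```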

2.2. Proposed Methods

In this section, we introduce a modified BIC for linear mixed model selection. In linear mixed models, model selection includes the selection of the regression parameters (fixed effects) and the variance components of random effects. We first derive a modified BIC to choose random effects assuming that the random effects are independent. Then, we propose a modified BIC to choose random effects when the random effects are assumed to be correlated. Lastly, we propose a modified BIC to choose both fixed effects and random effects simultaneously, considering two cases: the covariance matrix of the random effects is diagonal, and the covariance matrix is a full matrix.

Let be a countable set of possible candidate linear mixed models. Let denote the vector of parameters of model , and let be the complexity of model . Assume that can be calculated and if . Let be the model that generates the data (called the true model) with parameter and the true value of is . Any model that is more complex than the true model is called an over-fitting model, that is or and . Let be the set of all over-fitting models. An under-fitting model is a model such that ’s components are not a subset of its parameter vector’s components, that is . Let be the set of all under-fitting models. Assume that model has parameter vector , where is the vector of fixed effects parameters, which includes the population regression coefficients; contains the distinct variance and covariance elements of matrix ; is the parameter for the variance of the random error vector . For a general covariance matrix, model is uniquely defined by its non-zero parameters in and non-zero variance components on the diagonal of matrix . If , then all elements on row i and column i of this matrix are set to 0.

2.2.1. Modified BIC for Choosing Random Effects Assuming That the Random Effects Are Independent

In this section, we considered the case where the covariance matrix of random effects, D, is a diagonal matrix. Here, is a vector of variances on the diagonal of matrix D.

Lemma 1.

When is a diagonal matrix and Assumptions (C1)–(C4) in Appendix A.1 hold, assume that we wish to test the model (with ) against model (with ) and that both models have the same fixed effects part. Then, the null limiting distribution of the likelihood ratio test statistic is

where ; , are some non-negative numbers and ; matrix is some positive definite matrix such that and , and m is the dimension of . denotes the true value of the parameter θ; denotes the marginal log-likelihood function of the linear mixed model (1).

Proof.

We applied the results from Baey et al. [6] on testing the nullity of r variance components of the diagonal covariance matrix, , using the likelihood ratio test statistic, assuming that the variances that are not being tested are strictly positive. Without loss of generality, assume that matrix can be written as , where and . The parameter with . Consider the hypothesis test, with positive definite matrix versus : is positive definite.

The parameter spaces under and their corresponding tangent cones are

In this case, is a linear subspace in . Therefore, . is contained in a linear subspace of dimension r. Thus, for . Assume that the null hypothesis holds and ,. Baey et al. [6] pointed out that the asymptotic null distribution of the log-likelihood ratio test statistic is a mixture of chi-squared distributions with the degree of freedom ranging from 0 to r, denoted by

where is a chi-squared distribution with i degrees of freedom and is some positive definite matrix such that and .

We applied this result to our case with ; ; and . Thus, based on (3), the null limiting distribution of the likelihood ratio test statistic is

where ; , are some non-negative numbers and ; matrix is some positive definite matrix such that and , and m is the dimension of . □

We now take the expectation of the chi-bar-squared distribution in Equation (2) and include it in the complexity of model .

We propose the following modified BIC:

where is the maximum likelihood estimator of in model ; and for ; for ; for . The first term, , measures the goodness-of-fit for model , and the second term, , is the penalty for the model complexity, which makes sure that the model selected is parsimonious.

The rationale of choosing the complexity for model when is as follows: p is the number of fixed effects parameters; 1 is for the parameter and 0.5 for the assumed random effect in the model (such as random intercept), and the rest of is the expectation of the chi-bar-squared distribution in Equation (2). When , is the complexity of model , which is the model with fixed effects and only one random effect (such as random intercept). When , is the complexity of model , which is the model with fixed effects and no random effects. In this case, is exactly the regular BIC for multiple regression models. For example, is a model with three independent random effects. , and .

We want to test ; , , vs. ; , . In this example, . Therefore, the asymptotic null distribution of the log-likelihood ratio test statistic is

where .

Cone can be written as , where is a matrix and is an identity matrix of order two. The chi-bar-squared weights are under Proposition of [19]. The matrix, , is approximated by , where is the maximum likelihood estimator of in model and is the Fisher information matrix. The chi-bar-squared weights, , can be calculated using the function “con_weights_boot” in the R package “restriktor” of Vanbrabant et al. [20]. In this example, we assumed that . Then, we obtained the weights , and . Thus,

We note that, in theory, must be . However, in our simulation, . The expectation of this chi-bar-squared distribution is . The complexity of this model is .
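The complexity calculation described above can be sketched in Python. The sketch assumes the special case in which the matrix of the chi-bar-squared distribution is the identity and the cone is the non-negative orthant of dimension r, for which the weights are known to be binomial, $w_i = \binom{r}{i} 2^{-r}$; for a general matrix, the weights have to be obtained numerically, for example by simulation. The helper names and the number of fixed effects are hypothetical, and the complexity follows the verbal description given earlier: the number of fixed effects, plus 1 for the error variance, plus 0.5 for the assumed random effect, plus the expectation of the chi-bar-squared distribution.

```python
from math import comb


def orthant_weights(r):
    """Chi-bar-squared weights for the non-negative orthant in R^r
    when the associated matrix is the identity (assumed special case)."""
    return [comb(r, i) / 2 ** r for i in range(r + 1)]


def chibar_expectation(weights):
    """Expectation of the mixture, using E[chi2_i] = i."""
    return sum(i * w for i, w in enumerate(weights))


def complexity_independent(p, weights):
    """p fixed effects + 1 (error variance) + 0.5 (assumed random effect)
    + expectation of the chi-bar-squared distribution."""
    return p + 1 + 0.5 + chibar_expectation(weights)


p = 2  # hypothetical number of fixed effects
weights = orthant_weights(2)  # two variance components tested against zero
print("weights:    ", weights)
print("expectation:", chibar_expectation(weights))
print("complexity: ", complexity_independent(p, weights))
```

Under this assumption, each tested variance component adds half a degree of freedom on average, compared with a full degree of freedom in the regular BIC penalty.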

Theorem 1.

Assume that Assumptions (C1)–(C4) in Appendix A.1 are satisfied, then

Proof.

We used instead of for the convenience of exposition.

Case 1: For any under-fitting model, , we want to prove that . We have that

We also have that and because and (as shown in the proof of Theorem 2 in Baey et al. [6]) and function is continuous with respect to . Furthermore, under Assumption , and . Thus,

and therefore, .

The last term can be evaluated as

This is because is the Kullback–Leibler distance between and ; and is positive and finite by Assumption .

Assume that the cluster sample sizes, , are uniformly bounded (Assumption ), then dominates as . Thus, , and for all ,

Case 2: For any over-fitting model, , we also prove that . Without loss of generality, assume that and , where has the same dimension as and has the same dimension as . Let r be the dimension of , and all elements of are 0. We have that

Then, is the likelihood ratio test statistic of the following hypothesis test:

According to Baey et al. [6], under , the asymptotic distribution of is

Therefore, , according to Theorem 2.4 of [21]. We also have that

where is the maximum log-likelihood of the simplest model, that is the model with only the random intercept. Therefore,

On the other hand, asymptotically follows a mixture of the chi-squared distributions. Therefore, must be positive, and hence, . Thus, as and

for . This completes the proof of Theorem 1. □

Given a set of candidate models, we calculate the proposed BIC value for each model and select the model that minimizes it.
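The selection step can be sketched as follows. The snippet assumes that the criterion has the usual BIC shape, that is, minus twice the maximized log-likelihood plus the model complexity multiplied by the logarithm of a sample size; which sample size enters the penalty is left as an input. The candidate names, log-likelihood values, and complexities are hypothetical.

```python
import math


def modified_bic(loglik, complexity, sample_size):
    """Goodness-of-fit term plus complexity penalty (assumed BIC-type form)."""
    return -2.0 * loglik + complexity * math.log(sample_size)


def select_model(candidates, sample_size):
    """Return the name of the candidate with the smallest criterion value.
    `candidates` maps a model name to (maximized log-likelihood, complexity)."""
    return min(
        candidates,
        key=lambda name: modified_bic(*candidates[name], sample_size),
    )


# Hypothetical candidates: (maximized log-likelihood, complexity)
candidates = {
    "M1": (-520.0, 4.5),
    "M2": (-519.5, 5.0),
    "M3": (-530.0, 3.5),
}
print(select_model(candidates, sample_size=100))
```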

2.2.2. Modified BIC for Choosing Random Effects Assuming That the Random Effects Are Correlated

In this section, we introduce a modified BIC for selecting linear mixed models with correlated random effects. We still focused on only selecting random effects. In the parameter vector , is the parameter of interest; and are considered as nuisance parameters. We now considered that the linear mixed model (1) with the covariance matrix for random effects is a full matrix. Therefore, vector contains all distinct variances and covariances of matrix .

Lemma 2.

When is a full matrix and under Assumptions (C1)–(C4) in Appendix A.1, assume that we test the model against model , where contains only one random effect, which is a random intercept, contains k random effects including a random intercept, and both models have the same fixed effects part, then the null limiting distribution of the likelihood ratio test is

where ; m is the dimension of θ; , are some non-negative numbers; ; is a chi-squared distribution with i degrees of freedom; is a positive definite matrix such that and . denotes the set of symmetric positive semi-definite matrices of size .

Proof.

When is a full matrix, the number of distinct variances and covariances is . We also applied the results from Baey et al. [6] on testing the nullity of r variance components of the covariance matrix, , when this matrix is a full matrix. Assume that matrix is written as where the size of is and the size of is . Consider the hypothesis test: versus : is a positive definite matrix.

The parameter space under the null hypothesis is

where is the set of symmetric positive semi-definite matrices of size .

Assume that the null hypothesis holds and , then applying the results of Baey et al. [6], we obtain the tangent cone to at :

where is the set of symmetric matrices of size . Furthermore,

According to the results of Baey et al. [6], the tangent cone to at is

where is the set of symmetric positive semi-definite matrices of size . Since is a linear subspace in , the asymptotic null distribution of the likelihood ratio test statistic for the above hypothesis test is , where .

When is a full matrix, under the null hypothesis, Baey et al. [6] pointed out that the asymptotic null distribution of the log-likelihood test statistic is , which is a mixture of chi-squared distributions with the degree of freedom ranging from to .

where , are some non-negative numbers and ; is a chi-squared distribution with i degrees of freedom; is a positive definite matrix such that and .

Assume that model has parameter vector , where represents the parameter vector of the fixed effects; contains distinct variances and covariances of the random effect covariance matrix , and is the variance of the random error term . Let p be the number of parameters of and be the number of parameters of . Assume that we tested the model against model , where contains only one random effect, which is a random intercept, and contains k random effects including a random intercept. Assume that the two models contain the same fixed effects part. In this case, , , , and . Thus, and . Therefore, based on (8), the asymptotic null distribution of the log-likelihood ratio test statistic is

where ; , are some non-negative numbers and , is a chi-squared distribution with i degrees of freedom; is a positive definite matrix such that and . □

We note that it is too complex to define using equality and inequality constraints on the variance and covariance components of matrix . Since , in our work, we approximated by . Thus, is approximated by

where , are some non-negative numbers and ; is a chi-squared distribution with i degrees of freedom, and is a positive definite matrix such that and . This is because contains a linear space of dimension and is included in a linear space of dimension . Therefore, the weights are zero for and for [8]. From (10), let .

We propose the following modified BIC:

where is the maximum likelihood estimator of in model ; and for ; for ; and for .

2.2.3. Modified BIC for Selecting Both Fixed Effects and Random Effects in Linear Mixed Models

In this section, we propose a modified BIC to select both fixed effects and random effects for linear mixed models. We also divided the situations into two cases: when the random effects are independent, that is the covariance matrix, , of random effects is diagonal, and when the random effects are correlated, that is the covariance matrix, , is a full matrix.

Scenario 1: modified BIC for selecting both fixed effects and random effects when random effects are independent.

In the model selection, we assumed that the smallest model (called model ) contains only the intercept term for fixed effects and a random intercept for random effects. Model contains fixed effects, and the covariance matrix, , of random effects is of order . If random effects are assumed to be independent, then the number of random effects variance components is .

Lemma 3.

When is a diagonal matrix, under Assumptions (C1)–(C4) in Appendix A.1, assume that we tested model against model , then the asymptotic null distribution of the log-likelihood test statistic is

where ; , are some non-negative numbers and ; m is the dimension of θ.

Proof.

When is a diagonal matrix, . The fixed effects parameter . Without loss of generality, assume that we wanted to test the nullity of the s components of , which are , and the nullity of the last r variance components of matrix , which are .

Consider the hypothesis test, versus , assuming that the variances that are not tested () are positive. Let be the true value of the parameter vector. Assume that the null hypothesis holds and , then the parameter spaces under the null and alternative hypotheses and their tangent cones at are

Since is also a linear subspace in , Baey et al. [6] pointed out that the asymptotic null distribution of is a mixture of chi-squared distributions with the degree of freedom ranging from s to .

where ; is a chi-squared distribution with i degrees of freedom and is some positive definite matrix such that and .

When we test model against model , we are testing the nullity of the regression coefficients and random effects variance components. Therefore, based on Equation (13), the asymptotic null distribution of the log-likelihood test statistic is

where ; , are some non-negative numbers and . □

Let be the expectation of , then . We propose a modified BIC for this case as

where is the maximum likelihood estimator of in model ; and for ; for ; for . Here, in the formula for , we added to to account for the degrees of freedom of a fixed effect intercept (1 degree of freedom), a random intercept (0.5 degree of freedom), and the variance component of the error term, (1 degree of freedom).

Scenario 2: modified BIC for selecting both fixed effects and random effects when random effects are correlated.

When random effects in the linear mixed model (1) are correlated, their covariance matrix, , is a full matrix. Matrix can be written as , where the size of is and the size of is . The number of distinct variance and covariance components in is .

Consider the hypothesis test, versus . That is, is a positive definite matrix. Let be the true value of the parameter vector. Assume that the null hypothesis holds and , then the parameter spaces under the null hypothesis and its tangent cone at are:

where is the set of symmetric positive semi-definite matrices of size . Furthermore, the parameter space under the alternative hypothesis is

The tangent cone to at is

where is the set of symmetric positive semi-definite matrices of size . Since is a linear subspace in , the asymptotic null distribution of the likelihood ratio test statistic for the above hypothesis test is , where . As in Lemma 2, it is challenging to define using equality and inequality constraints. Since , we approximated by . is approximated by

where , are some non-negative numbers and ; is a chi-squared distribution with i degrees of freedom; is a positive definite matrix such that and .

In the model selection, we assumed that model contains only the intercept term for fixed effects and a random intercept for random effects. Model contains fixed effects, and the covariance matrix, , of random effects is of order . When random effects are assumed to be correlated, the number of distinct random effects variance and covariance components is . When we tested model against model , applying (16) with and , then and . Thus, the asymptotic null distribution of the log-likelihood ratio test statistic is approximated by

where .

Furthermore, let be the expectation of , then

Our proposed modified BIC for this case is

where is the maximum likelihood estimator of in model ; and for ; for ; and for .

Theorem 2.

Assume that Assumptions (C1)–(C4) in Appendix A.1 are satisfied and is defined as in (18), then

Proof.

Case 1: For any over-fitting model, , we also prove that . Assume that model contains fixed effects and random effects and the true model contains fixed effects and random effects. Let and with , , and . Without loss of generality, assume that the covariance matrix of random effects in model is , where is the covariance matrix of random effects of the true model . The size of is , and the size of is . Let and , where has the same dimension as ; has the same dimension as ; has the same dimension as ; has the same dimension as . All elements of and are 0. We have that

Then, is the likelihood ratio test statistic of the following hypothesis test:

As in Lemma 2, under , the asymptotic distribution of is , where with , , , and ; is some positive definite matrix such that and .

Therefore, . We also have that

where is the maximum log-likelihood of the simplest model, that is the model with only the intercept for fixed effects and a random intercept for random effects. Therefore,

On the other hand, asymptotically follows a mixture of the chi-squared distributions. Therefore, must be positive and, therefore, . Thus, as and for .

Case 2: For any under-fitting model, , we want to prove that . Using similar arguments as in the proof for Case 1 in Theorem 1, we obtain this result. □

3. Simulation

In this section, we evaluate the performance of the proposed BIC* by comparing it with the regular BIC. For each candidate model, we computed the BIC* and the regular BIC; then, for each criterion, we chose the model with the minimum value. All models were fitted using the function “lmer” in the R package “lme4” [22]. The chi-bar-squared weights were calculated using the function “con_weights_boot” in the R package “restriktor” [20]. Following the methods used in Gao and Song [23] and Chen and Chen [24], the criteria we used to evaluate and compare the proposed BIC* with the regular BIC were (1) the positive selection rate (PSR), (2) the false discovery rate (FDR), and (3) the correction rate (CR). For each chosen model, the PSR is the ratio of the number of predictors that are correctly identified as significant in the chosen model to the number of predictors that are truly significant in the data-generating model; we then averaged the PSR over all chosen models. The FDR is the ratio of the number of predictors that are incorrectly identified as significant in the chosen model to the number of predictors that are identified as significant in the chosen model; we then averaged the FDR over all chosen models. The CR is the proportion of times the true data-generating model is selected among all chosen models. For each selection criterion, we had 1001 models obtained from 1001 simulations. We then calculated the means and standard deviations of the PSR and FDR, as well as the CR, for each criterion.
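The three evaluation criteria can be computed as in the following Python sketch, which represents each model by the set of terms it contains. The function names and the example sets are hypothetical; returning an FDR of zero for an empty chosen model is a convention adopted here, not one stated in the text.

```python
def positive_selection_rate(chosen, truth):
    """Share of the truly significant predictors that the chosen model contains."""
    return len(chosen & truth) / len(truth)


def false_discovery_rate(chosen, truth):
    """Share of the selected predictors that are not truly significant.
    An empty chosen model is given an FDR of 0 (assumed convention)."""
    if not chosen:
        return 0.0
    return len(chosen - truth) / len(chosen)


def correction_rate(chosen_models, truth):
    """Proportion of replications in which the true model is selected exactly."""
    return sum(chosen == truth for chosen in chosen_models) / len(chosen_models)


# Hypothetical example: the true model has terms x1 and x2
truth = {"x1", "x2"}
chosen_models = [{"x1", "x2"}, {"x1", "x3"}, {"x1", "x2"}, {"x1", "x2"}]

psr = [positive_selection_rate(c, truth) for c in chosen_models]
fdr = [false_discovery_rate(c, truth) for c in chosen_models]
print("mean PSR:", sum(psr) / len(psr))
print("mean FDR:", sum(fdr) / len(fdr))
print("CR:      ", correction_rate(chosen_models, truth))
```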

3.1. Simulation Setup

Our data were generated from the linear mixed model, . For all simulations, was generated from a multivariate normal distribution, with .

3.1.1. Setup A: Choose Random Effects Assuming That the Random Effects Are Independent

Scenario 1: With total number of observations and number of clusters , is an matrix with ; the first column of includes all ones. The second column is , which was generated from the standard normal distribution. The vector of fixed effects . Matrix contains the first two columns , , which are the same as two columns of matrix , and two more columns , , both generated from the standard normal distributions. Random effects, , were generated from multivariate normal distribution with a diagonal matrix and . The random intercept, , had a standard deviation of . Random effects components, , , and , had standard deviations , , and , respectively. To measure the ability to detect the significance of the variance component parameters of the proposed , we considered different sizes of , , and . is a sequence of values from 0 to incrementing by ; is a sequence of values from 0 to 1 incrementing by ; is a sequence of values from 0 to 2 incrementing by .

Scenario 2: With the total number of observations and number of clusters , is an matrix with ; ; the first column of includes all ones. The last two columns of matrix and were generated from the standard normal distributions. The vector of fixed effects . Matrix contains the first three columns , , and , which are the same as three columns of matrix and three more columns , , and , which were generated from the standard normal distributions. Random effects, , were generated from multivariate normal distribution with a diagonal matrix and . To measure the ability to detect the significance of the variance component parameters of the , we also considered different sizes of , , and as in Scenario 1 with = 0 and = 0. We then repeated this setup with () and ().

Scenario 3: The setup was similar to the one in Scenario 2. However, matrix contains the first three columns , , and , which are the same as the three columns of matrix , and eight more columns , …, , which were generated from the standard normal distributions. Random effects, , were generated from multivariate normal distribution with a diagonal matrix and , where , , , , and are all 0. We also repeated this simulation setup with () and ().
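The data-generating mechanism of Setup A can be illustrated with a small simulator. The sketch assumes the standard linear mixed model form y = Xβ + Zb + ε with a diagonal random-effects covariance matrix, balanced clusters, and leading columns of Z copied from X; the function name and all numerical values below are hypothetical and are not the settings used in the scenarios above.

```python
import random

def simulate_lmm(n_clusters, cluster_size, beta, sd_random, sd_error, seed=0):
    """Simulate clustered data from y = X beta + Z b + e with independent random effects.

    beta:      fixed effects; beta[0] multiplies the intercept column
    sd_random: standard deviations of the random effects; sd_random[0] belongs
               to the random intercept, and a value of 0 switches a component off
    """
    rng = random.Random(seed)
    p, q = len(beta), len(sd_random)
    shared = min(p, q)  # leading columns of Z are copied from X
    rows = []
    for cluster in range(n_clusters):
        # One random-effects vector per cluster, drawn with a diagonal covariance.
        b = [rng.gauss(0.0, sd) for sd in sd_random]
        for _ in range(cluster_size):
            x = [1.0] + [rng.gauss(0.0, 1.0) for _ in range(p - 1)]
            z = x[:shared] + [rng.gauss(0.0, 1.0) for _ in range(q - shared)]
            y = (sum(xk * bk for xk, bk in zip(x, beta))
                 + sum(zk * bk for zk, bk in zip(z, b))
                 + rng.gauss(0.0, sd_error))
            rows.append({"cluster": cluster, "x": x, "z": z, "y": y})
    return rows

# Hypothetical values: 2 fixed effects, 4 random-effect columns, 2 of them inactive.
data = simulate_lmm(n_clusters=50, cluster_size=10, beta=[1.0, 2.0],
                    sd_random=[1.0, 0.5, 0.0, 0.0], sd_error=1.0, seed=1)
```

Setting a standard deviation to 0 places the corresponding variance component on the boundary of the parameter space, which is the situation the selection criteria are meant to detect.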

3.1.2. Setup B: Choose Random Effects Assuming That the Random Effects Are Correlated

In this setup, the total number of observations is and the number of clusters is . Matrix and the vector of fixed effects, , were generated in the same way as in Setup A, Scenario 2. Matrix contains the first three columns , , and , which are the same as three columns of matrix , and three more columns , , and , which were generated from the standard normal distribution. Random effects, , were generated from a multivariate normal distribution with a matrix. The correlation matrix between the random effects components, , , , and , in the data-generating model is

To measure the ability to detect the significance of variance component parameters of the proposed , we created different cases for different sizes of , , , and , as shown below. , , and the covariances of random effects and corresponding to and are all 0.

Case 1: The standard deviations of the random effects were , , , , , and .

Case 2: The standard deviations of the random effects were , , , , , and .

Case 3: The standard deviations of the random effects were , , , , , and .

Case 4: In this case, we kept the standard deviations of the random effects the same as the ones in Case 2. However, we increased the correlations by for each non-zero correlation in the correlation matrix to see how this affects the correction rates. The correlation matrix between the random effects is

3.1.3. Setup C: Choose Both Fixed Effects and Random Effects Assuming That the Random Effects Are Correlated

With the total number of observations and number of clusters , is an matrix with ; the first column of includes all ones. The last five columns, to , were generated from the standard normal distribution. The vector of fixed effects . Matrix contains the first three columns , , and , which are the same as three columns of matrix , and three more columns , , and , which were generated from the standard normal distribution. The correlation matrix between the random effects components, , , , and , in the data-generating model is

To measure the ability to detect the significance of the fixed effects and variance component parameters of the proposed , we explored two different cases for different sizes of , , , and , as shown below. The , , and covariances corresponding to the random effects of and are all 0.

Case 1: The standard deviations of the random effects were , , , , , and .

Case 2: The standard deviations of the random effects were , , , , , and .

We also ran simulations for the case when the random effects were assumed to be uncorrelated and the variances of random effects were the same as the values in Case 1 and Case 2.
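In Setups B and C, the random effects are specified through standard deviations and a correlation matrix. A covariance matrix can be obtained from these as Σ = D R D, where D is the diagonal matrix of standard deviations and R is the correlation matrix, and correlated draws can then be produced with a Cholesky factor. The sketch below is a minimal illustration under these assumptions; the function names and the numerical values are hypothetical, and components with zero standard deviation are fixed at 0 so that the Cholesky factorization is applied only to the positive-definite part.

```python
import math
import random

def covariance_from_sd_corr(sds, corr):
    """Sigma = D R D for standard deviations sds and correlation matrix corr."""
    k = len(sds)
    return [[sds[i] * corr[i][j] * sds[j] for j in range(k)] for i in range(k)]

def cholesky(a):
    """Lower-triangular L with L L^T = a, for a positive-definite matrix a."""
    k = len(a)
    low = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(i + 1):
            s = sum(low[i][m] * low[j][m] for m in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low

def draw_random_effects(sds, corr, rng):
    """Draw one random-effects vector; zero-variance components are exactly 0."""
    active = [i for i, s in enumerate(sds) if s > 0]
    sub_sd = [sds[i] for i in active]
    sub_corr = [[corr[i][j] for j in active] for i in active]
    low = cholesky(covariance_from_sd_corr(sub_sd, sub_corr))
    z = [rng.gauss(0.0, 1.0) for _ in active]
    b = [0.0] * len(sds)
    for r, i in enumerate(active):
        b[i] = sum(low[r][m] * z[m] for m in range(r + 1))
    return b

# Hypothetical values: two correlated components and one inactive component.
sds = [2.0, 1.0, 0.0]
corr = [[1.0, 0.5, 0.0], [0.5, 1.0, 0.0], [0.0, 0.0, 1.0]]
sigma = covariance_from_sd_corr(sds, corr)
b = draw_random_effects(sds, corr, random.Random(1))
```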

3.2. Simulation Procedure

3.2.1. For Setup A

In all scenarios, for each set of values of , , and , simulations were run. In each simulation, all possible candidate models were run. All these models had the same fixed effect covariates (including and the intercept); meanwhile, the covariates for random effects part varied in the power set of . The proposed and regular BIC were calculated for each model. Then, the model with the minimum proposed BIC was selected, and the model with the minimum regular BIC was selected. For each selection criterion, we therefore had 1001 models obtained from 1001 simulations. We calculated the correction rate (CR) for each criterion.

In Scenario 2, for each set of values of , …, , simulations were run. In each simulation, all possible candidate models were run. All these models had the same fixed effect covariates (including , , and the intercept); meanwhile, the covariates for random effects varied in the power set of . The proposed and regular BIC were calculated for each model. Then, one model with the minimum proposed BIC was selected, and one model with the minimum regular BIC was selected. We calculated the means and standard deviations of the positive selection rate and false discovery rate. We also calculated the correction rate for each criterion.

In Scenario 3, with the given set of values of , …, , simulations were run. In each simulation, all possible candidate models were run. All these models had the same fixed effect covariates; meanwhile, the covariates for the random effects varied in the power set of . We calculated the means and standard deviations of the positive selection rate and false discovery rate and calculated the correction rate for each criterion. All simulations were performed by using R Version [25].
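The enumeration of candidate models over a power set, followed by selection of the model with the minimum criterion value, can be sketched as follows. The example builds lme4-style formula strings, using separate `(0 + z | g)` terms for uncorrelated random slopes and a single `(1 + z1 + z2 | g)` term for correlated ones. The covariate names, the grouping variable `cluster`, and the choice to always keep a random intercept are assumptions made for illustration, and the scoring function passed to `select_model` stands in for whichever criterion is actually computed.

```python
from itertools import chain, combinations

def power_set(items):
    """All subsets of items, from the empty subset to the full set."""
    items = list(items)
    return chain.from_iterable(combinations(items, r) for r in range(len(items) + 1))

def lmer_formula(response, fixed, slopes, group, correlated=False):
    """Build an lme4-style formula with a random intercept and the given random slopes."""
    fixed_part = " + ".join(["1"] + list(fixed))
    if correlated:
        random_part = "(" + " + ".join(["1"] + list(slopes)) + " | " + group + ")"
    else:
        terms = ["(1 | " + group + ")"]
        terms += ["(0 + " + s + " | " + group + ")" for s in slopes]
        random_part = " + ".join(terms)
    return response + " ~ " + fixed_part + " + " + random_part

def candidate_formulas(response, fixed, slope_candidates, group, correlated=False):
    """One formula per subset of the candidate random slopes."""
    return [lmer_formula(response, fixed, subset, group, correlated)
            for subset in power_set(slope_candidates)]

def select_model(candidates, criterion):
    """Return the candidate with the smallest criterion value."""
    return min(candidates, key=criterion)

# Hypothetical covariate names: one fixed covariate and three candidate random slopes.
formulas = candidate_formulas("y", ["x1"], ["z1", "z2", "z3"], "cluster")
```

With three candidate random slopes, the list contains 2^3 = 8 formulas; each would be fit once per simulated dataset and scored by both criteria.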

3.2.2. For Setup B

In each case presented above, simulations were run. In each simulation, all possible candidate models were run. All these models had the same fixed effect covariates (including the intercept, and ); meanwhile, the covariates for the random effects part varied in the power set of and also included a random intercept. The proposed , regular BIC, and cAIC were calculated for each model. Greven and Kneib [26] developed an analytic version of the corrected cAIC, and their method was implemented in the cAIC4 package in R [27]. Then, one model with the minimum proposed BIC was selected; one model with the minimum regular BIC was selected; one model with the minimum cAIC was selected. We calculated the means and standard deviations of the positive selection rate and false discovery rate and the correction rate for each criterion.

3.2.3. For Setup C

For each case above, we ran simulations. In each simulation, all possible candidate models were run. All models contained the intercept term for the fixed effect and a random intercept for the random effects. The covariates for the fixed effects part varied in the power set of for to , and the covariates for random effects part varied in the power set of for to . We also included the models that included only the intercept term for the fixed effect with varying random effects and the models that included a random intercept only with varying fixed effects. The proposed and regular BIC were calculated for each model. Then, the model with the minimum proposed BIC was selected, and the model with the minimum regular BIC was selected.

3.3. Simulation Results

Scenario 1: Table 1 summarizes the results of Scenario 1. We observed that the correction rate for the proposed was greater than that of the regular BIC. Furthermore, the correction rates of the two methods were higher when the values of , , and were bigger.

Table 1.

Comparison of the proposed BIC and regular BIC methods in terms of correction rate for the simulation in Scenario 1 with and .

Scenario 2: Table 2 summarizes the results of Scenario 2. The simulation results suggested that the values of the positive selection rate (PSR) for the proposed were higher than those for the regular BIC when the values of the variance components were close to 0. That is, the ability to choose the significant variance components was higher for the proposed than for the regular BIC. Almost all of the false discovery rate (FDR) values were within 5 percent in all cases. We also observed that the proposed BIC approach had a higher FDR and corresponding SD than the regular BIC approach. For some very low sigma values, the FDR values of the proposed BIC were greater than 5 percent. A possible reason is that the penalty term of the regular BIC is calculated from an exact chi-squared distribution, whereas the penalty term of the proposed BIC uses approximated weights of the chi-bar-squared distribution.

Table 2.

Comparison of the proposed BIC and regular BIC methods in terms of the positive selection rate, the false discovery rate, and correction rate for different values of , , , , and in Scenario 2 with and .

As the values of the variance components increased, the PSR increased. From the results obtained, we also saw that the ability to choose the true model also became larger as the values of the variance components increased. We also noted that the standard deviations were small for all cases. This means that the estimated PSR and FDR were very consistent.

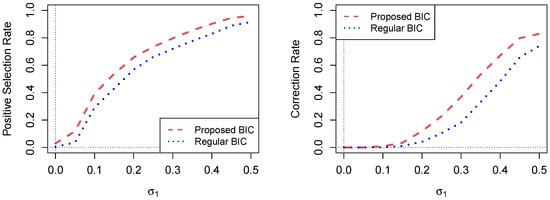

Figure 1 shows the comparison of the proposed BIC and regular BIC methods in terms of the positive selection rate and correction rate for different values of , , and when and in Scenario 2.

Figure 1.

Comparison of the proposed BIC and regular BIC methods in terms of the positive selection rate and correction rate for different values of , , and , . In this simulation setup, for each value of on the horizontal axis, the value of is and the value of is ; and .

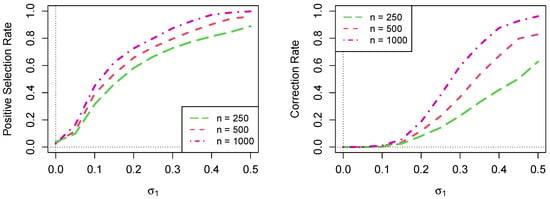

Figure 2 shows the comparison of the positive selection rate (PSR) and correction rates for Scenario 2 when , 500, and 1000 with , 100, and 200, respectively. Given the same set of values of , …, , we observed that the positive selection rate increased as the number of clusters N increased.

Figure 2.

Comparison of the positive selection rate and correction rate for , , and . For each value of on the horizontal axis, the value of is and the value of is ; and .

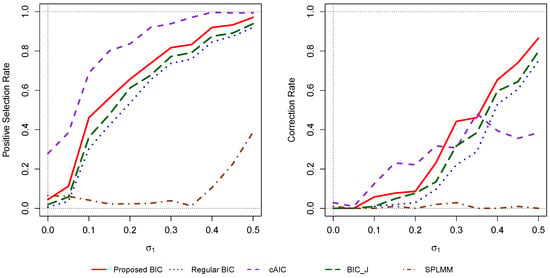

We also ran 104 simulations with three more competing methods: “cAIC”, “”, and “Splmm”, using the same setting as in Scenario 2. The cAIC is the corrected conditional AIC as implemented in the cAIC4 package in R [27]. The “” is a modified BIC for linear mixed models introduced by Jones [11]. “Splmm” (simultaneous penalized linear mixed-effects models) is a variable selection method that chooses both the fixed effects and the random effects using a penalized likelihood function. This method is based on the results of Yang and Wu [28] and was implemented in the R package “”. Figure 3 shows that the modified BIC performed better than the regular BIC, “”, and “” in this scenario in terms of the positive selection rate and correction rate. The ability to choose correct variables was higher for the cAIC than for the modified BIC. However, the correction rates for the cAIC were not always higher than those of the modified BIC. The “” method did not seem to work well in this scenario. This may be because the method works better when the number of parameters is much higher than the number of observations.

Figure 3.

Comparison of the positive selection rate and correction rate for with different competing methods for different values of , , and . For each value of on the horizontal axis, the value of is and the value of is ; and .

Scenario 3: Table 3 summarizes the results of Scenario 3. We saw that, in all cases, for the sample sizes , 500, 1000, the mean PSR and the correction rates were higher for the proposed BIC; meanwhile, the FDRs remained around .

Table 3.

Comparison of the proposed BIC and regular BIC methods in terms of the positive selection rate and correction rate for , , and in Scenario 3.

Table 4 shows the comparison of the proposed BIC, regular BIC, and cAIC methods in terms of the positive selection rate, the false discovery rate, and correction rate for Case 1 to Case 4. In all cases, the correction rate for the proposed BIC was greater than that of the regular BIC. The difference in the correction rate between these two methods was bigger when the values of , , and were smaller. In most cases, the two methods seemed to perform better than the cAIC method.

Table 4.

Comparison of the proposed BIC, regular BIC, and cAIC methods in terms of the positive selection rate, the false discovery rate, and correction rate for different values of , , , , , and with correlated random effects.

Table 5 shows the comparison of the proposed BIC, regular BIC, and cAIC methods in terms of fixed effects correction rate, random effects correction rate, and both effects correction rate for both Case 1 and Case 2 when random effects were assumed to be correlated.

Table 5.

Comparison of the proposed BIC, regular BIC, and cAIC methods in terms of the correction rate for fixed effects, random effects, and both for different values of , , and with , , , and correlated random effects.

Based on the simulation results for the situation when random effects were assumed correlated in Table 5, we saw that the proposed BIC method performed better than the regular BIC and the cAIC methods in terms of the correction rate for selecting the fixed effects, the correction rate for selecting the random effects, and also for selecting both fixed effects and random effects simultaneously. We also saw that, when the values of the variances for random effects were smaller, the correction rates were lower for all methods. However, the performance of the proposed method was still much better than the other two methods.

When random effects were assumed uncorrelated, based on the simulation results in Table 6, we saw that the proposed BIC and regular BIC still performed well and better than the cAIC method. The proposed BIC method performed better than the regular BIC in Case 2, but not in Case 1. This may be because the penalty term of the regular BIC was calculated using the exact chi-squared distribution, without approximation error, whereas the weights of the chi-bar-squared distribution in the proposed BIC were approximated, so its penalty term was only approximate. From the simulation results, we noticed that when the values of the variances for random effects were smaller, the correction rates were lower for the proposed and regular BIC methods. However, the correction rates in Case 2 were better than those in Case 1 for the cAIC method.

Table 6.

Comparison of the proposed BIC, regular BIC, and cAIC methods in terms of fixed effects correction rate, random effects correction rate, and both effects correction rate for different values of , , , and with , , and independent random effects.

In terms of computational cost, the proposed method requires Monte Carlo simulations to estimate the weights so that the penalty parameter can be computed. This is more computationally intensive than the regular BIC. In our simulation, for one dataset with a given model, for Setup A, it took about to s for the proposed BIC method and about to s for the regular BIC. For Setup B, it took about s for the proposed BIC, s for the regular BIC, and s for the cAIC. For Setup C with independent random effects, it took about s for the proposed BIC, s for the regular BIC, and s for the cAIC. For Setup C with correlated random effects, it took about s for the proposed BIC, s for the regular BIC, and s for the cAIC. We noted that the model with correlated random effects took longer than the one with independent random effects. Furthermore, the computational time of the proposed method was longer than that of the regular BIC, but quite close to that of the cAIC method. The OS and CPU system specifications that we used to run our methods were Windows 10, CPU: Intel Core with 4 cores, 8 threads. The memory requirement of our methods was 8 GB of RAM.
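To give a sense of why the weights add computational cost, the following sketch estimates chi-bar-squared weights by Monte Carlo in the simplest special case: the cone is the nonnegative orthant and the covariance matrix is the identity. In that case the projection of a normal draw onto the cone just truncates negative coordinates at zero, and the exact weights are binomial, which provides a check. This is not the general algorithm used by “con_weights_boot”; general covariance matrices and constraints require solving a quadratic program for each draw. The function names and the number of draws are assumptions.

```python
import random
from math import comb

def orthant_weights_mc(k, n_draws=20000, seed=0):
    """Monte Carlo chi-bar-squared weights for the cone {theta >= 0} in R^k, identity covariance.

    weights[j] estimates the probability that the projection of Z ~ N(0, I_k)
    onto the cone has exactly j strictly positive coordinates.
    """
    rng = random.Random(seed)
    counts = [0] * (k + 1)
    for _ in range(n_draws):
        z = [rng.gauss(0.0, 1.0) for _ in range(k)]
        # Under identity covariance, projecting onto the orthant truncates at zero.
        projected = [max(v, 0.0) for v in z]
        counts[sum(1 for v in projected if v > 0.0)] += 1
    return [c / n_draws for c in counts]

def orthant_weights_exact(k):
    """Exact weights in the same special case: binomial(k, 1/2) probabilities."""
    return [comb(k, j) / 2 ** k for j in range(k + 1)]

estimated = orthant_weights_mc(3)
exact = orthant_weights_exact(3)  # [0.125, 0.375, 0.375, 0.125]
```

Each estimate carries Monte Carlo error of order 1/sqrt(n_draws), which is one way the penalty term of a weight-based criterion can be only approximate.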

4. Real-Data Application

In this section, we applied the proposed BIC to a real dataset. We worked with a subset of 120 schools from the dataset “hsfull” in the R package “spida2”, which was developed by Monette et al. [29]. This dataset originated from the 1982 “High School and Beyond” (HSB) survey and is used in Raudenbush and Bryk’s text on hierarchical linear models [30]. The data include the mathematics achievement test scores of 5307 students from 50 Catholic and 70 public high schools, with the number of students in each school ranging from 19 to 66.

The variables included in the analysis were school identification number, mathematics achievement score (), socioeconomic status (), sex (female (0) or male (1); ), visible minority status (yes (1) or no (0); ), and school sector (Catholic (0) or public (1); ). Variables , , and are group-centered. The objective was to study the relationship between students’ mathematics achievement score and socioeconomic status, sex, and visible minority status in public and Catholic schools and whether this relationship varies across schools within each sector.

The candidate variables in the fixed effects part were , , , and , which are group-centered. The candidate variables in the random effects part were , , and , which are the same as , , and .

We first fit a linear mixed model that included only the intercept term for fixed effects and a random intercept. Then, we fit the models with only the intercept term for fixed effects and all possible combinations of , , and with a random intercept for random effects. Next, we fit the models with all possible combinations of , , , and for fixed effects and only a random intercept for random effects. Lastly, for each combination of , , , and for fixed effects, we fit the models with all possible combinations of , , and with a random intercept for random effects. For each model, we recorded the values of the proposed BIC, regular BIC, and cAIC. There were 128 values for each method. Now, for each method, we chose the model with the minimum value of the corresponding criterion. We applied this procedure for both cases when random effects were assumed to be correlated and uncorrelated.
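The count of 128 models follows directly from the four steps described above, with four candidate fixed-effect covariates and three candidate random slopes, and with the fixed intercept and random intercept always included:

```latex
\underbrace{1}_{\text{intercepts only}}
+ \underbrace{(2^{3}-1)}_{\text{random slopes only}}
+ \underbrace{(2^{4}-1)}_{\text{fixed covariates only}}
+ \underbrace{(2^{4}-1)(2^{3}-1)}_{\text{both}}
= 1 + 7 + 15 + 105 = 128 = 2^{4}\cdot 2^{3}.
```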

When random effects were assumed to be correlated, the optimal model we obtained using the proposed BIC was the model with all , , , and and a random intercept; the proposed BIC was 34,379.83. The optimal model we obtained using the regular BIC was also the model with , , , and and a random intercept only. The regular BIC of this model was also 34,379.83. The cAIC yielded the optimal model, which contained , , , and with a random intercept and random slopes of and . The cAIC of the optimal model was 34,166.25.

When random effects were assumed to be uncorrelated, the optimal model we obtained using the proposed BIC was the model with all , , , and , a random intercept, and random slopes of ; the proposed BIC value was 34,378.23. The optimal model we obtained using the regular BIC was the model with , , , and and a random intercept only. The regular BIC of this model was 34,379.83. The cAIC yielded the optimal model, which contained , , , and with a random intercept and random slopes of , , and . The cAIC of the optimal model was 34,165.13.

Table 7 shows the proposed BIC, regular BIC, and cAIC for all models that contained , , , and with correlated random effects considered.

Table 7.

Results of the proposed BIC, regular BIC, and cAIC for all models with correlated random effects considered for the subset of the “hsfull” dataset.

Table 8 shows the optimal model chosen by each method when the random effects were assumed to be independent and when the random effects were correlated. All , , , and were included in the models.

Table 8.

Comparison of the optimal model chosen by each method for correlated random effects and independent random effects.

Based on the results presented above, we would choose the model with all , , , and for fixed effects and a random intercept and a random slope of for random effects assuming that random effects are uncorrelated. There was a significant relationship between students’ math achievement score and socioeconomic status, sex, and visible minority status in public and Catholic schools, and the school mean math achievement score and minority gap effect varied across the schools within each sector.

5. Discussion

In this article, we introduced a modified BIC for linear mixed models that can directly deal with the boundary issue of variance components. First, we focused on selecting random effects variance components and proposed a model selection criterion when the random effects were assumed to be independent (the covariance matrix of random effects was a diagonal matrix). Second, we proposed a criterion for choosing random effects variance components when the random effects were assumed to be correlated. Instead of working with a complex tangent cone to the alternative parameter space, we approximated the tangent cone using a bigger, but simpler cone. This allowed us to obtain the weights of the chi-bar-squared distribution. Lastly, we presented a model selection criterion for choosing both fixed effects and random effects simultaneously in both cases: when random effects were assumed to be independent and when they were correlated. We also proved the consistency of the modified BIC.

Based on the simulation studies, the modified BIC performed quite well in terms of the correction rate. The ability to select the data-generating model of the modified BIC was better when the size of the random effects variance component or the size of correlation component was bigger. Compared to the regular BIC, the modified BIC gave higher correction rates, especially when the variances of random effects were small. Based on the correction rate, the modified BIC and performed better than the regular BIC in most cases. Furthermore, there was significant improvement in the positive selection rate in most of the simulation scenarios.

One limitation of the modified BIC is that, when choosing the optimal model, the proposed method evaluates all possible models. Since the number of possible models increases exponentially with the number of candidate fixed effects and random effects, the model selection process can become computationally intensive. To reduce the number of candidate models, the proposed BIC may first be combined with a selection procedure such as the shrinkage methods or fence methods described in Müller et al. [31]. The proposed BIC can then be used to perform model selection among the remaining candidates.

Author Contributions

Writing, original draft, H.L.; writing, reviewing and editing, X.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by an NSERC grant held by Gao.

Data Availability Statement

The dataset used in the Application Section is a subset of the dataset “hsfull” from the package “spida2” in R, which can be loaded using the R command data(hsfull, package = 'spida2'). The package is available at https://github.com/gmonette/spida2, accessed on 25 January 2021.

Acknowledgments

The authors would like to thank the Editor and reviewers for their helpful comments and suggestions, which have helped us to improve the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Appendix A.1. Assumptions for Lemmas 1–3 and Theorems 1 and 2

- (C1). The observations from different clusters are independent random vectors. All the assumptions of the linear mixed model (1) are satisfied.

- (C2). Let ) be the log-likelihood function of the linear mixed model (1). Denote by the parameter space of the model parameter vector, , and let be the true value of the parameter vector. Denote the vector of first partial derivatives of with respect to by , and denote the matrix of the second partial derivatives of with respect to by . Directional derivatives are used when is on the boundary of . (i) Assume that, for all , the first three partial derivatives of the log-likelihood function with respect to exist almost everywhere. (ii) Furthermore, assume that times the absolute value of the third derivative of is bounded as a function of , whose expectation exists and is finite on the intersection of the neighborhoods of and .

- (C3). Assume that are uniformly bounded. That is, there exists a constant such that for .

- (C4). Let be the parameter vector of the true model , and let denote the true value of .

  - (i) For any under-fitting model, , with model parameter , assume that exists and there exists a unique pseudo-true value, , such that for all i.

  - (ii) For all , .

  - (iii) For any two nested models, , is bounded by an integrable function, , and .

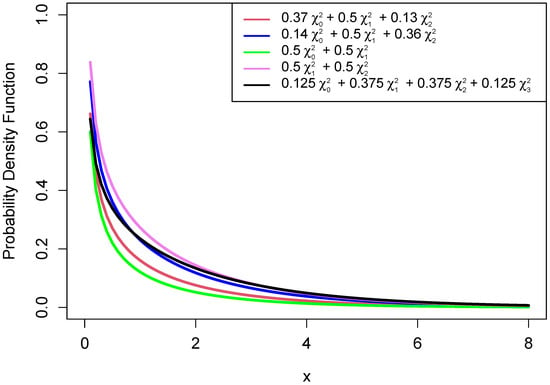

Appendix A.2. Graphs of Chi-Bar-Squared Distributions

In this section, we created graphs of some density functions of different chi-bar-squared distributions.

Figure A1.

Chi-bar-squared distributions.

The graphs show that the shape of a chi-bar-squared distribution depends on its mixing weights.
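Because a chi-bar-squared distribution is a weighted mixture of chi-squared distributions with different degrees of freedom, its tail probability is the weighted sum of the component tail probabilities. The sketch below computes this with the Python standard library for integer degrees of freedom; the function names are assumptions, and the weights (0.5, 0.5) in the example correspond to the classical case of testing a single variance component with no covariance parameters, as in Stram and Lee [4].

```python
import math

def chi2_sf(x, df):
    """P(chi-squared with integer df >= 0 exceeds x); df = 0 is a point mass at 0."""
    if df == 0:
        return 0.0 if x >= 0 else 1.0
    if x <= 0:
        return 1.0
    # Closed forms for df = 1 and df = 2, then the recurrence
    # Q_{k+2}(x) = Q_k(x) + (x/2)^(k/2) exp(-x/2) / Gamma(k/2 + 1).
    if df % 2 == 0:
        q, k = math.exp(-x / 2.0), 2
    else:
        q, k = math.erfc(math.sqrt(x / 2.0)), 1
    while k < df:
        q += (x / 2.0) ** (k / 2.0) * math.exp(-x / 2.0) / math.gamma(k / 2.0 + 1.0)
        k += 2
    return q

def chibar2_sf(x, weights):
    """Tail probability of the mixture sum_j weights[j] * chi-squared(j)."""
    return sum(w * chi2_sf(x, j) for j, w in enumerate(weights))

# 95% point of a chi-squared distribution with 1 degree of freedom.
t = 3.841458820694124
naive_p = chi2_sf(t, 1)                   # treats the test as a regular one
boundary_p = chibar2_sf(t, [0.5, 0.5])    # accounts for the boundary
```

At this value of the test statistic, the regular chi-squared reference gives a tail probability of 0.05, while the 50:50 mixture gives 0.025, which illustrates how ignoring the boundary makes tests and penalties conservative for variance components.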

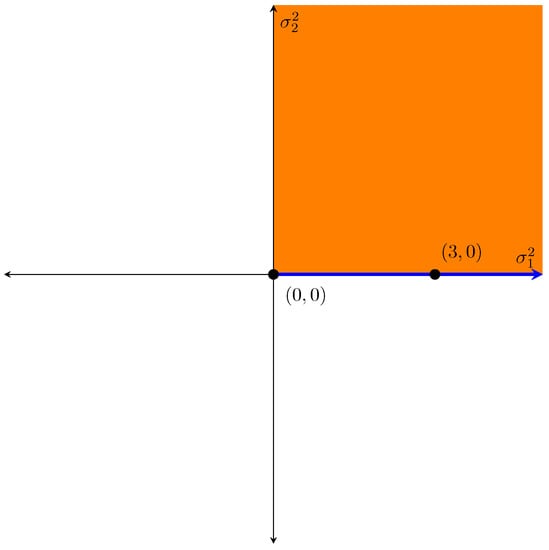

Appendix A.3. Graphical Example of the Boundary Issue

In this example, we tested against . The parameter space under the null hypothesis was the set of all points of the form with , illustrated by the blue interval along the axis of . Under the alternative hypothesis, the parameter space was the set of all points with and and is illustrated by the shaded orange region on the graph. Under the null hypothesis, the testing value of the parameter vector lies on the boundary of the parameter space.

Figure A2.

Graphical example of boundary issue.

References

1. Sheng, Y.; Yang, C.; Curhan, S.; Curhan, G.; Wang, M. Analytical methods for correlated data arising from multicenter hearing studies. Stat. Med. 2022, 41, 5335–5348.

2. Chernoff, H. On the distribution of the likelihood ratio. Ann. Math. Stat. 1954, 25, 573–578.

3. Self, S.G.; Liang, K.Y. Asymptotic Properties of Maximum Likelihood Estimators and Likelihood Ratio Tests Under Nonstandard Conditions. J. Am. Stat. Assoc. 1987, 82, 605–610.

4. Stram, D.O.; Lee, J.W. Variance Components Testing in the Longitudinal Mixed Effects Model. Biometrics 1994, 50, 1171–1179.

5. Azadbakhsh, M.; Gao, X.; Jankowski, H. Composite likelihood ratio testing under nonstandard conditions using tangent cones. Stat 2021, 10, e375.

6. Baey, C.; Cournède, P.H.; Kuhn, E. Asymptotic distribution of likelihood ratio test statistics for variance components in nonlinear mixed-effects models. Comput. Stat. Data Anal. 2019, 135, 107–122.

7. Dykstra, R. Asymptotic normality for chi-bar-squared distributions. Can. J. Stat. 1991, 19, 297–306.

8. Shapiro, A. Asymptotic Distribution of Test Statistics in the Analysis of Moment Structures Under Inequality Constraints. Biometrika 1985, 72, 133–144.

9. Vaida, F.; Blanchard, S. Conditional Akaike information for mixed-effects models. Biometrika 2005, 92, 351–370.

10. Pauler, D. The Schwarz criterion and related methods for normal linear models. Biometrika 1998, 85, 13–27.

11. Jones, R.H. Bayesian information criterion for longitudinal and clustered data. Stat. Med. 2011, 30, 3050–3056.

12. Delattre, M.; Poursat, M.A. An iterative algorithm for joint covariate and random effect selection in mixed-effects models. Int. J. Biostat. 2020, 16, 1–12.

13. Ibrahim, J.G.; Zhu, H.; Garcia, R.I.; Guo, R. Fixed and random effects selection in mixed-effects models. Biometrics 2011, 67, 495–503.

14. Bondell, H.D.; Krishna, A.; Ghosh, S.K. Joint variable selection for fixed and random effects in linear mixed effects models. Biometrics 2010, 66, 1069–1077.

15. Peng, H.; Lu, Y. Model selection in linear mixed effect models. J. Multivar. Anal. 2012, 109, 109–129.

16. Drikvandi, R.; Verbeke, G.; Khodadadi, A.; Nia, V.P. Testing multiple variance components in linear mixed-effects models. Biostatistics 2012, 14, 144–159.

17. Pauler, D.K.; Wakefield, J.C.; Kass, R.E. Bayes Factors and Approximations for Variance Component Models. J. Am. Stat. Assoc. 1999, 94, 1242–1253.

18. Laird, N.M.; Ware, J.H. Random-Effects Models for Longitudinal Data. Biometrics 1982, 38, 963–974.

19. Silvapulle, M.J.; Sen, P.K. Constrained Statistical Inference: Order, Inequality, and Shape Constraints; John Wiley & Sons: Hoboken, NJ, USA, 2005.

20. Vanbrabant, L.; Rosseel, Y.; Dacko, A. con_weights_boot: Function for Computing the Chi-Bar-Square Weights Based on Monte Carlo Simulation. 2019. Available online: https://www.rdocumentation.org/packages/restriktor/versions/0.2-250/topics/con_weights_boot/ (accessed on 12 August 2020).

21. van der Vaart, A. Asymptotic Statistics; Cambridge University Press: Cambridge, UK, 2000.

22. Bates, D.; Mächler, M.; Bolker, B.; Walker, S. Fitting Linear Mixed-Effects Models Using lme4. J. Stat. Softw. 2015, 67, 1–48.

23. Gao, X.; Song, P.X.K. Composite Likelihood Bayesian Information Criteria for Model Selection in High-Dimensional Data. J. Am. Stat. Assoc. 2010, 105, 1531–1540.

24. Chen, J.; Chen, Z. Extended BIC for small-n-large-P sparse GLM. Stat. Sin. 2012, 22, 555–574.

25. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021; Available online: https://www.R-project.org/ (accessed on 29 December 2021).

26. Greven, S.; Kneib, T. On the behaviour of marginal and conditional AIC in linear mixed models. Biometrika 2010, 97, 773–789.

27. Säfken, B.; Rügamer, D.; Kneib, T.; Greven, S. Conditional model selection in mixed-effects models with cAIC4. arXiv 2018, arXiv:1803.05664.

28. Yang, L.; Wu, T. Model-based clustering of high-dimensional longitudinal data via regularization. Biometrics 2022, 1–14.

29. Monette, G.; Fox, J.; Friendly, M.; Krause, H.; Zhu, F. spida2: Collection of Tools Developed for the Summer Programme in Data Analysis 2000–2012. R Package Version 0.2.1. 2019. Available online: https://github.com/gmonette/spida2 (accessed on 30 April 2020).

30. Raudenbush, S.; Bryk, A. Hierarchical Linear Models: Applications and Data Analysis Methods; SAGE Publications: Thousand Oaks, CA, USA, 2002.

31. Müller, S.; Scealy, J.L.; Welsh, A.H. Model Selection in Linear Mixed Models. Stat. Sci. 2013, 28, 135–167.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).