4.1.1. Preprocessing Phase

During this phase, the

DO constructs and signs the authenticated index structure (AIS) for

D before outsourcing

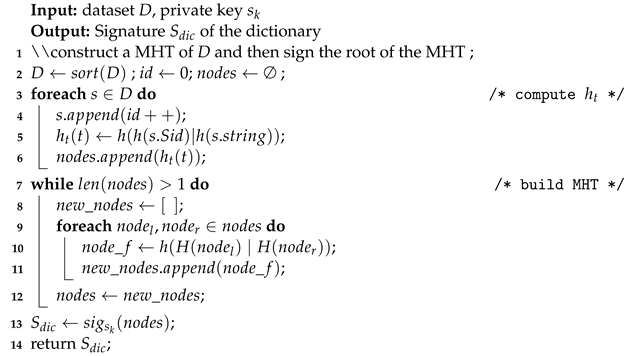

D to the server. Our AIS mainly consists of two parts, namely, a dictionary and an inverted index. Thanks to its efficiency and simplicity, we use MHT for authentication of the data structure construction. We summarize the construction process in Algorithm 1.

| Algorithm 1: Generating a signature for the dictionary. |

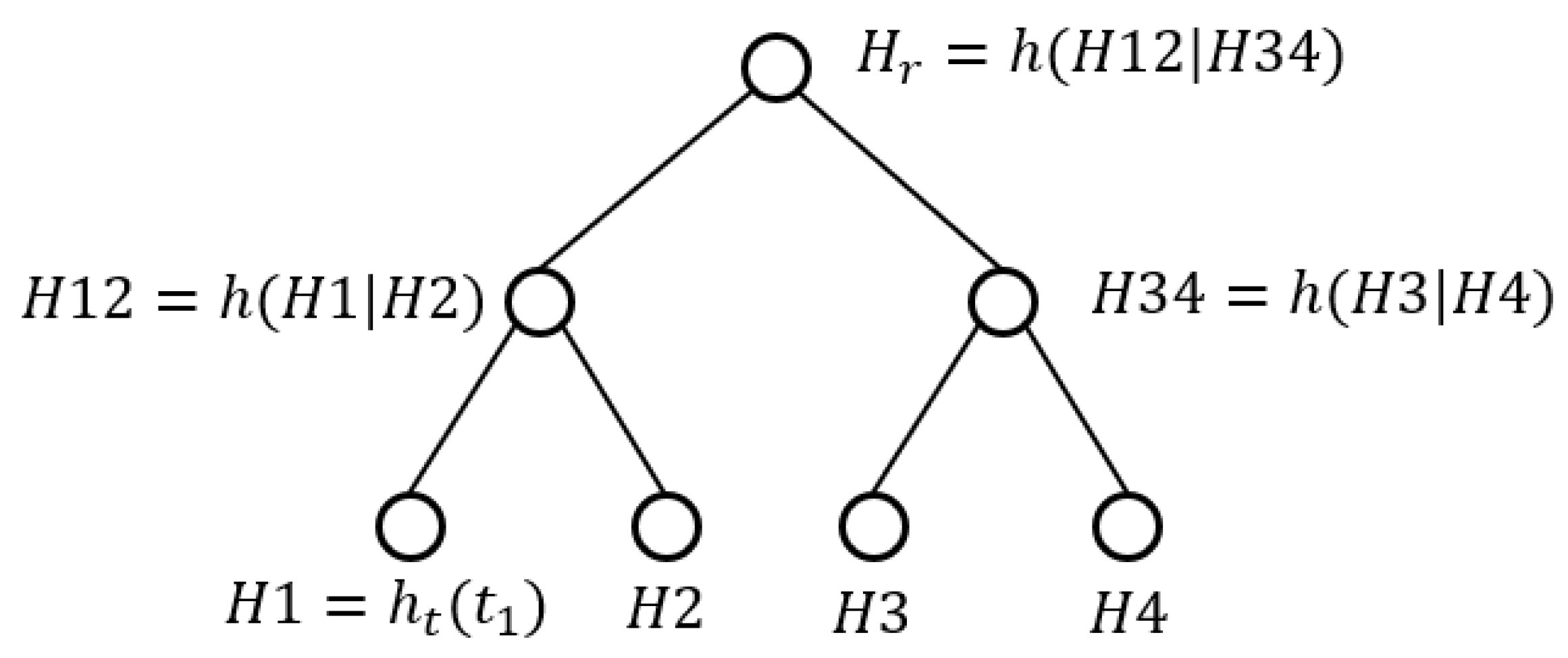

First, we show how to generate a signature for the dictionary. As shown in line 2 of Algorithm 1, the DO sorts the strings in D in alphabetical order and assigns each string a unique ID, i.e., Sid, as shown in Table 1. The reason to sort D is that the strings of a query result tend to share a common prefix; hence, sorting clusters similar strings together, which can reduce the VO size and thus save network costs.

The DO computes the tuple hash $h(t_i)$ for each tuple $t_i = (Sid_i, s_i)$ as follows:

$$h(t_i) = H(Sid_i \mid s_i),$$

where ∣ stands for string concatenation, and $H$ is a one-way hash function. Lines 3 to 6 of Algorithm 1 show how to compute tuple hashes. We use the tuple hash as an identifier for a tuple.

The DO builds the MHT of D in a bottom-up manner. Specifically, if node i of the MHT is a leaf node, then its hash $h_i$ is simply the tuple hash of the data tuple corresponding to node i. On the other hand, if node i is an internal node, then its hash is calculated as $h_i = H(h_l \mid h_r)$, where $h_l$ and $h_r$ are the hash values of the left and right children of node i, respectively. This process repeats until the root node of the MHT is obtained. This construction is illustrated in Figure 3, where $h_i$ is the hash value of node i, and the “root hash” (denoted as $h_{root}$) is a digest of all the tuples in the Merkle hash tree. We summarize the calculation of the MHT in lines 7 to 12 of Algorithm 1.
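The bottom-up construction can be sketched in Python as follows. This is only an illustration under stated assumptions: SHA-256 stands in for the unspecified one-way hash function, a literal "|" separator is written between the ID and the string, an unpaired node is promoted unchanged to the next level, and the helper names (`H`, `tuple_hash`, `merkle_root`, `build_dictionary_root`) are hypothetical rather than taken from Algorithm 1.

```python
import hashlib


def H(data: bytes) -> bytes:
    """One-way hash function; SHA-256 is an assumption of this sketch."""
    return hashlib.sha256(data).digest()


def tuple_hash(sid: int, s: str) -> bytes:
    """Hash one dictionary tuple from its ID and string.

    Assumption: a literal '|' separates the two fields so that
    (1, "2a") and (12, "a") cannot collide.
    """
    return H(f"{sid}|{s}".encode("utf-8"))


def merkle_root(leaf_hashes: list[bytes]) -> bytes:
    """Combine hashes pairwise, level by level, until one root remains."""
    if not leaf_hashes:
        raise ValueError("an MHT needs at least one leaf")
    level = list(leaf_hashes)
    while len(level) > 1:
        nxt = []
        for i in range(0, len(level), 2):
            if i + 1 < len(level):
                # Internal node: hash of left child hash followed by right child hash.
                nxt.append(H(level[i] + level[i + 1]))
            else:
                # Assumption: a node without a sibling is promoted unchanged.
                nxt.append(level[i])
        level = nxt
    return level[0]


def build_dictionary_root(strings: list[str]) -> bytes:
    """Sort the strings, assign IDs 1..n in sorted order, return the root hash."""
    ordered = sorted(strings)
    leaves = [tuple_hash(sid, s) for sid, s in enumerate(ordered, start=1)]
    return merkle_root(leaves)
```

Because the strings are sorted before IDs are assigned, the root hash does not depend on the order in which the DO supplies them, while changing any single string changes the root.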

After the MHT is built, the DO generates a pair of keys $(pk, sk)$, where $pk$ and $sk$ refer to the public key and private key, respectively. Then, the DO signs the root hash $h_{root}$ of the MHT with its private key, i.e., $sig_D = \mathrm{Sign}(sk, h_{root})$, where $\mathrm{Sign}$ is a standard digital signature algorithm, such as RSA or ECDSA.

Next, we explain how to compute the signature of the inverted index, as shown in Algorithm 2. Specifically, the DO creates an inverted index for the q-grams (lines 2 to 8 of Algorithm 2), where q is a hyper-parameter that can be pre-determined based on statistics of the dataset and user queries, such as the distribution of the alphabet and the average length of the strings.
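A q-gram inverted index of this kind can be sketched as below. The sketch assumes that no padding characters are added to the strings, that each i-list stores a string ID at most once, and that IDs are assigned in alphabetical order; the function names are hypothetical.

```python
from collections import defaultdict


def qgrams(s: str, q: int) -> list[str]:
    """All substrings of length q, in order of appearance (no padding assumed)."""
    return [s[i:i + q] for i in range(len(s) - q + 1)]


def build_inverted_index(strings: list[str], q: int) -> dict[str, list[int]]:
    """Map each gram to the sorted list of IDs of the strings that contain it."""
    index: dict[str, set[int]] = defaultdict(set)
    for sid, s in enumerate(sorted(strings), start=1):
        for g in qgrams(s, q):
            index[g].add(sid)
    # Grams are emitted in alphabetical order, matching the sorted gram-list
    # over which the MHT of the inverted index is built.
    return {g: sorted(index[g]) for g in sorted(index)}
```

For instance, `qgrams("arisen", 2)` yields the five grams “ar”, “ri”, “is”, “se”, and “en”.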

| Algorithm 2: Generating a signature for the inverted index. |

For each i-list of the inverted index (lines 9 to 12 in Algorithm 2), the DO computes the tuple hash for the i-list as follows:

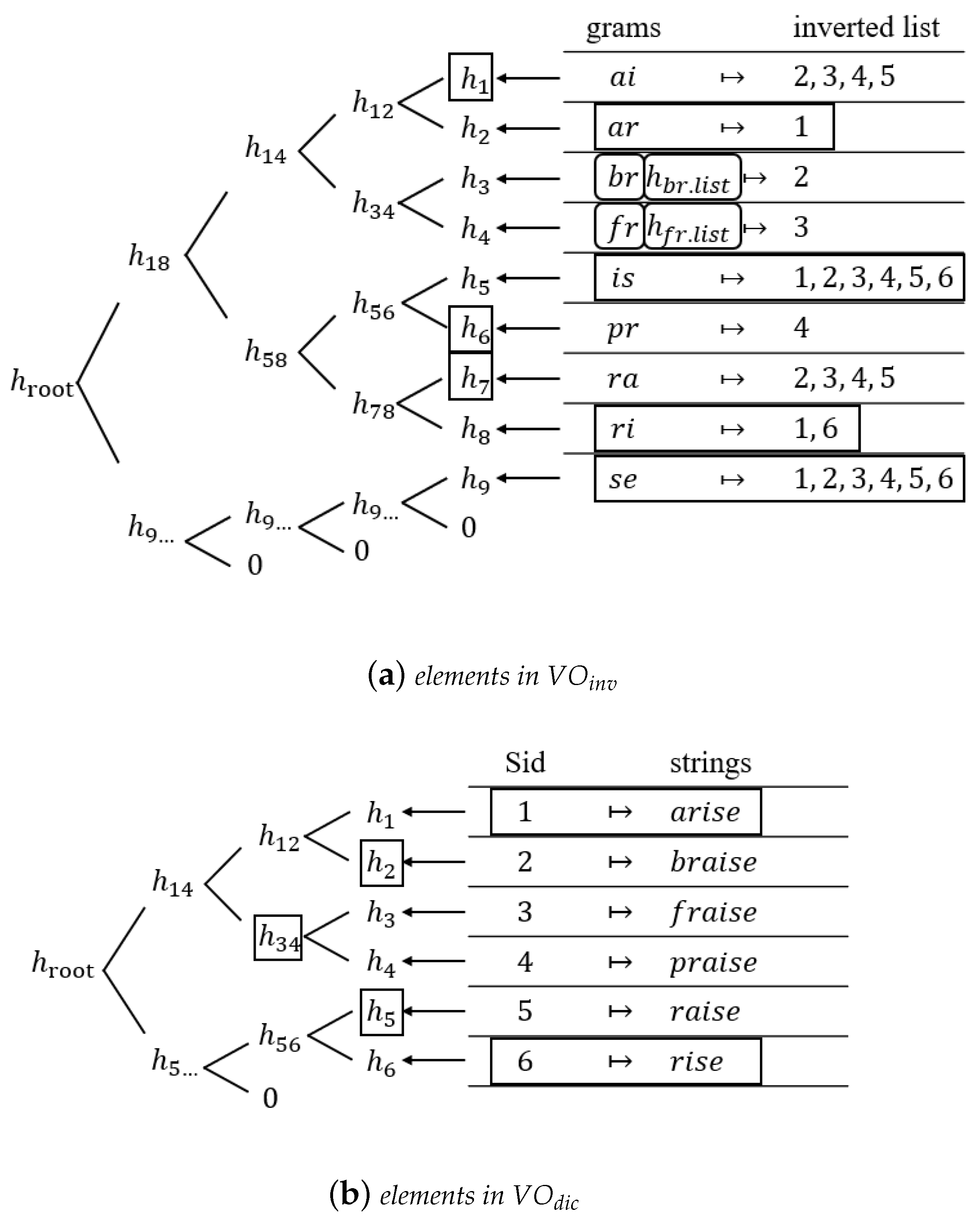

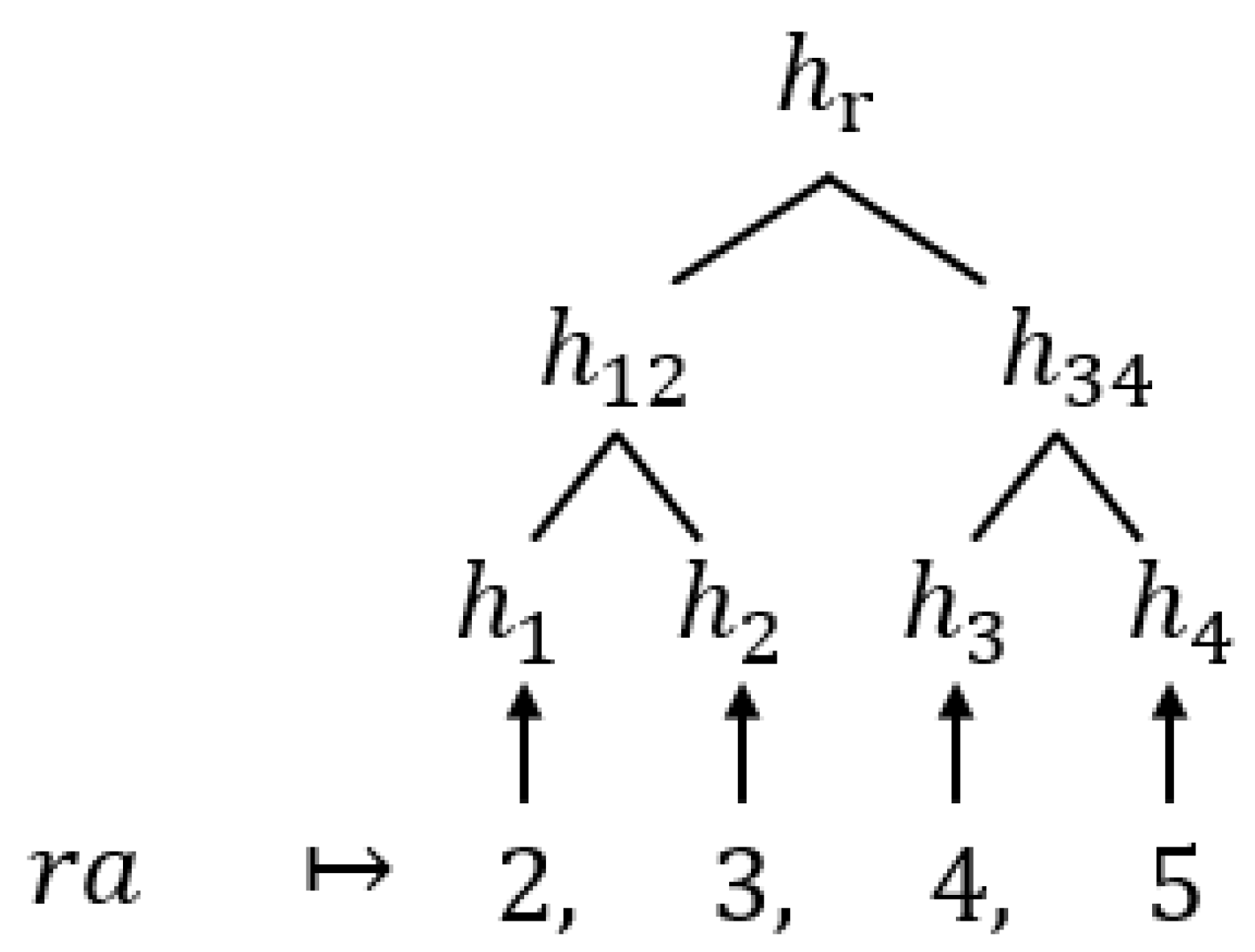

For instance, consider the gram

“ai” and its corresponding list in

Table 2. We thus have

and

. Note that

“ai”) corresponds to

in

Figure 4.

Then, the DO computes the MHT of the inverted index. Specifically, the gram-list of the inverted index is sorted by grams in alphabetical order, and the MHT construction process of the inverted index is the same as that for the dictionary. When the MHT is built, the DO signs the root hash to generate the signature of the inverted index (lines 13–19 of Algorithm 2). We give an example of MHT construction for the inverted index in Figure 4.

After the AIS is constructed as described above, the DO uploads dataset D, q, and the two signatures (one for the dictionary and one for the inverted index) to the server. It is worth noting that in some existing outsourcing database models, the DO stores signatures locally (instead of uploading them to the server) and only sends them to users upon request. For example, the solution proposed in [3] requires the DO to remain active to keep the system running properly. In contrast, our solution allows the DO to go offline without affecting the database outsourcing services.

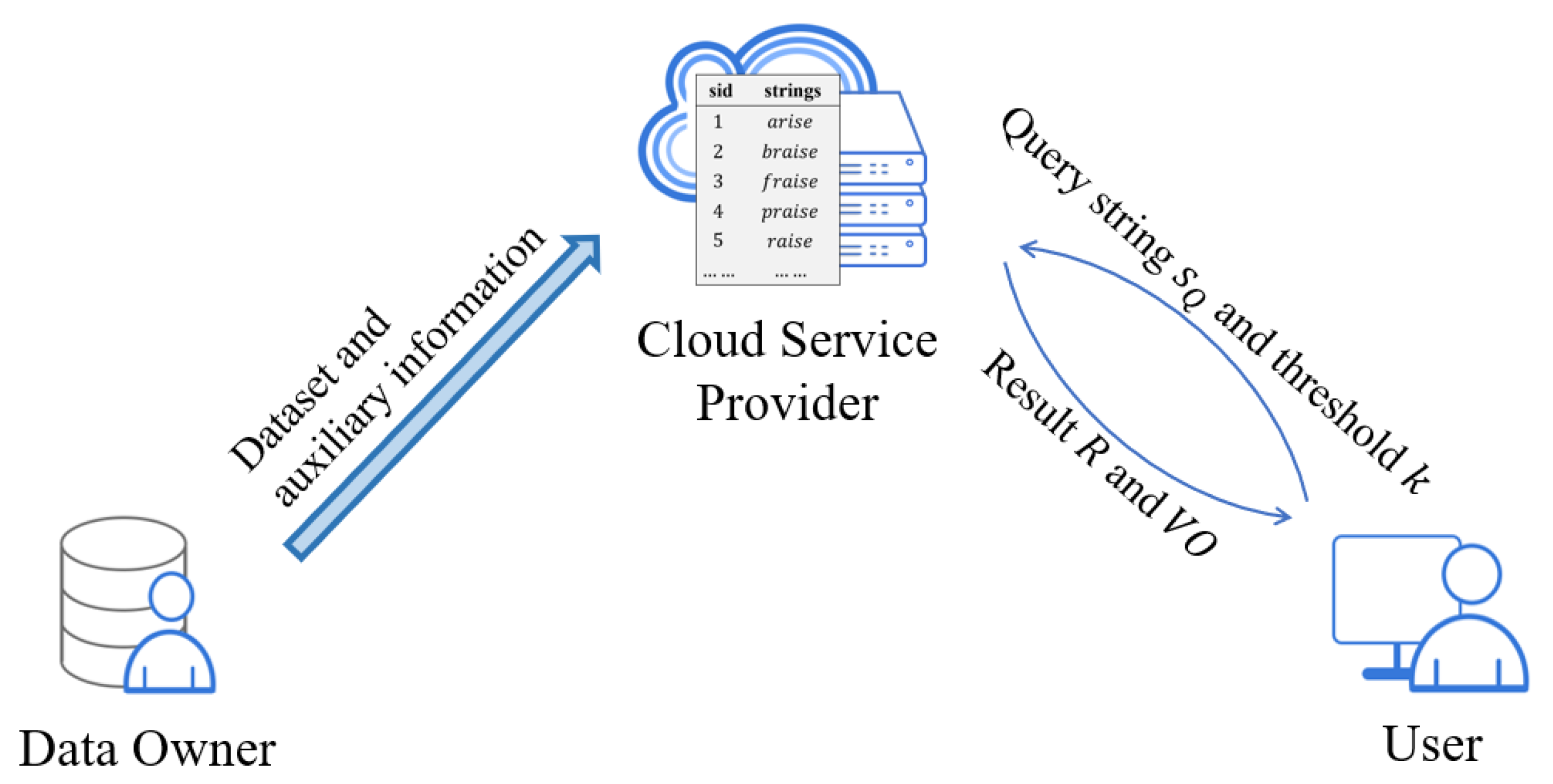

4.1.2. Searching Phase

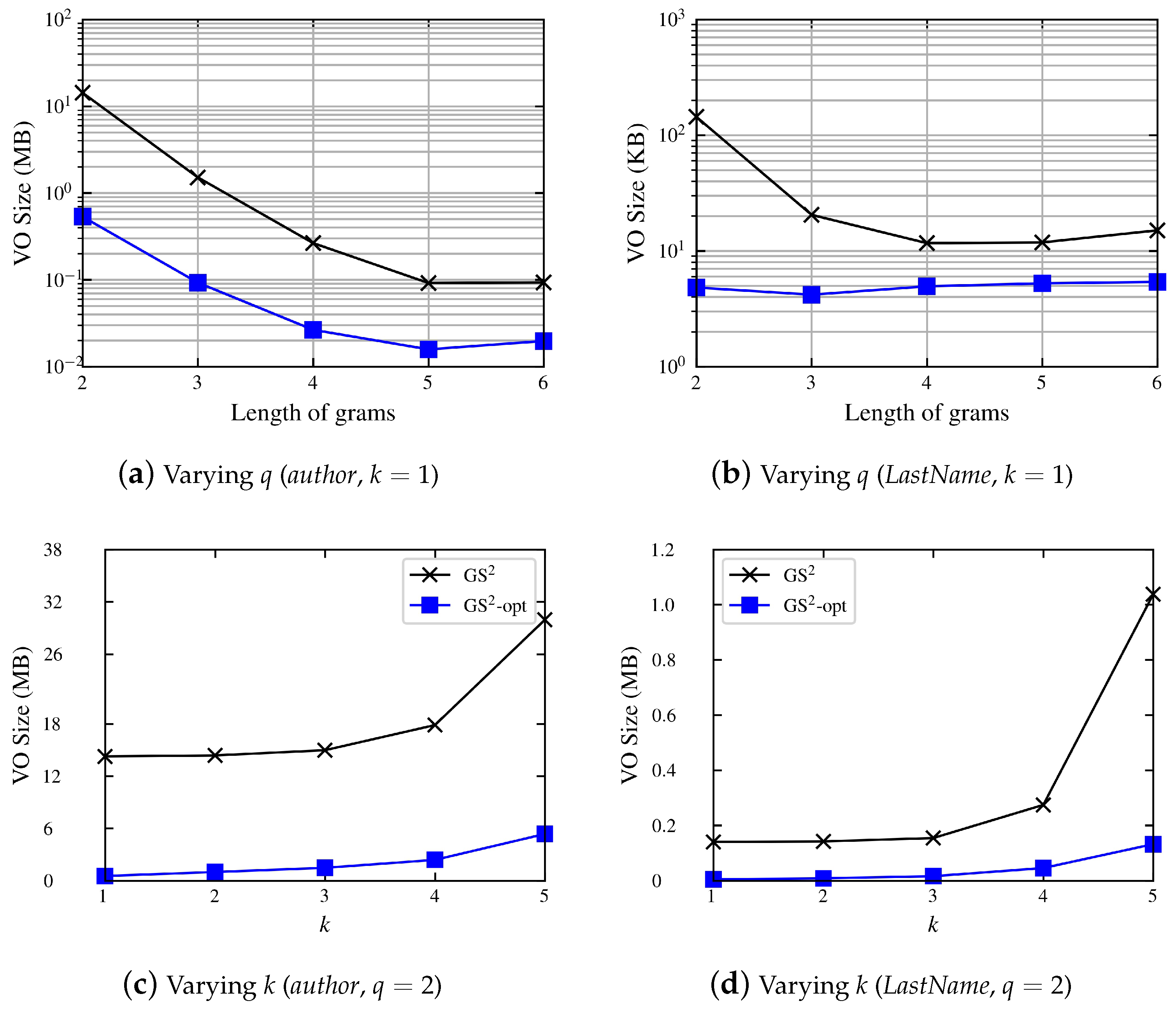

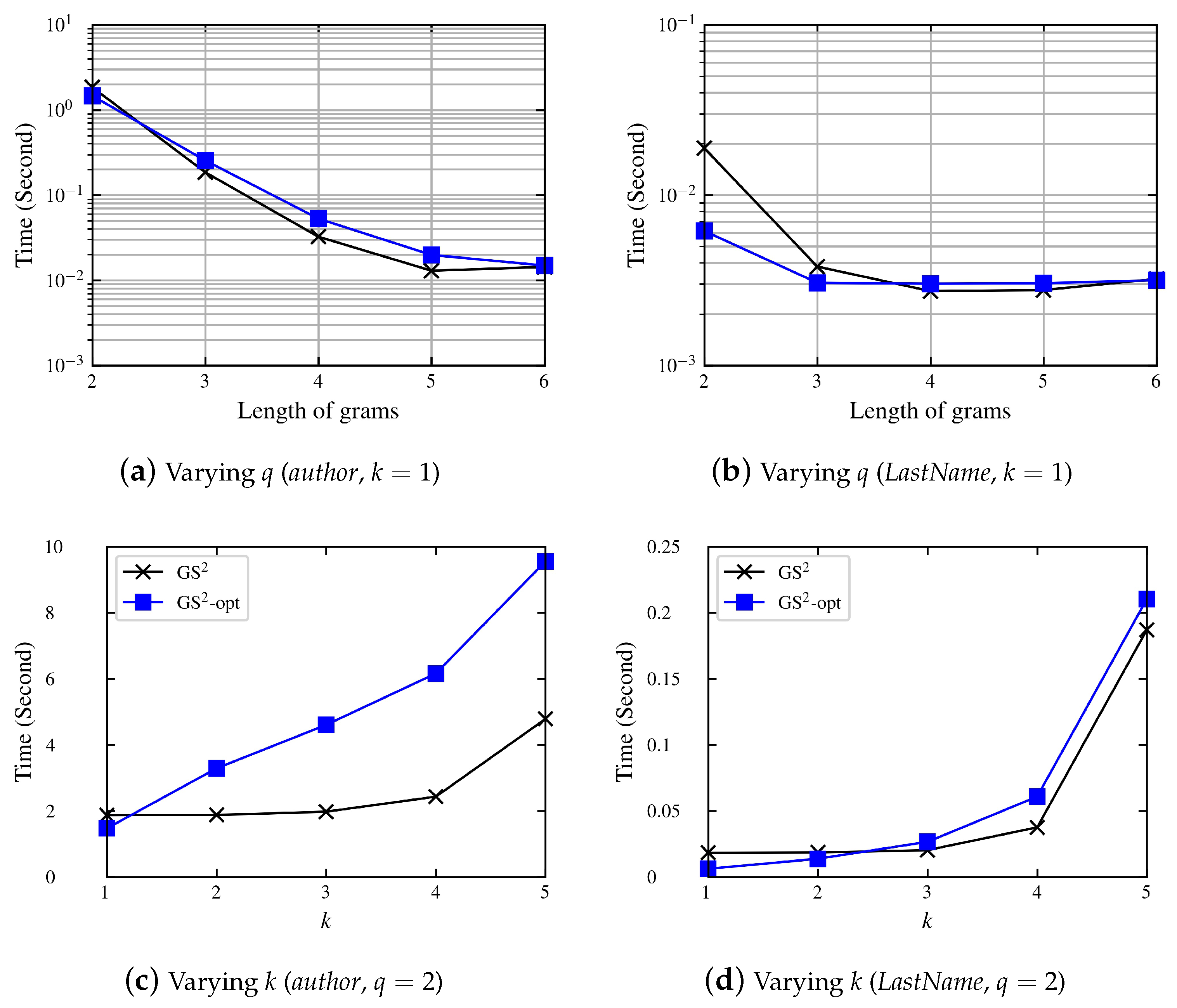

In this section, we present the process of generating the VO for user queries. In general, the VO is composed of two components, i.e., the VO of the dictionary and the VO of the inverted index. First, we provide a brief description of how the server performs approximate string searches with respect to a user query, as summarized in Algorithm 3. Then, we show how to construct VO in detail.

Upon receipt of dataset D, q, and the signatures from the DO, the server builds the q-gram inverted index based on D and q. Assume the user issues a query Q with string $s_Q$ and threshold k to the server, which means that the user wants all strings whose edit distance from $s_Q$ is less than or equal to k. After receiving $s_Q$ and k from the user, the server calculates the minimum number of shared grams, denoted by τ, between $s_Q$ and a string $s \in D$. According to Lemma 1, we have

$$\tau = \max(|s_Q|, |s|) - q + 1 - k \cdot q.$$

Without loss of generality, τ is set to the minimum value of the above equation, i.e., $\tau = |s_Q| - q + 1 - k \cdot q$, as shown in line 2 of Algorithm 3.

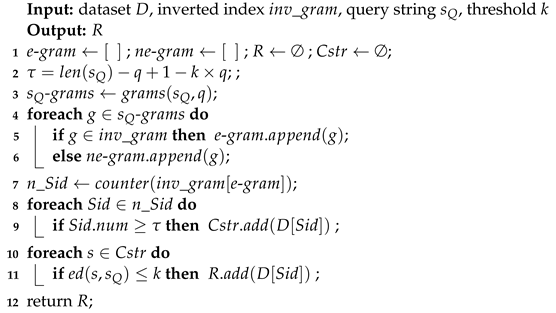

| Algorithm 3: Search for similar strings in D. |


The server extracts the set of q-grams of the query string $s_Q$, denoted grams($s_Q$, q), as shown in line 3 of Algorithm 3. Then, all the i-lists corresponding to the grams in grams($s_Q$, q) are fetched from the inverted index. Some grams in grams($s_Q$, q) may not have a corresponding i-list in the inverted index; hence, we divide the grams into the following two cases (lines 4 to 6 of Algorithm 3):

If a gram g ∈ grams($s_Q$, q) has a corresponding i-list, g is called an e-gram;

If a gram g ∈ grams($s_Q$, q) does not have a corresponding i-list, g is called an ne-gram.

We refer to the i-lists that correspond to the e-grams as e-i-lists.

After that, the server counts the number of occurrences of each Sid in the e-i-lists and finds those that appear no fewer than τ times. Many efficient algorithms exist for this counting step, e.g., the Heap algorithm, MergeOpt [7], ScanCount, MergeSkip, and DivideSkip [9]. After the counting step, we obtain a set of candidate strings, denoted by C-string, whose Sids appear at least τ times in the e-i-lists, as shown in lines 7 to 9 of Algorithm 3.
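The counting step can be illustrated with a simple ScanCount-style pass. The sketch below assumes the standard count-filtering threshold (query length − q + 1 − k·q), assumes each i-list stores a string ID at most once, and raises an error when the threshold is not positive, a case it does not handle; the names and the small index in the usage note are hypothetical.

```python
from collections import Counter


def qgrams(s: str, q: int) -> list[str]:
    """All substrings of length q, in order of appearance (no padding assumed)."""
    return [s[i:i + q] for i in range(len(s) - q + 1)]


def count_threshold(query_len: int, q: int, k: int) -> int:
    """Minimum number of grams shared with any string within edit distance k."""
    return query_len - q + 1 - k * q


def candidates(query: str, q: int, k: int,
               index: dict[str, list[int]]) -> set[int]:
    """Return the IDs that occur at least tau times in the e-i-lists."""
    tau = count_threshold(len(query), q, k)
    if tau <= 0:
        # The count filter cannot prune anything in this case.
        raise ValueError("threshold is not positive; this sketch does not cover it")
    counts: Counter[int] = Counter()
    for g in qgrams(query, q):       # repeated query grams are scanned again,
        if g in index:               # which can only add false positives
            counts.update(index[g])  # g is an e-gram: scan its i-list
    return {sid for sid, c in counts.items() if c >= tau}
```

With a hypothetical index `{"ar": [1], "is": [1, 2], "ri": [1, 2], "se": [1, 2]}`, the query “arisen” with q = 2 and k = 1 has threshold 3, and both IDs 1 and 2 survive the filter.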

For each string s ∈ C-string, the server computes the edit distance between s and the query string $s_Q$, and those strings whose edit distance from $s_Q$ is less than or equal to k are put into the result set R (as shown in lines 10 and 11 of Algorithm 3). Note that the server can directly use dynamic programming to calculate the edit distance between two strings, or it can use the verification technique [1] or other filter-verification frameworks [27] to speed up the computation.
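For reference, the plain dynamic programming computation of the edit distance (unit-cost insertions, deletions, and substitutions) can be written as follows. This is the textbook algorithm rather than the accelerated verification techniques cited above, and the function name is hypothetical.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed row by row in O(len(a) * len(b)) time."""
    # prev[j] holds the distance between the processed prefix of a and b[:j].
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance between a[:i] and the empty string
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[len(b)]
```

Only two rows of the table are kept in memory, so the space cost is linear in the length of the second string.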

Example 1. Let us consider an example with $s_Q$ = “arisen”, k = 1, and the database D, as shown in Table 1, and its corresponding inverted index, as shown in Table 2. After receiving $s_Q$ and k, the server first computes τ = 6 − 2 + 1 − 1 · 2 = 3 and then extracts grams($s_Q$, 2) = {“ar”, “ri”, “is”, “se”, “en”}, from which the server obtains e-gram = {“ar”, “ri”, “is”, “se”} and ne-gram = {“en”}. Next, the server counts the frequency of occurrence of each Sid in the e-i-lists, and only two Sids satisfy the requirement of appearing at least τ times. Hence, we have C-string = {“arise”, “rise”}. Finally, the server computes the edit distance between the strings in C-string and $s_Q$. Among them, only the string “arise” satisfies the edit distance threshold k. Therefore, the result R = {“arise”} is returned to the user.

Next, we show how to construct the VO with respect to a query result R. First, the server builds leaf nodes for all grams in the inverted index. For each e-gram, the leaf node contains the gram and its corresponding i-list. For each gram that is a neighbor of an ne-gram (i.e., the alphabetic predecessor or successor of the ne-gram in the inverted index), the leaf node contains the gram and the hash value of its i-list. For each of the remaining grams, the leaf node contains its tuple hash (lines 1 to 12 of Algorithm 4). Note that if an ne-gram falls outside the boundary of the inverted index, then it has only one neighbor. For example, as shown in Table 2, the neighbors of grams “aa” and “sf” are “ai” and “se”, respectively, whereas the neighbors of gram “fr” are “br” and “is”.
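Locating the neighbors of an ne-gram amounts to a binary search over the alphabetically sorted gram list. The following sketch uses Python's `bisect` module; the function name and the gram list in the usage note are hypothetical and do not reproduce Table 2.

```python
from bisect import bisect_left


def neighbors(ne_gram: str, sorted_grams: list[str]) -> list[str]:
    """Return the alphabetic predecessor and successor of a gram that is
    absent from the index; only one neighbor exists at either boundary."""
    pos = bisect_left(sorted_grams, ne_gram)
    if pos < len(sorted_grams) and sorted_grams[pos] == ne_gram:
        raise ValueError("gram is present in the index, so it is an e-gram")
    result = []
    if pos > 0:
        result.append(sorted_grams[pos - 1])  # predecessor
    if pos < len(sorted_grams):
        result.append(sorted_grams[pos])      # successor
    return result
```

With the hypothetical gram list `["ai", "ar", "br", "fr", "is", "ri", "se"]`, the gram “en” falls between “br” and “fr”, whereas “aa” and “sf” lie outside the boundaries and have the single neighbors “ai” and “se”, respectively.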

Similar to the process of forming VO on a traditional MHT, in our solution, the server calculates the hashes of the internal nodes (lines 13 to 18 of Algorithm 4). Similarly, the server builds the MHT for dataset D and maintains the information about the C-string, as shown in lines 19 to 25 of Algorithm 4.

The formation of the VO for result set R is shown in line 26 of Algorithm 4, from which we can see that the VO consists of (1) the e-grams and their corresponding e-i-lists, the neighboring grams of each ne-gram and their i-list hash values, and the hashes of other nodes; (2) the C-string set along with the corresponding Sids and the hashes of other nodes; and (3) the signatures of the dictionary MHT and of the inverted index MHT. The server sends R and the VO to the user for verification. Note that when the user issues queries continuously, the server only needs to send the signatures once, which reduces network communication costs.

It is worth mentioning that the elements in the VO are organized in such a way that the user can easily recalculate the root hash of the dictionary MHT and the root hash of the inverted index MHT. Specifically, the elements in the VO are organized according to where they are located in the MHT, and a pair of parentheses is added around elements that share the same parent node in the MHT.

| Algorithm 4: VO construction. |


Example 2. Let us continue with Example 1, where the result R = {“arise”}. Referring to Figure 4, the neighbors of ne-gram “en” are “br” and “fr”, so the server places these two grams and their corresponding i-list hashes in the VO. After computing the other necessary hashes, the server constructs the first part of the VO, which covers the inverted index. Recall that we have C-string = {“arise”, “rise”}; after computing the remaining necessary hashes, the server constructs the second part of the VO, which covers the dictionary. Figure 5 shows the elements that make up these two parts. Finally, having obtained the above components, the server combines them with the two signatures to form the complete VO.

4.1.3. Authentication Phase

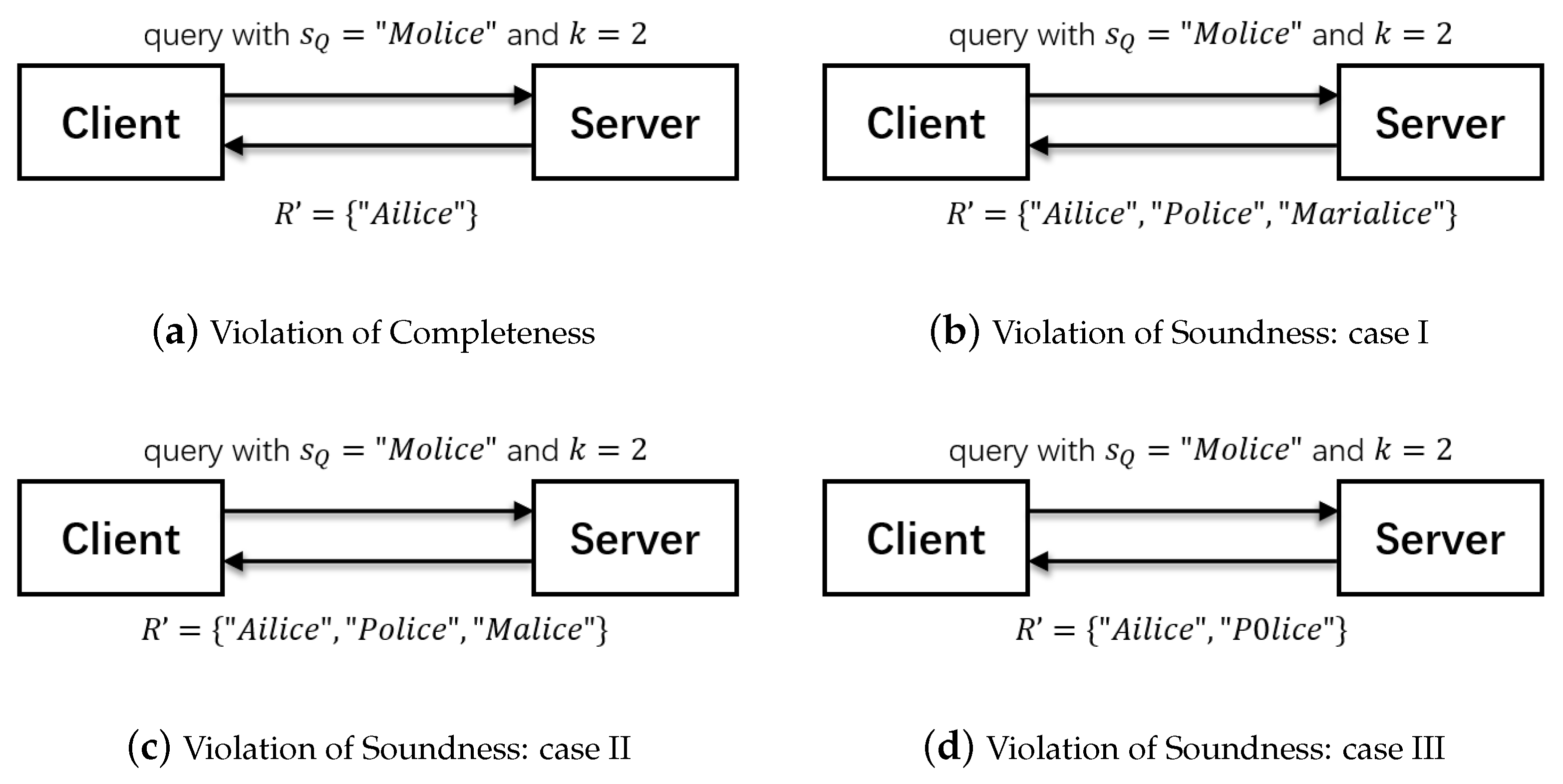

Given a user query with string $s_Q$ and threshold k, the server conducts query processing and returns the query result set R, along with its corresponding VO, to the user. Upon receipt of R and the VO, the user verifies whether the received R is correct. Note that the user obtains the DO’s public key via a secure channel, such as a certificate authority (CA). We summarize the authentication procedures in Algorithm 5.

At first, based on the VO received, the user computes the root hashes for both the dictionary and the inverted index. Specifically, by using the two parts of the VO, the user computes the hashes of internal nodes recursively until the root hash of the inverted index and the root hash of the dictionary are obtained (lines 1 and 2 of Algorithm 5). Note that we assume that the DO shares with the user some necessary information, such as the hash function, the public key encryption algorithm used by the DO, and the parameter q. Then, the user decrypts the two signatures with the DO’s public key and compares the recovered root hashes against the recomputed ones. If either pair does not match, then the user concludes that the query result R returned from the server fails the correctness verification, as shown in lines 2 to 6 of Algorithm 5.
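The recursive recomputation of a root hash from a parenthesized VO can be sketched as follows. The encoding is an assumption made for illustration: a nested pair stands for two elements that share a parent, a `bytes` value is a hash supplied by the server for a pruned subtree, and a `str` value is revealed leaf content that the user hashes. SHA-256 stands in for the shared hash function, and the signature check itself is left out.

```python
import hashlib


def H(data: bytes) -> bytes:
    """Shared one-way hash function; SHA-256 is an assumption of this sketch."""
    return hashlib.sha256(data).digest()


def recompute_root(node) -> bytes:
    """Rebuild the hash of a (sub)tree described by a nested VO element."""
    if isinstance(node, bytes):
        # Pruned subtree: the server supplied its hash directly.
        return node
    if isinstance(node, str):
        # Revealed leaf: the user hashes the content locally.
        return H(node.encode("utf-8"))
    left, right = node  # a parenthesized pair shares one parent node
    return H(recompute_root(left) + recompute_root(right))
```

The user would then compare the returned value with the root hash recovered from the corresponding signature. Any altered leaf content changes the recomputed root and causes the comparison to fail.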

| Algorithm 5: Query result authentication. |


On the other hand, if the VO is authentic, then the user compares grams($s_Q$, q), the set of q-grams of the query string $s_Q$, with the set of grams appearing in the inverted-index part of the VO. For each gram g that is in grams($s_Q$, q) but not in that set (i.e., g is an ne-gram), the user looks for its neighbors in the VO, and the neighbors should be adjacent to each other in the VO, unless there is only one neighbor. Otherwise, the user is certain that R fails the completeness test.

For each gram g that is in grams($s_Q$, q) and also appears in the VO (i.e., g is an e-gram), the user counts the number of occurrences of each Sid in the e-i-lists. For those strings whose Sid appears at least τ times, the user checks whether they appear in the dictionary part of the VO. If any Sid appears at least τ times but the corresponding string does not appear in the VO, then the user is certain that R does not pass the completeness test (lines 7 to 16 in Algorithm 5).

Finally, the user extracts all strings in the dictionary part of the VO and computes the edit distance between each of them and the query string $s_Q$. If the edit distance of some string is not greater than k, but the string is not included in R, then the user concludes that the result set R fails the completeness test. Conversely, if a string is in R but is not among the strings that pass this comparison, then the user is certain that R fails the soundness test (lines 17–21 in Algorithm 5).
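This final comparison can be sketched as a small client-side check. The sketch assumes that the candidate strings have already been authenticated against the dictionary root hash; the function names and the returned status strings are hypothetical, and the memoized recursion is only suitable for short strings.

```python
from functools import lru_cache


def edit_distance(a: str, b: str) -> int:
    """Memoized recursive edit distance (adequate for short strings only)."""
    @lru_cache(maxsize=None)
    def d(i: int, j: int) -> int:
        if i == 0:
            return j
        if j == 0:
            return i
        cost = 0 if a[i - 1] == b[j - 1] else 1
        return min(d(i - 1, j) + 1, d(i, j - 1) + 1, d(i - 1, j - 1) + cost)
    return d(len(a), len(b))


def verify_result(query: str, k: int,
                  vo_strings: list[str], result: list[str]) -> str:
    """Compare the returned result with the strings that truly satisfy k."""
    expected = {s for s in vo_strings if edit_distance(s, query) <= k}
    if expected - set(result):
        return "fails completeness"  # a qualifying string was omitted
    if set(result) - expected:
        return "fails soundness"     # a returned string does not qualify
    return "ok"
```

For the query “arisen” with k = 1 and candidate strings “arise” and “rise”, the result {“arise”} passes, an empty result fails completeness, and a result that also contains “rise” fails soundness.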

Example 3. Let us recall Example 2. The user holds $s_Q$ = “arisen”, k = 1, R = {“arise”}, the two parts of the VO, and the two signatures. First, the user recalculates the root hashes of the inverted index and of the dictionary from the respective parts of the VO and compares them with the decoded signatures to verify the authenticity of the VO. Then, the user retrieves the set of e-grams and the set of neighbors of the ne-gram from the inverted-index part of the VO and compares the set of e-grams against grams(“arisen”, 2) to obtain ne-gram = {“en”}. Based on the ne-gram, the user is certain that the neighboring gram “br” lies before “en”, and “fr” lies after “en”, in the inverted index. Next, the user counts the number of occurrences of each Sid in the e-i-lists and keeps only the strings in the dictionary part of the VO whose occurrences are greater than or equal to τ. Finally, the user retrieves the strings {“arise”, “rise”} from the dictionary part of the VO and computes the edit distance between each of them and $s_Q$. Here, only “arise” satisfies the threshold k, which is the same as R. Hence, the user confirms that the result R sent from the server is correct.

It is worth mentioning that by modifying the computation of τ (an example computing method can be found in [27]), our GS2 model can be applied to other threshold-based similarity measures for strings, such as Hamming distance, edit similarity, Jaccard similarity, cosine similarity, and Dice similarity. For example, for the Hamming distance, τ

can be calculated by

, and the calculation of τ values for other distance measures can be found in [27].

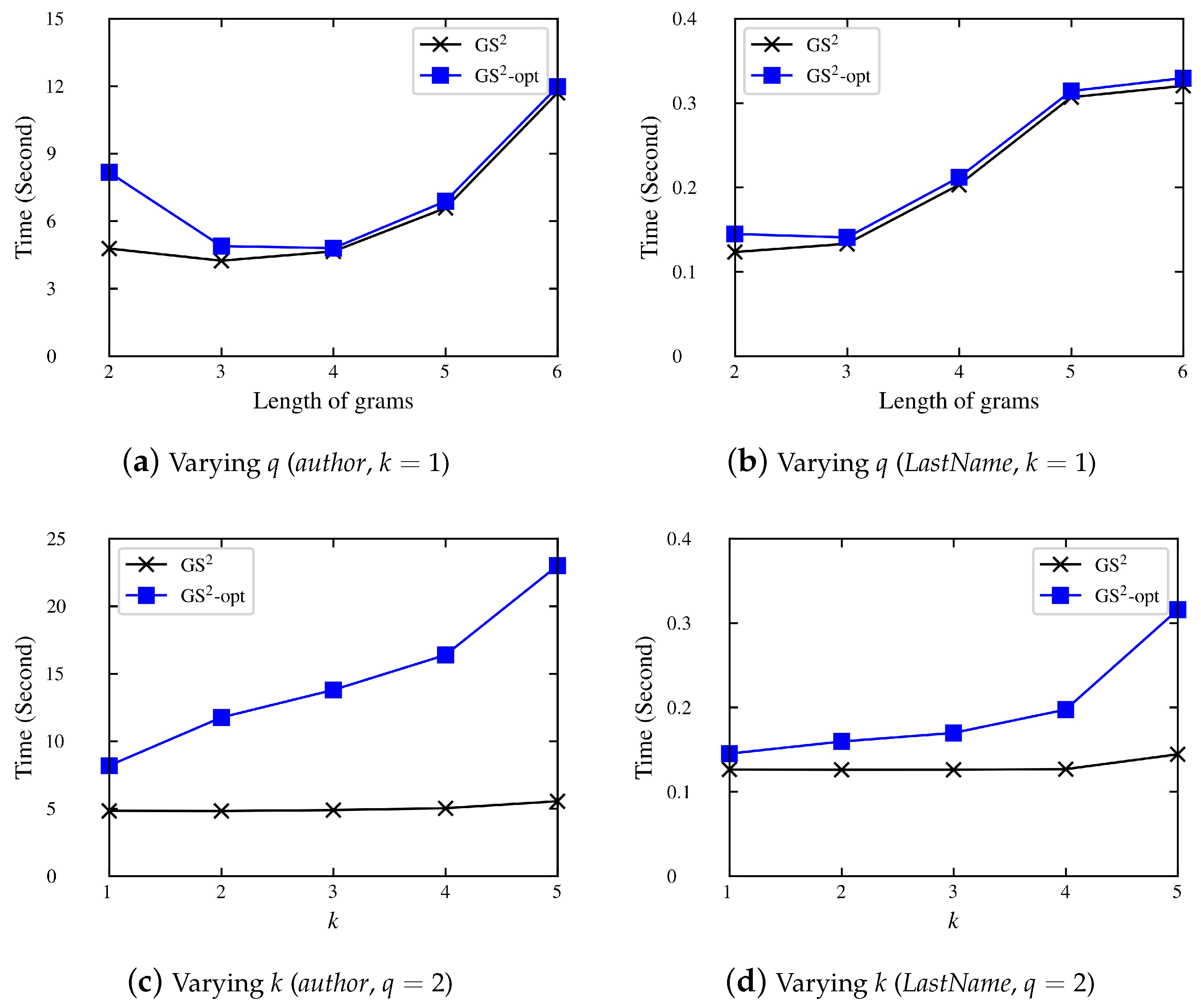

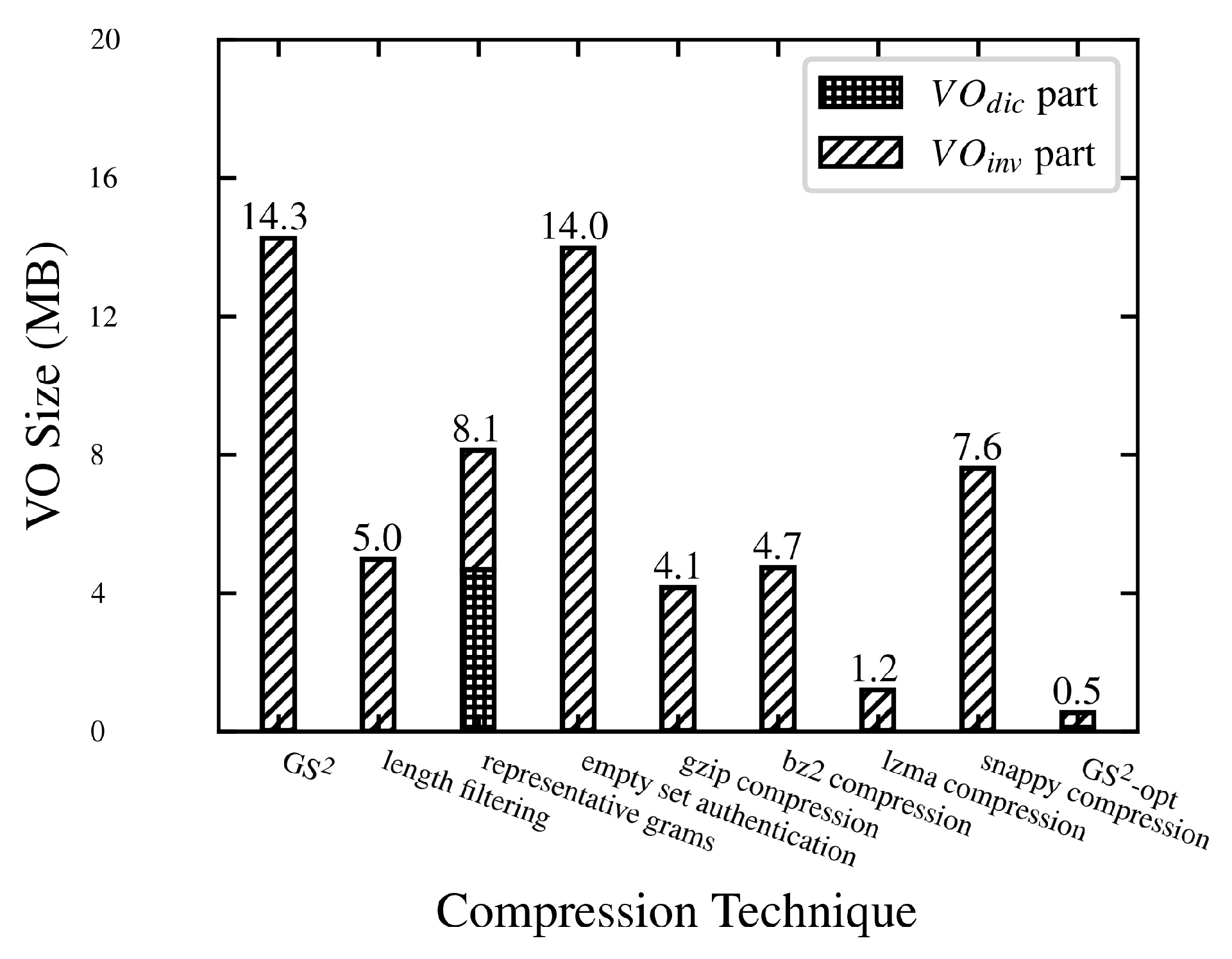

GS2 is not a very cost-effective approach in terms of network communication because the size of the VO generated is large, resulting in a higher transmission cost. For most resource-constrained clients, especially mobile devices or IoT devices, the network bandwidth and battery are limited. Therefore, it is important to reduce the communication overhead between the server and the users. To address this issue, in the next section, we design an optimization scheme, which can significantly reduce the VO size.